Abstract

Cybercrime is developing rapidly, increasing the demand for information security knowledge. Attackers are becoming more sophisticated in their tactics. Employees are a focal point since humans remain the ‘weakest link’ and are vital to prevention. This research investigates which cognitive and internal factors influence information security awareness (ISA) among employees, through quantitative empirical research using a survey conducted at a Dutch financial insurance firm. The research question, “How and to what extent do cognitive and internal factors contribute to information security awareness (ISA)?”, is answered using the theory of situation awareness as the theoretical lens. The constructs of Security Complexity, Information Security Goals (InfoSec Goals), and SETA Programs (security education, training, and awareness) significantly contribute to ISA. The most important research recommendations are to seek novel explanatory variables for ISA, to further investigate the roots of Security Complexity and what influences InfoSec Goals, and to venture into qualitative and experimental research methodologies for more depth. The practical recommendations are to (1) minimize the complexity of information security topics (e.g., by contextualizing them more for specific employee groups) and (2) integrate these simplifications into various SETA methods (e.g., gamification and online training).

1. Introduction

Cyber-attacks pose growing threats to information security as data usage, digital footprints, and (internet) consumption rates rise continuously [1,2,3]. Since the COVID-19 pandemic, cyberthreat volumes have increased by 25% [4,5,6]. Gartner expects the share of cybersecurity programs that deploy socio-behavioral principles (such as nudge techniques) to influence security culture across the organization to rise from less than 5% in 2021 to 40% in 2025, owing to the ineffectiveness of traditional security awareness programs [3,7]. This shift is supported by recent academic research, which highlights the significant role of incorporating behavioral and social science principles to enhance cybersecurity outcomes, underscoring that traditional technical defenses alone are insufficient [8]. Human factors remain present in most data breaches and phishing incidents, and security awareness efforts are too static to prepare technologists and others for effective security decision-making. Many breaches result from preventable human behaviors, emphasizing the need for security behavior and awareness programs [9,10,11,12]. Thus, attention should be focused on holistic behavior and change programs rather than just compliance-based awareness efforts [7,10,13,14].

Organizations are encouraged to adopt new secure behaviors for cyber resilience due to the increasing sophistication of cyberattacks. Information is one of the most valuable assets of an organization, which is why employees are obliged to undergo various forms of education and training. As a result, organizations often use standardized security education, training, and awareness (SETA) programs to develop ISA, defined as user awareness of and commitment to information security guidelines [15,16,17]. SETA efforts, being top-down and supply-driven, fail to account for internal characteristics and personal behavior in ISA development. Information Security Policies (ISPs) serve as controls, but their effectiveness is compromised when employees lack sufficient awareness, potentially leading to policy violations. Raising ISA is thus seen as a crucial first step [18,19,20]. Employees must also be aware of security risks to form an effective first line of defense. A narrow focus on technical aspects is insufficient given the multidisciplinary nature of information security, where human aspects are crucial. ISA is therefore diverse, requiring various awareness capabilities to address different threat categories, which underscores its ongoing importance. Effective education approaches for continuous ISA serve as crucial complements to regular monitoring efforts. The financial and insurance industry, the second most attacked sector, needs robust security awareness efforts despite its professionalism and compliance obligations [21,22].

Recent Information Systems (IS) literature predominantly focuses on security behavior, policies, violation and compliance, and education tools for security awareness, with limited attention given to ISA itself [23]. Factors such as individual awareness, cognition, and organizational culture are underexplored. Notably, there is a scarcity of IS security literature relating to cognitive and learning mechanisms, such as behaviorism, cognitivism, or constructivism. Opportunities for future research include exploring the promotion of learning and awareness programs and expanding the understanding of ISA capabilities [24]. Hence, a more concentrated focus on these factors is needed in IS research. There is a recognized need for more research on ISA, considering factors like industry, organizational types, employee roles [25,26,27,28,29], personality traits [14,30], and cognitive and behavioral mechanisms [25,31,32,33]. Additionally, there is no common understanding of the decisive factors of ISA [32,33]. This research therefore distinguishes itself by exploring cognitive and internal organizational mechanisms, departing from traditional behavioral theories, and focusing on ISA as a dependent variable to understand its formation and thereby improve the effectiveness of the ‘human firewall.’ Overall, the broader literature reflects the need for additional research, particularly on how ISA takes shape and on its role in establishing the ‘human firewall,’ making this research academically and practically relevant.

This research seeks to develop a validated theory to enhance ISA in the financial and insurance sectors, with broader applicability. The goal is to understand employees’ mental models regarding security threats, enabling more effective ISA education. The insights obtained can strengthen the ‘human firewall’ across different industries. The research emphasizes addressing ‘how’ to engage employees in ISA education. The findings can be used to tailor security awareness programs, modify processes like onboarding, and target ISA education to meet organizational needs [10,34].

The academic contribution lies in examining internal factors that influence ISA, particularly in the insurance and financial sectors. Only a small group of research papers investigates antecedents of ISA [19,35,36,37]; most existing research focuses on ISP compliance or violations, neglecting the understanding of ISA among employees [25,38,39,40,41,42,43,44,45]. This study addresses the gap by delving into several employee factors, providing a unique approach to building a research and conceptual model. Haeussinger and Kranz [36] argue that further research is needed to identify antecedents of ISA, emphasizing institutional, individual, and socio-environmental antecedents. Hwang et al. [19] echo this call by stressing the need to research the cognitive and perceptual factors through which ISA is accomplished. The findings can be valuable for researchers in IS security and other social science fields, offering insights applicable in various contexts and to types of awareness beyond information security.

2. Literature Review

A deep understanding of the antecedents of ISA is essential for ensuring the security of organizations and employees. In the 1990s, scholars began recognizing the significance of human factors in information security, moving beyond a purely technological focus [46]. Since then, behavioral information security has emerged as a field with distinct research streams in need of further exploration, including researching ISA [47]. ISA is defined as “a state of having knowledge allowing a person to: (1) recognize a threat when one is encountered, (2) assess the type and the magnitude of damage it can cause, (3) identify what vulnerabilities a threat can exploit, (4) identify what countermeasures can be employed to avoid or mitigate the threat, (5) recognize one’s responsibilities for threat reporting and avoidance, and (6) implement recommended protective behaviours” ([48], p. 109). This aligns with the holistic frameworks of Maalem Lahcen et al. [49] and Stanton et al. [50], which also encompass human factors and behavioral cybersecurity.

2.1. Information Security Behavior

Proper and compliant information security behavior is reached by transforming awareness into correct behavioral intentions, as evidenced by several studies [25,38,39,40,41,42,43,44,51,52,53]. The investigation of employee behavior in the context of IS has advanced over the course of this century. A prevalent theme within the domain of information security behavior is the concept of ISPs, which employees either adhere to or violate. The extensive research referenced above has aimed to understand the predictors and motivations behind employee behavior. Internal factors such as self-efficacy and attitude have been found to play significant roles in shaping compliance behavior. Table 1 provides an overview of (1) studies’ research objectives, (2) the role of self-efficacy and/or attitude, and (3) the role of ISA.

Table 1.

Ten studies on information security behavior compliance and the role of Information Security Awareness (unit of analysis: employee/user).

In the ten empirical studies analyzed, although two did not include awareness as a construct [51,54], all ten demonstrated the direct (included in the research question) or indirect (used as a core element but not in the research question) impact of ISA on compliant behavior. ISA positively influences individuals’ attitudes towards secure behavior and their motivation for security goals, which is crucial for predicting information security behavior or compliance. Employees’ perceptions of the consequences of misbehavior also clearly shape compliance intentions [25,40]. ISA emerges as a fundamental determinant of employees’ adherence to information security practices, underscoring the importance of maintaining sufficient awareness levels [38,53,55]. As per the references in Table 1, ISA consistently shows a positive impact on secure behavior. These findings align with our research model, which focuses on awareness.

Fear has also been studied in the context of explaining information security behavior. It is often indirectly addressed through the concept of deterrence, which instils doubt or fear of potential consequences for non-compliance. Research has shown that deterrence affects malicious computer abuse and can heighten perceptions of threat severity and vulnerability [28,56]. Furthermore, fear-inducing threats of which employees are not yet aware should be covered in more depth so that their perceived severity is acknowledged [26]. Fear can also lead to attitudinal ambivalence towards ISPs, influencing protection-motivated behavior. The lack of security awareness contributes to this ambivalence and subsequent misbehavior [29]. Similarly, building employee awareness of information security threats is essential for promoting secure behavior [57,58]. Moreover, a greater presence of deterrence increases awareness of ISPs and security measures [56].

Overall, while fear plays a role in the broader context of information security, our research model specifically highlights the critical importance of ISA. The insights from both Table 1 and the additional studies relating to deterrence and fear appeals underscore the necessity of maintaining high levels of awareness to shape employee behavior and attitudes towards security measures. Hence, there is a critical need to improve awareness programs to shape the attitudes and behavior of employees in any organization regarding information security.

2.2. From Information Security Behavior to Awareness

Various theoretical perspectives have been used to explain security behavior in the existing literature. However, these theories may not align well with the context of this research, which focuses on awareness rather than behavior. The most commonly used theories to explain behavior include the (a) Theory of Reasoned Action/Planned Behavior [35,36,37,59,60,61,62,63], (b) Technology Acceptance Model [59], (c) General Deterrence Theory [61,62,63], and (d) Protection Motivation Theory [54,61,62,64,65,66,67,68]. These studies provided context for the situation awareness framework selected for this study. Additionally, the social cognitive theory and social learning theory, particularly focusing on self-efficacy, have been used to explain behavior and ISA in studies [19,35,38,41,52,68,69]. Furthermore, theories such as relational awareness [35], affective absorption and affective flow [70], routine activity theory [66], social judgment theory [71], collectivism [72], and situation awareness [42,73] have been explored.

However, following the suggestions of Lebek et al. [74], this research argues that while most of these theories may explain behavior well, they may not adequately explain awareness. Research is needed that develops measures and process models that influence ISA, rather than relying solely on established relationships from commonly used theories. Notably, situation awareness is one of the few exceptions in explaining ISA, highlighting a shift towards dissecting awareness rather than behavior.

Situation awareness (SA), as defined by Endsley [75], involves perceiving, comprehending, and projecting elements in the environment to make informed decisions. Initially developed for aviation, SA applies to various dynamic situations, including everyday activities like walking on the street. In environments with constantly changing security risks, such as those in IS, SA becomes crucial [75]. The individual must recognize relevant knowledge and important environmental facts to make safe judgments [76]. SA comprises three levels: (1) perception, (2) comprehension, and (3) projection. Perception involves sensing environmental attributes, while comprehension involves understanding them, and projection involves foreseeing future developments [73,75].

Factors like information-processing mechanisms and memory influence SA [73]. Experimental studies have explored the impact of information richness in SETA programs and of phishing experience on situational ISA, respectively [42,73]. This research differs from these studies in terms of methodology and included constructs. SA has been used in the cybersecurity literature for proposed frameworks but is less explored at the individual level [77,78,79]. Furthermore, Kannelønning and Katsikas [20] suggest that there is room for the further exploration of situation awareness at the level of individual employees within (cyber)security environments.

The definition of ISA adopted in this research aligns with the essence of the three levels of SA described by Endsley [75]. While SA’s application in the IS literature is limited, this study explores new perspectives on what influences awareness, which motivates the inclusion of SA in the IS literature.

2.3. Information Security Awareness

Bulgurcu et al. [25] (p. 532) combined general ISA and ISP awareness, defining it as “an employee’s knowledge of information security and their organization’s ISP requirements”. This highlights the two key dimensions. Hanus et al. [17] (p. 109) adopt a broader definition based on security awareness: “A state of having knowledge allowing a person to: (1) recognize threats, (2) assess their impact, (3) identify exploitable vulnerabilities, (4) employ countermeasures, (5) recognize reporting responsibilities, and (6) implement protective behaviours.” This definition emphasizes that ISA goes beyond knowledge and requires the application of that knowledge. Furthermore, it aligns with Siponen’s [15] view. ISA is described as a cognitive state of mind [62]. Additionally, ISA involves knowledge about information security, influenced by experiences or external sources [25]. ISA is positioned as the foundation of SETA [67] and is crucial for information security compliance [19].

Research into ISA and its antecedents has been conducted, with Haeussinger and Kranz [38,39] categorizing them into (1) individual, (2) institutional, and (3) socio-environmental predictors. Subsequent studies [35,42,80] built on these dimensions, exploring awareness antecedents further. Jaeger [41] added a fourth dimension: technological factors. The majority of studies contain institutional (management and firm) antecedents [35,40,42,45,72,81,82], such as leadership and information and education channels.

The individual dimension is equally represented, although its antecedents are more varied, covering personality traits and demographic factors such as age as predictors of ISA [45,55,83,84,85]. Additional individual antecedents include self-efficacy cues and behavioral traits like the tendency for risky behavior [38,41,52,64].

In Appendix A, an extensive table details all the studies and the dimensions utilized for their research constructs. Other studies examine awareness in the context of severity and susceptibility awareness [37] and countermeasure and threat awareness [66].

This research stresses the need to address human information processing, decision-making, the needs of employees, security culture, and the cognitive mechanisms that generate awareness, as these aspects are currently underexplored in the existing studies according to researchers [21,45,86]. This research aims to contribute to filling that gap to provide a better understanding of how to enhance ISA.

2.4. Proposition Development & Research Model

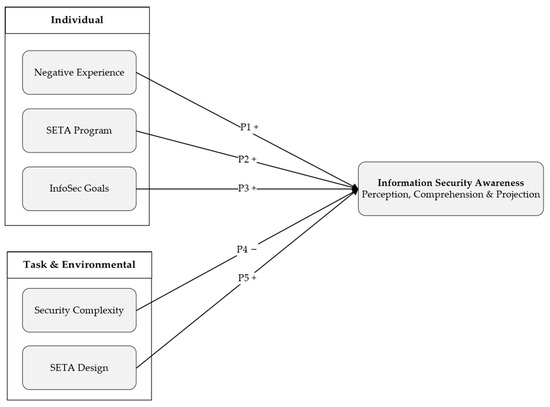

In this research, five propositions are developed: three individual propositions (Negative Experience, SETA Program, and InfoSec Goals) and two task and environmental propositions (Security Complexity and SETA Design). The following subsections detail the five propositions based on the literature.

2.4.1. Negative Experience

Endsley [75,87] suggests that experiencing an environment contributes to the development of expectations regarding novel events. Experience is a key factor influencing the aforementioned information-processing mechanisms. While work experience may not directly impact eventual behavior [44], individuals with greater security experience utilize their ISA by recognizing specific familiar cues. This indicates that experience fosters the development of schemata and mental models, enabling individuals to identify patterns that contribute to awareness [42]. Additionally, negative experiences with incidents affect one’s awareness, and experience also eventually affects one’s behavior [36]. In this research, negative experience is defined as past encounters with any kind of information security incidents such as worms, viruses, and phishing attacks in both private and work contexts [36,42]. This assertion is echoed in another study, which demonstrates that experiences with breaches positively influence security-related behavior, with behavior being determined both directly and indirectly by ISA [88]. Frank and Kohn [89] posited that when co-workers share information security experiences, it could raise awareness.

Proposition 1.

Negative experiences positively influence information security awareness.

2.4.2. SETA Program

SETA is an overarching term encompassing programs or activities that involve awareness, training, and education. Awareness should be created to stimulate and motivate individuals to understand what is expected of them regarding information security and organizational measures/policies, providing them with knowledge. Training is a process aimed at teaching individuals a skill or demonstrating how to use specific tools. Education entails more in-depth schooling as a career development initiative or to support the aforementioned tools [90]. In this research, SETA (programs) is defined as “any endeavour that is undertaken to ensure that every employee is equipped with the information security skills and information security knowledge specific to their roles and responsibilities by using practical instructional methods” [91] (p. 250). In addition to internal organizational objectives, SETA initiatives are influenced by external authorities such as regulatory and compliance drivers [92]. General observations regarding the positive effects of SETA are apparent, such as a reduction in weak passwords and an increase in ISP compliance [93]. There is a clear negative relationship between SETA and cybersecurity-related incidents [94], such as reduced susceptibility to phishing [95]. Other studies also found that SETA efforts raise awareness and discourage IS misuse and risks among employees [40,93]. In general, SETA programs increase employees’ knowledge [96].

A variety of educational tools have been used, including online games and short animation films [97], and the effectiveness of gamification has been studied [98,99,100]. The media and message types are important and have different levels of effectiveness [101], which are linked to internal personality traits [102]. It is also important to integrate cognitive and psychological factors, such as cultural and cognitive bias [86], psychological ownership [103], and motivation to process information systematically and cognitively [104]. However, not all SETA programs are adequate yet; they require more socio-behavioral principles.

Training efforts are one of the individual factors impacting SA [75,87]. The essence of these efforts goes beyond training: receiving information for better and more conscious decision-making [19] is vital for comprehension and for having sufficient knowledge to handle future situations (Projection). Effective SETA programs instil confidence in employees to address security threats [103]. Consequently, the impact of previous general security training undertaken by an employee becomes apparent. Therefore, this research posits that security education and training initiatives, collectively referred to as the SETA program, exert a positive influence on ISA.

Proposition 2.

A SETA program positively affects information security awareness.

2.4.3. InfoSec Goals

Goals largely affect SA by directing attention and shaping the perception and interpretation of information, leading to comprehensive understanding. According to Endsley, a pilot’s goals, such as defeating enemies or ensuring survival, impact SA through either a top-down or bottom-up process [75]. In a top-down process, a person’s goals and objectives direct which environmental elements that person will notice and be aware of, shaping SA and informing comprehension. Conversely, in a bottom-up process, environmental cues may alter goals for safety reasons. Information acquires meaning when it is put in a context related to one’s goals, resulting in the individual acting in line with that goal. While both processes are effective in dynamic environments, the top-down approach is often more applicable in information security contexts. This research posits that goals are an extension of organizational commitment, with employees demonstrating greater attentiveness to information security when their goals align with those of their organization [105]. Goo et al. [106] and Liu et al. [43] support the positive impact of affective and organizational commitment on compliance intention and ISP compliance. Hence, this research defines InfoSec Goals as feelings of identification with, attachment to, and involvement in organizational InfoSec performance. InfoSec Commitment manifests as valuing one’s role in organizational information security, taking personal responsibility for organizational InfoSec performance, and dedication to remaining competent in it [107]. Davis et al. [107] use this explanation to define InfoSec Commitment. However, as this research uses the SA theory, it aligns with that theory by using the term goals rather than commitment. InfoSec Commitment serves as a foundation for InfoSec Goals; in this research, the transformation from InfoSec Commitment to InfoSec Goals involves careful consideration of the underlying principles.

Specifically within the information security context, InfoSec Commitment reflects involvement, attachment, and identification with the information security performance of a firm [107]. Furthermore, Cavallari [108] posited that motivation and intent have a positive effect on compliant behavior, which shapes the information security plan of the organization. Motivation, conveyed from managers to employees, shapes the overarching goals of information security [108]. Similarly, later studies found that commitment to and support for the organization have a positive effect on compliant behavior [109].

To conclude, these insights align with Endsley’s framework [75], indicating that commitment to information security involves recognizing opportunities to act safely and acting upon these values, primarily through top-down processing.

Proposition 3.

InfoSec goals positively affect information security awareness.

2.4.4. Security Complexity

Stress factors, encompassing both physical and social-psychological aspects, can significantly influence SA [75,110]. Physical stressors, such as noise, fatigue, and lighting, as well as social-psychological stressors like fear, anxiety, and time pressure, can narrow an individual’s field of attention, potentially causing them to overlook critical elements. Endsley highlights that stress can disrupt Perception, the first level of SA, and early decision-making stages [110]. Lower job stress has been found to correlate with higher ISA, leading to higher employee productivity [111]. Conversely, information security stress, particularly work overload, can decrease ISA and lead to non-compliance with information security policies (ISPs) [112,113]. This finding is shared by another study: security fatigue symptoms make employees prone to ignoring ISPs and minimizing their security efforts [114].

Complexity also plays a crucial role in SA, representing the number of system components, their degree of interaction, and their rate of change. Security warnings, which call employees’ attention to potential threats, add a layer of complexity to SA by requiring more mental effort to process [42]. This complexity can increase the mental workload and diminish SA, especially in an information security context, where employees must navigate complex security issues and ISP requirements. Complexity is closely linked with security-related stress, requiring employees to invest additional time and effort to understand and address security challenges, such as technical jargon, security issues, and ISPs. Complexity is one of the stress-related factors contributing to ISP violations [115]. Hence, complexity in this research is defined as situations where information security requirements and threats are perceived as complex and force employees to invest time and effort in learning and understanding information security, which can lead to stress (the result of an interaction between external environmental stimuli and an individual’s reactions to them). This definition builds on other studies [112]. We posit that complex security requirements are difficult to follow. This could result in work overload, which aligns with other findings [112,113]. This in turn can lead to emotion-focused coping strategies such as moral disengagement, which may increase the likelihood of non-compliant behavior [115]. Although awareness was not explicitly addressed in that study, this research has established, as discussed earlier, that awareness is a driver of behavior. Therefore, this research posits that complexity also influences awareness. In summary, stress and complexity are intertwined factors that influence ISA. This research argues that minimized security efforts result from inadequate levels of ISA, which are affected by complexity, including stress-related factors, as noted by Endsley [75].

Proposition 4.

Security complexity has a negative impact on information security awareness.

2.4.5. SETA Design

In the context of SA, the effectiveness of an individual’s perception and comprehension is influenced by two key design elements: system design and interface design [75,87]. System design refers to how information is gathered and presented to the individual, such as the display of data by aircraft systems to pilots. This design aspect plays a crucial role in shaping SA by determining the amount and manner of information available to the individual [87,116]. Interface design, on the other hand, focuses on how information is presented to the user, including factors like quantity, accuracy, and compatibility with the user’s needs [75]. One study discusses how effective interface design reduces the cognitive load by tailoring information to specific situations and adapting to current conditions, thereby enhancing situational awareness [111]. To optimize SA, design guidelines should prioritize aligning with SA goals rather than focusing solely on technological aspects. Critical cues should be emphasized in interface designs to facilitate effective SA mechanisms [75].

In the realm of information security, system and interface design act as intermediaries through which employees receive security-related information via SETA methods. Various SETA methods are employed, such as text-based, video-based, or game-based delivery, but their effectiveness can vary significantly [73,99,101,102]. Some studies suggest that SETA initiatives can be perceived as burdensome by employees, leading to careless completion [100,103]. To link this to the research gap: since each SETA method can have a different impact on employees, one method may be more effective than others in raising awareness. SETA is a tool used to raise awareness among employees. Its importance has only increased over time, with an emphasis on its effectiveness rather than merely its use. Another observation is that there is no universally perfect SETA; it has versatile delivery methods, and not all methods work the same in all contexts and demographics. It was also posited that effectiveness depends on the target audience [113]. The authors argue that certain methods are most popular and that a combination of methods could be effective; however, passive methods remain ineffective [113]. Furthermore, there are still challenges in differentiating between the various types of methods and programs and in assessing their effectiveness regarding knowledge, attitude, intention, and behavioral outcomes at both individual and organizational levels [117]. The lack of differentiation is attributed to an approach to ISA measurement that is broad and heterogeneous, making it difficult to determine the specific effects of different SETA programs. This results in the definition of SETA Design as the various delivery methods and techniques, each with its own attributes and characteristics, used to transmit information security knowledge to employees for educational purposes, which builds on the work of the previously mentioned studies [75,113].

Thus, the effectiveness of different SETA methods varies, impacting ISA [102]. This was also underscored by Nwachukwu et al. [118], although more research is required to understand the effects of different methods, considering how information is presented to employees (system design) and the quality, quantity, and relevance of the information provided (interface design) and how it shapes ISA.

Proposition 5.

Conforming to the SETA design interventions positively affects information security awareness.

Based on the constructed research variables, the following model in Figure 1 represents the research in a visual manner.

Figure 1.

Research Model.

3. Materials and Methods

This research applies a critical literature review [119,120]. The literature review is descriptive in nature; the focus is on the identification of the relevant perspectives of ISA [121]. The search focused on papers from 1995 onwards, using the keywords “security awareness” and “information security awareness”. The literature review yielded the relevant perspectives on ISA and formed the foundation for the research model.

This research is an empirical study. The main research methodology is quantitative—cross-sectional survey research (N = 156) at one of the largest Dutch insurance firms. The pilot survey, shared with a diverse group of employees, resulted in an improved survey, especially in terms of brevity and accessibility, with a reduced number of questions. The survey questions are based on the literature, supplemented with survey-specific questions. Perceptual questions were measured using a seven-point Likert scale, while other questions had dichotomous and categorical answers. Table 2 provides an overview of all items.

Table 2.

Table of Measurement Items—(A): Interval 1–7 Likert Scale, (B): nominal dichotomy (Yes/No, 9 or below/above 9), and (C): nominal age categories (younger than 25, 25–34, 35–44, 45–54, 55–64, older than 65).

The survey was conducted anonymously and in Dutch, reflecting the main language of the organization. It was distributed via email to various groups and through a newsletter to 317 employees; thus, convenience sampling was used. Following Roscoe’s [122] guidelines, the subgroups in the sample (non-IT employees, IT employees, and managers) each include over 30 subjects, except for managers (n = 26); this affects the external validity of the research. The average time needed for the respondents to complete the survey was 12 min, and the collection of the data took place in the first two weeks of December 2022.

The literature suggests targeting specific groups for SETA initiatives due to the inadequacy of generic activities [123,124]. Furthermore, an existing research gap concerns whether managers and employees from the same firm differ in their security behavior [20]. Hence, two perspectives will be considered: management/non-management and non-IT/IT roles. Research indicates that IT-related personnel generally have higher awareness levels, regardless of industry specifics [8,12]. Therefore, the study will examine these perspectives, each comprising two groups, to discover differences that can inform targeted and effective SETA initiatives, subsequently enhancing the overall ISA.

3.1. Data Analysis

The collected data were analyzed in IBM SPSS (version 29.0.2.0) by means of descriptive and inferential statistics. For the inferential part, several techniques were used. The correlations between construct items were examined by means of a Pearson’s r correlation matrix. Correlations also assisted in interpreting possible multicollinearity [125]; this was checked with the correlation matrix as well as with the variance inflation factor diagnostic [126]. To test the propositions and their significance, a multiple linear regression was used, with a significance level of 0.05. Before the regressions were conducted, the assumptions associated with multiple linear regression were checked and corrective measures were taken. Subsequently, separate regressions were conducted for the subgroups of the two group perspectives to compare the groups with each other, with an adjusted significance level to prevent family-wise errors. Additionally, independent-samples t-tests and non-parametric Mann–Whitney U tests were used to compare the means of the different groups [127].

3.2. Reliability & Validity

Cronbach’s Alpha is used for the internal reliability of measurement items. Techniques contributing to construct validity are the compound use of factor loading and average variance extracted. A confirmatory factor analysis (CFA) has been conducted in SmartPLS, rather than an exploratory factor analysis (EFA), as the propositions are supported by theory, and the focus of this research is not on discovering factors [128,129].

The factor analysis is used for convergent and discriminant validity, subsets of construct validity. Construct validity is indicated when measurement items are strongly correlated with their research construct. To assess discriminant validity, it was examined whether items correlate weakly with items of other constructs and strongly with the construct to which they belong (critical value: equal to or higher than 0.5). In addition, the square root of the average variance extracted was applied, following the Fornell and Larcker criterion [130].
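The two checks described above can be illustrated with a minimal Python sketch. The construct names, loadings, and correlation below are hypothetical placeholders and not the values of this study (those are reported in Appendix D); the function names are likewise illustrative. The sketch assumes standardized loadings, computes the average variance extracted (AVE) as the mean of the squared loadings, and applies the Fornell and Larcker comparison of the square root of the AVE against inter-construct correlations.

```python
from math import sqrt


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings of one construct."""
    return sum(l * l for l in loadings) / len(loadings)


def fornell_larcker_ok(ave_by_construct, correlations):
    """True if sqrt(AVE) of both constructs exceeds each pairwise correlation."""
    for (a, b), r in correlations.items():
        if sqrt(ave_by_construct[a]) <= abs(r):
            return False
        if sqrt(ave_by_construct[b]) <= abs(r):
            return False
    return True


# Hypothetical standardized loadings (NOT the study's values).
loadings = {
    "ConstructA": [0.82, 0.78, 0.75],
    "ConstructB": [0.71, 0.69, 0.80],
}
ave = {name: average_variance_extracted(vals) for name, vals in loadings.items()}

# Hypothetical inter-construct correlation (NOT the study's value).
corr = {("ConstructA", "ConstructB"): 0.45}

convergent = all(value >= 0.5 for value in ave.values())  # AVE threshold of 0.5
discriminant = fornell_larcker_ok(ave, corr)
print(ave, convergent, discriminant)
```

In this illustration both checks pass; lowering a construct's loadings or raising the correlation would make the corresponding check fail.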

To address potential Common Method Variance (CMV) bias, Harman’s single-factor test was conducted. This step helps to assess whether the results are influenced by methodological biases in the data collection process.

4. Results

4.1. Descriptive Statistics

The survey reached a sample of n = 156. All sample size guidelines mentioned in the previous section were met, except for one. The groups were IT, non-IT, managers, and non-managers. The management subset did not reach the preferred minimum number of participants (26 instead of 30). Thus, all calculations for this group are based on 26 cases, which affects the external validity of the research. The sample’s largest groups are the age brackets of 25–34 and 45–54. Employees under 25 and employees of 65 and older form a small minority. Regarding the subgroups, IT employees (54.5%) outnumber non-IT employees (45.5%). In terms of level, 130 respondents are non-management (83.3%), and 26 are managers (16.7%).

In terms of the means of constructs, Table 3 presents, for each construct, the mean of its combined items and the standard deviation.

Table 3.

Construct means and standard deviation.

ISA and InfoSec Goals have high means, M = 6.35 and M = 6.53, respectively. SETA Design carries the highest variability (SD = 1.14).

Regarding negative experiences, 10.3% of respondents reported having experienced malware, while the remaining 89.7% did not. The incidence of phishing is higher, with 19.2% of respondents having encountered phishing, compared to 80.8% who have not.

4.1.1. Malware Experience

The frequency of malware experience is similar between IT and non-IT respondents (seven and nine, respectively). Among management, two respondents reported malware experience, whereas fourteen non-managers did. This notable difference is expected given the smaller number of managers. Approximately 11% of non-managers have experienced malware, compared to approximately 8% of managers. Despite these observations, the differences for these two perspectives are minimal in terms of percentage points.

4.1.2. Phishing Experience

Phishing is a more prevalent issue compared to malware. Approximately 23% of non-IT employees have experienced phishing, in contrast to 16% of IT employees. Among management, nearly 27% have been targeted by phishing, compared to almost 18% of non-managers. These findings indicate that the differences in phishing experience percentages are more pronounced than those for malware. Non-IT employees and managers are more frequently affected by phishing than their counterparts.

4.1.3. Measurement Item Statistics

The statistical analysis of the survey provides insight into the central tendencies and variability of the items. Table 4 demonstrates the means and standard deviation for each measurement item.

Table 4.

Measurement Item Statistics (N = 156).

4.2. Reliability & Validity of Results

This section details the reliability and validity of the survey instrument. It covers correlation, internal consistency by means of Cronbach’s Alpha, and, subsequently, the convergent and discriminant validity.

Cronbach’s Alpha was calculated to assess reliability for each construct. Values of 0.6 and above are acceptable [131]. Although values below 0.7 are generally questioned, all item-total correlations exceeded 0.3, meeting the criteria [132]. All constructs are reliable, with the following values: SETA Program α = 0.64, Security Complexity α = 0.63, InfoSec Goals α = 0.90, SETA Design α = 0.83, and ISA α = 0.89.
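For readers who want to reproduce this type of reliability check outside SPSS, the following is a minimal Python sketch of the standard Cronbach's Alpha formula, α = k/(k − 1) × (1 − Σ item variances / variance of the summed scale). The function name and the Likert responses are hypothetical and serve only as an illustration; they are not the survey data.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's Alpha for a list of items.

    `items` holds one list of scores per item; position i in every
    inner list belongs to the same respondent.
    """
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    item_variances = [variance(scores) for scores in items]
    # Total score per respondent across all items of the construct.
    totals = [sum(respondent) for respondent in zip(*items)]
    return (k / (k - 1)) * (1 - sum(item_variances) / variance(totals))


# Hypothetical seven-point Likert answers of five respondents to three items.
responses = [
    [6, 7, 5, 6, 4],
    [6, 6, 5, 7, 4],
    [7, 7, 4, 6, 5],
]
alpha = cronbach_alpha(responses)
print(round(alpha, 3))
```

The sample variance (n − 1 denominator) is used for both the items and the total score, which is the usual convention.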

A correlation matrix (Appendix B) revealed significant associations between ISA and all independent variables except Negative Experience (r = 0.045). Potential multicollinearity issues were assessed with a Variance Inflation Factor (VIF) analysis, which confirmed no high multicollinearity (Appendix C) [133,134], thereby meeting one of the assumptions for linear regression.

For the convergent and discriminant validity, the Kaiser–Meyer–Olkin (KMO) test indicated sampling adequacy for factor analysis (KMO = 0.875, p < 0.001), meeting Kaiser’s (1974) criterion of >0.80. Confirmatory factor analysis in SmartPLS confirmed convergent validity, with AVE values exceeding 0.5, per Fornell and Larcker [130]. See Appendix D for detailed results.

The Harman’s single factor test for potential Common Method Variance bias showed that a single factor explained 32.738% of the total variance. Since this is well below the 50% threshold, it suggests that CMV is unlikely to significantly affect the results. The full results are detailed in Appendix E.

4.3. Inferential Statistics

In this section, the tested propositions will be demonstrated with an additional group comparison. Before the propositions were tested, the assumptions of (multiple) linear regression were checked (with the exception of multicollinearity, since that has already been checked for in the previous section). Furthermore, additional comparisons were executed between the four different demographic groups (IT employees, non-IT employees, managers, and non-managers).

The assumptions of linearity, the independence of errors, and the normal distribution of errors have been satisfactorily met. Linearity was confirmed through scatter plots, and the independence of errors was validated with a Durbin–Watson statistic of 2.24. Apart from some noticeable deviations at the lower and upper ends of the distribution, the P-P plot indicated a generally normal distribution of residuals. However, the assumption of homoscedasticity was violated, as shown by a cone-shaped pattern in the residuals plot. To correct this heteroscedasticity, a weighted regression was applied, using weights based on logarithmically transformed unstandardized residuals.
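As an illustration of the independence-of-errors check, the Durbin–Watson statistic can be computed from the ordered residuals as the sum of squared successive differences divided by the sum of squared residuals. The sketch below is a minimal Python version with hypothetical residuals (not the residuals of this study); values near 2 suggest no first-order autocorrelation, while values towards 0 or 4 suggest positive or negative autocorrelation, respectively.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic for residuals in observation order."""
    numerator = sum(
        (residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals))
    )
    denominator = sum(e * e for e in residuals)
    return numerator / denominator


# Hypothetical regression residuals, for illustration only.
residuals = [0.3, -0.2, 0.1, -0.4, 0.2, 0.0, -0.1, 0.3]
dw = durbin_watson(residuals)
print(round(dw, 2))
```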

4.3.1. Proposition Results

To test the propositions and regress the predictors on ISA, the following model has been used for the weighted regression:

Information Security Awareness = β0 + β1 × SETA Program + β2 × Security Complexity + β3 × Negative Experience + β4 × InfoSec Goals + β5 × SETA Design + ε

The regression model examines the factors that influence ISA. The coefficients (β1 to β5) indicate the influence of each independent variable on ISA, and β0 is the intercept. The error term (ε) captures the variation in ISA that is not explained by the independent variables. The regression analysis shows how these factors collectively determine employees’ ISA.

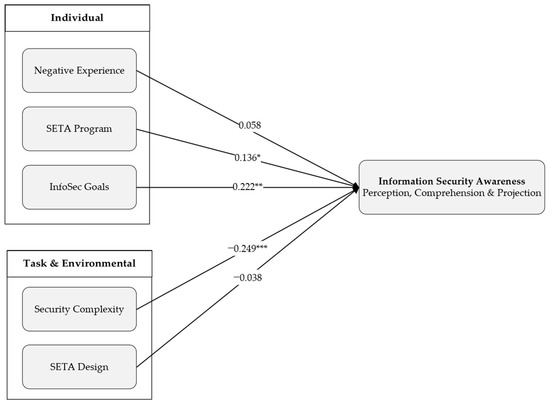

The regression model explained approximately 41% of the variance in ISA (R2 = 0.413); the R2adj value of 0.394 indicates that, after adjusting for the number of predictors, about 39% of the variation in ISA is jointly explained by the predictors. Thus, about 61% of the variation in ISA remains to be explained by other variables. The model summary indicates that the overall model is significant, F(5, 150) = 21.15, p < 0.001.
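The relationship between the reported model summary values can be checked with the textbook formulas for the adjusted R2 and the overall F statistic. The following minimal Python sketch assumes n = 156 respondents and k = 5 predictors, as stated in this paper; the function names are illustrative. Because the rounded R2 of 0.413 is used as input, and because the reported values stem from a weighted regression in SPSS, the outputs land close to, but not exactly on, the reported R2adj of 0.394 and F of 21.15.

```python
def adjusted_r2(r2, n, k):
    """Adjusted R-squared for n observations and k predictors."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


def f_statistic(r2, n, k):
    """Overall F statistic with (k, n - k - 1) degrees of freedom."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))


r2, n, k = 0.413, 156, 5  # values as reported in the text
adj = adjusted_r2(r2, n, k)
f_value = f_statistic(r2, n, k)
print(round(adj, 3), round(f_value, 2))
```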

Proposition 1.

Negative experience positively affects information security awareness.

The regression indicated that negative experience has no significant effect on ISA (β = 0.058, t = 0.477, p = 0.634), since the p-value exceeds the 5% significance cut-off. This proposition is thus not supported.

Proposition 2.

An SETA program positively affects information security awareness.

It was found that SETA Programs in general significantly predict ISA (β = 0.136, t = 2.402, p = 0.018). Since the associated p-value is below the 5% significance level, it is concluded that SETA Programs have a significant positive impact on ISA. Hence, if the SETA Program increases by one unit, the ISA increases by 0.136 units on average.

Proposition 3.

Information security goals positively affect information security awareness.

InfoSec Goals positively contribute to ISA (β = 0.222, t = 3.089, p = 0.002). Since 0.002 < 0.05, the effect is significant and the proposition is supported. The coefficient of InfoSec Goals is positive; that is to say, with a one-unit increase in InfoSec Goals, the ISA increases by 0.222 units on average.

Proposition 4.

Security complexity has a negative impact on information security awareness.

It was found that complexity makes a negative contribution to ISA (β = −0.249, t = −7.807, p < 0.001). This contribution was found to be significant, and this proposition is supported. It implies that when complexity increases by one unit, ISA decreases by 0.249 units on average.

Proposition 5.

Conforming to the SETA design interventions positively affects information security awareness.

SETA Design as a construct was not found to be significant (β = −0.038, t = −1.431, p = 0.155). Since the associated p-value exceeds 0.05, there is no evidence that SETA interventions that coincide with employees’ information needs and use various techniques contribute to ISA.

Table 5 presents the regression analysis results for all propositions, with significance levels indicating whether each proposition is supported.

Table 5.

Proposition Results.

Based on the research model, the order of impact on ISA (from strongest to weakest) is: Security Complexity, InfoSec Goals, and SETA Program (ceteris paribus). Figure 2 shows the path coefficients, which indicate the strength of each variable’s effect. The theoretical and practical implications that follow from this are discussed in the next section.

Figure 2.

Regression Path Analysis—* p < 0.05, ** p < 0.01, *** p < 0.001.

4.3.2. Additional Separate Regressions per Group

Separate regressions were conducted for each group (IT, non-IT, managers, and non-managers). This was done to identify potential group differences in the relationship between each independent variable and ISA. However, a series of comparisons increases the possibility of a Type I error. An alpha level of 0.05 was used in the prior section, but it cannot be used for this section due to the increased risk of errors [135]. Therefore, the Bonferroni Correction is applied to control the family-wise error, which would otherwise have a 26% chance of occurring. For the following comparisons, an alpha level below 0.008 will be used. Although this value is close to 0.01, using a less conservative value also helps prevent Type II errors. Thus, this value will be used.
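The two figures mentioned above (a family-wise error chance of about 26% and an adjusted alpha of about 0.008) can be reproduced with a short calculation. The sketch below assumes m = 6 comparisons, which is an inference from the reported numbers rather than a value stated explicitly in the text, and it assumes independent tests for the family-wise error formula 1 − (1 − α)^m. The function names are illustrative.

```python
def familywise_error_rate(alpha, m):
    """Chance of at least one Type I error across m independent tests."""
    return 1 - (1 - alpha) ** m


def bonferroni_alpha(alpha, m):
    """Per-comparison alpha under the Bonferroni Correction."""
    return alpha / m


m = 6  # ASSUMPTION: number of comparisons inferred from the reported 26% and 0.008
fwer = familywise_error_rate(0.05, m)
alpha_adjusted = bonferroni_alpha(0.05, m)
print(round(fwer, 3), round(alpha_adjusted, 4))
```

Under these assumptions, the uncorrected family-wise error rate is roughly 0.26 and the corrected per-comparison alpha is roughly 0.0083, in line with the values used in this section.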

Each of the three different perspectives includes two groups. All models were significant. The adjusted R² values of the separate models range between 0.337 and 0.452. For a concise overview, Table 6 presents the unstandardized beta coefficients and p-value indicators.

Table 6.

Regression Coefficients for Group Comparison.

4.3.3. Additional Mean Comparisons of ISA per Group

Last, the means of ISA were compared for each group. The assumptions for the t-tests were checked first. The cases in the sample are independent of each other and all represent individual employees, thus meeting the first assumption of independent observations. The IT and non-IT groups each have a sample size of ≥30, so that, following the Central Limit Theorem, the sampling distribution of the mean can be treated as approximately normal. Therefore, the second assumption is also met. The group of managers does not meet this assumption (n = 26); hence, the non-parametric Mann–Whitney U test was conducted for the manager comparison, since normality cannot be assumed for this group.

The third assumption of homogeneity of variances was checked using Levene’s test. The IT and non-IT groups meet this assumption (F = 0.126, p = 0.723).

IT vs. non-IT employees: The t-test revealed no significant differences in ISA between IT (M = 6.36, SD = 0.70) and non-IT employees (M = 6.33, SD = 0.57). The associated two-sided p-value was 0.802.

Managers vs. non-Managers: The differences between managers (Mean Rank = 80.21) and non-managers (Mean Rank = 78.16) were also not significant (U = 1645.5, z = −0.213, p = 0.831).
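The reported Mann–Whitney values can be related to each other through the normal approximation of U. The following minimal Python sketch uses the group sizes (26 managers, 130 non-managers) and the U value from the text; it applies no tie or continuity correction, so the z and p values are only approximately equal to the SPSS output. It also assumes that the reported U of 1645.5 is the U statistic of the non-manager group, with the complementary value belonging to the managers; this pairing is an assumption that happens to reproduce the reported mean ranks.

```python
from math import erfc, sqrt


def mann_whitney_normal_approx(u, n1, n2):
    """z and two-sided p for U (no tie or continuity correction)."""
    mean_u = n1 * n2 / 2
    sd_u = sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u
    p = erfc(abs(z) / sqrt(2))  # two-sided tail area of the standard normal
    return z, p


def mean_rank(u_group, n_group):
    """Mean rank of a group, given U_group = rank sum - n(n + 1) / 2."""
    return (u_group + n_group * (n_group + 1) / 2) / n_group


n_managers, n_non_managers = 26, 130
u_reported = 1645.5  # ASSUMPTION: U of the non-manager group
u_managers = n_managers * n_non_managers - u_reported  # complementary U

z, p = mann_whitney_normal_approx(u_reported, n_managers, n_non_managers)
rank_managers = mean_rank(u_managers, n_managers)
rank_non_managers = mean_rank(u_reported, n_non_managers)
print(round(z, 3), round(p, 3), round(rank_managers, 2), round(rank_non_managers, 2))
```

The small gap between this approximation and the reported z = −0.213 is consistent with a tie correction, which is common for Likert-based scores.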

Based on the conducted t-tests and Mann–Whitney U tests, no significant differences were found in ISA between IT and non-IT employees and managers and non-managers. This implies that, within the sample and variables studied, these demographic factors did not influence the awareness of information security.

5. Discussion

This section presents the key findings of this research by interpreting them and relating them to the literature. Afterward, the limitations of this research, its implications for theory and practice, and future research directions are discussed.

5.1. Key Findings

This research investigated the factors contributing to ISA based on an SA perspective. Literature review identified several antecedents forming a unique research model: SETA Program, Negative Experience, Security Complexity, SETA Design, and InfoSec Goals. Significant contributions to ISA were found from Security Complexity, SETA Program, and InfoSec Goals [36,45,56,67]. The study also used two group perspectives, as recommended by the literature, to tailor SETA efforts in order to improve ISA [20]. Additionally, it explored ISA differences across groups and their significance. The findings for each antecedent and their impacts are discussed.

Contrary to expectations, prior negative experiences did not significantly affect employees’ ISA. This finding deviates from two studies referred to in this research, which found that negative experiences enhanced ISA [36,88]. A possible explanation is that cyber-attacks have become more sophisticated over time [136], making past experiences less relevant against current threats. The descriptive statistics indicated that managers and non-IT employees encountered more negative experiences compared to their counterparts. This finding suggests that the role of past experiences in shaping ISA may diminish over time as threats become more sophisticated. Hence, this emphasizes the need for continuous updates in SETA efforts.

As for InfoSec Goals, they positively contributed to ISA. Employees who care about and engage in their firm’s information security tend to have higher ISA. This aligns with the SA theory, where comprehension is based on environmental perceptions [75]. Employees with strong InfoSec Goals develop a comprehensive understanding of information security and consider it a priority, leading to greater attentiveness and caution regarding potential risks [107]. This finding is consistent with the literature emphasizing the importance of employee commitment and involvement in information security [43,105,106]. This research adds to the literature by linking InfoSec Goals to ISA.

A remarkable finding was Security Complexity having a significant negative impact on ISA, with a strong adverse effect and a moderate negative correlation (r(154) = −0.49). Security Complexity was the only factor significant across all group perspectives and individual groups, with the highest negative impact observed in employees with lower salary grades (β = −0.290, p < 0.001). An increased workload due to higher complexity not only negatively affects SA by jeopardizing perception but also negatively affects IS compliance intentions [137], which is similar to our findings. The finding underscores the importance of minimizing Security Complexity for an improved ISA. This research also aligns with the findings in other research [42] on phishing and on job stress [111], showing that complexity generally reduces ISA and can lead to lower productivity and security fatigue [40,112,114].

SETA programs were found to have a significant contribution to ISA, although smaller than that of Security Complexity and InfoSec Goals. This aligns with previous studies highlighting the positive impact of SETA on ISA [19,40,93,94,95,103]. According to the SA theory, training primarily enhances comprehension, providing employees with the necessary knowledge to handle potential risks. Thus, SETA remains a critical and standardized component of information security for employees.

On the other hand, SETA Design, encompassing system and interface design, is complex to interpret. The construct measures employees’ experiences with methods (system design) and the quality and amount of information provided (interface design) to form their perception. While it was expected that better methods and information would increase ISA, the results were insignificant, likely due to varied employee opinions, reflected in the wide data variance (SD = 1.14). Effective SETA Design should match employees’ needs and foster long-term awareness, which may vary across different employee groups. Thus, while SETA is generally effective, its methods and information transfer require further investigation to foster long-term awareness. This finding is in line with other research [23].

In terms of mean comparisons, there was no significant difference in ISA between the groups within each perspective. Had such differences been present, the separate regression models could have been analysed further to find factors explaining why the levels of ISA differ, based on the antecedents, their significance levels, and the strength of the coefficients in the regression.

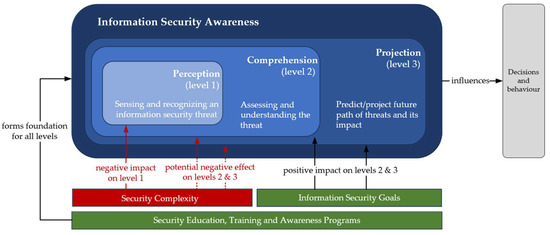

5.2. ISA and Findings in Relation to SA

In Figure 3, ISA is visually demonstrated from an SA perspective. Under the taxonomy, the three significant variables are placed, showing their relationship with the three levels of SA.

Figure 3.

Information Security Awareness from a Situation Awareness Perspective.

First, it demonstrates that Security Complexity is positioned under level 1, as Security Complexity, according to SA theory, poses a threat to the first level. However, it can also extend to levels 2 and 3. This positioning highlights the importance of minimizing complexity as a primary focus for ISA, which aligns with the goal of this research.

Second, InfoSec Goals are placed under comprehension and projection. Based on the literature, goals impact how attention is focused and how information is processed and interpreted to form comprehension. InfoSec Goals enable the employee to use the perceived elements from level 1 to assess the threat in the context of the organization/firm and understand the potential damage (level 3, projection). Thus, InfoSec Goals have a vital role in shaping ISA.

Lastly, SETA Program spans the entire taxonomy horizontally, indicating its contribution to all levels and forming a foundational basis. This implies that SETA Programs are crucial for level 1, which fuels the remaining two levels. At the same time, SETA Program for level 1 can be contrasted with Security Complexity and its potential to jeopardize level 1. This practical implication will be further explored in the next sections.

5.3. Research Limitations

This research is subject to several limitations, both methodological and theoretical. Methodologically, the reliability of measurement items posed a challenge. Although the Cronbach’s Alpha value of all items together was 0.828 (20 measurement items), the values for Security Complexity and SETA Program were 0.629 and 0.641, respectively. This indicates potential room for improvement. Additionally, the use of convenience sampling, while chosen for practical reasons, limits the external validity and generalizability of the findings. Notably, the underrepresentation of managers in the sample highlights a potential bias that could have been mitigated with a more robust sampling technique.

Additionally, the measurement of ISA and the other constructs was based on perceptions. The data obtained from the survey responses were self-reported, which can lead to an overestimation of capabilities. Research indicates that employees often overestimate their ISA, with a greater likelihood of the Dunning–Kruger effect as threats become more complex [138]. This limitation is addressed in the research recommendations in a later section. The final, theoretical limitation concerns the content of the SA theory. Endsley details limitations of information-processing mechanisms, such as attention and short-term, working, and long-term memory [75]. These structures were not explicitly integrated in the development and execution of this research.

Having established the significant factors influencing ISA and the limitations of this research, the next section details the theoretical implications of these findings.

5.4. Implications for Theory

The previous section has already discussed the extent to which the findings align with prior research. This study contributes to theory by applying the SA theory at an employee level within the context of information security. While existing research in the realm of SA and security has focused on cyber SA and various organizational levels such as management, networks, and firms, this research introduces novel constructs (SETA Design, InfoSec Goals, and Security Complexity) and propositions to explain ISA, which can serve as a foundation for future research. By shedding light on the cognitive factors influencing awareness in information security, this study provides new insights into the field of behavioral information security. Moreover, it opens avenues for criticism and research enhancements, fostering new perspectives. As ISA forms a crucial inception for IS behavior, this research enriches the underexplored investigations into ISA from a theoretical standpoint.

5.5. Implications for Practice

This research has also led to several practical implications. In this section, four main practical implications will be covered and positioned in the context of other relevant studies.

Practical Implication I—Simplify Complexity: Minimizing complexity is crucial, as indicated by the strong negative impact of Security Complexity on ISA found in this research. Beyond content and materials, complexity encompasses factors like workload, pressure, and knowledge gaps. Organizations can improve Level 1 awareness by simplifying communication, avoiding technical jargon, and ensuring that security education is accessible and relevant to specific employee groups. Tailoring security initiatives to different real-life contexts and employee roles enhances relevance and understanding. For instance, employees with specialized business knowledge can better grasp security concepts within their own work domains. This aligns with other findings [139], which suggest that individuals may continue engaging in risky behavior if they do not perceive the threat as undesirable and the solution as practical, rather than responding to a rational fear-based appeal. Hence, positioning SETA content as relevant, so that threats are perceived as more undesirable, could help. Furthermore, from an SA-theory perspective, this makes information more comprehensible. Customized complexity reduction strategies for various employee groups are essential, since employees could get overwhelmed by all the possible consequences of threats [58]. Additionally, making security training interactive and enjoyable can enhance engagement. Taking employee feedback into account is vital for improving SETA programs further.

Practical Implication II—Enhance InfoSec Goals: Increasing employee commitment to the IS of their organization is vital for strengthening ISA and establishing a robust human firewall, enabling the democratization of ISA. Organizations should prioritize fostering a culture of commitment to IS, aligning it with broader organizational objectives. This can be achieved through strategies such as emphasizing rewards over punishment for compliant behavior and IS culture within the organizational culture. In terms of using fear appeals to increase ISA, employees may also become less sensitive to fear appeals. In contrast, commitment and organizational citizenship create a positive reinforcement culture, where employees are rewarded for their compliant behavior [109]. In general, organizational culture and organizational commitment have a positive relationship with each other [140]. By integrating InfoSec Goals into the organizational culture, we believe employees are more likely to be intrinsically motivated to prioritize information security.

Practical Implication III—Set priorities for SETA Programs: Organizations should assess the effectiveness of their SETA programs and prioritize areas of improvement based on employee feedback and performance metrics. Organizations should also allocate resources to address other security concerns identified by employees. This includes diversifying SETA methods to accommodate employee preferences. This implication aligns well with other research [141], which has postulated the same.

Practical Implication IV—Using target groups for SETA practices: Organizations that want a more nuanced view of the results of their SETA practices (such as phishing simulations) could group employees and consider the differences between the groups. Based on the Negative Experience results, a larger share of managers than non-managers reported phishing experience (27% vs. 18%). It is challenging to explain why this is the case. However, it implies that the reasons for such differences can be investigated further per group and that practices can be adapted for each target group based on its statistics. This recommendation is in line with the recommendations of another study, which investigated the differences between employees and management from the same organisation [20].

5.6. Future Research Directions

The research offers several recommendations for future studies. The R2adj indicated that all variables collectively explain 39% of ISA’s variation, which implies that researchers can seek other constructs to explain ISA. Several of the following recommendations follow from this.

Further exploration of InfoSec Goals is recommended, considering its significance in this study. Research could delve into how these goals are formed and influenced, examining their relationship with information security climate and culture. Building on other findings, investigating organizational, cognitive, personal, and cultural factors can provide valuable insights into improving information security behavior [106,142]. Furthermore, it was found that top management can influence the behavior of employees by forming organizational cultures that are goal-oriented [142]. This matches neatly with the findings of this research and simultaneously calls for novel findings yielded by new research. These findings [142] are also stressed as a research recommendation in a more recent study [108]. This study constructed several propositions based on pre-existing work, concerning managers’ behavior and organizational culture affecting compliant behavior [108].

For Security Complexity, there is the potential for the deeper exploration of complexity through the lens of psychological distance. Research in this area, inspired by studies [143], could illuminate how the psychological distance of employees influences perceptions of complexity and information security incidents. Incorporating psychological perspectives can enhance understanding and practical implications for minimizing obstacles to ISA.

In terms of methodology, qualitative approaches are recommended, particularly for complex constructs like SETA Design, to gain deeper insights than quantitative surveys provide. Experimental research could also offer direct measurements of awareness, especially for abstract constructs like ISA, where subjects cannot manipulate their behavior. Alternatively, ISA could be measured by means of the Human Aspects of Information Security Questionnaire (HAIS-Q) [144]. This is recommended because, as noted earlier, ISA measures based on self-perception can lead respondents to overestimate their capabilities.

Conducting research in diverse firms across various sectors and sizes is advised to enrich the field of ISA. This allows for the broader application of theoretical frameworks across different domains.

Scholars are also encouraged to explore alternative research models that account for high correlations between the explanatory constructs. The correlations between SETA Design and SETA, r(154) = 0.66, p < 0.01, and between SETA and InfoSec Goals, r(154) = 0.57, p < 0.01, raised concerns about potential multicollinearity. Different models with varied relationships between constructs can offer new insights into ISA.

6. Conclusions

This research investigated which individual factors directly influence an employee’s ISA. To identify the antecedents, the theory of situation awareness (SA) was used as the theoretical lens. Subsequently, a survey was developed to quantitatively test the following main research question: “How and to what extent do cognitive and internal factors contribute to information security awareness (ISA)?”.

- Research Constructs & Method: Five explanatory constructs were incorporated to answer the research question, and their direct relationships with ISA were tested by means of weighted regression analyses. The constructs are Negative Experience, SETA Program, InfoSec Goals, Security Complexity, and SETA Design.

- Impact of constructs on ISA:

  - InfoSec Goals: Have a positive impact on ISA, demonstrating that employees with a strong commitment to information security are more aware.

  - SETA Program: Has a positive impact on ISA by providing necessary education and training.

  - Security Complexity: Has a negative impact on ISA, indicating that higher complexity in security measures can decrease awareness and increase cognitive overload.

- Significant Insights: Security Complexity emerged as the most significant factor, followed by InfoSec Goals and SETA Program, aligning with the three levels of ISA within the SA framework.

- Group Analyses:

  - Additional group analyses and separate regressions were conducted from two group perspectives: (1) IT and non-IT employees and (2) managers and non-managers.

  - The separate regressions applied a stricter significance level and showed that Security Complexity contributes significantly in each group.

  - Mean comparisons did not yield notable differences in ISA between groups.

This research has established novel constructs and propositions to explain how cognitive and internal factors influence employees’ ISA. These findings can inform future studies and help organizations, ultimately enhancing their overall security posture.

Author Contributions

Conceptualization, M.D. and E.B.; methodology, M.D. and E.B.; software, M.D.; validation, M.D.; formal analysis, M.D. and E.B.; investigation, M.D.; data curation, M.D.; writing—original draft preparation, M.D.; writing—review and editing, E.B.; visualization, M.D.; supervision, E.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available in this article.

Acknowledgments

We highly appreciate the opportunity to conduct this research at a large Dutch insurance firm that places strong emphasis on security awareness.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1.

Summary of ISA-focused research—Antecedent Dimensions: INST = institutional, IND = individual, ENV = (socio-)environmental, TECH = technological. Unit of Analysis: employee/end user. Dimensions derived from studies [36,81].

| Study | INST | IND | ENV | TECH | Theoretical Lens/Angle to Explain Awareness | Observation |

|---|---|---|---|---|---|---|

| [52] | X | X | Social Learning Theory | - | ||

| [36] | X | X | X | Combines elements of general deterrence theory and social psychology | - | |

| [35] | X | X | Relational Awareness | - | ||

| [80] | X | X | Innovation Diffusion Theory | - | ||

| [42] | X | X | Situation Awareness | Experimental, phishing context. Personality trait integration. | ||

| [37] | X | Leadership Styles | Consistent results: leadership positively influences ISA | |||

| [63] | X | X | Theory of Planned Behavior | |||

| [19] | X | Social Learning Theory | Educational information and channels positively influence ISA | |||

| [82] | X | Theory of Reasoned Action | ||||

| [45] | X | Organizational and security culture | - | |||

| [84] | X | Big Five Personality Traits Model | Consistent and similar results in demographic factors | |||

| [83] | X | |||||

| [55] | X | Demographic differences | ||||

| [72] | X | Collectivism | - | |||

| [85] | X | X | Demographic attributes and socioeconomic resources | - |

Appendix B

Table A2.

Pearson’s Correlation Matrix.

| | SETA | COMP | NEG | GOAL | SETAD | ISA |
|---|---|---|---|---|---|---|
| SETA Program | -- | | | | | |
| Security Complexity (COMP) | −0.102 | -- | | | | |
| Negative Experience (NEG) | 0.152 | 0.152 | -- | | | |
| InfoSec Goals (GOAL) | 0.568 ** | −0.112 | 0.160 * | -- | | |
| SETA Design (SETAD) | 0.661 ** | 0.040 | 0.100 | 0.453 ** | -- | |
| Information Security Awareness (ISA) | 0.402 ** | −0.493 ** | 0.045 | 0.550 ** | 0.184 * | -- |

**. Correlation is significant at the 0.01 level (two-tailed). *. Correlation is significant at the 0.05 level (two-tailed).
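
The significance markers in Table A2 can be approximately cross-checked from the coefficients alone. The sketch below is an illustration, not the procedure used in the study: it assumes n = 156 respondents (inferred from the reported r(154), so df = 154) and uses rounded two-tailed critical t values for that df. The function names are hypothetical.

```python
import math

# Assumption: df = n - 2 = 154, inferred from the reported r(154).
DF = 154

# Assumption: rounded two-tailed critical t values for df = 154.
T_CRIT_05 = 1.975
T_CRIT_01 = 2.608


def t_statistic(r: float, df: int = DF) -> float:
    """t statistic for testing whether a Pearson correlation differs from zero."""
    return r * math.sqrt(df / (1.0 - r * r))


def significance_stars(r: float, df: int = DF) -> str:
    """Return '**' (p < 0.01), '*' (p < 0.05), or '' for a two-tailed test."""
    t = abs(t_statistic(r, df))
    if t > T_CRIT_01:
        return "**"
    if t > T_CRIT_05:
        return "*"
    return ""


if __name__ == "__main__":
    # A few coefficients taken from Table A2.
    examples = [("SETA-GOAL", 0.568), ("NEG-GOAL", 0.160), ("SETA-NEG", 0.152)]
    for label, r in examples:
        print(f"{label}: r = {r:+.3f}, t = {t_statistic(r):.2f} {significance_stars(r)}")
```

Because the critical values are rounded, coefficients very close to a threshold should be checked with an exact t distribution instead.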

Appendix C

Table A3.

Collinearity Diagnostics.

| Model | Construct | Tolerance | VIF |
|---|---|---|---|
| 1 | SETA | 0.460 | 2.172 |
| | Complexity | 0.930 | 1.075 |
| | Negative Experience | 0.937 | 1.068 |
| | InfoSec Goals | 0.654 | 1.529 |
| | SETA Design | 0.540 | 1.852 |

Dependent Variable: ISA.
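
The two columns in Table A3 are related by VIF = 1 / Tolerance, where Tolerance is 1 minus the R² obtained when a construct is regressed on the other explanatory constructs. The sketch below recomputes the VIF values from the reported tolerances. The cutoff of 5 is a commonly cited rule of thumb and is an assumption here, not a criterion stated in this study. The names `vif` and `VIF_THRESHOLD` are illustrative.

```python
# Tolerance values as reported in Table A3.
tolerance = {
    "SETA": 0.460,
    "Complexity": 0.930,
    "Negative Experience": 0.937,
    "InfoSec Goals": 0.654,
    "SETA Design": 0.540,
}

# Assumption: a common rule-of-thumb cutoff, not taken from the study.
VIF_THRESHOLD = 5.0


def vif(tol: float) -> float:
    """Variance inflation factor from tolerance (tolerance = 1 - R^2)."""
    if not 0.0 < tol <= 1.0:
        raise ValueError("tolerance must lie in (0, 1]")
    return 1.0 / tol


vif_values = {name: vif(tol) for name, tol in tolerance.items()}

if __name__ == "__main__":
    for name, value in vif_values.items():
        flag = "ok" if value < VIF_THRESHOLD else "check"
        print(f"{name}: VIF = {value:.3f} ({flag})")
```

Small differences in the third decimal relative to Table A3 are expected, since the table's tolerances are themselves rounded.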

Appendix D. Convergent & Discriminant Validity

Initially, the Kaiser–Meyer–Olkin (KMO) test was utilized to assess the adequacy of sampling for factor analysis. A KMO value exceeding 0.80 is indicative of excellent suitability for factor analysis [145]. In this study, the KMO value for all items collectively yielded 0.875, and Bartlett’s Test of Sphericity returned a significant result (p < 0.001).

Subsequently, confirmatory factor analysis was conducted using SmartPLS. Convergent validity was evaluated based on the Average Variance Extracted (AVE), with a criterion set by Fornell and Larcker [130] suggesting that AVE values should surpass 0.5. The table below presents the square root of the AVE values, highlighted in bold.

Table A4.

Square Root of AVE Values and Inter-Construct Correlations.

| | AVE | COMP | GOAL | ISA | SETA | SETAD |
|---|---|---|---|---|---|---|
| COMP | 0.568 | **0.753** | | | | |
| GOAL | 0.858 | −0.145 | **0.926** | | | |
| ISA | 0.658 | −0.495 | 0.607 | **0.811** | | |
| SETA | 0.579 | −0.286 | 0.582 | 0.53 | **0.761** | |
| SETAD | 0.601 | −0.012 | 0.468 | 0.259 | 0.557 | **0.775** |

Table A4 shows that all AVE values exceed 0.5, which indicates convergent validity. In addition, each bold value (the square root of the AVE) exceeds the correlations in its row and column. Hence, discriminant validity is indicated.
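
The two checks described above can be reproduced from the values in Table A4. This is a minimal sketch of the Fornell and Larcker criterion using the reported, rounded numbers. It is not the SmartPLS procedure itself, and the function names are illustrative.

```python
import math

# AVE per construct, as reported in Table A4.
ave = {"COMP": 0.568, "GOAL": 0.858, "ISA": 0.658, "SETA": 0.579, "SETAD": 0.601}

# Inter-construct correlations (lower triangle of Table A4).
correlations = {
    ("GOAL", "COMP"): -0.145,
    ("ISA", "COMP"): -0.495,
    ("ISA", "GOAL"): 0.607,
    ("SETA", "COMP"): -0.286,
    ("SETA", "GOAL"): 0.582,
    ("SETA", "ISA"): 0.53,
    ("SETAD", "COMP"): -0.012,
    ("SETAD", "GOAL"): 0.468,
    ("SETAD", "ISA"): 0.259,
    ("SETAD", "SETA"): 0.557,
}


def convergent_validity(ave_values: dict, cutoff: float = 0.5) -> bool:
    """True if every construct's AVE exceeds the cutoff."""
    return all(value > cutoff for value in ave_values.values())


def discriminant_validity(ave_values: dict, corr: dict) -> bool:
    """True if sqrt(AVE) of both constructs exceeds each |correlation| between them."""
    for (a, b), r in corr.items():
        limit = min(math.sqrt(ave_values[a]), math.sqrt(ave_values[b]))
        if abs(r) >= limit:
            return False
    return True


if __name__ == "__main__":
    for name, value in ave.items():
        print(f"{name}: AVE = {value:.3f}, sqrt(AVE) = {math.sqrt(value):.3f}")
    print("convergent validity:", convergent_validity(ave))
    print("discriminant validity:", discriminant_validity(ave, correlations))
```

The recomputed square roots can differ from the table in the third decimal because the AVE values used here are already rounded.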

Appendix E. Harman’s Single Factor Test Results

The results of Harman’s single factor test indicated that a single factor accounted for 32.738% of the variance. Values below 50% are considered acceptable with respect to common method variance (CMV), so this result falls within the acceptable range [146].

Table A5.

Principal Component Analysis (Total Variance Explained).

| Component | Initial Eigenvalues: Total | Initial Eigenvalues: % of Variance | Initial Eigenvalues: Cumulative % | Extraction Sums of Squared Loadings: Total | Extraction Sums of Squared Loadings: % of Variance | Extraction Sums of Squared Loadings: Cumulative % |
|---|---|---|---|---|---|---|
| 1 | 7.202 | 32.738 | 32.738 | 7.202 | 32.738 | 32.738 |
| 2 | 3.646 | 16.571 | 49.309 | | | |
| 3 | 1.677 | 7.625 | 56.933 | | | |
| 4 | 1.134 | 5.155 | 62.088 | | | |
| 5 | 1.044 | 4.745 | 66.833 | | | |
| 6 | 0.912 | 4.144 | 70.977 | | | |
| 7 | 0.766 | 3.483 | 74.460 | | | |
| 8 | 0.686 | 3.119 | 77.579 | | | |
| 9 | 0.647 | 2.941 | 80.520 | | | |
| 10 | 0.584 | 2.655 | 83.175 | | | |
| 11 | 0.555 | 2.521 | 85.696 | | | |
| 12 | 0.465 | 2.115 | 87.811 | | | |
| 13 | 0.432 | 1.962 | 89.773 | | | |
| 14 | 0.387 | 1.758 | 91.532 | | | |
| 15 | 0.358 | 1.629 | 93.161 | | | |
| 16 | 0.307 | 1.396 | 94.557 | | | |
| 17 | 0.306 | 1.392 | 95.949 | | | |
| 18 | 0.239 | 1.084 | 97.033 | | | |
| 19 | 0.215 | 0.978 | 98.012 | | | |
| 20 | 0.196 | 0.890 | 98.902 | | | |
| 21 | 0.160 | 0.728 | 99.629 | | | |
| 22 | 0.082 | 0.371 | 100.000 | | | |

Extraction Method: Principal Component Analysis.
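
The share of variance attributed to the first component follows directly from the eigenvalues: it is the first eigenvalue divided by the sum of all eigenvalues, which equals the number of items (22) when the analysis is run on a correlation matrix. The sketch below recomputes that share and applies the 50% threshold mentioned above. Eigenvalues below 1 are written with a leading "0." here, an assumption that is consistent with the reported percentages. The function names are illustrative.

```python
# Initial eigenvalues of the 22 components (values below 1 written as 0.xxx,
# an assumption consistent with the reported "% of Variance" column).
eigenvalues = [
    7.202, 3.646, 1.677, 1.134, 1.044, 0.912, 0.766, 0.686, 0.647, 0.584,
    0.555, 0.465, 0.432, 0.387, 0.358, 0.307, 0.306, 0.239, 0.215, 0.196,
    0.160, 0.082,
]

# Threshold for the single-factor share, as stated in the text (50%).
CMV_THRESHOLD_PERCENT = 50.0


def first_factor_share(eigs: list) -> float:
    """Percentage of total variance explained by the first component."""
    return 100.0 * eigs[0] / sum(eigs)


def passes_harman(eigs: list, threshold: float = CMV_THRESHOLD_PERCENT) -> bool:
    """True if the first component explains less than the threshold share."""
    return first_factor_share(eigs) < threshold


if __name__ == "__main__":
    print(f"sum of eigenvalues: {sum(eigenvalues):.3f}")
    print(f"first-factor share: {first_factor_share(eigenvalues):.3f}%")
    print("within acceptable range:", passes_harman(eigenvalues))
```

The recomputed share can deviate from 32.738% in the third decimal because the eigenvalues are rounded.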

Appendix F

Table A6.

Basic Concepts of INTRODUCTION.

| Term | Definition | Reference |
|---|---|---|
| Cybersecurity | “Prevention of damage to, protection of, and restoration of computers, electronic communications systems, electronic communications services, wire communication, and electronic communication, including information contained therein, to ensure its availability, integrity, authentication, confidentiality, and nonrepudiation”. | [147] |
| Cyber resilience | “Cyber resilience refers to the ability to continuously deliver the intended outcome despite adverse cyber events.” | [148] (p. 2) |
| Information security | “The protection of information, which is an asset, from possible harm resulting from various threats and vulnerabilities”. | [149] (p. 4) |
| Information security policies (ISPs) | Information security policies are formalized documents that outline the rules and guidelines for protecting an organization’s information and technology resources. These policies aim to ensure that employees understand their roles and responsibilities in maintaining the security of the organization’s information systems. | [25] |
| Information systems | “Information systems are interrelated components working together to collect, process, store, and disseminate information to support decision making, coordination, control, analysis, and visualization in an organization.” | [150] (p. 44) |

References

- Admass, W.S.; Munaye, Y.Y.; Diro, A. Cyber security: State of the art, challenges and future directions. Cyber Secur. Appl. 2023, 2, 100031.

- Thakur, M. Cyber Security Threats and Countermeasures in Digital Age. J. Appl. Sci. Educ. (JASE) 2024, 4, 1–20.

- Gartner. Top Trends in Cybersecurity for 2024; Gartner: Stamford, CT, USA, 2024; Available online: https://www.gartner.com/en/cybersecurity/trends/cybersecurity-trends (accessed on 23 June 2024).

- Borkovich, D.; Skovira, R. Working from Home: Cybersecurity in the Age of Covid-19. Issues Inf. Syst. 2020, 21, 234–246.

- Weil, T.; Murugesan, S. IT risk and resilience—Cybersecurity response to COVID-19. IT Prof. 2020, 22, 4–10.

- Saleous, H.; Ismail, M.; AlDaajeh, S.H.; Madathil, N.; Alrabaee, S.; Choo, K.K.R.; Al-Qirim, N. COVID-19 pandemic and the cyberthreat landscape: Research challenges and opportunities. Digit. Commun. Netw. 2023, 9, 211–222.

- Gartner. Top Trends in Cybersecurity 2022; Gartner: Stamford, CT, USA, 2022.

- Almansoori, A.; Al-Emran, M.; Shaalan, K. Exploring the Frontiers of Cybersecurity Behaviour: A Systematic Review of Studies and Theories. Appl. Sci. 2023, 13, 5700.

- Bowen, B.M.; Devarajan, R.; Stolfo, S. Measuring the human factor of cyber security. In Proceedings of the 2011 IEEE International Conference on Technologies for Homeland Security (HST), Waltham, MA, USA, 15–17 November 2011; pp. 230–235.

- Onumo, A.; Ullah-Awan, I.; Cullen, A. Assessing the moderating effect of security technologies on employees compliance with cybersecurity control procedures. ACM Trans. Manag. Inf. Syst. 2021, 12, 11.

- Jeong, C.Y.; Lee, S.Y.T.; Lim, J.H. Information security breaches and IT security investments: Impacts on competitors. Inf. Manag. 2019, 56, 681–695.

- Alsharida, R.A.; Al-rimy, B.A.S.; Al-Emran, M.; Zainal, A. A systematic review of multi perspectives on human cybersecurity behaviour. Technol. Soc. 2023, 73, 102258.

- Cram, W.A.; D’Arcy, J. ‘What a waste of time’: An examination of cybersecurity legitimacy. Inf. Syst. J. 2023, 33, 1396–1422.

- Baltuttis, D.; Teubner, T.; Adam, M.T. A typology of cybersecurity behaviour among knowledge workers. Comput. Secur. 2024, 140, 103741.