An Anomaly Detection Approach to Determine Optimal Cutting Time in Cheese Formation

Abstract

1. Introduction

- Investigation of the optimal cutting time: We conducted a feasibility study by introducing a novel AD-based approach to determine the optimal cutting time during curd formation in cheese production.

- Development of a one-class Fully Convolutional Data Description Network: We proposed and implemented a one-class FCDDN that identifies curd formation by treating it as an anomaly with respect to milk in its normal state.

- Comparison with shallow AD methods: We compared the proposed approach with shallow learning methods on different sets of images to assess its robustness in this scenario.

- High accuracy in AD: The proposed approach achieved encouraging results, with F1 scores of up to 0.92, demonstrating the effectiveness of the method.

- Application in the dairy industry: This work investigates whether the curd-firming time can be identified with an AD-based approach and, at the same time, aims to provide a non-invasive, non-destructive, and technologically advanced solution.

2. Related Work

2.1. Automated Methods in Dairy Industry

2.2. Anomaly Detection

2.2.1. Statistical Methods

2.2.2. Machine Learning-Based Methods

- Clustering-based methods and density estimation: Techniques such as k-means and DBSCAN for clustering and Gaussian Mixture Models for density estimation are commonly used for AD without requiring labeled data. These methods detect outliers by identifying deviations from normal data distributions. However, they can struggle with high-dimensional or sparse data and are sensitive to parameter settings [24,25].

- Unsupervised learning techniques: When labeled anomalous data are unavailable, modifications of classic ML algorithms are employed for AD. Notable examples include one-class SVM (OCSVM) [26] and Isolation Forest (IF) [27,28], which are adaptations of Support Vector Machines (SVMs) and Random Forest (RF), respectively.

- Ensemble and hybrid approaches: Ensemble methods enhance AD performance and robustness by combining multiple algorithms. Techniques such as IF [27,28] and the Local Outlier Factor [29,30] utilize ensemble principles, aggregating results from several base learners to identify anomalies. Hybrid approaches, which integrate various AD techniques, further improve detection accuracy and reliability by leveraging the strengths of each method [31]. In industrial applications, hybrid methods involving both ML and DL techniques have been proposed. For instance, Wang et al. [32] introduced a loss switching fusion network that combines spatiotemporal descriptors, applying it as an AD approach for classifying background and foreground motions in outdoor scenes.
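The ensemble principle behind IF [27] can be made concrete with a small sketch. The pure-Python code below is an illustrative reimplementation, not the implementation used in this work: each isolation tree recursively splits a random subsample of size ψ with random axis-aligned cuts, and the anomaly score s(x) = 2^(−E[h(x)]/c(ψ)) aggregates the path lengths h(x) over all trees, where c(ψ) is the average path length of an unsuccessful binary-search-tree lookup. The function names, the 100 trees, ψ = 64, and the toy Gaussian data are assumptions chosen for illustration.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def c(n: int) -> float:
    """Average path length of an unsuccessful BST search among n points (normalizer)."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


def build_tree(points, depth, max_depth, rng):
    """Recursively isolate points with random axis-aligned splits."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    dim = rng.randrange(len(points[0]))
    lo = min(p[dim] for p in points)
    hi = max(p[dim] for p in points)
    if lo == hi:  # all points share this feature value; stop here (a simplification)
        return {"size": len(points)}
    split = rng.uniform(lo, hi)
    return {
        "dim": dim,
        "split": split,
        "left": build_tree([p for p in points if p[dim] < split], depth + 1, max_depth, rng),
        "right": build_tree([p for p in points if p[dim] >= split], depth + 1, max_depth, rng),
    }


def path_length(x, node, depth=0):
    """Depth at which x reaches a leaf, plus c(size) for the points left unresolved there."""
    if "split" not in node:
        return depth + c(node["size"])
    child = node["left"] if x[node["dim"]] < node["split"] else node["right"]
    return path_length(x, child, depth + 1)


def isolation_forest(data, n_trees=100, sample_size=64, seed=0):
    """Fit an ensemble of isolation trees and return a scoring function.

    Scores lie in (0, 1]; values close to 1 indicate likely anomalies.
    Requires at least 2 data points.
    """
    rng = random.Random(seed)
    psi = min(sample_size, len(data))
    max_depth = math.ceil(math.log2(psi))
    trees = [build_tree(rng.sample(data, psi), 0, max_depth, rng) for _ in range(n_trees)]

    def score(x):
        mean_depth = sum(path_length(x, t) for t in trees) / len(trees)
        return 2.0 ** (-mean_depth / c(psi))

    return score


if __name__ == "__main__":
    rng = random.Random(1)
    normal = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(256)]
    score = isolation_forest(normal)
    # The far-away point should receive a higher score than the central one.
    print(round(score((0.0, 0.0)), 2), round(score((6.0, 6.0)), 2))
```

Points isolated after only a few splits receive scores near 1, while points deep inside the data receive scores well below 0.5. In practice, an established library implementation would normally be preferred over a sketch like this one.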

2.2.3. Deep Learning-Based Methods

- Autoencoder-based architectures: Autoencoders, including Variational Autoencoders (VAEs) [36], are a popular choice for AD in CV. They learn to encode input data into a compact latent representation (a probability distribution over latent codes, in the case of VAEs) and then reconstruct the data from this representation. Anomalies are detected based on the reconstruction error, as anomalous data typically result in higher reconstruction errors than normal data [37,38].

- Generative Adversarial Networks (GANs): In a typical GAN setup, a generator network creates synthetic data, while a discriminator network attempts to distinguish between real and generated data. For AD, the GAN is trained on normal data, so the generator learns to produce data that mimic the normal data distribution. Anomalies can then be identified by how poorly the generator can reproduce a test sample or by how strongly the discriminator rejects it: because the generator models only normal data, it fails to represent outliers accurately, which yields high anomaly scores. GANs have been successfully applied to AD tasks [39,40].

- Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks: For sequential data, such as video frames or time series, RNNs and LSTM networks are particularly effective due to their ability to capture temporal dependencies [41,42]. These networks maintain a memory of previous inputs, allowing them to understand context over time. In the context of AD, RNNs and LSTMs can model the normal sequence of events or patterns. Anomalies are detected when the predicted sequence deviates significantly from the actual observed sequence [43].

- Convolutional neural networks: CNNs are widely used, including in AD, because of their powerful capability to extract features from image data [44]. By learning hierarchical feature representations, CNNs can detect subtle anomalies in visual data that may not be apparent to traditional methods. In AD, CNNs are often combined with other architectures, such as autoencoders or GANs, to enhance detection accuracy [45].

- Attention mechanisms and transformers: Attention mechanisms and transformer models, initially proposed for natural language processing tasks, have been adapted for CV and AD. These models can focus on relevant parts of the input data, improving the detection of anomalies in complex scenes [46]. Transformers, with their self-attention layers, have shown remarkable success in modeling dependencies and identifying anomalies in high-dimensional data [47].

- Self-supervised and unsupervised learning: DL methods for AD often rely on self-supervised [48,49] and unsupervised [34,50] learning approaches, in which the model learns useful representations without requiring labeled data. Techniques such as contrastive learning and pretext tasks enable the model to learn discriminative features that are effective for identifying anomalies, which is especially valuable when labeled anomalous data are scarce or unavailable.

- Hybrid models: Recent advancements have explored hybrid models that combine multiple DL architectures to leverage their individual strengths [50,51]. For instance, combining CNNs with LSTMs allows the model to capture both spatial and temporal features, improving robustness [52,53]. Similarly, integrating VAEs with GANs can enhance the model’s ability to generate realistic data and detect anomalies based on reconstruction errors and adversarial loss, particularly on time series data [54].
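The reconstruction-error criterion used by autoencoder-based detectors can be illustrated without training a deep network. The NumPy sketch below substitutes a linear autoencoder for a VAE: with a squared-error loss, the optimal linear encoder and decoder span the top-k principal subspace of the training data, so the fit reduces to a singular value decomposition. The model is fitted on normal samples only, and a test sample is flagged when its reconstruction error exceeds the 99th percentile of the training errors. The latent size k = 2, the percentile threshold, the function names, and the synthetic data are illustrative assumptions.

```python
import numpy as np


def fit_linear_autoencoder(x_normal: np.ndarray, k: int):
    """Fit on normal data only; returns (mean, W) with W (d x k) spanning the top-k PCs."""
    mean = x_normal.mean(axis=0)
    # Rows of vt are principal directions, sorted by decreasing singular value.
    _, _, vt = np.linalg.svd(x_normal - mean, full_matrices=False)
    return mean, vt[:k].T


def reconstruction_error(x: np.ndarray, mean: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Squared L2 distance between each sample and its encode/decode reconstruction."""
    z = (x - mean) @ w        # encoder: project onto the k-dimensional latent space
    x_hat = z @ w.T + mean    # decoder: map latent codes back to input space
    return np.sum((x - x_hat) ** 2, axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic "normal" data lying close to a 2-D plane in a 10-D space.
    basis = rng.normal(size=(2, 10))
    x_normal = rng.normal(size=(500, 2)) @ basis + 0.05 * rng.normal(size=(500, 10))
    mean, w = fit_linear_autoencoder(x_normal, k=2)
    threshold = np.percentile(reconstruction_error(x_normal, mean, w), 99)
    x_test = np.vstack([rng.normal(size=(1, 2)) @ basis,    # lies on the normal plane
                        3.0 * rng.normal(size=(1, 10))])    # generic off-plane point
    print(reconstruction_error(x_test, mean, w) > threshold)  # anomaly flag per test sample
```

A VAE or convolutional autoencoder replaces the two matrix products with learned nonlinear networks, but the scoring rule (a large reconstruction error indicates a likely anomaly) stays the same.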

3. Materials and Methods

3.1. Dataset

3.2. Feature Extraction

3.2.1. Handcrafted Features

- Chebyshev moments (CHs): Introduced by Mukundan et al. [56] and derived from Chebyshev polynomials, they were employed with moments of both the first kind (CH_1) and the second kind (CH_2), of order 5.

- Zernike moments (ZMs): As described by Oujaoura et al. [59] and derived from Zernike polynomials, they were applied with order 6 and a repetition of 4.

- Haar features (Haar): Consisting of adjacent rectangles with alternating positive and negative polarities, they were used in various forms, such as edge features, line features, four-rectangle features, and center-surround features. Haar features play a crucial role in cascade classifiers as part of the Viola–Jones object-detection framework [60].

- Rotation-invariant Haralick features (HARri): Thirteen Haralick features [61], derived from the Gray-Level Co-occurrence Matrix (GLCM), were transformed into rotation-invariant features [62]. This transformation involved computing the GLCM for multiple distances d and angular orientations θ.
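The GLCM computation behind the HARri features can be sketched as follows. This NumPy example builds a symmetric, normalized co-occurrence matrix for one displacement (distance d along an angle θ), computes two of the thirteen Haralick features, contrast and energy (angular second moment), and makes them insensitive to rotation by averaging over the four orientations 0°, 45°, 90°, and 135°. Averaging is only one simple strategy assumed for illustration; the rotation-invariant transformation of [62] and the distances and angles used in this work may differ, and the 8 gray levels and function names are likewise assumptions.

```python
import numpy as np


def offsets(d: int):
    """(row, col) displacements for distance d at 0, 45, 90 and 135 degrees."""
    return [(0, d), (-d, d), (-d, 0), (-d, -d)]


def glcm(img: np.ndarray, dr: int, dc: int, levels: int) -> np.ndarray:
    """Symmetric, normalized gray-level co-occurrence matrix for one offset.

    img must contain integer gray levels in [0, levels - 1].
    """
    rows, cols = img.shape
    # Keep only pixel pairs where both (r, c) and (r + dr, c + dc) are inside the image.
    r0, r1 = max(0, -dr), min(rows, rows - dr)
    c0, c1 = max(0, -dc), min(cols, cols - dc)
    first = img[r0:r1, c0:c1]
    second = img[r0 + dr:r1 + dr, c0 + dc:c1 + dc]
    counts = np.zeros((levels, levels), dtype=float)
    np.add.at(counts, (first.ravel(), second.ravel()), 1.0)
    counts = counts + counts.T  # count each pair in both directions (symmetric GLCM)
    return counts / counts.sum()


def contrast(p: np.ndarray) -> float:
    """Haralick contrast: sum of p(i, j) * (i - j)^2."""
    i, j = np.indices(p.shape)
    return float(np.sum(p * (i - j) ** 2))


def energy(p: np.ndarray) -> float:
    """Angular second moment: sum of p(i, j)^2."""
    return float(np.sum(p ** 2))


def rotation_averaged_features(img: np.ndarray, d: int = 1, levels: int = 8) -> np.ndarray:
    """Average [contrast, energy] over the four standard orientations."""
    feats = [(contrast(p), energy(p))
             for p in (glcm(img, dr, dc, levels) for dr, dc in offsets(d))]
    return np.mean(feats, axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.integers(0, 8, size=(32, 32))  # hypothetical image quantized to 8 levels
    print(rotation_averaged_features(img))
    print(rotation_averaged_features(np.rot90(img)))  # same values after a 90-degree rotation
```

A 90° rotation maps the 0° offset onto the 90° offset and the 45° offset onto the 135° offset, so the averaged values are unchanged; arbitrary rotations are only approximately handled by this scheme.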

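To illustrate the ZM bullet above, the NumPy sketch below computes one Zernike moment Z_nm of a square grayscale image, using the order n = 6 and repetition m = 4 given in the text. Pixels are mapped onto the unit disk inscribed in the image and those outside it are discarded; this mapping, the discrete normalization, and the function names are common choices assumed here, not details taken from this work. The magnitude |Z_nm| is invariant to image rotation, which the 90° check at the end exercises.

```python
import math

import numpy as np


def radial_poly(n: int, m: int, rho: np.ndarray) -> np.ndarray:
    """Zernike radial polynomial R_nm(rho); needs |m| <= n and n - |m| even."""
    m = abs(m)
    out = np.zeros_like(rho, dtype=float)
    for s in range((n - m) // 2 + 1):
        coeff = ((-1) ** s * math.factorial(n - s)
                 / (math.factorial(s)
                    * math.factorial((n + m) // 2 - s)
                    * math.factorial((n - m) // 2 - s)))
        out += coeff * rho ** (n - 2 * s)
    return out


def zernike_moment(img: np.ndarray, n: int, m: int) -> complex:
    """Z_nm = (n + 1) / pi * sum of f(x, y) * conj(V_nm(rho, theta)) over the unit disk."""
    size = img.shape[0]  # assumes a square image
    coords = (np.arange(size) - (size - 1) / 2) / (size / 2)  # pixel centers in [-1, 1]
    x, y = np.meshgrid(coords, coords)
    rho = np.hypot(x, y)
    theta = np.arctan2(y, x)
    inside = rho <= 1.0  # discard pixels outside the inscribed unit disk
    conj_basis = radial_poly(n, m, rho) * np.exp(-1j * m * theta)
    pixel_area = (2.0 / size) ** 2  # area of one pixel in normalized coordinates
    return (n + 1) / math.pi * np.sum(img[inside] * conj_basis[inside]) * pixel_area


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.random((64, 64))
    z = zernike_moment(img, n=6, m=4)
    z_rot = zernike_moment(np.rot90(img), n=6, m=4)
    print(abs(z), abs(z_rot))  # equal up to floating-point rounding
```

Since n − |m| must be even and |m| ≤ n, the pair (6, 4) is valid, and R_64(ρ) = 6ρ^6 − 5ρ^4. Rotating the image only changes the phase of the complex moment, so it is the magnitude that serves as a rotation-invariant feature.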
3.2.2. Deep Features

3.3. Classification Methods

3.3.1. One-Class SVM

3.3.2. Isolation Forest

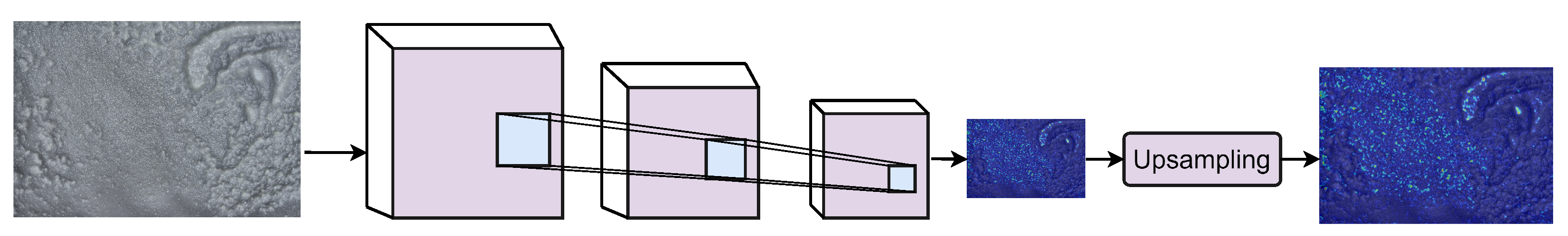

3.3.3. FCDD Network

3.4. Evaluation Measures

- True negatives (TNs): instances correctly predicted as negative.

- False positives (FPs): instances incorrectly predicted as positive.

- False negatives (FNs): instances incorrectly predicted as negative.

- True positives (TPs): instances correctly predicted as positive.

- Precision (P): the fraction of positive instances correctly classified among all instances classified as positive: $P = \frac{TP}{TP + FP}$.

- Recall (R) (or sensitivity, also known as the true positive rate): the fraction of actual positive instances that are correctly classified as positive: $R = \frac{TP}{TP + FN}$.

- F1 score (F1): the harmonic mean of precision and recall: $F1 = \frac{2 \cdot P \cdot R}{P + R}$.
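These three measures follow directly from the confusion-matrix counts: P = TP/(TP + FP), R = TP/(TP + FN), and F1 = 2PR/(P + R). The short Python sketch below computes them; the function name and the example counts are hypothetical and do not correspond to any result reported in this work, and mapping zero denominators to 0 is a convention assumed here.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Return (precision, recall, F1) from confusion-matrix counts.

    Undefined ratios (zero denominators) are returned as 0.0 by convention.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical counts: 18 positives found, 2 false alarms, 6 positives missed.
p, r, f1 = precision_recall_f1(tp=18, fp=2, fn=6)
print(f"P={p:.2f} R={r:.2f} F1={f1:.2f}")  # P=0.90 R=0.75 F1=0.82
```

Since the results tables report precision, recall, and F1 separately for the non-target and target classes, the same function can be applied twice, treating each class in turn as the positive one.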

4. Experimental Results

4.1. Experimental Setup

4.2. Quantitative Results

4.2.1. Results with ML Approaches and HC Features

4.2.2. Results with ML Approaches and Deep Features

4.2.3. Results with FCDDN

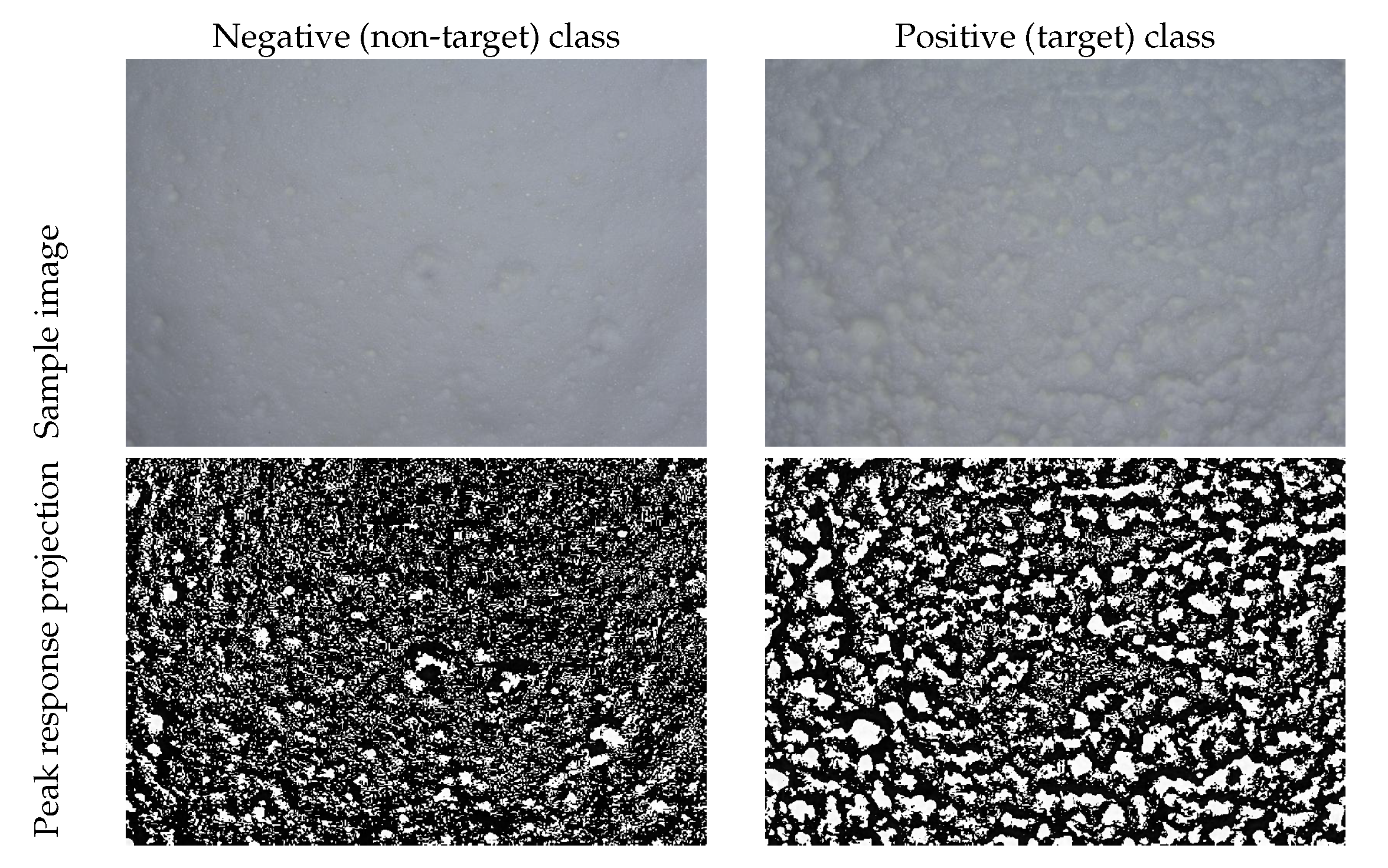

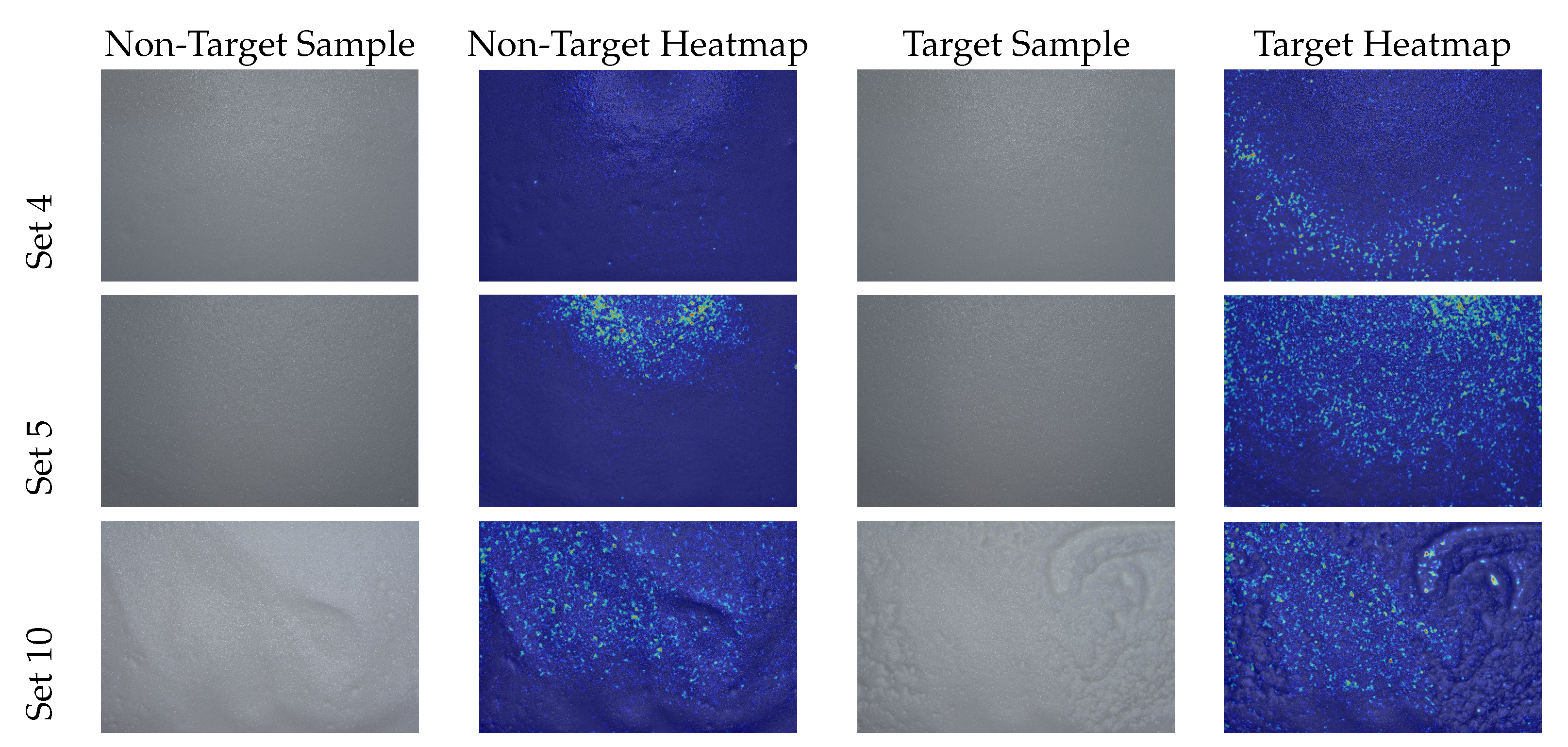

4.3. Qualitative Results

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CV | computer vision |
| AD | anomaly detection |
| DL | deep learning |
| FCDDN | Fully Convolutional Data Description Network |
| HC | handcrafted |
| CNN | convolutional neural network |
| CH | Chebyshev moment |
| LM | Legendre moment |
| ZM | Zernike moment |
| Haar | Haar feature |
| HARri | rotation-invariant Haralick features |
| LBP | Local Binary Pattern |
| Hist | grayscale histogram feature |
| OCSVM | one-class SVM |
| IF | Isolation Forest |
| VAE | Variational Autoencoder |
| GAN | Generative Adversarial Network |
| RNN | Recurrent Neural Network |
| LSTM | Long Short-Term Memory |

References

- Lei, T.; Sun, D.W. Developments of Nondestructive Techniques for Evaluating Quality Attributes of Cheeses: A Review. Trends Food Sci. Technol. 2019, 88, 527–542.

- Castillo, M. Cutting Time Prediction Methods in Cheese Making; Taylor & Francis Group: Oxford, UK, 2006.

- Johnson, M.E.; Chen, C.M.; Jaeggi, J.J. Effect of rennet coagulation time on composition, yield, and quality of reduced-fat cheddar cheese. J. Dairy Sci. 2001, 84, 1027–1033.

- Grundelius, A.U.; Lodaite, K.; Östergren, K.; Paulsson, M.; Dejmek, P. Syneresis of submerged single curd grains and curd rheology. Int. Dairy J. 2000, 10, 489–496.

- Thomann, S.; Brechenmacher, A.; Hinrichs, J. Comparison of models for the kinetics of syneresis of curd grains made from goat’s milk. Milchwiss.-Milk Sci. Int. 2006, 61, 407–411.

- Gao, P.; Zhang, W.; Wei, M.; Chen, B.; Zhu, H.; Xie, N.; Pang, X.; Marie-Laure, F.; Zhang, S.; Lv, J. Analysis of the non-volatile components and volatile compounds of hydrolysates derived from unmatured cheese curd hydrolysis by different enzymes. LWT 2022, 168, 113896.

- Guinee, T.P. Effect of high-temperature treatment of milk and whey protein denaturation on the properties of rennet–curd cheese: A review. Int. Dairy J. 2021, 121, 105095.

- Liznerski, P.; Ruff, L.; Vandermeulen, R.A.; Franks, B.J.; Kloft, M.; Müller, K. Explainable Deep One-Class Classification. In Proceedings of the 9th International Conference on Learning Representations, ICLR 2021, Virtual Event, Austria, 3–7 May 2021; Available online: https://openreview.net/ (accessed on 26 April 2024).

- Alinaghi, M.; Nilsson, D.; Singh, N.; Höjer, A.; Saedén, K.H.; Trygg, J. Near-infrared hyperspectral image analysis for monitoring the cheese-ripening process. J. Dairy Sci. 2023, 106, 7407–7418.

- Everard, C.D.; O’Callaghan, D.; Fagan, C.C.; O’Donnell, C.; Castillo, M.; Payne, F. Computer vision and color measurement techniques for inline monitoring of cheese curd syneresis. J. Dairy Sci. 2007, 90, 3162–3170.

- Loddo, A.; Di Ruberto, C.; Armano, G.; Manconi, A. Automatic Monitoring Cheese Ripeness Using Computer Vision and Artificial Intelligence. IEEE Access 2022, 10, 122612–122626.

- Badaró, A.T.; de Matos, G.V.; Karaziack, C.B.; Viotto, W.H.; Barbin, D.F. Automated method for determination of cheese meltability by computer vision. Food Anal. Methods 2021, 14, 2630–2641.

- Goyal, S.; Kumar Goyal, G. Shelflife Prediction of Processed Cheese Using Artificial Intelligence ANN Technique. Hrvat. Časopis Prehrambenu Tehnol. Biotehnol. Nutr. 2012, 7, 184–187.

- Goyal, S.; Goyal, G. Smart artificial intelligence computerized models for shelf life prediction of processed cheese. Int. J. Eng. Technol. 2012, 1, 281–289.

- da Paixao Teixeira, J.L.; dos Santos Carames, E.T.; Baptista, D.P.; Gigante, M.L.; Pallone, J.A.L. Rapid adulteration detection of yogurt and cheese made from goat milk by vibrational spectroscopy and chemometric tools. J. Food Compos. Anal. 2021, 96, 103712.

- Vasafi, P.S.; Paquet-Durand, O.; Brettschneider, K.; Hinrichs, J.; Hitzmann, B. Anomaly detection during milk processing by autoencoder neural network based on near-infrared spectroscopy. J. Food Eng. 2021, 299, 110510.

- Li, Z.; Zhu, Y.; van Leeuwen, M. A Survey on Explainable Anomaly Detection. ACM Trans. Knowl. Discov. Data 2024, 18, 23:1–23:54.

- Ruff, L.; Kauffmann, J.R.; Vandermeulen, R.A.; Montavon, G.; Samek, W.; Kloft, M.; Dietterich, T.G.; Müller, K. A Unifying Review of Deep and Shallow Anomaly Detection. Proc. IEEE 2021, 109, 756–795.

- Samariya, D.; Aryal, S.; Ting, K.M.; Ma, J. A New Effective and Efficient Measure for Outlying Aspect Mining. In Proceedings of the Web Information Systems Engineering—WISE 2020—21st International Conference, Amsterdam, The Netherlands, 20–24 October 2020; Proceedings, Part II; Lecture Notes in Computer Science. Huang, Z., Beek, W., Wang, H., Zhou, R., Zhang, Y., Eds.; Springer: Berlin/Heidelberg, Germany, 2020; Volume 12343, pp. 463–474.

- Nguyen, V.K.; Renault, É.; Milocco, R.H. Environment Monitoring for Anomaly Detection System Using Smartphones. Sensors 2019, 19, 3834.

- Violettas, G.E.; Simoglou, G.; Petridou, S.G.; Mamatas, L. A Softwarized Intrusion Detection System for the RPL-based Internet of Things networks. Future Gener. Comput. Syst. 2021, 125, 698–714.

- Papageorgiou, G.; Sarlis, V.; Tjortjis, C. Unsupervised Learning in NBA Injury Recovery: Advanced Data Mining to Decode Recovery Durations and Economic Impacts. Information 2024, 15, 61.

- Zhao, X.; Zhang, L.; Cao, Y.; Jin, K.; Hou, Y. Anomaly Detection Approach in Industrial Control Systems Based on Measurement Data. Information 2022, 13, 450.

- Li, J.; Izakian, H.; Pedrycz, W.; Jamal, I. Clustering-based anomaly detection in multivariate time series data. Appl. Soft Comput. 2021, 100, 106919.

- Falcão, F.; Zoppi, T.; Silva, C.B.V.; Santos, A.; Fonseca, B.; Ceccarelli, A.; Bondavalli, A. Quantitative comparison of unsupervised anomaly detection algorithms for intrusion detection. In Proceedings of the 34th ACM/SIGAPP Symposium on Applied Computing, SAC 2019, Limassol, Cyprus, 8–12 April 2019; Hung, C., Papadopoulos, G.A., Eds.; ACM: New York, NY, USA, 2019; pp. 318–327.

- Schölkopf, B.; Williamson, R.C.; Smola, A.; Shawe-Taylor, J.; Platt, J. Support Vector Method for Novelty Detection. In Advances in Neural Information Processing Systems; Solla, S., Leen, T., Müller, K., Eds.; MIT Press: Cambridge, MA, USA, 1999; Volume 12.

- Liu, F.T.; Ting, K.M.; Zhou, Z.H. Isolation Forest. In Proceedings of the 2008 Eighth IEEE International Conference on Data Mining, Pisa, Italy, 15–19 December 2008; pp. 413–422.

- Liang, J.; Liang, Q.; Wu, Z.; Chen, H.; Zhang, S.; Jiang, F. A Novel Unsupervised Deep Transfer Learning Method With Isolation Forest for Machine Fault Diagnosis. IEEE Trans. Ind. Inform. 2024, 20, 235–246.

- Wang, W.; ShangGuan, W.; Liu, J.; Chen, J. Enhanced Fault Detection for GNSS/INS Integration Using Maximum Correntropy Filter and Local Outlier Factor. IEEE Trans. Intell. Veh. 2024, 9, 2077–2093.

- Kumar, R.H.; Bank, S.; Bharath, R.; Sumati, S.; Ramanarayanan, C.P. A Local Outlier Factor-Based Automated Anomaly Event Detection of Vessels for Maritime Surveillance. Int. J. Perform. Eng. 2023, 19, 711.

- Siddiqui, M.A.; Stokes, J.W.; Seifert, C.; Argyle, E.; McCann, R.; Neil, J.; Carroll, J. Detecting Cyber Attacks Using Anomaly Detection with Explanations and Expert Feedback. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2019, Brighton, UK, 12–17 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 2872–2876.

- Wang, L.; Huynh, D.Q.; Mansour, M.R. Loss Switching Fusion with Similarity Search for Video Classification. In Proceedings of the 2019 IEEE International Conference on Image Processing, ICIP 2019, Taipei, Taiwan, 22–25 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 974–978.

- LeCun, Y.; Bengio, Y.; Hinton, G.E. Deep learning. Nature 2015, 521, 436–444.

- Zipfel, J.; Verworner, F.; Fischer, M.; Wieland, U.; Kraus, M.; Zschech, P. Anomaly detection for industrial quality assurance: A comparative evaluation of unsupervised deep learning models. Comput. Ind. Eng. 2023, 177, 109045.

- Xie, G.; Wang, J.; Liu, J.; Lyu, J.; Liu, Y.; Wang, C.; Zheng, F.; Jin, Y. IM-IAD: Industrial Image Anomaly Detection Benchmark in Manufacturing. IEEE Trans. Cybern. 2024, 54, 2720–2733.

- Liu, W.; Li, R.; Zheng, M.; Karanam, S.; Wu, Z.; Bhanu, B.; Radke, R.J.; Camps, O. Towards Visually Explaining Variational Autoencoders. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Wang, K.; Yan, C.; Mo, Y.; Wang, Y.; Yuan, X.; Liu, C. Anomaly detection using large-scale multimode industrial data: An integration method of nonstationary kernel and autoencoder. Eng. Appl. Artif. Intell. 2024, 131, 107839.

- Kim, S.; Jo, W.; Shon, T. APAD: Autoencoder-based Payload Anomaly Detection for industrial IoE. Appl. Soft Comput. 2020, 88, 106017.

- Ravanbakhsh, M.; Nabi, M.; Sangineto, E.; Marcenaro, L.; Regazzoni, C.S.; Sebe, N. Abnormal event detection in videos using generative adversarial nets. In Proceedings of the 2017 IEEE International Conference on Image Processing, ICIP 2017, Beijing, China, 17–20 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1577–1581.

- Zhang, L.; Dai, Y.; Fan, F.; He, C. Anomaly Detection of GAN Industrial Image Based on Attention Feature Fusion. Sensors 2023, 23, 355.

- Liu, W.; Luo, W.; Lian, D.; Gao, S. Future Frame Prediction for Anomaly Detection—A New Baseline. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; Computer Vision Foundation: New York, NY, USA; IEEE Computer Society: Washington, DC, USA, 2018; pp. 6536–6545.

- Georgescu, M.I.; Barbalau, A.; Ionescu, R.T.; Khan, F.S.; Popescu, M.; Shah, M. Anomaly Detection in Video via Self-Supervised and Multi-Task Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 12742–12752.

- Zhou, X.; Hu, Y.; Liang, W.; Ma, J.; Jin, Q. Variational LSTM Enhanced Anomaly Detection for Industrial Big Data. IEEE Trans. Ind. Inform. 2021, 17, 3469–3477.

- Ullah, W.; Hussain, T.; Ullah, F.U.M.; Lee, M.Y.; Baik, S.W. TransCNN: Hybrid CNN and transformer mechanism for surveillance anomaly detection. Eng. Appl. Artif. Intell. 2023, 123, 106173.

- Lu, S.; Dong, H.; Yu, H. Abnormal Condition Detection Method of Industrial Processes Based on the Cascaded Bagging-PCA and CNN Classification Network. IEEE Trans. Ind. Inform. 2023, 19, 10956–10966.

- Smith, A.D.; Du, S.; Kurien, A. Vision transformers for anomaly detection and localisation in leather surface defect classification based on low-resolution images and a small dataset. Appl. Sci. 2023, 13, 8716.

- Yao, H.; Luo, W.; Yu, W.; Zhang, X.; Qiang, Z.; Luo, D.; Shi, H. Dual-attention transformer and discriminative flow for industrial visual anomaly detection. IEEE Trans. Autom. Sci. Eng. 2023, 1–15.

- Jézéquel, L.; Vu, N.; Beaudet, J.; Histace, A. Efficient Anomaly Detection Using Self-Supervised Multi-Cue Tasks. IEEE Trans. Image Process. 2023, 32, 807–821.

- Tang, X.; Zeng, S.; Yu, F.; Yu, W.; Sheng, Z.; Kang, Z. Self-supervised anomaly pattern detection for large scale industrial data. Neurocomputing 2023, 515, 1–12.

- Yan, S.; Shao, H.; Xiao, Y.; Liu, B.; Wan, J. Hybrid robust convolutional autoencoder for unsupervised anomaly detection of machine tools under noises. Robot. Comput. Integr. Manuf. 2023, 79, 102441.

- Rosero-Montalvo, P.D.; István, Z.; Tözün, P.; Hernandez, W. Hybrid Anomaly Detection Model on Trusted IoT Devices. IEEE Internet Things J. 2023, 10, 10959–10969.

- Borré, A.; Seman, L.O.; Camponogara, E.; Stefenon, S.F.; Mariani, V.C.; dos Santos Coelho, L. Machine Fault Detection Using a Hybrid CNN-LSTM Attention-Based Model. Sensors 2023, 23, 4512.

- Abir, F.F.; Chowdhury, M.E.H.; Tapotee, M.I.; Mushtak, A.; Khandakar, A.; Mahmud, S.; Hasan, A. PCovNet+: A CNN-VAE anomaly detection framework with LSTM embeddings for smartwatch-based COVID-19 detection. Eng. Appl. Artif. Intell. 2023, 122, 106130.

- Niu, Z.; Yu, K.; Wu, X. LSTM-Based VAE-GAN for Time-Series Anomaly Detection. Sensors 2020, 20, 3738.

- Putzu, L.; Loddo, A.; Di Ruberto, C. Invariant Moments, Textural and Deep Features for Diagnostic MR and CT Image Retrieval. In Proceedings of the Computer Analysis of Images and Patterns: 19th International Conference, CAIP 2021, Virtual Event, 28–30 September 2021; Proceedings, Part I. Springer: Berlin/Heidelberg, Germany, 2021; pp. 287–297.

- Mukundan, R.; Ong, S.; Lee, P. Image analysis by Tchebichef moments. IEEE Trans. Image Process. 2001, 10, 1357–1364.

- Teague, M.R. Image analysis via the general theory of moments. J. Opt. Soc. Am. 1980, 70, 920–930.

- Teh, C.H.; Chin, R. On image analysis by the methods of moments. IEEE Trans. Pattern Anal. Mach. Intell. 1988, 10, 496–513.

- Oujaoura, M.; Minaoui, B.; Fakir, M. Image Annotation by Moments. Moments Moment Invariants Theory Appl. 2014, 1, 227–252.

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001, Kauai, HI, USA, 8–14 December 2001; Volume 1, p. I, ISSN: 1063-6919.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Putzu, L.; Di Ruberto, C. Rotation Invariant Co-occurrence Matrix Features. In Proceedings of the Image Analysis and Processing—ICIAP 2017, Catania, Italy, 11–15 September 2017; Lecture Notes in Computer Science. Battiato, S., Gallo, G., Schettini, R., Stanco, F., Eds.; Springer: Cham, Switzerland, 2017; pp. 391–401.

- He, D.-c.; Wang, L. Texture Unit, Texture Spectrum, And Texture Analysis. IEEE Trans. Geosci. Remote Sens. 1990, 28, 509–512.

- Ojala, T.; Pietikäinen, M.; Mäenpää, T. Multiresolution Gray-Scale and Rotation Invariant Texture Classification with Local Binary Patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Huang, G.; Liu, Z.; Maaten, L.V.D.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 21–26 July 2017; pp. 2261–2269.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.E.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2015, Boston, MA, USA, 7–12 June 2015; IEEE Computer Society: Washington, DC, USA, 2015; pp. 1–9.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of Machine Learning Research, Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; Volume 97, pp. 6105–6114.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; IEEE Computer Society: Washington, DC, USA, 2016; pp. 2818–2826.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-ResNet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; AAAI Press: Washington, DC, USA, 2017; pp. 4278–4284.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning Transferable Architectures for Scalable Image Recognition. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 18–23 June 2018; pp. 8697–8710.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

- Petrovska, B.; Zdravevski, E.; Lameski, P.; Corizzo, R.; Štajduhar, I.; Lerga, J. Deep Learning for Feature Extraction in Remote Sensing: A Case-Study of Aerial Scene Classification. Sensors 2020, 20, 3906.

- Barbhuiya, A.A.; Karsh, R.K.; Jain, R. CNN based feature extraction and classification for sign language. Multimed. Tools Appl. 2021, 80, 3051–3069.

- Varshni, D.; Thakral, K.; Agarwal, L.; Nijhawan, R.; Mittal, A. Pneumonia Detection Using CNN based Feature Extraction. In Proceedings of the 2019 IEEE International Conference on Electrical, Computer and Communication Technologies (ICECCT), Coimbatore, India, 20–22 February 2019; pp. 1–7.

- Rippel, O.; Mertens, P.; König, E.; Merhof, D. Gaussian Anomaly Detection by Modeling the Distribution of Normal Data in Pretrained Deep Features. IEEE Trans. Instrum. Meas. 2021, 70, 1–13.

- Yang, J.; Shi, Y.; Qi, Z. Learning deep feature correspondence for unsupervised anomaly detection and segmentation. Pattern Recognit. 2022, 132, 108874.

- Reiss, T.; Cohen, N.; Bergman, L.; Hoshen, Y. PANDA: Adapting Pretrained Features for Anomaly Detection and Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2021, Virtual, 19–25 June 2021; Computer Vision Foundation: New York, NY, USA; IEEE: Piscataway, NJ, USA, 2021; pp. 2806–2814.

- Deng, J.; Dong, W.; Socher, R.; Li, L.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2009), Miami, FL, USA, 20–25 June 2009; IEEE Computer Society: Washington, DC, USA, 2009; pp. 248–255.

- Alam, S.; Sonbhadra, S.K.; Agarwal, S.; Nagabhushan, P. One-class support vector classifiers: A survey. Knowl. Based Syst. 2020, 196, 105754.

- Cheng, X.; Zhang, M.; Lin, S.; Zhou, K.; Zhao, S.; Wang, H. Two-Stream Isolation Forest Based on Deep Features for Hyperspectral Anomaly Detection. IEEE Geosci. Remote. Sens. Lett. 2023, 20, 5504205.

- Delussu, R.; Putzu, L.; Fumera, G. Synthetic Data for Video Surveillance Applications of Computer Vision: A Review. Int. J. Comput. Vis. 2024, 1–37.

- Foszner, P.; Szczesna, A.; Ciampi, L.; Messina, N.; Cygan, A.; Bizon, B.; Cogiel, M.; Golba, D.; Macioszek, E.; Staniszewski, M. CrowdSim2: An Open Synthetic Benchmark for Object Detectors. In Proceedings of the 18th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Lisbon, Portugal, 19–21 February 2023; Radeva, P., Farinella, G.M., Bouatouch, K., Eds.; Volume 5, pp. 676–683.

- Murtaza, H.; Ahmed, M.; Khan, N.F.; Murtaza, G.; Zafar, S.; Bano, A. Synthetic data generation: State of the art in health care domain. Comput. Sci. Rev. 2023, 48, 100546.

- Boutros, F.; Struc, V.; Fiérrez, J.; Damer, N. Synthetic data for face recognition: Current state and future prospects. Image Vis. Comput. 2023, 135, 104688.

- Zhang, Z.; Zhao, Z.; Zhang, X.; Sun, C.; Chen, X. Industrial anomaly detection with domain shift: A real-world dataset and masked multi-scale reconstruction. Comput. Ind. 2023, 151, 103990.

| Set | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Number of images | 94 | 102 | 128 | 112 | 77 | 70 | 84 | 96 | 105 | 94 | 89 | 111 |
| Non-target samples | 77 | 90 | 108 | 89 | 54 | 45 | 63 | 72 | 68 | 59 | 60 | 77 |
| Target samples | 17 | 12 | 20 | 23 | 23 | 25 | 21 | 24 | 37 | 35 | 29 | 34 |

| Network | Params (M) | Input Shape | Feature Layer | # of Features |
|---|---|---|---|---|
| AlexNet [65] | 60 | | Pen. FC | 4096 |
| DarkNet-53 [66] | 20.8 | | Conv53 | 1000 |
| DenseNet-201 [67] | 25.6 | | Avg. Pool | 1920 |
| GoogLeNet [68] | 5 | | Loss3 | 1000 |
| EfficientNetB0 [69] | 5.3 | | Avg. Pool | 1280 |
| Inception-v3 [70] | 21.8 | | Last FC | 1000 |
| Inception-ResNet-v2 [71] | 55 | | Avg. Pool | 1536 |
| NasNetL [72] | 88.9 | | Avg. Pool | 4032 |
| ResNet-18 [73] | 11.7 | | Pool5 | 512 |
| ResNet-50 [73] | 26 | | Avg. Pool | 1024 |
| ResNet-101 [73] | 44.6 | | Pool5 | 1024 |
| VGG16 [74] | 138 | | Pen. FC | 4096 |
| VGG19 [74] | 144 | | Pen. FC | 4096 |
| XceptionNet [75] | 22.9 | | Avg. Pool | 2048 |

| Set | Features | Non-Target Precision | Non-Target Recall | Non-Target F1 | Target Precision | Target Recall | Target F1 |
|---|---|---|---|---|---|---|---|
| 1 | ZM | 0.97 (0.07) | 0.61 (0.08) | 0.74 (0.08) | 0.75 (0.02) | 0.99 (0.01) | 0.85 (0.02) |
| 2 | ZM | 0.94 (0.03) | 0.62 (0.03) | 0.75 (0.03) | 0.62 (0.03) | 0.93 (0.02) | 0.75 (0.02) |
| 3 | CH_2 | 0.81 (0.11) | 0.76 (0.09) | 0.78 (0.10) | 0.75 (0.02) | 0.80 (0.02) | 0.77 (0.02) |
| 4 | ZM | 0.88 (0.07) | 0.48 (0.27) | 0.62 (0.14) | 0.71 (0.06) | 0.95 (0.02) | 0.81 (0.09) |
| 5 | Haar | 1.00 (0.05) | 0.11 (0.35) | 0.20 (0.15) | 0.71 (0.06) | 1.00 (0.01) | 0.83 (0.03) |
| 6 | ZM | 0.40 (0.03) | 0.16 (0.03) | 0.21 (0.03) | 0.76 (0.03) | 0.94 (0.03) | 0.84 (0.04) |
| 7 | ZM | 1.00 (0.03) | 0.38 (0.03) | 0.52 (0.03) | 0.73 (0.04) | 1.00 (0.01) | 0.84 (0.03) |
| 8 | ZM | 1.00 (0.02) | 0.25 (0.03) | 0.40 (0.03) | 0.69 (0.14) | 1.00 (0.01) | 0.82 (0.03) |
| 9 | Hist | 0.82 (0.03) | 0.70 (0.04) | 0.75 (0.03) | 0.90 (0.00) | 0.93 (0.01) | 0.91 (0.01) |
| 10 | ZM | 0.69 (0.14) | 0.22 (0.07) | 0.30 (0.09) | 0.79 (0.04) | 0.98 (0.01) | 0.87 (0.02) |
| 11 | Hist | 1.00 (0.04) | 0.30 (0.14) | 0.45 (0.11) | 0.78 (0.04) | 1.00 (0.01) | 0.87 (0.02) |
| 12 | ZM | 0.40 (0.03) | 0.39 (0.03) | 0.39 (0.03) | 0.72 (0.02) | 0.72 (0.02) | 0.72 (0.02) |
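
The results tables report precision, recall, and F1 separately for the non-target and the target class, which means each metric is computed with that class treated as the positive one. A minimal sketch, assuming integer labels 0 = non-target and 1 = target (a hypothetical encoding, not taken from the paper); `per_class_prf` is an illustrative helper, and libraries such as scikit-learn offer equivalent per-class metrics.

```python
from typing import Dict, Sequence

NON_TARGET, TARGET = 0, 1  # hypothetical label encoding


def per_class_prf(y_true: Sequence[int], y_pred: Sequence[int],
                  positive: int) -> Dict[str, float]:
    """Precision, recall and F1 with `positive` treated as the class of interest."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    # 8 non-target and 4 target images; 3 non-target images are wrongly flagged as target.
    y_true = [NON_TARGET] * 8 + [TARGET] * 4
    y_pred = [NON_TARGET] * 5 + [TARGET] * 3 + [TARGET] * 4
    for name, cls in (("non-target", NON_TARGET), ("target", TARGET)):
        scores = per_class_prf(y_true, y_pred, positive=cls)
        print(name, {k: round(v, 2) for k, v in scores.items()})
```

In this toy example every target image is caught (target recall 1.0), but the three false alarms lower both non-target recall (5/8 = 0.625) and target precision (4/7, about 0.57). This is the same pattern as the rows where non-target precision and target recall both sit at 1.00 while non-target recall is low.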

| Set | Features | Non-Target Precision | Non-Target Recall | Non-Target F1 | Target Precision | Target Recall | Target F1 |
|---|---|---|---|---|---|---|---|
| 1 | XceptionNet | 1.00 (0.01) | 0.47 (0.07) | 0.64 (0.04) | 0.67 (0.02) | 1.00 (0.01) | 0.81 (0.01) |
| 2 | ResNet-18 | 1.00 (0.01) | 0.41 (0.11) | 0.57 (0.09) | 0.54 (0.04) | 1.00 (0.01) | 0.70 (0.05) |
| 3 | EfficientNetB0 | 1.00 (0.01) | 0.56 (0.07) | 0.71 (0.08) | 0.68 (0.03) | 1.00 (0.01) | 0.81 (0.01) |
| 4 | EfficientNetB0 | 1.00 (0.01) | 0.54 (0.04) | 0.68 (0.06) | 0.75 (0.05) | 1.00 (0.01) | 0.85 (0.01) |
| 5 | XceptionNet | 0.79 (0.02) | 0.68 (0.03) | 0.72 (0.02) | 0.86 (0.01) | 0.90 (0.02) | 0.87 (0.02) |
| 6 | EfficientNetB0 | 0.80 (0.01) | 0.31 (0.14) | 0.44 (0.12) | 0.80 (0.02) | 1.00 (0.01) | 0.89 (0.02) |
| 7 | XceptionNet | 1.00 (0.01) | 0.27 (0.04) | 0.41 (0.03) | 0.70 (0.02) | 1.00 (0.01) | 0.82 (0.02) |
| 8 | Inception-ResNet-v2 | 1.00 (0.01) | 0.32 (0.09) | 0.48 (0.03) | 0.71 (0.04) | 1.00 (0.01) | 0.83 (0.02) |
| 9 | XceptionNet | 1.00 (0.01) | 0.29 (0.02) | 0.43 (0.03) | 0.80 (0.01) | 1.00 (0.01) | 0.89 (0.02) |
| 10 | XceptionNet | 1.00 (0.01) | 0.50 (0.00) | 0.66 (0.02) | 0.86 (0.01) | 1.00 (0.01) | 0.92 (0.03) |
| 11 | XceptionNet | 1.00 (0.01) | 0.27 (0.02) | 0.41 (0.01) | 0.77 (0.03) | 1.00 (0.01) | 0.87 (0.02) |
| 12 | XceptionNet | 1.00 (0.01) | 0.65 (0.03) | 0.78 (0.01) | 0.87 (0.03) | 1.00 (0.01) | 0.93 (0.03) |
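
Each cell in these tables has the form `value (spread)`. Assuming, as is common, that the first number is the mean over repeated runs and the parenthesized one is the standard deviation (the tables themselves do not state this), a row can be assembled from per-run scores as sketched below. The helper names `mean_std_cell` and `format_row` are hypothetical, the per-run scores in the usage block are made up, and the use of the sample standard deviation is a choice; `statistics.pstdev` would give the population version instead.

```python
from statistics import mean, stdev
from typing import Dict, Sequence

METRICS = ("precision", "recall", "f1")


def mean_std_cell(values: Sequence[float]) -> str:
    """Format per-run scores as 'mean (std)' with two decimals; std is 0 for a single run."""
    m = mean(values)
    s = stdev(values) if len(values) > 1 else 0.0
    return f"{m:.2f} ({s:.2f})"


def format_row(set_id: int, features: str,
               non_target: Dict[str, Sequence[float]],
               target: Dict[str, Sequence[float]]) -> str:
    """Build one Markdown table row: set, features, then P/R/F1 for each class."""
    cells = [str(set_id), features]
    for per_class in (non_target, target):
        cells.extend(mean_std_cell(per_class[name]) for name in METRICS)
    return "| " + " | ".join(cells) + " |"


if __name__ == "__main__":
    # Made-up per-run scores, for illustration only.
    nt = {"precision": [1.0, 1.0, 1.0], "recall": [0.40, 0.50, 0.45], "f1": [0.57, 0.67, 0.62]}
    t = {"precision": [0.80, 0.84, 0.82], "recall": [1.0, 1.0, 1.0], "f1": [0.89, 0.91, 0.90]}
    print(format_row(1, "demo", nt, t))
```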

| Set | Non-Target Precision | Non-Target Recall | Non-Target F1 | Target Precision | Target Recall | Target F1 |
|---|---|---|---|---|---|---|
| 1 | 1.00 (0.00) | 0.75 (0.05) | 0.86 (0.03) | 1.00 (0.00) | 0.83 (0.03) | 0.96 (0.03) |
| 2 | 1.00 (0.01) | 0.92 (0.01) | 0.96 (0.02) | 0.75 (0.02) | 1.00 (0.00) | 0.86 (0.01) |
| 3 | 1.00 (0.01) | 0.89 (0.01) | 0.94 (0.02) | 1.00 (0.01) | 0.80 (0.02) | 0.89 (0.01) |
| 4 | 1.00 (0.00) | 0.83 (0.03) | 0.96 (0.03) | 1.00 (0.00) | 0.80 (0.02) | 0.89 (0.01) |
| 5 | 1.00 (0.01) | 0.75 (0.02) | 0.86 (0.03) | 1.00 (0.01) | 0.83 (0.03) | 0.96 (0.03) |
| 6 | 1.00 (0.01) | 0.80 (0.02) | 0.89 (0.02) | 1.00 (0.01) | 0.80 (0.02) | 0.89 (0.01) |
| 7 | 1.00 (0.01) | 0.86 (0.01) | 0.92 (0.02) | 1.00 (0.01) | 0.75 (0.02) | 0.86 (0.01) |
| 8 | 1.00 (0.01) | 0.88 (0.03) | 0.94 (0.01) | 1.00 (0.01) | 0.80 (0.02) | 0.89 (0.01) |
| 9 | 1.00 (0.01) | 0.88 (0.03) | 0.94 (0.01) | 1.00 (0.01) | 0.86 (0.01) | 0.92 (0.01) |
| 10 | 1.00 (0.01) | 0.83 (0.03) | 0.96 (0.03) | 1.00 (0.01) | 0.86 (0.01) | 0.92 (0.01) |
| 11 | 1.00 (0.01) | 0.86 (0.01) | 0.92 (0.01) | 1.00 (0.01) | 0.83 (0.03) | 0.96 (0.03) |
| 12 | 1.00 (0.01) | 0.88 (0.03) | 0.94 (0.02) | 1.00 (0.01) | 0.86 (0.04) | 0.92 (0.01) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Loddo, A.; Ghiani, D.; Perniciano, A.; Zedda, L.; Pes, B.; Di Ruberto, C. An Anomaly Detection Approach to Determine Optimal Cutting Time in Cheese Formation. Information 2024, 15, 360. https://doi.org/10.3390/info15060360
