Abstract

This work investigates how interpersonal interactions among individuals could affect the dynamics of awareness raising. Even though previous studies on mathematical models of awareness in the decision making context demonstrate how the level of awareness results from self-observation impinged by optimal decision selections and external uncertainties, an explicit accounting of interaction among individuals is missing. Here we introduce for the first time a theoretical mathematical framework to evaluate the effect on individual awareness exerted by the interaction with neighbor agents. This task is performed by embedding the single agent model into a graph and allowing different agents to interact by means of suitable coupling functions. The presence of the network allows, from a global point of view, the emergence of diffusion mechanisms for which the population tends to reach homogeneous attractors, and, among them, the one with the highest level of awareness. The structural and behavioral patterns, such as the initial levels of awareness and the relative importance the individual assigns to their own state with respect to others’, may drive real actors to stress effective actions increasing individual and global awareness.

1. Introduction

This work studies how interaction among individuals can influence individuals’ awareness dynamics. Throughout the work, we adopt a description of awareness as an end-stage that results from the filtering and processing of several experiences happening simultaneously in our bodies and brains []. This description focuses on the process and character of awareness that makes it emerge from tacit knowledge, enabling the decision maker to define (in a largely unconscious way) a sort of implicit metric space able to accommodate a given problem in a suitable class. In short, we can equate awareness to the acquisition of ‘contextual’ information located at a different hierarchical level with respect to the direct optimization process. Specifically, the individual applies their own policy function to make a preliminary decision, and the outcome of that decision is then perturbed every time they interact with others, because others’ awareness states are taken into account. In a previous study [], we focused on the dynamics of awareness emerging at the level of a single individual. However, that account of the individual’s awareness-raising process was incomplete, as it lacked an analysis of the impact exerted by interaction among individuals. This is exactly the topic addressed in the present work, which attempts a quantitative analysis of the crucial aspects characterizing the so-called interpersonal perspective on awareness. To accomplish this task, we adopt a network-based Markov decision model. Although purely phenomenological, the mathematical formulation gives an immediate appreciation of the effects of the decision maker’s different styles of coping with interacting agents, corresponding to different leadership attitudes and forms of consensus formation.

The interest in developing such a mathematical model of awareness is manifold. From a theoretical point of view, it provides a reference for evaluating the impact of personal relations on awareness raising, thus allowing us to analyze it from both the individual and the social perspectives []. Moreover, it can be used to investigate the pros and cons of using artificial intelligence in human decision making and identify new directions for integrating a prototypical consciousness into AI algorithms [,]. From this point of view, it is worth noting that the different individuals interacting with each other could be, in the most generic view, also non-human agents, AIs or robots for example []. Obviously, in these cases, the concept of awareness has a different meaning, albeit retaining both its character of ‘slow accumulation’ of knowledge from past examples and of the generation of ‘contextual’ knowledge at a different hierarchical level with respect to step-by-step optimization.

Although awareness is a very broad and multifaceted concept that is difficult to define uniquely, it is still possible to characterize it through a series of distinctive features, which serve as the pillars of the mathematical formulation that follows.

Dynamics. Individuals’ awareness is a dynamic process [], continuously evolving over the individual’s lifetime, which can be mathematically represented by a sequence of states. Directionality. Awareness represents a certain state of the individual, which completely incorporates their past, and its evolution results from a conscious directed focus, motivation, and effort of the individual toward the increase of their level of awareness []. Optimization. This goal-directed behavior, natural in human lives, can be mathematically transposed by an optimization process, depending on the state itself, which tends to obtain the maximum benefit. This benefit is assumed, in our case, as directly proportional to the state, which in turn indicates the level of awareness, so that living with a higher level of awareness produces higher individual wellbeing from the physical, psychological, and emotional points of view.

Decision making. From the general considerations reported above, we particularly focus on the relation with the decision making process, claiming that the state of awareness determines the choices the individual makes. Uncertainty. Choices are in any case subject to external uncertainty given by uncontrollable environmental factors. Reasoning propensity. Each individual is characterized by a certain reasoning propensity, which embodies their preferred way of processing the available information about the decision problem at hand and results from a combination of an intuitive and an analytical component, as proposed in []. For the purpose of our study, the intuitive individual believes they have access to personal abilities, not related to cognition, that allow their level of awareness to increase by using an appropriate degree of intuition; this has to do with personal confidence in tacit knowledge. Conversely, the analytical individual makes the effort to acquire and elaborate the quantitative information they use to make their decisions. Information costs. Knowledge of their own propensity gives the individual a reduction in the incurred costs, defined as the consumption of resources deriving from the process of acquiring, processing, and analyzing all the data about the problem. This knowledge represents the intrapersonal dimension of self-awareness reported in the definition given by Carden et al. [], already explored in our previous work []; it is opposed to, but also integrated by, the interpersonal dimension, on which the present work focuses. Backward induction. Moreover, the backward induction mechanism applied to solve the optimization process explains not only future choices but also the fact that individuals naturally tend to reconstruct their past choices, and the consequent outcomes in terms of wellbeing, a posteriori, as if solving the decision problem from the present state over a past horizon.

The reported characteristics and mechanisms can be modeled by the sequential model class of Markov Decision Processes (MDPs), mathematically formulated in the next section. However, this is not enough, and an additional perspective must be considered in order to take into account the interaction component among individuals.

In fact, interaction is an essential component of human life, an aspect from which one cannot escape. It is said that “people seek the truth collectively, not individually” []. This collective dimension implies that the decision process, in many cases, becomes an emergent property of a given set of individuals. To mathematically shape the interaction among a given set of individuals and to analyze the resulting behaviors and dynamics, it is possible to formalize it in terms of networks in which the nodes are different individuals and the edges their pairwise interaction []. In our approach, we formalized the effect of interaction as impinging on the single individual decision process and thus acting as a modifier of their dynamics.

As mentioned before, the concept of awareness synthesizes two different but equally important perspectives. The first corresponds to an intrapersonal aspect centered on the awareness of one’s own resources and internal state, as well as any kind of knowledge that the Decision Maker (DM) can learn from the context. The second, defined as interpersonal, focuses on the impact exerted by other agents on the subject and vice versa [] and is as essential as the first. The interpersonal perspective can be further separated into two components: (a) Behavior corresponds to an externally visible action that might directly affect others; (b) Others’ perception corresponds to how an individual is perceived by their neighbors and has to do with the ability to “receive and interpret” feedback.

These components join the intrapersonal perspective, requiring the individual to be consciously aware of and to understand how their decisions could influence and be influenced by the other’s choices. Sometimes awareness and self-awareness can be traced back to the interpersonal view defining it as “anticipating how others perceive you, evaluating yourself and your actions according to collective beliefs and values, and caring about how others evaluate you” []. Other times, the literature emphasized both components, claiming that awareness consists of the internal—recognizing one’s own inner state—and the external—recognizing one’s impact on others [], but the second component is no more elaborate than pointing out that it was a self-evaluative component referring to becoming conscious of one’s impact on others. The interpersonal perspective is the focal point of this work.

A relevant example case showing the importance of the interpersonal aspect comes from the theme of Leadership. Although scholars continue to debate how to define, detect, and measure awareness in people, most of them would intuitively regard it as fundamental to effective leadership behavior []. For example, it has been established that by becoming more self-aware, leaders better understand their strengths and self-resources and better identify where they might need further development [].

In our opinion, these aspects of intrapersonal perspective should be complemented by a second, sometimes neglected, interpersonal view. This is critical to leadership and corresponds to the ability to interpret (1) the emotions, thoughts, and preferences of others and (2) the influence leaders have on people. Because leadership is relational [], leaders must develop peer-to-peer connections with their team members, so they become aware of their perspectives, hopes, goals, strengths, and developmental needs and achieve the full extent of the desired outcome and the ability to anticipate their impact and influence on others.

The manager who is consistently inclined to consider only profits or goal attainment, rather than others’ perceptions, as well as the general, who is only focused on winning the battle, rather than being constantly connected to their subordinates, may restrict the ability to be an aware leader []. If leaders are not aware of their impact on others and if they cannot effectively diagnose how others experience their efforts, then their ability to lead effectively will be severely hampered. To cope with these limitations, leaders should develop a greater appreciation for extrinsic standards, such as an awareness of how others perceive them as well as of their own emotions and the emotions of others, thus building an overall positive work environment.

The case of leadership is an example highlighting the importance that the interpersonal dimension covers in many different fields of our lives. Extending the concepts reported above to the framework proposed in this paper, aimed at theoretically describing the impact of others’ behavior on individual awareness, we will sketch a prototypical model based on interaction networks in which the ‘neighborhoods sentiment’ is modeled (from the individual’s point of view) as a global network response generated from its wiring structure.

2. Methods

This section reports a mathematical formulation that can be considered a first attempt to evaluate how the interaction among individuals impacts the dynamics of awareness in the context of decision making processes. The starting point is a framework embedding the different phenomena that affect the awareness-raising process, with a focus on the single individual in isolation. Then, a reasonable formulation is introduced to describe the contribution to the dynamics of the single agent provided by interactions with others, exploiting a network and a suitable coupling function.

2.1. Modeling Individual Awareness

To provide a mathematical model embedding the basic mechanisms of awareness raising, we start from the general definition of a deterministic finite-state automaton as a quintuple (Σ, S, s0, δ, F) where Σ is the input alphabet, consisting of a finite non-empty set of symbols, S is a finite non-empty set of states, s0 is an initial state, δ is a transition function, and F is the set of final states. Then we focus on the framework of Markov Decision Processes (MDPs) which embed a Markov chain with the addition of input () and costs/rewards, according to a function [,].

The MDP is then defined as a tuple (, , , ) where is a finite set of feasible states, called the state space, is a finite set of available actions, namely the action space, is a stochastic transition function defining the probability of shifting from a state to a state by choosing the input , and is the reward function.
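As a minimal sketch, the four components of the tuple can be held in a small Python container. The names `FiniteMDP`, `check_transition`, and the toy transition and reward below are illustrative assumptions, not the functions used in this work (those are given in Appendix A).

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class FiniteMDP:
    """Container for the four components of a finite MDP (hypothetical names)."""
    states: List[int]        # state space (indices of awareness levels)
    actions: List[float]     # action space
    transition: Callable[[int, float], Dict[int, float]]  # (s, a) -> {s': prob}
    reward: Callable[[int, float], float]                 # (s, a) -> reward


def check_transition(mdp: FiniteMDP, tol: float = 1e-9) -> bool:
    """True if every (state, action) pair yields a valid distribution over states."""
    valid = set(mdp.states)
    for s in mdp.states:
        for a in mdp.actions:
            probs = mdp.transition(s, a)
            if not set(probs) <= valid:
                return False
            if any(p < 0.0 for p in probs.values()):
                return False
            if abs(sum(probs.values()) - 1.0) > tol:
                return False
    return True


# Toy example (assumed): action a is the probability of moving one level up.
def toy_transition(s: int, a: float) -> Dict[int, float]:
    up = min(s + 1, 2)
    if up == s:
        return {s: 1.0}
    return {up: a, s: 1.0 - a}


toy = FiniteMDP(
    states=[0, 1, 2],
    actions=[0.0, 0.5, 1.0],
    transition=toy_transition,
    reward=lambda s, a: float(s) - 0.1 * a,  # assumed: benefit minus analytic cost
)
```

Keeping the transition as a function that returns a dictionary makes the stochastic nature explicit and lets the validity check run over every state and action pair.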

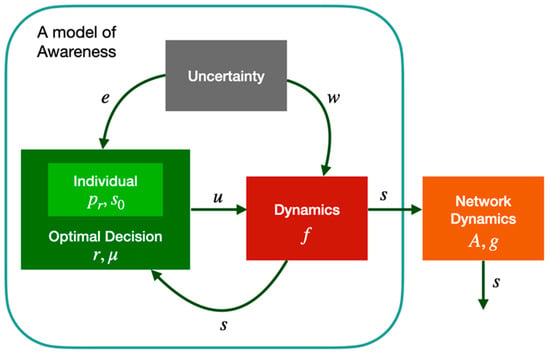

A mathematical model grounded on MDPs describing the basic elements which impact the individual’s reasoning and analyzing how they are related to the process of increasing personal awareness is shown in Figure 1, which reports a graphical scheme of the mathematical model where the individual mechanisms introduced in [,] (green border sub-scheme), and the networked system of interconnected individuals (complete scheme) are highlighted.

Figure 1.

Pictorial representation of the model of awareness. The main elements of the mathematical model are: 1. The individual characteristics (light green box) consisting of the awareness state , the reasoning propensity , and the initial level of awareness ; 2. The optimization process (dark green box) defined by the reward function , and the optimal policy ; 3. The awareness dynamics (red box) determined by the action , the sources of uncertainties and (gray box), and the transition function ; 4. The effect of the interconnection with others (orange box), where is the adjacency matrix of the graph and is the interaction function.

In particular, the single individual model considers a discrete and finite time horizon of length , in which each time-epoch, , corresponds to a moment of making a decision, , under the conventional assumption that the more analytical the choice, the higher the value of , and, vice versa, the more intuitive the choice, the lower the value of . The state, , of the individual at each time , is a representation of their level of awareness, incorporating all past experiences. Therefore, awareness is considered to be a process (mathematically, a sequence of states) involving the DM’s experiences, filtered by their perspectives, beliefs, and values. Moreover, in an MDP the DM’s choice coexists with uncertainty about its outcomes due to uncontrollable external factors, represented in our framework by a stochastic variable , as always happens in real decisions. This model is a good trade-off between realism and simplicity: broad enough to account for realistic sequential decision making problems, yet simple enough to be understood and applied by different kinds of practitioners. Each individual possesses a reasoning propensity, , which embeds the specific attitude in processing the information about the problem and represents the trade-off between two diametrically opposed reasoning modalities: intuitive () and analytical (), assuming in this way that both are always involved, in different amounts, in any decision [,]. The reasoning propensity affects the policy, , of the individual. A policy is a function prescribing to the DM the action to take for each possible state at any time instant, and it is represented by a matrix of dimension . Therefore, the policy results in the decision:

The choice, driven by the policy as exposed in Equation (1), leads to two results: the DM receives a reward, defined by a reward function , and the system evolves to a possibly new state according to a stochastic transition function defined as:

Equation (2) characterizes the dynamics of the process of self-knowledge indicating how the future level of awareness of the individual depends on the current state, the choices they make, and its outcome which is subject to some uncertainty represented by . We assume, for simplicity, that by deciding , the state can increase (), decrease (), or remain the same (), according to a certain probability expressed by the transition probability function P. In particular, we define a stationary probability constant for all , a forward probability as a function of , and a backward probability computed starting from the first two: since as probabilities their sum must be equal to one. The presence of uncertainty affecting the outcomes of the decision, given by uncontrollable elements in the environment, makes the state evolution and the rewards sequence stochastic. The reward function incorporates the assumption that the wellbeing increases with the state, which in turn indicates the level of awareness, so that living with a higher level of awareness produces a higher individual’s global wellbeing. On the other hand, it introduces a component related to information costs, which are higher the more the choice of the individual is analytical, i.e., in our convention, the more is near 1 (see Appendix A for more details on the reward function r).
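The three-outcome transition described above can be sketched as follows. This is only an illustration: the constant `p_stay`, the linear form of the forward probability, and the clamping of the state index at the boundaries are assumptions made for the example, while the functional forms actually used are those reported in Appendix A.

```python
import random
from typing import Tuple


def transition_probs(u: float, p_stay: float = 0.2) -> Tuple[float, float, float]:
    """Return (p_forward, p_stay, p_backward) for an action u in [0, 1].

    p_stay is constant; p_forward depends on the action (assumed linear here);
    p_backward is whatever remains so that the three sum to one.
    """
    p_forward = (1.0 - p_stay) * u
    p_backward = 1.0 - p_stay - p_forward
    return p_forward, p_stay, p_backward


def step(state_index: int, u: float, n_states: int, rng: random.Random) -> int:
    """Sample the next state index: one level up, unchanged, or one level down."""
    p_forward, p_stay, _ = transition_probs(u)
    draw = rng.random()
    if draw < p_forward:
        move = 1
    elif draw < p_forward + p_stay:
        move = 0
    else:
        move = -1
    # Assumed boundary handling: clamp to the first and last state.
    return min(max(state_index + move, 0), n_states - 1)
```

Computing the backward probability as a remainder mirrors the text: only the stationary and forward probabilities are specified, and normalization fixes the third.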

The individual is focused on the maximization of their wellbeing i.e., the sequence of rewards they incur over time, embedding a directionality typical of the human goal-directed behaviors. The optimal decisions are obtained by maximizing the sequence of expected rewards, according to the following problem:

The last reward the DM incurs at the last time-epoch , namely the term in Equation (3), is fixed a priori because it is used as the starting point for the reconstruction of the optimal policy in the optimization process described below, which is carried out by backward induction. We assume that the terminal benefit the DM incurs at the final time increases with the value of the final state . Moreover, a factor , weighting future rewards, has been introduced.

The maximization problem expressed in Equation (3) is recursively solved by implementing a Dynamic Programming algorithm, where the original problem is separated into a recursive series of easier sub-problems considering a shorter time horizon from τ to T and given the step-by-step initial state , representing the state at time . The expected reward function to optimize at each stage is explicitly described as:

where the coefficients γ1, γ2 and γ3 weight the cases of increasing, maintaining constant, or decreasing the state. These parameters allow us to take into account different attitudes of the DM, for example penalizing the eventuality of decreasing awareness.
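A generic backward induction over a finite horizon can be sketched as below. This is a textbook recursion under stated assumptions, not the exact expected-reward expression of Equation (4): the weights γ1, γ2, γ3 are not reproduced, and the names `backward_induction`, `toy_trans`, `toy_reward`, `toy_terminal`, and `beta` (the discount on future rewards) are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple


def backward_induction(
    n_states: int,
    actions: Sequence[float],
    horizon: int,
    trans: Callable[[int, float], List[Tuple[int, float]]],
    reward: Callable[[int, float], float],
    terminal: Callable[[int], float],
    beta: float = 0.9,
) -> Tuple[List[List[float]], List[float]]:
    """Solve a finite-horizon MDP by dynamic programming.

    Returns (policy, value) where policy[t][s] is the best action at time t
    in state s, and value[s] is the optimal expected reward from time 0.
    """
    value = [terminal(s) for s in range(n_states)]  # fixed terminal reward
    policy: List[List[float]] = []
    for _ in range(horizon):  # sweep from the last decision epoch to the first
        new_value, decisions = [], []
        for s in range(n_states):
            best_a, best_q = actions[0], float("-inf")
            for a in actions:
                expected = sum(p * value[s2] for s2, p in trans(s, a))
                q = reward(s, a) + beta * expected
                if q > best_q:
                    best_a, best_q = a, q
            new_value.append(best_q)
            decisions.append(best_a)
        value = new_value
        policy.insert(0, decisions)  # earlier epochs go to the front
    return policy, value


# Toy problem (assumed): action 1.0 moves one level up, action 0.0 stays.
def toy_trans(s: int, a: float) -> List[Tuple[int, float]]:
    return [(min(s + 1, 2), 1.0)] if a == 1.0 else [(s, 1.0)]


def toy_reward(s: int, a: float) -> float:
    return float(s) - 0.1 * a  # benefit grows with state, cost with analyticity


def toy_terminal(s: int) -> float:
    return float(s)
```

The returned policy has one row per time epoch and one column per state, which matches the matrix representation of the policy described earlier in this section.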

The optimization process, intended to maximize the sequence of rewards expressed in Equation (4), embeds an element of the individual’s self-observation, which determines the action/decision to be made. This feedback component allows the DM to build a policy from knowledge of the form of the transition probability function and of the current state at each time epoch. In this way, the DM can overcome their usual, automatic process by shifting from their habitual policy to a new aware policy, which results from the reward maximization process, thus mitigating the mechanistic tendencies of the individual []. Because of the dependence of the reward on the level of awareness, this maximization also models the fact that the increase of personal awareness follows from a focus on this specific objective, emerging from personal effort and motivation [].

It is possible to include in the model the individual’s immediate emotions [,,], considering also that the role played by emotions could depend on the present level of awareness of the individual. For example, at a low level of awareness, emotions prevail over individual reasoning, so the DM is driven in their choices by the search for instant satisfaction. In this condition, the future (understood as long-term perspectives) has very little weight in their choice: the DM is enslaved by immediate reward, a condition which could turn out to be highly damaging. This ‘immediate reward’ dynamic loses its importance when the individual reaches a high level of awareness. In the mathematical model, emotions affect the function , showing how the individual weighs the future differently with respect to the present and taking into account also the dependence of function on the state .

Once the generic structure of the model has been defined, it is possible to assign specific forms to the different model’s functions to perform numerical simulations aimed to study the emergence of behaviors and dynamics. The different functions used are reported in Appendix A.

Figure 2 reports a comparison of the aware policy with a habitual policy, understood as the most basic and simplest mechanism governing an individual’s usual decisions. It assumes that decisions spring from habits, that is, automatic, non-conscious mechanisms, represented in the model by the individual’s reasoning propensity . Furthermore, it is fixed and constant in time, with the only addition of noise , as reported in Equation (5), normally distributed with mean zero and variance (where is fixed to 0.08 in the simulations). The noise accounts for a source of uncertainty, mainly due to external factors, that influences the effective choice and shifts it around the reasoning propensity of the individual.
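A habitual decision of this kind can be sketched in a few lines. The function name `habitual_action` is hypothetical, and two choices are assumptions of this example: the value 0.08 is passed as the standard deviation of the noise (the text leaves open whether it refers to the standard deviation or to the variance), and the resulting action is clipped to the interval [0, 1].

```python
import random


def habitual_action(propensity: float, rng: random.Random,
                    noise_std: float = 0.08) -> float:
    """Habitual decision: the reasoning propensity plus zero-mean Gaussian noise.

    noise_std=0.08 is an assumed reading of the simulation setting; clipping
    keeps the action between fully intuitive (0) and fully analytical (1).
    """
    action = propensity + rng.gauss(0.0, noise_std)
    return min(max(action, 0.0), 1.0)


# Usage: a sequence of habitual decisions for an individual with propensity 0.5.
rng = random.Random(42)
decisions = [habitual_action(0.5, rng) for _ in range(5)]
```

Because the policy does not depend on the state or on time, the only variation across epochs comes from the noise term.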

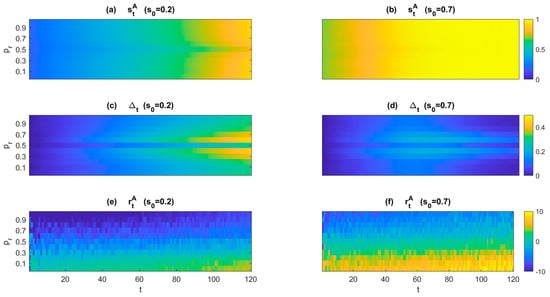

Figure 2.

Comparison between aware (A) and habitual (H) states and rewards over time, for individuals with different levels of reasoning propensities . The color bar reports the different variables of interest. For the two upper panels (a,b) the color bar indicates the level of the state, for the two middle panels (c,d) the payoff obtained by applying an aware policy with respect to the habitual one, where , and in the two bottom panels (e,f) the reward for an aware policy. All the panels on the left consider a low initial state () while the ones on the right consider a high initial level (). The aware model’s parameters used for the comparison are fixed to . The functions used to perform the simulations are described in detail in Appendix A.

On the contrary, the aware policy is embodied by the faceted optimization process described in Equations (3) and (4).

Figure 2 shows, first of all, the effect of applying the aware policy: a high final level of the state (near 1) when starting from a low state (Figure 2a), and a faster approach to the maximum state level when starting from a high initial state (Figure 2b). The shift from a habitual to an aware policy improves the dynamics of the state (Figure 2c,d), except when the initial level of awareness is high enough (see Figure 2d, where ) and after a long time horizon (the similarity at the first time instant in Figure 2c,d is due to the equal initial state assumptions). The benefit of the shift increases as the reasoning propensity grows from zero to 0.5, where there is a discontinuity at which the difference between the two situations is negligible, and then symmetrically decreases as the reasoning propensity grows further from 0.6 to 1. This is due to the symmetrical form assumed for the theoretical probability functions defined for an analytical and an intuitive individual, as expressed in Equations (8) and (A1), respectively. Finally, panels e and f highlight that an improvement of the state dynamics does not necessarily correspond to an increase in the reward, , because the model also considers costs related to the level of analyticity adopted in the decision. An individual can therefore reach a high level of the state but also incur losses, which simply indicate a high cost due to excessive use of analytic reasoning.

2.2. Interactions and Awareness: A Network Model

The framework presented so far describes the emergence of awareness by considering the decision making process of a single agent taken in isolation. However, it lacks an essential dimension: how interaction with other agents can affect the awareness dynamics of each agent. The novelty of the present work lies specifically in establishing a mathematical formulation of the interactions among agents, with the aim of studying the awareness-raising phenomenon. This interaction can be modeled through a network (also called a graph in the mathematical literature), that is, a collection of vertices (nodes) joined by links (edges) [,]. When time series of behavioral data are available for each node, the links can be derived from pairwise partial correlation coefficients, mutual entropy, and so on. Furthermore, conditional probabilities computed over some relevant variable or distance between pairs of nodes over a metric space can be used as quantifiers of edge strength []. Since the model developed in this study is based on theoretical assumptions as well as behavioral and psychological evidence of prototypical cases, the connections are undirected and weighted uniformly in the population. Estimating specific values for these edges is crucial; to this aim, suitable surveys are under development by the authors of the present paper.

According to the common notation applied in the mathematical literature, a graph is defined by giving the set of n nodes and the set of m edges linking them. The effective meaning of nodes and edges depends on the specific context in which the graph is applied: in our case, the nodes are the agents [], and the edges model a relationship among them, in the sense that each agent is influenced directly by their neighbors, i.e., all the other agents it is connected to, and indirectly by all agents without any specific dependence on the distance. There are many ways to represent a network mathematically, but typically it is given by the adjacency matrix in which each element indicates the presence or absence of a link between nodes and , and possibly the weight of that connection [].

The kind of network we are now considering has at most one edge between the same pair of vertices, in contrast to a multi-edge graph; moreover, there are no edges that connect vertices to themselves, the so-called self-edges or self-loops. A network of this type that has neither self-edges nor multi-edges is called a simple network or simple graph. The network considered is undirected and unweighted, so the adjacency matrix has all zeros on the diagonal (no self-loops) and is symmetric. All entries are equal to 1 or 0 to indicate the presence and absence, respectively, of an interaction between nodes i and j; they represent simple on/off connections among different agents.
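The properties just listed (symmetry, zero diagonal, binary entries) are easy to check on an explicit adjacency matrix. The sketch below is illustrative: the function names are hypothetical, and the Erdős–Rényi generator is used only because that topology is named in the caption of Figure 3; the edge probability `avg_degree / (n - 1)` is the standard choice that gives the requested expected degree.

```python
import random
from typing import List


def erdos_renyi_adjacency(n: int, avg_degree: float,
                          rng: random.Random) -> List[List[int]]:
    """Adjacency matrix of a simple undirected random graph on n nodes."""
    p = avg_degree / (n - 1)  # each possible edge is present with probability p
    a = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):  # upper triangle only: no self-loops
            if rng.random() < p:
                a[i][j] = a[j][i] = 1  # mirror the entry to keep symmetry
    return a


def is_simple_undirected(a: List[List[int]]) -> bool:
    """True if a is symmetric, binary, and has zeros on the diagonal."""
    n = len(a)
    for i in range(n):
        if a[i][i] != 0:
            return False
        for j in range(n):
            if a[i][j] not in (0, 1) or a[i][j] != a[j][i]:
                return False
    return True


def degrees(a: List[List[int]]) -> List[int]:
    """Degree of each node, i.e., the number of its neighbors."""
    return [sum(row) for row in a]
```

Note that a graph generated this way is not guaranteed to be connected, so a connectivity check would be needed before relying on arguments that assume a connected graph.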

As a future development, it might be interesting to consider a weighted graph by explicitly defining a certain strength/weight of each edge connecting nodes i and j, represented by . It might be equally interesting to consider a directed graph, for which the adjacency matrix is not symmetric, to capture the fact that a connection between i and j need not have the same weight in both directions.

The aim of this work is to evaluate the mutual impact of individuals’ decisions on their respective awareness dynamics. To mathematically analyze this phenomenon, it is necessary to introduce a dependence among individuals characterizing their interactions [,]. A possible way to insert this interaction is to define the state evolution of each agent as related to the state of the other agents in the network. From a mathematical point of view, we introduce a new interaction term , claiming that the state evolution for each agent is governed by the function:

For the interaction term, we can assume that there exists an imitative behavior driving people to behave like their neighbors. The same consideration is applied in the scientific literature to describe the mechanism of “gossip”, in which people tend to copy their friends: they increase the amount they talk about a topic if their friends are talking about it more than they are, and decrease it if their friends are talking about it less [].

This imitative behavior can be represented by putting:

This is the form first introduced to describe synchronizing Kuramoto oscillators [,].

If we consider, for Equation (7), the simple linear case for which , then Equation (6) reads as follows:

Equation (8) indicates that the state evolution of the agent depends on its previous state (), on the stochastic process, , and on an interaction term that describes the dynamics of all the nodes linked to node . This term is the summation of all the differences between the state values of the considered node and the ones of the nodes to which it is connected, tuned using two constant positive parameters and . These parameters reflect how the present state of the individual is weighted with respect to the ones of the neighbors (considering, in this first case, an equal coefficient for all the neighbors of node ). When the interaction term present in Equation (8) is null, and the state evolves as in the single-individual awareness model. Finally, the summation is modulated with a term , where is the degree of node (i.e., the number of its neighbors).

Recalling that the state value is defined as the level of awareness of agent at time , we can appreciate the meaning of the proposed equation. The form of the equation encompasses the fact that neighbors of with higher state values have a positive impact on the dynamics of node , while neighbors with lower values have a negative impact on it, i.e., agents with a higher and lower level of awareness can help or damage, respectively, the level of awareness of the node .
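A degree-normalized, imitation-type coupling of this kind can be sketched as follows. The exact placement of the two positive parameters in Equation (8) is not reproduced here; the weights `w_neigh` and `w_self`, the function name `coupled_step`, the synchronous update, and the clipping of the state to [0, 1] are all assumptions of this example. When the two weights are equal, the interaction reduces to a scaled average of the differences between each neighbor's state and the node's own state.

```python
from typing import List, Sequence


def coupled_step(own_next: Sequence[float], states: Sequence[float],
                 adjacency: Sequence[Sequence[int]],
                 w_neigh: float, w_self: float) -> List[float]:
    """One synchronous update: isolated dynamics plus a neighbor interaction term.

    own_next[i] is the state node i would reach in isolation at this step;
    states[i] is its current state. The interaction is divided by the degree.
    """
    n = len(states)
    updated = []
    for i in range(n):
        neighbors = [j for j in range(n) if adjacency[i][j]]
        k_i = len(neighbors)
        if k_i == 0:
            interaction = 0.0  # isolated node: single-individual dynamics
        else:
            total = sum(w_neigh * states[j] - w_self * states[i] for j in neighbors)
            interaction = total / k_i
        # Assumed: keep the awareness level inside [0, 1].
        updated.append(min(max(own_next[i] + interaction, 0.0), 1.0))
    return updated


# Usage on a three-node path graph with no isolated dynamics (own_next = states):
path = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
x = [0.0, 0.5, 1.0]
x_new = coupled_step(x, x, path, w_neigh=0.5, w_self=0.5)
```

In the usage example the two end nodes move toward the middle one, which illustrates how more aware neighbors pull a node up and less aware neighbors pull it down.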

3. Results

By applying the mathematical framework described above, it was possible to evaluate the resulting dynamics by solving the optimization problem defined in Equation (3) and analyzing the corresponding simulations.

In these simulations, we applied the aware policy described above, also considering the presence of immediate emotions. In our previous work [], we analyzed how emotions, when they depend on the level of awareness, can have a very different impact on the individual’s dynamics depending on the specific level: they can speed up the dynamics when the individual has a high state of awareness, whereas they become more damaging the lower the state is. This phenomenon can be observed in Figure 3c,d, which shows the dynamics without interaction: individuals with an initial state under a certain threshold (around 0.8) suffer from a negative impact of emotions that prevents the state from increasing.

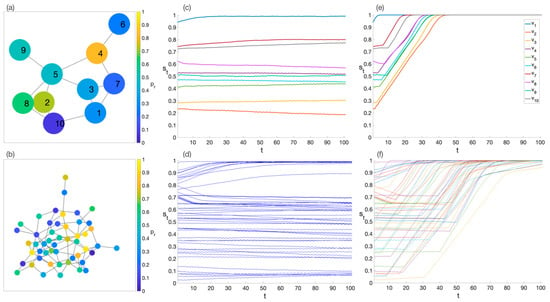

Figure 3.

State evolution in a network of 10 and 50 individuals. Panels (a,b): in both the 10-node and the 50-node cases, the graph topology is Erdős–Rényi with an average degree of 4. The colors indicate the value of the reasoning propensity () for all nodes, uniformly distributed in the open interval (0, 1). The same is done for the initial state, which is uniformly distributed in the open interval (0, 1). State dynamics without (panels c,d) and with (panels e,f) interactions among individuals. The synchronization parameters are , and the presence of emotions is included. All the other parameters are .

Moreover, Figure 3e,f highlight a synchronization mechanism that involves the individuals and diffuses over the network, becoming more evident as the number of nodes increases. This process corresponds to information spreading through the network, which leads all the individuals to reach equal values of the final state. The collapse onto a single attractor, corresponding to a uniform state of all the nodes, is a consequence of the high level of correlation between nodes, which is a universal feature of tipping points before a phase transition [,,]. The correlation effect (and the consequent generation of unanimity) is extended by the recurrence condition, which allows each individual to have a ‘complete view’ of the entire system. Because the network is represented by a connected graph, each individual has an awareness of the entire system: the state depends on the state of the neighbors, but in turn their states depend on the states of their neighbors, and so on. Therefore, ultimately, the state of each node depends on the state of all other nodes, be they closer (in the neighborhood) or further away, at the margins of the system.

Given that the decision of the individual derives from the policy, which has a specific value for each possible state at each time instant, the coupling term has an impact not only on the state dynamics but also on the choices. The policy itself remains the same and is not influenced by the network, in the sense that each individual has their own way of reasoning; however, the actual values suggested by the policy, which depend on the state evolution, are affected by the presence of interaction.
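The way in which a connected graph lets every node feel the state of every other node can be illustrated with a deliberately simplified sketch. It uses a plain linear consensus update, which is an assumption swapped in for illustration and not the coupling of the model: it contains no policy and no emotions, and it converges to the average state rather than to the maximum one. The function name and the step size `eps` are hypothetical.

```python
def consensus_step(states, neighbors, eps):
    """One synchronous update: each node moves toward its neighbors' states."""
    return [
        s + eps * sum(states[j] - s for j in neighbors[i])
        for i, s in enumerate(states)
    ]


# Path graph 0-1-2-3-4: connected, but nodes 0 and 4 are four hops apart.
neighbors = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
states = [1.0, 0.0, 0.0, 0.0, 0.0]

history = [states]
for _ in range(200):
    history.append(consensus_step(history[-1], neighbors, eps=0.2))

# Difference between the largest and smallest state at each time step.
spread = [max(s) - min(s) for s in history]
```

In this toy run, node 4 is not a neighbor of node 0, yet its state starts to change after four updates, one per hop, and the spread between states shrinks over time as the nodes synchronize.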

It is interesting to notice that over the network, the state overcomes the stopping phenomenon reported above (Figure 3c,d), enabling all the individuals to asymptotically reach the maximum level of the state, independently of their initial state (Figure 3e,f). Table 1 reports simple statistical metrics based on the mean and variance of the state across the 10- and 50-node network models with and without interaction. A rapid increase in the mean awareness level, coupled with a progressive synchronization (decrease in variance) of the different agent states, can be observed. Moreover, it is worth noticing that the lack of change over time in the ‘without interaction’ condition stems from the much longer characteristic time of the awareness increase of isolated nodes with respect to the interaction condition.

Table 1.

Statistical comparison of the model simulations developed for the two networks of Figure 2. The mean and the variance of the simulations without (Figure 3c,d) and with (Figure 3e,f) interactions are evaluated at equally spaced time instants, from the initial time t0 to the final time T. The values reported in the table are approximated to two digits.

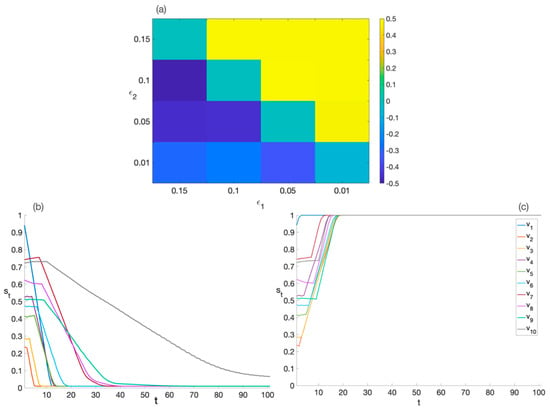
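The kind of summary reported in Table 1 can be computed with a short routine such as the one sketched below. The data layout (`trajectories[t][i]` as the state of node `i` at step `t`), the use of the population variance, and the toy values are assumptions for illustration; they are not the simulation outputs of the paper.

```python
from statistics import fmean, pvariance


def summarize(trajectories, n_samples):
    """Mean and variance across nodes at equally spaced time instants.

    trajectories[t][i] is the state of node i at time step t (assumed layout).
    Values are rounded to two decimal digits.
    """
    last = len(trajectories) - 1
    times = [round(k * last / (n_samples - 1)) for k in range(n_samples)]
    return [
        (t, round(fmean(trajectories[t]), 2), round(pvariance(trajectories[t]), 2))
        for t in times
    ]


# Toy trajectories for 3 nodes over 5 time steps (made-up numbers).
toy = [
    [0.2, 0.5, 0.8],
    [0.4, 0.6, 0.8],
    [0.6, 0.7, 0.8],
    [0.7, 0.75, 0.8],
    [0.8, 0.8, 0.8],
]
rows = summarize(toy, n_samples=3)
```

Each row holds the time index, the mean state, and the variance of the state; a rising mean together with a falling variance is the signature of synchronization discussed in the text.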

Different combinations of the two coefficients that weigh the interactions have been analyzed (see Figure 4a), highlighting the pairs that lead to a worsening or an improvement of the state evolution. We note that similar parameter values can have very different effects, which defines a diagonal of the matrix: pairs with helpful influences are positioned above the diagonal, whereas pairs with harmful influences are below it.

Figure 4.

Comparison of pairs of state evolutions, with and without interactions, for different combinations of the parameters and (panel a). The color indicates the gain obtained considering each specific pair. It is computed as , where and are the state evolutions with and without interaction, respectively, and n is the number of nodes. State evolutions for harmful (panel b, = 0.01, = 0.1) and helpful (panel c, = 0.1, = 0.01) interactions, respectively. All the other parameters are .

4. Discussion and Conclusions

The aim of this research was to propose a model characterizing the dynamics of awareness raising in individual decision making that embeds an interpersonal perspective, taking into account the bi-directional influence of other agents. To do this, we considered a simple and immediately comprehensible coupling term, according to which the state evolution of each individual depends on the state of all their neighbors. This term introduces into the state evolution of each node a factor that depends on the difference between its state and that of each of its neighbors, weighted by two constant factors. This term implies that, for each node:

- Neighbors with higher state level are helpful in the state evolution.

- Neighbors with lower state level are harmful in the state evolution.

Since the considered network is connected, each node is influenced directly or indirectly by the states of all the other nodes in the graph.

For particular combinations of the two coupling coefficients, a synchronization mechanism is observed among the nodes of the network, allowing each individual to reach the highest level of the state. This is an interesting way to overcome the problems related to the presence of emotions: for individuals starting from low states of awareness, it enables a further increase of the state.

Different coupling mechanisms can be considered, also taking into account more complex relationships among individuals, such as weighted and directed graphs, as well as different kinds of random graph topologies, such as scale-free degree distributions that have been recognized as ubiquitous in social relations.

In this work, the focus is on personal choices made with an explicit intention/motivation of increasing personal awareness; consequently, each decision maker has their own way of reasoning, which cannot be modified, but can certainly be influenced, by the other individuals. It is said that “people seek the truth collectively, not individually” [], and the awareness-raising process must also take this collective perspective into account. It is essential to enrich personal self-awareness with knowledge of the external environment, for example by considering the state of all the other individuals to whom a decision maker is inevitably related in their decisions. We have analyzed how this impact can also provide beneficial stimulation, allowing the individual to overcome personal phenomena that prevent a further raising of awareness.

To the best of our knowledge, this is the first explicit quantitative model addressing awareness dynamics in an interpersonal context. This entails the obvious limitation that a comparison with alternative formulations and, more importantly, with real-life situations is not possible. As for this second point, we are planning a benchmark study with suitable real-life data. The data sources should be carefully chosen in order to obtain reliable proxies of collective awareness raising: we speculate that the ideal situation could be a training process, as in introductory sport, dance, or music schools. The benefits of the proposed model are its complete transparency and analytical character, which allow for a full explanation of the obtained results.

Author Contributions

Conceptualization, F.B., A.G. and C.M.; methodology and formal analysis, C.M. and F.B.; software, F.B.; validation, F.B., A.G. and C.M.; writing—original draft preparation, F.B.; writing—review and editing, C.M. and A.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No real data have been used for this study. The Matlab code developed for the simulations can be made available upon request.

Acknowledgments

F.B. and C.M. thank Jean Carlo Pech de Moraes for the fruitful meetings, suggestions and for his encouragement to proceed with this study.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Model Functions and Parameters Specification

This appendix reports the specific functional forms used in the model simulations reported in the paper.

Equations (A1) and (A2) describe the reward functions at a generic time t and at the final time T, respectively, while Equation (A3) defines the function regulating the state evolution over time. It incorporates a dependence on the stochastic variable that can assume values in the set {−, according to the forward, stationary, and backward transition probabilities, , , and . The forward probability results from the linear combination of two basic theoretical functions, and , representing the propensities of individuals towards analytic or intuitive reasoning. The propensities are described by Equations (A4) and (A5), where is introduced to resize the variable so that it belongs to the set and to allow the propensities and laying in the interval . The propensity to the intuitive reasoning is shaped similarly to the one proposed by Moerland et al. in their experimental research []. and are combined using as coefficient the reasoning propensity of the individual, thus giving rise to the forward transition probability of Equation (A6). The stationary transition probability is assumed constant (Equation (A7)). Finally, the backward transition probability is obtained by difference from the previous two, as described by Equation (A8).
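The construction of the three transition probabilities can be sketched as follows. Several details are assumptions made for illustration only: the propensity values are passed in as plain numbers rather than computed from Equations (A4) and (A5), the linear combination is taken to be convex with the reasoning propensity `r` weighting the analytic term, and the labels +1, 0 and −1 are used for the forward, stationary and backward transitions. All names are hypothetical.

```python
import random


def transition_probabilities(f_analytic, f_intuitive, r, p_stay):
    """Forward, stationary and backward transition probabilities.

    Assumption: the forward probability is the convex combination
    r * f_analytic + (1 - r) * f_intuitive; the backward probability is
    obtained by difference from the other two.
    """
    p_forward = r * f_analytic + (1.0 - r) * f_intuitive
    p_backward = 1.0 - p_forward - p_stay
    if min(p_forward, p_stay, p_backward) < 0.0:
        raise ValueError("inputs do not define a valid probability distribution")
    return p_forward, p_stay, p_backward


def sample_step(probs, rng):
    """Draw one transition; +1, 0, -1 are assumed labels for the three outcomes."""
    return rng.choices([+1, 0, -1], weights=probs, k=1)[0]


# Illustrative values only, not the parameters used in the simulations.
probs = transition_probabilities(f_analytic=0.6, f_intuitive=0.2, r=0.5, p_stay=0.1)
step = sample_step(probs, random.Random(0))
```

Obtaining the backward probability by difference guarantees that the three values sum to one, provided the propensities are scaled so that the forward and stationary probabilities do not exceed one together.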

The parameters’ setting for , and the initial state included in the model equations are varied in the simulations and will be clarified in the different reports of the results. Other parameters are assumed equal for all the simulations and set to the values , , , .

References

- Vaneechoutte, M. Experience, Awareness and Consciousness: Suggestions for definitions as offered by an evolutionary approach. Found. Sci. 2000, 5, 429–456.

- Bizzarri, F.; Giuliani, A.; Mocenni, C. Awareness: An empirical model. Front. Psychol. 2022, 13, 933183.

- Steixner-Kumar, S.; Rusch, T.; Doshi, P.; Spezio, M.; Gläscher, J. Humans depart from optimal computational models of interactive decision-making during competition under partial information. Sci. Rep. 2022, 12, 289.

- Lake, B.; Ullman, T.; Tenenbaum, J.; Gershman, S. Building machines that learn and think like people. Behav. Brain Sci. 2017, 40, e253.

- Naveed Uddin, M. Cognitive science and artificial intelligence: Simulating the human mind and its complexity. Cogn. Comput. Syst. 2019, 1, 113–116.

- Casalino, A.; Messeri, C.; Pozzi, M.; Zanchettin, A.M.; Rocco, P.; Prattichizzo, D. Operator awareness in human–robot collaboration through wearable vibrotactile feedback. IEEE Robot. Autom. Lett. 2018, 3, 4289–4296.

- Carden, J.; Jones, R.J.; Passmore, J. Defining self-awareness in the context of adult development: A systematic literature review. J. Manag. Educ. 2022, 46, 140–177.

- Laske, O.E. From coach training to coach education: Teaching coaching within a comprehensively evidence-based framework. Int. J. Evid.-Based Coach. Mentor. 2006, 4, 45–57.

- Allinson, C.W.; Hayes, J. The cognitive style index: A measure of intuition-analysis for organizational research. J. Manag. Stud. 1996, 33, 119–135.

- Baumeister, R.; Masicampo, E.; DeWall, C. Arguing, reasoning, and the interpersonal (cultural) functions of human consciousness. Behav. Brain Sci. 2011, 34, 74.

- Liu, X.; Li, D.; Ma, M.; Szymanski, B.K.; Stanley, H.E.; Gao, J. Network resilience. Phys. Rep. 2022, 971, 1–108.

- Baumeister, R.F. The nature and structure of the self. In The Self in Social Psychology; Psychology Press: Philadelphia, PA, USA, 1999; pp. 1–20.

- Hall, D.T. Self-awareness, identity, and leader development. In Leader Development for Transforming Organizations; Psychology Press: Philadelphia, PA, USA, 2004; pp. 173–196.

- Taylor, S.N. Redefining leader self-awareness by integrating the second component of self-awareness. J. Leadersh. Stud. 2010, 3, 57–68.

- Avolio, B.J.; Griffith, J.; Wernsing, T.S.; Walumbwa, F.O. What is authentic leadership development? In Oxford Handbook of Positive Psychology and Work; Oxford University Press: Oxford, UK, 2010; pp. 39–51.

- Uhl-Bien, M. Relational leadership theory: Exploring the social processes of leadership and organizing. Leadersh. Q. 2006, 17, 654–676.

- Puterman, M.L. Markov Decision Processes: Discrete Stochastic Dynamic Programming; John Wiley & Sons: Hoboken, NJ, USA, 2014.

- Landry, P.; Webb, R.; Camerer, C.F. A Neural Autopilot Theory of Habit. Soc. Sci. Res. Netw. 2021, 41, 185–190.

- Bizzarri, F.; Mocenni, C. Awareness. Acad. Lett. 2022, 4688.

- Kahneman, D. Thinking, Fast and Slow; Farrar, Straus and Giroux: New York, NY, USA, 2017.

- Hodgkinson, G.P.; Langan-Fox, J.; Sadler-Smith, E. Intuition: A fundamental bridging construct in the behavioural sciences. Br. J. Psychol. 2008, 99, 1–27.

- Burda, Y.; Edwards, H.; Pathak, D.; Storkey, A.; Darrell, T.; Efros, A.A. Large-scale study of curiosity-driven learning. arXiv 2018, arXiv:1808.04355.

- Loewenstein, G. Emotions in economic theory and economic behavior. Am. Econ. Rev. 2000, 90, 426–432.

- Soosalu, G.; Henwood, S.; Deo, A. Head, heart, and gut in decision making: Development of a multiple brain preference questionnaire. SAGE Open 2019, 9, 2158244019837439.

- Sayegh, L.; Anthony, W.P.; Perrewé, P.L. Managerial decision-making under crisis: The role of emotion in an intuitive decision process. Hum. Resour. Manag. Rev. 2004, 14, 179–199.

- Newman, M. Networks: An Introduction; Oxford University Press: Oxford, UK, 2018.

- Kivelä, M.; Arenas, A.; Barthelemy, M.; Gleeson, J.P.; Moreno, Y.; Porter, M.A. Multilayer networks. J. Complex Netw. 2014, 2, 203–271.

- Borsboom, D.; Deserno, M.K.; Rhemtulla, M.; Epskamp, S.; Fried, E.I.; McNally, R.J.; Waldorp, L.J. Network analysis of multivariate data in psychological science. Nat. Rev. Methods Prim. 2021, 1, 58.

- Bandura, A. Social cognitive theory: An agentic perspective. Annu. Rev. Psychol. 2001, 52, 1–26.

- Pikovsky, A.; Rosenblum, M.; Kurths, J. Synchronization: A Universal Concept in Nonlinear Science; Cambridge University Press: New York, NY, USA, 2002.

- Gómez-Gardeñes, J.; Moreno, Y. From scale-free to Erdos-Rényi networks. Phys. Rev. E 2006, 73, 056124.

- Rodrigues, F.A.; Peron, T.K.D.; Ji, P.; Kurths, J. The Kuramoto model in complex networks. Phys. Rep. 2016, 610, 1–98.

- O’Keeffe, K.P.; Hong, H.; Strogatz, S.H. Oscillators that sync and swarm. Nat. Commun. 2017, 8, 1504.

- Gorban, A.N.; Smirnova, E.V.; Tyukina, T.A. Correlations, risk and crisis: From physiology to finance. Phys. A Stat. Mech. Its Appl. 2010, 389, 3193–3217.

- Karsenti, E. Self-organization in cell biology: A brief history. Nat. Rev. Mol. Cell Biol. 2008, 9, 255–262.

- Moerland, T.M.; Deichler, A.; Baldi, S.; Broekens, J.; Jonker, C.M. Think too fast nor too slow: The computational trade-off between planning and reinforcement learning. arXiv 2020, arXiv:2005.07404.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).