Abstract

Twitter, one of the most important social media platforms, has been in the headlines regularly since its acquisition by Elon Musk in October 2022. This acquisition has had a strong impact on the employees, functionality, and discourse on Twitter. So far, however, no analysis has examined how users perceived the acquisition on the platform itself. In this paper, we therefore use georeferenced Tweets from the US and classify them using a polarity-based sentiment analysis. We find that the number of Tweets about Twitter and Elon Musk has increased considerably, as have negative sentiments on the subject. Using a spatial hot spot analysis, we find distinct centres of discourse, but no clear evidence that they changed significantly over time. On the West Coast, however, we suspect the first signs of polarisation, which could be an important indication for the future development of discourse on Twitter.

1. Introduction

Social media has long established itself as a central medium of communication between people. For publicly sharing information and opinions, the short-message service Twitter is particularly popular. Twitter has existed since 2006 and has 237.8 million monetizable daily active users worldwide [1], while the number of users in the United States (US) is 76.9 million [2]. Twitter was in the headlines regularly throughout 2022. In April 2022, South African investor Elon Musk became Twitter’s largest shareholder with a 9.1% stake. On 14 April, Musk made an unsolicited offer to take over the entire company, which, after some reluctance, was accepted on 25 April for USD 44 billion. The acquisition was completed on 27 October, making Elon Musk the new owner and CEO of Twitter. The acquisition had an immediate and profound impact on the company’s structure: Musk laid off about half of the workforce, and many other employees quit [3,4]. Although promoted under the banner of increasing “freedom of speech” [5], the reforms announced by Musk received a very mixed reception in the media. Examples include the reinstatement of the banned former US president Donald Trump and the restructuring of profile verification, which was accompanied by trolling and misinformation [6,7].

The media evaluation of the Twitter acquisition mentioned above, however, largely draws on public sources such as newspapers. A detailed evaluation of Elon Musk’s acquisition of Twitter based on Tweets has not yet been carried out. For this paper, we therefore conducted the first such study by analysing georeferenced Tweets from late 2022 and classifying them using a sentiment analysis method. Based on this classification, we were then able to make statements about the temporal and spatial distribution of opinions regarding the Twitter acquisition. For this, we performed a spatial hot spot analysis at the county level. Our paper addresses the following two research questions (RQ):

- RQ1: How was the acquisition of Twitter by Elon Musk perceived by Twitter users in the US?

- RQ2: Are there any regional differences in this perception?

The paper follows a classical structure: we first review related research, and then outline our methodology in more detail, especially with regard to sentiment analysis and spatial hot spot analysis. After describing our results, we discuss them critically.

2. Literature Review

Georeferenced Twitter data have been used for the analysis of many different use cases, such as natural disasters [8], refugee crises [9], or epidemiology [10]. In addition to statistical analyses or topic modelling approaches for semantic classification, sentiment analysis methods are also used frequently. Sentiment analysis is a branch of research that analyses people’s feelings, opinions, evaluations and emotions about certain objects or persons and their characteristics from textual data [11]. It aims to assign general sentiment labels to a data set [12]. It is commonly understood as a sub-discipline of natural language processing (NLP) and semantic analysis [13].

In psychology, a distinction is often made between emotion, i.e., a distinct feeling, and sentiment, which can be understood as a mental attitude based on an emotion. However, this distinction is quite blurry, as is the difference between sentiment and opinion. Sentiment can be defined as a combination of a domain-dependent type, valence (positive, neutral, or negative) and intensity. The object to which a sentiment refers can also vary greatly. Sentiment analysis can thus be performed at different levels of granularity, i.e., on entire texts, sentences or entities such as single words [11]. As with other machine learning tasks, there are both supervised (e.g., simple decision trees) and unsupervised methods [14]. In recent years, deep-learning-based methods have also been developed: convolutional neural networks (CNNs) [15], recurrent neural networks (RNNs) [16] and deep belief networks (DBNs) have all been applied to sentiment analysis tasks [17]. General challenges include the handling of sarcasm, ambiguous word meanings, the absence of sentiment words, and the disregard of pronouns [11,12].

There have been many studies on a plethora of topics that use sentiment analysis methods on social media data, mainly from Twitter. For example, [18] use Twitter data to analyse the sentiments surrounding the 2012 London Olympics. To this end, they consider different timestamps and user groups and employ the Hu Liu lexicon of positive and negative words. In a study on the perception of urban parks, [19] use a partitioning around medoids clustering algorithm to combine sentiment scores and emotion detection results. Another study on the subject employs a graph-based semi-supervised learning approach [20]. In a study on Brexit, [21] use ensemble learning to identify pro- and anti-sentiments. Using Twitter data and the VADER (Valence Aware Dictionary and Sentiment Reasoner) lexicon, [22] examine the online reception of the murder of the Slovak journalist Ján Kuciak. There has also been research on economic phenomena using Twitter data. Valle-Cruz et al. [23] analyse the influence of prominent Twitter accounts on the behaviour of financial indices, finding that during the COVID-19 pandemic, markets reacted 0 to 10 days after critical information was posted on Twitter. Similarly, [24] create social sentiment indexes for large publicly traded companies from Twitter data to describe the effects of negative information on stock prices. Various deep learning methods have also been applied to sentiment analysis. For instance, [25] use a bidirectional long short-term memory (BiLSTM) network to classify sentiments regarding COVID-19, reporting very high accuracy and sensitivity. The COVID-19 pandemic in general has been a popular research object for social media-based sentiment analysis. Using the AFINN lexicon, [26] analyse opinions towards different COVID-19 vaccines and find that the sentiment towards some vaccines turned negative over time. Using a logistic regression classifier, [27] split Tweets on COVID-19 vaccinations in Mexico into positive and negative ones. A similar study in Indonesia was performed by [28], who employ a naive Bayes classifier.

To our knowledge, this is the first scientific paper that deals with the reception and perception of the 2022 Twitter acquisition. It therefore remains unclear how the acquisition of Twitter by Elon Musk was received on the platform itself, which constitutes a distinct research gap. The wide range of studies outlined in this section that use emotions and opinions expressed on Twitter highlights the importance of a clear understanding of the characteristics of Twitter data. Filling this research gap is therefore of great significance for future research with Twitter data, as the acquisition might result in changes in content, user structure and attitudes. The study presented in this paper provides an initial indication of how Twitter might develop in the future.

3. Materials and Methods

The following section will outline the methodological steps of our analysis. First, we will present our data selection process, before explaining how we performed a sentiment and subsequent spatial hot spot analysis.

3.1. Data

Twitter provides access to its data through various application programming interfaces (APIs), in particular the streaming API and the REST API, both of which we used for our data acquisition. For this, we used a proprietary Java-based crawler that collects Tweets from the last 7 days [8,10]. We queried georeferenced Tweets, which contain either a point geometry (based on the GPS data of the sending device) or a polygon geometry (based on the bounding box of a location specified by the user). This information is independent of the user’s profile location. Twitter additionally provides information such as a precise timestamp, the full text and details about the user. The study area was limited to the contiguous US. To somewhat reduce the inaccuracy of the bounding box geometries, we only kept Tweets whose geometry lay entirely within a single county. We additionally filtered the data temporally, only considering Tweets posted between 1 October 2022 and 23 November 2022 (inclusive). Based purely on our coarse bounding box and timeframe, we collected 35,998,468 Tweets and stored them in a PostgreSQL database, chosen because it is open source and, with its PostGIS extension, well suited to large geospatial data sets. Since we assumed that only a fraction of these Tweets were relevant for our analyses, we then applied keyword-based filtering. To keep the bias in the data selection as low as possible, we limited ourselves to the two terms “Elon Musk” and “Twitter”, while trying to capture different spellings. Accordingly, our search terms were “elon musk”, “ elon”, “elon ”, “#elonmusk”, “#elon” and “twitter”. For filtering, we converted the entire Tweet text to lower case. Consequently, texts including hashtags such as #TwitterIsDead, #TwitterCEO or #ElonMuskBuysTwitter were also identified by our approach. The resulting data set consisted of 97,662 relevant Tweets with a georeference within the US, which we used for the subsequent analyses.
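The keyword and date filtering described above can be sketched in Python as follows. This is a minimal illustration, not the authors’ crawler or database query: the function names are hypothetical, and we assume each Tweet is available as a pair of its text and a `datetime` timestamp. The spatial filter (geometry entirely within one county) is left out, as it would require a GIS library or the PostGIS database.

```python
from datetime import date, datetime

# Search terms as listed in Section 3.1; the spaces around "elon" are deliberate
# so that words such as "belong" do not match. Plain substring matching remains
# approximate: " elon" would, for example, still match " elongate".
SEARCH_TERMS = ("elon musk", " elon", "elon ", "#elonmusk", "#elon", "twitter")

# Study period, inclusive on both ends.
START = date(2022, 10, 1)
END = date(2022, 11, 23)


def is_relevant(text: str) -> bool:
    """Return True if the lower-cased Tweet text contains any search term."""
    lowered = text.lower()
    return any(term in lowered for term in SEARCH_TERMS)


def in_study_period(created_at: datetime) -> bool:
    """Return True if the Tweet was posted within the study period."""
    return START <= created_at.date() <= END


def filter_tweets(tweets):
    """Keep (text, created_at) pairs that pass both the keyword and date filters."""
    return [(text, ts) for text, ts in tweets if is_relevant(text) and in_study_period(ts)]
```

Because the text is lower-cased first, hashtags such as #TwitterIsDead are caught by the plain “twitter” term.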

3.2. Sentiment Analysis

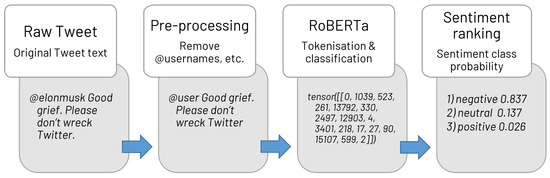

For our data set, we performed a simple document-level, polarity-based sentiment analysis (cf. Figure 1) in Python 3.9 to categorise each Tweet as “positive”, “neutral” or “negative”. For this, we used a state-of-the-art RoBERTa-base sentiment model (GitHub repository: https://github.com/cardiffnlp/timelms (accessed on 28 December 2022); Hugging Face model card: https://huggingface.co/cardiffnlp/twitter-roberta-base-sentiment-latest (accessed on 28 December 2022)) [29]. It is based on the original RoBERTa-base model [11] and was further trained on 123.86 million Tweets acquired through the Twitter Academic API between January 2018 and December 2021. This Twitter RoBERTa-base model was then fine-tuned for document-level sentiment analysis using the TweetEval benchmark [30], which comprises seven heterogeneous Twitter-specific classification tasks, including hate speech detection, irony detection and sentiment analysis. The sentiment analysis task in TweetEval uses the SemEval-2017 data set for Subtask A [31] as a baseline for model comparison. On this task, the model by [29] outperforms RoBERTa-base, BERTweet, a bidirectional LSTM and other models. When applied, the model predicts a softmax score for each of the three labels (negative, neutral and positive), indicating each label’s probability. These probabilities are ranked, and the most probable label is assigned to the Tweet. In this case study, we did not take into account how high the softmax score for the top label was, i.e., how certain the model was about the respective label, but simply accepted the most likely label as the final sentiment label for each Tweet in our data set.

Figure 1.

Workflow of sentiment analysis with example Tweet.
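The label assignment step can be illustrated without the model itself. The sketch below assumes that the three raw output scores (logits) for a Tweet are already available, for instance from the Hugging Face model referenced above, and that they are ordered negative, neutral, positive; it applies a softmax and picks the most probable label. The function names are illustrative, not part of any library.

```python
import math

# Label order assumed to be 0 = negative, 1 = neutral, 2 = positive.
LABELS = ("negative", "neutral", "positive")


def softmax(logits):
    """Convert raw model outputs (logits) into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def assign_label(logits):
    """Return the most probable label and the full probability vector.

    As in the study, the confidence of the top label is not used for
    filtering; the argmax is accepted as the final sentiment label.
    """
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best], probs
```

A threshold on the top probability could be added to discard uncertain predictions, but the study deliberately did not do this.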

The model required only minimal preprocessing, so as to preserve as much of the information contained in the text as possible. The input text was first split into a list of individual words; user references (indicated by “@” followed by at least one additional character) were replaced with a generic “@user” token, and all weblinks were reduced to “http” to indicate the presence of a link. The adapted word tokens were then re-joined into a single string and passed to the tokenizer, which generated a tensor, i.e., a numeric representation of the text, along with an attention mask. These were the final inputs to the model. It is important to note that our sentiment analysis was a document-level classification task, in which a document (i.e., a Tweet) can consist of several sentences, and each part of a sentence can have a different polarity. This means that all sentiments contained within a Tweet influenced the single final score, effectively averaging them whenever more than one sentiment was detected. For example, if a Tweet consisted of two sentences with opposing sentiments, they could cancel each other out. This approach to sentiment classification therefore came with an inherent drawback in the form of fine-grained information loss at the aspect level. Regarding the results of this case study, we considered all “neutral” Tweets to be the product of one of two scenarios: either the Tweet had a consistently neutral polarity, or it contained both positive and negative polarities that cancelled each other out. As this compromised the interpretability of the neutral label, we excluded “neutral” Tweets from the subsequent hot spot analysis.
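The preprocessing described above can be sketched as a small function. It is modelled on the helper shown on the Hugging Face model card, but the name `preprocess` and the assumption that Tweets are split on single spaces are ours.

```python
def preprocess(text: str) -> str:
    """Minimal Tweet preprocessing as described in Section 3.2.

    User mentions ("@" followed by at least one character) become "@user",
    and tokens starting with "http" become "http".
    """
    tokens = []
    for token in text.split(" "):
        if token.startswith("@") and len(token) > 1:
            token = "@user"
        elif token.startswith("http"):
            token = "http"
        tokens.append(token)
    # Re-join the adapted tokens into a single string for the tokenizer.
    return " ".join(tokens)
```

The returned string would then be passed to the model’s tokenizer, e.g. one loaded with `AutoTokenizer.from_pretrained("cardiffnlp/twitter-roberta-base-sentiment-latest")` from the `transformers` library, which returns the input IDs and the attention mask.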

3.3. Hot Spot Analysis

With the results of the sentiment analysis, i.e., a sentiment label for each Tweet, we then performed a spatial hot spot analysis. For this, we used the classic Getis-Ord Gi* algorithm implemented in ArcGIS Pro (version 2.8.8). This method considers the values of spatially adjacent observations, defined by a spatial weights matrix, and detects so-called hot and cold spots. It tests whether the null hypothesis, namely that the spatial clustering of high attribute values is purely random, can be rejected. The output is a Z score for each feature, which indicates whether it lies in a cluster of high (hot spot) or low (cold spot) attribute values. The Getis-Ord Gi* statistic can be described by Formula (1):

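$$G_i^{*} = \frac{\sum_{j=1}^{n} w_{i,j}\, x_j - \bar{X} \sum_{j=1}^{n} w_{i,j}}{S \sqrt{\dfrac{n \sum_{j=1}^{n} w_{i,j}^{2} - \left(\sum_{j=1}^{n} w_{i,j}\right)^{2}}{n-1}}} \tag{1}$$

where $x_j$ is the attribute value of feature $j$; $w_{i,j}$ is the spatial weight between features $i$ and $j$; $n$ is the sample size; $\bar{X} = \frac{1}{n}\sum_{j=1}^{n} x_j$ is the mean of the data set; and $S = \sqrt{\frac{1}{n}\sum_{j=1}^{n} x_j^{2} - \bar{X}^{2}}$ is the standard deviation of the data set [32].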
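To show Formula (1) in executable form, the following pure-Python sketch computes Gi* Z scores from a list of attribute values and a dense spatial weights matrix. It is an illustration only, not the ArcGIS Pro implementation used in the study; we assume binary weights with the focal feature included in its own neighbourhood ($w_{i,i} = 1$), the usual convention for Gi*.

```python
import math


def getis_ord_gi_star(values, weights):
    """Compute the Getis-Ord Gi* Z score for every feature.

    values  -- attribute value x_j of each feature (e.g. a county's Tweet ratio)
    weights -- n x n matrix where weights[i][j] is w_ij; for Gi* the focal
               feature is normally included in its own neighbourhood (w_ii = 1)
    """
    n = len(values)
    mean = sum(values) / n
    # Population standard deviation S, as defined below Formula (1)
    s = math.sqrt(sum(x * x for x in values) / n - mean * mean)
    z_scores = []
    for i in range(n):
        w = weights[i]
        sum_w = sum(w)
        sum_wx = sum(wij * xj for wij, xj in zip(w, values))
        sum_w2 = sum(wij * wij for wij in w)
        numerator = sum_wx - mean * sum_w
        denominator = s * math.sqrt((n * sum_w2 - sum_w ** 2) / (n - 1))
        z_scores.append(numerator / denominator)
    return z_scores
```

For five features on a line with values 1 to 5 and each feature’s immediate neighbours (plus itself) as its neighbourhood, the two low-valued end features obtain negative Z scores (cold spot tendency), the middle feature 0, and the high-valued features positive Z scores.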

For this analysis, we only considered the Tweets classified as “positive” or “negative”, which we examined separately. Furthermore, we split our data into two periods: one before the Twitter acquisition (1–26 October 2022) and one after it (27 October–23 November 2022). Consequently, we ran four separate hot spot analyses. For each of these, we aggregated our data at the county level, i.e., we counted the number of all Tweets and the number of relevant Tweets with the respective sentiment within each US county. From these counts, we calculated a ratio (relevant Tweets/all Tweets), which served as the actual input attribute for the hot spot analysis. To represent the spatial neighbourhood, we chose the k-nearest neighbours (KNN) approach (k = 8).
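The county-level input to each hot spot run, together with the KNN neighbourhood, could be prepared roughly as follows. This is a hypothetical pure-Python sketch rather than the ArcGIS Pro workflow: we assume every Tweet has already been assigned a county identifier, that “all Tweets” refers to the full georeferenced data set of a county, and that county centroids are given in a projected coordinate system. The function names are illustrative.

```python
import math
from collections import Counter


def sentiment_ratios(all_counties, relevant, sentiment):
    """Ratio of relevant Tweets with the given sentiment to all Tweets per county.

    all_counties -- county ID of every Tweet in the full data set
    relevant     -- (county ID, sentiment label) for every relevant Tweet
    sentiment    -- "positive" or "negative"
    Counties without any Tweet do not appear in the result.
    """
    totals = Counter(all_counties)
    hits = Counter(cid for cid, label in relevant if label == sentiment)
    return {cid: hits[cid] / n for cid, n in totals.items()}


def knn_weights(centroids, k=8, include_self=True):
    """Binary k-nearest-neighbour weights matrix from (x, y) centroids.

    Assumes projected coordinates, so that Euclidean distance is meaningful.
    With include_self=True the focal county is part of its own neighbourhood,
    as is conventional for Gi*.
    """
    n = len(centroids)
    weights = [[0] * n for _ in range(n)]
    for i, (xi, yi) in enumerate(centroids):
        others = sorted(
            (j for j in range(n) if j != i),
            key=lambda j: math.hypot(centroids[j][0] - xi, centroids[j][1] - yi),
        )
        for j in others[:k]:
            weights[i][j] = 1
        if include_self:
            weights[i][i] = 1
    return weights
```

Running `sentiment_ratios` once per sentiment and per period yields the four input attributes; note that KNN weights are generally asymmetric, since county A can be among B’s nearest neighbours without the reverse being true.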

4. Results

In the following section, we describe our results, focusing mainly on the distribution of sentiment labels and the outputs of the spatial hot spot analysis. We split our data set into two periods, one representing the timeframe before the Twitter acquisition (1–26 October 2022) and one representing the timeframe after it (27 October–23 November 2022), and our results are structured accordingly. In general, the spatial distribution of our Twitter data corresponded quite well to the population distribution in the US, consistent with findings in the literature [33]. Consequently, most Tweets were located in the vicinity of major agglomerations such as New York City, Los Angeles or Chicago, whereas the states in the central US were characterised by data scarcity.

4.1. Sentiment Analysis

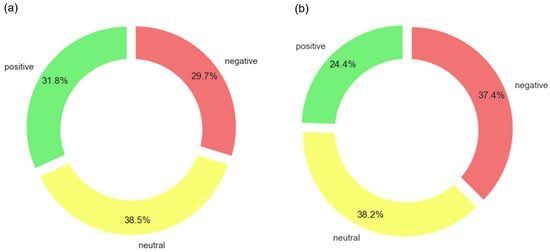

Figure 2 shows how many Tweets were assigned to each sentiment class per timeframe. In the first timeframe, 31.8% (n = 9571) of the Tweets in our data set had a positive sentiment, while 29.7% (n = 8962) were classified as negative. The largest share were neutral Tweets (38.5%, n = 11,595). After the Twitter acquisition, this distribution changed considerably, although the share of neutral Tweets remained practically the same (38.2%, n = 25,767). The share of positive Tweets about Twitter or Elon Musk decreased to 24.4% (n = 16,487), while the share of negative Tweets increased to 37.4% (n = 25,280).

Figure 2.

Distribution of sentiment classes (n = 97,662). (a) before Twitter acquisition (n = 30,128), (b) after Twitter acquisition (n = 67,534).
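The shares in Figure 2 follow directly from the class counts; the short Python check below recomputes them from the counts reported above. The dictionary layout and function name are purely illustrative.

```python
# Sentiment counts per timeframe as reported in Section 4.1 and Figure 2.
COUNTS = {
    "before": {"positive": 9571, "negative": 8962, "neutral": 11595},
    "after": {"positive": 16487, "negative": 25280, "neutral": 25767},
}


def shares(counts):
    """Return each class's percentage of the timeframe total, rounded to 0.1."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


for period, counts in COUNTS.items():
    print(period, sum(counts.values()), shares(counts))
```

The check also makes clear that the neutral share barely moves (38.5% vs. 38.2%) even though the absolute number of neutral Tweets more than doubles (11,595 to 25,767).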

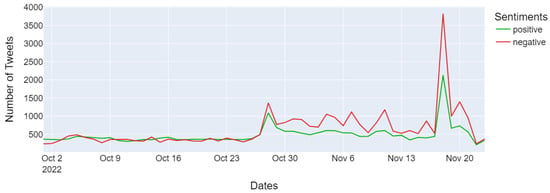

The timeline in Figure 3 shows how the daily number of Tweets containing the search terms “Twitter” or “Elon Musk” changed during the study period. The mean number of relevant Tweets per day was 1808.6, while the median was 1405.5. During roughly the first half of the period, no major changes could be detected. From 27 October, the date of Elon Musk’s acquisition of Twitter, however, the numbers increased sharply (more than doubling) and subsequently remained at a considerably higher level. A clear peak emerged on 18 November, when 9725 relevant Tweets were sent in the study area, 3824 of which were negative. This was also the day with the most Tweets in every sentiment class. There was also a small spike in negative Tweets on 20 November. In the first part of our study period, there were mostly more Tweets with positive than with negative sentiment per day. This changed on 27 October, the day of the takeover, after which our data set contained more negative than positive Tweets every day.

Figure 3.

Timeline of relevant Tweets and their respective sentiment scores.
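The daily mean and median can be computed from per-day counts as in the sketch below, assuming each relevant Tweet has been reduced to its posting date. Days without any relevant Tweet are counted as zero; the function names are illustrative.

```python
from collections import Counter
from datetime import date, timedelta
from statistics import mean, median


def daily_counts(tweet_dates, start=date(2022, 10, 1), end=date(2022, 11, 23)):
    """Count Tweets per calendar day, including days without any Tweets."""
    counts = Counter(tweet_dates)
    n_days = (end - start).days + 1  # inclusive study period
    return [counts[start + timedelta(days=d)] for d in range(n_days)]


def summarise(tweet_dates):
    """Return the mean and median number of Tweets per day."""
    per_day = daily_counts(tweet_dates)
    return mean(per_day), median(per_day)
```

The study period spans 54 days, so 97,662 relevant Tweets correspond to a mean of 97,662 / 54 ≈ 1808.6 Tweets per day, matching the value reported above; the lower median reflects the skew introduced by the post-acquisition surge.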

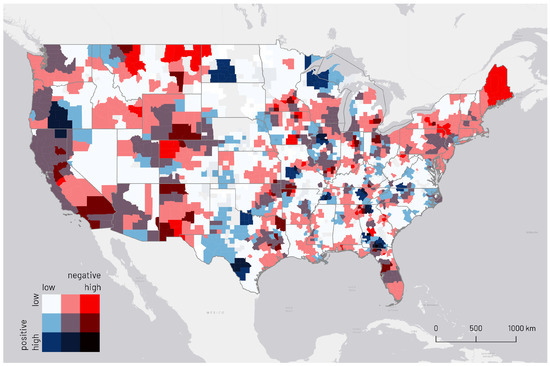

4.2. Hot Spot Analysis

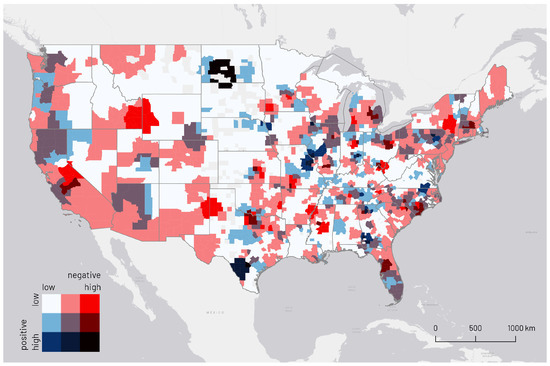

We visualised the results of our spatial hot spot analysis as bivariate choropleth maps, which show the hot spots of both positive and negative sentiments at the county level. We created two maps: one for the timeframe before and one for the timeframe after the acquisition. For easier comparability, we used the same range of values for the colour scale of both maps. Both sentiment categories were divided into three classes: low, medium and high. Low referred to Z scores ≤ 0, while medium included Z scores > 0 and < 2, indicating weak hot spots. The high class only included Z scores > 2. The stronger the shade of red, the higher the Z score of the negative sentiments; the same applies to the positive sentiments in shades of blue. We chose these classes because we were mainly interested in identifying hot spots of the respective sentiment; cold spots were not of particular interest for our study. In white regions, neither negative nor positive sentiments formed hot spots (both were classified as low). Some counties were not coloured at all because no Tweets had been posted there.
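The three-class scheme behind the bivariate legend can be expressed as a small lookup. This is an illustrative Python sketch with hypothetical function names; because the text leaves a Z score of exactly 2 unassigned, the sketch places it in the high class as an assumption.

```python
def z_class(z: float) -> str:
    """Map a Gi* Z score to the three classes used in Figures 4 and 5."""
    if z <= 0:
        return "low"
    if z < 2:
        return "medium"  # weak hot spot
    # The text defines high as Z > 2; assigning Z == 2 here is an assumption.
    return "high"


def bivariate_class(z_negative: float, z_positive: float) -> tuple:
    """Combine both classes into one of the nine cells of the bivariate legend."""
    return z_class(z_negative), z_class(z_positive)
```

A county with a strong negative hot spot and a weak positive one would, for example, fall into the (“high”, “medium”) cell, rendered in a saturated red mixed with a light blue.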

Figure 4 is a bivariate map containing both positive and negative hot spots for the timeframe before the Twitter acquisition. There were a few regions that were strong hot spots in both categories, mainly a region in the east of Texas and one in North Dakota. On the West Coast, there were some contiguous areas that tended to be hot spots of negative sentiment. There was a fairly large number of counties in the central US where neither positive nor negative hot spots were identified or where there were no Tweets at all.

Figure 4.

Bivariate map of negative and positive hot spots before the Twitter acquisition (1–26 October 2022). Counties without Tweets are transparent.

Figure 5 is structured in the same way as the previous map but shows the timeframe after the Twitter acquisition. As the colouring scheme is identical, i.e., based on the same range of values, the two maps are visually comparable. This map clearly shows more regions in saturated shades of red, indicating a higher share of hot spots of negative sentiment. Additionally, fewer counties were classified as “low” for both variables. In some areas, there were mainly negative and few positive sentiments, e.g., in Maine and Montana. On the West Coast, there were large areas where both sentiments were classified as at least medium hot spots. Positive sentiments dominated in only a few counties, e.g., in Texas, Wisconsin and Michigan.

Figure 5.

Bivariate map of negative and positive hot spots after the Twitter acquisition (27 October–23 November 2022). Counties without Tweets are transparent.

Additionally, we calculated Moran’s I for the resulting Z scores to check whether the spatial clustering of the hot spots changed between the two timeframes, again using the KNN (k = 8) representation of the spatial neighbourhood. For the Z scores of positive sentiments, Moran’s I increased from 0.528 to 0.599. Similarly, Moran’s I for the Z scores of negative sentiments increased from 0.599 to 0.652. All Moran’s I values were statistically significant at the 0.1% level (p < 0.001).
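As an illustration of the statistic reported above, the following pure-Python sketch computes global Moran’s I from a dense weights matrix. It assumes binary weights without self-neighbours ($w_{i,i} = 0$), for instance KNN weights built from county centroids, and omits the significance test (Z score and p-value); it is not the software implementation used in the study.

```python
def morans_i(values, weights):
    """Global Moran's I for attribute values and an n x n spatial weights matrix.

    I = (n / W) * sum_ij w_ij z_i z_j / sum_i z_i^2, with z_i = x_i - mean(x)
    and W the sum of all weights. Self-weights (w_ii) should be 0 here.
    """
    n = len(values)
    mean = sum(values) / n
    z = [x - mean for x in values]  # deviations from the mean
    total_weight = sum(sum(row) for row in weights)
    cross = sum(weights[i][j] * z[i] * z[j] for i in range(n) for j in range(n))
    variance_term = sum(zi * zi for zi in z)
    return (n / total_weight) * cross / variance_term
```

For four features on a line with values 1 to 4 and only direct neighbours linked, this yields I = 1/3, i.e. moderate positive autocorrelation; values near 0 would indicate spatial randomness.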

5. Discussion

In the following section, we will discuss our results, giving some interpretations. Furthermore, we will critically evaluate our methodological approach.

5.1. Discussion of Results

Our results can be understood as a first indication of how the 2022 Twitter acquisition was perceived by users on the platform. We identified a general increase in Tweets about our two study objects, i.e., Twitter and Elon Musk, after 27 October 2022. We also found that after this date, there were consistently more negative than positive Tweets. The dates of 18 and 20 November stand out here, with particularly many negative Tweets. We assume that this was mainly related to the large number of employees dismissed by Elon Musk around this time. However, other reasons are also conceivable, such as the reinstatement of the accounts of controversial figures such as Donald Trump.

As we focused on georeferenced Tweets, the spatial perspective was of high importance for our analysis. By performing spatial hot spot analyses, we tried to identify geographic patterns of sentiment regarding the Twitter acquisition. In general, more counties were classified as hot spots in the second timeframe. This was consistent with the overall increase in the number of Tweets after the acquisition and could be understood as the discourse about Twitter gaining momentum across the entire US. However, we could not identify a clear spatial pattern in this change, i.e., there were no large regions changing from hot spots of positive to hot spots of negative sentiments or vice versa. Nevertheless, we found more hot spots of negative sentiments on the East Coast after the Twitter acquisition, at least in the New England region. In particular, the emergence of a strong hot spot in Maine should be mentioned here, which might, however, be biased by the spatial neighbourhood implementation and the low number of neighbouring observations. Conversely, there were also some regions where hot spots of negative sentiment before the acquisition turned into regions with generally low Z scores. Many regions across the US also remained quite similar in both timeframes. This also applied to some counties that were only hot spots of positive sentiments. An interesting case was the West Coast, particularly California, where Twitter’s headquarters is located. Before the acquisition, its counties were mostly classified as medium hot spots of negative sentiments. After the acquisition, the predominant category was the medium class for both sentiments. This could be understood as an indication of a polarisation of the discourse in this region.

The high positive Moran’s I values of the Z scores were not surprising, as the Getis-Ord Gi* statistic is itself computed over spatial neighbourhoods, so that the Z scores of adjacent counties share many of the same input values. Nonetheless, the increase in Moran’s I values might be understood as a strengthening of the spatial clustering of sentiments. Moreover, the higher values for negative sentiments suggest that these were more spatially clustered than positive ones.

In addition, we examined whether there was a connection between our results and the US political landscape, i.e., the election results of Democrats and Republicans, as this is often reflected in social variables in the US. However, we could not find any clear evidence for this. A closer look, also on a textual basis, might still be of interest in the future.

5.2. Limitations

Our analysis also had some limitations that commonly occur in research with Twitter data. The official Twitter API does not provide access to the entire data set, i.e., the millions of Tweets sent daily, but only to a representative sample. It is not entirely clear how this sample is drawn, which means that bias in our results cannot be ruled out. However, since numerous studies have already been able to make meaningful statements based on such a sample, we assumed that this also applied in our case.

Another problem specific to georeferenced Tweets is their geometry. In some cases, Twitter provides it as an exact point. However, the majority of Tweets only receive a bounding box based on a location specified by the user. This creates considerable inaccuracy, as this location can be a large region such as an entire state or even a country. We tried to address this inaccuracy by only keeping Tweets whose geometry lay completely within a single county. This, however, resulted in some unwanted data loss. In the future, data quality could be increased by additionally geocoding location mentions in the Tweet text [34]. We also found 78 Tweets whose place was specified as “Twitter HQ”, which were therefore georeferenced in San Francisco. However, we consider it unlikely that these Tweets were actually posted there; more plausibly, users chose this location as a reference to the content of their Tweet.

In our case, the sole reliance on keyword-based filtering can also be cited as a valid criticism. Tweets that do not contain a keyword but nevertheless relate semantically to the topic under investigation cannot be taken into account. However, we assumed that Tweets relating to the Twitter acquisition were very likely to contain either “twitter” or “elon musk”, which is why we considered a keyword-based approach sufficient for our study. We also designed the keyword variants so that they would occur as rarely as possible within other words. For example, using only the first name “elon” without a leading or trailing space would also have captured Tweets with words such as “belong” or “elongate”, i.e., presumably unrelated Tweets.

Furthermore, our choice of timeframe could be criticised. A longer study period, particularly one extending beyond November 2022, would be very interesting. We chose the date on which we started our analysis as the end date of our timeframe. It would also have been possible to split our timeframe into smaller units than just before and after the acquisition. Since such a split would have been quite arbitrary (e.g., one week) and more difficult to justify, we discarded this idea.

Our paper focused solely on a polarity-based sentiment analysis. Therefore, we were only able to account for positive, neutral and negative sentiments. As stated before, we also only performed a document-level analysis, which resulted in information loss, e.g., when multiple sentiments cancelled each other out. As shown in the literature review, many more complex methods of sentiment analysis exist. With these, it would, for example, be possible to assign concrete emotions (e.g., anger and joy) to Tweets. With aspect-based methods, this can even be applied to individual topics within the Tweet text. Such a more complex analysis could therefore be performed as a next step.

Another point of criticism concerns our choice of representation of the spatial neighbourhood in the hot spot analysis, i.e., the KNN spatial weights matrix (k = 8). Especially in regions where the number of neighbouring counties was small, this could have introduced bias. However, this also applies to other methods of delimiting spatial neighbourhoods and falls under the modifiable areal unit problem (MAUP) [35]. One way of reducing this bias would be to use a regular grid [8], which would, however, be more difficult to interpret visually.

6. Conclusions

In our study, we analysed how the 2022 Twitter acquisition by Elon Musk was perceived by users on the platform. For this, we performed a polarity-based sentiment analysis on georeferenced Tweets. In the next step, we ran a spatial hot spot analysis at the county level. In general, we found that the number of Tweets about Twitter and Elon Musk increased considerably with the acquisition. Overall, there were also more negative than positive sentiments in our data set, particularly after the acquisition (RQ1). At the spatial level, we did not identify clear changes in the clustering of sentiments. However, we found indications of a polarisation of the discourse on the West Coast (RQ2).

While Elon Musk’s acquisition of Twitter is viewed critically by some, as this paper has demonstrated, it also opens up possibilities for future research. One obvious option is to continue this study. This could be done spatially, i.e., by investigating the perception in another region of the world. Probably more interesting, however, is long-term monitoring of the self-referential Twitter discourse over the coming months. On this basis, statements could be made about possible changes in data quality, e.g., with regard to sheer quantity or political radicalisation. Such monitoring would also be valuable with regard to the changing user structure or semantic content. The latter could be addressed through a topic modelling-based approach (e.g., using BERTopic).

Author Contributions

Conceptualization, S.S., C.Z. and D.A.; methodology, S.S. and C.Z.; software, S.S., C.Z. and D.A.; validation, C.Z.; formal analysis, S.S. and C.Z.; investigation, S.S. and C.Z.; resources, B.R.; writing—original draft, S.S. and C.Z.; writing—review & editing, S.S. and B.R.; visualization, S.S. and C.Z.; supervision, B.R.; project administration, B.R.; funding acquisition, B.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Austrian Research Promotion Agency (FFG) through the project MUSIG (Grant Number 886355) and the Austrian Science Fund through the project “Spatio-temporal Epidemiology of Emerging Viruses” (Grant Number I 5117).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- STATISTA. Number of Monetizable Daily Active Twitter Users (mDAU) Worldwide from 1st Quarter 2017 to 2nd Quarter 2022. 2022. Available online: https://www.statista.com/statistics/970920/monetizable-daily-active-twitter-users-worldwide/ (accessed on 28 December 2022).

- STATISTA. Leading Countries Based on Number of Twitter Users as of January 2022. 2022. Available online: https://www.statista.com/statistics/242606/number-of-active-twitter-users-in-selected-countries/ (accessed on 28 December 2022).

- Wile, R. A Timeline of Elon Musk’s Takeover of Twitter. Available online: https://www.nbcnews.com/business/business-news/twitter-elon-musk-timeline-what-happened-so-far-rcna57532 (accessed on 28 December 2022).

- Conger, K.; Hirsch, L. Elon Musk Completes $44 Billion Deal to Own Twitter. 2022. Available online: https://www.nytimes.com/2022/10/27/technology/elon-musk-twitter-deal-complete.html (accessed on 28 December 2022).

- Zakrzewski, C.; Siddiqui, F.; Menn, J. Musk’s ‘Free Speech’ Agenda Dismantles Safety Work at Twitter, Insiders Say. 2022. Available online: https://www.washingtonpost.com/technology/2022/11/22/elon-musk-twitter-content-moderations/ (accessed on 16 January 2023).

- Mac, R.; Browning, K. Elon Musk Reinstates Trump’s Twitter Account. 2022. Available online: https://www.nytimes.com/2022/11/19/technology/trump-twitter-musk.html (accessed on 28 December 2022).

- Mac, R.; Mullin, B.; Conger, K.; Isaac, M. A Verifiable Mess: Twitter Users Create Havoc by Impersonating Brands. 2022. Available online: https://www.nytimes.com/2022/11/11/technology/twitter-blue-fake-accounts.html (accessed on 28 December 2022).

- Havas, C.; Resch, B. Portability of Semantic and Spatial-Temporal Machine Learning Methods to Analyse Social Media for near-Real-Time Disaster Monitoring. Nat. Hazards 2021, 108, 2939–2969.

- Petutschnig, A.; Havas, C.R.; Resch, B.; Krieger, V.; Ferner, C. Exploratory Spatiotemporal Language Analysis of Geo-Social Network Data for Identifying Movements of Refugees. GI_Forum 2020, 1, 137–152.

- Kogan, N.E.; Clemente, L.; Liautaud, P.; Kaashoek, J.; Link, N.B.; Nguyen, A.T.; Lu, F.S.; Huybers, P.; Resch, B.; Havas, C.; et al. An Early Warning Approach to Monitor COVID-19 Activity with Multiple Digital Traces in near Real Time. Sci. Adv. 2021, 7, eabd6989.

- Liu, B. Sentiment Analysis: Mining Opinions, Sentiments, and Emotions, 2nd ed.; Studies in Natural Language Processing; Cambridge University Press: Cambridge, UK; New York, NY, USA, 2020.

- Birjali, M.; Kasri, M.; Beni-Hssane, A. A Comprehensive Survey on Sentiment Analysis: Approaches, Challenges and Trends. Knowl.-Based Syst. 2021, 226, 107134.

- Yue, L.; Chen, W.; Li, X.; Zuo, W.; Yin, M. A Survey of Sentiment Analysis in Social Media. Knowl. Inf. Syst. 2019, 60, 617–663.

- Yadav, A.; Vishwakarma, D.K. Sentiment Analysis Using Deep Learning Architectures: A Review. Artif. Intell. Rev. 2020, 53, 4335–4385.

- Chachra, A.; Mehndiratta, P.; Gupta, M. Sentiment Analysis of Text Using Deep Convolution Neural Networks. In Proceedings of the 2017 Tenth International Conference on Contemporary Computing (IC3), Noida, India, 10–12 April 2017; pp. 1–6.

- Huang, Q.; Chen, R.; Zheng, X.; Dong, Z. Deep Sentiment Representation Based on CNN and LSTM. In Proceedings of the 2017 International Conference on Green Informatics (ICGI), Fuzhou, China, 15–17 April 2017; pp. 30–33.

- Jin, Y.; Zhang, H.; Du, D. Improving Deep Belief Networks via Delta Rule for Sentiment Classification. In Proceedings of the 2016 IEEE 28th International Conference on Tools with Artificial Intelligence (ICTAI), San Jose, CA, USA, 6–8 November 2016; pp. 410–414.

- Kovács-Győri, A.; Ristea, A.; Havas, C.; Resch, B.; Cabrera-Barona, P. #London2012: Towards Citizen-Contributed Urban Planning through Sentiment Analysis of Twitter Data. Urban Plan. 2018, 3, 75–99.

- Kovács-Győri, A.; Ristea, A.; Kolcsar, R.; Resch, B.; Crivellari, A.; Blaschke, T. Beyond Spatial Proximity—Classifying Parks and Their Visitors in London Based on Spatiotemporal and Sentiment Analysis of Twitter Data. ISPRS Int. J. Geo-Inf. 2018, 7, 378.

- Roberts, H.; Chapman, L.; Resch, B.; Sadler, J.; Zimmer, S.; Petutschnig, A. Investigating the Emotional Responses of Individuals to Urban Green Space Using Twitter Data: A Critical Comparison of Three Different Methods of Sentiment Analysis. Urban Plan. 2018, 3, 21–33.

- del Gobbo, E.; Fontanella, L.; Fontanella, S.; Sarra, A. Geographies of Twitter Debates. J. Comput. Soc. Sci. 2021, 5, 647–663.

- Kovács, T.; Kovács-Győri, A.; Resch, B. #AllforJan: How Twitter Users in Europe Reacted to the Murder of Ján Kuciak—Revealing Spatiotemporal Patterns through Sentiment Analysis and Topic Modeling. ISPRS Int. J. Geo-Inf. 2021, 10, 585.

- Valle-Cruz, D.; Fernandez-Cortez, V.; López-Chau, A.; Sandoval-Almazán, R. Does Twitter Affect Stock Market Decisions? Financial Sentiment Analysis during Pandemics: A Comparative Study of the H1N1 and the COVID-19 Periods. Cogn. Comput. 2022, 14, 372–387.

- Mendoza-Urdiales, R.A.; Núñez-Mora, J.A.; Santillán-Salgado, R.J.; Valencia-Herrera, H. Twitter Sentiment Analysis and Influence on Stock Performance Using Transfer Entropy and EGARCH Methods. Entropy 2022, 24, 874.

- Kumari, S.; Pushphavathi, T.P. Intelligent Lead-Based Bidirectional Long Short Term Memory for COVID-19 Sentiment Analysis. Soc. Netw. Anal. Min. 2022, 13, 1.

- Marcec, R.; Likic, R. Using Twitter for Sentiment Analysis towards AstraZeneca/Oxford, Pfizer/BioNTech and Moderna COVID-19 Vaccines. Postgrad. Med. J. 2022, 98, 544–550.

- Bernal, C.; Bernal, M.; Noguera, A.; Ponce, H.; Avalos-Gauna, E. Sentiment Analysis on Twitter about COVID-19 Vaccination in Mexico. In Advances in Soft Computing; MICAI 2021; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2021; Volume 13068, pp. 96–107.

- Putri Aprilia, N.; Pratiwi, D.; Barlianto, A. Sentiment Visualization of COVID-19 Vaccine Based on Naive Bayes Analysis. J. Inf. Technol. Comput. Sci. 2021, 6, 195–208.

- Loureiro, D.; Barbieri, F.; Neves, L.; Espinosa-Anke, L.; Camacho-Collados, J. TimeLMs: Diachronic Language Models from Twitter. arXiv 2022, arXiv:2202.03829.

- Barbieri, F.; Camacho-Collados, J.; Neves, L.; Espinosa-Anke, L. TweetEval: Unified Benchmark and Comparative Evaluation for Tweet Classification. arXiv 2020, arXiv:2010.12421.

- Rosenthal, S.; Farra, N.; Nakov, P. SemEval-2017 Task 4: Sentiment Analysis in Twitter. arXiv 2019, arXiv:1912.00741.

- Ord, J.K.; Getis, A. Local Spatial Autocorrelation Statistics: Distributional Issues and an Application. Geogr. Anal. 1995, 27, 286–306.

- Steiger, E.; Westerholt, R.; Resch, B.; Zipf, A. Twitter as an Indicator for Whereabouts of People? Correlating Twitter with UK Census Data. Comput. Environ. Urban Syst. 2015, 54, 255–265.

- Serere, H.N.; Resch, B.; Havas, C.R.; Petutschnig, A. Extracting and Geocoding Locations in Social Media Posts: A Comparative Analysis. GI_Forum 2021, 1, 167–173.

- Buzzelli, M. Modifiable Areal Unit Problem. In International Encyclopedia of Human Geography; Elsevier: Amsterdam, The Netherlands, 2020; pp. 169–173.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).