Methodological Study on the Influence of Truck Driving State on the Accuracy of Weigh-in-Motion System

Abstract

1. Introduction

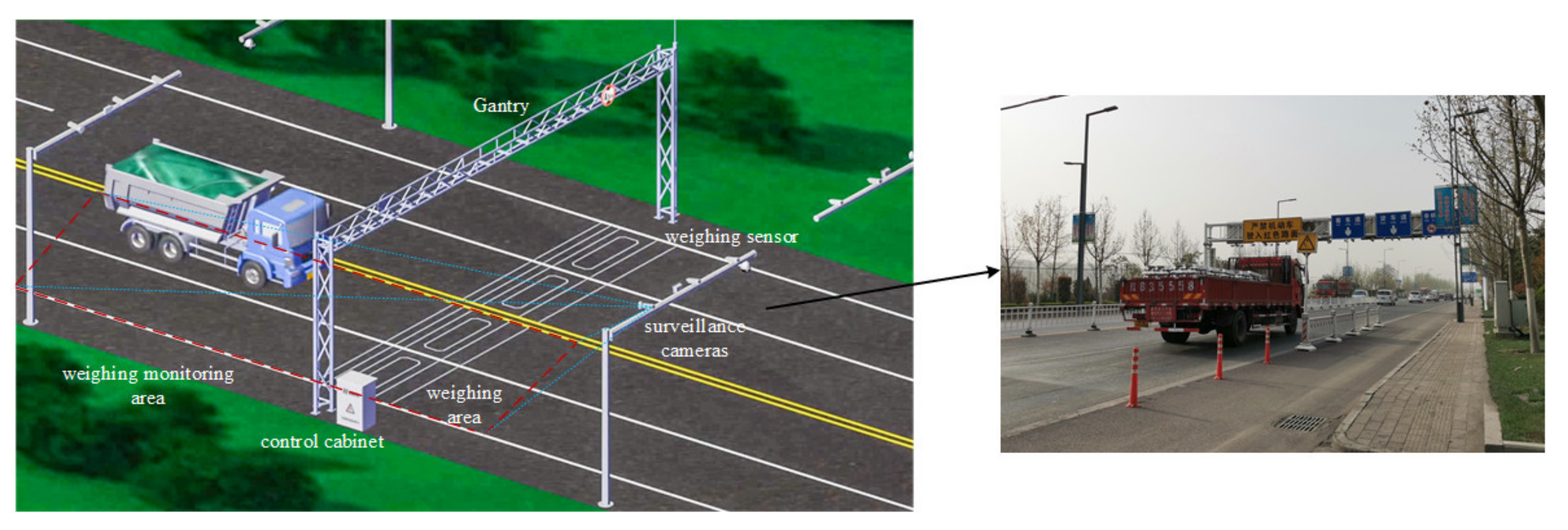

2. Weigh-in-Motion System Framework

3. Methods

3.1. Problem Statement

3.2. Model Formulation

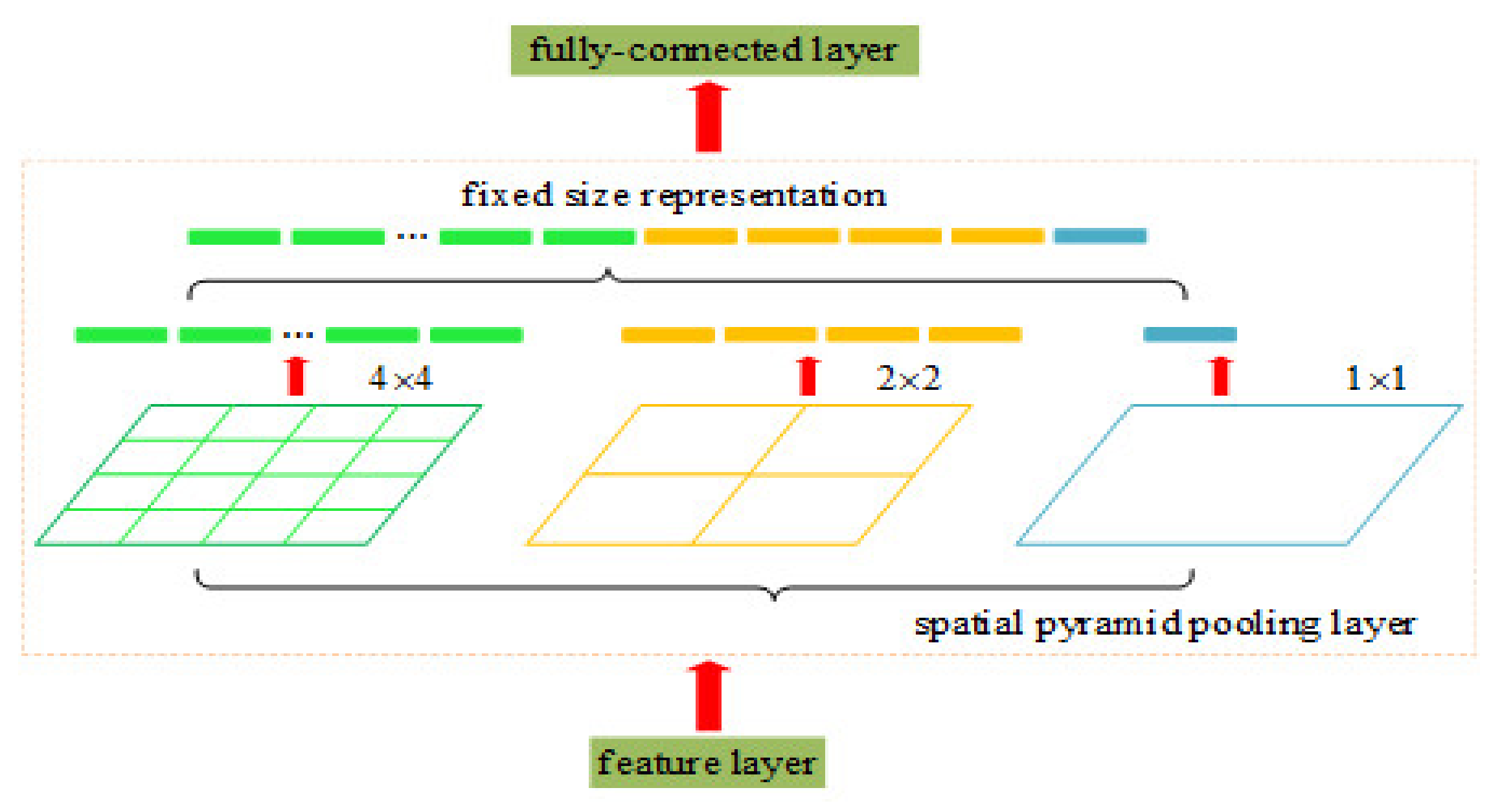

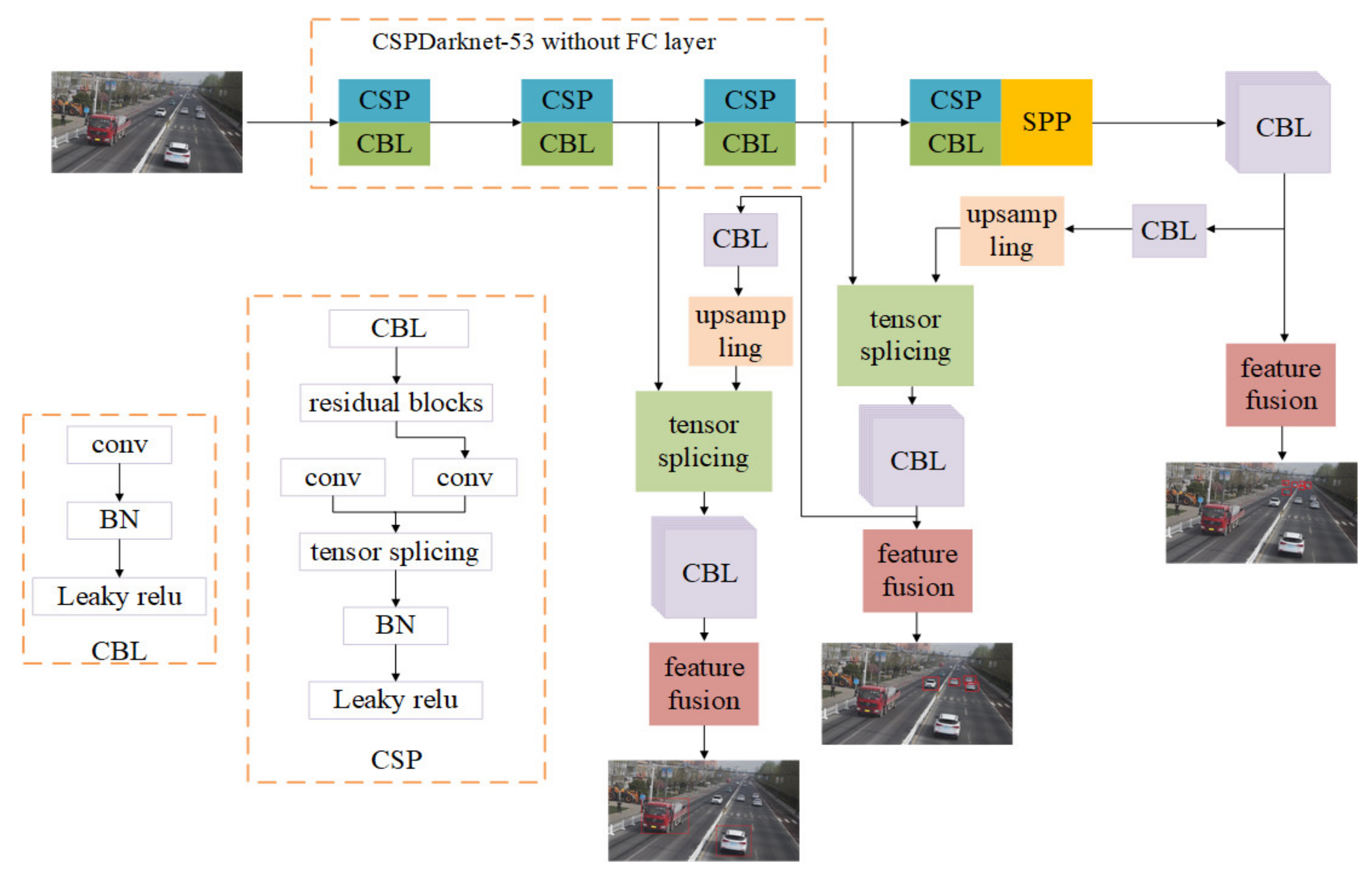

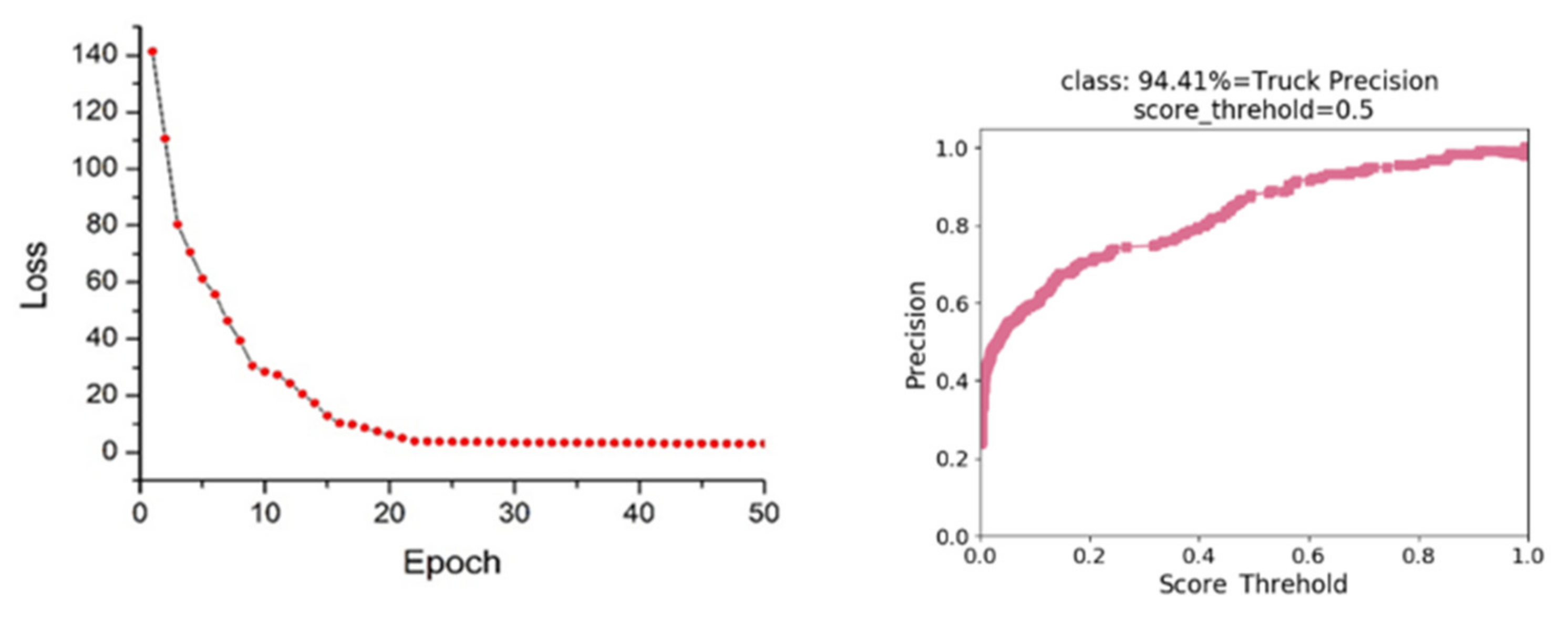

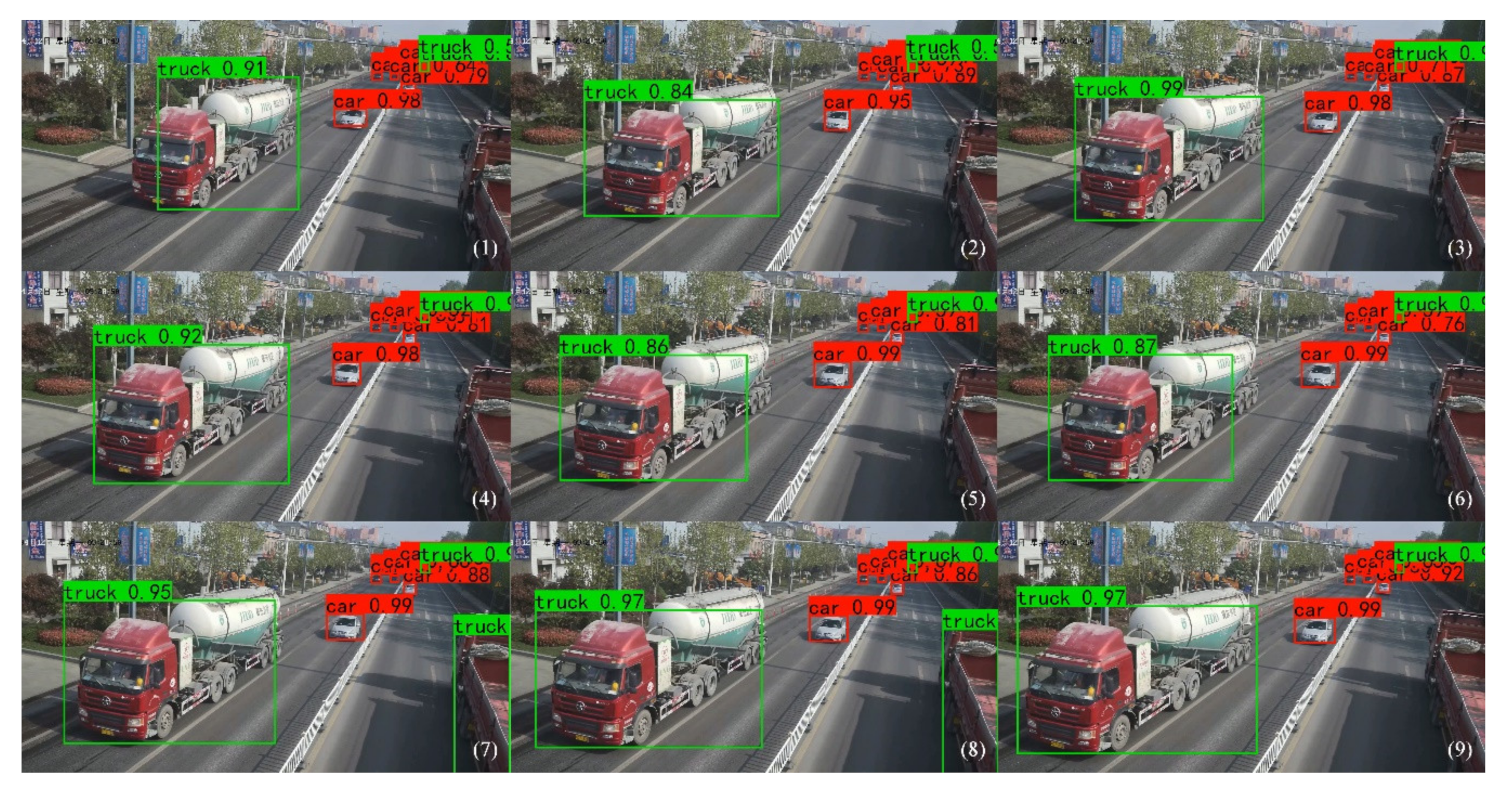

3.2.1. Improved YOLOv3 Detection Model

3.2.2. Detection-Based Target Tracking Methods

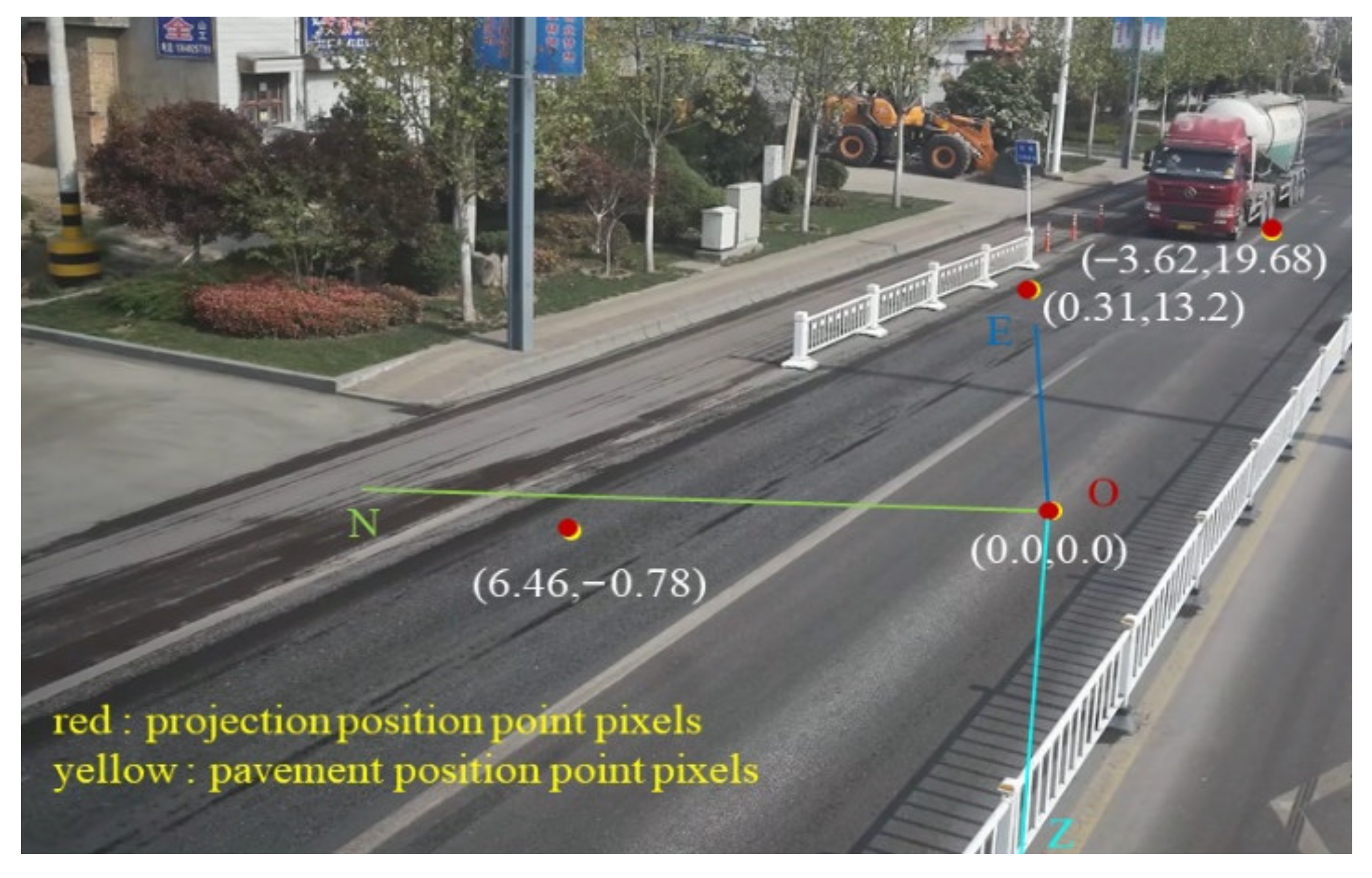

3.2.3. Coordinate Conversion

4. Experimental Procedure and Results Analysis

4.1. Data Collection

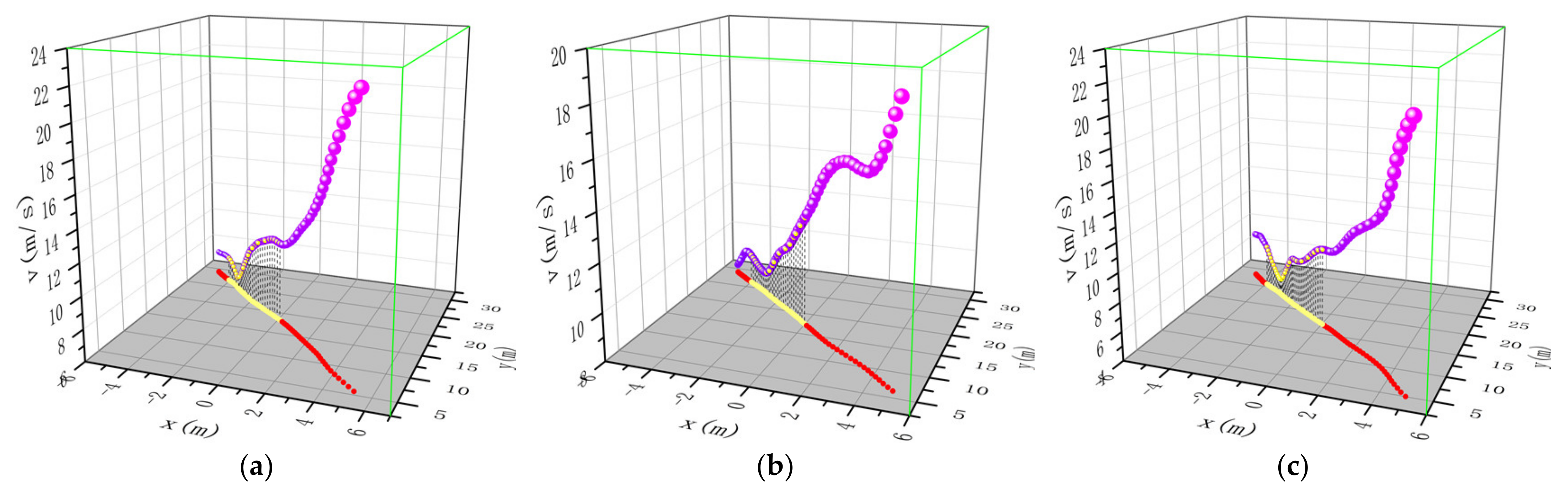

4.2. Analysis of Network Detection and Tracking Results

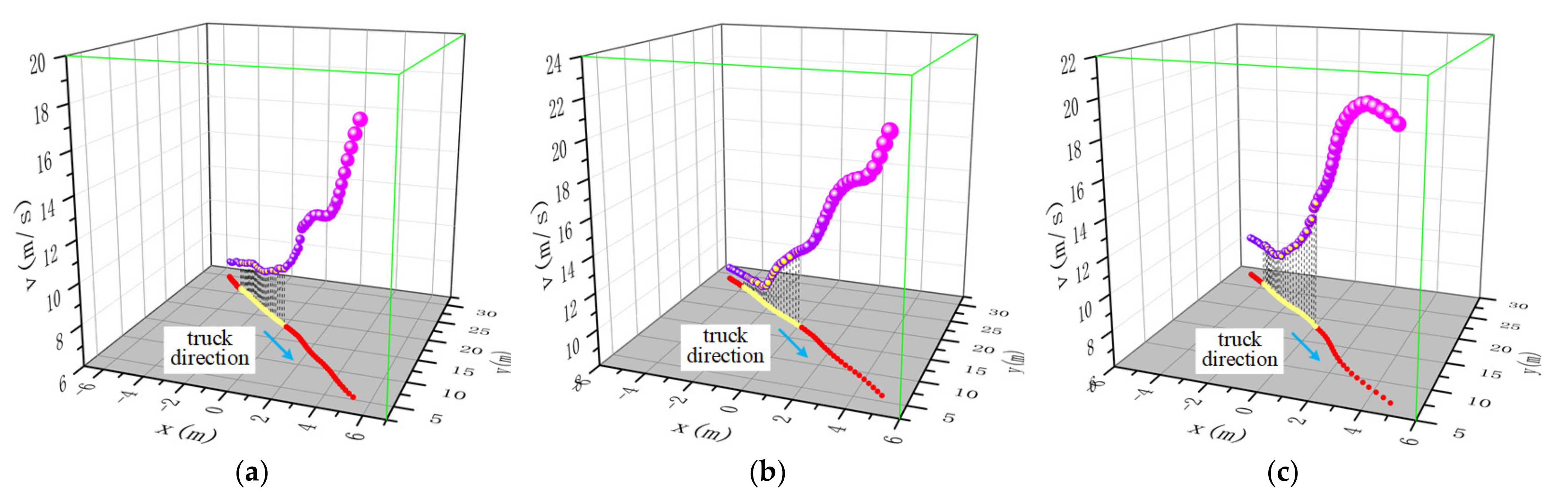

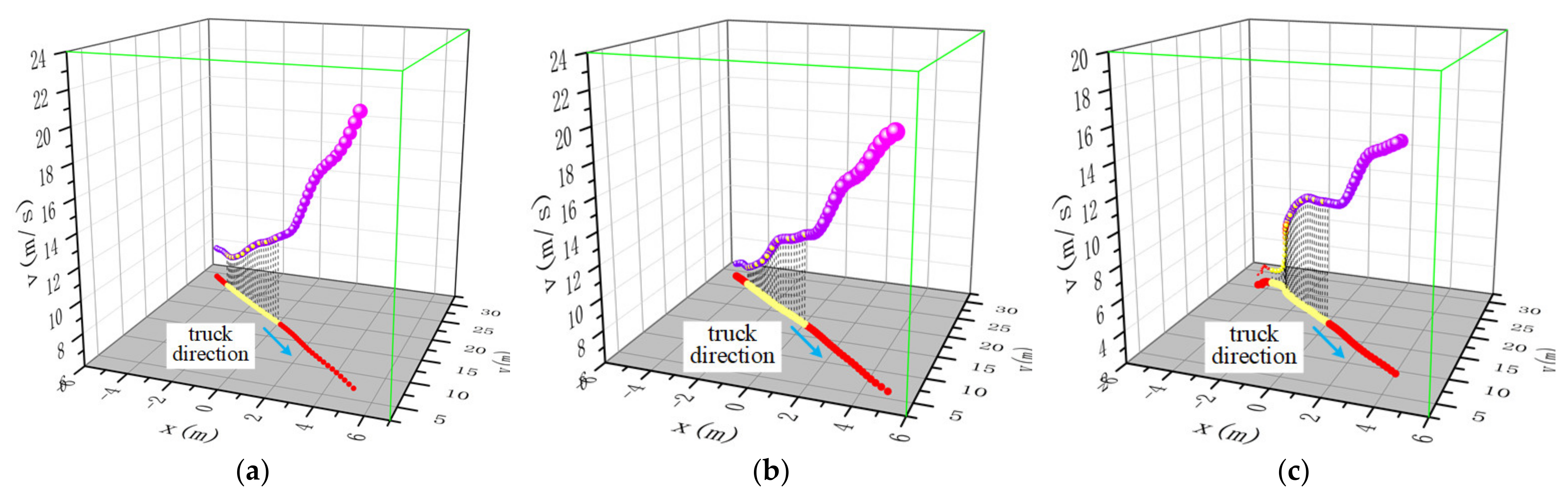

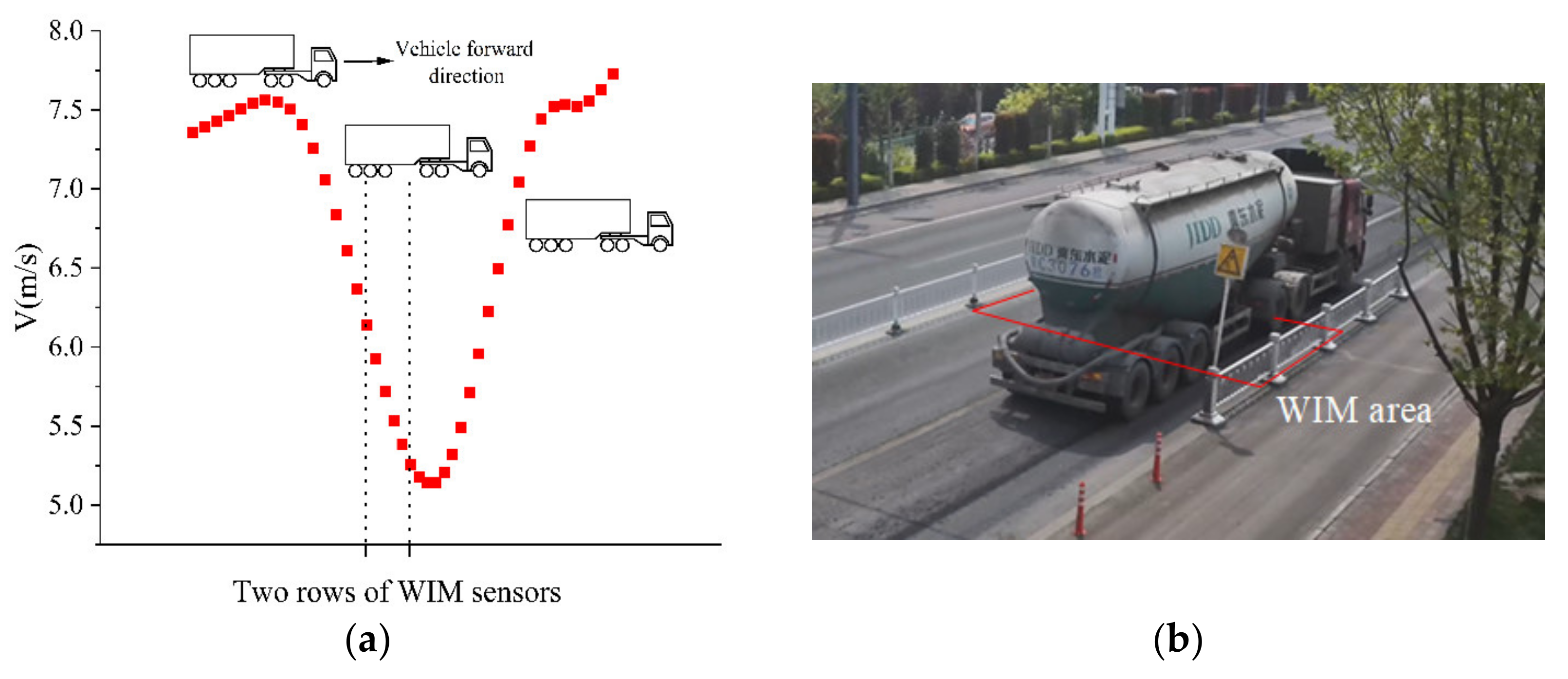

4.3. Analysis Results on the Influence of Vehicle Driving State on Weight Accuracy

- (1) When the truck passes through the weighing area in the smooth state or the acceleration state, the error between the WIM result and the static weight is within 1.5%, indicating that the WIM system can accurately measure the truck's weight in these driving states.

- (2) When the truck passes through the weighing area under deceleration and acceleration, the WIM result differs from the static weight by 1–4 tons, as shown in Table 2, because the center of gravity shifts noticeably backward and forward during weighing. Passing through the weighing area in this driving state therefore degrades the weighing accuracy of the WIM system.
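As a rough illustration of how the driving states above might be separated once entry and exit speeds are available from tracking, the sketch below labels a single pass by its change in speed over the weighing area. The function name `classify_driving_state`, its parameters, and the 0.5 m/s tolerance are assumptions made for illustration only; none of them is taken from the paper.

```python
def classify_driving_state(v_in: float, v_out: float, tol: float = 0.5) -> str:
    """Label one pass over the weighing area from entry and exit speeds (m/s).

    tol is a hypothetical dead band, not a value reported in the paper.
    """
    dv = v_out - v_in  # positive: speeding up, negative: slowing down
    if abs(dv) <= tol:
        return "smooth"
    return "acceleration" if dv > 0 else "deceleration"


if __name__ == "__main__":
    print(classify_driving_state(12.0, 12.2))
    print(classify_driving_state(12.0, 9.0))
```

A dead band is used so that small speed fluctuations are not reported as acceleration or deceleration; a suitable width would have to be chosen from field data.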

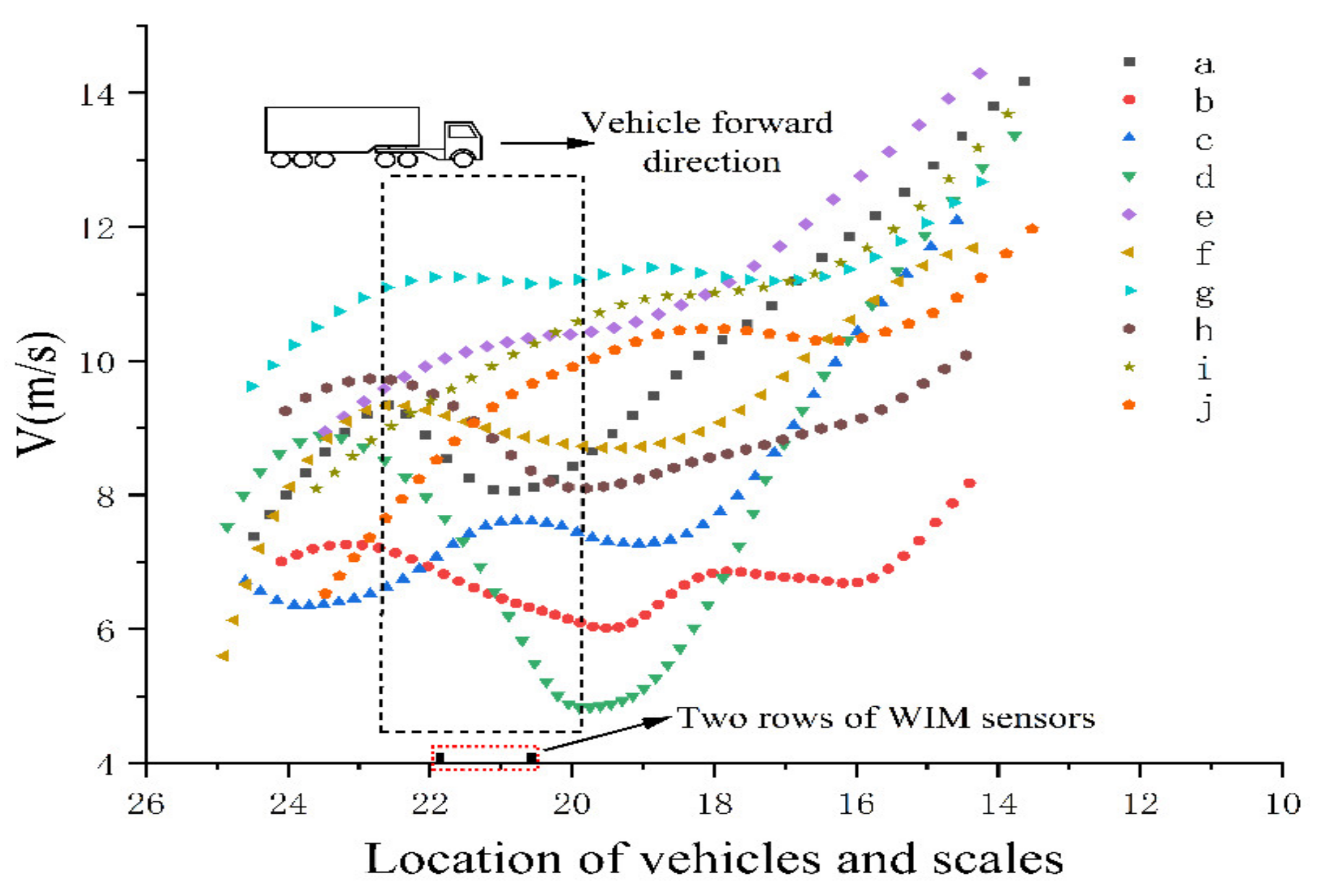

4.4. Analysis of the Results of the Method Validation Experiment

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Model | Precision (%) | Recall (%) | FPS |
|---|---|---|---|
| YOLOv3 | 94.6 | 85.3 | 15.72 |
| Improved YOLOv3 | 94.4 | 87.2 | 17.12 |

| Time | No. | Static Weight (ton) | WIM Weight (ton) | Error |
|---|---|---|---|---|
| 3.18–10:02:58 | 6 | 17.5 | 16.0 | 8.6% |
| 3.18–11:17:35 | 13 | 17.5 | 15.8 | 9.7% |
| 3.19–09:52:31 | 17 | 17.5 | 16.2 | 7.4% |
| 3.19–10:11:23 | 26 | 17.5 | 16.2 | 7.4% |
| 3.20–15:23:52 | 31 | 17.5 | 14.5 | 17.1% |
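The Error column in the table above is consistent with the absolute difference between the two weights divided by the static weight. A minimal sketch of that arithmetic follows; the helper name `relative_error_percent` is hypothetical, and the formula is inferred from the tabulated values rather than stated in the text.

```python
def relative_error_percent(static_ton: float, wim_ton: float) -> float:
    """Absolute WIM error as a percentage of the static weight."""
    return abs(static_ton - wim_ton) / static_ton * 100.0


# (truck No., static weight in ton, WIM weight in ton), copied from the table above
rows = [
    (6, 17.5, 16.0),
    (13, 17.5, 15.8),
    (17, 17.5, 16.2),
    (26, 17.5, 16.2),
    (31, 17.5, 14.5),
]

errors = {no: round(relative_error_percent(s, w), 1) for no, s, w in rows}
print(errors)  # {6: 8.6, 13: 9.7, 17: 7.4, 26: 7.4, 31: 17.1}
```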

| Classification | Deceleration Range (m/s) | Weighing Error (ton) | Number of Trucks | Recommended Compensation Accuracy (ton) |
|---|---|---|---|---|
| 1 | 1.5–3.9 | 1.0–1.9 | 177 | 1 |
| 2 | 3.9–5.9 | 1.9–2.9 | 99 | 2 |
| 3 | 5.9–8.5 | 2.9–3.9 | 34 | 3 |
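One way to read the classification table above is as a lookup from the measured deceleration range to a recommended compensation. The sketch below encodes it that way. The function name `recommended_compensation`, the half-open treatment of the range boundaries, and the choice to return zero below 1.5 m/s are assumptions; the table itself does not say which class a boundary value such as 3.9 m/s belongs to.

```python
from typing import Optional

# (lower bound, upper bound, recommended compensation in ton), from the table above
COMPENSATION_TABLE = [
    (1.5, 3.9, 1.0),
    (3.9, 5.9, 2.0),
    (5.9, 8.5, 3.0),
]


def recommended_compensation(speed_drop: float) -> Optional[float]:
    """Return the recommended compensation (ton) for a speed drop in m/s.

    Assumptions: ranges are half-open [lower, upper), and drops below the
    first range need no compensation. Drops of 8.5 m/s or more fall outside
    the table, so None is returned instead of extrapolating.
    """
    if speed_drop < COMPENSATION_TABLE[0][0]:
        return 0.0
    for lower, upper, compensation in COMPENSATION_TABLE:
        if lower <= speed_drop < upper:
            return compensation
    return None


if __name__ == "__main__":
    for drop in (1.0, 2.0, 4.5, 6.0, 9.0):
        print(drop, recommended_compensation(drop))
```

Returning `None` for values beyond the last range keeps the sketch from inventing a compensation that the table does not provide.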

| Experimental Sequence | Standard Weight (kg) | Detection Weight (kg) | Speed Change Value (m/s) | Weighing Error (ton) |
|---|---|---|---|---|
| a | 36,380 | 35,121 | 2.04 | 1.26 |
| b | 36,380 | 35,553 | 1.20 | 0.83 |
| c | 36,380 | 35,894 | 0.87 | 0.49 |
| d | 36,380 | 34,169 | 4.02 | 2.21 |
| e | 36,380 | 36,187 | 0.92 | 0.19 |
| f | 36,380 | 36,001 | 0.63 | 0.38 |
| g | 36,380 | 36,292 | 0.19 | 0.09 |
| h | 36,380 | 35,262 | 1.63 | 1.12 |
| i | 36,380 | 36,033 | 1.70 | 0.35 |
| j | 36,380 | 36,202 | 2.51 | 0.18 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, S.; Yang, J.; Tang, Z.; Li, Q.; Xing, Z. Methodological Study on the Influence of Truck Driving State on the Accuracy of Weigh-in-Motion System. Information 2022, 13, 130. https://doi.org/10.3390/info13030130
