Serious Games for Vision Training Exercises with Eye-Tracking Technologies: Lessons from Developing a Prototype

Abstract

1. Introduction

2. Background Literature

3. Materials and Methods

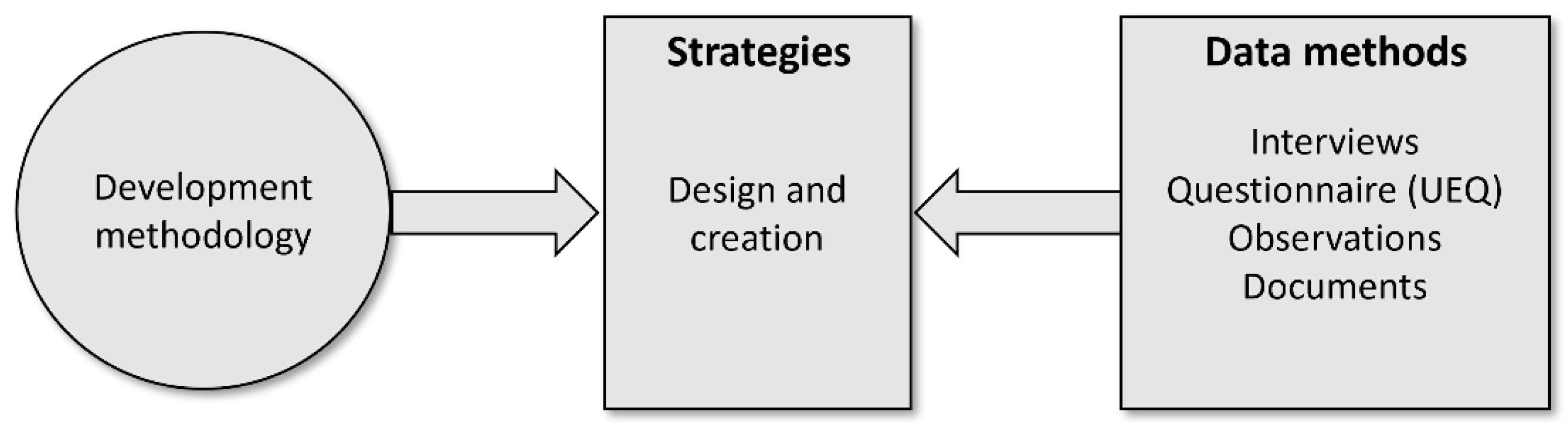

3.1. Overall Approach to Design and Development of Eye-Tracking Based Technologies for Vision Training

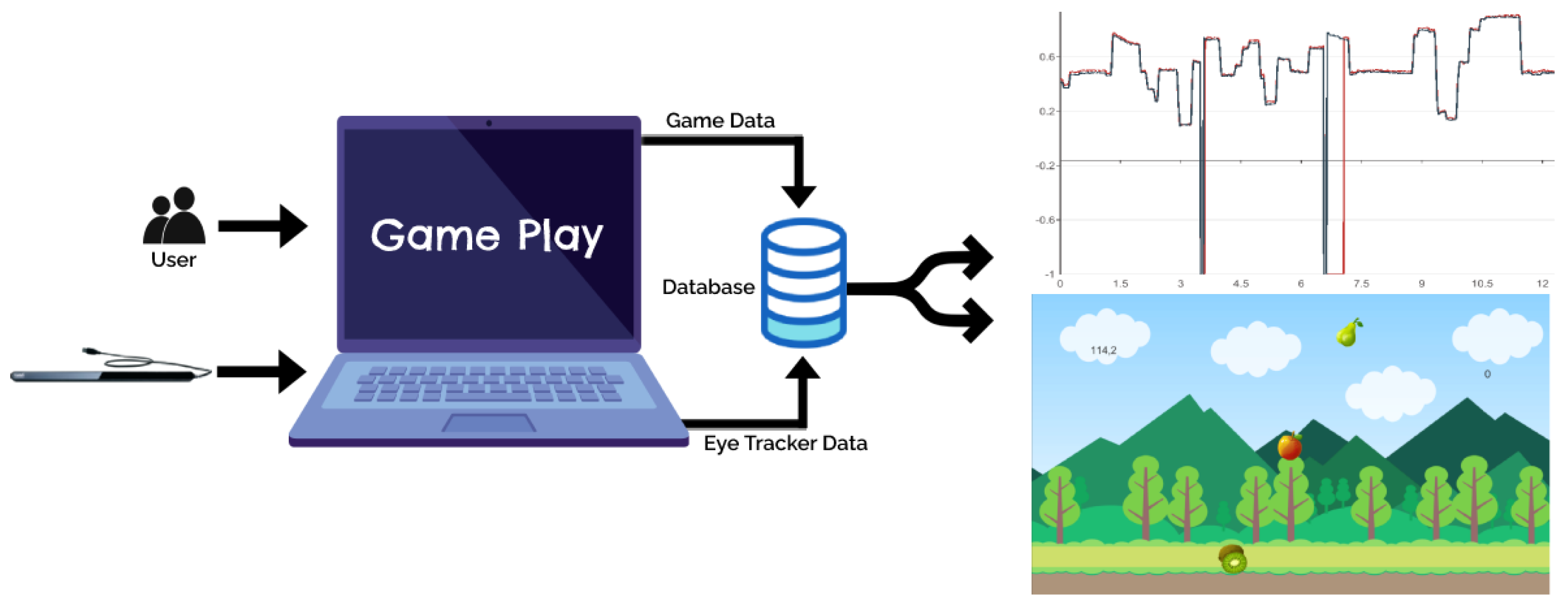

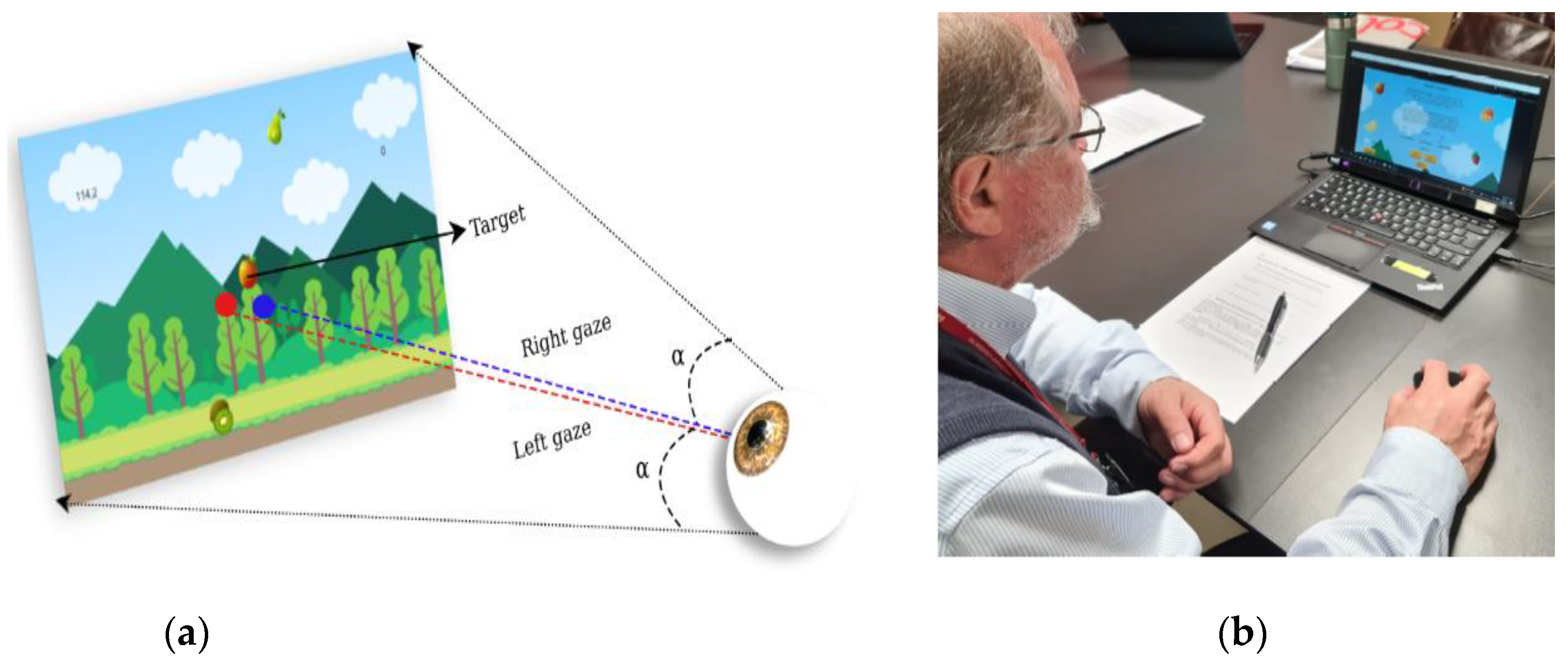

3.2. System Design for Vision Training

- Register a new user.

- Adjust the difficulty level of the game.

- Play/replay the games.

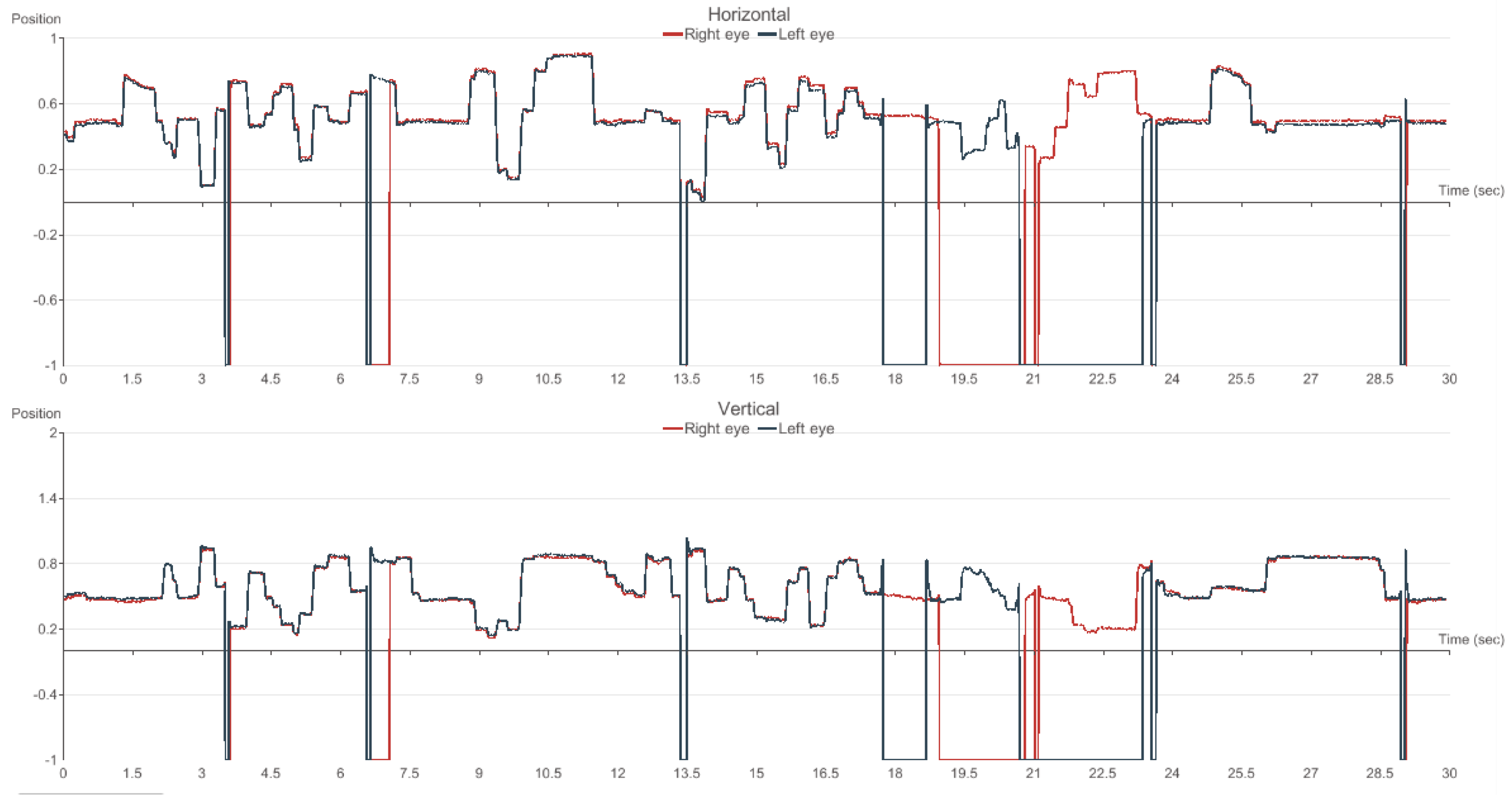

- Examine visual graphs of eye movements.

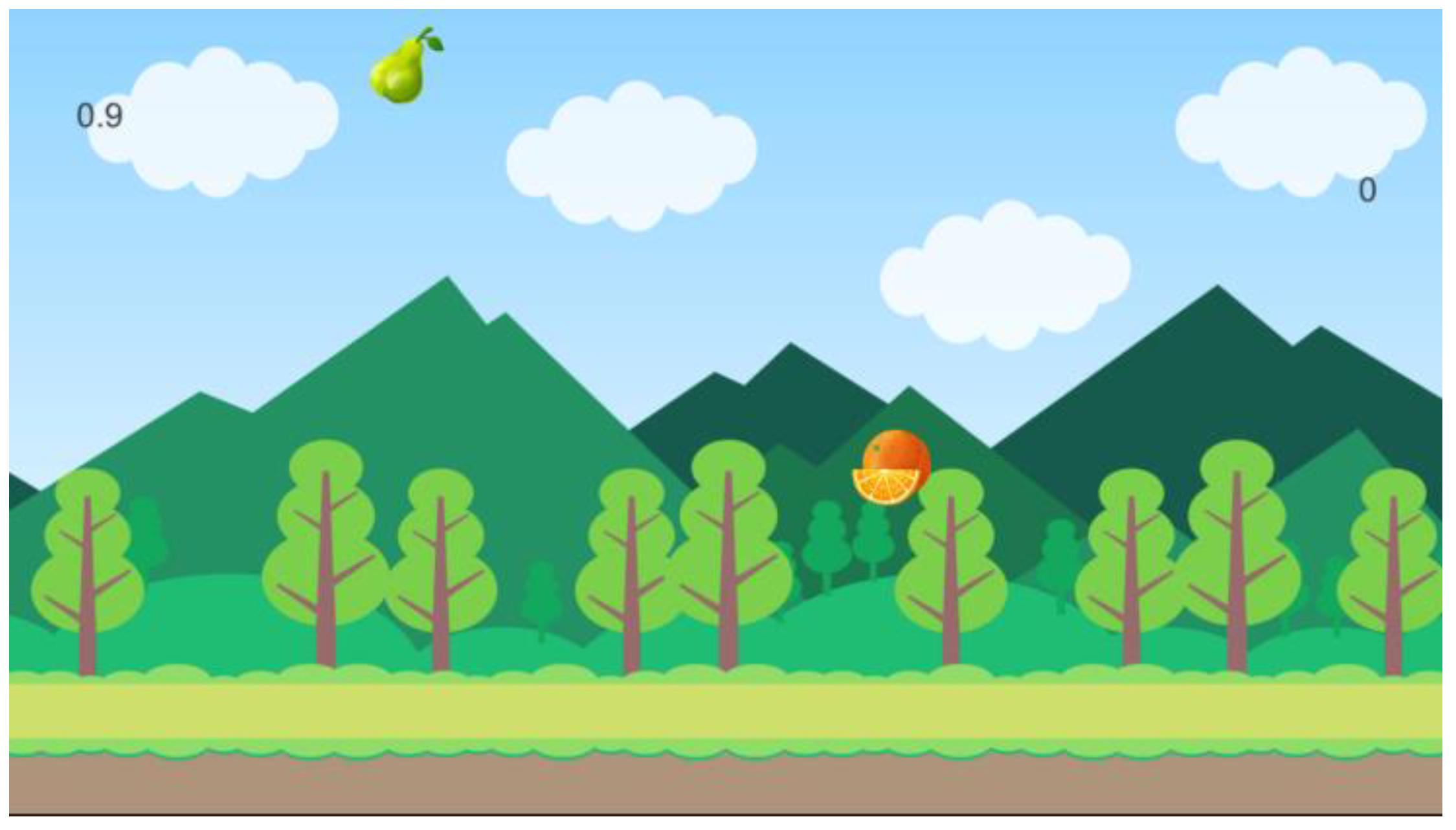
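As a rough illustration of the "adjust the difficulty level" function listed above, the sketch below scales a few per-session game parameters with the chosen level. The class `GameSettings`, its fields, the five-level range, and the 25% step per level are all hypothetical and chosen only for illustration; the source does not specify how the prototype stores or scales these values.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class GameSettings:
    """Hypothetical per-session parameters (names and defaults are assumptions)."""
    object_speed: float = 1.0      # relative speed of the moving objects
    spawn_interval_s: float = 2.0  # seconds between new objects
    session_length_s: int = 120    # duration of one training session


def adjust_difficulty(settings: GameSettings, level: int) -> GameSettings:
    """Return new settings for a difficulty level from 1 (easiest) to 5 (hardest)."""
    if not 1 <= level <= 5:
        raise ValueError("level must be between 1 and 5")
    # Assumed rule: each level makes objects 25% faster and proportionally more frequent.
    factor = 1.0 + 0.25 * (level - 1)
    return replace(
        settings,
        object_speed=settings.object_speed * factor,
        spawn_interval_s=settings.spawn_interval_s / factor,
    )


if __name__ == "__main__":
    print(adjust_difficulty(GameSettings(), 3))
```

Keeping the settings immutable means each session can be logged together with the exact parameters it was played with, which may help when comparing sessions later.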

3.3. Catch the Fruits Game

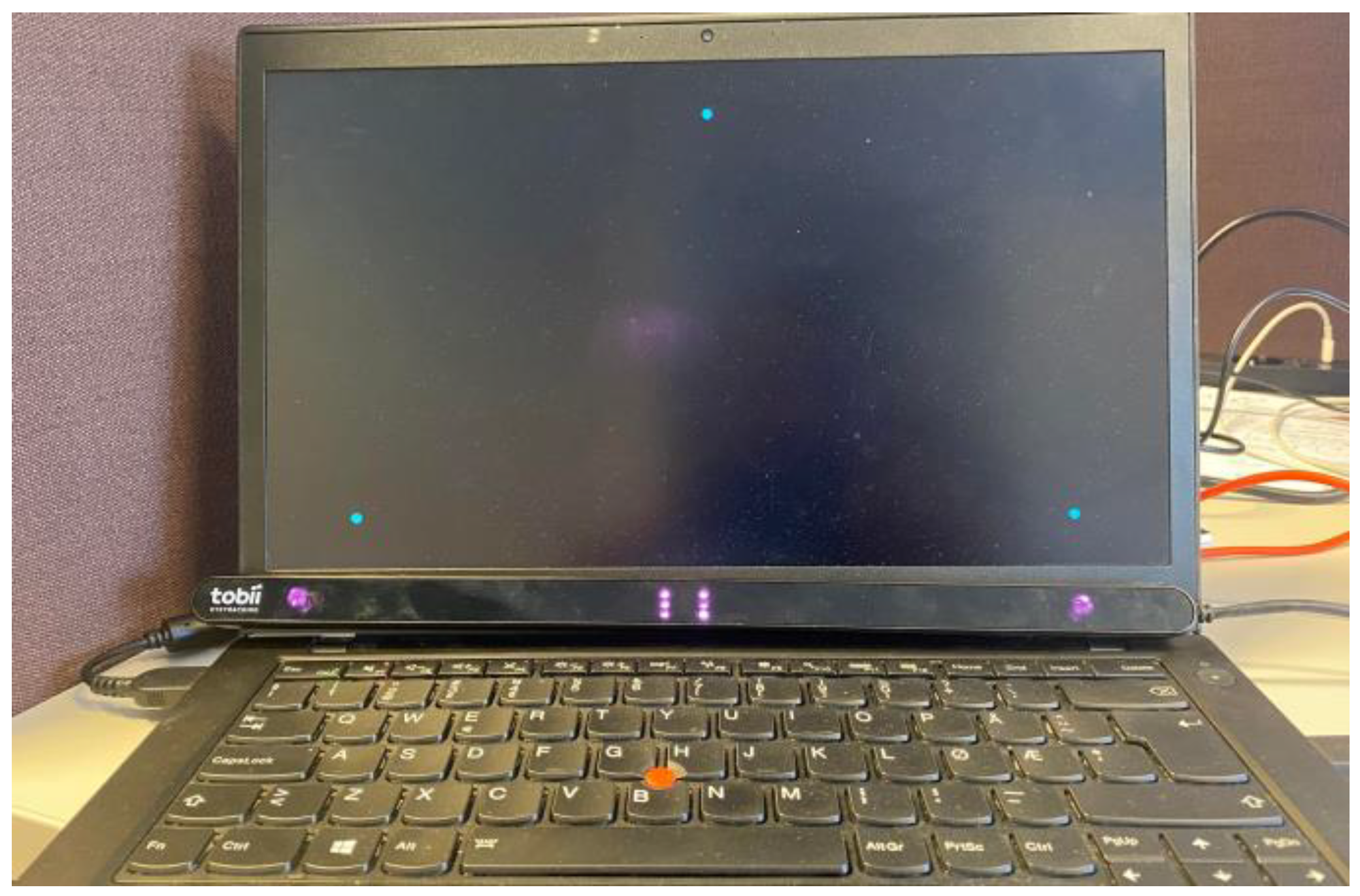

3.4. Eye-Tracker Setup

3.5. User Experience Questionnaire (UEQ)

3.6. The Participants

4. Results

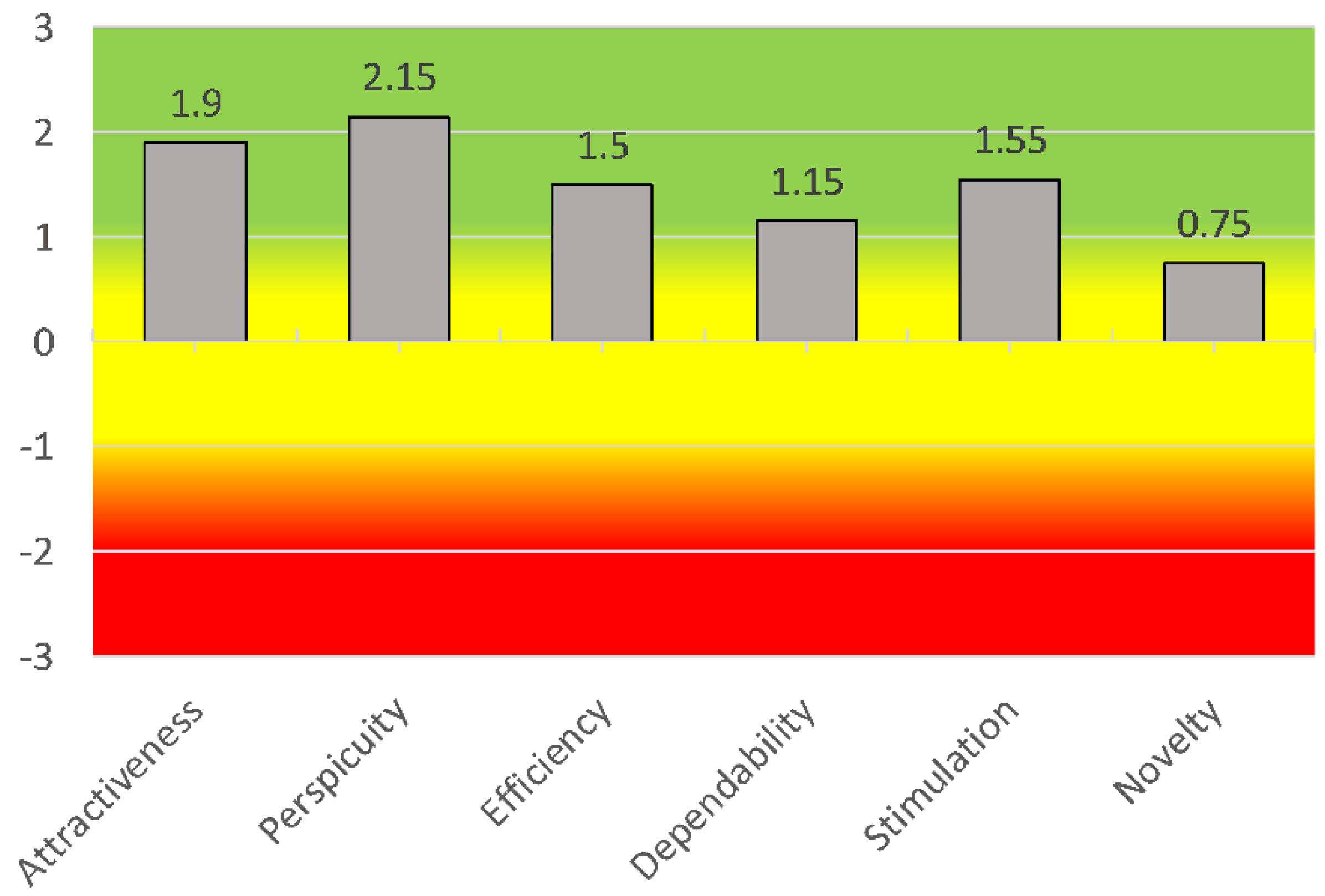

4.1. UEQ Results

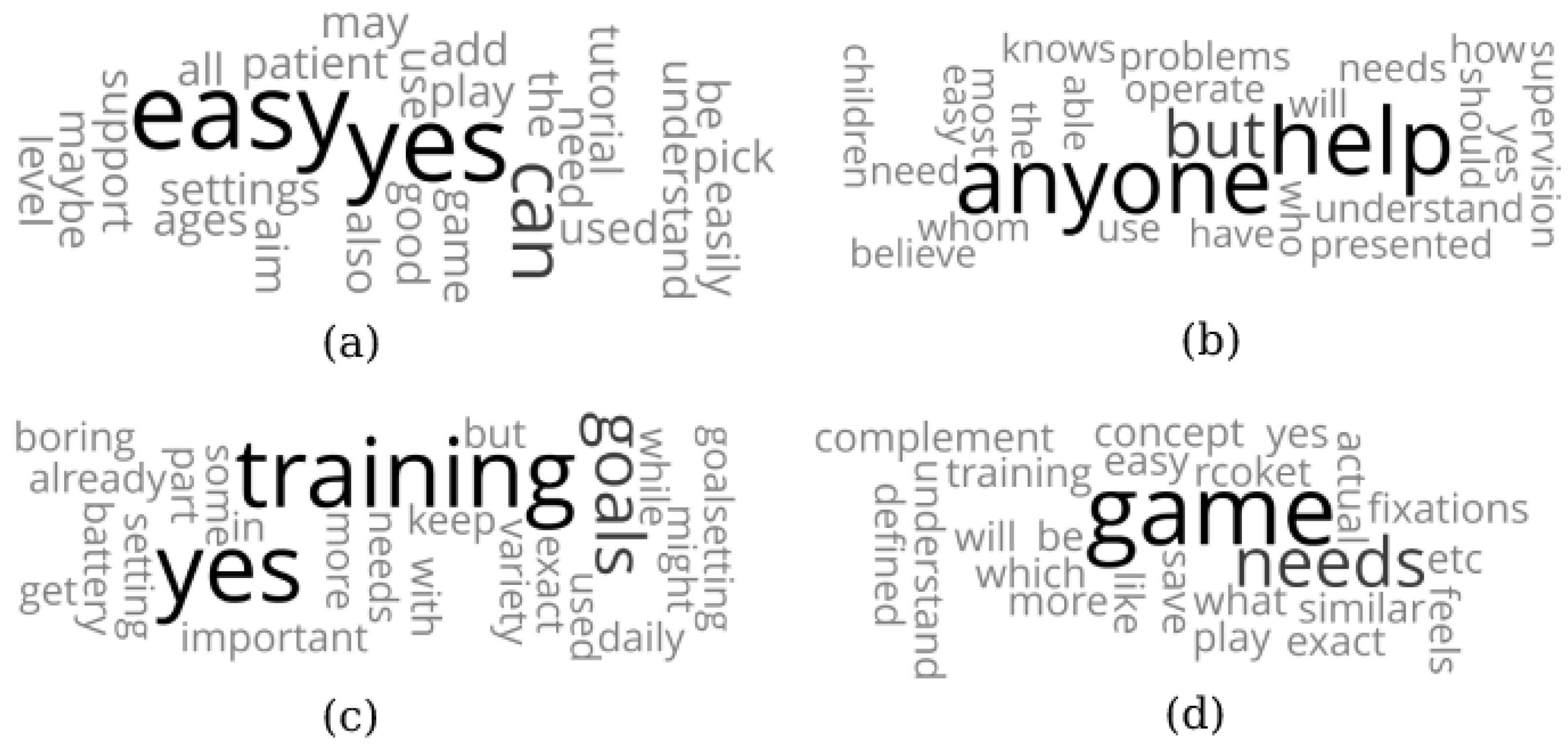

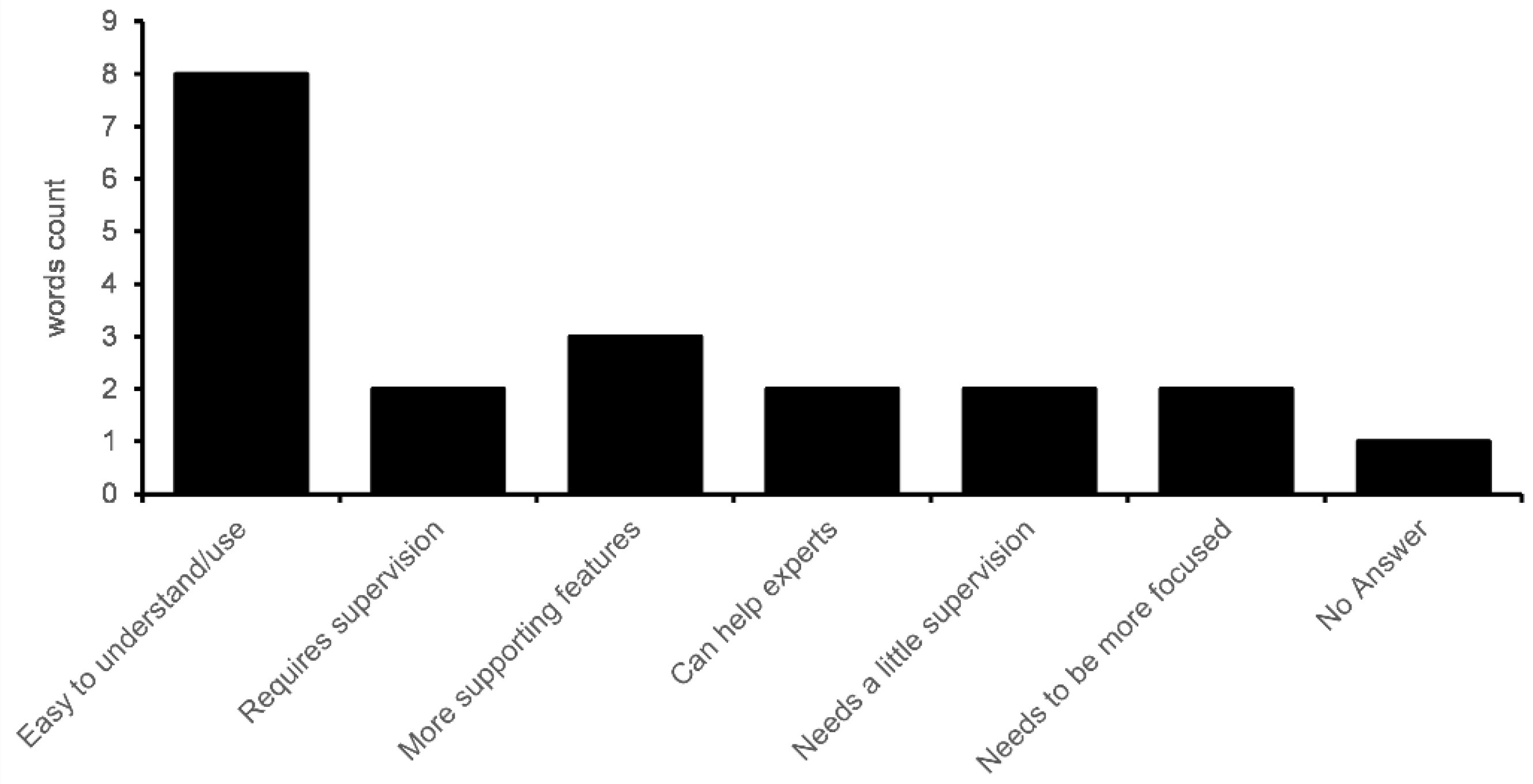

4.2. Open-Ended Questionnaire Results

- Used by children/patients (alone)?

- Used with help from somebody (parents/experts, etc.). If help is needed, who can help?

- Can it be used every day, if needed?

- Does it complement the other training? If yes, how?

4.3. Interview Results

4.3.1. Using the Eye-Tracker

4.3.2. Interaction with the Game and Objects

4.3.3. Performance of the Game

4.3.4. Suggested Improvements

5. Discussion and Future Work

- Clear and short instructions should guide the gameplay. These instructions were defined and implemented in the flow of the game.

- Short tutorials that illustrate the game should be provided before play. However, these tutorials must be tailored to the relevant problems and stakeholders.

- A complete goal should be defined when starting with the training solution, for example, by adjusting game parameters such as frequency, speed, and time for each session.

- For children who have difficulty understanding the games, help should be available from someone, e.g., a parent. How people can help, depending on their role, should also be defined. At this stage, the study only included help from the developers, based on initial guidelines from vision teachers [10].

- Games should offer different levels, challenges, and backgrounds to counter the boredom that can arise with repetition. In these short tests, the games were perceived as challenging and as supporting a high-quality user experience, but boredom should be considered for real use, such as when users need to train several days a week for several weeks [44].

- Games should include factors that focus on eye movements, e.g., fixations, saccades, and smooth pursuits. Measurements of these eye movements that could indicate possible problems have not yet been described.
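To make the last point more concrete, the following is a minimal sketch of dispersion-threshold fixation identification, the family of algorithms discussed by Salvucci and Goldberg and by Blignaut in the reference list. It is not the prototype's implementation. The sample format, the function names, and the threshold values are assumptions, and the dispersion threshold must be expressed in the same unit as the gaze coordinates (for example, degrees of visual angle or pixels).

```python
from typing import List, Tuple

# One gaze sample: (timestamp in milliseconds, x, y). The format is an assumption.
Sample = Tuple[int, float, float]
# One fixation: (start time, end time, centroid x, centroid y).
Fixation = Tuple[int, int, float, float]


def dispersion(window: List[Sample]) -> float:
    """Dispersion of a window: (max x - min x) + (max y - min y)."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(
    samples: List[Sample],
    max_dispersion: float = 1.0,  # assumed threshold, same unit as x and y
    min_duration_ms: int = 100,   # assumed minimum fixation duration
) -> List[Fixation]:
    """Group consecutive samples into fixations using a dispersion threshold."""
    fixations: List[Fixation] = []
    start, n = 0, len(samples)
    while start < n:
        # Grow the initial window until it spans at least min_duration_ms.
        end = start
        while end < n and samples[end][0] - samples[start][0] < min_duration_ms:
            end += 1
        if end >= n:
            break  # not enough remaining data for another fixation
        if dispersion(samples[start:end + 1]) <= max_dispersion:
            # Extend the window while the samples stay close together.
            while end + 1 < n and dispersion(samples[start:end + 2]) <= max_dispersion:
                end += 1
            window = samples[start:end + 1]
            cx = sum(s[1] for s in window) / len(window)
            cy = sum(s[2] for s in window) / len(window)
            fixations.append((window[0][0], window[-1][0], cx, cy))
            start = end + 1
        else:
            start += 1  # drop the first sample and try again
    return fixations
```

Samples that fall between two detected fixations can then be treated as saccade candidates. Smooth pursuits need a separate, velocity-based analysis and are not covered by this sketch.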

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Alvarez-Peregrina, C.; Sánchez-Tena, M.Á.; Andreu-Vázquez, C.; Villa-Collar, C. Visual Health and Academic Performance in School-Aged Children. Int. J. Environ. Res. Public Health 2020, 17, 2346.

2. Zaba, J.N. Children’s Vision Care in the 21st Century & Its Impact on Education Literacy, Social Issues, & The Workplace: A Call to Action. J. Behav. Optom. 2011, 22, 39–41.

3. Iyer, J.; Taub, M.B. The VisionPrint System: A new tool in the diagnosis of ocular motor dysfunction. Optom. Vis. Dev. 2011, 42, 17–24.

4. Tanke, N.; Barsingerhorn, A.D.; Boonstra, F.N.; Goossens, J. Visual fixations rather than saccades dominate the developmental eye movement test. Sci. Rep. 2021, 11, 1162.

5. Bonilla-Warford, N.; Allison, C. A Review of the Efficacy of Oculomotor Vision Therapy in Improving Reading Skills. J. Optom. Vis. Dev. 2004, 35, 108–115.

6. Ali, Q.; Heldal, I.; Helgesen, C.G.; Costescu, C.; Kovari, A.; Katona, J.; Thill, S. Eye-tracking Technologies Supporting Vision Screening In Children. In Proceedings of the 2020 11th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Mariehamn, Finland, 23–25 September 2020; pp. 471–478.

7. Eide, M.G.; Heldal, I.; Helgesen, C.G.; Wilhelmsen, G.B.; Watanabe, R.; Geitung, A.; Soleim, H.; Costescu, C. Eye tracking complementing manual vision screening for detecting oculomotor dysfunction. In Proceedings of the 2019 E-Health and Bioengineering Conference (EHB), Iasi, Romania, 21–23 November 2019; pp. 1–5.

8. Thiagarajan, P.; Ciuffreda, K.J.; Capo-Aponte, J.E.; Ludlam, D.P.; Kapoor, N. Oculomotor neurorehabilitation for reading in mild traumatic brain injury (mTBI): An integrative approach. NeuroRehabilitation 2014, 34, 129–146.

9. Wilhelmsen, G.B.; Felder, M. Structured Visual Learning and Stimulation in School: An Intervention Study. Creat. Educ. 2021, 12, 757–779.

10. Heldal, I.; Helgesen, C.; Ali, Q.; Patel, D.; Geitung, A.B.; Pettersen, H. Supporting School Aged Children to Train Their Vision by Using Serious Games. Computers 2021, 10, 53.

11. Ujbanyi, T.; Katona, J.; Sziladi, G.; Kovari, A. Eye-tracking analysis of computer networks exam question besides different skilled groups. In Proceedings of the 2016 7th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Wroclaw, Poland, 16–18 October 2016; pp. 277–282.

12. Hirota, M.; Kato, K.; Fukushima, M.; Ikeda, Y.; Hayashi, T.; Mizota, A. Analysis of smooth pursuit eye movements in a clinical context by tracking the target and eyes. Sci. Rep. 2022, 12, 8501.

13. Murray, N.; Kubitz, K.; Roberts, C.-M.; Hunfalvay, M.; Bolte, T.; Tyagi, A. An examination of the oculomotor behavior metrics within a suite of digitized eye tracking tests. IEEE J. Transl. Eng. Health. Med. 2019, 5, 1–5.

14. Fortenbacher, D.L.; Bartolini, A.; Dornbos, B.; Tran, T. Vision Therapy and Virtual Reality Applications. Adv. Ophthalmol. Optom. 2018, 3, 39–59.

15. Carvelho, T.; Allison, R.S.; Irving, E.L.; Herriot, C. Computer gaming for vision therapy. In Proceedings of the 2008 Virtual Rehabilitation, Vancouver, BC, Canada, 25–27 August 2008; pp. 198–204.

16. Gaggi, O.; Ciman, M. The use of games to help children eyes testing. Multimed. Tools Appl. 2016, 75, 3453–3478.

17. Facchin, A. Spotlight on the Developmental Eye Movement (DEM) Test. Clin. Optom. 2021, 13, 73–81.

18. Ayton, L.N.; Abel, L.A.; Fricke, T.R.; McBrien, N.A. Developmental eye movement test: What is it really measuring? Optom. Vis. Sci. 2009, 86, 722–730.

19. Sehgal, S.; Satgunam, P. Quantifying Suppression in Anisometropic Amblyopia With VTS4 (Vision Therapy System 4). Transl. Vis. Sci. Technol. 2020, 9, 24.

20. Sanchez, I.; Ortiz-Toquero, S.; Martin, R.; de Juan, V. Advantages, limitations, and diagnostic accuracy of photoscreeners in early detection of amblyopia: A review. Clin. Ophthalmol. 2016, 10, 1365–1373.

21. Bortoli, A.D.; Gaggi, O. PlayWithEyes: A new way to test children eyes. In Proceedings of the 2011 IEEE 1st International Conference on Serious Games and Applications for Health (SeGAH), Braga, Portugal, 16–18 November 2011; pp. 1–4.

22. Li, S.L.; Reynaud, A.; Hess, R.F.; Wang, Y.Z.; Jost, R.M.; Morale, S.E.; De La Cruz, A.; Dao, L.; Stager, D., Jr.; Birch, E.E. Dichoptic movie viewing treats childhood amblyopia. J. Am. Assoc. Pediatr. Ophthalmol. Strabismus 2015, 19, 401–405.

23. Hernández-Rodríguez, C.J.; Piñero, D.P.; Molina-Martín, A.; Morales-Quezada, L.; de Fez, D.; Leal-Vega, L.; Arenillas, J.F.; Coco-Martín, M.B. Stimuli Characteristics and Psychophysical Requirements for Visual Training in Amblyopia: A Narrative Review. J. Clin. Med. 2020, 9, 3985.

24. Eastgate, R.M.; Griffiths, G.D.; Waddingham, P.E.; Moody, A.D.; Butler, T.K.H.; Cobb, S.V.; Comaish, I.F.; Haworth, S.M.; Gregson, R.M.; Ash, I.M.; et al. Modified virtual reality technology for treatment of amblyopia. Eye 2006, 20, 370–374.

25. Singh, A.; Saxena, V.; Yadav, S.; Agrawal, A.; Ramawat, A.; Samanta, R.; Panyala, R.; Kumar, B. Comparison of home-based pencil push-up therapy and office-based orthoptic therapy in symptomatic patients of convergence insufficiency: A randomized controlled trial. Int. Ophthalmol. 2021, 41, 1327–1336.

26. Boon, M.Y.; Asper, L.J.; Chik, P.; Alagiah, P.; Ryan, M. Treatment and compliance with virtual reality and anaglyph-based training programs for convergence insufficiency. Clin. Exp. Optom. 2020, 103, 870–876.

27. Donmez, M.; Cagiltay, K. Development of eye movement games for students with low vision: Single-subject design research. Educ. Inf. Technol. 2019, 24, 295–305.

28. Kita, R.; Yamamoto, M.; Kitade, K. Development of a Vision Training System Using an Eye Tracker by Analyzing Users’ Eye Movements. In Proceedings of the 22nd HCI International Conference 2020 – Late Breaking Papers: Interaction, Knowledge and Social Media, Copenhagen, Denmark, 19–24 July 2020; pp. 371–382.

29. Handa, T.; Ishikawa, H.; Shoji, N.; Ikeda, T.; Totuka, S.; Goseki, T.; Shimizu, K. Modified iPad for treatment of amblyopia: A preliminary study. J. Am. Assoc. Pediatr. Ophthalmol. Strabismus 2015, 19, 552–554.

30. Jiménez-Rodríguez, C.; Yélamos-Capel, L.; Salvestrini, P.; Pérez-Fernández, C.; Sánchez-Santed, F.; Nieto-Escámez, F. Rehabilitation of visual functions in adult amblyopic patients with a virtual reality videogame: A case series. Virtual Real. 2021.

31. Oates, B.J. Researching Information Systems and Computing, 1st ed.; Sage: Thousand Oaks, CA, USA, 2005; p. 360.

32. Hettervik-Frøland, T.; Heldal, I.; Ersvaer, E.; Sjøholt, G. Merging 360°-videos and Game-Based Virtual Environments for Phlebotomy Training: Teachers and Students View. In Proceedings of the 2021 International Conference on e-Health and Bioengineering, Iasi, Romania, 18–19 November 2021; pp. 1–6.

33. Costescu, C.; Rosan, A.; Brigitta, N.; Hathazi, A.; Kovari, A.; Katona, J.; Demeter, R.; Heldal, I.; Helgesen, C.; Thill, S.; et al. Assessing Visual Attention in Children Using GP3 Eye Tracker. In Proceedings of the 2019 10th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Naples, Italy, 23–25 October 2019; pp. 343–348.

34. Günther, V.; Holzner, B.; Kemmler, G.; Pircher, M.; Trebo, E.; Hinterhuber, H. Computer-assisted cognitive training in psychiatric outpatients. Eur. Neuropsychopharmacol. 1997, 1002, 287–288.

35. Murray, N.P.; Hunfalvay, M.; Roberts, C.-M.; Tyagi, A.; Whittaker, J.; Noel, C. Oculomotor Training for Poor Saccades Improves Functional Vision Scores and Neurobehavioral Symptoms. Arch. Rehabil. Res. Clin. Transl. 2021, 3, 100126.

36. Aker, Ç.; Rızvanoğlu, K.; İnal, Y.; Yılmaz, A.S. Analyzing playability in multi-platform games: A case study of the Fruit Ninja Game. In Proceedings of the 5th 2016 International Conference of Design, User Experience, and Usability, Toronto, Canada, 17–22 July 2016; pp. 229–239.

37. Eide, G.M.; Watanabe, R.; Heldal, I.; Helgesen, C.; Geitung, A.; Soleim, H. Detecting oculomotor problems using eye tracking: Comparing EyeX and TX300. In Proceedings of the 10th IEEE Conference on Cognitive Infocommunication (CogInfoCom), Naples, Italy, 23–25 October 2019; pp. 381–388.

38. Ramanauskas, N. Calibration of Video-Oculographical Eye-Tracking System. Elektron. Elektrotech. 2006, 8, 65–68.

39. Timans, R.; Wouters, P.; Heilbron, J. Mixed methods research: What it is and what it could be. Theory Soc. 2019, 48, 193–216.

40. Schrepp, M.; Hinderks, A.; Thomaschewski, J. Construction of a Benchmark for the User Experience Questionnaire (UEQ). Int. J. Interact. Multi. 2017, 4, 40–44.

41. Tarantino, E.; De Falco, I.; Scafuri, U. A mobile personalized tourist guide and its user evaluation. Inf. Technol. Tour. 2019, 21, 413–455.

42. Fan, Z.; Brown, K.; Nistor, S.; Seepaul, K.; Wood, K.; Uribe-Quevedo, A.; Perera, S.; Waller, E.; Lowe, S. Use of Virtual Reality Technology for CANDU 6 Reactor Fuel Channel Operation Training. In Proceedings of the 9th Games and Learning Alliance, Laval, France, 9–10 December 2020; pp. 91–101.

43. Turner, C.W.; Nielsen, J.; Lewis, J.R. Current issues in the determination of usability test sample size: How many users is enough. In Proceedings of the 2002 Usability Professionals Association, Orlando, FL, USA, 8–12 July 2002; pp. 1–5.

44. Ali, Q.; Heldal, I.; Eide, M.G.; Helgesen, C.; Wilhelmsen, G.B. Using Eye-tracking Technologies in Vision Teachers’ Work–a Norwegian Perspective. In Proceedings of the 2020 E-Health and Bioengineering Conference (EHB), Iasi, Romania, 29–30 October 2020; pp. 1–5.

45. Hyldgaard, D.; Schweder, F.J.v.; Ali, Q.; Heldal, I.; Knapstad, M.K.; Aasen, T. Open Source Affordable Balance Testing based on a Nintendo Wii Balance Board. In Proceedings of the 2021 International Conference on e-Health and Bioengineering (EHB), Iasi, Romania, 18–19 November 2021; pp. 1–4.

46. Ciman, M.; Gaggi, O.; Sgaramella, T.M.; Nota, L.; Bortoluzzi, M.; Pinello, L. Serious Games to Support Cognitive Development in Children with Cerebral Visual Impairment. Mob. Netw. Appl. 2018, 23, 1703–1714.

47. Blignaut, P. Fixation identification: The optimum threshold for a dispersion algorithm. Atten. Percept. Psychophys. 2009, 71, 881–895.

48. Ali, Q.; Heldal, I.; Helgesen, C.G. A Bibliometric Analysis of Virtual Reality-Aided Vision Therapy. Stud. Health Technol. Inform. 2022, 295, 526–529.

49. Salvucci, D.; Goldberg, J. Identifying fixations and saccades in eye-tracking protocols. In Proceedings of the 2000 Eye Tracking Research and Applications Symposium (ETRA), Palm Beach Gardens, FL, USA, 6–8 November 2000; pp. 71–78.

50. Mankins, J.C. Technology readiness levels: A White Paper. Business 1995, 6, 1–5.

51. Rowe, F.; Brand, D.; Jackson, C.A.; Price, A.; Walker, L.; Harrison, S.; Eccleston, C.; Scott, C.; Akerman, N.; Dodridge, C.; et al. Visual impairment following stroke: Do stroke patients require vision assessment? Age Ageing 2009, 38, 188–193.

52. Russell, L.L.; Greaves, C.V.; Convery, R.S.; Bocchetta, M.; Warren, J.D.; Kaski, D.; Rohrer, J.D. Eye movements in frontotemporal dementia: Abnormalities of fixation, saccades and anti-saccades. Alzheimer’s Dement. Transl. Res. Clin. Interv. 2021, 7, e12218.

53. Arnarson, G.; Eliassen, K.; Grønås, S. Improvement of Games using Eye Tracking for Oculomotor Training. Bachelor’s Thesis, Western Norway University of Applied Sciences, Bergen, Norway, 2021.

| Authors | Year | Vision Problem | Technology | Gamification Elements |
|---|---|---|---|---|
| Li et al. [22] | 2015 | Amblyopia | 3D monitor | × |
| Handa et al. [29] | 2015 | Amblyopia | iPad | ✓ |
| Eastgate et al. [24] | 2006 | Amblyopia | Virtual Reality | ✓ |
| Jiménez et al. [30] | 2021 | Amblyopia | Virtual Reality | ✓ |
| Carvelho et al. [15] | 2008 | Convergence insufficiency | Computer | ✓ |
| Boon et al. [26] | 2020 | Convergence insufficiency | Virtual Reality | ✓ |
| Donmez et al. [27] | 2019 | Low vision | Eye-tracker | ✓ |
| Kita et al. [28] | 2020 | Eye movements | Eye-tracker | × |

| Challenge | Ocular Motor Activities | Exercises |
|---|---|---|
| Field of view | Saccades; Visual attention; Regression | Horizontal movements; Vertical movements; Diagonal movements; Circular movements |
| Visual acuity | Fixations; Endurance; Saccades; Mini saccades | Searching/Scanning; Find objects in a crowd; Horizontal movements; Vertical movements; Diagonal movements; Circular movements; Smooth pursuit; Find pairs/similarities; Labyrinths point to point |
| Stereopsis | Accommodations; Convergence; Double vision | Movements and flashes at a distance (different depths); Objects that vary in size |
| Eye-hand coordination | Use the mouse or keyboard based on events on the screen, and vice versa. |

| Item | Left term | 1 | 2 | 3 | 4 | 5 | 6 | 7 | Right term |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Uncomfortable | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Comfortable |
| 2 | Incomprehensible | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Comprehensible |
| 3 | Creative | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Not creative |
| 4 | Easy to understand | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Difficult to understand |
| 5 | Noticeable | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Poor |
| 6 | Boring | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Fascinating |
| 7 | Insignificant | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Interesting |
| 8 | Unpredictable | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Predictable |
| 9 | Fast | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Slow |
| 10 | Original | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Conventional |
| 11 | Obstructive | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Of support |
| 12 | Agreeable | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Disagreeable |
| 13 | Complicated | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Easy |
| 14 | Repellent | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Attractive |
| 15 | Usual | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Modern |
| 16 | Appreciated | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Unpleasant |
| 17 | Sure | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Unsure |
| 18 | Stimulating | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Soporific |
| 19 | Satisfying | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Scant |
| 20 | Inefficient | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Efficient |
| 21 | Clear | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Messy |
| 22 | Not much practical | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Practical |
| 23 | Ordered | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Unordered |
| 24 | Attractive | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Not attractive |
| 25 | Friendly | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Hostile |
| 26 | Conservative | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | ◯ | Innovative |
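The questionnaire above uses a seven-point scale with the positive term sometimes on the left and sometimes on the right, so answers have to be re-coded before they can be averaged. The sketch below shows one common way to do this: map each answer to the range -3 to +3 so that +3 always means the positive term, then average per scale. The set `POSITIVE_LEFT` is read off the 26 rows of the table above. The item-to-scale grouping in `SCALES` is an assumption taken from the standard UEQ layout and may not match the exact form used in the study.

```python
from statistics import mean
from typing import Dict, List

# Items whose positive term is printed on the left in the table above.
POSITIVE_LEFT = {3, 4, 5, 9, 10, 12, 16, 17, 18, 19, 21, 23, 24, 25}

# Assumed item-to-scale grouping (standard UEQ layout; verify against the form used).
SCALES: Dict[str, List[int]] = {
    "Attractiveness": [1, 12, 14, 16, 24, 25],
    "Perspicuity": [2, 4, 13, 21],
    "Efficiency": [9, 20, 22, 23],
    "Dependability": [8, 11, 17, 19],
    "Stimulation": [5, 6, 7, 18],
    "Novelty": [3, 10, 15, 26],
}


def item_score(item: int, answer: int) -> int:
    """Map a 1..7 answer to -3..+3 so that +3 is always the positive term."""
    if not 1 <= answer <= 7:
        raise ValueError("answer must be between 1 and 7")
    centered = answer - 4
    return -centered if item in POSITIVE_LEFT else centered


def scale_means(responses: List[Dict[int, int]]) -> Dict[str, float]:
    """Average each scale over its items per participant, then over participants."""
    result: Dict[str, float] = {}
    for scale, items in SCALES.items():
        per_person = [mean(item_score(i, r[i]) for i in items) for r in responses]
        result[scale] = mean(per_person)
    return result
```

Each element of `responses` is one participant's answers keyed by item number. The resulting scale means lie between -3 and +3 and can then be compared with the UEQ benchmark categories.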

| Participant | Profession | Use Spectacles | Age |
|---|---|---|---|
| P1 | Vision Teacher | Yes | ≈55 |
| P2 | Teacher | Yes | ≈60 |
| P3 | Student | Yes | 30 |
| P4 | Student | No | 25 |
| P5 | Student | No | 23 |

| Indicator | Attractiveness | Perspicuity | Efficiency | Dependability | Stimulation | Novelty |
|---|---|---|---|---|---|---|
| Value | 1.9 | 2.15 | 1.5 | 1.15 | 1.55 | 0.75 |
| Category | Excellent | Excellent | Good | Above average | Excellent | Above average |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ali, Q.; Heldal, I.; Helgesen, C.G.; Dæhlen, A. Serious Games for Vision Training Exercises with Eye-Tracking Technologies: Lessons from Developing a Prototype. Information 2022, 13, 569. https://doi.org/10.3390/info13120569