Abstract

Web systems have become a valuable source of semi-structured and streaming data. In this sense, Entity Resolution (ER) has become a key solution for integrating multiple data sources or identifying similarities between data items, called entities. To avoid the quadratic cost of the ER task and improve efficiency, blocking techniques are usually applied. Beyond the traditional challenges faced by ER and, consequently, by blocking techniques, there are also challenges related to streaming data, incremental processing, and noisy data. To address them, we propose a schema-agnostic blocking technique capable of handling noisy and streaming data incrementally through a distributed computational infrastructure. To the best of our knowledge, there is a lack of blocking techniques that address these challenges simultaneously. This work proposes two strategies (attribute selection and top-n neighborhood entities) to minimize resource consumption and improve blocking efficiency. Moreover, this work presents a noise-tolerant algorithm, which minimizes the impact of noisy data (e.g., typos and misspellings) on blocking effectiveness. In our experimental evaluation, we use real-world pairs of data sources, including a case study that involves data from Twitter and Google News. The proposed technique achieves better results regarding effectiveness and efficiency compared to the state-of-the-art technique (metablocking). More precisely, applying the two strategies to the proposed technique improves its efficiency by 56%, on average, relative to the technique without them.

1. Introduction

Currently, an increasing number of information systems continuously produce large amounts of data, such as Web systems (e.g., digital libraries, knowledge graphs, and e-commerce), social media (e.g., Twitter and Facebook), and the Internet of Things (e.g., mobile phones, sensors, and other devices) [1]. These applications have become a valuable source of heterogeneous data [2,3]. Such data are schema-free and can be represented in different formats (e.g., XML, RDF, and JSON). Commonly, data are provided by different data sources and may have overlapping knowledge. For instance, different social media platforms report the same events and thus generate large volumes of similar data. In this sense, Entity Resolution (ER) emerges as a fundamental step to support the integration of multiple knowledge bases or to identify similarities between entities. The ER task aims to identify records (i.e., entity profiles) from several data sources (i.e., entity collections) that refer to the same real-world entity (i.e., similar/correspondent entities) [4,5,6].

The ER task commonly includes four steps: blocking, comparison, classification, and evaluation [7]. In the first step, to avoid the quadratic cost of the ER task (i.e., comparisons guided by the Cartesian product), blocking techniques are applied to group similar entities into blocks, so that comparisons are performed only within each block. In the comparison step, the actual entity pair comparison occurs, i.e., the entities of each block are pairwise compared using a variety of comparison functions to determine the similarity between them. In the classification step, based on the similarity level, the entity pairs are classified into matches, non-matches, and potential matches (depending on the decision model used). In the evaluation step, the effectiveness of the ER results and the efficiency of the process are evaluated. This work focuses on the blocking step.

The heterogeneity of the data compromises block generation (by blocking techniques), since entity profiles rarely share the same schema. Therefore, traditional blocking techniques (e.g., sorted neighborhood and adaptive window) do not achieve satisfactory effectiveness, because their blocking is based on a fixed entity profile schema [8]. In turn, the heterogeneous data challenge is addressed by schema-agnostic blocking techniques, which disregard attribute names and consider the values related to the entity attributes to perform blocking [5]. Furthermore, we tackle three other challenges related to the ER task and, consequently, to blocking techniques: streaming data, incremental processing, and noisy data [9,10,11].

Streaming data are related to dynamic data sources (e.g., from Web systems, social media, and sensors), which are continuously updated. When blocking techniques receive streaming data, we assume that not all data (from the data sources) are available at once. Therefore, blocking techniques need to group the entities as they arrive, also considering the entities already blocked previously.

Incremental blocking refers to receiving data continuously over time and re-processing only the generated blocks (i.e., groups of similar entities) affected by the data increments. For this reason, it commonly suffers from resource consumption issues (e.g., memory and CPU), since ER approaches need to maintain a large amount of data in memory [9,10,12]. This occurs because incremental processing relies on information produced in previous increments. Given this behavior, strategies that manage computational resources are necessary. In such scenarios, memory consumption tends to be the biggest challenge faced by incremental blocking techniques [13,14]. Since streaming data are frequently processed incrementally [2], the challenges are strengthened when the ER task deals with heterogeneous data, streaming data, and incremental processing simultaneously. For this reason, the development of efficient incremental blocking techniques able to handle streaming data remains an open problem [13,15]. To improve efficiency and provide resources for incremental blocking techniques, parallel processing can be applied [16]. Parallel processing distributes the computational costs (i.e., of blocking entities) among the resources (e.g., computers or virtual machines) of a computational infrastructure to reduce the overall execution time of blocking techniques.
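To make the "re-process only the affected blocks" idea concrete, the following is a minimal sketch assuming a token-based index in which each token keys one block; the class and method names are hypothetical and do not describe the proposed technique's actual data structures. Each increment only touches the blocks of the tokens it contains, while the index itself keeps growing across increments, which illustrates why memory becomes the main concern.

```python
from collections import defaultdict


class IncrementalTokenIndex:
    """Hypothetical incremental token-blocking index: one block per token."""

    def __init__(self):
        # token -> set of entity ids (the block keyed by that token)
        self.blocks = defaultdict(set)

    def add_increment(self, entities):
        """Insert a batch of {entity_id: set_of_tokens}.

        Returns the tokens whose blocks changed, i.e., the only blocks that
        need re-processing for this increment; all other blocks are untouched.
        """
        affected = set()
        for entity_id, tokens in entities.items():
            for token in tokens:
                self.blocks[token].add(entity_id)
                affected.add(token)
        return affected


index = IncrementalTokenIndex()
index.add_increment({"a1": {"apple", "iphone"}, "b1": {"apple", "watch"}})
touched = index.add_increment({"a2": {"iphone", "case"}})
print(sorted(touched))  # ['case', 'iphone']
```

Note that the block for "iphone" now holds entities from two different increments, so the earlier increment's state must stay in memory for the later one to be blocked correctly.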

Concerning noisy data, in practical scenarios, people are less careful with the lexical accuracy of content written in informal virtual environments (e.g., social networks) or when they are under some kind of pressure (e.g., business reports) [17]. For these reasons, real-world data often present noise that can impair data interpretation, data manipulation tasks, and decision making [18]. In the ER context, noisy data exacerbate the challenge of determining the similarities between entities. This commonly occurs in scenarios where the similarity between entities is based on the lexical aspect of their attribute values, which is the case for a vast number of blocking techniques [19,20,21,22,23,24]. In this sense, this work considers the two most common types of noise in the data: typos and misspellings [17].

Considering the challenges described above, this research proposes a parallel-based blocking technique able to process streaming data incrementally. The proposed blocking technique is also able to process noisy data without a considerable impact on the effectiveness of the blocking results. Moreover, we propose two strategies to be applied in the blocking technique: attribute selection and top-n neighborhood. Both strategies aim to enhance the efficiency of the blocking technique by quickly processing entities (sent continuously) and preventing the blocking from consuming resources excessively. Therefore, the general hypothesis of our work is that the proposed blocking technique can improve the efficiency of the ER task in streaming data scenarios without decreasing its effectiveness.

Although the proposed blocking technique follows the idea behind token-based techniques such as those in [21,22], the latter are neither able to handle streaming data nor perform blocking incrementally. Since they were originally developed for batch data, they do not take into account the challenges related to incremental processing and streaming data. To the best of our knowledge, there is a lack of blocking techniques that address all the challenges faced in this work. Among the recent works, we can highlight the work in [19], which proposes a schema-agnostic blocking technique to handle streaming data. However, this work presents several limitations related to blocking efficiency and excessive resource consumption. Therefore, as part of our research, we propose an enhancement of the technique proposed in [19], by offering an efficient schema-agnostic blocking technique able to incrementally process streaming data. Overall, the main contributions of our work are:

- An incremental and parallel schema-agnostic blocking technique able to deal with streaming and incremental data, as well as minimize the challenges related to both scenarios;

- An attribute selection algorithm, which discards the superfluous attributes of the entities, to enhance efficiency and minimize resource consumption;

- A top-n neighborhood strategy, which maintains only the n most similar entities (i.e., neighbor entities) of each entity, improving the efficiency of the proposed technique;

- A noise-tolerant algorithm, which generates hash values from the entity attributes and allows the generation of high-quality blocks even in the presence of noisy data;

- An experimental evaluation applying real-world data sources to analyze the proposed technique in terms of efficiency and effectiveness;

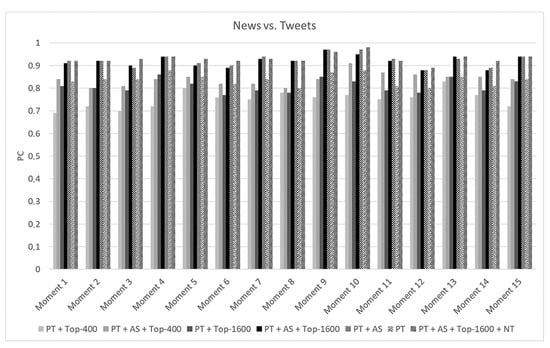

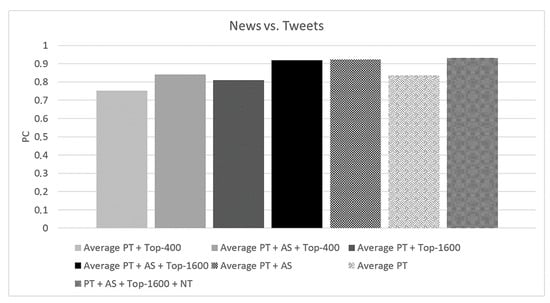

- A real-world case study involving data from Twitter and Google News to evaluate the application of the attribute selection and top-n neighborhood strategies over the proposed technique in terms of effectiveness.
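The top-n neighborhood strategy listed above can be sketched as follows, assuming the pairwise similarities are already available as a dictionary of cross-source pairs; the function name and input format are hypothetical. Each entity keeps only its n highest-scoring neighbors, which bounds the per-entity state regardless of how many candidate neighbors accumulate over the stream.

```python
import heapq


def top_n_neighbors(similarities, n):
    """Keep, for each entity, only its n most similar neighbors.

    similarities: dict mapping (entity_a, entity_b) -> similarity score,
    where each pair links entities from different sources (hypothetical input).
    """
    candidates = {}
    for (a, b), score in similarities.items():
        # an undirected edge is a neighbor candidate for both endpoints
        candidates.setdefault(a, []).append((score, b))
        candidates.setdefault(b, []).append((score, a))
    return {
        entity: [neighbor for _, neighbor in heapq.nlargest(n, neighbors)]
        for entity, neighbors in candidates.items()
    }


sims = {("e1", "f1"): 0.9, ("e1", "f2"): 0.4, ("e1", "f3"): 0.7}
print(top_n_neighbors(sims, 2)["e1"])  # ['f1', 'f3']
```

`heapq.nlargest` avoids sorting every neighbor list in full; in a streaming setting, the same bound could be maintained with a fixed-size min-heap per entity.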

The rest of the paper is structured as follows: Section 2 formalizes the problem addressed in this work. In Section 3, we present the most relevant works in the literature related to the field addressed in this paper. Section 4 presents the parallel-based blocking technique and describes the workflow to process streaming data. Section 5 describes the time-window strategy, which is applied in the proposed technique to avoid the excessive consumption of computational resources. Section 6 presents the attribute selection strategy, which discards superfluous attributes in order to improve efficiency. In Section 7, we discuss the experimental evaluation, and in Section 8, the results of the case study are addressed. Finally, Section 9 concludes the paper along with directions for future work.

2. Problem Statement

Given two entity collections $E_1$ and $E_2$, where $E_1$ and $E_2$ represent two data sources, the purpose of ER is to identify all matches among the entities (e) of the data sources [5,7]. Each entity $e \in E_1$ or $e \in E_2$ can be denoted as a set of key-value elements that models its attributes (a) and the values (v) associated with each attribute, i.e., $\langle a, v \rangle \in e$. Given that $\theta(e_i, e_k)$ is the function that determines the similarity between the entities $e_i$ and $e_k$, and $\mu$ is the threshold ($\mu \in \mathbb{R}$) that determines whether the entities $e_i$ and $e_k$ are considered a match (i.e., truly similar entities), the task of identifying similar entities (ER) can be denoted as $ER(E_1, E_2) = \{(e_i, e_k) \mid e_i \in E_1,\ e_k \in E_2,\ \theta(e_i, e_k) \geq \mu\}$.

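In traditional ER, entity comparisons are guided by the Cartesian product (i.e., $E_1 \times E_2$), which requires $|E_1| \cdot |E_2|$ comparisons. For this reason, blocking techniques are applied to avoid the quadratic asymptotic complexity of ER. Note that the blocking output is a set of blocks $B$ such that every block $b \in B$ contains entities of the data sources, i.e., $b \subseteq E_1 \cup E_2$. Thus, each block b groups entities such that any two entities $e_i, e_k \in b$ satisfy $\theta(e_i, e_k) \geq \Phi$, where $\Phi$ is the threshold of the blocking criterion.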
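The following toy sketch contrasts the Cartesian product with token blocking, one of the schema-agnostic techniques cited in this paper, on two tiny sources. The function names and data are hypothetical, and plain token blocking (one block per shared token) stands in for blocking in general: only cross-source pairs that share a block become candidate comparisons.

```python
from itertools import product


def cartesian_comparisons(e1, e2):
    """Number of comparisons without blocking: |E1| x |E2|."""
    return len(e1) * len(e2)


def token_blocking_pairs(e1, e2):
    """Candidate pairs sharing at least one token (one block per token).

    e1, e2: dicts mapping entity id -> set of tokens, one dict per data source.
    """
    blocks = {}  # token -> (ids from E1, ids from E2)
    for eid, tokens in e1.items():
        for t in tokens:
            blocks.setdefault(t, ([], []))[0].append(eid)
    for eid, tokens in e2.items():
        for t in tokens:
            blocks.setdefault(t, ([], []))[1].append(eid)
    pairs = set()
    for left, right in blocks.values():
        # only cross-source pairs inside the same block are compared
        pairs.update(product(left, right))
    return pairs


E1 = {"a": {"apple", "iphone"}, "b": {"samsung", "galaxy"}}
E2 = {"x": {"iphone", "13"}, "y": {"galaxy", "s21"}, "z": {"pixel"}}
print(cartesian_comparisons(E1, E2))       # 6
print(sorted(token_blocking_pairs(E1, E2)))  # [('a', 'x'), ('b', 'y')]
```

Here blocking reduces six comparisons to two; the gap grows quadratically with the size of the sources.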

Since this work considers that the data are sent incrementally, the blocking is divided into a set of increments $I = \{I_1, I_2, \ldots, I_{|I|}\}$, where $|I|$ is the number of increments and each $I_i \in I$ contains entities from $E_1$ and $E_2$. Given that the sources provide data in streams, each increment is associated with a time interval $\Delta$. Thus, the time interval between two consecutive increments is $\Delta$, i.e., $\Delta = \tau(I_{i+1}) - \tau(I_i)$, where $\tau(I_i)$ returns the timestamp at which the increment $I_i$ was sent and $i$ is the index of the increment. For instance, in a scenario where data sources provide data every 30 s, we can assume that $\Delta = 30$ s.
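A minimal sketch of how timestamped entities could be assigned to increments spaced by a fixed time interval (30 s in the example above). It assumes increments are 1-indexed and start at a known timestamp; both the function names and these conventions are hypothetical, not part of the proposed technique.

```python
def increment_index(timestamp, start, delta):
    """Index i of the increment that a record sent at `timestamp` belongs to,
    assuming increments start at `start` and are spaced `delta` seconds apart."""
    if timestamp < start:
        raise ValueError("timestamp precedes the first increment")
    return int((timestamp - start) // delta) + 1  # increments are 1-indexed


def group_into_increments(records, start, delta):
    """Group (timestamp, entity) records into {increment_index: [entities]}."""
    increments = {}
    for ts, entity in records:
        increments.setdefault(increment_index(ts, start, delta), []).append(entity)
    return increments


records = [(0, "e1"), (12, "e2"), (31, "e3"), (75, "e4")]
print(group_into_increments(records, start=0, delta=30))
# {1: ['e1', 'e2'], 2: ['e3'], 3: ['e4']}
```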

For each $I_i$, each data source sends an entity collection. Thus, $I_i = E_1^i \cup E_2^i$, where $E_1^i$ contains the entities of $E_1$ and $E_2^i$ contains the entities of $E_2$ for the specific increment i. Since the entities can follow different loose schemas, each $e \in I_i$ has a specific attribute set, with a value associated with each attribute, denoted by $A_e = \{\langle a_1, v_1 \rangle, \ldots, \langle a_m, v_m \rangle\}$, such that $m$ is the number of attributes associated with e. When an entity e does not present a value for a specific attribute, this attribute is absent from $A_e$. For this reason, $m$ can differ between entities. As stated in Section 1, an attribute selection algorithm, which discards the superfluous attributes from the entities, is proposed in this work. Hence, when the attribute selection algorithm is applied, attributes in $A_e$ may be removed, as discussed later in Section 6. In this sense, the set of attributes after the application of attribute selection is given by $A'_e = \{\langle a, v \rangle \in A_e \mid \neg \psi(a)\}$, where $\psi$ represents the attribute selection algorithm, which returns true if the attribute a (as well as its value) should be removed and false otherwise.

In order to generate the entity blocks, tokens (e.g., keywords) are extracted from the attribute values. Note that blocking techniques aim to group similar entities (i.e., pairs with $\theta(e_i, e_k) \geq \mu$) to avoid the quadratic cost of the ER task, which compares all entities of $E_1$ with all entities of $E_2$. To group similar entities, blocking techniques such as token-based techniques can determine the similarity between entities based on common tokens extracted from the attribute values. For token extraction, all tokens associated with an entity e are grouped into a set $\Lambda_e$, i.e., $\Lambda_e = \bigcup_{\langle a, v \rangle \in A_e} \Gamma(v)$, such that $\Gamma(v)$ is a function that extracts the tokens from the attribute value v. For instance, $\Gamma(v)$ can split the text of v on whitespace and remove stop words (e.g., “and”, “is”, “are”, and “the”); the remaining words form the set of tokens. Stop words are not useful as tokens because they are common words and hardly indicate similarity between entities. In scenarios involving noisy data, we apply LSH algorithms to generate a hash value for each token. Therefore, a hash function H is applied to $\Lambda_e$, i.e., $\Lambda^H_e = H(\Lambda_e)$, to generate the set of hash values $\Lambda^H_e$ instead of the set of tokens $\Lambda_e$. In summary, when noisy data are considered, $\Lambda_e$ is replaced with $\Lambda^H_e$.
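The token extraction described above, combined with an optional attribute selection predicate, can be sketched as follows. The stop-word list contains only the examples given in the text, and lowercasing is our assumption (the text does not specify case handling); the function names are hypothetical.

```python
STOP_WORDS = {"and", "is", "are", "the"}  # the text's examples; a real list is longer


def extract_tokens(value):
    """Token extraction for one attribute value: lowercase (our assumption),
    split on whitespace, and drop stop words."""
    return {word for word in value.lower().split() if word not in STOP_WORDS}


def entity_tokens(entity, discard=lambda attribute: False):
    """Union of the tokens of every attribute value kept by attribute selection.

    entity: dict attribute -> value.
    discard: stands in for the attribute selection predicate
             (True means the attribute and its value are removed).
    """
    tokens = set()
    for attribute, value in entity.items():
        if not discard(attribute):
            tokens |= extract_tokens(value)
    return tokens


e = {"title": "The iPhone 13 and the case", "id": "X-991"}
print(sorted(entity_tokens(e)))                                  # ['13', 'case', 'iphone', 'x-991']
print(sorted(entity_tokens(e, discard=lambda a: a == "id")))     # ['13', 'case', 'iphone']
```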

To generate the entity blocks, a similarity graph $G = (V, L)$ is created in which each entity e is mapped to a vertex $v \in V$ and non-directional edges $l \in L$ are added between vertices. Each l is represented by a triple $\langle v_i, v_k, w \rangle$ such that $v_i$ and $v_k$ are vertices of G and $w$ is the similarity value between them. Thus, the similarity value between two vertices (i.e., entities) $v_i$ and $v_k$ is denoted by $w(v_i, v_k)$. In this work, $w(v_i, v_k)$ is given by the average of the common tokens between $\Lambda_{e_i}$ and $\Lambda_{e_k}$. This similarity function is based on the functions proposed in [11,21]. In scenarios involving noisy data, $w(v_i, v_k)$ is given by the average of the common hash values between $\Lambda^H_{e_i}$ and $\Lambda^H_{e_k}$. Since the idea is not to compare (or link) two entities from the same data source, $v_i$ and $v_k$ must come from different data sources. Therefore, $v_i$ is a vertex generated from an entity $e_i \in E_1$ and $v_k$ is a vertex generated from an entity $e_k \in E_2$.
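The sketch below builds the cross-source similarity graph as a list of triples. The text describes the edge weight only as "the average of the common tokens", so the weight used here (number of common tokens divided by the mean size of the two token sets) is an assumed reading, not the paper's exact formula; function names are hypothetical. For clarity, the sketch enumerates all cross-source pairs, whereas a blocking technique would derive candidate pairs from shared tokens instead of the Cartesian product.

```python
from itertools import product


def common_token_similarity(tokens_i, tokens_k):
    """Assumed reading of 'average of the common tokens':
    |common tokens| divided by the mean size of the two token sets."""
    if not tokens_i or not tokens_k:
        return 0.0
    common = len(tokens_i & tokens_k)
    return common / ((len(tokens_i) + len(tokens_k)) / 2)


def build_similarity_graph(source1, source2):
    """Edges (v_i, v_k, w) only between entities of different sources, with w > 0.

    source1, source2: dicts entity id -> token set.
    """
    edges = []
    for (id_i, tok_i), (id_k, tok_k) in product(source1.items(), source2.items()):
        w = common_token_similarity(tok_i, tok_k)
        if w > 0:
            edges.append((id_i, id_k, w))
    return edges


s1 = {"a": {"iphone", "13", "case"}}
s2 = {"x": {"iphone", "13"}, "y": {"galaxy"}}
print(build_similarity_graph(s1, s2))  # [('a', 'x', 0.8)]
```

With noisy data, the same function applies unchanged if the token sets are replaced by sets of hash values.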

The attribute selection algorithm aims to discard superfluous attributes, i.e., attributes whose tokens will hardly help the blocking task find similar entities. For instance, consider two entities $e_i \in E_1$ and $e_k \in E_2$. Let $A_{e_i} = \{a_1, a_2, a_3\}$ and $A_{e_k} = \{a_4, a_5\}$; if only the attributes $a_1$ and $a_4$ have the same meaning (i.e., contain attribute values regarding the same content), the tokens generated from $a_2$, $a_3$, and $a_5$ will hardly result in blocking keys that truly represent the similarity between the entities. To this end, the attribute selection strategy is applied.

The idea behind attribute selection is to determine the similarity among attributes based on their values. After that, the attributes (and, consequently, their values) that do not have similarities with others are discarded. Formally, all attributes associated with the entities belonging to data sources $E_1$ and $E_2$ are extracted. Moreover, all values associated with the same attribute $a_j$ are grouped into a set $V_{a_j}$. In turn, the pair $\langle a_j, V_{a_j} \rangle$ represents the set of values associated with a specific attribute $a_j$. Let R be the list of discarded attributes, which contains the attributes that do not have similarities with others. Formally, given an attribute $a_j$ of one data source and the attributes $a_k$ of the other, $a_j \in R \iff \mathit{sim}(V_{a_j}, V_{a_k}) \leq \omega$ for every $a_k$, where $\mathit{sim}(V_{a_j}, V_{a_k})$ calculates the similarity between the sets $V_{a_j}$ and $V_{a_k}$ and $\omega$ is a given threshold. In this work, we compute the value of $\omega$ from the similarity values of all attribute pairs provided by $E_1$ and $E_2$: $\omega$ assumes the value of the first quartile of these similarities. Then, all attribute pairs whose similarities fall in the first quartile (i.e., the 25% of attribute pairs with the lowest similarities) are discarded. The choice of $\omega$ was based on previous experiments, which indicated a negative influence on effectiveness when $\omega$ is set above the first quartile. For this reason, $\omega$ assumes the value of the first quartile to avoid significantly impacting effectiveness. Then, the token extraction considering attribute selection is given by $\Lambda'_e = \bigcup_{\langle a, v \rangle \in A'_e} \Gamma(v)$. Similarly, when noisy data are considered, the token extraction considering attribute selection is given by $\Lambda'^H_e = H(\Lambda'_e)$.
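A minimal sketch of the first-quartile attribute selection described above. Several choices are assumptions rather than details from the text: the similarity between two value sets is the Jaccard similarity of their whitespace tokens, the quartile is computed with Python's `statistics.quantiles` (default "exclusive" method), and an attribute is discarded when even its best cross-source similarity is at most the first quartile. Attributes are keyed by source number so that equally named attributes in both sources stay distinct.

```python
from itertools import product
from statistics import quantiles


def jaccard(x, y):
    """Jaccard similarity between two token sets (assumed choice of sim)."""
    union = x | y
    return len(x & y) / len(union) if union else 0.0


def value_tokens(values):
    """All whitespace tokens of the values grouped under one attribute."""
    return {tok for value in values for tok in value.lower().split()}


def select_attributes(attrs1, attrs2):
    """Return R, the attributes to discard, as (source, attribute) pairs.

    attrs1, attrs2: dict attribute -> list of values, one dict per data source.
    omega is the first quartile of all cross-source attribute-pair similarities.
    """
    tokens1 = {a: value_tokens(v) for a, v in attrs1.items()}
    tokens2 = {a: value_tokens(v) for a, v in attrs2.items()}
    sims = {(a, b): jaccard(tokens1[a], tokens2[b])
            for a, b in product(tokens1, tokens2)}
    if len(sims) < 2:
        return set()  # too few pairs to estimate a quartile
    omega = quantiles(sims.values(), n=4)[0]  # first quartile
    best = {}
    for (a, b), s in sims.items():
        best[(1, a)] = max(best.get((1, a), 0.0), s)
        best[(2, b)] = max(best.get((2, b), 0.0), s)
    return {key for key, s in best.items() if s <= omega}


attrs1 = {"title": ["iPhone 13 case", "Galaxy S21 cover"], "sku": ["X991", "K22"]}
attrs2 = {"name": ["iPhone 13 case black", "Galaxy S21 cover"], "seller": ["Acme store"]}
print(sorted(select_attributes(attrs1, attrs2)))  # [(1, 'sku'), (2, 'seller')]
```

In this toy example, the identifiers in `sku` and the store names in `seller` share no tokens with any attribute of the other source, so both are discarded, while `title` and `name` are kept.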

In ER, a blocking technique aims to group the vertices of G into a set of blocks denoted by $B_G$. In turn, a pruning criterion is applied to remove entity pairs with low similarities, resulting in a pruned graph $G'$. For pruning, a criterion $\vartheta$ is applied to G (i.e., $G' = \vartheta(G)$) so that the triples with $w < \Phi$ are removed, generating the pruned graph $G' = (V', L')$. Thus, the vertices of $G'$ are grouped into a set of blocks denoted by $B_{G'}$, such that every $b \in B_{G'}$ satisfies $b \subseteq V'$ and $w(v_i, v_k) \geq \Phi$ for all linked vertices $v_i, v_k \in b$, where $\Phi$ is a similarity threshold defined by the pruning criterion $\vartheta$. Section 4.2.3 presents how a pruning criterion determines the value of $\Phi$ in practice.
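The pruning step can be illustrated with a small sketch. Since the concrete criterion is only presented in Section 4.2.3, this example assumes, when no threshold is given, the mean edge weight as the threshold, in the spirit of the weighted edge pruning scheme known from metablocking. Blocks are then formed by grouping each vertex with its remaining neighbors; function names are hypothetical.

```python
from statistics import mean


def prune_graph(edges, threshold=None):
    """Remove triples (v_i, v_k, w) whose weight is below the threshold.

    If no threshold is given, the mean edge weight is used (an assumption
    for this sketch, mirroring weighted edge pruning in metablocking).
    """
    if not edges:
        return []
    phi = mean(w for _, _, w in edges) if threshold is None else threshold
    return [(vi, vk, w) for vi, vk, w in edges if w >= phi]


def blocks_from_pruned(edges):
    """Group each vertex with its remaining neighbors into one block."""
    blocks = {}
    for vi, vk, _ in edges:
        blocks.setdefault(vi, {vi}).add(vk)
    return list(blocks.values())


edges = [("a", "x", 0.9), ("a", "y", 0.1), ("b", "y", 0.6)]
pruned = prune_graph(edges)  # the mean weight is about 0.53, so ("a", "y") is removed
print(pruned)                # [('a', 'x', 0.9), ('b', 'y', 0.6)]
```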

However, when blocking techniques deal with streaming and incremental challenges, other theoretical aspects need to be considered. Intuitively, each data increment also affects G. Thus, we denote the increments over G by $\Delta G = \{\Delta G_1, \ldots, \Delta G_{|I|}\}$, the set of data increments on G. Each $\Delta G_i$ is directly associated with an entity collection $I_i$, which represents the entities in the increment i. The computation of $B_{G'}$ for each $\Delta G_i$ is performed on a parallel distributed computing infrastructure composed of multiple nodes (e.g., computers or virtual machines). Note that to compute $B_{G'}$ for a specific $\Delta G_i$, the previous increments $\Delta G_1, \ldots, \Delta G_{i-1}$ are considered, following the incremental behavior of G. In this context, $N = \{n_1, \ldots, n_{|N|}\}$ denotes the set of nodes used to compute $B_{G'}$. The execution time using a single node is denoted by $T_1(\Delta G_i)$, whereas the execution time using the whole computing infrastructure N is denoted by $T_N(\Delta G_i)$.

Execution time for parallel blocking. Since blocking is performed in parallel over the infrastructure N, the overall execution time for a specific increment $\Delta G_i$ is given by the execution time of the node that takes the longest: $T_N(\Delta G_i) = \max_{n \in N} T_n(\Delta G_i)$, where $T_n(\Delta G_i)$ is the execution time of node n.

Time restriction for streaming blocking. Considering the streaming behavior, where one increment arrives in each time interval, the execution time to process each increment must be restricted as follows: $T_N(\Delta G_i) \leq \Delta$.

This restriction prevents the blocking execution time from exceeding the time interval between data increments. Note that the time restriction is related to the streaming behavior, where data are produced continuously. To satisfy this restriction, blocking must be performed as quickly as possible. As stated previously, one possible solution to minimize the execution time of the blocking step is to execute it in parallel over a distributed infrastructure.

Blocking efficiency in distributed environments. Given the data increments $\Delta G$, the infrastructure N, and the remaining inputs defined above, the blocking task aims to generate $B_{G'}$ over the distributed infrastructure N in an efficient way. To enhance efficiency, the blocking task needs to minimize $T_N(\Delta G_i)$. For instance, consider a distributed infrastructure with $|N| = 10$ nodes and 10 s as the time required to perform the blocking task using only one node (i.e., $T_1(\Delta G_i) = 10$ s); the ideal execution time using all nodes (i.e., $T_N(\Delta G_i)$) is then one second. Thus, ideally, the best minimization is $T_N(\Delta G_i) = T_1(\Delta G_i) / |N|$. Due to the overhead required to distribute the data among the nodes and the communication delay between them, $T_N(\Delta G_i) = T_1(\Delta G_i) / |N|$ is practically unreachable. Nevertheless, minimizing $T_N(\Delta G_i)$ is important to provide efficiency to the blocking task.
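The three timing notions above (the slowest node determines the parallel execution time, the per-increment time restriction, and the ideal single-node-time-over-node-count bound) can be checked with a few lines of arithmetic. The node times in the example are illustrative values, not measurements, and the function names are hypothetical.

```python
def parallel_time(node_times):
    """Parallel execution time of an increment: the slowest node dominates."""
    return max(node_times.values())


def meets_time_restriction(node_times, delta):
    """Streaming restriction: the parallel time must not exceed the interval."""
    return parallel_time(node_times) <= delta


def ideal_parallel_time(single_node_time, num_nodes):
    """Lower bound T_1 / |N|, ignoring distribution and communication overhead."""
    return single_node_time / num_nodes


print(ideal_parallel_time(10, 10))  # 1.0, the example from the text
node_times = {"n1": 1.4, "n2": 2.1, "n3": 1.7}  # illustrative seconds
print(parallel_time(node_times))               # 2.1
print(meets_time_restriction(node_times, 30))  # True for a 30 s interval
```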

Table 1 highlights the goals regarding the stated problems, which are exploited throughout this paper. Furthermore, Table 2 summarizes the symbols used throughout the paper.

Table 1.

Summary of the problem statement.

Table 2.

Summary of symbols.

3. Related Works

According to the taxonomy proposed in [5], blocking techniques employed in the ER task are classified into two broad categories: schema-aware (e.g., canopy clustering [25,26], sorted neighborhood [27,28], and adaptive window [29]) and schema-agnostic (e.g., token blocking [30,31], metablocking [21,22], attribute clustering blocking [8], and attribute-match induction [11,20,32]). Concerning the execution model, several schema-agnostic blocking techniques have been proposed to deal with heterogeneous data in standalone [20,31,33,34] and parallel [11,16,22,35] modes. In this sense, the research focus of this work is to explore open topics related to schema-agnostic blocking techniques.

3.1. Blocking for Heterogeneous Data

In the context of heterogeneous data, entities rarely follow the same schema. For this reason, block generation (in blocking techniques) is compromised. Therefore, traditional blocking techniques (e.g., sorted neighborhood [27] and adaptive window [29]) do not present satisfactory effectiveness, since their blocking is based on the entity profile schema [33]. In this sense, the challenges inherent in heterogeneous data are addressed by schema-agnostic blocking techniques, which ignore schema-related information and consider the attribute values of each entity [5].

Among the existing schema-agnostic blocking techniques, metablocking has emerged as one of the most promising regarding efficiency and effectiveness [33]. Metablocking aims to identify the closest pairs of entity profiles by restructuring a given set of blocks into a new one that involves significantly fewer comparisons. To this end, the technique represents the blocks as a weighted graph, and pruning criteria are applied to remove edges with weights below a threshold, aiming to discard comparisons between entities with few chances of being considered a match. Originally, the authors in [21] named the pruning step metablocking, which receives a redundancy-positive set of blocks and generates a new set of pruned blocks. However, to avoid misunderstanding, in this work, we use metablocking to refer to the blocking technique as a whole and pruning to refer to the step responsible for pruning the blocking graph. Recently, some works [16,22] have proposed parallel-based blocking techniques to increase the efficiency of metablocking. However, these state-of-the-art techniques (either standalone or parallel-based) do not work properly in scenarios involving incremental and streaming data, since they were developed to work with batch data [6].

3.2. Blocking in the Noisy-Data Context

To address the problem of noisy data, three strategies are commonly applied: n-gram algorithms, Natural Language Processing (NLP), and Locality-Sensitive Hashing (LSH) [36,37]. In the context of token-based blocking techniques, n-gram strategies negatively affect efficiency, since n-gram algorithms increase the number of tokens and, consequently, the number of blocks managed by the blocking technique. Regarding NLP, word vectors and dictionaries can be applied to fix misspelled words and recover the correct tokens. However, NLP also negatively affects the efficiency of the blocking task as a whole, since the word vector must be consulted for each token. Thus, among the possible strategies to handle noisy data, LSH has shown the most promising results [20,32,38].

Recently, the BLAST technique [20] has applied LSH to determine the linkages between the attributes of two large data sources in order to address efficiency issues. However, the BLAST technique does not treat noisy data as a contribution; rather, it introduces the application of LSH in blocking techniques as a factor for improving effectiveness. More specifically, this technique reduces the dimensionality of data through the application of LSH and guides the blocking task to enhance the effectiveness results. Following the BLAST idea, the work in [32] applies LSH to hash the attribute values and enable the generation of high-quality blocks (i.e., blocks that contain a significant number of entities with high chances of being considered similar/matches), even in the presence of noise in the attribute values. In [38], the Locality-Sensitive Blocking (LSB) strategy is proposed. LSB applies LSH to standard blocking techniques in order to group similar entities without requiring the selection of blocking keys. To this end, LSH generates hash values that guide the blocking, which increases the robustness toward blocking parameters and data noise.
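To illustrate why LSH tolerates typos, the sketch below computes MinHash signatures over character bigrams of a token and splits them into bands, as in classic LSH banding. It is a generic MinHash sketch under our own assumptions (bigram shingles with boundary padding, 64 hash functions emulated by seeding blake2b, 16 bands), not the specific scheme of [20,32,38] or of the proposed technique. A misspelled token keeps most of its bigrams, so its signature agrees with the correct token's signature in many positions, and tokens that share any band key would fall into the same bucket.

```python
import hashlib


def shingles(token, k=2):
    """Character k-grams of a token; '#' padding marks the word boundaries."""
    padded = f"#{token}#"
    return {padded[i:i + k] for i in range(len(padded) - k + 1)}


def _seeded_hash(seed, gram):
    """A 64-bit hash of `gram`; different seeds emulate different hash functions."""
    digest = hashlib.blake2b(f"{seed}:{gram}".encode(), digest_size=8).digest()
    return int.from_bytes(digest, "big")


def minhash_signature(token, num_hashes=64, k=2):
    """MinHash signature: for each seeded hash function, the minimum over shingles."""
    grams = shingles(token, k)
    return [min(_seeded_hash(seed, g) for g in grams) for seed in range(num_hashes)]


def estimated_similarity(sig_a, sig_b):
    """Fraction of agreeing positions, an estimate of the shingle-set Jaccard."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def band_keys(signature, bands=16):
    """Split the signature into bands; tokens sharing any band key collide."""
    rows = len(signature) // bands
    return {(b, tuple(signature[b * rows:(b + 1) * rows])) for b in range(bands)}


sig = minhash_signature("smartphone")
print(estimated_similarity(sig, minhash_signature("smarphone")))  # close to 0.75
print(estimated_similarity(sig, minhash_signature("keyboard")))   # close to 0.05
```

The exact Jaccard similarity between the bigram sets of "smartphone" and the misspelled "smarphone" is 9/12 = 0.75, versus 1/19 for the unrelated "keyboard"; the printed estimates approximate these values.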

In contrast to the previously mentioned works, our work not only focuses on noisy data but also allows the proposed incremental blocking technique to handle streaming and noisy data simultaneously. Although the works in [20,32,38] do not explore aspects such as incremental processing or streaming data, the idea behind the application of LSH to minimize the negative effects of noisy data on the blocking techniques can also be applied to the proposed technique to expand its applications. Therefore, this work adapts the application of LSH (in blocking techniques) to the contexts of distributed computing, incremental processing, and streaming data.

3.3. Blocking Benefiting from Schema Information

Schema information can benefit matching tasks by enhancing efficiency without compromising effectiveness. Works such as [39,40] suggest the application of strategies able to identify the functional dependencies (FDs) among the attributes of a schema to support data quality and data integration tasks. FDs are constraints that determine the relationship between one attribute and another in a schema. Therefore, these relations can guide matching tasks to compare only the values of similar attributes, as determined by the FDs. Regarding ER, works such as [41,42] propose extracting information from relational schemas (i.e., rigid schemas) based on matching dependencies, which are extensions of FDs. In this sense, the idea is to select matching dependencies as rules that determine the functional dependencies among attributes, where attribute values do not need to be exactly equal but only similar. Thus, the idea behind matching dependencies is to efficiently select the best subset of rules (i.e., dependencies among attributes) to support the ER task. However, this strategy considers neither data heterogeneity, where data do not follow a rigid schema, nor the streaming context, which demands processing entities within a time budget. In particular, the approach proposed in [42] can take several minutes to complete, even for data sources with a few thousand entities.

For contexts involving blocking and heterogeneous data, we can identify the works in [11,20,31,32], which propose blocking techniques that benefit from information related to entity attributes. In the attribute-based blocking in [31], before the blocking step, there is an initial grouping of attributes that is generated based on the similarities of their values. External sources such as a thesaurus can be consulted to determine similar attributes. Similarly, the idea behind [11,20,32] is to exploit the loose schema information (i.e., statistics collected directly from the data) to enhance the quality of blocks. Then, rather than looking for a common token regardless of the attribute to which it belongs, entity descriptions are compared only based on the values of similar attributes. Hence, comparisons of descriptions that do not share a common token in a similar attribute are discarded. To summarize, the main idea is to exploit the schema information of the entity descriptions in order to minimize the number of false matches.

On the other hand, the attribute selection algorithm proposed in the present work identifies and discards, before the token blocking step, superfluous attributes, which would otherwise provide useless tokens that unnecessarily consume memory. Note that attribute selection aims to minimize the number of false-positive matches and reduce resource consumption. Therefore, even though the related works extract information from the attributes, they do not use this information to discard superfluous attributes; their idea is to perform a kind of attribute clustering to guide block generation. Furthermore, the stated works deal with neither streaming nor incremental data, whose challenges are addressed in our work.

3.4. Incremental Blocking

In terms of incremental blocking techniques for relational data sources, the authors of [9,43,44,45] propose approaches that are capable of blocking entities incrementally. These works propose an incremental workflow for the blocking task, considering the evolutionary behavior of data sources to perform the blocking. The main idea is to avoid (re)processing the entire dataset during incremental ER to update the deduplication results. To do so, different classification techniques can be employed to identify duplicate entities. Therefore, these works propose new metrics for incremental record linkage using collective classification and new heuristics (which combine clustering, coverage component filters, and a greedy approach) to further speed up incremental record linkage. However, the stated works do not deal with heterogeneous and streaming data.

In terms of other incremental tasks that propose useful strategies for ER, we can identify the works in [14,46,47], which present parallel and incremental models to address the tasks of name disambiguation, event processing, and dynamic graph processing, respectively. More specifically, in the ER context, the work in [48] proposes an incremental approach to perform ER on social media data sources. Although such sources commonly provide heterogeneous data, the work in [48] generates an intermediate schema so that the extracted data from the sources follow this schema. Therefore, although the data are originally semi-structured, they are converted to a structured format before being sent to the ER task. For this reason, the approach in [48] differs from our technique since it does not consider the heterogeneous data challenges and does not apply/propose blocking techniques to support ER.

3.5. Blocking in the Streaming Data Context

Streaming data commonly add several challenges, not only in the context of ER. The works in [49,50], which address clustering and itemset frequencies, highlight the necessity of developing strategies to continuously process data sent in short intervals of time. Even if the works in [49,50] do not consider other scenarios, such as heterogeneous data and incremental processes, both suggest the need to develop an appropriate architecture to deal with streaming data, which is discussed in Section 4.

Regarding ER approaches that deal with streaming data, we can highlight the works in [2,10,51]. These works propose workflows to receive data from streaming sources and perform the ER task. In these workflows, the authors suggest the application of time windows to discard old data and, consequently, avoid the growing consumption of resources (e.g., memory and CPU). On the other hand, it is important to highlight that these works apply time windows only in the sense of determining the entities to be processed together. Hence, they do not consider incremental processing and, therefore, discard the previously processed data. Thus, none of them deal simultaneously with the three challenges (i.e., heterogeneous data, streaming data, and incremental processing) addressed by our work.
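The time-window behavior attributed to these works can be sketched as follows; the class is hypothetical and assumes entities arrive in timestamp order. Entities older than the window are evicted and never compared with later arrivals, which bounds memory but, unlike incremental processing, loses the previously processed data.

```python
from collections import deque


class TimeWindow:
    """Keep only entities that arrived within the last `size` seconds (hypothetical helper)."""

    def __init__(self, size):
        self.size = size
        self.items = deque()  # (arrival_time, entity), in arrival order

    def add(self, arrival_time, entity):
        self.items.append((arrival_time, entity))
        self.evict(arrival_time)

    def evict(self, now):
        # discard entities that fell out of the window; they are never processed again
        while self.items and self.items[0][0] <= now - self.size:
            self.items.popleft()

    def entities(self):
        return [entity for _, entity in self.items]


window = TimeWindow(60)
for t, e in [(0, "e1"), (30, "e2"), (70, "e3")]:
    window.add(t, e)
print(window.entities())  # ['e2', 'e3']
```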

In the ER context, we can highlight only the recent work proposed in [19], which addresses challenges related to streaming data and incremental processing. The authors of [19] propose a Spark-based blocking technique to handle heterogeneous streaming data. As previously stated, the present research is an evolution of the work in [19]. Overall, it is possible to highlight the following improvements: (i) an efficient workflow that addresses the memory consumption problems present in [19], which decrease the technique’s efficiency as well as its ability to process large amounts of data; (ii) an attribute selection algorithm, which discards superfluous attributes to enhance efficiency and minimize memory consumption; (iii) a top-n neighborhood strategy, which maintains only the “n” most similar neighbor entities of each entity; (iv) a noise-tolerant algorithm, which allows the proposed technique to generate high-quality blocks, even in the presence of noisy data; and (v) a parallel architecture for blocking streaming data, which divides the blocking process between two components (sender and blocking task) to enhance efficiency.

Based on the related works cited in this section (summarized in Table 3) and information provided by several other works [5,52,53], it is possible to identify a lack of works in different areas that address the challenges related to streaming data and incremental processing efficiently. The same applies to ER approaches to heterogeneous data [19]. In this sense, our work addresses an open research area, and it can be a useful schema-agnostic blocking technique for supporting ER approaches in scenarios involving not only streaming data and incremental processing but also noisy data.

Table 3.

Related work comparison. Note: “X” means that the work addresses the research topic, and “-” means that it does not.

4. Incremental Blocking over Streaming Data

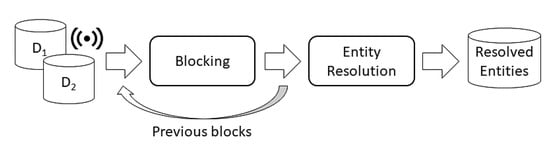

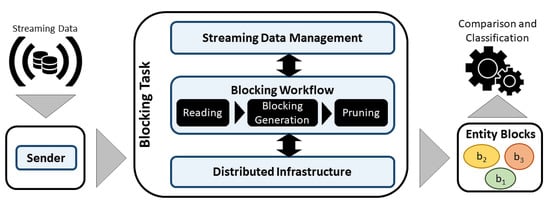

Figure 1 illustrates the traditional steps involved in an ER process. However, this workflow needs to be redesigned to meet the needs of the context addressed in this work. The focus of this work is the blocking step; therefore, this section provides only limited information about the data processing step. When streaming data are considered, the traditional ER workflow should include a pre-step responsible for organizing the micro-batches to be processed. For this reason, the sender component was inserted into the architecture for blocking streaming data, as depicted in Figure 2. Note that since streaming data are constantly sent, the ER steps should be performed within a time budget (as defined in Section 2). This behavior creates a challenge for the workflow, which needs to be performed as fast as possible. To address this challenge, the top-n neighborhood and attribute selection strategies were proposed to enhance blocking efficiency without a negative impact on effectiveness.

Figure 1.

ER workflow considering the streaming data and incremental context.

Figure 2.

Parallel architecture for blocking streaming data.

Regarding incremental blocking, the idea is to consider the incoming streaming data and generate incremental blocks during a time window. Therefore, the traditional ER workflow should be updated to receive as input not only the data from the sources but also the blocks generated in the previous increments. In the first increment, the received entities are blocked as in the traditional ER workflow. From the second increment onward, the received entities are blocked and merged with the previously generated blocks, updating them. To handle this behavior, we propose an incremental architecture able to store the generated blocks and update them as new entities arrive.

Since the data can contain noise, which commonly reduces blocking effectiveness, we also propose a noise-tolerant algorithm to be applied in the ER workflow. The noise-tolerant algorithm builds on the idea behind LSH to generate high-quality blocks, even in the presence of noisy data. In this sense, the sender component (see Figure 2) applies this algorithm to the data in order to generate hash values instead of tokens. Thus, the blocking step generates blocks using hash values as blocking keys, as explained later in this section.

Throughout this section, we propose a parallel-based blocking technique able to incrementally process streaming data. Furthermore, we describe a parallel architecture, which hosts the components necessary to perform the blocking of streaming data, as well as clarify how the proposed technique is coupled to the architecture.

4.1. Parallel Architecture for Blocking Streaming Data

The architecture is divided into two components, the sender and the blocking task, as depicted in Figure 2. The sender component, which is executed in standalone mode, consumes the data provided by the data sources in a streaming fashion, buffers them in micro-batches, and sends them to the blocking task component. Note that buffering streaming data in micro-batches is a common strategy for processing streaming data [47,54,55], even in critical scenarios where data arrive continuously. Thus, it is possible to follow the time interval and respect the time restriction for streaming blocking, as defined in Section 2. In the blocking task component, blocking is performed over a parallel infrastructure. The two components are connected through a streaming platform, namely Apache Kafka.
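The sender's buffering behavior can be sketched as follows. This is a minimal illustration, not the authors' implementation. It assumes that events arrive as time-ordered `(timestamp, entity)` tuples and groups them into fixed-length micro-batches. Publishing each batch to Kafka is left out, and the function name `micro_batches` is hypothetical.

```python
from typing import Any, Iterable, Iterator, List, Optional, Tuple


def micro_batches(events: Iterable[Tuple[float, Any]], interval: float) -> Iterator[List[Any]]:
    """Group time-ordered (timestamp, entity) events into micro-batches of `interval` seconds."""
    batch: List[Any] = []
    window_end: Optional[float] = None
    for ts, entity in events:
        if window_end is None:
            window_end = ts + interval  # the first event opens the first micro-batch
        # Close the current micro-batch (and skip empty intervals) once an event falls past it.
        while ts >= window_end:
            if batch:
                yield batch
                batch = []
            window_end += interval
        batch.append(entity)
    if batch:
        yield batch  # flush whatever is left when the stream ends
```

With a one-second interval, events at 0.0, 0.5, 1.2, and 3.1 seconds produce three micro-batches: the first two events together, then the third, then the fourth. In the described architecture, each yielded batch would be the unit sent to the blocking task component.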

The blocking task component is divided into three layers: streaming data management, the blocking workflow, and the distributed infrastructure. The first layer is responsible for continuously receiving and manipulating the streaming data: the data (i.e., entities) provided by the sender are collected there and forwarded to the blocking workflow layer, where they are blocked. The proposed blocking technique is coupled to the architecture in this layer. Note that other parallel-based blocking techniques can also be plugged into the blocking workflow layer. The blocking layer is directly connected to the distributed infrastructure, which provides all the distributed resources (such as MapReduce engines and virtual machines) needed to execute the proposed streaming blocking technique. Finally, after blocking, the generated blocks are sent to the next steps of the ER task (i.e., comparison and classification of entity pairs). Note that the scope of this paper is limited to the blocking step.

When noisy data are considered, the noise-tolerant algorithm is applied. The algorithm is hosted by the sender component. Thus, as the data arrive in a streaming fashion, the noise-tolerant algorithm converts the tokens present in each entity (taken from its attribute values) into hash values. To this end, the noise-tolerant algorithm applies Locality-Sensitive Hashing (LSH) to the tokens of the entities in order to mitigate the issues caused by noisy data [36]. In general, LSH is used for approximate near-neighbor search in high-dimensional spaces [56]. It can be applied to reduce the dimensionality of a high-dimensional space while preserving the similarity distances of the tokens to be evaluated. Thus, for each attribute value, a hash function (e.g., MinHash [56]) converts the attribute value into a probability vector called a signature (MinHash signature). Then, the arriving entities are buffered in micro-batches and sent to the blocking task component. Note that the noise-tolerant algorithm runs in the order of milliseconds per entity, which does not negatively impact the efficiency of blocking as a whole, as addressed in Section 7 and Section 8.

Since the hash function preserves the similarity of the attribute values, this behavior can be exploited to obtain similar hash signatures for similar attribute values, even in the presence of noise [56]. The hash function can thus generate similar signatures that will be used to guide the blocking task. To clarify how the noise-tolerant algorithm works within the proposed technique, consider the following running example. Given two entities from two different datasets that represent the same real-world entity, suppose that the second entity contains a typo. Without the noise-tolerant algorithm, the tokens used to block the entities will be “Linus” and “Torvalds” from the first entity and “Lynus” and “Torvalds” from the second. Note that token-based blocking cannot use the first name to group the two entities in the same block, since “Linus” and “Lynus” are different tokens; the typo therefore removes a blocking key that the entities would otherwise share and weakens the evidence that they match. On the other hand, with the noise-tolerant algorithm, the attribute values of both entities are converted into probability vectors (hash signatures) with the same size as their token sets. After this conversion, both entities (with their hash signatures) are sent to the blocking task component. Since the hash function preserves the similarity of the attribute values, it is common to obtain the same hash value even in the presence of noise in the data (as occurs with the tokens “Linus” and “Lynus”). In this sense, instead of considering tokens during the blocking task, the noise-tolerant algorithm allows the proposed technique to use the hash signatures as blocking keys. Therefore, entities that share a common hash value (in their hash signatures) are grouped in the same block. For this reason, the two entities will be grouped in the same block due to the shared hash value “8709973”.
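The sketch below gives a rough idea of how a token-level MinHash could turn an attribute value into a hash signature with one value per token. It is an assumption, not the paper's algorithm. It hashes each token's character bigrams with a seeded hash function (MD5, truncated to 32 bits, stands in for a random hash function) and keeps the minimum.

Collisions are probabilistic. With a single hash function, “Linus” and “Lynus” (whose bigram sets have Jaccard similarity 2/6) share a value with probability of roughly 1/3. Using several seeds per token would raise that probability, at the cost of larger signatures. All function names are hypothetical.

```python
import hashlib
from typing import List, Set


def char_ngrams(token: str, n: int = 2) -> Set[str]:
    """Character n-grams of a lowercased token (the token itself if it is too short)."""
    t = token.lower()
    if len(t) <= n:
        return {t}
    return {t[i:i + n] for i in range(len(t) - n + 1)}


def seeded_hash(seed: int, gram: str) -> int:
    # Deterministic stand-in for a random hash function: MD5 truncated to 32 bits.
    digest = hashlib.md5(f"{seed}:{gram}".encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big")


def token_minhash(token: str, seed: int = 0, n: int = 2) -> int:
    # MinHash over the token's n-grams: two spellings share this value with probability
    # (approximately) equal to the Jaccard similarity of their n-gram sets.
    return min(seeded_hash(seed, g) for g in char_ngrams(token, n))


def hash_signature(value: str, seed: int = 0, n: int = 2) -> List[int]:
    # One hash value per distinct token, so the signature has the same size as the token set.
    tokens = list(dict.fromkeys(value.lower().split()))
    return [token_minhash(tok, seed, n) for tok in tokens]
```

For example, `hash_signature("Linus Torvalds")` and `hash_signature("Lynus Torvalds")` always agree on the second position (identical tokens), while the first positions agree only when the minimum happens to fall on a shared bigram such as “nu” or “us”.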

4.2. Incremental Blocking Technique for Streaming Data

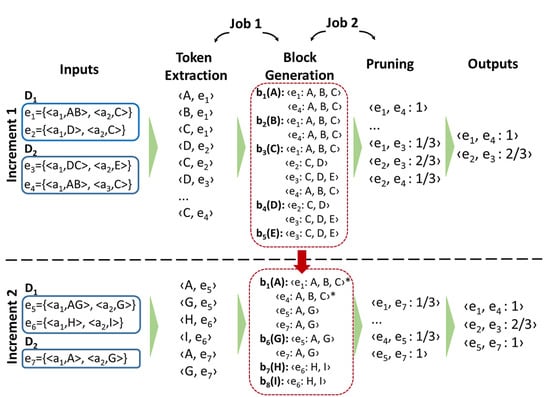

To address the challenges previously stated, the proposed blocking technique is based on a MapReduce workflow. The workflow is composed of two MapReduce jobs (Figure 3), i.e., one fewer job than the method available in [22] (further details can be found in the token extraction step below). In addition, the proposed workflow does not negatively affect the effectiveness results since the block generation is not modified. The workflow is divided into three steps as depicted in Figure 3: token extraction, blocking generation, and pruning. It is important to highlight that for each increment, the three blocking steps are performed following the workflow depicted in Figure 3.

Figure 3.

Workflow for the streaming blocking technique.

4.2.1. Token Extraction Step

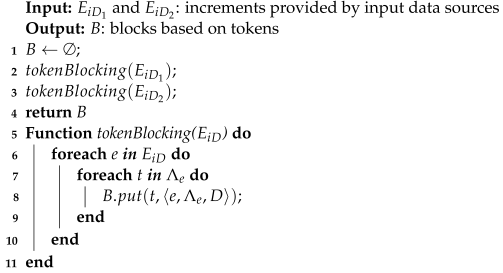

This step is responsible for extracting the tokens from the attribute values of the input entities, where each token will be used as a blocking key, as illustrated in Algorithm 1. Technically, the token extraction step is a map function implemented with Flink's flatMap transformation. Thus, each entity generates a set of key–value pairs, where the key is a token (blocking key) and the value is the entity in question.

Algorithm 1: Token Extraction

For each increment i, the blocking technique receives a pair of entity collections provided by two data sources (lines 2 and 3). For each entity e (lines 6 to 10), all tokens associated with e are extracted and stored in the token set of e. Each token in this set will be a blocking key that determines a specific block, and the token set will be used to determine the similarity between the entities in the next step. Similarly, when noisy data are present, the token set is replaced with the hash signature of e. Note that, as stated, the hash signature has the same size as the token set. Then, each hash value in the signature will be a blocking key that determines a specific block, and the signature will be used to determine the similarity between the entities in the next step. From now on, to facilitate understanding, we will consider only token sets; note that the hash values in a signature work in the same way as the tokens in a token set.

It is important to highlight that, from this step on, every entity e carries information about its tokens (blocking keys) and the data source D it comes from (lines 7 and 9). Because the blocking keys are stored in each entity during the token extraction step, it is possible to avoid one MapReduce job compared with the workflow proposed in [22]. In [22], the workflow applies an extra job to process the entities in each block and determine all the blocks that contain each entity; in other words, an extra job is used to compose the set of blocks associated with each entity. In contrast, our blocking technique determines this set in the token extraction step and propagates it to the next steps, avoiding the need for another MapReduce job. Note that although the work in [22] presents various strategies for parallelizing each pruning algorithm of metablocking, the avoided MapReduce job is related to the token extraction step (i.e., before the pruning step). Thus, the proposed workflow tends to enhance the efficiency of blocking as a whole.
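The token extraction step can be illustrated with a small Python stand-in for the flatMap. This sketch assumes whitespace tokenization of lowercased attribute values and uses a hypothetical `Entity` record. It shows how each emitted key–value pair carries the entity together with its full set of blocking keys and its data source. Carrying this set is what allows the workflow to skip the extra job of [22].

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Iterator, Tuple


@dataclass(frozen=True)
class Entity:
    eid: str
    source: str               # data source the entity comes from, e.g. "D1"
    keys: FrozenSet[str]      # all blocking keys of the entity, propagated to later steps


def tokenize(attributes: Dict[str, str]) -> FrozenSet[str]:
    # Schema-agnostic: tokens come from every attribute value; attribute names are ignored.
    return frozenset(tok for value in attributes.values() for tok in value.lower().split())


def extract_tokens(eid: str, source: str, attributes: Dict[str, str]) -> Iterator[Tuple[str, Entity]]:
    """flatMap-style step: emit one (blocking key, entity) pair per distinct token."""
    entity = Entity(eid, source, tokenize(attributes))
    for key in sorted(entity.keys):
        yield key, entity
```

For an entity with the attribute values “Linus”, “Torvalds”, and “Linux”, this emits three pairs keyed by `linus`, `linux`, and `torvalds`. Each pair holds the same `Entity`, whose `keys` field already lists all three blocks it belongs to.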

The time complexity of the token extraction step is directly related to the generation of the blocking keys. This step evaluates all tokens of each entity to generate the blocking keys. Therefore, the time complexity of this step is linear in the total number of tokens processed, i.e., in the number of entities in the increment multiplied by the number of tokens per entity. The following lemma guarantees that the produced blocking keys are the same as those produced by metablocking.

Lemma 1.

Considering the same entity collection as input, if the proposed technique and metablocking apply the same function to extract tokens from attribute values, then both techniques will produce the same set of blocking keys.

Proof.

In the token extraction step, the proposed technique receives the entity collection as input. During this step, the entities of the collection are processed to extract tokens by applying the token extraction function. For each entity e, a set of blocking keys is produced by applying this function to the attribute values v of e. If metablocking receives the same entity collection and applies the same function to the attribute values v of each entity, it will produce the same tokens and, consequently, the same blocking keys, even though the parallel workflows differ. □

In Figure 3, there are two different increments (i.e., Increment 1 and Increment 2) containing the entities that will be processed by the blocking technique. In the first increment (top of the figure), each of the two data sources provides two entities. From the first entity, the tokens A, B, and C are extracted. To clarify our example, this entity can be represented by the attribute values “Linus”, “Torvalds”, and “Linux”; thus, the tokens A, B, and C represent these three values, respectively. Since the generated tokens work as blocking keys, the entities are arranged in the format ⟨k, e⟩, where k represents a specific blocking key and e represents an entity linked to the key k.

4.2.2. Blocking Generation Step

In this step, the blocks are created, and a weighted graph is generated from the blocks in order to define the level of similarity between entities, as described in Algorithm 2. From the key–value pairs generated in the token extraction step, the values (i.e., entities) are grouped by key (i.e., blocking key) to generate the entity blocks. The entity blocks are generated through Flink's groupBy function. Note that the first MapReduce job, which started in the token extraction step, concludes with the groupBy function. Then, during the blocking generation step, a second MapReduce job starts. To compute the similarity between the entities, a flatMap function generates entity pairs, together with their similarity values, which will be processed in the pruning step.

Algorithm 2: Blocking Generation

Initially, each entity is paired with a blocking key and carries its set of blocking keys, which implicitly denotes the blocks that contain entity e. In scenarios involving noisy data, each entity carries its hash signature instead, which likewise denotes the blocks that contain e. Based on the blocking keys (i.e., tokens or hash values), all entities sharing the same key are grouped in the same block. Then, a set of blocks B is generated such that each block is linked with a key k and all of its entities share (at least) the key k (lines 2 to 14). For instance, in Figure 3, the block related to key A contains the two entities that share key A. The set of blocks B generated in this step is stored in memory to be used in the next increments. In this sense, new blocks will be added to, or merged with, the previously stored blocks.
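The grouping that closes the first MapReduce job can be mimicked in plain Python. This is a hypothetical stand-in for Flink's groupBy, shown only to make the data flow concrete. In the distributed setting, the pairs would instead be partitioned by key across workers.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, List, Tuple


def group_by_key(pairs: Iterable[Tuple[str, Hashable]]) -> Dict[str, List[Hashable]]:
    """Group (blocking key, entity) pairs of one increment into blocks, like a groupBy on the key."""
    blocks: Dict[str, List[Hashable]] = defaultdict(list)
    for key, entity in pairs:
        blocks[key].append(entity)  # every entity sharing key k lands in the block of k
    return dict(blocks)
```

For instance, the pairs `("a", "e1")`, `("b", "e1")`, and `("a", "e3")` yield a block for key `a` holding both entities and a block for key `b` holding only `e1`.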

Afterward, entities stored in the same block are compared (lines 3 to 13). Entities provided by different data sources (line 7) are compared to define the similarity between them (line 8). The similarity is defined based on the number of blocks in which the two entities co-occur. Note that co-occurring blocks are a well-known basis for defining similarity between entities in scenarios involving token-based blocking; the works in [21,57,58] formalize and describe the effectiveness of defining entity similarity based on the number and frequency of common blocks. After the similarity between the entities is defined, the entity pairs are inserted into the graph G (lines 9 and 10) such that the weight of the edge linking two entities is given by their similarity (computed in lines 16 to 18). The blocking generation step is the most computationally costly step of the workflow, since the entities are compared in this step. Its time complexity is given by the sum of the costs of comparing the entity pairs in each block b ∈ B, i.e., $O\left(\sum_{b \in B} \|b\|\right)$, where $\|b\|$ is the number of comparisons to be performed in b. Furthermore, the following lemma formally states the conditions under which the graphs generated by our technique and by metablocking are the same.
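The co-occurrence-based similarity can be sketched as follows. As an assumption, the sketch uses the Jaccard scheme over the entities' block sets (common blocks divided by the union of their blocks), which is one of the weighting schemes used in metablocking. This scheme yields 1 for two entities that co-occur in all of their blocks, as in the Figure 3 example, but the paper's exact function Φ may differ. The tuple layout of the entities is hypothetical.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Tuple

# Hypothetical entity layout: (entity id, data source, set of blocking keys).
Entity = Tuple[str, str, FrozenSet[str]]


def jaccard_blocks(a: Entity, b: Entity) -> float:
    """Common blocks of a and b divided by all blocks of a and b."""
    return len(a[2] & b[2]) / len(a[2] | b[2])


def build_graph(blocks: Dict[str, List[Entity]]) -> Dict[Tuple[str, str], float]:
    """Weighted blocking graph: one edge per cross-source pair that co-occurs in some block."""
    graph: Dict[Tuple[str, str], float] = {}
    for members in blocks.values():
        for a, b in combinations(members, 2):
            if a[1] == b[1]:
                continue  # only entities from different data sources are compared
            edge = (a[0], b[0]) if a[0] < b[0] else (b[0], a[0])
            # A pair seen in several blocks is recomputed here; MaCoBI avoids that redundancy.
            graph[edge] = jaccard_blocks(a, b)
    return graph
```

Two entities whose block sets are identical get weight 1.0, while an entity pair that shares one of four distinct blocks gets 0.25.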

Lemma 2.

Assuming that the proposed technique and metablocking apply the same similarity function Φ to the same set of blocks B (derived from the same blocking keys), both techniques will produce the same set of vertices X and edges L. Therefore, since the blocking graph G is composed of vertices (i.e., X) and edges (i.e., L), the techniques will generate the same graph G.

Proof.

During the blocking generation step, the set of blocks B (based on the blocking keys) is given as input. The goal of this step is to generate the graph G(X, L). To this end, each entity contained in a block of B is mapped to a vertex in X. Thus, if metablocking receives the same set of blocks B, it will generate the same set of vertices X. To generate the edges in L, the similarity function Φ is applied to each pair of co-occurring vertices to determine their similarity value, which becomes the edge weight. Therefore, assuming that metablocking applies the same Φ to the vertices of X, the set of edges L produced by the proposed technique will be the same. Since both techniques produce the same sets X and L, the generated blocking graph G will be identical, even though they apply different workflows. □

In Figure 3, the block associated with key A contains the two entities from the first increment that share key A. Therefore, these entities must be compared to determine their similarity. Their similarity is one, since they co-occur in all blocks in which each of them is contained. In the second increment (bottom of the figure), two new entities that also contain token A are added to this block. Thus, in the second increment, the block associated with key A contains four entities, since all of them share token A. For this reason, these four entities must be compared with each other to determine their similarity. However, since the two entities from the first increment were already compared, they should not be compared twice; doing so would be an unnecessary comparison.

Technically, the strategies used to avoid redundant comparisons are conditional restrictions implemented in the flatMap function of the blocking generation step. During incremental blocking, three types of unnecessary comparisons should be avoided. First, due to block overlapping, an entity pair can be compared in several blocks (i.e., more than once). Since this type of comparison is common in metablocking-based techniques, we apply the Marked Common Block Index (MaCoBI) condition [16,22] to avoid it. The MaCoBI condition guarantees that an entity pair is compared in only one block. To this end, it uses the intersection of the blocking keys stored in both entities to select the block in which the pair should be compared: the comparison is performed only in the first block in which both entities co-occur, which prevents it from being repeated in the other blocks.
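A minimal version of the MaCoBI check could look like the following. It assumes that the “first” common block is the one whose key is smallest in lexicographic order. Any fixed total order on keys works, as long as every worker uses the same one. The function name is hypothetical.

```python
from typing import FrozenSet


def macobi_should_compare(block_key: str, keys_a: FrozenSet[str], keys_b: FrozenSet[str]) -> bool:
    """True only in the first block (smallest key) that both entities have in common."""
    common = keys_a & keys_b
    return bool(common) and block_key == min(common)
```

Because both entities carry their full key sets (propagated from the token extraction step), each block can evaluate this condition locally without coordinating with other blocks. For entities with keys `{a, b, c}` and `{b, c, d}`, only the block `b` performs the comparison.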

The second type of unnecessary comparison is related to incremental blocking. In a specific increment, some blocks may not be updated, because the entities provided by this increment may not be related to any of these preexisting blocks. Therefore, the entities contained in blocks that were not updated must not be compared again. To solve this issue, the proposed workflow applies an update-oriented structure to store the previously generated blocks. This structure, commonly provided and managed by MapReduce frameworks (e.g., Flink (https://ci.apache.org/projects/flink/flink-docs-release-1.9/dev/stream/state/state.html, accessed on 28 September 2022)), loads only the blocks that have been updated in the current increment. Note that the blocks that were not updated are not removed; they are simply not loaded into memory for the current increment. Update-oriented structures thus help the technique as a whole reduce its consumption of computational resources. Moreover, an update-oriented structure (e.g., the stateful data streams (https://flink.apache.org/stateful-functions.html, accessed on 28 September 2022) provided by Flink) allows the technique to maintain all the blocks during a time window. Thus, it is possible to add new blocks and update preexisting blocks (i.e., add entities from the current increment) in the structure, which satisfies the incremental behavior of the proposed blocking technique. In Section 5, we discuss the time-window strategy, which discards entities from the blocks based on their arrival time.
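The following sketch shows the behavior expected from an update-oriented structure. It keeps every block but hands out only the blocks touched by the latest increment. A plain dictionary stands in for Flink's keyed state, and the class and method names are hypothetical, not part of any Flink API.

```python
from typing import Dict, Iterable, List, Set, Tuple


class UpdateOrientedBlockStore:
    """Keeps all blocks of the window; exposes only those touched by the latest increment."""

    def __init__(self) -> None:
        self._blocks: Dict[str, List[str]] = {}  # blocking key -> entity ids (all increments)
        self._updated: Set[str] = set()          # keys touched since the last updated_blocks() call

    def merge_increment(self, pairs: Iterable[Tuple[str, str]]) -> None:
        """Add (blocking key, entity id) pairs, creating new blocks or extending existing ones."""
        for key, eid in pairs:
            self._blocks.setdefault(key, []).append(eid)
            self._updated.add(key)

    def updated_blocks(self) -> Dict[str, List[str]]:
        """Load only the updated blocks; untouched blocks stay stored but are not loaded."""
        loaded = {k: list(self._blocks[k]) for k in sorted(self._updated)}
        self._updated.clear()
        return loaded

    def __len__(self) -> int:
        return len(self._blocks)
```

After a second increment that only touches key `a` and introduces key `d`, `updated_blocks()` returns just those two blocks, even though the store still holds the blocks from the first increment.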

The third type of comparison is also related to incremental blocking. Since the blocking technique can receive a high (or infinite) number of increments and the blocks can still exist for a huge number of increments, it is necessary to take into account the comparisons that have already been performed in previous iterations (increments). Note that even updated blocks contain entity pairs that have been compared in previous iterations, which should not be compared again. For this reason, our blocking technique marks the entity pairs that have already been compared. This mark works like a flag to decide if the entity pair was compared in previous iterations. Thus, an entity pair must only be compared if at least one of the entities is not marked as already compared.
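The marking rule can be sketched per block as follows. This is a minimal illustration under the assumption that marks are kept per block as a set of entity ids. A pair is emitted only if the two entities come from different sources and at least one of them is still unmarked. After that, every member of the block is marked. The function name is hypothetical.

```python
from itertools import combinations
from typing import List, Set, Tuple


def new_comparisons(block: List[Tuple[str, str]], marked: Set[str]) -> List[Tuple[str, str]]:
    """Return the cross-source pairs of a block that still need comparing, then mark all members.

    block:  (entity id, data source) for every entity currently in the block.
    marked: ids already marked (*) in this block in previous increments; updated in place.
    """
    pairs = []
    for (a, src_a), (b, src_b) in combinations(block, 2):
        if src_a != src_b and not (a in marked and b in marked):
            pairs.append((a, b))
    marked.update(eid for eid, _ in block)
    return pairs
```

In a first increment, the block holding one entity from each source yields that single pair. When two new entities join the block in the next increment, the old pair is skipped because both of its entities are already marked. Only pairs involving a new entity are produced.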

To illustrate how our technique deals with the three types of unnecessary comparisons, consider the second iteration (bottom of Figure 3). One entity pair co-occurs in three blocks and would therefore be compared three times, which represents the first type of unnecessary comparison. However, the MaCoBI condition guarantees that this pair is compared only once: it evaluates the intersection of the blocks of both entities and determines that the comparison should be performed only in the first block of the intersection list. Regarding the second type of unnecessary comparison, the five blocks generated in the first iteration are considered preexisting blocks. In the second iteration, only one of them (the block associated with key A) is updated, and three new blocks are created. To prevent the four blocks that were not updated from being considered in the second iteration, the update-oriented structure only takes into account the updated block and the three new ones. Therefore, this strategy prevents a large number of blocks from being loaded into memory unnecessarily. To avoid the third type of unnecessary comparison, during the first iteration (top of Figure 3), the two compared entities are marked (with the symbol *) in their common block to indicate that they have already been compared. Therefore, since an entity pair is only compared if at least one of its entities is not marked, this pair will not be compared again in the second iteration.

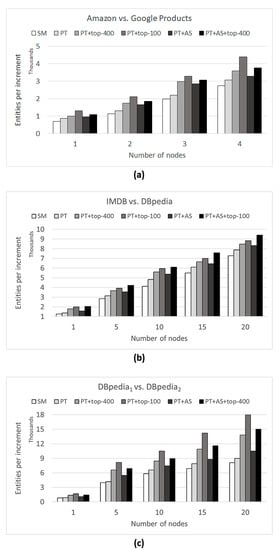

Top-n neighborhood strategy. Incremental approaches commonly suffer from resource issues [9,10]; for instance, the work in [19] highlights problems related to memory consumption, such as a lack of memory to process large data sources. To avoid excessive memory consumption, we propose a top-n neighborhood strategy to be applied during the creation of the graph G (i.e., in the blocking generation step). The main idea of this strategy is to create a directed graph in which each node (i.e., entity) maintains only its n most similar nodes (neighbor entities). To this end, each node stores only a list of its neighbor entities sorted in descending order of similarity.

Note that the number of entities and of connections between them in G is directly related to resource consumption (e.g., processing and memory), since G needs to be processed and stored in memory during the blocking task. In incremental processing, this resource consumption needs to be controlled, since G also needs to maintain the entities in an accumulative way. For this reason, limiting the number of neighbors per entity is useful because it reduces the number of connections (i.e., edges) between the entities. Furthermore, entities (nodes) without any connections (edges) are discarded from G (following the metablocking restrictions). Therefore, the top-n neighborhood strategy saves memory and processing by reducing the number of entities and connections in G. On the other hand, it can decrease the effectiveness of the blocking technique, since truly similar entities (in the neighborhood) can be discarded. However, as discussed in Section 7, the top-n neighborhood strategy can increase efficiency without significant decreases in effectiveness.

For instance, consider that, in the first increment in Figure 3, an entity has three neighbor entities, sorted in descending order of similarity. In each increment, the top-n neighbor entities of this entity can be updated according to the order of similarity; to maintain only the top-n neighbor entities, the less similar neighbors are removed. If we hypothetically apply a top-1 neighborhood, the two less similar neighbor entities will be removed from the set of neighbor entities. For large graphs generated from large data sources or resulting from incremental processing, reducing the information stored in each node significantly decreases memory consumption as a whole. Therefore, the top-n neighborhood strategy can optimize the memory consumption of our blocking technique, which enables the processing of large data sources. In Section 7, we discuss the resource savings and the impact on effectiveness when different values of n are applied.
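A compact way to realize the top-n neighborhood is sketched below. Each entity keeps a small map from neighbor to similarity and trims it to the n largest values whenever it grows beyond n. Because the map is refreshed in place, a neighbor whose similarity changes in a later increment is updated rather than duplicated. The class name is hypothetical, and the sketch ignores tie-breaking among equal similarities.

```python
import heapq
from typing import Dict, List, Tuple


class TopNNeighborhood:
    """Directed neighborhood graph in which each entity keeps only its n most similar neighbors."""

    def __init__(self, n: int) -> None:
        self.n = n
        self._nbrs: Dict[str, Dict[str, float]] = {}

    def add_edge(self, entity: str, neighbor: str, weight: float) -> None:
        nbrs = self._nbrs.setdefault(entity, {})
        nbrs[neighbor] = weight  # insert a new neighbor or refresh its similarity
        if len(nbrs) > self.n:
            # Drop the least similar neighbors so that at most n remain.
            self._nbrs[entity] = dict(heapq.nlargest(self.n, nbrs.items(), key=lambda kv: kv[1]))

    def neighbors(self, entity: str) -> List[Tuple[str, float]]:
        """Neighbors of an entity in descending order of similarity."""
        return sorted(self._nbrs.get(entity, {}).items(), key=lambda kv: kv[1], reverse=True)
```

With `n = 1` and three neighbors of similarity 0.5, 0.9, and 0.2, only the 0.9 neighbor survives, mirroring the top-1 example above. Memory per entity is bounded by n regardless of how many increments arrive.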

4.2.3. Pruning Step

The pruning step is responsible for discarding entity pairs with low similarity values, as described in Algorithm 3. To generate the output (i.e., pruned blocks), the pruning step is composed of a groupBy function, which receives the entity pairs from the blocking generation step and groups them by entity. Thus, the neighborhood of each entity is defined and pruning is performed. Note that the second MapReduce job, which started in the blocking generation step, concludes with this groupBy function, whose outputs are the pruned blocks.

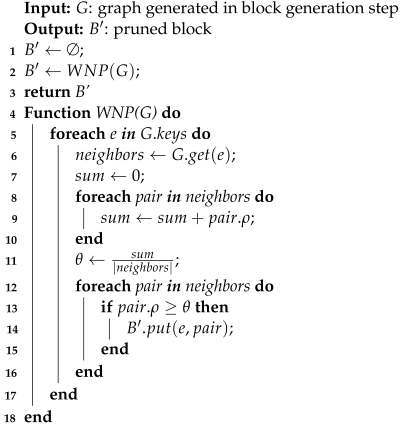

Algorithm 3: Pruning

After the graph G is generated, a pruning criterion is applied to generate the set of high-quality blocks (lines 1 to 3). As the pruning criterion, we apply the WNP-based pruning algorithm [33], since it has achieved better results than its competitors [22,33]. WNP is a vertex-centric pruning algorithm that evaluates each node of G locally (lines 5 to 17), considering a local weight threshold based on the average edge weight of the node's neighborhood (lines 6 to 11). The neighbor entities whose edge weights are greater than the local threshold are kept in the output (lines 12 to 16); the remaining entities (i.e., those whose edge weights are lower than the local threshold) are discarded. Note that applying the top-n neighborhood strategy in the previous step does not make the pruning step useless. On the contrary, the top-n neighborhood can provide a refined graph, in terms of high-quality entity pairs, and smaller neighborhoods to be pruned in this step.
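The sketch below applies WNP-style pruning to a neighborhood graph given as `entity -> {neighbor: weight}`. The local threshold of each node is the average weight of its edges. One assumption departs from a literal reading of the text: edges whose weight equals the threshold are kept. Under a strict comparison, a node with a single neighbor would always lose it. Nodes left without edges are dropped, and the function name is hypothetical.

```python
from typing import Dict, Set


def weighted_node_pruning(graph: Dict[str, Dict[str, float]]) -> Dict[str, Set[str]]:
    """Keep, for every node, the neighbors whose edge weight reaches the node's average edge weight."""
    pruned: Dict[str, Set[str]] = {}
    for node, nbrs in graph.items():
        if not nbrs:
            continue  # nodes without edges are discarded from the output
        threshold = sum(nbrs.values()) / len(nbrs)  # local threshold: average weight of the neighborhood
        kept = {nb for nb, w in nbrs.items() if w >= threshold}
        if kept:
            pruned[node] = kept
    return pruned
```

For a node whose edges weigh 3, 1, and 2, the threshold is 2, so the edges of weight 3 and 2 survive. Since the threshold is computed per node, a weak edge can survive in a sparse neighborhood and be pruned in a dense one, which is the vertex-centric behavior described above.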

The time complexity of this step is given by the cost of the WNP pruning algorithm [33], which depends on the number of vertices |X| and the number of edges |L| of the graph G. Finally, the following lemma and theorem state the conditions that guarantee that the proposed technique and metablocking produce the same output blocks and, therefore, present the same effectiveness results.

Lemma 3.

Considering that the proposed technique and metablocking apply the same pruning criterion Θ to the same input blocking graph G, both techniques will produce the same pruned graph. Since the output blocks are directly derived from the pruned graph, both techniques will produce the same output blocks.

Proof.

In the pruning step, the proposed technique receives the blocking graph G(X, L) as input. This step aims to generate the pruned graph according to the pruning criterion Θ. To this end, the edges whose weights fall below the threshold given by Θ are removed, and the vertices left without associated edges are discarded. Therefore, if metablocking receives the same blocking graph as input and applies the same pruning criterion Θ, both techniques will generate the same pruned graph and, consequently, the same output blocks. □

Theorem 1.

For the same entity collection, if the proposed technique and metablocking apply the same function to extract tokens, the same similarity function Φ, and the same pruning criterion Θ, both techniques will generate the same output blocks.

Proof.

Based on Lemma 1, with the same entity collection and token extraction function, the proposed technique and metablocking will generate the same blocking keys and, consequently, the same set of blocks B. Based on Lemma 2, if both techniques receive the same set of blocks B and apply the same Φ, they will generate the same blocking graph G. Based on Lemma 3, when the proposed technique and metablocking prune the same graph G using the same Θ, both techniques generate the same pruned graph and, consequently, the same output blocks. □

5. Window-Based Incremental Blocking

In scenarios involving streaming data, data sources continuously provide a potentially infinite amount of data. In addition, incremental processing consumes information processed in previous increments. Therefore, the computational resources of a distributed infrastructure may not be enough for the accumulated entities to be processed in each increment. In these scenarios, memory consumption is considered one of the biggest challenges faced by incremental blocking techniques [14,19,43]. Time windows are at the heart of processing infinite streams, since they split the stream into buckets of finite size, reducing the memory consumption of the proposed technique because entities that exceed the time threshold are discarded [59]. For this reason, a time window is used in the blocking generation step of our technique, where blocks are incrementally stored in the update-oriented data structure (see Section 4.2). In addition to being one of the most commonly used strategies in incremental approaches [9,14], time windows emerged as a viable solution for the experiments and case study developed in this work, since time is a reasonable criterion for discarding entities.

For instance, in a scenario involving incremental and streaming data, entities arrive continually over time (e.g., over hours or days). Therefore, as time goes by, the number of entities grows due to the incremental behavior; in other words, both the number of blocks and the number of entities in the blocks increase. On the other hand, the amount of computational resources (especially memory and CPU) is commonly limited, which prevents the techniques from considering all entities during blocking. For this reason, a criterion for removing entities, such as a time window, becomes necessary. Applying a time window is a trade-off between effectiveness (since entities are discarded) and the rational use of computational resources (since a large number of entities can overload the available resources). Note that the idea behind time windows is to discard old entities, based on the principle that entities sent in a similar period of time have a higher chance of resulting in matches [9,14,59].

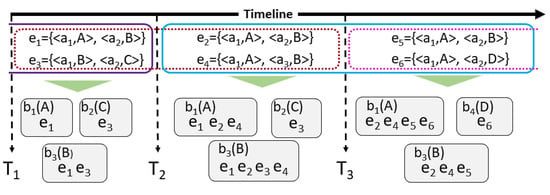

In this work, we apply a sliding (time) window strategy, which defines the time interval during which entities are maintained in the update-oriented data structure, preventing the structure from consuming excessive memory. For instance, consider the example in Figure 4, where the entities are divided into three increments sent at three different time points. The size of the sliding window (i.e., the time threshold) corresponds to the time interval of two increments. Therefore, entities older than two increments are discarded; in other words, they are no longer considered by the blocking technique. In the first increment, two entities are blocked, generating three blocks. In the second increment, two more entities are blocked and, considering the previously created blocks, they are added to two of the existing blocks. Since the window spans two increments, when the third increment arrives the window slides forward, and the two entities sent in the first increment are removed from the blocks that contain them. One block is discarded because all the entities it contained were removed. Regarding the entities of the third increment, one entity is inserted into two existing blocks, and an insertion into a block that did not yet exist triggers the creation of a new block.

Figure 4.

Time-window strategy for incremental blocking.
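A minimal Python sketch of the sliding-window behavior described above follows. It assumes integer increment indices, a window measured in increments, and token-based blocking keys; the class name, entity ids, and tokens are hypothetical, and the data only loosely mirror Figure 4 rather than reproduce the paper's update-oriented data structure.

```python
from collections import defaultdict


class SlidingWindowBlocks:
    """Token blocks that retain only entities from the last `window` increments.

    Hypothetical sketch: ids, token sets, and integer increment indices are
    illustrative assumptions, not the paper's update-oriented structure.
    """

    def __init__(self, window: int):
        self.window = window            # window size, measured in increments
        self.blocks = defaultdict(set)  # blocking key -> ids of entities in the block
        self.arrival = {}               # entity id -> increment in which it arrived
        self.keys = {}                  # entity id -> blocking keys it was placed under

    def _evict(self, current: int) -> None:
        """Remove entities that fell outside the window and drop emptied blocks."""
        expired = [e for e, t in self.arrival.items() if t <= current - self.window]
        for entity in expired:
            for key in self.keys.pop(entity):
                self.blocks[key].discard(entity)
                if not self.blocks[key]:  # every entity left: discard the block
                    del self.blocks[key]
            del self.arrival[entity]

    def add_increment(self, current: int, entities: dict) -> None:
        """Slide the window to `current`, then block the newly arrived entities."""
        self._evict(current)
        for entity, tokens in entities.items():
            self.arrival[entity] = current
            self.keys[entity] = set(tokens)
            for key in tokens:
                self.blocks[key].add(entity)  # creates the block if the key is new


store = SlidingWindowBlocks(window=2)
store.add_increment(1, {"e1": {"john", "smith"}, "e2": {"john", "london"}})
store.add_increment(2, {"e3": {"john"}, "e4": {"smith"}})
store.add_increment(3, {"e5": {"john", "smith"}, "e6": {"paris"}})
print(dict(store.blocks))
```

In this run, the third increment evicts `e1` and `e2`, the `london` block becomes empty and is dropped, `e5` joins the existing `john` and `smith` blocks, and `e6` opens a new `paris` block.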

6. Attribute Selection

Real-world data sources can present superfluous attributes, which can be ignored by the blocking task [5]. Superfluous attributes are attributes whose tokens generate low-quality blocks (i.e., blocks whose entities have low chances of matching) and, consequently, increase the number of false matches. To prevent the blocking task from handling unnecessary data, which consumes computational resources (processing and memory), we propose an attribute selection algorithm that removes superfluous attributes.

Although some works [11,20,31,32] exploit attribute information to enhance the quality of blocks, none of them proposes discarding attributes. Therefore, in these works, the tokens from superfluous attributes are still considered in the blocking task and, consequently, consume computational resources. On the other hand, discarding attributes may negatively affect the identification of matches, since tokens from discarded attributes can be decisive in detecting them. Therefore, attribute selection must be performed accurately, as discussed in Section 7. To handle superfluous attributes, we developed an attribute selection strategy, which is applied in the sender component (see Figure 2). Note that the sender component is executed in a standalone way.

For each increment received by the sender component, the entity attributes are evaluated and the superfluous ones are discarded. Thus, the entities sent by the sender are modified by removing the superfluous attributes (and their values). As stated, discarding attributes may negatively impact effectiveness, since attributes discarded for a specific increment can become relevant in the next one. To avoid this problem, the attribute selection strategy evaluates attributes globally, i.e., based on the current increment and the previous ones. Moreover, the criterion applied to discard attributes targets attributes whose tokens have a high chance of generating low-quality blocks, as discussed throughout this section. Based on these two aspects, the set of discarded attributes tends to converge as the increments are processed and does not significantly impact the effectiveness results, as highlighted in Section 7.2. Note that even if a relevant attribute is discarded for a specific increment, it can be reinstated (i.e., no longer discarded) in subsequent increments.

In this work, we explore two types of superfluous attributes: attributes of low representativeness and attributes unmatched between the data sources. Attributes of low representativeness are those whose contents do not contribute to determining the similarity between entities. For example, the attribute gender has a low impact on the similarity between entities (e.g., two male users from different data sources), since its values are commonly shared by a huge number of entities. Consequently, the tokens extracted from this attribute generate huge blocks that consume memory unnecessarily. Hence, this type of attribute is discarded by our blocking technique to optimize memory consumption without a significant impact on the effectiveness of the generated blocks.

To identify the attributes of low representativeness, the attribute selection strategy measures the entropy of each attribute. The entropy of an attribute indicates its significance, i.e., the higher the entropy of an attribute, the more significant the observation of a particular value for that attribute [11]. We apply the Shannon entropy [60] to represent the information distribution of a random attribute. Assume a random attribute $X$ with alphabet $\mathcal{X}$ and probability distribution function $p(x) = \Pr\{X = x\}$, $x \in \mathcal{X}$. The Shannon entropy is defined as $H(X) = -\sum_{x \in \mathcal{X}} p(x) \log p(x)$. The attributes with low entropy values (i.e., low representativeness) are discarded. In this work, we removed the attributes whose entropy values fell in the first quartile (i.e., the lowest 25%) of the entropy values. As stated in Section 2, this condition was defined based on previous experiments.
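The entropy criterion can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: entropy is computed over the empirical distribution of raw attribute values with a base-2 logarithm (the base does not change the ranking), the input is every profile seen so far (current and previous increments), and the rounding rule that always discards at least one attribute is hypothetical. The function names and sample profiles are illustrative only.

```python
import math
from collections import Counter


def shannon_entropy(values) -> float:
    """H(X) = -sum_x p(x) log2 p(x) over the empirical distribution of `values`."""
    counts = Counter(values)
    n = sum(counts.values())
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def low_entropy_attributes(entities, fraction=0.25):
    """Return (attributes in the lowest-entropy `fraction`, entropy per attribute).

    `entities` holds every profile seen so far, each a dict attribute -> value.
    The rounding rule below (discard at least one attribute) is an assumption.
    """
    values = {}
    for profile in entities:
        for attr, value in profile.items():
            values.setdefault(attr, []).append(value)
    entropy = {attr: shannon_entropy(vals) for attr, vals in values.items()}
    ranked = sorted(entropy, key=entropy.get)     # lowest entropy first
    cutoff = max(1, int(len(ranked) * fraction))  # size of the lowest quartile
    return set(ranked[:cutoff]), entropy


profiles = [
    {"name": "Ana Lima", "gender": "F", "city": "Recife"},
    {"name": "Bruno Melo", "gender": "M", "city": "Recife"},
    {"name": "Carla Dias", "gender": "F", "city": "Natal"},
    {"name": "Davi Reis", "gender": "M", "city": "Olinda"},
]
discarded, entropy = low_entropy_attributes(profiles)
print(discarded, entropy)  # {'gender'} {'name': 2.0, 'gender': 1.0, 'city': 1.5}
```

As in the gender example above, an attribute whose few values are shared by many entities ranks lowest and is discarded, while highly distinctive attributes such as names are kept.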

Unmatched attributes are attributes of one data source that have no corresponding attribute (i.e., one with similar content) in the other data source. For instance, assume two data sources, where the first contains the attributes full name and age, and the second contains the attributes name and surname. Since full name, name, and surname address the same content, several similar values can be found among these attributes. However, the second data source has no attribute related to age. That is, age will generate blocks from its values, but these blocks will hardly be relevant to determining the similarities between entities. It is important to highlight that even though some metablocking-based techniques remove blocks with only one entity, such blocks are still generated and consume resources until the blocking filter removes them. For this reason, this type of attribute is discarded by our blocking technique before block generation.

Regarding unmatched attributes, the attribute selection extracts and stores the attribute values, generating a set of values for each attribute. Based on the similarity between these sets, a similarity matrix is created denoting the similarity between the attributes of the two data sources. To avoid the high computational cost of calculating the similarity between attributes, we apply Locality-Sensitive Hashing (LSH) [61]. As previously discussed, LSH is commonly used to approximate near-neighbor searches in high-dimensional spaces, preserving similarity distances and significantly reducing the number of attribute values (or tokens) to be evaluated. In this sense, for each attribute, a hash function converts all attribute values into a probability vector (i.e., a hash signature).

Since the hash function preserves the similarity of the attribute values, distance functions (e.g., Jaccard) can be applied to efficiently determine the similarity between the sets of attribute values [56]. The resulting similarity vectors feed the similarity matrix and guide the attribute selection algorithm. This similarity matrix is continuously updated as increments arrive; therefore, the similarity between two attributes is given by the mean of their similarity per increment. The matrix is then evaluated, and the attributes with no similarity to any attribute from the other data source are discarded. After removing the superfluous attributes (and their values), the entities are sent to the blocking task. Note that, unlike the noise-tolerant algorithm, which uses LSH only to generate hash signatures, the attribute selection applies LSH to reduce the high-dimensional space of the attribute values and to determine the similarity between attributes by comparing their hash signatures.
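A hedged Python sketch of the unmatched-attribute check is shown below. It swaps in MinHash as the LSH family (an assumption; this section does not name the hash family) and estimates the Jaccard similarity between attribute value sets from signature agreement. `NUM_HASHES`, the seeded `blake2b` hashing, the `threshold` parameter, and the `AttributeMatcher` class are hypothetical; attribute pairs absent from an increment simply contribute zero to the per-increment mean.

```python
import hashlib
from itertools import product

NUM_HASHES = 64  # hypothetical signature length


def _hash(token: str, seed: int) -> int:
    """Seeded 64-bit hash; each seed plays the role of one hash function."""
    digest = hashlib.blake2b(f"{seed}:{token}".encode(), digest_size=8).digest()
    return int.from_bytes(digest, "big")


def minhash(tokens: set) -> list:
    """MinHash signature of one attribute's set of values (or tokens)."""
    return [min(_hash(t, seed) for t in tokens) for seed in range(NUM_HASHES)]


def estimated_jaccard(sig_a: list, sig_b: list) -> float:
    """Share of agreeing signature positions estimates the Jaccard similarity."""
    return sum(x == y for x, y in zip(sig_a, sig_b)) / len(sig_a)


class AttributeMatcher:
    """Similarity matrix between two sources' attributes, averaged per increment."""

    def __init__(self):
        self.totals = {}  # (attr_a, attr_b) -> similarity summed over increments
        self.attrs_a, self.attrs_b = set(), set()
        self.increments = 0

    def update(self, values_a: dict, values_b: dict) -> None:
        """values_x maps attribute name -> set of values seen in this increment."""
        sigs_a = {a: minhash(v) for a, v in values_a.items() if v}
        sigs_b = {b: minhash(v) for b, v in values_b.items() if v}
        self.attrs_a |= set(sigs_a)
        self.attrs_b |= set(sigs_b)
        self.increments += 1
        for a, b in product(sigs_a, sigs_b):
            sim = estimated_jaccard(sigs_a[a], sigs_b[b])
            self.totals[(a, b)] = self.totals.get((a, b), 0.0) + sim

    def unmatched(self, threshold: float = 0.0):
        """Attributes of each source whose best mean similarity is <= threshold."""
        best_a = dict.fromkeys(self.attrs_a, 0.0)
        best_b = dict.fromkeys(self.attrs_b, 0.0)
        for (a, b), total in self.totals.items():
            mean_sim = total / self.increments
            best_a[a] = max(best_a[a], mean_sim)
            best_b[b] = max(best_b[b], mean_sim)
        drop_a = {a for a, s in best_a.items() if s <= threshold}
        drop_b = {b for b, s in best_b.items() if s <= threshold}
        return drop_a, drop_b


matcher = AttributeMatcher()
matcher.update(
    {"full_name": {"ana", "lima", "bruno", "melo"}, "age": {"31", "45"}},
    {"name": {"ana", "bruno"}, "surname": {"lima", "melo"}},
)
print(matcher.unmatched())
```

With the full name/age versus name/surname example from the text, `age` shares no values with any attribute of the other source, so it is the only attribute flagged for removal.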

7. Experiments

In this section, we evaluate the proposed blocking technique in terms of efficiency and effectiveness, as well as the impact of applying the attribute selection and top-n neighborhood strategies to it. For comparison, we also evaluate the proposed technique against a baseline technique, which is described in Section 7.1. Moreover, we present the configuration of the computational cluster, the experimental design, and the achieved results.

The experiments address the following research questions:

- RQ1: In terms of effectiveness, is the proposed blocking technique equivalent to the state-of-the-art technique?

- RQ2: Does the noise-tolerant algorithm improve the effectiveness of the proposed blocking technique in scenarios of noisy data?

- RQ3: Regarding efficiency, does the proposed blocking technique (without the attribute selection and top-n neighborhood strategies) outperform the baseline technique?

- RQ4: Does the attribute selection strategy improve the efficiency of the proposed blocking technique?

- RQ5: Does the top-n neighborhood strategy improve the efficiency of the proposed blocking technique?

7.1. Baseline Technique

As stated in previous sections, state-of-the-art blocking techniques do not work properly in scenarios involving incremental and streaming data. For this reason, comparing our technique with these blocking techniques would be unfair and mostly infeasible. Thus, to define a baseline for the proposed blocking technique, we implemented a metablocking technique capable of dealing with streaming and incremental data, called streaming metablocking. This section provides an overview of streaming metablocking in terms of implementation and how it applies to the context of this work (i.e., streaming and incremental data).

Streaming metablocking is based on the parallel workflow for metablocking proposed in [22], adapted to provide a fair comparison with our technique in terms of effectiveness and efficiency. The first adaptation addresses incremental behavior, which requires updating the blocks to consider arriving (i.e., new) entities. With a brute-force strategy, after each new increment arrives, streaming metablocking would need to reprocess all blocks, including those that were not updated (i.e., that received no new entities). This strategy is costly in terms of efficiency, since it repeats a huge number of comparisons that have already been performed. To avoid this, streaming metablocking only considers the blocks that were updated in the current increment. Note that the original workflow proposed in [22] did not consider incremental behavior; therefore, the (re)processing of previously generated blocks was introduced in this work.

The second adaptation of metablocking addresses streaming behavior. Streaming metablocking was implemented following the MapReduce workflow proposed in [22]. However, this parallel workflow was designed to process batch data all at once. Therefore, the workflow was redesigned to consider incremental behavior and allow the processing of streaming data. To this end, streaming metablocking was implemented using the Flink framework, which provides MapReduce-style operators and natively supports streaming, as described in Section 4. In addition, streaming metablocking uses a data structure to store previously generated blocks and avoids comparisons between entities that were already compared (i.e., unnecessary comparisons).
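The two optimizations of streaming metablocking (revisiting only updated blocks and skipping pairs compared in earlier increments) can be illustrated with the small Python sketch below. It is a single-process illustration under assumed data shapes (blocks as a dict of entity-id sets, pairs as sorted tuples), not the Flink-based baseline itself, and the function name is hypothetical.

```python
from itertools import combinations


def incremental_candidate_pairs(blocks: dict, updated_keys: set, compared: set) -> set:
    """Return new pairs from blocks touched in this increment, skipping old ones.

    blocks: blocking key -> entity ids (state kept across increments)
    updated_keys: keys of blocks that received entities in this increment
    compared: pairs already compared in earlier increments (updated in place)
    """
    new_pairs = set()
    for key in updated_keys:  # blocks not updated in this increment are not revisited
        for a, b in combinations(sorted(blocks[key]), 2):
            pair = (a, b)     # sorted ids give one canonical form per pair
            if pair not in compared:
                new_pairs.add(pair)
    compared |= new_pairs
    return new_pairs


blocks = {"john": {"e1", "e2"}, "london": {"e1"}}
compared = set()
first = incremental_candidate_pairs(blocks, {"john", "london"}, compared)
blocks["john"].add("e3")      # the second increment only touches "john"
second = incremental_candidate_pairs(blocks, {"john"}, compared)
print(sorted(first), sorted(second))  # [('e1', 'e2')] [('e1', 'e3'), ('e2', 'e3')]
```

In the second increment, the pair (e1, e2) is not generated again, and the untouched `london` block is never reprocessed.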

On the other hand, it is important to highlight that the blocking technique proposed in this paper applies a different workflow (described in Section 4), which requires fewer MapReduce jobs than the streaming metablocking workflow. Moreover, the proposed strategies (i.e., attribute selection and top-n neighborhood) are not applied in streaming metablocking.

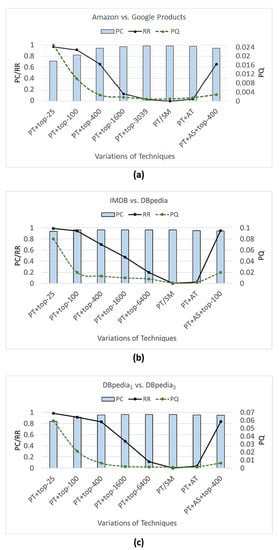

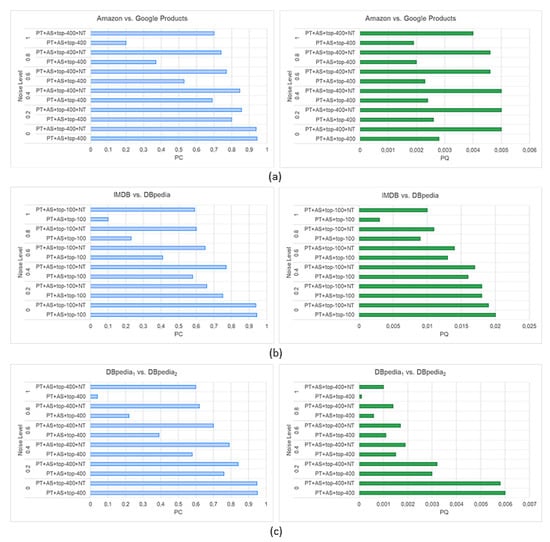

7.2. Configuration and Experimental Design