Multimodal EEG Emotion Recognition Based on the Attention Recurrent Graph Convolutional Network

Abstract

1. Introduction

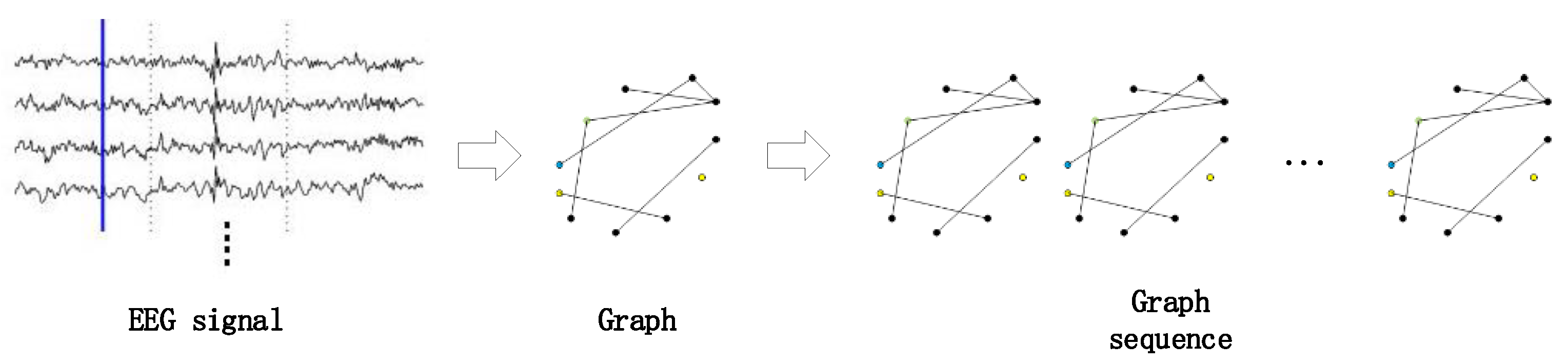

- In terms of feature selection and feature fusion, we use multiple physiological signals contained in the dataset for emotion classification. The different kinds of physiological signals are fused at the data level and transformed from one-dimensional time series into a graph structure that carries more of the temporal and spatial information related to human emotion.
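As a rough illustration of data-level fusion, the sketch below treats every EEG channel and every peripheral physiological (PPS) channel as a node of a single graph, so that the modalities are combined before any feature learning takes place. The window-mean node features, the absolute-Pearson-correlation edges, and the function names are hypothetical choices made for this example. The paper's own space–time and frequency–space graph construction is not reproduced.

```python
import math
from typing import List, Tuple

Series = List[float]
Matrix = List[List[float]]


def pearson(x: Series, y: Series) -> float:
    """Pearson correlation of two equal-length series (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return 0.0 if sx == 0 or sy == 0 else cov / (sx * sy)


def build_fused_graph(eeg: List[Series], pps: List[Series], window: int) -> Tuple[Matrix, Matrix]:
    """Hypothetical data-level fusion: every EEG and PPS channel becomes one graph node.

    Returns (node_features, adjacency):
    - node_features[i] holds the mean of channel i over consecutive, non-overlapping
      windows of `window` samples (a simple time-domain feature; assumption).
    - adjacency[i][j] is |Pearson r| between channels i and j, zero on the diagonal
      (assumption; not necessarily the edge definition used by Mul-AT-RGCN).
    """
    channels = eeg + pps  # fuse the modalities at the data level
    features = []
    for ch in channels:
        n_win = len(ch) // window  # trailing samples that do not fill a window are dropped
        features.append([sum(ch[k * window:(k + 1) * window]) / window for k in range(n_win)])
    n = len(channels)
    adjacency = [[0.0 if i == j else abs(pearson(channels[i], channels[j])) for j in range(n)]
                 for i in range(n)]
    return features, adjacency


if __name__ == "__main__":
    eeg = [[1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]]  # two toy EEG channels
    pps = [[4.0, 3.0, 2.0, 1.0]]                          # one toy peripheral channel
    feats, adj = build_fused_graph(eeg, pps, window=2)
    print(feats)  # [[1.5, 3.5], [3.0, 7.0], [3.5, 1.5]]
```

Taking the absolute value keeps strongly anti-correlated channels connected, which is one reasonable design choice for a non-negative adjacency matrix; a thresholded or signed graph would be equally plausible.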

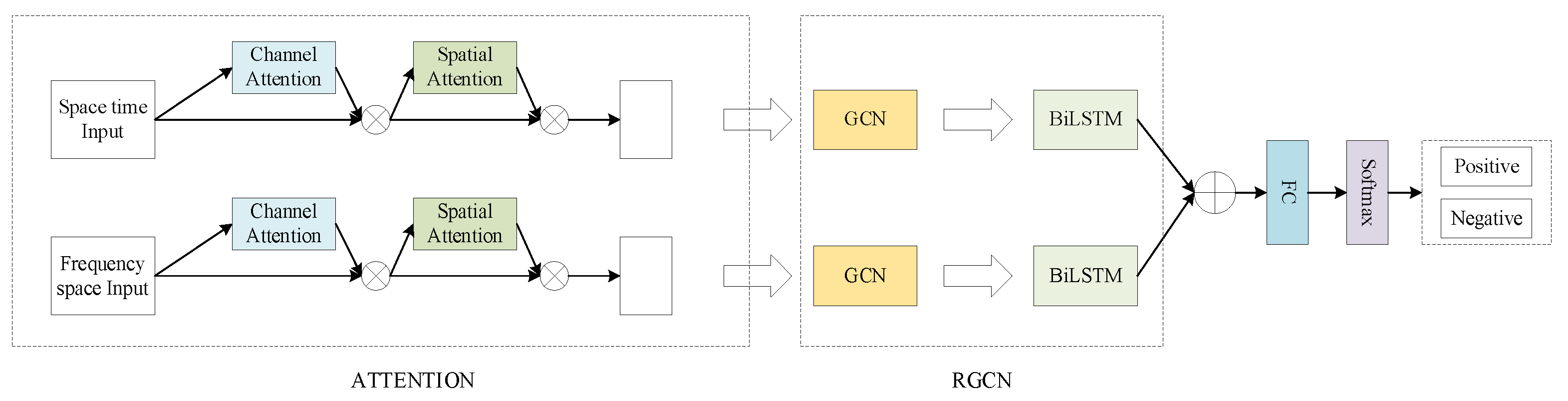

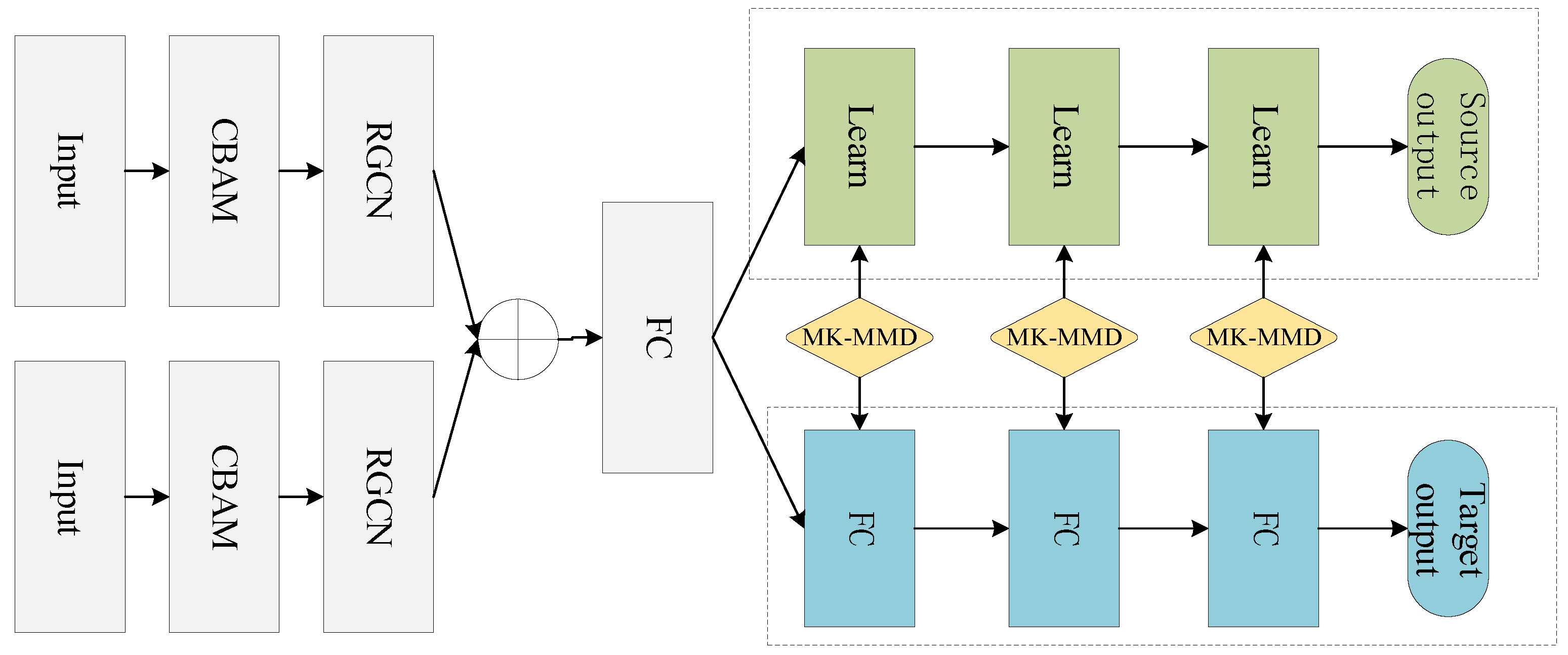

- In terms of models, we design the Mul-AT-RGCN, which combines the convolutional block attention module (CBAM), graph convolution, and a bidirectional LSTM to model EEG-based multimodal physiological signals in the time, frequency, and spatial domains, capturing the correlations among them and effectively extracting emotion-related features of the multimodal signals.
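Two of the building blocks named above can be sketched in plain Python: CBAM's channel attention as defined by Woo et al. [18], and the normalized graph convolution of Kipf and Welling [19]. This is a minimal sketch under stated assumptions: the function names, the (nodes x channels) feature layout, and the requirement of a non-negative adjacency matrix are illustrative choices, and CBAM's spatial attention, the bidirectional LSTM, and the way Mul-AT-RGCN actually wires these parts together are not shown.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product (rows of a times columns of b)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def relu(m: Matrix) -> Matrix:
    return [[max(0.0, v) for v in row] for row in m]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def channel_attention(x: Matrix, w0: Matrix, w1: Matrix) -> Matrix:
    """CBAM-style channel attention on a (nodes x channels) feature map:
    Mc = sigmoid(MLP(AvgPool(x)) + MLP(MaxPool(x))), where MLP(v) = ReLU(v W0) W1 is
    shared by both pooled descriptors; each channel of x is then rescaled by Mc."""
    n, c = len(x), len(x[0])
    avg = [[sum(x[i][j] for i in range(n)) / n for j in range(c)]]  # 1 x C average pool
    mx = [[max(x[i][j] for i in range(n)) for j in range(c)]]       # 1 x C max pool
    a = matmul(relu(matmul(avg, w0)), w1)[0]
    m = matmul(relu(matmul(mx, w0)), w1)[0]
    scale = [sigmoid(a[j] + m[j]) for j in range(c)]
    return [[x[i][j] * scale[j] for j in range(c)] for i in range(n)]


def gcn_layer(adj: Matrix, x: Matrix, w: Matrix) -> Matrix:
    """One graph convolution in the Kipf & Welling form:
    H = ReLU(D^-1/2 (A + I) D^-1/2 X W), with D the degree matrix of A + I.
    Assumes a non-negative adjacency so every degree is at least 1."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    return relu(matmul(matmul(norm, x), w))
```

With a weighted adjacency such as one derived from channel correlations, `gcn_layer` mixes each node's features with those of its neighbours, while `channel_attention` reweights feature channels; where exactly the attention sits relative to the graph and recurrent layers in the real network is not specified here.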

2. Construction of Multimodal Space–Time Graph and Frequency–Space Graph

3. Attention Recurrent Graph Convolutional Network

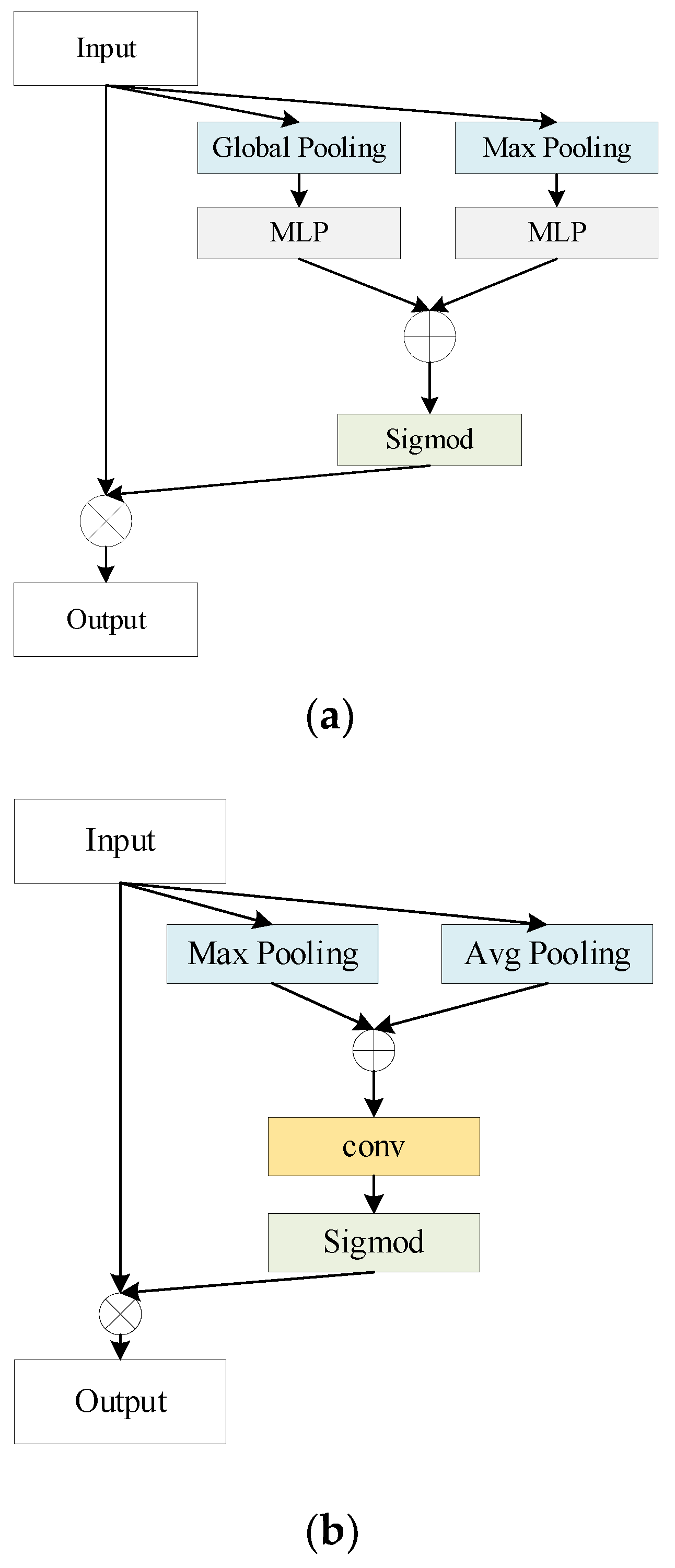

3.1. Convolutional Block Attention Module

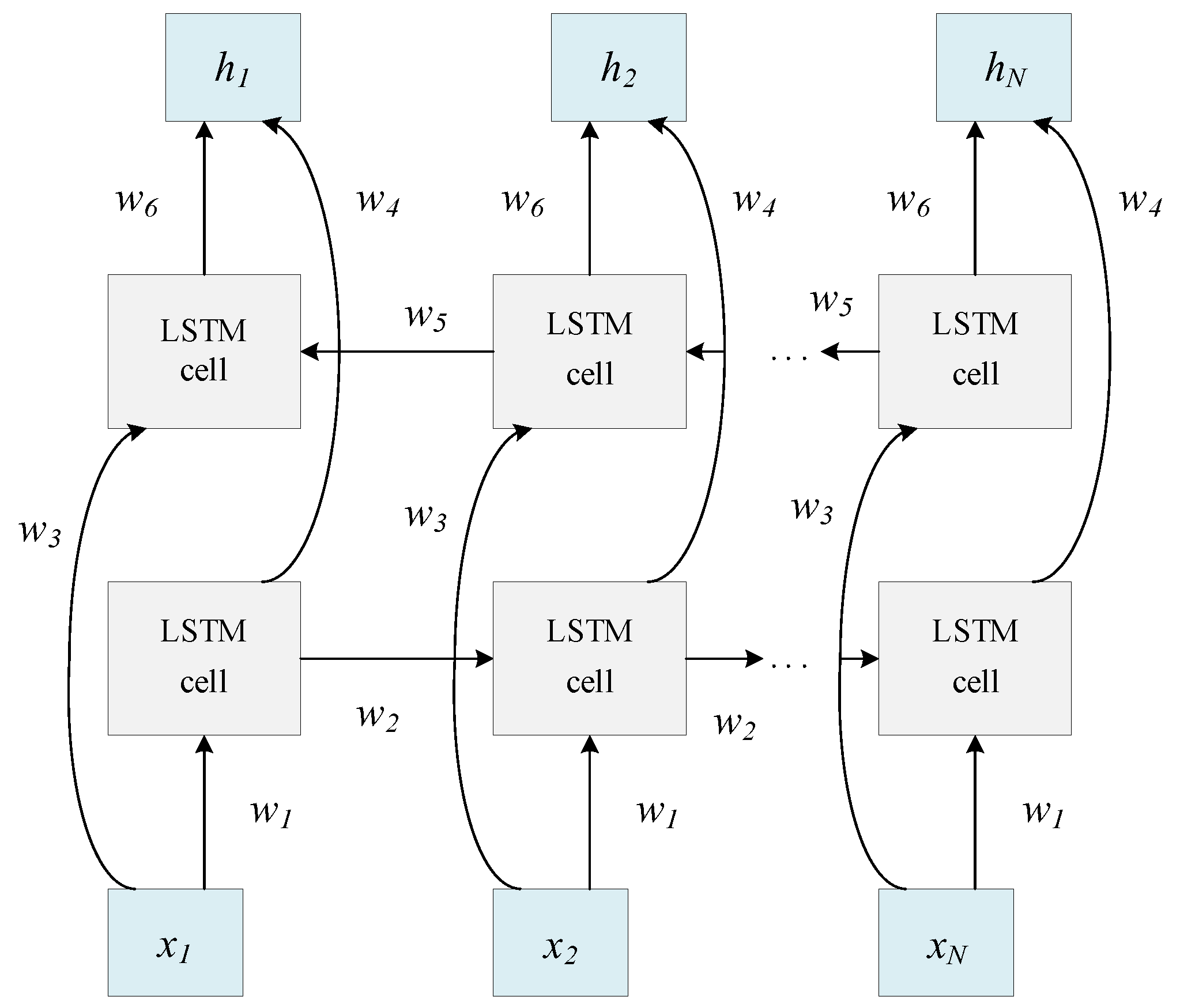

3.2. Construction of Recurrent Graph Neural Network

3.3. Multidimensional Feature Fusion and Emotion Recognition

3.4. Domain Adaptation Module for Model Optimization

4. Experimental Results and Analysis

4.1. Dataset and Preprocessing

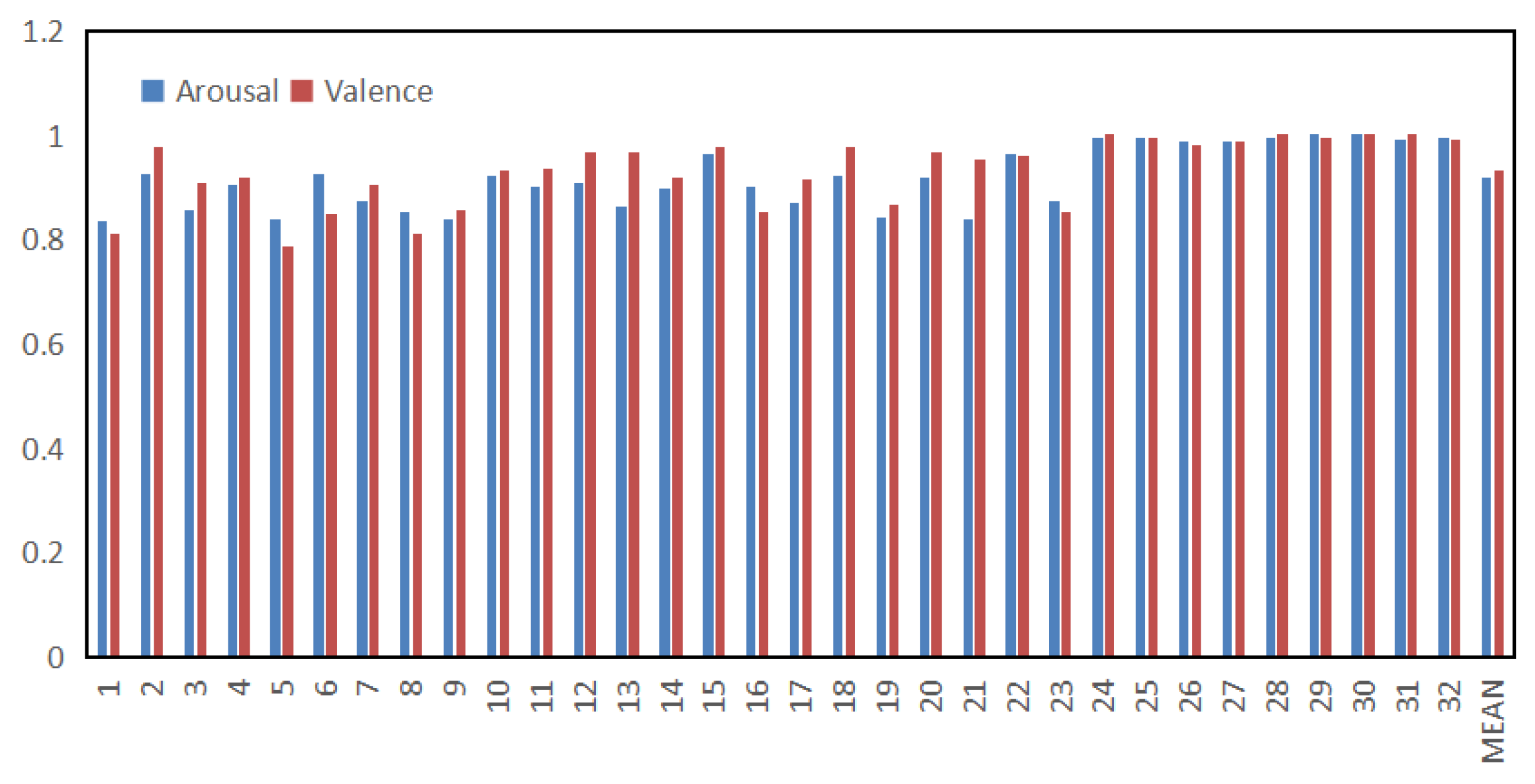

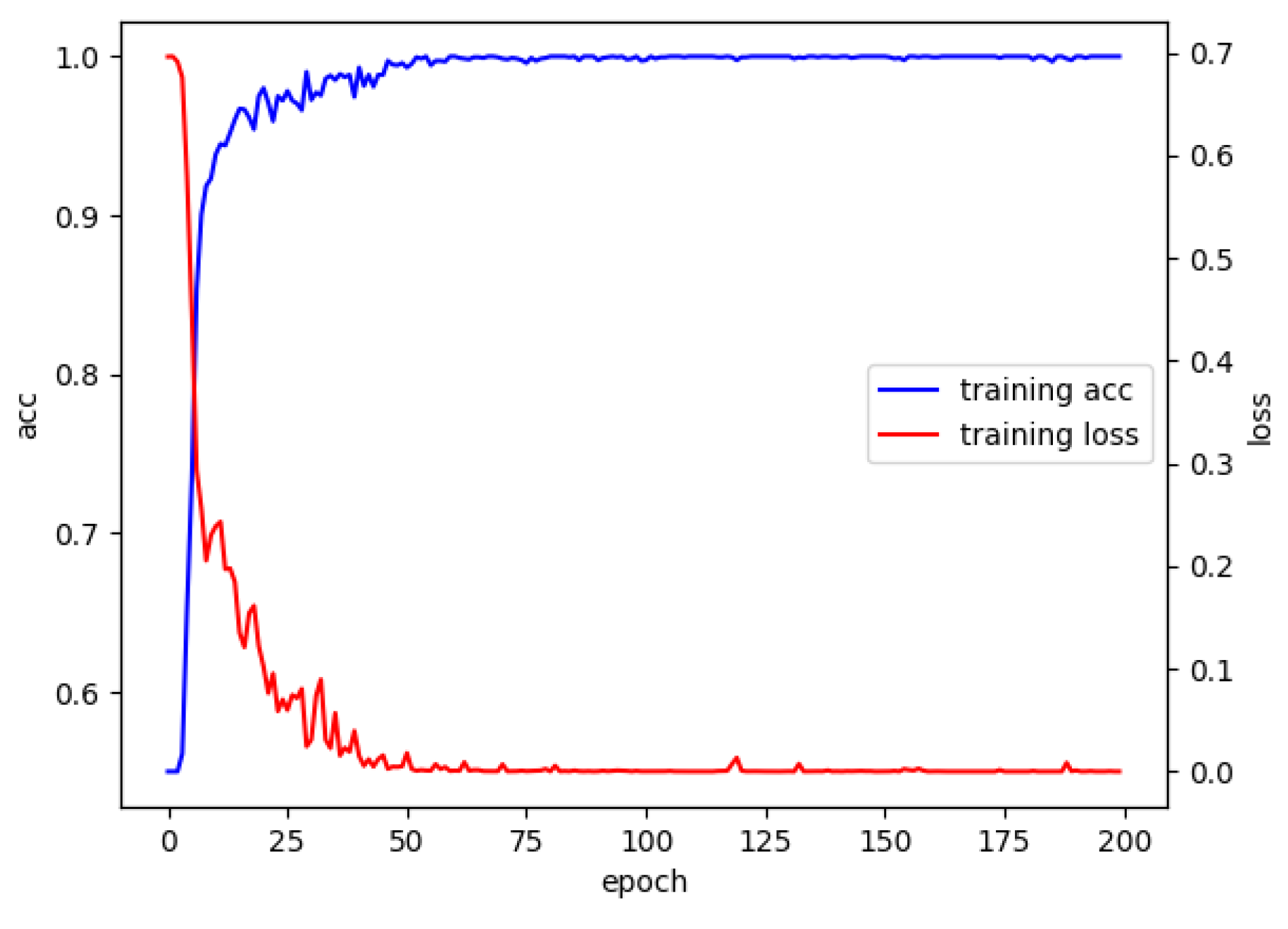

4.2. Within-Subject Experiment

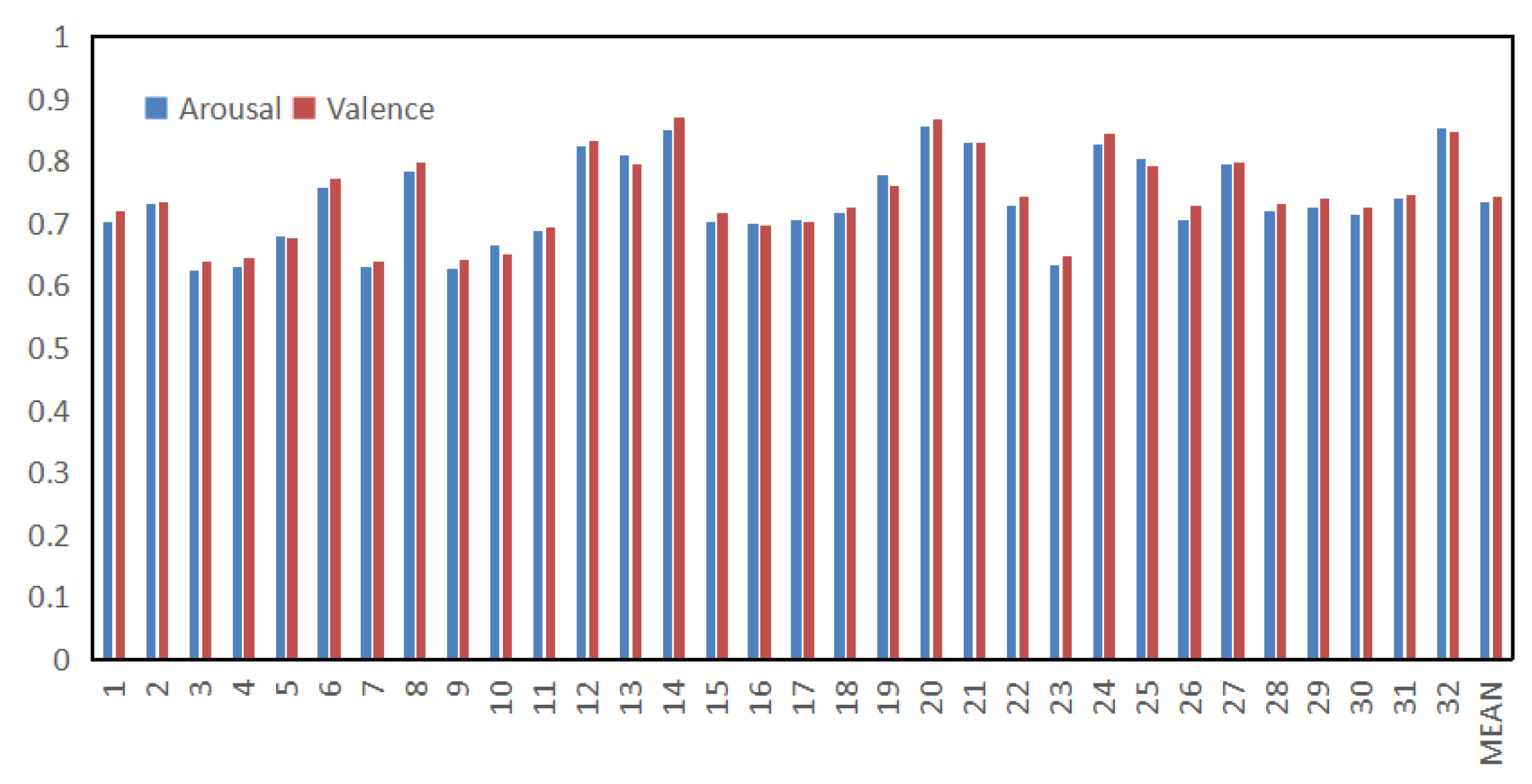

4.3. Cross-Subject Experiment

5. Discussion

5.1. Within-Subject Ablation Experiment and Model Comparison

5.2. Cross-Subject Ablation Experiment and Model Comparison

5.3. Model Limitations and Future Research

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Picard, R.W. Affective Computing; MIT Press: Cambridge, MA, USA, 2000.

- Zhao, S.; Jia, G.; Yang, J.; Ding, G.; Keutzer, K. Emotion recognition from multiple modalities: Fundamentals and methodologies. IEEE Signal Process. Mag. 2021, 38, 59–73.

- Pan, J.H.; He, Z.P.; Li, Z.N.; Yan, L.; Lina, Q. A review of multimodal emotion recognition. CAAI Trans. Intell. Syst. 2020, 15, 633–645.

- Verma, G.K.; Tiwary, U.S. Multimodal fusion framework: A multiresolution approach for emotion classification and recognition from physiological signals. NeuroImage 2014, 102, 162–172.

- Tuncer, T.; Dogan, S.; Subasi, A. LEDPatNet19: Automated emotion recognition model based on nonlinear LED pattern feature extraction function using EEG signals. Cogn. Neurodynamics 2022, 16, 779–790.

- Tuncer, T.; Dogan, S.; Baygin, M.; Acharya, U.R. Tetromino pattern based accurate EEG emotion classification model. Artif. Intell. Med. 2022, 123, 102210.

- Dogan, A.; Akay, M.; Barua, P.D.; Baygin, M.; Dogan, S.; Tuncer, T.; Dogru, A.H.; Acharya, U.R. PrimePatNet87: Prime pattern and tunable q-factor wavelet transform techniques for automated accurate EEG emotion recognition. Comput. Biol. Med. 2021, 138, 104867.

- Subasi, A.; Tuncer, T.; Dogan, S.; Tanko, D.; Sakoglu, U. EEG-based emotion recognition using tunable Q wavelet transform and rotation forest ensemble classifier. Biomed. Signal Process. Control 2021, 68, 102648.

- Yang, Y.; Wu, Q.; Fu, Y.; Chen, X. Continuous convolutional neural network with 3D input for EEG-based emotion recognition. In Proceedings of the International Conference on Neural Information Processing, Siem Reap, Cambodia, 13–16 December 2018; Springer: Cham, Switzerland, 2018; pp. 433–443.

- Chen, J.X.; Hao, W.; Zhang, P.W.; Min, C.D.; Li, Y.C. Sentiment classification of EEG spatiotemporal features based on hybrid neural network. J. Softw. 2021, 32, 3869–3883.

- Du, R.; Zhu, S.; Ni, H.; Mao, T.; Li, J.; Wei, R. Valence-arousal classification of emotion evoked by Chinese ancient-style music using 1D-CNN-BiLSTM model on EEG signals for college students. Multimed. Tools Appl. 2022, 1–18.

- Yin, Y.; Zheng, X.; Hu, B.; Zhang, Y.; Cui, X. EEG emotion recognition using fusion model of graph convolutional neural networks and LSTM. Appl. Soft Comput. 2021, 100, 106954.

- Dobrišek, S.; Gajšek, R.; Mihelič, F.; Pavešić, N.; Štruc, V. Towards efficient multi-modal emotion recognition. Int. J. Adv. Robot. Syst. 2013, 10, 53.

- Zhang, S.; Zhang, S.; Huang, T.; Gao, W.; Tian, Q. Learning affective features with a hybrid deep model for audio–visual emotion recognition. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 3030–3043.

- Nakisa, B.; Rastgoo, M.N.; Rakotonirainy, A.; Maire, F.; Chandran, V. Automatic emotion recognition using temporal multimodal deep learning. IEEE Access 2020, 8, 225463–225474.

- Tang, H.; Liu, W.; Zheng, W.L.; Lu, B.L. Multimodal emotion recognition using deep neural networks. In Proceedings of the International Conference on Neural Information Processing, Guangzhou, China, 14–18 November 2017; Springer: Cham, Switzerland, 2017; pp. 811–819.

- Huang, Y.; Yang, J.; Liu, S.; Pan, J. Combining facial expressions and electroencephalography to enhance emotion recognition. Future Internet 2019, 11, 105.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

- Graves, A.; Schmidhuber, J. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Netw. 2005, 18, 602–610.

- Long, M.; Cao, Y.; Wang, J.; Jordan, M. Learning transferable features with deep adaptation networks. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 7–9 July 2015; pp. 97–105.

- Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A. DEAP: A database for emotion analysis using physiological signals. IEEE Trans. Affect. Comput. 2011, 3, 18–31.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning Internal Representations by Error Propagation; ICS Report 8506; California Univ San Diego La Jolla Inst for Cognitive Science: San Diego, CA, USA, 1985.

- Suykens, J.A.K.; Vandewalle, J. Least squares support vector machine classifiers. Neural Process. Lett. 1999, 9, 293–300.

- Kwon, Y.H.; Shin, S.B.; Kim, S.D. Electroencephalography Based Fusion Two-Dimensional (2D)-Convolution Neural Networks (CNN) Model for Emotion Recognition System. Sensors 2018, 18, 1383.

- Lin, W.; Li, C.; Sun, S. Deep convolutional neural network for emotion recognition using EEG and peripheral physiological signal. In Proceedings of the International Conference on Image and Graphics, Shanghai, China, 13–15 September 2017; Springer: Cham, Switzerland, 2017; pp. 385–394.

- Qiu, J.L.; Liu, W.; Lu, B.L. Multi-view emotion recognition using deep canonical correlation analysis. In Proceedings of the International Conference on Neural Information Processing, Siem Reap, Cambodia, 13–16 December 2018; Springer: Cham, Switzerland, 2018; pp. 221–231.

- Song, T.; Zheng, W.; Song, P.; Cui, Z. EEG emotion recognition using dynamical graph convolutional neural networks. IEEE Trans. Affect. Comput. 2018, 11, 532–541.

- Chuang, S.W.; Ko, L.W.; Lin, Y.P.; Huang, R.; Jung, T.; Lin, C. Co-modulatory spectral changes in independent brain processes are correlated with task performance. NeuroImage 2012, 62, 1469–1477.

- Yang, F.; Zhao, X.; Jiang, W.; Gao, P.; Liu, G. Multi-method fusion of cross-subject emotion recognition based on high-dimensional EEG features. Front. Comput. Neurosci. 2019, 13, 53.

- Cimtay, Y.; Ekmekcioglu, E. Investigating the use of pretrained convolutional neural network on cross-subject and cross-dataset EEG emotion recognition. Sensors 2020, 20, 2034.

| Model | Valence | Arousal |
|---|---|---|
| MLP [23] | 74.31% | 76.23% |
| SVM [24] | 79.75% | 78.90% |
| KNN [25] | 90.39% | 89.06% |
| CNN [26] | 85.50% | 87.30% |
| LSTM [16] | 83.82% | 83.23% |
| DCCA [27] | 85.62% | 84.33% |
| GCN [19] | 89.17% | 90.33% |
| DGCNN [28] | 90.44% | 91.70% |
| Mul-AT-RGCN | 93.19% | 91.82% |

| Model | Average Accuracy |
|---|---|
| BT [29] | 71.00% |
| SVM [24] | 71.06% |
| ST-SBSSVM [30] | 72.00% |
| InceptionResNetV2 [31] | 72.81% |
| Mul-AT-RGCN | 73.80% |

| Modality | Valence | Arousal |
|---|---|---|
| EEG | 88.09% | 87.13% |
| EEG+PPS | 93.19% | 91.82% |

| Model | Valence | Arousal |
|---|---|---|
| RGCN | 87.17% | 86.42% |
| ATT-RGCN | 92.33% | 91.67% |
| AT-LGCN | 90.75% | 90.03% |
| Mul-AT-RGCN | 93.19% | 91.82% |

| Model | Modality | Valence | Arousal |
|---|---|---|---|
| Mul-AT-RGCN-DAN | EEG | 71.46% | 70.85% |
| Mul-AT-RGCN-noDAN | EEG+PPS | 60.17% | 59.45% |
| Mul-AT-RGCN-DAN | EEG+PPS | 74.13% | 73.47% |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, J.; Liu, Y.; Xue, W.; Hu, K.; Lin, W. Multimodal EEG Emotion Recognition Based on the Attention Recurrent Graph Convolutional Network. Information 2022, 13, 550. https://doi.org/10.3390/info13110550
