A Deep Learning Approach for Diabetic Foot Ulcer Classification and Recognition

Abstract

1. Introduction

The main objectives of this study are:

- To apply several end-to-end CNN-based deep learning architectures that transfer learnt knowledge, and to update and analyze visual features for infection and ischaemia classification on the DFU2020 dataset.

- To use fine-tuned weights to overcome the lack of data and reduce computational cost.

- To investigate whether affine transformation techniques for augmenting the input data affect the performance of fine-tuned transfer learning.

- To investigate and select the best CNN model for DFU classification.
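The affine augmentation named in the objectives can be illustrated with a minimal NumPy sketch. It assumes rotation, isotropic scaling, translation and horizontal flipping about the image centre, resampled by inverse mapping with nearest-neighbour interpolation and zero fill. The function names and the default ranges in `random_affine` (±15°, 0.9 to 1.1 scale, ±10% shift) are hypothetical; they are not the transforms or settings reported by the authors. A real training pipeline would normally use a library transform such as torchvision's `RandomAffine` instead.

```python
import numpy as np


def affine_matrix(angle_deg=0.0, scale=1.0, tx=0.0, ty=0.0, flip_h=False):
    """Forward 3x3 affine matrix on centred (x, y, 1) pixel coordinates.

    Applied in the order: optional horizontal flip, rotation + isotropic
    scaling, then translation by (tx, ty) pixels.
    """
    theta = np.deg2rad(angle_deg)
    c, s = np.cos(theta), np.sin(theta)
    f = -1.0 if flip_h else 1.0  # negating x mirrors the image left-right
    return np.array([
        [scale * c * f, -scale * s, tx],
        [scale * s * f, scale * c, ty],
        [0.0, 0.0, 1.0],
    ])


def warp_affine(image, matrix, fill=0):
    """Warp an H x W (or H x W x C) image by inverse mapping with nearest-neighbour sampling."""
    h, w = image.shape[:2]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    inv = np.linalg.inv(matrix)
    ys, xs = np.mgrid[0:h, 0:w]
    # Each output pixel as homogeneous coordinates relative to the image centre.
    dest = np.stack([xs.ravel() - cx, ys.ravel() - cy, np.ones(h * w)])
    # Map output pixels back into the source image and round to the nearest pixel.
    src = inv @ dest
    src_x = np.rint(src[0] + cx).astype(int)
    src_y = np.rint(src[1] + cy).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    # Pixels that map outside the source keep the constant fill value.
    out = np.full(image.shape, fill, dtype=image.dtype)
    out_flat = out.reshape(h * w, *image.shape[2:])  # view into `out`
    out_flat[valid] = image[src_y[valid], src_x[valid]]
    return out


def random_affine(image, rng, max_angle=15.0, scale_range=(0.9, 1.1), max_shift=0.1):
    """Apply one randomly drawn affine transform (hypothetical default ranges)."""
    h, w = image.shape[:2]
    m = affine_matrix(
        angle_deg=rng.uniform(-max_angle, max_angle),
        scale=rng.uniform(*scale_range),
        tx=rng.uniform(-max_shift, max_shift) * w,
        ty=rng.uniform(-max_shift, max_shift) * h,
        flip_h=bool(rng.integers(0, 2)),
    )
    return warp_affine(image, m)


rng = np.random.default_rng(0)
image = np.zeros((224, 224, 3), dtype=np.uint8)  # placeholder for a DFU image patch
augmented = random_affine(image, rng)
```

Because each call draws a new random transform, applying it once per image per epoch yields a differently transformed copy each time while the class label is left unchanged.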

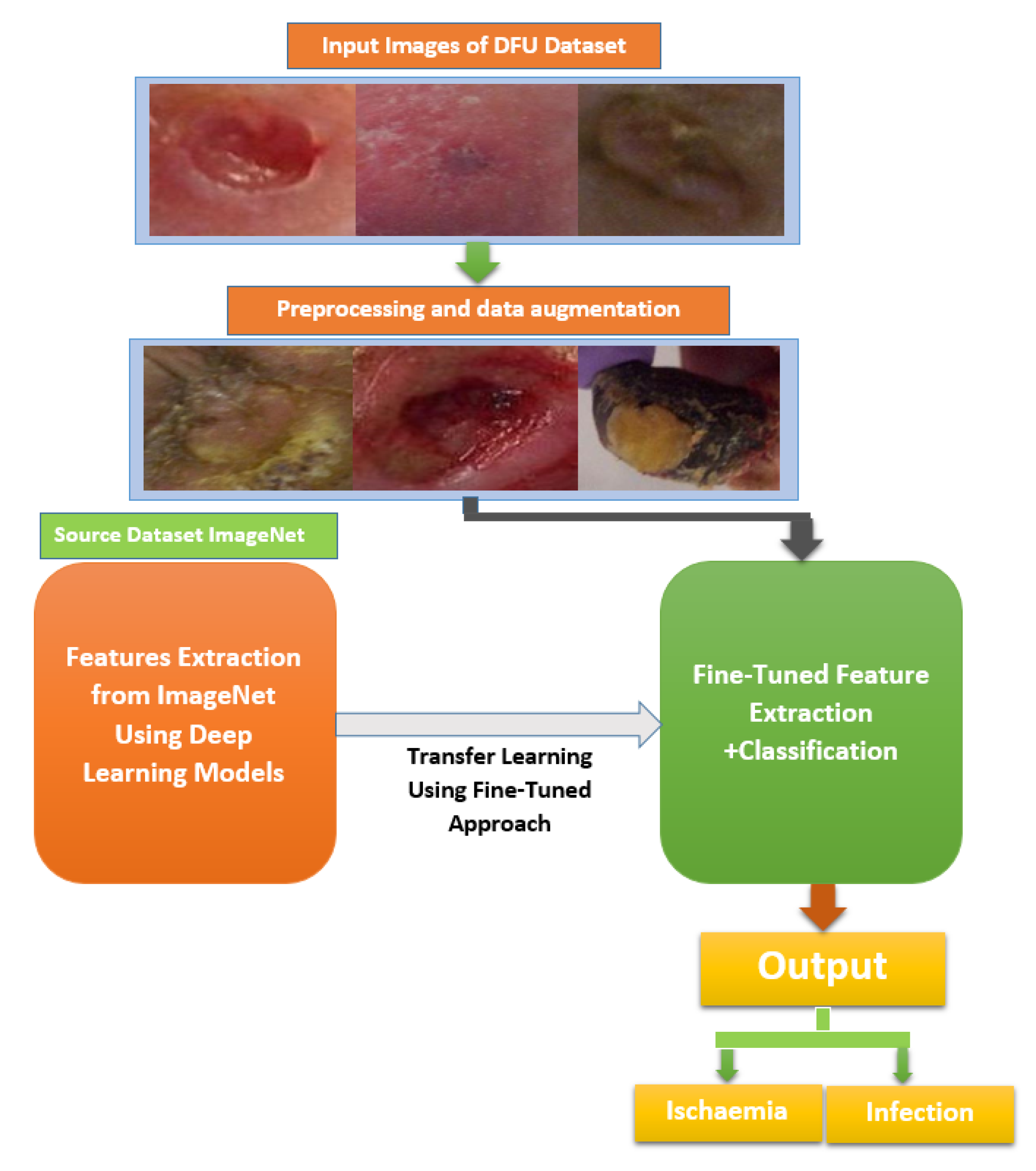

2. Materials and Methods

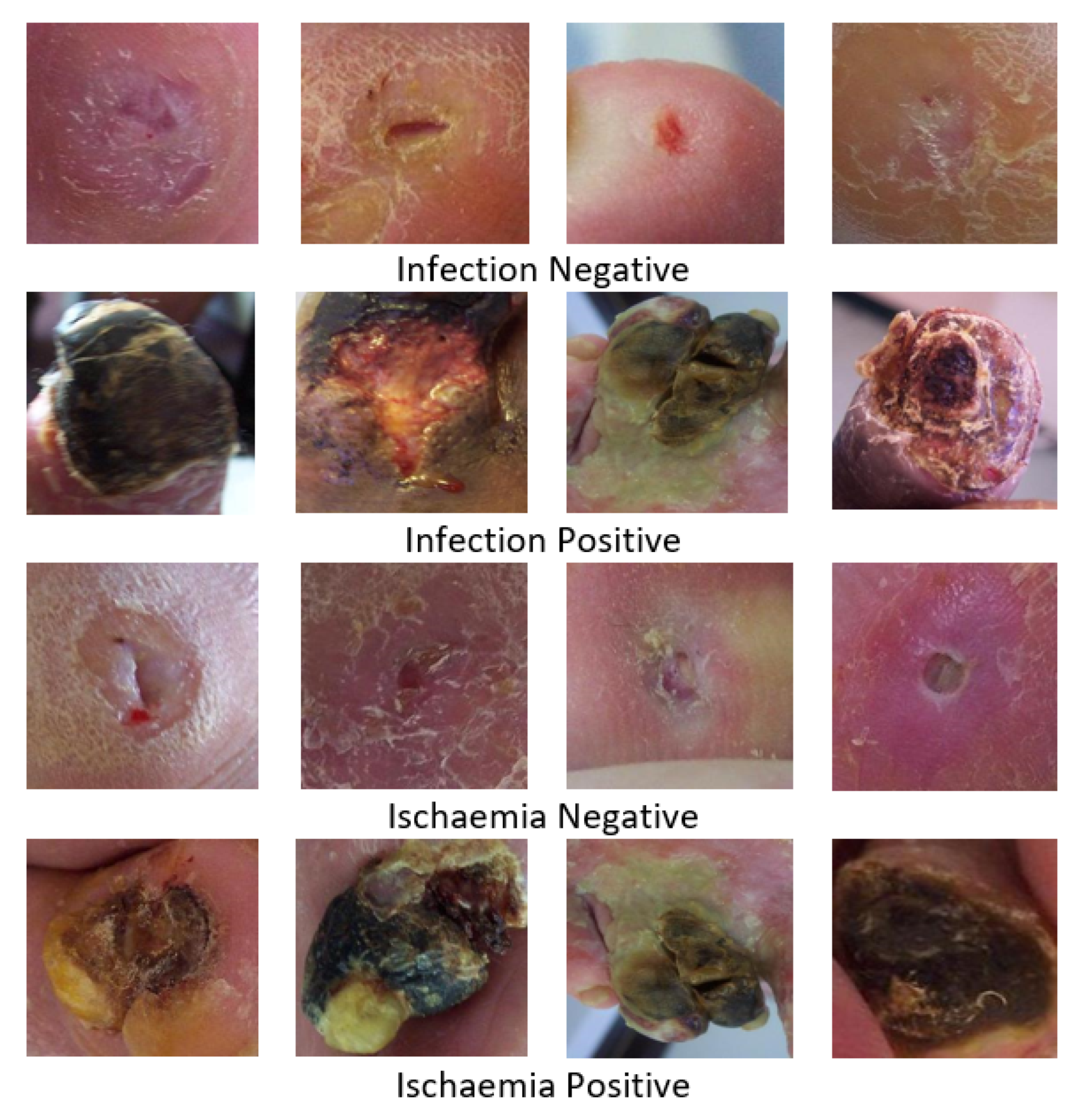

2.1. DFU Dataset and Preprocessing

2.2. Feature Learning and Classification

3. Experimental Results, Analysis, and Comparison

3.1. Results and Analysis

3.2. Comparison

4. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ML | Machine Learning |
| DFU | Diabetic Foot Ulcer |
| CNN | Convolutional Neural Network |
| MRI | Magnetic Resonance Imaging |
| CT | Computed Tomography |

References

- Crocker, R.M.; Palmer, K.N.; Marrero, D.G.; Tan, T.W. Patient perspectives on the physical, psycho-social, and financial impacts of diabetic foot ulceration and amputation. J. Diabetes Its Complicat. 2021, 35, 107960.

- Navarro-Flores, E.; Cauli, O. Quality of life in individuals with diabetic foot syndrome. Endocr. Metab. Immune-Disord.-Drug Targets (Former. Curr. Drug-Targets-Immune Endocr. Metab. Disord.) 2020, 20, 1365–1372.

- Bus, S.; Van Netten, S.; Lavery, L.; Monteiro-Soares, M.; Rasmussen, A.; Jubiz, Y.; Price, P. IWGDF guidance on the prevention of foot ulcers in at-risk patients with diabetes. Diabetes/Metab. Res. Rev. 2016, 32, 16–24.

- Saeedi, P.; Petersohn, I.; Salpea, P.; Malanda, B.; Karuranga, S.; Unwin, N.; Colagiuri, S.; Guariguata, L.; Motala, A.A.; Ogurtsova, K.; et al. Global and regional diabetes prevalence estimates for 2019 and projections for 2030 and 2045: Results from the International Diabetes Federation Diabetes Atlas. Diabetes Res. Clin. Pract. 2019, 157, 107843.

- Iraj, B.; Khorvash, F.; Ebneshahidi, A.; Askari, G. Prevention of diabetic foot ulcer. Int. J. Prev. Med. 2013, 4, 373.

- Cavanagh, P.; Attinger, C.; Abbas, Z.; Bal, A.; Rojas, N.; Xu, Z.R. Cost of treating diabetic foot ulcers in five different countries. Diabetes/Metab. Res. Rev. 2012, 28, 107–111.

- Reyzelman, A.M.; Koelewyn, K.; Murphy, M.; Shen, X.; Yu, E.; Pillai, R.; Fu, J.; Scholten, H.J.; Ma, R. Continuous temperature-monitoring socks for home use in patients with diabetes: Observational study. J. Med. Internet Res. 2018, 20, e12460.

- Sumpio, B.E. Foot ulcers. N. Engl. J. Med. 2000, 343, 787–793.

- Goyal, M.; Reeves, N.D.; Rajbhandari, S.; Ahmad, N.; Wang, C.; Yap, M.H. Recognition of ischaemia and infection in diabetic foot ulcers: Dataset and techniques. Comput. Biol. Med. 2020, 117, 103616.

- Yap, M.H.; Hachiuma, R.; Alavi, A.; Brüngel, R.; Cassidy, B.; Goyal, M.; Zhu, H.; Rückert, J.; Olshansky, M.; Huang, X.; et al. Deep learning in diabetic foot ulcers detection: A comprehensive evaluation. Comput. Biol. Med. 2021, 135, 104596.

- Alzubaidi, L.; Fadhel, M.A.; Oleiwi, S.R.; Al-Shamma, O.; Zhang, J. DFU_QUTNet: Diabetic foot ulcer classification using novel deep convolutional neural network. Multimed. Tools Appl. 2020, 79, 15655–15677.

- Santos, E.; Santos, F.; Dallyson, J.; Aires, K.; Tavares, J.M.R.; Veras, R. Diabetic Foot Ulcers Classification using a fine-tuned CNNs Ensemble. In Proceedings of the 2022 IEEE 35th International Symposium on Computer-Based Medical Systems (CBMS), Shenzhen, China, 21–23 July 2022; pp. 282–287.

- Xie, P.; Li, Y.; Deng, B.; Du, C.; Rui, S.; Deng, W.; Wang, M.; Boey, J.; Armstrong, D.G.; Ma, Y.; et al. An explainable machine learning model for predicting in-hospital amputation rate of patients with diabetic foot ulcer. Int. Wound J. 2022, 19, 910–918.

- Khandakar, A.; Chowdhury, M.E.; Reaz, M.B.I.; Ali, S.H.M.; Hasan, M.A.; Kiranyaz, S.; Rahman, T.; Alfkey, R.; Bakar, A.A.A.; Malik, R.A. A machine learning model for early detection of diabetic foot using thermogram images. Comput. Biol. Med. 2021, 137, 104838.

- Goyal, M.; Reeves, N.D.; Davison, A.K.; Rajbhandari, S.; Spragg, J.; Yap, M.H. DFUNet: Convolutional neural networks for diabetic foot ulcer classification. IEEE Trans. Emerg. Top. Comput. Intell. 2018, 4, 728–739.

- Ayaz, Z.; Naz, S.; Khan, N.H.; Razzak, I.; Imran, M. Automated methods for diagnosis of Parkinson’s disease and predicting severity level. Neural Comput. Appl. 2022, 1–36.

- Naseer, A.; Rani, M.; Naz, S.; Razzak, M.I.; Imran, M.; Xu, G. Refining Parkinson’s neurological disorder identification through deep transfer learning. Neural Comput. Appl. 2020, 32, 839–854.

- Ashraf, A.; Naz, S.; Shirazi, S.H.; Razzak, I.; Parsad, M. Deep transfer learning for Alzheimer neurological disorder detection. Multimed. Tools Appl. 2021, 80, 30117–30142.

- Naz, S.; Ashraf, A.; Zaib, A. Transfer learning using freeze features for Alzheimer neurological disorder detection using ADNI dataset. Multimed. Syst. 2022, 28, 85–94.

- Kamran, I.; Naz, S.; Razzak, I.; Imran, M. Handwritten Dynamics Assessment for Early Identification of Parkinson’s Patient. Future Generation 2020, 117, 234–244.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Pham, T.C.; Luong, C.M.; Hoang, V.D.; Doucet, A. AI outperformed every dermatologist in dermoscopic melanoma diagnosis, using an optimized deep-CNN architecture with custom mini-batch logic and loss function. Sci. Rep. 2021, 11, 17485.

- Al-Garaawi, N.; Ebsim, R.; Alharan, A.F.; Yap, M.H. Diabetic foot ulcer classification using mapped binary patterns and convolutional neural networks. Comput. Biol. Med. 2022, 140, 105055.

| Model | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) | F-Score (%) | AUC (%) | MCC (%) |
|---|---|---|---|---|---|---|---|
| DFU Ischaemia | | | | | | | |
| AlexNet | 83.56 | 84.41 | 82.71 | 83.00 | 83.70 | 91.42 | 67.14 |
| VGG16 | 98.58 | 98.07 | 99.09 | 99.08 | 98.57 | 99.87 | 97.17 |
| VGG19 | 98.48 | 98.28 | 98.68 | 98.68 | 98.48 | 99.87 | 96.96 |
| GoogleNet | 99.65 | 99.80 | 99.49 | 99.49 | 99.65 | 99.59 | 99.29 |
| ResNet50 | 99.49 | 99.59 | 99.39 | 99.39 | 99.49 | 99.96 | 98.99 |
| ResNet101 | 99.39 | 99.39 | 99.80 | 99.80 | 99.39 | 99.59 | 99.19 |
| MobileNet | 99.08 | 99.70 | 99.90 | 99.90 | 99.40 | 99.91 | 99.59 |
| SqueezeNet | 99.04 | 99.09 | 99.90 | 99.90 | 99.44 | 99.92 | 99.09 |
| DenseNet | 99.30 | 99.49 | 99.80 | 99.80 | 99.34 | 99.93 | 99.29 |
| DFU Infection | | | | | | | |
| AlexNet | 83.22 | 91.19 | 75.25 | 78.65 | 84.46 | 90.43 | 67.30 |
| VGG16 | 79.32 | 76.61 | 82.03 | 81.00 | 78.75 | 86.87 | 58.73 |
| VGG19 | 80.17 | 76.61 | 83.73 | 82.48 | 79.44 | 87.05 | 60.49 |
| GoogleNet | 79.66 | 92.54 | 66.78 | 73.58 | 81.98 | 91.09 | 61.39 |
| ResNet50 | 84.76 | 89.80 | 85.71 | 83.27 | 85.00 | 94.16 | 75.57 |
| ResNet101 | 84.12 | 92.20 | 82.03 | 83.69 | 84.74 | 93.05 | 74.62 |
| MobileNet | 82.48 | 85.07 | 77.89 | 79.75 | 83.25 | 90.30 | 65.24 |
| SqueezeNet | 82.88 | 72.20 | 93.56 | 91.81 | 80.83 | 93.34 | 67.32 |
| DenseNet | 83.20 | 89.80 | 80.61 | 81.24 | 83.85 | 92.13 | 70.71 |
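The metrics in the table above are standard binary-classification measures, but their definitions are not part of the extracted text. A minimal sketch, assuming the usual confusion-matrix definitions (sensitivity as recall, F-score as F1, MCC as the Matthews correlation coefficient) and a rank-based ROC AUC; the function names and the counts in the example are illustrative only, not the paper's data. The table reports each value as a percentage, i.e. the fractions returned here multiplied by 100.

```python
import math


def binary_metrics(tp, fp, tn, fn):
    """Confusion-matrix metrics as fractions in [0, 1] (MCC in [-1, 1])."""
    total = tp + fp + tn + fn
    sensitivity = tp / (tp + fn) if tp + fn else 0.0  # recall, true-positive rate
    specificity = tn / (tn + fp) if tn + fp else 0.0  # true-negative rate
    precision = tp / (tp + fp) if tp + fp else 0.0
    # F-score (F1): harmonic mean of precision and sensitivity.
    f_score = (2 * precision * sensitivity / (precision + sensitivity)
               if precision + sensitivity else 0.0)
    # Matthews correlation coefficient; set to 0 when any marginal count is zero.
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f_score": f_score,
        "mcc": mcc,
    }


def roc_auc(labels, scores):
    """ROC AUC: probability that a random positive outscores a random negative (ties count 1/2)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Illustrative counts only (not taken from the paper).
metrics = binary_metrics(tp=40, fp=10, tn=40, fn=10)
print({name: round(100 * value, 2) for name, value in metrics.items()})
# {'accuracy': 80.0, 'sensitivity': 80.0, 'specificity': 80.0, 'precision': 80.0, 'f_score': 80.0, 'mcc': 60.0}
print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]))  # 0.75
```

Since accuracy is a prevalence-weighted average of sensitivity and specificity, it always lies between the two, which gives a quick sanity check when transcribing such tables. For larger test sets, scikit-learn's `roc_auc_score` and `matthews_corrcoef` compute the same quantities without the quadratic pairwise loop used here.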

| Study | Model | Class | Accuracy (%) | F1-Score (%) | MCC (%) | AUC (%) |
|---|---|---|---|---|---|---|
| Goyal et al. [9] | Ensemble of CNNs | Ischaemia | 90.3 | 90.2 | 80.7 | 90.4 |
| | | Infection | 72.7 | 72.2 | 45.4 | 73.1 |
| Al-Garaawi et al. [28] | CNNs | Ischaemia | 99 | 99 | – | 99.5 |
| | | Infection | 74.4 | 74.4 | – | 82.0 |
| Proposed system | ResNet50 | Ischaemia | 99.49 | 99.49 | 99.80 | 99.99 |
| | | Infection | 84.76 | 85.00 | 75.57 | 94.16 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ahsan, M.; Naz, S.; Ahmad, R.; Ehsan, H.; Sikandar, A. A Deep Learning Approach for Diabetic Foot Ulcer Classification and Recognition. Information 2023, 14, 36. https://doi.org/10.3390/info14010036

Ahsan M, Naz S, Ahmad R, Ehsan H, Sikandar A. A Deep Learning Approach for Diabetic Foot Ulcer Classification and Recognition. Information. 2023; 14(1):36. https://doi.org/10.3390/info14010036

Chicago/Turabian Style: Ahsan, Mehnoor, Saeeda Naz, Riaz Ahmad, Haleema Ehsan, and Aisha Sikandar. 2023. "A Deep Learning Approach for Diabetic Foot Ulcer Classification and Recognition" Information 14, no. 1: 36. https://doi.org/10.3390/info14010036

APA Style: Ahsan, M., Naz, S., Ahmad, R., Ehsan, H., & Sikandar, A. (2023). A Deep Learning Approach for Diabetic Foot Ulcer Classification and Recognition. Information, 14(1), 36. https://doi.org/10.3390/info14010036