Transparency Assessment on Level 2 Automated Vehicle HMIs

Abstract

1. Introduction

Transparency

- A standardized and robust transparency assessment method is proposed.

- The proposed method is verified using commercially available HMI designs.

- Information critical to the functional transparency of HMI designs is identified using the proposed method.

- Q1: How sensitive is the proposed transparency assessment method when evaluating different HMI designs and ADS experiences?
  - H1a: There is a significant difference in functional transparency among different HMI designs.
  - H1b: There is a significant difference in functional transparency among participants with different ADS experiences.

- Q2: How does the proposed functional transparency relate to self-reported transparency?
  - H2: The higher the functional transparency, the higher the self-reported transparency.

- Q3: How is the information used by participants with different levels of functional transparency?
  - H3: Participants with different levels of functional transparency use different information sources when estimating system states.

2. Materials and Methods

2.1. Definition of Transparency

2.2. Study Design

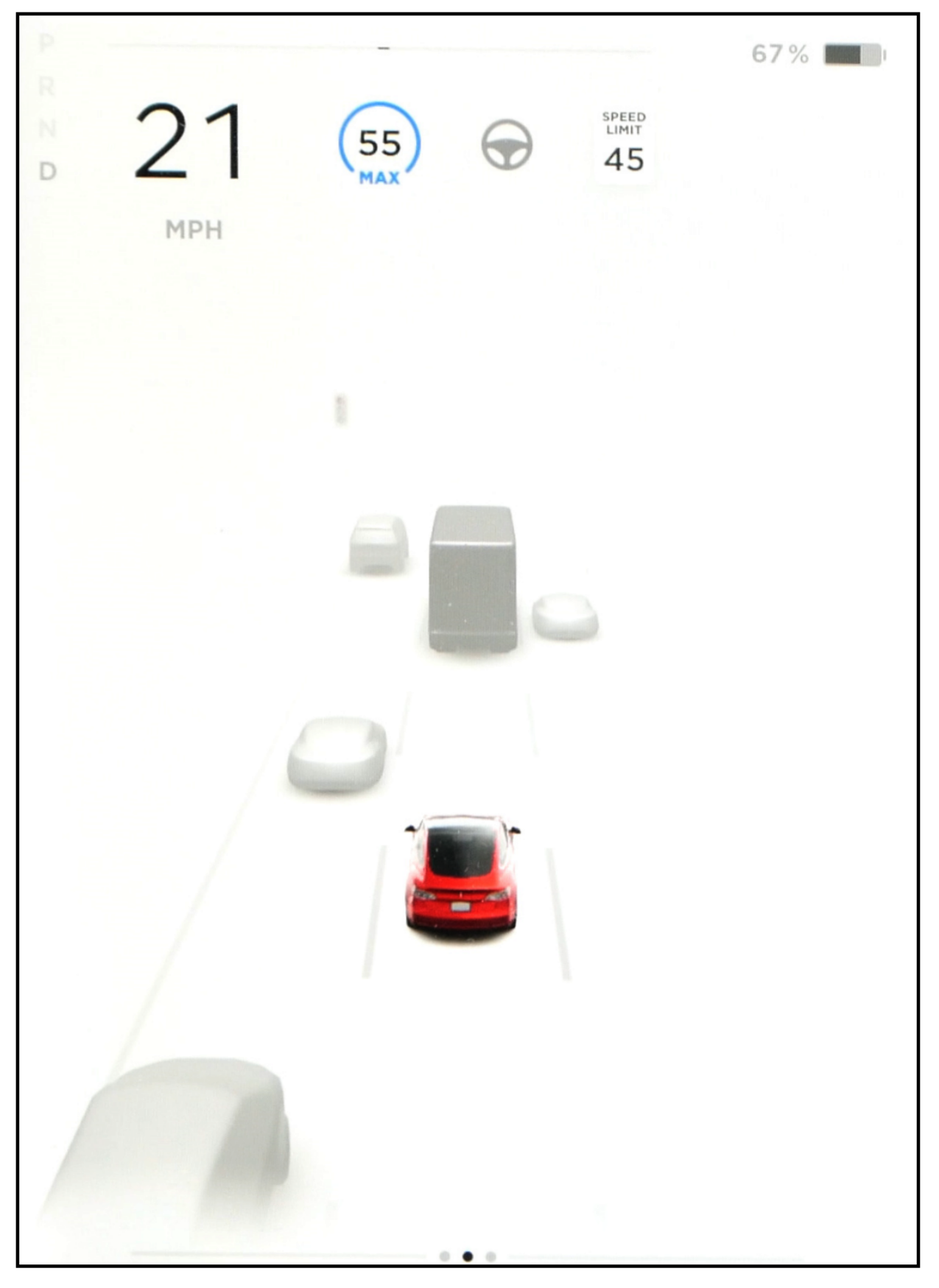

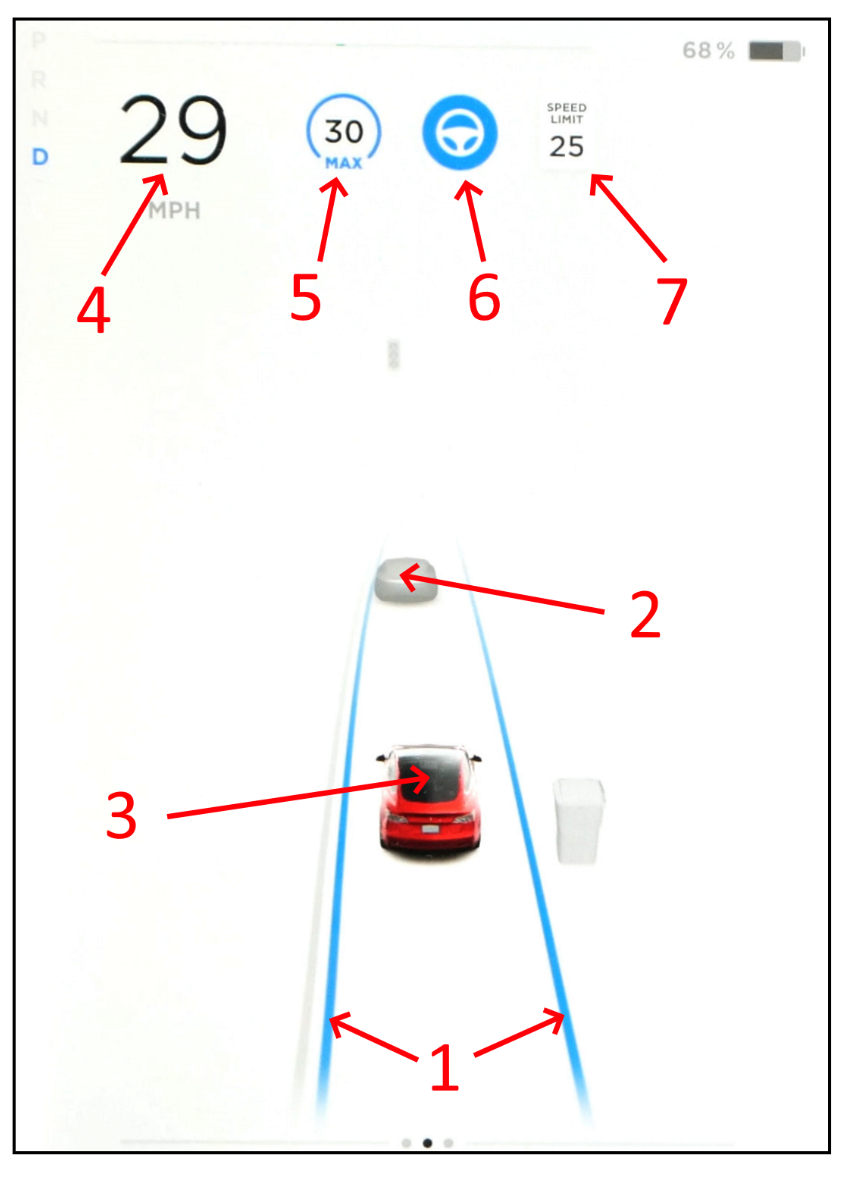

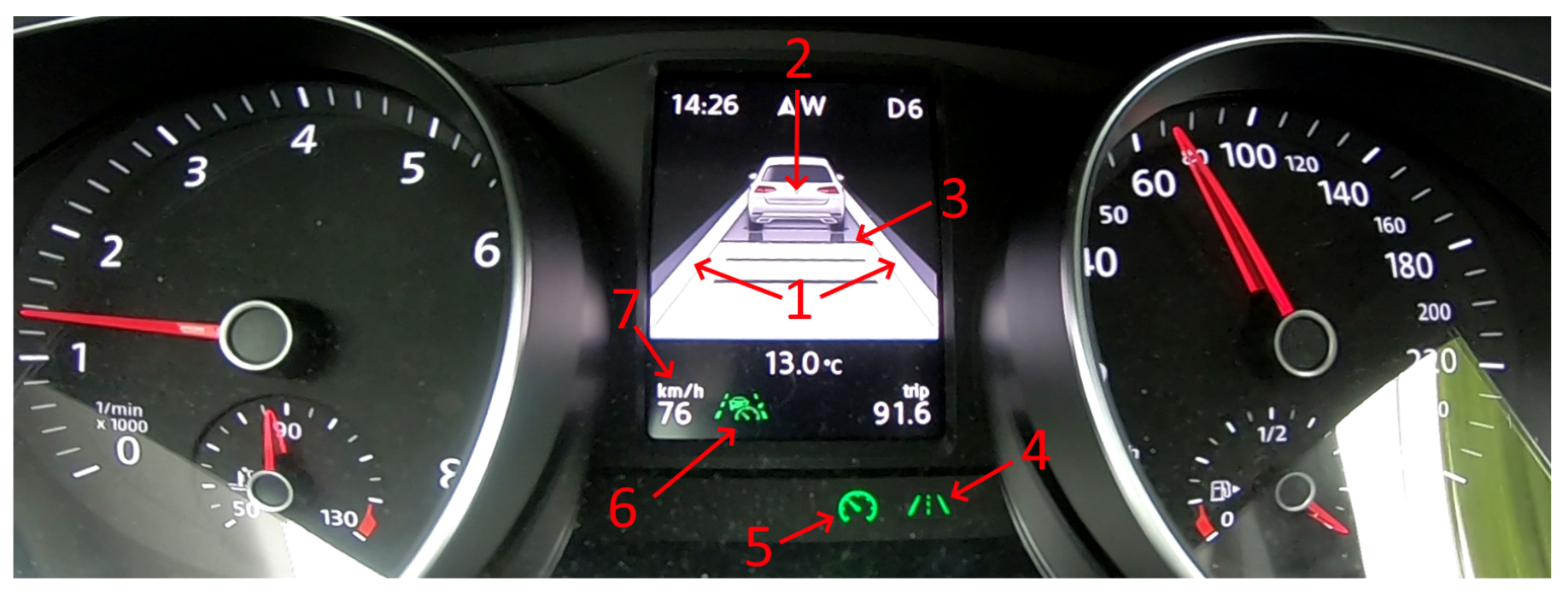

2.2.1. HMI Designs

2.2.2. Transparency Assessment Test

- Is the driving assistance system carrying out longitudinal control?

- Is the driving assistance system carrying out lateral control?

- Is the front vehicle detected by the driving assistance system?

- Is the lane marking detected by the driving assistance system?

- Can you activate the automated driving assistance (which performs both longitudinal and lateral control automatically)?
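
The five yes/no questions above can be treated as a small fixed questionnaire that is compared against the true system state of a scenario. The sketch below is only an illustration of that idea and is not the paper's functional transparency metric: the short question keys, the `fraction_correct` helper, and the example ground truth and answers are all hypothetical.

```python
# Hypothetical short keys for the five yes/no questions listed above.
QUESTIONS = (
    "longitudinal_control",
    "lateral_control",
    "front_vehicle_detected",
    "lane_marking_detected",
    "can_activate_l2",
)


def fraction_correct(answers: dict, truth: dict) -> float:
    """Share of the five questions answered in agreement with the true state."""
    missing = [q for q in QUESTIONS if q not in answers or q not in truth]
    if missing:
        raise ValueError(f"missing questions: {missing}")
    correct = sum(answers[q] == truth[q] for q in QUESTIONS)
    return correct / len(QUESTIONS)


# Assumed ground truth for an illustrative "only ACC activated" situation.
truth = {
    "longitudinal_control": True,
    "lateral_control": False,
    "front_vehicle_detected": True,
    "lane_marking_detected": True,
    "can_activate_l2": True,
}

# Invented participant answers: lateral control is wrongly believed active.
answers = dict(truth, lateral_control=True)

score = fraction_correct(answers, truth)
print(score)
```

A proportion like this only captures answer correctness. Any time-based component of the assessment would have to be recorded and combined separately.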

2.2.3. Self-Reported Transparency Test

2.2.4. Information Used Test

2.2.5. Procedure

2.3. Analysis

2.3.1. Transparency Assessment Test

2.3.2. Self-Reported Transparency Test

2.3.3. Information Used Test

3. Results

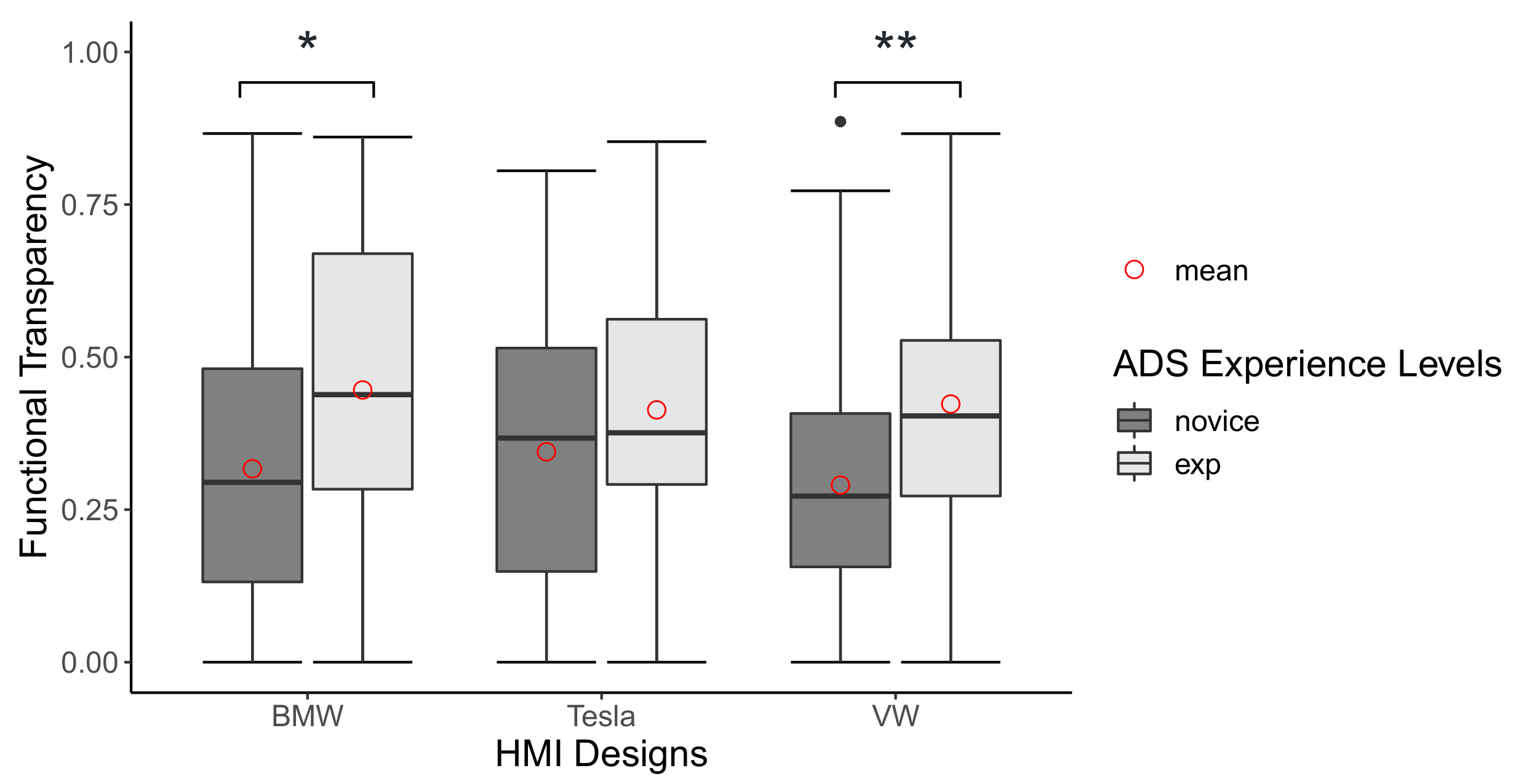

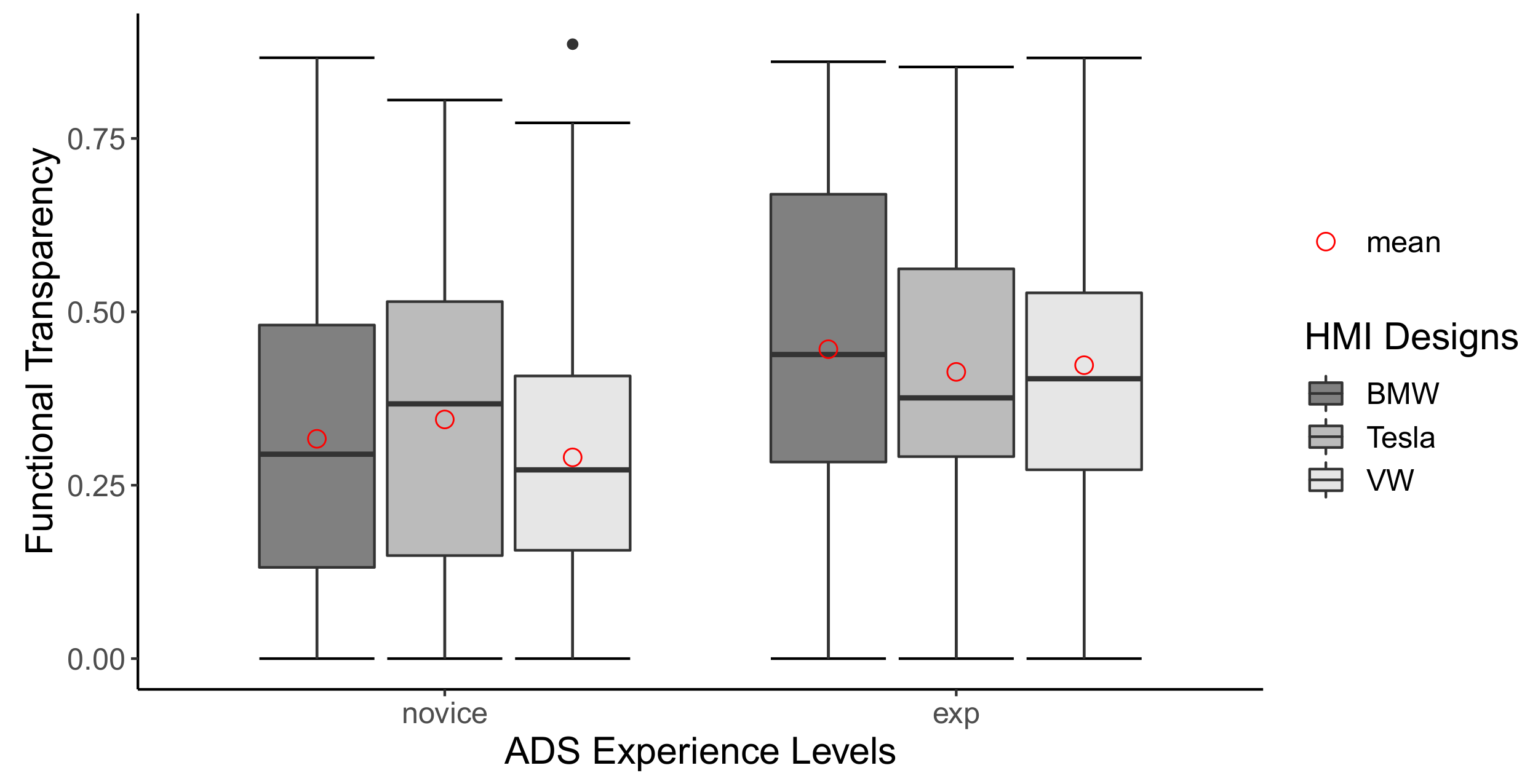

3.1. Transparency Assessment Test

3.2. Self-Reported Transparency Test

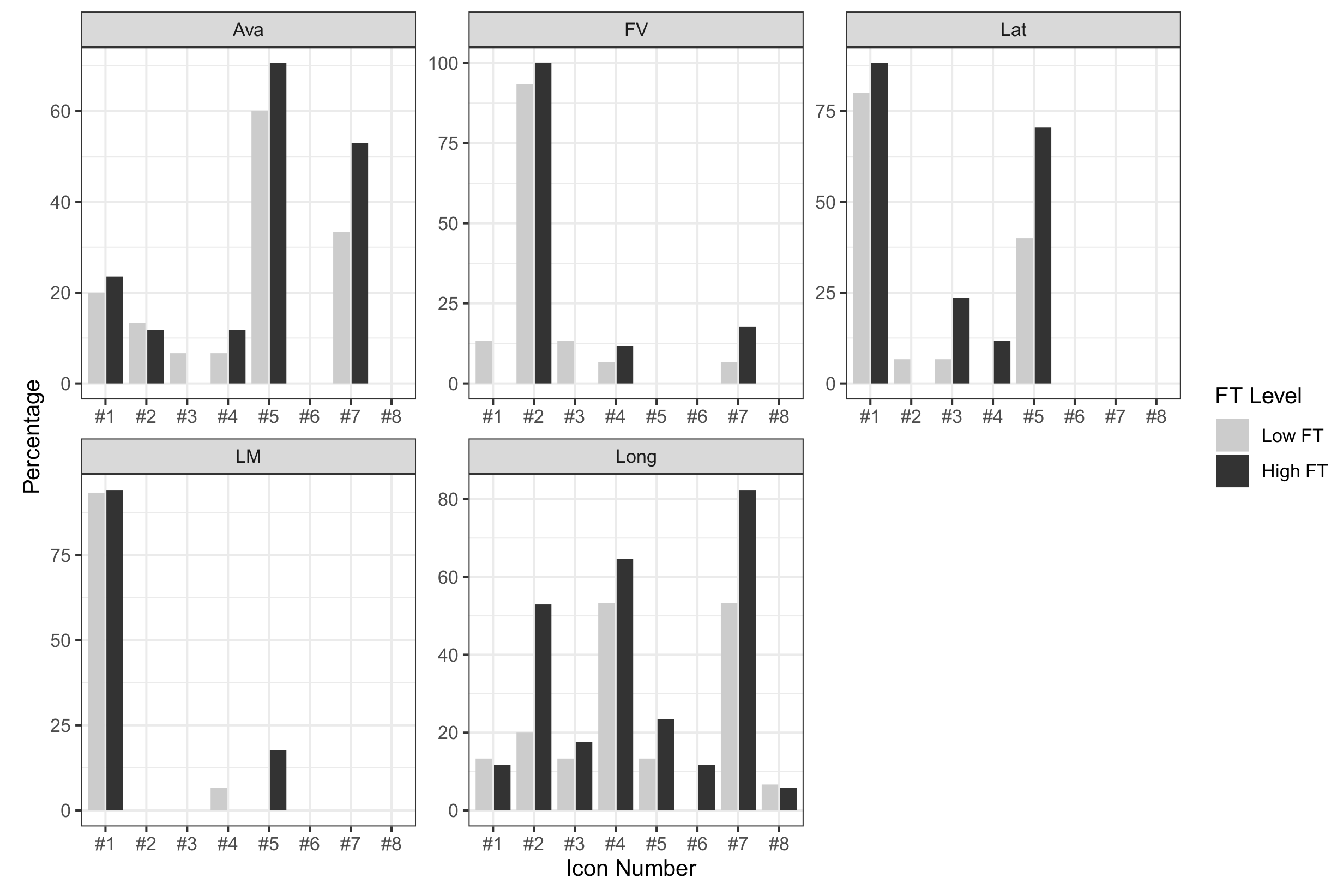

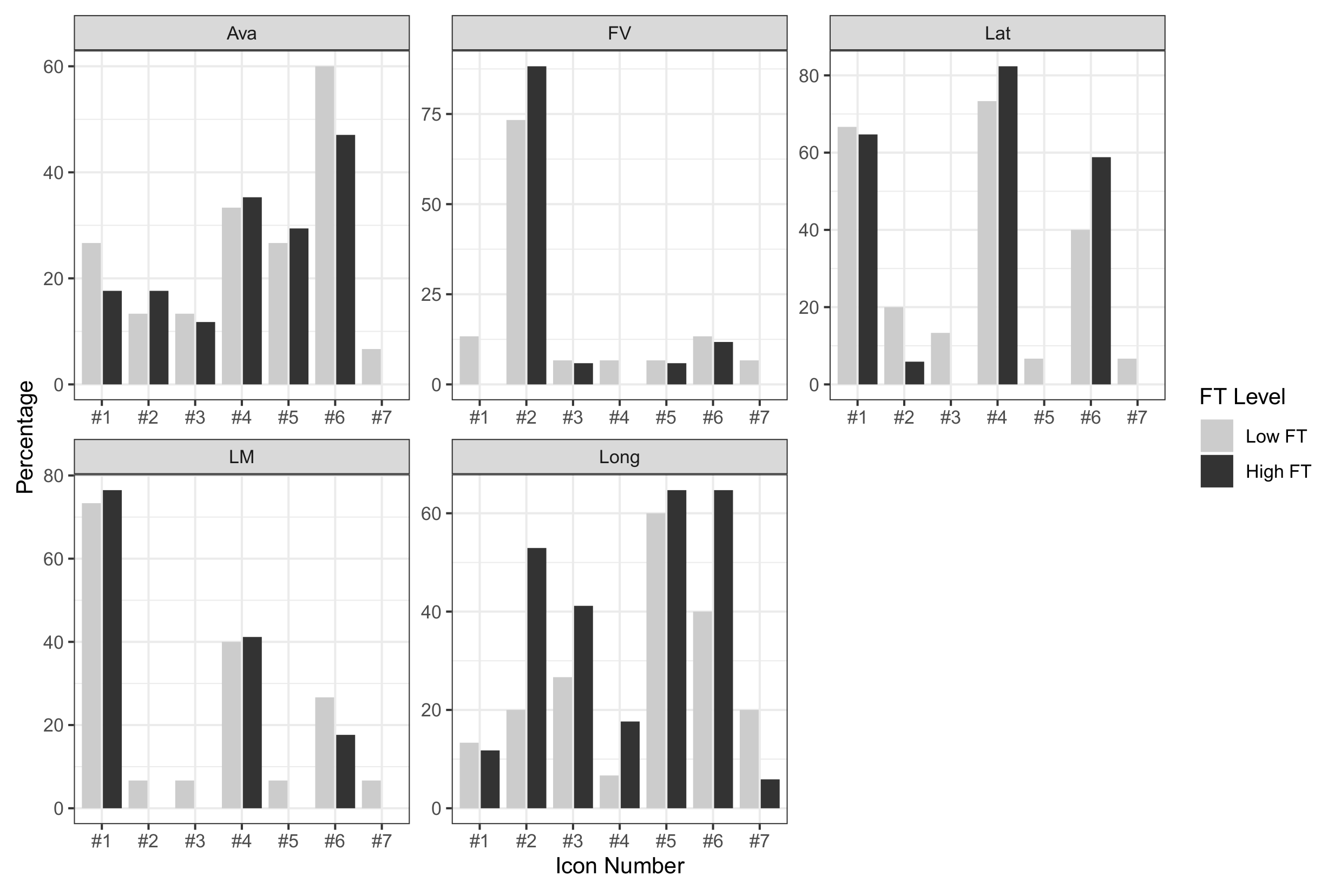

3.3. Information Used Test

4. Discussion

4.1. Summary

4.2. Limitations and Future Works

4.3. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACC | adaptive cruise control |
| ADS | automated driving system |
| AU | actual understandability |
| BMW | Bayerische Motoren Werke AG |
| FT | functional transparency |
| HMI | human-machine interface |
| L2 AV | SAE level 2 automated vehicle |
| LKA | lane-keeping assistance |
| SAT | situation awareness-based agent transparency |
| TRASS | transparency assessment test |
| TNPU | time needed for perceived understandability |
| VW | Volkswagen AG |


| Scenarios | Is ACC Available? | Is LKA Available? * | Is Front/Side Vehicle Visible? | Is There Warning Signal? |
|---|---|---|---|---|
| Nothing activated | Yes | No | No | (None) |
| Nothing activated | Yes | No | Yes | (None) |
| Nothing activated | Yes | Yes | No | (None) |
| Nothing activated | Yes | Yes | Yes | (None) |
| Only ACC activated | (activated) | No | No | (None) |
| Only ACC activated | (activated) | No | Yes | (None) |
| Only ACC activated | (activated) | Yes | No | (None) |
| Only ACC activated | (activated) | Yes | Yes | (None) |
| ACC and LKA activated (Level 2) | (activated) | (activated) | No | No |
| ACC and LKA activated (Level 2) | (activated) | (activated) | Yes | No |
| ACC and LKA activated (Level 2) | (activated) | (activated) | (None) | Yes |
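
The scenario table above has a partly factorial structure: in the first two system states, LKA availability and vehicle visibility are fully crossed, while the Level 2 state lists three explicit rows. A minimal sketch of that structure follows, assuming that rows without a scenario label inherit the label of the row above them. The function name `build_scenarios` and the tuple layout are illustrative choices.

```python
from itertools import product

YES_NO = ("No", "Yes")


def build_scenarios():
    """Return rows as (scenario, acc, lka, vehicle_visible, warning)."""
    rows = []
    # States where LKA availability and vehicle visibility are crossed (2 x 2).
    for scenario, acc in (
        ("Nothing activated", "Yes"),
        ("Only ACC activated", "(activated)"),
    ):
        for lka, vehicle in product(YES_NO, YES_NO):
            rows.append((scenario, acc, lka, vehicle, "(None)"))
    # Level 2 rows are listed explicitly because they are not fully crossed.
    level2 = "ACC and LKA activated (Level 2)"
    rows.append((level2, "(activated)", "(activated)", "No", "No"))
    rows.append((level2, "(activated)", "(activated)", "Yes", "No"))
    rows.append((level2, "(activated)", "(activated)", "(None)", "Yes"))
    return rows


scenarios = build_scenarios()
for row in scenarios:
    print(" | ".join(row))
```

Generating the rows this way makes the total of 4 + 4 + 3 = 11 scenarios easy to verify against the table.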

| HMI Design | ADS Experience Level | Functional Transparency Mean (SD) | Self-Reported Transparency Mean (SD) | N |
|---|---|---|---|---|
| BMW | experienced | 0.45 (0.26) | 0.67 (0.23) | 52 |
| BMW | novice | 0.32 (0.24) | 0.71 (0.21) | 54 |
| Tesla | experienced | 0.41 (0.24) | 0.56 (0.24) | 51 |
| Tesla | novice | 0.34 (0.24) | 0.59 (0.23) | 60 |
| VW | experienced | 0.42 (0.21) | 0.62 (0.20) | 59 |
| VW | novice | 0.29 (0.21) | 0.58 (0.19) | 54 |
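
As a purely descriptive reading of the table above, the difference between experienced and novice group means can be computed per HMI design for both measures. The sketch below uses only the reported means. The `MEANS` dictionary layout and the `experience_gap` helper are illustrative, and the differences carry no inferential weight on their own.

```python
# (HMI design, experience level) -> (functional transparency mean,
#                                    self-reported transparency mean),
# copied from the descriptive table above.
MEANS = {
    ("BMW", "experienced"): (0.45, 0.67),
    ("BMW", "novice"): (0.32, 0.71),
    ("Tesla", "experienced"): (0.41, 0.56),
    ("Tesla", "novice"): (0.34, 0.59),
    ("VW", "experienced"): (0.42, 0.62),
    ("VW", "novice"): (0.29, 0.58),
}

FUNCTIONAL, SELF_REPORTED = 0, 1


def experience_gap(hmi: str, measure: int) -> float:
    """Experienced minus novice group mean, rounded to two decimals."""
    experienced = MEANS[(hmi, "experienced")][measure]
    novice = MEANS[(hmi, "novice")][measure]
    return round(experienced - novice, 2)


for hmi in ("BMW", "Tesla", "VW"):
    print(hmi, experience_gap(hmi, FUNCTIONAL), experience_gap(hmi, SELF_REPORTED))
```

With these values the functional transparency gap is positive for all three designs (0.13, 0.07, 0.13), whereas the self-reported gap changes sign across designs (−0.04, −0.03, 0.04).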

| Parameter | Estimate | Std. Error | df | t Value | Sig. | 95% Conf. Int. Lower Bound | 95% Conf. Int. Upper Bound |
|---|---|---|---|---|---|---|---|
| Intercept | 0.45 | 0.040 | 65.11 | 11.24 | <0.0005 | 0.37 | 0.52 |
| ADS experience: exp. | 0 | 0 | . | . | . | . | . |
| ADS experience: novice | −0.12 | 0.048 | 122.01 | −2.54 | 0.012 | −0.22 | −0.027 |
| HMI design: BMW | 0 | 0 | . | . | . | . | . |
| HMI design: Tesla | −0.035 | 0.043 | 309.08 | −0.80 | 0.43 | −0.12 | 0.051 |
| HMI design: VW | −0.018 | 0.041 | 302.56 | −0.44 | 0.66 | −0.10 | 0.063 |
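
The rows with an estimate of 0 mark the reference levels (experienced participants, BMW design), so the intercept is the model-based mean for that cell and each other coefficient is an offset from it. The sketch below works through this arithmetic with the printed, rounded values. It assumes reference coding as shown in the table, and it refuses to predict cells that would need an interaction coefficient, since none is listed. The names `COEF`, `SE`, `t_ratio`, and `predicted_mean` are illustrative.

```python
# Fixed-effect estimates and standard errors as printed in the table above.
COEF = {"intercept": 0.45, "novice": -0.12, "Tesla": -0.035, "VW": -0.018}
SE = {"intercept": 0.040, "novice": 0.048, "Tesla": 0.043, "VW": 0.041}


def t_ratio(term: str) -> float:
    """Estimate divided by its standard error."""
    return COEF[term] / SE[term]


def predicted_mean(hmi: str, experience: str) -> float:
    """Model-based mean for cells that share at least one reference level."""
    if hmi != "BMW" and experience != "experienced":
        # Both factors are off their reference level: an interaction
        # coefficient would be required, and the table does not list one.
        raise ValueError("cell needs an interaction term not shown in the table")
    value = COEF["intercept"]
    if experience == "novice":
        value += COEF["novice"]
    if hmi in ("Tesla", "VW"):
        value += COEF[hmi]
    return value


for cell in (("BMW", "experienced"), ("BMW", "novice"),
             ("Tesla", "experienced"), ("VW", "experienced")):
    print(cell, round(predicted_mean(*cell), 3))

for term in COEF:
    print(term, round(t_ratio(term), 2))
```

The recomputed ratios (for example −0.12 / 0.048 = −2.5 against the reported −2.54) differ slightly from the table because the printed estimates and standard errors are already rounded.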

| Given Variable | Comparison | Estimate | SE | DOF | t-Value | p-Value |
|---|---|---|---|---|---|---|
| HMI design: BMW | exp - novice | 0.12 | 0.049 | 127 | 2.50 | 0.014 ** |
| HMI design: Tesla | exp - novice | 0.06 | 0.048 | 121 | 1.26 | 0.21 |
| HMI design: VW | exp - novice | 0.14 | 0.047 | 118 | 3.06 | 0.003 *** |
| ADS experience level: exp | BMW - Tesla | 0.035 | 0.044 | 313 | 0.79 | 0.71 |
| ADS experience level: exp | BMW - VW | 0.018 | 0.042 | 307 | 0.43 | 0.90 |
| ADS experience level: exp | Tesla - VW | −0.017 | 0.042 | 305 | −0.39 | 0.92 |
| ADS experience level: novice | BMW - Tesla | −0.027 | 0.042 | 318 | −0.66 | 0.79 |
| ADS experience level: novice | BMW - VW | 0.040 | 0.043 | 320 | 0.94 | 0.62 |
| ADS experience level: novice | Tesla - VW | 0.068 | 0.041 | 305 | 1.65 | 0.23 |

| Question | HMI Designs | Valid Icons | False Icons |
|---|---|---|---|
| Ava | BMW | Unknown | Unknown |
| Ava | Tesla | #6 | #1, #2, #3, #4, #5, #7 |
| Ava | VW | Unknown | Unknown |
| FV | BMW | #2, #7 | #1, #3, #4, #5, #6, #8 |
| FV | Tesla | #2 | #1, #3, #4, #5, #6, #7 |
| FV | VW | #2 | #1, #3, #4, #5, #6, #7 |
| Lat | BMW | #1, #5 | #2, #3, #4, #6, #7, #8 |
| Lat | Tesla | #1, #6 | #2, #3, #4, #5, #7 |
| Lat | VW | #1, #6 | #2, #3, #4, #5, #7 |
| LM | BMW | #1 | #2, #3, #4, #5, #6, #7, #8 |
| LM | Tesla | #1 | #2, #3, #4, #5, #6, #7 |
| LM | VW | #1, #4, #6 | #2, #3, #5, #7 |
| Long | BMW | #2, #4, #7 | #1, #3, #5, #6, #8 |
| Long | Tesla | #5 | #1, #2, #3, #4, #6, #7 |
| Long | VW | #2, #3, #5, #6 | #1, #4, #7 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, Y.-C.; Figalová, N.; Bengler, K. Transparency Assessment on Level 2 Automated Vehicle HMIs. Information 2022, 13, 489. https://doi.org/10.3390/info13100489