FIRE: A Finely Integrated Risk Evaluation Methodology for Life-Critical Embedded Systems

Abstract

1. Introduction

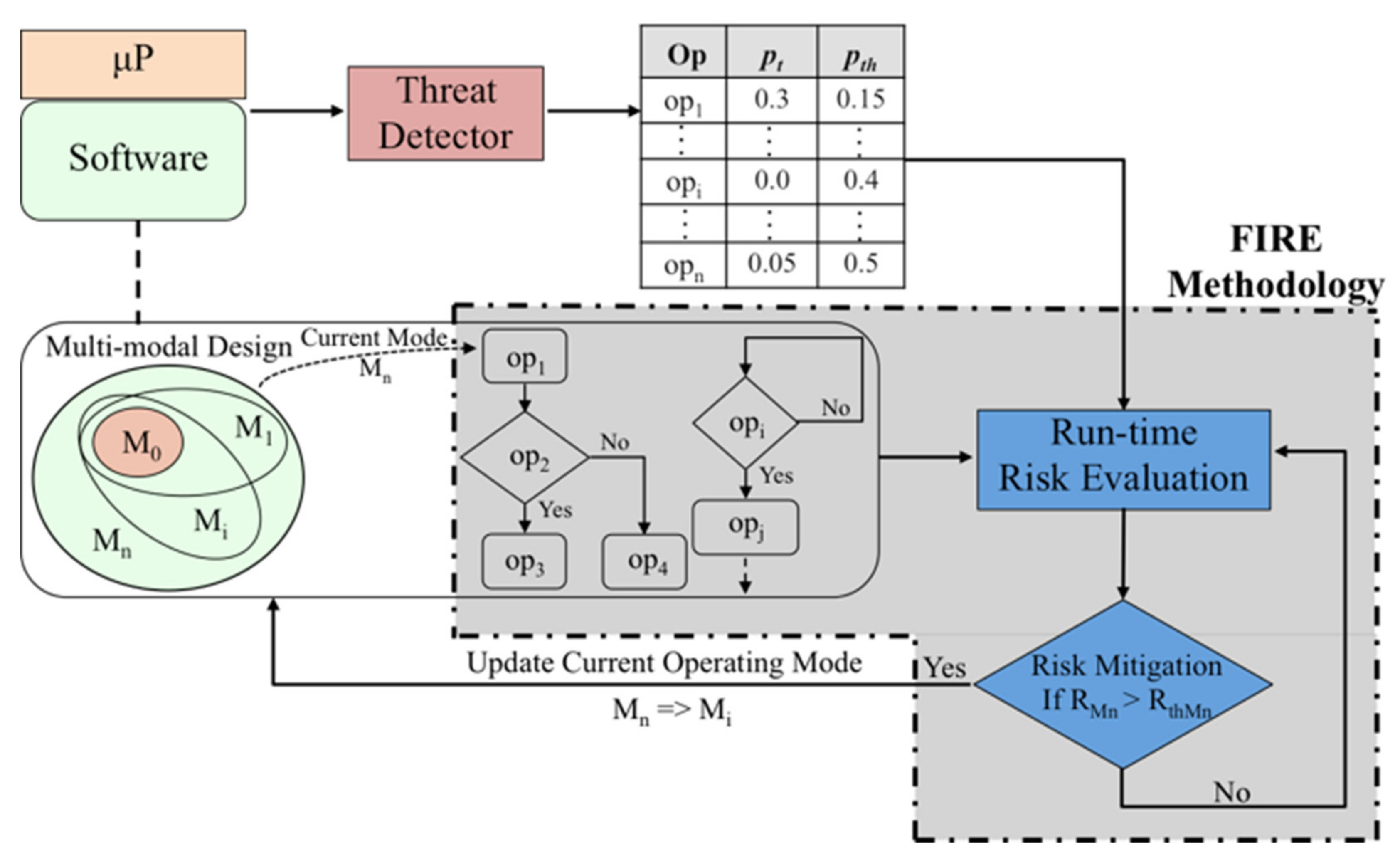

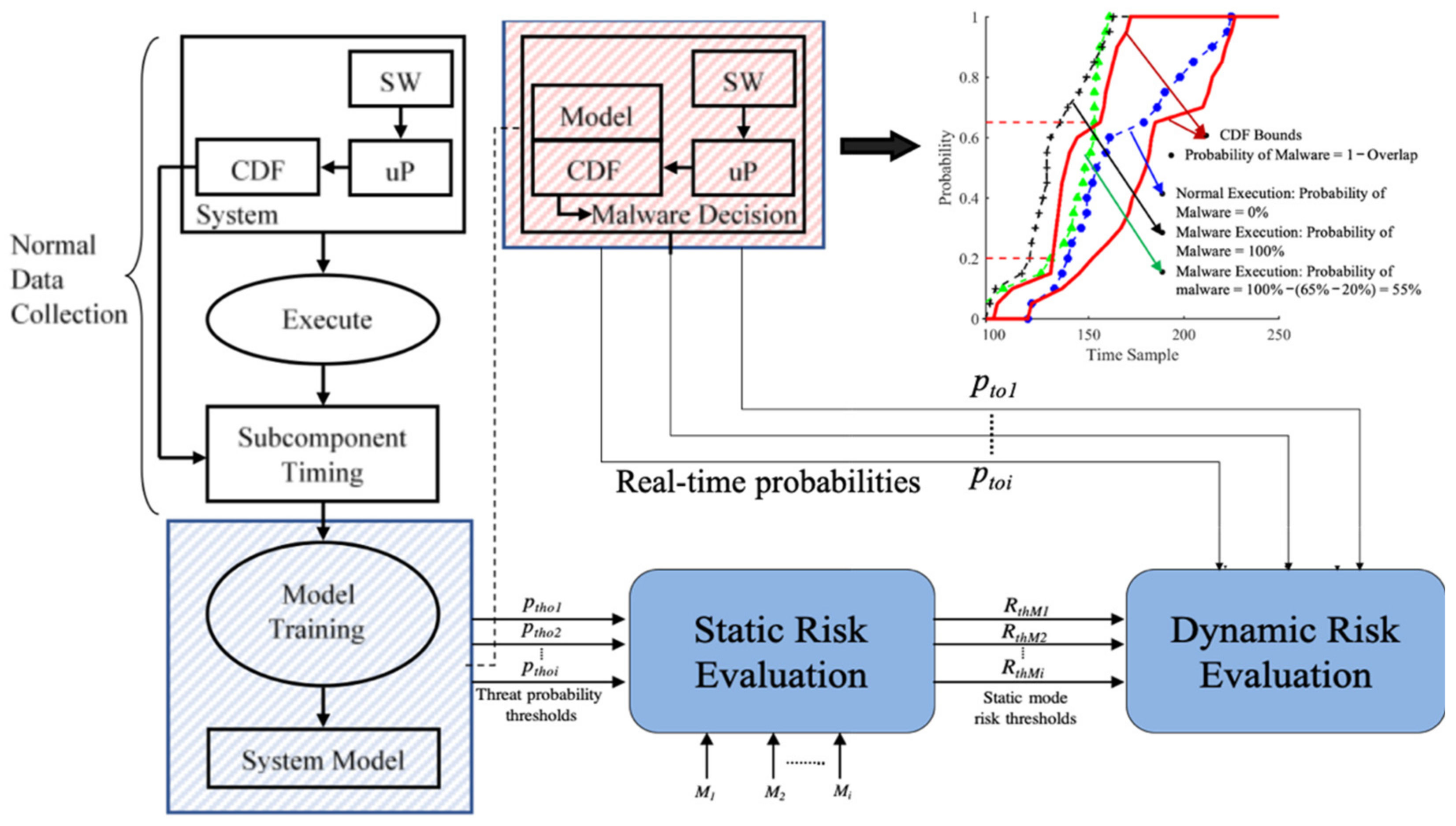

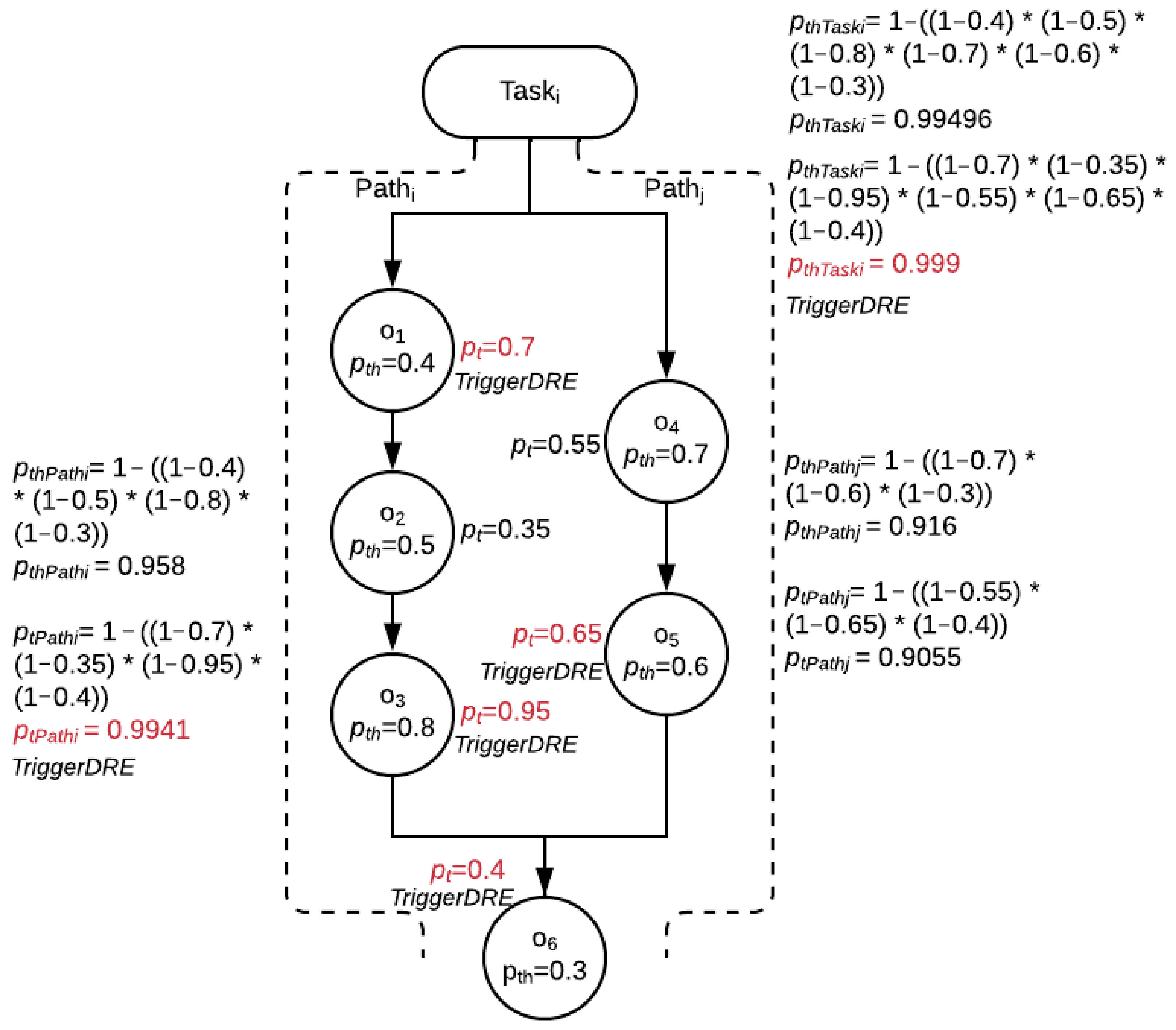

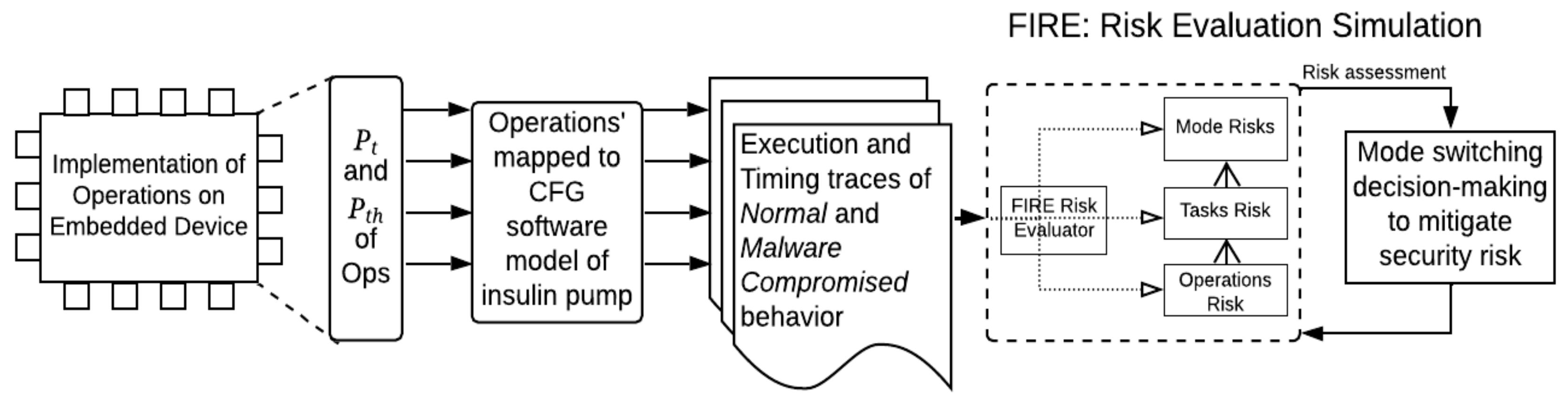

- During design (static risk evaluation): (i) We assign baseline security-health and security-data-sensitivity impact scores in terms of confidentiality, integrity, and availability metrics to fundamental device operations [6,7]. These scores are aggregated to the composing software tasks using a fuzzy union to generate task impact scores. Task risks are calculated using these impact scores. The task risks are accumulated to the successive operating mode risks. Mode risks are normalized to the range [0, 10.0] to adhere to the conventions established in the Common Vulnerability Scoring System (CVSS) [6]. We build a weighted hierarchical control-flow risk graph (HCFRG) for static and dynamic risk evaluation. An HCFRG is defined as a control-flow graph where the nodes are operations, software tasks, and modes weighted by the calculated risk-impact scores. (ii) We utilize the threat probability thresholds of individual operations provided by a threat detector, such as the one described in [9], to establish static risk thresholds for each software mode. The calculated static risk thresholds assist in automatically ordering the operating modes in monotonically increasing order of security risk impact. During deployment, these thresholds also establish the risks beyond which the mode is likely compromised by a security threat.

- During deployment (dynamic risk assessment): Using the HCFRG, the dynamic operation-, task-, and system-level risks are assessed using threat probabilities measured at runtime by the threat detector. This approach ensures robustness by utilizing the same static design-time model at runtime to assess the risks of threats.

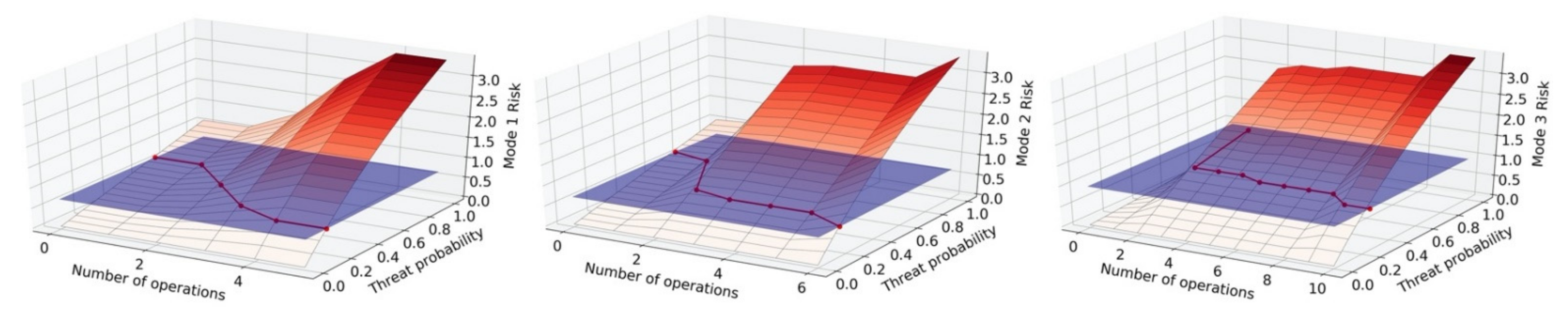

- Systematic evaluation: With the static mode risk thresholds and dynamic risk evaluation established, a systematic analysis is performed to determine how the overall system risk is affected by potential security threats that compromise differing numbers of operations with a range of threat probabilities (from 0 to 1.0). This evaluation seeks to generalize our approach to a broad range of possible security threats and to analyze the criteria under which appropriate mitigative actions must be taken, independent of the threat detector utilized. In addition, the results and evaluation help in designing the modes of the system by providing tradeoff metrics for composing operations and their risk impacts on the system.

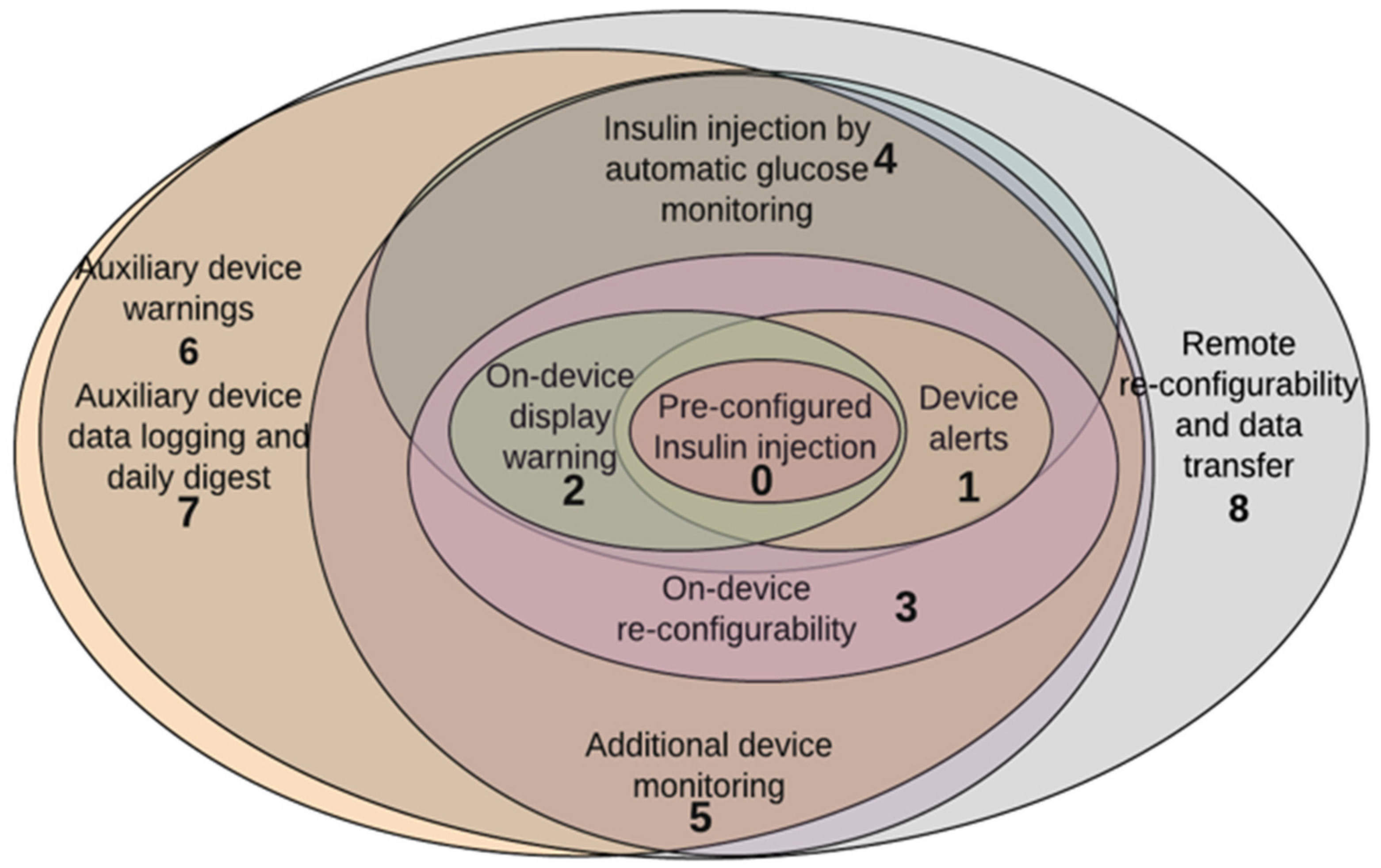

- Experimental evaluation: We perform a model-based simulation to demonstrate and evaluate the FIRE methodology, because the software of life-critical embedded systems (medical devices) is IP protected by manufacturers and well-documented open-source medical device software, particularly multimodal software, is lacking. We simulate FIRE in a multimodal software model of a smart connected insulin pump and evaluate it based on risk assessment and management, the false-positive mode switching rate, the mode switching latency, and deviations in threat probabilities by injecting the model with eight different malware samples.
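The static risk evaluation step listed in the contributions above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the fuzzy union is assumed to be the standard max operator, a task's threshold probability is assumed to be the max of its operations' thresholds, mode risk is assumed to be the sum of its task risks, and normalization is assumed to divide by the worst-case risk (all probabilities at 1.0). All operation, task, and mode names are hypothetical.

```python
from typing import Dict, List


def fuzzy_union(values: List[float]) -> float:
    """Standard (max) fuzzy union. Assumed: the source does not name the operator."""
    return max(values, default=0.0)


def task_impacts(op_impact: Dict[str, float],
                 tasks: Dict[str, List[str]]) -> Dict[str, float]:
    """Aggregate operation impact scores to task impact scores."""
    return {t: fuzzy_union([op_impact[o] for o in ops]) for t, ops in tasks.items()}


def static_thresholds(op_impact: Dict[str, float],
                      op_p_th: Dict[str, float],
                      tasks: Dict[str, List[str]],
                      modes: Dict[str, List[str]]) -> Dict[str, float]:
    """Static mode risk thresholds on a 0..10 scale (assumed aggregation rules)."""
    impacts = task_impacts(op_impact, tasks)
    # Assumption: a task's threshold probability is the max of its operations' thresholds.
    task_p = {t: fuzzy_union([op_p_th[o] for o in ops]) for t, ops in tasks.items()}
    # Assumption: worst case is every task compromised with probability 1.0.
    worst = sum(impacts.values())
    result = {}
    for mode, mode_tasks in modes.items():
        risk = sum(impacts[t] * task_p[t] for t in mode_tasks)
        result[mode] = 0.0 if worst == 0 else 10.0 * risk / worst
    return result


def order_modes(thresholds: Dict[str, float]) -> List[str]:
    """Order modes by monotonically increasing static risk threshold."""
    return sorted(thresholds, key=thresholds.get)


# Hypothetical three-mode device; impact values reuse the security value scores.
op_impact = {"read_sensor": 0.56, "compute_dose": 0.56, "show_value": 0.31, "send_log": 0.22}
op_p_th = {op: 0.5 for op in op_impact}
tasks = {"dose": ["read_sensor", "compute_dose"], "display": ["show_value"], "comm": ["send_log"]}
modes = {"connected": ["dose", "display", "comm"], "essential": ["dose"], "local": ["dose", "display"]}

thresholds = static_thresholds(op_impact, op_p_th, tasks, modes)
ordered = order_modes(thresholds)  # lowest-risk mode first
```

Because each higher mode here adds tasks to the mode below it, the thresholds increase with the mode, which mirrors the automatic ordering described in the text.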

2. Related Work

3. System Overview and Assumptions

4. FIRE Methodology

4.1. Definitions

- Security Value: The security value is the fuzzy value describing the impact of a security exploit on the health and data sensitivity of the life-critical system.

- CIA Impact Score: The impact score is a weighted combination of confidentiality, integrity, and availability scores based on the assigned security values for health (Ch, Ih, and Ah) and data sensitivity (Cs, Is, and As). The weights can be assigned by security standards such as the CVSS.

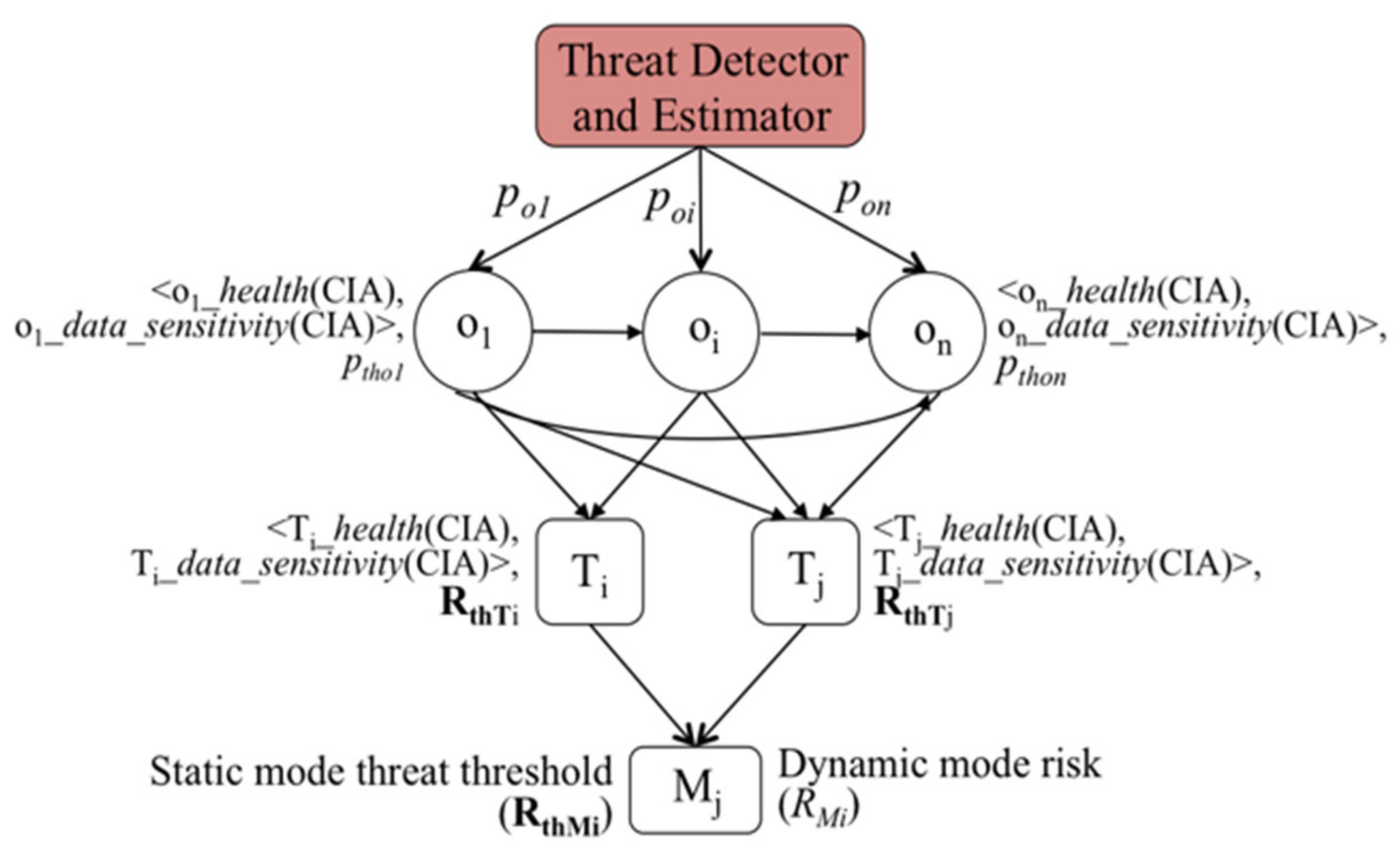

- Security Risk: Security risk is conventionally defined as the likelihood of a security threat times the impact that the threat causes to the system (i.e., Risk = impact score × p, where p represents the probability of a threat and impact score is the CIA value). In our work, the CIA impact score is assigned during static risk evaluation, and the probability of a threat is provided by the threat detector and estimator at runtime.

- System Risk Threshold: the system risk threshold, RS, represents the system risk value beyond which the security threat impacts life criticality and threat mitigation by mode switching is needed.

- Operational Threat Probability: ptoi is the threat probability of an operation that is provided by an on-chip threat detector and estimator.

- Operation Threat Probability Threshold: pthoi represents the operation probability threat value beyond which the operation is said to be compromised.
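The definitions above can be made concrete with a short Python sketch. The security value scores are taken from the paper's security value table (N = 0.0, L = 0.22, M = 0.31, H = 0.56). The way the C, I, and A values are combined is an assumption borrowed from the CVSS v3 impact sub-score form, and the equal health and sensitivity weights are hypothetical; the paper's own weighting may differ.

```python
from typing import Tuple

# Scores for the fuzzy security values, as listed in the paper's table.
SECURITY_VALUE_SCORE = {"N": 0.0, "L": 0.22, "M": 0.31, "H": 0.56}

CIA = Tuple[str, str, str]  # (confidentiality, integrity, availability) security values


def cia_impact(values: CIA) -> float:
    """Combine C, I, A values. Assumption: CVSS-v3-style 1 - (1-C)(1-I)(1-A)."""
    c, i, a = (SECURITY_VALUE_SCORE[v] for v in values)
    return 1.0 - (1.0 - c) * (1.0 - i) * (1.0 - a)


def combined_impact(health: CIA, sensitivity: CIA,
                    w_health: float = 0.5, w_sensitivity: float = 0.5) -> float:
    """Weighted health and data-sensitivity impact. The weights are hypothetical."""
    return w_health * cia_impact(health) + w_sensitivity * cia_impact(sensitivity)


def risk(impact: float, p: float) -> float:
    """Risk = impact score x p, with p a threat probability in [0, 1]."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("threat probability must lie in [0, 1]")
    return impact * p


def is_compromised(p: float, p_threshold: float) -> bool:
    """An operation is treated as compromised once p exceeds its threshold."""
    return p > p_threshold


# Hypothetical operation: high health impact on integrity, low sensitivity impact.
impact = combined_impact(("N", "H", "L"), ("L", "N", "N"))
operation_risk = risk(impact, 0.8)
```

Separating `cia_impact` from `risk` reflects the split described in the definitions: the impact score is fixed at design time, while the probability arrives from the threat detector at runtime.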

4.2. Hierarchical Control-Flow Risk Graph

- (1) $S_L$ represents a finite set of states, $S$, at the respective multimodal hierarchical levels, $L$, such that $L \in \{M \cup T \cup O\}$, where $M$ is the set of modes, $T$ is the set of tasks, and $O$ is the set of operations.

- (2) $P: (S_L \mid L \in O)$ is the labeling of the states at the operations level with the runtime threat probabilities from the threat estimator, each in the range 0 to 1.0.

- (3) $I: (S_L \mid L \in \{O, T\})$ is the labeling of the states at the tasks and operations levels with the associated CIA impact scores.

- (4) $R: (S_L \mid L \in \{T, M\})$ is the labeling of the states at the tasks and modes levels with the corresponding risks computed by the risk calculation function of item (5).

- (5) The FIRE risk calculation and assessment function takes the CIA impact scores, $I$, and the runtime threat probabilities, $P$, and yields the risks, $R$.

- (6) $\tau: S_L \to S'_L$ is the state (mode) switching transition from $(S_L \mid L \in M_i)$ to $(S'_L \mid L \in M_{0, \ldots, i-1})$, where $i$ represents the current operating state of the system.
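One possible in-memory representation of the HCFRG elements listed above is sketched below in Python. The class and method names are hypothetical, and the aggregation rules are assumptions: task impact and task risk use a max (standard fuzzy union) over operations, and the mode risk is the max over task risks, following the `max` step in Algorithm 1. Risks here are left unnormalized.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class HCFRG:
    """Hierarchical control-flow risk graph sketch (hypothetical structure)."""
    mode_tasks: Dict[str, List[str]]   # modes -> tasks, modes listed lowest to highest
    task_ops: Dict[str, List[str]]     # tasks -> operations
    op_impact: Dict[str, float]        # I at the operations level (CIA impact scores)
    op_prob: Dict[str, float] = field(default_factory=dict)  # P, updated at runtime

    def task_impact(self, task: str) -> float:
        """I at the tasks level. Assumption: max (fuzzy union) over operation impacts."""
        return max((self.op_impact[o] for o in self.task_ops[task]), default=0.0)

    def task_risk(self, task: str) -> float:
        """R at the tasks level. Assumption: max over operations of impact x probability."""
        return max((self.op_impact[o] * self.op_prob.get(o, 0.0)
                    for o in self.task_ops[task]), default=0.0)

    def mode_risk(self, mode: str) -> float:
        """R at the modes level: max over the task risks of the mode."""
        return max((self.task_risk(t) for t in self.mode_tasks[mode]), default=0.0)

    def lower_modes(self, mode: str) -> List[str]:
        """Candidate targets of the switching transition: all modes below `mode`."""
        order = list(self.mode_tasks)
        return order[: order.index(mode)]


# Hypothetical two-mode graph.
graph = HCFRG(
    mode_tasks={"M0": ["T1"], "M1": ["T1", "T2"]},
    task_ops={"T1": ["O1"], "T2": ["O2", "O3"]},
    op_impact={"O1": 0.56, "O2": 0.31, "O3": 0.22},
)
graph.op_prob = {"O2": 1.0}  # runtime probabilities as reported by a threat detector
```

Operations missing from `op_prob` are treated as having probability 0, so the same static graph can be queried before any runtime measurement arrives.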

5. Static Risk Evaluation

6. Dynamic Risk Evaluation

Algorithm 1: Adaptive Risk Management by Mode Switching

- Static risk evaluation: evaluate(static_risk_thresholds)
- $R_S = Rth_{M_{s=k}}$, where $k$ := highest operating mode
- while (execution) do
  - if DREFilterCondition($p_{to_i}$, $p_{tho_i}$ for $i = 0, \ldots, n$) do
    - Dynamic risk evaluation: evaluate($R_{T_j}$ for $j = 0, \ldots, l$)
    - $R_{M_{s=k}} = \max(R_{T_j}$ for $j = 0, \ldots, l)$
    - if $R_{M_k} > R_S$ do
      - modeSwitch($M_{s=e}$), where $e$ is the switched mode and $e < k$
      - $R_S = Rth_{M_{s=e}}$
- end
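Algorithm 1 can be sketched as runnable Python under a few stated assumptions. The filter condition is assumed to fire when any operation's threat probability exceeds its threshold, and the target mode is assumed to be the highest lower mode whose risk stays within its own static threshold (falling back to mode 0). Neither rule is specified in the listing, and the function names, the `mode_risk` callback, and the three-mode example are hypothetical.

```python
from typing import Callable, Dict, List, Sequence

Probs = Dict[str, float]


def dre_filter(p: Probs, p_th: Probs) -> bool:
    """Assumed filter: run dynamic risk evaluation only if some operation exceeds its threshold."""
    return any(p[op] > p_th[op] for op in p_th)


def adaptive_mode_switching(samples: Sequence[Probs],
                            p_th: Probs,
                            thresholds: List[float],
                            mode_risk: Callable[[int, Probs], float]) -> List[int]:
    """Return the operating mode after each sample; thresholds[m] is the static threshold of mode m."""
    k = len(thresholds) - 1           # start in the highest operating mode
    rs = thresholds[k]                # system risk threshold R_S
    trace = []
    for p in samples:
        if dre_filter(p, p_th) and mode_risk(k, p) > rs:
            # Assumed policy: step down until the mode's risk is within its threshold.
            e = k - 1
            while e > 0 and mode_risk(e, p) > thresholds[e]:
                e -= 1
            k = max(e, 0)             # never go below the essential mode
            rs = thresholds[k]
        trace.append(k)
    return trace


# Hypothetical model: each mode adds operations to the mode below it.
MODE_OPS = [["pump"], ["pump", "display"], ["pump", "display", "comm"]]
IMPACT = {"pump": 0.56, "display": 0.31, "comm": 0.56}


def example_mode_risk(mode: int, p: Probs) -> float:
    """Mode risk on a 0..10 scale: 10 x max(impact x probability) over the mode's operations."""
    return 10.0 * max(IMPACT[op] * p[op] for op in MODE_OPS[mode])


samples = [
    {"pump": 0.1, "display": 0.1, "comm": 0.1},    # normal execution
    {"pump": 0.1, "display": 0.1, "comm": 0.9},    # communication compromised
    {"pump": 0.1, "display": 0.99, "comm": 0.9},   # display compromised as well
]
trace = adaptive_mode_switching(samples, {op: 0.5 for op in IMPACT},
                                [2.0, 3.0, 4.0], example_mode_risk)
```

In this example the system stays in mode 2 for the first sample, drops to mode 1 when the communication operation is flagged, and then drops to mode 0 once the display operation is also flagged.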

7. Threat Model

- Health-Compromising Malware: In this category, we consider fuzz and data manipulation malware. The fuzz malware is a mimicry malware that interferes with a system’s predefined functionality by slightly changing (i.e., randomizing) data [34,35]. In our application, the fuzz malware is implemented at two levels, namely 20% and 100% randomization, which enables the evaluation of risk at different fuzzification levels. The data manipulation malware manipulates data within the target system to disrupt normal control-flow execution. These types of malware can pose a direct threat to the health of a patient.

- Data-sensitivity-Compromising Malware: the information leakage malware breaks confidentiality by covertly leaking private data stored in the system to an unauthorized party.

- Synthetic Security Threats: We also implement synthetic security threat scenarios by injecting actual malware samples in specific execution paths. This is carried out to demonstrate the functionality of FIRE and how risks are managed by mode switching, which involves single- and multiple-mode switches based on the compromised operations/tasks.

8. FIRE Evaluation: Insulin Pump Case Study

8.1. Multimodal Design

8.2. Experimental Setup

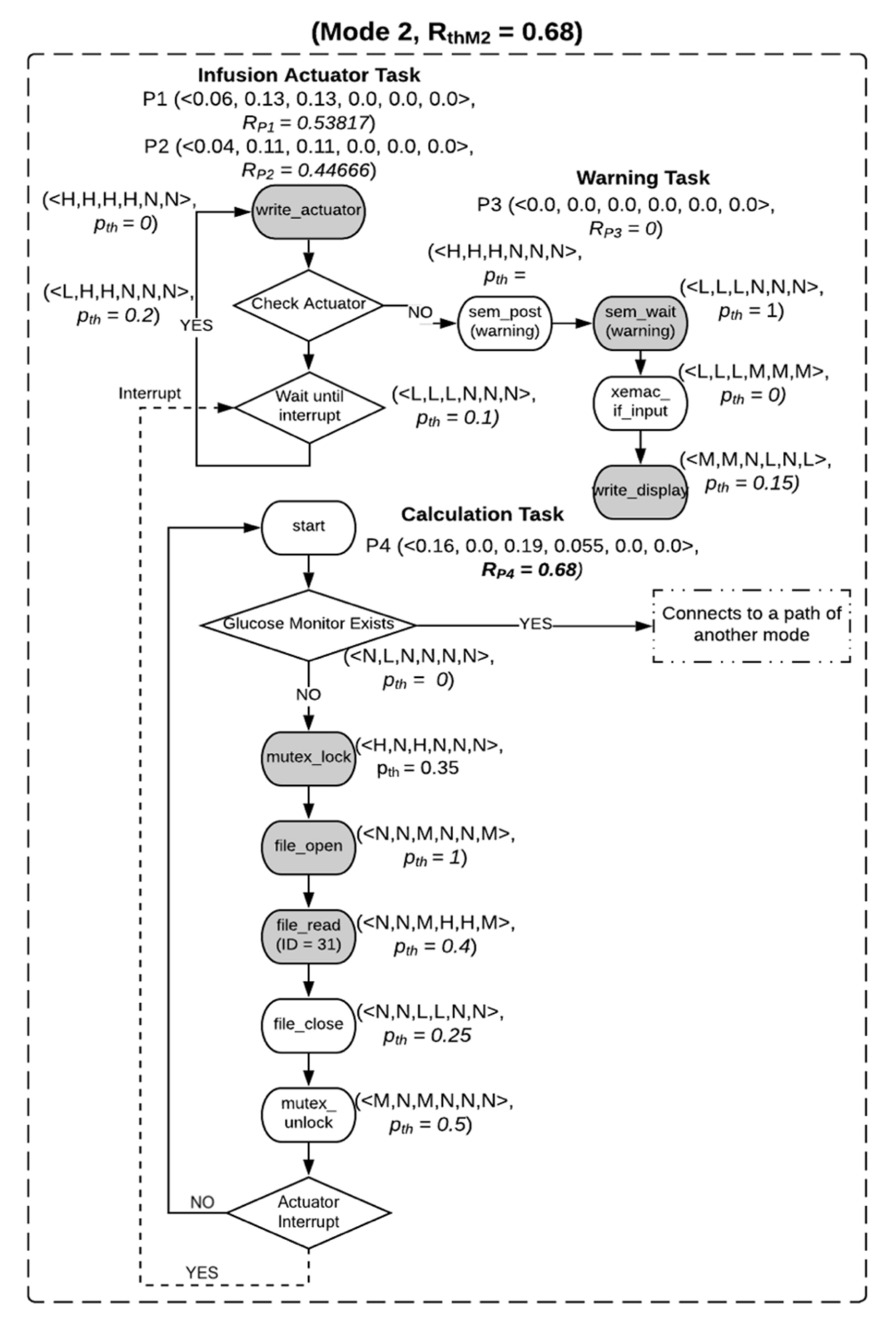

8.3. Static Risk Evaluation

8.3.1. Static Risk Threshold Calculation

8.3.2. Experimental Setup and Analysis

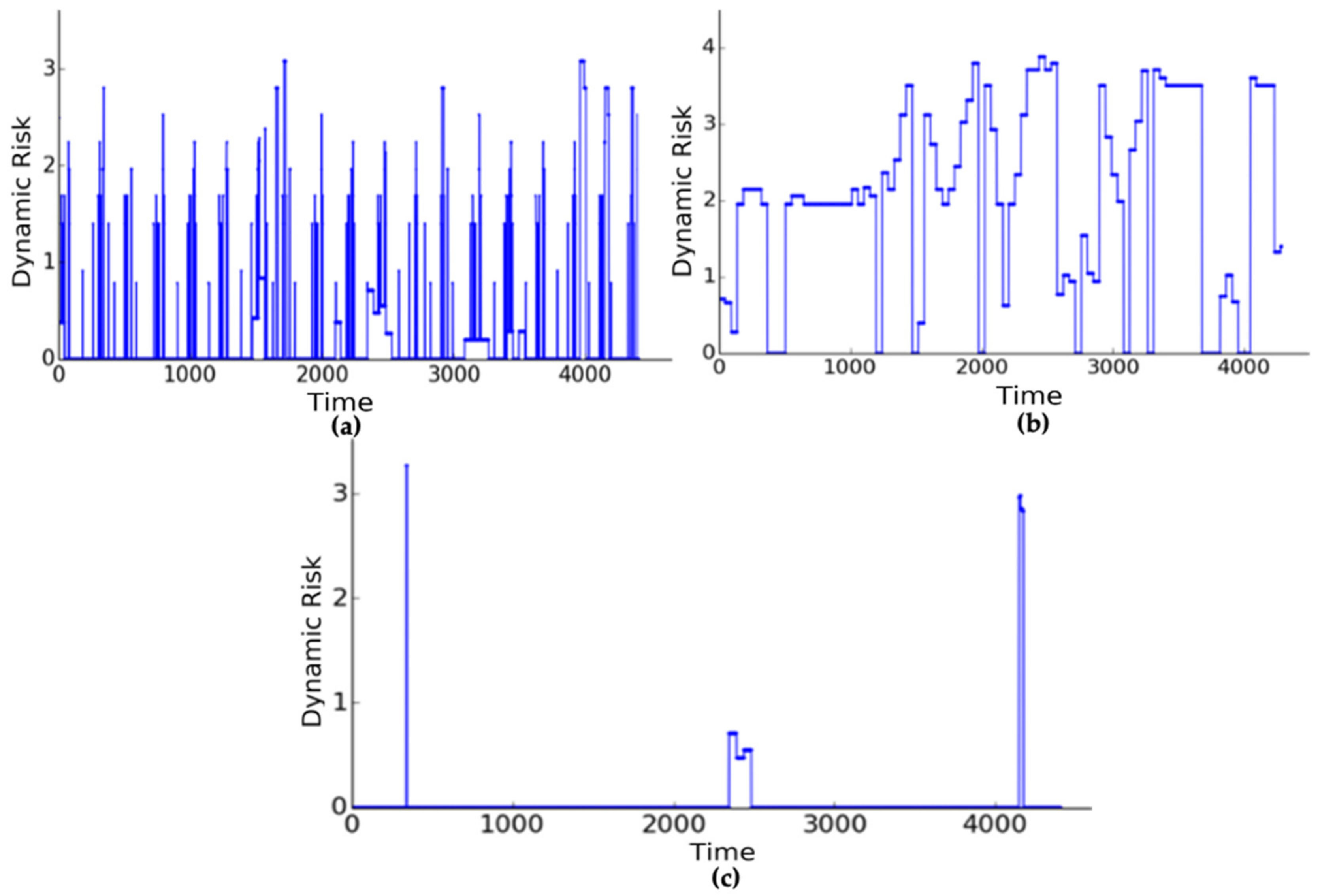

8.4. Dynamic Risk Evaluation

8.4.1. Normal Execution

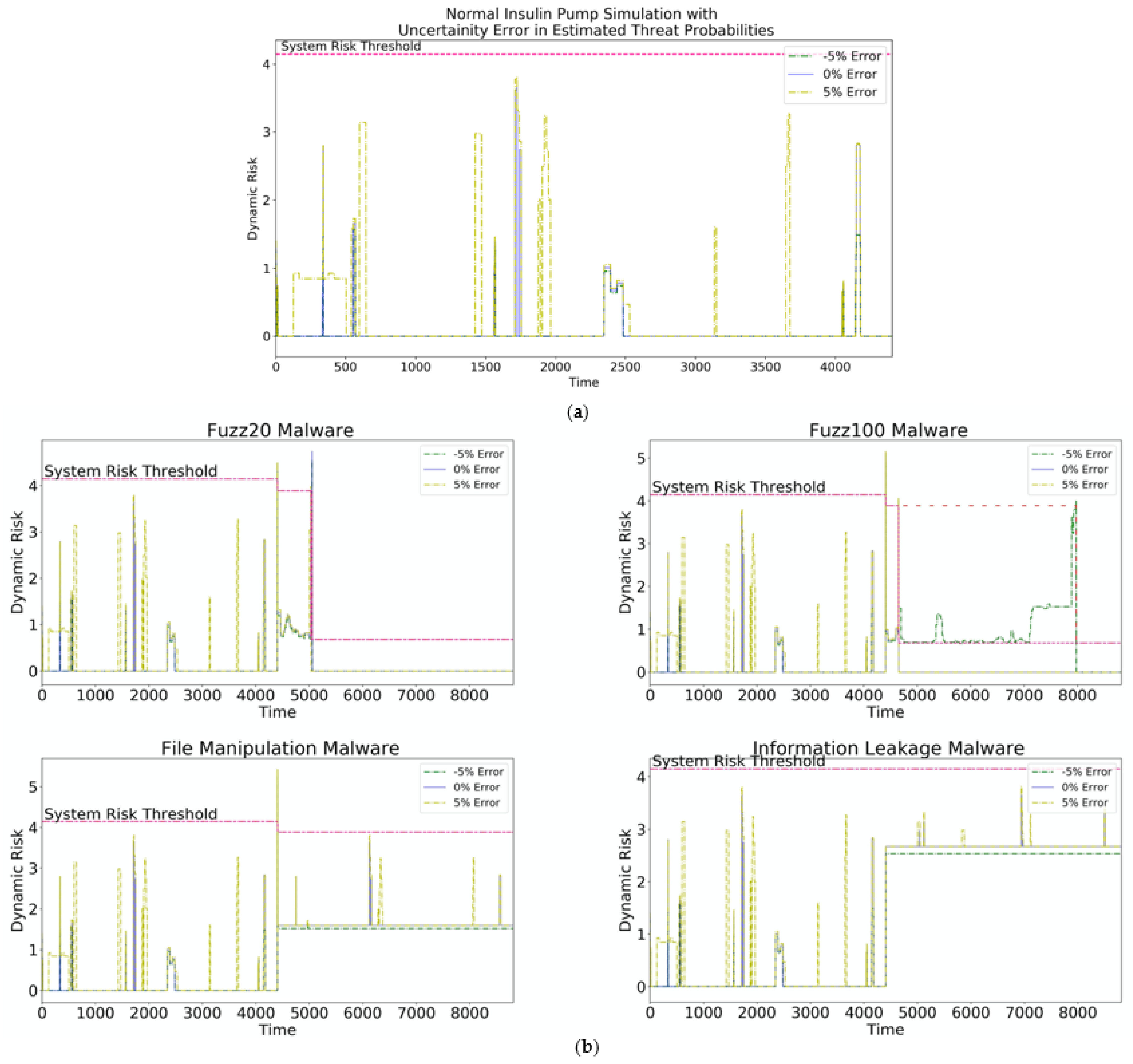

8.4.2. Malware

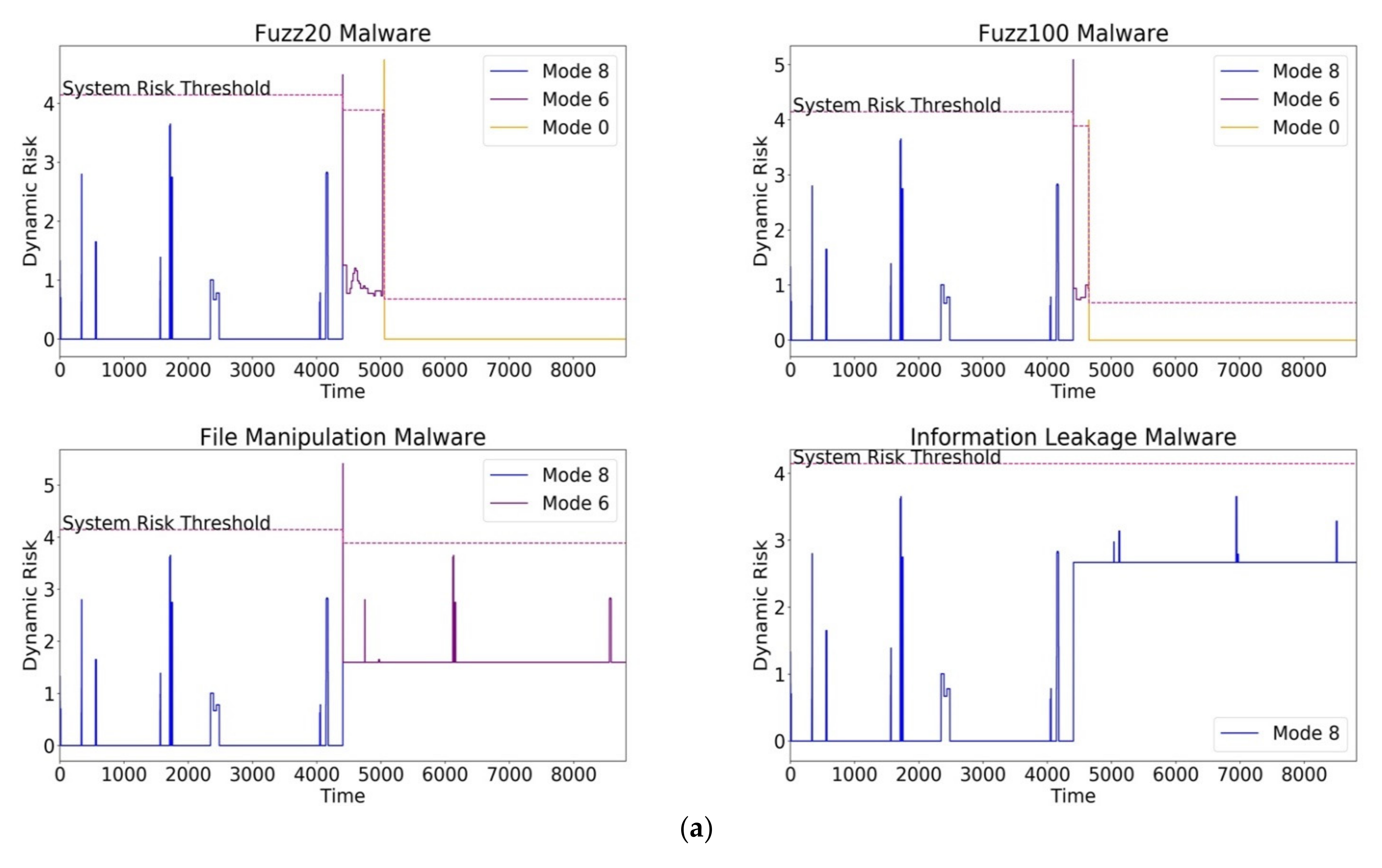

- Safety-Compromising Malware: The file manipulation malware impacted a few operations of one execution path of the communication, calculation, and display tasks. The operations in the communication and display tasks had high CIA impact scores and mandated mode switching to M6 (RthM6 = 3.887). The dynamic risk in M6 was still above the normal system risk because of the sustained threat to the calculation task. However, the compromised operations in M6 had lower CIA impact scores that did not significantly increase RM6. The expected mode to mitigate the file manipulation malware was M6, as it is the highest operating mode that does not contain the communication and display tasks that are responsible for transferring and displaying manipulated data (ref. Table 3). FIRE achieved the expected mode switch to M6.

- Fuzz20 and Fuzz100 directly impacted the calculation, communication, display, and configuration write tasks. Since the fuzz malware is a randomization malware, an intermittent mode switch to M6 briefly occurred when this switch mitigated the risk in the calculation and communication threat. The malware persisted, as it impacted the execution path (ref. Table 3) responsible for computing the amount of insulin to be pumped based on the sensor and preconfigured inputs (M1, M2, M3, M4, and M5). Since these operations had the highest CIA impact on safety, a direct switch to M0 (essential mode) was needed. The expected ideal mode switch for both the Fuzz20 and Fuzz100 malware was to M0 from the beginning of the execution, as the malware impacts safety-critical operations. However, FIRE overshot the execution times by 2.7% for Fuzz100 and 7.3% for Fuzz20 by switching to M6 before switching to the expected M0. Since fuzz malware is based on the time randomization of exploits, FIRE successfully recognized the impact of this compromise (system risk is higher than zero) but took a conservative approach in mode switching. We will analyze the impact of this overshooting and explore further heuristics to tune our mode switching algorithm in future work.

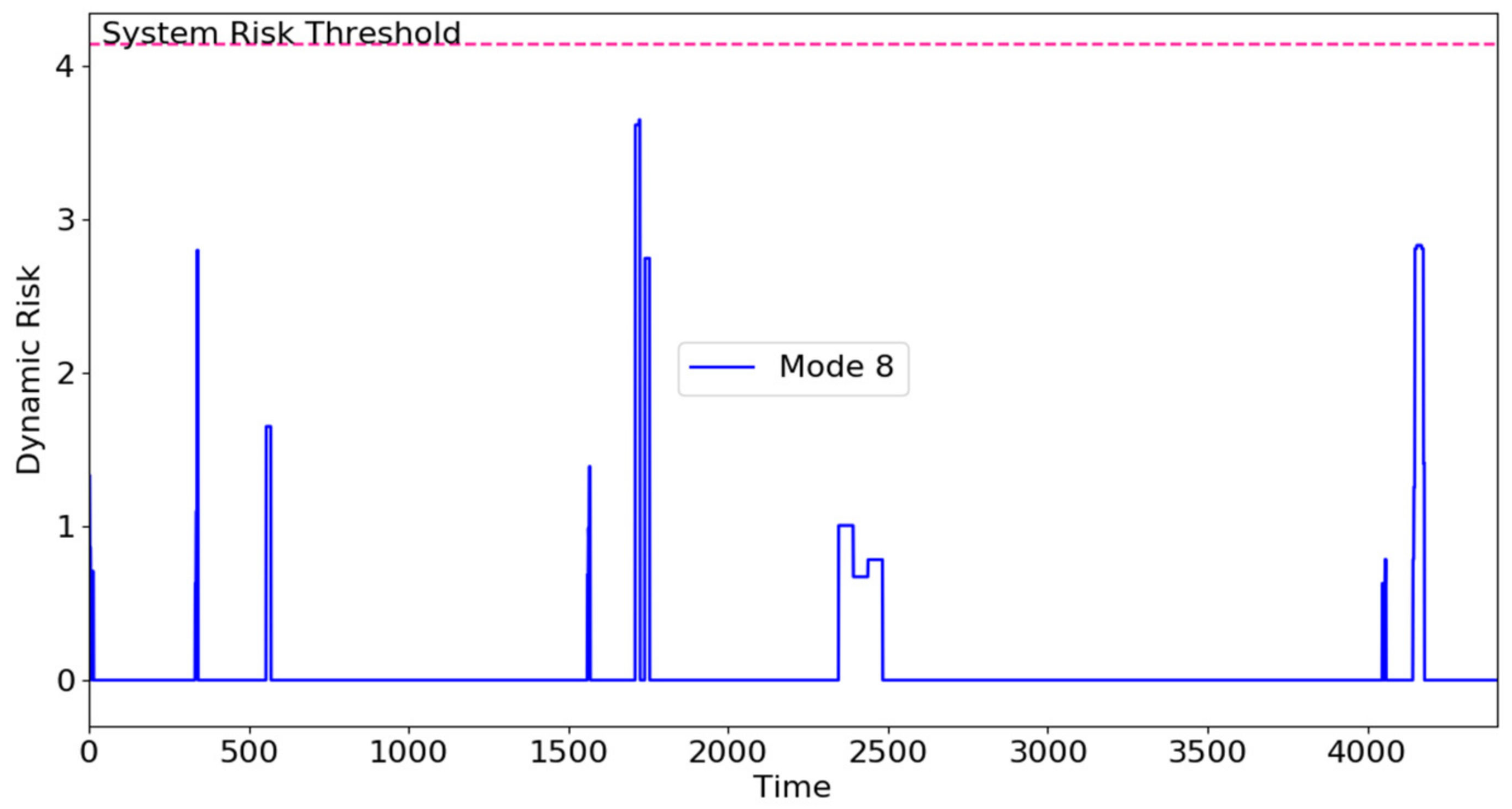

- Data-sensitivity-Compromising Malware: The information leakage malware covertly transmitted sensitive information via a TCP channel in the warning task. The operations in the warning task had considerable impact on data sensitivity, which is shown in the consistent rise in the risk, RM8. However, the system risk was not high enough to mandate a mode switch, as this malware did not significantly impact safety, so the system remained in M8. The expected outcome for the information leakage malware was to remain in the highest operating mode, M8, as safety was not compromised, and FIRE achieved this expected mode while signaling a potential threat through a higher system risk.

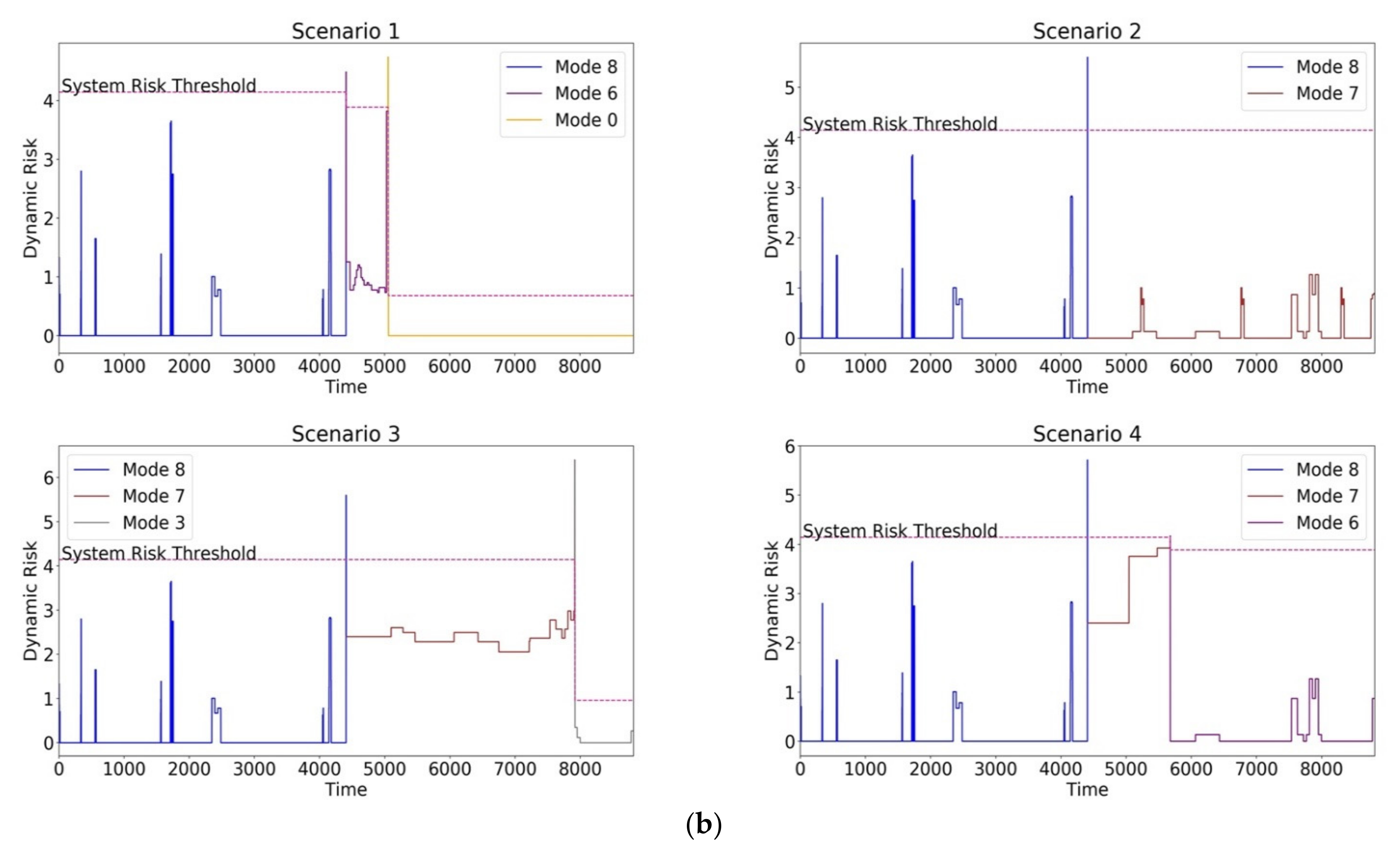

- Synthetic Security Threat: The simulation traces of synthetic malware are shown in Figure 12b. Scenario 1 was similar to the Fuzz malware, requiring multiple multimode switches to M0 to ensure safe operation. Scenario 2 represented a synthetic malware injected only into operations in the communication thread and hence needing a single mode switch to M7 (RthM7 = 4.144) to ensure safe operation. Scenarios 3 and 4 represented monotonous mode switches where the synthetic malware targeted specific operations in M4 and M7, requiring the system to switch to M3 (RthM3 = 0.958) and M6 (RthM6 = 3.887), respectively.

8.4.3. Mitigation Latency

8.4.4. Threat Probability Deviation Evaluation

9. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Williams, P.A.; Woodward, A.J. Cybersecurity Vulnerabilities in Medical Devices: A Complex Environment and Multifaceted Problem. Med. Devices 2015, 8, 305–316.

2. Halperin, D.; Heydt-Benjamin, T.S.; Ransford, B.; Clark, S.S.; Defend, B.; Morgan, W.; Fu, K.; Kohno, T.; Maisel, W.H. Pacemakers and Implantable Cardiac Defibrillators: Software Radio Attacks and Zero-Power Defenses. In Proceedings of the 2008 IEEE Symposium on Security and Privacy (sp 2008), Oakland, CA, USA, 18–21 May 2008; pp. 129–142.

3. Li, C.; Raghunathan, A.; Jha, N.K. Hijacking an Insulin Pump: Security Attacks and Defenses for a Diabetes Therapy System. In Proceedings of the 2011 IEEE 13th International Conference on e-Health Networking, Applications and Services, Columbia, MD, USA, 13–15 June 2011; pp. 150–156.

4. Maisel, W.H.; Kohno, T. Improving the Security and Privacy of Implantable Medical Devices. N. Engl. J. Med. 2010, 362, 1164–1166.

5. Postmarket Management of Cybersecurity in Medical Devices. Available online: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/postmarket-management-cybersecurity-medical-devices (accessed on 5 August 2022).

6. Mell, P.; Scarfone, K.; Romanosky, S. A Complete Guide to the Common Vulnerability Scoring System Version 2.0. Published by FIRST: Forum of Incident Response and Security Teams, 2007; pp. 1–23. Available online: https://tsapps.nist.gov/publication/get_pdf.cfm?pub_id=51198 (accessed on 11 August 2022).

7. Carreón, N.A.; Sonderer, C.; Rao, A.; Lysecky, R. A Medical Vulnerability Scoring System Incorporating Health and Data Sensitivity Metrics. Int. J. Comput. Inf. Eng. 2021, 15, 458–466.

8. Boehm, B.W. Software Risk Management: Principles and Practices. IEEE Softw. 1991, 8, 32–41.

9. Carreon, N.A.; Lu, S.; Lysecky, R. Hardware-Based Probabilistic Threat Detection and Estimation for Embedded Systems. In Proceedings of the 2018 IEEE 36th International Conference on Computer Design (ICCD), Orlando, FL, USA, 7–10 October 2018; pp. 522–529.

10. Rao, A.; Carreón, N.; Lysecky, R.; Rozenblit, J. Probabilistic Threat Detection for Risk Management in Cyber-Physical Medical Systems. IEEE Softw. 2018, 35, 38–43.

11. Rao, A.; Rozenblit, J.; Lysecky, R.; Sametinger, J. Trustworthy Multi-Modal Framework for Life-Critical Systems Security. In Proceedings of the Annual Simulation Symposium: Society for Computer Simulation International, San Diego, CA, USA, 15 April 2018; pp. 1–9.

12. Lyu, X.; Ding, Y.; Yang, S.-H. Safety and Security Risk Assessment in Cyber-Physical Systems. IET Cyber-Phys. Syst. Theory Appl. 2019, 4, 221–232.

13. Siddiqui, F.; Hagan, M.; Sezer, S. Establishing Cyber Resilience in Embedded Systems for Securing Next-Generation Critical Infrastructure. In Proceedings of the 2019 32nd IEEE International System-on-Chip Conference (SOCC), Singapore, 3–6 September 2019; pp. 218–223.

14. Ashibani, Y.; Mahmoud, Q.H. Cyber Physical Systems Security: Analysis, Challenges and Solutions. Comput. Secur. 2017, 68, 81–97.

15. Kure, H.I.; Islam, S.; Razzaque, M.A. An Integrated Cyber Security Risk Management Approach for a Cyber-Physical System. Appl. Sci. 2018, 8, 898.

16. Bialas, A. Risk Management in Critical Infrastructure—Foundation for Its Sustainable Work. Sustainability 2016, 8, 240.

17. Baiardi, F.; Telmon, C.; Sgandurra, D. Hierarchical, Model-Based Risk Management of Critical Infrastructures. Reliab. Eng. Syst. Saf. 2009, 94, 1403–1415.

18. Poolsappasit, N.; Dewri, R.; Ray, I. Dynamic Security Risk Management Using Bayesian Attack Graphs. IEEE Trans. Dependable Secur. Comput. 2012, 9, 61–74.

19. Szwed, P.; Skrzyński, P. A New Lightweight Method for Security Risk Assessment Based on Fuzzy Cognitive Maps. Int. J. Appl. Math. Comput. Sci. 2014, 24, 213–225.

20. Lindvall, M.; Diep, M.; Klein, M.; Jones, P.; Zhang, Y.; Vasserman, E. Safety-Focused Security Requirements Elicitation for Medical Device Software. In Proceedings of the 2017 IEEE 25th International Requirements Engineering Conference (RE), Lisbon, Portugal, 4–8 September 2017; pp. 134–143.

21. Jagannathan, S.; Sorini, A. A Cybersecurity Risk Analysis Methodology for Medical Devices. In Proceedings of the 2015 IEEE Symposium on Product Compliance Engineering (ISPCE), Chicago, IL, USA, 18–20 May 2015; pp. 1–6.

22. Sango, M.; Godot, J.; Gonzalez, A.; Ruiz Nolasco, R. Model-Based System, Safety and Security Co-Engineering Method and Toolchain for Medical Devices Design. In Proceedings of the 2019 Design for Medical Devices Conference, Minneapolis, MN, USA, 15 April 2019.

23. Ngamboé, M.; Berthier, P.; Ammari, N.; Dyrda, K.; Fernandez, J.M. Risk Assessment of Cyber-Attacks on Telemetry-Enabled Cardiac Implantable Electronic Devices (CIED). Int. J. Inf. Secur. 2021, 20, 621–645.

24. Ni, S.; Zhuang, Y.; Gu, J.; Huo, Y. A Formal Model and Risk Assessment Method for Security-Critical Real-Time Embedded Systems. Comput. Secur. 2016, 58, 199–215.

25. Easttom, C.; Mei, N. Mitigating Implanted Medical Device Cybersecurity Risks. In Proceedings of the 2019 IEEE 10th Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), New York, NY, USA, 10–12 October 2019; pp. 0145–0148.

26. Carreón, N.A.; Gilbreath, A.; Lysecky, R. Statistical Time-Based Intrusion Detection in Embedded Systems. In Proceedings of the 23rd Conference on Design, Automation and Test in Europe, Grenoble, France, 9–13 March 2020; pp. 562–567.

27. Phan, L.; Lee, I. Towards a Compositional Multi-Modal Framework for Adaptive Cyber-Physical Systems. In Proceedings of the 2011 IEEE 17th International Conference on Embedded and Real-Time Computing Systems and Applications, Toyama, Japan, 28–31 August 2011; Volume 2, pp. 67–73.

28. Pinto, S.; Gomes, T.; Pereira, J.; Cabral, J.; Tavares, A. IIoTEED: An Enhanced, Trusted Execution Environment for Industrial IoT Edge Devices. IEEE Internet Comput. 2017, 21, 40–47.

29. Chen, T.; Phan, L.T.X. SafeMC: A System for the Design and Evaluation of Mode-Change Protocols. In Proceedings of the 2018 IEEE Real-Time and Embedded Technology and Applications Symposium (RTAS), Porto, Portugal, 11–13 April 2018; pp. 105–116.

30. Eidson, J.C.; Lee, E.A.; Matic, S.; Seshia, S.A.; Zou, J. Distributed Real-Time Software for Cyber–Physical Systems. Proc. IEEE 2012, 100, 45–59.

31. Liu, P. Some Hamacher Aggregation Operators Based on the Interval-Valued Intuitionistic Fuzzy Numbers and Their Application to Group Decision Making. IEEE Trans. Fuzzy Syst. 2014, 22, 83–97.

32. de Gusmão, A.P.H.; e Silva, L.C.; Silva, M.M.; Poleto, T.; Costa, A.P.C.S. Information Security Risk Analysis Model Using Fuzzy Decision Theory. Int. J. Inf. Manag. 2016, 36, 25–34.

33. Yaqoob, T.; Abbas, H.; Atiquzzaman, M. Security Vulnerabilities, Attacks, Countermeasures, and Regulations of Networked Medical Devices—A Review. Commun. Surv. Tuts. 2019, 21, 3723–3768.

34. Wasicek, A.; Derler, P.; Lee, E.A. Aspect-Oriented Modeling of Attacks in Automotive Cyber-Physical Systems. In Proceedings of the 51st Annual Design Automation Conference, New York, NY, USA, 1 June 2014; Association for Computing Machinery: New York, NY, USA; pp. 1–6.

35. Lu, S.; Lysecky, R. Time and Sequence Integrated Runtime Anomaly Detection for Embedded Systems. ACM Trans. Embed. Comput. Syst. 2017, 17, 38:1–38:27.

36. MiniMed™ 770G System. Available online: https://www.medtronicdiabetes.com/products/minimed-770g-insulin-pump-system (accessed on 6 August 2022).

37. Walsh, J.; Roberts, R.; Heinemann, L. Confusion Regarding Duration of Insulin Action. J. Diabetes Sci. Technol. 2014, 8, 170–178.

| Security Value | Description | CIA Impact Score |
|---|---|---|
| None (N) | Operation has no impact on health and sensitivity. | 0.0 |
| Low (L) | Impact of exploited operation on health and sensitivity is minimal. | 0.22 |
| Medium (M) | If compromised, operation can considerably impact health and sensitivity, but patient is not at risk. | 0.31 |
| High (H) | If compromised, operations may lead to life-threatening health consequences or the loss/invasion of critical sensitive data. | 0.56 |

| DRE Thresholds | DRE Execution Rate |
|---|---|
| POT | 20.80% |
| PTT | 38.92% |
| PPT | 5.85% |

| Malware | Impacted Tasks | Implementation |
|---|---|---|
| Fuzz20, Fuzz100 | T6 = {P7, P8, P9, P10}, T7 = {P11}, T9 = {P13, P14}, T11 = {P16, P17} | Randomizes operations and data in the tasks that perform blood glucose sensor processing, insulin amount calculation, physician configuration updates, and data transfer and display. |
| File Manipulation | T6 = {P7, P8, P9, P10}, T9 = {P13, P14}, T11 = {P16, P17} | Manipulates tasks that perform data transfer and display. |
| Information Leakage | T2 = {P3}, T10 = {P15} | Utilizes the tasks that display alerts and warnings to covertly leak the corresponding (private) data. |
| Synthetic Malware 1 | T6 = {P7, P8, P9, P10}, T11 = {P16, P17} | Synthetic malware is injected into the critical task of insulin calculation. |
| Synthetic Malware 2 | T11 = {P16, P17} | Synthetic malware is injected into the task that transfers data to an external device. |
| Synthetic Malware 3 | T7 = {P11}, T8 = {P12} | Synthetic malware is injected into the tasks that change insulin pump settings based on manual inputs. |
| Synthetic Malware 4 | T9 = {P13, P14} | Synthetic malware is injected into the task that transfers data to the insulin pump display. |

| Malware-Impacted Tasks | Execution Time (ms) |
|---|---|
| T11 Fuzz 20 | 28.69 |
| T6 Fuzz 20 | 448.32 |
| T11 Fuzz 100 | 1.8 |
| T6 Fuzz 100 | 447.43 |
| T10 Info Leak | 279.23 |
| T9 File Manipulation | 0.34 |

| System Execution | FP Mode Rate (%), Estimated Threat Probability Deviation 0% | FP Mode Rate (%), Estimated Threat Probability Deviation −5% | FP Mode Rate (%), Estimated Threat Probability Deviation +5% |
|---|---|---|---|
| Normal | 0 | 0 | 0 |
| File Manipulation | 0 | 0 | 0 |
| Fuzz20 | 0 | 0 | 0.26 |
| Fuzz100 | 0 | 37.7 | 0 |
| Information Leakage | 0 | 0 | 0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rao, A.; Carreón, N.A.; Lysecky, R.; Rozenblit, J. FIRE: A Finely Integrated Risk Evaluation Methodology for Life-Critical Embedded Systems. Information 2022, 13, 487. https://doi.org/10.3390/info13100487
