S.A.D.E.—A Standardized, Scenario-Based Method for the Real-Time Assessment of Driver Interaction with Partially Automated Driving Systems

Abstract

1. Background

- The method should be able to identify problems related to HMI aspects as well as to system functionality aspects;

- The method should allow both a global analysis and a highly differentiated analysis of the interaction with the system in the investigated scenarios;

- The method should be adaptable to different test environments (e.g., driving simulation, test track or real-world driving);

- The method should allow a quick and efficient data assessment and analysis;

- The method should enable a standardized procedure with regard to the testing conditions, test scenarios and evaluation criteria;

- The method should allow an objective evaluation according to clear rules;

- The method should allow the assessment of driver interaction and experiences with the system directly in the situation, i.e., in real time;

- The method should be unobtrusive (i.e., it should not disturb the user).

2. Method Description

2.1. Evaluation Criteria—Which Behavior Should Be Observed?

2.1.1. Observation Categories of Driver Behavior

- System operation;

- Driving behavior;

- Monitoring behavior.

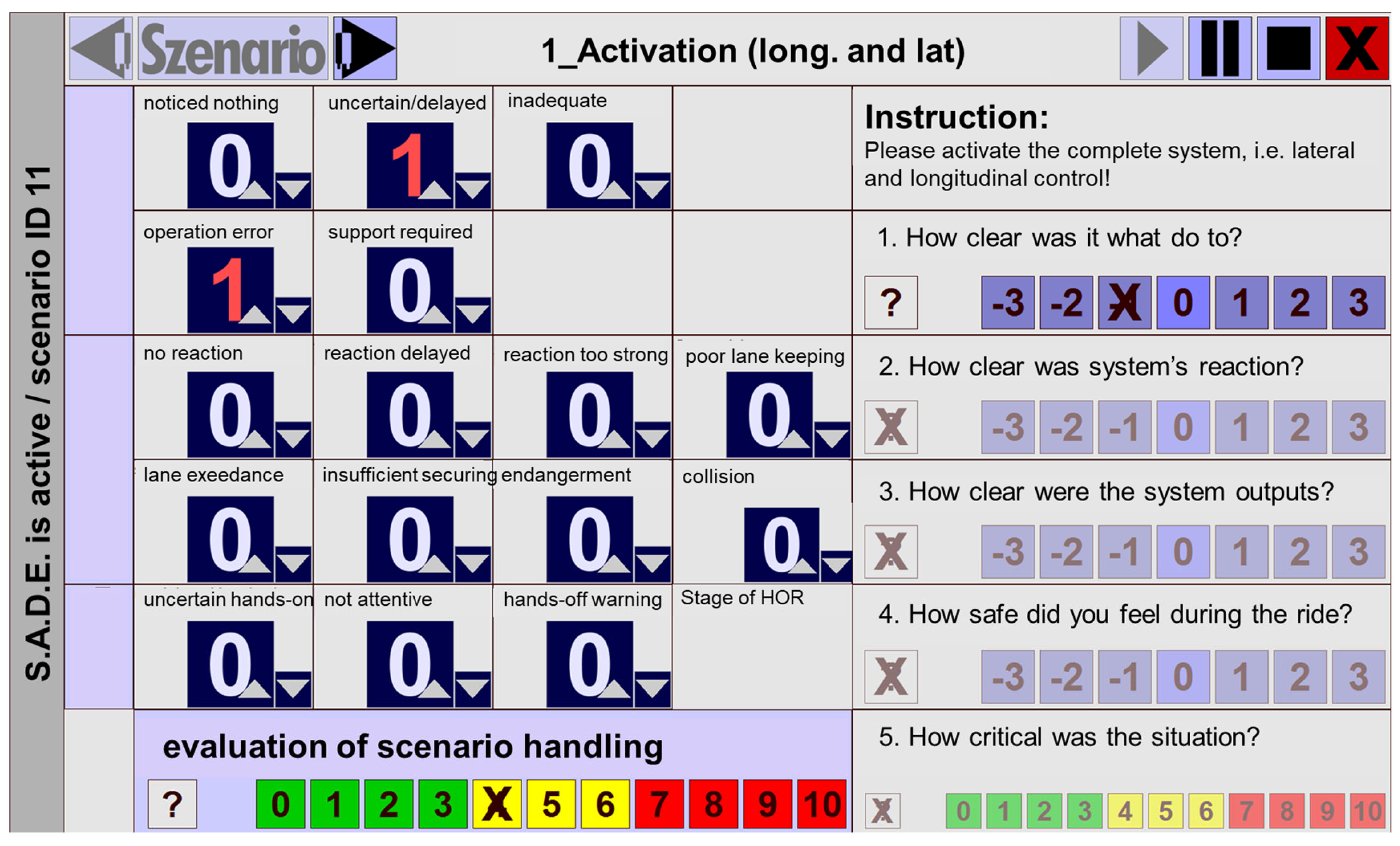

2.1.2. Subjective Driver Evaluation

- Comprehensibility of the required driver action: Does the driver know what to do in a certain situation, e.g., in order to activate the system, to deactivate it or to adequately react to a system limit?

- Understandability of system behavior: Does the driver understand why the system behaves in a certain way in a situation, e.g., when the lateral control is switched off?

- Comprehensibility of system outputs: Does the driver understand what the visual system indicators or acoustic signals mean?

- Perceived situation criticality: How critical does the driver perceive a certain situation to be, given the combination of the objective demands of the situation and the required reaction?

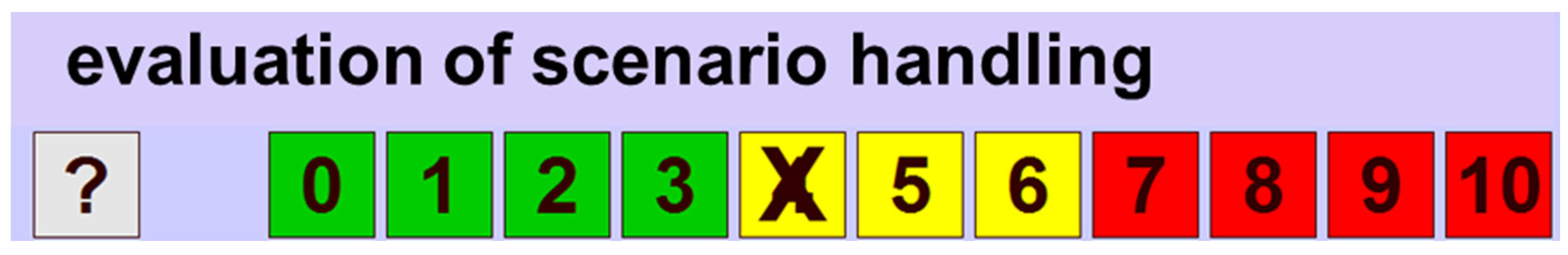

2.1.3. Global Rating of Scenario Handling

2.1.4. Rater Training

2.2. Definition of Relevant Test Scenarios

- System activation by the driver;

- System deactivation by the driver;

- Longer driving with active L2 system;

- Driver-initiated lane change;

- Temporary standby mode of lateral control;

- System limit and/or system malfunction (system limits may be detectable and predictable, or neither detectable nor predictable; e.g., in longitudinal control, the sensors fail to detect a stationary vehicle or another obstacle; in lateral control, the system cannot apply the steering torque needed to manage a situation such as a sharp bend).

2.3. Implementation of the Method in a Tablet App

2.4. Application of the Method

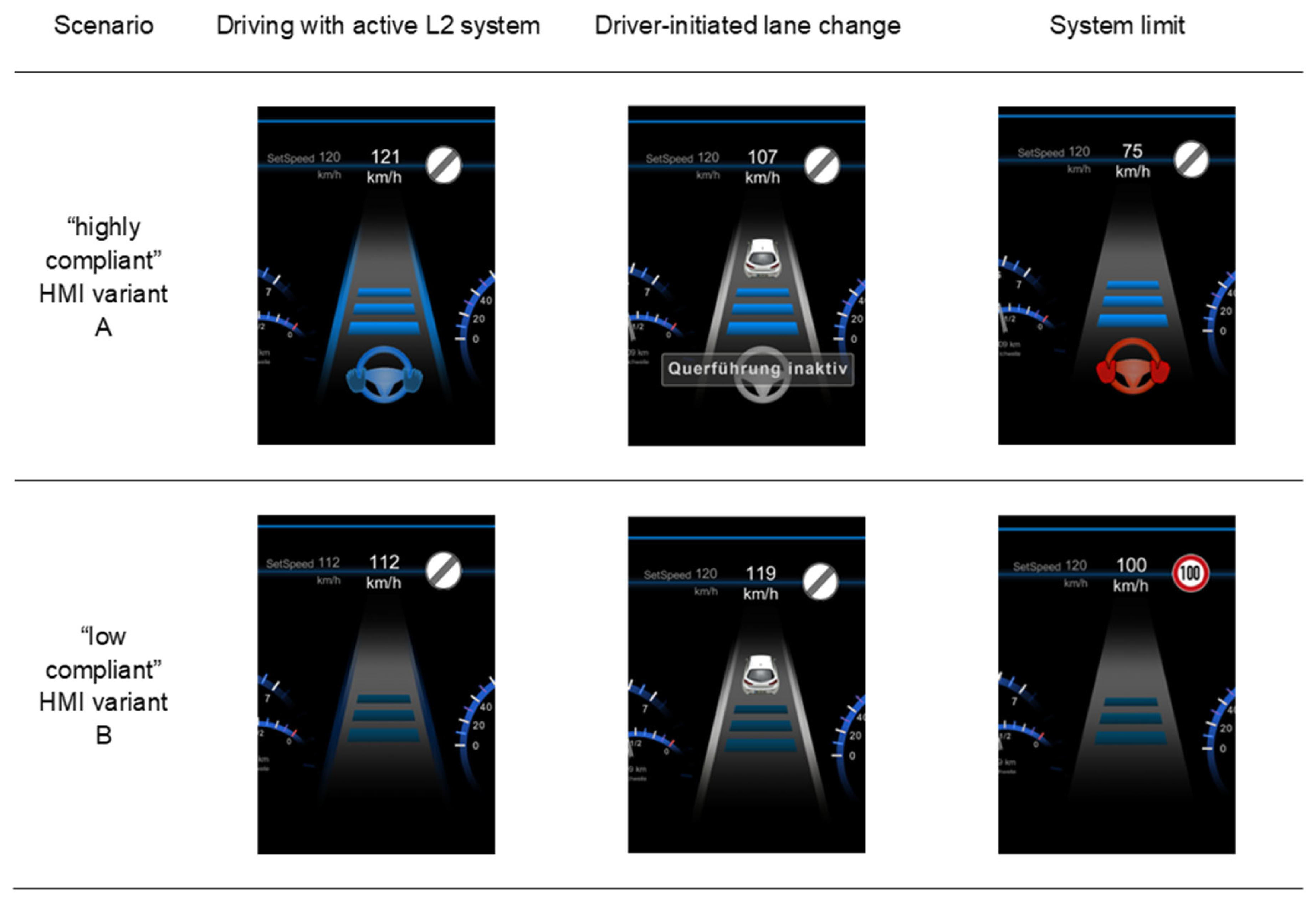

3. Results of an Explorative Study in the WIVW Driving Simulator

- System operation: operation logic regarding the activation of the longitudinal vehicle control (one-step vs. two-step activation);

- Control elements: labeling consistent vs. not consistent with the user manual;

- Visual indicators for active lateral vehicle control: with vs. without additional symbol of a steering wheel and text;

- Visual contrast: high vs. low contrast between foreground and background;

- Warning concept in situations with predictable system limits: presence vs. absence of a visual and acoustic warning.

- The designed differences in the two HMI variants affected drivers’ behavior and subjective experiences of the system assessed via the S.A.D.E. app only to some extent. For most of the analyses, only a tendency towards significant effects of the HMI variant was found. On the one hand, this could be due to the small sample size. On the other hand, it is possible that some design issues did not affect driver behavior strongly enough to produce real problems that the tool could detect.

- The following results were observed on the level of single observational categories (effects with p-values < 0.15 are defined as tendentially significant, effects with p-values < 0.05 are defined as significant):

- Effect of different warning strategies (with vs. without visual–acoustic warning) in scenario “sharp bend”: In HMI variant B (without the warning), a tendency towards worse lane-keeping behavior was observed (i.e., a higher frequency of problems in the category “driving behavior” was coded; p = 0.132). The subjective evaluation of the drivers revealed greater problems in system understanding for HMI variant B (p < 0.001).

- Effect of differences in system operation in scenario “first system activation” and scenario “deactivation”: The more complex system activation and deactivation in HMI variant B resulted in a higher frequency of problems in the category “system operation” for HMI variant B (especially more frequently coded events in the category “support required by the experimenter” in scenario “first system activation”: p = 0.019; a tendency towards this effect in scenario “system deactivation”: p = 0.140). In addition, there was a tendency towards a higher perceived subjective difficulty for system activation in variant B (p = 0.061).

- Effect of differences in visual contrast and visual indicators for the active lateral vehicle control subtask in the scenario “standby mode of lateral control”: The lower distinctiveness of system states in HMI variant B did not lead to observable differences in driving performance. However, the drivers from HMI variant B subjectively reported a tendency towards greater problems in identifying the system status based on the HMI output (p = 0.093).

- Global rating of the experimenter per scenario: The global rating of the experimenter differed in the scenarios “first system activation” (tendentially significant; p = 0.111), “second system activation” (significant; p = 0.024), “system deactivation” (tendentially significant; p = 0.064) and “obstacle” (significant; p = 0.041). No differences were found in the scenarios “lane change”, “standby mode of lateral control”, “sharp bend” and “active driving with the system”.

- HMI differences in the scenario “obstacle”: In contrast to expectations, a tendency towards worse driving behavior was observed for drivers in the group with HMI variant A, i.e., a higher frequency of drivers produced endangerments in terms of too low a minimum distance from the obstacle (p = 0.102). System behavior and HMI outputs did not differ between the HMI variants in this scenario. One possible explanation for this result could be that the highly compliant HMI variant A resulted in overtrust in the system, leading to the impression of reliable system performance. As a result, drivers may have taken longer to realize that the system would not be able to handle the situation. However, this interpretation can currently only remain on a hypothetical level. Additional questions regarding driver trust would have helped to support this explanation.
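The labeling rule stated in the results above (p < 0.05 as significant, p < 0.15 as tendentially significant) can be written down as a small helper. This is only a sketch: the function name `significance_label` and the label "not significant" for all remaining values are assumptions made for illustration, and the p-values in the usage example are those reported in the list above.

```python
def significance_label(p: float) -> str:
    """Label a p-value with the thresholds stated in the results list.

    The name of this helper and the wording "not significant" are
    illustrative assumptions, not taken from the S.A.D.E. app.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError(f"p-value must lie in [0, 1], got {p}")
    if p < 0.05:
        return "significant"
    if p < 0.15:
        return "tendentially significant"
    return "not significant"


# p-values reported in the results list above
reported = {
    "support required, first system activation": 0.019,
    "support required, system deactivation": 0.140,
    "driving behavior, sharp bend": 0.132,
    "status identification, standby mode": 0.093,
}

for effect, p in reported.items():
    print(f"{effect}: p = {p:.3f} -> {significance_label(p)}")
```

Because the thresholds are strict inequalities, a value of exactly 0.05 falls into the tendentially significant band and a value of exactly 0.15 is labeled not significant.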

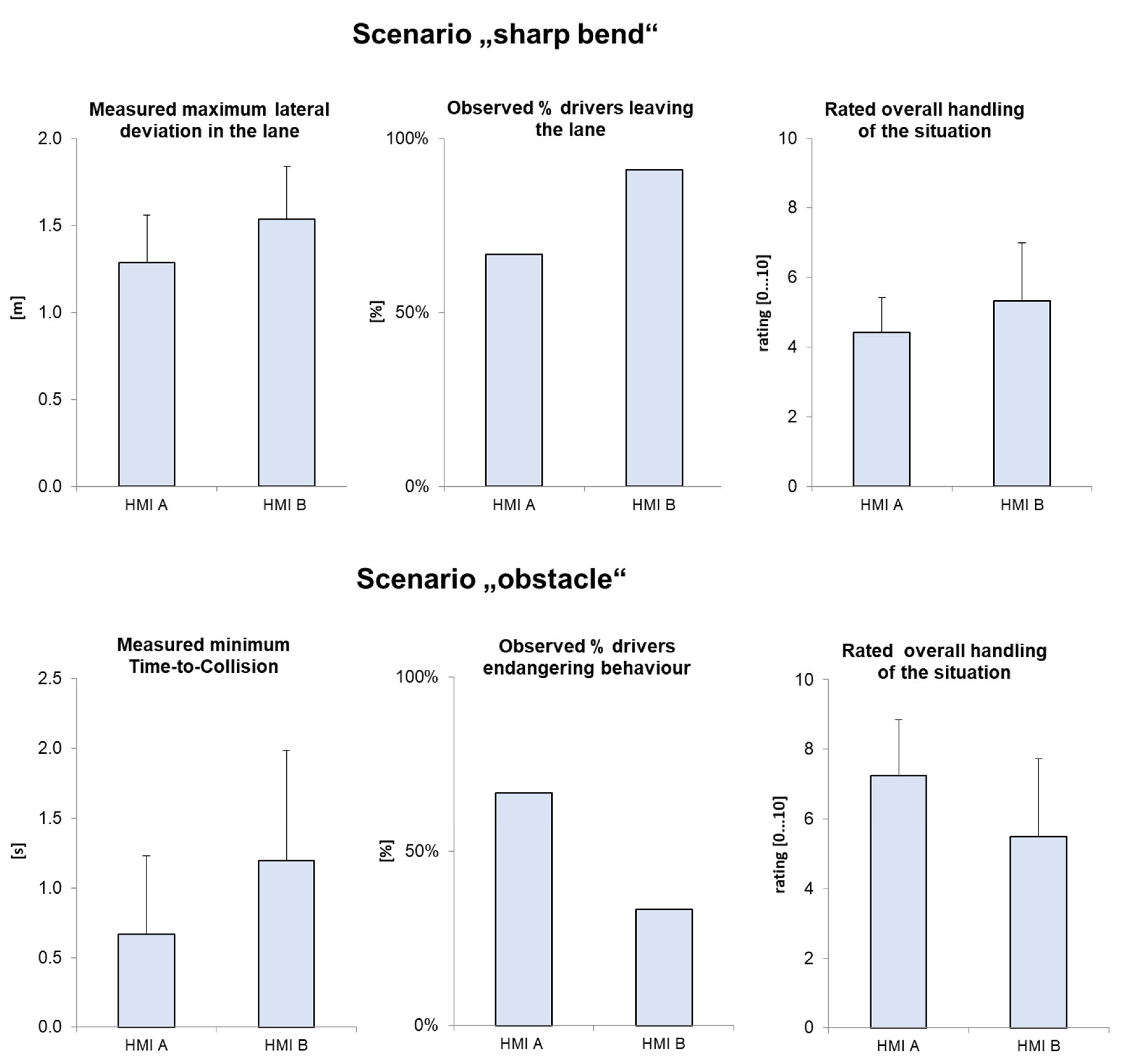

- The identified trends in the observed driving behavior based on the categorial evaluation in the S.A.D.E. app corresponded with the analysis of measured continuous driving data:

- The tendency towards more frequent lane exceedances (observed and coded via the S.A.D.E. app; p = 0.132) corresponded with a tendency towards lower ratings of vehicle handling (rated by the experimenter via the S.A.D.E. app, p = 0.145) and with a tendency towards higher maximum measured lateral deviations in the scenario “sharp bend” (measured via the simulation software; see Figure 4 above for a comparison of the measures in the scenario “sharp bend”, p = 0.058).

- The significantly more frequent delayed braking reactions and endangerments (observed and coded with the S.A.D.E. app, p = 0.012) corresponded with a significantly lower rating of situation handling (rated by the experimenter via the S.A.D.E. app, p = 0.024) and with tendentially significant smaller minimum time-to-collision values in the scenario “obstacle” (indicating more critical scenarios; measured via the simulation software; see Figure 4 below for a comparison of the measures in the scenario “obstacle”, p = 0.058).

- These results indicate that categorial observation can partly replace the very time-intensive and resource-intensive analysis of time-based measures without losing too much information. This is an advantage of the method when used in studies with real vehicles, since it is usually time-consuming and costly to collect the necessary continuous driving data for an evaluation.
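For comparison, the two continuous measures named above (minimum time-to-collision and maximum lateral deviation) could be derived from logged samples roughly as follows. This is a minimal sketch under stated assumptions: the source does not describe how the simulation software computes these values, so the function names, the sample layout (one gap, closing speed and lateral offset per time step) and the standard definition TTC = gap / closing speed are all assumptions made here, and the sample values are hypothetical.

```python
from typing import Optional, Sequence


def min_time_to_collision(
    gaps_m: Sequence[float], closing_speeds_mps: Sequence[float]
) -> Optional[float]:
    """Return the smallest time-to-collision (s) over all samples.

    Assumes TTC = gap / closing speed, which is only defined while the
    ego vehicle is closing in on the obstacle (closing speed > 0).
    Returns None if the vehicle never closes in.
    """
    if len(gaps_m) != len(closing_speeds_mps):
        raise ValueError("gap and closing-speed series must have equal length")
    ttcs = [gap / v for gap, v in zip(gaps_m, closing_speeds_mps) if v > 0]
    return min(ttcs) if ttcs else None


def max_lateral_deviation(offsets_m: Sequence[float]) -> float:
    """Return the largest absolute lateral offset (m) from the lane center."""
    if not offsets_m:
        raise ValueError("at least one lateral offset sample is required")
    return max(abs(x) for x in offsets_m)


# Hypothetical samples, for illustration only
print(min_time_to_collision([50.0, 30.0, 12.0], [10.0, 10.0, 8.0]))  # 1.5
print(max_lateral_deviation([0.1, -0.6, 0.3]))                       # 0.6
```

Both functions need a full time series per drive, which is the kind of continuous logging that the categorial coding in the app is meant to make partly unnecessary.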

4. Results from the First Application of the Method in the BASt Driving Simulator

5. Summary

6. Discussion of Limitations and Future Challenges

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Category | Observable Error/Problem | Description/Example |
|---|---|---|
| System operation (especially driver-initiated operations) | Noticed nothing | Driver does not notice changes in system state |
| | Uncertain/delayed operation | Driver shows uncertainties in system operation, e.g., searches for a certain button; driver takes a long time to perform an action |
| | Inadequate operation | Driver shows inadequate system operation, e.g., activates the system in situations where it should not be used |
| | Operation error | Driver initiates an incorrect operation, e.g., driver presses wrong button, driver presses correct button but not firmly enough, driver wants to activate the system when it is not possible/available |
| | Support by experimenter in operation required | Driver is not able to execute the expected action until a certain defined point in time so that the experimenter must give support to reach the designated system mode |
| Driving behavior (especially relevant to driver-initiated system operations and system-initiated transitions) | No reaction | Driver does not show any reaction in a given situation which would require one |
| | Reaction delayed | Driver reacts to an event with a clear delay, e.g., by a braking maneuver |
| | Reaction too strong | Driver reacts too strongly to an event, e.g., with oversteering |
| | Lane exceedance | The vehicle crosses the lane marking with one wheel |
| | Poor lane keeping | The vehicle visibly swerves within the lane to the right and/or to the left |
| | Insufficient securing behavior | Driver does not execute a control glance in the mirror in the case of a lane change |
| | Endangerment | Safety distance below 1 s to the front/side or behind/towards other vehicles |
| | Collision | Vehicle collides with another traffic participant or a stationary obstacle |
| Monitoring behavior | Uncertainties in hands-on behavior | Driver shows clear uncertainties as to whether hands should be left on the steering wheel or not, takes them away repeatedly or rests them too weakly on the wheel |
| | Not attentive enough | Driver shows clear signs of inattention, e.g., no control glances to HMI for longer time intervals, direction of attention towards NDRT (non-driving-related task) |
| | Hands-off warning | The hands-off warning was triggered by the system |
| | Stage of hands-off warning | The maximum stage of the hands-off warning was reached within a scenario |
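The coding scheme in the table above lends itself to a simple data structure. The sketch below is an illustration only and does not reflect how the S.A.D.E. app is actually implemented: `CODING_SCHEME`, `CodedEvent` and `problems_per_category` are hypothetical names, and the category keys are shortened versions of the table's category labels. It shows how coded events could be validated against the scheme and tallied per observation category.

```python
from dataclasses import dataclass
from typing import Dict, Iterable

# Observation categories and observable problems, copied from the table above.
# The dictionary layout itself is an assumption made for this sketch.
CODING_SCHEME = {
    "System operation": (
        "Noticed nothing",
        "Uncertain/delayed operation",
        "Inadequate operation",
        "Operation error",
        "Support by experimenter in operation required",
    ),
    "Driving behavior": (
        "No reaction",
        "Reaction delayed",
        "Reaction too strong",
        "Lane exceedance",
        "Poor lane keeping",
        "Insufficient securing behavior",
        "Endangerment",
        "Collision",
    ),
    "Monitoring behavior": (
        "Uncertainties in hands-on behavior",
        "Not attentive enough",
        "Hands-off warning",
        "Stage of hands-off warning",
    ),
}


@dataclass(frozen=True)
class CodedEvent:
    """One problem coded by the rater within a scenario (hypothetical type)."""

    scenario: str
    category: str
    problem: str

    def __post_init__(self) -> None:
        # Reject codes that are not part of the scheme.
        if self.problem not in CODING_SCHEME.get(self.category, ()):
            raise ValueError(f"{self.problem!r} is not defined for {self.category!r}")


def problems_per_category(events: Iterable[CodedEvent]) -> Dict[str, int]:
    """Count coded problems per observation category, including zero counts."""
    counts = {category: 0 for category in CODING_SCHEME}
    for event in events:
        counts[event.category] += 1
    return counts


# Hypothetical coded events, for illustration only
events = [
    CodedEvent("sharp bend", "Driving behavior", "Lane exceedance"),
    CodedEvent("sharp bend", "Driving behavior", "Poor lane keeping"),
    CodedEvent("first system activation", "System operation", "Operation error"),
]
print(problems_per_category(events))
```

Validating each event on construction keeps the tallies limited to the defined categories, in line with the stated requirement of an objective evaluation according to clear rules.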

| Error/Problem | Verbal Category | Numeric Category |
|---|---|---|
| | Scenario not handled successfully | 10 |
| | Not acceptable problems | 9 |
| | | 8 |
| | | 7 |
| | Error-prone, but acceptable | 6 |
| | | 5 |
| | | 4 |
| | good | 3 |
| | | 2 |
| | | 1 |
| | perfect | 0 |
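The relationship between numeric and verbal categories of the global rating can be sketched as a lookup. Note the assumption: the table lists a verbal label only next to the values 10, 9, 6, 3 and 0, and the sketch below reads each label as covering the unlabeled values beneath it (9 to 7, 6 to 4, 3 to 1). The function name `verbal_category` is hypothetical.

```python
def verbal_category(rating: int) -> str:
    """Map a global rating (0-10) to its verbal category.

    Assumes each verbal label in the table spans the unlabeled numeric
    values listed directly below it; this grouping is inferred from the
    table layout, not stated explicitly in the source.
    """
    if isinstance(rating, bool) or not isinstance(rating, int):
        raise ValueError("rating must be an integer")
    if not 0 <= rating <= 10:
        raise ValueError(f"rating must lie between 0 and 10, got {rating}")
    if rating == 10:
        return "Scenario not handled successfully"
    if rating >= 7:
        return "Not acceptable problems"
    if rating >= 4:
        return "Error-prone, but acceptable"
    if rating >= 1:
        return "good"
    return "perfect"


for rating in (0, 2, 5, 8, 10):
    print(rating, "->", verbal_category(rating))
```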


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Schömig, N.; Wiedemann, K.; Wiggerich, A.; Neukum, A. S.A.D.E.—A Standardized, Scenario-Based Method for the Real-Time Assessment of Driver Interaction with Partially Automated Driving Systems. Information 2022, 13, 538. https://doi.org/10.3390/info13110538