A Brief Analysis of Key Machine Learning Methods for Predicting Medicare Payments Related to Physical Therapy Practices in the United States

Abstract

1. Introduction

2. Background

2.1. Summary of Previous Work Using Random Forest Analysis to Predict Medicare Payments

2.2. Decision Tree Regression

2.3. K-Nearest Neighbors

2.4. Linear Generalized Additive Model

2.5. Comparison with Other Key Related Works

3. Materials and Methods

3.1. Description of the Dataset Used for Experimentation

3.2. Data Pre-Processing

4. Results

4.1. Results of Data Pre-Processing

4.2. Multiple Linear Regression Results

4.3. Decision Tree Regression Analysis and Results

4.4. Application of Decision Tree Regression

4.5. Random Forest Analysis and Results

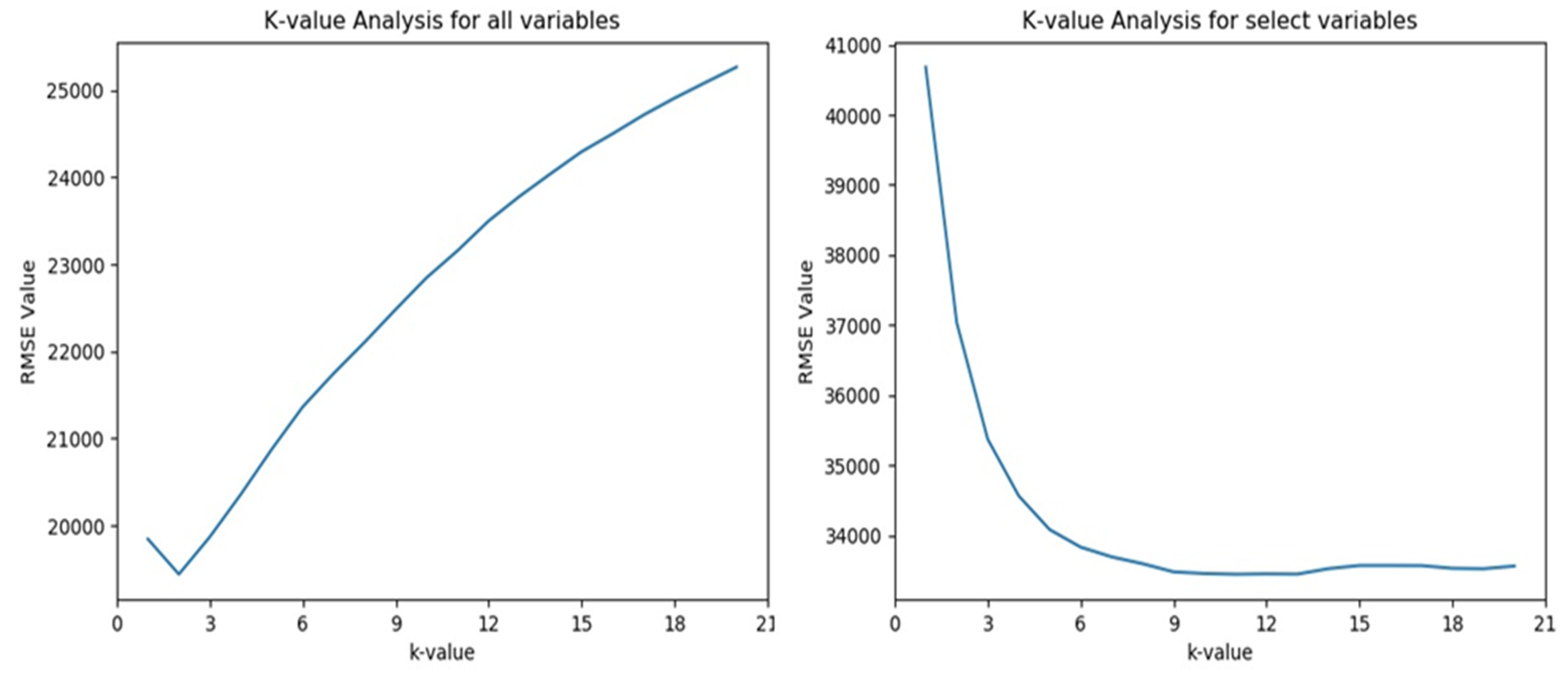

4.6. K-Nearest Neighbors Analysis and Results

4.7. Analysis of Linear Generalized Additive Model

5. Discussion

5.1. Discussion on Efficiency and Computational Time for the Methods Applied

5.2. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Müller, A.; Nothman, J.; Louppe, G.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

2. Zhang, T. Solving large scale linear prediction problems using stochastic gradient descent algorithms. In Proceedings of the Twenty-First International Conference on Machine Learning (ICML’04), Banff, AB, Canada, 4–8 July 2004; p. 116.

3. Podgorelec, V.; Kokol, P.; Stiglic, B.; Rozman, I. Decision Trees: An Overview and Their Use in Medicine. J. Med. Syst. 2002, 26, 445–463.

4. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

5. Zaman, M.F.; Hirose, H. Classification Performance of Bagging and Boosting Type Ensemble Methods with Small Training Sets. New Gener. Comput. 2011, 29, 277–292.

6. Rajaram, R.; Bilimoria, K.Y. Medicare. JAMA 2015, 314, 420.

7. Gornick, M.; Newton, M.; Hackerman, C. Factors affecting differences in Medicare reimbursements for physicians’ services. Health Care Financ. Rev. 1980, 1, 15–37.

8. Gurupur, V.P.; Kulkarni, S.A.; Liu, X.; Desai, U.; Nasir, A. Analysing the power of deep learning techniques over the traditional methods using Medicare utilization and provider data. J. Exp. Theor. Artif. Intell. 2018, 31, 99–115.

9. Arnold, D.; Wagner, P.; Baayen, R.H. Using generalized additive models and random forests to model prosodic prominence in German. In Proceedings of the 14th Annual Conference of the International Speech Communication Association (INTERSPEECH 2013), Lyon, France, 25–29 August 2013; Bimbot, F., Ed.; International Speech Communication Association: Lyon, France, 2013; pp. 272–276.

10. O’Donnell, B.E.; Schneider, K.M.; Brooks, J.M.; Lessman, G.; Wilwert, J.; Cook, E.; Martens, G.; Wright, K.; Chrischilles, E.A. Standardizing Medicare Payment Information to Support Examining Geographic Variation in Costs. Medicare Medicaid Res. Rev. 2013, 3, E1–E21.

11. Futoma, J.; Morris, J.; Lucas, J. A comparison of models for predicting early hospital readmissions. J. Biomed. Inform. 2015, 56, 229–238.

12. CMS Medicare Fee for Service Payment Presentation. Available online: https://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/PhysicianFeedbackProgram/Downloads/122111_Slide_Presentation.pdf (accessed on 7 November 2020).

13. Gurupur, V.P.; Tanik, M.M. A System for Building Clinical Research Applications using Semantic Web-Based Approach. J. Med. Syst. 2010, 36, 53–59.

14. Long, W.J.; Griffith, J.L.; Selker, H.P.; D’Agostino, R.B. A Comparison of Logistic Regression to Decision-Tree Induction in a Medical Domain. Comput. Biomed. Res. 1993, 26, 74–97.

15. Loh, W.-Y. Fifty Years of Classification and Regression Trees. Int. Stat. Rev. 2014, 82, 329–348.

16. Torgo, L. Inductive Learning of Tree-Based Regression Models; Universidade do Porto. Reitoria: Porto, Portugal, 1999.

17. Khalilia, M.; Chakraborty, S.; Popescu, M. Predicting disease risks from highly imbalanced data using random forest. BMC Med. Inform. Decis. Mak. 2011, 11, 51.

18. Breiman, L.; Friedman, J.H.; Olshen, R.A.; Stone, C.J. Classification and Regression Trees; CRC Press: Boca Raton, FL, USA, 2017; p. 358.

19. Bellman, R. Dynamic Programming; Princeton University Press: Princeton, NJ, USA, 2010; p. 340.

20. Williams, C.; Wan, T.T.H. A remote monitoring program evaluation: A retrospective study. J. Eval. Clin. Pract. 2016, 22, 982–988.

21. Zhang, S.; Li, X.; Zong, M.; Zhu, X.; Wang, R. Efficient kNN Classification with Different Numbers of Nearest Neighbors. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 1774–1785.

22. Cherif, W. Optimization of K-NN algorithm by clustering and reliability coefficients: Application to breast-cancer diagnosis. Procedia Comput. Sci. 2018, 127, 293–299.

23. Hastie, T.J.; Tibshirani, R.J. Generalized Additive Models; Chapman & Hall/CRC: New York, NY, USA; Boca Raton, FL, USA, 1990.

24. Ilseven, E.; Gol, M. A Comparative Study on Feature Selection Based Improvement of Medium-Term Demand Forecast Accuracy. In Proceedings of the 2019 IEEE Milan PowerTech, Milan, Italy, 23–27 June 2019; pp. 1–6.

25. Wu, A.S.; Liu, X.; Norat, R. A Genetic Algorithm Approach to Predictive Modeling of Medicare Payments to Physical Therapists. In Proceedings of the 32nd International Florida Artificial Intelligence Research Society Conference (FLAIRS-32), Honolulu, HI, USA, 27 January–1 February 2019; pp. 311–316.

26. Gurupur, V.P.; Gutierrez, R. Designing the Right Framework for Healthcare Decision Support. J. Integr. Des. Process. Sci. 2016, 20, 7–32.

27. Zuckerman, R.B.; Sheingold, S.H.; Orav, E.J.; Ruhter, J.; Epstein, A.M. Readmissions, Observation, and the Hospital Readmissions Reduction Program. N. Engl. J. Med. 2016, 374, 1543–1551.

28. Schober, P.; Boer, C.; Schwarte, L.A. Correlation Coefficients: Appropriate Use and Interpretation. Anesth. Analg. 2018, 126, 1763–1768.

29. Bertsimas, D.; Dunn, J.; Paschalidis, A. Regression and classification using optimal decision trees. In Proceedings of the 2017 IEEE MIT Undergraduate Research Technology Conference (URTC), Cambridge, MA, USA, 3–5 November 2017; pp. 1–4.

30. Gurupur, V.P.; Jain, G.P.; Rudraraju, R. Evaluating student learning using concept maps and Markov chains. Expert Syst. Appl. 2015, 42, 3306–3314.

31. Kearns, M.; Mansour, Y. On the Boosting Ability of Top-Down Decision Tree Learning Algorithms. J. Comput. Syst. Sci. 1999, 58, 109–128.

32. Morid, M.A.; Kawamoto, K.; Ault, T.; Dorius, J.; Abdelrahman, S. Supervised Learning Methods for Predicting Healthcare Costs: Systematic Literature Review and Empirical Evaluation. In Proceedings of the AMIA Annual Symposium 2017, Washington, DC, USA, 4–8 November 2017.

33. Kong, Y.; Yu, T. A Deep Neural Network Model using Random Forest to Extract Feature Representation for Gene Expression Data Classification. Sci. Rep. 2018, 8, 16477.

34. Robinson, J.W. Regression Tree Boosting to Adjust Health Care Cost Predictions for Diagnostic Mix. Health Serv. Res. 2008, 43, 755–772.

35. Seligman, B.; Tuljapurkar, S.; Rehkopf, D. Machine learning approaches to the social determinants of health in the health and retirement study. SSM Popul. Health 2018, 4, 95–99.

36. Hempelmann, C.F.; Sakoglu, U.; Gurupur, V.P.; Jampana, S. An entropy-based evaluation method for knowledge bases of medical information systems. Expert Syst. Appl. 2016, 46, 262–273.

37. Lemon, S.C.; Roy, J.; Clark, M.A.; Friedmann, P.D.; Rakowski, W. Classification and regression tree analysis in public health: Methodological review and comparison with logistic regression. Ann. Behav. Med. 2003, 26, 172–181.

38. Gurupur, V.P.; Sakoglu, U.; Jain, G.P.; Tanik, U.J. Semantic requirements sharing approach to develop software systems using concept maps and information entropy: A Personal Health Information System example. Adv. Eng. Softw. 2014, 70, 25–35.

39. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

40. Biggio, B.; Roli, F. Wild patterns: Ten years after the rise of adversarial machine learning. Pattern Recognit. 2018, 84, 317–331.

41. Xiao, H.; Biggio, B.; Nelson, B.; Xiao, H.; Eckert, C.; Roli, F. Support vector machines under adversarial label contamination. Neurocomputing 2015, 160, 53–62.

| Research Study | Analysis Techniques | Results |
|---|---|---|
| This study | Multiple linear regression vs. decision tree vs. random forest | Random forest and decision tree regression outperformed multiple linear regression in predicting Medicare physical therapy payments. |
| Loh 2014 [15] | Decision tree analysis | Decision tree analysis was effective for predicting a continuous variable (baseball player salaries). |
| Long 1993 [14] | Decision tree analysis vs. logistic regression | Logistic regression slightly outperformed decision tree analysis in classifying acute cardiac ischemia. |
| Futoma 2015 [11] | Logistic regression, logistic regression with multi-step variable selection, penalized logistic regression, random forest, and support vector machine | Random forests outperformed the other methods compared in predicting hospital readmissions. |

| Index | Alias Name | Feature Name | Correlation with TotalPayment |
|---|---|---|---|
| 27 | TotalPayment | Total Medicare Standardized Payment Amount | 1.000000 |
| 7 | PatientProxy | Proxy for # of new patients | 0.796309 |
| 3 | #MedicareBeneficiaries | Number of Medicare Beneficiaries | 0.747528 |
| 5 | MedicareBenefit | Medicare standardized amount benefit | 0.474725 |
| 2 | HCPCS | Number of HCPCS | 0.335347 |
| 6 | PhysicalAgentPercentage | Physical agent percentage | 0.205529 |
| 8 | BeneficiaryAge | Average Age of Beneficiaries | 0.175424 |
| 9 | HCCBeneficiary | Average HCC Risk Score of Beneficiaries | 0.170843 |
| 14 | RiskAdjustedCost | Standardized Risk-Adjusted Per Capita Medicare Costs | 0.135006 |
| 17 | MedicareBenefitPopulation | Percent Medicare Fee-For-Service (FFS) benefit pop 2014 | 0.130285 |
| 18 | AverageAgeFee | Medicare FFS Benefit Average Age Fee for Service 2014 | 0.128929 |
| 20 | AverageHCCScoreFee | Medicare FFS Benefit Avg HCC Score Fee for Service 2014 | 0.120106 |
| 23 | OldInDeepPoverty | Percent of persons 65 or older in Deep Poverty 2014 | 0.080313 |
| 19 | FemaleMedicareBenefit | Percent of Medicare FFS Benefit Female 14 | 0.064783 |
| 13 | LargeMetroArea | Large metro area | 0.012649 |
| 12 | NonMetroArea | Non-metropolitan area or missing (9 counties missing) | 0.012599 |
| 21 | MedicareBeneficiaryforMedicaid | Percent Medicare Beneficiary Eligible for Medicaid 14 | 0.002792 |
| 11 | SmallMetroArea | Small metro area | −0.000148 |
| 22 | MedianHouseholdIncome2014 | Median Household Income 2014 | −0.008139 |
| 10 | MiSizedMetroArea | Mid-sized metro area | −0.024261 |
| 1 | ReportingDPTDegree | Reporting DPT degree | −0.048336 |
| 16 | PhysicalTherapistsPer10000 | Physical Therapists per 10,000 pop 2009 | −0.068251 |
| 15 | PrimaryCarePer10000 | Primary care Physicians per 10,000 pop 2014 | −0.081168 |
| 24 | PhysicalTherapsistsPerBeneficiary | Physical Therapists per beneficiaries ratio | −0.084612 |
| 4 | ChargeToAllowedAmount | Charge to allowed amount ratio | −0.098310 |
| 0 | Female | Female gender | −0.175792 |
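The correlation table ranks each candidate feature by its correlation with TotalPayment. The paper does not say which coefficient or library produced these values, so the sketch below assumes Pearson's r, implemented in plain Python, and runs it on a tiny invented dataset. The column names are borrowed from the Alias Name column; the values are hypothetical and not taken from the study's data.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def rank_by_correlation(columns, target):
    """Return (name, r) pairs sorted from most positive to most negative r with the target."""
    scores = {name: pearson_r(values, columns[target]) for name, values in columns.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical toy data: names follow the Alias Name column, values are invented.
data = {
    "TotalPayment": [12000.0, 25000.0, 31000.0, 8000.0, 40000.0],
    "PatientProxy": [30, 70, 85, 20, 110],
    "BeneficiaryAge": [71.2, 73.5, 70.9, 72.0, 74.1],
    "Female": [1, 0, 0, 1, 0],
}

for name, r in rank_by_correlation(data, "TotalPayment"):
    print(f"{name:15s} {r:+.6f}")
```

The target correlates perfectly with itself, which is why TotalPayment heads the table with 1.000000. On a real dataframe, pandas `DataFrame.corrwith` would produce the same kind of column-wise ranking.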

| Model and Type | Dataset | Root Mean Square Error (RMSE) | R² Score |
|---|---|---|---|
| Multiple linear regression (parametric) | All variables and unscaled data | 15,349.55 | 0.83 |
| Multiple linear regression (parametric) | Selected variables and unscaled data | 32,172.84 | 0.24 |
| Linear generalized additive model (semiparametric) | All variables and unscaled data | 13,469.41 | 0.87 |
| Linear generalized additive model (semiparametric) | Selected variables and unscaled data | 31,057.77 | 0.29 |
| Decision tree regression (nonparametric) | All variables and unscaled data | 8204.61 | 0.95 |
| Decision tree regression (nonparametric) | Selected variables and unscaled data | 32,587.74 | 0.22 |
| Random forest regression (nonparametric) | All variables and unscaled data | 3739.26 | 0.99 |
| Random forest regression (nonparametric) | Selected variables and unscaled data | 30,685.62 | 0.30 |
| K-nearest neighbors (nonparametric) | All variables and unscaled data | 19,438 | 0.72 |
| K-nearest neighbors (nonparametric) | Selected variables and unscaled data | 33,445.64 | 0.17 |
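The model comparison table reports two metrics. RMSE is the square root of the mean squared difference between observed and predicted payments, so it is expressed in the same units as TotalPayment; R² is 1 − SS_res/SS_tot, the share of the target's variance explained by the model. The paper does not show its evaluation code, so the sketch below only implements these standard definitions in plain Python on hypothetical numbers. scikit-learn's `r2_score`, and the square root of `mean_squared_error`, compute the same quantities.

```python
from math import sqrt


def rmse(y_true, y_pred):
    """Root mean square error: square root of the mean squared residual."""
    n = len(y_true)
    return sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)  # total sum of squares around the mean
    return 1.0 - ss_res / ss_tot


# Hypothetical observed payments and model predictions, invented for illustration.
actual = [10000.0, 20000.0, 30000.0, 40000.0]
predicted = [12000.0, 18000.0, 31000.0, 39000.0]

print(f"RMSE = {rmse(actual, predicted):,.2f}")
print(f"R2   = {r_squared(actual, predicted):.3f}")
```

A lower RMSE and a higher R² both indicate a better fit, which is how the rows of the table compare. R² can also be negative when a model does worse than always predicting the mean.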

| Analysis Technique | Computational Time (ms) |
|---|---|
| Multiple linear regression | 63.558 |
| Decision tree | 532.042 |
| Random forest | 288,897.591 |
| K-nearest neighbors | 950.597 |
| Linear GAM | 1134.083 |
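The computational times above are wall-clock measurements in milliseconds. The paper does not specify exactly what was timed (training only, or training plus prediction) or on what hardware, so the sketch below only illustrates one common way to take such a measurement in Python with `time.perf_counter`. The `time_ms` helper and the brute-force k-nearest-neighbors workload (`knn_predict`, run on random data) are hypothetical illustrations, not the authors' code.

```python
import random
import time


def time_ms(fn, *args, repeats=5):
    """Call fn(*args) `repeats` times; return (last result, fastest run in milliseconds)."""
    best = float("inf")
    result = None
    for _ in range(repeats):
        start = time.perf_counter()
        result = fn(*args)
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        best = min(best, elapsed_ms)
    return result, best


def knn_predict(train_x, train_y, query, k=3):
    """Brute-force k-NN regression: mean target of the k nearest rows (squared Euclidean distance)."""
    dists = sorted(
        (sum((a - b) ** 2 for a, b in zip(row, query)), y)
        for row, y in zip(train_x, train_y)
    )
    return sum(y for _, y in dists[:k]) / k


# Hypothetical random data standing in for provider records.
random.seed(0)
train_x = [[random.random() for _ in range(5)] for _ in range(2000)]
train_y = [sum(row) * 1000.0 for row in train_x]
query = [0.5] * 5

pred, ms = time_ms(knn_predict, train_x, train_y, query)
print(f"prediction = {pred:.1f}, best of 5 runs = {ms:.3f} ms")
```

Taking the fastest of several runs damps noise from other processes; reporting the mean over runs is an equally common choice. Absolute times depend heavily on hardware and implementation, so usually only the relative ordering of methods is comparable across setups.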

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kulkarni, S.A.; Pannu, J.S.; Koval, A.V.; Merrin, G.J.; Gurupur, V.P.; Nasir, A.; King, C.; Wan, T.T.H. A Brief Analysis of Key Machine Learning Methods for Predicting Medicare Payments Related to Physical Therapy Practices in the United States. Information 2021, 12, 57. https://doi.org/10.3390/info12020057