A Bioinspired Neural Network-Based Approach for Cooperative Coverage Planning of UAVs

Abstract

1. Introduction

Current Work

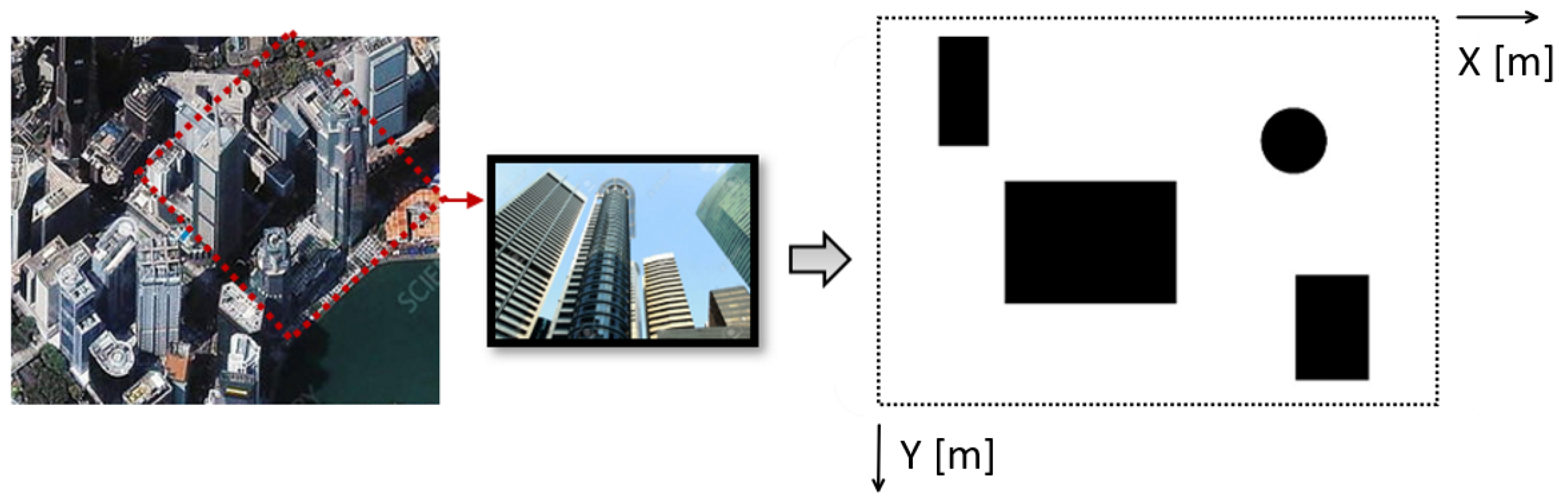

2. Assumptions, Notation, and Problem Description

- The map is known a priori. As a consequence, the dimensions of the search space (i.e., the map) and the positions of the obstacles are known;

- The size of the fleet is fixed before the coverage planning task starts. However, the proposed approach is tested in different scenarios with fleets of 3 to 10 vehicles;

- The map is considered fully covered when at least 99% of the map is visited.
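The 99% full-coverage criterion can be checked on an occupancy grid. The sketch below makes several assumptions that the text does not fix. The map is a boolean grid of obstacle-free cells. A cell counts as visited once it falls inside a UAV's square footprint. The footprint of side CS is approximated by `(2 * half + 1)` grid cells. The helper names are hypothetical.

```python
import numpy as np


def mark_footprint(visited, free, row, col, half):
    """Mark obstacle-free cells inside a square footprint centred on (row, col).

    The footprint spans (2 * half + 1) cells per side, clipped at the map border.
    """
    r0, r1 = max(row - half, 0), min(row + half + 1, visited.shape[0])
    c0, c1 = max(col - half, 0), min(col + half + 1, visited.shape[1])
    visited[r0:r1, c0:c1] |= free[r0:r1, c0:c1]


def coverage_ratio(visited, free):
    """Fraction of obstacle-free cells that have been visited."""
    return visited[free].sum() / free.sum()


def is_fully_covered(visited, free, threshold=0.99):
    """Apply the 99% full-coverage criterion."""
    return coverage_ratio(visited, free) >= threshold
```

For example, consider a 10 × 10 grid with a 2 × 2 obstacle block, which leaves 96 free cells. A single 3 × 3 footprint in a corner then gives a coverage of 9/96, well below the threshold.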

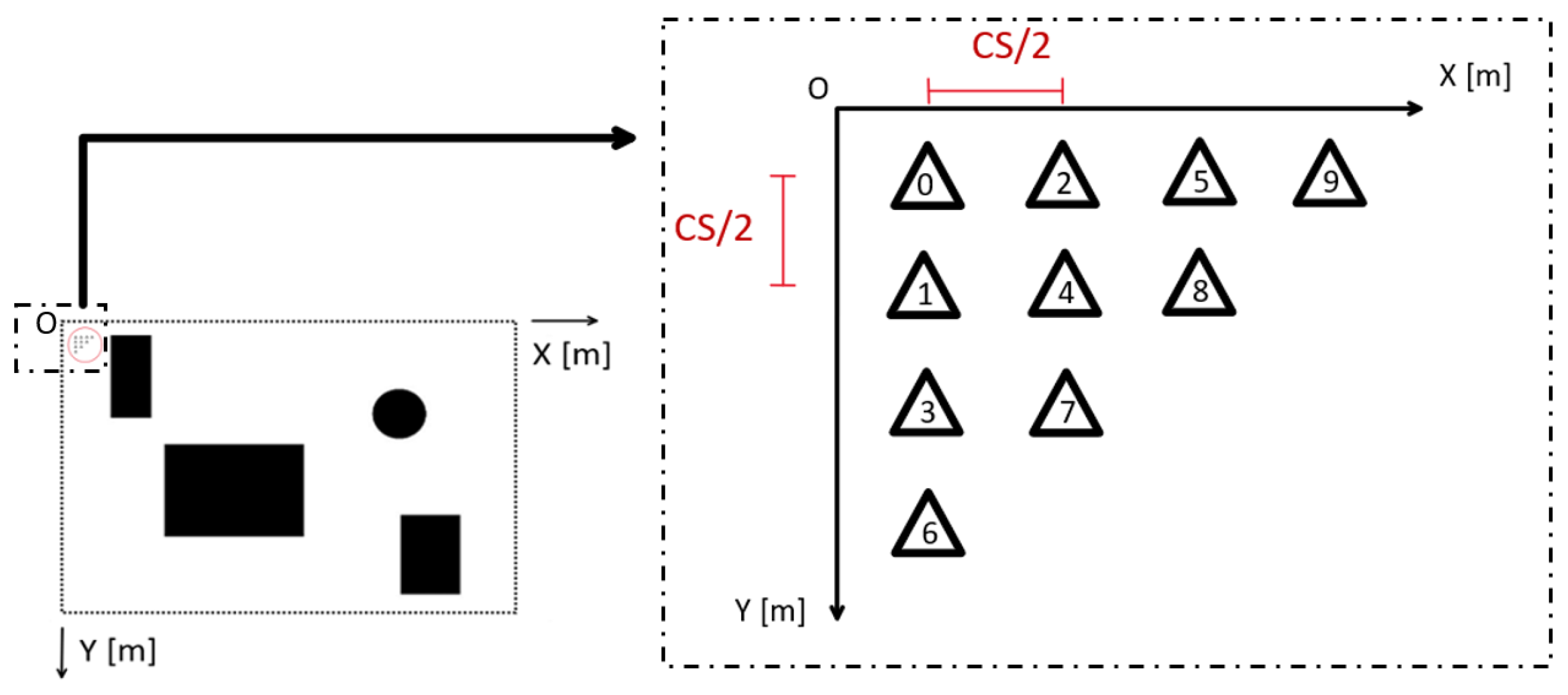

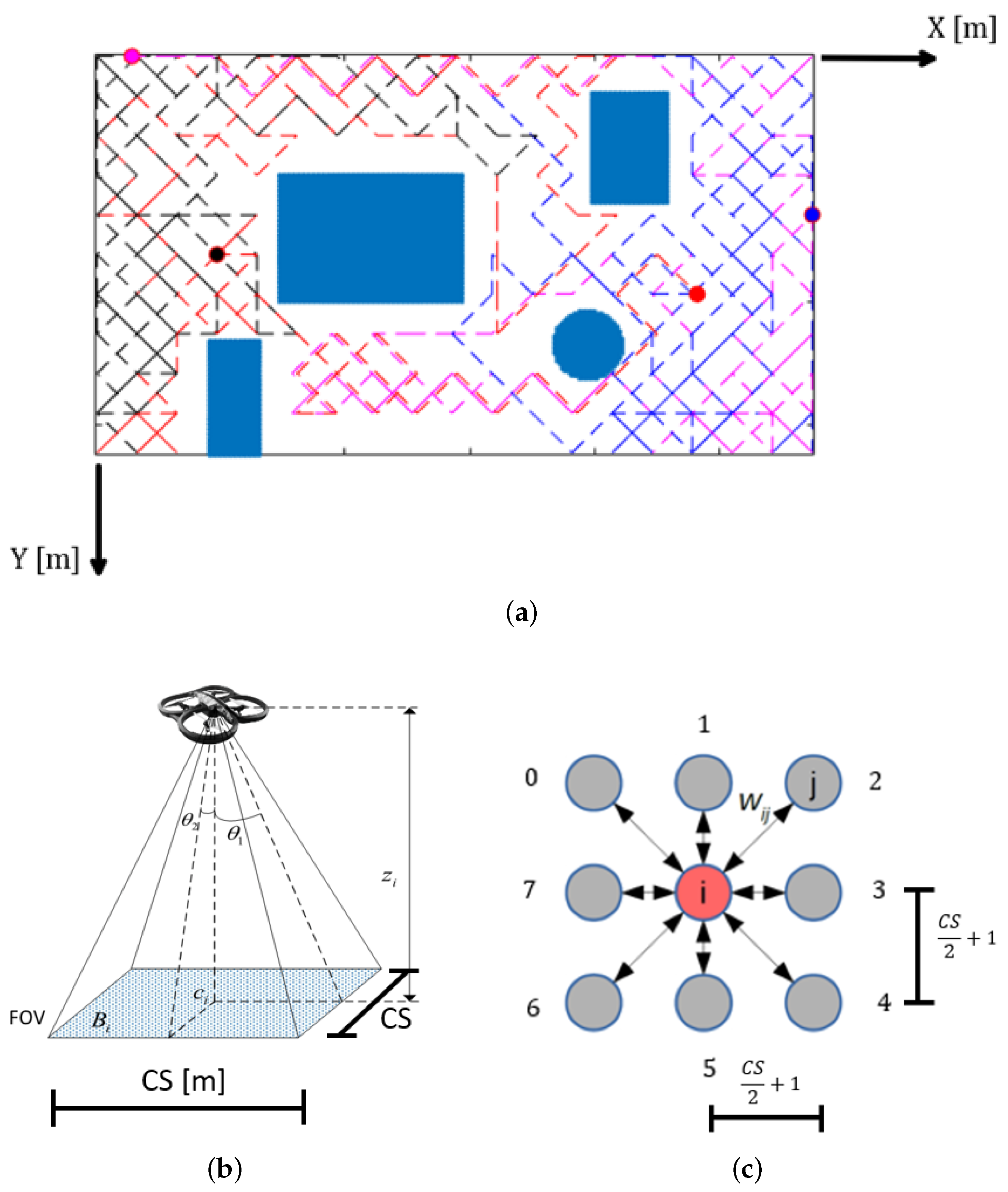

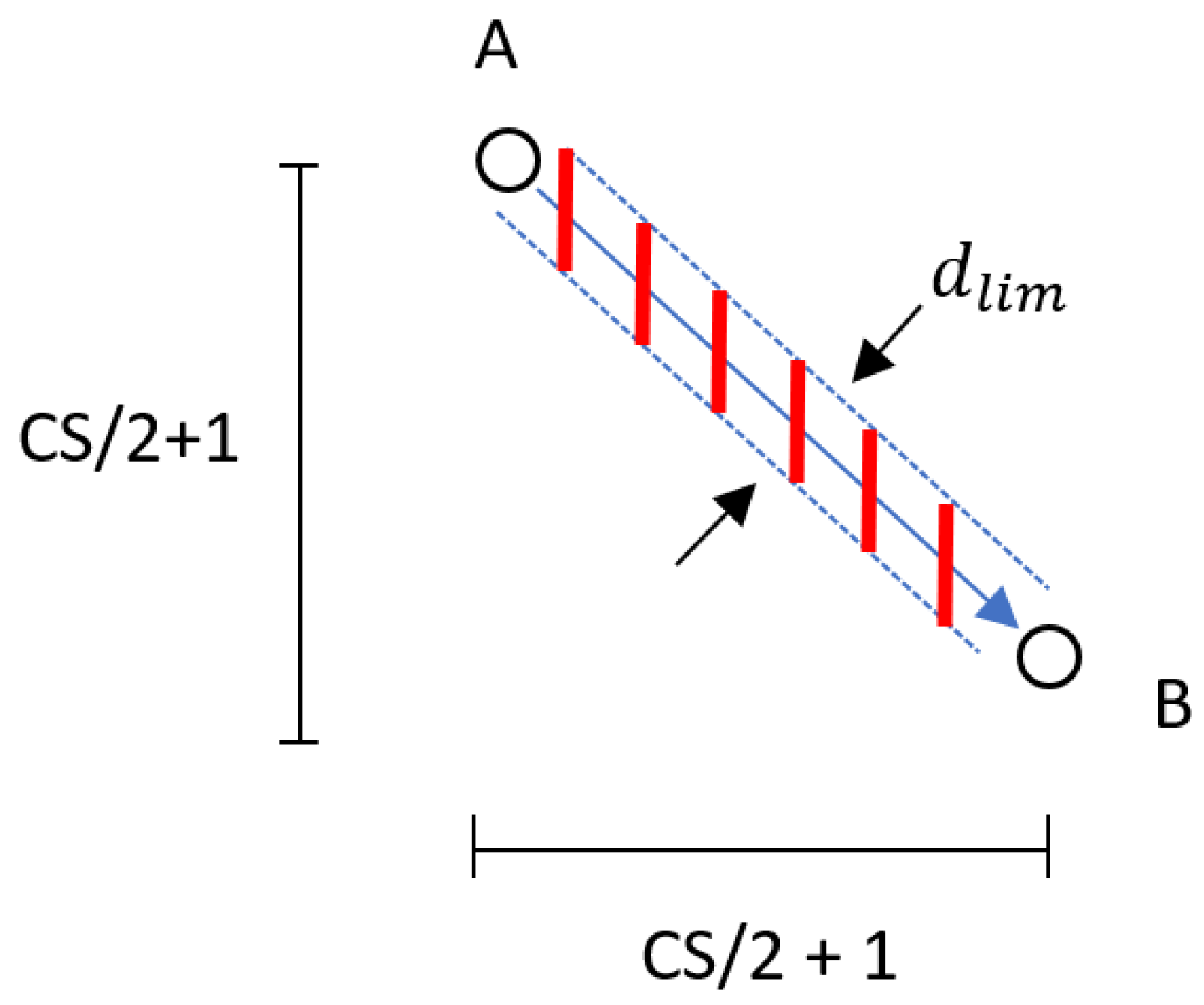

3. Proposed Approach

Pseudocode

Algorithm 1: The main algorithm

Algorithm 2: The ComputeCostmap() function
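The bodies of the two algorithms are not reproduced here. One well-known bioinspired neural network for coverage is the shunting model of Yang and Luo (2004) for complete coverage path planning. In that model, each grid cell is a neuron. Unvisited cells excite the network, obstacles inhibit it, and the resulting activity landscape can be read as an inverse cost map.

The sketch below implements that published model. It is not the authors' ComputeCostmap() function. The cell labels, the parameter values (A, B, D, E, mu, dt), and the helper names are illustrative assumptions. The greedy `next_cell` also omits the turning-angle term of Yang and Luo's selection rule.

```python
import numpy as np

# Hypothetical cell labels used only in this sketch.
UNVISITED, VISITED, OBSTACLE = 0, 1, 2

# 8-connected neighbourhood offsets.
NEIGHBOURS = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]


def external_input(state, E=100.0):
    """I_i = +E for unvisited cells, -E for obstacles, 0 for visited cells."""
    I = np.zeros(state.shape)
    I[state == UNVISITED] = E
    I[state == OBSTACLE] = -E
    return I


def step_activity(x, state, A=10.0, B=1.0, D=1.0, mu=1.0, E=100.0, dt=0.005):
    """One explicit Euler step of the shunting equation
        dx_i/dt = -A x_i + (B - x_i)([I_i]^+ + sum_j w_ij [x_j]^+) - (D + x_i)[I_i]^-
    with w_ij = mu / |q_i - q_j| over the 8-connected neighbours."""
    I = external_input(state, E)
    rows, cols = x.shape
    padded = np.pad(np.maximum(x, 0.0), 1)  # zero activity outside the map
    lateral = np.zeros_like(x)
    for dr, dc in NEIGHBOURS:
        w = mu / np.hypot(dr, dc)
        lateral += w * padded[1 + dr:1 + dr + rows, 1 + dc:1 + dc + cols]
    excitation = np.maximum(I, 0.0) + lateral
    inhibition = np.maximum(-I, 0.0)
    dx = -A * x + (B - x) * excitation - (D + x) * inhibition
    return x + dt * dx


def next_cell(x, state, pos):
    """Greedy choice: move to the obstacle-free neighbour with the highest activity."""
    r, c = pos
    rows, cols = x.shape
    best, best_val = pos, -np.inf
    for dr, dc in NEIGHBOURS:
        nr, nc = r + dr, c + dc
        if 0 <= nr < rows and 0 <= nc < cols and state[nr, nc] != OBSTACLE:
            if x[nr, nc] > best_val:
                best, best_val = (nr, nc), x[nr, nc]
    return best
```

The shunting form keeps every activity bounded in [-D, B]. As a result, unvisited cells act as attractors and obstacles as repellers, without any explicit global search.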

4. Simulations

4.1. Assumptions

- The positions of all UAVs are always known; therefore, the cost map C, the mean distance between vehicles, the standard deviation of the distances between vehicles, and the contributions can be computed by the centralized coordination unit at each time step;

- The UAVs fly at a fixed altitude, and their field of view is constant, as defined in Figure 3b;

- A known map is always assumed. However, the proposed approach can also be used in unknown environments, provided that obstacles are detected with onboard sensors and the map is updated during exploration.
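Because all UAV positions are known, the coordination unit can recompute the inter-vehicle distance statistics at every time step. The sketch below makes three assumptions the text does not specify: 2-D positions, Euclidean distances over all UAV pairs, and the population standard deviation. The function name is hypothetical.

```python
import itertools
import math
import statistics


def inter_uav_distance_stats(positions):
    """Return (mean, population std) of the distances between every pair of UAVs."""
    dists = [math.dist(p, q) for p, q in itertools.combinations(positions, 2)]
    return statistics.mean(dists), statistics.pstdev(dists)


# Three UAVs at the corners of a 3-4-5 right triangle.
mean_d, std_d = inter_uav_distance_stats([(0.0, 0.0), (3.0, 0.0), (0.0, 4.0)])
```

With n UAVs this evaluates n(n-1)/2 distances per step. For the 3 to 10 vehicles considered here, that is at most 45 pairs.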

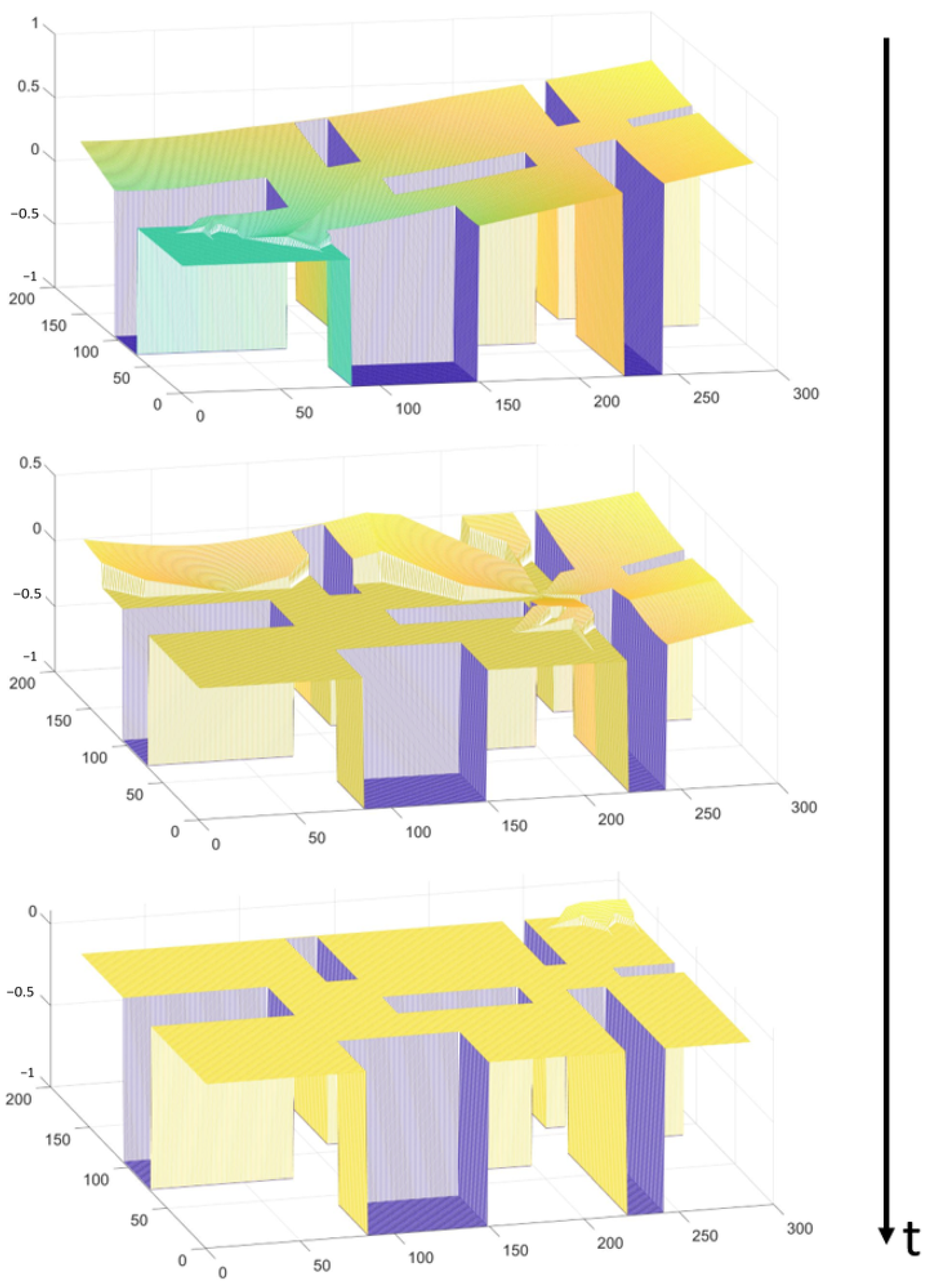

4.2. Preliminary Simulations

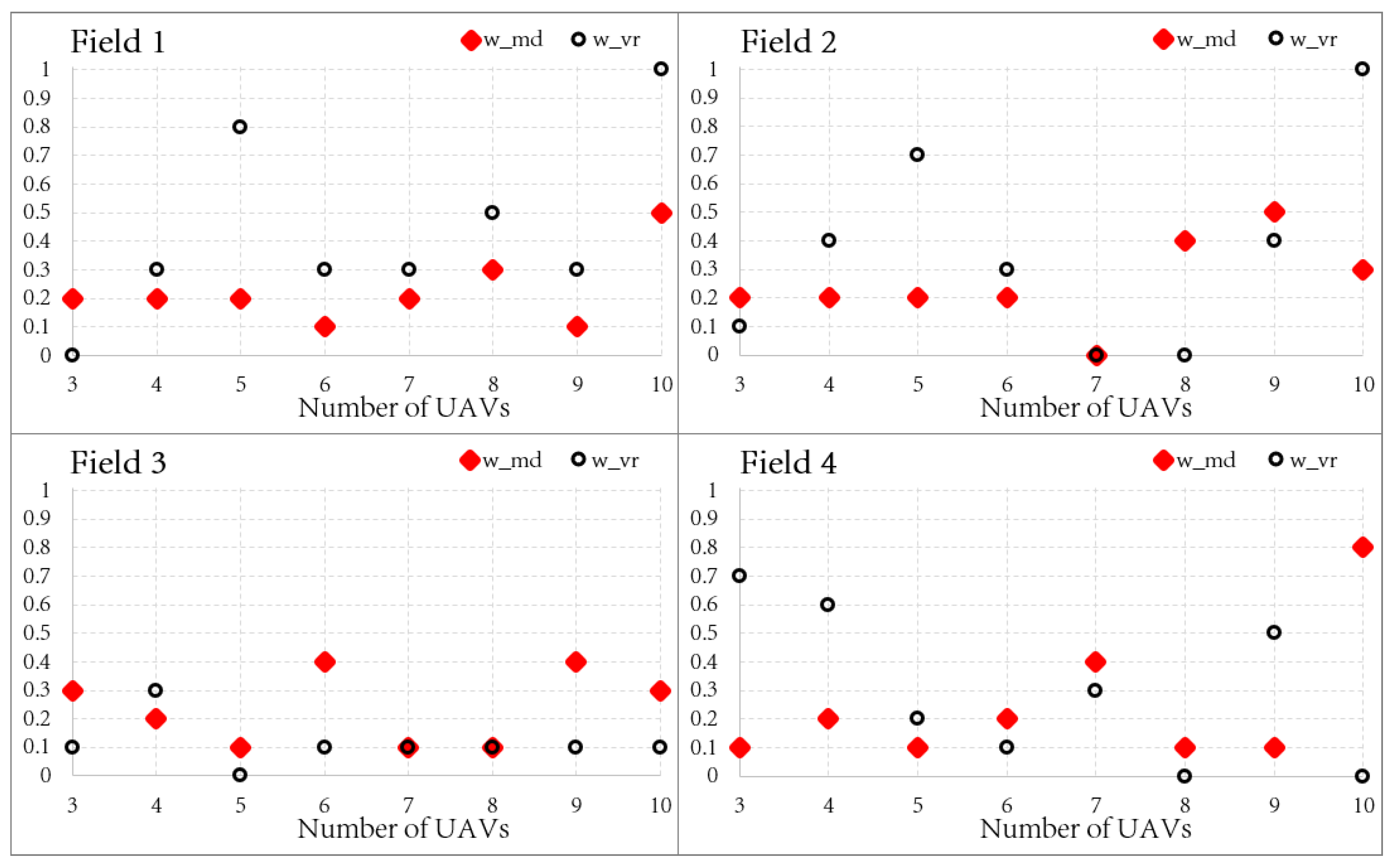

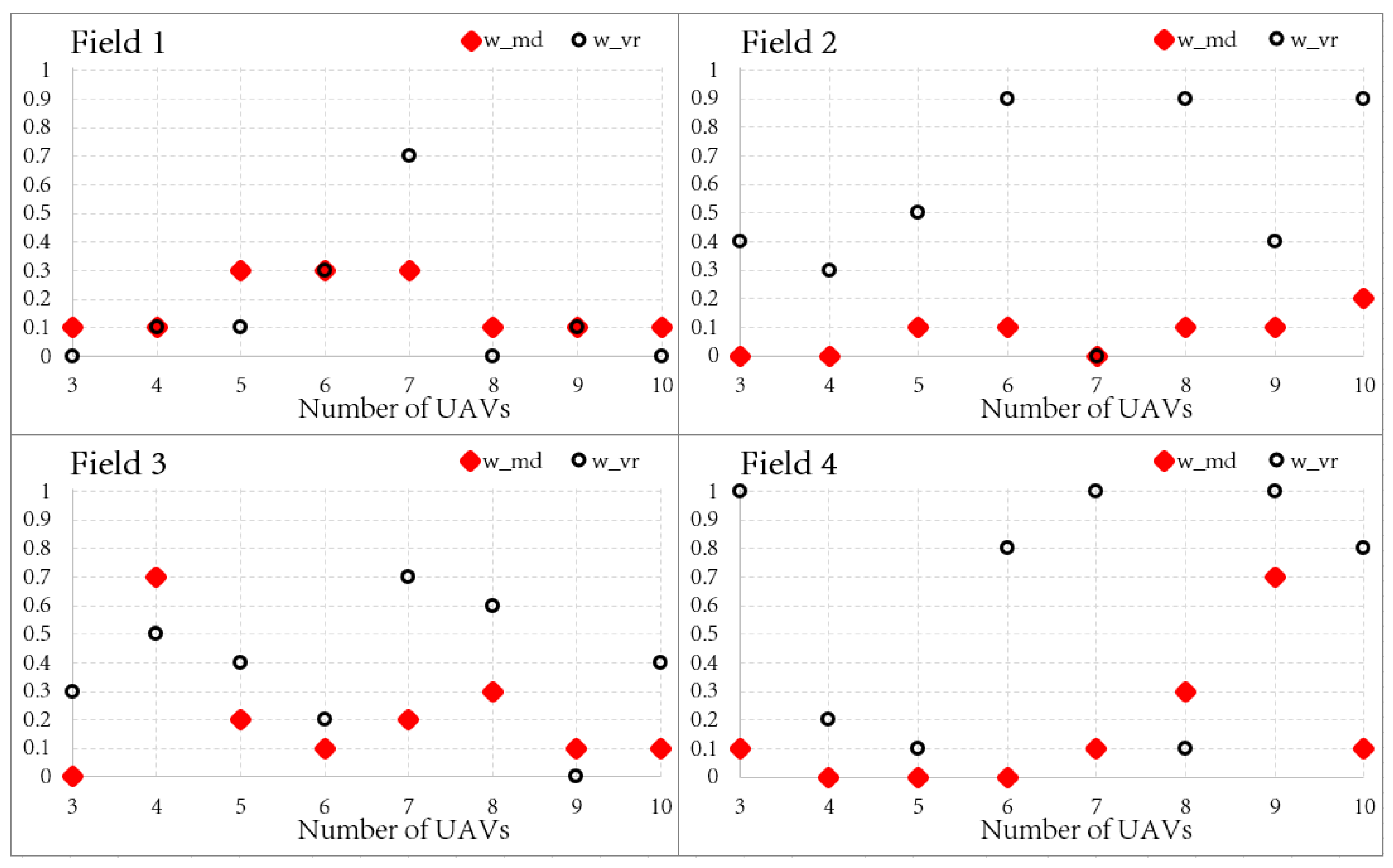

4.3. Tuning Parameters

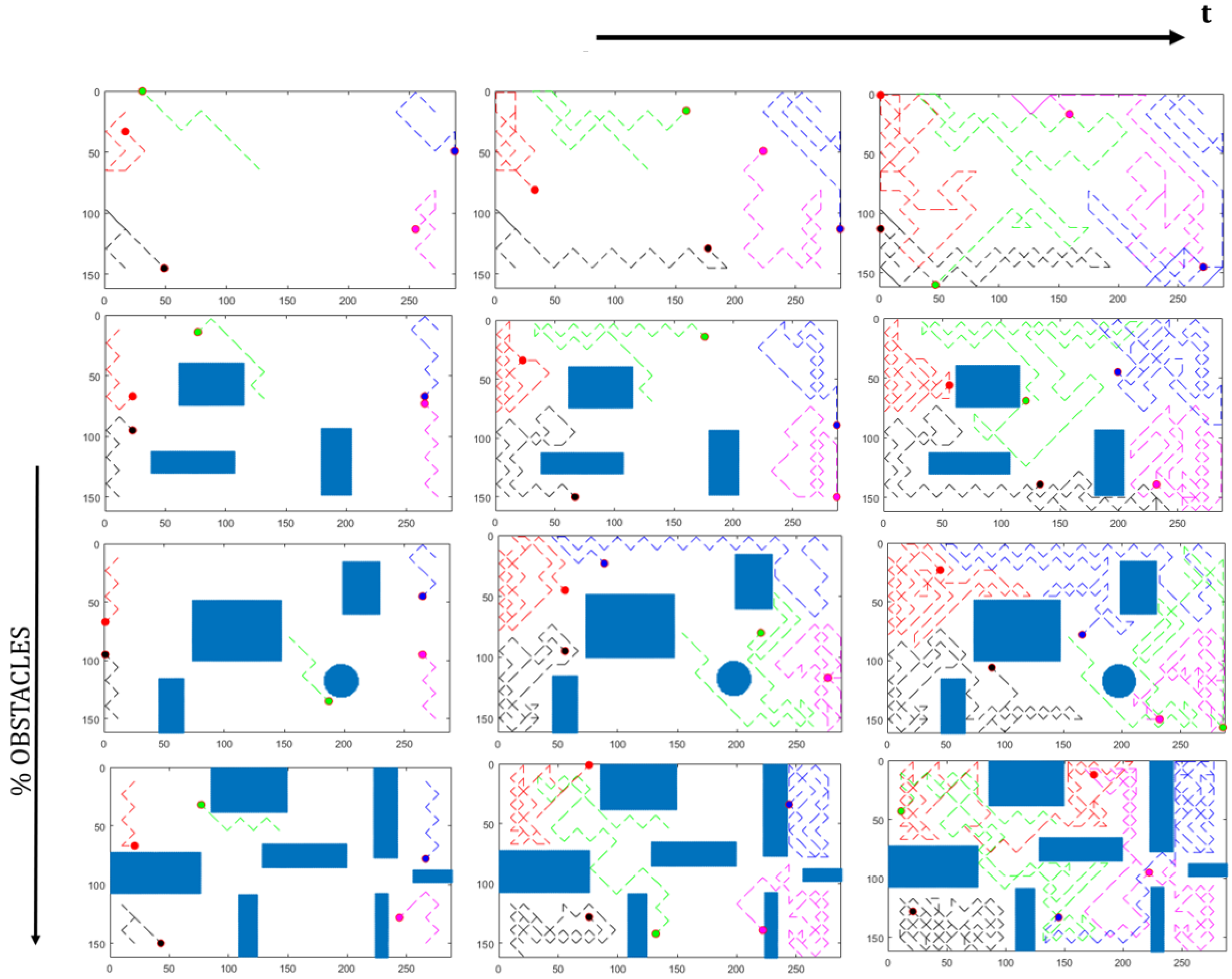

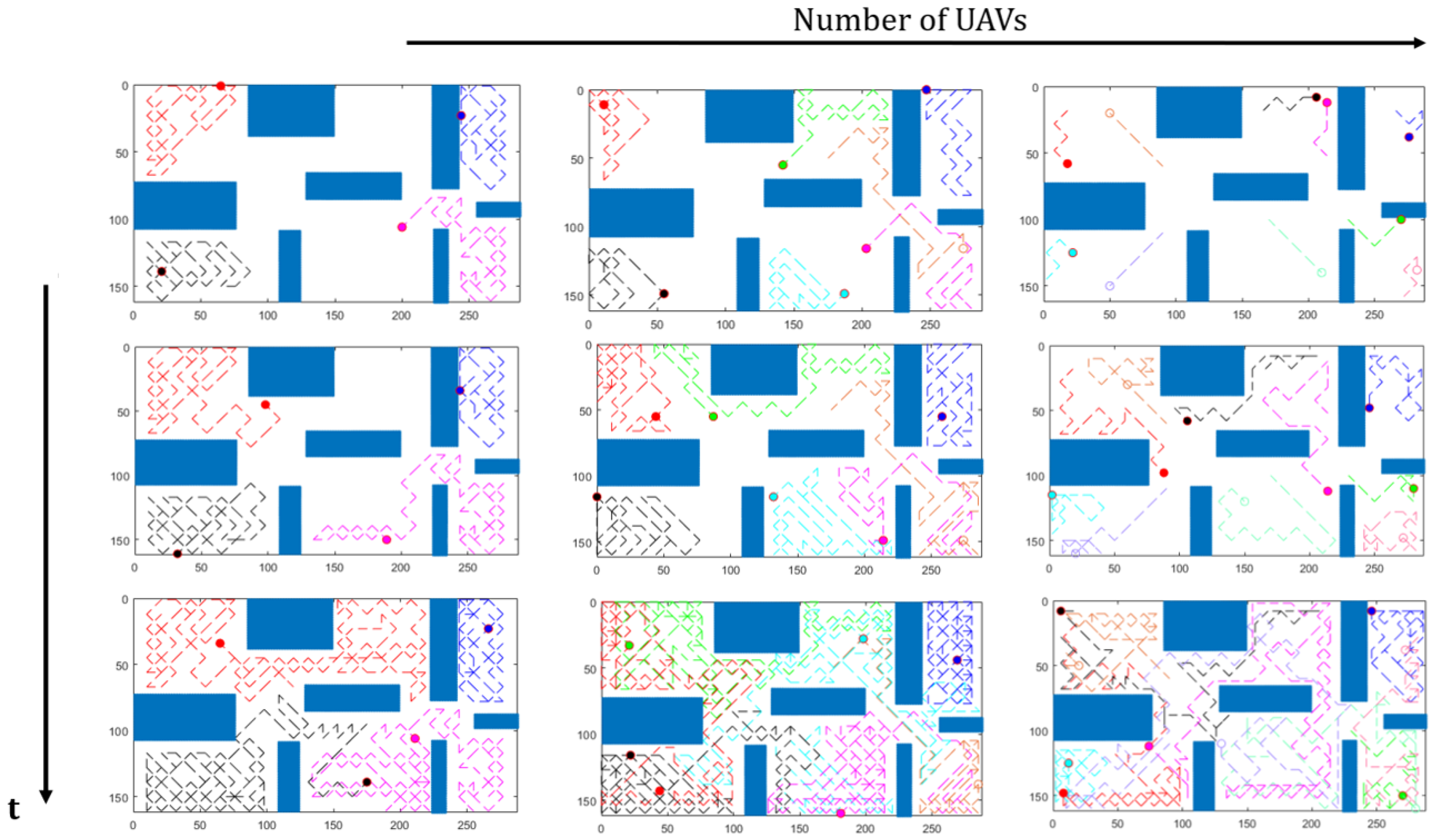

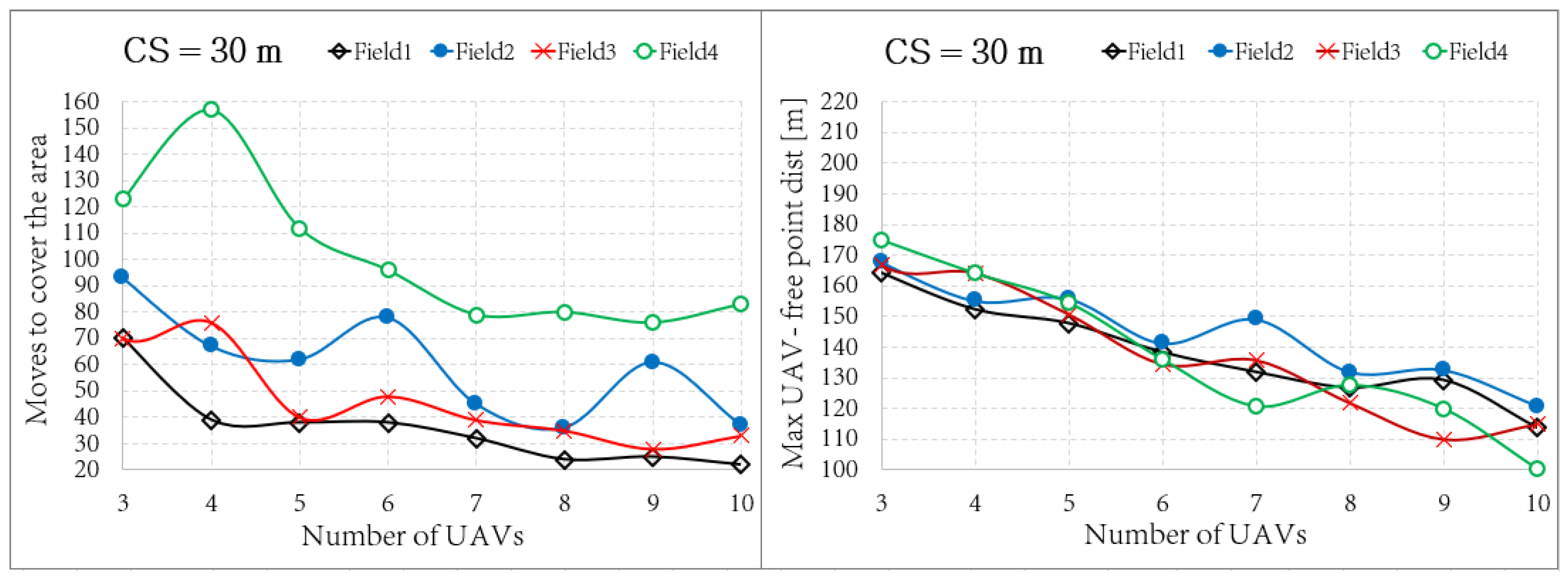

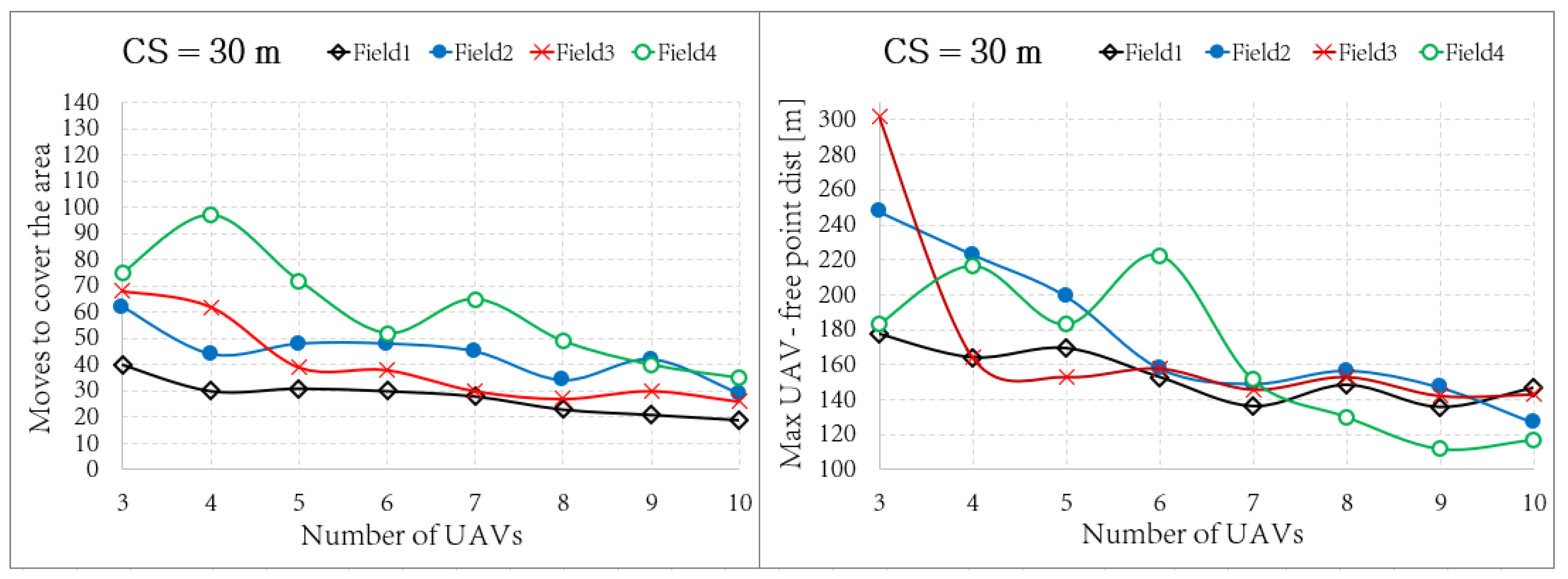

4.4. Collected Results

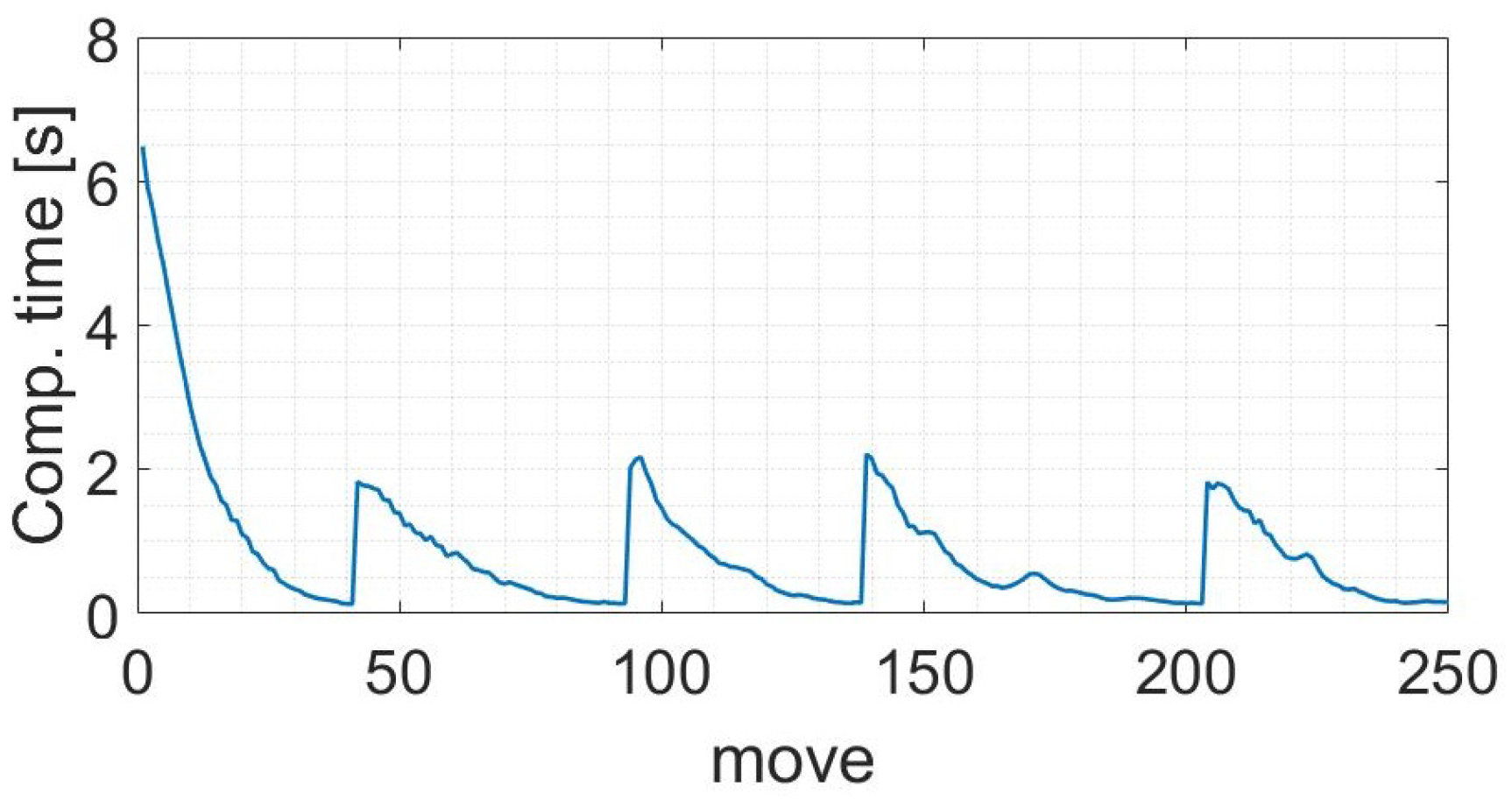

4.5. Computational Time

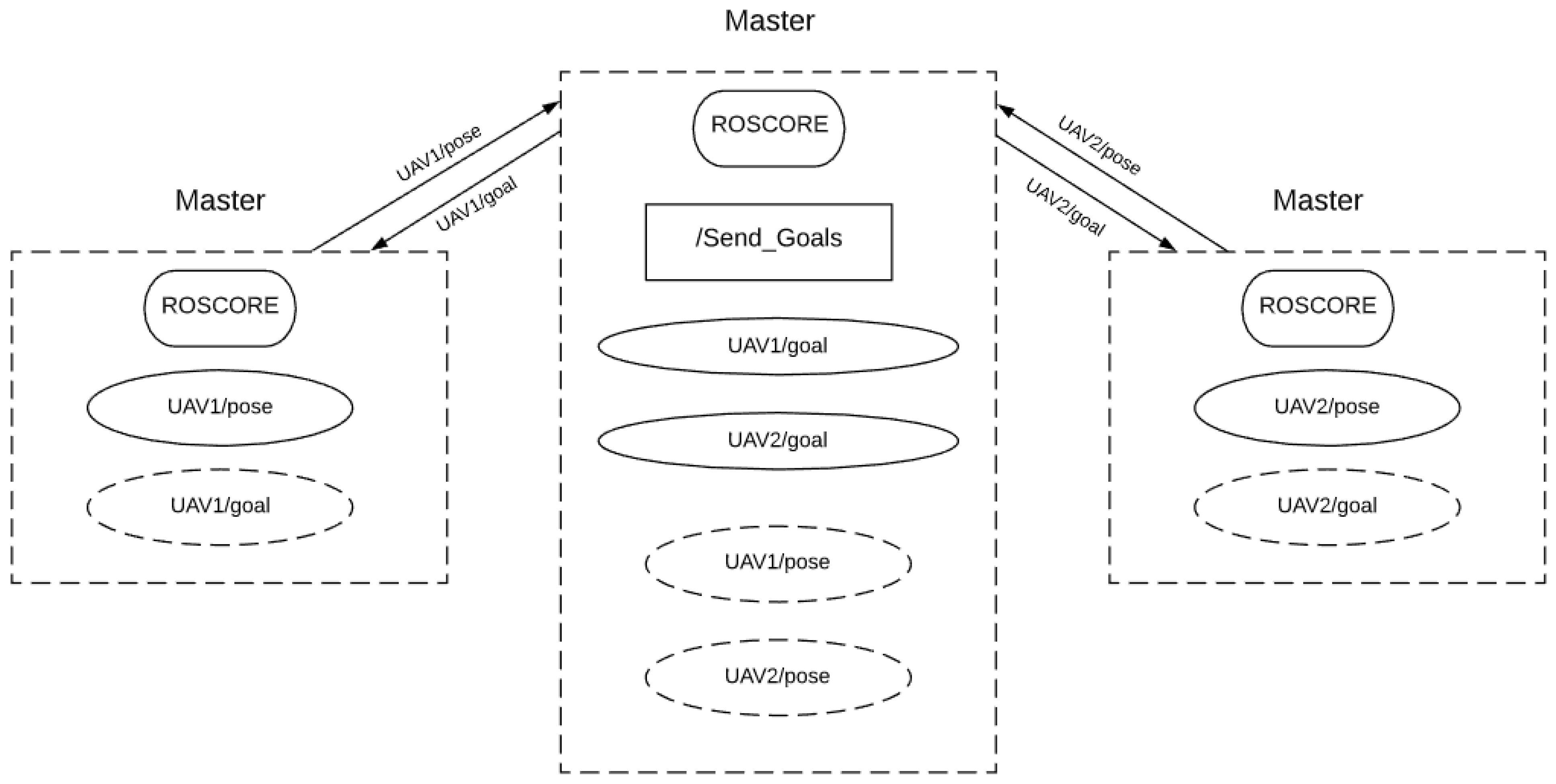

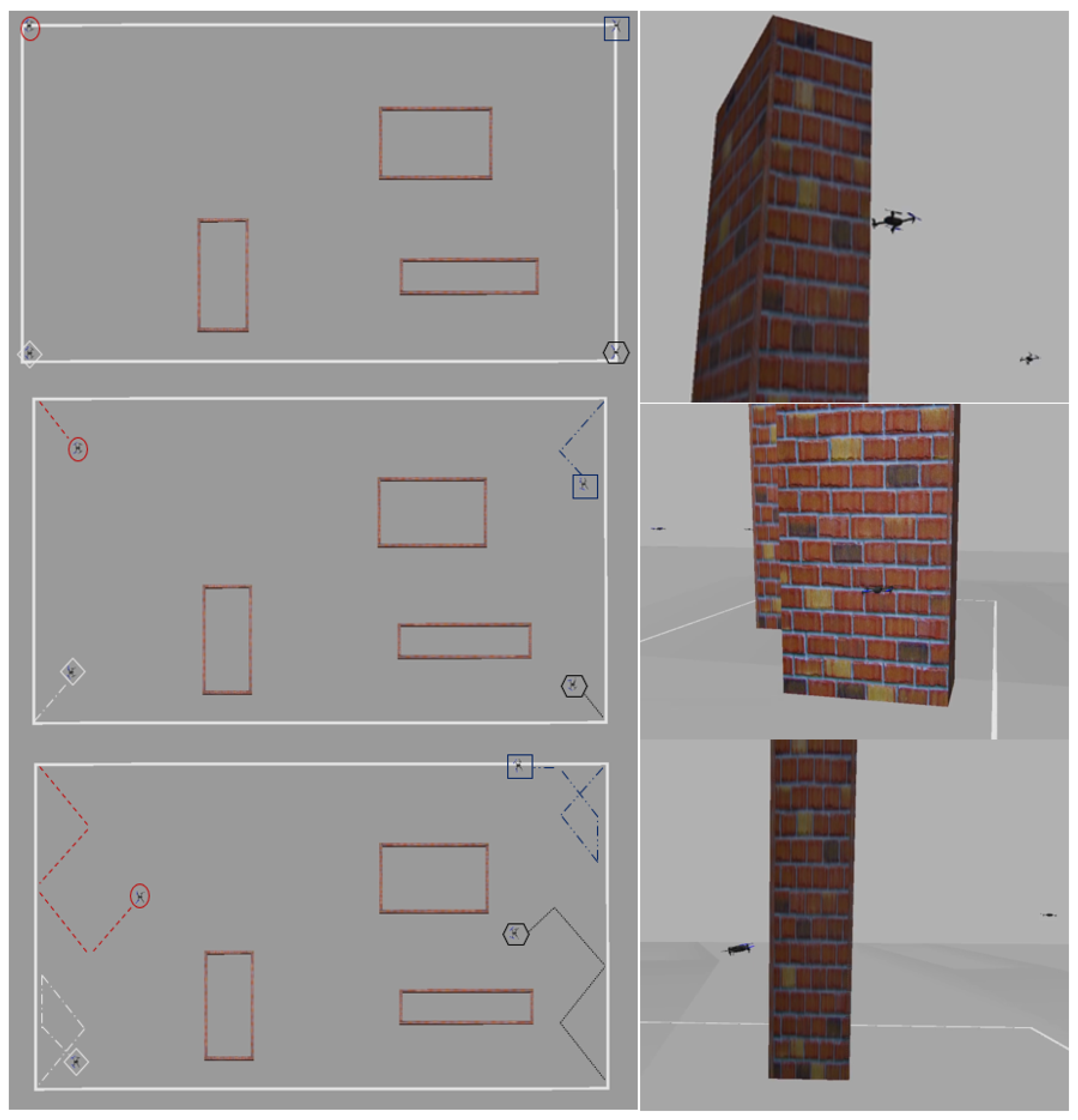

4.6. Realistic Simulations

5. Conclusions and Further Developments

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| CS | Covered square side (in meters) of the field of view |
| max point dist | Maximum distance between an obstacle-free point of the map and the closest UAV |
| SITL | Software In The Loop |
| ROS | Robot Operating System |
| UAV | Unmanned Aerial Vehicle |
| D | Number of Units |
| FOV | Field Of View |
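The max point dist metric can be evaluated directly from its definition. For each obstacle-free point, take the distance to its closest UAV, then take the largest of these values. The sketch assumes that obstacle-free points are given as grid coordinates in the same units as the UAV positions, and that distances are Euclidean. The function name is hypothetical.

```python
import math


def max_point_dist(free_points, uav_positions):
    """Largest distance from any obstacle-free point to its closest UAV."""
    return max(min(math.dist(p, u) for u in uav_positions) for p in free_points)


# 5 x 5 grid of obstacle-free points with two UAVs on opposite corners.
free_points = [(r, c) for r in range(5) for c in range(5)]
```

Here the two remaining corners, (0, 4) and (4, 0), are the worst-served points. Each lies 4 units from both UAVs.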


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Godio, S.; Primatesta, S.; Guglieri, G.; Dovis, F. A Bioinspired Neural Network-Based Approach for Cooperative Coverage Planning of UAVs. Information 2021, 12, 51. https://doi.org/10.3390/info12020051
