A Metric Learning-Based Univariate Time Series Classification Method

Abstract

1. Introduction

- How to treat higher dimensional time series data.

- How to find a suitable distance measure method to improve classification accuracy.

- How to compare unequal time series.

2. Background

2.1. Dimension Reduction

2.2. Metric Learning

2.3. Dynamic Time Warping

3. ML-UTSC

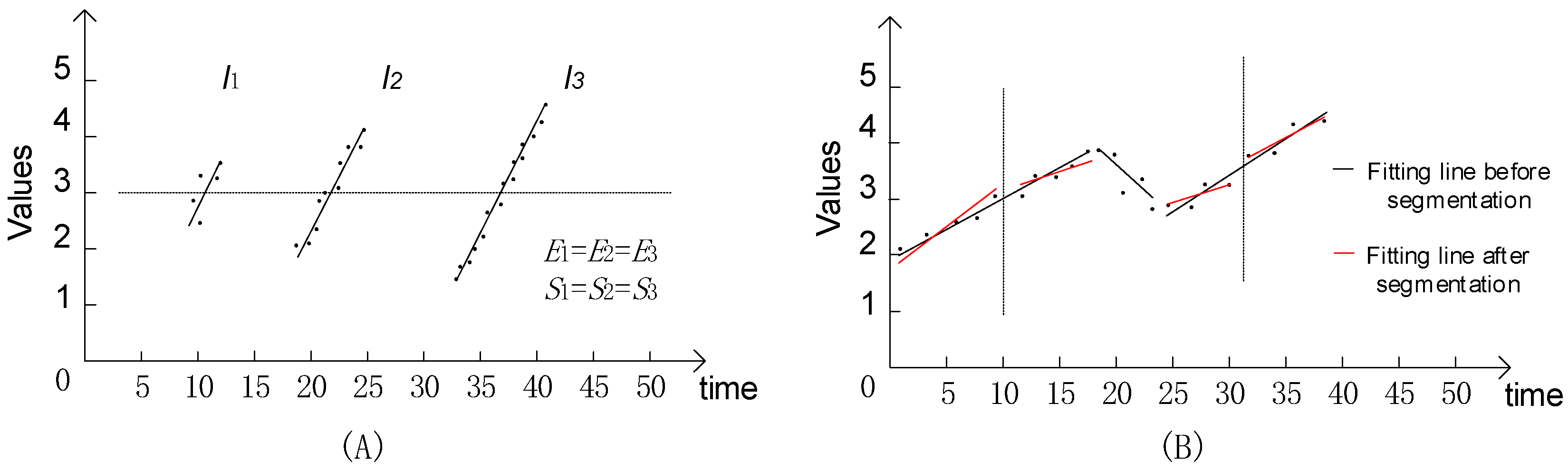

3.1. Least Squares Fitting

| Algorithm 1: [TS] = Bottom_Up_Fitting(T,max_error) |

| 1: for i = 1:2:length(T) 2: TS = concat(TS, create_segment(T[i:i + 1])); 3: end; 4: for i = 1:length(TS) − 1 5: merge_cost(i) = calculate_error([merge(TS(i), TS(i + 1))]); 6: end; 7: while min(merge_cost)<max_error 8: index = min(merge_cost); 9: TS(index) = merge(TS(index), TS(index + 1)); 10: delete (TS(index + 1)); 11: merge_cost(index) = calculate_error(merge(TS(index); 12: merge_cost(index − 1) = calculate_error(merge(TS(index − 1); 13: end |

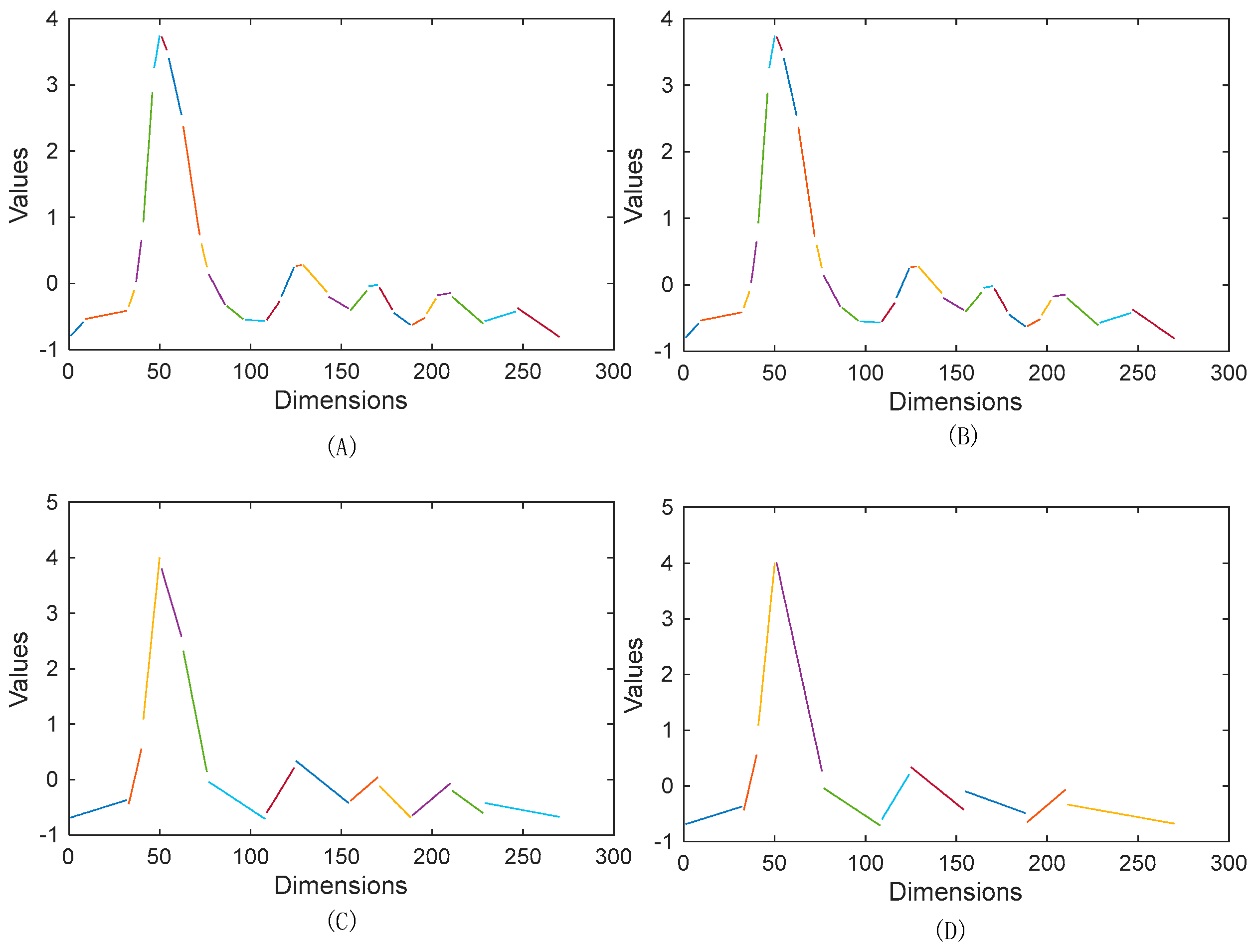

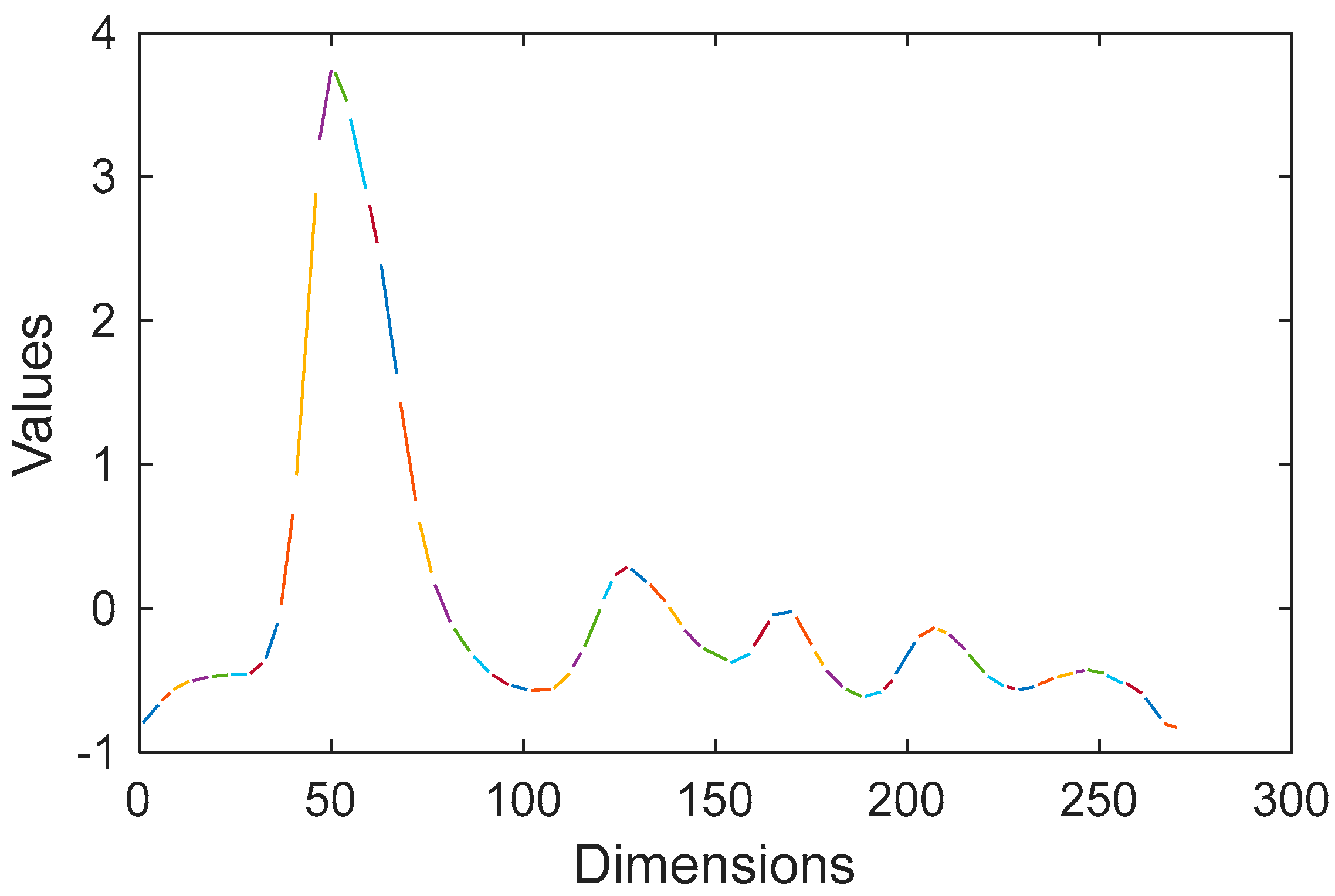

3.2. Feature Representation

3.3. Metric Learning

3.3.1. Calculate Multivariate Local Distance

3.3.2. Learning A Mahalanobis Matrix

4. Experimental Confirmation

4.1. Data Set

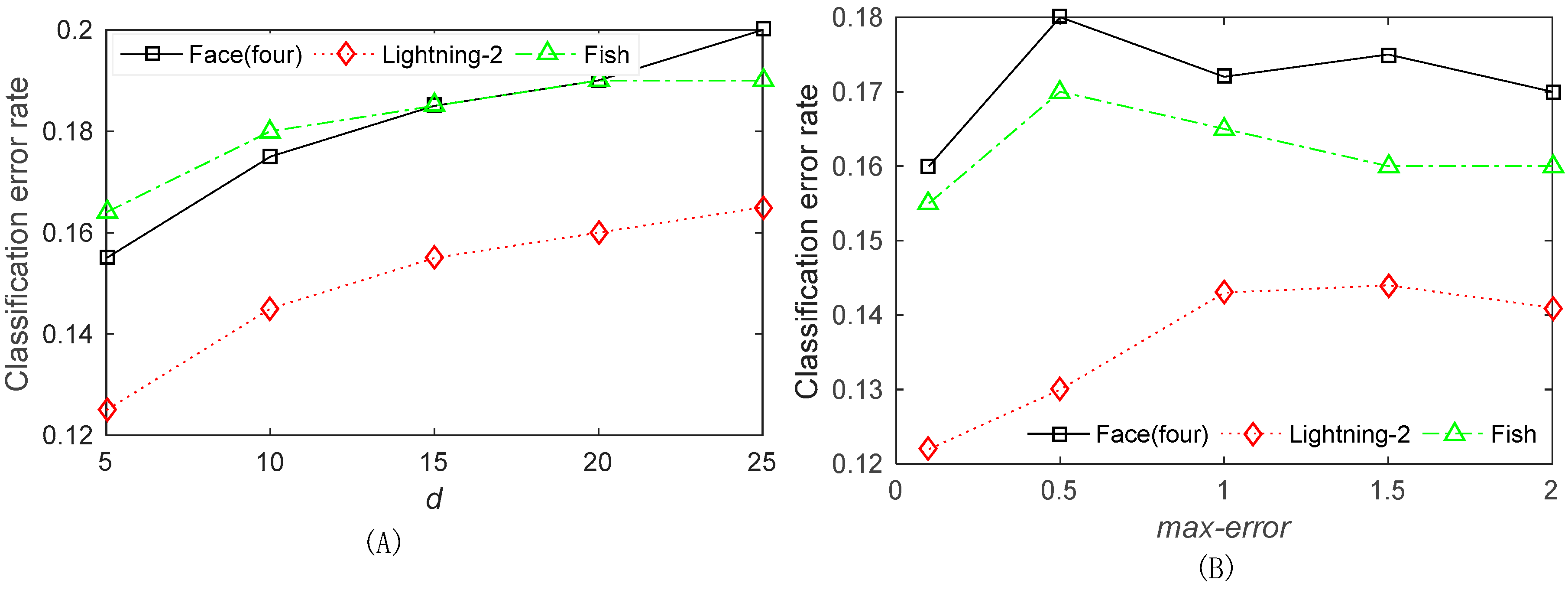

4.2. Comparison Methods and Parameter Setting

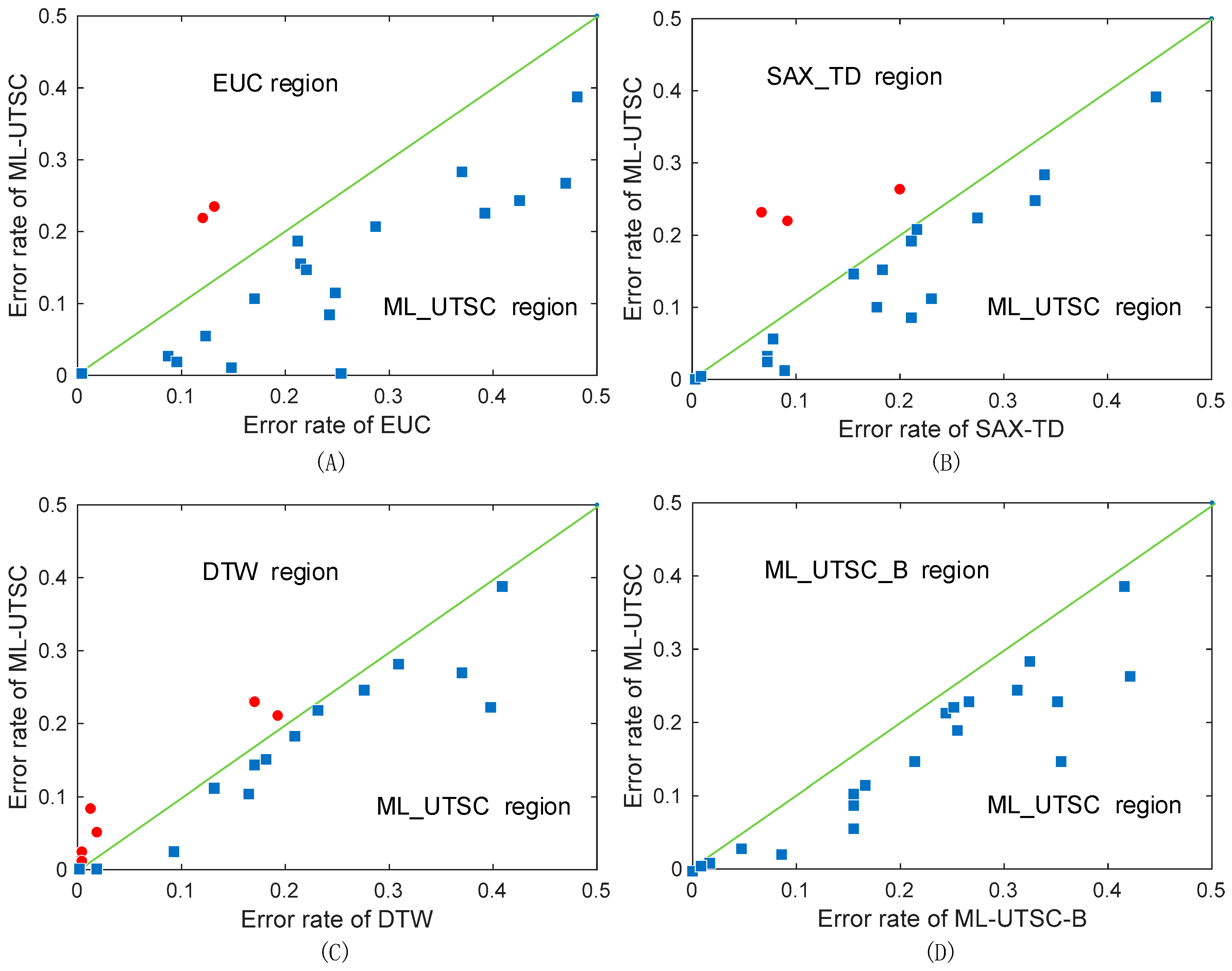

4.3. Classification Results Analysis

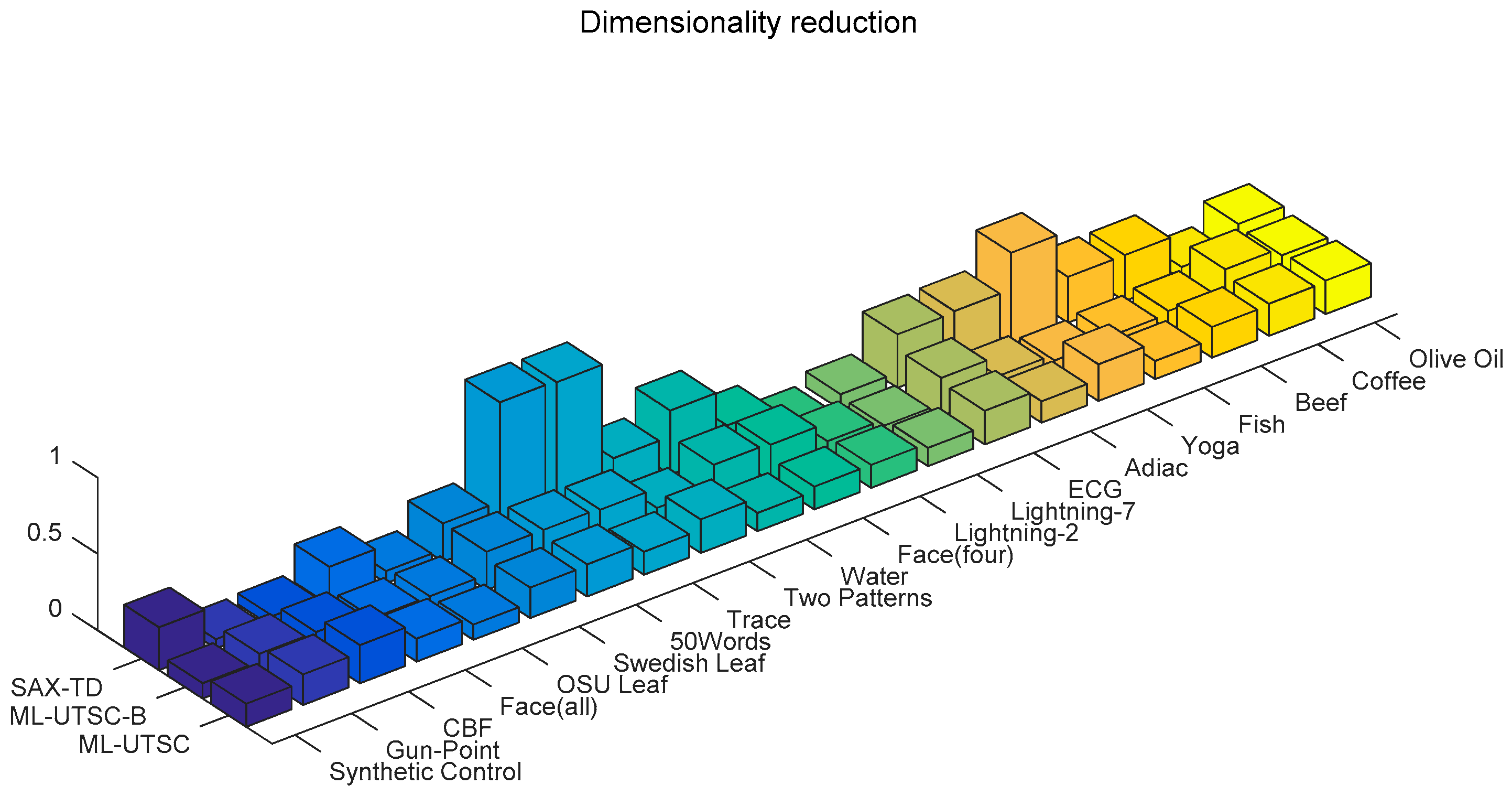

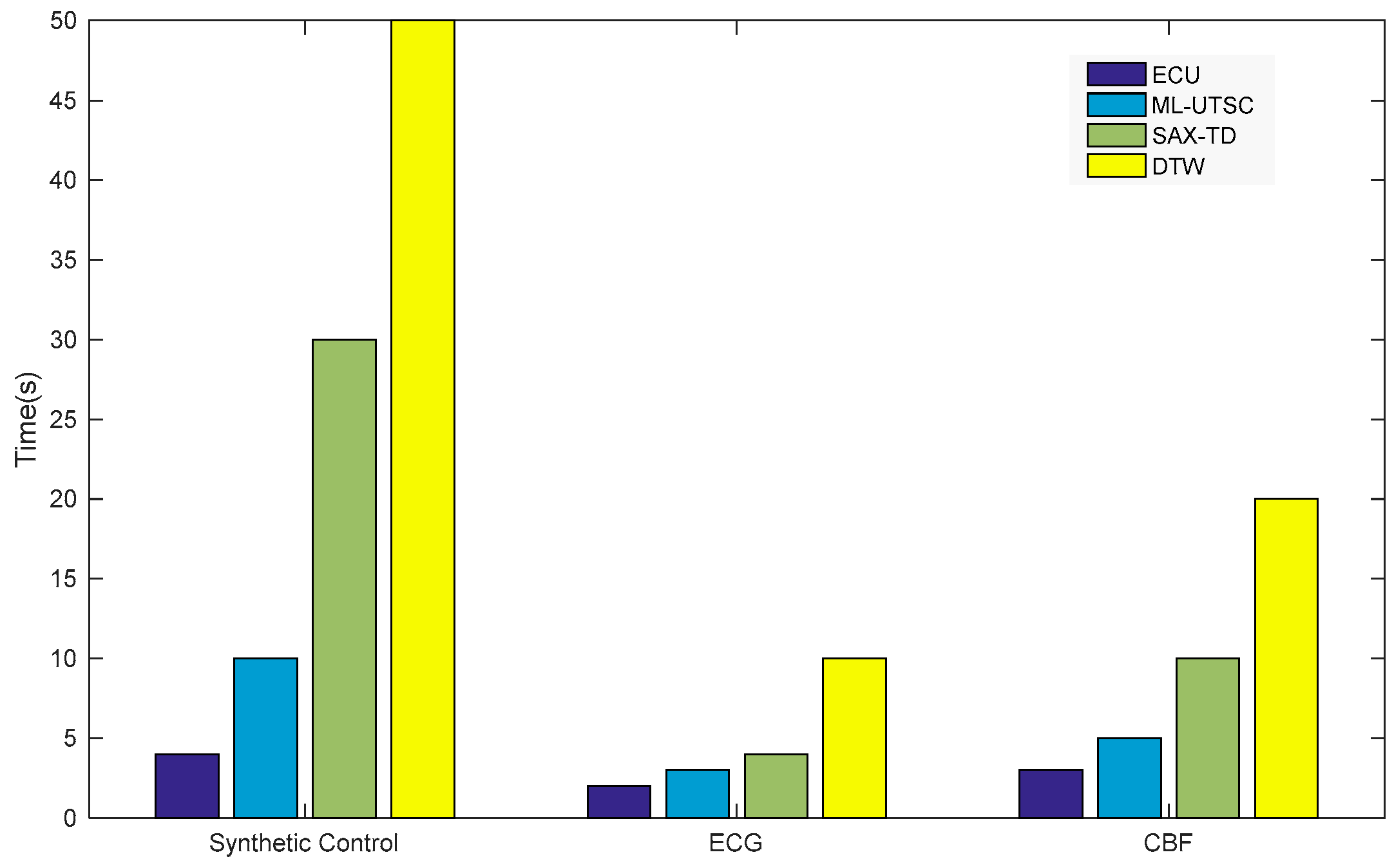

4.4. Dimension Reduction and Time Efficiency

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Drożdż, S.; Forczek, M.; Kwapień, J.; Oświe, P.; Rak, R. Stock market return distributions: From past to present. Phys. A Stat. Mech. Its Appl. 2007, 383, 59–64. [Google Scholar] [CrossRef]

- Shah, S.M.S.; Batool, S.; Khan, I.; Ashraf, M.U.; Abbas, S.H.; Hussain, S.A. Feature extraction through parallel probabilistic principal component analysis for heart disease diagnosis. Phys. A Stat. Mech. Its Appl. 2017, 482, 796–807. [Google Scholar] [CrossRef]

- Verbesselt, J.; Hyndman, R.; Newnham, G.; Culvenor, D. Detecting trend and seasonal changes in satellite image time series. Remote Sens. Environ. 2010, 114, 106–115. [Google Scholar] [CrossRef]

- Contreras-Reyes, J.E.; Canales, T.M.; Rojas, P.M. Influence of climate variability on anchovy reproductive timing off northern Chile. J. Mar. Syst. 2016, 164, 67–75. [Google Scholar] [CrossRef]

- Chan, K.-P.; Fu, A.W.-C. Efficient time series matching by wavelets. In Proceedings of the 15th International Conference on Data Engineering (Cat. No. 99CB36337), Sydney, Australia, 23–26 March 1999; IEEE: Piscataway, NJ, USA, 1999; pp. 126–133. [Google Scholar]

- Moon, Y.-S.; Whang, K.-Y.; Loh, W.-K. Duality-based subsequence matching in time-series databases. In Proceedings of the 17th International Conference on Data Engineering, Heidelberg, Germany, 2–6 April 2001; IEEE: Piscataway, NJ, USA, 2001; pp. 263–272. [Google Scholar]

- Ravi Kanth, K.; Agrawal, D.; Singh, A. Dimensionality reduction for similarity searching in dynamic databases. In ACM SIGMOD Record; ACM: New York, NY, USA, 1998; pp. 166–176. [Google Scholar]

- Keogh, E.; Chakrabarti, K.; Pazzani, M.; Mehrotra, S. Dimensionality reduction for fast similarity search in large time series databases. Knowl. Inf. Syst. 2001, 3, 263–286. [Google Scholar] [CrossRef]

- Keogh, E.; Chu, S.; Hart, D.; Pazzani, M. An online algorithm for segmenting time series. In Proceedings of the IEEE International Conference on Data Mining, Maebashi City, Japan, 9 December 2002; pp. 289–296. [Google Scholar]

- Lin, J.; Keogh, E.; Lonardi, S.; Chiu, B. A symbolic representation of time series, with implications for streaming algorithms. In Proceedings of the ACM SIGMOD Workshop on Research Issues in Data Mining and Knowledge Discovery, San Diego, CA, USA, 13 June 2003; pp. 2–11. [Google Scholar]

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27. [Google Scholar] [CrossRef]

- Suykens, J.A.; Vandewalle, J. Least squares support vector machine classifiers. Neural Process. Lett. 1999, 9, 293–300. [Google Scholar] [CrossRef]

- De Maesschalck, R.; Jouan-Rimbaud, D.; Massart, D.L. The mahalanobis distance. Chemom. Intell. Lab. Syst. 2000, 50, 1–18. [Google Scholar] [CrossRef]

- Keogh, E.; Ratanamahatana, C.A. Exact indexing of dynamic time warping. Knowl. Inf. Syst. 2005, 7, 358–386. [Google Scholar] [CrossRef]

- Berndt, D.J.; Clifford, J. Using dynamic time warping to find patterns in time series. In KDD Workshop; AAAI: Menlo Park, CA, USA, 1994; pp. 359–370. [Google Scholar]

- Bagnall, A.; Lines, J.; Bostrom, A.; Large, J.; Keogh, E. The great time series classification bake off: A review and experimental evaluation of recent algorithmic advances. Data Min. Knowl. Discov. 2017, 31, 606–660. [Google Scholar] [CrossRef] [PubMed]

- Liu, Y. Distance metric learning: A comprehensive survey. Michigan State Universiy 2006, 2, 4. [Google Scholar]

- Shen, J.Y.; Huang, W.P.; Zhu, D.Y.; Liang, J. A novel similarity measure model for multivariate time series based on LMNN and DTW. Neural Process. Lett. 2017, 45, 925–937. [Google Scholar] [CrossRef]

- Mei, J.Y.; Liu, M.; Wang, Y.F.; Gao, H. Learning a mahalanobis distance-based dynamic time warping measure for multivariate time series classification. IEEE Trans. Cybern. 2016, 46, 1363–1374. [Google Scholar] [CrossRef] [PubMed]

- Xing, E.P.; Ng, A.Y.; Jordan, M.I.; Russell, S. Distance metric learning, with application to clustering with side-information. In Proceedings of the International Conference on Neural Information Processing Systems, Vancouver, British, 9–14 December 2002; pp. 521–528. [Google Scholar]

- Weinberger, K.Q.; Blitzer, J.; Saul, L.K. Distance metric learning for large margin nearest neighbor classification. J. Mach. Learn. Res. 2009, 10, 207–244. [Google Scholar]

- Chen, L.; Ng, R. On the marriage of lp-norms and edit distance. In Proceedings of the Thirtieth International Conference on Very Large Data Bases-Volume 30, Trondheim, Norway, 30 August–2 September 2004; pp. 792–803. [Google Scholar]

- Sun, Y.Q.; Li, J.Y.; Liu, J.X.; Sun, B.Y.; Chow, C. An improvement of symbolic aggregate approximation distance measure for time series. Neurocomputing 2014, 138, 189–198. [Google Scholar] [CrossRef]

- Vlachos, M.; Kollios, G.; Gunopulos, D. Discovering similar multidimensional trajectories. In Proceedings of the International Conference on Data Engineering, San Jose, CA, USA, 26 February–1 March 2002; pp. 673–684. [Google Scholar]

- Chen, L.; Özsu, M.T.; Oria, V. Robust and fast similarity search for moving object trajectories. In Proceedings of the 2005 ACM SIGMOD International Conference on Management of Data, Baltimore, MD, USA, 14–16 June 2005; pp. 491–502. [Google Scholar]

- Ye, L.; Keogh, E. Time series shapelets: A new primitive for data mining. In Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, ACM, Paris, France, 28 June–1 July 2009; pp. 947–956. [Google Scholar]

- Grabocka, J.; Wistuba, M.; Schmidt-Thieme, L. Fast classification of univariate and multivariate time series through shapelet discovery. Knowl. Inf. Syst. 2016, 49, 429–454. [Google Scholar] [CrossRef]

- Ten Holt, G.A.; Reinders, M.J.; Hendriks, E. Multi-dimensional dynamic time warping for gesture recognition. In Proceedings of the Thirteenth Annual Conference of the Advanced School for Computing and Imaging, Heijen, the Netherlands, 13–15 June 2007; pp. 1–10. [Google Scholar]

| No. | Dataset Name | Classes | Size of Training Set | Size of Testing Set | Length of Time Series |

|---|---|---|---|---|---|

| 1 | Synthetic Control | 6 | 300 | 300 | 60 |

| 2 | Gun-Point | 2 | 50 | 150 | 150 |

| 3 | CBF | 3 | 30 | 900 | 128 |

| 4 | Face (all) | 14 | 560 | 1690 | 131 |

| 5 | OSU Leaf | 6 | 200 | 242 | 427 |

| 6 | Swedish Leaf | 15 | 500 | 625 | 128 |

| 7 | 50Words | 50 | 450 | 455 | 270 |

| 8 | Trace | 4 | 100 | 100 | 275 |

| 9 | Two Patterns | 4 | 1000 | 4000 | 128 |

| 10 | Water | 2 | 1000 | 6174 | 152 |

| 11 | Face (four) | 4 | 24 | 88 | 350 |

| 12 | Lightning-2 | 2 | 60 | 61 | 637 |

| 13 | Lightning-7 | 7 | 70 | 73 | 319 |

| 14 | ECG | 2 | 100 | 100 | 96 |

| 15 | Adiac | 37 | 390 | 391 | 176 |

| 16 | Yoga | 2 | 300 | 3000 | 426 |

| 17 | Fish | 7 | 175 | 175 | 463 |

| 18 | Beef | 5 | 30 | 30 | 470 |

| 19 | Coffee | 2 | 28 | 28 | 286 |

| 20 | Olive Oil | 4 | 30 | 30 | 570 |

| No. | Dataset Name | EUC Error | SAX-TD Error (w,α) | DTW Error | MLUTSC_B Error | ML-UTSC Error(max_error, d) |

|---|---|---|---|---|---|---|

| 1 | Synthetic Control | 0.120 | 0.077 (8,10) | 0.017 | 0.152 | 0.053 (1,10) |

| 2 | Gun-Point | 0.087 | 0.073 (4,3) | 0.093 | 0.046 | 0.026 (0.1,5) |

| 3 | CBF | 0.148 | 0.088 (4,10) | 0.004 | 0.014 | 0.011 (1,5) |

| 4 | Face (all) | 0.286 | 0.215 (16,8) | 0.192 | 0.244 | 0.210 (0.5,10) |

| 5 | OSU Leaf | 0.483 | 0.446 (32,7) | 0.409 | 0.415 | 0.388 (0.5,10) |

| 6 | Swedish Leaf | 0.211 | 0.213 (16,7) | 0.208 | 0.255 | 0.188 (0.1,5) |

| 7 | 50Words | 0.369 | 0.338 (128,9) | 0.310 | 0.323 | 0.284 (0.5,5) |

| 8 | Trace | 0.240 | 0.210 (128,3) | 0.010 | 0.152 | 0.084 (0.1,10) |

| 9 | Two Patterns | 0.093 | 0.071 (16,10) | 0.002 | 0.086 | 0.023 (0.5,5) |

| 10 | Water | 0.005 | 0.004 (32,8) | 0.020 | 0.005 | 0.001 (0.1,10) |

| 11 | Face (four) | 0.216 | 0.181 (32,9) | 0.170 | 0.352 | 0.147 (0.1,5) |

| 12 | Lightning-2 | 0.246 | 0.229 (8,9) | 0.131 | 0.163 | 0.114 (0.5,10) |

| 13 | Lightning-7 | 0.425 | 0.329 (16,10) | 0.274 | 0.312 | 0.246 (1,10) |

| 14 | ECG | 0.120 | 0.092 (16,5) | 0.230 | 0.252 | 0.220 (0.1,5) |

| 15 | Adiac | 0.389 | 0.273 (32,9) | 0.396 | 0.351 | 0.224 (0.5,10) |

| 16 | Yoga | 0.170 | 0.179(128,10) | 0.164 | 0.155 | 0.102 (1,5) |

| 17 | Fish | 0.217 | 0.154 (64,9) | 0.177 | 0.213 | 0.151 (1,10) |

| 18 | Beef | 0.467 | 0.200 (64,9) | 0.367 | 0.421 | 0.266 (1,5) |

| 19 | Coffee | 0.250 | 0.000 (8,3) | 0.000 | 0.000 | 0.000 (0.1,5) |

| 20 | Olive Oil | 0.133 | 0.067 (64,3) | 0.167 | 0.267 | 0.233 (0.1,5) |

| Methods | n+ | n− | n= | p-Value |

|---|---|---|---|---|

| ML-UTSC VS. EUC | 18 | 2 | 0 | p < 0.01 |

| ML-UTSC VS. SAX-TD | 16 | 3 | 1 | 0.01 < p < 0.05 |

| ML-UTSC VS. DTW | 13 | 6 | 1 | p = 0.167 |

| ML-UTSC VS. ML-UTSC_B | 19 | 0 | 1 | p < 0.01 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Song, K.; Wang, N.; Wang, H. A Metric Learning-Based Univariate Time Series Classification Method. Information 2020, 11, 288. https://doi.org/10.3390/info11060288

Song K, Wang N, Wang H. A Metric Learning-Based Univariate Time Series Classification Method. Information. 2020; 11(6):288. https://doi.org/10.3390/info11060288

Chicago/Turabian StyleSong, Kuiyong, Nianbin Wang, and Hongbin Wang. 2020. "A Metric Learning-Based Univariate Time Series Classification Method" Information 11, no. 6: 288. https://doi.org/10.3390/info11060288

APA StyleSong, K., Wang, N., & Wang, H. (2020). A Metric Learning-Based Univariate Time Series Classification Method. Information, 11(6), 288. https://doi.org/10.3390/info11060288