1. Introduction

Learning is usually associated with going to school or following an educational program. This is so-called formal learning. Formal learning is typically institutionally organized, often classroom-based, and highly structured [1]. However, there are plenty of other opportunities to learn. Learning can happen as a byproduct of some other activity, i.e., so-called incidental learning [1], or while searching for information or exploring an environment. In general, this kind of learning is called informal learning [2,3], but other terms are also used, such as learning “en passant” [4] or self-regulated learning [5]. Informal learning is the result of an unplanned or unexpected event. Note that in the educational domain the term non-formal learning (also called semi-formal learning [6]) is also used. As opposed to informal learning, non-formal learning is a planned but very adaptable activity set up by an institution or organization [7]. It consists of learning embedded in planned activities that are not explicitly designed as learning but contain an important learning element [8] (p. 839). Examples of non-formal learning are visits to museums and city tours organized as part of educational curriculum activities. So, informal learning is distinguished from formal and non-formal learning by having no authority figure or mediator [7].

According to Marsick and Watkins [1], informal learning takes place wherever people have the need, motivation, and opportunity for learning. Informal learning is characterized as being integrated with daily routines, triggered by an internal or external event, not highly conscious, and an inductive process of reflection and action. Given these characteristics, current digital technologies, such as mobile applications and social media, can foster informal learning [9,10,11]. Most people use their smartphones daily and carry them all the time. Therefore, mobile applications can be integrated into daily routines. Furthermore, smartphones can be used to trigger the user at any moment. The use of social media, such as Flickr, YouTube, and Facebook, has steadily increased [12] and has also become part of people’s daily life. The wealth of information available on the Web and accessible at any moment through smartphones provides plenty of opportunities to learn. Therefore, mobile applications and social media are nowadays also used for non-formal learning [8]. Two examples are the MTL Urban Museum App [13] and the Digital Literacy 2.0 project [14]. However, the aforementioned technologies can also be used to support formal learning [15]. Therefore, from a technological point of view, it should, in principle, be possible to develop a learning environment that can be used for all three forms of learning. Our goal was to investigate this possibility. Hence, our goal was not to explicitly support one of the three forms of learning, but, as formulated by Lonsdale, Vavoula, and Sharples, “to use mobile technologies to transform learning into a seamless part of daily life to the point where it is not recognized as learning at all” [16] (p. 5). We aimed to achieve this by creating an engaging software environment that would be used voluntarily for informal learning, but could also be used for non-formal learning and in the context of formal learning. The type of learning environment we aimed for is a so-called Playful Learning Environment (PLE). A playful learning environment refers to a technology-enriched play and learning environment that blends indoor and outdoor spaces to create a playground for exploration, narration, and imagination of information and knowledge [17,18].

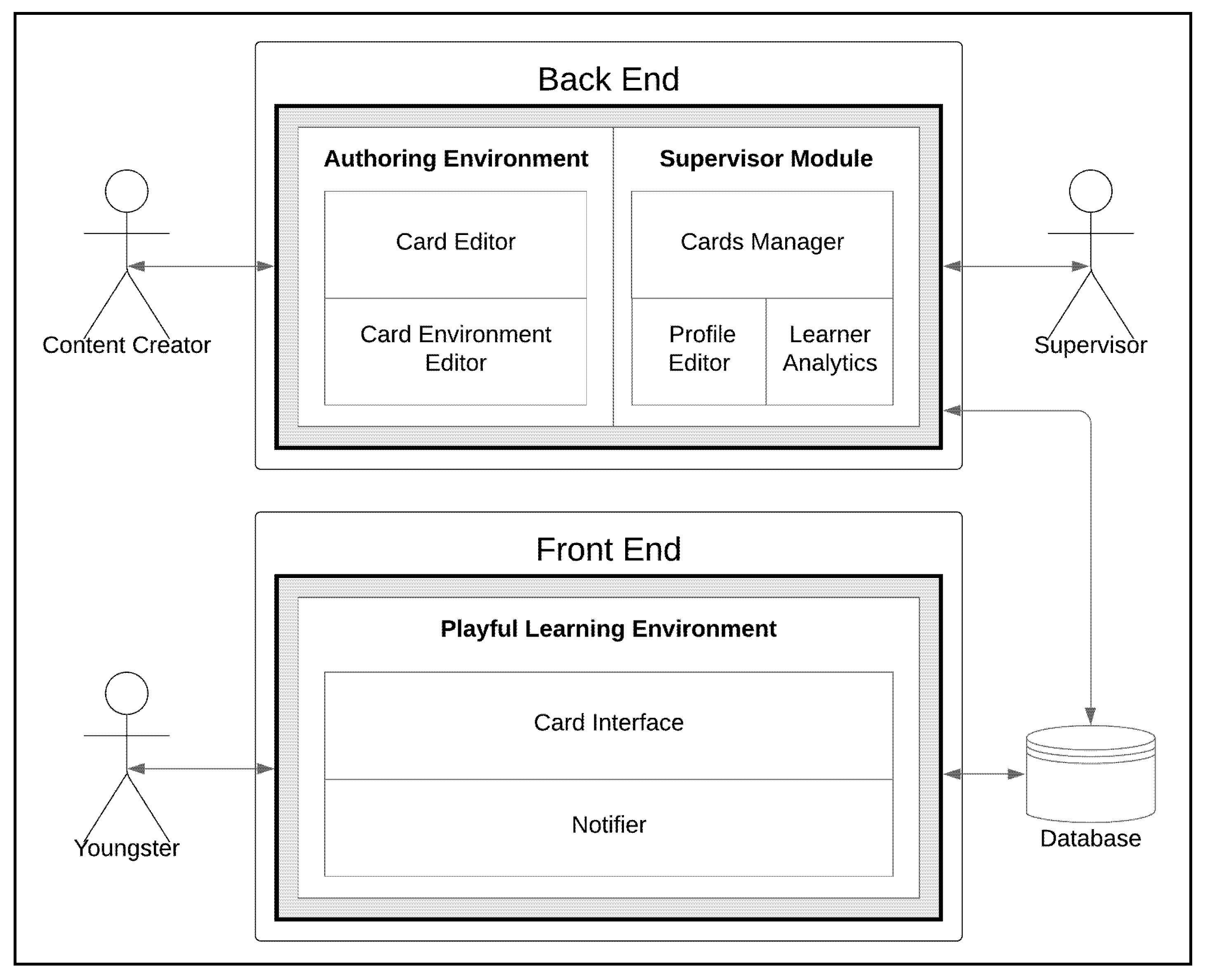

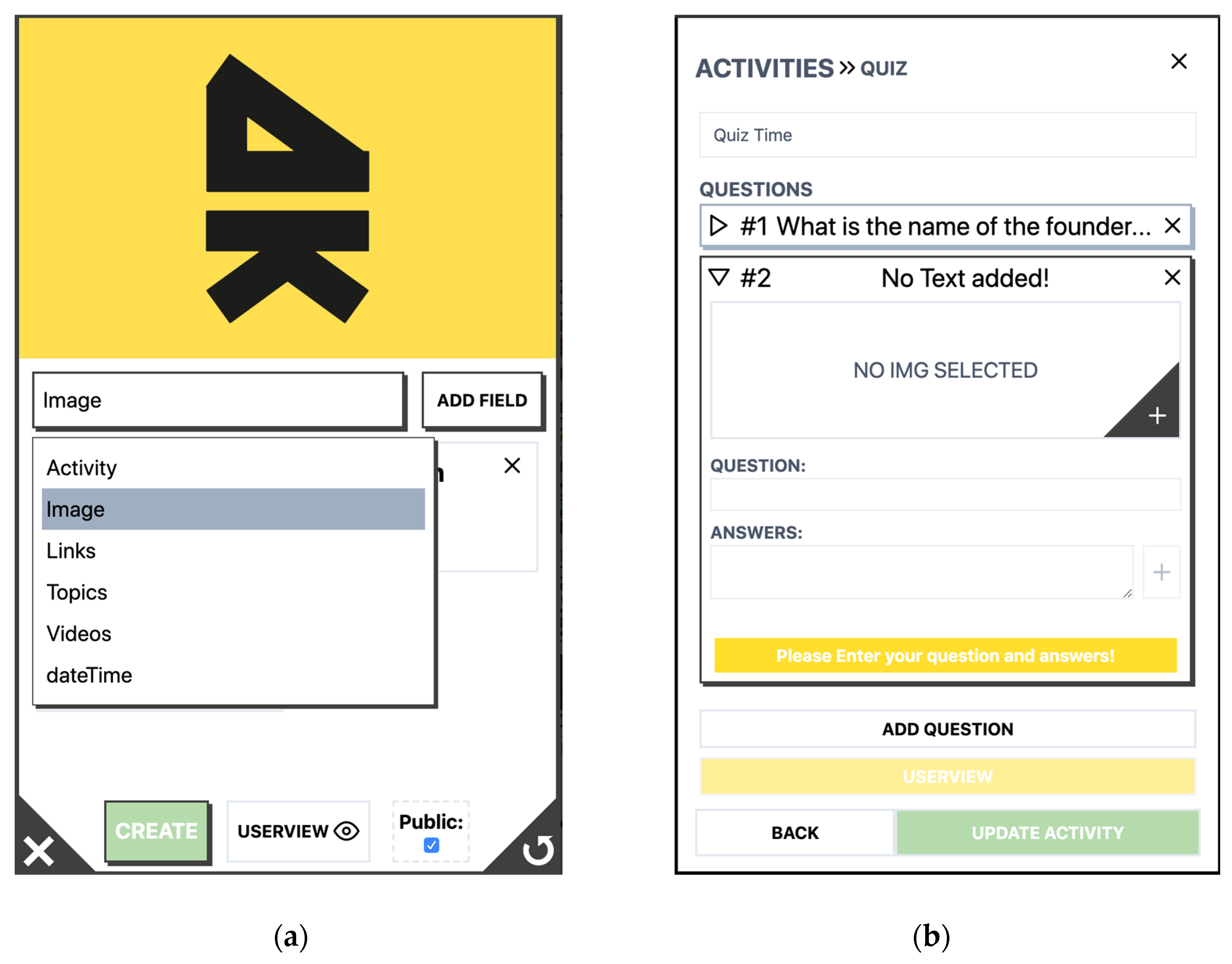

Using the Design Science Research Methodology (DSRM) [19], we developed a mobile digital environment for stimulating youngsters to explore their environment in a meaningful and playful way. The digital environment uses a card-based interface and the principles of micro learning [20] to offer small chunks of learning content and challenges. Persuasive techniques [21] and gamification [22] are used to stimulate usage. Furthermore, a personalized approach is applied, meaning that what is offered, how and when, is adapted to the needs and behavior of the individual user. We performed several formative evaluations in different contexts, which provided useful feedback to improve and extend the application. We could conclude that, in the context of those evaluations, the app was usable for youngsters and able to engage them, and we see indications that it may be able to increase the intrinsic motivation and learning capacity of youngsters. By means of different use cases, we showed that the app is usable in different contexts and for different purposes, including informal, non-formal, and formal learning, thus achieving our main goal.

The paper starts by discussing related work (Section 2). Next, it elaborates on the research steps taken to arrive at the design of the system and justifies the decisions made (Section 3). In Section 4, we provide an overview of the system and its functionalities. Next, we discuss the evaluations performed and the demonstrators developed (Section 5). Section 6 discusses the results, limitations, and future work. Section 7 concludes the paper.

5. Evaluations and Demonstrations

During the research and development process, we performed several evaluations and provided different demonstrations. The evaluations were formative evaluations with the aim of improving the application as its development progressed. For that purpose, qualitative research methods are more useful than solely quantitative ones [55]. According to Kaplan and Maxwell [55], qualitative methods can be used throughout the entire development process, as they can help to identify potential problems as they are forming, thereby providing opportunities to improve the system as it develops.

A phased approach was used for this formative evaluation. After each evaluation phase, the app was improved based on the feedback received. The main questions for this formative evaluation were: Is the TICKLE environment, as an adaptive mobile tool with persuasive strategies, (1) usable for youngsters, (2) able to engage youngsters, and (3) able to increase the intrinsic motivation and learning capacity of youngsters?

5.1. Evaluation Phase 1

In the first phase, which was situated in the early design phase, we wanted to receive suggestions and recommendations from supervisors and organizations concerned with school burnout and dropout to ensure that our environment would be usable for this purpose. Next to that, we also wanted to create awareness about the project within the field (step 6 in DSRM).

Individual sessions were held with 11 organizations, all of which work with youngsters. In the sessions, we used open interviews to gather feedback and/or input on specific topics concerning the TICKLE environment (i.e., on attractiveness, usability, and feasibility of the approach). After a short introduction, the researchers explained the aim, design, and features of the TICKLE environment, after which the current prototype was presented. Next, the TICKLE environment was discussed using some questions as a guide for the interview conversations. The interviews were audio-recorded, transcribed verbatim, and read through repeatedly. The interviews were coded and analyzed in the MAXQDA software package through an iterative process that combined elements of both content and thematic analyses [56].

Findings & actions taken:

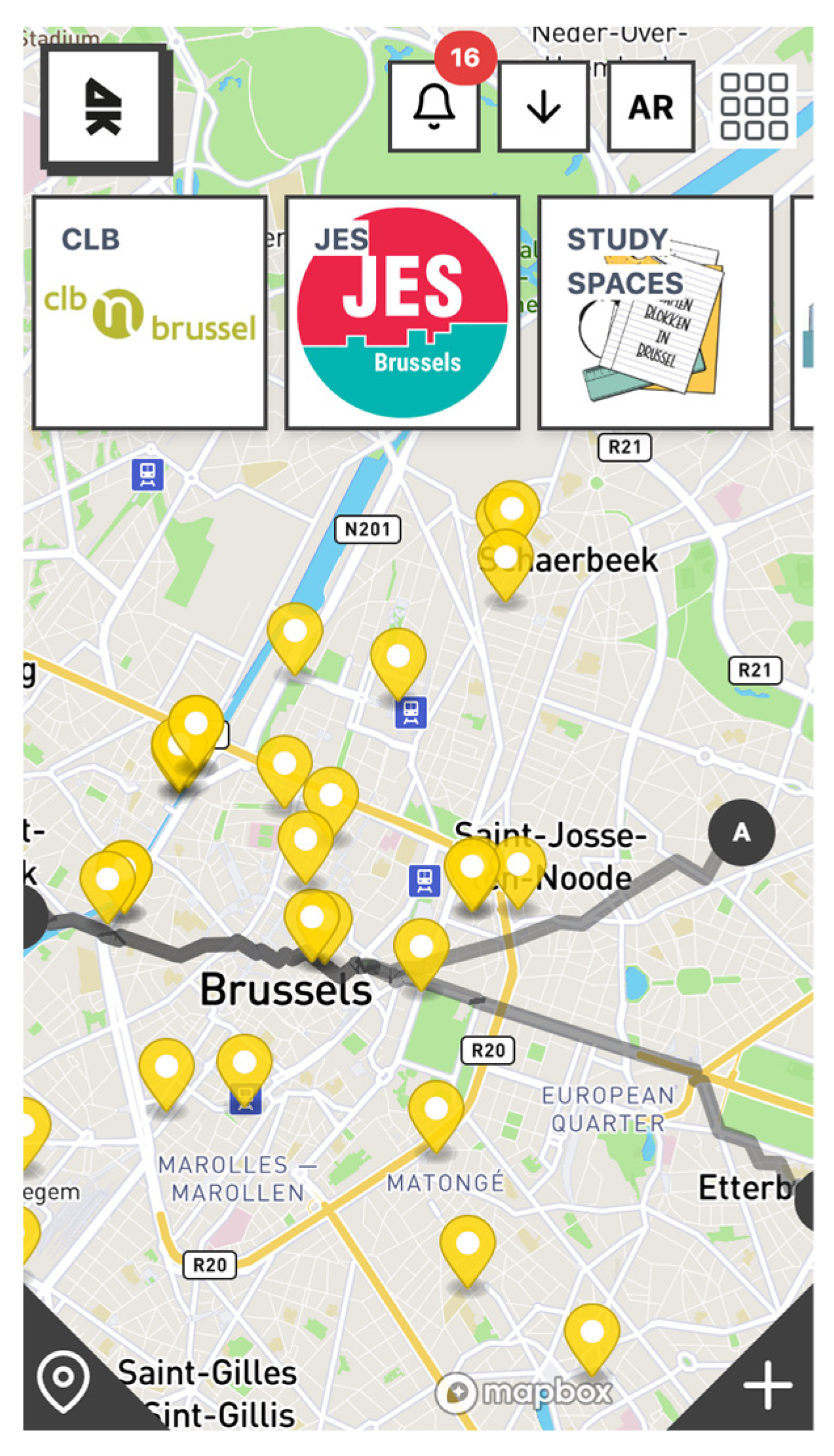

The organizations we consulted pointed out that many youngsters, among them those who (may eventually) drop out, hold on strongly to the boundaries of their own quarters, thereby missing opportunities to broaden their interests. Because of its location-based service and on-the-go approach, the organizations saw merit in TICKLE for encouraging young people to step outside their own direct neighborhoods, enabling them to explore new parts of their neighborhood and the city in general. By offering youngsters different challenges and activities, the application could bring them to locations and places they have not been before and stimulate them to explore activities they did not participate in before.

The coaches and supervisors from the consulted organizations recommended that the offer in terms of cards, activities, and challenges should be very diverse, so that all youngsters could find something of their interest. Themes mentioned were sports (e.g., dance and boxing) and music, but also media. Next to our intention to start from the youngsters’ own interest, the gamification element within TICKLE was considered a positive and appealing way to motivate youngsters to explore more. Based on this feedback, and in order to allow the youngsters to broaden their interests, we decided to provide links to “related” cards on (the back side of) a card.

Within the environment, the youngster is able to track the cards already opened and collected, the themes discovered, and his/her own growth. It was indicated that this could offer a means of self-reflection. Furthermore, it also provides ownership over one’s own learning process. Another idea that was suggested, and has been added to the system, was to include soft skills next to topics of interest, and to allow the labeling of cards with soft skill labels too, e.g., responsibility and team spirit. In this way, the youngsters can (possibly unconsciously) practice these soft skills and also collect points for them.

Furthermore, it was indicated that it would be valuable to guide the youngsters around within the educational, social (-cultural) support and service landscape. This has been taken up by providing a specific card environment dedicated to this. In this card environment, each relevant organization is described by a card, which is positioned on the map by means of a dedicated icon (see also Section 5.3).

It was suggested that the app could support geocaching [57]. Although TICKLE is not explicitly tailored towards geocaching, it is possible to support it by means of the open challenges. In the future, we will investigate how it can be supported in a more explicit way.

Another aspect that was mentioned was the importance of allowing youngsters to connect with each other with and within the TICKLE environment. The organizations gave several reasons why this would be good to have: to communicate and connect, to inspire and trigger each other, to collaborate and meet in real life, to help and learn from each other. This valuable suggestion will be implemented later (future work).

It was also suggested that the app should provide a help button that the youngster could use when (s)he would be stuck on a challenge. There are different possibilities to implement such a help-functionality. It will be considered in future work.

Another suggestion was to introduce leaderboards. We did not adopt this suggestion as such, because leaderboards are in general only motivating for those at the top. For those at the bottom, a leaderboard may harm their self-esteem and motivation. However, we will reconsider it later as part of the personalization of the persuasive strategy.

5.2. Evaluation Phase 2

Within phase two, the developed tool was piloted and evaluated in real-life settings. The main aim of phase two was to receive feedback and suggestions from the target group, i.e., youngsters, in order to adapt or redesign the environment according to their feedback and suggestions. More specifically, we wanted to know (1) if the app was attractive and usable, (2) if its use was motivating, and (3) the youngsters’ willingness to use the app on a longer term basis. On purpose, we did not focus on youngsters with school burnout but on youngsters in general. This decision was taken in order to ensure that the app would be usable by youngsters in general and would be usable for a broader goal than school burnout.

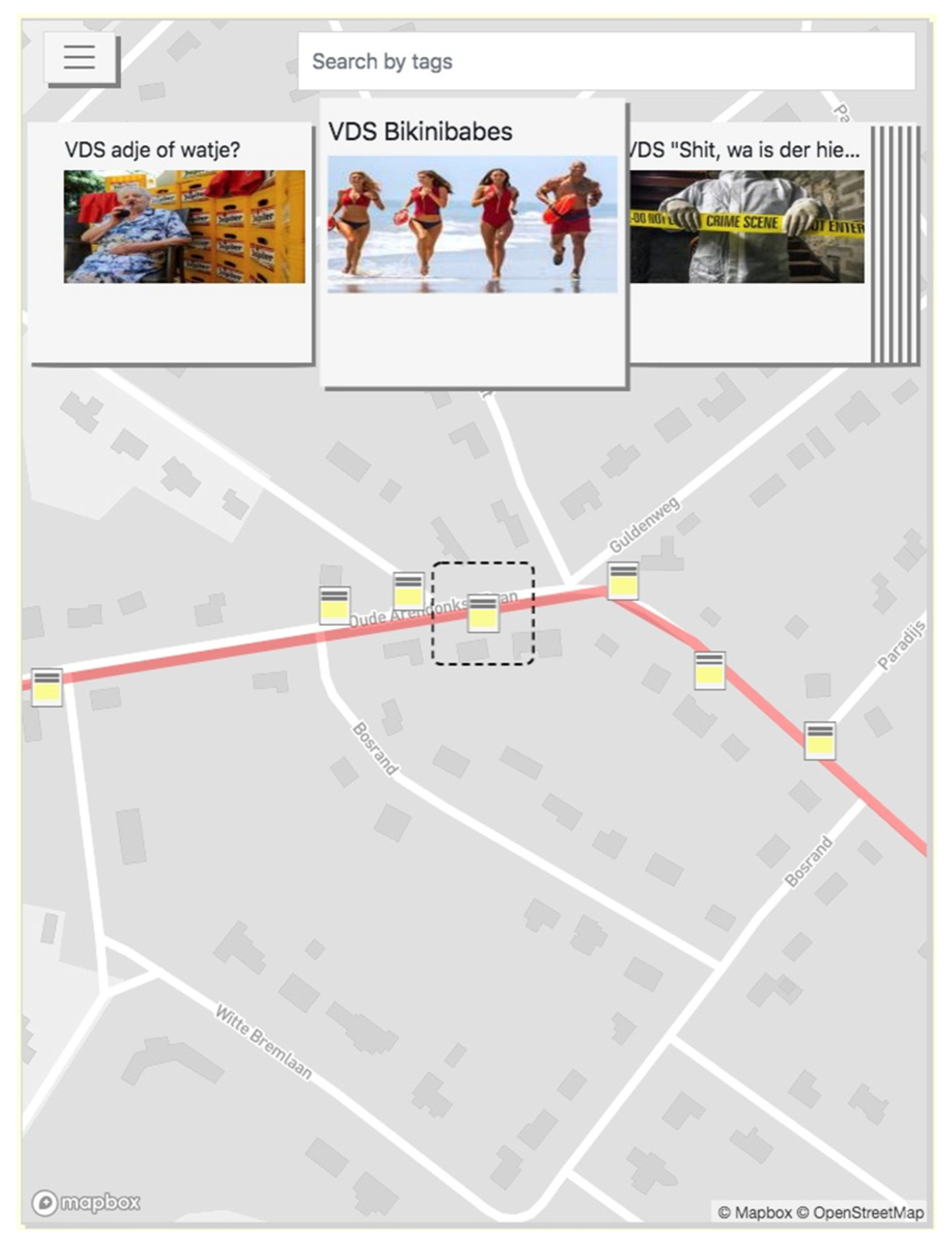

In this phase, two evaluations took place, both with the youth organization the “Vlaamse Dienst Speelpleinwerking” (VDS) [58], translated as the Flemish Service for Playground Work. VDS organizes so-called playgrounds (i.e., play days) for children during school holidays. They also organize courses for youngsters who want to become animators for the playgrounds.

In both evaluations, the participants were animators of the organization. We informed them that they were participating in an evaluation, and they were informed about their rights and agreed to participate.

5.2.1. Evaluation 1 of Phase 2

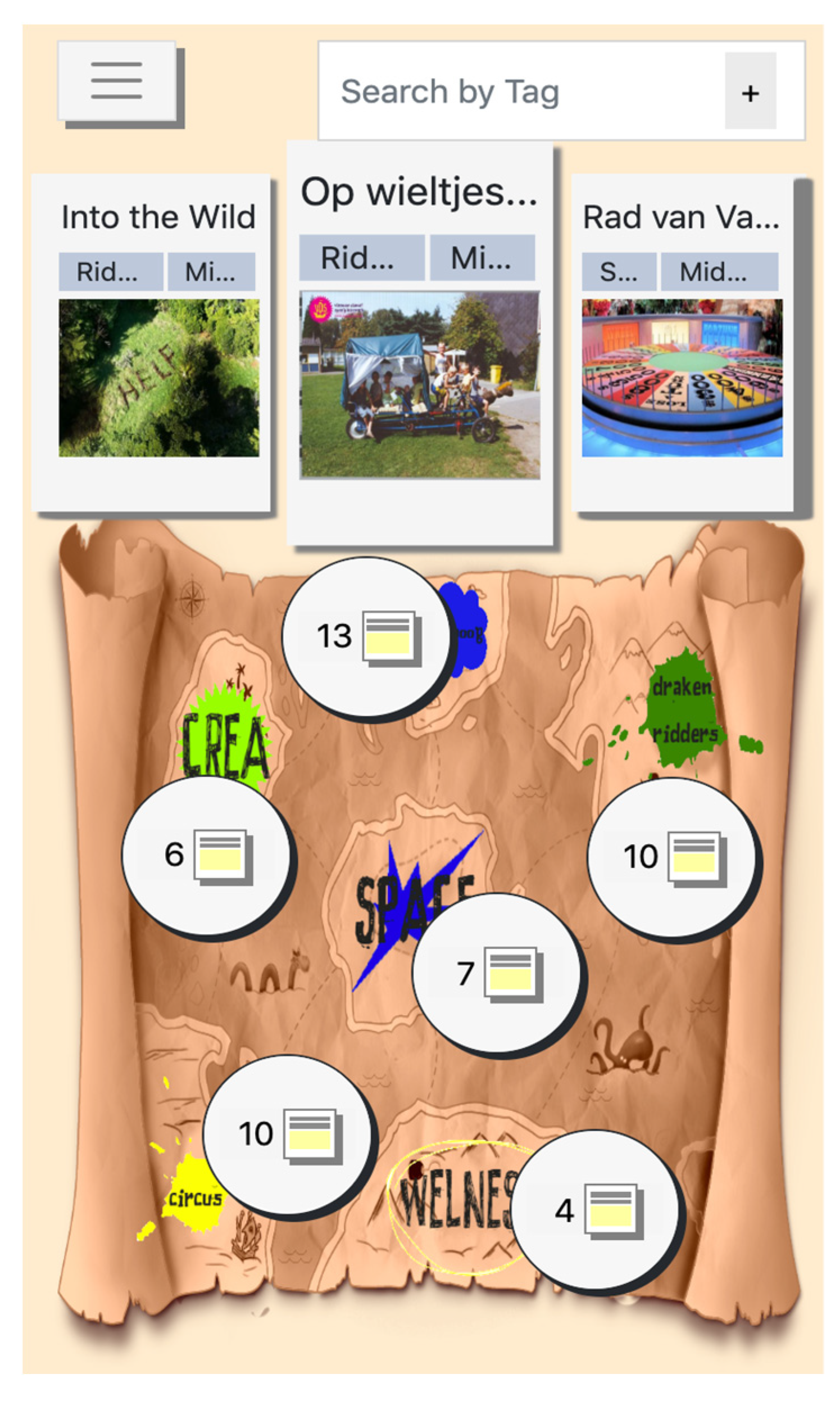

VDS was looking for a game to improve the cooperation between the animators of the playgrounds and to stimulate their creativity. To that end, we proposed that they try out the TICKLE environment. For this evaluation, cards were created with challenges related to the operation of a playground. An example challenge was to build a spaceship. The cards and challenges were created by experienced instructors from the organization. Challenges could be carried out individually, or collaboratively with other animators. The goal for the participants was to carry out the challenge/activity to the best possible standard and to collect as many cards as possible.

For this evaluation, the cards were not placed on a physical map but on a fantasy map, i.e., a treasure map (see Figure 10), as all challenges were located at the playground’s location. All cards were visible and labeled with a topic, as well as with a difficulty degree: easy, medium, or difficult.

The evaluation was done at two different playground locations. In principle, all animators of those playground locations could participate. The animators were introduced to the TICKLE environment in small groups by means of an oral presentation and a hands-on demo. They also received a short manual on paper. They could use the environment for three weeks. The youngsters had to use their own smartphones. At that time, only recent Android smartphones were well supported. Youngsters who did not have such a device could use the application on a laptop or desktop computer through a Web browser. Afterwards, feedback was invited through an online questionnaire. Next to some questions related to the participant (age, background), this questionnaire contained questions from the short version of the User Experience Questionnaire (UEQ) [59,60], as well as questions for testing whether the participants understood specific features of TICKLE, questions about the look and feel of the cards, the challenges, and about the original goal (i.e., stimulating the cooperation and creativity of the animators). These questions used a Likert scale. The participants could also leave comments and suggestions for improvement.

Results: In total, 20 animators filled out the questionnaire: nine participants were 16 years old; the others were between 17 and 25 years old. Nine participants had no or only one year of experience as animator. Concerning the questions from UEQ, the hedonic quality (stimulation and novelty) scored higher (1.5) than the pragmatic quality (attractiveness, efficiency, perspicuity, dependability) (1.00). According to the UEQ handbook, these scores represent a positive evaluation. The results on the questions to test the understanding of specific features, as well as about the look and feel, were mixed, indicating that some improvement would be needed on these aspects: eight of the 20 participants (40%) gave a score higher than 4 for attractiveness (where 1 was attractive and 7 not attractive); five participants (25%) gave a score of 4, while the scores of the other seven participants were between 1 and 3. In general, we received positive results about the challenges. For the fun aspect, all scores were between 1 and 4 (where 1 was fun and 7 boring), with 20% (four participants) for score 1 and 40% (eight participants) for score 2. All scores for being doable (where 1 was not doable and 7 very doable) were 4 or higher, with 45% (nine participants) for score 5 and 6. The results on the questions related to the original goal were positive: 85% (17 participants) indicated that the challenges were inspiring, the other 15% (three participants) replied “maybe”; 70% (14 participants) indicated that this app could contribute to a better collaboration, the other 30% (six participants) answered “maybe”; everybody agreed that the challenges could contribute to a higher quality of the playground activities.
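The percentages reported for the attractiveness question follow directly from the response counts and the 20 returned questionnaires. As a minimal sketch, the arithmetic can be checked as follows. The grouping labels, variable names, and helper function are illustrative assumptions and not part of the TICKLE tooling or the original analysis.

```python
# Counts as reported in the text; the dict layout and labels are illustrative assumptions.
TOTAL_RESPONDENTS = 20

attractiveness_counts = {
    "score above 4": 8,
    "score of 4": 5,
    "score 1 to 3": 7,
}


def percentage(count: int, total: int = TOTAL_RESPONDENTS) -> float:
    """Return the share of respondents in percent."""
    return 100 * count / total


# The three groups should account for every returned questionnaire.
assert sum(attractiveness_counts.values()) == TOTAL_RESPONDENTS

attractiveness_shares = {
    label: percentage(count) for label, count in attractiveness_counts.items()
}

for label, share in attractiveness_shares.items():
    print(f"{label}: {share:.0f}%")
```

Run as is, the sketch prints 40%, 25%, and 35% for the three groups, which together cover all 20 respondents.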

Suggestions and comments were provided. Comments about the challenges provided useful feedback about the type of challenges youngsters are interested in. The other comments and suggestions were about improving the interface, the info presented on the cards, and some aspects of the functionalities. Additionally, usability issues with specific smartphones and browsers were mentioned.

5.3. Evaluation Phase 3

In this phase, evaluations in real-life settings were also done, but this time the focus was on youngsters in some way related to the issue of school burnout and school dropout. Two evaluations took place, each with an organization dealing with youngsters who are in a problematic situation, i.e., Try-out [61] and CAD Limburg [62]. Try-out offers activities that allow youngsters with school issues to detect their talents and interests, and in this way tries to reconnect them with regular school or work. CAD Limburg offered a Reboot Camp [63] for young gamers at risk, who are often also at risk of school dropout.

In both evaluations, the participants were informed that they were participating in an evaluation, they were informed about their rights, and agreed to participate.

Due to the problems experienced with the broad range of smartphones used by youngsters in the evaluation phase two, we decided to provide them with a smartphone to avoid usability problems due to incompatibility issues. The smartphones were Android devices. Sufficient mobile data was provided, as this was reported as an issue in the previous evaluation phase. We realize that those issues should be resolved in a later stage, but we wanted to avoid issues with smartphones influencing the results of the evaluations.

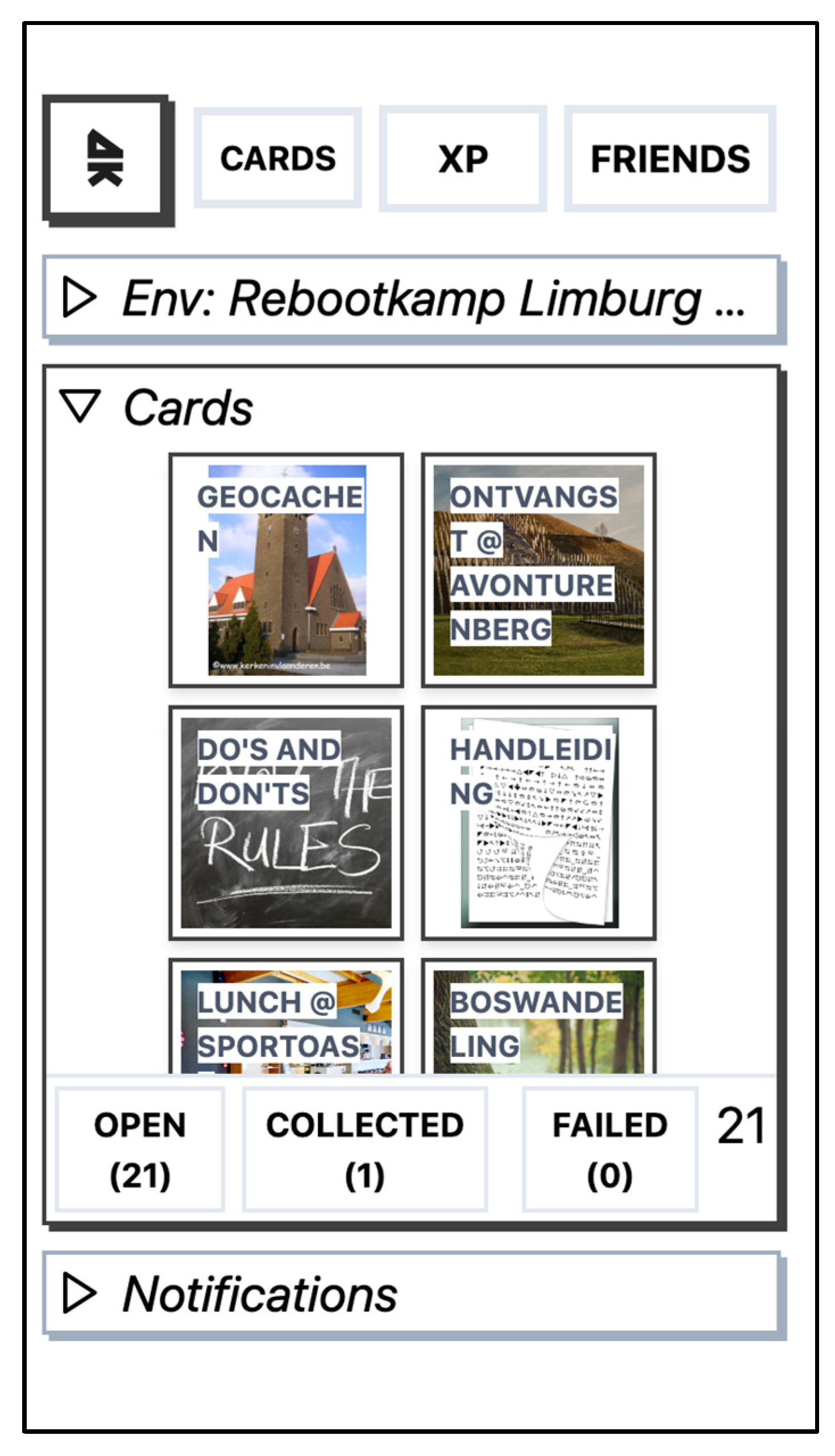

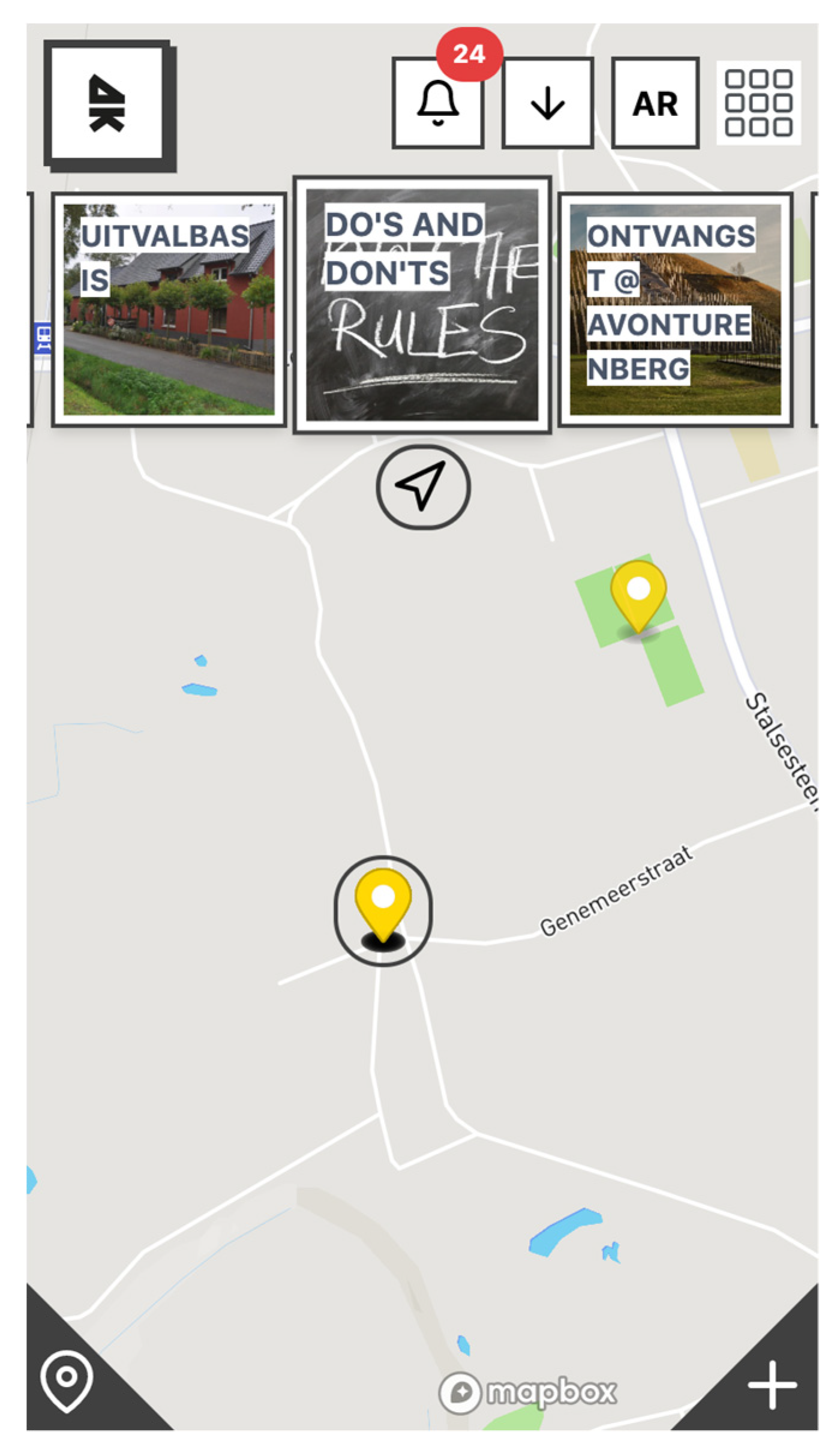

5.3.2. Evaluation 2 of Phase 3

This evaluation was done in the context of the Reboot Camp organized by the organization CAD Limburg. The camp lasted one week (5 days). For this evaluation, cards were created for the different activities offered during the camp. In this way, the youngsters could use TICKLE as a kind of agenda. Each day they could see, by means of cards, the activities of the day. The cards only became visible on the day of the activity. The cards contained information about the activity. To collect a card, they had to do a small challenge related to the activity. The challenges ranged from doing a quiz to writing a small reflection about an activity. In this way, points could be collected. There were also cards with general information, such as a card with a short manual, a card with the rules of the camp, a card about the camp’s location, and a card with a link to the questionnaire. See Figure 13 for a screenshot of the card interface used for this evaluation.

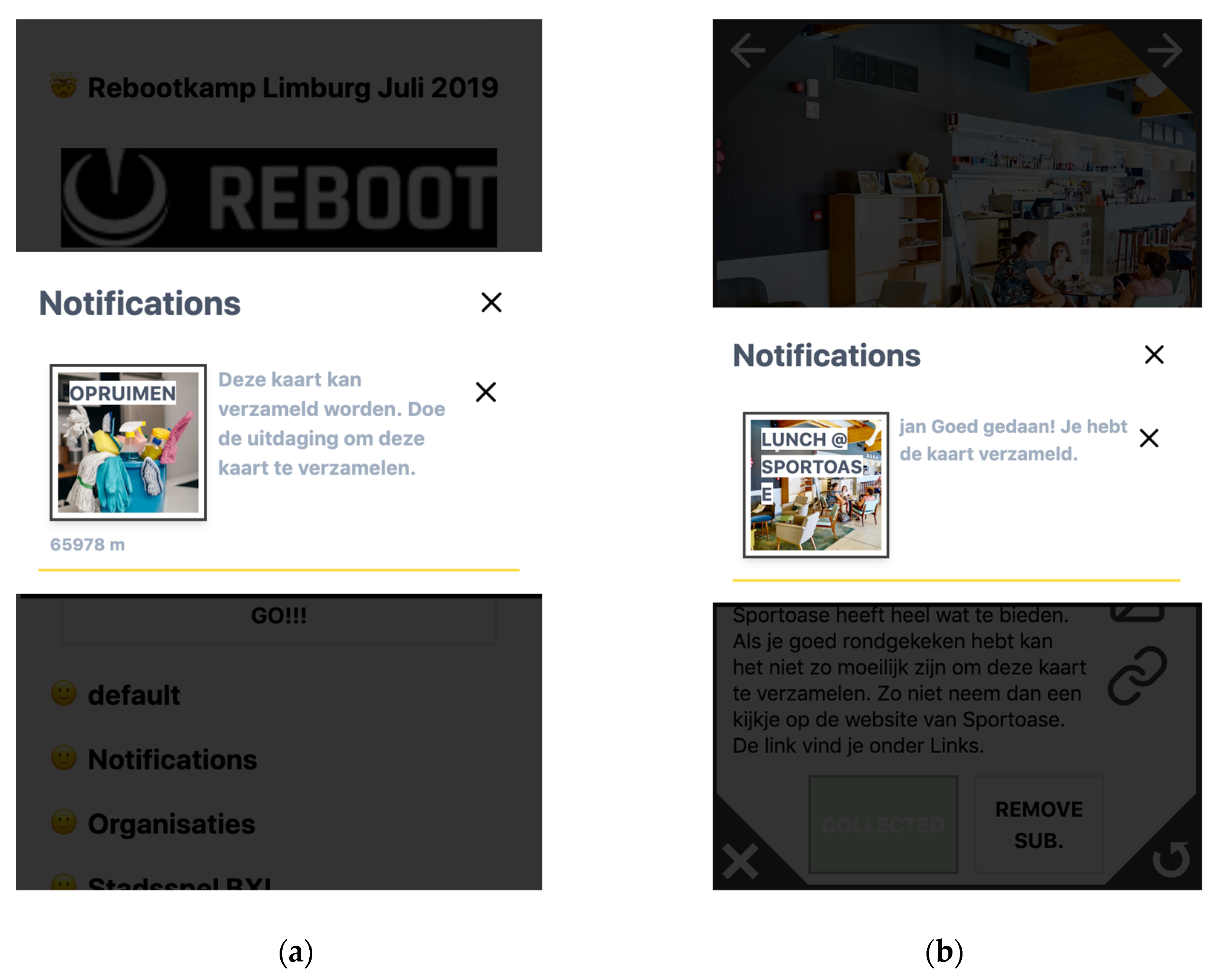

The seven youngsters received an introduction with a hands-on demo. They each received a smartphone with mobile data. At the request of the organization, we restricted the use of the smartphone to TICKLE, to consulting the Web, and to taking pictures. Unfortunately, the supervisors of the camp decided that the youngsters could only use the smartphone at certain moments during the day. On the last day of the camp, the participants were supposed to fill out an online questionnaire, an activity that was also offered through a card. The questionnaire for the participants was similar to the questionnaire for the city game. The questions were formulated as statements, with a scale from 1 to 5 used to indicate the level of agreement with the statement: 1 being “strongly disagree”, and 5 being “strongly agree”. In this evaluation, we also asked questions about the notifications provided in TICKLE. The participants could again leave comments and suggestions for improvement.

Results: Although we explicitly asked the organization to encourage the youngsters to fill out the online questionnaire on the last day of the camp, only three of the seven youngsters did so. They were 14, 15, and 18 years old, respectively. These participants were positive about the app (measured by means of different questions), found it easy to use (two participants agreed with a score of 4, one with a score of 5), and found it a nice way to discover new things (one score 3, one score 4, and one score 5). They were positive about the use of notifications for letting them know which activities would take place (two scores of 4 and one of 3), but they were divided about the usefulness of notifications for informing them about the points collected (one score of 1, one score of 3, and one of 4). The information on the cards and their look and feel were evaluated positively (agreement with a score of 3 (one participant) and 4 (two participants) for the information, and with a score of 4 (three participants) for the look and feel). These participants also liked the challenges (one score 4 and two score 5) and found them very doable (three score 4) and varied (one score 3 and two score 4), but found them on average difficult to understand (one score 2, one score 3, and one score 4). They found TICKLE a nice way to get to know the activities (one score 3, one score 4, and one score 5); recognized that they learned new things (two score 4 and one score 5); and indicated that they would use it again (with different degrees of certainty: one score 3, one score 4, and one score 5). For the questions used to measure a change in their motivation for learning more about new areas of interest or activities, the results were mixed: two showed a clearly increased motivation (score 5), while one did not (score 2). No comments or suggestions for improvement were given.

We checked the activity in the card environment because of the low number of responses. We observed a slight decrease in activity over the week, and the participants performed the challenge more for the cards about outdoor activities than for those of the indoor activities.

Furthermore, we received feedback from the organizers of the camp. They mentioned that the youngsters indicated that they enjoyed working with the app; the youngsters often asked in the morning how many challenges they had to perform. However, they also noticed that as the camp progressed, some youngsters had less motivation to complete all the challenges. They reported that the app motivated some of the youngsters to “do it right”, e.g., they searched for the right information online. However, the organizers also mentioned that the circumstances were not ideal for the evaluation: given the multitude of activities throughout the camp, it was not easy to follow up on all challenges, and because of the strict policy on the use of cell phones during the camp, the time available for using TICKLE was limited.

6. Discussion, Limitations and Further Work

In general, in the context of the formative evaluations, we obtained positive results and received useful feedback to improve and extend the application. Based on the results, we can conclude that in the context of these formative evaluations, the app is usable for youngsters and able to engage them, and we see indications that it may be able to increase the intrinsic motivation and learning capacity of youngsters. However, the evaluations were limited in the number of participants and the context in which they were performed, and they had a limited goal, i.e., checking the usability of the app for youngsters and the ability to engage them. To confirm the results and to verify whether the app can increase the intrinsic motivation and learning capacity of youngsters, summative and longitudinal evaluations are needed. Such evaluations are under preparation. For these evaluations, questionnaires other than UEQ will be considered. While UEQ aims to measure the user experience in general, GAMEX is a new instrument (i.e., questionnaire) for measuring gameful experience, i.e., the user experience of engaging with gamified applications [65]. Although developed in the context of customer behavior, it may also be usable for education. PLEXQ [66] is another questionnaire focusing on measuring playfulness. This questionnaire targets a wide range of products, including mobile apps. To measure the effect on motivation for learning, we will collaborate with our research partners from the educational domain.

Next to the positive results obtained from the formative evaluations performed, we encountered different issues that are worthwhile to mention:

First of all, we found that our target audience, i.e., youngsters, is very demanding, especially concerning look, feel, and usability. Although we always explained very well that the app under evaluation was research work and still required improvements, most of the critique was on the look and feel, and about small usability issues. Also being able to quickly start and resume the app was very important for them.

Next, there exists a broad range of smartphones with different screen sizes and browser versions. It turned out to be impossible in the context of a research project to ensure that the application was running smoothly and without issues on all possible devices used by youngsters. For that reason, we decided to provide smartphones to perform the evaluations in the third phase. However, for the longitudinal evaluation this may cause some bias. When the youngsters have to use an additional device next to their own smartphone, they may find this annoying, and it will counteract efforts to make the app “a seamless part of daily life”.

Lastly, when performing evaluations in real-life settings, it is not always possible to have full control over the setup. Even after careful preparation, unexpected issues may show up during the evaluation. For instance, this happened during the Reboot Camp evaluation: although we limited the use of the mobile phone to the TICKLE app (so the youngsters could not use it to make phone calls, download or play games, or for other apps), and discussed this with the organizers in advance, it turned out that the strict policy for using mobile devices was also applied to the mobile phones given to the youngsters for the evaluation. Presumably, it was easier during the camp to ban the use of any mobile phone during the day, and because we were not allowed to be present during the camp week, we could not intervene.

Most available questionnaires on usability and user experience are designed for adults with good literacy. For the evaluations in phase two, we used UEQ in the native language of the participants, but simplified the language somewhat, because a pilot with youngsters of the same age indicated that some terms were still too difficult to understand. When the youngsters were filling out the questionnaire, we also noticed that they had problems in using the Likert scale, especially when the lowest score represented a good result and the highest a bad result. For instance, they had no problem in scoring a statement like “the system was (1) easy to learn … (7) difficult to learn”, but a statement like “the system was (1) motivating … (7) demotivating” caused misunderstanding. Apparently, in their education, they were used to associating a high score with a good result. The participants in phase three were even younger, and it was known that their literacy could be an issue, so we simplified the language in the questionnaire even more and used statements that all could be answered with the same scale: “strongly disagree” to “strongly agree”. As much as possible, we tried to avoid negatively formulated statements. In the first evaluation of phase three, the youngsters could ask for an explanation while filling out the questionnaire, but in general they did not use this opportunity. For the summative longitudinal evaluations, we will pay even more attention to the questionnaire, pilot it several times, and if possible, use an existing validated questionnaire tailored towards children/youngsters and suitable for our purpose.
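One common way to deal with mixed-direction items at analysis time is to reverse-code them, so that a higher value always stands for a better result. The sketch below illustrates the arithmetic for a 7-point scale (reversed score = 8 minus raw score). The item names and the set of items treated as reversed are hypothetical and serve only as an illustration; this is not a description of how the questionnaires in this study were processed.

```python
SCALE_MIN, SCALE_MAX = 1, 7

# Hypothetical item names: for these items, 1 is the best possible answer.
LOW_IS_GOOD = {"easy_to_learn", "motivating"}


def reverse_code(score: int) -> int:
    """Mirror a score on the scale, so 1 becomes 7, 2 becomes 6, and so on."""
    if not SCALE_MIN <= score <= SCALE_MAX:
        raise ValueError(f"score {score} is outside {SCALE_MIN}..{SCALE_MAX}")
    return SCALE_MIN + SCALE_MAX - score


def harmonize(responses: dict[str, int]) -> dict[str, int]:
    """Return responses in which a higher value always means a better result."""
    return {
        item: reverse_code(score) if item in LOW_IS_GOOD else score
        for item, score in responses.items()
    }


# Hypothetical answers of one participant.
example = {"easy_to_learn": 2, "motivating": 6, "doable": 5}
harmonized = harmonize(example)
print(harmonized)
```

Reverse-coding only harmonizes the data afterwards; it does not remove the confusion participants experience while answering, which is why a single scale direction in the questionnaire itself remains preferable.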

The evaluations and demonstrations showed that, although we started with the aim of tackling school burnout, the application is usable in a broader context, e.g., for team building activities, for providing information, and for non-formal as well as formal learning activities. Although we have not yet tested it, we see more possible application domains, e.g., tourism, museums, shopping opportunities in a city, event announcements, and social engagement. In addition, it is our opinion that the app could be used for a broader audience than youngsters. For example, we are currently setting up an environment with TICKLE for civic engagement focused on assisting the elderly [67].

Most of the feedback and suggestions received during the formative evaluations have already been taken into consideration. Still to be considered, and planned for future work, are:

- Allowing youngsters to connect with each other with and within the TICKLE environment and to collaborate on the collection of cards.

- Providing a help functionality that a youngster can use when (s)he is stuck on a challenge.

- Providing the possibility to use leaderboards as a persuasive technique when this is appropriate.

- Allowing youngsters to create cards themselves. This will contribute to the investments made by the youngsters, and it also fits the fact that youngsters like to share their own material online. This functionality is already available, but a procedure needs to be added to prevent youngsters from creating cards that are not acceptable.

- Adding an intelligent matching algorithm to suggest cards to youngsters automatically. Currently, this needs to be done manually by the supervisor of a youngster.

Author Contributions

Conceptualization, O.D.T. and J.M.; methodology, O.D.T. and J.M.; software, J.M. and D.B.; validation, O.D.T., J.M., R.L. and D.B.; investigation, J.M., R.L. and D.B.; writing—original draft preparation, O.D.T., J.M., R.L. and D.B.; writing—review and editing, O.D.T., J.M., R.L. and D.B.; visualization, D.B. and J.M.; supervision, O.D.T.; project administration, O.D.T.; funding acquisition, O.D.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research is funded by the European Regional Development Fund (ERDF) and the Brussels-Capital Region within the framework of the Operational Program 2014-2020 through the ERDF-2020 project ICITY-RDI.BRU.

Acknowledgments

The authors would like to acknowledge the support received from the company Telenet bvba by means of the donation of material and services needed in the evaluations. We would also like to thank all the members of the organizations involved in our evaluations for their cooperation and contributions. We also thank all participants of the evaluations for their time and feedback.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the application; in the set up of the evaluations, collection, analyses or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Marsick, V.J.; Watkins, E.K. Informal and Incidental Learning. New Dir. adult Contin. Educ. 2001, 89, 25–34.

- Maarschalk, J. Scientific literacy and informal science teaching. J. Res. Sci. Teach. 1998, 25, 135–146.

- Tamir, P. Factors associated with the relationship between formal, informal, and nonformal science learning. J. Environ. Educ. 1991, 22, 34–42.

- Reischmann, J. Learning ‘en passant’: The Forgotten Dimension Andragogy. In Proceedings of the Conference of the American Association of Adult and Continuing Education, Hollywood, FL, USA, 23 October 1986.

- Pintrich, P.R. Understanding self-regulated learning. New Dir. Teach. Learn. 1995, 199, 3–12.

- Grant, M.M. Using mobile devices to support formal, informal & semi-formal. uses and implications for teaching & learning. In Emerging Technologies for Steam Education; Springer: Berlin/Heidelberg, Germany, 2015.

- Eshach, H. Bridging In-school and Out-of-school Learning: Formal, Non-Formal, and Informal Education. J. Sci. Educ. Technol. 2007, 16, 171–190.

- Lee, B. Social Media as a Non-formal Learning Platform. Procedia Soc. Behav. Sci. 2013, 103, 837–843.

- Clough, G. Geolearners: Location-Based Informal Learning with Mobile and Social Technologies. IEEE Trans. Learn. Technol. 2010, 3, 33–44.

- Dabbagh, N.; Kitsantas, A. Personal Learning Environments, social media, and self-regulated learning: A natural formula for connecting formal and informal learning. Internet High. Educ. 2012, 15, 3–8.

- Jeng, Y.L.; Wu, T.T.; Huang, Y.M.; Tan, Q.; Yang, S.J. The add-on impact of mobile applications in learning strategies: A review study. Educ. Technol. Soc. 2010, 13, 3–11.

- Clement, J. Social Media—STATISTICS & Facts. Available online: https://www.statista.com/topics/1164/social-networks/ (accessed on 4 December 2019).

- McCord Museum. MTL Urban Museum. Available online: https://www.musee-mccord.qc.ca/en/mtl-urban-museum/ (accessed on 16 December 2019).

- Bernsmann, S.; Croll, J. Lowering the threshold to libraries with social media: The approach of ‘Digital Literacy 2.0’, a project funded in the EU Lifelong Learning Programme. Libr. Rev. 2013, 62, 53–58.

- Gikas, J.; Grant, M.M. Mobile computing devices in higher education: Student perspectives on learning with cellphones, smartphones & social media. Internet High. Educ. 2013, 19, 18–26.

- Naismith, L.; Lonsdale, P.; Vavoula, G.; Sharples, M. Literature Review in Mobile Technologies and Learning. Available online: https://telearn.archives-ouvertes.fr/hal-00190143 (accessed on 20 August 2018).

- Kangas, M. Creative and playful learning: Learning through game co-creation and games in a playful learning environment. Think. Ski. Creat. 2010, 5, 1–15.

- Kangas, M.; Ruokamo, H. Playful Learning Environments: Effects on Children’s Learning. In Encyclopedia of the Sciences of Learning; Springer: Berlin/Heidelberg, Germany, 2012.

- Peffers, K.; Tuunanen, T.; Rothenberger, M.A.; Chatterjee, S. A Design Science Research Methodology for Information Systems Research. Source J. Manag. Inf. Syst. 2007, 24, 45–77.

- Hug, T. Didactics of Microlearning; Waxmann Verlag GmbH: Münster, Germany, 2007.

- Fogg, B.J. Persuasive technology: Using computers to change what we think and do. Ubiquity 2002, 2002, 5.

- Deterding, S.; Dixon, D.; Khaled, R.; Nacke, L. From Game Design Elements to Gamefulness. In Proceedings of the 15th International Academic MindTrek Conference on Envisioning Future Media Environments—MindTrek, Tampere, Finland, 28–30 September 2011.

- Pham, X.L.; Chen, G.D. PACARD: A New Interface to Increase Mobile Learning App Engagement, Distributed Through App Stores. J. Educ. Comput. Res. 2018, 57, 1–28.

- Edge, D.; Whitney, M.; Charlotte, C. MemReflex: Adaptive Flashcards for Mobile Microlearning. In Proceedings of the 14th International Conference on Human-Computer Interaction with Mobile Devices and Services, San Francisco, CA, USA, 21–24 September 2012.

- Epignosis. Talentcards. Available online: https://www.talentcards.com/ (accessed on 6 December 2019).

- TELMA. S_U+G Serious Urban Game. Available online: http://sug-platform.be/ (accessed on 16 December 2019).

- Avouris, N.; Yiannoutsou, N. A Review of Mobile Location-based Games for Learning across Physical and Virtual Spaces. J. Univers. Comput. Sci. 2012, 18, 2120–2142.

- Toscos, T.; Faber, A. Chick Clique: Persuasive Technology to Motivate Teenage Girls to Exercise. In Proceedings of the CHI’06 Extended Abstracts on Human Factors in Computing Systems, Montréal, QC, Canada, 22–27 April 2006.

- Kimura, H.; Ebisui, J.; Funabashi, Y.; Yoshii, A.; Nakajima, T. iDetective: A Persuasive Application to Motivate Healthier Behavior Using Smart Phone. In Proceedings of the 2011 ACM Symposium on Applied Computing, TaiChung, Taiwan, 21–25 March 2011.

- Tikka, P.; Woldemicael, B.; Oinas-Kukkonen, H. Building an App for Behavior Change: Case RightOnTime. In Proceedings of the Fourth International Workshop on Behavior Change Support Systems (BCSS 2016), Salzburg, Austria, 5 April 2016.

- Hevner, A.R.; March, S.T.; Park, J.; Ram, S. Design Science in Information Systems Research. MIS Q. 2004, 28, 75–105.

- Offermann, P.; Levina, O.; Schönherr, M.; Bub, U. Outline of a Design Science Research Process. In Proceedings of the 4th International Conference on Design Science Research in Information Systems and Technology, Philadelphia, PA, USA, May 2009.

- Salmela-Aro, K.; Kiuru, N.; Leskinen, E.; Nurmi, J.E. School Burnout Inventory (SBI) Reliability and Validity. Eur. J. Psychol. Assess. 2009, 25, 48–57.

- Bask, M.; Salmela-Aro, K. Burned Out to Drop Out: Exploring the Relationship Between School Burnout and School Dropout. Eur. J. Psychol. Educ. 2013, 28, 511–528.

- European Commission. Reducing Early School Leaving: Key Messages and Policy Support. Available online: https://ec.europa.eu/education/content/reducing-early-school-leaving-key-messages-and-policy-support_en (accessed on 20 August 2018).

- European Commission. Early School Leaving. Available online: http://ec.europa.eu/education/policy/school/early-school-leavers_en (accessed on 20 August 2018).

- Vlieghe, J.; de Troyer, O. Tickle Report D1: State-of-the-art on Media Use in Belgium, Flanders & Belgium. Available online: https://wise.vub.ac.be/tickle/index.php/reformation/sota-mediause/ (accessed on 20 August 2018).

- Vlieghe, J.; de Troyer, O. Tickle Report D2: State-of-the-Art on Early School Leaving and Dropouts. Available online: https://wise.vub.ac.be/tickle/index.php/reformation/report-d2-state-of-the-art-on-early-school-leaving-and-dropouts/ (accessed on 20 August 2018).

- Fogg, B. A Behavior Model for Persuasive Design. In Proceedings of the 4th International Conference on Persuasive Technology—Persuasive, Claremont, CA, USA, 26–29 April 2009.

- Lo, J.L. Playful Tray: Adopting Ubicomp and Persuasive Techniques into Play-Based Occupational Therapy for Reducing Poor Eating Behavior in Young Children. In Proceedings of the International Conference on Ubiquitous Computing, Innsbruck, Austria, 16–19 September 2007.

- Pham, X.-L.; Nguyen, T.-H.; Hwang, W.-Y.; Chen, G.-D. Effects of push notifications on learner engagement in a mobile learning app. In Proceedings of the 2016 IEEE 16th International Conference on Advanced Learning Technologies (ICALT), Austin, TX, USA, 25–28 July 2016.

- Kaptein, M.; van Halteren, A. Adaptive persuasive messaging to increase service retention: Using persuasion profiles to increase the effectiveness of email reminders. Pers. Ubiquitous Comput. 2013, 17, 1173–1185.

- Eyal, N. Hooked: How to Build Habit-Forming Products; Penguin Books Ltd: London, UK, 2014.

- Simons, H.W.; Jones, J.G.; Simons, H.W. Persuasion in Society; SAGE: Routledge, NY, USA, 2017.

- Vlieghe, J.; de Troyer, O. Tickle Report D3: Literature Study on Persuasive Techniques and Technology. Available online: https://wise.vub.ac.be/tickle/wp-content/uploads/2015/12/Report-D3_final.pdf (accessed on 20 August 2018).

- Goldstein, N.J.; Steve, M.J.; Cialdini, R.B. Yes! 50 Scientifically Proven Ways to be Persuasive; Simon & Schuster Inc: New York, NY, USA, 2008.

- John, O.P.; Srivastava, S. The Big Five trait taxonomy: History, measurement, and theoretical perspectives. In Handbook of Personality: Theory and Research; Lawrence, A.P., John, O.P., Eds.; Elsevier: Amsterdam, The Netherlands, 1999.

- Kidimedia. BookWidgets Interactive Learning. Available online: https://www.bookwidgets.com/ (accessed on 23 January 2020).

- Markopoulos, P.; Kaptein, M.; de Ruyter, B.; Aarts, E. Personalizing persuasive technologies: Explicit and implicit personalization using persuasion profiles. Int. J. Hum. Comput. Stud. 2015, 77, 38–51.

- Progressive Web Apps. Available online: https://developers.google.com/web/progressive-web-apps/ (accessed on 23 January 2020).

- Redux—A Predictable State Container for JS Apps. Available online: https://redux.js.org/ (accessed on 23 January 2020).

- Facebook. React—A Javascript Library for Building User Interfaces. Available online: https://reactjs.org/ (accessed on 23 January 2020).

- Firebase Helps Mobile and Web App Teams Succeed. Available online: https://firebase.google.com/ (accessed on 23 January 2020).

- Json-Rules-Engine. Available online: https://www.npmjs.com/package/json-rules-engine (accessed on 23 January 2020).

- Kaplan, B.; Maxwell, J.A. Qualitative research methods for evaluating computer information systems. In Evaluating the Organizational Impact of Healthcare Information Systems; Anderson, J.G., Aydin, C.E., Eds.; Springer: Berlin/Heidelberg, Germany, 2005.

- Bowen, G. Document Analysis as a Qualitative Research Method. Qual. Res. J. 2009, 9, 27–40.

- Geocaching. Available online: https://www.geocaching.com/ (accessed on 19 December 2019).

- VDS. Vlaamse Dienst Speelpleinwerking. Available online: https://www.speelplein.net/ (accessed on 16 December 2019).

- Rauschenberger, M.; Schrepp, M.; Cota, M.P.; Olschner, S.; Thomaschewski, J. Efficient Measurement of the User Experience of Interactive Products. How to use the User Experience Questionnaire (UEQ). Example: Spanish Language Version. Int. J. Artif. Intell. Interact. Multimed. 2013, 2, 39–45.

- Team UEQ, User Experience Questionnaire. Available online: https://www.ueq-online.org/ (accessed on 16 December 2019).

- Alba. alba—Try-Out Brussel. Available online: http://alba.be/project/try-out-brussel/ (accessed on 16 December 2019).

- CADLimburg. Centra Voor Alcohol-en Andere Drugproblemen Limburg. Available online: https://www.cadlimburg.be/ (accessed on 16 December 2019).

- Reboot. Available online: https://www.rebootkamp.be/ (accessed on 16 December 2019).

- Koerth-Baker, M.; Wolfe, J. Most Personality Quizzes Are Junk Science. Take One That Isn’t. Available online: https://projects.fivethirtyeight.com/personality-quiz/ (accessed on 16 December 2019).

- Eppmann, R.; Bekk, M.; Klein, K. Gameful Experience in Gamification: Construction and Validation of a Gameful Experience Scale. J. Interact. Mark. 2018, 43, 98–115.

- Boberg, M.; Karapanos, E.; Holopainen, J.; Lucero, A. PLEXQ: Towards a Playful Experiences Questionnaire. In Proceedings of the 2015 Annual Symposium on Computer-Human Interaction in Play (CHI PLAY 2015), London, UK, 3–7 October 2015.

- Lindberg, R.; Maushagen, J.; de Troyer, O. Combining a Gamified Civic Engagement Platform with a Digital Game in a Loosely Way to Increase Retention. In Proceedings of the 21st International Conference on Information Integration and Web-based Applications & Services, Munich, Germany, 2–4 December 2019.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).