1. Introduction

Motivation is what triggers and supports the learning process. Undergraduate students enroll in a new course eager to know more about or start a career in a specific area. Nevertheless, this primary stimulus does not last long and typically vanishes after the initial difficulties and failures. This loss of motivation is arguably the most prominent concern in programming education nowadays since learning to program is difficult [1] and novice programmers are daunted by the complexity of the domain, which requires a correct understanding of abstract concepts as well as the development of practical skills, such as logical thinking, problem-solving, and debugging [2,3]. This knowledge and these skills are acquired through extensive practice.

Unfortunately, the amount of practice time in programming classes is inadequate for students to assimilate such knowledge. As an example, a regular sixteen-week semester introductory programming course often does not include more than twelve programming assignments [4]. This quantity is already too large for teachers to give sufficient individualized feedback, yet too small for novices to master programming skills. Consequently, teachers often rely on automated assessment tools to deliver instant, teacherless, error-free, and effortless feedback to students on attempts to solve exercises [5].

Automated assessment tools can offer both static program analysis, which involves the compilation of the source code, and dynamic program analysis, which runs the compiled source code and checks if it produces the correct results [6]. The value and advantages of such tools in programming learning are supported by several published research studies [7,8]. Nevertheless, it is still crucial to ensure that there are enough sources of motivation for learners to make the decision to practice, apply the necessary amount of effort, and persist after repetitive failures [9,10].

A widespread and encouraging approach to foster students’ motivation is gamification [11,12], which involves the use of game-thinking and game mechanics to engage people [13]. Even though gamification is not a panacea and can be inefficient or even affect students negatively [14], challenges including game elements that motivate intrinsically, such as graphical feedback and game-thinking, are more likely to have long-term positive effects on students [15]. In the specific case of automated assessment of programming assignments, it is possible to tune dynamic analysis of programs to transform the assessment of a program into a game, as the successful games CodeRally [16] and RoboCode [17] illustrate.

CodeRally and RoboCode are games in which players are Software Agents (SAs), developed by practitioners, that compete against each other in a 2D environment visible to the authors. These games aim to teach object-oriented programming concepts. Some teachers introduced them in programming classes with quite a lot of success [18], but only in certain topics, as the complexity and cost of creating or adapting this kind of game undermine their adoption for teaching concepts other than those they were designed for.

This paper presents Asura, an automated assessment environment for game-based coding challenges, which aims to foster programming practice by delivering engaging programming assignments to students. These assignments challenge students to code a program that acts as a game player (i.e., an SA), providing graphical feedback through the game simulation. These challenges can optionally promote competition among all submitted SAs, in the form of tournaments such as those found in traditional games and sports, including knockout and group formats. The outcome of the tournament is presented on an interactive viewer, which allows learners to navigate through each match and the rankings. From the teachers’ perspective, Asura provides a framework and a command-line interface (CLI) tool to support the development of challenges. The validation of this authoring framework has already been conducted and described in a previous empirical study [19]. Hence, this paper focuses on the learners’ perspective of Asura.

This paper is an extended version of the work published in [20]. We extend our previous work by adding a much broader review of the state of the art, following the Asura description with a simple example and some further details, and appending an analysis by type of player to the validation.

The remainder of this paper is organized as follows. Section 2 reviews the state of the art on platforms and tools that make use of challenges similar to those of Asura to engage programming learners. Section 3 provides an in-depth overview of Asura, including details on its architecture, design, and implementation. Section 4 describes its validation regarding the growth in motivation and, consequently, practice time. Finally, Section 5 summarizes the main contributions of this research.

2. State of the Art

Learning and teaching programming is hard [1,21]. When students keep struggling for too long, which is common in programming and particularly frequent during the initial stages, they are likely to give up. This is a major concern among educators in this area and has led to a large body of research, not only to seek new forms of introducing students to programming, but also to boost the interest and motivation of people in coding. Among the most successful approaches is the use of competition and other game-like features in web learning environments to stimulate learners to solve more programming activities [22,23,24].

Asura uses game elements, particularly the assessment of programming exercises following competition formats and game-like graphical feedback of the behavior of the code, to create a more engaging programming-learning environment. Furthermore, it is embedded in Enki [22] through a special activity mechanism, which enables instructors to also deliver gamified educational content along with Asura challenges. To the best of the authors’ knowledge, there are already learning environments that provide some of these features, but none of them includes automatic and competition-based assessment, game-like graphical feedback, and gamified educational content presentation, while supporting educators themselves in creating their own courses with activities leveraging all these features.

This section describes some systems that support open online programming courses either without gamification or using only game elements that foster extrinsic motivation, programming learning platforms that stand out for their competitive side, and platforms and games in which learners’ code takes action in a game world.

2.1. Platforms for Open Online Programming Courses

The panoply of open online programming courses available on the web is huge, as there are a number of platforms supporting them. Some platforms, such as Coursera, Udacity, and edX, offer a wide variety of learning material from top universities’ courses, with the possibility, typically at a monetary cost, of getting a verified certificate. Other platforms have less formal courses with a broad range of activities to provide hands-on experience on a specific language or framework (e.g., Codecademy, Khan Academy, Free Code Camp).

The former platforms are designed for people who already have some previous background in the subjects. They aim to improve the knowledge of people in areas that they are already interested in (intrinsic motivation). These platforms are only a means for people to have access to materials that were otherwise difficult to get. So, there is a small or no gamification factor in them. Moreover, creating courses for platforms like Coursera is an arduous task, requiring the agreement and involvement of third parties (e.g., universities).

In less formal open online courses, providers typically use gamification to attract learners, adopting elements such as progress indicators, badges, levels, leaderboards, and experience points. For example, Khan Academy uses badges and progress tracking to engage students to enroll in and complete courses. Even though it also allows educators to easily create courses for their learners, it is limited to JavaScript-based programming activities (HTML and CSS are also supported). Codecademy also uses progress indicators and badges. It supports programming languages such as Python, JavaScript, Java, SQL, Bash/Shell, and Ruby. However, creating courses on Codecademy is not yet possible.

In the context of educational institutions, the most widely used approach to create gamified open online courses is to rely on a Learning Management System (LMS) with game mechanics. LMSs were created to deliver course contents and collect students’ assignments. However, many of them evolved to provide more engaging environments resorting to gamification. Some of the most notable examples are Academy LMS, Moodle, and Matrix, which include badges, achievements, points, and leaderboards. Moodle also has several plugins that offer a variety of other gamification elements.

Another tool that serves this purpose is Peer 2 Peer University (P2PU) [25]. P2PU is a social computing platform that fosters peer-created and peer-led online learning environments. It allows students to join, complete, and quit challenges, and to enroll in courses. By completing learning tasks, courses, or challenges, they can earn badges, which are based on the Mozilla Open Badges framework (www.openbadges.org).

However, LMSs and P2PU do not have out-of-the-box support for automatic assessment of programming exercises, which is a crucial feature for any programming learning environment. Thus, each of the three types of open online course providers described above has strengths but lacks at least one of the following: the ability to easily create courses, a strategy to engage learners, the possibility to present different forms of learning materials, or the support for a multitude of programming languages. The same happens with other tools described in the literature [26,27].

From the research conducted, Enki [22] is the only tool that blends gamification, assessment, and learning, presenting content in various forms as well as delivering programming assignments with automatic feedback, while allowing any stakeholder to create courses freely.

2.3. Game-Based Programming Learning

Games are usually effective motivators. However, gamified environments are generally very different from games, and fail to use elements that are more prone to motivate users intrinsically such as graphical feedback and in-game challenges. Notwithstanding, there is a subset of gamification where learning is an outcome of playing a full-fledged game (formally known as serious games). For instance, in the context of programming learning, it is common to have games where code replaces the typical input controls. This subsection reviews both platforms where the learning activities are game-based programming challenges with graphical feedback, focusing on their support for authoring new challenges, and games that teach programming concepts.

3. Asura

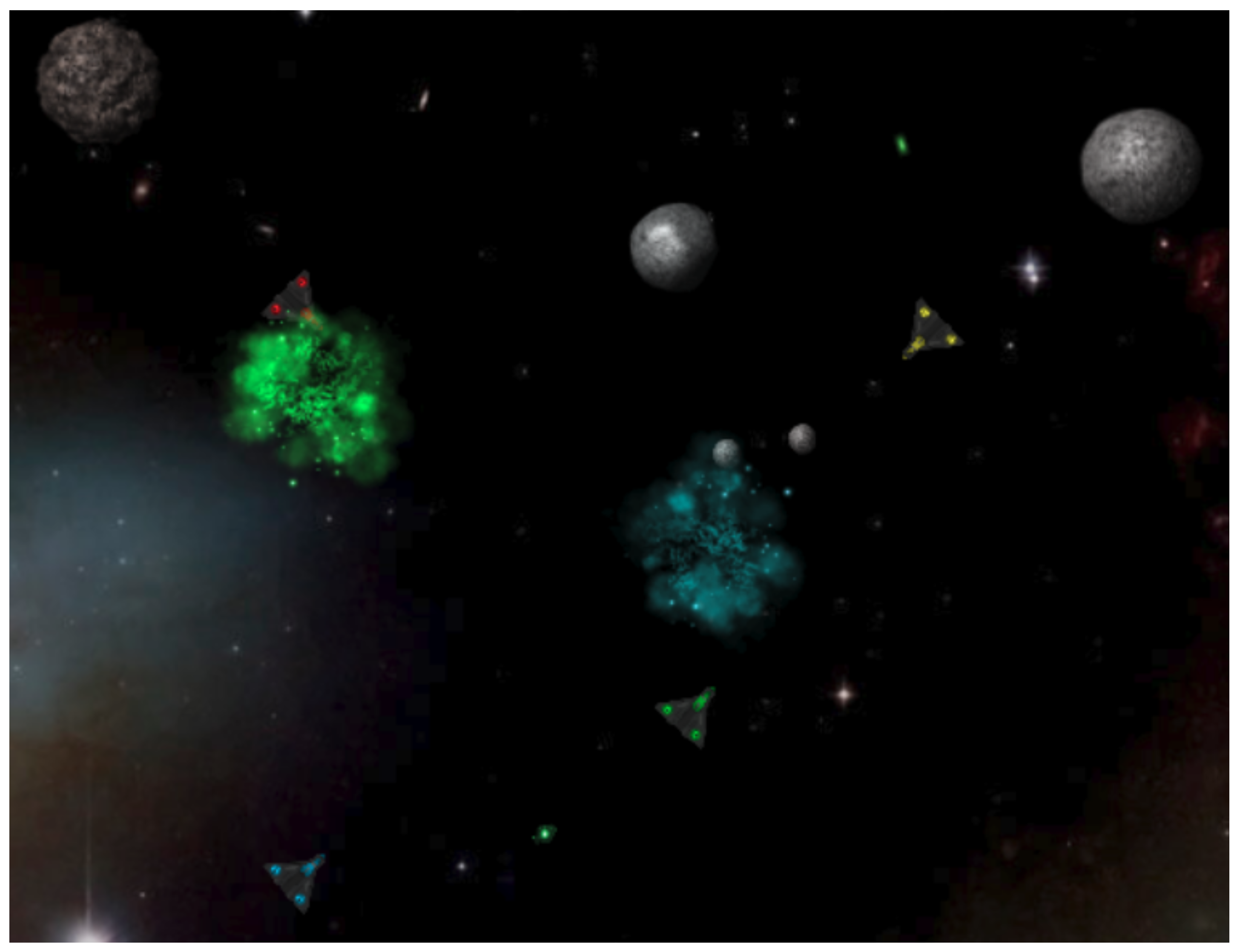

Asura is an automated assessment environment for game-based programming challenges. It focuses on maximizing the time that students commit to programming activities by involving them with game elements that motivate intrinsically while minimizing the effort of teachers to a degree comparable to that of producing traditional programming problems. Therefore, it includes a framework and a CLI tool to support the authors through the process of developing challenges comprising unique features of games, such as graphical feedback, game-thinking, and competition.

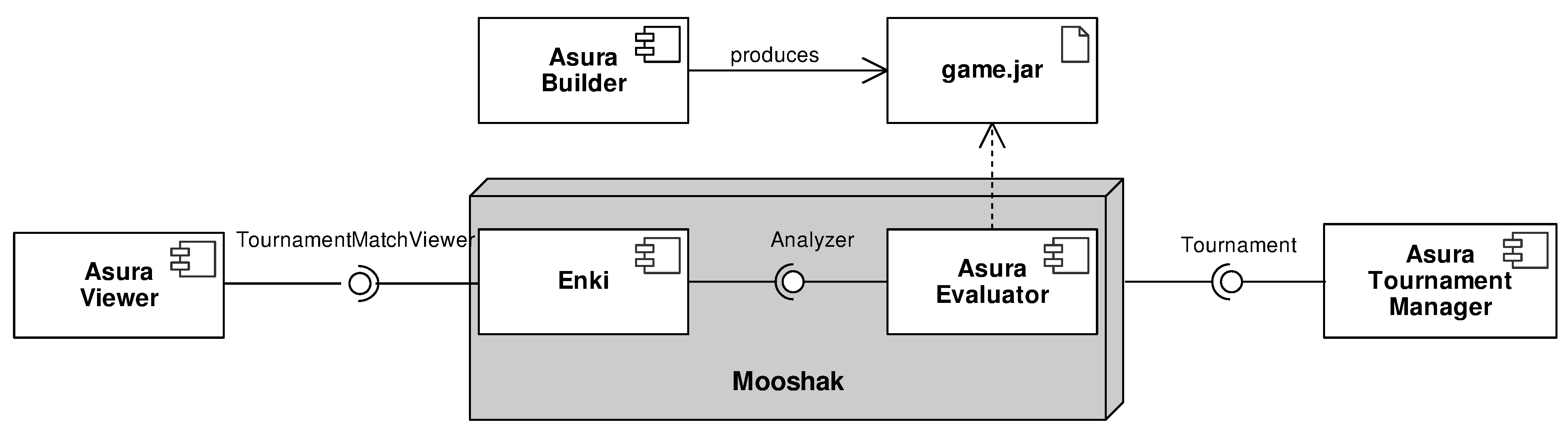

This environment follows a multi-component architecture, presented in Figure 1, composed of three individual components, named Asura Viewer, Asura Builder, and Asura Tournament Manager, and a component designed to act as a plugin for an evaluation engine, the Asura Evaluator. Each of these components provides an interface to be consumed by other systems, except Asura Builder, which produces a Java ARchive (JAR) of the challenge to be imported on the target system. This architecture aims to guarantee that every component is easily replaceable and extendable while allowing them to be integrated independently in different e-learning ecosystems.

An implementation of an ecosystem for Asura also requires the choice of tools to play the roles of both the evaluation engine, into which Asura Evaluator integrates, and the learning environment that presents students with learning materials. For the implementation described in this paper, the tool selected for the first role could be almost any programming assessment module of one of the existing open-source automatic judge systems used in programming contests. Nevertheless, the selected system, Mooshak 2 [38], is capable of playing both roles.

Mooshak is a web-based automatic judge system that supports assessment in computer science. It can evaluate programs written in several programming languages, such as Java, C, C++, C#, and Prolog. Besides that, Mooshak provides means to answer clarification requests about a problem, reevaluate programs, and track printouts, among others. Although originally designed for managing programming contests, it eventually started being used in computer science classrooms to assist teachers in delivering timely and accurate feedback on programming assignments. As of version 2, Mooshak includes a pedagogical environment, named Enki [22]. Enki blends assessment (i.e., exercises) and learning (i.e., multimedia and textual resources) in a user interface designed to mimic the distinctive appearance of an IDE. It integrates with external services to provide gamification features and to sequence educational resources at different rhythms according to students’ capabilities.

Figure 1 depicts the implemented architecture of Asura. Asura Evaluator is a small server-side package whose main class is AsuraAnalyzer, a specialization of the main analyzer of Mooshak 2 (ProgramAnalyzer) for conducting the dynamic analysis using the JAR provided by Asura Builder. Both AsuraAnalyzer and ProgramAnalyzer, which grades submissions to International Collegiate Programming Contest (ICPC)-like problems, implement a common interface, Analyzer, that allows evaluator consumers (i.e., Enki in this case) to integrate transparently with any of them. When running a tournament, Mooshak consumes the Asura Tournament Manager, a Java library providing an interface Tournament to organize tournaments. This interface receives a set of players and the structure of the tournament to carry out, and returns the matches to run on the Asura Evaluator, one after another. Either in games or in tournaments, the output of Asura Evaluator is received by Enki and displayed on Asura Viewer, an external Google Web Toolkit (GWT) widget that can integrate in any GWT environment. For that, it has an interface Viewer that enables consumers to feed it with a JSON object complying with either the match or the tournament JSON schema.

3.2. Asura Builder

Asura Builder is a standalone component composed of multiple tools to support the authors through the whole process of creating game-based coding challenges. To this end, it includes both a CLI tool, to easily generate Asura challenges and install specific features (e.g., support for a particular programming language or a standard turn-based game manager), and a Java framework, that provides a game movie builder, a general game manager, several utilities to exchange complex state objects between the manager and the SAs as JSON or XML, and general wrappers for SAs in several programming languages.

The process of creating an Asura challenge starts with the scaffolding of a skeleton using the CLI tool. For instance, in the Tic Tac Toe example, the author would generate an Asura challenge from the Maven skeleton (there are also similar raw and Gradle skeletons) by running the command in Listing 1 and answering the questions it poses pertaining to the challenge metadata. The skeleton contains a minimal working challenge, including an empty game state, a simple turn-based game manager, and a simple skeleton and a wrapper for Java-based SAs. Since the introduced challenge is targeted to Java, there is no need to manage programming languages. However, it is important to notice that, even though the authors are required to program the challenges in Java, players can use their preferred programming language to code the SAs. For that, authors can also manage support for programming languages through the CLI using the commands in Listing 2, which will add/remove skeletons and wrappers for the specified language.

| Listing 1: Generate an Asura challenge from the Maven skeleton through the CLI |

| asura-cli create maven |

| Listing 2: Manage support for programming languages through the CLI |

| asura-cli add-language [language] |

| asura-cli remove-language [language] |

After the scaffolding, the actual development phase takes place. The core of an Asura challenge is the game manager, which among many tasks ensures that the game rules are followed, decides which player takes the next turn, and declares the winner(s). The framework provides an abstract game manager containing all the generic code, such as the initial crossing of I/O streams, handlers for timeout and badly formatted input, and automatic (de)serialization of JSON or XML to Java objects. The game manager developed by the author extends this generic one, implementing the specificities of the game. For instance, the game manager of the Tic Tac Toe has to decide which player takes the Xs and which takes the Os (which can be done in a random fashion) and, then, query the SA playing with Xs and the one with the Os (i.e., send the last position filled and read the position to play) alternately, updating the game state with their move, until the winning condition is met (i.e., three equal symbols in a row, column, or diagonal) or all slots of the grid are occupied.

Asura Builder clearly separates the game state from the game manager, defining a “contract” for the object that holds the game state which should be used by the game manager to maintain the state. Hence, the state of a specific game must, at least, implement four mutational methods—prepare(), execute(), endRound(), and finalize()—and two informational methods—isRunning() and getStateUpdateFor() (methods’ parameters omitted for the sake of simplicity). Mutations of the state should generally produce updates in the game movie, a concept introduced by the framework to facilitate the creation of graphics. A game movie consists of a set of frames, each of them containing a set of sprites together with information about their location and applied transformations, and metadata information. The movie should be produced with the support of a provided builder, which includes methods to construct each frame with ease as well as to deal with SA evaluation and premature game termination.

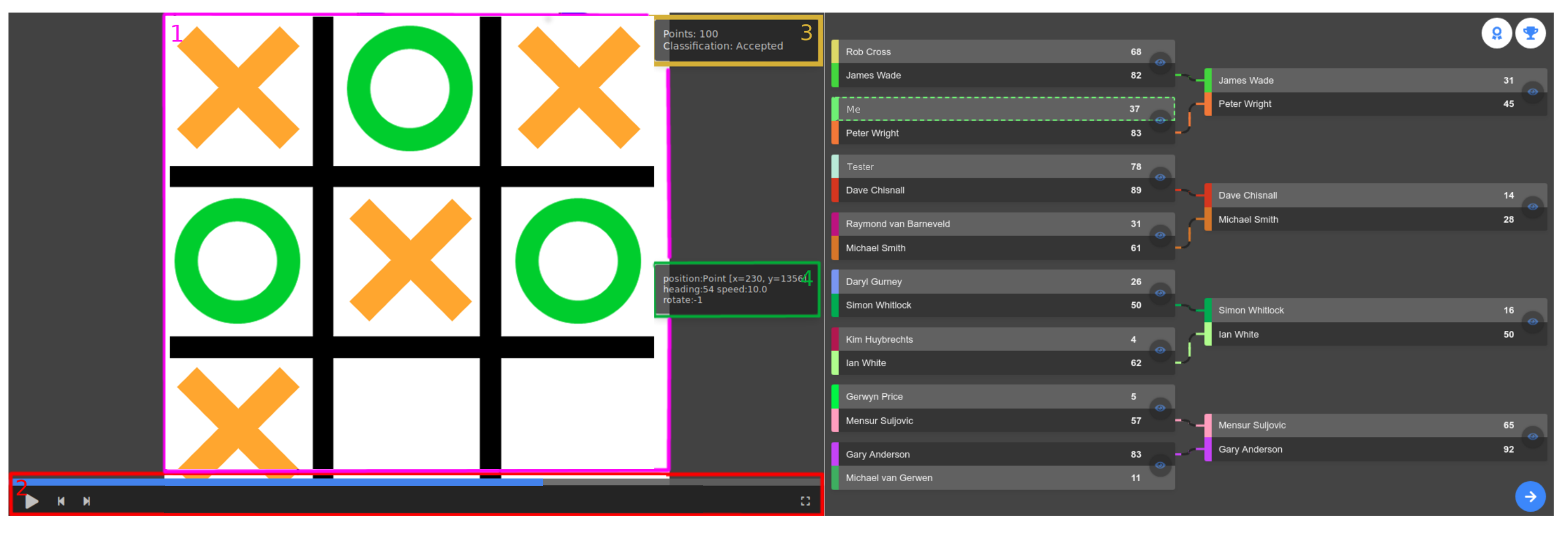

As for the Tic Tac Toe, the prepare() method sets the metadata on the game movie builder, particularly the title, the number of frames per second, the default size, the position of the sprite relative to which the objects’ coordinates are given, the 3 × 3 board background, the X and O sprites, and the players. The execute() method handles the possible actions from the player. In this case, it supports an action PLAY that contains the position in which the player wants to play and an artificial action SYMBOL sent by the game manager to indicate who is X and who is O. The getStateUpdateFor() method returns the update to send to a given player which, in this case, consists of the position where the last symbol has been placed. The endRound() method generates the frame of the current round based on the previous frame, but adding the new symbol. The isRunning() method checks whether the maximum number of rounds has been exceeded or there is a winning combination, in which case the game is no longer running. The finalize() method checks who is the winner and sets the statuses of the players accordingly.
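The state contract described above can be illustrated with a minimal sketch for Tic Tac Toe. The paper omits the method parameters, so the interface name `GameStateSketch`, every signature, and the helper `getWinner()` are assumptions made for illustration only, not the framework's actual API. The sketch models only the PLAY action, leaves out the game movie builder, and renames `finalize()` to `finalizeGame()` to avoid clashing with `Object.finalize()`.

```java
import java.util.Arrays;

// Hypothetical contract: the six method names come from the text,
// all parameters and return types are assumptions.
interface GameStateSketch {
    void prepare(String[] playerIds);
    void execute(String playerId, int row, int col);
    void endRound();
    void finalizeGame(); // stands in for finalize() in the text
    boolean isRunning();
    int[] getStateUpdateFor(String playerId);
}

class TicTacToeStateSketch implements GameStateSketch {
    private final char[][] board = new char[3][3];
    private String[] players; // players[0] plays X, players[1] plays O
    private int[] lastMove;   // null until the first move
    private int rounds;
    private String winner;    // null while undecided or on a draw

    @Override
    public void prepare(String[] playerIds) {
        players = playerIds.clone();
        for (char[] row : board) Arrays.fill(row, ' ');
        lastMove = null;
        rounds = 0;
        winner = null;
    }

    @Override
    public void execute(String playerId, int row, int col) {
        // Reject moves outside the grid or on an occupied cell.
        if (row < 0 || row > 2 || col < 0 || col > 2 || board[row][col] != ' ')
            throw new IllegalArgumentException("invalid move by " + playerId);
        board[row][col] = playerId.equals(players[0]) ? 'X' : 'O';
        lastMove = new int[] {row, col};
    }

    @Override
    public void endRound() {
        rounds++; // a real state would also emit a movie frame here
    }

    @Override
    public boolean isRunning() {
        return rounds < 9 && winningSymbol() == ' ';
    }

    @Override
    public void finalizeGame() {
        char s = winningSymbol();
        winner = s == 'X' ? players[0] : s == 'O' ? players[1] : null;
    }

    @Override
    public int[] getStateUpdateFor(String playerId) {
        // Every player receives the position of the last symbol placed.
        return lastMove == null ? null : lastMove.clone();
    }

    String getWinner() { return winner; }

    private char winningSymbol() {
        for (int i = 0; i < 3; i++) {
            if (board[i][0] != ' ' && board[i][0] == board[i][1] && board[i][1] == board[i][2])
                return board[i][0];
            if (board[0][i] != ' ' && board[0][i] == board[1][i] && board[1][i] == board[2][i])
                return board[0][i];
        }
        boolean diag = board[0][0] == board[1][1] && board[1][1] == board[2][2];
        boolean anti = board[0][2] == board[1][1] && board[1][1] == board[2][0];
        if (board[1][1] != ' ' && (diag || anti)) return board[1][1];
        return ' ';
    }

    public static void main(String[] args) {
        TicTacToeStateSketch state = new TicTacToeStateSketch();
        state.prepare(new String[] {"alice", "bob"});
        int[][] moves = {{0, 0}, {1, 0}, {0, 1}, {1, 1}, {0, 2}};
        for (int i = 0; i < moves.length && state.isRunning(); i++) {
            state.execute(i % 2 == 0 ? "alice" : "bob", moves[i][0], moves[i][1]);
            state.endRound();
        }
        state.finalizeGame();
        System.out.println("winner: " + state.getWinner());
    }
}
```

A game manager would drive such an object by calling `execute()` and `endRound()` once per turn while `isRunning()` holds, then `finalizeGame()` to settle the players' statuses.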

Moreover, the framework also allows us to define wrappers for the SAs. A wrapper consists of a set of methods that aim to give players a higher level of abstraction so that they can focus on solving the real challenge instead of spending time processing I/O or doing other unrelated tasks. They can also be used to increase or decrease the difficulty of the problem by changing the way that the SA interacts with the game, without modifying the game itself. For example, a wrapper for the Tic Tac Toe could define two methods to support the SA, getLastPlayed(): int[] which provides the x and y position of the last move and play(x: int, y: int) that sends the action to the game manager to play in the given position. Since there are actions common to players of any game (e.g., communication with the manager), those were included in global wrappers. Hence, authors of challenges will only develop game-specific wrappers if they intend to provide a different abstraction layer to the learners.
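As an illustration of the wrapper idea, the following is a minimal sketch of a Tic Tac Toe wrapper exposing the two methods named above. The class name `TicTacToeWrapperSketch` and the line-based wire format (a state update as `x y`, an action as `PLAY x y`) are assumptions; the real framework exchanges JSON or XML through its global wrappers, whose API is not shown in the text.

```java
import java.io.BufferedReader;
import java.io.ByteArrayOutputStream;
import java.io.EOFException;
import java.io.IOException;
import java.io.PrintStream;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;

// Hypothetical wrapper: hides I/O with the game manager behind two calls.
class TicTacToeWrapperSketch {
    private final BufferedReader in;
    private final PrintStream out;

    TicTacToeWrapperSketch(Reader in, PrintStream out) {
        this.in = new BufferedReader(in);
        this.out = out;
    }

    /** Reads the next state update and returns the last move as {x, y}. */
    int[] getLastPlayed() {
        try {
            String line = in.readLine();
            if (line == null)
                throw new EOFException("game manager closed the stream");
            String[] parts = line.trim().split("\\s+");
            return new int[] {Integer.parseInt(parts[0]), Integer.parseInt(parts[1])};
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Sends a PLAY action for the given position (assumed wire format). */
    void play(int x, int y) {
        out.println("PLAY " + x + " " + y);
        out.flush();
    }

    public static void main(String[] args) {
        // Simulated manager input so the demo does not block on stdin.
        ByteArrayOutputStream sent = new ByteArrayOutputStream();
        TicTacToeWrapperSketch w = new TicTacToeWrapperSketch(
                new StringReader("1 1\n"), new PrintStream(sent, true));
        int[] last = w.getLastPlayed();
        // Naive strategy for the demo: answer in the cell to the right, wrapping around.
        w.play((last[0] + 1) % 3, last[1]);
        System.out.print(sent);
    }
}
```

In a real SA, the wrapper would be constructed over the standard input and output of the process, so that the learner's code only calls `getLastPlayed()` and `play()`.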

3.3. Asura Evaluator

The evaluation engine of Mooshak grades a submission according to a set of rules, following a black-box approach. At the same time, it produces a report of the evaluation for additional validation from a human judge (e.g., teacher), if required. The assessment process has two phases: static analysis, which tests the consistency of the SA source code and generates an executable program; and dynamic analysis, which includes running the program with each test case loaded with the problem and comparing its output to the expected output.

Asura Evaluator uses the static analysis from Mooshak as-is, merely appending the global and game-specific SA wrappers encapsulated in the game JAR file to the compile command-line. However, dynamic analysis is re-implemented from scratch, replacing test cases based on input and output text files with game simulations against opponents from a provided list of paths to submissions. The kinds of evaluation, either validation or submission, determine the origin of the contestants, in the case of multiplayer games. Validations are only a means for the learner to experiment with his/her SA in a match against any existing SAs as they do not count for evaluation purposes. The learner can select the opponents from a list containing all the last accepted SAs of the students as well as the control SAs included by the author. On the contrary, submissions count for evaluation and, thus, are evaluated under the same circumstances against the control SAs developed by the challenge author.

The dynamic analysis starts by combining the current submission and a distinct set of the selected opponents’ submissions into matches, according to the minimum and the maximum number of players per match indicated on the game manager. As the opponents’ SAs are always submissions previously compiled and accepted, the component only needs to start processes from the executable programs. Then, for each match, it initializes an instance of the specific game manager from the JAR, providing it with the list of player processes in the match indexed by the player ID. The game manager crosses the input and output streams of each of these processes with itself, enabling it to write state updates to the input stream of the SA and read actions from its output stream (i.e., from the SA perspective, they are typical I/O operations). Therefore, the game simulation is handed over to the game manager, which should maintain the SAs aware of the game state, query them for actions at the proper time, ensure that the game rules are not broken, manage the state of the game, and classify and grade submissions. Once all matches are completed, the statuses collected from the matches, comprising observations, marks, classifications, and feedback, are combined and added to the submission report.

In the Tic Tac Toe challenge, a submission or validation schedules a set of two-player matches between the submitted SA and either each of the control SAs or the selected opponents’ SAs. Firstly, Asura Evaluator will compile the submitted SA’s code. Then, for each game, Asura Evaluator initiates a process from the submitted SA’s compiled code and another from the previously compiled opponent’s code. Finally, it initializes the game manager, passing both processes indexed by the players’ IDs, and hands the evaluation over to it. If, during any match, the submitted SA plays in a previously occupied position or the process fails for any other reason, the evaluation exits with the corresponding negative classification. Otherwise, the program is accepted and the mark is calculated.

3.4. Asura Tournament Manager

Asura Tournament Manager consists of a Java library to organize tournaments among SAs submitted and accepted on an Asura challenge. Tournaments aim to give a final goal to students by encouraging them to engage in an optional competition run at the end of the submission period. Hence, the challenge is not just about correctly solving the problem, but finding a strategy to win a final competition. To this end, the preparation phase allows students to play “friendly” matches (i.e., validations) against each other’s previously approved SAs, providing a glimpse of the performance expected in the tourney.

To arrange tournaments, instructors may use a wizard embedded in Mooshak’s administration user interface. These tournaments follow the same structure as those conducted in traditional games and sports competitions. They can have multiple stages, each of them organized according to one of the available formats: round-robin, Swiss system, single-elimination, or double-elimination. Stages have a series of rounds in which each contestant either participates in a single match or advances immediately to the next round in the absence of assigned opponents (i.e., has a bye). Lastly, matches are the smallest grain of a tournament: they are generated one after another and executed on the Asura Evaluator, which returns the result to the Asura Tournament Manager. The result of a match includes the points achieved by each player as well as additional features that can be used as tiebreakers, either for the match or rankings.

The interface Tournament offers a sequential way to interact with the library to organize a tournament. Firstly, the consumer provides the mandatory metadata, particularly the title, game, and participants. Then, it adds and configures the stages, starting them one after another. The configuration options available for a stage depend upon its format and may comprise the number of players per match, the minimum number of players per group, the number of qualified players, the maximum number of rounds, the kind of result (e.g., win–draw–loss or position-based), and tiebreakers both for rankings and matches. Once the stage has started, obtaining the next match activates the wait mode until its result is sent and processed. In the end, the output of the Asura Tournament Manager is a JSON object complying with the tournament JSON schema.

Although the Tic Tac Toe example has the goal of teaching the usage of arrays, the instructor could promote additional programming practice by scheduling a tournament for the end of the submission period, so that students would keep improving their SAs even after getting them accepted. Due to the nature of Tic Tac Toe (i.e., two excellent players will always draw), a single group-based format (e.g., round-robin) is adequate whereas elimination formats may fail to classify SAs.
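To make the round-robin format concrete, the sketch below generates a two-player round-robin schedule with the standard circle method, giving a bye to one contestant per round when the number of players is odd. It is not the Asura Tournament Manager's code: the class `RoundRobinSketch` and its `schedule` method are hypothetical names, and the library's actual Tournament interface is not reproduced here.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper illustrating a round-robin stage with byes.
class RoundRobinSketch {

    /**
     * Returns one list of matches per round; each match is a pair of player IDs.
     * A player without an opponent in a round (a bye) is simply left out of it.
     */
    static List<List<String[]>> schedule(List<String> players) {
        List<String> slots = new ArrayList<>(players);
        if (slots.size() % 2 == 1) slots.add(null); // null marks the bye slot
        int n = slots.size();
        List<List<String[]>> rounds = new ArrayList<>();
        for (int r = 0; r < n - 1; r++) {
            List<String[]> round = new ArrayList<>();
            for (int i = 0; i < n / 2; i++) {
                String a = slots.get(i);
                String b = slots.get(n - 1 - i);
                if (a != null && b != null) round.add(new String[] {a, b});
            }
            rounds.add(round);
            // Circle method: keep the first slot fixed, rotate the others.
            slots.add(1, slots.remove(n - 1));
        }
        return rounds;
    }

    public static void main(String[] args) {
        List<List<String[]>> rounds = schedule(Arrays.asList("sa1", "sa2", "sa3", "sa4", "sa5"));
        for (int r = 0; r < rounds.size(); r++) {
            StringBuilder line = new StringBuilder("round " + (r + 1) + ":");
            for (String[] m : rounds.get(r)) line.append(' ').append(m[0]).append("-").append(m[1]);
            System.out.println(line);
        }
    }
}
```

Because every SA meets every other SA exactly once, a win-draw-loss ranking can still separate players in a game such as Tic Tac Toe, where many matches end in a draw.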

4. Validation

The experiment conducted to assess the effectiveness of Asura in enhancing students’ motivation and increasing practice time, as well as its acceptance by learners, took the form of an open online learning course with a duration of two weeks, free of charge, and without participation limits. The course aims to teach the new concepts introduced in version ES6 of JavaScript, and it has been announced to undergraduate students who participated in the Web Technologies classes of the previous semester, which provided them with some background on JavaScript without ES6 features.

From the invited students, 10 (1 female) registered in the course and were randomly assigned to one of two equal-sized groups: control (1 female) or treatment. Both groups received the same learning materials consisting of lecture notes about the concepts of ES6, including topics such as the variable declaration, object and array destructuring, arrow functions, promises, and classes, and a consolidated ES6 cheat sheet. However, they differed in the programming assignments delivered for training: Asura challenges for the treatment group and ICPC-like problems for the control group. After finishing the course curriculum, both groups were invited to take an exam composed of ICPC-like exercises to measure the skills attained.

The next subsections describe the Asura challenges created for the course, the analysis conducted, its results, and limitations. Section 4.1 details the Asura challenges created to teach ES6. Section 4.2 describes the analysis and results. Section 4.3 summarizes the limitations of this study.

4.2. Results and Analysis

The data obtained from the experiment include logs of system usage automatically registered by Mooshak 2 and questionnaire responses. Usage data comprise several metrics, in particular, the number of submissions and validations, the timestamp of the activity, and the results of submitted attempts. The questionnaire follows Lund’s model [52], providing data on Usefulness, Ease of Use, Ease of Learning, and Satisfaction gathered from questions with a 7-value Likert scale as well as identifying Asura weaknesses and strengths through free-text questions. As the questionnaire was delivered along with the exam, only students who finished the course and took the exam could answer it; two students (one from each group) did not.

The outcome from the questionnaire is presented in Figure 4 separated by group: control on the left and treatment on the right. These results show improvements in all measured metrics (e.g., usefulness in the control group had an average of

against

the treatment group while satisfaction valued

versus

). The quality of the feedback is perhaps the principal reason for these discrepancies, as some students in the control group indicated feedback as a weakness (e.g., “Observations and program input/output could be improved” and “Feedback messages are scarce”), in contrast to the treatment group, which appreciated the graphical feedback in the free-text questions. Yet, there were complaints about the complexity of understanding the game mechanics in parallel with ES6 (e.g., “The idea is good, but when it is used to learn JavaScript since the start, it can be confusing because you have to learn JavaScript, learn the game, and think about the two”), as well as unanimous criticism of the user interface of Enki (e.g., “misses terminal and debugger” or “looks bad on my MacBook Air 13”).

The actual increase in practice time and its corresponding impact on knowledge acquisition and retention are measured from six variables extracted from usage data: number of validations, number of submissions, return days (i.e., count of different days in which a student submitted an attempt), the ratio of solved exercises (i.e., a value of 1 means all activities of the course solved while 0 means no solved activities), exam score (i.e., an integer between 0 and 3, one point per exercise solved), and group.

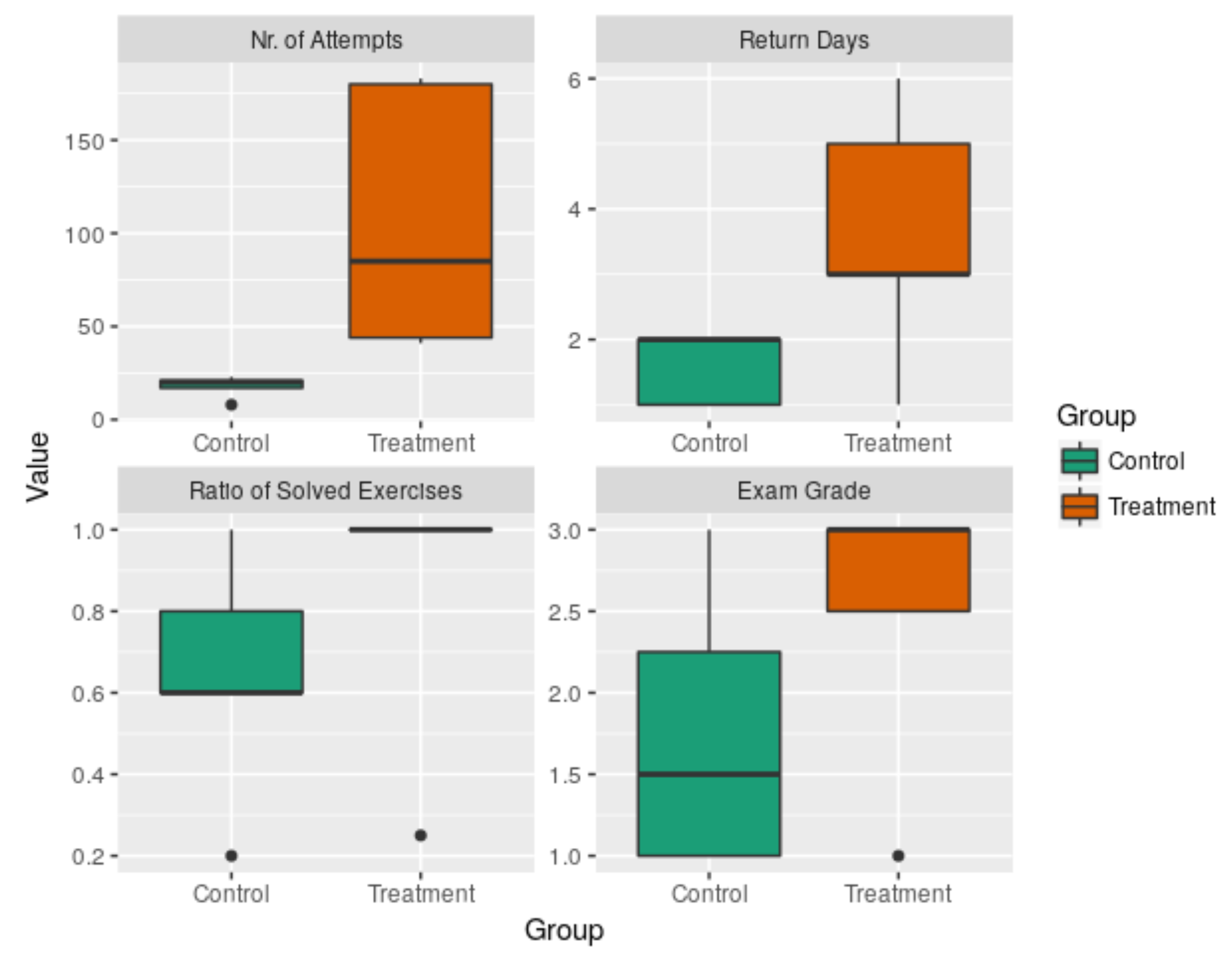

Figure 5 presents boxplots comparing the number of attempts (derived from the sum of submissions and validations), return days, the ratio of solved exercises, and exam scores of both groups.

The number of submissions reveals a considerable discrepancy between the treatment group (370, of which 65 were accepted) and the control group (44, of which 16 succeeded), further increased by the differences in the ratio of exercises solved and return days. This discrepancy indicates that students in the treatment group struggled considerably to overcome their difficulties. Moreover, there were more than 40 submissions beyond what was necessary (i.e., made after having an accepted solution), all of them in the chapter with competition. The validations also reflect these trends, even though students only carried out 15 friendly matches against the opponents. The exam shows that 75% of the students in the treatment group performed well (grade between 2 and 3) against 50% in the control group.

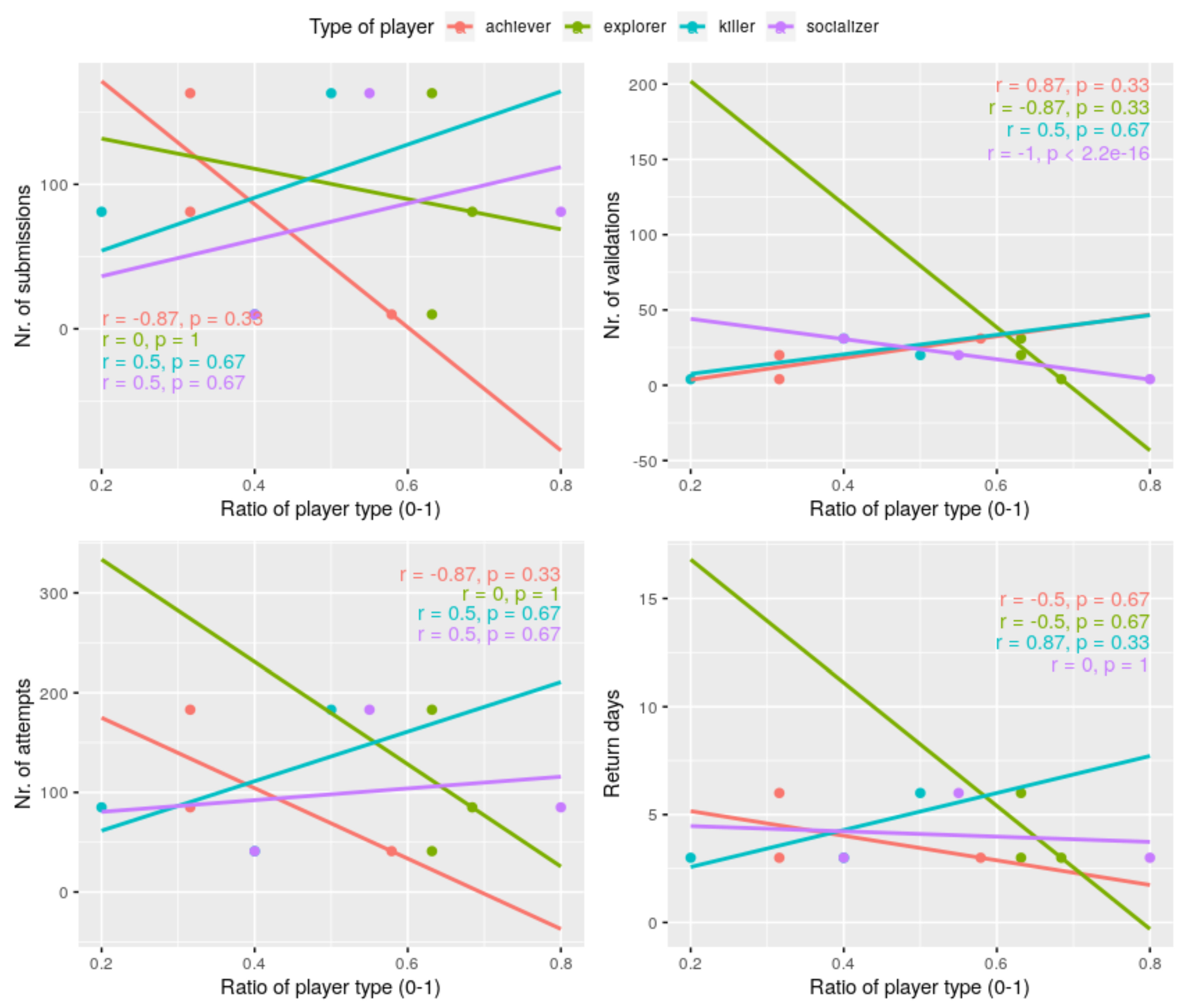

Students in the treatment group were also invited to fill in a survey to evaluate their gamer personality. This survey follows the Bartle Test [53] to classify players of multiplayer online games into categories based on Bartle’s taxonomy of player types (i.e., achiever, explorer, killer, and socializer) [54]. It estimates the percentage to which the player’s behavior relates to the default behavior of each type of player. The main objective of this analysis was to detect possible correlations between the personality of the learner as a gamer and his/her behavior during the experiment. To this end, four variables were selected: number of submissions, number of validations, number of attempts, and return days. The resulting plots of Spearman’s correlation for the distinct player types are presented in Figure 6, annotated with Spearman’s correlation coefficient, r, and p-value. For instance, it is possible to identify a positive monotonic relationship between the killer’s ratio and each of the measured values as well as a negative monotonic relationship between the explorer’s ratio and the number of validations and return days. Unfortunately, only three learners completed this test, which means the results are not statistically significant.
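For readers unfamiliar with the statistic, the sketch below computes Spearman's rank correlation coefficient as the Pearson correlation of the ranks, assigning average ranks to ties. The class `SpearmanSketch` is a hypothetical helper and the numbers in `main` are made up for illustration; they are not the data collected in the experiment.

```java
import java.util.Arrays;

// Hypothetical helper: Spearman's r as Pearson's correlation over ranks.
class SpearmanSketch {

    /** Returns 1-based ranks of the values; tied values share their average rank. */
    static double[] ranks(double[] v) {
        int n = v.length;
        Integer[] idx = new Integer[n];
        for (int i = 0; i < n; i++) idx[i] = i;
        Arrays.sort(idx, (a, b) -> Double.compare(v[a], v[b]));
        double[] r = new double[n];
        int i = 0;
        while (i < n) {
            int j = i;
            while (j + 1 < n && v[idx[j + 1]] == v[idx[i]]) j++;
            double avg = (i + j) / 2.0 + 1.0; // average of positions i..j, 1-based
            for (int k = i; k <= j; k++) r[idx[k]] = avg;
            i = j + 1;
        }
        return r;
    }

    /** Spearman's coefficient; NaN if either variable is constant. */
    static double correlation(double[] x, double[] y) {
        if (x.length != y.length || x.length < 2)
            throw new IllegalArgumentException("need two samples of equal length >= 2");
        double[] rx = ranks(x);
        double[] ry = ranks(y);
        double mx = Arrays.stream(rx).average().orElse(0);
        double my = Arrays.stream(ry).average().orElse(0);
        double sxy = 0, sxx = 0, syy = 0;
        for (int i = 0; i < rx.length; i++) {
            double dx = rx[i] - mx;
            double dy = ry[i] - my;
            sxy += dx * dy;
            sxx += dx * dx;
            syy += dy * dy;
        }
        return sxy / Math.sqrt(sxx * syy);
    }

    public static void main(String[] args) {
        // Made-up example: killer ratio (%) vs. number of submissions for three learners.
        double[] killerRatio = {20, 47, 60};
        double[] submissions = {35, 80, 140};
        System.out.println("r = " + correlation(killerRatio, submissions));
    }
}
```

With only three observations, even a perfectly monotonic relationship cannot reach conventional significance: under an exact permutation test, 2 of the 3! = 6 possible orderings give |r| = 1, so the two-sided p-value cannot fall below 1/3.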