A Novel Cargo Ship Detection and Directional Discrimination Method for Remote Sensing Image Based on Lightweight Network

Abstract

1. Introduction

2. Methodology

2.1. Faster-RCNN Network

2.2. Proposed Model

2.2.1. Model Overview

2.2.2. Feature Extraction Network

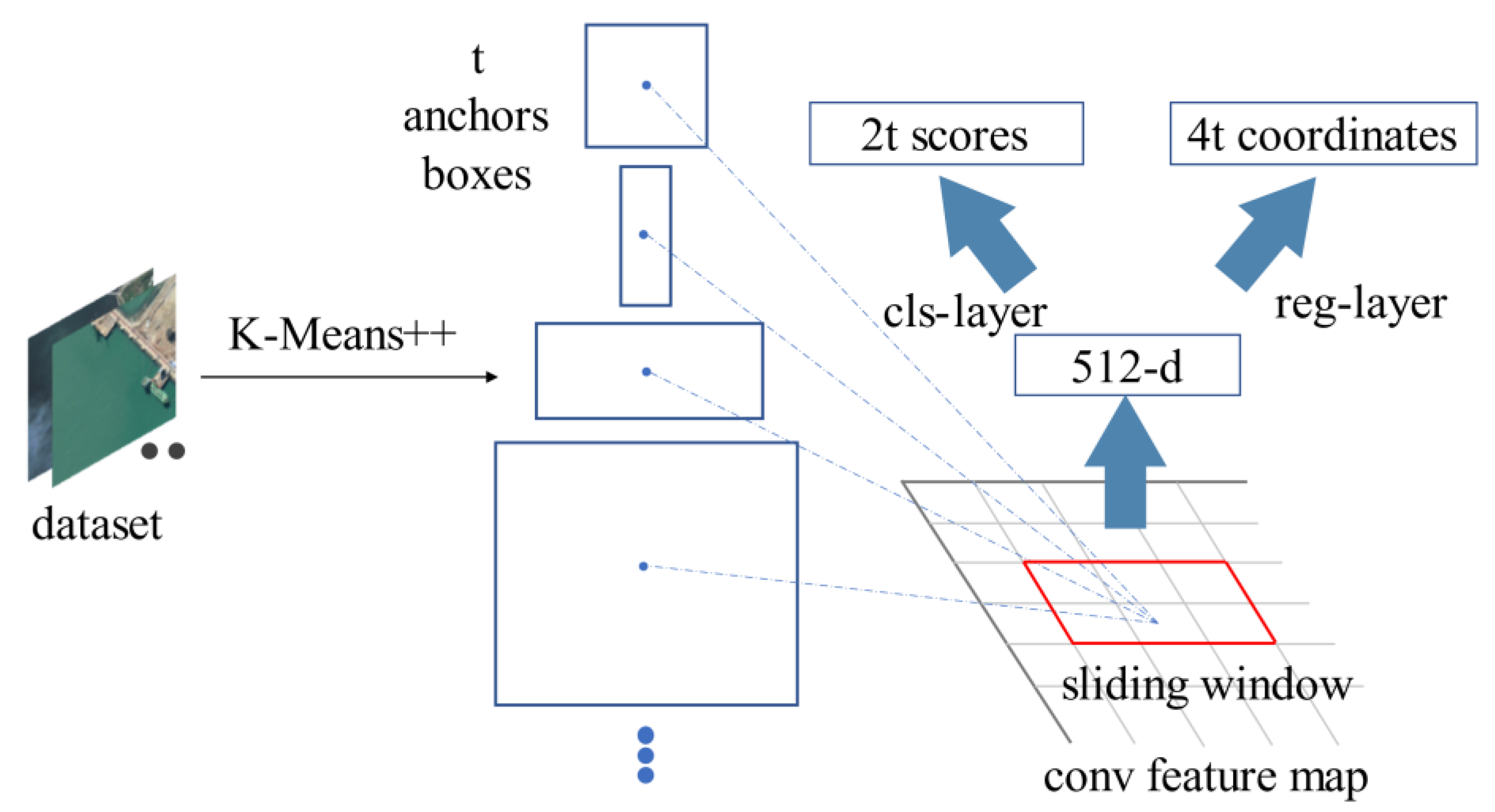

2.2.3. K-RPN

2.2.4. Directional Discrimination

3. Experiments

3.1. Experimental Dataset

- (1) Data collection. To our knowledge, there is no publicly available remote sensing dataset of cargo ships that provides both category and direction information, so the corresponding remote sensing images had to be collected for detecting cargo ships and discriminating their directions. The images used in this experiment are taken from Google Earth at zoom levels 16, 17, and 18 and have three bands: red, green, and blue. The data cover different backgrounds and various positional relationships between ships, which meets the needs of practical tasks. Because of limited computer memory, the collected images are cropped into 800 × 800 pixel tiles, and only tiles containing at least one cargo ship of the three types (bulk carrier, container ship, or tanker) are retained. Examples are shown in Figure 7.

- (2) Data annotation. To build a cargo ship dataset for training and testing the models, the collected remote sensing images must be annotated. In this paper, the cargo ships are annotated with the labeling tool LabelImg [32]. The labeled categories are bulk carrier, container ship, and tanker. In addition, each cargo ship is assigned one of four directions according to its heading (east, south, west, or north), and each direction covers an angular range of 90 degrees. Labeling an image produces an annotation file that records the location, category, and direction of every cargo ship in it. The annotated dataset is then converted to the Pascal VOC2007 format [33] to provide a standard dataset for model training.

- (3) Data augmentation and splitting. In general, a larger and more diverse training set improves a model's recognition ability. Therefore, the collected remote sensing images are rotated clockwise by 90, 180, and 270 degrees and flipped horizontally and vertically to expand the data. Together with the original images, this enlarges the dataset sixfold, and the direction labels are adjusted accordingly (for example, a ship heading east heads south after a 90-degree clockwise rotation). In total, 15,654 remote sensing images are obtained. Table 2 lists the number of cargo ships of each category and direction in the dataset. The dataset is randomly divided into training, validation, and test sets in a ratio of 8:1:1.
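The cropping and filtering in step (1) can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' pipeline: tiles are non-overlapping, boxes are (xmin, ymin, xmax, ymax) in full-image pixels, and a ship is kept in a tile only when its box lies entirely inside it. The function names and the sample scene are hypothetical.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (xmin, ymin, xmax, ymax) in full-image pixels
TILE = 800  # tile size stated in the paper


def tile_origins(width: int, height: int, tile: int = TILE) -> List[Tuple[int, int]]:
    """Top-left corners of non-overlapping tiles that fit entirely inside the image."""
    return [(x, y)
            for y in range(0, height - tile + 1, tile)
            for x in range(0, width - tile + 1, tile)]


def boxes_in_tile(boxes: List[Box], x0: int, y0: int, tile: int = TILE) -> List[Box]:
    """Boxes fully inside the tile, shifted to tile coordinates (assumed rule)."""
    kept = []
    for xmin, ymin, xmax, ymax in boxes:
        if x0 <= xmin and y0 <= ymin and xmax <= x0 + tile and ymax <= y0 + tile:
            kept.append((xmin - x0, ymin - y0, xmax - x0, ymax - y0))
    return kept


def select_tiles(width: int, height: int, boxes: List[Box],
                 tile: int = TILE) -> Dict[Tuple[int, int], List[Box]]:
    """Keep only tiles that contain at least one cargo ship."""
    selected = {}
    for x0, y0 in tile_origins(width, height, tile):
        inside = boxes_in_tile(boxes, x0, y0, tile)
        if inside:
            selected[(x0, y0)] = inside
    return selected


# Hypothetical 1600 x 1600 scene with two ships in different tiles.
print(select_tiles(1600, 1600, [(100, 100, 300, 200), (900, 850, 1200, 1000)]))
# {(0, 0): [(100, 100, 300, 200)], (800, 800): [(100, 50, 400, 200)]}
```

Under this assumed rule, a ship cut by a tile boundary is dropped from both tiles; cropping with overlapping tiles is one way to keep such ships, but the paper does not say how boundary cases are handled.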

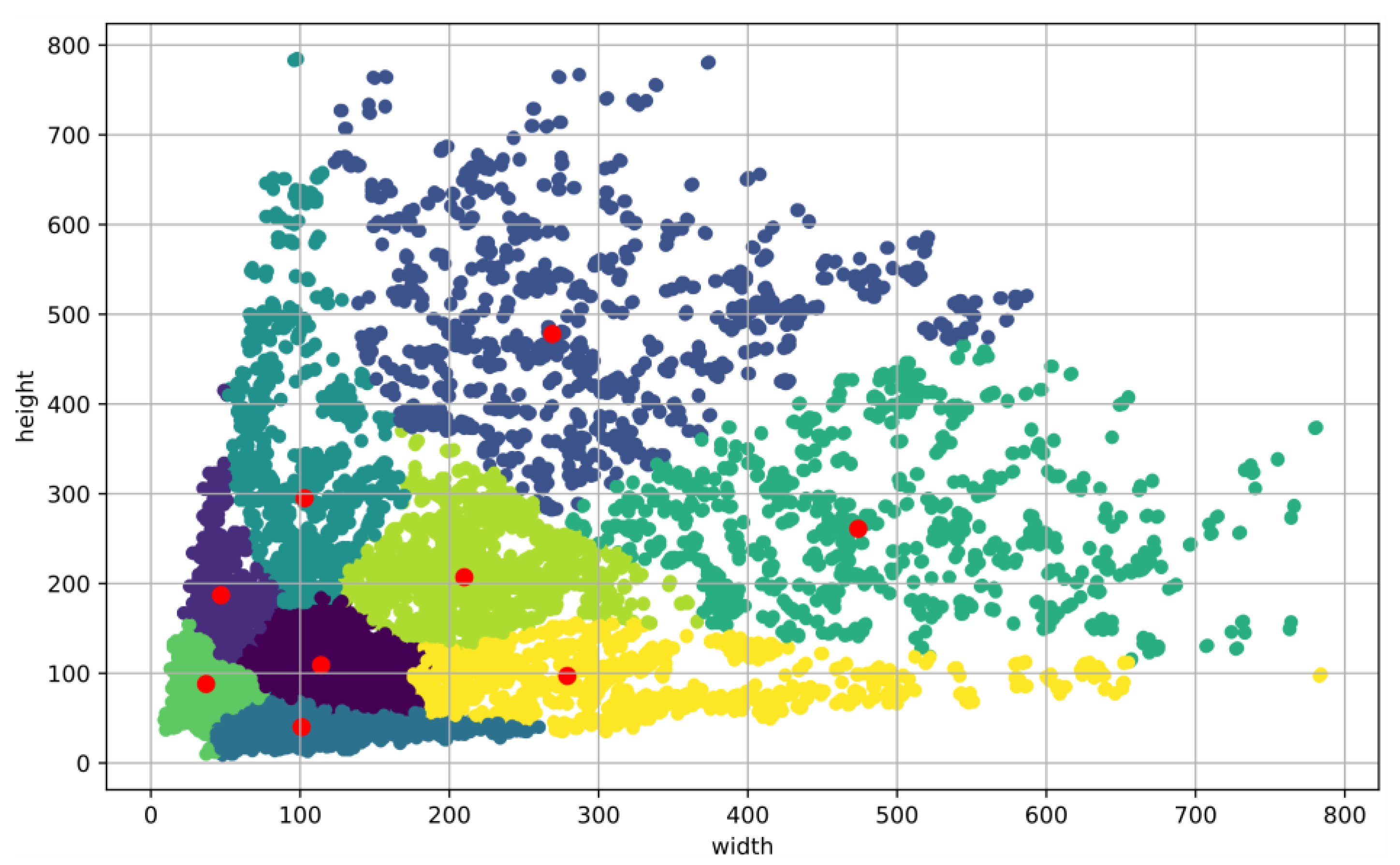
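The label bookkeeping in step (3) can be made concrete with a short sketch. It assumes that directions are compass headings in image coordinates with north pointing up and that rotations are clockwise, as stated in step (3); the transform names, helper functions, and fixed random seed are hypothetical.

```python
import random
from collections import Counter
from typing import Callable, Dict, List, Tuple

# Compass directions in clockwise order; a 90-degree clockwise rotation of the
# image moves every ship heading one step forward in this list.
CLOCKWISE = ["North", "East", "South", "West"]


def rotate_cw(direction: str, degrees: int) -> str:
    steps = (degrees // 90) % 4
    return CLOCKWISE[(CLOCKWISE.index(direction) + steps) % 4]


def hflip(direction: str) -> str:
    """Left-right mirror: east and west swap, north and south are unchanged."""
    return {"East": "West", "West": "East"}.get(direction, direction)


def vflip(direction: str) -> str:
    """Up-down mirror: north and south swap, east and west are unchanged."""
    return {"North": "South", "South": "North"}.get(direction, direction)


# The original plus five transforms gives the sixfold expansion.
TRANSFORMS: Dict[str, Callable[[str], str]] = {
    "original": lambda d: d,
    "rot90": lambda d: rotate_cw(d, 90),
    "rot180": lambda d: rotate_cw(d, 180),
    "rot270": lambda d: rotate_cw(d, 270),
    "hflip": hflip,
    "vflip": vflip,
}


def augmented_direction_counts(directions: List[str]) -> Counter:
    """Direction counts after applying every transform to every labeled ship."""
    return Counter(f(d) for f in TRANSFORMS.values() for d in directions)


def split_811(items, seed: int = 0) -> Tuple[list, list, list]:
    """Random 8:1:1 split into training, validation, and test lists."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train, n_val = int(0.8 * len(items)), int(0.1 * len(items))
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


print(augmented_direction_counts(["East"]))
train_set, val_set, test_set = split_811(range(15654))
print(len(train_set), len(val_set), len(test_set))  # 12523 1565 1566
```

For any starting label counts, this remapping produces equal east and west totals and equal north and south totals, which matches the pattern in Table 2 (for example, 1505 east and 1505 west bulk carriers). Whether the random split is drawn before or after augmentation is a separate design choice; splitting by original scene keeps rotated copies of the same scene out of the test set.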

3.2. The Anchors Clustering

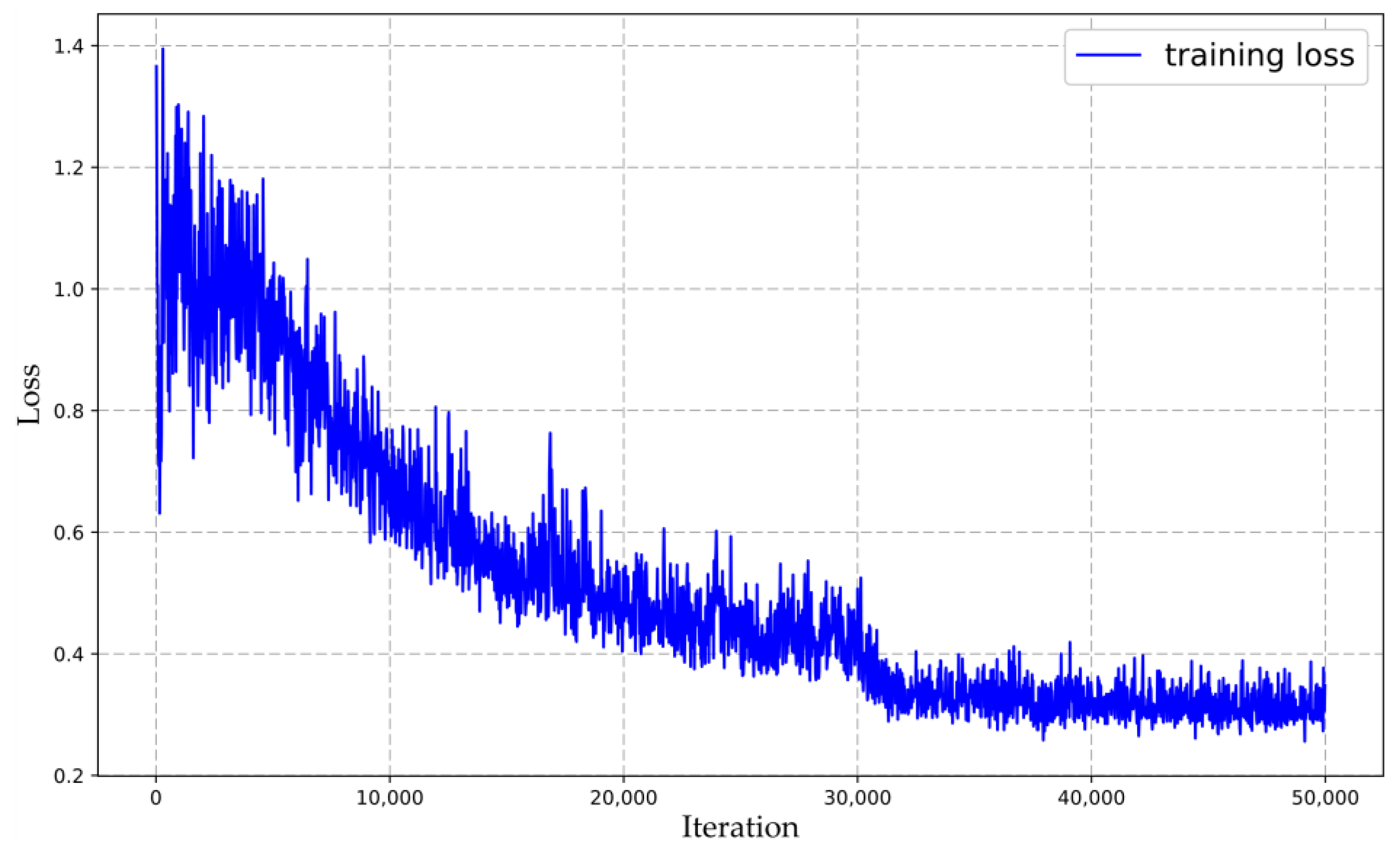
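Clustering anchor shapes with k-means++ can be sketched as follows. The sketch assumes the distance d = 1 − IoU between box shapes (boxes aligned at a common corner, as in YOLO9000 [17]), k = 9 clusters to match the nine anchors in the anchor table, and mean-based center updates; the distance measure and settings used by the authors may differ, and all names here are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

WH = Tuple[float, float]  # (width, height) of a ground-truth box in pixels


def shape_iou(a: WH, b: WH) -> float:
    """IoU of two boxes aligned at a common corner, so only their shapes matter."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def distance(a: WH, b: WH) -> float:
    return 1.0 - shape_iou(a, b)  # assumed distance: 1 - IoU


def kmeanspp_seeds(boxes: Sequence[WH], k: int, rng: random.Random) -> List[WH]:
    """k-means++ seeding: each new seed is drawn with probability proportional to D(x)^2."""
    seeds = [rng.choice(boxes)]
    while len(seeds) < k:
        d2 = [min(distance(b, s) for s in seeds) ** 2 for b in boxes]
        if sum(d2) == 0:  # fewer distinct shapes than k
            seeds.append(rng.choice(boxes))
        else:
            seeds.append(rng.choices(boxes, weights=d2, k=1)[0])
    return seeds


def cluster_anchors(boxes: Sequence[WH], k: int = 9, iters: int = 100,
                    seed: int = 0) -> List[WH]:
    """Lloyd iterations from k-means++ seeds; returns k anchors sorted by area."""
    rng = random.Random(seed)
    centers = kmeanspp_seeds(boxes, k, rng)
    for _ in range(iters):
        groups: List[List[WH]] = [[] for _ in range(k)]
        for b in boxes:
            nearest = min(range(k), key=lambda n: distance(b, centers[n]))
            groups[nearest].append(b)
        updated = [
            (sum(w for w, _ in g) / len(g), sum(h for _, h in g) / len(g)) if g else centers[i]
            for i, g in enumerate(groups)
        ]
        if updated == centers:  # assignments are stable
            break
        centers = updated
    return sorted(centers, key=lambda c: c[0] * c[1])
```

Applied to the widths and heights of all training boxes with k = 9, the returned pairs would play the role of the clustered anchors in the anchor table; the exact values depend on the data, the distance, and the random seed.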

3.3. Implementation Details

3.4. Evaluation Metrics

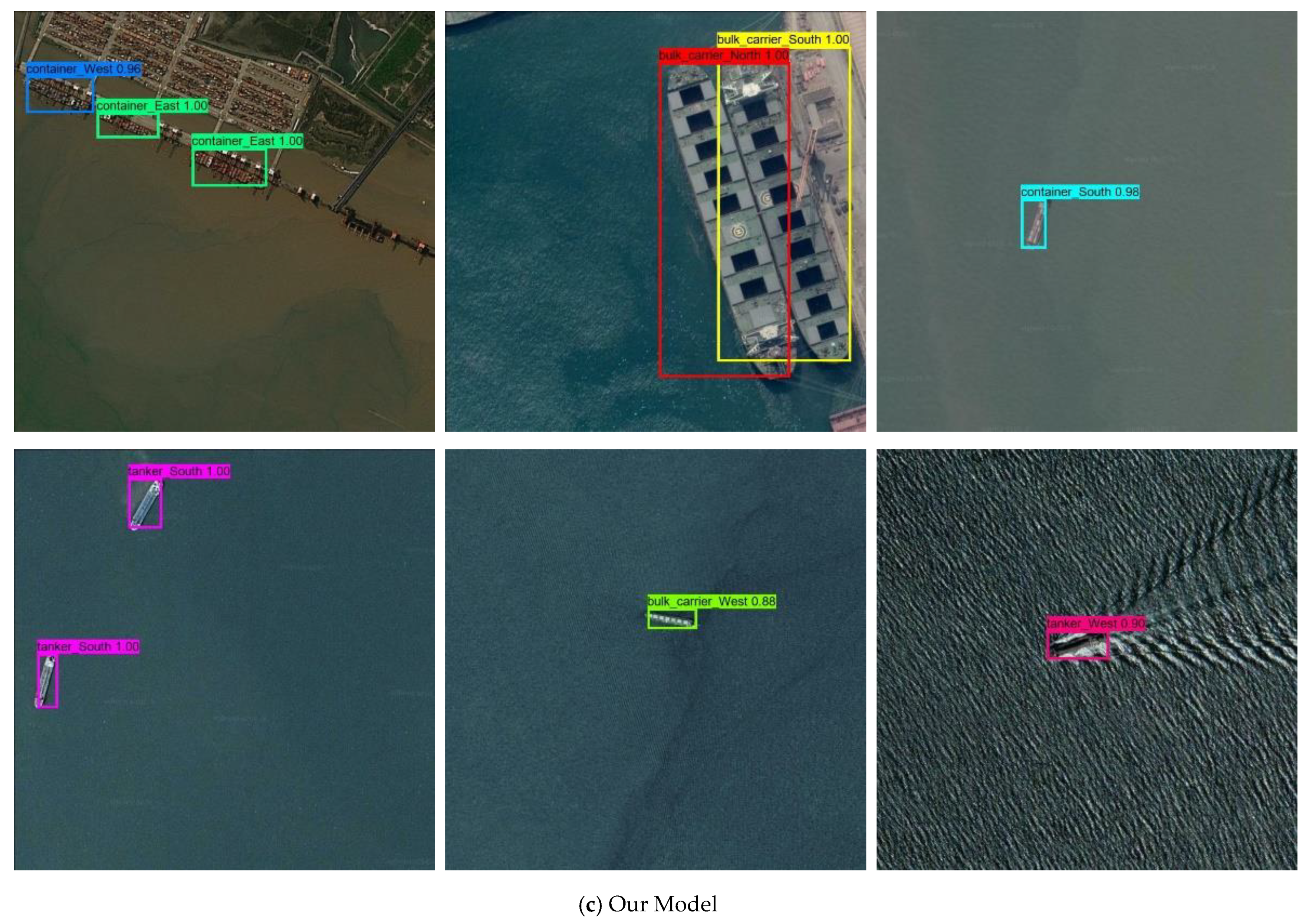
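The evaluation metrics can be sketched as below, assuming the standard Pascal VOC definitions [33]: a detection is a true positive (TP) when its IoU with a not-yet-matched ground-truth box of the same class is at least 0.5, P = TP/(TP + FP), R = TP/(TP + FN), AP is the area under the precision-recall curve after precision is made non-increasing (all-point interpolation, as in VOC2010 and later), and mAP averages AP over classes. The 0.5 threshold and the interpolation scheme are assumptions; the VOC2007 benchmark itself originally used 11-point interpolation. Function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(dets: List[Tuple[float, Box]], gts: List[Box],
                     thr: float = 0.5) -> List[bool]:
    """Greedy VOC-style matching for one class in one image.

    Detections are processed in descending score order; each ground truth can be
    matched once, and a detection whose best ground truth is already taken is FP.
    Returns a TP flag per detection, in the input order.
    """
    used = [False] * len(gts)
    flags = [False] * len(dets)
    for i in sorted(range(len(dets)), key=lambda n: -dets[n][0]):
        overlaps = [iou(dets[i][1], g) for g in gts]
        if overlaps:
            j = max(range(len(gts)), key=overlaps.__getitem__)
            if overlaps[j] >= thr and not used[j]:
                used[j] = True
                flags[i] = True
    return flags


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], n_gt: int) -> float:
    """All-point interpolated AP (area under the monotone precision-recall curve)."""
    tp = fp = 0
    recall, precision = [], []
    for i in sorted(range(len(scores)), key=lambda n: -scores[n]):
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recall.append(tp / n_gt)
        precision.append(tp / (tp + fp))
    mrec = [0.0] + recall + [1.0]
    mpre = [0.0] + precision + [0.0]
    for k in range(len(mpre) - 2, -1, -1):  # make precision non-increasing
        mpre[k] = max(mpre[k], mpre[k + 1])
    return sum((mrec[k + 1] - mrec[k]) * mpre[k + 1] for k in range(len(mrec) - 1))


# Three ground truths, four detections ranked TP, FP, TP, TP: AP = 5/6.
print(average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], 3))
```

The mAP is then the mean of the per-class AP values; with the 12 category-direction classes reported in this paper, that is a plain average of 12 numbers.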

3.5. Experimental Results

3.5.1. Performance of Different Models

3.5.2. Performance of Other Remote Sensing Images

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AP | Average precision |
| AIS | Automatic identification system |
| BN | Batch normalization |
| CNN | Convolutional neural network |
| FN | False negatives |
| FP | False positives |
| GT | Ground truth |
| IOU | Intersection over union |
| mAP | Mean average precision |
| P | Precision |
| R | Recall |
| R-CNN | Region-based convolutional neural networks |
| ReLU | Rectified linear unit |
| ROI | Regions of interest |
| RPN | Region proposal network |
| SSD | Single shot multibox detector |
| TP | True positives |
| YOLO | You only look once |

References

- Tang, J.X.; Deng, C.W.; Huang, G.B.; Zhao, B.J. Compressed-Domain Ship Detection on Spaceborne Optical Image Using Deep Neural Network and Extreme Learning Machine. IEEE Trans. Geosci. Remote Sens. 2015, 53, 1174–1185.

- Cheng, G.; Han, J.W. A survey on object detection in optical remote sensing images. ISPRS J. Photogramm. Remote Sens. 2016, 117, 11–28.

- Yang, X.; Sun, H.; Fu, K.; Yang, J.R.; Sun, X.; Yan, M.L.; Guo, Z. Automatic Ship Detection in Remote Sensing Images from Google Earth of Complex Scenes Based on Multiscale Rotation Dense Feature Pyramid Networks. Remote Sens. 2018, 10, 132.

- Liu, T.; Pang, B.; Zhang, L.; Yang, W.; Sun, X.Q. Sea Surface Object Detection Algorithm Based on YOLO v4 Fused with Reverse Depthwise Separable Convolution (RDSC) for USV. J. Mar. Sci. Eng. 2021, 9, 753.

- Li, X.G.; Li, Z.X.; Lv, S.S.; Cao, J.; Pan, M.; Ma, Q.; Yu, H.B. Ship detection of optical remote sensing image in multiple scenes. Int. J. Remote Sens. 2021, 29.

- Wang, Q.; Shen, F.Y.; Cheng, L.F.; Jiang, J.F.; He, G.H.; Sheng, W.G.; Jing, N.F.; Mao, Z.G. Ship detection based on fused features and rebuilt YOLOv3 networks in optical remote-sensing images. Int. J. Remote Sens. 2021, 42, 520–536.

- Yokoya, N.; Iwasaki, A. Object localization based on sparse representation for remote sensing imagery. In Proceedings of the 2014 IEEE International Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014; pp. 2293–2296.

- Li, Z.M.; Yang, D.Q.; Chen, Z.Z. Multi-Layer Sparse Coding Based Ship Detection for Remote Sensing Images. In Proceedings of the 2015 IEEE International Conference on Information Reuse and Integration, San Francisco, CA, USA, 13–15 August 2015; pp. 122–125.

- Zhou, H.T.; Zhuang, Y.; Chen, L.; Shi, H. Ship Detection in Optical Satellite Images Based on Sparse Representation. In Signal and Information Processing, Networking and Computers; Sun, S., Chen, N., Tian, T., Eds.; Springer: Singapore, 2018; Volume 473, pp. 164–171.

- Bhagya, C.; Shyna, A. An Overview of Deep Learning Based Object Detection Techniques. In Proceedings of the 2019 1st International Conference on Innovations in Information and Communication Technology (ICIICT), Chennai, India, 25–26 April 2019.

- Wu, X.W.; Sahoo, D.; Hoi, S.C.H. Recent advances in deep learning for object detection. Neurocomputing 2020, 396, 39–64.

- Kwak, N.-J.; Kim, D. Object detection technology trend and development direction using deep learning. Int. J. Adv. Cult. Technol. 2020, 8, 119–128.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Las Condes, Chile, 11–18 December 2015; IEEE: New York, NY, USA, 2015; pp. 1440–1448.

- Ren, S.Q.; He, K.M.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 6517–6525.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016, Part I; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing AG: Cham, Switzerland, 2016; Volume 9905, pp. 21–37.

- Lin, H.N.; Shi, Z.W.; Zou, Z.X. Fully Convolutional Network With Task Partitioning for Inshore Ship Detection in Optical Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1665–1669.

- Sun, Y.J.; Lei, W.H.; Ren, X.D. Remote sensing image ship target detection method based on visual attention model. In Lidar Imaging Detection and Target Recognition 2017; Lv, D., Lv, Y., Bao, W., Eds.; SPIE: Bellingham, WA, USA, 2017; Volume 10605.

- Wei, S.H.; Chen, H.M.; Zhu, X.J.; Zhang, H.S. Ship Detection in Remote Sensing Image based on Faster R-CNN with Dilated Convolution. In Proceedings of the 39th Chinese Control Conference, Shenyang, China, 27–29 July 2020; pp. 7148–7153.

- Li, Q.P.; Mou, L.C.; Liu, Q.J.; Wang, Y.H.; Zhu, X.X. HSF-Net: Multiscale Deep Feature Embedding for Ship Detection in Optical Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2018, 56, 7147–7161.

- Zhang, S.M.; Wu, R.Z.; Xu, K.Y.; Wang, J.M.; Sun, W.W. R-CNN-Based Ship Detection from High Resolution Remote Sensing Imagery. Remote Sens. 2019, 11, 631.

- Yun, W. Ship Detection Method for Remote Sensing Images via Adversary Strategy. In Proceedings of the 2019 IEEE International Conference on Signal, Information and Data Processing (ICSIDP), Chongqing, China, 11–13 December 2019; p. 4.

- Lee, Y.W.; Hwang, J.W.; Lee, S.; Bae, Y.; Park, J. An Energy and GPU-Computation Efficient Backbone Network for Real-Time Object Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019; pp. 752–760.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 1800–1807.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 2261–2269.

- Olukanmi, P.O.; Nelwamondo, F.; Marwala, T. k-Means-Lite++: The Combined Advantage of Sampling and Seeding. In Proceedings of the 2019 6th International Conference on Soft Computing & Machine Intelligence, Johannesburg, South Africa, 30 June 2019; pp. 223–227.

- Tzutalin, D. LabelImg. 2019. Available online: https://github.com/tzutalin/labelImg (accessed on 14 April 2020).

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

| Stage | Structure |
|---|---|
| Stage 1 | 3 × 3 conv, 64, stride = 2; 3 × 3 dsconv, 64, stride = 1; 3 × 3 dsconv, 64, stride = 1; 3 × 3 maxpool2d, stride = 2 |
| Stage 2 | [3 × 3 dsconv, 128, stride = 1] × 3; concat & 1 × 1 conv, 256 |
| Stage 3 | [3 × 3 dsconv, 160, stride = 1] × 3; concat & 1 × 1 conv, 512 |
| Stage 4 | [3 × 3 dsconv, 192, stride = 1] × 3; concat & 1 × 1 conv, 768 |
| Stage 5 | [3 × 3 dsconv, 224, stride = 1] × 3; concat & 1 × 1 conv, 1024 |
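The backbone stages rely on depthwise separable convolutions (dsconv) [28], which is where most of the parameter savings of a lightweight backbone come from. The back-of-the-envelope sketch below ignores biases and batch-normalization parameters and compares a standard 3 × 3 convolution with a dsconv (a 3 × 3 depthwise convolution followed by a 1 × 1 pointwise convolution). It also estimates one stage under hypothetical assumptions: the three dsconv layers run in sequence, only their outputs are concatenated before the 1 × 1 convolution, and each stage's input width equals the previous stage's output width. The one-shot aggregation module of VoVNet [27] may also concatenate the stage input, so the real counts can differ.

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a standard k x k convolution (no bias)."""
    return k * k * c_in * c_out


def dsconv_params(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k (one filter per input channel) plus pointwise 1 x 1 convolution."""
    return k * k * c_in + c_in * c_out


def osa_stage_params(c_in: int, c_mid: int, c_out: int, n_layers: int = 3) -> int:
    """Hypothetical stage: n dsconv layers in sequence, concat of their outputs, 1 x 1 conv."""
    total, c = 0, c_in
    for _ in range(n_layers):
        total += dsconv_params(3, c, c_mid)
        c = c_mid
    total += conv_params(1, n_layers * c_mid, c_out)  # 1 x 1 fusion after concat
    return total


# (dsconv width, 1 x 1 output width) for Stages 2-5, from the table above.
STAGES = [(128, 256), (160, 512), (192, 768), (224, 1024)]

if __name__ == "__main__":
    print(conv_params(3, 128, 128), dsconv_params(3, 128, 128))  # 147456 17536
    c_in = 64  # Stage 1 ends with 64 channels
    for i, (mid, out) in enumerate(STAGES, start=2):
        print(f"Stage {i}: {osa_stage_params(c_in, mid, out):,} weights")
        c_in = out
```

For a 128-to-128 layer, the dsconv needs roughly 8.4 times fewer weights than the standard convolution (17,536 versus 147,456), and under these assumptions the 1 × 1 fusion convolution dominates each stage's budget.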

| Category | East | South | West | North | Total |
|---|---|---|---|---|---|
| Bulk carrier | 1505 | 1423 | 1505 | 1423 | 5856 |
| Container | 2207 | 2299 | 2207 | 2299 | 9012 |
| Tanker | 2013 | 1989 | 2013 | 1989 | 8004 |
| Total | 5725 | 5711 | 5725 | 5711 | 22,872 |

| Anchors | Dimension (pixels) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| Original anchors | Width | 91 | 128 | 181 | 181 | 256 | 362 | 362 | 512 | 724 |
|  | Height | 181 | 128 | 91 | 362 | 256 | 181 | 724 | 512 | 362 |
| K-Means++ anchors | Width | 37 | 128 | 101 | 103 | 114 | 210 | 259 | 279 | 474 |
|  | Height | 88 | 128 | 40 | 295 | 109 | 207 | 478 | 97 | 261 |
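The original anchor row matches the usual Faster R-CNN defaults: three scales (128, 256, 512) combined with three aspect ratios. The short sketch below regenerates that row, assuming each anchor keeps an area equal to its scale squared and that the ratio r = height/width takes the values 2, 1, and 0.5 in the column order of the table. The function name is hypothetical.

```python
import math
from typing import List, Tuple


def default_anchors(scales=(128, 256, 512),
                    ratios=(2.0, 1.0, 0.5)) -> List[Tuple[int, int]]:
    """(width, height) of each anchor; ratio = height / width, area = scale ** 2."""
    anchors = []
    for s in scales:
        for r in ratios:
            w = s / math.sqrt(r)
            h = s * math.sqrt(r)
            anchors.append((round(w), round(h)))
    return anchors


print(default_anchors())
# [(91, 181), (128, 128), (181, 91), (181, 362), (256, 256), (362, 181),
#  (362, 724), (512, 512), (724, 362)]
```

The clustered row, by contrast, contains shapes such as 37 × 88 and 279 × 97 whose height-to-width ratios (about 2.4 and 0.35) lie outside the three fixed ratios, and several anchors smaller than the smallest default scale.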

| Class | Number (GT) | TP | FP | FN | P (%) | R (%) | AP (%) |
|---|---|---|---|---|---|---|---|
| Bulk_Carrier_North | 159 | 150 | 14 | 9 | 91.46 | 94.34 | 93.46 |
| Bulk_Carrier_East | 135 | 130 | 11 | 5 | 92.20 | 96.30 | 94.94 |
| Bulk_Carrier_South | 122 | 117 | 26 | 5 | 81.82 | 95.90 | 95.20 |
| Bulk_Carrier_West | 142 | 133 | 6 | 9 | 95.68 | 93.66 | 93.39 |
| Container_North | 192 | 181 | 27 | 11 | 87.02 | 94.27 | 93.17 |
| Container_East | 203 | 190 | 31 | 13 | 85.97 | 93.60 | 93.00 |
| Container_South | 226 | 207 | 34 | 19 | 85.89 | 91.59 | 90.10 |
| Container_West | 222 | 207 | 24 | 15 | 89.61 | 93.24 | 92.26 |
| Tanker_North | 207 | 185 | 41 | 22 | 81.86 | 89.37 | 88.10 |
| Tanker_East | 192 | 176 | 19 | 16 | 90.26 | 91.67 | 91.26 |
| Tanker_South | 205 | 184 | 26 | 21 | 87.62 | 89.76 | 88.77 |
| Tanker_West | 202 | 183 | 31 | 19 | 85.51 | 90.59 | 89.87 |
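In the per-class table, the four count columns are the number of ground-truth ships, true positives (TP), false positives (FP), and false negatives (FN), related by P = TP/(TP + FP) and R = TP/(TP + FN) = TP/Number. The short check below recomputes P and R from the counts and averages the 12 AP values; it is a consistency check on the reported numbers, not part of the authors' code.

```python
# (ground truth, TP, FP, FN, P %, R %, AP %) copied from the per-class table.
ROWS = {
    "Bulk_Carrier_North": (159, 150, 14, 9, 91.46, 94.34, 93.46),
    "Bulk_Carrier_East": (135, 130, 11, 5, 92.20, 96.30, 94.94),
    "Bulk_Carrier_South": (122, 117, 26, 5, 81.82, 95.90, 95.20),
    "Bulk_Carrier_West": (142, 133, 6, 9, 95.68, 93.66, 93.39),
    "Container_North": (192, 181, 27, 11, 87.02, 94.27, 93.17),
    "Container_East": (203, 190, 31, 13, 85.97, 93.60, 93.00),
    "Container_South": (226, 207, 34, 19, 85.89, 91.59, 90.10),
    "Container_West": (222, 207, 24, 15, 89.61, 93.24, 92.26),
    "Tanker_North": (207, 185, 41, 22, 81.86, 89.37, 88.10),
    "Tanker_East": (192, 176, 19, 16, 90.26, 91.67, 91.26),
    "Tanker_South": (205, 184, 26, 21, 87.62, 89.76, 88.77),
    "Tanker_West": (202, 183, 31, 19, 85.51, 90.59, 89.87),
}


def precision(tp: int, fp: int) -> float:
    return 100.0 * tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    return 100.0 * tp / (tp + fn)


for name, (gt, tp, fp, fn, p, r, ap) in ROWS.items():
    assert tp + fn == gt, name  # each ground truth is either detected or missed
    assert abs(precision(tp, fp) - p) < 0.005, name  # matches to two decimals
    assert abs(recall(tp, fn) - r) < 0.005, name

mean_ap = sum(row[-1] for row in ROWS.values()) / len(ROWS)
print(f"mAP = {mean_ap:.2f}%")  # mAP = 91.96%
```

Every row is internally consistent, and the mean AP reproduces the 91.96% mAP reported for the proposed model in the model comparison table.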

| Model | Feature Extraction Network | Region Proposal Network | Weight Size (MB) | Training Time (min) | mAP | Input Size (pixels) | Prediction Time (ms) |
|---|---|---|---|---|---|---|---|
| Faster-RCNN | ResNet50 | RPN | 319.5 | 100.4 | 94.41% | 800 × 800 | 56 |
| YOLOv3 | DarkNet53 | None | 235.0 | 758.0 | 83.98% | 800 × 800 | 41 |
| Ours | OSASPNet | K-RPN | 110.0 | 79.5 | 91.96% | 800 × 800 | 46 |

| Detection Result | Region Proposal | Heatmap | Class Prediction | Direction Prediction |
|---|---|---|---|---|
|  |  |  | Bulk carrier | East |
|  |  |  | Container | South |
|  |  |  | Tanker | North |
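The model reports each ship's category together with one of four directions, and the per-class results use joint labels such as Bulk_Carrier_North. A minimal sketch of how a heading angle could be binned into the four 90-degree direction classes and combined with the category is shown below. It assumes headings are measured clockwise from image-up (north) and that each bin is centered on its compass direction (north covers 315° to 45°, and so on); the paper does not state the bin boundaries, and the helper names are hypothetical.

```python
from typing import Tuple

DIRECTIONS = ["North", "East", "South", "West"]  # clockwise from image-up
CATEGORIES = {"bulk carrier": "Bulk_Carrier", "container": "Container", "tanker": "Tanker"}


def heading_to_direction(angle_deg: float) -> str:
    """Map a heading (degrees clockwise from north) to a 90-degree direction bin."""
    a = angle_deg % 360.0
    return DIRECTIONS[int(((a + 45.0) % 360.0) // 90.0)]


def joint_label(category: str, angle_deg: float) -> str:
    """Combine category and direction into one of the 12 joint class names."""
    return f"{CATEGORIES[category]}_{heading_to_direction(angle_deg)}"


def split_label(label: str) -> Tuple[str, str]:
    """Recover (category, direction) from a joint label."""
    category, direction = label.rsplit("_", 1)
    return category, direction


print(joint_label("bulk carrier", 100))   # Bulk_Carrier_East
print(split_label("Container_South"))     # ('Container', 'South')
```

Splitting on the last underscore keeps multi-word categories such as Bulk_Carrier intact.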

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, P.; Liu, J.; Zhang, Y.; Zhi, Z.; Cai, Z.; Song, N. A Novel Cargo Ship Detection and Directional Discrimination Method for Remote Sensing Image Based on Lightweight Network. J. Mar. Sci. Eng. 2021, 9, 932. https://doi.org/10.3390/jmse9090932
