Research on Water Surface Object Detection Method Based on Image Fusion

Abstract

1. Introduction

2. Literature Review

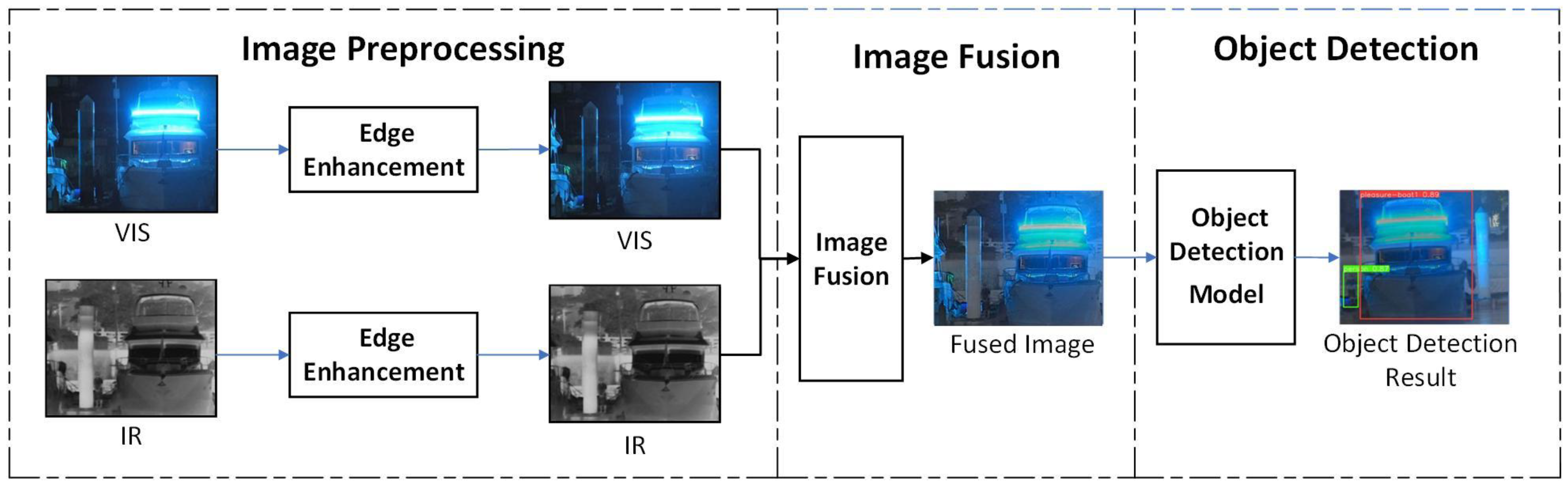

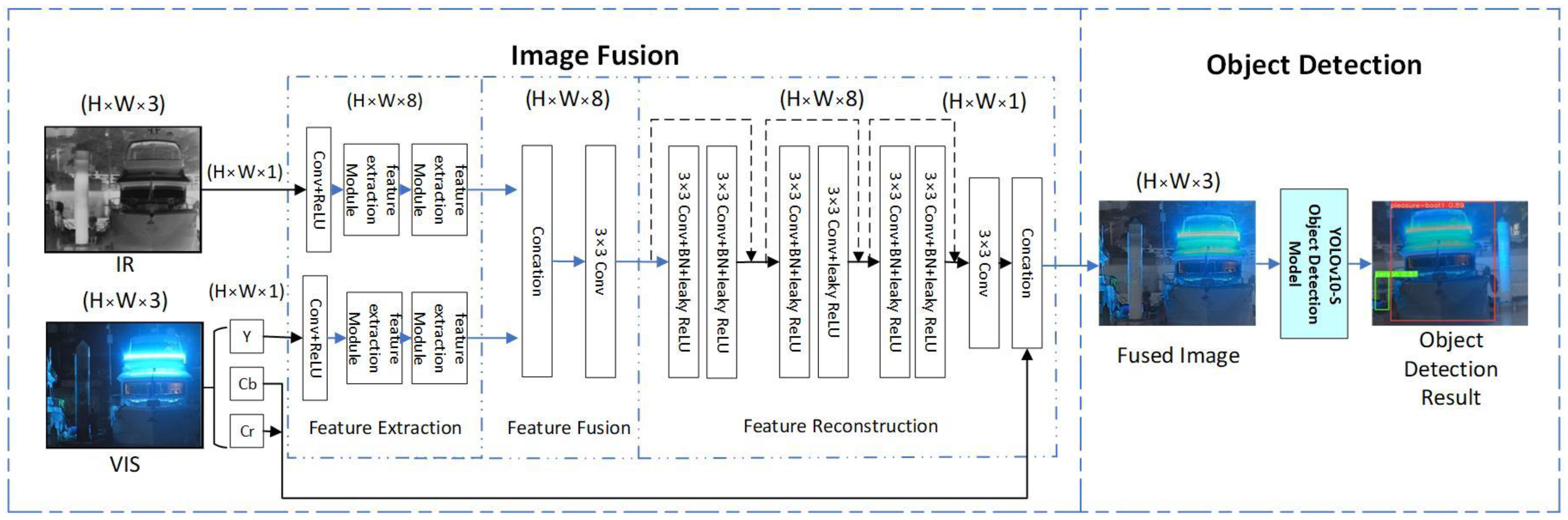

3. Object Detection Technology Based on Image Fusion

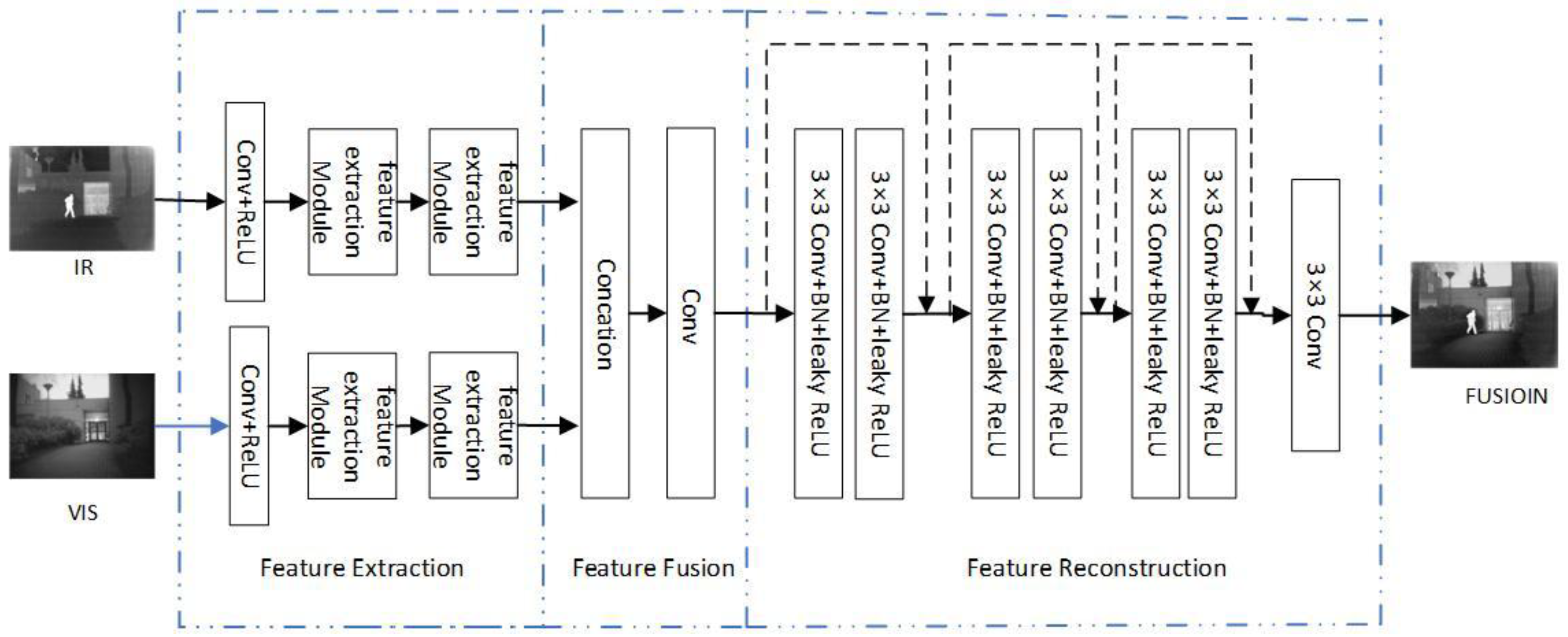

3.1. Image Fusion Model

3.2. Object Detection Process

3.3. Evaluation Indices

3.3.1. Evaluation Indicators for Image Fusion Technology
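
The sketch below illustrates three of the fusion indicators named in the abbreviation list (MI, CC, PSNR) using their common textbook definitions. It assumes 8-bit grayscale inputs and the usual convention of scoring the fused image against both the infrared and the visible source. The function names are hypothetical. The paper's exact conventions (peak value, normalization, histogram bins) are not given here, so absolute values from this sketch need not match the tables. SSIM and FMI_pixel are omitted for brevity.

```python
import numpy as np

def mutual_information(a, b, bins=256):
    """Histogram-based mutual information (bits) between two 8-bit grayscale images."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins,
                                 range=[[0, 256], [0, 256]])
    pxy = joint / joint.sum()              # joint probability table
    px = pxy.sum(axis=1, keepdims=True)    # marginal of a, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)    # marginal of b, shape (1, bins)
    nz = pxy > 0                           # skip empty cells to avoid log(0)
    return float(np.sum(pxy[nz] * np.log2(pxy[nz] / (px @ py)[nz])))

def fusion_mi(ir, vis, fused):
    """MI of the fused image with respect to both sources (summed)."""
    return mutual_information(ir, fused) + mutual_information(vis, fused)

def fusion_cc(ir, vis, fused):
    """Mean Pearson correlation coefficient between the fused image and each source."""
    def cc(x, y):
        return float(np.corrcoef(x.ravel(), y.ravel())[0, 1])
    return 0.5 * (cc(ir, fused) + cc(vis, fused))

def fusion_psnr(ir, vis, fused, peak=255.0):
    """PSNR (dB) computed from the mean of the two source-to-fused MSE values."""
    f = fused.astype(np.float64)
    mse = 0.5 * (np.mean((ir.astype(np.float64) - f) ** 2)
                 + np.mean((vis.astype(np.float64) - f) ** 2))
    return float("inf") if mse == 0 else float(10.0 * np.log10(peak ** 2 / mse))
```

Given co-registered arrays `ir`, `vis` and `fused` of equal shape, `fusion_mi(ir, vis, fused)` returns the summed MI. Higher MI, CC and PSNR indicate that more source information is preserved in the fused image.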

3.3.2. Evaluation Indicators for Object Detection Technology
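
The detection tables report mAP@0.5 and mAP@[0.5:0.95]. The sketch below shows how these are conventionally computed for a single class:

1. Predictions are matched greedily to ground truth in descending score order at a given IoU threshold.
2. AP is computed with VOC-style all-point interpolation.
3. mAP@[0.5:0.95] averages AP over IoU thresholds from 0.50 to 0.95 in steps of 0.05.

The helper names and the data layout are hypothetical. YOLO toolkits use their own matching and interpolation details, so this sketch will not reproduce the reported numbers exactly.

```python
import numpy as np

def iou(box_a, box_b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area = lambda b: (b[2] - b[0]) * (b[3] - b[1])
    union = area(box_a) + area(box_b) - inter
    return inter / union if union > 0 else 0.0

def average_precision(preds, gts, iou_thr):
    """AP for one class.

    preds: list of (image_id, score, box); gts: dict image_id -> list of boxes.
    Each ground-truth box can be matched by at most one prediction.
    """
    n_gt = sum(len(boxes) for boxes in gts.values())
    if n_gt == 0:
        return 0.0
    used = {k: [False] * len(v) for k, v in gts.items()}
    tp = []
    for img, _, box in sorted(preds, key=lambda p: -p[1]):
        best, best_j = 0.0, -1
        for j, g in enumerate(gts.get(img, [])):
            o = iou(box, g)
            if o > best and not used[img][j]:   # best still-unmatched ground truth
                best, best_j = o, j
        if best_j >= 0 and best >= iou_thr:
            used[img][best_j] = True
            tp.append(1.0)
        else:
            tp.append(0.0)                      # false positive
    tp = np.array(tp, dtype=np.float64)
    ctp, cfp = np.cumsum(tp), np.cumsum(1.0 - tp)
    recall = ctp / n_gt
    precision = ctp / np.maximum(ctp + cfp, 1e-12)
    # All-point interpolation: make precision non-increasing in recall.
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]
    idx = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[idx + 1] - r[idx]) * p[idx + 1]))

def mean_ap(preds_by_class, gts_by_class, thresholds):
    """Mean over classes of AP averaged over the given IoU thresholds."""
    aps = [np.mean([average_precision(preds_by_class.get(c, []), g, t)
                    for t in thresholds])
           for c, g in gts_by_class.items()]
    return float(np.mean(aps))

MAP50 = [0.5]
MAP50_95 = np.linspace(0.5, 0.95, 10)  # 0.50, 0.55, ..., 0.95
```

`mean_ap(preds_by_class, gts_by_class, MAP50)` gives mAP@0.5, and passing `MAP50_95` gives mAP@[0.5:0.95]. Because the latter also rewards tight localization, it is always at most mAP@0.5.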

4. Optimization of Image Fusion Technology

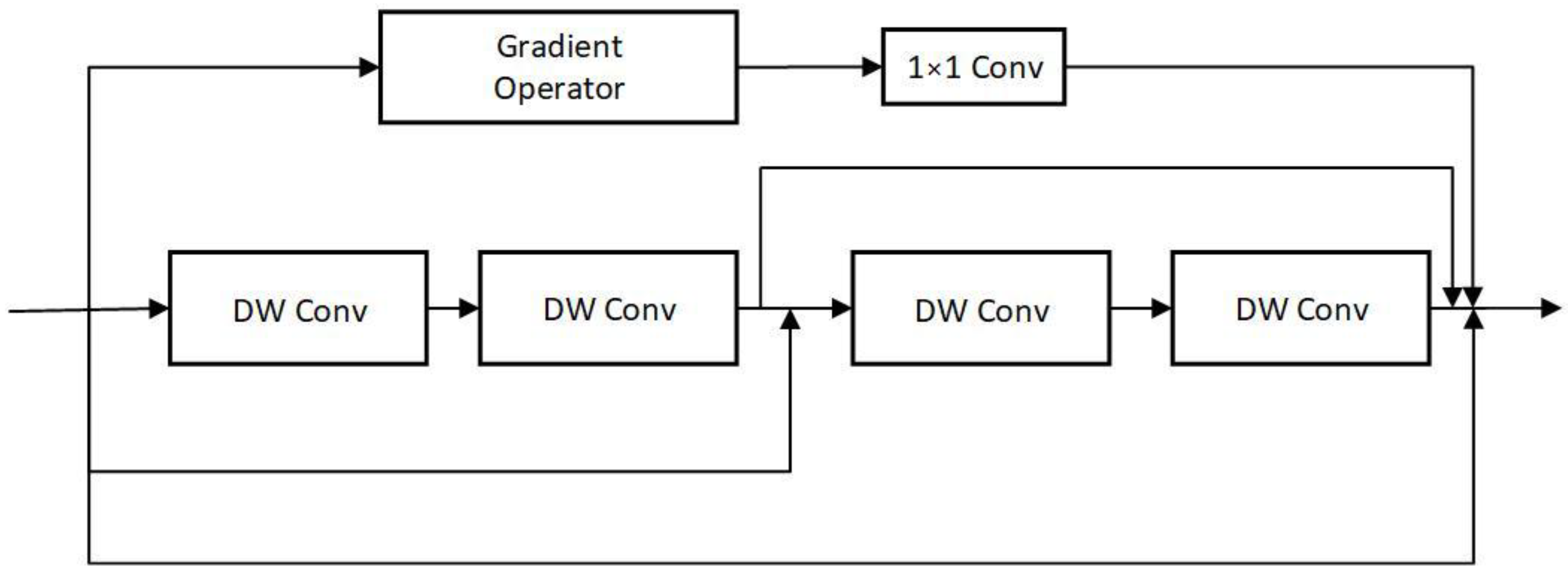

4.1. Optimization of Feature Extraction Module

4.2. Optimization of Loss Function

4.3. Analysis of the Impact of Fusion Parameters

4.3.1. Feature Extraction Dimension

4.3.2. Image Reconstruction Module

5. Analysis of the Impact of Object Detection Models

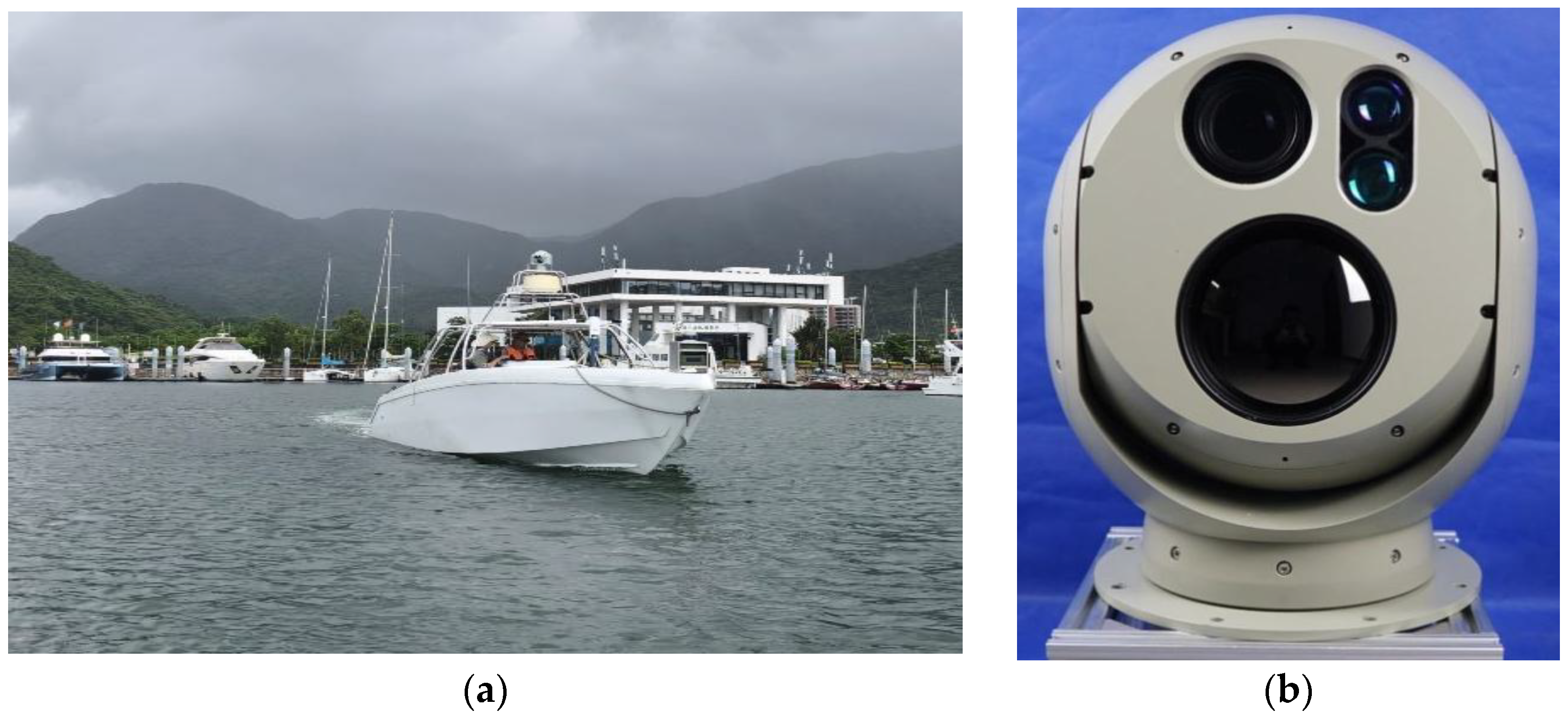

6. Experimental Verification of Maritime Object Detection

6.1. Marine Object Detection Dataset

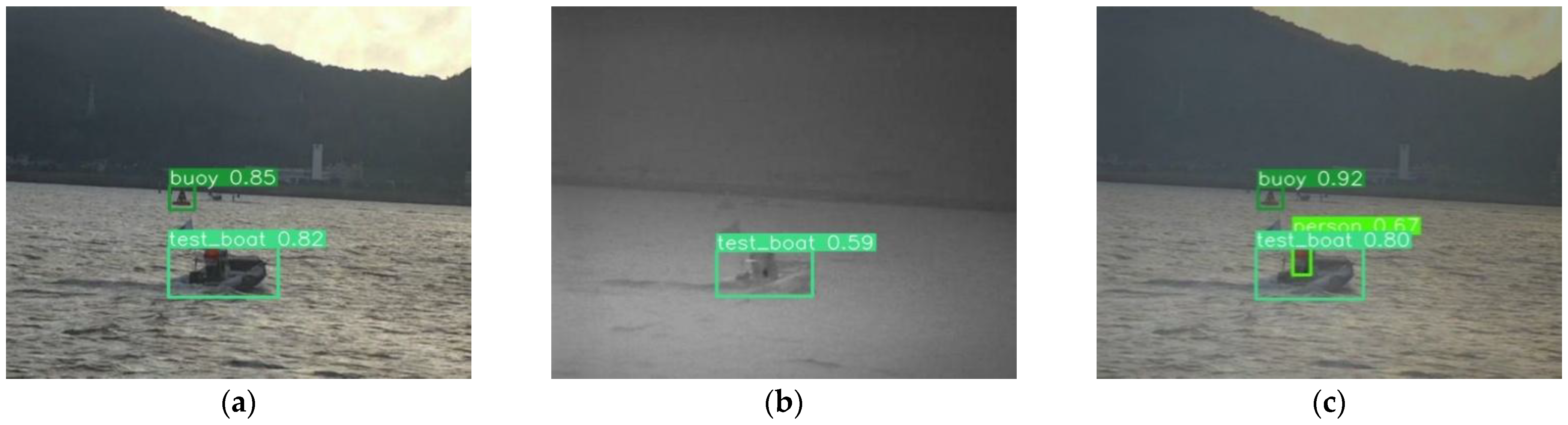

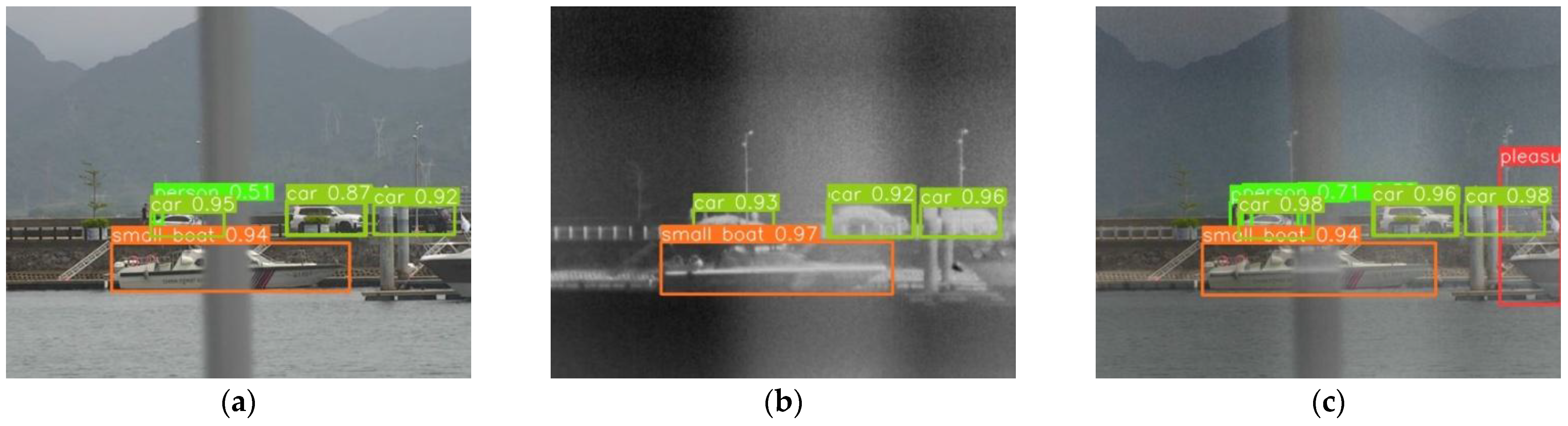

6.2. Multi-Object Detection Experiment in Normal Lighting Scenes

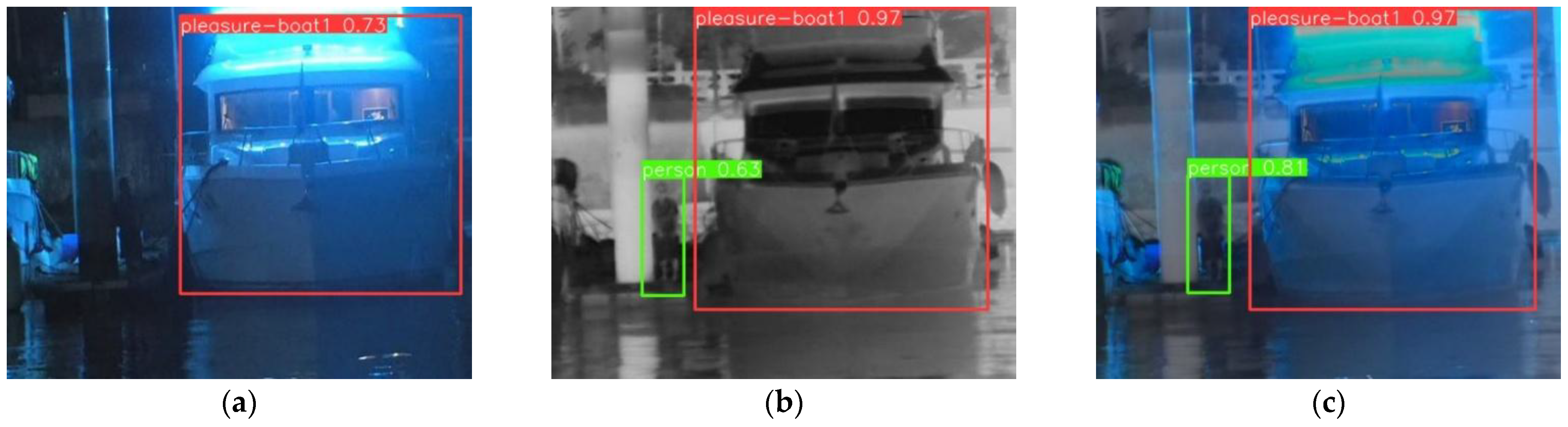

6.3. Object Detection Experiment in Low Illumination and Backlight Scenes

6.4. Quantitative Analysis of Object Detection Results

7. Discussion

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNNs | Convolutional Neural Networks |
| GANs | Generative Adversarial Networks |
| AE | Autoencoder |
| LReLU | Leaky Rectified Linear Unit |
| MI | Mutual Information |
| CC | Correlation Coefficient |
| PSNR | Peak Signal-to-Noise Ratio |
| SSIM | Structural Similarity Index Measure |
| FMI | Feature Measurement Index |
| mAP | mean Average Precision |
| DW Conv | Depth-Wise Separable Convolution |
| YOLO | You Only Look Once |
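
The abbreviation list names DW Conv (depth-wise separable convolution). The sketch below is a general illustration and not a description of the authors' feature-extraction module. It counts the weights of a standard k×k convolution and compares them with a depth-wise k×k convolution followed by a 1×1 point-wise convolution. The function names are hypothetical. Without bias, the ratio of separable to standard weights is 1/C_out + 1/k².

```python
def conv_params(k, c_in, c_out, bias=False):
    """Weights of a standard k x k convolution from c_in to c_out channels."""
    return k * k * c_in * c_out + (c_out if bias else 0)

def dw_separable_params(k, c_in, c_out, bias=False):
    """Depth-wise k x k conv (one filter per input channel) plus a 1 x 1 point-wise conv."""
    depthwise = k * k * c_in + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise
```

For k = 3 and 64 input and output channels, the standard layer has 36,864 weights and the separable version has 4,672, about 12.7% as many. This saving is the usual reason depth-wise separable layers appear in lightweight networks.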


| Module | MI | PSNR | CC | SSIM | FMI_pixel | Time (s) | |
|---|---|---|---|---|---|---|---|
| 1 CNN block | 1.963 | 62.5561 | 0.4131 | 0.0917 | 0.8583 | 0.8710 | 0.0082 |
| Non-gradient | 2.001 | 64.1624 | 0.5856 | 0.0904 | 0.8938 | 0.8956 | 0.0981 |
| 2 CNN blocks | 2.172 | 66.2657 | 0.5956 | 0.0087 | 0.9057 | 0.9005 | 0.1033 |

| Dimension | MI | PSNR | CC | SSIM | FMI_pixel | Time | |
|---|---|---|---|---|---|---|---|
| 2 | 2.5191 | 66.1013 | 0.7164 | 0.0551 | 0.9027 | 0.8643 | 0.046 |
| 4 | 2.5263 | 66.1599 | 0.7177 | 0.0473 | 0.9193 | 0.8753 | 0.053 |
| 8 | 2.5472 | 66.1613 | 0.7178 | 0.0466 | 0.9206 | 0.8749 | 0.102 |
| 16 | 2.5353 | 66.1678 | 0.7178 | 0.0493 | 0.9181 | 0.8741 | 0.204 |
| 32 | 2.5054 | 66.1692 | 0.7181 | 0.0547 | 0.9049 | 0.8696 | 0.378 |
| 64 | 2.5330 | 66.1618 | 0.7184 | 0.0476 | 0.9200 | 0.8761 | 0.551 |
| 96 | 2.5471 | 66.1408 | 0.7174 | 0.0478 | 0.9214 | 0.8756 | 0.820 |
| 128 | 2.5398 | 66.1697 | 0.7182 | 0.0481 | 0.9209 | 0.8754 | 1.451 |
| 256 | 2.5429 | 66.1543 | 0.7177 | 0.0476 | 0.9216 | 0.8758 | 3.544 |

| Number | MI | PSNR | CC | SSIM | FMI_pixel | Time | |
|---|---|---|---|---|---|---|---|
| 1 | 2.1676 | 66.2633 | 0.5956 | 0.0091 | 0.9049 | 0.9002 | 0.0891 |
| 2 | 2.1692 | 66.2635 | 0.5956 | 0.0089 | 0.9055 | 0.9003 | 0.0989 |
| 3 | 2.1726 | 66.2657 | 0.5956 | 0.0087 | 0.9057 | 0.9005 | 0.1033 |
| 4 | 2.1704 | 66.2610 | 0.5951 | 0.0091 | 0.9003 | 0.9002 | 0.1045 |

| Model | Parameters (M) | Inference Time per Image | mAP@0.5 | mAP@[0.5:0.95] |
|---|---|---|---|---|
| YOLOv5-s | 7.2 | 20.54 | 0.917 | 0.665 |
| YOLOv8-s | 7.2 | 19.57 | 0.920 | 0.669 |
| YOLOv10-n | 2.3 | 11.4 | 0.893 | 0.616 |
| YOLOv10-s | 7.2 | 14.4 | 0.928 | 0.694 |
| YOLOv10-m | 15.4 | 32.5 | 0.954 | 0.717 |
| YOLOv10-b | 19.1 | 45.8 | 0.923 | 0.706 |
| YOLOv10-l | 24.4 | 54.7 | 0.967 | 0.728 |

| Data Scene | Normal Illumination | Target Occlusion | Low Illumination | Backlight |
|---|---|---|---|---|
| Number | 1802 | 540 | 716 | 486 |

| Types of Maritime Targets | Detection Results of Visible Images | Detection Results of Infrared Images | Detection Results of Fusion Images |
|---|---|---|---|
| Pleasure boats | 0.847 | 0.821 | 0.895 |
| Personnel | 0.315 | 0.282 | 0.725 |
| Test boats | 0.553 | 0.570 | 0.738 |
| Buoys | 0.438 | 0.330 | 0.593 |
| Sailboats | 0.551 | 0.546 | 0.784 |
| Small boats | 0.577 | 0.620 | 0.826 |
| Average | 0.547 | 0.528 | 0.760 |

| Types of Maritime Targets | Visible Images P | Visible Images R | Infrared Images P | Infrared Images R | Fusion Images P | Fusion Images R |
|---|---|---|---|---|---|---|
| Pleasure boats | 0.646 | 0.881 | 0.704 | 0.775 | 0.792 | 1 |
| Personnel | 0.714 | 0.625 | 0.767 | 0.274 | 0.954 | 0.958 |
| Test boats | 0.471 | 0.796 | 0.783 | 0.667 | 1 | 0.799 |
| Buoys | 1 | 0.464 | 1 | 0 | 1 | 0.586 |
| Sailboats | 0.898 | 1 | 0.448 | 1 | 0.914 | 1 |
| Small boats | 0.744 | 0.667 | 0.529 | 0.567 | 0.892 | 0.667 |
| All | 0.717 | 0.8 | 0.679 | 0.551 | 0.876 | 0.903 |

| Types of Maritime Targets | Detection Results of Visible Images | Detection Results of Infrared Images | Detection Results of Fusion Images |
|---|---|---|---|
| Pleasure boats | 0.773 | 0.772 | 0.819 |
| Personnel | 0.493 | 0.457 | 0.652 |
| Test boats | 0.607 | 0.730 | 0.826 |
| Buoys | 0.622 | 0.539 | 0.732 |
| Sailboats | 0.624 | 0.772 | 0.757 |
| Small boats | 0.773 | 0.772 | 0.819 |
| Average | 0.493 | 0.457 | 0.652 |

| Types of Maritime Targets | Detection Results of Visible Images | Detection Results of Infrared Images | Detection Results of Fusion Images |
|---|---|---|---|
| Pleasure boats | 0.470 | 0.682 | 0.911 |
| Small boats | 0.901 | 0.909 | 0.932 |
| Sailboats | 0.235 | 0.080 | 0.669 |
| Personnel | 0.570 | 0.616 | 0.871 |
| Average | 0.470 | 0.682 | 0.911 |

| Types of Maritime Targets | Visible Images P | Visible Images R | Infrared Images P | Infrared Images R | Fusion Images P | Fusion Images R |
|---|---|---|---|---|---|---|
| Pleasure boats | 0.875 | 0.636 | 0.915 | 1 | 0.962 | 1 |
| Small boats | 0.596 | 1 | 0.952 | 0.974 | 0.712 | 1 |
| Sailboats | 0.375 | 1 | 0.690 | 0.889 | 0.796 | 1 |
| Personnel | 0.450 | 0.500 | 0.612 | 0.208 | 0.669 | 0.875 |
| All | 0.574 | 0.784 | 0.792 | 0.768 | 0.949 | 0.917 |

| Types of Maritime Targets | Detection Results of Visible Images | Detection Results of Infrared Images | Detection Results of Fusion Images |
|---|---|---|---|
| Pleasure boats | 0.716 | 0.776 | 0.778 |
| Small boats | 0.785 | 0.787 | 0.878 |
| Sailboats | 0.288 | 0.231 | 0.483 |
| Personnel | 0.648 | 0.642 | 0.746 |
| Average | 0.716 | 0.776 | 0.778 |

| Types of Maritime Targets | Detection Results of Visible Images | Detection Results of Infrared Images | Detection Results of Fusion Images |
|---|---|---|---|
| Pleasure boats | 0.869 | 0.847 | 0.950 |
| Small boats | 0.855 | 0.935 | 0.917 |
| Personnel | 0.273 | 0.573 | 0.768 |
| Average | 0.666 | 0.785 | 0.878 |

| Types of Maritime Targets | Visible Images P | Visible Images R | Infrared Images P | Infrared Images R | Fusion Images P | Fusion Images R |
|---|---|---|---|---|---|---|
| Pleasure boats | 0.839 | 0.98 | 0.884 | 0.971 | 0.968 | 0.994 |
| Small boats | 0.804 | 1 | 0.978 | 1 | 0.972 | 1 |
| Personnel | 0.722 | 0.579 | 0.934 | 0.793 | 0.941 | 0.891 |
| All | 0.790 | 0.908 | 0.916 | 0.947 | 0.942 | 0.976 |

| Types of Maritime Targets | Detection Results of Visible Images | Detection Results of Infrared Images | Detection Results of Fusion Images |
|---|---|---|---|
| Pleasure boats | 0.883 | 0.896 | 0.912 |
| Small boats | 0.809 | 0.874 | 0.878 |
| Personnel | 0.423 | 0.680 | 0.734 |
| Average | 0.705 | 0.816 | 0.841 |

| Types of Maritime Targets | Detection Results of Visible Images | Detection Results of Infrared Images | Detection Results of Fusion Images |
|---|---|---|---|
| Pleasure boats | 0.765 | 0.833 | 0.832 |
| Test boats | 0.820 | 0.882 | 0.900 |
| Average | 0.793 | 0.857 | 0.866 |

| Types of Maritime Targets | Visible Images P | Visible Images R | Infrared Images P | Infrared Images R | Fusion Images P | Fusion Images R |
|---|---|---|---|---|---|---|
| Pleasure boats | 0.782 | 0.477 | 0.457 | 0.933 | 0.932 | 0.986 |
| Test boats | 0.825 | 0.947 | 0.430 | 1 | 0.828 | 1 |
| All | 0.793 | 0.595 | 0.528 | 0.950 | 0.945 | 0.976 |

| Types of Maritime Targets | Detection Results of Visible Images | Detection Results of Infrared Images | Detection Results of Fusion Images |
|---|---|---|---|
| Pleasure boats | 0.817 | 0.827 | 0.859 |
| Test boats | 0.805 | 0.819 | 0.840 |
| Average | 0.811 | 0.823 | 0.850 |

| Model | P | R | mAP@0.5 | mAP@[0.5:0.95] |
|---|---|---|---|---|
| Visible object detection | 0.869 | 0.857 | 0.933 | 0.726 |
| Infrared object detection | 0.862 | 0.857 | 0.929 | 0.754 |
| Fusion object detection | 0.949 | 0.917 | 0.971 | 0.805 |

| Types of Maritime Targets | Visible Images P | Visible Images R | Infrared Images P | Infrared Images R | Fusion Images P | Fusion Images R |
|---|---|---|---|---|---|---|
| Pleasure boats | 0.933 | 0.89 | 0.930 | 0.953 | 0.974 | 0.957 |
| Personnel | 0.806 | 0.587 | 0.826 | 0.619 | 0.962 | 0.824 |
| Test boats | 0.885 | 0.921 | 0.927 | 0.764 | 0.977 | 0.847 |
| Buoys | 0.962 | 0.732 | 0.906 | 0.657 | 0.994 | 0.800 |
| Sailboats | 0.765 | 0.944 | 0.761 | 0.889 | 0.863 | 0.944 |
| Small boats | 0.883 | 0.945 | 0.918 | 0.946 | 0.973 | 0.964 |

| Types of Maritime Targets | Detection Results of Visible Images | Detection Results of Infrared Images | Detection Results of Fusion Images |
|---|---|---|---|
| Buoys | 0.770 | 0.732 | 0.777 |
| Pleasure boats | 0.829 | 0.857 | 0.873 |
| Sailboats | 0.773 | 0.796 | 0.815 |
| Personnel | 0.401 | 0.432 | 0.618 |
| Test boats | 0.738 | 0.718 | 0.800 |
| Small boats | 0.765 | 0.787 | 0.853 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Y.; Ma, X.; Wang, Q.; He, Y.; Xie, S. Research on Water Surface Object Detection Method Based on Image Fusion. J. Mar. Sci. Eng. 2025, 13, 1832. https://doi.org/10.3390/jmse13091832


Chicago/Turabian style: Chen, Yihong, Xiaoyi Ma, Qi Wang, Yunqian He, and Shuo Xie. 2025. "Research on Water Surface Object Detection Method Based on Image Fusion" Journal of Marine Science and Engineering 13, no. 9: 1832. https://doi.org/10.3390/jmse13091832
