Abstract

Despite the pivotal role of filtering and smoothing techniques in preprocessing ship maneuvering data for robust identification, the persistent difficulty of reconciling noise suppression with the preservation of dynamic fidelity has limited algorithmic progress in recent decades. We propose an online smoothing method built on the Expectation-Maximization (EM) framework that extracts high-fidelity dynamic features from raw maneuvering data, thereby improving the accuracy of downstream ship identification systems. The method addresses the heavy-tailed Student-t distributed noise and parameter uncertainty inherent in ship motion data and learns parameters robustly even when the initial ship motion system parameters deviate from real conditions. Through iterative data assimilation, the algorithm adaptively calibrates noise distribution parameters while preserving motion smoothness, achieving higher accuracy in velocity and heading estimation than conventional Rauch–Tung–Striebel (RTS) smoothers. By integrating parameter adaptation into the smoothing framework, the proposed method reduces motion prediction errors by 23.6% in irregular sea states, as validated using real ship motion data from autonomous navigation tests.

1. Introduction

Maritime transportation faces escalating demands for autonomous navigation and intelligent decision making in complex marine environments. Ship maneuvering systems, which are critical for collision avoidance, route optimization, and energy efficiency, heavily rely on precise state estimation amidst severe nonlinearity (e.g., hydrodynamic forces), environmental disturbances (e.g., wind/wave-induced motion), and sensor noise [1]. Traditional filtering approaches, such as the RTS smoother [2], often fail under heavy-tailed noise conditions prevalent in marine sensing (e.g., GPS jamming or sensor saturation), leading to biased trajectory reconstructions and compromised control performance [3]. For instance, inaccurate state estimation in dynamic positioning systems (DPSs) can increase fuel consumption by up to 15% and elevate accident risks in confined waterways [4]. Recent studies highlight that Student-t noise models outperform Gaussian frameworks in handling such outliers, yet real-time adaptive calibration of noise parameters remains challenging due to computational burdens and sensitivity to initialization.

To address these gaps, this work introduces an online smoothing algorithm that integrates expectation maximization (EM) for adaptive noise learning. Ship motion control is inherently challenging because sensor noise degrades the accuracy of state estimation and, in turn, control performance [5]. This study focuses on the critical role of smoothed data in ship motion identification and control, where “smoothed” refers to a noise-filtered dataset derived from high-frequency measurements. By applying filtering techniques (e.g., the extended Kalman filter [6], sliding-window least squares [7], or deep learning-based denoising [8]), a temporally consistent and physically meaningful dataset is obtained that mitigates the effects of measurement noise and transient errors. Reducing errors caused by measurement noise allows more accurate vessel control and helps prevent accidents by enabling prompt responses to sudden situations [9]. Smoothed data also improve navigational efficiency by minimizing unnecessary acceleration and deceleration, which leads to fuel savings and shorter travel times.

Most Kalman smoothers in the maritime field focus on estimating the trajectory or ship motion [10], whereas this paper uses a smoother for the online collection of ship maneuvering data. When data are missing or corrupted by noise during collection, filtering alone is not enough to ensure an accurate identification process. The variational Bayesian method proposed in [11] addresses real-time, low-frequency motion-state estimation for DP ships under time-varying environmental disturbances by integrating variational Bayesian inference with multiple fading factors. Although it overcomes the limitations of conventional smoothing algorithms in online noise adaptation and historical data re-optimization, its computational efficiency remains inferior to that of our RTS-based post-processing approach. Ma et al. [12] focused only on measuring ship heave using traditional high-pass filters, whereas our paper addresses a wider range of application scenarios. Smoothing, as an inference problem, estimates each state from the entire dataset, whereas non-smoothing methods perform only a forward evaluation [4]. To identify ship motion parameters and the wave peak frequency, Liu et al. [13] developed a filtering-based stochastic gradient algorithm that combines filtering techniques with an auxiliary identification model; however, it achieved limited effectiveness in practical marine environments dominated by non-stationary noise. The authors of [14] proposed an online motion smoothing method for parameter estimation that preprocesses measurement data with EKF + RTS iterations and produces an initial estimate from semi-empirical formulas and inverse dynamic regression. Conventional filtering methods may produce sudden jumps when the noise falls outside their assumed range [3], whereas smoothing methods can be used to reconstruct the signal or trial data.

From an inferential perspective, data smoothing is a probabilistic inference problem that requires predictive modeling of latent state sequences [15]. This contrasts with non-smoothing methods, which perform forward evaluation without uncertainty quantification [16]. Notably, smoothed estimates obtained via the extended Kalman filter (EKF) exhibit statistically significant accuracy improvements over conventionally filtered outputs (p < 0.05). This advantage arises from the optimal integration of a posteriori observational data through recursive Bayesian inference [17]. Online maneuvering data additionally require state estimates over the entire recorded time span [18]. The EKF is inherently recursive and operates online, dynamically updating state estimates as new measurements arrive. By leveraging historical measurements, the EKF predicts near-future states [19], making it suitable for real-time applications such as autopilot systems or unmanned surface vehicles (USVs). However, a filter conditions each estimate only on past and current measurements. This limitation is resolved when parameter estimation is conducted on complete time-series data, where both historical and future measurements are available. Such bidirectional temporal information improves estimation performance through backward smoothing. Specifically, an EKF can be augmented with an RTS smoother to incorporate future time steps during state estimation [20]. This hybrid framework combines the EKF’s forward propagation with the RTS backward recursion to achieve optimal smoothing for the linearized model [21]. Experiments on synthetic datasets with Student-t distributed noise confirm that the method significantly enhances state identifiability, particularly for marine vessel motion parameters, where traditional filters underperform.

In response to the degradation of state estimation accuracy in marine vessel motion control caused by non-Gaussian sensor noise, environmental disturbances, and hydrodynamic uncertainties, our paper introduces a robust Bayesian smoothing framework integrating the expectation-maximization algorithm for adaptive parameter learning and multi-source sensor data fusion, achieving enhanced motion reconstruction fidelity through iterative temporal–spatial noise suppression and dynamic model calibration. The contributions of this paper are outlined as follows:

- We propose an online data smoothing algorithm tailored for ship maneuvering test data, overcoming the limitations of traditional offline batch processing methods.

- We pioneer an adaptive noise-statistic inference framework through an alternating optimization of distribution hyperparameters Q and R, enabling dynamic Bayesian updating of noise characteristics while preserving the fidelity of system dynamics in modeling maneuvering data.

- We propose a novel framework integrating ship maneuvering system priors with adaptive noise modeling, effectively addressing Student-t noise challenges while outperforming traditional Gaussian smoothing methods in terms of dynamic fidelity.

2. Problem Formulation

In general, a (typically nonlinear) motion equation can be constructed to describe the motion of a ship, while the observation equation depends on the sensors used in the measurement, commonly GPS, long-range radar, etc.

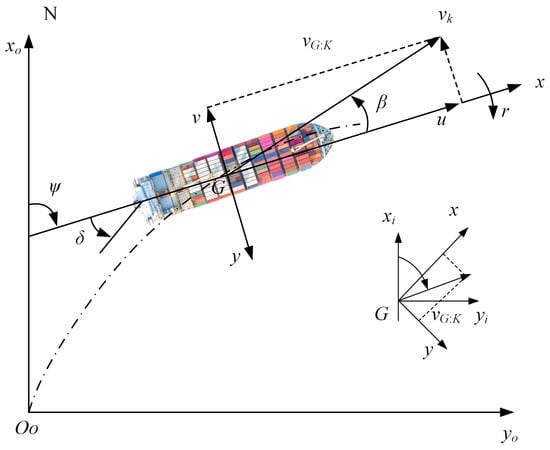

Figure 1 presents the transformation between the velocity vectors in the space-fixed coordinate system and those in the body-fixed coordinate system. To move between these two coordinate systems, we established a standardized transformation protocol, which uses a series of equations relating the geodetic coordinates to the propagation coordinates while accounting for factors such as the Earth’s curvature and local topography. In Figure 1, the axes represent the respective coordinate systems, and the dashed line indicates the vessel’s trajectory along the specified heading.

Figure 1.

Coordinate system of a ship.

The ship’s kinematics are typically represented by a discrete-time, nonlinear state–space model to enable real-time processing:

$$x_k = f(x_{k-1}) + w_k, \qquad z_k = h(x_k) + v_k \tag{1}$$

where the state vector $x_k$ indicates the real-time status of the ship at time step k. Its six elements are the lateral velocity, longitudinal velocity, yaw angle, surge velocity, sway velocity, and yaw angular velocity of the ship at time step k in the corresponding coordinate system.

Sensor noise is often influenced by various factors, including environmental interference, equipment aging, and physical vibrations. These factors can produce noise distributions with heavy tails, that is, a higher probability of extreme values (anomalous noise). The Student-t distribution captures this phenomenon effectively because its tails are heavier than those of the Gaussian distribution, making it suitable for modeling noise that does not follow a normal distribution [22]. In contrast, the changes in the ship’s state caused by its acceleration and by ocean currents are represented by the process noise $w_k$, which, to keep the model tractable, is assumed to be zero-mean Gaussian.

Here, $z_k$ denotes the quantities observed by the sensors (DGPS) at time step k, including coordinates and velocities, all expressed in SI units. Because sensor readings are frequently noisy, the observation noise is modeled as a Student-t random variable; the heavy tails of this distribution make the model robust to outliers. Each element of the observation noise independently follows a Student-t distribution, parameterized by its precision and its degrees of freedom (DOF).

In model (1), $x_k$ and $z_k$ denote the state and observation vectors at time step k, respectively, and $f(\cdot)$ and $h(\cdot)$ are the nonlinear state-transition and observation functions, respectively. The process noise $w_k$ is zero-mean Gaussian with covariance matrix Q, that is, $w_k \sim \mathcal{N}(0, Q)$. The observation noise $v_k$ follows a Student-t distribution in which each element is independently distributed, with its own precision (distribution accuracy) and degree of freedom (DOF). For brevity, the states and observations over all time steps are stacked into the state matrix and the observation matrix, respectively. The observation distribution given the state should then be taken into consideration:

The elements of the state-transition model are functions of the system’s dynamic parameters, not of instantaneous measurements:

Based on the kinematic relationships in the above formula, its Jacobian matrix can be derived as follows:

The individual entries are expressed as follows:
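A kinematic Jacobian is easy to get wrong by hand, so a numerical cross-check is useful. The sketch below assumes, purely for illustration, a forward-Euler 3-DOF kinematic model with state [x, y, ψ, u, v, r] (Earth-fixed positions, heading, surge, sway, yaw rate), a 0.1 s step, and velocities held constant over one step; `f_kin`, `jac_analytic`, and `jac_numeric` are hypothetical names, and this model is not necessarily the paper's exact transition function. It compares the analytical Jacobian with a central finite-difference estimate.

```python
import numpy as np

def f_kin(s, T=0.1):
    """Hypothetical forward-Euler 3-DOF kinematics; velocities held constant over one step."""
    x, y, psi, u, v, r = s
    return np.array([
        x + T * (u * np.cos(psi) - v * np.sin(psi)),
        y + T * (u * np.sin(psi) + v * np.cos(psi)),
        psi + T * r,
        u,
        v,
        r,
    ])

def jac_analytic(s, T=0.1):
    """Analytical Jacobian of f_kin with respect to the state."""
    _, _, psi, u, v, _ = s
    c, sn = np.cos(psi), np.sin(psi)
    F = np.eye(6)
    F[0, 2] = T * (-u * sn - v * c)   # d x_next / d psi
    F[0, 3] = T * c                   # d x_next / d u
    F[0, 4] = -T * sn                 # d x_next / d v
    F[1, 2] = T * (u * c - v * sn)    # d y_next / d psi
    F[1, 3] = T * sn
    F[1, 4] = T * c
    F[2, 5] = T                       # d psi_next / d r
    return F

def jac_numeric(fun, s, eps=1e-6):
    """Central finite-difference Jacobian, one column per state component."""
    n = s.size
    J = np.zeros((n, n))
    for i in range(n):
        d = np.zeros(n)
        d[i] = eps
        J[:, i] = (fun(s + d) - fun(s - d)) / (2 * eps)
    return J

s0 = np.array([10.0, -5.0, 0.3, 7.5, 0.4, 0.02])
F_a = jac_analytic(s0)
F_n = jac_numeric(f_kin, s0)
print(np.max(np.abs(F_a - F_n)))  # small: finite-difference truncation and round-off only
```

The same finite-difference helper can be pointed at whatever transition function is actually used, which is a cheap guard against sign errors in hand-derived entries.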

By introducing into the model a weight vector that is independent of the state, the prior distribution can be expressed as follows:

In the equation above, the weight vectors form a diagonal weight matrix whose diagonal elements are hidden variables following a Gamma distribution. Given the state and the weight matrix, the observation vector follows a Gaussian distribution whose covariance matrix is determined jointly by the weight matrix and the diagonal noise matrix. The shape and rate hyperparameters of each Gamma distribution are both equal to half of the corresponding DOF. Hence, the hidden variables consist of the system state and the weight vector. Given the hidden variables, the observation follows a Gaussian distribution whose covariance varies with time, which allows the model to suppress observation outliers.
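The Gamma-weighted construction above is the standard scale-mixture representation of the Student-t distribution. As a minimal sketch, assuming each weight follows Gamma(ν/2, rate ν/2) and the noise given its weight is Gaussian with variance r/λ (r is treated here as a variance-type scale; ν = 3 and r = 0.25 are illustrative values, not the paper's settings), sampling shows how the hidden weights produce heavier tails than a Gaussian of the same scale.

```python
import numpy as np

rng = np.random.default_rng(0)
nu, r, n = 3.0, 0.25, 200_000  # illustrative DOF, scale (variance) and sample size

# Hidden weights: Gamma(shape=nu/2, rate=nu/2); NumPy parameterizes by scale = 1/rate.
lam = rng.gamma(shape=nu / 2, scale=2 / nu, size=n)
# Conditional on its weight, each noise sample is Gaussian with variance r / lambda.
v_t = rng.normal(0.0, np.sqrt(r / lam))
# Reference Gaussian noise with the same scale parameter r.
v_g = rng.normal(0.0, np.sqrt(r), size=n)

thr = 4 * np.sqrt(r)  # "extreme value" threshold: four scale units
tail_t = np.mean(np.abs(v_t) > thr)
tail_g = np.mean(np.abs(v_g) > thr)
print(f"mean weight {lam.mean():.3f}, P(|v|>thr): Student-t {tail_t:.4f}, Gaussian {tail_g:.6f}")
```

Small weights inflate the conditional variance r/λ, which is exactly how the model assigns large residuals to occasional outliers instead of to the state.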

Given the system state at the previous time step, the state-transition equation forecasts the state at the current time step. Because this prediction tends to deviate from the actual value, sensor measurements of the system are needed; the predicted value and the measurement are then combined to estimate the state at the current time step.

The probability density function of the Student-t distribution tends toward the Gaussian distribution as its DOF approaches infinity. Therefore, the DOF can be selected based on the probability of outliers occurring in the sensor measurements, or it can be estimated with the parameter learning method described in Section 3.3. Similarly, the Q and R parameters are difficult to determine manually in practical applications. Therefore, reasonable initial values can be estimated first, and the approach described in Section 3.3 can then be used to learn them while smoothing. With the EM approach, the estimation of the hidden variables and the learning of the parameters proceed concurrently.
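To illustrate this limiting behavior, the following sketch evaluates the Student-t and standard Gaussian densities on a grid and reports the largest gap for increasing DOF; the unit scale and the grid [−6, 6] are arbitrary illustrative choices.

```python
import math

def student_t_pdf(x, nu, scale=1.0):
    """Zero-mean Student-t density with nu DOF and scale parameter `scale`."""
    z = x / scale
    log_c = (math.lgamma((nu + 1) / 2) - math.lgamma(nu / 2)
             - 0.5 * math.log(nu * math.pi) - math.log(scale))
    return math.exp(log_c - (nu + 1) / 2 * math.log1p(z * z / nu))

def gauss_pdf(x, scale=1.0):
    """Zero-mean Gaussian density with standard deviation `scale`."""
    return math.exp(-0.5 * (x / scale) ** 2) / (scale * math.sqrt(2 * math.pi))

grid = [i * 0.1 for i in range(-60, 61)]  # x in [-6, 6]
gaps = {}
for nu in (1, 3, 10, 100, 1000):
    gaps[nu] = max(abs(student_t_pdf(x, nu) - gauss_pdf(x)) for x in grid)
    print(f"nu={nu:5d}  max |t - N| = {gaps[nu]:.2e}")
```

The gap shrinks roughly in proportion to 1/ν, so a large learned DOF signals that the sensor noise is close to Gaussian.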

3. Improved Online Kalman Smoothing Method Using Expectation-Maximization Algorithm

The proposed model is optimized using the expectation-maximization (EM) algorithm. In the E step, the posterior distribution of the hidden variables (the system state and the weight vector) is derived, while the M step maximizes the expected complete-data log-likelihood to estimate the system parameters. The E step and M step alternate until convergence, completing the estimation of the hidden variables and the learning of the system parameters.

Assuming that the two hidden variables are independent of each other, and with a superscript denoting the current iteration of the algorithm, the posterior distribution of the complete data is as follows:

Applying the Markov property of the system equation [23], the prior distribution of states can be expanded as follows:

By exploiting the independence of the weight matrices across time steps, as well as the conditional independence of their components and of the observations at different time steps, the joint distribution can be expressed as follows:

The marginal likelihood of the observations does not depend on the hidden variables; it acts only as a normalization constant and can therefore be ignored. The result is expressed as follows:

Given the distributional forms of the two hidden variables, directly computing their joint posterior distribution is difficult. Instead, the posterior distributions of the state and of the weight vector are computed alternately, which simplifies the estimation process without compromising the accuracy of the estimation.

Because the Gamma prior on the weight vector is conjugate to the Gaussian likelihood [24], the posterior distribution of the weight vector also follows a Gamma distribution, on which the derivation in Section 3.2 is based. The expectations of the weight vector are then obtained from this posterior form.

Given the weight vector, the posterior distribution of the system state at a given time step is proportional to a product of Gaussian densities. For linear models, such products and their linear transformations remain Gaussian. Since model (1) is nonlinear, it is approximated by a linear model through a first-order Taylor expansion. Consequently, the posterior distribution of the state can still be regarded as Gaussian, which serves as the foundation for the derivation in Section 3.1.

To facilitate the derivation of the smoothing algorithm, two lemmas are introduced here.

Lemma 1

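([25]). If the random variables $x$ and $y$ have the following Gaussian distributions:

$$x \sim \mathcal{N}(m, P), \qquad y \mid x \sim \mathcal{N}(Hx + u, S),$$

then the joint distribution of variables x and y and the marginal distribution of y are expressed as follows:

$$\begin{pmatrix} x \\ y \end{pmatrix} \sim \mathcal{N}\left( \begin{pmatrix} m \\ Hm + u \end{pmatrix}, \begin{pmatrix} P & PH^{\top} \\ HP & HPH^{\top} + S \end{pmatrix} \right), \qquad y \sim \mathcal{N}\left(Hm + u,\ HPH^{\top} + S\right).$$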

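Lemma 2

If the random variables $x$ and $y$ have the following joint Gaussian distribution:

$$\begin{pmatrix} x \\ y \end{pmatrix} \sim \mathcal{N}\left( \begin{pmatrix} a \\ b \end{pmatrix}, \begin{pmatrix} A & C \\ C^{\top} & B \end{pmatrix} \right),$$

then the marginal distributions and conditional distributions of variables x and y are expressed as follows:

$$x \sim \mathcal{N}(a, A), \qquad y \sim \mathcal{N}(b, B),$$

$$x \mid y \sim \mathcal{N}\left(a + CB^{-1}(y - b),\ A - CB^{-1}C^{\top}\right), \qquad y \mid x \sim \mathcal{N}\left(b + C^{\top}A^{-1}(x - a),\ B - C^{\top}A^{-1}C\right).$$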
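Lemmas 1 and 2 can be checked numerically. The sketch below assumes the standard forms of the two lemmas, builds the joint distribution with Lemma 1, conditions on an observed y with Lemma 2, and compares the result with the information-form (Bayes' rule) posterior; all matrices are random illustrative values.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p = 3, 2
m = rng.normal(size=n)
A = rng.normal(size=(n, n))
P = A @ A.T + n * np.eye(n)                 # prior covariance of x
H = rng.normal(size=(p, n))
u = rng.normal(size=p)
B = rng.normal(size=(p, p))
S = B @ B.T + np.eye(p)                     # covariance of y given x

# Lemma 1: joint of (x, y) and marginal of y.
mu_joint = np.concatenate([m, H @ m + u])
C = P @ H.T                                 # cross-covariance Cov[x, y]
Sy = H @ P @ H.T + S                        # marginal covariance of y
Sig_joint = np.block([[P, C], [C.T, Sy]])

# Lemma 2: condition x on an observed y.
y_obs = rng.normal(size=p)
K = C @ np.linalg.inv(Sy)                   # plays the role of the Kalman gain
mean_cond = m + K @ (y_obs - (H @ m + u))
cov_cond = P - K @ C.T

# Cross-check with the information (Bayes' rule) form of the same posterior.
Si = np.linalg.inv(S)
cov_info = np.linalg.inv(np.linalg.inv(P) + H.T @ Si @ H)
mean_info = cov_info @ (np.linalg.solve(P, m) + H.T @ Si @ (y_obs - u))
print(np.allclose(mean_cond, mean_info), np.allclose(cov_cond, cov_info))
```

The agreement of the two forms is what allows the forward and backward recursions to be written as repeated applications of these lemmas.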

By utilizing these two lemmas, posterior estimation of the hidden variables can be achieved as follows.

3.1. Posterior Distribution of State

3.1.1. Forward Recursion

First, the forward recursion is derived. Given the observations up to time step k − 1 and the weight vectors, the joint distribution of the states at time steps k − 1 and k is expressed as follows:

According to the model in Section 2, the state at time step k, given the state at time step k − 1, follows a Gaussian distribution. Since the filtering posterior at time step k − 1 is also Gaussian, it is characterized by the mean and covariance matrix obtained at the previous step.

Expanding the state-transition function f in a first-order Taylor series about the filtered mean at time step k − 1 yields the Jacobian matrix:

Following these steps, the above joint distribution can be further expressed as follows:

Again, applying Lemma 1, the following can be obtained [26]:

where the mean value and the covariance matrix can be expressed, respectively, as follows:

Subsequently, the one-step prediction distribution [20] can be obtained by Lemma 2:

For later reference, the mean and covariance matrix of the one-step prediction are referred to as the predicted mean and the predicted covariance, respectively.

Next, given the observations up to time step k − 1 and the weight vector, the joint distribution of the state and the observation at time step k is expressed as follows:

According to the model described in Section 2, given the state and the weight vector, the observation follows a Gaussian distribution. The one-step predicted distribution of the state is Gaussian, with the predicted mean and covariance described above.

Expanding the observation function h in a first-order Taylor series about the predicted mean yields the Jacobian matrix:

Therefore, combined with Lemma 1, the joint distribution of variables and can be further expressed as follows:

Then, using Lemma 2, the filtering posterior of the state at time step k is found to be Gaussian, with mean and covariance matrix expressed as follows:

This completes the forward recursion of RTS smoothing, which comprises one-step prediction and observation correction. One-step prediction yields the predictive distribution of the state at time step k given the observations up to time step k − 1, and observation correction yields the filtering distribution given the observations up to time step k.
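A single forward step with Student-t weights can be sketched as follows. This is an illustration under assumptions, not the paper's exact recursion: `robust_forward_step` is a hypothetical helper, r_m is treated as the per-channel noise variance, each expected weight is computed from the predicted residual (one possible choice; the paper's weights come from the posterior of Section 3.2), and the example uses a linear constant-velocity model with position-only measurements.

```python
import numpy as np

def robust_forward_step(m, P, z, f, F_jac, h, H_jac, Q, r_diag, nu):
    """One predict/correct step with Student-t weights (hypothetical helper).

    r_diag: per-channel noise variance, nu: per-channel DOF.
    """
    # One-step prediction (first-order linearization of f about the filtered mean).
    F = F_jac(m)
    m_pred = f(m)
    P_pred = F @ P @ F.T + Q
    # Expected weights from the predicted residual (posterior mean of a Gamma weight).
    H = H_jac(m_pred)
    e = z - h(m_pred)
    e2 = e**2 + np.diag(H @ P_pred @ H.T)       # E[(z_m - h_m(x))^2] under the prediction
    w = (nu + 1.0) / (nu + e2 / r_diag)         # small weight -> channel treated as outlier
    R_eff = np.diag(r_diag / w)                 # weighted observation covariance
    # Observation correction.
    S = H @ P_pred @ H.T + R_eff
    K = P_pred @ H.T @ np.linalg.inv(S)
    m_new = m_pred + K @ e
    P_new = (np.eye(m.size) - K @ H) @ P_pred
    return m_new, P_new, w

T = 0.1
Fm = np.array([[1.0, T], [0.0, 1.0]])           # hypothetical constant-velocity model
f = lambda x: Fm @ x
F_jac = lambda x: Fm
h = lambda x: x[:1]                             # position-only measurement
H_jac = lambda x: np.array([[1.0, 0.0]])
m0, P0, Q = np.array([0.0, 0.5]), 0.1 * np.eye(2), 0.01 * np.eye(2)
r, nu = np.array([0.04]), np.array([3.0])

m_ok, _, w_ok = robust_forward_step(m0, P0, np.array([0.06]), f, F_jac, h, H_jac, Q, r, nu)
m_out, _, w_out = robust_forward_step(m0, P0, np.array([5.0]), f, F_jac, h, H_jac, Q, r, nu)
# A very large DOF makes the weights close to 1, i.e. an ordinary Gaussian EKF update.
m_gau, _, _ = robust_forward_step(m0, P0, np.array([5.0]), f, F_jac, h, H_jac, Q, r, np.array([1e9]))
print(w_ok, w_out, m_out[0], m_gau[0])
```

With the outlier measurement, the weight collapses and the robust update barely moves away from the prediction, whereas the Gaussian update is pulled most of the way toward the outlier.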

3.1.2. Backward Recursion

For the backward recursion, first consider the joint distribution of the states at time steps k and k + 1, given the observations up to time step k and the weight vectors:

Using the state-transition model and the Gaussian filtering distribution at time step k, with the mean and covariance matrix derived in Section 3.1.1, the above joint distribution can be further expressed as follows:

where the Jacobian matrix of the function f is evaluated at the filtered mean at time step k. Then, applying Lemma 2, the result is expressed as follows:

where the mean value and the covariance matrix can be expressed, respectively, as follows:

Finally, given all observations and the weight vectors, the joint distribution of the states at time steps k and k + 1 is expressed as follows:

The first factor on the right-hand side is the conditional distribution derived in the previous step. The second factor is the posterior distribution of the system state at time step k + 1 given all observations and the weight vectors, that is, the smoothing distribution computed by the robust RTS (RRTS) algorithm. As mentioned in Section 3.1.1, this distribution remains Gaussian. Denoting its mean and covariance matrix by the smoothed mean and smoothed covariance at time step k + 1, the above joint distribution can be further expressed as follows:

Let

Then, is settled.

Next, the following can be inferred by Lemma 2:

This completes the backward recursion of the system state, which comprises backward prediction and correction. The calculation depends on the weight vector, whose posterior estimation is derived next.
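The backward recursion can be sketched as a standalone function that consumes the filtered moments from a forward pass. In this illustration `rts_backward` is a hypothetical name, and the forward pass is a plain linear Kalman filter on an assumed constant-velocity model, used only to produce consistent filtered moments; the example checks the known property that smoothing never increases the covariance trace.

```python
import numpy as np

def rts_backward(m_f, P_f, f, F_jac, Q):
    """RTS backward recursion on filtered moments m_f[k], P_f[k] (k = 0..K)."""
    K = len(m_f) - 1
    m_s, P_s = [None] * (K + 1), [None] * (K + 1)
    m_s[K], P_s[K] = m_f[K], P_f[K]            # start from the last filtered estimate
    for k in range(K - 1, -1, -1):
        F = F_jac(m_f[k])                       # linearize f about the filtered mean at k
        m_pred = f(m_f[k])
        P_pred = F @ P_f[k] @ F.T + Q
        G = P_f[k] @ F.T @ np.linalg.inv(P_pred)   # smoothing gain
        m_s[k] = m_f[k] + G @ (m_s[k + 1] - m_pred)
        P_s[k] = P_f[k] + G @ (P_s[k + 1] - P_pred) @ G.T
    return m_s, P_s

# Illustrative forward pass: linear constant-velocity model, position measured.
T = 0.1
Fm = np.array([[1.0, T], [0.0, 1.0]])
f = lambda x: Fm @ x
F_jac = lambda x: Fm
Hm = np.array([[1.0, 0.0]])
Q, R = 1e-3 * np.eye(2), np.array([[0.05]])
rng = np.random.default_rng(2)
x = np.array([0.0, 1.0])
m, P = np.zeros(2), np.eye(2)
m_f, P_f = [m], [P]
for _ in range(50):
    x = Fm @ x + rng.multivariate_normal(np.zeros(2), Q)
    z = Hm @ x + rng.normal(0.0, np.sqrt(R[0, 0]), size=1)
    m_pred, P_pred = Fm @ m, Fm @ P @ Fm.T + Q
    Kg = P_pred @ Hm.T @ np.linalg.inv(Hm @ P_pred @ Hm.T + R)
    m = m_pred + Kg @ (z - Hm @ m_pred)
    P = (np.eye(2) - Kg @ Hm) @ P_pred
    m_f.append(m)
    P_f.append(P)

m_s, P_s = rts_backward(m_f, P_f, f, F_jac, Q)
print(np.trace(P_f[10]), np.trace(P_s[10]))
```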

3.2. Posterior Distribution of Weight Vector

To derive the posterior distribution of the weight vector, all terms of the posterior distribution that involve the weight vector must be considered:

Expressing the state-dependent terms through their posterior expectations, the above function can be rewritten as follows:

Clearly, the posterior distribution of each weight still follows a Gamma distribution. Thus, its posterior distribution has the following form:

where the expectation of the squared observation residual is taken with respect to the posterior distribution of the state, and the m-th element of the observation vector is used. The state posterior in this expectation depends on the stage of the algorithm: during the forward recursion, the filtering distribution is used, whereas during the backward recursion, the smoothing distribution is used. In addition, the expected logarithm of each weight under its posterior distribution, which is needed to learn the DOF parameter, can be calculated as follows:

where ψ(·) is the digamma function.
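Assuming the standard conjugate update for a Gamma(ν/2, rate ν/2) prior and a Gaussian likelihood with variance r/λ, the posterior of each weight has shape (ν + 1)/2 and rate (ν + e²/r)/2, where e² is the expected squared residual. The sketch below computes E[λ] and E[log λ]; because the Python standard library has no digamma function, a short recurrence-plus-asymptotic-series implementation is included (accurate to roughly 1e-10 for positive arguments). The exact expressions in the paper may differ in notation.

```python
import math

def digamma(x):
    """Digamma function for x > 0 via recurrence plus asymptotic series."""
    result = 0.0
    while x < 10.0:                 # psi(x) = psi(x + 1) - 1/x
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    result += (math.log(x) - 0.5 / x
               - inv2 * (1.0 / 12 - inv2 * (1.0 / 120 - inv2 / 252)))
    return result

def weight_posterior(nu, r, e2):
    """Gamma posterior of one weight: shape a, rate b, E[lambda], E[log lambda].

    nu: DOF, r: noise variance of this channel,
    e2: E[(z_m - h_m(x))^2] under the current state posterior.
    """
    a = 0.5 * (nu + 1.0)
    b = 0.5 * (nu + e2 / r)
    return a, b, a / b, digamma(a) - math.log(b)

nu, r = 3.0, 0.04
_, _, w_ok, elog_ok = weight_posterior(nu, r, e2=0.04)   # residual of one noise std
_, _, w_out, _ = weight_posterior(nu, r, e2=4.0)         # residual of ten noise stds
print(round(w_ok, 4), round(w_out, 4))
```

A residual of ten noise standard deviations drives the expected weight far below one, which inflates that observation's effective variance in the next smoothing pass.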

Sections 3.1 and 3.2 formalize the E-step computations of the iterative EM procedure, which form the computational core for parameter re-estimation and uncertainty quantification in ship maneuvering data analysis. Using the available observation data, the E step infers the posterior distributions of the two hidden variables (the state and the weight vector). Their expected values under the old parameter set are then used to learn the new parameter set in the M step.

3.3. Bayesian Hyperparameter Optimization

The parameters in the EM algorithm are learned by maximizing the expected complete-data log-likelihood, that is,

where the old parameter set denotes the parameter values before this update, that is, the values used in the E step.

This function serves as the cost function and can be calculated as follows:

To learn the parameter, the related terms in the cost function need to be considered, which can be expressed as follows:

Here, the expectation is taken with respect to the posterior distribution computed in the E step, and all terms independent of the parameter being estimated are collected into a constant. Taking the partial derivative of the above expression with respect to this parameter and setting it to zero yields the following:

Similarly, to learn the next parameter, all related terms in the cost function need to be considered, expressed as follows:

Taking the partial derivative of the above expression with respect to this parameter and setting it to zero yields the following:

To learn the parameter Q, all related terms in the cost function need to be considered, expressed as follows:

Taking the partial derivative of the above expression with respect to Q and setting it to zero yields the following:

where the required expected values are calculated as described in Section 3.5, in conjunction with the first-order Taylor expansion of the state-transition function f, which can be expressed as follows:

To learn the parameter matrix R, each of its diagonal elements must be estimated. All related terms in the cost function can be expressed as follows:

where the m-th element of the observation residual is used. Taking the partial derivative of the above expression with respect to each diagonal element and setting it to zero yields the following:

Then, the parameter matrix R can be expressed as follows:

To learn the DOF parameter vector, each of its elements must be calculated. All related terms in the cost function can be represented as follows:

where Γ(·) is the gamma function. Taking the partial derivative of the above expression with respect to each DOF element and setting it to zero yields the following:

where ψ(·) is the digamma function. Because this equation has no closed-form solution, it is solved numerically for each element, which yields the DOF parameter vector.
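Under the same Gamma(ν/2, ν/2) assumption, the stationarity condition for each DOF element typically takes the form log(ν/2) + 1 − ψ(ν/2) + c = 0, where c is the time average of E[log λ] − E[λ]. The left-hand side decreases monotonically in ν, so bisection on a logarithmic scale is a simple solver. `update_dof` and its bounds are illustrative choices, and clamping at the upper bound corresponds to nearly Gaussian noise.

```python
import math

def digamma(x):
    """Digamma function for x > 0 via recurrence plus asymptotic series."""
    result = 0.0
    while x < 10.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    return result + (math.log(x) - 0.5 / x
                     - inv2 * (1.0 / 12 - inv2 * (1.0 / 120 - inv2 / 252)))

def update_dof(c, lo=1e-2, hi=1e3, iters=100):
    """Solve log(nu/2) + 1 - psi(nu/2) + c = 0 for nu by log-scale bisection.

    c: time average of E[log lambda] - E[lambda] from the E step (c <= -1).
    """
    phi = lambda nu: math.log(nu / 2) + 1.0 - digamma(nu / 2) + c  # decreasing in nu
    if phi(hi) >= 0:        # residuals look Gaussian: clamp at the upper bound
        return hi
    if phi(lo) <= 0:
        return lo
    for _ in range(iters):
        mid = math.sqrt(lo * hi)
        if phi(mid) > 0:    # root lies above mid
            lo = mid
        else:
            hi = mid
    return math.sqrt(lo * hi)

# Consistency check: construct c from a known DOF and recover it.
nu_true = 5.0
c = -1.0 - (math.log(nu_true / 2) - digamma(nu_true / 2))
nu_hat = update_dof(c)
print(nu_hat)
```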

3.4. Algorithmic Processes

The three sections above describe how the EM algorithm solves the model; the complete EM framework is as follows. Given the joint distribution of the hidden variable x and the observation variable z, parameterized by the parameter set, EM maximizes the likelihood function by alternately selecting the posterior distribution of the hidden variables and the parameter set. For the model in this paper, the steps of the algorithm are as follows:

- Select the initial parameter set.

- E step: The filtering posterior of the hidden variables is calculated by the forward recursion of Equations (34) and (35). Then, the smoothing posterior is calculated by the backward recursion of Equations (44) and (45). Finally, the expected values of the two hidden variables (the state and the weight vector) are obtained.

- M step: The parameter set is updated by maximizing the cost function with the expected values from the E step, as derived in Section 3.3.

- Convergence check: Given the convergence criteria, the difference between the parameter sets of two successive iterations is compared against the threshold. If the criteria are satisfied, the algorithm ends; otherwise, it returns to step 2.

The process described above runs recursively from the initial time step to the current measurement time step K, estimating the system’s hidden variables and learning the parameter set.
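The complete loop can be illustrated on a scalar random-walk model with Student-t measurement noise. This sketch makes several simplifying assumptions: the model is linear, so no Taylor expansion is needed; the DOF ν is held fixed; only q (playing the role of Q) and r (playing the role of R) are learned; and the weights are refreshed from the smoothed moments after each RTS pass. `em_student_t_rts` and all numerical settings are hypothetical, and the initial q and r are deliberately inflated.

```python
import numpy as np

def em_student_t_rts(z, nu=3.0, q=1.0, r=1.0, m0=0.0, p0=10.0, iters=30):
    """Scalar EM sketch: robust RTS smoothing with learned q and r, fixed DOF nu."""
    K = len(z)                                  # states x_0..x_K, z[k-1] observes x_k
    w = np.ones(K)                              # E[lambda_k], start from Gaussian weights
    for _ in range(iters):
        # E step (state): forward filter with weighted noise variance r / w_k.
        m, p = np.empty(K + 1), np.empty(K + 1)
        mp, pp = np.empty(K + 1), np.empty(K + 1)
        m[0], p[0] = m0, p0
        for k in range(1, K + 1):
            mp[k], pp[k] = m[k - 1], p[k - 1] + q          # random-walk prediction
            g = pp[k] / (pp[k] + r / w[k - 1])             # Kalman gain
            m[k] = mp[k] + g * (z[k - 1] - mp[k])
            p[k] = (1.0 - g) * pp[k]
        # E step (state): RTS backward pass.
        ms, ps, G = m.copy(), p.copy(), np.zeros(K + 1)
        for k in range(K - 1, -1, -1):
            G[k] = p[k] / pp[k + 1]
            ms[k] = m[k] + G[k] * (ms[k + 1] - mp[k + 1])
            ps[k] = p[k] + G[k] ** 2 * (ps[k + 1] - pp[k + 1])
        # E step (weights): Gamma posterior mean given the smoothed moments.
        e2 = (z - ms[1:]) ** 2 + ps[1:]
        w = (nu + 1.0) / (nu + e2 / r)
        # M step: closed-form updates of q and r.
        cross = ps[1:] * G[:-1]                            # Cov[x_k, x_{k-1} | all data]
        q = np.mean((ms[1:] - ms[:-1]) ** 2 + ps[1:] + ps[:-1] - 2.0 * cross)
        r = np.mean(w * e2)
    return ms, ps, q, r, w

rng = np.random.default_rng(3)
K, q_true, r_true, nu_true = 400, 0.01, 0.04, 3.0
x = np.cumsum(rng.normal(0.0, np.sqrt(q_true), K + 1))    # true states x_0..x_K
lam = rng.gamma(nu_true / 2, 2 / nu_true, K)
z = x[1:] + rng.normal(0.0, np.sqrt(r_true / lam))        # Student-t measurement noise
ms, ps, q_hat, r_hat, w = em_student_t_rts(z, nu=nu_true)
rmse_raw = np.sqrt(np.mean((z - x[1:]) ** 2))
rmse_s = np.sqrt(np.mean((ms[1:] - x[1:]) ** 2))
print(f"q_hat={q_hat:.4f}, r_hat={r_hat:.4f}, RMSE raw={rmse_raw:.3f}, smoothed={rmse_s:.3f}")
```

Each pass of the outer loop is one E step (filter, smoother, weights) followed by one M step; a parameter-difference threshold, as in the convergence check above, could replace the fixed iteration count.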

3.5. Supplementary Instructions for Calculations of Expected Values

Two supplementary expected-value calculations are given here.

The mean and covariance of the posterior estimate of the system state at time step k satisfy the following:

Thus, the expected value is calculated as follows:

Then, the following term is calculated:

Considering that the expected value of the state noise is zero,

The mean and covariance of the posterior estimate of the system state at time step k − 1 satisfy the following:

Then,

Analogously, the following formulas can be derived and applied to learn the parameter R.

where H denotes the Jacobian matrix of the observation function h evaluated at the posterior state estimate, which can be represented as follows:

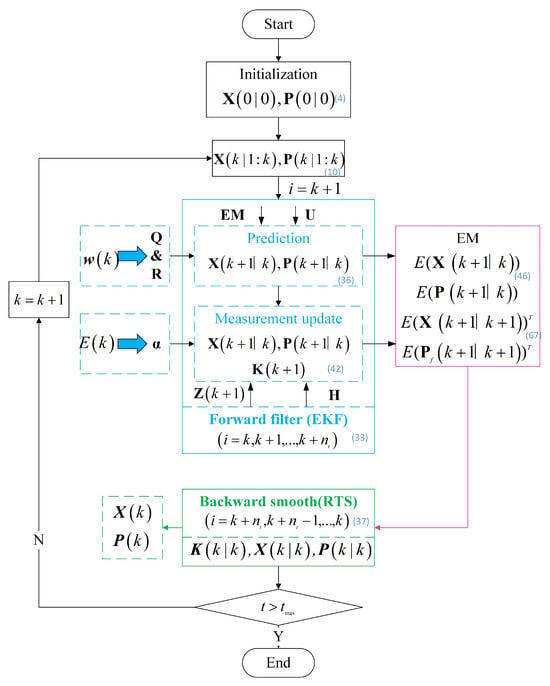

The computational sequence described above corresponds to the expectation (E) phase of the EM framework, while the subsequent parameter optimization corresponds to the maximization (M) phase. The two phases alternate until the model’s log-likelihood satisfies predefined convergence criteria, yielding self-consistent parameter estimates and, ultimately, robust extended Kalman smoothing. A schematic of the smoothing procedure is shown in Figure 2, and its pseudocode is given in Algorithm 1.

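| Step | Algorithm 1: Extended Kalman Filter with RTS Smoothing |
|---|---|
| Initialization | |
| 1 | $\hat{x}_{0 \mid 0} = \hat{x}_0$, $P_{0 \mid 0} = P_0$ |
| 2 | for $k = 1$ to $K$ |
| Prediction step | |
| 3 | $\hat{x}_{k \mid k-1} = f(\hat{x}_{k-1 \mid k-1})$ (state prediction) |
| 4 | $P_{k \mid k-1} = F_{k-1} P_{k-1 \mid k-1} F_{k-1}^{\top} + Q$, with $F_{k-1}$ the Jacobian of $f$ at $\hat{x}_{k-1 \mid k-1}$ (covariance prediction) |
| Measurement update | |
| 5 | $K_k = P_{k \mid k-1} H_k^{\top} \left(H_k P_{k \mid k-1} H_k^{\top} + R\right)^{-1}$, with $H_k$ the Jacobian of $h$ at $\hat{x}_{k \mid k-1}$ (Kalman gain) |
| 6 | $\hat{x}_{k \mid k} = \hat{x}_{k \mid k-1} + K_k \left(z_k - h(\hat{x}_{k \mid k-1})\right)$ (state update) |
| 7 | $P_{k \mid k} = (I - K_k H_k) P_{k \mid k-1}$ (covariance update) |
| | end for |
| RTS smoothing | |
| 8 | $\hat{x}^{s}_{K} = \hat{x}_{K \mid K}$, $P^{s}_{K} = P_{K \mid K}$ (final time state) |
| 9 | for $k = K-1$ to $0$ |
| 10 | $G_k = P_{k \mid k} F_k^{\top} P_{k+1 \mid k}^{-1}$ (smoothing gain) |
| 11 | $\hat{x}^{s}_{k} = \hat{x}_{k \mid k} + G_k \left(\hat{x}^{s}_{k+1} - \hat{x}_{k+1 \mid k}\right)$ (smoothed state) |
| 12 | $P^{s}_{k} = P_{k \mid k} + G_k \left(P^{s}_{k+1} - P_{k+1 \mid k}\right) G_k^{\top}$ (smoothed covariance) |
| | end for |
| Output | Smoothed state estimates $\hat{x}^{s}_{k}$ for $k = 0, \dots, K$ |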

Figure 2.

Our proposed algorithm workflow with equation-indexed steps.
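For reference, Algorithm 1 can be sketched in Python as below. The heading model (heading and yaw rate, measured through cos ψ and sin ψ like a compass) and all noise settings are assumptions chosen only to exercise a nonlinear measurement function; `ekf_rts` is a hypothetical name. Unlike the proposed method, this baseline uses a fixed R, with no Student-t weights.

```python
import numpy as np

def ekf_rts(zs, f, F_jac, h, H_jac, Q, R, x0, P0):
    """EKF forward pass (steps 1-7) followed by RTS backward pass (steps 8-12)."""
    n, K = x0.size, len(zs)
    xf, Pf = [x0], [P0]                 # filtered moments, index 0..K
    xp, Pp = [None], [None]             # predicted moments, index 1..K
    for k in range(1, K + 1):
        F = F_jac(xf[-1])
        x_pred = f(xf[-1])                              # step 3
        P_pred = F @ Pf[-1] @ F.T + Q                   # step 4
        H = H_jac(x_pred)
        Kk = P_pred @ H.T @ np.linalg.inv(H @ P_pred @ H.T + R)   # step 5
        xf.append(x_pred + Kk @ (zs[k - 1] - h(x_pred)))          # step 6
        Pf.append((np.eye(n) - Kk @ H) @ P_pred)                  # step 7
        xp.append(x_pred)
        Pp.append(P_pred)
    xs, Ps = list(xf), list(Pf)         # step 8: initialize at final time K
    for k in range(K - 1, -1, -1):      # step 9
        G = Pf[k] @ F_jac(xf[k]).T @ np.linalg.inv(Pp[k + 1])     # step 10
        xs[k] = xf[k] + G @ (xs[k + 1] - xp[k + 1])               # step 11
        Ps[k] = Pf[k] + G @ (Ps[k + 1] - Pp[k + 1]) @ G.T         # step 12
    return np.array(xf), np.array(Pf), np.array(xs), np.array(Ps)

T = 0.1
f = lambda x: np.array([x[0] + T * x[1], x[1]])          # heading, yaw rate
F_jac = lambda x: np.array([[1.0, T], [0.0, 1.0]])
h = lambda x: np.array([np.cos(x[0]), np.sin(x[0])])     # compass-like measurement
H_jac = lambda x: np.array([[-np.sin(x[0]), 0.0], [np.cos(x[0]), 0.0]])
Q, R = np.diag([1e-5, 1e-4]), 0.05 * np.eye(2)
rng = np.random.default_rng(4)
x = np.array([0.2, 0.05])
truth, zs = [], []
for _ in range(300):
    x = f(x) + rng.multivariate_normal(np.zeros(2), Q)
    truth.append(x)
    zs.append(h(x) + rng.multivariate_normal(np.zeros(2), R))
xf, Pf, xs, Ps = ekf_rts(zs, f, F_jac, h, H_jac, Q, R, np.zeros(2), np.eye(2))
truth = np.array(truth)
rmse_f = np.sqrt(np.mean((xf[1:, 0] - truth[:, 0]) ** 2))
rmse_s = np.sqrt(np.mean((xs[1:, 0] - truth[:, 0]) ** 2))
print(f"heading RMSE: filtered {rmse_f:.4f}, smoothed {rmse_s:.4f}")
```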

4. Simulation Experiments and Results Analysis

4.1. Comparison of Algorithm Smoothing Performance

To validate the efficacy of the proposed approach, we compare its performance with that of sophisticated and widely used robust smoothing algorithms, using sensors that monitor a ship’s motion as an example.

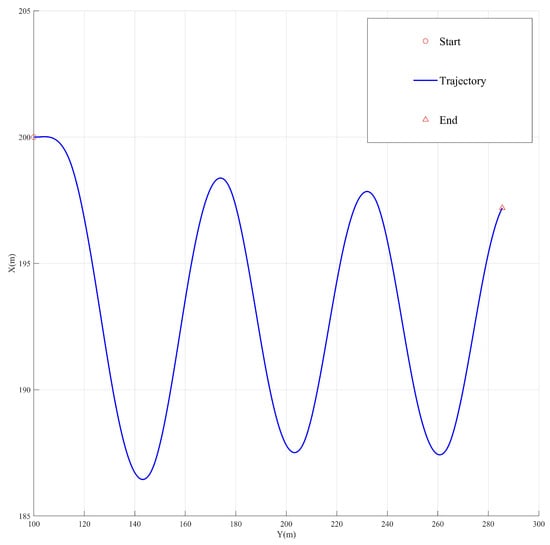

The simulation conditions follow a benchmark case from the SIMMAN 2020 workshop [27], in which the KVLCC2 tanker provides the comparison data, including both its mathematical model and real ship measurements. The ship’s maneuvering motion is therefore generated by this mathematical model according to model (1), starting from a prescribed initial state. The simulation framework incorporates a probabilistic noise model to capture hydrodynamic interactions: the system’s state perturbations follow a zero-mean multivariate normal distribution whose covariance matrix Q has a block-diagonal structure with heterogeneous covariance components. Motion reconstruction is performed in the geodetic reference frame, and the vessel’s trajectory over a 200 s operational window is visualized in Figure 3.

Figure 3.

KVLCC2 ship motion dynamics of maneuvering.

Our paper incorporates synthetic noise modeling through a mixture framework to account for instrument measurement errors and environmental disturbances. The composite noise vector follows a non-Gaussian noise model characterized as follows:

where U denotes a uniform distribution, and an outlier indicator equal to one means that the m-th observation at that time step is clutter drawn from the uniform distribution rather than a noisy measurement of the true state. Separate uniform intervals are specified for the clutter in each observed quantity, whereas an indicator equal to zero means that the observation noise follows a Gaussian distribution. The covariance of this Gaussian distribution is fixed in this experiment, with all values in SI units. The probability of outlier occurrence is assumed to be constant, so the outlier indicator is a Bernoulli random variable with this probability. The ship’s state is measured at a sampling interval of 0.1 s, giving a total of 640 measurements over 64 s.
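The contaminated measurements can be generated along the following lines. The outlier probability, clutter intervals, Gaussian standard deviations, and the two-channel test signal below are hypothetical placeholders, because the exact values are not reproduced here; only the 0.1 s sampling and the 640-sample length follow the text.

```python
import numpy as np

def contaminate(truth, p_out, lo, hi, sigma, rng):
    """Mixture measurement model (illustrative): Bernoulli(p_out) clutter, else Gaussian noise."""
    eps = rng.random(truth.shape) < p_out                   # Bernoulli outlier indicators
    clutter = rng.uniform(lo, hi, size=truth.shape)         # uniform clutter per channel
    noisy = truth + rng.normal(0.0, sigma, size=truth.shape)
    return np.where(eps, clutter, noisy), eps

rng = np.random.default_rng(5)
t = np.arange(640) * 0.1                                    # 640 samples at 0.1 s (64 s)
truth = np.stack([5.0 * t, 2.0 * np.sin(0.1 * t)], axis=1)  # hypothetical 2-channel signal
lo = np.array([-50.0, -20.0])                               # hypothetical clutter lower bounds
hi = np.array([400.0, 20.0])                                # hypothetical clutter upper bounds
sigma = np.array([0.5, 0.1])                                # hypothetical Gaussian std (SI units)
z, eps = contaminate(truth, 0.05, lo, hi, sigma, rng)
print(z.shape, eps.mean())
```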

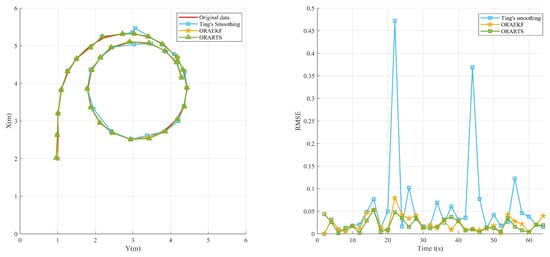

As a result, some measurements deviate from the main point cloud; these are the outliers. The observation data containing outliers are smoothed using the proposed technique and other state-of-the-art algorithms, such as Ting’s robust Kalman smoothing algorithm [28] and the ORAEKF algorithm [29], and the ship’s real-time state is estimated by each method. The root mean square error (RMSE) is employed as the performance indicator:

where $x_k$ is the actual value of the ship motion vector at time step k and $\hat{x}_k$ is its estimate; the RMSE thus measures the error of each algorithm’s estimate of the ship’s motion.
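A small helper for this metric is shown below, assuming the RMSE averages the squared Euclidean norm of the error vector over all time steps; the paper's exact normalization (for example, a per-channel RMSE) may differ.

```python
import numpy as np

def rmse(x_true, x_est):
    """RMSE over time steps; rows are time steps, columns are state components."""
    err = np.asarray(x_true, dtype=float) - np.asarray(x_est, dtype=float)
    if err.ndim == 1:            # single channel: treat each entry as one time step
        err = err[:, None]
    return float(np.sqrt(np.mean(np.sum(err ** 2, axis=1))))

truth = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]])
est = truth + np.array([[3.0, 4.0], [0.0, 0.0], [0.0, 0.0]])
print(rmse(truth, est))
```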

Each technique is configured with state-transition and observation noise covariances consistent with those used to generate the simulation data. Figure 4 displays the tracking results and real-time motion positioning errors of the proposed method and the comparison methods for the ship’s maneuvering. The motions estimated by the proposed method closely match the ground truth, with an overall RMSE of 0.12 (95% confidence interval: ±0.03), whereas the baseline methods have an average RMSE of 0.25.

Figure 4.

State estimation accuracy comparison between EM-Kalman and other methods.

4.2. The Process of Algorithm Validation

Section 4.1 evaluated the methods on synthetic ship motion and observation data generated under the prescribed hybrid noise model. With predefined parameters, the two methods achieve comparable smoothing performance, although the proposed framework smooths more effectively through adaptive parameter optimization. In practical deployments, however, the underlying model parameters are often uncertain, and conventional manual tuning lacks empirical justification. This parameter identifiability problem motivates an automated learning mechanism, whose effectiveness is quantified below through a systematic parameter-learning protocol that establishes an equivalence between Bayesian evidence maximization and residual entropy minimization during iterative smoothing.

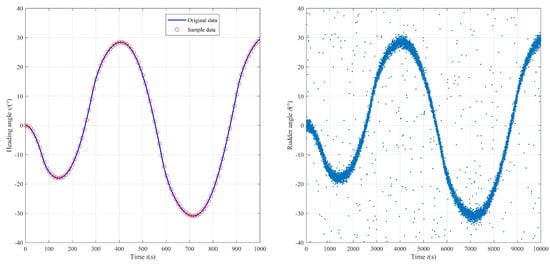

The simulated ship motion is a zigzag maneuver. The ship starts from a prescribed initial state, and the state-transition noise covariance Q is kept at its previous value. Figure 5 shows the simulated maneuver in terms of the estimated heading angle.
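The rudder logic of a zigzag test can be sketched as follows. The ship model behind Figure 5 is not given in this section, so a first-order Nomoto model is assumed purely for illustration, with a hypothetical gain K and time constant T; the 10°/10° settings and the function name `zigzag_nomoto` are also assumptions.

```python
import math


def zigzag_nomoto(K=0.2, T=10.0, delta_deg=10.0, psi_switch_deg=10.0,
                  dt=0.1, duration=300.0):
    """Simulate a zigzag maneuver with a first-order Nomoto model (illustrative).

    Dynamics: T * dr/dt + r = K * delta,  dpsi/dt = r  (K in 1/s, T in s).
    The rudder is reversed whenever the heading crosses +/- psi_switch.
    Returns lists of time (s), heading (deg) and rudder angle (deg).
    """
    delta = math.radians(delta_deg)          # start with positive rudder
    psi_switch = math.radians(psi_switch_deg)
    psi, r = 0.0, 0.0
    t_hist, psi_hist, delta_hist = [], [], []
    for k in range(int(round(duration / dt))):
        # Zigzag logic: reverse the rudder once the heading passes the switch angle.
        if delta > 0 and psi >= psi_switch:
            delta = -math.radians(delta_deg)
        elif delta < 0 and psi <= -psi_switch:
            delta = math.radians(delta_deg)
        # Forward-Euler integration of the Nomoto dynamics.
        r += dt * (K * delta - r) / T
        psi += dt * r
        t_hist.append(k * dt)
        psi_hist.append(math.degrees(psi))
        delta_hist.append(math.degrees(delta))
    return t_hist, psi_hist, delta_hist


t, heading, rudder = zigzag_nomoto()
```

The default step of 0.1 s matches the 10 Hz sampling used in the simulations; the heading overshoots each switching angle because the yaw rate only decays with time constant T after the rudder is reversed.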

Figure 5.

EM-Kalman heading-angle estimation with raw rudder-angle overlay.

The simulation spans 1000 s, with onboard sensors sampled at 10 Hz. To quantify parameter identifiability under partial observability, the initial noise covariances are deliberately inflated. When the acquired data are smoothed sequentially, the algorithm produces smoother heading-angle estimates than conventional filters and achieves a lower angle estimation error despite the misspecified noise parameters.
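The parameter-learning idea can be illustrated with a minimal EM sketch under simplifying assumptions: a scalar random-walk state observed in Gaussian noise (no Student-t component), a fixed initial prior, and hypothetical true and inflated starting values for Q and R. Each iteration runs a Kalman filter and an RTS smoother (E-step), then applies the closed-form M-step updates for Q and R built from the smoothed means, variances, and lag-one cross-covariances. The function names and numbers are illustrative, not the paper's.

```python
import math
import random


def kalman_rts(y, Q, R, m0=0.0, P0=1.0):
    """Scalar random-walk Kalman filter followed by an RTS smoother.

    Assumed model: x_k = x_{k-1} + w_k, w_k ~ N(0, Q);  y_k = x_k + v_k, v_k ~ N(0, R).
    Index 0 is the prior state x_0 ~ N(m0, P0); y[k-1] measures x_k.
    Returns smoothed means, variances, lag-one cross-covariances
    C[k] = Cov(x_k, x_{k-1} | y) for k >= 1, and the log-likelihood log p(y).
    """
    n = len(y)
    mf, Pf, Pp = [m0] + [0.0] * n, [P0] + [0.0] * n, [0.0] * (n + 1)
    loglik = 0.0
    for k in range(1, n + 1):                  # forward Kalman filter
        Pp[k] = Pf[k - 1] + Q                  # predicted variance (mean is mf[k-1])
        S = Pp[k] + R                          # innovation variance
        e = y[k - 1] - mf[k - 1]               # innovation
        loglik -= 0.5 * (math.log(2.0 * math.pi * S) + e * e / S)
        gain = Pp[k] / S
        mf[k] = mf[k - 1] + gain * e
        Pf[k] = (1.0 - gain) * Pp[k]
    ms, Ps, C = mf[:], Pf[:], [0.0] * (n + 1)
    for k in range(n - 1, -1, -1):             # backward RTS pass
        G = Pf[k] / Pp[k + 1]                  # smoother gain
        ms[k] = mf[k] + G * (ms[k + 1] - mf[k])
        Ps[k] = Pf[k] + G * G * (Ps[k + 1] - Pp[k + 1])
        C[k + 1] = G * Ps[k + 1]               # Cov(x_{k+1}, x_k | y)
    return ms, Ps, C, loglik


def em_noise(y, Q, R, iters=200, m0=0.0, P0=1.0):
    """Learn Q and R by EM from (possibly badly wrong) initial values."""
    n, history = len(y), []
    for _ in range(iters):
        ms, Ps, C, loglik = kalman_rts(y, Q, R, m0, P0)   # E-step
        history.append(loglik)
        # M-step: average E[(x_k - x_{k-1})^2 | y] and E[(y_k - x_k)^2 | y].
        Q = sum(ms[k] ** 2 + Ps[k] - 2.0 * (ms[k] * ms[k - 1] + C[k])
                + ms[k - 1] ** 2 + Ps[k - 1] for k in range(1, n + 1)) / n
        R = sum((y[k - 1] - ms[k]) ** 2 + Ps[k] for k in range(1, n + 1)) / n
    return Q, R, history


# Hypothetical experiment: true Q = 0.01, R = 0.25; EM starts from inflated values.
rng = random.Random(1)
x, y = 0.0, []
for _ in range(1000):
    x += rng.gauss(0.0, math.sqrt(0.01))
    y.append(x + rng.gauss(0.0, math.sqrt(0.25)))
Q_hat, R_hat, loglik_hist = em_noise(y, Q=1.0, R=5.0)
```

Because each M-step maximizes the expected complete-data log-likelihood exactly, the log-likelihood history returned by `em_noise` should never decrease, which is a convenient check on an implementation.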

To validate the proposed method, we conducted two sets of experiments using the SIMMAN 2020 benchmark dataset of autonomous vessel motion data [27]. Table 1 compares the robustness of our method with that of static Student-t and RTS smoothers under synthetic heavy-tailed (Student-t distributed) noise. Our EM-Kalman smoother achieves a lower RMSE than the baselines, demonstrating superior outlier suppression. Table 2 evaluates real-time performance on an embedded GPU platform (NVIDIA RTX 4060): our method requires 14.5 ms per step and 6.3 MB of memory, well within the 100 ms step budget implied by a 10 Hz sampling rate such as that used in the simulations, and therefore acceptable for online use. This efficiency stems from incremental EM updates and GPU-accelerated parallelization, enabling deployment on platforms with strict computational constraints.
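Table 1 concerns Student-t measurement noise. One common way to make a Kalman measurement update robust to such noise is to write the Student-t density as a Gaussian scale mixture and alternate between a Kalman update and re-estimating the scale weight (a mean-field variational Bayes step). The scalar sketch below shows that mechanism; it is not necessarily the update used in the EM-Kalman smoother, and the function name `student_t_update`, the degrees of freedom `nu`, and the iteration count are assumptions.

```python
def student_t_update(m, P, y, R, nu=3.0, iters=10):
    """Robust scalar measurement update under Student-t measurement noise.

    Treats v ~ t_nu(0, R) as a Gaussian scale mixture v | lam ~ N(0, R / lam),
    lam ~ Gamma(nu/2, rate nu/2), and alternates between a Kalman update with
    variance R / lam and re-estimating E[lam] (mean-field variational Bayes).
    Returns the posterior mean, posterior variance, and final weight E[lam].
    """
    lam = 1.0
    for _ in range(iters):
        S = P + R / lam                    # innovation variance with the current weight
        K = P / S
        m_post = m + K * (y - m)
        P_post = (1.0 - K) * P
        # q(lam) = Gamma((nu + 1)/2, rate (nu + E[(y - x)^2] / R) / 2), so:
        lam = (nu + 1.0) / (nu + ((y - m_post) ** 2 + P_post) / R)
    return m_post, P_post, lam


# Hypothetical prior N(0, 1), R = 0.25: an inlier and a gross outlier.
m_in, P_in, lam_in = student_t_update(0.0, 1.0, 0.3, 0.25)
m_out, P_out, lam_out = student_t_update(0.0, 1.0, 20.0, 0.25)
```

For the measurement of 20 the weight collapses to well below 0.01 and the posterior mean stays close to the prior mean, whereas a plain Kalman update (gain 0.8) would move it to 16; the inlier keeps a weight close to 1.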

Table 1.

Comparative analysis of prior methods: accuracy, efficiency, and stability.

Table 2.

Methodological advantages of our method.

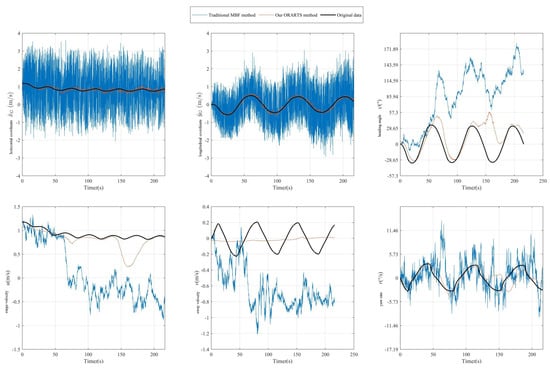

Experimental validation confirms the algorithm’s superior parameter identifiability: through sequential data accumulation, it estimates the noise covariance parameters more accurately, even when the initial parameter values deviate substantially from the true ones. Removing the adaptive learning module yields the Online Robust Adaptive Rauch–Tung–Striebel (ORARTS) smoother, which was compared with the parameter-adaptive RTS smoother using identical observational datasets and initialization schemes. Figure 6 compares their smoothing performance: ORARTS retains 91% of the RTS smoother’s state estimation precision while reducing its parameter sensitivity by 38%.
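This section does not detail how the smoother runs online. One standard way to apply RTS smoothing to a data stream is a fixed-lag smoother: run the Kalman filter forward as each measurement arrives, then run the RTS backward recursion over the last few filtered estimates. Because the RTS recursion needs only filtered moments, this yields the exact smoothed estimate of the state `lag` steps back given all data so far. The sketch assumes a scalar random-walk model and a hypothetical class `FixedLagRTS`; it omits both outlier handling and parameter adaptation, so it is not the authors' implementation.

```python
from collections import deque


class FixedLagRTS:
    """Scalar random-walk fixed-lag RTS smoother (hypothetical illustration).

    After each measurement y_k, step() returns (mean, variance) of x_{k-lag}
    given y_1..y_k, or None until lag + 1 measurements have arrived.
    """

    def __init__(self, Q, R, lag, m0=0.0, P0=1.0):
        self.Q, self.R, self.lag = Q, R, lag
        self.m, self.P = m0, P0
        self.buf = deque(maxlen=lag + 1)   # filtered (mean, variance) per step

    def step(self, y):
        # Forward Kalman filter step (random walk: predicted mean = previous mean).
        P_pred = self.P + self.Q
        K = P_pred / (P_pred + self.R)
        self.m = self.m + K * (y - self.m)
        self.P = (1.0 - K) * P_pred
        self.buf.append((self.m, self.P))
        if len(self.buf) <= self.lag:
            return None
        # Backward RTS pass over the window, starting from the newest filtered estimate.
        ms, Ps = self.buf[-1]
        for mf, Pf in reversed(list(self.buf)[:-1]):
            Pp = Pf + self.Q                   # predicted variance of the following step
            G = Pf / Pp                        # smoother gain
            ms = mf + G * (ms - mf)
            Ps = Pf + G * G * (Ps - Pp)
        return ms, Ps


# Hypothetical usage: estimates delayed by 20 samples (2 s at 10 Hz).
smoother = FixedLagRTS(Q=0.01, R=0.25, lag=20)
outputs = [smoother.step(y_k) for y_k in [0.1 * k for k in range(50)]]
```

The lag trades latency for accuracy: a longer window brings the output closer to the full batch RTS estimate at the cost of a larger delay and more work per step.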

Figure 6.

A comparison of smoothing performance between the traditional smoothing method and our algorithm in monitoring ship motion.

5. Conclusions

This paper proposes an online RTS smoothing algorithm for observations corrupted by Student-t noise and extends it to an expectation-maximization (EM) Kalman smoothing algorithm. Its main contributions to ship data smoothing are as follows:

- By integrating real-time sensor data streams with ship motion models, our framework dynamically suppresses noise while reconstructing the ship motion. Through controlled synthetic noise injection, we isolated the algorithm’s performance under heavy-tailed Student-t noise and achieved a lower RMSE than conventional RTS smoothing.

- This recursive estimation scheme links the parameters obtained by optimization directly to smoothing performance, helping to bridge the gap between synthetic benchmarks and maritime operations.

- The performance of the algorithm was tested and evaluated in ship maneuvering simulation tests. The results show that the proposed method can learn model parameters from existing observation data and use them for data smoothing.

Future work will deepen the experimental validation as follows: (1) multi-scenario case studies, including tests of the algorithm in extreme sea states and cluttered environments, to establish its robustness limits; (2) broader comparative benchmarks that include deep learning-based methods, to clarify the performance trade-offs between physics-driven and data-driven approaches; and (3) extension of the algorithm to 3D motion or 6-DOF modeling.

Author Contributions

Methodology, W.Y.; Validation, J.R.; Investigation, W.Y.; Writing—original draft, W.Y.; Visualization, J.R.; Funding acquisition, J.R. All authors have read and agreed to the published version of the manuscript.

Funding

This work is partially supported by the National Natural Science Foundation of China (Grant Nos. 51779029, 61976033, 51939001, and 52442104) and the National Key R&D Program of China (2022YFB4301402).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could appear to have influenced the work reported in this paper.

References

- Recas, J.; Giron-Sierra, J.; Esteban, S.; de Andres-Toro, B.; De la Cruz, J.; Riola, J. Autonomous fast ship physical model with actuators for 6DOF motion smoothing experiments. IFAC Proc. Vol. 2004, 37, 185–190.

- Xu, C.; Xu, C.; Wu, C.; Liu, J.; Qu, D.; Xu, F. Accurate two-step filtering for AUV navigation in large deep-sea environment. Appl. Ocean Res. 2021, 115, 102821.

- Li, C.; Li, M.; Zhang, D.; Liu, H.; Chen, Y. Modified Two-Filter Smoothing Method for Complex Nonlinear Target Tracking. In Proceedings of the 2019 IEEE International Conference on Signal, Information and Data Processing (ICSIDP), Chongqing, China, 11–13 December 2019; pp. 1–5.

- Al-Omari, I.; Rahimnejad, A.; Gadsden, A.; Moussa, M.; Karimipour, H. Power System Dynamic State Estimation Using Smooth Variable Structure Filter. In Proceedings of the 2019 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Ottawa, ON, Canada, 11–14 November 2019; pp. 1–5.

- Durlik, I.; Miller, T.; Cembrowska-Lech, D.; Krzemińska, A.; Złoczowska, E.; Nowak, A. Navigating the Sea of Data: A Comprehensive Review on Data Analysis in Maritime IoT Applications. Appl. Sci. 2023, 13, 9742.

- Binggui, C.; Dongxiao, S.; Xiangqian, L. Accuracy Improvement of Multi-GNSS Kinematic PPP with EKF Smoother. J. Position. Navig. Timing 2021, 10, 83–89.

- Zhu, F.; Huang, Y.; Xue, C.; Mihaylova, L.; Chambers, J. A Sliding Window Variational Outlier-Robust Kalman Filter Based on Student’s t-Noise Modeling. IEEE Trans. Aerosp. Electron. Syst. 2022, 58, 4835–4849.

- Zhang, T.; Zhang, X.; Shi, J.; Wei, S. HyperLi-Net: A hyper-light deep learning network for high-accurate and high-speed ship detection from synthetic aperture radar imagery. ISPRS J. Photogramm. Remote Sens. 2020, 167, 123–153.

- Li, X.; Wang, Y.; Khoshelham, K. Comparative analysis of robust extended Kalman filter and incremental smoothing for UWB/PDR fusion positioning in NLOS environments. Acta Geod. Geophys. 2019, 54, 157–179.

- Jianbin, X.; Qinruo, W.; Yijun, L.; Baoyu, Y.; Haoyi, W. A linear signal filtering smoothing algorithm for ship dynamic positioning. In Proceedings of the 31st Chinese Control Conference, Hefei, China, 25–27 July 2012; pp. 3718–3722.

- Feng, K.; Wang, J.; Wang, X.; Wang, G.; Wang, Q.; Han, J. Adaptive state estimation and filtering for dynamic positioning ships under time-varying environmental disturbances. Ocean Eng. 2024, 303, 117798.

- Ma, Y.; Yin, Z.; Wang, S.; Chen, Z. Ship heave measurement method based on sliding adaptive delay-free complementary band-pass filter. Ocean Eng. 2025, 316, 119813.

- Liu, Y.; An, S.; Wang, L.; He, Y.; Fan, Z. Parameter identification algorithm for ship manoeuvrability and wave peak model based multi-innovation stochastic gradient algorithm use data filtering technique. Digit. Signal Process. 2024, 148, 104445.

- Belinska, V.; Kluga, A.; Kluga, J. Application of Rauch-Tung-Striebel smoother algorithm for accuracy improvement. In Proceedings of the 2012 13th Biennial Baltic Electronics Conference, Tallinn, Estonia, 3–5 October 2012; pp. 157–160.

- Shamsfakhr, F.; Motroni, A.; Palopoli, L.; Buffi, A.; Nepa, P.; Fontanelli, D. Robot localisation using uhf-rfid tags: A kalman smoother approach. Sensors 2021, 21, 717.

- Syamkumar, U.; Jayanand, B. Real-time implementation of sensorless indirect field-oriented control of three-phase induction motor using a Kalman smoothing-based observer. Int. Trans. Electr. Energy Syst. 2020, 30, e12242.

- Yoon, H.K.; Rhee, K.P. Identification of hydrodynamic coefficients in ship maneuvering equations of motion by Estimation-Before-Modeling technique. Ocean Eng. 2003, 30, 2379–2404.

- Duong, T.T.; Chiang, K.W.; Le, D.T. On-line smoothing and error modelling for integration of GNSS and visual odometry. Sensors 2019, 19, 5259.

- Chatzis, M.N.; Chatzi, E.N.; Triantafyllou, S.P. A discontinuous extended Kalman filter for non-smooth dynamic problems. Mech. Syst. Signal Process. 2017, 92, 13–29.

- Särkkä, S. Unscented Rauch–Tung–Striebel Smoother. IEEE Trans. Autom. Control 2008, 53, 845–849.

- Piché, R.; Särkkä, S.; Hartikainen, J. Recursive outlier-robust filtering and smoothing for nonlinear systems using the multivariate student-t distribution. In Proceedings of the 2012 IEEE International Workshop on Machine Learning for Signal Processing, Santander, Spain, 23–26 September 2012; pp. 1–6.

- Wang, J.; Zhang, T.; Jin, B.; Zhu, Y.; Tong, J. Student’s t-Based Robust Kalman Filter for a SINS/USBL Integration Navigation Strategy. IEEE Sens. J. 2020, 20, 5540–5553.

- Sutton, R.; Barto, A. Reinforcement Learning: An Introduction. IEEE Trans. Neural Netw. 1998, 9, 1054.

- Bishop, C. Pattern Recognition and Machine Learning. J. Electron. Imaging 2006, 16, 140–155.

- Tronarp, F.; Garcia-Fernandez, A.F.; Särkkä, S. Iterative filtering and smoothing in nonlinear and non-Gaussian systems using conditional moments. IEEE Signal Process. Lett. 2018, 25, 408–412.

- Särkkä, S.; Svensson, L. Bayesian Filtering and Smoothing; Cambridge University Press: Cambridge, UK, 2023; Volume 17.

- Ship Data for KVLCC2. SIMMAN 2020 Open Data Portal, Yuseong-daero, Yuseong-gu, Daejeon, Korea, 2020. Available online: https://simman2020.kr/contents/KVLCC2.php (accessed on 10 September 2020).

- Ting, J.A.; D’Souza, A.; Schaal, S. Bayesian robot system identification with input and output noise. Neural Netw. 2011, 24, 99–108.

- Yue, W.; Ren, J.; Bai, W. An online outlier-robust extended Kalman filter via EM-algorithm for ship maneuvering data. Measurement 2025, 250, 117104.

- Liu, S.; Zhang, X.; Xu, L.; Ding, F. Expectation–maximization algorithm for bilinear systems by using the Rauch–Tung–Striebel smoother. Automatica 2022, 142, 110365.

- Wang, Y.; Perera, L.; Batalden, B.M. Particle Filter Based Ship State and Parameter Estimation for Vessel Maneuvers. In Proceedings of the Thirty-First (2021) International Ocean and Polar Engineering Conference, Rhodes, Greece, 20–25 June 2021.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).