A Multimodal Vision-Based Fish Environment and Growth Monitoring in an Aquaculture Cage

Abstract

1. Introduction

- (1) Using paired NeuroVI and RGB images (Figure 2a), we introduce a novel segmentation approach for fishery aquaculture. We also build a low-visibility dataset that covers cage management scenarios such as feeding appetite, biofouling attachment, fence breaches, and dead fish detection.

- (2) A dual-image cross-attention network is designed for the aquaculture cage (Figure 2b). Features are extracted from the NeuroVI and RGB images simultaneously, and the NeuroVI features provide spatial attention for the RGB features, improving the learning efficiency of the network in fishery aquaculture.

- (3) An element-wise multiplication mechanism replaces dot-product multiplication, reducing the computational latency introduced by the dual-channel network. Overall, the method achieves state-of-the-art detection performance in fishery aquaculture, with an 8% improvement in mean average precision (mAP).
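Contributions (2) and (3) describe NeuroVI features guiding RGB features through spatial attention, and element-wise multiplication replacing dot-product multiplication to cut latency. The NumPy sketch below contrasts the two operators under stated assumptions and is not the authors' implementation: the function names, the flattening of each feature map into an (N, C) array of N = H × W positions, and the sigmoid-of-channel-mean gate are all hypothetical choices made for illustration. The dot-product version builds an N × N attention matrix, whereas the element-wise version applies one gate per spatial position, so its cost grows only linearly with N.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along `axis`."""
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)


def dot_product_cross_attention(rgb: np.ndarray, neuro: np.ndarray) -> np.ndarray:
    """Hypothetical dot-product cross-attention.

    rgb, neuro: (N, C) feature arrays, N = H * W spatial positions.
    Queries come from NeuroVI, keys and values from RGB; the (N, N)
    score matrix makes the cost grow as O(N^2 * C).
    """
    c = rgb.shape[1]
    scores = neuro @ rgb.T / np.sqrt(c)    # (N, N) query-key similarities
    weights = softmax(scores, axis=-1)     # each row sums to 1
    return weights @ rgb                   # (N, C) attended RGB features


def elementwise_spatial_attention(rgb: np.ndarray, neuro: np.ndarray) -> np.ndarray:
    """Hypothetical element-wise spatial attention.

    A per-position gate in (0, 1) is derived from the NeuroVI features
    (sigmoid of the channel mean, an assumption) and multiplied
    element-wise into the RGB features; the cost grows as O(N * C).
    """
    gate = 1.0 / (1.0 + np.exp(-neuro.mean(axis=1, keepdims=True)))  # (N, 1)
    return rgb * gate                      # broadcast across the C channels


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    h, w, c = 8, 8, 16
    rgb = rng.standard_normal((h * w, c))
    neuro = rng.standard_normal((h * w, c))
    print(dot_product_cross_attention(rgb, neuro).shape)    # (64, 16)
    print(elementwise_spatial_attention(rgb, neuro).shape)  # (64, 16)
```

Both functions return features of the same shape, so either can feed the same downstream head; the difference lies in the N × N intermediate that only the dot-product path materializes.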

2. Related Work

2.1. The Fishery Aquaculture Dataset in Underwater Conditions

2.2. Segmentation Methods for Fishery Aquaculture Cages

2.3. Sensor Fusion

3. Materials and Methods

3.1. Fishery Aquaculture Cage Scene Dataset with NeuroVI and RGB Images

3.2. Cross-Attention Network Based on Spatial Feature Attention

3.3. Guided Attention Learning Module

3.4. Element-Wise Multiplication to Reduce the Computational Burden

3.5. The Definition of Loss Function

4. Results and Discussion in Fishery Aquaculture

4.1. Dataset Collection and Training Settings

4.2. Segmentation Demonstration Under Low Illumination in Fishery Aquaculture

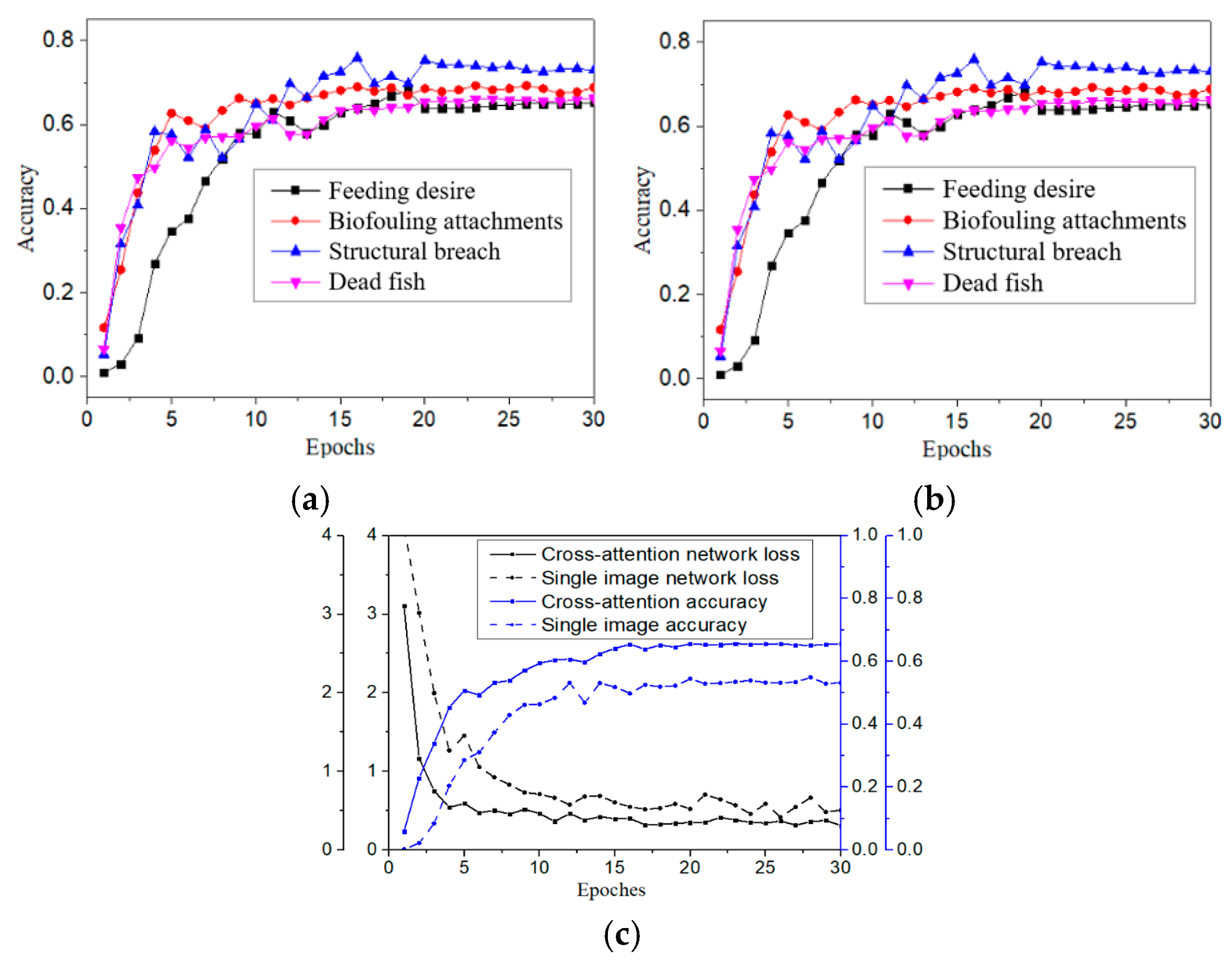

4.3. The Learning Performance Evaluation

4.4. Comparisons with Other Methods

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Huan, X.; Shan, J.; Han, L.; Song, H. Research on the efficacy and effect assessment of deep-sea aquaculture policies in China: Quantitative analysis of policy texts based on the period 2004–2022. Mar. Policy 2024, 160, 105963.

2. Sun, X.; Hu, L.; Fan, D.; Wang, H.; Yang, Z.; Guo, Z. Sediment Resuspension Accelerates the Recycling of Terrestrial Organic Carbon at a Large River-Coastal Ocean Interface. Glob. Biogeochem. Cycles 2024, 38, e2024GB008213.

3. Xiao, Y.; Huang, L.; Zhang, S.; Bi, C.; You, X.; He, S.; Guan, J. Feeding behavior quantification and recognition for intelligent fish farming application: A review. Appl. Anim. Behav. Sci. 2025, 285, 106588.

4. López-Barajas, S.; Sanz, P.J.; Marín-Prades, R.; Gómez-Espinosa, A.; González-García, J.; Echagüe, J. Inspection operations and hole detection in fish net cages through a hybrid underwater intervention system using deep learning techniques. J. Mar. Sci. Eng. 2024, 12, 80.

5. Zhu, G.; Li, M.; Hu, J.; Xu, L.; Sun, J.; Li, D.; Dong, C.; Huang, X.; Hu, Y. An Experimental Study on Estimating the Quantity of Fish in Cages Based on Image Sonar. J. Mar. Sci. Eng. 2024, 12, 1047.

6. Guan, M.; Cheng, Y.; Li, Q.; Wang, C.; Fang, X.; Yu, J. An Effective Method for Submarine Buried Pipeline Detection via Multi-sensor Data Fusion. IEEE Access 2019, 7, 125300–125309.

7. Li, D.; Du, L. Recent advances of deep learning algorithms for aquacultural machine vision systems with emphasis on fish. Artif. Intell. Rev. 2022, 55, 4077–4116.

8. Kong, H.; Wu, J.; Liang, X.; Xie, Y.; Qu, B.; Yu, H. Conceptual validation of high-precision fish feeding behavior recognition using semantic segmentation and real-time temporal variance analysis for aquaculture. Biomimetics 2024, 9, 730.

9. Gao, T.; Jin, J.; Xu, X. Study on detection image processing method of offshore cage. J. Phys. Conf. Ser. 2021, 1769, 012070.

10. Zhou, C.; Lin, K.; Xu, D.; Liu, J.; Zhang, S.; Sun, C.; Yang, X. Method for segmentation of overlapping fish images in aquaculture. Int. J. Agric. Biol. Eng. 2019, 12, 135–142.

11. Hu, Z.; Cheng, L.; Yu, S.; Xu, P.; Zhang, P.; Tian, R.; Han, J. Underwater Target Detection with High Accuracy and Speed Based on YOLOv10. J. Mar. Sci. Eng. 2025, 13, 135.

12. Islam, M.J.; Edge, C.; Xiao, Y.; Luo, P.; Mehtaz, M.; Morse, C.; Enan, S.S.; Sattar, J. Semantic Segmentation of Underwater Imagery: Dataset and Benchmark. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 1769–1776.

13. Yang, D.; Wang, C.; Cheng, C.; Pan, G.; Zhang, F. Data Generation with GAN Networks for Sidescan Sonar in Semantic Segmentation Applications. J. Mar. Sci. Eng. 2023, 11, 1792.

14. Liu, W.; Bai, K.; He, X.; Song, S.; Zheng, C.; Liu, X. FishGym: A High-Performance Physics-based Simulation Framework for Underwater Robot Learning. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 6268–6275.

15. Marnet, L.R.; Grasshof, S.; Brodskiy, Y.; Wąsowski, A. Bridging the Sim-to-Real GAP for Underwater Image Segmentation. In Proceedings of the OCEANS 2024 - Singapore, Singapore, 15–18 April 2024; pp. 1–6.

16. Contini, M.; Illien, V.; Barde, J.; Poulain, S.; Bernard, S.; Joly, A.; Bonhommeau, S. From underwater to drone: A novel multi-scale knowledge distillation approach for coral reef monitoring. Ecol. Inform. 2025, 89, 103149.

17. Liu, X.Y.; Chen, G.; Sun, X.; Knoll, A. Ground Moving Vehicle Detection and Movement Tracking Based on the Neuromorphic Vision Sensor. IEEE Internet Things J. 2020, 7, 9026–9039.

18. Liu, X.Y.; Yang, Z.X.; Hou, J.; Huang, W. Dynamic Scene’s Laser Localization by NeuroIV-based Moving Objects Detection and LIDAR Points Evaluation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5230414.

19. Sun, X.; Chen, C.; Wang, X.; Dong, J.; Zhou, H.; Chen, S. Gaussian dynamic convolution for efficient single-image segmentation. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 2937–2948.

20. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2015, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

21. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

22. Peng, D.; Zhang, Y.; Guan, H. End-to-End Change Detection for High Resolution Satellite Images Using Improved UNet++. Remote Sens. 2019, 11, 1382.

23. Huang, Z.; Wang, C.; Wang, X.; Liu, W.; Wang, J. Semantic image segmentation by scale-adaptive networks. IEEE Trans. Image Process. 2020, 29, 2066–2077.

24. Deng, X.; Wang, P.; Lian, X.; Newsam, S. NightLab: A dual-level architecture with hardness detection for segmentation at night. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 938–948.

25. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2021, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

26. Wang, W.; Chen, Z.; Yuan, X.; Guan, F. An adaptive weak light image enhancement method. In Proceedings of the Twelfth International Conference on Signal Processing Systems 2020, Shanghai, China, 6–9 November 2020.

27. Ge, H.; Ouyang, J. Underwater image segmentation via the progressive network of dual iterative complement enhancement. Expert Syst. Appl. 2025, 266, 126049.

28. Li, X.; Yu, R.; Zhang, W.; Lu, H.; Zhao, W.; Hou, G.; Liang, Z. DAPNet: Dual Attention Probabilistic Network for Underwater Image Enhancement. IEEE J. Ocean. Eng. 2025, 50, 178–191.

29. Jiang, J.; Xu, H.; Xu, X.; Cui, Y.; Wu, J. Transformer-Based Fused Attention Combined with CNNs for Image Classification. Neural Process. Lett. 2023, 55, 11905–11919.

30. Romera, E.; Bergasa, L.M.; Yang, K.; Alvarez, J.M.; Barea, R. Bridging the day and night domain gap for semantic segmentation. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium, Paris, France, 9–12 June 2019; pp. 1312–1318.

31. Domhof, J.; Kooij, J.; Gavrila, D.M. A Joint Extrinsic Calibration Tool for Radar, Camera and Lidar. IEEE Trans. Intell. Veh. 2021, 6, 571–582.

32. Chen, H.; Xu, F.; Liu, W.; Sun, D.; Liu, P.X.; Menhas, M.I.; Ahmad, B. 3D Reconstruction of Unstructured Objects Using Information from Multiple Sensors. IEEE Sens. J. 2021, 21, 26951–26963.

33. Roy, S.M.; Beg, M.M.; Bhagat, S.K.; Charan, D.; Pareek, C.M.; Moulick, S.; Kim, T. Application of artificial intelligence in aquaculture – Recent developments and prospects. Aquac. Eng. 2025, 111, 102570.

34. Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 39, 640–651.

35. Wan, S.; Liang, Y.; Zhang, Y. Deep convolutional neural networks for diabetic retinopathy detection by image classification. Comput. Electr. Eng. 2018, 72, 274–282.

36. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

| Model Configuration | mAP (%) | ΔmAP | IoU (%) | ΔIoU | Latency (ms) | ΔLatency |
|---|---|---|---|---|---|---|
| Baseline (RGB only) | 72.1 | - | 63.5 | - | 1.8 | - |
| +SNN Filtering | 77.3 | +5.2 | 67.8 | +4.3 | 1.9 | +0.1 |
| +Cross-Attention | 83.6 | +6.3 | 73.2 | +5.4 | 2.1 | +0.2 |
| +Element-wise Multiplication | 87.9 | +4.3 | 78.2 | +5.0 | 1.4 | −0.7 |
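Each Δ entry in the ablation table is the change relative to the row directly above it, not relative to the RGB-only baseline. The short Python check below simply re-enters the published numbers (the names `ABLATION`, `step_deltas`, and `cumulative_gain` are illustrative) and reproduces every Δ entry. It also reports the cumulative change from the baseline to the full model: +15.8 points of mAP, +14.7 points of IoU, and 0.4 ms lower latency.

```python
# Rows of the ablation table: (configuration, mAP %, IoU %, latency ms).
ABLATION = [
    ("Baseline (RGB only)", 72.1, 63.5, 1.8),
    ("+SNN Filtering", 77.3, 67.8, 1.9),
    ("+Cross-Attention", 83.6, 73.2, 2.1),
    ("+Element-wise Multiplication", 87.9, 78.2, 1.4),
]


def step_deltas(rows):
    """Change of each configuration relative to the row above it, rounded to 0.1."""
    deltas = []
    for prev, cur in zip(rows, rows[1:]):
        diffs = tuple(round(c - p, 1) for p, c in zip(prev[1:], cur[1:]))
        deltas.append((cur[0],) + diffs)
    return deltas


def cumulative_gain(rows):
    """Total change from the first (baseline) row to the last row, rounded to 0.1."""
    first, last = rows[0], rows[-1]
    return tuple(round(end - start, 1) for start, end in zip(first[1:], last[1:]))


for name, d_map, d_iou, d_lat in step_deltas(ABLATION):
    print(f"{name}: {d_map:+.1f} mAP, {d_iou:+.1f} IoU, {d_lat:+.1f} ms")
print(cumulative_gain(ABLATION))  # (15.8, 14.7, -0.4)
```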

| Methods | Image | Latency (ms) | Top-1 (%) |
|---|---|---|---|
| Dot-product + Single channel | RGB | 1.3 | 75.1 |
| Element-wise + Single channel | RGB | 1.1 | 74.8 |
| Dot-product + Cross-attention | RGB + NeuroVI | 1.6 | 88.3 |
| Element-wise + Cross-attention | RGB + NeuroVI | 1.4 | 87.9 |
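The operator comparison can be read as a latency/accuracy trade-off. Recomputing from the table, switching from dot-product to element-wise multiplication saves 0.2 ms in both settings, which is about 15.4% of the single-channel latency and 12.5% of the cross-attention latency, at a cost of 0.3 and 0.4 points of Top-1 respectively. The helper below performs that arithmetic; `RESULTS` and `tradeoff` are illustrative names, and the values are copied from the table.

```python
# (operator, setting) -> (latency in ms, Top-1 in %), copied from the table.
RESULTS = {
    ("dot-product", "single"): (1.3, 75.1),
    ("element-wise", "single"): (1.1, 74.8),
    ("dot-product", "cross-attention"): (1.6, 88.3),
    ("element-wise", "cross-attention"): (1.4, 87.9),
}


def tradeoff(setting):
    """Effect of swapping dot-product for element-wise multiplication in one setting.

    Returns (latency saved in ms, latency saved in %, Top-1 change in points).
    """
    dp_latency, dp_top1 = RESULTS[("dot-product", setting)]
    ew_latency, ew_top1 = RESULTS[("element-wise", setting)]
    saved_ms = round(dp_latency - ew_latency, 2)
    saved_pct = round(100 * (dp_latency - ew_latency) / dp_latency, 1)
    top1_change = round(ew_top1 - dp_top1, 1)
    return saved_ms, saved_pct, top1_change


print(tradeoff("single"))           # (0.2, 15.4, -0.3)
print(tradeoff("cross-attention"))  # (0.2, 12.5, -0.4)
```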

| Backbones | Detection and Instance Segmentation (%) | | | | | |
|---|---|---|---|---|---|---|
| AlexNet [34] | 32.4 | 52.7 | 35.0 | 28.8 | 50.2 | 30.9 |
| VGGNet [35] | 33.5 | 53.3 | 36.1 | 30.0 | 51.7 | 32.5 |
| UNET++ [22] | 36.2 | 56.1 | 38.9 | 33.5 | 53.6 | 34.5 |
| ResNet18 [36] | 35.0 | 55.0 | 37.7 | 32.2 | 52.0 | 33.7 |
| ResNet50 [36] | 38.0 | 58.6 | 41.4 | 34.4 | 55.1 | 36.7 |
| Swin-Transformer [25] | 40.3 | 60.5 | 43.6 | 36.3 | 57.4 | 38.4 |
| Ours | 42.1 | 62.2 | 45.1 | 38.2 | 59.8 | 40.2 |
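The state-of-the-art claim can be checked column by column against the strongest competing backbone. Re-entering the table values, the sketch below finds that Swin-Transformer is the best baseline in all six columns and that the proposed model leads it by 1.5 to 2.4 points. The table header groups the six columns only as detection and instance segmentation scores, so the code indexes them by position; `BACKBONES` and `margin_over_best_baseline` are illustrative names.

```python
# Rows of the backbone comparison table (six score columns, in table order).
BACKBONES = {
    "AlexNet": (32.4, 52.7, 35.0, 28.8, 50.2, 30.9),
    "VGGNet": (33.5, 53.3, 36.1, 30.0, 51.7, 32.5),
    "UNET++": (36.2, 56.1, 38.9, 33.5, 53.6, 34.5),
    "ResNet18": (35.0, 55.0, 37.7, 32.2, 52.0, 33.7),
    "ResNet50": (38.0, 58.6, 41.4, 34.4, 55.1, 36.7),
    "Swin-Transformer": (40.3, 60.5, 43.6, 36.3, 57.4, 38.4),
    "Ours": (42.1, 62.2, 45.1, 38.2, 59.8, 40.2),
}


def margin_over_best_baseline(table, ours="Ours"):
    """For each column, return (strongest baseline, margin of `ours` over it)."""
    baselines = {name: row for name, row in table.items() if name != ours}
    result = []
    for col, value in enumerate(table[ours]):
        best_name = max(baselines, key=lambda name: baselines[name][col])
        result.append((best_name, round(value - baselines[best_name][col], 1)))
    return result


for col, (name, margin) in enumerate(margin_over_best_baseline(BACKBONES), start=1):
    print(f"column {col}: +{margin} over {name}")
```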

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ma, F.; Liu, X.; Xu, Z. A Multimodal Vision-Based Fish Environment and Growth Monitoring in an Aquaculture Cage. J. Mar. Sci. Eng. 2025, 13, 1700. https://doi.org/10.3390/jmse13091700

Chicago/Turabian Style: Ma, Fengshuang, Xiangyong Liu, and Zhiqiang Xu. 2025. "A Multimodal Vision-Based Fish Environment and Growth Monitoring in an Aquaculture Cage" Journal of Marine Science and Engineering 13, no. 9: 1700. https://doi.org/10.3390/jmse13091700
