1. Introduction

Despite the enormous economic importance of the maritime domain for global trade and the advancing digitalization, maritime transport is surprisingly uncoordinated compared to other sectors such as aviation [1,2]. However, coordinating the individual actors can have a positive impact on traffic: safety can be increased, waiting times reduced and emissions lowered. In today’s maritime shipping industry, each vessel decides locally how it wants to travel without a holistic view of the overall situation [3]. In order to derive meaningful actions, a comprehensive picture of the situation is required, which shows, e.g., where other actors are currently located, which services will be available at the port at the time of arrival or how the weather conditions will change during the voyage [4]. All these parameters, together with much more contextual information, help to build a better understanding of the current situation at sea and positively influence voyage planning. An ideal solution therefore cannot be found locally on board a single vessel, as in practice the necessary information is simply not available there [5]. This in turn inevitably leads to unintentional planning errors and phenomena such as “hurry-up-and-wait” [2]. In so-called “hurry-up-and-wait”, vessels compete to be the first to arrive at the port in order to be processed first. This behavior not only poses an increased safety risk but is also very inefficient, as it leads to longer waiting times, increased emissions and inefficient use of port resources [6].

To counteract this problem, a promising solution is emerging: the introduction of centralized traffic control, comparable to the concept of air traffic control in aviation [4,7]. The idea of this approach is to create an instance that monitors the current traffic situation of an entire area and derives instructions for action, such as course or speed adjustments, for the individual traffic participants, so that the traffic is optimized overall with regard to definable target parameters such as emissions or waiting times [3,8]. Centralizing traffic management would make it possible to find a holistically optimal solution instead of many local, suboptimal partial solutions [8]. In addition, only one neutral body, such as the Vessel Traffic Service (VTS), would need to have a complete picture of the current situation to send optimized instructions to the individual actors [7]. However, decentralized approaches to maritime traffic management, in which the individual actors negotiate their instructions bilaterally or multilaterally, have also been discussed in the literature [9].

Regardless of what the traffic management of the future will look like, all options rely on a comprehensive situational picture [10]. In practice, creating such a picture is a challenge in itself, as the required information is distributed across a variety of maritime actors, such as shipping companies, weather services, ports, logistics companies, and many more [6]. All of these stakeholders pursue their own (sometimes conflicting) interests and have their own technical infrastructures with proprietary interfaces [11]. This in turn leads to great reluctance to share data with each other, as the stakeholders are either afraid of losing control over their data assets or the exchange would involve too much technical effort to align the interfaces of the various IT systems [12]. The question therefore arises of how the required data can be exchanged in a standardized and secure way so that the associated technical effort and the mistrust in providing external parties with data can be reduced to a minimum. In addition to the decentralized nature of the required information basis, volatile connections between sea- and land-based actors also pose a challenge when exchanging information [13]. The available bandwidth on the open sea varies from just a few kilobits per second to several megabits per second. Moreover, currently available solutions are usually charged per megabyte used. At around 0.20 to 19.32 USD per megabyte, the costs vary widely but remain significant, so careful use makes economic sense [14,15]. In addition, despite global coverage, interrupted connections or high latencies (about 700 ms) must be expected at all times [16]. Thus, the optimization of maritime traffic requires not only an intelligent planning algorithm but also a corresponding data infrastructure that makes it possible to obtain all relevant information to derive a meaningful picture of the current situation at open sea at any time [10].
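To make the cost argument more tangible, the following Python sketch estimates the daily cost of continuously transmitting a single periodic data stream over a per-megabyte satellite tariff. Only the price range of 0.20 to 19.32 USD per megabyte is taken from the text; the message size (2 kB), the reporting interval (10 s) and the use of decimal megabytes are hypothetical assumptions chosen purely for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_volume_mb(message_bytes: int, interval_s: float) -> float:
    """Daily volume in decimal megabytes (1 MB = 10**6 bytes) of one periodic message stream."""
    messages_per_day = SECONDS_PER_DAY / interval_s
    return messages_per_day * message_bytes / 1_000_000


def daily_cost_usd(volume_mb: float, usd_per_mb: float) -> float:
    """Cost of transmitting `volume_mb` megabytes at a flat per-megabyte tariff."""
    return volume_mb * usd_per_mb


# Hypothetical stream: a 2 kB status report every 10 s (illustrative values only).
volume = daily_volume_mb(message_bytes=2_000, interval_s=10)
for price in (0.20, 19.32):  # per-megabyte price range quoted in the text
    cost = daily_cost_usd(volume, price)
    print(f"{volume:.2f} MB/day at {price:.2f} USD/MB -> {cost:.2f} USD/day")
```

Under these assumptions, one such low-rate stream amounts to 17.28 MB per day and costs roughly 3.46 to 333.85 USD per day, which illustrates why avoiding redundant transmissions over the sea-land link matters.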

Structure and Methodology

Therefore, in this paper, we present a new approach for a decentralized architecture that makes it feasible for maritime actors to share information in a secure and sovereign way, while taking maritime requirements, such as volatile connections and standards, into account. In Section 2, we first provide relevant background information regarding the current challenges in sharing data in the maritime domain and present promising approaches to realize a sovereign data exchange. On this basis, we derive requirements for a maritime data management system ensuring secure and reliable data exchange.

Section 3 takes a closer look at related work. It provides an insight into ongoing cross-domain initiatives for sovereign information exchange as well as relevant existing projects and approaches for the realization of sovereign data exchange in the maritime sector. The related work is compared against the requirements identified in Section 2, on which basis the research gap for this paper is derived in a structured way. In Section 4, our architecture for a system that enables sovereign and secure data exchange in the maritime domain is presented in detail. Afterwards, in Section 5, the proposed architecture is practically evaluated using an application-oriented scenario from maritime traffic management. The results of the case study are discussed in detail in Section 6. Finally, Section 7 summarizes the obtained results and provides insight into limitations and future work.

3. Related Work

This section presents a comprehensive overview of existing architectures and research activities related to Data Spaces within the maritime domain. It highlights the focus areas of these research initiatives and their respective goals. The section concludes with a differentiation of the objectives and requirements pursued in this paper.

International Data Spaces Association (IDSA)/GAIA-X: The International Data Spaces Association (IDSA) and GAIA-X are key initiatives in the European data economy, each playing a different but complementary role. GAIA-X aims to create a comprehensive European cloud infrastructure that promotes interoperability between different cloud services and providers and creates an open market for digital services [34]. One particular focus is on designing this market in such a way that it complies with European values on data usage in accordance with the General Data Protection Regulation (GDPR) [35]. To this end, GAIA-X develops technical standards, governance models and best practices to ensure a secure environment for data usage [36]. In contrast, IDSA focuses specifically on the development of standards for trusted data exchange, with an emphasis on data ownership, sovereignty and interoperability [29]. In the literature, GAIA-X is often mentioned in the same breath as IDSA. Although the two initiatives have similar intentions, they have a different focus, which means that their standards complement rather than contradict each other [34]. With their defined standards and reference implementations, they provide best practices that should be considered when developing new Data Spaces. In this way, design errors can be avoided and interoperability between different Data Spaces can be further increased in order to reduce data silos.

Marispace-X: One prominent Data Space under the GAIA-X umbrella is Marispace-X, designed specifically for marine big data applications [37]. It focuses on collecting, merging, and processing data from drones, satellites, sensors and other measuring instruments at sea. The aim of Marispace-X is to simplify the sharing and processing of this information so that further services, such as munitions clearance in the North and Baltic Seas, can be developed more efficiently and as many parties as possible can benefit from the existing data instead of having to collect it themselves. Marispace-X is built on the IDSA-RAM framework, supplemented by Federated Services developed by GAIA-X. The project defines five specific use cases in the maritime sector, engaging stakeholders from industry, academia, and government, to explore the potential of the Data Space. These use cases include the internet of underwater things, offshore wind energy, unexploded ordnance in oceans, biological climate protection, and critical infrastructure management [37].

DataPorts: Another related European Data Space initiative is DataPorts, developed under the Horizon 2020 framework programme of the European Union. This project aims to create a secure data platform for information sharing within port communities and infrastructures [38]. The platform connects to existing digital infrastructures in participating ports and explores the application of blockchain technology in the port environment. The DataPorts platform draws inspiration from the IDSA RAM and emphasizes leveraging collected data for analytics, including AI applications, to optimize business processes. While DataPorts tackles challenges related to sovereign and secure data provision, it does not provide solutions for high-availability data exchange between maritime and terrestrial environments.

Virtual Watchtower (VWT): The Virtual Watchtower (VWT) project serves as a cross-industry collaboration tool for supply chain risk management, particularly in response to incidents like the blockage of the Suez Canal by the Ever Given in 2021 [39]. VWT aims to enhance digitalization in end-to-end cargo operations by integrating all actors in the logistics chain [40]. It focuses on data sharing from the cargo owner’s perspective, enabling timely assessments of delays due to external factors, such as storms. Although VWT provides a comprehensive view of the entire supply chain, its scope is primarily geared towards logistics management rather than facilitating information exchange among maritime actors.

Maritime Data Space (MDS): The Maritime Data Space (MDS), initiated in Norway by the research foundation SINTEF, is another significant Data Space built on the IDSA-RAM. Its goal is to establish trusted data exchange between ships and shore-based entities to optimize the activities of maritime actors in compliance with EU reporting requirements [11]. The architecture incorporates a Connector, a service broker, and a certification authority, alongside a Public Key Infrastructure (PKI). The PKI used in the MDS is a result of the CySiMS research project [41]. Although the MDS represents a significant milestone in the development of maritime Data Spaces, several challenges remain. One main problem concerns the Federated Services, which are designed as centralized services. Given the global nature of the maritime domain, relying on a centralized PKI does not seem to be a viable option, as it could lead to bottlenecks and single points of failure. Furthermore, the availability of information from sea-based actors is only guaranteed if there is a connection between sea-based and land-based actors. The continuous availability of information at sea, especially in times without a direct connection, is currently not considered. These aspects require further development in order to improve the resilience and efficiency of maritime Data Spaces.

CISE: The CISE (Common Information Sharing Environment) project, spearheaded by the European Maritime Safety Agency (EMSA), aims to develop an architecture that connects existing legacy systems from various maritime surveillance entities [42]. This architecture is based on so-called adaptors that link nodes established at both national and European levels, ensuring compatibility among diverse legacy systems. The subsequent EFFECTOR project extends CISE’s capabilities by establishing a data-lake-like structure to facilitate big data operations on the raw data collected from these legacy systems [43].

In evaluating the presented projects and initiatives, it becomes evident that most of them are based on the IDSA framework, which provides a robust foundation for data governance and sovereignty, the core concepts of any Data Space. Due to different requirements, the VWT and CISE projects pursue solutions that differ from the IDSA approach. Nevertheless, all the projects presented share the view that, in data ecosystems involving many different parties, data sovereignty is of the highest importance and must be guaranteed by suitable methods such as a decentralized architecture and secure authentication and authorization. However, it is noticeable that all the initiatives presented take the availability of information for granted. This is by no means the case, especially for information from seaside actors, whose connection can be interrupted at any time. It is therefore of great importance to investigate how such decentralized systems for sovereign data exchange can be supplemented so that the availability of information between maritime actors is increased as much as possible. The more up to date the available information is, the more precisely services can be developed on this basis to improve the operational activities of maritime actors.

4. Concept

As already described in the

Section 2.2, the main barrier to sharing information between maritime actors is the concern that they will lose control over their own data and will no longer be able to control who has access to which data under which conditions. The architecture to be developed must therefore be structured in such a way that the sovereignty of the Data Providers is always preserved. Over the past years, Data Spaces have emerged as the foundation for the design of data management architectures in connection with the requirement for data sovereignty. With the decentralized approach, all data stocks remain persistent on the infrastructure of the Data Providers, and a central instance, as in the case of data lakes, can be avoided, so that the Data Provider continuously maintains full control over all its data [

18]. Data are only exchanged between two parties when required, provided the Data Provider approves a request from a Data Consumer. Thus, the concept presented in this paper is also based on a decentralized Data Space architecture (see

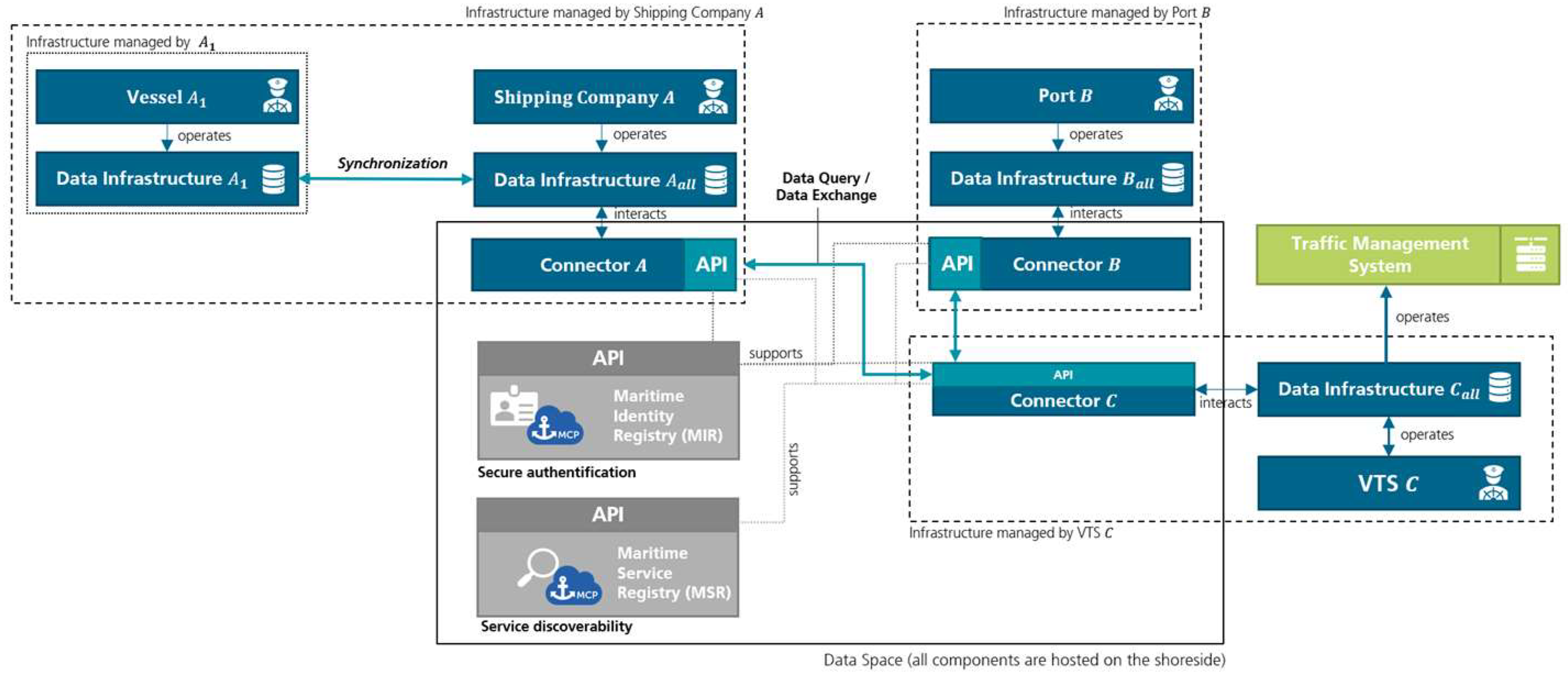

Figure 2).

Figure 2 visualizes the overall architecture of the system for the sovereign and secure exchange of data under maritime requirements, including its individual components and their interactions. The following sections describe the functionality of the architecture and its individual components in detail. In principle, the concept can be divided into a sea-based part (left-hand side) and a land-based part (right-hand side). The proposed architecture foresees that all components of the Data Space are hosted on land. Therefore, there are no Connectors or Federated Services on the seaside. The underlying idea is that every seaside actor (e.g., ship, wind farm, etc.) is assigned to one Connector on the landside. This Connector can, for example, be hosted by a legal entity, such as the shipping company, or by the seaside actor itself. In this way, it is also conceivable that, e.g., a shipping company could provide the data of all its vessels via a single Connector instead of having to operate a separate Connector for each vessel. However, to make the data of the seaside actors available on the landside via the Connector, the data must first find its way from the seaside to the landside infrastructure.

For this purpose, each seaside actor has its own seaside data infrastructure on which data about the actor itself or its environment are recorded (see the seaside Data Infrastructures in Figure 2). Each seaside data infrastructure is always linked to a landside data infrastructure of the corresponding Data Provider (cf. Figure 2), on which all information available on the seaside is mirrored and persisted. The landside data infrastructure of a Data Provider therefore contains all data stocks of its associated seaside data infrastructures. In addition, the Data Provider can persist other information in its landside data infrastructure that has not been collected by seaside actors (such as current information about hinterland connections). Both the seaside and landside data infrastructures are usually databases in which the data can be persisted in a structured way. Overall, this setup offers two major advantages over hosting a separate Connector on each seaside actor:

Scalability: By outsourcing the Connector to the landside, all requests to the Data Provider are also processed on the landside. This has the great advantage that the information from seaside actors only needs to be mirrored once to the landside and is then available there to all Data Consumers. The data volume transmitted between the seaside and the landside is therefore reduced to a minimum. In addition, a Data Provider only has to operate one Connector in this way. If the data were not collected centrally on a data infrastructure on land, each actor (including those at sea) would have to operate its own Connector in order to interact with the Data Space. Depending on the number of actors, this entails a large technical overhead that can be greatly reduced by outsourcing the Connectors onshore.

Availability: By outsourcing the Connector and mirroring the data on the landside, information from seaside actors can also be requested by Data Consumers even if the seaside actors themselves are currently unreachable. If a seaside actor loses its connection, the last version of its information immediately before the connection loss can still be queried in this way. If the data were not mirrored on land, it would not be possible to retrieve the information in such situations. This maximizes the availability of information given the volatile connections at sea.

The entire interaction between the land-based actors follows the standards of the International Data Spaces Association. For this purpose, the Connectors with their standardized interfaces for peer-to-peer communication are supported by additional Federated Services. According to IDSA, a minimal Data Space has at least one Identity Provider and one Service Broker [29]. In the maritime use case examined in this paper, Federated Services that have been explicitly developed for the requirements of the maritime domain should preferably be used. For this purpose, the Maritime Connectivity Platform (MCP) offers the Maritime Identity Registry (MIR) as a maritime Identity Provider and the Maritime Service Registry (MSR) as a maritime Service Broker [44]. Both Federated Services are based on maritime standards from the International Association of Lighthouse Authorities (IALA) and follow the International Maritime Organization (IMO) e-navigation strategy [45]. In the following sections, we take a closer look at the individual components of the architecture and the processes behind them.

4.1. Maritime Federated Services

As described in Section 2.2, a crucial prerequisite for trustworthy data exchange is the possibility of secure authentication and authorization between maritime actors. A navigational warning, for example, is not credible without information about its origin. In the digital world, trust is established chiefly via cryptographic means such as a Public Key Infrastructure, in which digital identities can be authenticated via digitally signed certificates. To tackle the challenges arising from the global scope of the domain and from maritime regulations, Federated Services specifically developed for the maritime domain are needed. A system based on IALA guidelines is the Maritime Connectivity Platform, which provides both an Identity Provider (Maritime Identity Registry) and a Service Broker (Maritime Service Registry) while taking maritime requirements into account. The MSR enables the discovery of maritime services provided by MCP users, and the MIR provides functionalities for authenticating and authorizing participants. Both services face the same problem of globally unique identification. Therefore, IALA developed the Maritime Resource Name (MRN) naming scheme to guarantee the unique identification of maritime entities. The MRN is a subspace of the Uniform Resource Name (URN) namespace and is managed by IALA. The hierarchical structure of an MRN identifier guarantees a clear and unique identification of organizations, stakeholders, services, vessels, persons, and routes (for example, an MRN can describe an Aid to Navigation (AtoN) that is managed by the United States together with its local identifier). Furthermore, MRNs are human-readable, which increases user-friendliness and therefore also acceptance among seafarers. Due to its explicit focus on the maritime domain, the MCP is preferred in this paper to other Federated Service options, such as those of the IDSA itself.
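The hierarchical nature of MRNs can be illustrated with a deliberately simplified parser. The sketch below assumes only the generic form `urn:mrn:<organization id>:<organization-specific string>`, splits the hierarchy on colons, and checks whether an identifier lies within a given name-subspace (as each MIR manages its own subspace). It ignores the full syntax rules of the MRN specification (e.g., percent-encoding and optional query or fragment components), and the identifiers used are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class MRN:
    oid: str              # identifier of the organization owning the namespace
    oss: Tuple[str, ...]  # organization-specific segments, from general to specific


def parse_mrn(text: str) -> MRN:
    """Parse a simplified MRN of the form urn:mrn:<oid>:<segment>[:<segment>...]."""
    parts = text.split(":")
    # The "urn" prefix and the namespace identifier "mrn" are case-insensitive in URN syntax.
    if len(parts) < 4 or parts[0].lower() != "urn" or parts[1].lower() != "mrn":
        raise ValueError(f"not an MRN: {text!r}")
    if any(segment == "" for segment in parts[2:]):
        raise ValueError(f"empty segment in MRN: {text!r}")
    # Remaining segments are compared case-sensitively here (a simplification).
    return MRN(oid=parts[2], oss=tuple(parts[3:]))


def in_subspace(mrn: str, subspace: str) -> bool:
    """True if `mrn` lies inside the hierarchical name-subspace `subspace`."""
    full, prefix = parse_mrn(mrn), parse_mrn(subspace)
    return full.oid == prefix.oid and full.oss[: len(prefix.oss)] == prefix.oss


# Hypothetical identifier of an Aid to Navigation managed by the United States.
aton = "urn:mrn:example:aton:us:0001"
print(parse_mrn(aton))                               # MRN(oid='example', oss=('aton', 'us', '0001'))
print(in_subspace(aton, "urn:mrn:example:aton:us"))  # True
print(in_subspace(aton, "urn:mrn:example:vessel"))   # False
```

Because the identifier is hierarchical, checking membership in a subspace reduces to a segment-wise prefix comparison.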

Maritime Identity Registry (MIR): The MIR is essential for establishing trust: it functions as an Identity Provider and hosts a PKI with a well-established authentication process based on standards such as OAuth 2.0 and OpenID Connect. Each entity registered in a MIR receives a certificate that is bound to its unique MRN. The concept of the MIR foresees that there is not one single central Identity Provider, but that several Identity Providers can exist simultaneously and interoperate. For example, each individual country or port can provide its own MIR instance with which the registered actors can authenticate themselves. In order to prevent individual trust silos (analogous to data silos between Data Spaces), policies can be formulated between the individual instances that describe whether two MIR instances trust each other. This creates a web of trust relationships between the individual instances, so that actors who are not registered in one's own MIR instance can also be trusted transitively. This would be the case, for example, if a first MIR instance trusts a second instance and the second instance trusts a third. Users of the first instance could then still authenticate and authorize themselves with users of the third instance via this chain of trust policies, even though they use two different Identity Providers. This approach maximizes trust between the individual parties in a global domain such as the maritime sector. Additionally, each MIR instance has its own MRN name-subspace, which it manages itself.
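The transitive web of trust can be modeled as reachability in a directed graph whose edges are trust policies. The following sketch is a hypothetical illustration, not the MCP's actual policy mechanism: it assumes that trust is directed and fully transitive, and the instance names are invented. Real deployments may restrict the length of trust chains or require bilateral agreements.

```python
from collections import deque
from typing import Dict, Set

# An edge A -> B means "MIR instance A trusts MIR instance B" (hypothetical policies).
TrustPolicies = Dict[str, Set[str]]


def trusts(policies: TrustPolicies, home: str, issuer: str) -> bool:
    """True if `home` trusts `issuer` directly or through a chain of trust policies (BFS)."""
    if home == issuer:
        return True
    seen = {home}
    queue = deque([home])
    while queue:
        current = queue.popleft()
        for trusted in policies.get(current, set()):
            if trusted == issuer:
                return True
            if trusted not in seen:  # avoid revisiting instances in cyclic trust webs
                seen.add(trusted)
                queue.append(trusted)
    return False


policies: TrustPolicies = {
    "mir-1": {"mir-2"},  # hypothetical instance names
    "mir-2": {"mir-3"},
}
print(trusts(policies, "mir-1", "mir-3"))  # True: mir-1 -> mir-2 -> mir-3
print(trusts(policies, "mir-3", "mir-1"))  # False: trust is directed in this model
```

A breadth-first search is used because it terminates even when trust policies form cycles and finds the shortest trust chain first, which could be useful if a maximum chain length were enforced.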

Maritime Service Registry (MSR): Another challenge arising from the global nature of the maritime domain is finding suitable and valuable services. It may even be challenging to find, e.g., a weather-routing service that operates at the ship’s current location. Therefore, service discovery has to be facilitated to minimize the effort of searching for and finding services for the vessel crew or in the ship-management office. The MCP provides such functionality through the MSR. Maritime services can be registered and searched via a keyword-based or location-based search. If the search is successful, the service’s endpoint and a description of how to consume the service can be looked up in its meta-information. The MSR follows the same decentralized concept as the MIR: if several MSR providers trust each other, services that have not been registered in one's own MSR instance can also be found.
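A combined keyword and location search can be sketched as follows. This is not the MSR's actual API: the record fields, service names and endpoints are hypothetical, and coverage areas are simplified to circles (a real registry would typically store arbitrary geometries). Distances use the haversine great-circle formula on a spherical Earth.

```python
import math
from dataclasses import dataclass
from typing import FrozenSet, List

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass(frozen=True)
class ServiceRecord:
    name: str
    endpoint: str             # hypothetical endpoint URL
    keywords: FrozenSet[str]  # lower-case keywords
    lat: float                # centre of the coverage area in degrees
    lon: float
    radius_km: float          # coverage modeled as a circle (simplification)


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on a spherical Earth."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def search(registry: List[ServiceRecord], keyword: str, lat: float, lon: float) -> List[ServiceRecord]:
    """Services matching the keyword whose coverage area contains the given position."""
    kw = keyword.lower()
    return [
        s for s in registry
        if kw in s.keywords and haversine_km(lat, lon, s.lat, s.lon) <= s.radius_km
    ]


REGISTRY = [  # hypothetical registry content
    ServiceRecord("north-sea-weather-routing", "https://weather.example.org/ns",
                  frozenset({"weather-routing", "weather"}), 56.0, 3.0, 500.0),
    ServiceRecord("med-weather-routing", "https://weather.example.org/med",
                  frozenset({"weather-routing", "weather"}), 38.0, 15.0, 800.0),
]

# A ship in the German Bight looks for a weather-routing service.
print([s.name for s in search(REGISTRY, "Weather-Routing", 54.5, 7.5)])
# ['north-sea-weather-routing']
```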

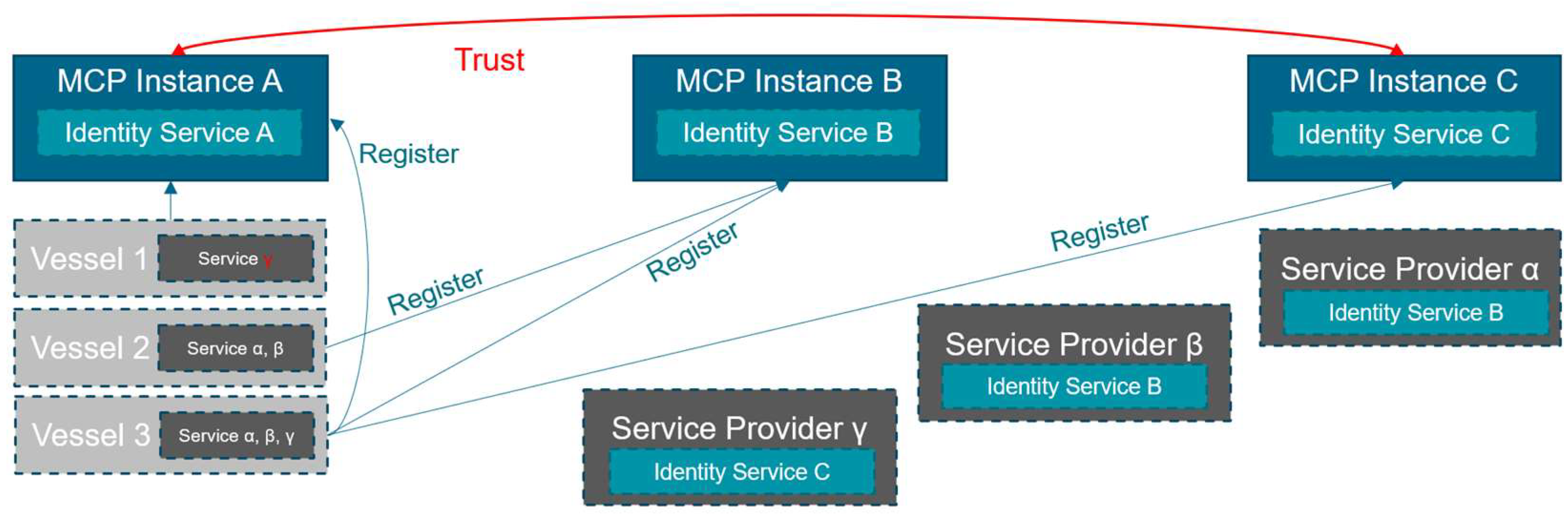

As an example, an MCP setting is given to show how the federated structure of the MCP can be utilized to access services outside one's own Identity and Service Registry. In this example, there are three different MCP instances, each of which provides its own MIR. According to the MCP guidelines, not every MCP instance must provide its own MSR, but for this example we assume that each MCP instance has one. As seen in

Figure 3, in our example there are in total three vessels that are registered in the following MCP instances:

is registered in instance

,

in

and

is registered in

. Additionally, in total there are three different Service Providers α, β and γ, each providing a maritime Service.

α and β are registered within

, and

γ is registered in

. As initially every vessel can only use the services registered with its own Identity Provider,

has access to no service since no service is registered in the

. However,

has access to service α and service β and

has access to service α, β and γ. As described, to enhance the web of trust between maritime actors,

can decide to trust each other. This in turn leads to recognizing an actor or service as trustworthy if it has been registered in the MIR or MSR of the trustworthy

. In this case,

trusts

and vice versa. Through this chain of trust,

γ is seen as trustworthy by

and, thus,

is able to use service γ, which would be seen as not trustworthy without the mechanism of trust relationships.
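The effect of a bilateral trust agreement on service access can be sketched in a few lines. The instance, vessel and service assignments below are hypothetical and chosen only to mirror the mechanism of the example; unlike the transitive MIR model, this sketch assumes that a vessel sees only services registered in its own instance or in an instance with which its own instance has a direct (one-hop) trust agreement.

```python
from typing import Dict, Set

# Hypothetical setting: the MCP instance in which each vessel and each service is registered.
VESSEL_HOME = {"vessel-1": "mcp-1", "vessel-2": "mcp-2", "vessel-3": "mcp-3"}
SERVICE_HOME = {"alpha": "mcp-2", "beta": "mcp-2", "gamma": "mcp-3"}

# Bilateral trust agreements between MCP instances, stored in both directions.
TRUST: Dict[str, Set[str]] = {}


def add_mutual_trust(a: str, b: str) -> None:
    TRUST.setdefault(a, set()).add(b)
    TRUST.setdefault(b, set()).add(a)


def usable_services(vessel: str) -> Set[str]:
    """Services registered in the vessel's own instance or in an instance it directly trusts."""
    home = VESSEL_HOME[vessel]
    visible = {home} | TRUST.get(home, set())
    return {svc for svc, inst in SERVICE_HOME.items() if inst in visible}


print(sorted(usable_services("vessel-1")))  # []
print(sorted(usable_services("vessel-2")))  # ['alpha', 'beta']
add_mutual_trust("mcp-2", "mcp-3")
print(sorted(usable_services("vessel-2")))  # ['alpha', 'beta', 'gamma']
```

Because the agreement is stored in both directions, the vessel registered in the third instance gains access to α and β at the same time.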

4.2. Connectors

In addition to the Federated Services, Connectors play a crucial role in the functionality of Data Spaces. As already outlined in Section 2, various initiatives have established standards for the development of Data Space environments. However, the defined reference architectures do not seek to define one single, universal Connector for every use case. The requirements for a Connector vary depending on the use case, which is why a single Connector is not expedient [29]. Instead, the IDSA and GAIA-X reference architectures define a basic set of requirements for the design of Data Space Connectors, with the goal of enhancing interoperability and reducing further data silos. As described in Section 3, existing Data Spaces and their Connector realizations do not yet meet all the requirements presented in this paper. Specifically, the use of maritime identities and increased availability of sea-based information on land are not yet considered in current realizations [46]. For this reason, a custom lightweight Connector was developed that considers both the standards derived from the IDSA RAM and further maritime standards in order to meet the requirements defined in Section 2.3. To ensure security and user sovereignty, the Connector uses the Maritime Identity Registry (MIR) for authentication and employs widely adopted security libraries for authorizing both individual data items and requests. The developed Connector is equipped with the obligatory standardized IDSA interfaces for data consumption (query) and provision (insert) [29]. Additionally, these interfaces facilitate a seamless connection to diverse data sources, including maritime data, which are made accessible through requests and data synchronization between sea and land. In essence, the Connector acts as a direct proxy for maritime data sources, ensuring the availability of maritime data.

4.3. Enhancing the Availability of Maritime Data

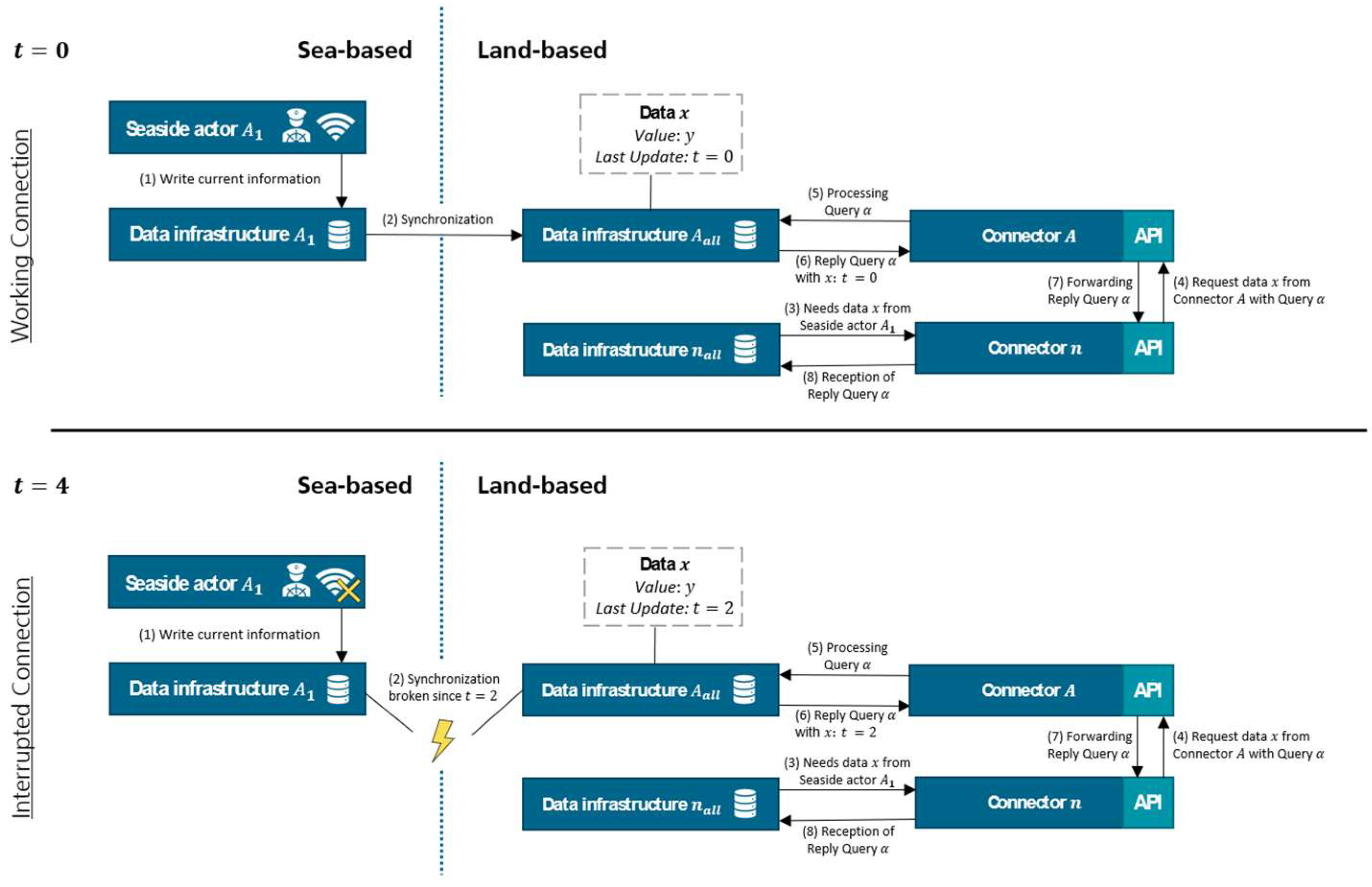

In this section, we describe how data from seaside actors can be queried by landside actors. The principle is shown schematically in Figure 4.

We distinguish between the case in which the seaside actor from which information is to be retrieved is currently reachable (upper part of Figure 4) and the case in which it is not (lower part of Figure 4). In the upper part of the figure, the seaside actor has a stable internet connection at the time under consideration. It therefore synchronizes its data, which is persisted locally in its seaside data infrastructure (step 1), to the landside data infrastructure of its Data Provider (step 2). From there, the data can be requested by any participant of the Data Space via the Data Provider's Connector. A landside actor requires a piece of information from the maritime actor (step 3). To obtain this information, the requester uses its own Connector to send a query α via the standardized interfaces to the Data Provider's Connector (step 4). The request is then processed by the Data Provider's Connector (step 5). This Connector first checks whether the requesting actor really is who it claims to be, or whether someone is merely pretending to be that actor. It does so by validating the cryptographic certificate of the requesting Connector. If the validation is successful, the Connector processes query α and checks whether the requesting actor is allowed to access the requested data.

Access rights are organized with a whitelist: an actor must be on this whitelist to gain access to the respective data. If the actor is not on the whitelist, the query is rejected and the actor receives a message that it does not have access to the requested data. If the requesting actor has access, the data are retrieved from the Data Provider's landside data infrastructure and forwarded to the requester's data infrastructure via the Connectors of both actors (steps 6, 7 and 8). It is important to note that at this time, there was a stable internet connection between the seaside and the landside, so the current data were constantly synchronized between the seaside and landside data infrastructures. In this case, the requesting actor was therefore able to retrieve the latest data from the seaside actor.

The situation is different if the connection is interrupted, as can be seen in the lower part of Figure 4. Here, we consider a later point in time at which the connection of the seaside actor has already been lost for some time. Synchronization of current information is therefore no longer possible, as the seaside actor has no connection to the landside; data were synchronized only up to the moment the connection was lost. This means that the landside data infrastructure holds all data from the seaside actor up to that moment. The data request proceeds in the same way as when the connection is stable, because the entire interaction between the Connectors has been outsourced to the landside. The requesting actor therefore again asks for the data using query α, sending it via its own Connector to the Data Provider's Connector. The latter processes the query again and, if authentication and authorization are successful, returns the most recent entry of the requested data to the requester's Connector. In this case, the returned value dates from the moment the connection was lost, as the synchronization between the seaside and landside data infrastructures has been interrupted since then.
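The landside mirroring and "last known value" behavior can be sketched as follows. The class, attribute names and MRN are hypothetical; the sketch assumes that each reading carries the timestamp at which it was recorded on board, that out-of-order deliveries must not overwrite newer values, and that the requester applies its own use-case-specific maximum age.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Reading:
    value: float
    recorded_at: float  # seconds, timestamp set on board when the value was recorded


class LandsideMirror:
    """Keeps the most recent reading per (MRN, attribute) received from seaside actors."""

    def __init__(self) -> None:
        self._latest: Dict[Tuple[str, str], Reading] = {}

    def sync(self, mrn: str, attribute: str, reading: Reading) -> None:
        key = (mrn, attribute)
        current = self._latest.get(key)
        # Ignore late, older deliveries so they never overwrite a newer reading.
        if current is None or reading.recorded_at >= current.recorded_at:
            self._latest[key] = reading

    def latest(self, mrn: str, attribute: str, now: float) -> Optional[Tuple[Reading, float]]:
        """Last mirrored reading and its age in seconds, or None if nothing was mirrored."""
        reading = self._latest.get((mrn, attribute))
        if reading is None:
            return None
        return reading, now - reading.recorded_at


mirror = LandsideMirror()
ship = "urn:mrn:example:vessel:0001"  # hypothetical MRN
mirror.sync(ship, "speed_over_ground", Reading(12.5, recorded_at=0))
mirror.sync(ship, "speed_over_ground", Reading(12.1, recorded_at=60))
mirror.sync(ship, "speed_over_ground", Reading(13.0, recorded_at=30))  # delayed, older: ignored
# The connection is lost after t = 60 s; a landside actor queries at t = 600 s.
reading, age = mirror.latest(ship, "speed_over_ground", now=600)
print(reading.value, age)  # 12.1 540
MAX_AGE_S = 300  # use-case-specific lifespan chosen by the requester (assumption)
print("fresh" if age <= MAX_AGE_S else "stale")  # stale
```

Returning the age alongside the value leaves the decision about whether the information is still usable to the requesting actor, as described in the text.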

The major advantage of this architecture is the increased scalability and availability of information. Due to the constant onshore mirroring of information from seaside actors, the data are available to other actors not only when the seaside actor itself is reachable, but also when it is not. In the latter case, the requesting actor no longer receives the most current information; however, information always has a lifespan that varies depending on the use case. This means that every actor can still request the latest available information on a seaside actor and decide for itself whether to use the received data based on how current it is. In addition, any information from the seaside actors only needs to be transmitted once to the landside. This is a major advantage, especially given the still expensive and limited bandwidth at sea. The more frequently seaside information is requested by shoreside actors, the greater the benefit of outsourcing to the shore.
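The bandwidth argument can be made explicit with a back-of-the-envelope comparison. The sketch assumes, hypothetically, that without landside mirroring every request would fetch the update from the ship again, whereas with mirroring each update crosses the sea-land link exactly once; the update size, update count and number of requesters are illustrative values only.

```python
def link_volume_mb(update_mb: float, updates: int, requests_per_update: int, mirrored: bool) -> float:
    """Volume sent over the sea-land link.

    With landside mirroring, each update crosses the link exactly once; without it,
    every request is assumed to fetch the update from the ship again.
    """
    transfers = 1 if mirrored else requests_per_update
    return update_mb * updates * transfers


# Hypothetical pattern: 100 updates of 0.5 MB per day, each requested by 5 landside actors.
direct = link_volume_mb(0.5, 100, 5, mirrored=False)
via_mirror = link_volume_mb(0.5, 100, 5, mirrored=True)
print(direct, via_mirror)  # 250.0 50.0
```

Under these assumptions, the saving grows linearly with the number of landside requests per update, which matches the observation that frequently requested seaside information benefits most from outsourcing.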

4.4. Message Management During Interrupted Connections

If the connection between the seaside and landside infrastructure is interrupted, the seaside actors must have a suitable mechanism in place to organize the data generated during the interruption. A connection interruption can last up to several days, so that without a suitable system a large amount of data would accumulate, all of which would then have to be synchronized in no particular order once the connection is re-established. This procedure would not only be inefficient, as outdated information might also be transferred, but would also endanger safety: critical information would not necessarily be synchronized first, so it might be synchronized late, or not at all if another unexpected disconnection occurs.

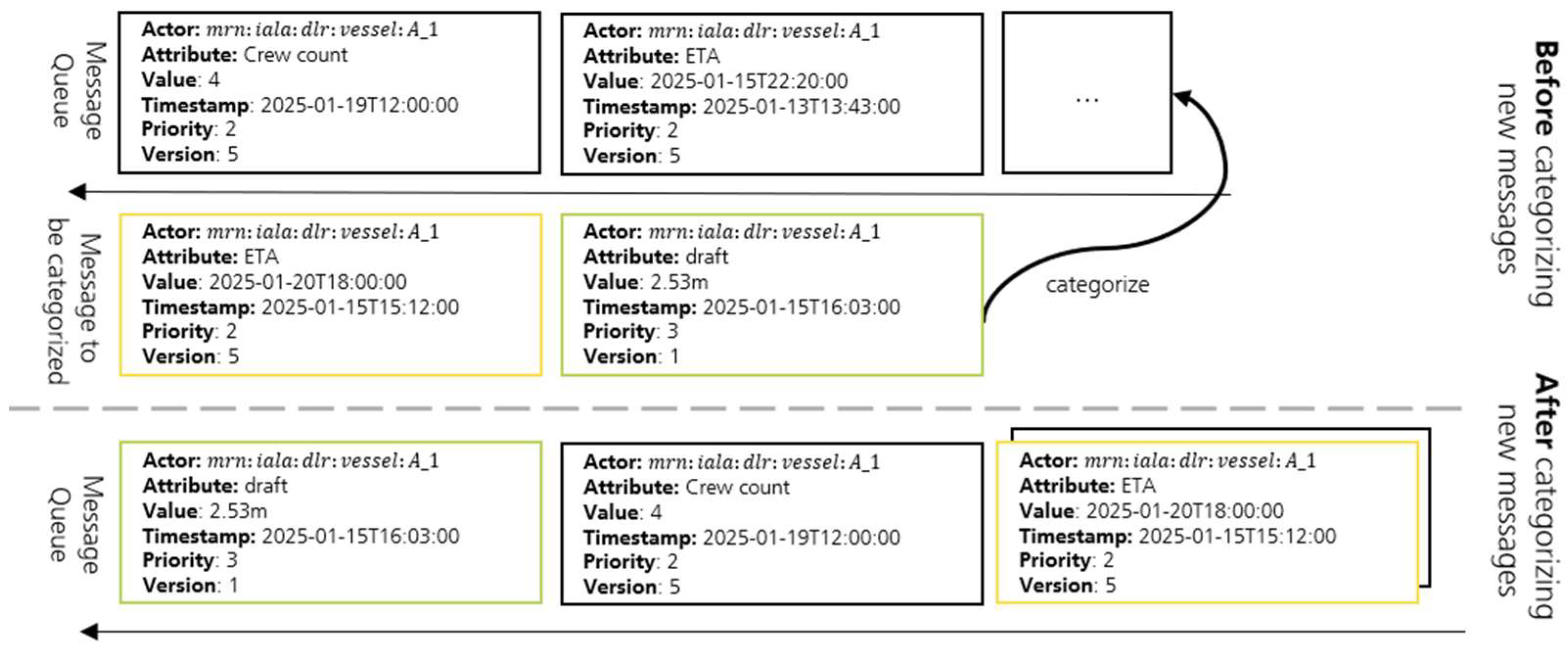

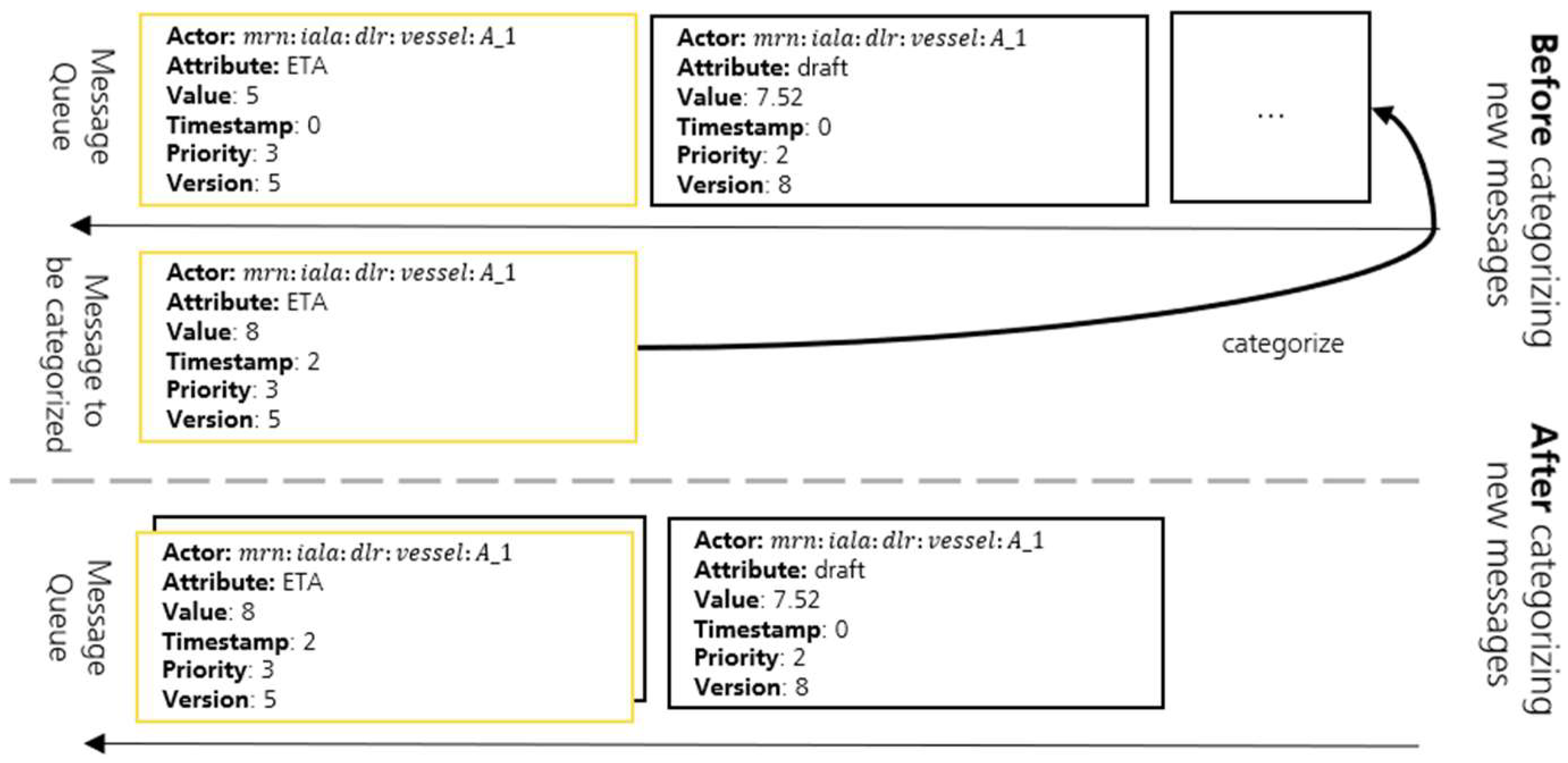

To overcome this issue, a suitable protocol is needed that specifies when which information is transmitted. The functionality of the proposed management system is shown schematically in

Figure 5. A virtual queue is introduced to organize the data arising during a connection loss; once the connection has been re-established, the queued data are transferred successively. An entry in the queue is made up of a sextuple

, where

is the MRN identifier of the actor that generates the information,

describes the type of information,

is the actual data of the attribute,

specifies when the value of the attribute was recorded,

indicates the priority with which the data item is to be synchronized to the landside, and

is a version counter used to detect conflicts when synchronizing the messages.
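As an illustration only, the sextuple can be modelled as an immutable record. The field names below are hypothetical stand-ins for the six components described above, and the MRN and first timestamp are invented; the version increment on a local edit follows the rule described for optimistic replication in Section 4.5.

```python
from dataclasses import dataclass, replace
from datetime import datetime


@dataclass(frozen=True)
class QueueEntry:
    actor_mrn: str          # MRN of the actor that generated the information
    attribute: str          # type of information, e.g. "ETA"
    value: object           # actual data of the attribute
    recorded_at: datetime   # when the value of the attribute was recorded
    priority: int           # higher value = synchronized earlier
    version: int            # counter compared during landside synchronization

    @property
    def key(self) -> tuple:
        # At most one pending entry per (actor, attribute) is kept in the queue.
        return (self.actor_mrn, self.attribute)

    def with_new_value(self, value: object, recorded_at: datetime) -> "QueueEntry":
        # A local edit replaces value and timestamp and increments the version by 1.
        return replace(self, value=value, recorded_at=recorded_at, version=self.version + 1)


e1 = QueueEntry("urn:mrn:example:vessel:1", "ETA", "2025-01-15T22:20:00",
                datetime(2025, 1, 15, 10, 0), priority=2, version=3)
e2 = e1.with_new_value("2025-01-20T18:00:00", datetime(2025, 1, 15, 15, 12))
print(e2.version, e2.key)  # 4 ('urn:mrn:example:vessel:1', 'ETA')
```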

Prioritization: Because bandwidth is very limited and volatile, it is important that safety-critical and other important information can be prioritized for transmission. In practice, the operator of the sea- and landside infrastructure (see

Figure 2

, actor

) decides for all maritime actors belonging to it (

) which data should be prioritized for transmission. As this can vary significantly depending on the use case, we do not give a specific recommendation for the prioritization of messages in this paper. Since the operator of the seaside and landside infrastructure has its own interest in receiving the relevant information as quickly as possible and controls all its seaside actors, there is no conflict of interest in the prioritization of individual messages. Such a conflict would arise in a scenario with several independent actors who all want their own messages synchronized first and therefore all send them with the highest priority, thus undermining the prioritization system. A simple numerical value,

, where

is suitable as a prioritization number, with a higher value indicating a higher priority. Depending on the use case, a different range of priorities may be required, which is why the value

is not specified further. In the schematic example in

Figure 5, the entry

is placed at the front of the queue as the entry has a higher priority than all other messages in the queue.
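The ordering rule (highest priority first) can be realized with a binary heap. The sketch below is one possible implementation, not the authors'; beyond what the text specifies, it assumes that entries with equal priority leave the queue in insertion order. The class name and messages are hypothetical.

```python
import heapq
import itertools


class PriorityOutbox:
    """Queue for the sea-to-land link: higher priority leaves first,
    equal priorities keep their insertion (FIFO) order."""

    def __init__(self) -> None:
        self._heap: list = []
        self._counter = itertools.count()  # tie-breaker preserving insertion order

    def push(self, priority: int, payload: object) -> None:
        # heapq is a min-heap, so the priority is negated to pop the highest first.
        heapq.heappush(self._heap, (-priority, next(self._counter), payload))

    def pop(self) -> object:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


outbox = PriorityOutbox()
outbox.push(1, "position report")
outbox.push(1, "draught update")
outbox.push(5, "safety warning")  # hypothetical high-priority message
order = [outbox.pop() for _ in range(len(outbox))]
print(order)  # ['safety warning', 'position report', 'draught update']
```

The sequence counter also guarantees that payloads are never compared directly, so arbitrary payload types can be queued.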

Update: Prioritization helps to ensure that the most relevant information is synchronized first after a connection is re-established. However, information can also lose relevance while the connection is down. This is the case if the same data item, e.g., the ETA of a vessel, is updated, so that the older value is no longer relevant for most use cases. Since the required bandwidth should be reduced as much as possible, synchronizing the outdated information would not be beneficial. Instead, the entry with the outdated value is overwritten with the new value, so that the queue holds at most one entry per attribute of an actor at any time. In the schematic example, this applies to the ETA entry of the vessel, which is updated from “2025-01-15T22:20:00” to “2025-01-20T18:00:00”: the new entry, with the value 2025-01-20T18:00:00 recorded at 2025-01-15T15:12:00, takes the place of the outdated entry in the queue. This has the advantage that outdated information is not transferred and only the most recent entry needs to be mirrored to the landside.
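A minimal sketch of the update rule, assuming the queue is keyed by (actor MRN, attribute): a newer value replaces the pending entry in its existing slot, so the queue never holds more than one entry per attribute. `CoalescingQueue`, the MRN, the position entry and the first recording time (10:00) are hypothetical; the two ETA values and the 15:12 recording time are taken from the example above.

```python
from datetime import datetime


class CoalescingQueue:
    """Keeps at most one pending entry per (actor, attribute). A newer value
    overwrites the older one in place instead of being appended."""

    def __init__(self) -> None:
        # dicts preserve insertion order; assigning to an existing key keeps its slot
        self._pending: dict = {}

    def put(self, actor_mrn: str, attribute: str, value: object,
            recorded_at: datetime, priority: int = 0) -> None:
        self._pending[(actor_mrn, attribute)] = {
            "value": value, "recorded_at": recorded_at, "priority": priority}

    def drain(self) -> list:
        """Return pending entries, highest priority first, and empty the queue.
        sorted() is stable, so equal priorities keep their queue order."""
        items = sorted(self._pending.items(), key=lambda kv: -kv[1]["priority"])
        self._pending.clear()
        return items


q = CoalescingQueue()
vessel = "urn:mrn:example:vessel:1"  # hypothetical MRN
q.put(vessel, "ETA", "2025-01-15T22:20:00", datetime(2025, 1, 15, 10, 0), priority=2)
q.put(vessel, "position", (53.9, 8.7), datetime(2025, 1, 15, 11, 0), priority=1)
q.put(vessel, "ETA", "2025-01-20T18:00:00", datetime(2025, 1, 15, 15, 12), priority=2)
pending = q.drain()
print([(key[1], entry["value"]) for key, entry in pending])
# [('ETA', '2025-01-20T18:00:00'), ('position', (53.9, 8.7))]
```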

4.5. Synchronization of Message Queues to Landside Infrastructure

The question that remains is how to mirror the entries from the queue to the landside infrastructure without causing conflicts during synchronization. Conflicts can arise, for example, if several maritime actors want to update the same data item in the central database. In this paper, we propose two ways in which an infrastructure operator can deal with this challenge.

Consistency per seaside actor: The first and simplest option is to avoid write conflicts by allowing each seaside actor to mirror its own data completely to the landside infrastructure. If several seaside actors update the same data attribute, a separate entry is persisted on the landside infrastructure for each of them, holding the value perceived by the respective actor. The advantage of this approach is that no write conflicts need to be resolved, as each seaside actor has its own write area on the landside infrastructure in which it can synchronize its data. The main disadvantage is that there may now be several entries for the same attribute. When the value of such an attribute is queried, several entries may be returned, and the requester must first assess which of them to trust.
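The first option can be sketched as a store in which every writer owns a separate slot per attribute. The class name, MRNs, attribute key and wind-speed values are hypothetical and serve only to show that writes never collide while reads can return several values.

```python
from collections import defaultdict


class PerActorStore:
    """'Consistency per seaside actor': each writer owns its own slot per
    attribute, so writes never conflict, but a read may return several values."""

    def __init__(self) -> None:
        self._data = defaultdict(dict)  # attribute -> {writer MRN: value}

    def write(self, writer_mrn: str, attribute: str, value: object) -> None:
        self._data[attribute][writer_mrn] = value  # only touches the writer's own slot

    def read(self, attribute: str) -> dict:
        # Returns every writer's view; the requester decides which one to trust.
        return dict(self._data.get(attribute, {}))


store = PerActorStore()
store.write("urn:mrn:example:vessel:1", "area-42/wind-speed", 14.0)
store.write("urn:mrn:example:vessel:2", "area-42/wind-speed", 15.5)
print(store.read("area-42/wind-speed"))
```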

Consistency per land-side infrastructure: Another option is to keep only one valid entry per data attribute on the land-side infrastructure. For the synchronization of messages in the maritime domain, so-called optimistic replication is suitable, which does not assume that the information bases of all actors are consistent with each other at all times. Optimistic replication is used, for example, in Version Control Systems (VCS). Especially when synchronizing data between sea- and land-based actors, a constant connection is not guaranteed. Optimistic replication keeps the individual systems operational even if the connection is interrupted, since, unlike pessimistic replication, it does not require constant synchronization between the actors. The challenge of optimistic replication, however, is that several actors can process the same message independently of each other, so conflicts can arise during synchronization with the landside infrastructure. These conflicts must be resolved before the message can be updated on the landside infrastructure. To reliably identify whether a planned update would result in a conflict, optimistic replication assigns a version number to each message. For example, two actors may want to update the same data attribute on the landside infrastructure, and both have the same version of this attribute persisted locally. Now assume that the connection is lost for both actors and that, despite the lost connection, they are still allowed to edit their local copy of the attribute. To do this, the attribute value of the sextuple is updated and the version is incremented by 1. As soon as one of the two actors has re-established a connection, the version number of its updated message is compared with the version number of the message in the landside database.
As the version number of the updated entry is 1 higher than the version number of the entry in the landside infrastructure, the shoreside entry is overwritten with this actor's entry. When the other actor also re-establishes its connection and wants to synchronize its updated data, it notices that the version number of its own entry is the same as that of the entry in the landside infrastructure. In this case, a conflict arises because the entry was updated on the basis of an outdated version, and the conflict must be resolved before the other actor's entry can be synchronized to the landside infrastructure. In the maritime use case, for example, the timeliness of an entry is suitable for resolving the conflict, so that the more recent message overwrites the older one. However, other approaches are also feasible, such as classifying entries from authorities as more valuable, so that they overwrite information from supposedly less credible actors.
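The version check and the timeliness rule described above can be combined in a short sketch. It is an illustration under stated assumptions, not the authors' implementation: a single landside entry per attribute, an update without conflict if its version is exactly one higher, and otherwise the more recently recorded value wins (one of the resolution strategies mentioned above). All names and values are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict


@dataclass(frozen=True)
class Versioned:
    value: object
    recorded_at: datetime
    version: int


class LandsideStore:
    """Single valid entry per attribute, synchronized with optimistic replication."""

    def __init__(self) -> None:
        self._data: Dict[str, Versioned] = {}

    def get(self, attribute: str) -> Versioned:
        return self._data[attribute]

    def sync(self, attribute: str, incoming: Versioned) -> str:
        current = self._data.get(attribute)
        if current is None or incoming.version == current.version + 1:
            # The local edit was based on the latest landside version: no conflict.
            self._data[attribute] = incoming
            return "applied"
        # Conflict: the local edit was based on an outdated version.
        # Assumed resolution rule: the more recently recorded value wins.
        if incoming.recorded_at > current.recorded_at:
            self._data[attribute] = Versioned(incoming.value, incoming.recorded_at,
                                              current.version + 1)
            return "conflict: incoming value kept"
        return "conflict: landside value kept"


land = LandsideStore()
land.sync("vessel-1/ETA", Versioned("2025-01-20T17:00:00", datetime(2025, 1, 15, 9, 0), 3))
# Two actors edit version 3 offline, each producing version 4.
a = Versioned("2025-01-20T18:00:00", datetime(2025, 1, 15, 15, 12), 4)
b = Versioned("2025-01-20T18:15:00", datetime(2025, 1, 15, 16, 0), 4)
print(land.sync("vessel-1/ETA", a))  # applied
print(land.sync("vessel-1/ETA", b))  # conflict: incoming value kept
```

Replacing the timestamp comparison with, for instance, a ranking of actor credibility would implement the authority-based alternative instead.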

5. Application and Evaluation

Given the broad range of potential applications for the proposed system, we evaluate it by illustrating several use cases with a system for optimizing maritime traffic operated by a Vessel Traffic Service.

5.1. Introduction to Case Study

A Vessel Traffic Service (VTS) would like to use a Traffic Management System (TMS) to improve the coordination of vessels entering the port. On the basis of a range of information, the TMS derives recommendations for action for the individual traffic participants that optimize maritime traffic in terms of waiting times in the roadstead and emissions. To do so, the TMS relies on data about the current traffic situation, such as position, speed, ETA, unexpected delays, weather information and information from the port about available services, such as pilots, moorings, cranes and connections to the hinterland. To derive recommendations for action, the coordination problem is first modeled mathematically and then solved using genetic algorithms. When deriving the recommendations, the TMS concentrates on adjusting the speed of the individual traffic participants, which receive a prompt from the system to increase or reduce their current speed accordingly. For practical reasons, the TMS does not adjust the route or other parameters. In macroscopic terms, following the recommendations for action optimizes the overall traffic situation. The solution generated by the TMS is robust to the extent that ignoring individual recommendations does not immediately cause the solution as a whole to collapse. Nevertheless, the TMS continuously monitors the current traffic situation with a view to increasing efficiency. If the system detects that too many traffic participants are not following the recommendations for action or that other environmental conditions, such as the available port services, have changed unexpectedly, the TMS automatically generates a new solution that takes the deviating environmental parameters into account.
As already mentioned, the TMS is only able to derive recommendations for action for the traffic participants if it also has access to the required heterogeneous data basis. The aim of the case study is to show how the data management system from

Section 4

can support the operational provision of data-driven services using an exemplary service for optimized traffic coordination. For this purpose, the concrete evaluation scenario is first described below, before the functionality of the prototype is then explained on the basis of three defined user stories.

5.2. Evaluation Scenario: Traffic Management Optimization Problem

To illustrate how the presented concept works in practice, we focus on a simple traffic management optimization problem with only one traffic participant that is to be solved by the TMS. The evaluation scenario comprises a total of three actors who depend on exchanging data with each other in order to optimize the traffic situation (cf.

Figure 6).

The actors are , which has up-to-date information about its , , with its port-related information, and , which ultimately wants to optimize traffic by operating the TMS. The simple scenario assumes that the incoming wants to enter the Elbe estuary at a speed of at time , so that it will enter the port at time as agreed with the port. At time , however, there is an unexpected delay in the port, so that the berth intended for will not be available at time . The planned berthing time for is therefore adjusted by to time . would now like to optimize the current traffic situation in order to ensure that arrives at the port just in time and that waiting times are kept to a minimum. For this purpose, uses the TMS, which can derive how must adjust its speed based on the current position of and the available berths of in order to enter the port without waiting time. Based on the current position of and the time of the next available berths in , the service suggests reducing the speed of the vessel to . The recommended action is communicated by to so that the vessel adjusts its speed accordingly and can enter at time without waiting. The prerequisite for deriving the recommendations for action from the TMS is that the can access the information from and . For this purpose, the data management system architecture presented in this paper is used to enable secure and sovereign data exchange between these actors. Based on the acquired data, the can then operate its data-driven TMS service, as described, for traffic optimization.
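For this single-vessel scenario, the core of the recommendation reduces to simple arithmetic: the just-in-time speed is the remaining distance divided by the time left until the new berthing time, v = d / (t_berth - t_now). The sketch below assumes a known remaining distance along the track and a constant speed; it is not the genetic-algorithm TMS described in Section 5.1, and the distance and times are hypothetical.

```python
def just_in_time_speed(distance_nm: float, now_h: float, berth_time_h: float) -> float:
    """Constant speed (knots) that brings the vessel to the berth exactly at berth_time_h.

    distance_nm: remaining distance along the track in nautical miles
    now_h, berth_time_h: times in hours on a common clock
    """
    remaining_h = berth_time_h - now_h
    if remaining_h <= 0:
        raise ValueError("the berthing time must lie in the future")
    return distance_nm / remaining_h


# Hypothetical numbers: 24 nm to go at 10:00, berth postponed from 12:00 to 13:00.
print(just_in_time_speed(24.0, 10.0, 12.0))  # 12.0
print(just_in_time_speed(24.0, 10.0, 13.0))  # 8.0
```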

5.3. Setup for Provision of Required Data Basis

To operate the TMS,

, as described in Section 5.2, requires access to data on the ETA and velocity of

and the current port utilization of

. The information is distributed across two parties, namely

and

. In accordance with the concept from

Section 4, all actors use a Data Space Connector for uniform and sovereign data exchange, which is connected to their own data infrastructure (see

Figure 7).

collects data from its seaside

, which is why data are synchronized from Data Infrastructure

to Data Infrastructure

in accordance with the concept presented in

Section 4.3. Therefore, there is no direct data exchange between

and

. Instead, the

obtains the required information via the Connector from

. To increase the global discoverability and secure authentication and authorization of the data exchange, the Connectors are supported by the Maritime Service Registry and Maritime Identity Registry. For the evaluation, the traffic data of

and the entire current traffic situation are simulated by the Maritime Traffic Simulation (MTS) of the German Aerospace Center. The MTS makes it possible to realistically simulate entire traffic situations with a large number of vessels using ship-type-dependent physics models and intelligent vessel behavior. As output, the MTS generates Automatic Identification System (AIS) and radar messages in NMEA0183 format, which serve as a basis for the validation and verification of maritime systems. In the following, the functionality of the developed architecture is shown based on the described setup, demonstrating how (a) data can be found and queried, (b) data can be exchanged in a sovereign and secure way, and (c) the provision of information can be guaranteed even in the case of unstable connections at sea.

5.4. Finding Necessary Data

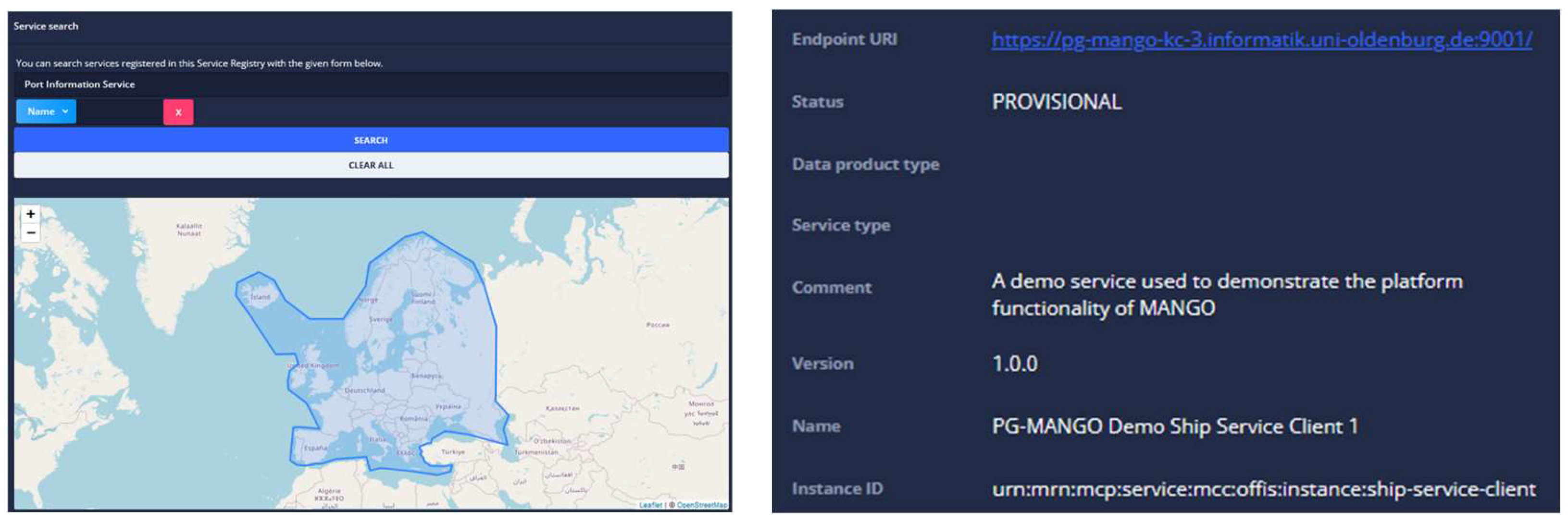

The Maritime Service Registry is used as a broker to find the required information. All services (and therefore also Connectors) can be registered by the service providers in the MSR in accordance with IALA Guideline G1128. If a potential service consumer is looking for specific data, the MSR can be used as a first point of contact to find suitable Connectors (cf.

Section 2.3, R4, finding data). For this purpose, users can use an API provided by the MSR or a graphical user interface in which they can formulate structured queries. In this way, the user can search the registered services with regard to various attributes such as name, MRN, geographical coverage and status (see

Figure 8).
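To make the attribute-based search concrete, the following in-memory sketch filters registered services by name, geographical coverage and status. It does not reproduce the MSR's actual API; the record fields, the simplified bounding-box coverage, the status value "active" and all registrations are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class ServiceRecord:
    name: str
    mrn: str
    coverage: Tuple[float, float, float, float]  # (min_lat, min_lon, max_lat, max_lon)
    status: str
    endpoint: str


def search(records: List[ServiceRecord], name_contains: Optional[str] = None,
           position: Optional[Tuple[float, float]] = None,
           status: Optional[str] = None) -> List[ServiceRecord]:
    """Filter registered services by name, geographical coverage and status."""
    hits = []
    for r in records:
        if status is not None and r.status != status:
            continue
        if name_contains is not None and name_contains.lower() not in r.name.lower():
            continue
        if position is not None:
            lat, lon = position
            min_lat, min_lon, max_lat, max_lon = r.coverage
            if not (min_lat <= lat <= max_lat and min_lon <= lon <= max_lon):
                continue
        hits.append(r)
    return hits


registry = [  # hypothetical registrations
    ServiceRecord("Port berth availability", "urn:mrn:example:port:berths",
                  (53.4, 9.6, 53.6, 10.1), "active", "https://port.example/connector"),
    ServiceRecord("Fleet vessel data", "urn:mrn:example:fleet:connector",
                  (50.0, 0.0, 60.0, 15.0), "active", "https://fleet.example/connector"),
]
print([r.mrn for r in search(registry, position=(53.9, 8.7), status="active")])
# ['urn:mrn:example:fleet:connector']
```

The returned records carry the endpoint, which corresponds to the step in which the user, after choosing a service, addresses it directly.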

If the user decides on a service, they can display further meta-information and the endpoint of the service and subsequently address the service directly. It is crucial that as many publicly discoverable Connectors as possible are registered in the MSR, so that the MSR covers the widest possible range of services. In the presented architecture, the use of the MSR is optional; if the Data Consumer already knows a suitable Data Provider and its endpoint, the search in the MSR can be skipped. In the presented case study, the uses the MSR to find a source that has information about . The MSR suggests the Connector from to the for this purpose. The source for the other data required to operate the TMS is already known to the (Connector from ). The therefore does not need to request any further information about from the MSR.

5.5. Sovereign and Secure Data Sharing

Owing to the decentralized nature of the presented architecture, data can be used to provide data-driven services without the actors having to migrate their own databases to a central infrastructure. The Data Providers can continue to persist their heterogeneous data on their local data infrastructure (see

Figure 7, Data Infrastructure

,

,

) and provide the data requested by a Data Consumer via their Connector only when required (cf.

Section 2.3, R2, connection of heterogeneous data sources). In the evaluation scenario, the

requires information about

and the existing port services of

to operate its TMS service. The

formulates one data request to

and another request to

to query the required information basis. In this way, both Data Providers receive a request for their data from

and can decide individually whether they want to provide the requested information to

or not. In contrast to a central data infrastructure, they therefore always retain full control over access to their data (cf.

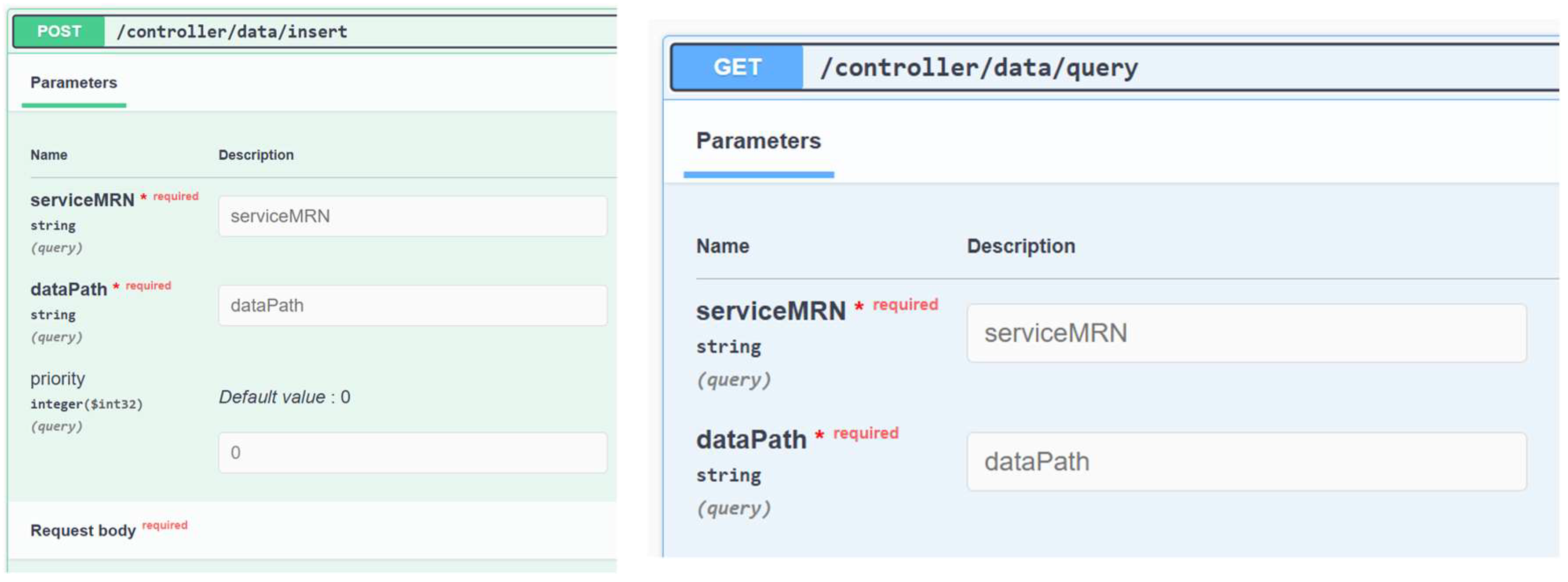

Section 2.3, R1, sovereign and secure data provision). The access rights are realized via a whitelist of the respective Connector. All data requests and data exchanges take place entirely via the standardized Connectors and their uniform interfaces (see

Figure 9). In this way, a request is possible even if, for example,

enters

for the first time and is completely unknown to

. Thanks to the standardized Connectors, they can still exchange information with each other in a uniform way without having to align their IT infrastructures beforehand. The central interfaces of the Connector are the

insert- and

query-API. The interfaces are aligned with the IDSA RAM 4.0. With the

insert-API, Data Providers can make new or updated data available to Data Consumers via their own Connector. The API takes three parameters:

serviceMRN specifies the MRN of the Data Provider's own Connector through which the inserted data are made available; the

dataPath specifies a JSON path under which the inserted data are stored in the Connector; priority is an optional parameter with which the Data Provider can configure the relevance of the data item. The priority parameter is mainly used for the synchronization of messages between sea and land.
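The insert-API parameters can be illustrated by assembling a request body. The JSON field layout, the separate `value` field, the JSONPath convention (paths starting with `$`), the function name, the MRN, the path and the priority of 2 are all assumptions for illustration; the Connector's actual request format may differ.

```python
import json
from typing import Optional


def build_insert_request(service_mrn: str, data_path: str, value: object,
                         priority: Optional[int] = None) -> str:
    """Assemble a hypothetical JSON body for an insert-API call."""
    if not service_mrn.startswith("urn:mrn:"):
        raise ValueError("serviceMRN must be an MRN of the form urn:mrn:...")
    if not data_path.startswith("$"):
        raise ValueError("dataPath is assumed to be a JSONPath expression starting with '$'")
    body = {"serviceMRN": service_mrn, "dataPath": data_path, "value": value}
    if priority is not None:  # priority is optional
        body["priority"] = priority
    return json.dumps(body)


request = build_insert_request("urn:mrn:example:port:connector",
                               "$.berths.availability", 8, priority=2)
print(request)
```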

In the evaluation scenario,

wants to update its current berth availability at time

and therefore calls the insert-API. The exemplary call is shown in

Figure 10, left. The port with the MRN “

” updates its berth availability, which is located behind the JSON path “

”, with the value

and transmits it with a priority of

. The

then queries the updated data independently using the

query-API in order to consider the value during the traffic coordination. For a valid query, the

query-API requires the serviceMRN of the Connector to be accessed and the dataPath that refers to the data item to be requested. The

can derive both from the MSR and the meta-information provided. The request made by the

is shown in

Figure 10 on the right.

The asks the Connector “

” for its value, which is stored under the attribute

. The Connector of

processes the received request and uses the whitelist to check whether the

has access to the data attribute or not. The

has access to the data item and receives the updated value 8 in response, which it then uses to operate the TMS. The

also sends a corresponding query request to the Connector of

in order to receive current information about

.
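The access check performed by the port's Connector can be sketched as follows, assuming a whitelist that maps each data path to the MRNs of permitted consumers. Authentication via MIR certificates is not modelled here; the consumer MRN is assumed to have been verified already. The class, the MRNs and the data path are hypothetical; only the returned value 8 is taken from the scenario.

```python
from typing import Dict, Set


class Connector:
    """Minimal stand-in for a Connector: stores data under data paths and
    answers queries only for whitelisted consumers."""

    def __init__(self, service_mrn: str) -> None:
        self.service_mrn = service_mrn
        self._data: Dict[str, object] = {}
        self._whitelist: Dict[str, Set[str]] = {}  # data path -> allowed consumer MRNs

    def insert(self, data_path: str, value: object) -> None:
        self._data[data_path] = value

    def allow(self, data_path: str, consumer_mrn: str) -> None:
        self._whitelist.setdefault(data_path, set()).add(consumer_mrn)

    def query(self, consumer_mrn: str, service_mrn: str, data_path: str) -> object:
        # consumer_mrn is assumed to be authenticated already (e.g. via an MIR certificate).
        if service_mrn != self.service_mrn:
            raise LookupError("query addressed to a different Connector")
        if consumer_mrn not in self._whitelist.get(data_path, set()):
            raise PermissionError(f"{consumer_mrn} may not read {data_path}")
        return self._data[data_path]


port = Connector("urn:mrn:example:port:connector")
port.insert("$.berths.availability", 8)
port.allow("$.berths.availability", "urn:mrn:example:vts")
print(port.query("urn:mrn:example:vts", "urn:mrn:example:port:connector",
                 "$.berths.availability"))  # 8
```

Keeping the whitelist inside the provider's Connector reflects the point that each Data Provider decides individually who may read which data.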

All communication between the Connectors is encrypted using the X.509 certificates issued by the Maritime Identity Registry. By using the MIR certificates, the actors can securely authenticate each other cryptographically and thus ensure that they are communicating with the intended actor (cf.

Section 2.3, R3, integrity and certification). Encryption and authentication significantly reduce the risk of the message exchange being read or even manipulated by unwanted third parties.

5.6. Continuous Provision of Required Data

During the provision of information,

experiences an unexpected connection loss at time

, so that IP-based communication with the vessel is no longer possible. However, in accordance with the presented architecture, the vessel had already synchronized its data from its local

to the shore-side infrastructure of its

at time

. In this situation, no up-to-date values can be retrieved from the vessel at time

but all information up to the time of the last synchronization

can still be accessed. The

therefore still has the option at time

to request the Connector from

and thus retrieve the information from

at time

and thus continue to operate its TMS on the available information basis (cf.

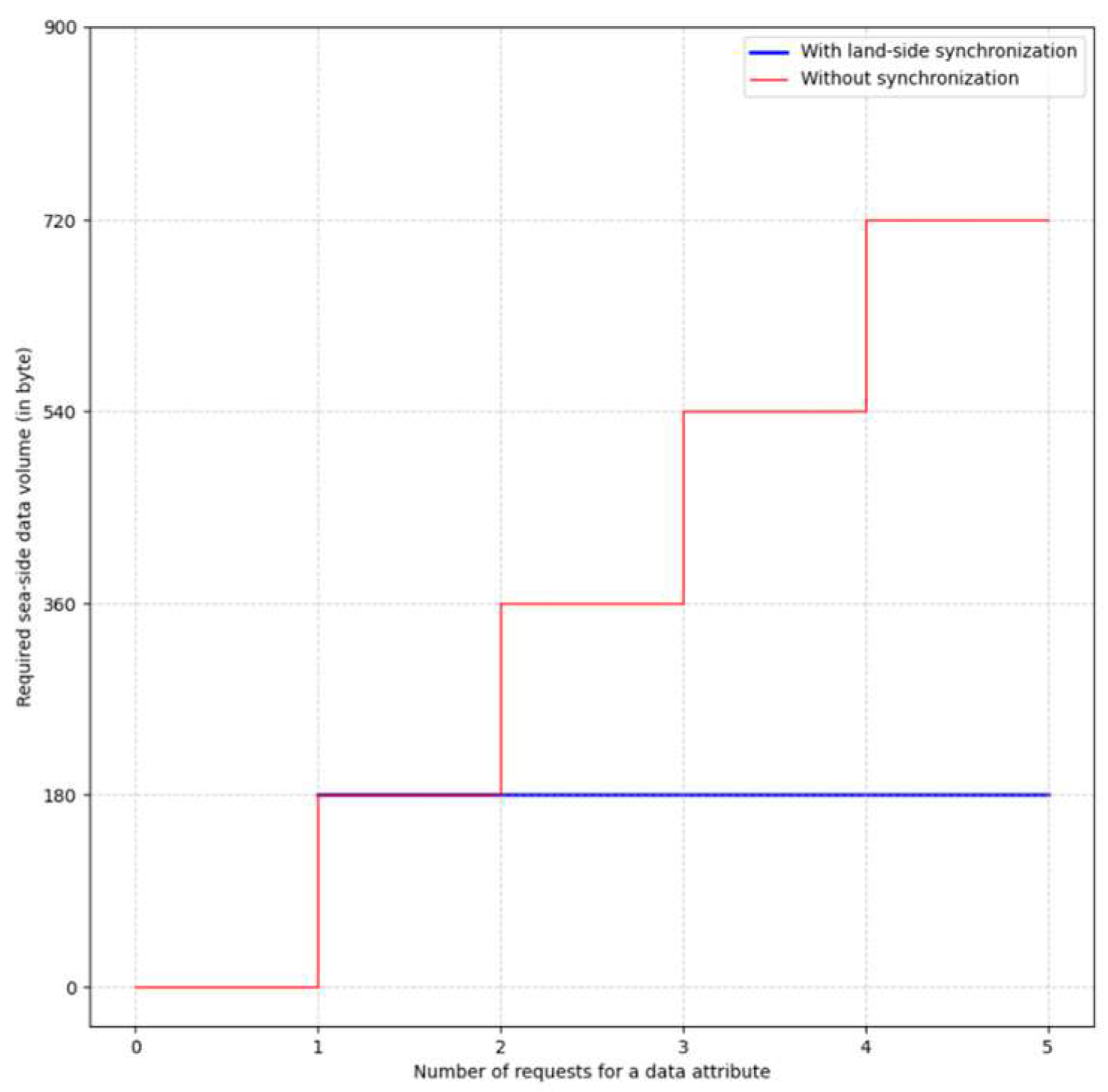

Section 2.3, R5, availability of maritime data). Since all information is always requested and exchanged via the shore-side infrastructure, the break-even point with regard to the required seaside data volume is already reached with the first request for a data item (see

Figure 11). Each further request saves another seaside transmission, so the reduction in data volume grows linearly with the number of requests. Only if a data item is not requested at all are more data exchanged between the data infrastructures.
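The break-even argument can be checked with a simple count of how often one data item crosses the sea-land link. The sketch assumes that each transmission carries the item exactly once and ignores the size of the query messages; it is a simplification, not the measurement behind Figure 11, and the function name is hypothetical.

```python
def seaside_transmissions(requests: int, mirrored: bool) -> int:
    """How often one data item crosses the sea-land link.

    Simplifying assumption: each transmission carries the item once and the
    query messages themselves are ignored.
    """
    if mirrored:
        return 1  # pushed ashore once; all requests are answered by the landside
    return requests  # every request is forwarded to the vessel


for n in range(4):
    direct, mirrored = seaside_transmissions(n, False), seaside_transmissions(n, True)
    print(n, direct, mirrored, direct - mirrored)
# 0 0 1 -1
# 1 1 1 0
# 2 2 1 1
# 3 3 1 2
```

The last column (transmissions saved) is negative only for zero requests, equals zero at the first request and then grows by one per request.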

During the disconnection,

can continue to update its attributes locally. To do this, it follows the protocol described in

Section 4, in which only the most recent value for an attribute is persisted in the queue. Older values are overwritten and not synchronized in order to reduce the total bandwidth required (see

Figure 12).

When the internet connection is re-established, the queue of

is then synchronized to the shore-side

. During the transfer of the queue, a check is made to see whether any synchronization conflicts will occur when updating the shore-side values.

synchronizes its data according to the principle of “Consistency per land-side infrastructure” described above, where there is only one valid value for each data item, regardless of the source from which it originates. Therefore, when updating the planned ETA of

, the version number of the message from the queue must be compared with the version number in the

. If the version number of the message is 1 higher than the existing version number of the message in the data infrastructure A_all, the message was not updated by any other actor during the disconnection. In this case, there is no conflict and the message can be transferred to

.

7. Conclusions

In this paper, we discussed the need for a data management system that fulfills the requirements for data-driven services to support maritime operational activities. Based on the current literature, we first identified the research gap discussed in this paper and formulated requirements for a corresponding maritime data management system. On this basis, a system architecture was derived that considers the interests of the actors involved in the exchange of maritime data and addresses challenges such as unpredictable disconnections and low bandwidth. The architecture is based on a decentralized Data Space structure in order to preserve the sovereignty of the actors. In addition, concepts have been integrated that increase the availability of information between sea and land and at the same time reduce the data volume required on the seaside. A practical case study demonstrated its functionality based on a maritime traffic management scenario. The presented architecture closes the research gap for a data management concept that allows maritime actors to securely exchange their data without compromising their sovereignty. The concept also explicitly addresses the challenges posed by the involvement of sea-side actors and maximizes data availability to support the provision of data-driven services. In summary, the proposed architecture represents a holistic approach for a data management system in the maritime domain that enables maritime actors to securely and sovereignly exchange data with each other despite low available bandwidth and unexpected connection losses in order to reliably operate their data-driven services.

Limitations and Future Work

Nevertheless, some limitations of the current architecture should be mentioned, which can be further investigated in future research:

Compliance with further standards: The architecture presented in this paper initially focuses on the use of standards from the maritime domain (such as IALA G1128, G1161, R1023, among others) in order to first gain acceptance in the maritime community. Disregarding maritime standards would doom the proposed data management system to failure, even in the context of smaller use cases, as many already deployed systems are based on these standards and would therefore be incompatible with the proposed architecture. Nevertheless, in the future, it would be desirable to take a closer look at the standards of ongoing Data Space initiatives. However, a major challenge in simultaneously considering maritime standards and the guidelines of established Data Space initiatives, such as GAIA-X and the IDSA, lies in their partial contradictions. In an initial analysis, some contradictions were already identified between the maritime standards and the IDSA RAM, for example, in the formats used for identifiers (e.g., MRNs in the maritime domain and Universally Unique Identifiers (UUIDs) in the IDSA RAM). To ensure conformity with the standards of both domains, the concept would have to be extended to include further IDSA standards, and a compromise would have to be found for each contradiction. In many cases, these contradictions can be resolved by a matching approach, e.g., between MRNs and UUIDs. Conformity between the standards of both domains would be desirable in principle, as it could increase interoperability with other related Data Spaces and prevent data silos.
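One possible matching approach, assumed here for illustration and not taken from either standard, is to derive a name-based UUID (version 5) from each MRN, so that every party computes the same UUID for the same MRN, and to store the reverse mapping because name-based UUIDs cannot be inverted. The namespace URL, class name and MRN are hypothetical.

```python
import uuid

# Hypothetical namespace UUID for the data space; any fixed UUID would do.
MRN_NAMESPACE = uuid.uuid5(uuid.NAMESPACE_URL, "https://dataspace.example/mrn")


class IdentifierMap:
    """Matches MRNs to UUIDs. UUIDv5 is deterministic, so every party derives the
    same UUID for an MRN, but it is one-way, so the reverse lookup is stored."""

    def __init__(self) -> None:
        self._uuid_to_mrn: dict = {}

    def to_uuid(self, mrn: str) -> uuid.UUID:
        u = uuid.uuid5(MRN_NAMESPACE, mrn)
        self._uuid_to_mrn[u] = mrn
        return u

    def to_mrn(self, u: uuid.UUID) -> str:
        return self._uuid_to_mrn[u]  # KeyError if the UUID was never registered


ids = IdentifierMap()
u = ids.to_uuid("urn:mrn:example:vessel:1")
print(u.version, ids.to_mrn(u))  # 5 urn:mrn:example:vessel:1
```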

Serialization to reduce data volume: In addition to increasing conformity with existing standards, the size of the exchanged messages could be further reduced from a technical perspective by using suitable serialization methods. Approaches that do not transmit the schema in the payload of each individual message, such as Protocol Buffers (Protobuf), are particularly suitable for this. In this way, the actors involved would only have to exchange the schema of a message once; each subsequently transmitted payload is then interpreted using this previously exchanged schema. Especially for small messages, the schema information can make up a large part of each message, and suitable serialization methods avoid this overhead.
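The effect of sending only values against a previously agreed schema can be illustrated with Python's standard `struct` module. This is a stand-in for the principle, not the Protocol Buffers wire format; the position-report fields, function names and values are hypothetical.

```python
import json
import struct

# Schema agreed once between sender and receiver (hypothetical position report):
# little-endian uint32 MMSI, float32 speed over ground [kn], float32 course over ground [deg]
POSITION_REPORT = struct.Struct("<Iff")


def encode(mmsi: int, sog: float, cog: float) -> bytes:
    return POSITION_REPORT.pack(mmsi, sog, cog)  # values only, no field names


def decode(payload: bytes) -> tuple:
    return POSITION_REPORT.unpack(payload)


binary = encode(211234560, 12.5, 87.0)
as_json = json.dumps({"mmsi": 211234560, "speedOverGround": 12.5,
                      "courseOverGround": 87.0}).encode()
print(len(binary), len(as_json))
print(decode(binary))  # (211234560, 12.5, 87.0)
```

The binary payload is 12 bytes because field names and types live only in the shared schema; float32 also limits precision, which is acceptable here only because the example values are exactly representable.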

Data Apps to enhance data sovereignty: Furthermore, the sovereignty of Data Providers could be protected further by extending the Connectors with the principle of Data Apps, in which the data-driven services are executed directly in the Connector itself instead of outside it. This means that the requested data can only be used for the operation of the respective service: the Data Consumer can operate its data-driven service without insight into the data provided by the Data Provider and thus only has access to the higher-value information generated by its own data service, but not to the Data Provider's data itself. With Data Apps, the Data Provider's data can be more strongly protected if required, as the Data Provider can define and control exactly which Data Apps its data are actually used for.

It should be noted that the success of such a decentralized infrastructure depends largely on acceptance and active use within the maritime community. The more authorities and players in the maritime industry decide in favor of such an infrastructure, the greater the resulting benefits for all parties involved. Although the infrastructure can also be used in smaller data ecosystems, the resulting benefits increase with a growing number of users [47]. This network effect arises because actors will only use the data infrastructure in the long term if they can reliably find, access and obtain the relevant data. If the supply of available data is too low, usage will also decline over time. However, Data Providers will only offer their data via the infrastructure if sufficient Data Consumers are available. Because of this circular causality, it is all the more important that standardization bodies and authorities issue binding guidelines for standardized data exchange in the maritime domain. The efforts to date, such as IALA G1161 or the IMO’s e-navigation strategy, have already created a good foundation for the digitalization of the maritime domain. Now it is time to build on this foundation in order to establish sovereign and secure data exchange for the reliable use of maritime services.