1. Introduction

Embodied intelligence is reshaping the autonomous obstacle avoidance capabilities of underwater robots in complex marine environments, with accurate biological detection serving as a fundamental prerequisite. In deep-sea exploration, the ability of an underwater robot to merely “detect” obstacles without being able to “recognize” their categories imposes severe limitations on its autonomous navigation. For example, the failure to differentiate between a “movable fish” and a “stationary obstacle requiring evasion” can lead to non-specific avoidance strategies, resulting in unnecessary path deviations or increased risk of collision.

As the core of autonomous navigation systems, underwater object detection directly determines the reliability of obstacle avoidance decisions. In contrast to terrestrial applications, where state-of-the-art detectors such as YOLOv8 [1], DETR [2], and RT-DETR [3] have achieved performance approaching that of human perception, underwater vision is significantly compromised by multiple degradation factors: wavelength-dependent light attenuation introduces severe color distortion and loss of chromatic information; forward and backward scattering by suspended particles produces haze-like blurring and reduced contrast; and dynamic illumination and non-uniform lighting obscure object boundaries. Collectively, these factors reduce feature discriminability, disrupt spatial coherence, and substantially increase false detection rates, ultimately undermining the overall performance of underwater obstacle avoidance systems.

Early efforts to mitigate underwater optical degradation predominantly focused on image restoration and enhancement. Physics-based approaches, such as the Jaffe–McGlamery model [4], attempt to model the light transport process; however, their reliance on precise optical parameters, which are difficult to measure in practice, constrains their applicability. Deep learning-based methods (e.g., WaterNet [5], UWCNN [6]) trained on large-scale synthetic datasets have demonstrated improved color correction, but they often suffer from domain shift, resulting in poor generalization to real-world conditions. More recently, self-supervised and unsupervised techniques have gained attention. For instance, FUnIE-GAN [7] leverages generative adversarial training without paired data, while Water-Color Transfer [8] exploits color mapping strategies. Although these methods show promising enhancement capabilities, they are typically deployed as standalone preprocessing steps, disconnected from the downstream detection task. This separation prevents end-to-end optimization and, in some cases, introduces artifacts or excessive smoothing that negatively impact final detection accuracy.

To address issues of low contrast and noise, research has also emphasized the development of robust feature extractors. CNN-based models such as LMFEN [9], which employs hierarchical convolutions to retain fine-grained features, and UW-ResNet [10], which enhances blurred boundaries through modified residual structures, have achieved significant results. The integration of attention mechanisms has further improved feature discriminability: CBAM [11,12] and SE blocks [13] recalibrate features spatially and channel-wise; lightweight modules such as those used in DAMO-YOLO [14] enhance detection accuracy without compromising efficiency. Despite these advances, the suitability of Transformer-based architectures for underwater tasks remains contested. Although the Swin Transformer [15] demonstrates strong capabilities in modeling long-range dependencies, its substantial computational and memory costs limit its deployment on resource-constrained embedded platforms. Lightweight alternatives such as MobileViT [16] and EfficientFormer [17] offer efficiency advantages but often underperform in detecting small underwater targets.

The scale variability of marine organisms further complicates underwater object detection. Multi-scale architectures based on feature pyramids have therefore become a standard solution. The Feature Pyramid Network (FPN) [18] improves multi-scale performance by integrating high-level semantics with low-level details via a top-down pathway. Extensions such as PANet [19] incorporate a bottom-up pathway to strengthen cross-scale feature propagation, while BiFPN [20] adaptively adjusts scale contributions using weighted bidirectional fusion. Nevertheless, these general strategies encounter unique challenges underwater. Shallow features are often contaminated by scattering and suspended particles, resulting in high noise and blurred details. Directly fusing such degraded features with semantic-rich high-level representations introduces interference and degrades detection performance. Recent approaches, such as UW-FPN [21], selectively enhance features at different pyramid levels or denoise shallow maps prior to fusion. However, these solutions typically incur higher computational costs and demonstrate limited adaptability across diverse underwater environments.

To overcome these limitations, we propose the Underwater YOLO-based Biological detection method (UW-YOLO-Bio), a high-precision framework for underwater biological detection that significantly improves the autonomy of underwater robots. The framework aims to enhance both detection accuracy and robustness, thereby ensuring reliable input for subsequent obstacle avoidance decisions. Our method achieves notable improvements on the DUO, RUOD, and URPC datasets, increasing mAP50 by 2% and reducing model parameters by 8%.

Specifically, UW-YOLO-Bio introduces three innovations tailored to underwater detection challenges:

- (1) Global Context Perception Module (GCPM): Enhances global feature extraction, effectively mitigating underwater optical degradation and improving perception robustness.

- (2) Channel-Aggregation Efficient Downsampling Block (CAEDB): Preserves critical information during spatial reduction, thereby improving sensitivity to low-contrast underwater targets.

- (3) Regional Context Feature Pyramid Network (RCFPN): Optimizes multi-scale feature fusion through contextual awareness, substantially improving detection accuracy for small marine organisms.

Beyond performance gains, this work contributes a systematic framework for environment-aware detector design. By translating domain-specific knowledge, such as underwater optical physics and marine ecological characteristics, into architectural innovations, UW-YOLO-Bio provides a generalizable design paradigm. We anticipate that the principles underlying this framework can inspire the development of robust visual systems in other adverse environments, including foggy driving conditions, smoke-obscured disaster scenarios, and deep-space imaging. Furthermore, these principles, particularly robustness to noise and clutter and the capability for multi-scale target detection, demonstrate potential applicability beyond optical imagery. For instance, in the field of maritime surveillance, High-Frequency Surface Wave Radar (HFSWR) is pivotal for over-the-horizon target detection. Similar to underwater optical environments, HFSWR data, especially Range-Doppler (RD) maps, are contaminated by strong sea clutter and noise, making target detection challenging [22,23,24]. The GCPM's ability to model global context could help distinguish weak target signatures from clutter, while the RCFPN's multi-scale fusion might adapt to targets of varying velocities and sizes on the RD map. We believe an adapted version of our method could contribute to improved target detection and clutter suppression in HFSWR systems, and we regard this as a promising direction for future interdisciplinary research.

The remainder of this paper is organized as follows. Section 2 reviews related work on underwater object detection, multi-scale feature fusion, and downsampling methods. Section 3 details the proposed UW-YOLO-Bio framework, including GCPM, CAEDB, and RCFPN. Section 4 presents the experimental setup, datasets, ablation studies, comparative results, and an analysis of failure cases. Finally, Section 5 concludes the paper and discusses future work.

3. Methodology

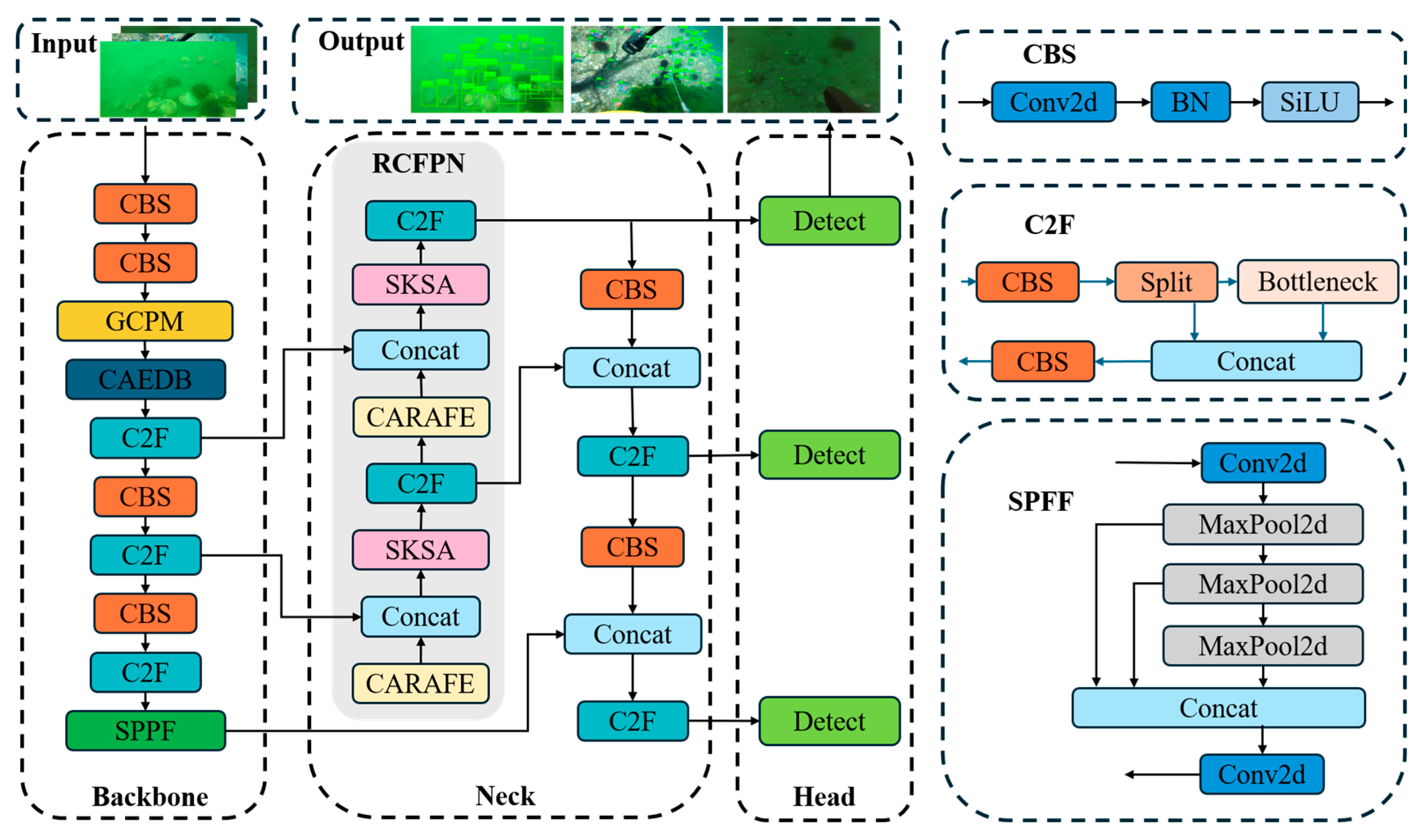

This section presents the architecture of the proposed Underwater YOLO-based Biological detection (UW-YOLO-Bio) framework, which is designed around three core principles: environmental adaptability, semantic awareness, and computational efficiency. YOLOv8 was selected as the baseline architecture for this study due to its proven stability, extensive documentation, and optimal balance between accuracy and speed for resource-constrained platforms at the time of our experimental design. Its modular structure also facilitated the seamless integration of our proposed modules. The overall architecture of the proposed UW-YOLO-Bio is depicted in Figure 1; the framework enhances the YOLOv8 backbone by integrating three novel modules:

- (1) GCPM: efficiently captures long-range dependencies without the quadratic computational cost of conventional self-attention, thereby providing robust resistance to underwater occlusion and noise;

- (2) CAEDB: utilizes depthwise separable convolutions and channel aggregation to achieve efficient feature reduction while retaining critical information from low-contrast targets;

- (3) RCFPN: refines multi-scale feature fusion by embedding regional contextual cues and ecological scale priors, leading to substantial improvements in detecting small marine organisms.

The GCPM, CAEDB, and RCFPN modules are not isolated components but form a synergistic pipeline addressing distinct bottlenecks in underwater detection. The GCPM acts as a feature enhancer within the backbone network. By capturing global contextual dependencies, it mitigates the macroscopic effects of occlusion and noise, thereby providing a cleaner and semantically richer feature foundation for subsequent modules. The CAEDB operates at critical downsampling stages. Its efficient channel aggregation mechanism ensures that the information crucial for low-contrast targets, which is enhanced by the GCPM, is preserved during spatial reduction, preventing the loss of fine details during transmission. Finally, the RCFPN receives and fuses these optimized multi-scale features from the preceding modules. Its regional context awareness and content-aware upsampling capability enable precise reconstruction of target details, particularly for small objects that have been effectively highlighted by the GCPM and CAEDB. In essence, the GCPM is responsible for macro-environment understanding and robustness, the CAEDB limits the loss of critical information during downsampling, and the RCFPN focuses on the accurate localization and recognition of multi-scale targets. The three modules work in concert to enhance the model's robustness in complex underwater environments.

As shown in Figure 1, the input image is first processed by initial convolutional layers. The GCPMs are strategically inserted into the backbone to capture long-range dependencies and mitigate occlusion and noise at different scales. The feature maps then pass through a series of CAEDB modules, which perform efficient spatial reduction while preserving critical information from low-contrast targets. The multi-scale features extracted by the backbone are then fed into the RCFPN, which optimizes feature fusion through its Separable Kernel Spatial Attention (SKSA) and Content-Aware ReAssembly of Features (CARAFE) components, enhancing the representation for objects of various sizes, particularly small marine organisms. Finally, the detection head (on the right of Figure 1) utilizes these refined, multi-scale features to predict bounding boxes and class probabilities. These components operate synergistically to address the unique challenges inherent in underwater biological detection.

3.1. Global Context 3D Perception Module

GCPM serves as a pivotal component of UW-YOLO-Bio, specifically engineered to capture long-range dependencies in underwater object detection. Unlike traditional approaches relying on computationally intensive self-attention mechanisms, GCPM adopts a lightweight yet powerful design integrating three core elements: a C2f module, a Global Context Block (GCBlock), and a channel-wise SimAM mechanism, as shown in Figure 2. This integration enables efficient global context modeling while maintaining minimal computational overhead.

The GCPM takes a feature map of size C × H × W as input and produces an output feature map of the identical dimension C × H × W. While the spatial and channel dimensions remain unchanged, the representational capacity of the output features is significantly enhanced through the integration of global context and channel-wise attention.

3.1.1. Global Context Block (GCBlock)

At the heart of GCPM lies the GCBlock, which replaces conventional non-local blocks with a lightweight convolution-based alternative. As depicted in Figure 2, GCBlock follows a streamlined three-stage process:

Global Feature Extraction: Global average pooling followed by a 1 × 1 convolution compresses the input feature map into a compact context vector.

Feature Transformation: A two-layer bottleneck structure (1 × 1 convolution → LayerNorm → ReLU → 1 × 1 convolution) converts this context vector into a channel-specific weight map.

Feature Aggregation: The refined global context is reintroduced into the original features through element-wise addition.

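Mathematically, following the global context formulation of Cao et al. [42], the GCBlock operation can be expressed as Equation (1):

$$z_i = x_i + W_{v2}\,\mathrm{ReLU}\!\left(\mathrm{LN}\!\left(W_{v1} \sum_{j=1}^{N_p} \alpha_j x_j\right)\right), \qquad \alpha_j = \frac{e^{W_k x_j}}{\sum_{m=1}^{N_p} e^{W_k x_m}} \tag{1}$$

where $x_i$ and $z_i$ are the input and output features at position $i$, $N_p = H \times W$ is the number of spatial positions, $\alpha_j$ is the weight for the global attention pool, and $\delta(\cdot) = W_{v2}\,\mathrm{ReLU}(\mathrm{LN}(W_{v1}(\cdot)))$ denotes the bottleneck transform.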
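The three stages above can be illustrated with a minimal sketch in plain Python (standard library only, so that it stays self-contained). It is not the authors' implementation: the function name `gc_block`, the weight layouts, and the toy values are assumptions made for illustration. The pooling step uses softmax attention weights; when the attention weights `w_k` are all zero, the weights become uniform and the step reduces to global average pooling.

```python
import math

def softmax(values):
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vector):
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def layer_norm(vector, eps=1e-5):
    mean = sum(vector) / len(vector)
    var = sum((v - mean) ** 2 for v in vector) / len(vector)
    return [(v - mean) / math.sqrt(var + eps) for v in vector]

def gc_block(x, w_k, w_v1, w_v2):
    """x: C channels, each a flat list of N = H*W values (hypothetical layout).
    w_k: C weights of the 1x1 attention convolution.
    w_v1: (C/r) x C and w_v2: C x (C/r) bottleneck weights."""
    num_channels, num_positions = len(x), len(x[0])
    # Stage 1: one pooling weight per position, shared by all channels.
    logits = [sum(w_k[c] * x[c][j] for c in range(num_channels))
              for j in range(num_positions)]
    alpha = softmax(logits)
    context = [sum(a * v for a, v in zip(alpha, x[c])) for c in range(num_channels)]
    # Stage 2: bottleneck transform (1x1 conv -> LayerNorm -> ReLU -> 1x1 conv).
    hidden = [max(0.0, h) for h in layer_norm(matvec(w_v1, context))]
    delta = matvec(w_v2, hidden)
    # Stage 3: broadcast the per-channel context back onto every position.
    out = [[v + delta[c] for v in x[c]] for c in range(num_channels)]
    return out, context

# Toy input: C = 4 channels, H*W = 4 positions.
x = [[1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 2.0, 2.0],
     [5.0, 5.0, 5.0, 5.0], [1.0, 0.0, 1.0, 0.0]]
w_v1 = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
w_v2 = [[0.5, 0.0], [0.0, 0.5], [0.1, 0.1], [0.0, 0.0]]
out, context = gc_block(x, [0.0, 0.0, 0.0, 0.0], w_v1, w_v2)
print(context)  # with zero attention weights this is the per-channel mean
```

Because the context is pooled once and then added to every position, the cost of the block grows with the number of positions rather than with the number of position pairs.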

The ability of GCBlock to capture long-range dependencies stems from its use of Global Average Pooling (GAP). The GAP operation aggregates information across the entire spatial dimension into a compact context vector. This operation effectively allows any single point in the feature map to establish a connection with all other points, regardless of their spatial distance. The subsequent bottleneck transformation (two 1 × 1 convolutions, $W_{v1}$ and $W_{v2}$) learns to weigh this global information and reintegrates it into the original features via an element-wise addition. This mechanism shares a similar spirit with the self-attention in Transformers, which models pairwise interactions. However, by performing spatial aggregation first, the GCBlock reduces the computational complexity from quadratic, $\mathcal{O}((HW)^2)$, typical of standard self-attention, to linear, $\mathcal{O}(HW)$, thereby achieving efficient long-range dependency modeling suitable for resource-constrained platforms.

This design is critically important for underwater applications: it substantially reduces computational complexity and adds only 0.2% more parameters, while preserving the module’s capacity to capture global contextual information. Unlike standard self-attention mechanisms, whose computational cost scales quadratically with feature map size, the proposed GCBlock exhibits linear complexity with respect to input dimensionality. This efficiency makes the module well-suited for deployment on computationally restricted underwater platforms.
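To make the quadratic-versus-linear contrast concrete, the following short calculation counts position-level interactions only. The feature map sizes (20 × 20, 40 × 40, 80 × 80) are an assumption: they correspond to strides 32, 16, and 8 on a 640 × 640 input. The counts ignore the channel dimension and are not meant to reproduce measured FLOPs.

```python
def attention_pairs(height, width):
    """Pairwise position interactions of standard self-attention: (H*W)^2."""
    n = height * width
    return n * n

def pooled_terms(height, width):
    """Terms aggregated by a single global pooling step: H*W."""
    return height * width

for side in (20, 40, 80):
    pairs = attention_pairs(side, side)
    terms = pooled_terms(side, side)
    print(f"{side}x{side}: attention={pairs}, pooling={terms}, ratio={pairs // terms}")
```

The ratio between the two counts equals the number of positions, so the gap widens as the feature map resolution increases.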

3.1.2. Channel-Wise SimAM

Complementing the GCBlock, the Channel-wise SimAM module introduces 3D perception capabilities without additional learnable parameters. This parameter-free mechanism adaptively assigns importance weights to individual channels according to their intrinsic feature characteristics, thereby enhancing discrimination between target objects and background noise in underwater imagery.

The workflow of the SimAM, as illustrated in Figure 2, can be conceptually divided into three distinct stages (Generation, Expansion, and Fusion), which collectively enable efficient global context modeling:

Generation: This initial stage generates a compact global context vector from the input feature map X using Global Average Pooling (GAP). The GAP operation aggregates spatial information from each channel into a single scalar value, producing a channel-wise descriptor vector of size $C \times 1 \times 1$. This vector serves as a foundational summary of the global contextual information present in the feature map.

Expansion: The generated context vector then undergoes transformation and expansion within a bottleneck structure comprising two convolutional layers separated by a LayerNorm and ReLU activation. This stage is designed to capture complex, non-linear interactions between channels. The first convolution potentially elevates the dimensionality to learn richer representations, while the second convolution projects the features back to the original channel dimension $C$. This process effectively recalibrates the channel-wise context, learning to emphasize informative features and suppress less useful ones.

Fusion: Finally, the transformed context vector is fused back into the original input features via a broadcasted element-wise addition. This integration mechanism allows the refined global context to modulate features across all spatial locations, enhancing the discriminative power of the network without altering the spatial dimensions of the feature map ($H \times W$). This residual-style fusion ensures stable training and seamless integration of global information.

The design of Channel-wise SimAM is inspired by the spatial suppression mechanism in mammalian visual cortices, where active neurons inhibit nearby neuronal activity. This biological phenomenon is mathematically formulated as an energy function that measures the linear separability between a neuron and its neighboring neurons within the same channel. The energy function is defined as Equation (2):

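$$e_t^{*} = \frac{4(\hat{\sigma}^{2} + \lambda)}{(t - \hat{\mu})^{2} + 2\hat{\sigma}^{2} + 2\lambda} \tag{2}$$

where $t$ represents the value of the target neuron, $\hat{\mu}$ and $\hat{\sigma}^{2}$ are the mean and variance of all neurons in the channel, and $\lambda$ is a regularization parameter.

The importance of each neuron is inversely proportional to its energy value $e_t^{*}$, with lower energy indicating higher importance. The channel-wise SimAM module then computes the importance map as Equation (3):

$$\frac{1}{e_t^{*}} = \frac{(t - \hat{\mu})^{2} + 2\hat{\sigma}^{2} + 2\lambda}{4(\hat{\sigma}^{2} + \lambda)} \tag{3}$$

where $\hat{\mu} = \frac{1}{M}\sum_{i=1}^{M} x_i$ and $\hat{\sigma}^{2} = \frac{1}{M}\sum_{i=1}^{M} (x_i - \hat{\mu})^{2}$ with $M = H \times W$ (spatial size of the feature map). Finally, the feature map is refined using Equation (4):

$$\tilde{X} = \mathrm{sigmoid}\!\left(\frac{1}{E}\right) \odot X \tag{4}$$

where $E$ groups the energy values $e_t^{*}$ over all positions and $\odot$ denotes element-wise multiplication. The implementation of Channel-wise SimAM is remarkably simple, which demonstrates its parameter-free nature and computational efficiency. This simplicity is a key advantage over other attention modules that require extensive parameter tuning.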
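The energy-based weighting can be sketched for a single channel as follows. This is a minimal illustration under stated assumptions, not the authors' code: it uses the closed-form minimal energy of the original SimAM formulation, the function name `simam_channel` is hypothetical, and the default `lam=1e-4` is an assumed value for the regularization parameter.

```python
import math

def simam_channel(channel, lam=1e-4):
    """channel: H x W nested list holding one channel of a feature map.
    Returns the refined channel and the per-neuron attention weights."""
    values = [v for row in channel for v in row]
    m = len(values)                               # M = H * W
    mu = sum(values) / m                          # channel mean
    var = sum((v - mu) ** 2 for v in values) / m  # channel variance
    refined, weights = [], []
    for row in channel:
        w_row = []
        for t in row:
            # Inverse energy: large when the neuron stands out from its channel.
            inv_energy = ((t - mu) ** 2 + 2 * var + 2 * lam) / (4 * (var + lam))
            w_row.append(1.0 / (1.0 + math.exp(-inv_energy)))  # sigmoid
        weights.append(w_row)
        refined.append([w * t for w, t in zip(w_row, row)])
    return refined, weights

# A single bright neuron on a flat background receives the largest weight.
channel = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
refined, weights = simam_channel(channel)
print(weights[1][1] > weights[0][0])
```

No learnable weights appear anywhere in the function, which is what makes the mechanism parameter-free: only the channel statistics and the fixed regularizer are used.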

The GCPM integrates GCBlock and Channel-wise SimAM into a single efficient framework, with the following computational flow Equation (5):

This integration strategy ensures that the global context information captured by GCBlock is further refined by Channel-wise SimAM, enhancing the model’s ability to perceive contextual relationships in underwater environments.

3.1.3. Discussion on GCPM

The novelty of the proposed GCPM lies not in the invention of its sub-components but in their novel integration and the specific problem they are designed to address. The GCBlock is adapted from the work of Cao et al. [42], which efficiently captures long-range dependencies. The Channel-wise SimAM mechanism is inspired by the parameter-free attention module proposed by Yang et al. [43], which leverages neuroscience-based energy functions. Our key innovation is the synergistic combination of these two distinct mechanisms into a single, cohesive module (GCPM) tailored for underwater visual degradation. Specifically, the GCBlock first models broad contextual relationships to clean the features, and its output is subsequently refined by the SimAM mechanism, which enhances discriminative local features by suppressing neuronal noise. This specific wiring and the focus on mitigating underwater optical artifacts (e.g., haze, low contrast) through 3D perception represent the novel contribution of the GCPM, offering a more effective and efficient alternative to standard self-attention for our target domain.

To quantitatively substantiate the efficiency claims of the proposed GCBlock and provide a clear comparison with existing attention mechanisms, we conducted a detailed computational complexity analysis. This analysis aims to validate the theoretical advantages of linear complexity over quadratic approaches, such as standard self-attention, and to demonstrate the practical benefits in terms of floating-point operations (FLOPs) and parameter counts. Specifically, we evaluated the following key modules under a representative feature map size (e.g., a common intermediate resolution in underwater detection tasks):

Standard Self-Attention: Representing the baseline with quadratic complexity, $\mathcal{O}((HW)^2)$, which is computationally expensive for high-resolution feature maps.

GCBlock (Ours): Our proposed module, designed with linear complexity through global average pooling and bottleneck transformations.

Channel-wise SimAM (Ours): The parameter-free component integrated into GCPM, also exhibiting linear complexity.

The results, summarized in Table 1, highlight the dramatic reduction in computational overhead achieved by our modules. For instance, the GCBlock reduces FLOPs by over 25× compared to self-attention, while maintaining competitive performance. This analysis not only corroborates the theoretical foundations outlined in Section 3.1 but also underscores the practicality of our approach for resource-constrained deployments. By presenting these metrics, we provide empirical evidence that the GCBlock and SimAM collectively offer an optimal balance between efficiency and effectiveness, aligning with the goals of real-time underwater biological detection.

3.2. Channel Aggregation Efficient Downsampling Block

Downsampling serves as a pivotal operation in object detection networks, as it reduces spatial dimensions to alleviate computational burden while enlarging receptive fields. However, in underwater imaging scenarios characterized by low contrast, feature fragmentation, and high noise sensitivity, conventional downsampling techniques, such as max pooling and strided convolution, often lead to considerable loss of discriminative information.

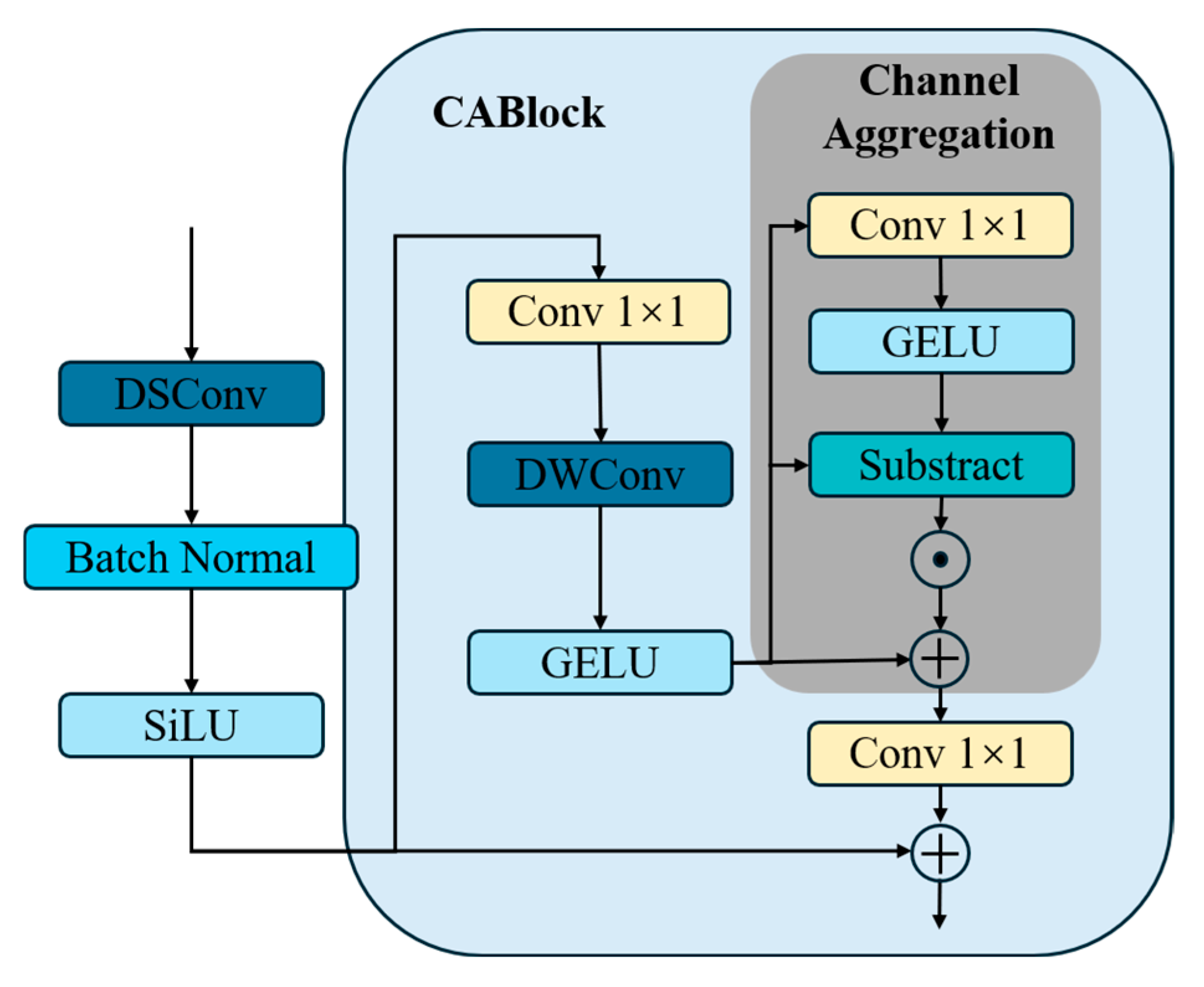

To overcome these limitations, we propose the CAEDB, designed to preserve crucial features while maintaining computational efficiency. As illustrated in Figure 3, the CAEDB consists of two principal components: Depthwise Separable Convolution (DWConv) and the Channel Aggregation Block (CABlock). This dual-structure design enhances representational capacity and stabilizes feature extraction in degraded underwater conditions through a complementary two-stage process. The DWConv first performs efficient spatial filtering, reducing noise and extracting preliminary features while expanding the receptive field. The subsequent CABlock then operates on these refined features, adaptively recalibrating channel-wise responses based on global context. This sequential processing allows the module to first mitigate spatial degradation (e.g., blurring, noise) and then address feature-level imbalances (e.g., low contrast between targets and background), leading to more robust and stable feature representations compared to using either component alone.

In contemporary architectures, channel mixing, which is denoted as CMixer(·), is commonly implemented via two linear projections. Typical realizations include a two-layer channel-wise multilayer perceptron (MLP) interleaved with a 3 × 3 DWConv. The kernel size of 3 × 3 is chosen for the DWConv as it represents an optimal trade-off between receptive field size and computational efficiency. Smaller kernels (e.g., 1 × 1) lack sufficient spatial context for effective feature extraction in noisy underwater environments, while larger kernels (e.g., 5 × 5 or 7 × 7) significantly increase computational complexity and parameters without providing proportional performance gains for this task [39,40]. The depthwise separable design factorizes a standard convolution into a depthwise convolution (applying a single filter per input channel) followed by a pointwise convolution (1 × 1 convolution), which drastically reduces computational cost and model parameters while maintaining representative capacity, making it ideal for real-time applications on resource-constrained platforms. However, due to significant information redundancy among channels, standard MLP-based mixers often require a substantial expansion in the number of parameters (e.g., by a factor of 4 to 8 in the hidden layer) to achieve satisfactory performance. This parameter inflation directly leads to poor computational efficiency, characterized by increased FLOPs, larger memory footprint, and slower inference speeds, which are critical drawbacks for deployment on embedded systems.
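The parameter savings of the depthwise separable factorization, and the cost of hidden-layer expansion in an MLP mixer, can be checked with a short calculation. The channel counts below (64 input, 128 output) are illustrative assumptions and bias terms are ignored.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights of a standard k x k convolution."""
    return k * k * c_in * c_out

def depthwise_separable_params(c_in, c_out, k):
    """One k x k filter per input channel, then a 1 x 1 pointwise convolution."""
    return k * k * c_in + c_in * c_out

def mlp_mixer_params(channels, expansion):
    """Two linear projections: C -> expansion*C -> C."""
    return 2 * channels * channels * expansion

standard = standard_conv_params(64, 128, 3)
separable = depthwise_separable_params(64, 128, 3)
print(standard, separable, round(standard / separable, 2))
print(mlp_mixer_params(64, 4), mlp_mixer_params(64, 8))
```

For these sizes the separable form needs 8,768 weights instead of 73,728, while doubling the MLP expansion factor from 4 to 8 doubles the mixer's weights from 32,768 to 65,536.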

To alleviate this inefficiency, several recent studies have incorporated channel enhancement modules, such as the Squeeze-and-Excitation (SE) block, into the MLP pathway to recalibrate channel responses. Inspired by frequency-domain insights, recent research has introduced a lightweight Channel Aggregation (CA) module [40], which adaptively redistributes channel-wise feature representations within high-dimensional latent spaces, without introducing additional projection layers. Following [40], the specific implementation process of this mechanism is depicted as Equations (6) and (7):

$$Y = \mathrm{GELU}\big(\mathrm{DW}_{3\times3}(\mathrm{Conv}_{1\times1}(\mathrm{Norm}(X)))\big) \tag{6}$$

$$Z = \mathrm{Conv}_{1\times1}(\mathrm{CA}(Y)) + X \tag{7}$$

Concretely, DW represents depthwise convolution, CA(·) is implemented by a channel-reducing projection $W_r: \mathbb{R}^{C \times HW} \rightarrow \mathbb{R}^{1 \times HW}$ and GELU to gather and reallocate channel-wise information, as shown in Equation (8):

$$\mathrm{CA}(X) = X + \gamma_c \odot \big(X - \mathrm{GELU}(X W_r)\big) \tag{8}$$

where $\gamma_c$ is the channel-wise scaling factor initialized as zeros. It reallocates the channel-wise feature with the complementary interactions $(X - \mathrm{GELU}(X W_r))$.
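The gather-and-reallocate step of the channel aggregation can be sketched in plain Python. This is an illustrative reading of the description above rather than the authors' code: the function name `channel_aggregation`, the flat channel layout, and the toy weights are assumptions.

```python
import math

def gelu(v):
    """Exact GELU using the Gaussian error function."""
    return 0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0)))

def channel_aggregation(x, w_r, gamma):
    """x: C channels, each a flat list of N = H*W values (hypothetical layout).
    w_r: C weights of the channel-reducing projection (C -> 1).
    gamma: C channel-wise scaling factors."""
    num_channels, num_positions = len(x), len(x[0])
    # Gather: collapse all channels into one value per position, then apply GELU.
    gathered = [gelu(sum(w_r[c] * x[c][j] for c in range(num_channels)))
                for j in range(num_positions)]
    # Reallocate: add the scaled complementary term (x - gathered) to each channel.
    return [[x[c][j] + gamma[c] * (x[c][j] - gathered[j])
             for j in range(num_positions)]
            for c in range(num_channels)]

x = [[1.0, 2.0], [3.0, 4.0]]
w_r = [0.5, 0.5]
identity = channel_aggregation(x, w_r, [0.0, 0.0])  # zero-initialized scaling
adjusted = channel_aggregation(x, w_r, [1.0, 0.0])  # only channel 0 is reallocated
print(identity == x, adjusted[0])
```

With the scaling factor initialized to zeros, the block starts as an identity mapping, so it can be added to a network without disturbing the features at the beginning of training.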

The CAEDB module is designed for 2× spatial reduction. It accepts an input feature map of size $C_{in} \times H \times W$ and produces an output of size $C_{out} \times \frac{H}{2} \times \frac{W}{2}$, effectively downsampling the spatial resolution while expanding the channel count from $C_{in}$ to $C_{out}$ and preserving critical information.

3.3. Regional Context Feature Pyramid Network

To address the challenges of inefficient multi-scale feature fusion, complex background interference, and small-object degradation commonly encountered in underwater imagery, we propose RCFPN. This framework enhances the baseline Feature Pyramid Network (FPN) by incorporating two novel components: a SKSA mechanism and a CARAFE module, as illustrated in Figure 4.

The standard FPN aggregates multi-level features primarily through direct concatenation, which often fails to emphasize salient information while introducing redundant background noise. This limitation undermines the discriminative power of subsequent decoding stages. To overcome this, the SKSA module is introduced. In contrast to conventional channel-only attention mechanisms, SKSA employs a separable kernel structure that efficiently models spatial dependencies. By adaptively emphasizing informative regions and suppressing irrelevant background responses, SKSA substantially enhances the network’s capacity to capture fine-grained details in cluttered underwater environments, leading to notable improvements in detection accuracy, particularly for small and partially occluded objects.

SKSA achieves significant computational efficiency by decomposing two-dimensional convolutional kernels into two cascaded one-dimensional separable kernels. This decomposition strategy effectively reduces computational complexity while preserving the benefits of a large receptive field. Specifically, SKSA replaces standard 2D convolution with a separable structure that processes horizontal and vertical spatial dimensions independently through sequential 1D convolutions.
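The decomposition idea can be verified on a toy example: applying a 1 × k kernel followed by a k × 1 kernel gives the same result as applying the k × k kernel formed by their outer product, while storing 2k weights instead of k². The sketch below uses plain Python and hypothetical kernel values; it illustrates the general separable-kernel principle and does not reproduce the SKSA module itself.

```python
def conv2d_valid(image, kernel):
    """Single-channel 2D cross-correlation without padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[a][b] * image[i + a][j + b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def weights_per_channel(k, separable):
    """Weights per channel: 2k for the cascaded 1D pair, k*k for the full kernel."""
    return 2 * k if separable else k * k

horizontal = [[1.0, 2.0, 1.0]]        # 1 x 3 kernel
vertical = [[1.0], [0.0], [-1.0]]     # 3 x 1 kernel
# Equivalent full 3 x 3 kernel: outer product of the two 1D kernels.
full = [[v[0] * h for h in horizontal[0]] for v in vertical]

image = [[float((3 * i + j * j) % 7) for j in range(5)] for i in range(5)]
cascaded = conv2d_valid(conv2d_valid(image, horizontal), vertical)
direct = conv2d_valid(image, full)
print(cascaded == direct)
print(weights_per_channel(7, True), weights_per_channel(7, False))
```

The saving grows with kernel size: for k = 7 the cascaded pair stores 14 weights per channel against 49 for the full kernel, which is why the decomposition is attractive for large receptive fields.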

The input feature map F undergoes a sequential transformation through Equations (9)–(12), which collectively implement the SKSA mechanism. Specifically, Equation (9) performs depthwise separable convolution to capture spatial information from different directions, Equation (10) further expands the convolution based on fused feature Z to extract finer features and expand the receptive field, Equation (11) adjusts the number of channels to map the features into spatial attention maps, and Equation (12) achieves feature enhancement to highlight important features. This cascaded processing culminates in the output of the SKSA module, denoted as:

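$$\bar{Z}^{C} = \sum_{H,W} W^{C}_{(2d-1)\times 1} * \Big(\sum_{H,W} W^{C}_{1\times(2d-1)} * F^{C}\Big) \tag{9}$$

$$Z^{C} = \sum_{H,W} W^{C}_{\lfloor k/d \rfloor \times 1} * \Big(\sum_{H,W} W^{C}_{1\times\lfloor k/d \rfloor} * \bar{Z}^{C}\Big) \tag{10}$$

$$A^{C} = W_{1\times 1} * Z^{C} \tag{11}$$

$$\bar{F}^{C} = A^{C} \otimes F^{C} \tag{12}$$

where $*$ denotes convolution, $k$ is the kernel size, and $d$ represents the dilation factor. Finally, the output of SKSA, $\bar{F}^{C}$, is obtained by performing a Schur product between the input feature $F^{C}$ and the attention feature map $A^{C}$, enabling dynamic enhancement of the target region and effective suppression of irrelevant regions.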

Conventional upsampling approaches, such as bilinear or nearest-neighbor interpolation, utilize fixed, content-agnostic kernels that, while computationally efficient, lack adaptability to local content variations. Consequently, these methods struggle to reconstruct subtle details such as edges, textures, and small-object features that are essential for precise detection in underwater conditions. To address this issue, RCFPN integrates the CARAFE module. The core innovation of CARAFE lies in its content-adaptive upsampling strategy, which dynamically generates reassembly kernels conditioned on the local context of input features. This enables accurate reconstruction of blurred object boundaries and enhances detail preservation, crucial for robust detection in visually degraded underwater scenes.

CARAFE consists of two principal stages: predictive kernel generation and content-aware reassembly. In the first stage, it predicts an upsampling kernel tailored to each local region based on the input feature content. In the second stage, the predicted kernel is used to weight and recombine local feature patches, producing upsampled, higher-resolution outputs (i.e., feature maps with increased spatial dimensions, typically by an upsampling factor $\sigma$) that retain fine structural details. The kernel prediction process is mathematically expressed in Equations (13) and (14).

$$W_{l'} = \psi\big(N(X_l, k_{encoder})\big) \tag{13}$$

$$X'_{l'} = \phi\big(N(X_l, k_{up}), W_{l'}\big) \tag{14}$$

The kernel prediction process begins by compressing the channel depth of the input features from $C$ to $C_m$ via a 1 × 1 convolution. Subsequently, a convolutional layer with a kernel size of $k_{encoder} \times k_{encoder}$ traverses the compressed feature map, producing a feature vector of length $\sigma^2 k_{up}^2$ for every spatial location. Finally, this vector is reshaped into a $k_{up} \times k_{up}$ matrix and normalized using the Softmax function, yielding the content-aware reassembly kernel $W_{l'}$ specific to the target location $l'$.

Given a feature map $X$ of size $C \times H \times W$ and an upsampling factor $\sigma$, CARAFE generates a new feature map $X'$ with a size of $C \times \sigma H \times \sigma W$. For each target region $l' = (i', j')$ in the output $X'$, there is a corresponding region $l = (i, j)$ in the original feature map. Here, $i = \lfloor i'/\sigma \rfloor$ and $j = \lfloor j'/\sigma \rfloor$. In $X$, the $k \times k$ neighborhood centered at $l$ is denoted by $N(X_l, k)$. Since each source location in the input feature map $X$ corresponds to $\sigma^2$ target positions in $X'$, each target position requires a reconstruction kernel of size $k_{up} \times k_{up}$ to perform the upsampling operation. This kernel helps to assemble the feature map by taking into account local contextual information around each source location.

Upon generating the reassembly kernel $W_{l'}$, CARAFE identifies a local region $N(X_l, k_{up})$ of size $k_{up} \times k_{up}$ centered at the source location $l = (i, j)$ in the input feature map $X$. The final value at the upsampled output location is then computed as the weighted sum of all feature values within this local neighborhood, expressed as Equation (15):

$$X'_{l'} = \sum_{n=-r}^{r} \sum_{m=-r}^{r} W_{l'}(n, m) \cdot X(i + n, j + m), \qquad r = \lfloor k_{up}/2 \rfloor \tag{15}$$

Unlike conventional interpolation-based approaches relying on fixed kernels, CARAFE dynamically generates content-aware kernels ($W_{l'}$) for feature reconstruction. This adaptive process not only increases the spatial resolution of feature maps but also reinforces semantic consistency, suppresses background noise, and recovers intricate details more effectively, demonstrating clear advantages in the restoration of degraded underwater imagery.
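The reassembly stage can be sketched for a single channel as follows. This is a simplified illustration, not the authors' implementation: the kernel prediction layers are omitted and the raw kernel scores are passed in directly, the function name `carafe_reassemble` and the argument layout are hypothetical, and out-of-range neighbors are treated as zeros (an assumed padding choice).

```python
import math

def carafe_reassemble(x, kernel_logits, sigma, k_up):
    """x: H x W single-channel feature map.
    kernel_logits[i_t][j_t]: k_up*k_up raw scores for target location (i_t, j_t).
    Returns a (sigma*H) x (sigma*W) map."""
    h, w = len(x), len(x[0])
    r = k_up // 2
    out = []
    for i_t in range(sigma * h):
        row = []
        for j_t in range(sigma * w):
            i, j = i_t // sigma, j_t // sigma      # source location of this target
            logits = kernel_logits[i_t][j_t]
            peak = max(logits)
            exps = [math.exp(v - peak) for v in logits]
            total = sum(exps)                      # softmax normalization
            value = 0.0
            for n in range(-r, r + 1):
                for m in range(-r, r + 1):
                    weight = exps[(n + r) * k_up + (m + r)] / total
                    ii, jj = i + n, j + m
                    if 0 <= ii < h and 0 <= jj < w:  # zero padding outside the map
                        value += weight * x[ii][jj]
            row.append(value)
        out.append(row)
    return out

x = [[1.0, 2.0], [3.0, 4.0]]
# Scores that strongly favor the kernel center behave like nearest-neighbor upsampling.
peaked = [[[50.0 if idx == 4 else 0.0 for idx in range(9)] for _ in range(4)]
          for _ in range(4)]
up = carafe_reassemble(x, peaked, sigma=2, k_up=3)
print(len(up), len(up[0]), round(up[3][3], 6))
```

Fixed interpolation corresponds to using the same scores at every location; the content-aware variant differs only in that the scores are predicted from the features, so each output position can weight its neighborhood differently.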

The RCFPN takes multiple feature maps from different levels of the backbone (e.g., with varying spatial sizes) as inputs. Through its unique fusion and upsampling mechanisms, it outputs a set of multi-scale feature maps imbued with strong semantics and rich spatial details, which are then fed into the detection head for prediction.

4. Experiment and Results

4.1. Experimental Setup

In deep learning, variations in hardware and software configurations can significantly impact experimental outcomes, leading to inconsistencies in model performance. To ensure reproducibility and clarity, we provide detailed hardware and software specifications in Table 2.

The training procedure utilized the following parameters: a learning rate of 0.01, a batch size of 16, and input image dimensions of 640 × 640 pixels after resizing. The model was trained for 200 epochs, using the “auto” optimizer, a random seed of 0, and a close_mosaic parameter set to 10.
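For reference, the stated hyperparameters can be gathered into a single configuration. The sketch below assumes the Ultralytics training interface, which the "auto" optimizer and `close_mosaic` settings suggest but which the text does not name. The argument names (`lr0`, `batch`, `imgsz`, and so on) follow that library, while `build_train_kwargs`, `mosaic_epochs`, and the dataset file `duo.yaml` are hypothetical.

```python
# Hyperparameters stated in the text, keyed by Ultralytics-style argument
# names (the choice of library is an assumption of this sketch).
TRAIN_CONFIG = {
    "lr0": 0.01,         # initial learning rate
    "batch": 16,         # batch size
    "imgsz": 640,        # images resized to 640 x 640
    "epochs": 200,
    "optimizer": "auto",
    "seed": 0,
    "close_mosaic": 10,  # mosaic augmentation disabled for the final 10 epochs
}


def build_train_kwargs(data_yaml):
    """Combine a dataset description file with the shared training recipe."""
    return {"data": data_yaml, **TRAIN_CONFIG}


def mosaic_epochs(config):
    """Number of epochs that still use mosaic augmentation."""
    return max(config["epochs"] - config["close_mosaic"], 0)


kwargs = build_train_kwargs("duo.yaml")  # hypothetical dataset file
print(kwargs)
print("epochs with mosaic:", mosaic_epochs(TRAIN_CONFIG))

# With Ultralytics installed, training would then look roughly like:
#   from ultralytics import YOLO
#   YOLO("yolov8n.yaml").train(**kwargs)
```

Keeping the recipe in one dictionary makes it straightforward to reuse the same settings for every dataset and every compared model, which is the point of a unified protocol.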

All models in this study, including every baseline and the proposed UW-YOLO-Bio, were trained with a unified recipe to guarantee a fair and consistent comparison. The protocol follows common practice in the object detection literature while remaining well suited to underwater datasets. It covers all critical hyperparameters: the number of training epochs, input image dimensions, data augmentation strategies, optimizer and learning rate scheduler settings, and post-processing parameters such as the Non-Maximum Suppression (NMS) IoU threshold. Standardizing these conditions removes potential confounding factors and reinforces the validity of our comparative results. The full list of parameters is summarized in Table 3, which serves as a reference for researchers seeking to replicate our experiments or apply similar settings to related tasks.

4.2. Comprehensive Evaluation Framework

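To rigorously evaluate the performance of our underwater object detection model, we employ a comprehensive set of metrics that assess both detection accuracy and computational efficiency. Precision, Recall, the F1-score, AP, and mAP over N classes are defined in Equations (16)–(20), respectively. These metrics are widely used in computer vision research and provide a well-rounded view of model performance, which is especially critical for assessing robustness in challenging underwater environments.

$$\text{Precision} = \frac{TP}{TP + FP} \tag{16}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{17}$$

$$F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{18}$$

$$AP = \int_0^1 P(R)\, dR \tag{19}$$

$$mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i \tag{20}$$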

Here, TP (True Positives) refers to correctly detected instances, FP (False Positives) to negative instances misclassified as positive, and FN (False Negatives) to positive instances incorrectly identified as negative. Precision measures the model's ability to avoid false positives, and Recall measures its ability to detect all relevant objects. The F1-score combines precision and recall into a single metric, offering a balanced measure of classification performance. Each class has its own precision and recall values, and plotting them on a precision-recall (P-R) curve helps visualize the trade-off between the two. The area under the P-R curve is termed AP (Average Precision), which summarizes the detection accuracy for one class across confidence thresholds. Finally, we compute mAP (mean Average Precision) by averaging the AP scores across all classes.
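The metric definitions above translate directly into code. The following sketch is illustrative only: the function names are hypothetical, the counts and P-R points are made-up toy values rather than results from this study, and the area under the P-R curve is computed with all-point interpolation, which is one common convention and may differ from the evaluation toolkit actually used.

```python
def precision(tp, fp):
    """Fraction of predicted positives that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Fraction of ground-truth positives that are detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


def average_precision(recalls, precisions):
    """Area under the P-R curve; points must be sorted by increasing recall."""
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall,
    # which makes the curve monotonically non-increasing.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1]
               for i in range(len(mrec) - 1))


def mean_average_precision(ap_per_class):
    """Mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


# Toy example: 8 true positives, 2 false positives, 2 false negatives.
p = precision(tp=8, fp=2)
r = recall(tp=8, fn=2)
f1 = f1_score(p, r)

# Toy P-R curve with two operating points for one class.
ap = average_precision(recalls=[0.5, 1.0], precisions=[1.0, 0.5])
m_ap = mean_average_precision([ap, 0.25])
print(p, r, f1, ap, m_ap)
```

mAP50 corresponds to running this procedure with detections matched at an IoU threshold of 0.5, while mAP50-95 averages the result over IoU thresholds from 0.5 to 0.95.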

4.3. Dataset Introduction

We evaluated our method on three challenging underwater datasets: DUO [44], RUOD [45], and URPC [46]. These datasets encompass diverse underwater scenarios, including haze effects, color distortion, light interference, and complex background conditions, providing a comprehensive test platform for our method.

DUO (Detecting Underwater Objects): This publicly available dataset is specifically designed for underwater object detection tasks. It contains 7782 images with 74,515 annotated instances across four marine species. The dataset is divided into a training set (5447 images), a validation set (778 images), and a test set (1557 images). DUO offers multi-resolution images (ranging from 3840 × 2160 to 586 × 480 pixels) to accommodate varying computational resource constraints. It is particularly valuable for testing challenges such as low contrast, small object scales, and background clutter in underwater environments. DUO is widely used for real-time visual systems in autonomous underwater vehicles (AUVs) and serves as a critical benchmark for evaluating model generalization and multi-scale feature learning capabilities. The DUO dataset is available at https://github.com/chongweiliu/DUO (accessed on 1 October 2025).

RUOD (Robust Underwater Object Detection Dataset): RUOD is a large-scale dataset that includes 14,000 high-resolution images with 74,903 annotated instances across 10 categories. The dataset is divided into a training set (9800 images), a validation set (2100 images), and a test set (2100 images). It simulates real-world conditions such as fog effects, color shifts, and illumination variations, thus testing model robustness under challenging noise, blur, and color distortion. RUOD is a prominent benchmark in the field, emphasizing detection performance across multi-scale variations, occlusions, and complex backgrounds, and is widely used to evaluate advanced deep learning models for applications in resource exploration and environmental monitoring. The RUOD dataset is available at https://github.com/dlut-dimt/RUOD (accessed on 1 October 2025).

URPC (Underwater Robot Perception Challenge): This dataset is derived from real-world scenarios at Zhangzidao Marine Ranch in Dalian, China, and includes 5543 images annotated with four economically significant marine species. The dataset is divided into a training set (3880 images), a validation set (554 images), and a test set (1109 images). URPC focuses on applications in intelligent fishery and automated harvesting tasks. The dataset is notable for its practical applicability, as the images were captured in real operational environments, which include challenges such as particulate interference and object overlap. It provides valuable resources for training and testing the visual systems of underwater robots, contributing to improved efficiency in fishery resource management and automation. The URPC dataset is available at https://github.com/mousecpn/DG-YOLO (accessed on 1 October 2025).
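The split sizes quoted for the three datasets can be checked with a few lines of arithmetic. The sketch below uses only the image counts stated above; the names `SPLITS` and `split_ratios` are hypothetical. It confirms that each split sums to the stated total and shows that DUO and URPC follow roughly a 70/10/20 partition, while RUOD follows 70/15/15.

```python
# Image counts as stated in the dataset descriptions.
SPLITS = {
    "DUO": {"train": 5447, "val": 778, "test": 1557, "total": 7782},
    "RUOD": {"train": 9800, "val": 2100, "test": 2100, "total": 14000},
    "URPC": {"train": 3880, "val": 554, "test": 1109, "total": 5543},
}


def split_ratios(counts):
    """Return the train/val/test fractions for one dataset."""
    total = counts["train"] + counts["val"] + counts["test"]
    return {name: counts[name] / total for name in ("train", "val", "test")}


for name, counts in SPLITS.items():
    subtotal = counts["train"] + counts["val"] + counts["test"]
    ratios = {k: round(v, 2) for k, v in split_ratios(counts).items()}
    print(name, "sum matches total:", subtotal == counts["total"], ratios)
```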

4.4. Ablation Study

A comprehensive set of ablation experiments was conducted on the DUO, RUOD, and URPC datasets to systematically evaluate the contributions of the three key modules: the GCPM, the CAEDB, and the RCFPN. The experiments aimed to quantify the impact of each module on critical performance metrics, including Precision, Recall, F1-Score, mAP50, and mAP50-95. For consistency, all models were trained under identical conditions, adhering to the hyperparameters and hardware specifications outlined in Section 4.1. The evaluation protocol started with a baseline model using YOLOv8n, followed by incremental additions of the proposed modules. The results, summarized in Table 4, show the average performance over multiple independent runs to ensure statistical reliability.

Impact of GCPM: The GCPM consistently improved Precision and mAP50 across all datasets, with the most notable increase observed on RUOD (a 1.2% improvement in mAP50). By integrating global context modeling and 3D perceptual enhancement, this module effectively mitigated environmental degradations such as haze and light interference. However, it occasionally led to slight reductions in Recall (e.g., a 0.1% decrease on DUO), as it tends to prioritize semantic features over finer, localized details.

Impact of CAEDB: The CAEDB module notably enhanced Recall and F1-score, especially on RUOD (a 2.0% improvement in Recall). Through efficient channel aggregation during downsampling, it preserved critical features and improved information flow. The module excelled under noisy and occluded conditions, as observed in the challenging environmental subsets of RUOD.

Impact of RCFPN: The RCFPN module delivered the most significant improvements in both mAP50 and mAP50-95, with the largest gain on RUOD (a 3.3% increase in mAP50). By integrating SKSA and CARAFE, the RCFPN strengthened multi-scale feature fusion and upsampling, proving particularly effective for detecting small and blurred targets.

It is noteworthy that on the DUO dataset, the full model's mAP50 (88.0%) is marginally lower than that of the model with only the RCFPN (88.1%). This slight discrepancy can be attributed to the inherent trade-offs between different module functionalities and to statistical variance across runs. While the RCFPN alone excels in multi-scale fusion on DUO, the full model's integrated approach provides more consistent and robust performance gains across all three underwater datasets (DUO, RUOD, URPC), as reflected in the average improvement.

Synergistic Effect of the Full Model: The complete model, integrating all three modules, achieved the best overall performance. The GCPM and CAEDB modules provided enhanced feature representations for the RCFPN, enabling more effective feature fusion and outperforming the baseline across all metrics. For example, on URPC, mAP50 improved by 3.0%, demonstrating the complementary nature of the modules: GCPM contributes global context, CAEDB optimizes feature extraction, and RCFPN refines feature fusion. This synergy collectively addresses key challenges in underwater object detection. Additionally, the full model reduced the parameter count by 8.3% compared to the baseline, confirming enhanced efficiency.

4.5. Comparative Experiments

In this section, we present a comprehensive comparative evaluation of UW-YOLO-Bio against several state-of-the-art object detectors across the DUO, RUOD, and URPC datasets. The evaluated competitors include prominent detectors from the YOLO family (e.g., YOLOv8n [1], YOLOv9s [47]), as well as RT-DETR-L [3], SSD [48], and EfficientDet [20]. All models were trained and evaluated under identical conditions to ensure a fair comparison. Performance was assessed using key metrics (Precision, Recall, mAP50, and mAP50-95), complemented by visual analyses to provide a comprehensive view of each model's capabilities.

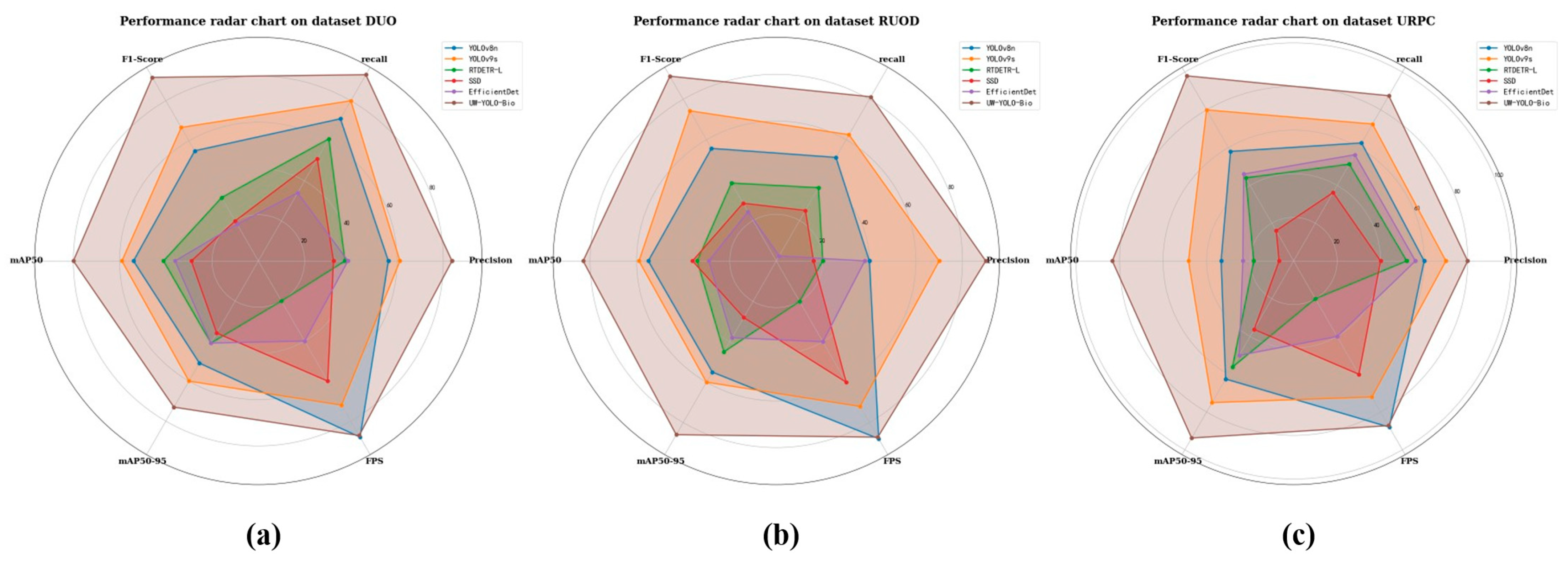

As summarized quantitatively in Table 5 and visually in the radar chart in Figure 5, the proposed method outperforms the majority of baseline models across all benchmarks, particularly excelling in mAP50 and F1-Score, where it surpasses the best competitor by 2.0%.

These results confirm the model's robust ability to address key underwater challenges, including low contrast, noise, and significant scale variations. In terms of efficiency, the proposed model contains only 2.76 M parameters, an 8.3% reduction compared to the YOLOv8n baseline (3.01 M). Critically, as quantified in Table 5, the proposed model achieves an inference speed of 61.8 FPS while maintaining the highest accuracy. This speed significantly surpasses comparative models such as RT-DETR-L (45.0 FPS) and EfficientDet (50.0 FPS), and comfortably meets the standard criterion for real-time application (typically considered as >30 FPS). The end-to-end latency of UW-YOLO-Bio breaks down as follows: Backbone (12.3 ms), Neck with RCFPN (2.1 ms), and Head (1.8 ms), for a total latency of 16.2 ms. The balance between accuracy and efficiency is driven by the lightweight design of the GCPM and CAEDB modules, underscoring the model's suitability for deployment on resource-constrained underwater platforms.
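The efficiency figures above are related by simple arithmetic, which the following sketch makes explicit. It uses only the numbers quoted in the text; the variable names are hypothetical. The frame rate implied by the summed stage latencies comes out at about 61.7 FPS, close to the reported 61.8 FPS, and the small gap is consistent with the stage latencies being rounded to one decimal place.

```python
# Per-stage latency in milliseconds, as quoted in the text.
LATENCY_MS = {"backbone": 12.3, "neck_rcfpn": 2.1, "head": 1.8}

total_ms = sum(LATENCY_MS.values())
implied_fps = 1000.0 / total_ms  # frames per second implied by the latency

# Parameter counts in millions, as quoted in the text.
baseline_params_m = 3.01  # YOLOv8n
proposed_params_m = 2.76  # UW-YOLO-Bio
reduction_pct = 100.0 * (baseline_params_m - proposed_params_m) / baseline_params_m

print(f"total latency: {total_ms:.1f} ms")
print(f"implied throughput: {implied_fps:.1f} FPS")
print(f"parameter reduction: {reduction_pct:.1f}%")
print("real-time (>30 FPS):", implied_fps > 30)
```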

4.6. Confusion Matrix

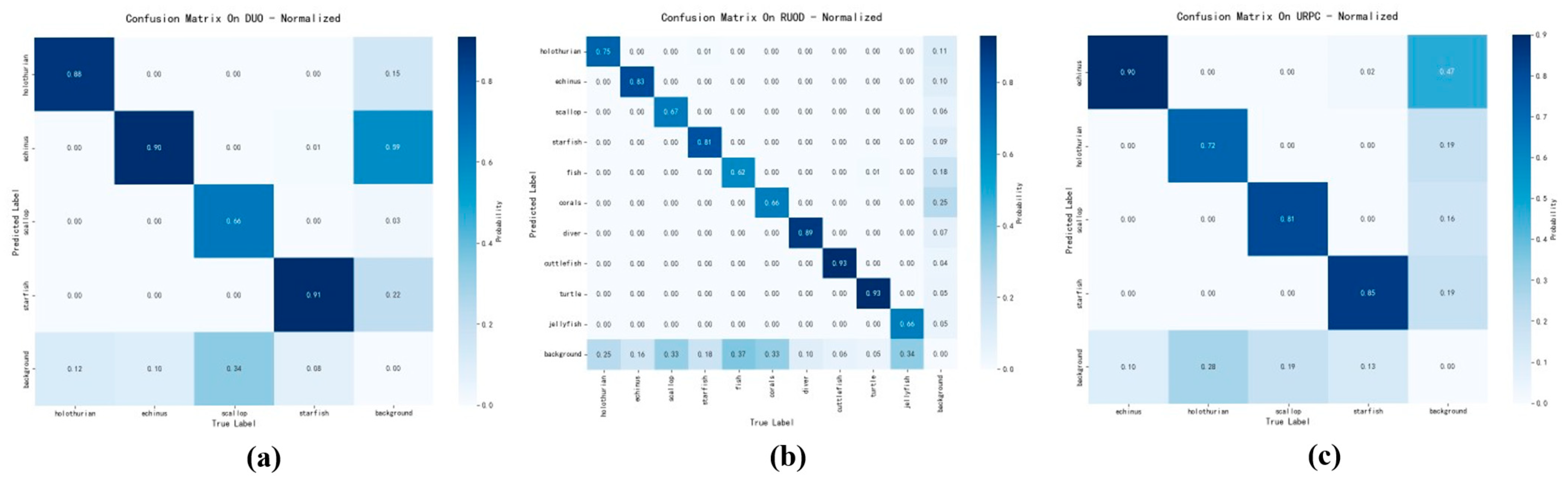

The confusion matrix offers a detailed visualization of the model's classification performance, highlighting both correct predictions and misclassifications across categories. Normalized confusion matrices were generated for each dataset to evaluate the model's behavior under each dataset's conditions (see Figure 6).

On the DUO dataset, the proposed model demonstrated high accuracy across all four categories (echinus, holothurian, starfish, scallop). The confusion matrix revealed a low false detection rate, indicating the model's effectiveness in distinguishing visually similar entities, such as holothurians and background regions.

For the more challenging RUOD dataset, which includes ten categories, the model exhibited robust performance. It notably reduced confusion among difficult-to-detect classes such as jellyfish and corals. While fish and divers were recognized with high precision, jellyfish, due to their morphological variability and scarcity, were occasionally misclassified as background.

In the URPC dataset, derived from a marine ranch scenario, the model demonstrated balanced and reliable performance. Both precision and recall exceeded 80% for each category, reflecting its strong generalization capability, particularly in practical applications.
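To make the link between a confusion matrix and the per-class precision and recall values explicit, the sketch below normalizes a small matrix and derives both quantities. It is illustrative only: the counts are made-up toy values rather than results from this study, the function names are hypothetical, and the convention that rows hold the true class and columns the predicted class is an assumption (some toolkits use the transpose).

```python
def normalize_rows(matrix):
    """Divide each row by its sum so that every true class sums to 1."""
    normalized = []
    for row in matrix:
        total = sum(row)
        normalized.append([v / total if total else 0.0 for v in row])
    return normalized


def per_class_precision_recall(matrix):
    """Return a (precision, recall) pair for every class.

    Assumes matrix[true][predicted] holds raw counts.
    """
    n = len(matrix)
    stats = []
    for c in range(n):
        tp = matrix[c][c]
        predicted_as_c = sum(matrix[row][c] for row in range(n))  # column sum
        actually_c = sum(matrix[c])                               # row sum
        prec = tp / predicted_as_c if predicted_as_c else 0.0
        rec = tp / actually_c if actually_c else 0.0
        stats.append((prec, rec))
    return stats


# Toy counts for two object classes plus background (not from the paper).
labels = ["echinus", "starfish", "background"]
counts = [
    [80, 10, 10],
    [5, 90, 5],
    [10, 0, 90],
]

normalized = normalize_rows(counts)
stats = per_class_precision_recall(counts)
for label, (prec, rec) in zip(labels, stats):
    print(f"{label}: precision={prec:.3f}, recall={rec:.3f}")
```

With row normalization, the diagonal of the normalized matrix equals the per-class recall, which is why off-diagonal entries in the background column are read as missed detections.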

4.7. Results Presentation

To demonstrate the model's performance in real underwater scenarios, we provide visual examples of both successful detections and challenging cases, including low contrast, occlusion, and noise.

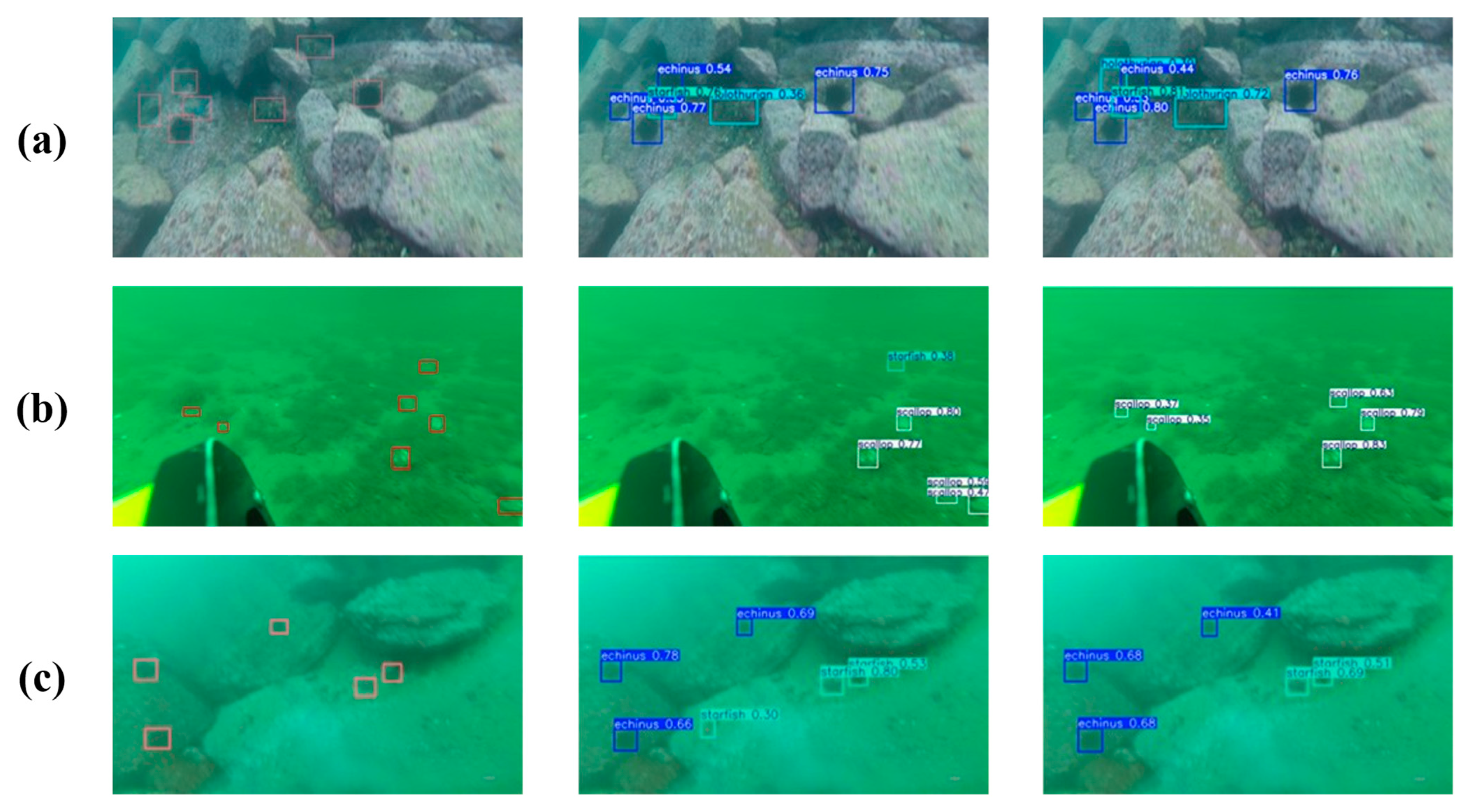

As shown in Figure 7, the proposed model reliably detects targets of varying scales, even when they are mixed with sediment. On the DUO dataset, the model accurately detects small targets and overlapping objects, such as sea urchins and sea cucumbers, in low-contrast images. In contrast, baseline models like YOLOv8n may miss these detections. On the RUOD dataset, the model effectively handles challenging conditions like fog effects and light interference, while reducing false positives. For example, in fog-affected images, the model successfully detects scallops, which other models may incorrectly identify as background. On the URPC dataset, the model performs consistently well in marine ranch scenes, where it detects multi-scale and partially occluded targets.

To provide a more intuitive and direct comparison of the detection performance, Figure 8 presents a comparative analysis of the baseline YOLOv8n model and our proposed UW-YOLO-Bio framework on identical challenging images selected from the test sets.

Figure 8 is organized into three columns: the first column displays the ground truth annotations, the second column shows the detection results obtained by the original YOLOv8n model, and the third column presents the results from our enhanced UW-YOLO-Bio model. Each row corresponds to a specific failure mode: (a) low confidence detections, (b) missed detections, and (c) false detections, which are common challenges in underwater object detection.

As illustrated in Figure 8a, which focuses on low confidence scenarios, our model significantly increases the confidence levels for various target detections, demonstrating enhanced reliability. The low confidence observed in the original YOLOv8n results can be attributed to the targets blending with the background, particularly due to shadows cast by underwater rocks that closely match the colors of both the rocks and the targets, making identification challenging. This improvement underscores the effectiveness of our global context modeling in mitigating environmental ambiguities.

In Figure 8b, which addresses missed detection cases, our model detects a greater number of correct targets, reducing omission errors. This improvement is notable given that the targets are often obscured by underwater sediment and aquatic vegetation, which poses a significant challenge for efficiently extracting key features for identification. Through a series of optimization techniques integrated into our framework, including the RCFPN, we have enhanced the model's capability to handle such occlusions and complex backgrounds, thereby improving recall.

Figure 8c highlights instances where our model reduces false detection rates, particularly false positives. These false detections in the baseline model occur due to the bluish-green tint of the water, which causes objects like starfish to closely resemble the background. Our model exhibits improvements in mitigating this issue by better distinguishing between foreground objects and the ambient environment through the CAEDB and attention mechanisms, thereby reducing misclassifications and enhancing overall detection accuracy.

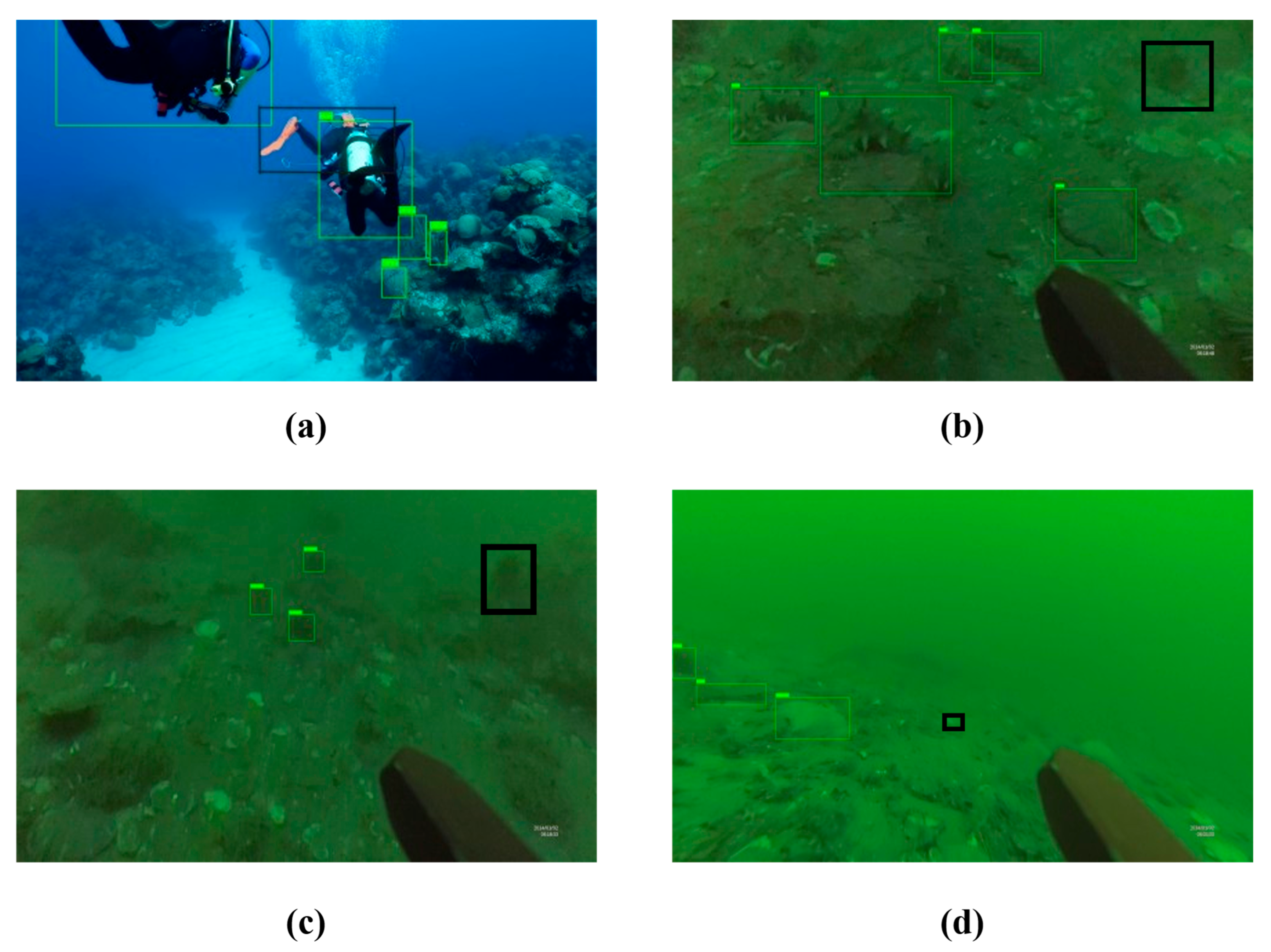

Despite the overall excellent performance, we also analyzed the failure cases to identify areas for further improvement. As illustrated in Figure 9, these failures primarily occurred in scenarios involving extreme occlusion, targets truncated at image boundaries, or problematic class distributions.

Firstly, as illustrated in Figure 9a, the model struggles to delineate individual contours when multiple targets are densely clustered or partially obscured by sediments, often resulting in missed detections or the fusion of multiple instances into a single bounding box. This limitation stems from the model's reliance on local appearance features, which are significantly diminished under extreme occlusion, making it difficult to obtain sufficient information for effective instance separation and recognition.

Furthermore, another recurrent issue is observed at the periphery of images. Figure 9b,c demonstrate that for objects truncated by the image frame, the model's detection confidence plummets, frequently leading to complete misses. The root cause of this boundary effect is a training-inference discrepancy: the proportion of complete targets in the training data is much higher than that of truncated targets, which weakens the model's ability to generalize to incomplete targets.

Lastly, despite the designed enhancements for low-contrast scenarios, the model remains vulnerable to highly camouflaged targets. As shown in Figure 9d, when the color and texture of a target are nearly indistinguishable from the surrounding environment, the model frequently misclassifies it as background. This represents an extreme manifestation of the low-contrast challenge, where the discriminative power of low-level features is insufficient, and the model lacks the necessary high-level semantic or contextual cues to resolve the ambiguity based on a single static frame. Collectively, these cases provide crucial insights for directing future research toward enhancing contextual reasoning and robustness in complex underwater environments.

Although the primary evaluation was conducted on datasets encompassing common underwater degradations (e.g., haze, blur, color cast), the model's design inherently addresses challenges analogous to adverse weather conditions. The GCPM's global context modeling enhances robustness to haze-like effects caused by high concentrations of suspended particles, similar to fog. Concurrently, the focus of the CAEDB and RCFPN on preserving details and suppressing noise helps maintain performance under varying illumination conditions, such as those caused by sunlight penetration at different depths or by different water turbidity levels. We therefore expect the model to demonstrate consistent performance across the range of water clarity and ambient light conditions typical of different underwater 'weather'. Validation on datasets explicitly tagged with meteorological or water quality metadata remains a valuable avenue for future research.

A limitation of this work is that it does not evaluate against newer detector versions like YOLOv10. Architectural differences across generations could influence performance, and exploring the integration of our proposed modules with such advanced backbones is a valuable direction for future work.

Future Work: Based on the analysis of failure cases, several promising directions for improvement have been identified:

Sensitivity to Edge and Occluded Targets: Enhancing the model's sensitivity to edge and occluded targets could be achieved by incorporating edge-aware sampling strategies or more sophisticated data augmentation techniques tailored for such scenarios.

Class Imbalance: Addressing class imbalance through techniques like resampling or focal loss adjustments could lead to improved performance, particularly for underrepresented classes.

Robustness to Occlusions: Improving robustness against occlusions could involve integrating context-aware feature reasoning or employing multi-frame analysis to infer obscured parts of objects.

Validation Under Diverse Environmental Conditions: Validation on datasets explicitly tagged with meteorological or water quality metadata remains a valuable avenue for future research. This would systematically assess model robustness across varying weather conditions, water clarity, and illumination scenarios, enhancing generalizability for real-world deployments.

Integration with Advanced Detector Architectures: Architectural differences across generations could significantly influence performance. Therefore, a valuable future direction is to explore the integration of the proposed modules with state-of-the-art backbones such as YOLOv10. This would leverage advancements in efficiency and accuracy, potentially leading to further improvements in real-time underwater detection while maintaining the lightweight design principles of our framework.
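As a concrete illustration of the focal loss mentioned under Class Imbalance above, the sketch below implements the standard binary form, which scales the cross-entropy term by (1 - p_t)^γ so that well-classified examples contribute less. It is not part of the proposed framework: the function name `binary_focal_loss` is hypothetical, and the default values `alpha=0.25` and `gamma=2.0` are commonly used settings from the focal loss literature, assumed here rather than taken from this study.

```python
import math


def binary_focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-12):
    """Focal loss for one prediction.

    p: predicted probability of the positive class, in [0, 1].
    y: ground-truth label, 1 (positive) or 0 (negative).
    alpha, gamma: weighting and focusing parameters (assumed defaults).
    """
    p_t = p if y == 1 else 1.0 - p              # probability of the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-dependent weight
    p_t = min(max(p_t, eps), 1.0)               # avoid log(0)
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


easy = binary_focal_loss(0.9, 1)  # confident, correct prediction
hard = binary_focal_loss(0.1, 1)  # confident, wrong prediction
print(f"easy example loss: {easy:.6f}")
print(f"hard example loss: {hard:.6f}")
print(f"hard / easy ratio: {hard / easy:.1f}")
```

Setting `gamma=0` recovers an alpha-weighted cross-entropy, so the focusing parameter can be tuned from no re-weighting toward a stronger emphasis on hard, typically underrepresented, examples.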