Improving ICP-Based Scanning Sonar Image Matching Performance Through Height Estimation of Feature Point Using Shaded Area

Abstract

1. Introduction

1. Estimation of shaded area length: A technique is introduced to estimate the length of the shaded areas by transforming image data from the Cartesian coordinate system into a cylindrical coordinate system, which accounts for the inherent characteristics of sonar.

2. Integration of matching algorithms: We propose a novel approach that integrates the existing ICP-based image matching algorithm with an additional matching algorithm that uses height estimates of feature points derived from the shaded areas.
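The first contribution can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes a top-down, ground-range intensity image stored as a list of rows, a known sonar position in pixels, a known sonar altitude above a flat seabed, and hand-picked thresholds `echo_thr` and `shadow_thr`. All function names and the similar-triangles height model H = h_s·L/(R+L) are illustrative assumptions. The image is resampled onto a (bearing, range) grid, taken here as the polar part of the cylindrical representation, so that each shadow becomes a run of dark samples along a single ray.

```python
import math

def to_polar(image, origin, n_bearings, max_range_px):
    """Resample a Cartesian image (list of rows) onto a (bearing, range) grid.

    Nearest-neighbour sampling; samples outside the image are set to 0.
    origin = (row, col) of the sonar head in pixels (assumed known).
    Bearing is measured from the +col axis toward the +row axis.
    """
    rows, cols = len(image), len(image[0])
    r0, c0 = origin
    polar = []
    for k in range(n_bearings):
        theta = 2.0 * math.pi * k / n_bearings
        ray = []
        for r in range(max_range_px):  # bin r lies r pixels from the sonar
            row = int(round(r0 + r * math.sin(theta)))
            col = int(round(c0 + r * math.cos(theta)))
            inside = 0 <= row < rows and 0 <= col < cols
            ray.append(image[row][col] if inside else 0)
        polar.append(ray)
    return polar

def shadow_along_ray(ray, echo_thr, shadow_thr):
    """Return (shadow_start_bin, shadow_length_bins) or None.

    The object is the first run of samples >= echo_thr; the shadow is the
    run of samples <= shadow_thr that immediately follows it.
    """
    n, i = len(ray), 0
    while i < n and ray[i] < echo_thr:    # skip water column before the echo
        i += 1
    if i == n:
        return None
    while i < n and ray[i] >= echo_thr:   # walk across the strong return
        i += 1
    start = i
    while i < n and ray[i] <= shadow_thr:  # count the dark run behind it
        i += 1
    length = i - start
    return (start, length) if length > 0 else None

def height_from_shadow(range_to_shadow_start, shadow_length, sonar_altitude):
    """Assumed flat-seabed model: H = h_s * L / (R + L), R measured horizontally."""
    return sonar_altitude * shadow_length / (range_to_shadow_start + shadow_length)

def heights_per_bearing(polar, res_m, altitude_m, echo_thr, shadow_thr):
    """Height estimate (m) for every bearing whose ray shows an echo plus shadow."""
    out = {}
    for k, ray in enumerate(polar):
        hit = shadow_along_ray(ray, echo_thr, shadow_thr)
        if hit is not None:
            start, length = hit
            out[k] = height_from_shadow(start * res_m, length * res_m, altitude_m)
    return out
```

In this model, a shadow of length L that begins at horizontal range R from a sonar at altitude h_s implies an object of height h_s·L/(R+L). Heights recovered this way could then act as an additional attribute of each feature point for the matching step described in the second contribution.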

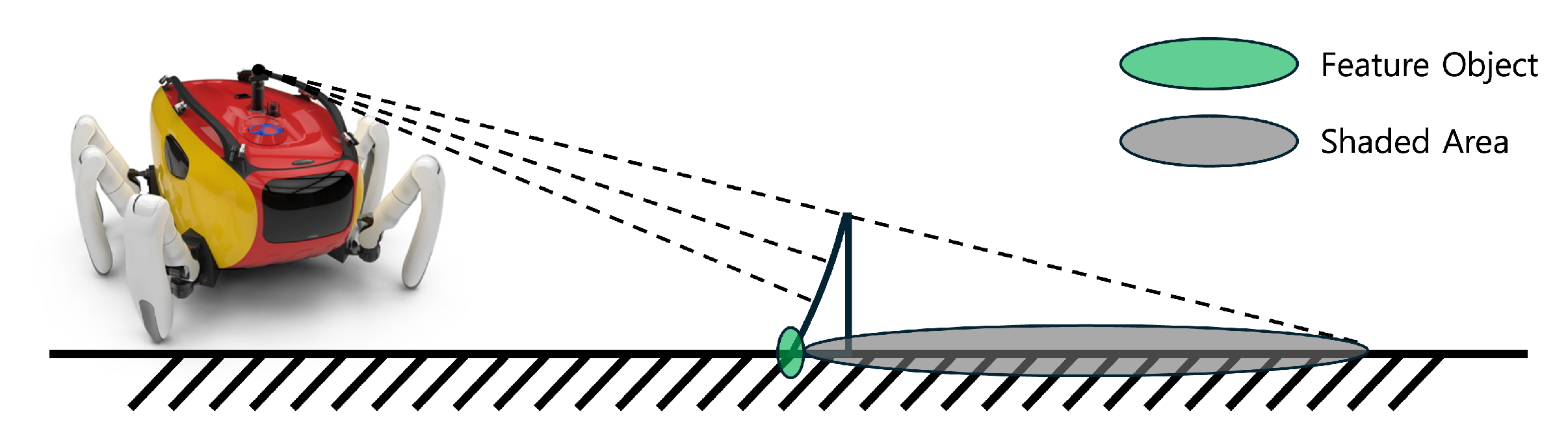

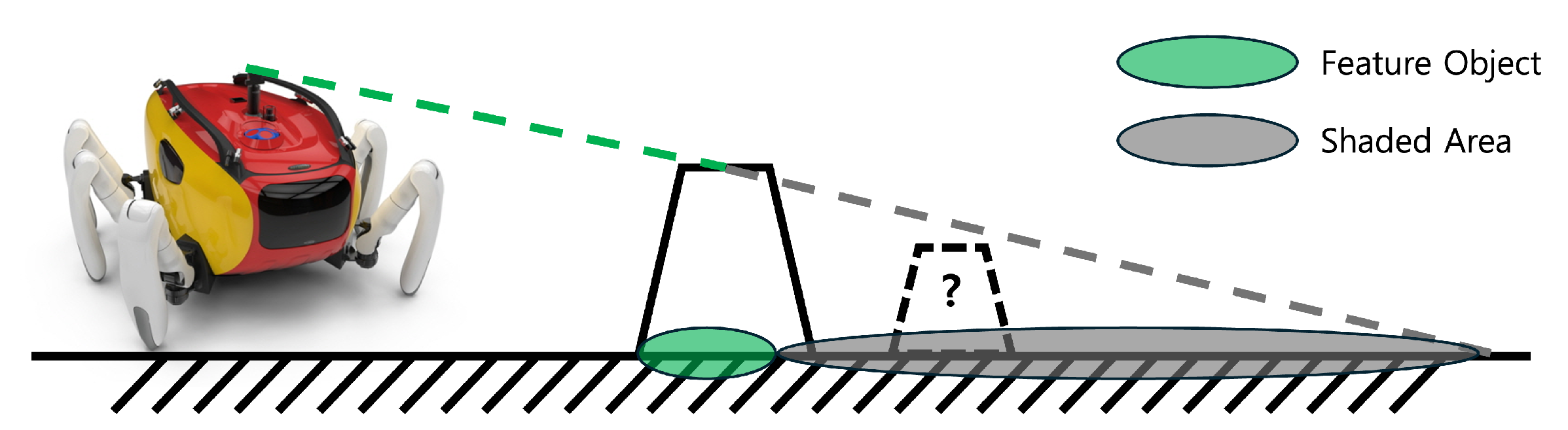

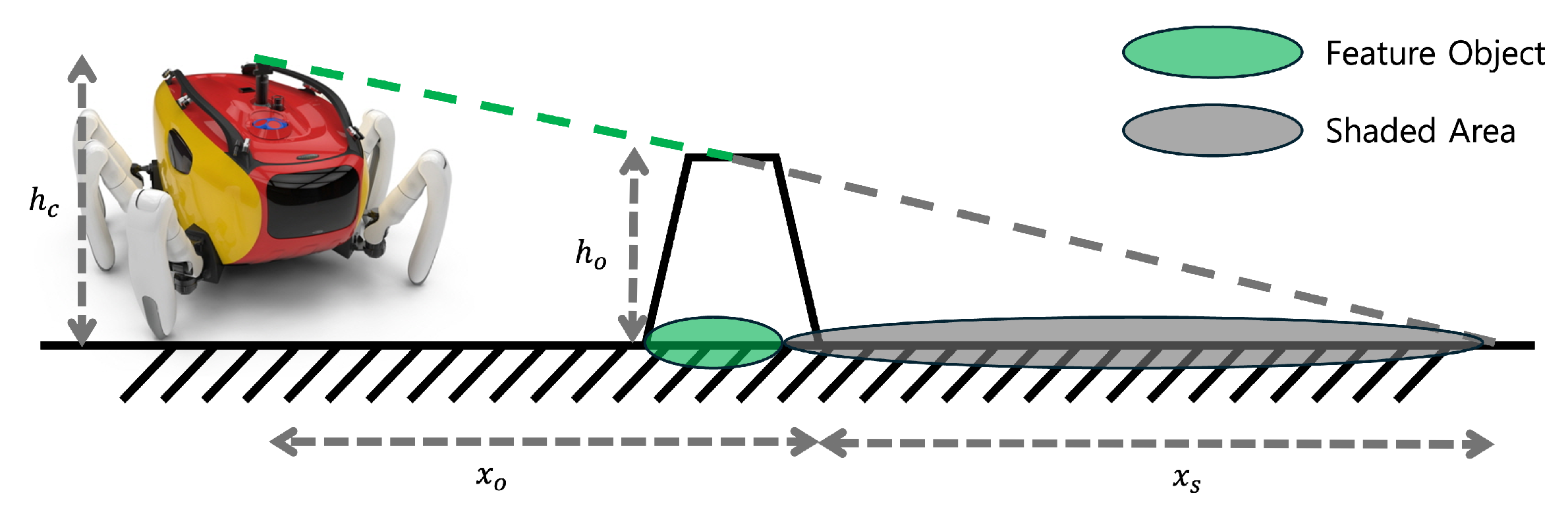

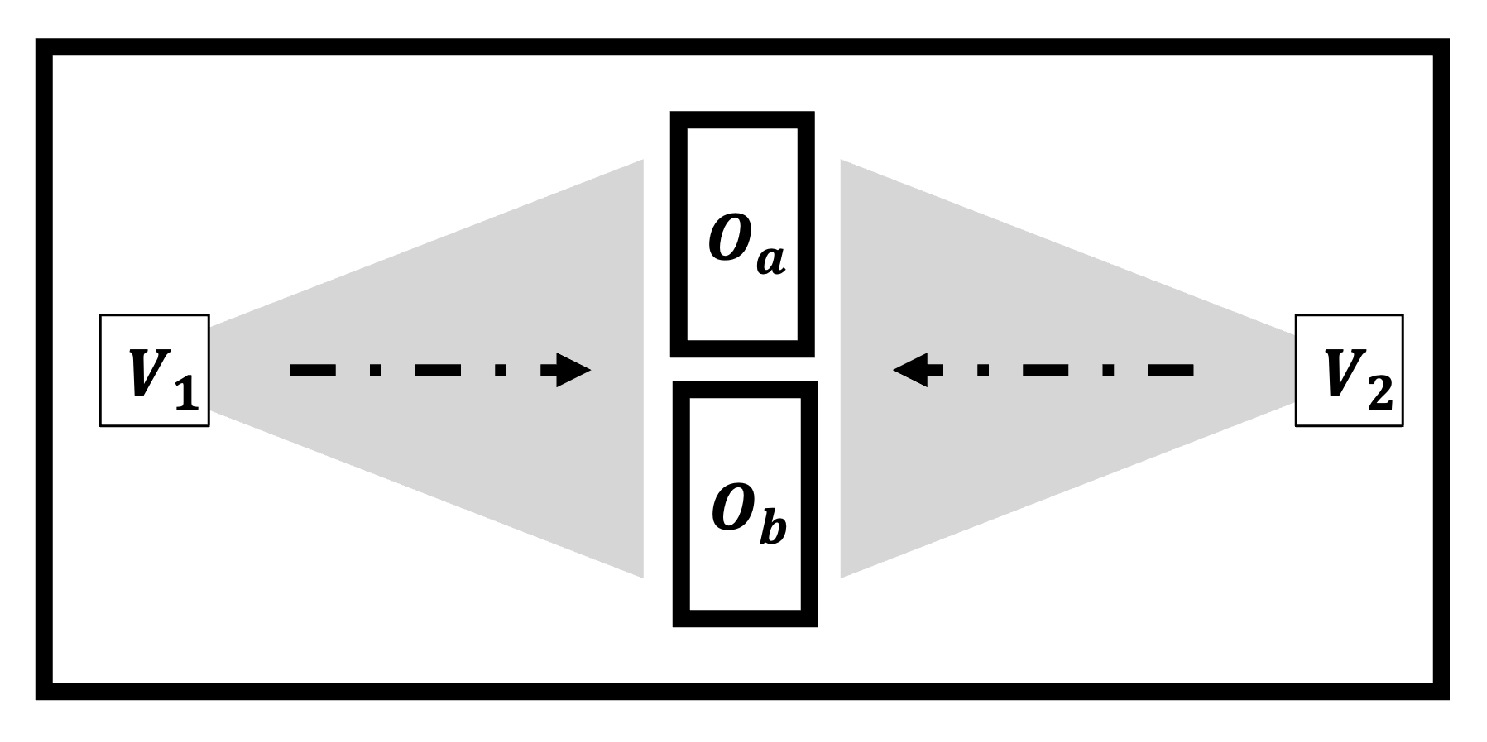

2. Characteristics of Sonar and Shaded Area

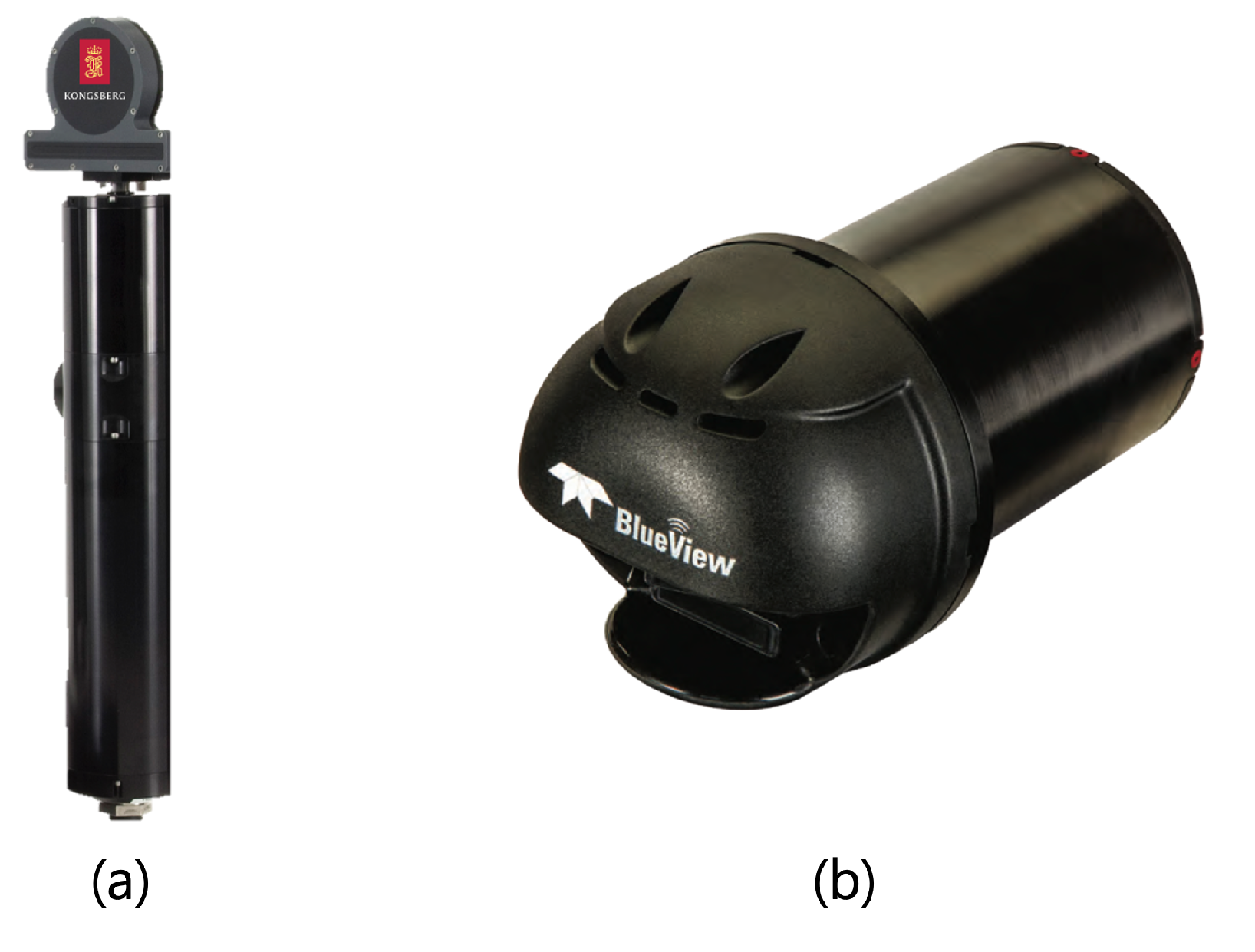

2.1. Characteristics of Sonar

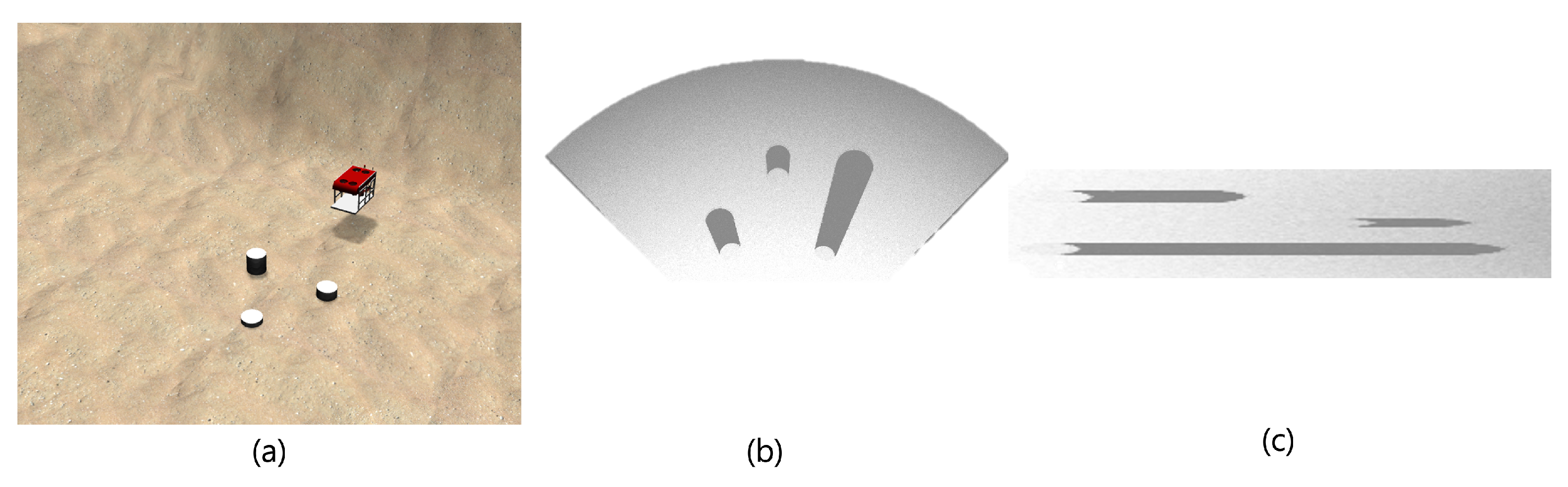

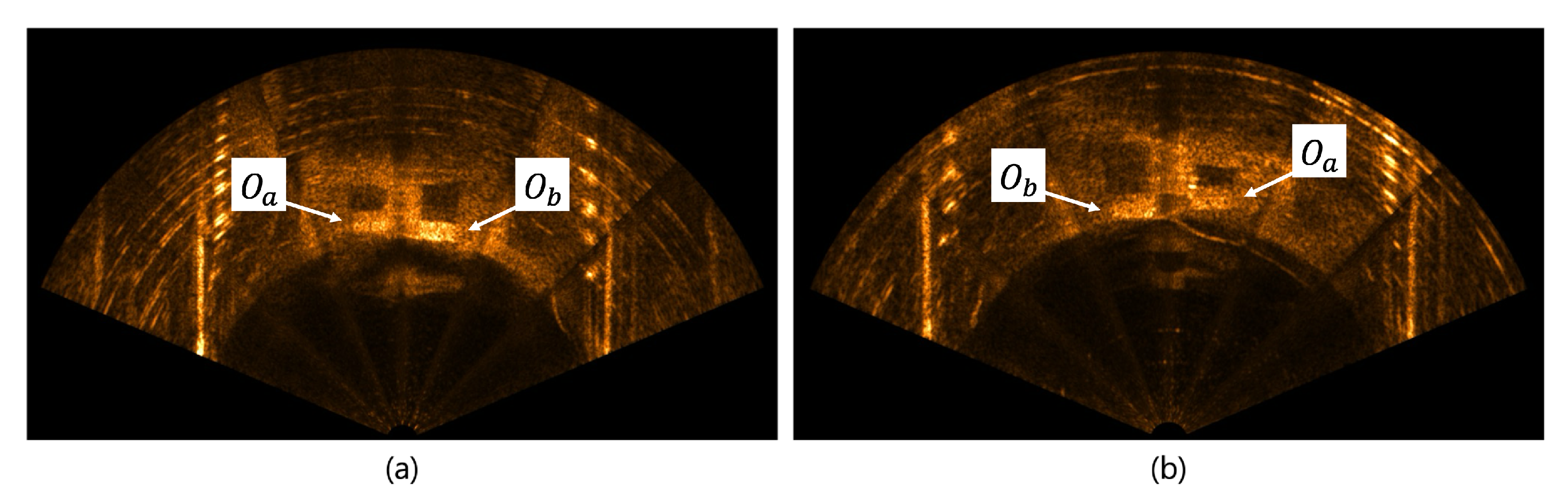

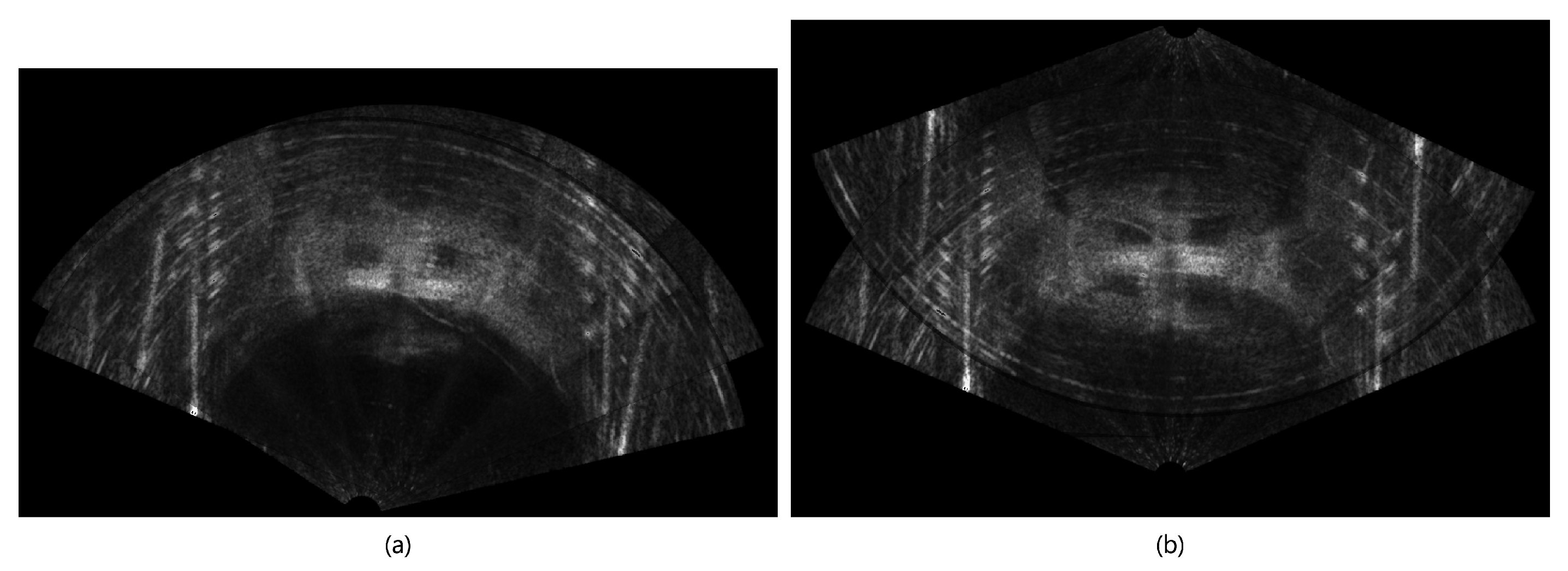

2.2. Observing the Shaded Area Through Simulation

3. Proposed Matching Algorithm

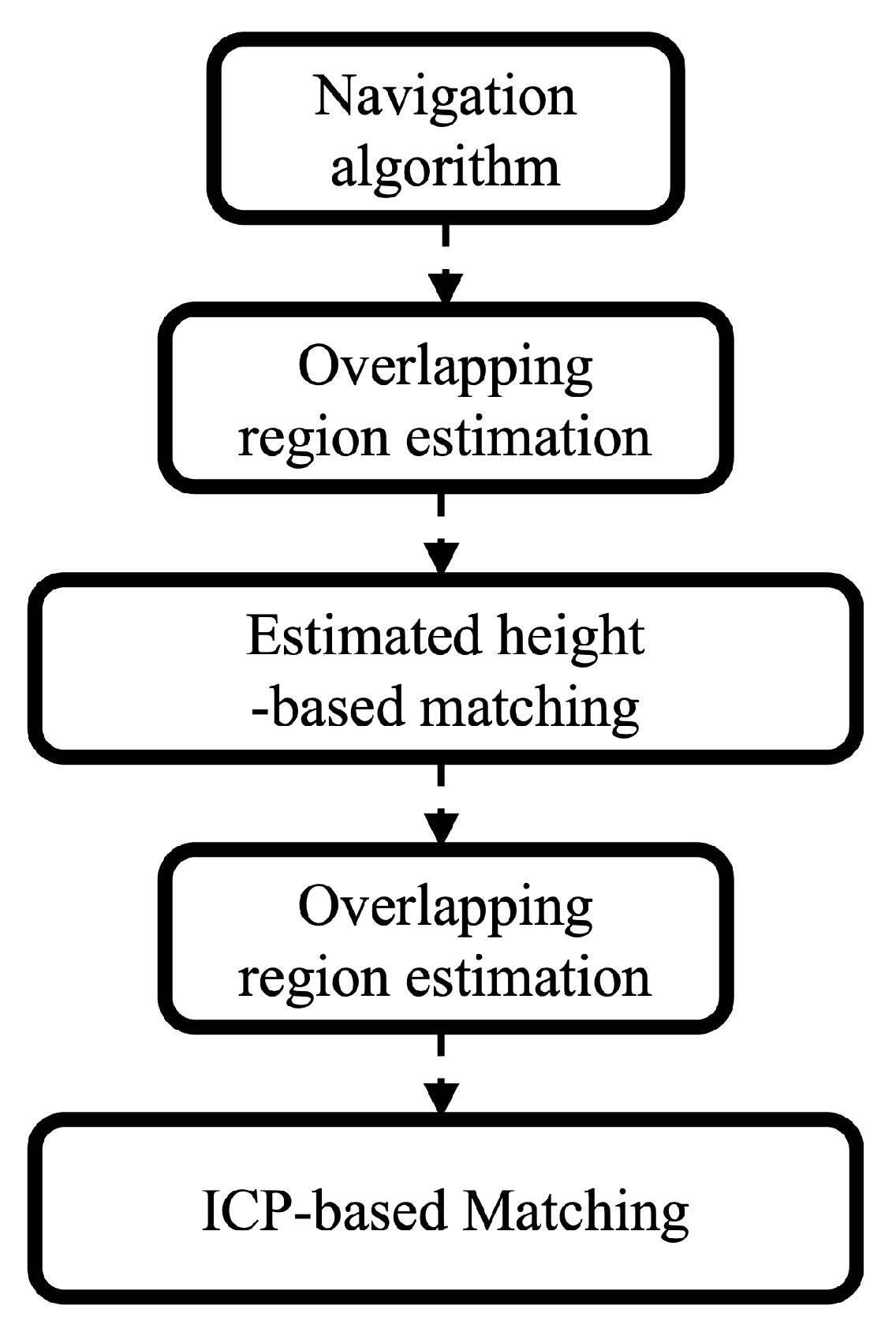

3.1. An Overview of the Proposed Algorithm

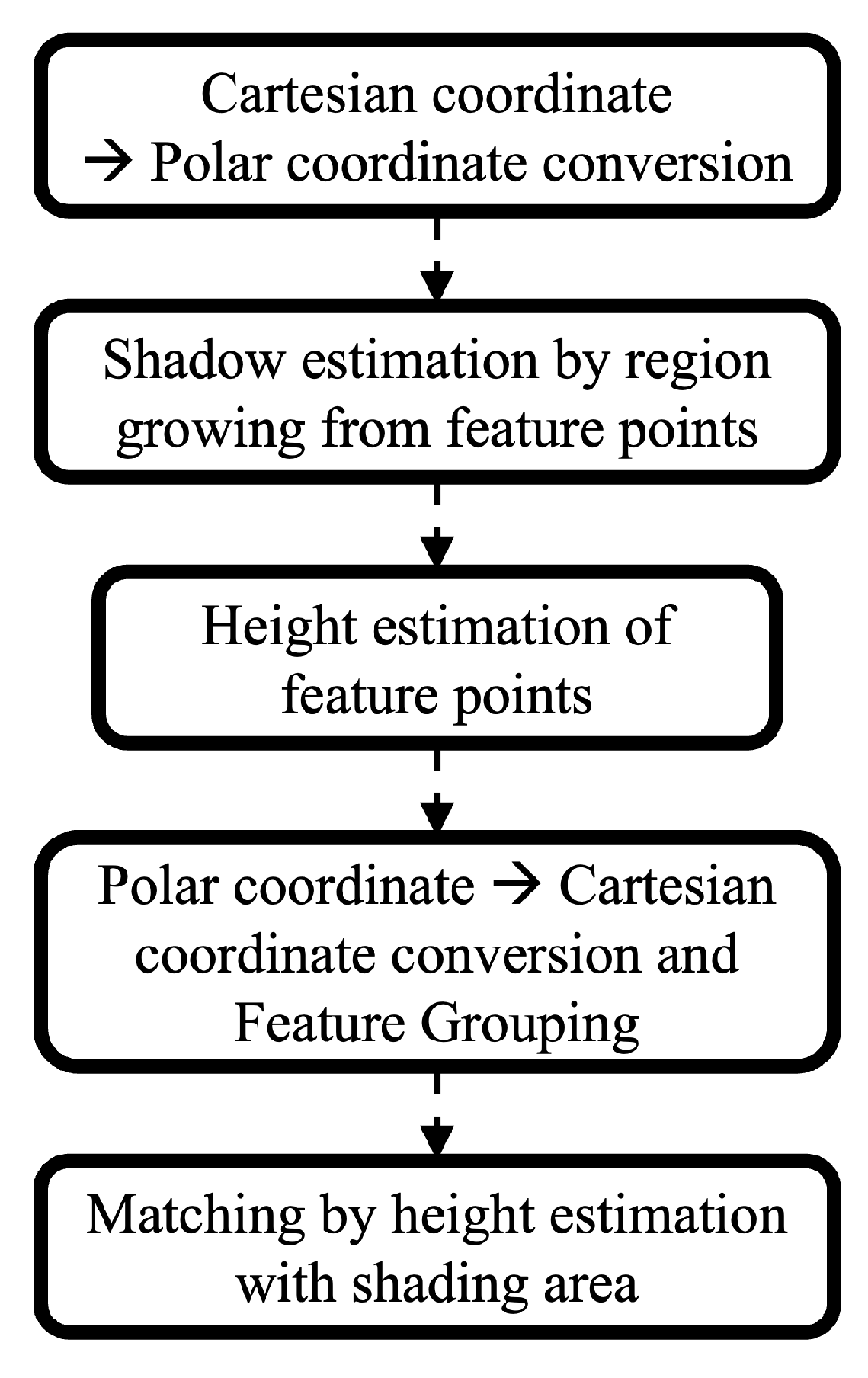

3.2. Shaded Area Estimation and Matching

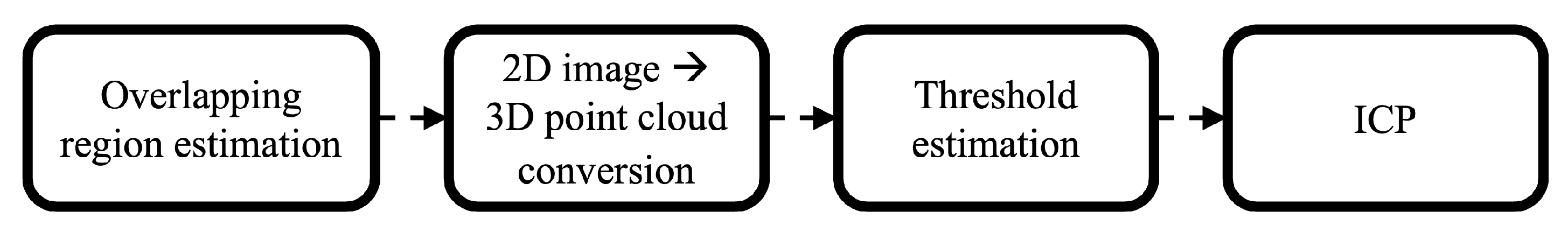

3.3. Proposed Algorithm Obtained by Fusing Two Algorithms

- Determine the distance d between the centroids of the two images.

- Derive the center coordinates between the centroids of the two images.

- Locate through the distance h between the center coordinates and .

- Determine the angles between the center and the center coordinates based on the centroid of each image, and thereafter, locate .
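The four steps above can be read as recovering the center of a planar rotation that carries the centroid of one image onto the centroid of the other. The sketch below follows that reading, which is an assumption because the symbol the steps locate is missing from the text. It further assumes that the rotation angle `theta` is supplied from elsewhere (for instance by the ICP stage); the helper names are hypothetical. Under these assumptions the center lies on the perpendicular bisector of the segment joining the centroids, at distance h = (d/2)/tan(θ/2) from the midpoint.

```python
import math

def rotate_about(point, center, theta):
    """Rotate `point` counter-clockwise by `theta` radians about `center`."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = point[0] - center[0], point[1] - center[1]
    return (center[0] + c * x - s * y, center[1] + s * x + c * y)

def rotation_center_from_centroids(c1, c2, theta):
    """Hypothetical helper: center of the rotation by `theta` that maps c1 onto c2.

    Returns (center, a1, a2), where a1 and a2 are the bearings of c1 and c2
    as seen from the center. Assumes theta is known and not a multiple of 2*pi.
    """
    if abs(math.sin(theta / 2.0)) < 1e-12:
        raise ValueError("theta ~ 0: pure translation, no finite rotation center")
    dx, dy = c2[0] - c1[0], c2[1] - c1[1]
    d = math.hypot(dx, dy)                                  # step 1: centroid distance
    mx, my = (c1[0] + c2[0]) / 2.0, (c1[1] + c2[1]) / 2.0  # step 2: midpoint
    if d == 0.0:
        # Coinciding centroids: the common centroid is itself the fixed point.
        return (mx, my), None, None
    h = (d / 2.0) / math.tan(theta / 2.0)                   # step 3: offset on bisector
    nx, ny = -dy / d, dx / d                                # unit left normal of c1 -> c2
    px, py = mx + h * nx, my + h * ny
    a1 = math.atan2(c1[1] - py, c1[0] - px)                # step 4: bearings from center
    a2 = math.atan2(c2[1] - py, c2[0] - px)
    return (px, py), a1, a2
```

Because centroids transform exactly like points under a rigid motion, the recovered center together with `theta` fully determines the transform between the two images. The bearings `a1` and `a2` differ by `theta` (modulo 2π), which provides a simple consistency check.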

4. Experiments

4.1. Basin Experiments

4.1.1. Experimental Environment

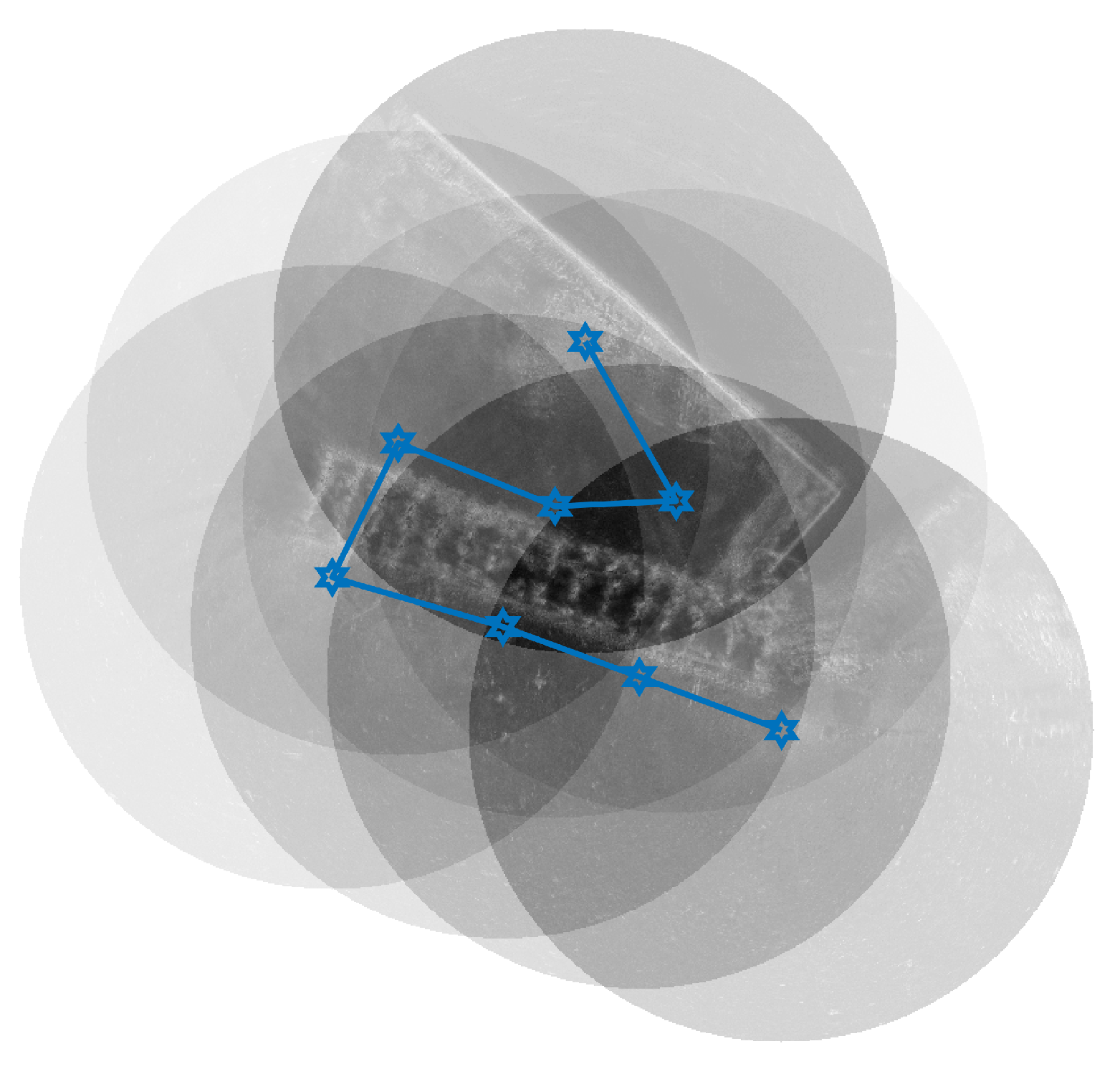

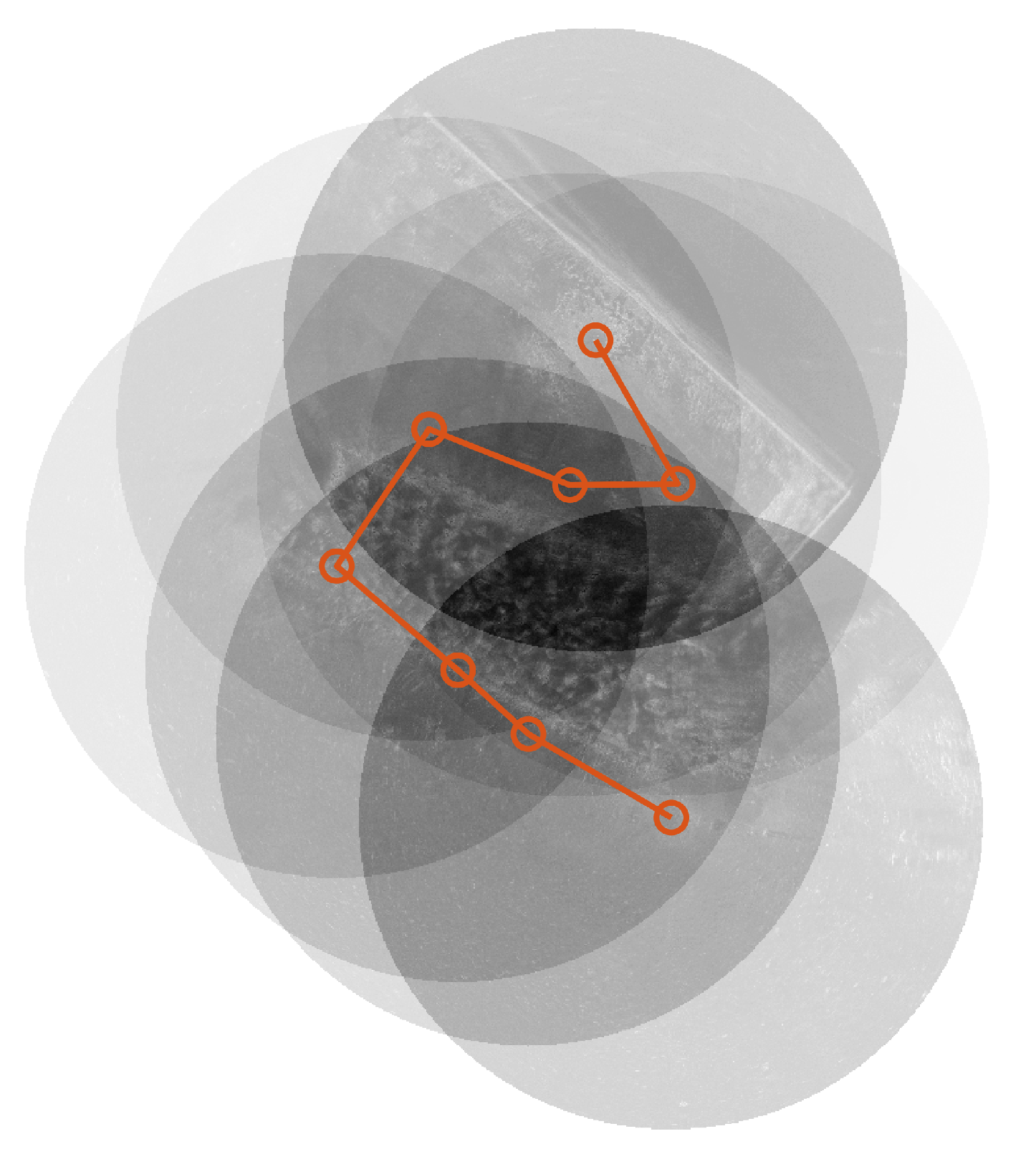

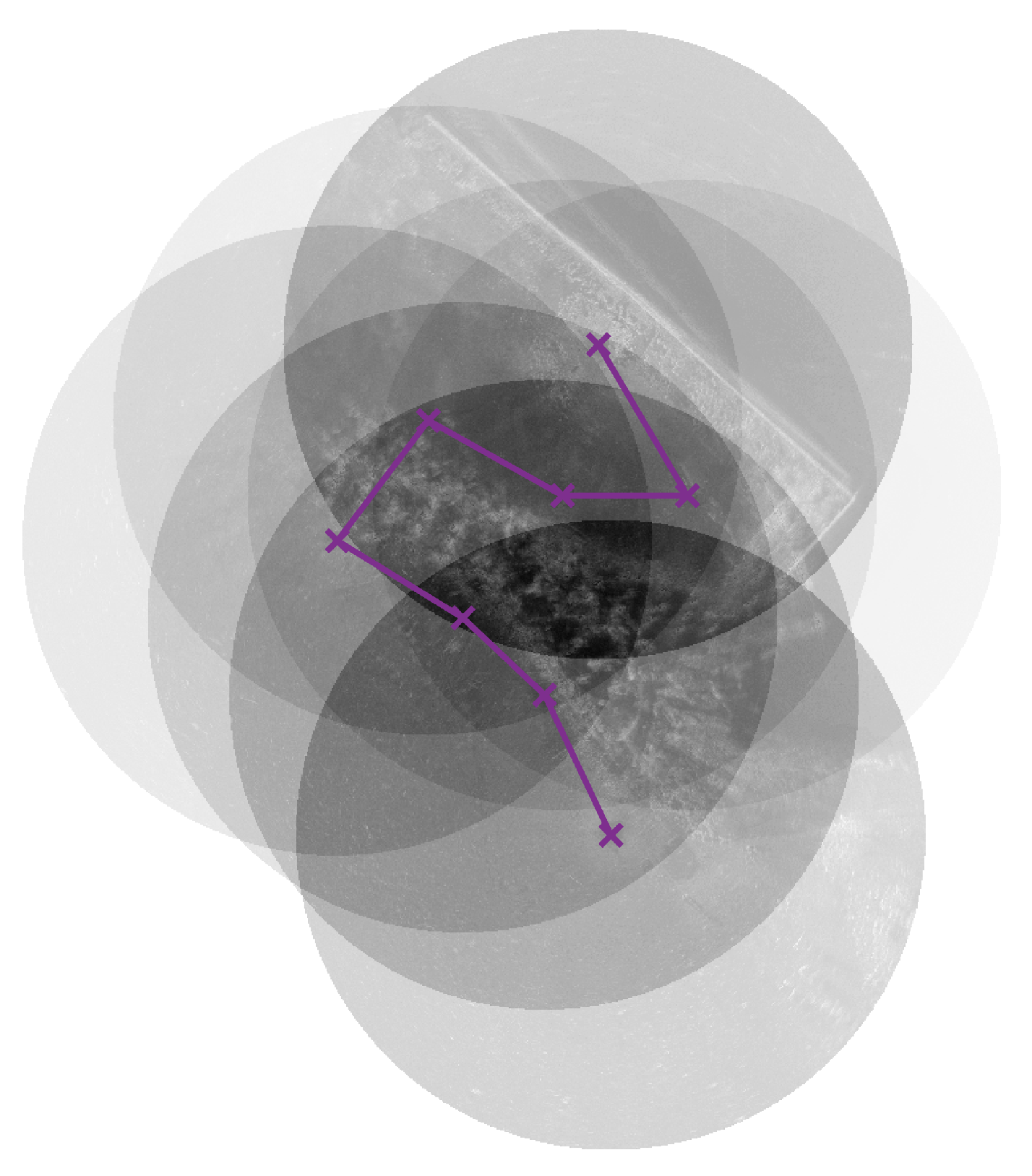

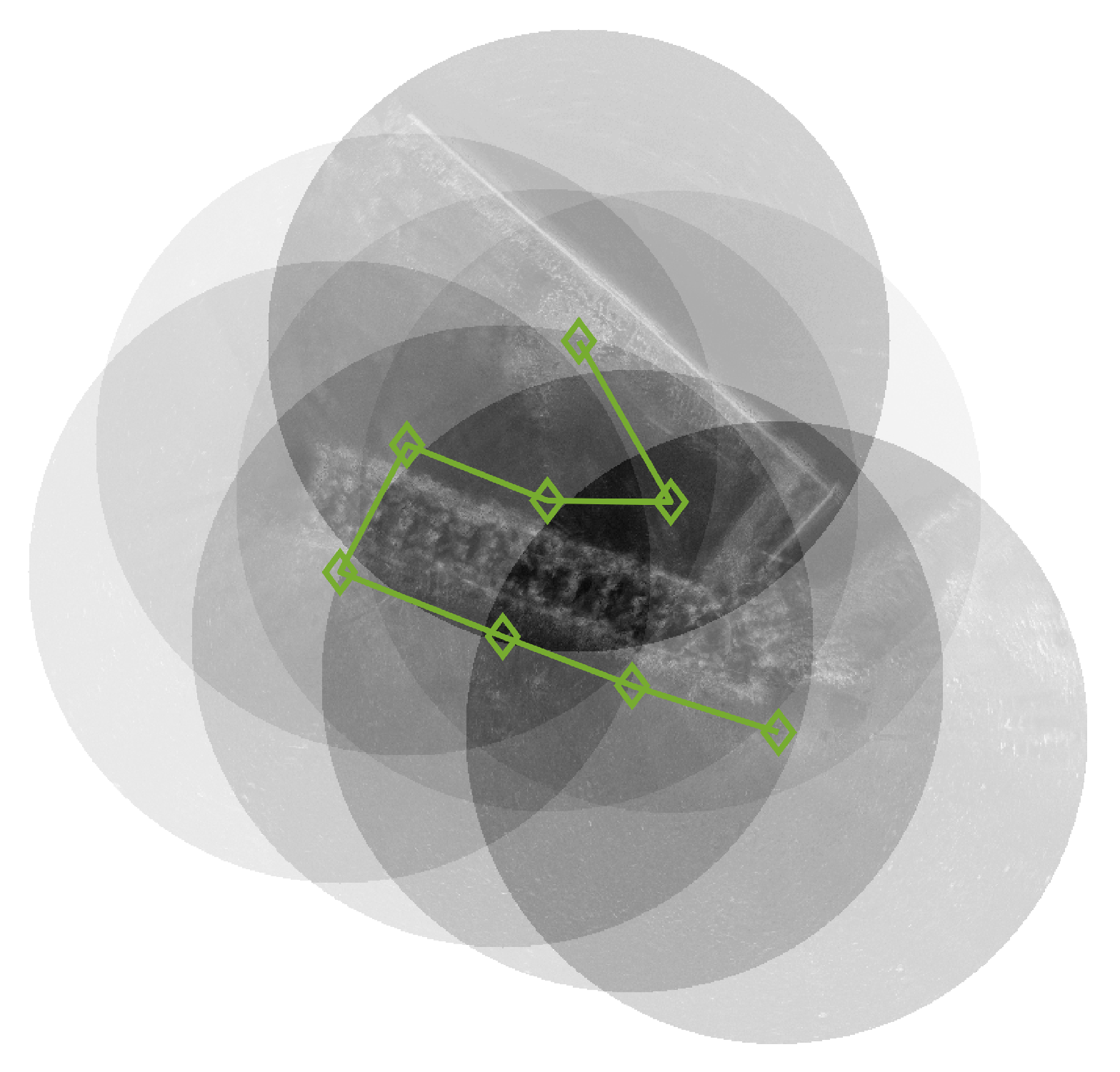

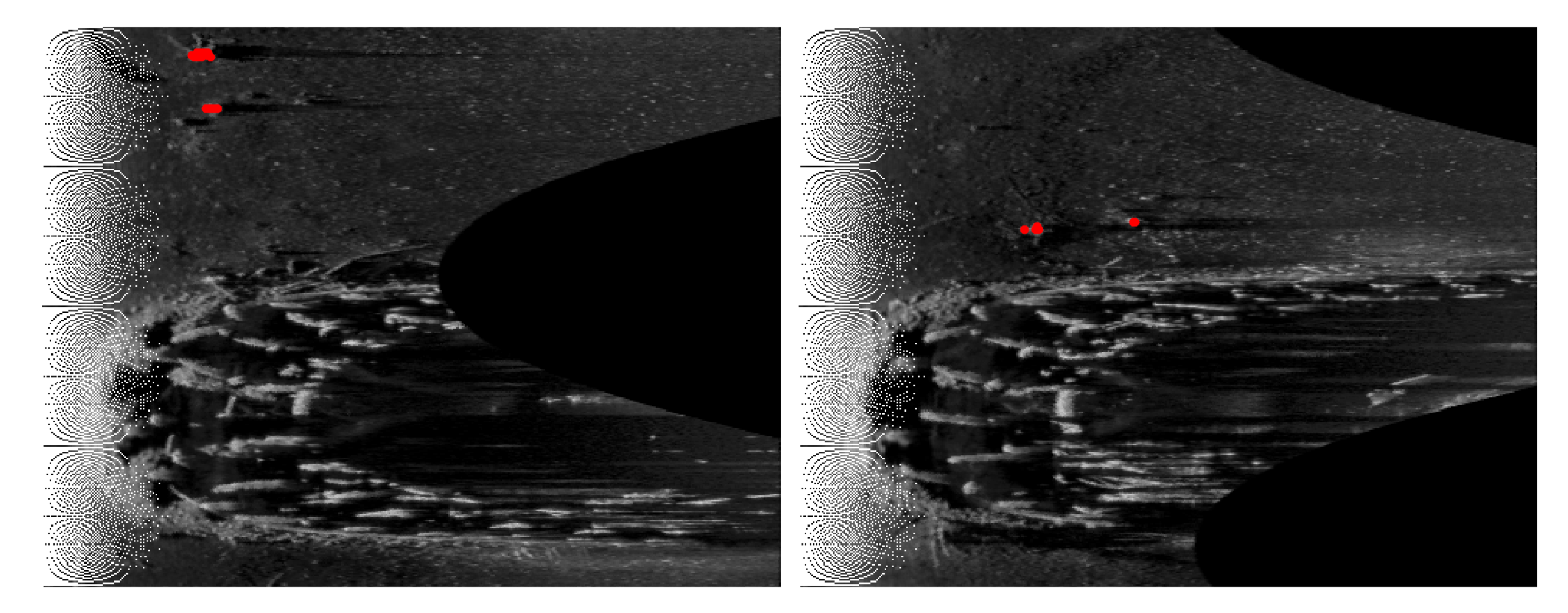

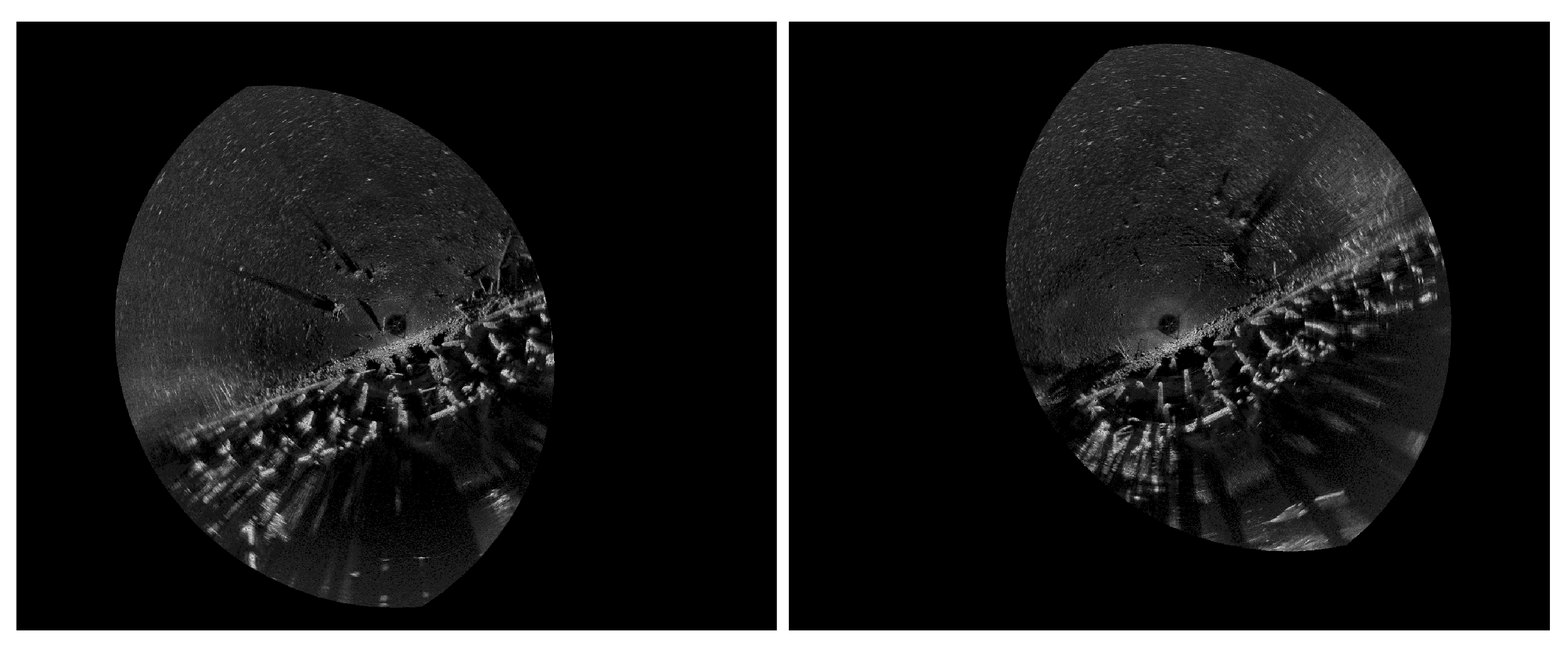

4.1.2. Experimental Results

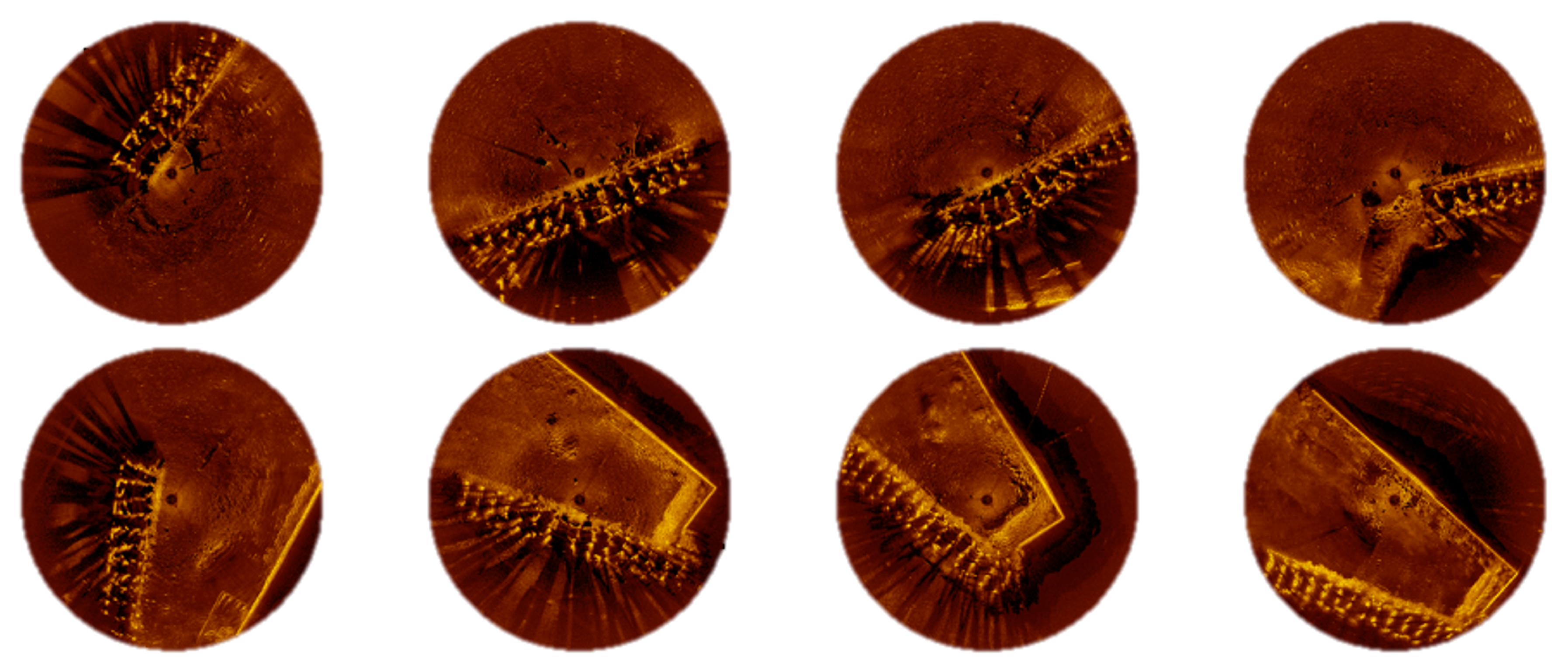

4.2. Real Sea Experiments

4.2.1. Experimental Environment

4.2.2. Experimental Results

5. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Method | Endpoint Position Error (m) | Endpoint Position Error (pixels) |
|---|---|---|
| A | 23.97 | 227 |
| B | 12.53 | 147 |
| C | 33.29 | 316 |
| D | 0.89 | 8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, G.; Yoon, S.; Lee, Y.; Lee, J. Improving ICP-Based Scanning Sonar Image Matching Performance Through Height Estimation of Feature Point Using Shaded Area. J. Mar. Sci. Eng. 2025, 13, 150. https://doi.org/10.3390/jmse13010150

Lee G, Yoon S, Lee Y, Lee J. Improving ICP-Based Scanning Sonar Image Matching Performance Through Height Estimation of Feature Point Using Shaded Area. Journal of Marine Science and Engineering. 2025; 13(1):150. https://doi.org/10.3390/jmse13010150

Chicago/Turabian Style: Lee, Gwonsoo, Sukmin Yoon, Yeongjun Lee, and Jihong Lee. 2025. "Improving ICP-Based Scanning Sonar Image Matching Performance Through Height Estimation of Feature Point Using Shaded Area" Journal of Marine Science and Engineering 13, no. 1: 150. https://doi.org/10.3390/jmse13010150

APA Style: Lee, G., Yoon, S., Lee, Y., & Lee, J. (2025). Improving ICP-Based Scanning Sonar Image Matching Performance Through Height Estimation of Feature Point Using Shaded Area. Journal of Marine Science and Engineering, 13(1), 150. https://doi.org/10.3390/jmse13010150