Ship Target Identification via Bayesian-Transformer Neural Network

Abstract

1. Introduction

- Ship targets are identified using only track information.

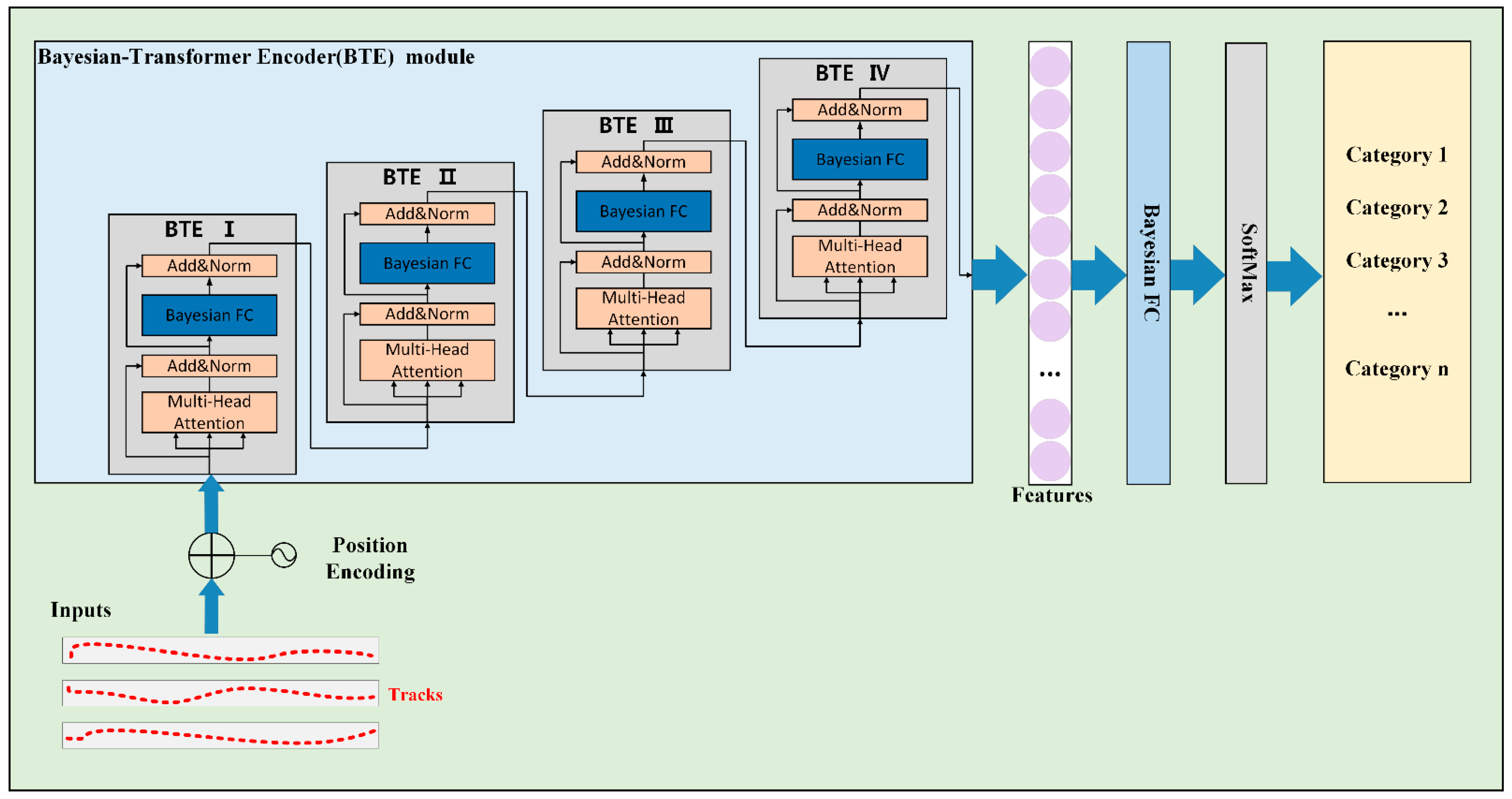

- To extract discriminative features from tracks, a Bayesian-Transformer Encoder (BTE) module is proposed; it can handle long sequences while reducing the number of network parameters.

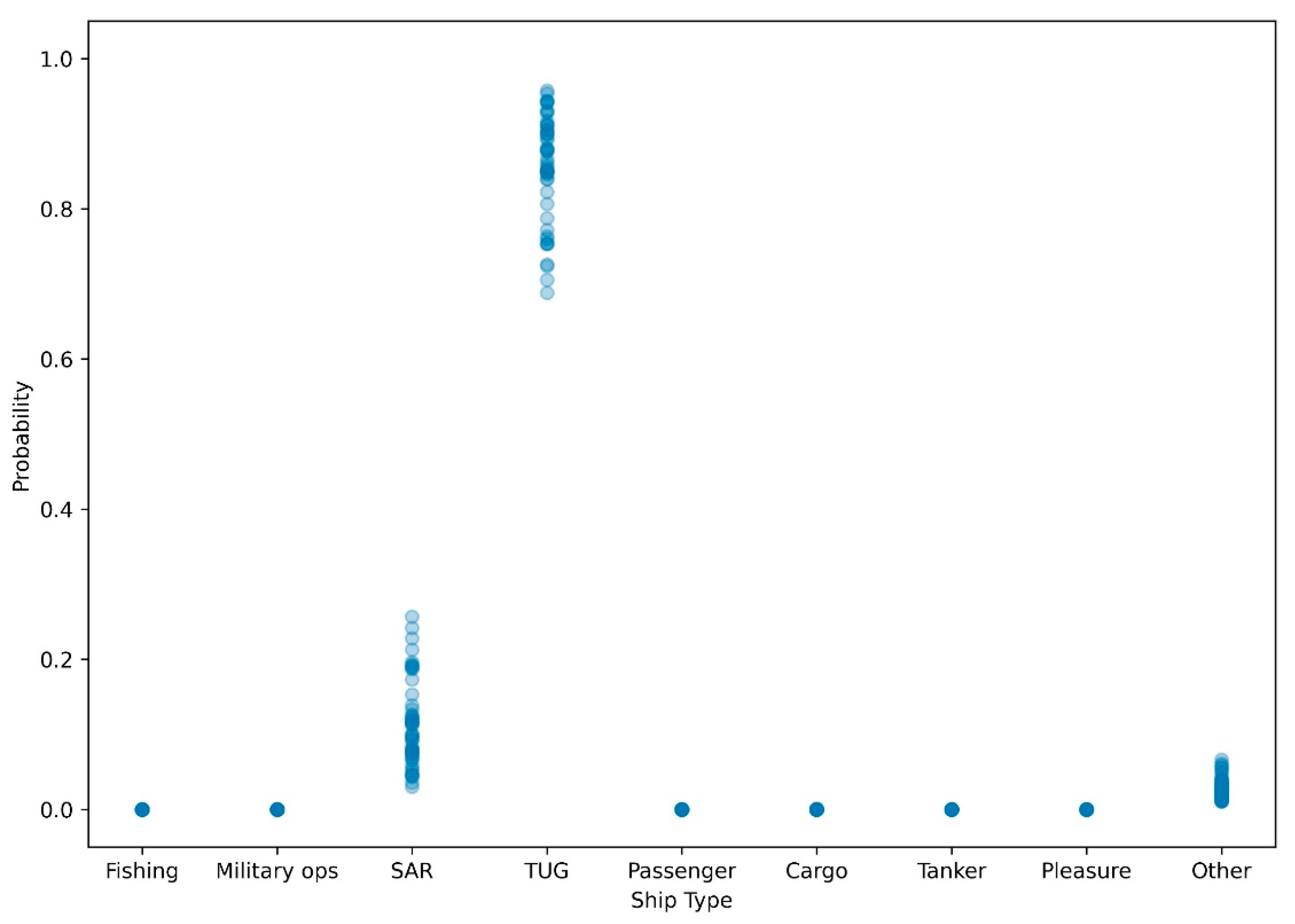

- Bayesian principles are applied to the transformer neural network, enabling it to output more reliable probabilities that capture both aleatoric and epistemic uncertainty.
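
A common way to separate the two kinds of uncertainty in a Bayesian neural network (shown here as a generic technique, not necessarily the exact formulation used in BTNN) is to draw S sets of network weights, collect the S class-probability vectors for one track, and decompose the entropy of their mean. The expected entropy of the individual predictions is read as aleatoric uncertainty, and the remainder (the mutual information between the prediction and the weights) as epistemic uncertainty. The function names and sample probabilities below are hypothetical.

```python
import math

def entropy(p):
    """Shannon entropy (in nats) of a probability vector."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0.0)

def decompose_uncertainty(samples):
    """Split predictive uncertainty for one track.

    samples: S class-probability vectors, one per sampled set of network weights.
    """
    s = len(samples)
    n_classes = len(samples[0])
    # Predictive probability: Monte Carlo average over the weight samples
    mean_p = [sum(p[c] for p in samples) / s for c in range(n_classes)]
    total = entropy(mean_p)                            # total predictive entropy
    aleatoric = sum(entropy(p) for p in samples) / s   # expected entropy
    epistemic = max(total - aleatoric, 0.0)            # mutual information, clamped for rounding
    return mean_p, total, aleatoric, epistemic

# Hypothetical 3-class outputs from S = 4 weight samples
agree_but_uncertain = [[0.5, 0.5, 0.0]] * 4       # samples agree, each one is unsure
disagreeing = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0],
               [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # each sample is sure, but they disagree

for name, samples in (("agree_but_uncertain", agree_but_uncertain),
                      ("disagreeing", disagreeing)):
    mean_p, total, alea, epi = decompose_uncertainty(samples)
    print(f"{name}: p={mean_p} total={total:.3f} aleatoric={alea:.3f} epistemic={epi:.3f}")
```

Both inputs yield the same predictive probability [0.5, 0.5, 0.0] and the same total entropy ln 2 ≈ 0.693 nats; the decomposition attributes it entirely to aleatoric uncertainty in the first case and entirely to epistemic uncertainty in the second.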

2. Methods

2.1. Mathematical Model of Ship Target Identification Using Tracks

2.2. Overall Structure of BTNN

2.3. Bayesian-Transformer Encoder (BTE) Module
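
The BTE module builds on the transformer encoder of Vaswani et al. [24]. As a rough, non-Bayesian illustration of its core operation, the sketch below applies single-head scaled dot-product self-attention to a short track whose points are assumed to be (x, y, speed, course) vectors. Every track point attends to every other point in a single step, however far apart they are in the sequence, and the projection matrices have a fixed size that does not grow with track length. The feature layout, sizes, random weights and helper names are hypothetical; the actual BTE configuration, including its Bayesian layers, is not reproduced here.

```python
import math
import random

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax(row):
    """Numerically stable softmax of a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a sequence x (L x d_in)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(w_k[0])
    # scores[i][j]: similarity between the query of point i and the key of point j
    scores = [[sum(qi[t] * kj[t] for t in range(d_k)) / math.sqrt(d_k) for kj in k]
              for qi in q]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, v), weights

def random_matrix(rows, cols, rng):
    """Placeholder weights; a trained encoder would learn these."""
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
d_in, d_model = 4, 8  # hypothetical: (x, y, speed, course) projected to 8 dimensions
w_q, w_k, w_v = (random_matrix(d_in, d_model, rng) for _ in range(3))

# Hypothetical 5-point track; real tracks would be normalised and far longer.
track = [[0.0, 0.0, 10.0, 0.10],
         [0.1, 0.0, 10.2, 0.12],
         [0.2, 0.1, 10.1, 0.15],
         [0.3, 0.1, 9.9, 0.14],
         [0.4, 0.2, 10.0, 0.13]]

out, attn = self_attention(track, w_q, w_k, w_v)
n_params = 3 * d_in * d_model  # projection weights only; independent of track length
print(len(out), len(out[0]), n_params)  # prints: 5 8 96
```

Because each row of attention weights sums to one, every output vector is a convex combination of the value vectors. A full encoder would add multiple heads, positional encodings, residual connections and feed-forward layers on top of this operation.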

2.4. Bayesian-Transformer Neural Network (BTNN) Training and the Predictive Probability Calculation
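
Bayesian neural networks of this kind are commonly trained by variational inference [25] with the reparameterization trick [27]. The sketch below assumes a mean-field Gaussian posterior N(μ, σ²) over each weight with σ = softplus(ρ) and a standard normal prior; these are common choices, not details confirmed for BTNN. Weights are drawn as w = μ + σ·ε so that gradients can reach μ and ρ, and the loss is the negative evidence lower bound: the data negative log-likelihood plus KL(q‖p), which has a closed form for Gaussians. The helper names and the per-minibatch KL weighting are hypothetical.

```python
import math
import random

def softplus(x):
    """Numerically stable log(1 + exp(x)); keeps sigma strictly positive."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def sample_weights(mu, rho, rng):
    """Reparameterized draw w = mu + softplus(rho) * eps with eps ~ N(0, 1)."""
    return [m + softplus(r) * rng.gauss(0.0, 1.0) for m, r in zip(mu, rho)]

def kl_gaussian_to_std_normal(mu, rho):
    """Closed-form KL(N(mu, sigma^2) || N(0, 1)), summed over independent weights."""
    total = 0.0
    for m, r in zip(mu, rho):
        sigma = softplus(r)
        total += 0.5 * (sigma ** 2 + m ** 2 - 1.0) - math.log(sigma)
    return total

def negative_elbo(batch_nll, kl, num_batches):
    """Minibatch loss: data term plus the KL term spread evenly over all minibatches."""
    return batch_nll + kl / num_batches

rng = random.Random(0)
mu = [0.2, -0.1, 0.05]    # hypothetical variational means
rho = [-3.0, -3.0, -3.0]  # softplus(-3) is about 0.049, a small initial std
w = sample_weights(mu, rho, rng)  # one weight draw for a forward pass
kl = kl_gaussian_to_std_normal(mu, rho)
# batch_nll would come from the network's softmax outputs; 12.3 is a placeholder value
loss = negative_elbo(batch_nll=12.3, kl=kl, num_batches=100)
print(w, kl, loss)
```

Spreading the KL term across minibatches keeps the sum of the per-batch losses equal to one full negative ELBO; other weightings are also used in practice.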

3. Experiments and Analysis

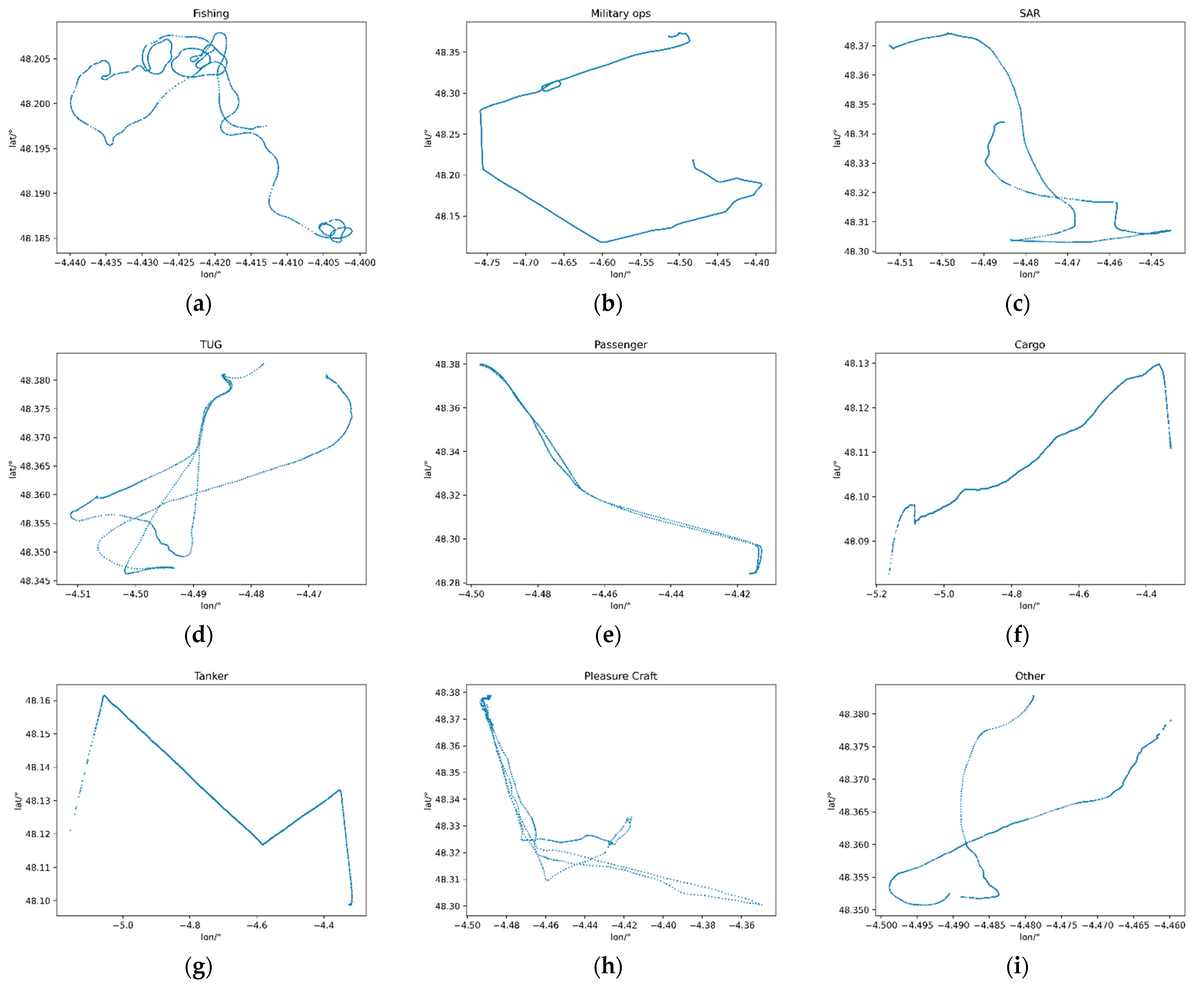

3.1. Data Preparation and Experimental Setup

3.2. Dimension Analysis and Choice

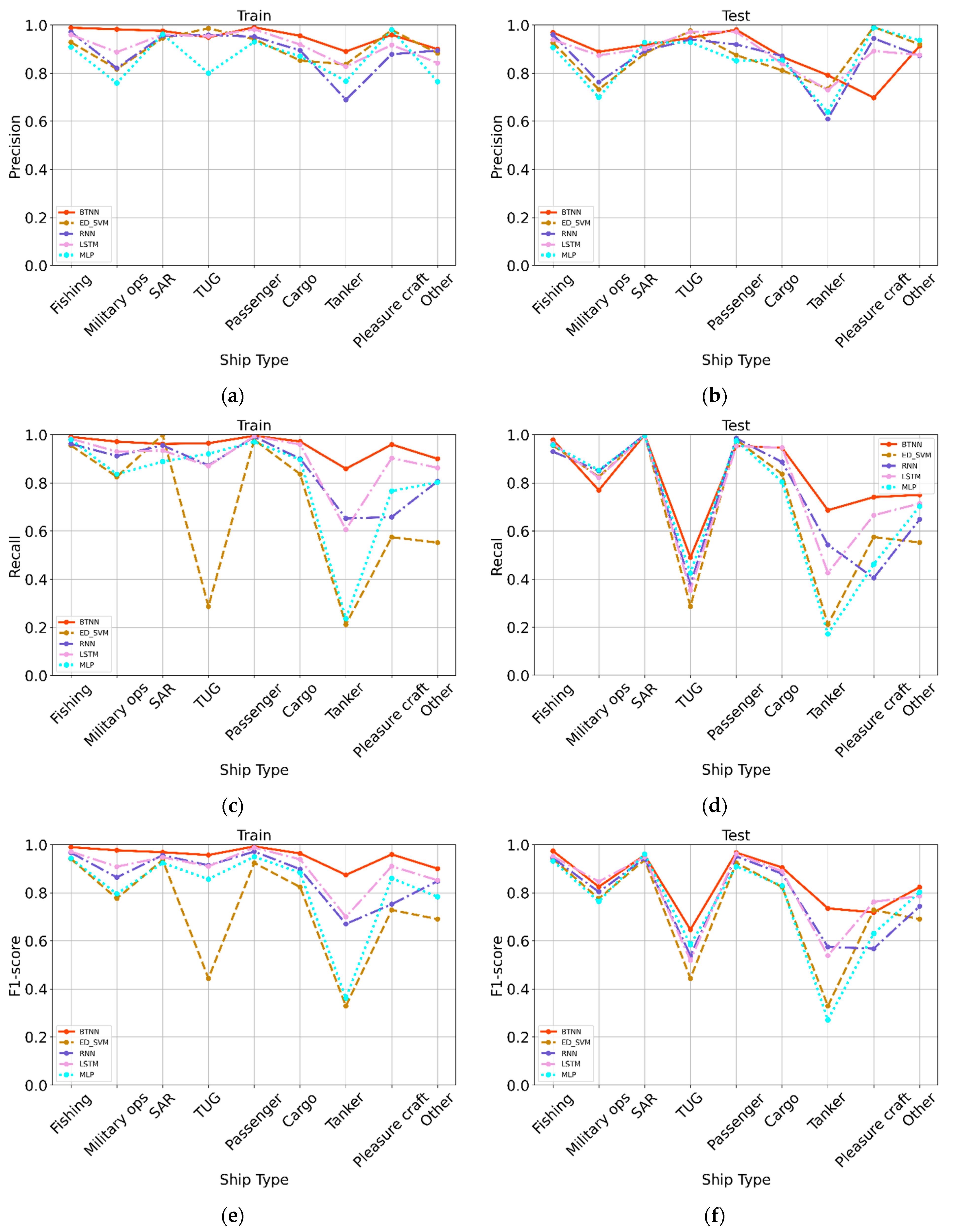

3.3. Accuracy Analysis and Comparison

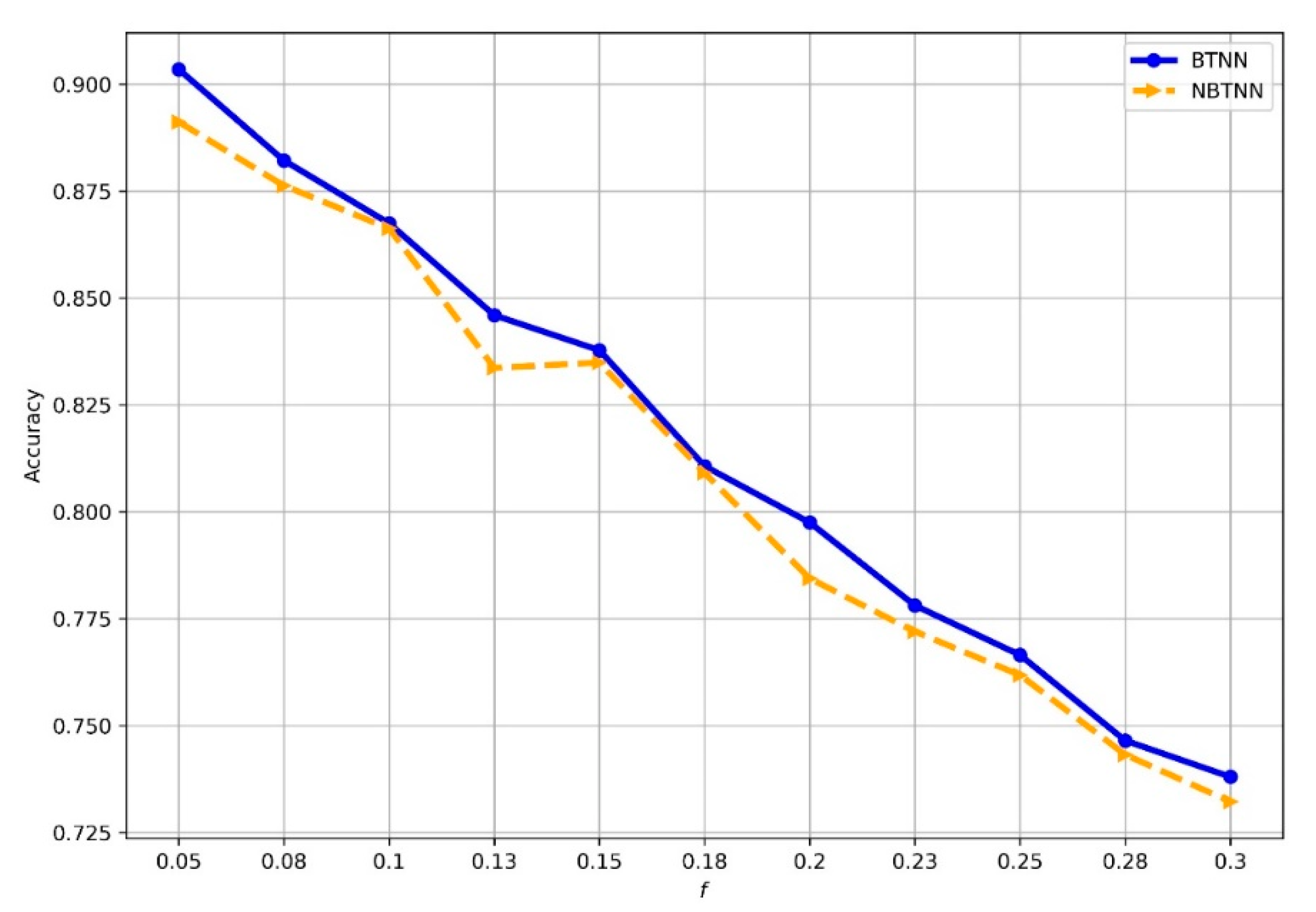

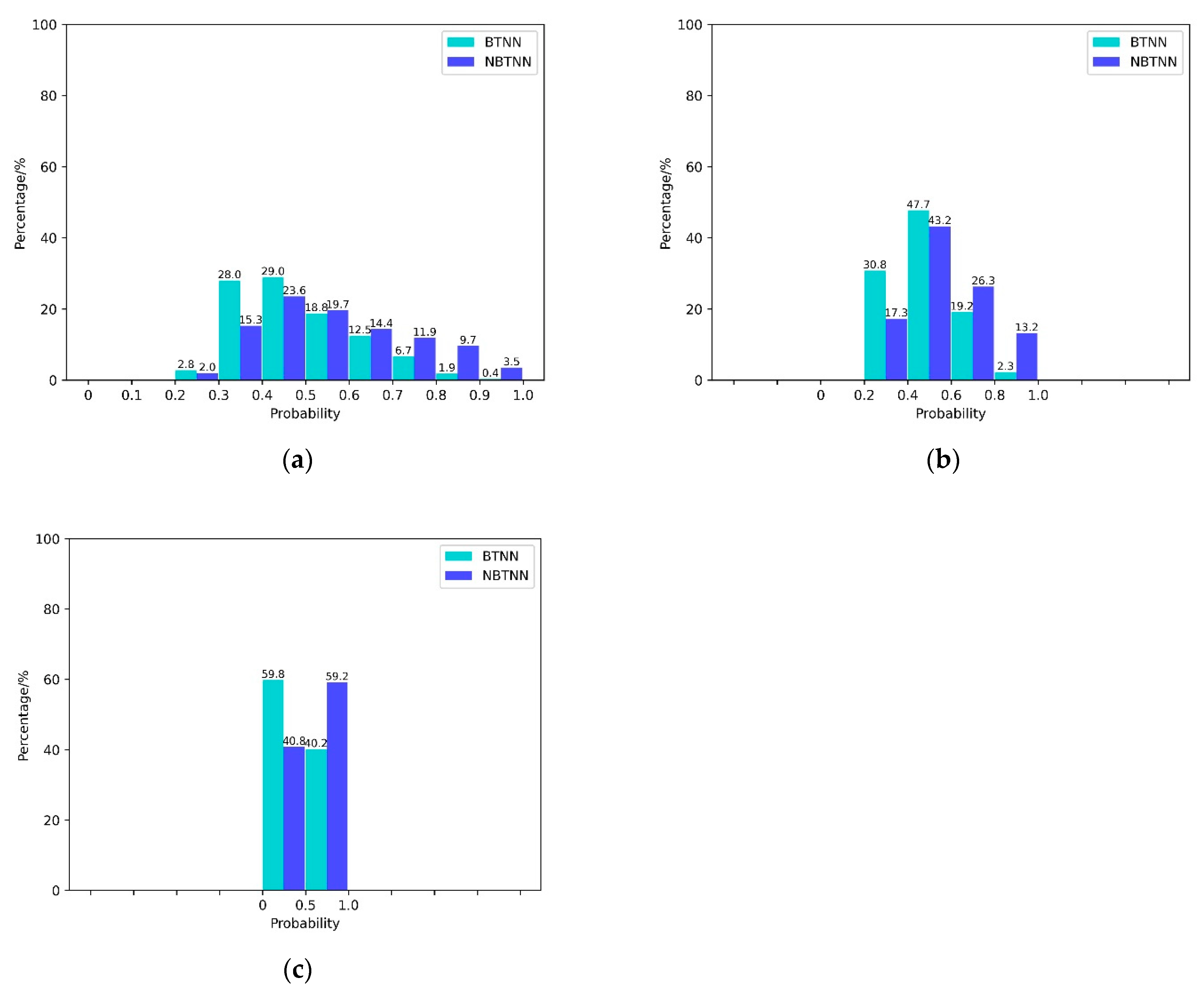

3.4. Network Anti-Noise Testing

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Guo, D.; Chen, B.; Chen, W.; Wang, C.; Zhou, M. Variational Temporal Deep Generative Model for Radar HRRP Target Recognition. IEEE Trans. Signal Process. 2020, 68, 5795–5809.

2. Xu, C.; Yin, C.; Wang, D.; Han, W. Fast ship detection combining visual saliency and a cascade CNN in SAR images. IET Radar Sonar Navig. 2020, 14, 1879–1887.

3. Ai, J.; Mao, Y.; Luo, Q.; Jia, L.; Xing, M. SAR Target Classification Using the Multikernel-Size Feature Fusion-Based Convolutional Neural Network. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–13.

4. Bagnall, A.; Lines, J.; Hills, J.; Bostrom, A. Time-series classification with COTE: The collective of transformation-based ensembles. IEEE Trans. Knowl. Data Eng. 2015, 27, 2522–2535.

5. Bagnall, A.; Lines, J.; Bostrom, A.; Large, J.; Keogh, E. The great time series classification bake off: A review and experimental evaluation of recent algorithmic advances. Data Min. Knowl. Discov. 2017, 31, 606–660.

6. Lines, J.; Bagnall, A. Time series classification with ensembles of elastic distance measures. Data Min. Knowl. Discov. 2015, 29, 565–592.

7. Hills, J.; Lines, J.; Baranauskas, E.; Mapp, J.; Bagnall, A. Classification of time series by shapelet transformation. Data Min. Knowl. Discov. 2013, 28, 851–881.

8. Noyes, S.P. Track classification in a naval defence radar using fuzzy logic. In Proceedings of the Target Tracking & Data Fusion, Birmingham, UK, 9 June 1998.

9. Kouemou, G.; Opitz, F. Radar target classification in littoral environment with HMMs combined with a track based classifier. In Proceedings of the International Conference on Radar, Adelaide, Australia, 2–5 September 2008.

10. Doumerc, R.; Pannetier, B.; Moras, J.; Dezert, J.; Canevet, L. Track classification within wireless sensor network. In Proceedings of the SPIE Defense + Security, Anaheim, CA, USA, 4 May 2017.

11. Wang, Z.F.; Pan, Q.; Chen, L.P.; Liang, Y.; Yang, F. Tracks classification based on airway-track association for over-the-horizon radar. Syst. Eng. Electron. 2012, 34, 2018–2022.

12. Ghadaki, H.; Dizaji, R. Target track classification for airport surveillance radar (ASR). In Proceedings of the 2006 IEEE Conference on Radar, Verona, NY, USA, 24–27 April 2006.

13. Mohajerin, N.; Histon, J.; Dizaji, R.; Waslander, S.L. Feature extraction and radar track classification for detecting UAVs in civilian airspace. In Proceedings of the 2014 IEEE Radar Conference (RadarCon), Cincinnati, OH, USA, 19–23 May 2014.

14. Espindle, L.P.; Kochenderfer, M.J. Classification of primary radar tracks using Gaussian mixture models. IET Radar Sonar Navig. 2010, 3, 559–568.

15. Sheng, K.; Liu, Z.; Zhou, D.; He, A.; Feng, C. Research on Ship Classification Based on Trajectory Features. J. Navig. 2017, 71, 100–116.

16. Sarikaya, T.B.; Yumus, D.; Efe, M.; Soysal, G.; Kirubarajan, T. Track Based UAV Classification Using Surveillance Radars. In Proceedings of the 2019 22nd International Conference on Information Fusion (FUSION), Ottawa, ON, Canada, 2–5 July 2019.

17. Zhan, W.; Yi, J.; Wan, X.; Rao, Y. Track-Feature-Based Target Classification in Passive Radar for Low-Altitude Airspace Surveillance. IEEE Sens. J. 2021, 21, 10017–10028.

18. Fawaz, H.I.; Forestier, G.; Weber, J.; Idoumghar, L.; Muller, P.-A. Deep learning for time series classification: A review. Data Min. Knowl. Discov. 2019, 33, 917–963.

19. Tan, H.X.; Aung, N.N.; Tian, J.; Chua, M.C.H.; Yang, Y.O. Time series classification using a modified LSTM approach from accelerometer-based data: A comparative study for gait cycle detection. Gait Posture 2019, 74, 128–134.

20. Kooshan, S.; Fard, H.; Toroghi, R.M. Singer Identification by Vocal Parts Detection and Singer Classification Using LSTM Neural Networks. In Proceedings of the 2019 4th International Conference on Pattern Recognition and Image Analysis (IPRIA), Tehran, Iran, 6–7 March 2019.

21. Lai, C.; Zhou, S.; Trayanova, N.A. Optimal ECG-lead selection increases generalizability of deep learning on ECG abnormality classification. Philos. Trans. R. Soc. A 2021, 379, 20200258.

22. Bakkegaard, S.; Blixenkrone-Moller, J.; Larsen, J.J.; Jochumsen, L. Target Classification Using Kinematic Data and a Recurrent Neural Network. In Proceedings of the 2018 19th International Radar Symposium (IRS), Bonn, Germany, 20–22 June 2018.

23. Ichimura, S.; Zhao, Q. Route-Based Ship Classification. In Proceedings of the 2019 IEEE 10th International Conference on Awareness Science and Technology (iCAST), Morioka, Japan, 23–25 October 2019.

24. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

25. Jordan, M.I.; Ghahramani, Z.; Jaakkola, T.S.; Saul, L.K. An Introduction to Variational Methods for Graphical Models; Springer: Dordrecht, The Netherlands, 1998.

26. Kiureghian, A.D.; Ditlevsen, O. Aleatory or epistemic? Does it matter? Struct. Saf. 2009, 31, 105–112.

27. Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. In Proceedings of the ICLR, Banff, AB, Canada, 14–16 April 2014.

28. Dürr, O.; Sick, B.; Murina, E. Probabilistic Deep Learning; Michaels, M., Ed.; Manning Publications: Shelter Island, NY, USA, 2020; pp. 229–263.

29. De Vries, G.K.D.; Van Someren, M. Machine learning for vessel trajectories using compression, alignments and domain knowledge. Expert Syst. Appl. 2012, 39, 13426–13439.

| Train | Test | Train | Test | Train | Test | Train | Test | Train | Test | Train | Test | Train | Test |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.3613 | 0.3402 | 0.8646 | 0.8657 | 0.9155 | 0.8791 | 0.9342 | 0.9046 | 0.9500 | 0.9130 | 0.9630 | 0.9160 | 0.9494 | 0.9076 |
| 0.3625 | 0.3402 | 0.9131 | 0.8746 | 0.9321 | 0.8804 | 0.9669 | 0.9273 | 0.9675 | 0.9265 | 0.9747 | 0.9396 | 0.9592 | 0.9226 |
| 0.3699 | 0.3402 | 0.9310 | 0.9007 | 0.9312 | 0.9020 | 0.9601 | 0.9199 | 0.9664 | 0.9240 | 0.9737 | 0.9351 | 0.9721 | 0.9354 |
| 0.3670 | 0.3402 | 0.9005 | 0.8864 | 0.9604 | 0.9255 | 0.9661 | 0.9337 | 0.9699 | 0.9218 | 0.9744 | 0.9343 | 0.9636 | 0.9340 |

| Method | Weighted Precision (Train) | Weighted Precision (Test) | Weighted Recall (Train) | Weighted Recall (Test) | Weighted F1-Score (Train) | Weighted F1-Score (Test) | Accuracy (Train) | Accuracy (Test) |
|---|---|---|---|---|---|---|---|---|
| ED_SVM [29] | 0.9154 | 0.8784 | 0.9170 | 0.8806 | 0.9084 | 0.8652 | 0.9355 | 0.8958 |
| RNN [22] | 0.9324 | 0.9014 | 0.9328 | 0.9016 | 0.9322 | 0.8968 | 0.9328 | 0.9016 |
| LSTM [19] | 0.9455 | 0.9107 | 0.9468 | 0.9124 | 0.9451 | 0.9053 | 0.9468 | 0.9124 |
| MLP [23] | 0.8988 | 0.8757 | 0.9016 | 0.8822 | 0.8925 | 0.8679 | 0.9016 | 0.8822 |
| BTNN (ours) | 0.9704 | 0.9303 | 0.9704 | 0.9313 | 0.9703 | 0.9282 | 0.9747 | 0.9396 |
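
The comparison table above reports support-weighted precision, recall and F1-score alongside accuracy. A generic sketch of how these quantities are computed from a confusion matrix follows, using the same support-weighted convention as scikit-learn's `average="weighted"` option; the function name and the confusion matrix are invented for illustration and are not taken from the paper. One property to keep in mind when reading such tables: support-weighted recall equals overall accuracy, because Σ_c (n_c/N)(TP_c/n_c) = Σ_c TP_c/N.

```python
def weighted_metrics(cm):
    """cm[i][j] = number of samples with true class i predicted as class j."""
    n_classes = len(cm)
    total = sum(sum(row) for row in cm)
    support = [sum(cm[i]) for i in range(n_classes)]  # true samples per class
    predicted = [sum(cm[i][j] for i in range(n_classes)) for j in range(n_classes)]
    w_p = w_r = w_f1 = 0.0
    for c in range(n_classes):
        tp = cm[c][c]
        precision = tp / predicted[c] if predicted[c] else 0.0
        recall = tp / support[c] if support[c] else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        weight = support[c] / total  # each class weighted by its share of true samples
        w_p += weight * precision
        w_r += weight * recall
        w_f1 += weight * f1
    accuracy = sum(cm[c][c] for c in range(n_classes)) / total
    return w_p, w_r, w_f1, accuracy

# Hypothetical 3-class confusion matrix (rows: true class, columns: predicted class)
cm = [[50, 5, 0],
      [4, 30, 6],
      [1, 2, 22]]
p, r, f1, acc = weighted_metrics(cm)
print(f"precision={p:.4f} recall={r:.4f} f1={f1:.4f} accuracy={acc:.4f}")
```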

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kong, Z.; Cui, Y.; Xiong, W.; Yang, F.; Xiong, Z.; Xu, P. Ship Target Identification via Bayesian-Transformer Neural Network. J. Mar. Sci. Eng. 2022, 10, 577. https://doi.org/10.3390/jmse10050577

Kong Z, Cui Y, Xiong W, Yang F, Xiong Z, Xu P. Ship Target Identification via Bayesian-Transformer Neural Network. Journal of Marine Science and Engineering. 2022; 10(5):577. https://doi.org/10.3390/jmse10050577

Chicago/Turabian Style: Kong, Zhan, Yaqi Cui, Wei Xiong, Fucheng Yang, Zhenyu Xiong, and Pingliang Xu. 2022. "Ship Target Identification via Bayesian-Transformer Neural Network" Journal of Marine Science and Engineering 10, no. 5: 577. https://doi.org/10.3390/jmse10050577

APA Style: Kong, Z., Cui, Y., Xiong, W., Yang, F., Xiong, Z., & Xu, P. (2022). Ship Target Identification via Bayesian-Transformer Neural Network. Journal of Marine Science and Engineering, 10(5), 577. https://doi.org/10.3390/jmse10050577