Investigation of Peanut Leaf Spot Detection Using Superpixel Unmixing Technology for Hyperspectral UAV Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area and Experimental Details

2.2. Data Acquisition

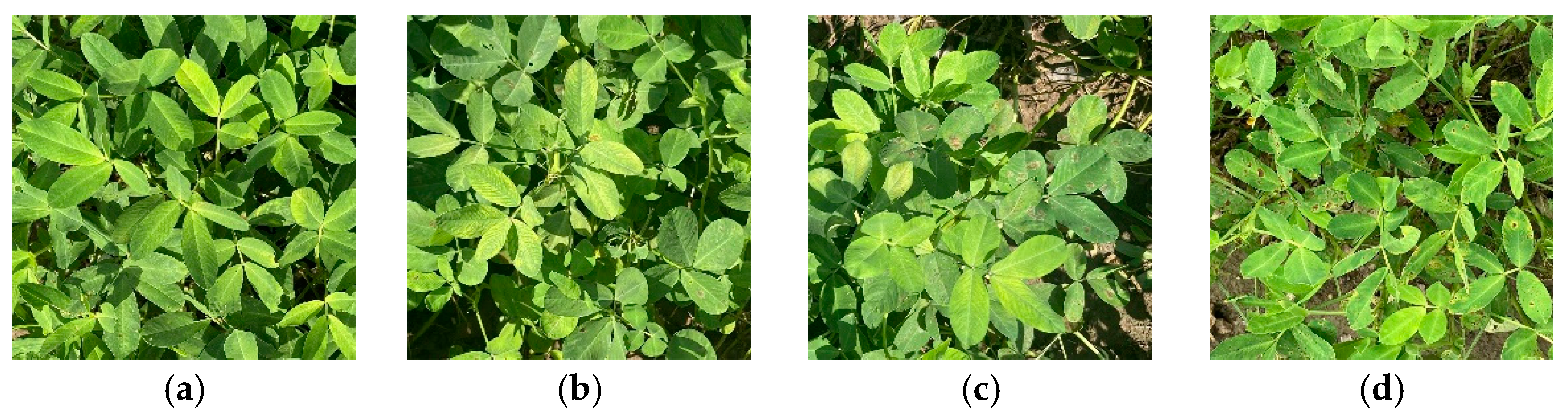

2.2.1. Disease Levels

2.2.2. Hyperspectral Image Acquisition

2.2.3. Disease Marker Area Acquisition

2.3. Crop Spectral Feature Extraction Method Based on Unmixing Technique

2.3.1. Superpixel Segmentation
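
In the superpixel comparison table, SLIC gives the highest OA and Kappa for all three classifiers. The following is a minimal sketch of a simplified SLIC loop adapted to hyperspectral data, written in pure Python for readability rather than speed. It assumes the cube is stored as nested lists (`cube[row][col]` is a list of band reflectances) and replaces the CIELAB color distance of the original SLIC with the Euclidean spectral distance. The function name, the `compactness` default and the toy scene are illustrative assumptions, not the settings used in this study.

```python
import math


def slic_hyperspectral(cube, n_segments, compactness=0.1, iterations=10):
    """Simplified SLIC superpixels for a cube indexed as cube[row][col] -> band list.

    Squared distance: d_spec^2 + (compactness / S)^2 * d_xy^2, where d_spec is the
    Euclidean spectral distance, d_xy the pixel distance and S the grid interval.
    Seed perturbation and connectivity enforcement of the original SLIC are omitted.
    """
    rows, cols = len(cube), len(cube[0])
    n_bands = len(cube[0][0])
    step = max(1, int(math.sqrt(rows * cols / n_segments)))
    # Seed centers on a regular grid; each center is [row, col, spectrum].
    centers = [
        [r, c, list(cube[r][c])]
        for r in range(step // 2, rows, step)
        for c in range(step // 2, cols, step)
    ]
    labels = [[-1] * cols for _ in range(rows)]
    spatial_weight = (compactness / step) ** 2
    for _ in range(iterations):
        best = [[math.inf] * cols for _ in range(rows)]
        # Assignment: each center only searches a (2S + 1) x (2S + 1) window around itself.
        for k, (cr, cc, spec) in enumerate(centers):
            for r in range(max(0, int(cr) - step), min(rows, int(cr) + step + 1)):
                for c in range(max(0, int(cc) - step), min(cols, int(cc) + step + 1)):
                    d_spec2 = sum((a - b) ** 2 for a, b in zip(cube[r][c], spec))
                    d = d_spec2 + spatial_weight * ((r - cr) ** 2 + (c - cc) ** 2)
                    if d < best[r][c]:
                        best[r][c] = d
                        labels[r][c] = k
        # Update: move each center to the mean position and mean spectrum of its pixels.
        sums = [[0.0, 0.0, [0.0] * n_bands, 0] for _ in centers]
        for r in range(rows):
            for c in range(cols):
                k = labels[r][c]
                if k < 0:
                    continue
                acc = sums[k]
                acc[0] += r
                acc[1] += c
                acc[3] += 1
                for b, value in enumerate(cube[r][c]):
                    acc[2][b] += value
        for k, (sum_r, sum_c, sum_spec, count) in enumerate(sums):
            if count:
                centers[k] = [sum_r / count, sum_c / count, [v / count for v in sum_spec]]
    return labels


# Hypothetical 8 x 8 two-band scene: left half "leaf", right half "soil".
cube = [[[0.1, 0.5] if c < 4 else [0.4, 0.2] for c in range(8)] for r in range(8)]
labels = slic_hyperspectral(cube, n_segments=4)
for row in labels:
    print(row)
```

Because the squared spectral distance between the two halves (0.18) is far larger than the weighted spatial term, every pixel in the left half receives a different superpixel label from every pixel in the right half. Since seed perturbation and connectivity post-processing are left out, a production pipeline would more likely rely on an established implementation such as `skimage.segmentation.slic`.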

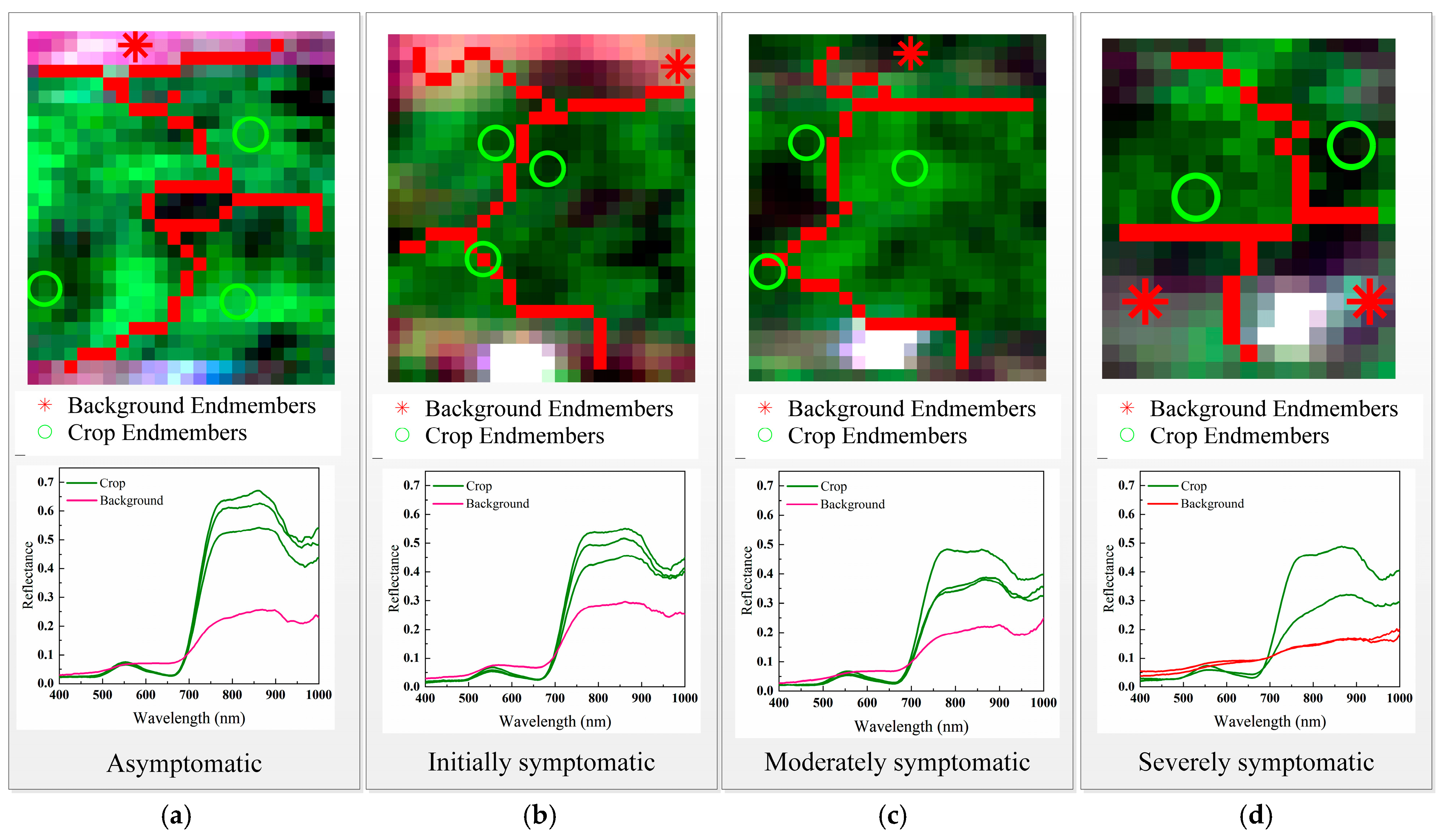

2.3.2. Endmember Extraction

2.3.3. Endmember Clustering
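
The grouping rule for the extracted endmembers is not specified here. One common choice for gathering endmember candidates into per-class bundles is a k-means-style loop that measures dissimilarity with the spectral angle, so that spectra differing only by a brightness scale factor fall into the same group. The sketch below assumes that choice; the farthest-first initialization, the function names and the three-band spectra are hypothetical.

```python
import math


def spectral_angle(a, b):
    """Angle in radians between two spectra; unaffected by scaling a spectrum by a constant."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Clamp to [-1, 1] so rounding errors cannot push acos out of its domain.
    return math.acos(max(-1.0, min(1.0, dot / (norm_a * norm_b))))


def cluster_endmembers(spectra, k, iterations=20):
    """Group candidate endmember spectra into k bundles (k-means-style, spectral-angle distance).

    Initialization is farthest-first: start from spectra[0], then repeatedly add the
    spectrum whose smallest angle to the already chosen centers is largest.
    """
    centers = [list(spectra[0])]
    while len(centers) < k:
        farthest = max(spectra, key=lambda s: min(spectral_angle(s, c) for c in centers))
        centers.append(list(farthest))
    labels = [0] * len(spectra)
    for _ in range(iterations):
        # Assign each spectrum to the center with the smallest spectral angle.
        labels = [min(range(k), key=lambda j: spectral_angle(s, centers[j])) for s in spectra]
        # Recompute each center as the mean spectrum of its members.
        for j in range(k):
            members = [s for s, label in zip(spectra, labels) if label == j]
            if members:
                centers[j] = [sum(band) / len(members) for band in zip(*members)]
    return labels, centers


# Hypothetical three-band endmember candidates: two leaf-like, two soil-like.
candidates = [
    [0.05, 0.08, 0.45],
    [0.10, 0.16, 0.90],  # same shape as the first, twice as bright
    [0.20, 0.25, 0.30],
    [0.22, 0.27, 0.33],
]
labels, _ = cluster_endmembers(candidates, k=2)
print(labels)  # [0, 0, 1, 1]
```

The second candidate is exactly twice the first, so its spectral angle to the first is essentially zero and both land in the same bundle, whereas a Euclidean distance would treat them as quite different.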

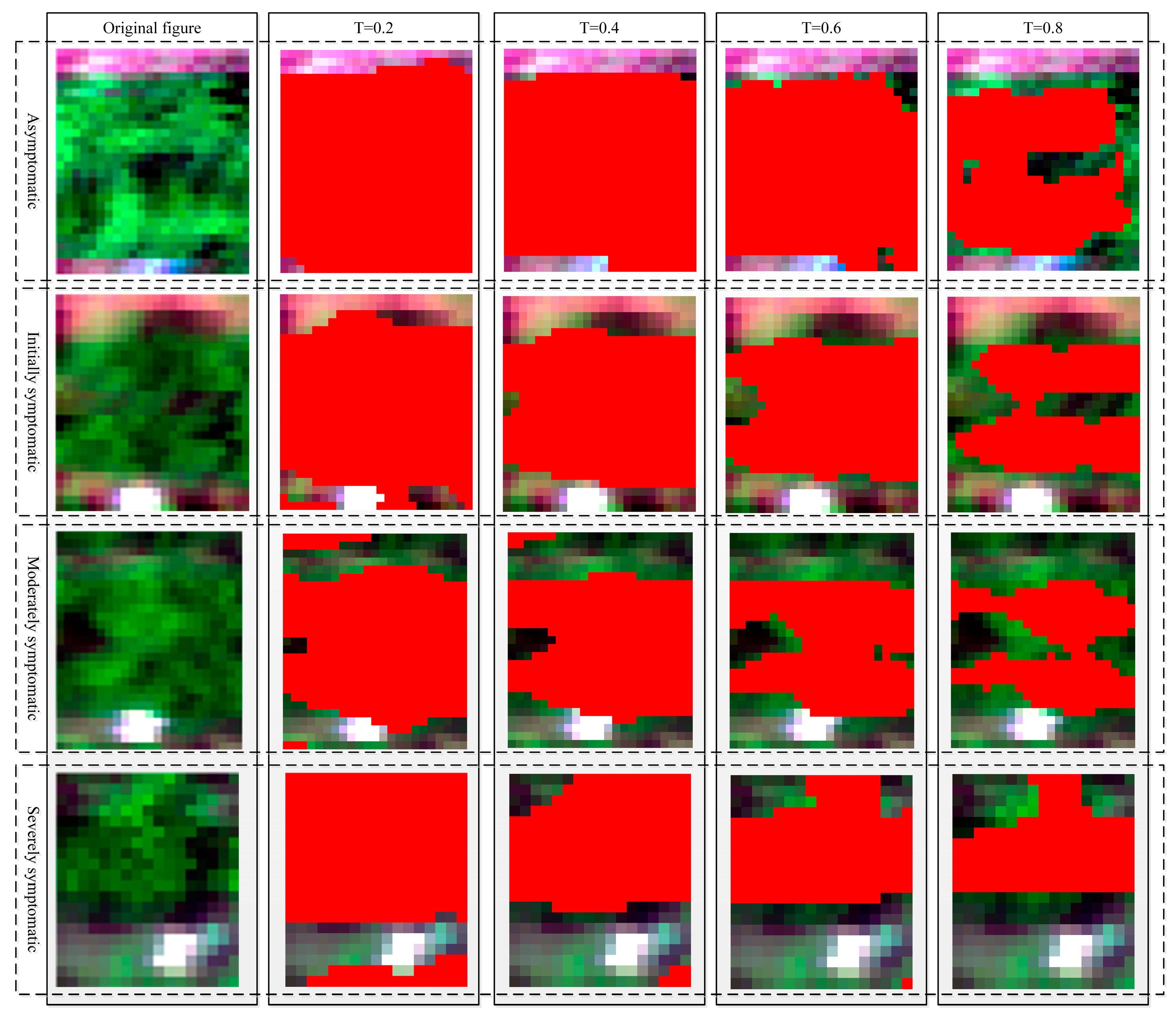

2.3.4. Abundance Estimation
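
Judging by their names, several of the abundance estimators compared in the results (FCLS and the MD-FCLS, Mean-FCLS and D-FCLS variants) build on fully constrained least squares, which models a pixel spectrum x as E a, a combination of the endmember spectra in the columns of E, and chooses abundances a that minimize ||x - E a||^2 subject to a >= 0 and sum(a) = 1. The classical FCLS algorithm of Heinz and Chang (2001) solves this with an iterative active-set scheme; the minimal sketch below instead solves the same constrained problem by projected gradient descent, projecting onto the probability simplex after each step. It is an illustration in pure Python, not the authors' implementation, and the two four-band endmember spectra are hypothetical.

```python
def project_to_simplex(v):
    """Euclidean projection of v onto the simplex {a : a_i >= 0, sum(a) = 1}."""
    u = sorted(v, reverse=True)
    cumulative, theta = 0.0, 0.0
    for k, u_k in enumerate(u, start=1):
        cumulative += u_k
        t = (cumulative - 1.0) / k
        if u_k - t > 0:
            theta = t  # the last k satisfying this condition defines the threshold
    return [max(x - theta, 0.0) for x in v]


def fcls_projected_gradient(pixel, endmembers, iterations=2000):
    """Abundances minimizing ||pixel - sum_p a_p * e_p||^2 with a_p >= 0 and sum(a) = 1."""
    p = len(endmembers)
    # Gram matrix G = E^T E and correlation vector c = E^T x.
    gram = [[sum(x * y for x, y in zip(endmembers[i], endmembers[j])) for j in range(p)]
            for i in range(p)]
    corr = [sum(x * y for x, y in zip(endmembers[i], pixel)) for i in range(p)]
    # trace(G) bounds the largest eigenvalue of G, so 1 / trace(G) is a safe step size.
    step = 1.0 / sum(gram[i][i] for i in range(p))
    a = [1.0 / p] * p  # start from equal abundances
    for _ in range(iterations):
        # Gradient of 0.5 * ||x - E a||^2 is G a - c.
        grad = [sum(gram[i][j] * a[j] for j in range(p)) - corr[i] for i in range(p)]
        a = project_to_simplex([a[i] - step * grad[i] for i in range(p)])
    return a


# Hypothetical four-band endmember spectra (reflectance).
leaf = [0.05, 0.08, 0.04, 0.45]
soil = [0.20, 0.25, 0.30, 0.35]
mixed = [0.7 * x + 0.3 * y for x, y in zip(leaf, soil)]
print(fcls_projected_gradient(mixed, [leaf, soil]))  # approximately [0.7, 0.3]
```

Because the step size never exceeds the reciprocal of the largest eigenvalue of E^T E, no projected step increases the objective. A pixel outside the leaf-soil mixing line, such as 1.1 x leaf - 0.1 x soil, is clipped to abundances of about [1, 0] instead of receiving a negative soil fraction. With many endmembers, an active-set or dedicated simplex-projection solver would usually converge faster.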

2.4. Classification Methods

2.4.1. Extreme Gradient Boosting

2.4.2. Multi-Layer Perceptron

2.4.3. Support Vector Machine Based on Genetic Algorithm
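
A GA-SVM typically uses the genetic algorithm to search SVM hyperparameters, such as the penalty C and the RBF kernel parameter gamma, with cross-validated accuracy as the fitness. Assuming that setup (the exact encoding and GA operators used here are not stated), a minimal real-coded GA might look like the sketch below. The toy fitness function is a hypothetical stand-in; in practice it would train and score an SVM, for example with scikit-learn's `SVC` and `cross_val_score`.

```python
import random


def genetic_search(fitness, bounds, pop_size=20, generations=30, mutation_sigma=0.3, seed=0):
    """Maximize fitness(params) inside a box using a simple real-coded genetic algorithm.

    Tournament selection, blend crossover, Gaussian mutation and elitism.
    Returns (best_fitness, best_params).
    """
    rng = random.Random(seed)

    def tournament(scored):
        # Pick two distinct individuals and keep the fitter one.
        first, second = rng.sample(scored, 2)
        return first if first[0] >= second[0] else second

    population = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    scored = [(fitness(ind), ind) for ind in population]
    for _ in range(generations):
        scored.sort(key=lambda s: s[0], reverse=True)
        children = [scored[0][1]]  # elitism: the current best survives unchanged
        while len(children) < pop_size:
            _, mother = tournament(scored)
            _, father = tournament(scored)
            w = rng.random()
            # Blend crossover plus Gaussian mutation, clipped back into the bounds.
            child = [
                min(max(w * m + (1 - w) * f + rng.gauss(0.0, mutation_sigma), lo), hi)
                for m, f, (lo, hi) in zip(mother, father, bounds)
            ]
            children.append(child)
        scored = [(fitness(ind), ind) for ind in children]
    return max(scored, key=lambda s: s[0])


def toy_fitness(params):
    """Hypothetical stand-in for cross-validated SVM accuracy, peaking at log10(C)=1, log10(gamma)=-2."""
    log_c, log_gamma = params
    return -((log_c - 1.0) ** 2 + (log_gamma + 2.0) ** 2)


best_score, (log_c, log_gamma) = genetic_search(toy_fitness, bounds=[(-3.0, 3.0), (-5.0, 1.0)])
print(f"C = {10 ** log_c:.3g}, gamma = {10 ** log_gamma:.3g}, fitness = {best_score:.4f}")
```

Searching log10(C) and log10(gamma) rather than C and gamma directly lets the GA cover several orders of magnitude evenly. Since a real fitness evaluation trains several SVMs, caching scores for repeated individuals (such as the elite) would save noticeable time.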

2.5. Evaluation Indicators
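
The result tables report overall accuracy (OA) and the kappa coefficient, both in percent. Assuming the usual definitions, OA is the fraction of correctly classified samples (the confusion-matrix diagonal divided by the total), and Cohen's kappa corrects OA for the agreement expected by chance from the row and column totals: kappa = (p_o - p_e) / (1 - p_e). The confusion matrix in this sketch is invented for illustration and uses the four severity levels A, I, M and S.

```python
def overall_accuracy(cm):
    """Share of samples on the diagonal of a square confusion matrix."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total


def cohen_kappa(cm):
    """Cohen's kappa: (p_o - p_e) / (1 - p_e), with p_e from the row and column totals."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    p_o = sum(cm[i][i] for i in range(n)) / total
    row_totals = [sum(cm[i]) for i in range(n)]
    col_totals = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    p_e = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    return (p_o - p_e) / (1 - p_e)


# Invented confusion matrix for the severity levels A, I, M, S
# (rows: reference level, columns: predicted level).
cm = [
    [40, 5, 0, 0],
    [4, 35, 6, 0],
    [0, 5, 38, 2],
    [0, 0, 3, 42],
]
print(f"OA = {overall_accuracy(cm):.2%}, Kappa = {cohen_kappa(cm):.2%}")
# prints: OA = 86.11%, Kappa = 81.48%
```

Kappa is lower than OA here because part of the observed agreement (p_e = 0.25 for these balanced totals) would be expected by chance alone.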

2.6. Flow of Disease Detection Method Based on Superpixel Unmixing Technique

3. Results and Analysis

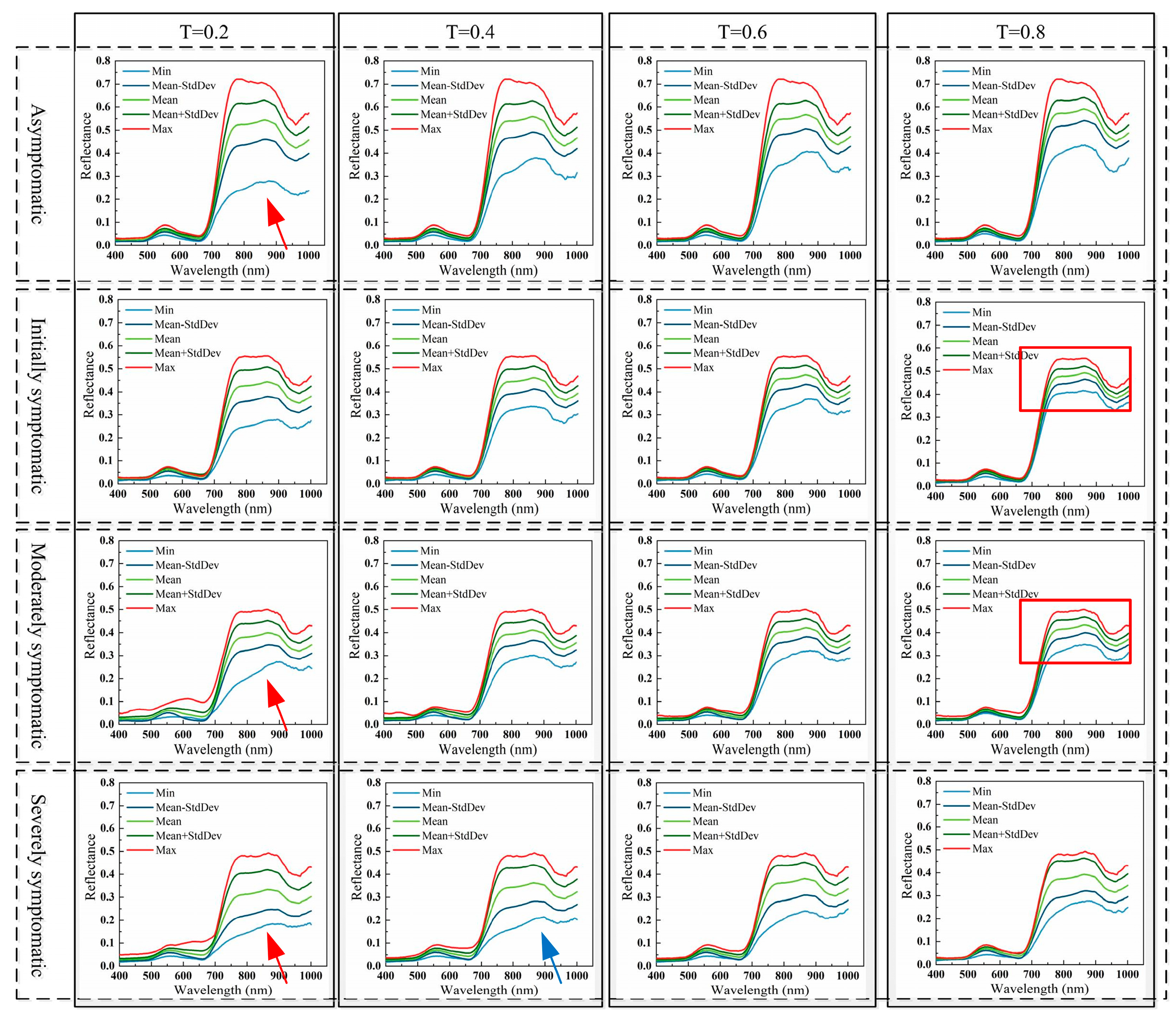

3.1. Spectral Feature Extraction Results

3.2. Comparison of Pure and Mixed Spectral Features

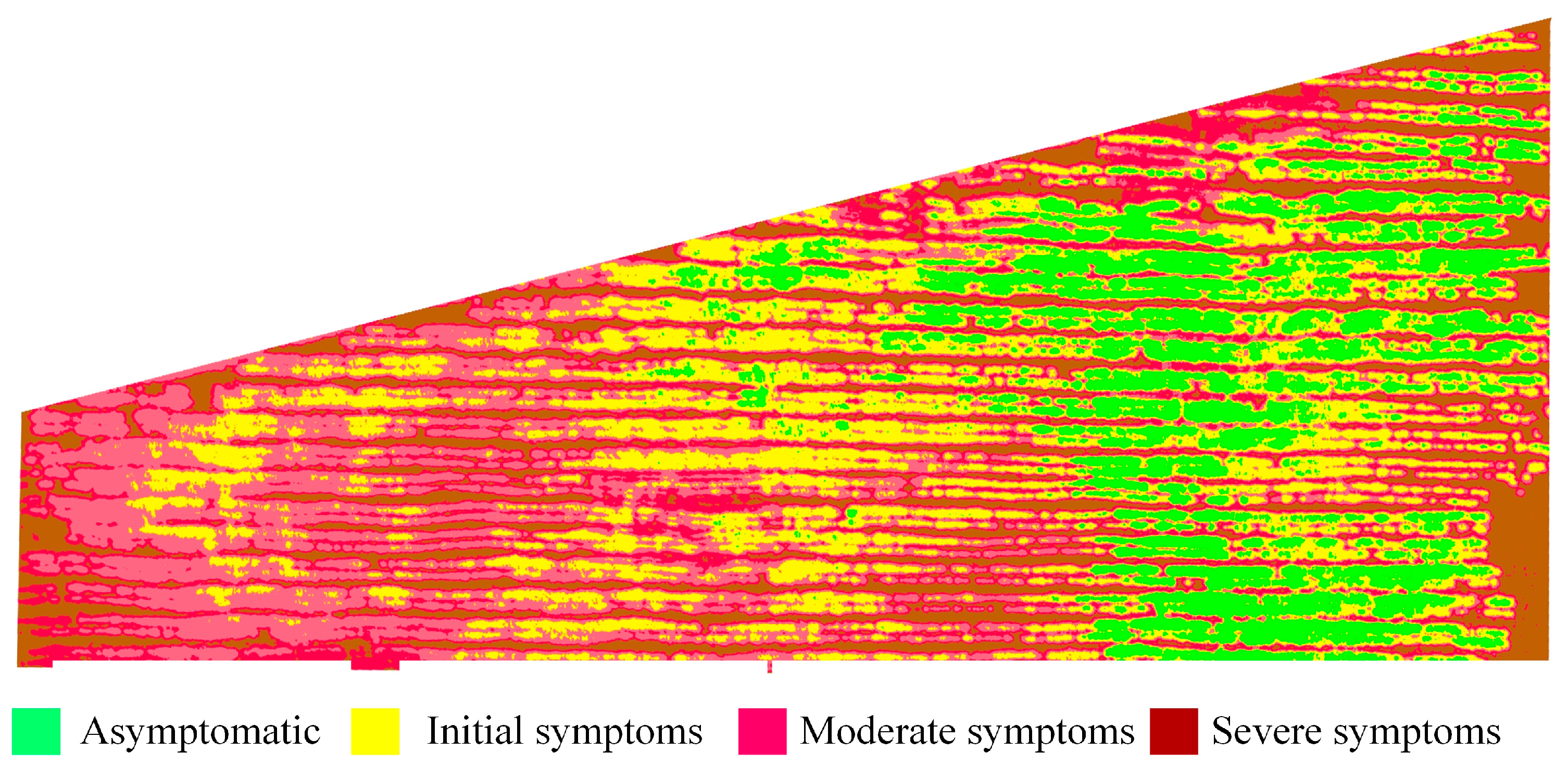

3.3. Crop Region Extraction Results

3.4. Crop Disease Detection Results

3.5. Comparison with Other Unmixing Methods

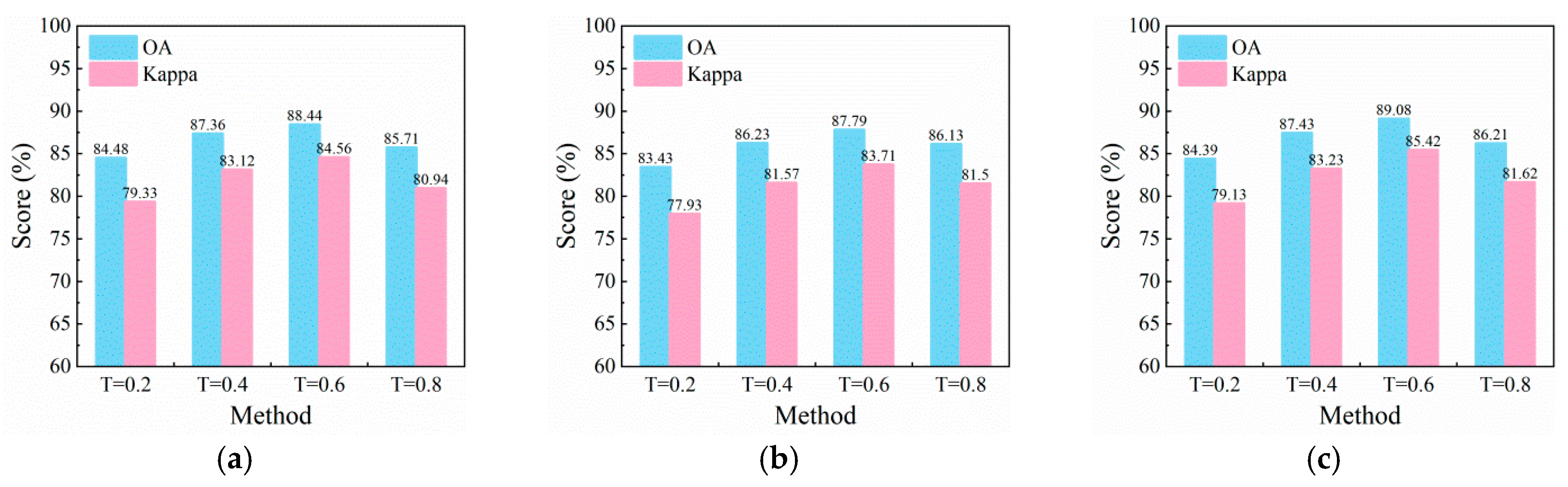

3.5.1. Comparison with Other Superpixel Methods

3.5.2. Comparison with Other Abundance Estimation Methods

3.6. Comparison Results with Traditional Region-of-Interest Extraction Methods

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Level | Severity | DI | Symptom Description |
|---|---|---|---|
| A | Asymptomatic | 0 | No visible spots |
| I | Initial symptoms | 0–0.10 | Few spots on some leaves |
| M | Moderate symptoms | 0.11–0.25 | More infected leaves and increased spot size |
| S | Severe symptoms | 0.26–0.50 | Large number of leaves infected with larger spots |

| Method | XGBoost OA (%) | XGBoost Kappa (%) | MLP OA (%) | MLP Kappa (%) | GA-SVM OA (%) | GA-SVM Kappa (%) |
|---|---|---|---|---|---|---|
| QT | 84.00 | 78.61 | 83.43 | 77.93 | 84.48 | 79.33 |
| ERS | 86.23 | 81.57 | 86.13 | 81.50 | 88.44 | 84.56 |
| SLIC | 88.44 | 84.56 | 87.79 | 83.71 | 89.08 | 85.42 |

| Method | XGBoost OA (%) | XGBoost Kappa (%) | MLP OA (%) | MLP Kappa (%) | GA-SVM OA (%) | GA-SVM Kappa (%) | t (s) |
|---|---|---|---|---|---|---|---|
| SPU | 85.71 | 80.94 | 85.14 | 80.16 | 85.71 | 80.93 | 0.0013 |
| MESMA | 89.66 | 86.19 | 88.57 | 84.76 | 90.17 | 86.88 | 0.0053 |
| FCLS | 87.36 | 83.12 | 86.86 | 82.47 | 88.37 | 84.46 | 0.0012 |
| MD-FCLS | 86.21 | 81.59 | 85.71 | 80.94 | 87.36 | 83.12 | 0.00064 |
| Mean-FCLS | 85.14 | 80.23 | 85.71 | 80.94 | 86.21 | 81.59 | 0.00072 |
| D-SPU | 86.21 | 81.62 | 85.47 | 80.60 | 86.78 | 82.35 | 0.00096 |
| D-MESMA | 89.71 | 86.26 | 88.57 | 84.76 | 89.08 | 85.44 | 0.0039 |
| D-FCLS | 88.44 | 84.56 | 87.79 | 83.71 | 89.08 | 85.42 | 0.00081 |

| Method | XGBoost OA (%) | XGBoost Kappa (%) | MLP OA (%) | MLP Kappa (%) | GA-SVM OA (%) | GA-SVM Kappa (%) |
|---|---|---|---|---|---|---|
| Elliptical | 78.29 | 70.98 | 77.65 | 70.15 | 77.71 | 70.26 |
| Rectangle | 82.29 | 76.32 | 82.29 | 76.37 | 82.76 | 76.93 |
| Polygon | 85.14 | 80.15 | 85.47 | 80.60 | 85.63 | 80.81 |
| This study | 88.44 | 84.56 | 87.79 | 83.71 | 89.08 | 85.42 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guan, Q.; Qiao, S.; Feng, S.; Du, W. Investigation of Peanut Leaf Spot Detection Using Superpixel Unmixing Technology for Hyperspectral UAV Images. Agriculture 2025, 15, 597. https://doi.org/10.3390/agriculture15060597
