1. Introduction

China is the world’s largest producer of citrus fruits. As the citrus industry continues to develop, the demand for harvesting has increased significantly. Currently, harvesting citrus is primarily done by hand, which requires a substantial workforce. However, an aging population has led to labor shortages in orchards, making it increasingly difficult for manual labor to meet the demands of large-scale production [

1,

2,

3,

4]. Citrus picking robots have become popular for harvesting, and knowing the exact location of the citrus picking points is essential for efficient picking [

5,

6,

7,

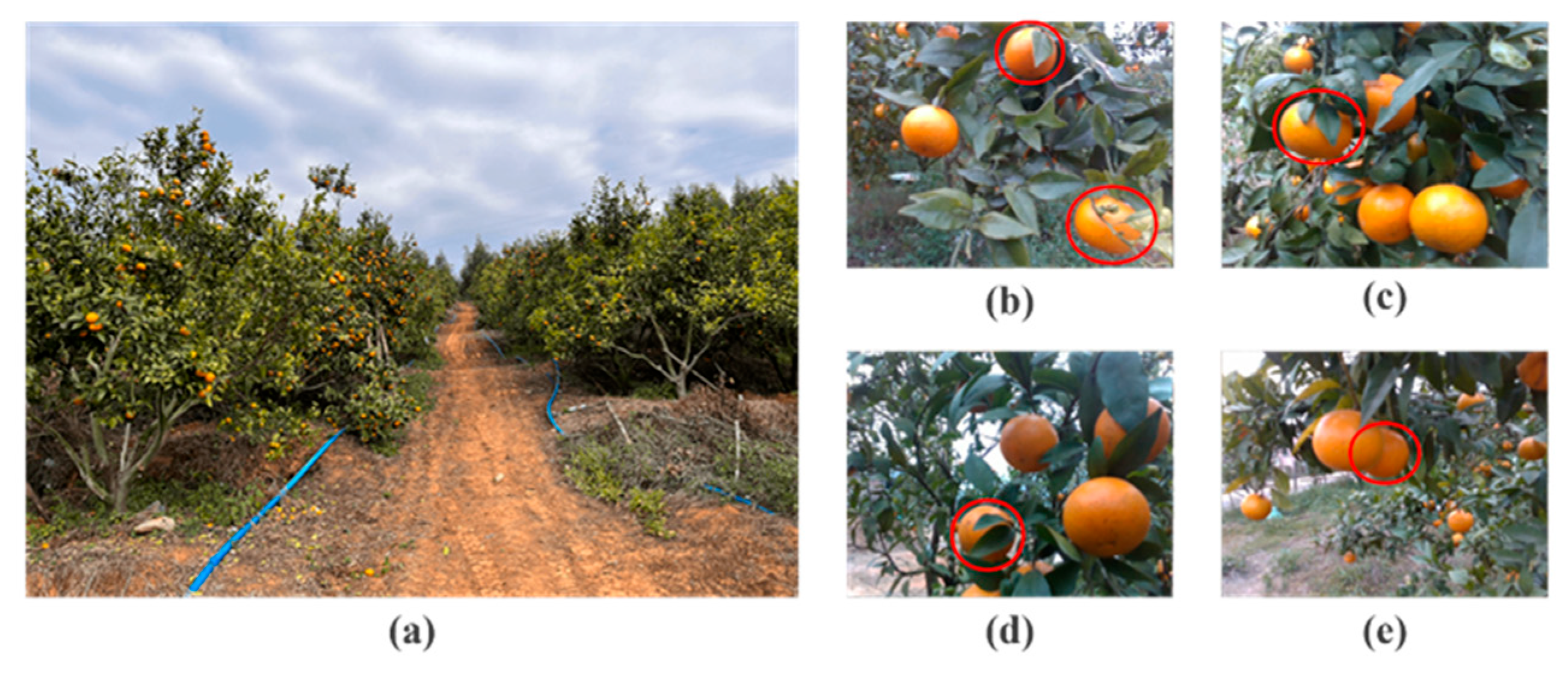

8]. However, the current method of picking citrus fruits is easily affected by factors such as branches and leaves occluding the fruit and overlapping fruits. This makes it difficult for robots to accurately detect the location of the picking point of the citrus fruit stems under complex occlusion conditions. This results in poor positioning accuracy of the robots, affecting picking efficiency. Therefore, the study of efficient and stable citrus picking localization algorithms is of great significance to improve the efficiency of autonomous robot picking in complex environments.

Deep learning, as the current mainstream method for researching intelligent agricultural equipment, is widely used in fruit detection and localization [9,10]. Existing studies have modified network architectures to improve detection accuracy in various agricultural application scenarios and have achieved good results. Sun et al. proposed an improved YOLO-P method based on the YOLOv5 network architecture, which optimizes the detection of small targets so that the model maintains excellent detection performance under different complex background and lighting conditions, with an average accuracy of 97.6% [11]. Pan et al. developed a fruit recognition method for robotic systems that effectively detects individual pears by combining a 3D stereo camera with Mask R-CNN, achieving an mAP of 95.22% [12]. Fruit yield estimation is an important part of fruit harvesting; Zhang et al. proposed two algorithms, OrangeYolo and OrangeSort, for citrus detection and tracking, respectively. The AP of citrus detection reaches 93.8%, while the average absolute error of motion displacement is 0.081 [13,14]. Yan et al. proposed a lightweight apple detection method based on an improved YOLOv5s that introduces the BottleneckCSP-2 module and combines it with the SE attention mechanism to extract apple feature information. This effectively improves the robot’s recognition ability in occluded situations, and the recall and mAP of the algorithm reach 91.48% and 86.75%, respectively [15]. In addition, researchers have fused deep learning with stereo vision to obtain the 3D position of the fruit. Shu et al. proposed a visual system based on stereoscopic vision for lychee-picking robots. They used background separation technology to obtain external feature information of lychees, combining automatic exposure and white balance to process left-eye images in real time and matching them against right-eye images to extract three-dimensional spatial coordinates. This method achieved a picking success rate of 91.1%, effectively addressing the issue of lychees being obstructed [16]. Gené-Mola et al. proposed an apple detection method combining Mask R-CNN and SFM. The fruit is detected and segmented in 2D space by Mask R-CNN, the 3D point cloud of the apples is reconstructed with SFM, and the F1-score of the algorithm is improved by 0.065 [17]. Lin et al. proposed a citrus detection and localization method based on RGB-D image analysis, which employs a depth filter and Bayesian classifiers to eliminate non-correlated points, introduces a density clustering method for citrus point cloud clustering, and uses an SVM classifier to further estimate the position of citrus. The F1 value of the algorithm reaches 0.9197, which meets the robustness requirements of citrus picking robots [18]. Zhang et al. proposed a real-time apple recognition and localization method based on structured light and deep learning. The YOLOv5 model was employed to detect apples in real time and obtain two-dimensional pixel coordinates. These were then combined with an active laser vision system to obtain the three-dimensional world coordinates of the apples. This method effectively improved the quality of three-dimensional reconstruction of apples, with an average recognition accuracy of 92.86% and a spatial localization accuracy of 4 mm [19].

The above studies demonstrate that improved deep learning network models can yield relatively precise fruit information. In addition, a fusion approach combining deep learning and stereo vision enables accurate acquisition of the fruit’s three-dimensional position, providing the picking robot with the fruit’s 3D coordinates. However, achieving precise fruit picking still requires further estimation of the fruit’s picking point [20]. To address this challenge, numerous scholars have carried out research aimed at extracting fruit picking point information based on the three-dimensional coordinates. Xiao et al. proposed a monocular pose estimation method based on semantic segmentation and rotating object detection. They used R-CNN to identify candidate boxes for the fruit target, reconstructed a 3D point cloud for the ROI with the highest confidence, and constructed a 3D bounding box to estimate the pose of the citrus fruits. The algorithm’s identification and localization success rate reached 93.6%, and the fruit picking success rate reached 85.1% [21]. Other researchers estimate the location of fruit picking points from fruit depth information. Hou et al. proposed a method for detecting and locating citrus picking points based on stereo vision. They introduced the CBAM attention mechanism and used the Soft-NMS strategy in the region proposal network, as well as a matching method based on normalized cross-correlation, to obtain picking points. Experimental results showed that the average absolute error for citrus picking point localization was 8.63 mm, and the average relative error was 2.76% [22]. However, the accuracy of these localization methods that fuse depth information still suffers under dense occlusion or dynamic interference. Tang et al. proposed a detection and localization algorithm based on the YOLOv4-tiny model and stereo vision. By optimizing the YOLOv4-tiny model, relevant feature information of oil tea fruits was obtained. This feature information was combined with the bounding boxes generated by the YOLO-Oleifera model to extract ROI information. Triangulation principles were then employed to determine the picking points. The algorithm achieved an AP of 92.07% and an average detection time of 31 ms [23].

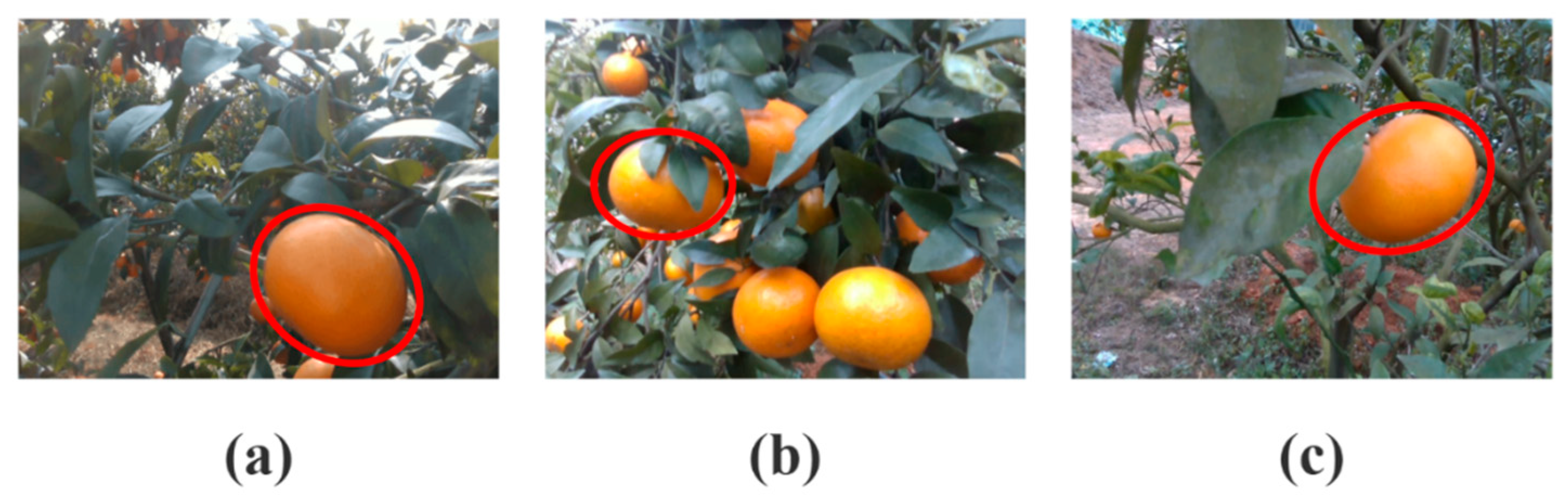

The aforementioned studies indicate that, with a clear background and well-defined fruit observation positions, the fruit picking point can be obtained with relative precision. However, in actual citrus orchards, foliage often obscures fruits and fruits overlap. In addition, the small size of the fruit pedicels makes it difficult to determine the position and orientation of the pedicel where the picking point is located, which hinders the picking robot’s ability to accurately identify the fruit’s picking point. To address this challenge, researchers have carried out relevant studies. Li et al. proposed the improved YOLO11-GS model based on YOLO11n-OBB. For grape stem recognition, oriented bounding boxes and geometric methods are combined to enable rapid and precise localization of picking points and postures, while the ByteTrack and BoTSORT tracking algorithms are used to track grape bunches and stems [24]. Song et al. proposed a novel method for estimating corn tassel posture based on computer vision and oriented object detection. This method first locates the horizontal plane through the tassel and leaf veins, then matches the tassel orientation with directional bounding boxes. Directional bounding boxes are generated by integrating the detection results from the Directional R-CNN model. Subsequently, pixels from these directional bounding boxes are input into a secondary observation module to extract corn tassel information, ultimately enabling precise determination of its orientation [25]. Zhou et al. proposed an integrated framework based on multi-objective oriented detection specifically designed for the detection and analysis of stalk crops. The framework uses the YOLO-OBB model, which is built upon the YOLOv8 architecture, to extract stalk-shaped objects, and uses the multi-label detection model YOLO-MLD to perform quality grading and pose estimation for individual objects, achieving an average accuracy of 93.4% [26]. Gao et al. proposed an improved detector, YOLOv11OC, which integrates an angle-aware attention module, a cross-layer fusion network, and a GSConv Inception network. This approach achieves precise pose-oriented detection while reducing model complexity. When combined with depth maps, the system achieves a pose estimation accuracy of 92.5% [27]. Gao et al. proposed a Stereo Corn Pose Detection algorithm that uses 3D object detection technology to obtain the pose and size of corn from stereo images. This algorithm addresses the lack of pitch angle detection in traditional 3D object detection and achieves an accuracy of 91% in corn size and pose detection [28].

The above research methods show that the Oriented Bounding Box (OBB) algorithm has significant advantages in specific detection scenarios. When facing targets tilted at any angle, it can adjust the angle of the bounding box to follow the rotation of the target and fit the target shape closely. For large numbers of densely distributed targets that are prone to mutual obstruction or overlapping bounding boxes, the algorithm can differentiate between targets based on their different angles and thereby reduce occlusion interference. In addition, the OBB algorithm can directly output the positional information of the detected targets, providing key data support for subsequent precision operations.

The aforementioned research methods demonstrate that deep learning and stereo vision technologies can effectively detect fruit and provide precise positioning information. However, in an actual orchard, fruit stems are slender, similar in color to the branches and leaves, and easily obscured by leaves and fruits. This makes it difficult to extract the fruit picking point accurately with traditional fruit localization methods and ultimately results in low picking accuracy. This paper therefore proposes a citrus fruit stem pose estimation method based on the YOLO-OBB algorithm to address these challenges. By precisely estimating the pose of the fruit stem and the picking point, this method provides a technical solution for the autonomous picking of citrus fruits in complex environments. The specific details are as follows:

(1) The YOLOv5s algorithm is used to detect citrus fruits in real time and extract their precise Region of Interest (ROI) information. The ROI information is then mapped to the depth image space to construct a three-dimensional point cloud of citrus fruits, providing a data foundation for subsequent estimation of the posture of citrus fruit stems.

(2) Combining the camera imaging and citrus point cloud features, the OBB algorithm is used to construct an oriented bounding box for the point cloud. This overcomes the limitation of the traditional Axis-Aligned Bounding Box (AABB) algorithm in estimating the attitude of elongated and inclined fruit stems and thereby enables estimation of the fruit stem attitude. The precise location of the fruit stem picking point is then extracted from the citrus point cloud features by PCA.

(3) Based on the coordinate relationship between the camera and the robotic arm, the spatial coordinate offsets between the camera, the end-effector, and the fruit stem pose are calculated. Compensating for the angular offsets enables precise real-time picking operations to be achieved with the end-effector.

3. Methods

3.1. Algorithm Framework

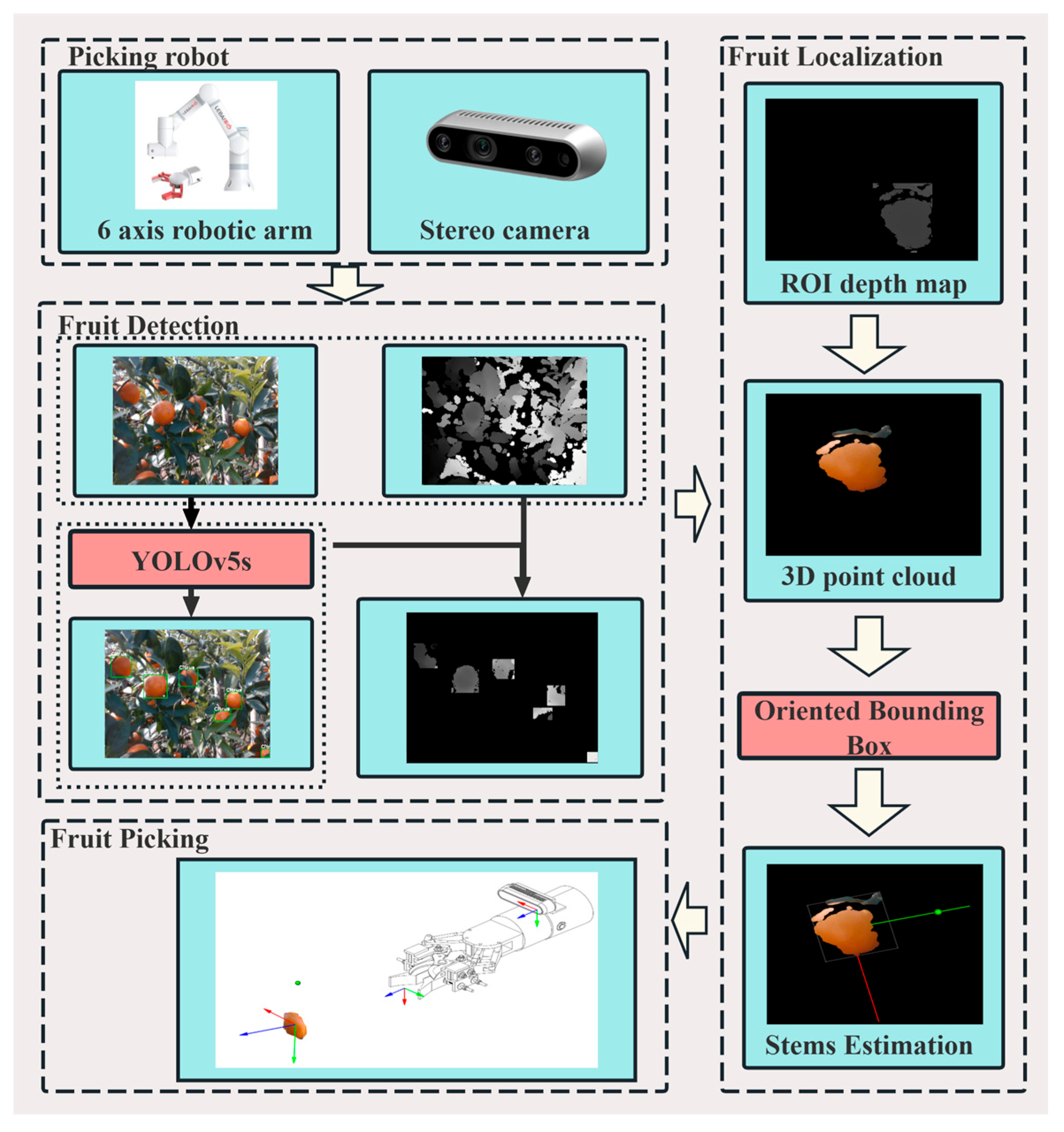

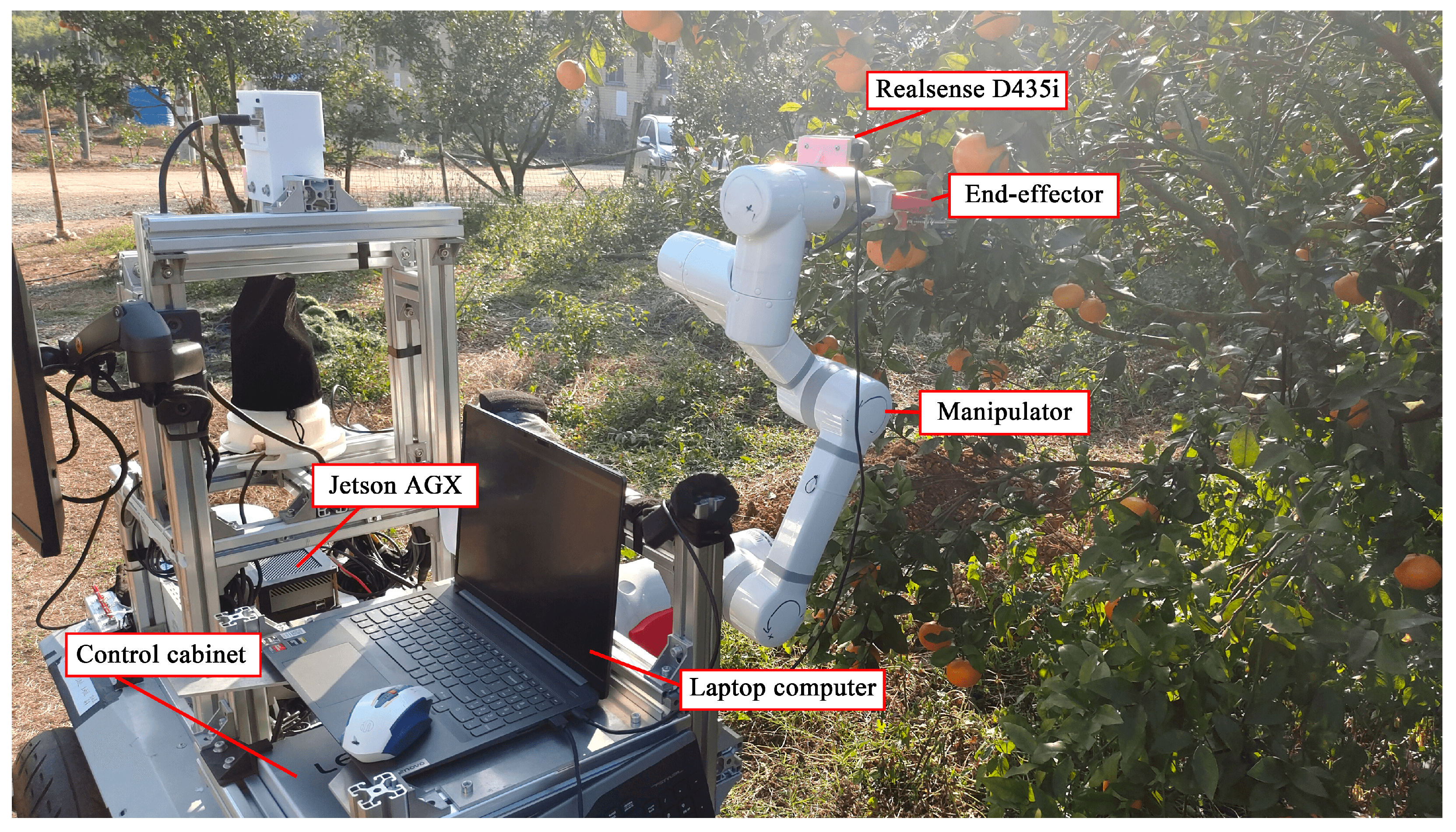

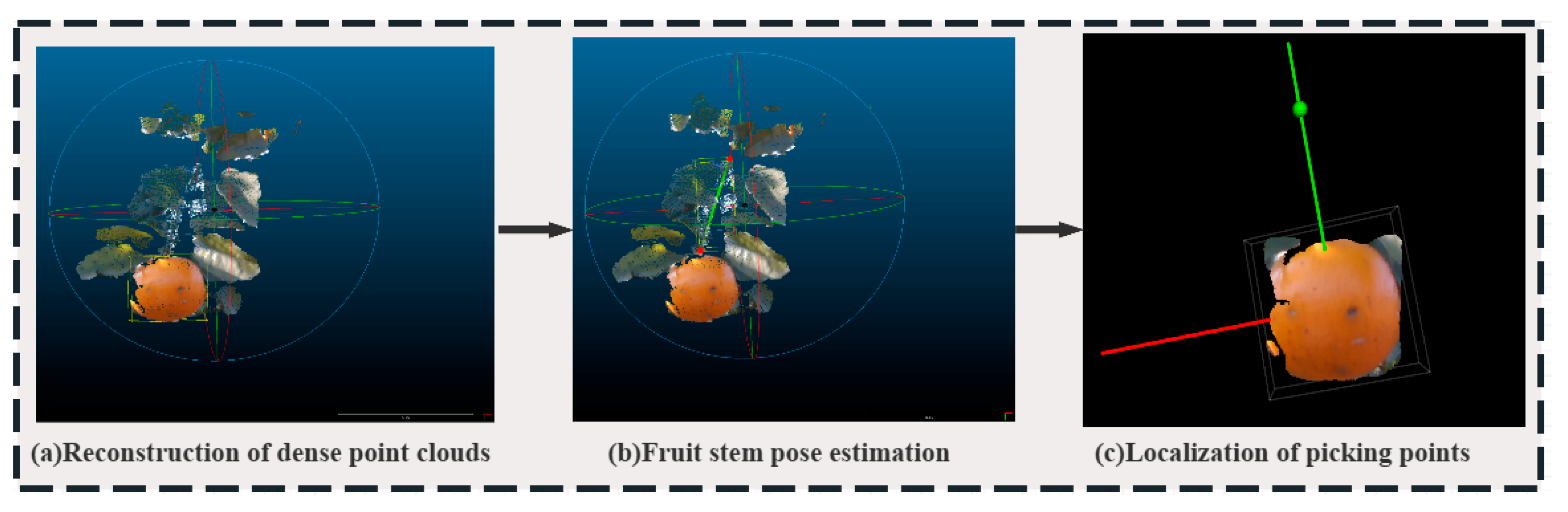

In the unstructured orchard operating environment, citrus fruit stems are susceptible to branch and leaf shading, fruit overlap, and other factors, resulting in poor positioning accuracy for robotic picking and reduced efficiency of automated citrus picking. To address these challenges, this paper proposes a citrus fruit stem pose estimation method based on the YOLO-OBB fusion algorithm. The method consists of three parts, fruit detection, fruit localization, and fruit picking, which together realize the detection–localization–picking process of the citrus picking robot shown in Figure 2. In the fruit detection part, the YOLOv5s detection algorithm is combined with a depth camera to extract the depth information of the target citrus. In the fruit localization part, the 3D point cloud of the target citrus is reconstructed, and the OBB algorithm is combined with the PCA algorithm to estimate the attitude of the stem from the citrus point cloud, so as to obtain the precise position of the target citrus picking point. Finally, in the fruit picking part, the end-effector is controlled to complete the picking operation based on the hand–eye coordination relationship and the position of the picking point.

Initially, the RealSense D435i depth camera aligns the RGB and depth video streams. The YOLOv5s algorithm is employed for real-time citrus detection, acquiring ROI information of the fruits. Subsequently, the ROI data are mapped onto the depth map to extract the depth value at the center point of the bounding box. Using this depth information, a 3D point cloud of the citrus fruit is reconstructed. The OBB algorithm is then used to construct the oriented minimum bounding box of the citrus point cloud, and the PCA method is used to extract point cloud features, estimate the attitude of the fruit stem, and obtain the position of the picking point. Finally, the attitude of the citrus fruit stem is converted into the attitude of the end-effector through the hand–eye transformation, and the end-effector is controlled to carry out the picking operation.

By estimating the pose of the citrus fruit stem and controlling the picking direction of the end-effector accordingly, the method provides the citrus picking robot with precise picking point locations, thereby enabling efficient picking operations. The contribution of this paper lies in the application: it integrates the existing YOLO detection algorithm, the OBB algorithm, and the PCA method in a citrus picking robot. Because traditional citrus picking algorithms locate picking points with insufficient accuracy under occlusion, this paper adds the OBB algorithm and the PCA method to the traditional pipeline to estimate the position of citrus fruit stems under occlusion. This improves the accuracy of citrus stem localization under occlusion conditions, which in turn improves the success rate of citrus picking. Furthermore, the algorithm is not only applicable to citrus picking scenarios but can also be further developed and applied to other fruits whose stems are affected by branch and leaf obstruction, fruit overlap, and similar factors, which indicates good generality and application value.

3.2. Fruit Detection

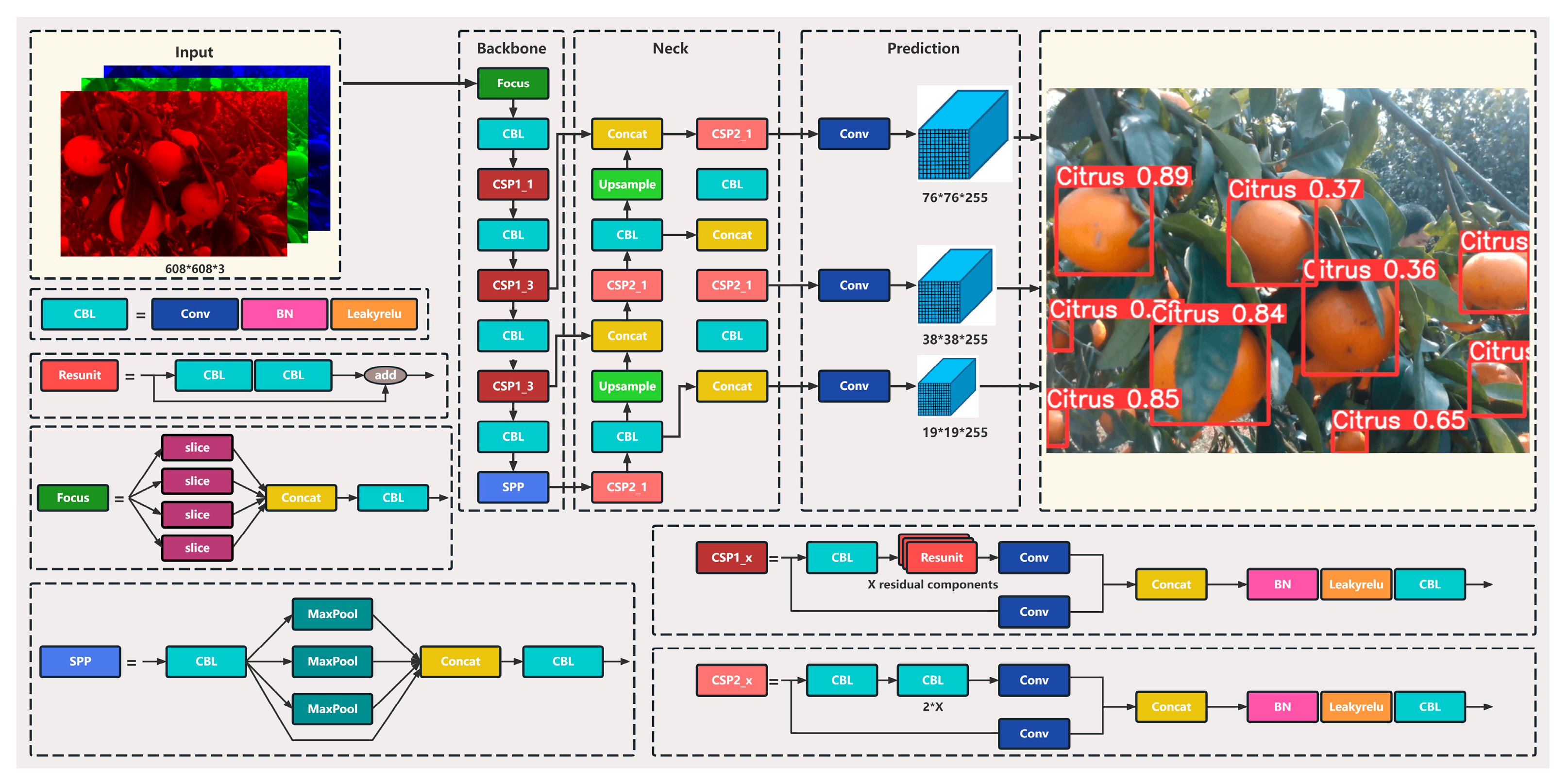

YOLO series network models are widely recognized for their high detection rate and high-precision target recognition capability. The YOLO series has gone through many iterations, and the versions differ in detection capability and ease of deployment. Among them, the YOLOv5 series is widely used for crop detection because it balances detection accuracy and efficiency and is easy to deploy. For large-target detection, higher versions such as YOLOv8 and YOLOv11 improve training efficiency over the YOLOv5 series, but their gain in detection accuracy is smaller [31]. In addition, according to the official YOLOv5 documentation, YOLOv5s is more effective than YOLOv5m and YOLOv5l in detecting large targets in complex scenes. Considering deployment convenience and device applicability, as well as the planned application of the method to embedded devices such as the Jetson AGX (NVIDIA Corporation, Santa Clara, CA, USA), the YOLOv5s algorithm is more suitable for lightweight deployment [32]. Therefore, the YOLOv5s network is selected as the algorithmic framework of this paper, and the target citrus ROI is extracted through the YOLOv5s network [33]. Its detailed network architecture is shown in Figure 3.

The YOLOv5s network model consists of four parts: Input, Backbone, Neck, and Prediction. Firstly, the Input module uniformly scales the size of the input image to 608 × 608 pixels; secondly, in the Backbone module, the Focus structure utilizes a convolution kernel to perform feature extraction on the input image and completes the initial feature encoding; then, the Neck module utilizes the feature pyramid and path aggregation network to fuse feature information at different levels of the image and enhances the model’s multi-scale target detection performance by constructing a multi-scale feature pyramid; finally, in the Prediction module, three different sizes of feature maps are used to perform multi-scale target prediction, generating the target’s category and bounding box location information, which makes the network model able to adapt to and accurately handle citrus targets of different sizes.
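The input scaling step can be illustrated with a short sketch. It assumes the aspect-ratio-preserving "letterbox" resize commonly used with YOLOv5 (scale the image, then pad it to a square); the text above only states the 608 × 608 target size, so the padding scheme and the function name `letterbox_params` are assumptions made for illustration.

```python
def letterbox_params(width, height, target=608):
    """Return scale, resized size, and padding for a square letterbox resize.

    Assumption: the image is scaled uniformly so that its longer side equals
    `target`, and the shorter side is padded symmetrically.
    """
    scale = min(target / width, target / height)
    new_w, new_h = round(width * scale), round(height * scale)
    pad_w, pad_h = target - new_w, target - new_h
    left, top = pad_w // 2, pad_h // 2
    # Padding is returned as (left, top, right, bottom) in pixels.
    return scale, (new_w, new_h), (left, top, pad_w - left, pad_h - top)


if __name__ == "__main__":
    # A 1280 x 720 RGB frame, a common depth-camera color resolution.
    print(letterbox_params(1280, 720))
```

The same scale and padding values are needed later to map a predicted bounding box back to the pixel coordinates of the original frame before it is matched against the depth image.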

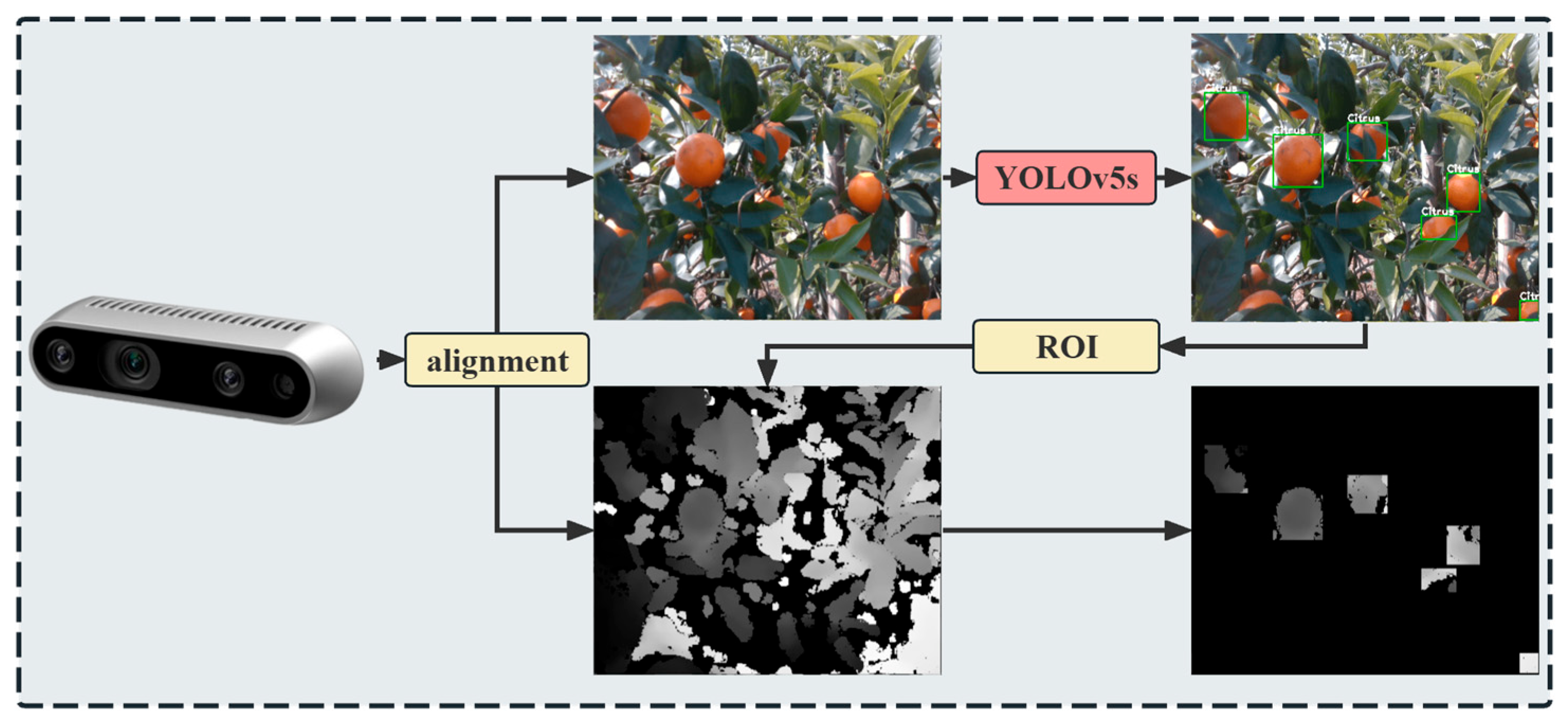

Upon activating the RealSense camera, RGB and depth video streams are spatially aligned. The YOLOv5s network model then performs real-time detection on the RGB stream to extract citrus ROIs, thereby establishing the image data foundation for subsequent stem pose estimation.

3.3. Fruit Localization

The YOLOv5s network model can provide accurate location information of citrus targets in the image, but this information cannot be applied directly to the picking robot. The 3D spatial location of the citrus targets must be further extracted from the citrus ROI information to support the precise operation of the robot. Fruit depth information matching is crucial for obtaining the 3D spatial location of citrus fruits. As a common visual localization method, RGB-D matching employs image detection algorithms to extract ROI information from RGB images. It then matches the citrus ROI with the corresponding depth information in the depth image using the camera’s internal parameters, ultimately mapping the citrus depth information into 3D space. This paper employs the RGB-D target matching method to process citrus ROI information and thereby acquire 3D point cloud data of citrus fruits. Firstly, the center coordinates are extracted from the citrus ROI produced by the YOLOv5s network model. Secondly, through the coordinate transformation between pixel space and 3D space, the ROI is mapped onto the depth map as a mask, and the depth at the center of the corresponding detection box is obtained. Lastly, the citrus 3D point cloud is generated in real time from the depth information of the citrus fruits, which provides the robot with accurate three-dimensional position information. Because only the ROI is processed, this method avoids matching redundant features in the background area, which greatly improves efficiency. The specific process is shown in Figure 4.
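The mapping from a detection box to a 3D point cloud can be sketched with the pinhole camera model. The following is a minimal illustration under stated assumptions rather than the implementation used in this paper: the function name `roi_to_point_cloud`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the tolerance `depth_band` are introduced here for illustration, and the depth image is assumed to be already aligned to the RGB image and expressed in metres.

```python
import numpy as np


def roi_to_point_cloud(depth, box, fx, fy, cx, cy, depth_band=0.08):
    """Back-project depth pixels inside a detection box into camera-frame 3D points.

    depth: (H, W) array in metres, aligned to the RGB frame (0 means invalid).
    box: (x_min, y_min, x_max, y_max) in pixels, e.g. from the detector.
    depth_band: hypothetical tolerance (metres) around the centre depth,
        used to mask out background pixels inside the box.
    """
    x0, y0, x1, y1 = box
    u_c, v_c = (x0 + x1) // 2, (y0 + y1) // 2
    z_c = depth[v_c, u_c]  # depth at the centre of the bounding box
    if z_c <= 0:
        return np.empty((0, 3))  # no valid depth at the box centre

    # Pixel coordinate grids for every pixel inside the box.
    vs, us = np.mgrid[y0:y1, x0:x1]
    z = depth[y0:y1, x0:x1]

    # Keep valid pixels whose depth is close to the centre depth.
    mask = (z > 0) & (np.abs(z - z_c) < depth_band)
    z, us, vs = z[mask], us[mask], vs[mask]

    # Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    x = (us - cx) * z / fx
    y = (vs - cy) * z / fy
    return np.column_stack((x, y, z))


if __name__ == "__main__":
    depth = np.full((100, 100), 2.0)   # synthetic background at 2 m
    depth[40:60, 40:60] = 1.0          # synthetic fruit surface at 1 m
    pts = roi_to_point_cloud(depth, (30, 30, 70, 70), 100.0, 100.0, 50.0, 50.0)
    print(pts.shape)
```

Restricting the back-projection to the masked ROI is what keeps background pixels out of the point cloud; a real depth stream would also need handling for invalid readings on thin structures such as stems.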

3.4. Fruit Picking

The fruit localization algorithm provides the depth information from which the citrus point cloud is computed. However, the point cloud cannot be used directly as a picking point; the positioning information of the fruit stem must be obtained by fitting the pose of the citrus point cloud. In this paper, the OBB algorithm is used to calculate the minimum oriented bounding box of the citrus point cloud, the PCA algorithm is used to estimate the optimal direction of the box, and the position of the fruit stem is estimated from the result. Finally, the fruit stem position is converted into attitude parameters of the robotic arm for picking the citrus fruit.

3.4.1. Fruit Attitude Estimation

In the unstructured orchard environment, interference from factors such as changes in light intensity often causes citrus fruits to exhibit texture patches, discontinuities, and occlusion in the image, which affects the picking accuracy of the robot. In addition, because the RealSense camera uses structured light imaging to obtain depth information and the citrus fruit stems are thin, depth measurements of such fine targets are prone to errors or wrong depth values, which reduces the spatial localization accuracy of citrus fruit stems.

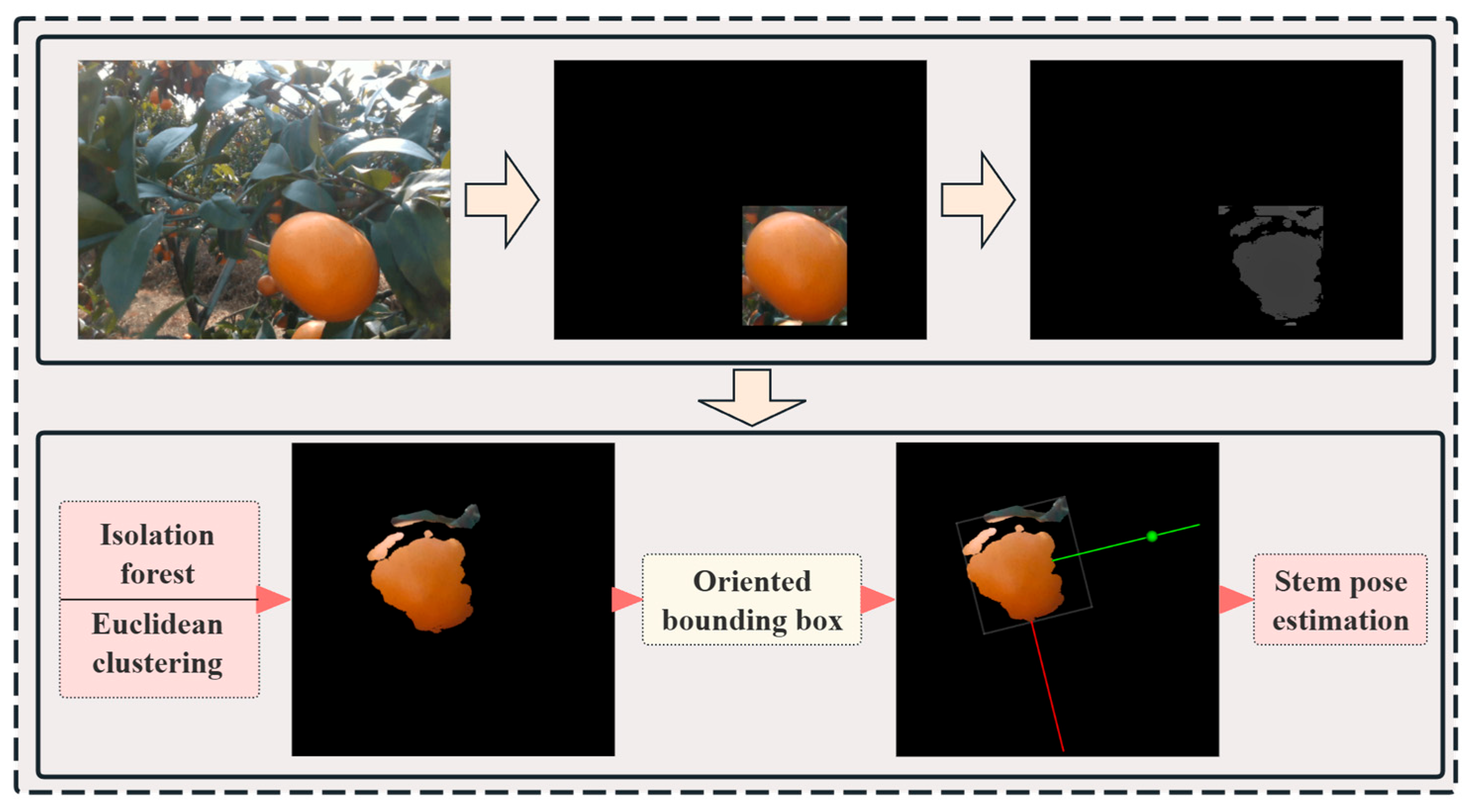

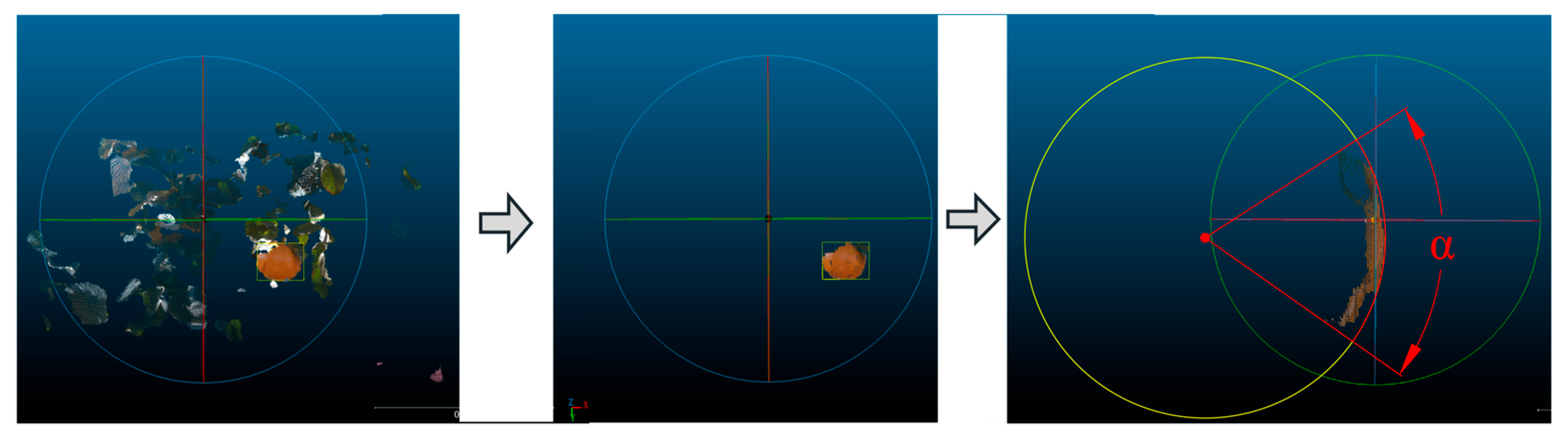

In order to obtain more accurate information on the posture of citrus fruit stems, this paper combines the imaging characteristics of the camera with the results of approximating the ellipsoidal shape of citrus point clouds. The OBB algorithm constructs a three-dimensional minimum bounding box for the citrus fruit, and PCA analyzes and extracts features from the point cloud. Further calculations of the posture information of citrus fruit stems are performed to obtain precise fruit stem picking point location information. The specific process is shown in Figure 5.

OBB is a minimum oriented bounding box estimation algorithm with linear complexity. It dynamically determines the size and direction of the bounding box according to the geometry of the target, and the bounding box does not need to be parallel to the coordinate axes. When the target is displaced or rotated, the OBB algorithm can recalculate the bounding box by transforming the coordinates of the base axes, which gives it good dynamic transformation characteristics. PCA, as a classical statistical method, analyzes the distribution of the point cloud data in each direction by calculating the covariance matrix of the point cloud data, and thereby determines the optimal direction of the bounding box. The covariance measures the linear correlation between two variables, and the covariance matrix describes the correlation characteristics of the whole point set along the different axes [34]. The covariance is calculated as shown in Equation (1).
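For reference, the standard textbook definition of covariance, written with the symbols used in the following sentence, is given below. It is assumed here to correspond to Equation (1); E denotes the expectation operator.

```latex
\operatorname{cov}(X_i, X_j) = E\left[ (X_i - \mu_i)(X_j - \mu_j) \right]
```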

Here, μi and μj are the expected values of Xi and Xj, respectively.

Once the covariance between variables has been determined, the next step is to calculate the covariance matrix of the point cloud data. The eigenvalues and eigenvectors of the point cloud data are obtained through the eigendecomposition of the covariance matrix, as shown in Equation (2). In this equation, the eigenvalues represent the spread of the point cloud along the corresponding directions, while the eigenvectors define the main directions of the point cloud data and are orthogonal to each other.
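As a worked form of this step, the covariance matrix of a 3D point cloud and its eigendecomposition can be written in the standard way below. The symbols are assumptions introduced for illustration: p_k are the n points, \bar{p} is their centroid, C is the 3 × 3 covariance matrix, and λ_i and v_i are its eigenvalues and eigenvectors. The notation of Equation (2) may differ.

```latex
C = \frac{1}{n} \sum_{k=1}^{n} (p_k - \bar{p})(p_k - \bar{p})^{\mathsf{T}},
\qquad
C\, v_i = \lambda_i v_i, \quad i = 1, 2, 3,
\qquad
\lambda_1 \ge \lambda_2 \ge \lambda_3 \ge 0
```

Because C is symmetric, eigenvectors belonging to distinct eigenvalues are mutually orthogonal, which is why they can serve directly as the axes of the oriented bounding box.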

In this paper, the PCA algorithm is employed to perform the eigendecomposition of the covariance matrix. The eigenvectors and corresponding eigenvalues along the main axis directions of the point cloud are then extracted. Ultimately, the eigenvector corresponding to the largest eigenvalue is selected as the optimal direction of the bounding box to construct the real pose of the citrus fruit stem in three-dimensional space. This provides the data basis for establishing accurate location information of the fruit stem picking point. The result is shown in Figure 6. As shown in the figure, the green eigenvector points in the Y-axis direction, the blue eigenvector points in the Z-axis direction, and the red eigenvector points in the X-axis direction. The green eigenvector is the direction of the fruit stem pose estimate, and the green point in this direction is defined as the picking point.
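The PCA and OBB steps described above can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions, not the implementation used in this paper: the function names `pca_oriented_box` and `stem_picking_point` are hypothetical, the box is the PCA-aligned box rather than a true minimum-volume box, and the rule that places the picking point where the principal axis leaves the box on the "up" side (with `up` defaulting to the negative Y axis of a camera frame) is an assumption used only to resolve the sign ambiguity of the eigenvector.

```python
import numpy as np


def pca_oriented_box(points):
    """Return centre, axes, extents, and eigenvalues of a PCA-aligned box.

    points: (N, 3) array. The columns of `axes` are unit eigenvectors of the
    covariance matrix, sorted by decreasing eigenvalue.
    """
    centroid = points.mean(axis=0)
    centered = points - centroid
    cov = np.cov(centered, rowvar=False)      # 3 x 3 covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)    # eigh: symmetric input, ascending order
    order = np.argsort(eigvals)[::-1]         # sort by decreasing eigenvalue
    eigvals, axes = eigvals[order], eigvecs[:, order]
    if np.linalg.det(axes) < 0:               # enforce a right-handed frame
        axes[:, 2] *= -1
    local = centered @ axes                   # coordinates in the box frame
    lo, hi = local.min(axis=0), local.max(axis=0)
    center = centroid + axes @ ((lo + hi) / 2)
    return center, axes, hi - lo, eigvals


def stem_picking_point(center, axes, extents, up=(0.0, -1.0, 0.0)):
    """Hypothetical picking point on the box face along the principal axis."""
    direction = axes[:, 0]
    if direction @ np.asarray(up) < 0:        # eigenvector sign is arbitrary
        direction = -direction
    return center + direction * extents[0] / 2


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    cloud = rng.normal(size=(2000, 3)) * np.array([5.0, 1.0, 0.5])
    center, axes, extents, eigvals = pca_oriented_box(cloud)
    print(axes[:, 0], extents)
    print(stem_picking_point(center, axes, extents))
```

The sign flip in `stem_picking_point` matters in practice: an eigenvector and its negation are equally valid, so some external cue is needed to decide which end of the principal axis faces the stem.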

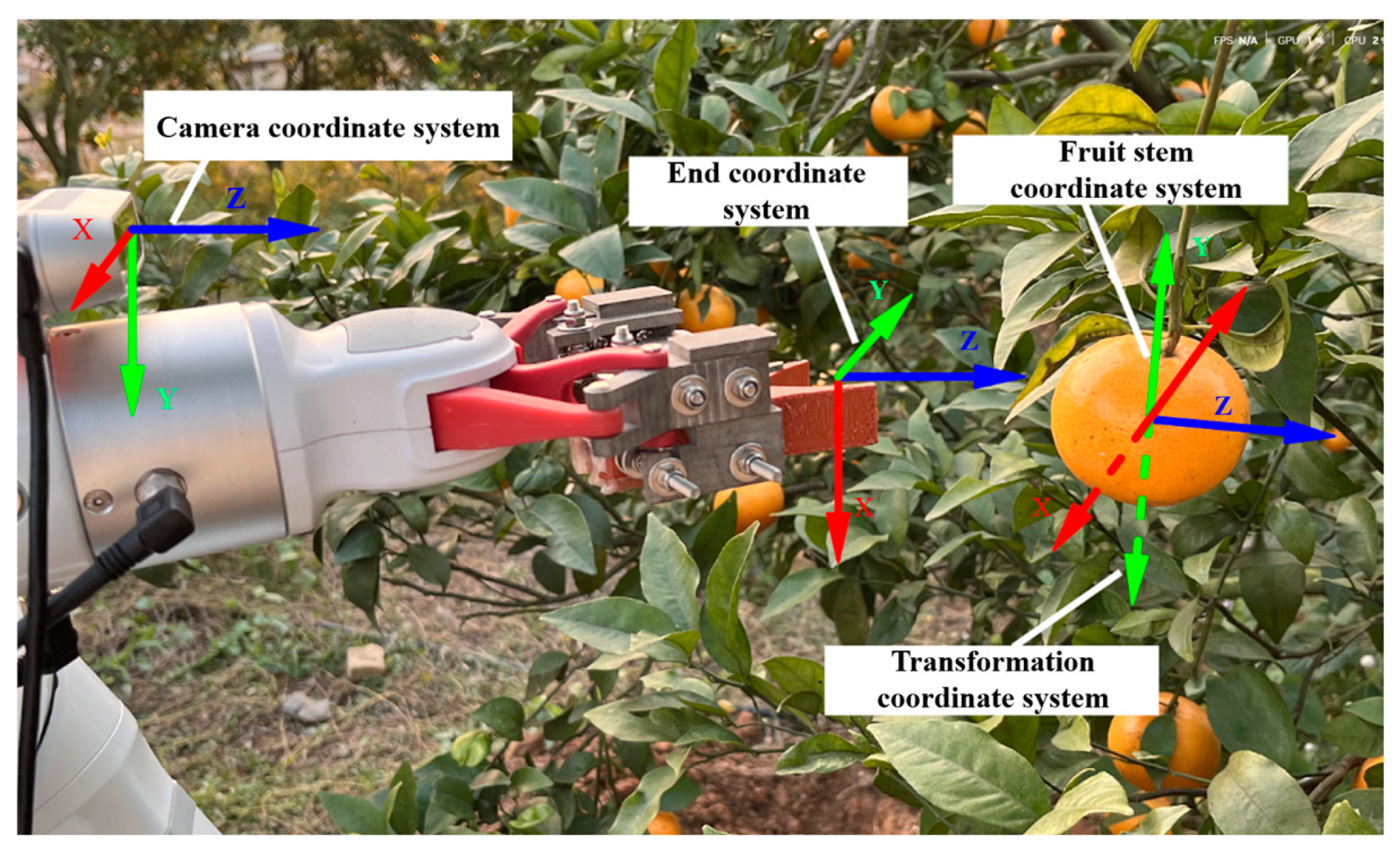

3.4.2. Estimation of Robot’s Picking Posture

Accurate coordination between the camera and the robot arm is a key prerequisite for picking robot operation. Before the citrus picking operation, it is necessary to calibrate the camera and robotic arm to establish the spatial mapping relationship between the citrus fruit stem pose and the end of the robotic arm. In this paper, we take the real citrus fruit stem coordinate system {S} as an example and establish its transformation relationship with the camera coordinate system {C} and the end-effector coordinate system {G}, as shown in Figure 7.

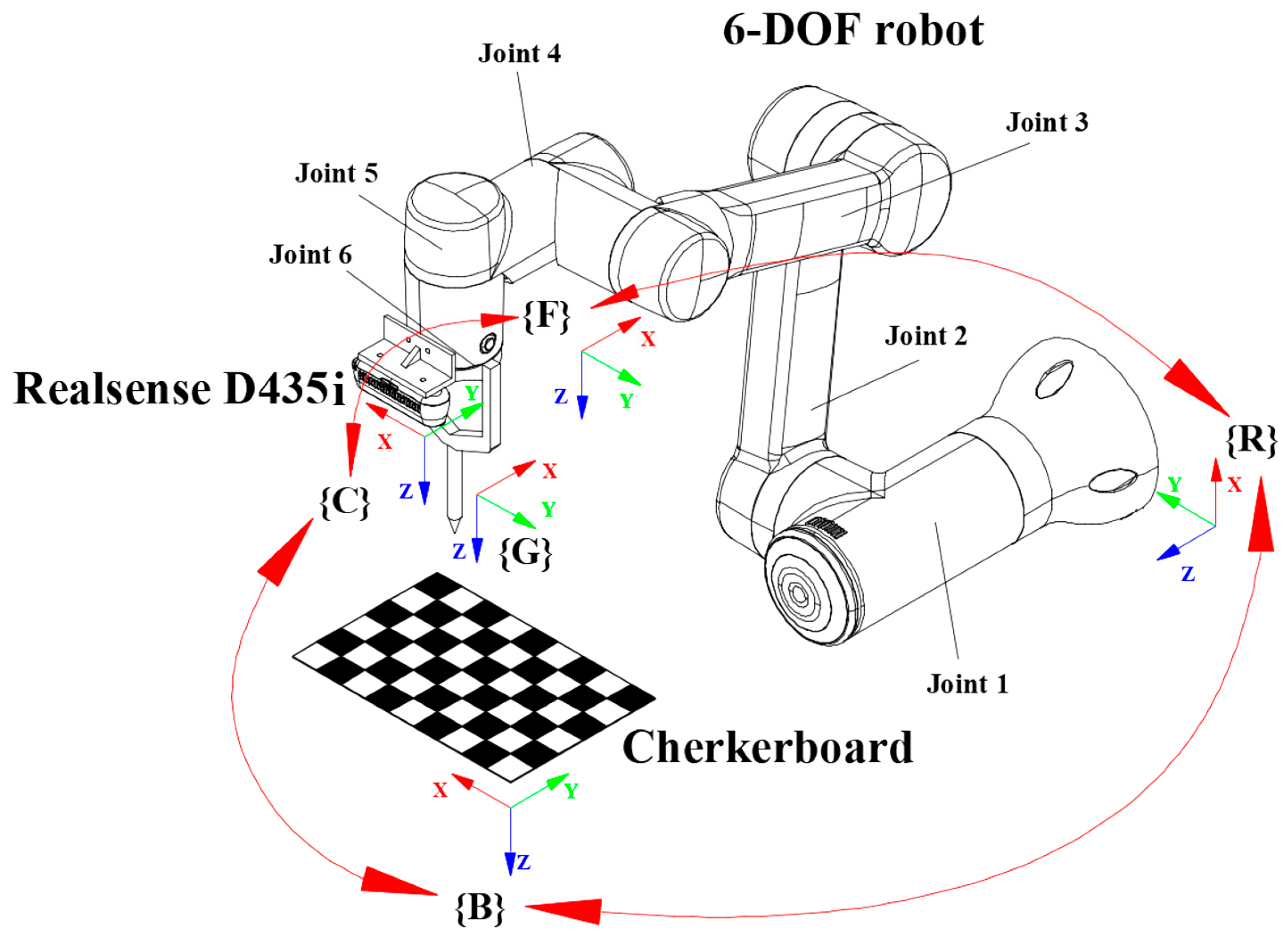

The relationship of each coordinate system for hand–eye calibration is shown in Figure 8. To ensure the mapping accuracy between the camera pixel coordinates and the real 3D position, this paper uses Zhang’s calibration method to calibrate the camera [35] and performs hand–eye calibration of the camera and the robotic arm based on the method of Chen et al. [36] to establish the coordinate transformation relationship between the end-effector and the depth camera. The figure indicates the robot arm coordinate system {R}, the robot arm flange coordinate system {F}, the end coordinate system {G}, the camera coordinate system {C}, and the checkerboard coordinate system {B}.

Firstly, Zhang’s calibration method is used to extract corner point coordinates and perform optimization, completing the camera’s intrinsic and extrinsic calibration. Secondly, the checkerboard is placed in front of the robotic arm, and images are acquired from different angles by continuously adjusting the robotic arm’s posture. Finally, based on the hand–eye calibration equation, the least squares method is used to solve the transformation relationship between the camera coordinate system and the robotic arm coordinate system. According to the chain transfer principle of coordinate transformation, the spatial coordinate transformation relationship can be expressed as shown in Equation (3).

where I is the identity matrix. To better represent the coordinate transformation relationship between the tool (hand) and the camera (eye), it is further transformed into

After establishing the coordinate transformation relationship between the camera and the robotic arm, the rotation matrix for the fruit stem coordinate system {S} needs to be further constructed:

where v1 is the growth direction of the fruit stem (green vector), v2 is the transverse growth direction of the fruit (red vector), and v3 is the normal vector of the fruit stem direction (blue vector).

To better establish the mapping relationship between {C} and {S}, the fruit stem coordinate system is further transformed. In the fruit stem pose coordinate system, using v3 as the rotation axis, a rotation of π radians is performed around this axis to ensure its feature vector direction aligns with that of the camera coordinate system. The rotation matrix of the rotated coordinate system is

Combining the rotation matrix of the fruit stem coordinate system, a homogeneous transformation matrix is constructed to achieve the mapping from {C} to {S}:

where the translation vector t is the coordinate of the point cloud centroid in {C}.

Based on the hand–eye matrix obtained from calibration, the transformation from {G} to {S} is acquired:

The deflection angle of the end-effector is obtained by parsing the components of the rotation matrix; the robot arm then uses this angle to drive the end-effector and ultimately achieve precise picking.
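The chain of transformations in this subsection can be sketched numerically as follows. This is an illustrative sketch under explicit assumptions, not the exact formulation of this paper: the column order [v1, v2, v3] of the stem rotation matrix, the name `T_g_c` for the calibrated hand–eye matrix (camera pose expressed in the end-effector frame), and the ZYX Euler convention used to read out angles are all assumptions, and the helper names are hypothetical.

```python
import numpy as np


def rot_z(angle):
    """Rotation matrix for a rotation of `angle` radians about the z axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def homogeneous(R, t):
    """Build a 4 x 4 homogeneous transform from rotation R and translation t."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def stem_pose_in_gripper(v1, v2, v3, centroid_c, T_g_c):
    """Pose of the stem frame {S} expressed in the end-effector frame {G}.

    v1, v2, v3: orthonormal stem axes in the camera frame (assumed to form a
        right-handed frame in this column order).
    centroid_c: point cloud centroid in the camera frame (translation t).
    T_g_c: assumed hand-eye matrix mapping camera coordinates to {G}.
    """
    R_s = np.column_stack((v1, v2, v3))
    R_s_rot = R_s @ rot_z(np.pi)       # rotate by pi about the frame's own v3 axis
    T_c_s = homogeneous(R_s_rot, centroid_c)
    return T_g_c @ T_c_s               # chain {G} <- {C} <- {S}


def zyx_euler(R):
    """Yaw, pitch, roll (radians) for R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    yaw = np.arctan2(R[1, 0], R[0, 0])
    pitch = np.arcsin(np.clip(-R[2, 0], -1.0, 1.0))
    roll = np.arctan2(R[2, 1], R[2, 2])
    return yaw, pitch, roll


if __name__ == "__main__":
    T_g_c = homogeneous(np.eye(3), [0.0, 0.0, 0.1])   # toy hand-eye matrix
    T_g_s = stem_pose_in_gripper([1, 0, 0], [0, 1, 0], [0, 0, 1],
                                 [0.1, 0.2, 0.5], T_g_c)
    print(T_g_s[:3, 3], np.degrees(zyx_euler(T_g_s[:3, :3])))
```

Which Euler convention and which angle the arm controller expects depends on the robot; the readout above is only one common choice and becomes ill-conditioned when the pitch approaches ±90°.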

5. Conclusions and Future Work

5.1. Conclusions

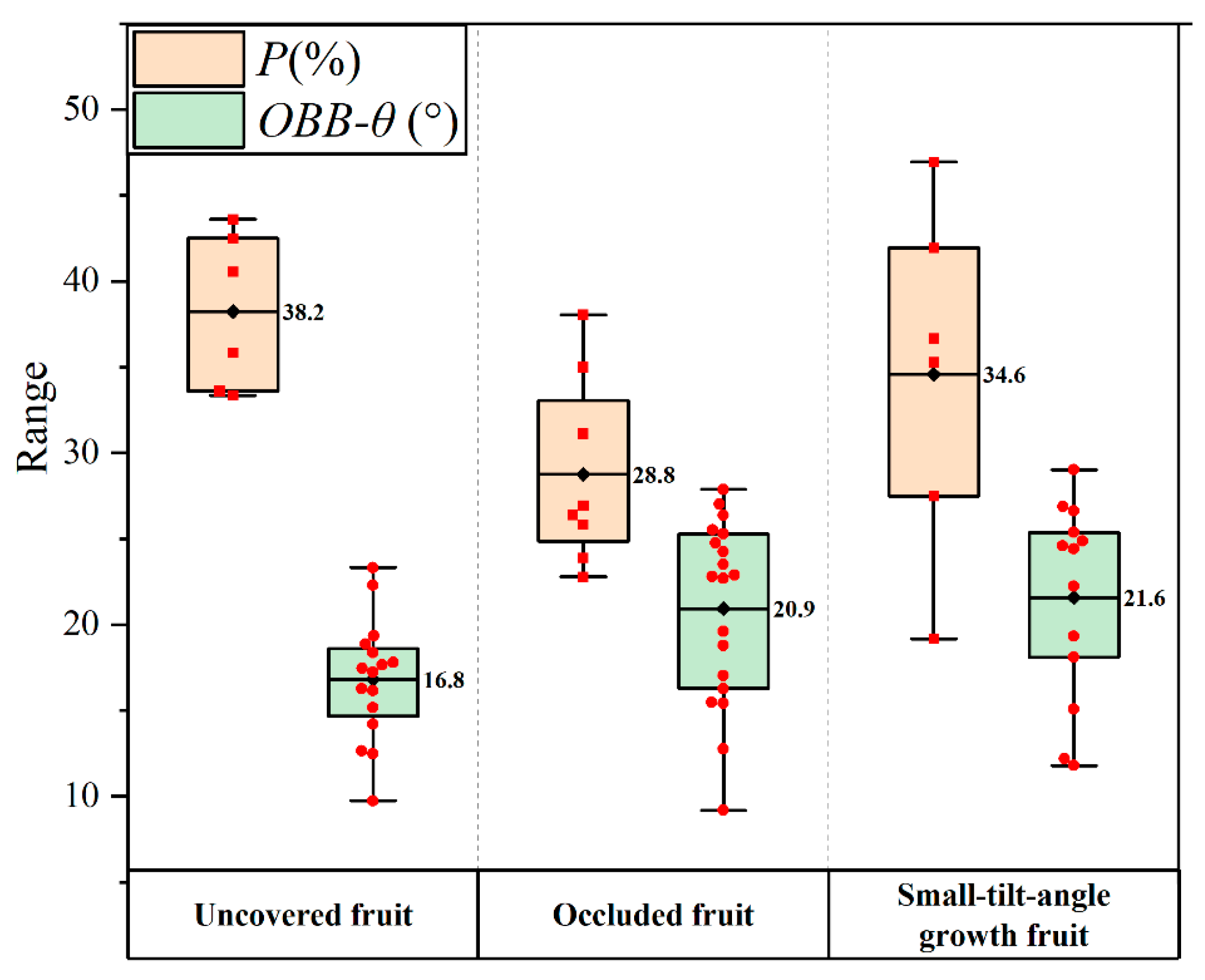

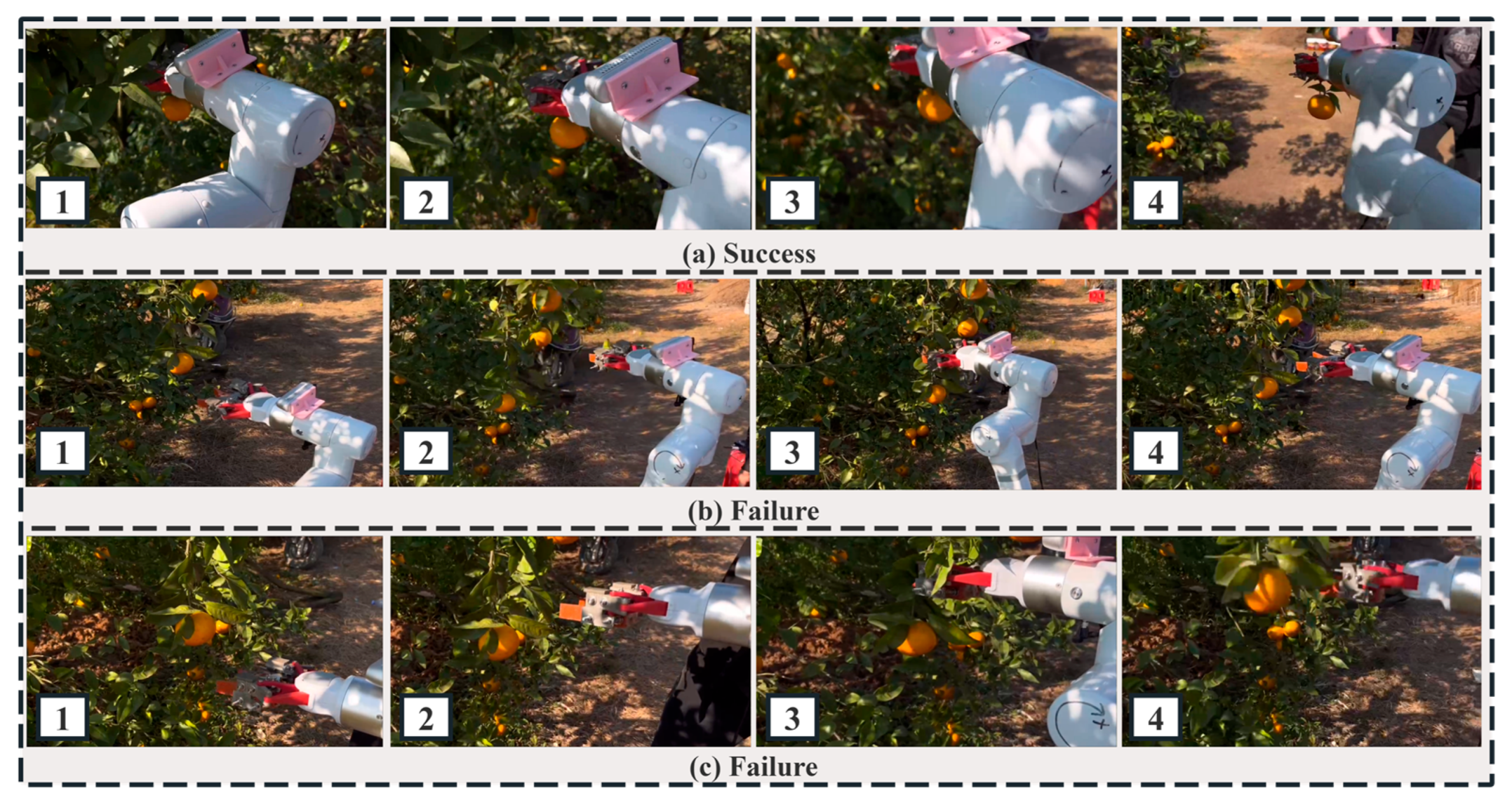

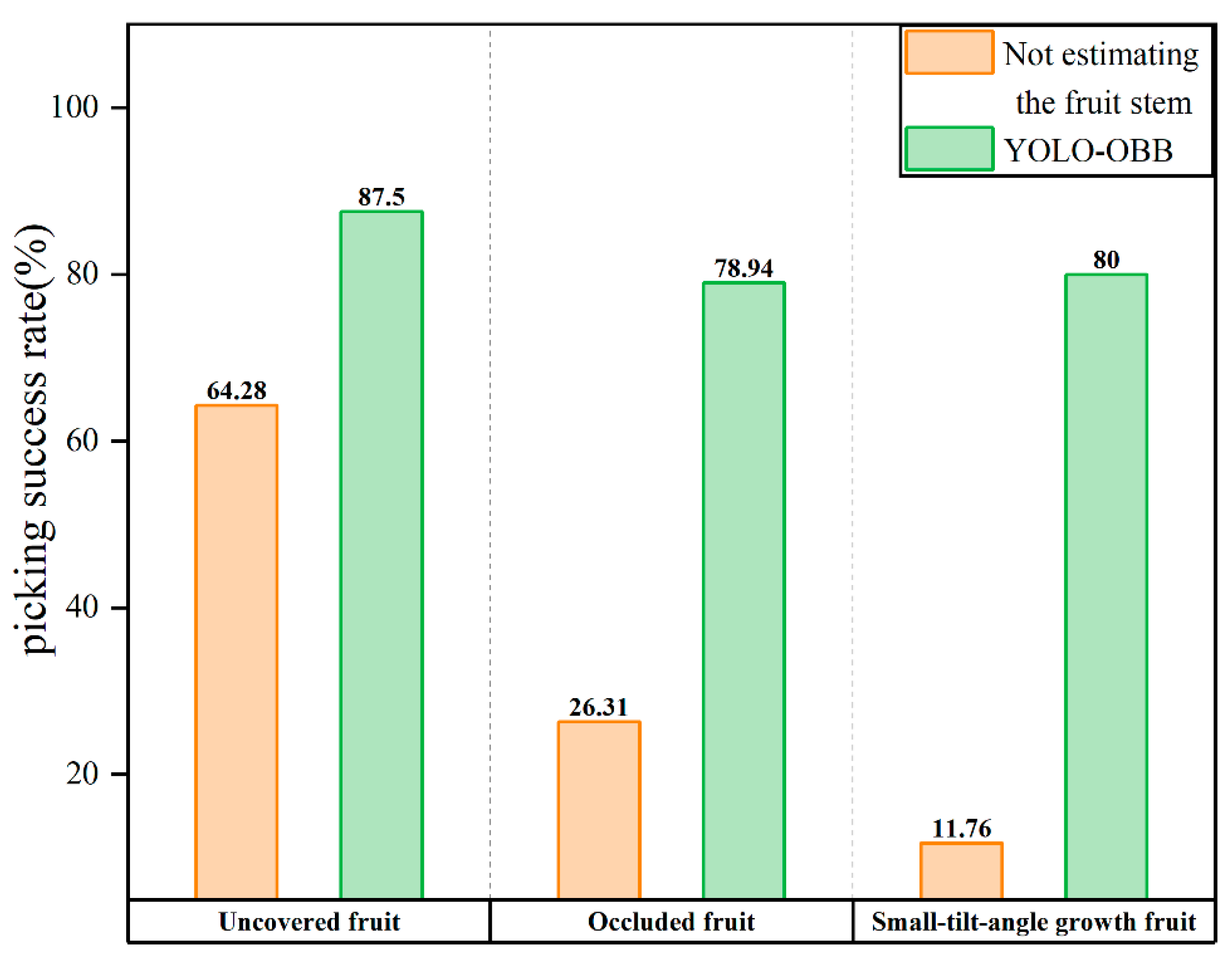

To address the poor picking localization accuracy of citrus picking robots under complex occlusion conditions, which reduces the efficiency of autonomous picking, this paper proposes a citrus stem attitude estimation method based on the YOLO-OBB algorithm. The method uses the YOLOv5s network model and a binocular vision method for preliminary localization of citrus, which improves feature extraction for targets in the camera’s field of view, and combines the OBB algorithm with the PCA method to estimate the attitude of the fruit stem. This addresses the difficulty of localizing the fruit stem picking point and improves the localization accuracy and operating efficiency of the picking robot. In scenes with unobstructed fruits, obstructed fruits, and fruits growing at a small angle, the arc coverage ratio of citrus fruits was 38.24%, 28.75%, and 34.58%, respectively, with an average ratio of 33.86%. In terms of fruit stem pose estimation, the average θ of the OBB algorithm was 16.81° in the unoccluded fruit scene and rose to 20.92° in the occluded fruit scene, an increase of 4.11°. Although the fruit stem pose estimation error increased because occluding objects and the tilted growth of the citrus fruit removed part of the effective surface contour, the OBB algorithm still maintained a relatively accurate fruit stem pose estimate that meets the picking requirements of the picking robot. In addition, in the scenario of fruit growing at a small angle of inclination, the average θ of the OBB algorithm was 21.58°, only 0.66° higher than in the occluded fruit scenario, which indicates that the method copes with fruit stems that are both occluded and tilted. The average estimation time of fruit stem pose was 0.29 s.

In the above three picking scenarios, the picking success rate of the algorithm in this paper reached 87.5%, 78.94%, and 80%, respectively, with an average picking success rate of 82%, which is 50% higher than the scheme without fruit stem pose estimation and greatly improves the picking success rate of the robot. The experimental results show that the algorithm can accurately estimate the pose of citrus fruit stems, provide accurate picking point locations for picking robots, and complete picking tasks efficiently, offering an effective solution for fruit detection, positioning, and picking by orchard picking robots.

5.2. Discussions of Limitations

Traditional deep learning and three-dimensional vision technologies can effectively detect fruits and provide precise positioning information. However, in unstructured orchard environments where fruits are obscured by branches and leaves or overlap, relying solely on fruit location data results in low picking accuracy for picking robots. This paper addresses citrus picking under occlusion conditions. It employs visual algorithms to detect and perform 3D reconstruction of obscured citrus fruits, mapping partial point cloud information into 3D space. Oriented bounding boxes are then constructed for these partial point clouds, PCA is used to estimate the location of the citrus stems, and finally citrus picking is performed under occlusion conditions.

The citrus picking method in this paper focuses mainly on the implementation of the overall picking pipeline. YOLOv5s is used only to obtain ROI information for the target fruits within the YOLO framework, and we have not yet evaluated higher YOLO versions or modifications to the network model. This may limit the performance of the algorithm, so our future work will attempt to further optimize the recognition algorithm.

Experimental data demonstrate that the proposed algorithm achieves excellent results when picking citrus fruits under three conditions: no occlusion, minor occlusion, and small-angle-tilted growth. However, under conditions of extensive occlusion and steeply inclined growth, the picking rate decreases. This is because while the robot precisely locates the picking point during the process, it fails to plan the robotic arm’s picking path. Consequently, the cutting end is prone to collisions with nearby branches and leaves during execution, causing the citrus stem to shift out of the targeted picking position and preventing successful fruit removal.

5.3. Future Work

The algorithm in this paper can provide accurate picking position information for the picking robot and realizes efficient citrus picking in complex orchard environments with good robustness. However, given the rapid development of intelligent agricultural machinery and the complex, changing conditions of unstructured orchards, there is still room for improvement. First, the suitability of the end-effector affects the success rate in actual picking operations, so its structural design can be further optimized to improve the success rate of citrus picking. Second, improvements to the network model will be considered to enhance the recognition of fruit targets under occlusion. In addition, with these improvements to the network model and the end-effector mechanism, the picking method proposed in this paper could be extended to the picking of other fruits such as lychees, apples, pears, and dragon fruits.