A Novel Approach to Optimize Key Limitations of Azure Kinect DK for Efficient and Precise Leaf Area Measurement

Abstract

1. Introduction

2. Materials and Methods

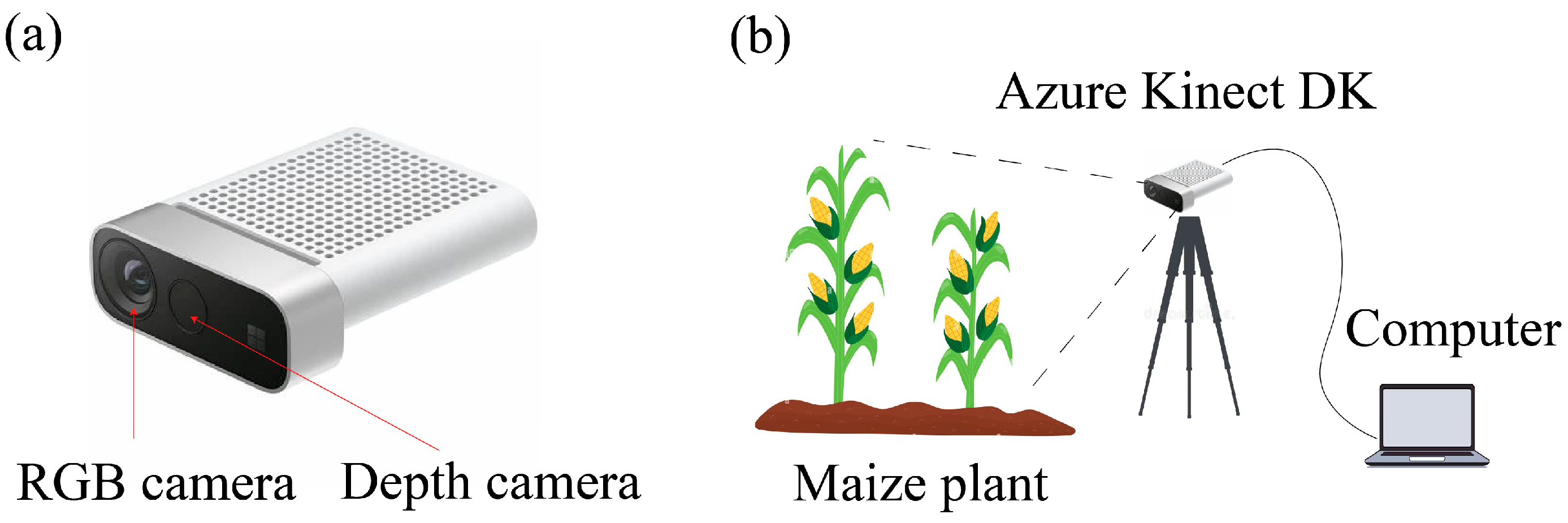

2.1. Experimental Setup

- Growth Stage Diversity: Samples were chosen from various growth stages, ranging from V1 (vegetative stage when the first leaf collar is present) to V7 (vegetative stage when seven leaf collars are present);

- Plant Size Variability: Maize plants with varying heights (from ~20 cm to ~45 cm) and leaf sizes (from ~10 cm² to ~100 cm²) were included to reflect natural variability;

- Spatial Distribution: Plants from different locations within the field were selected to account for variations caused by soil fertility, shading, and other factors.

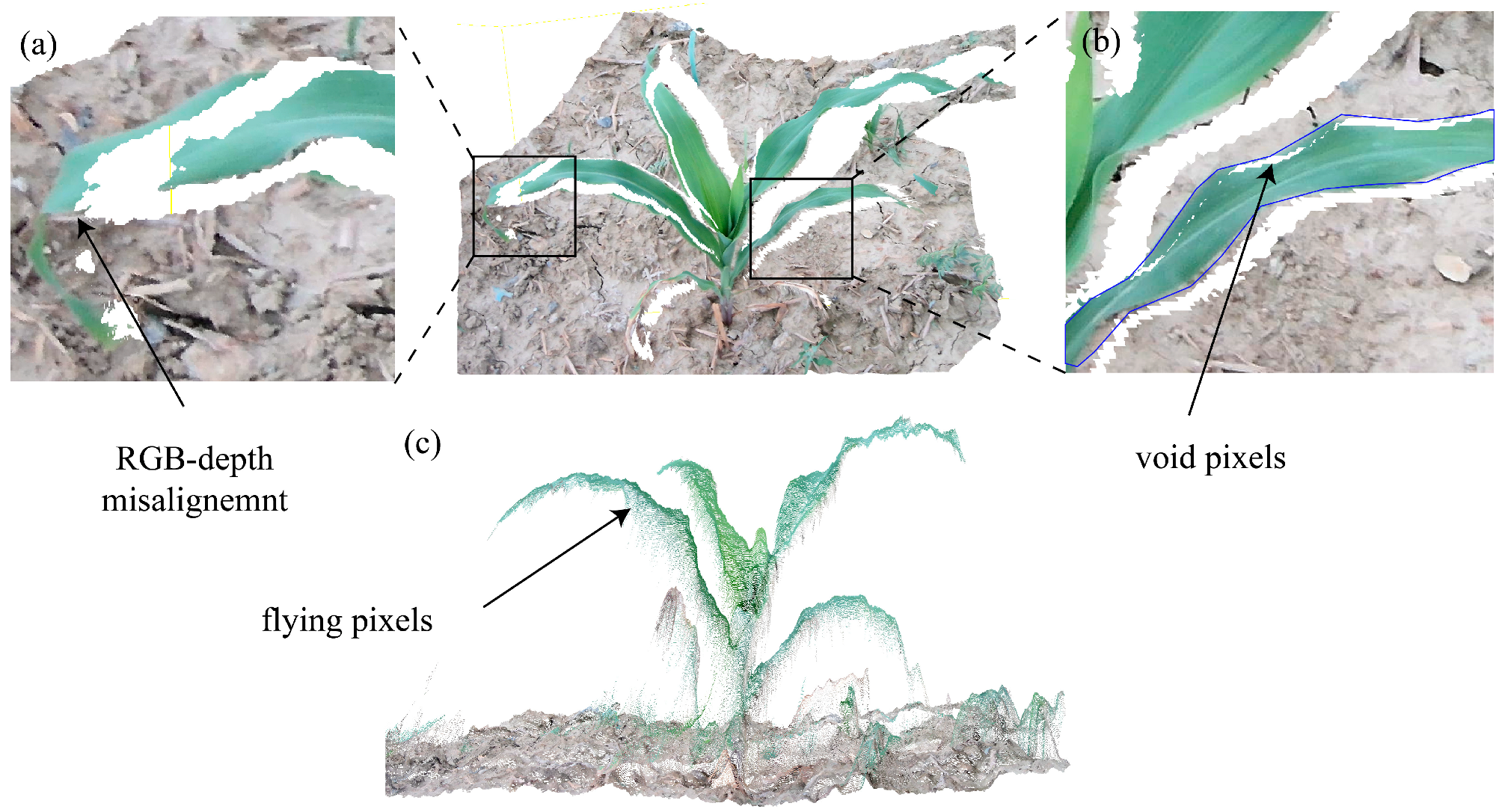

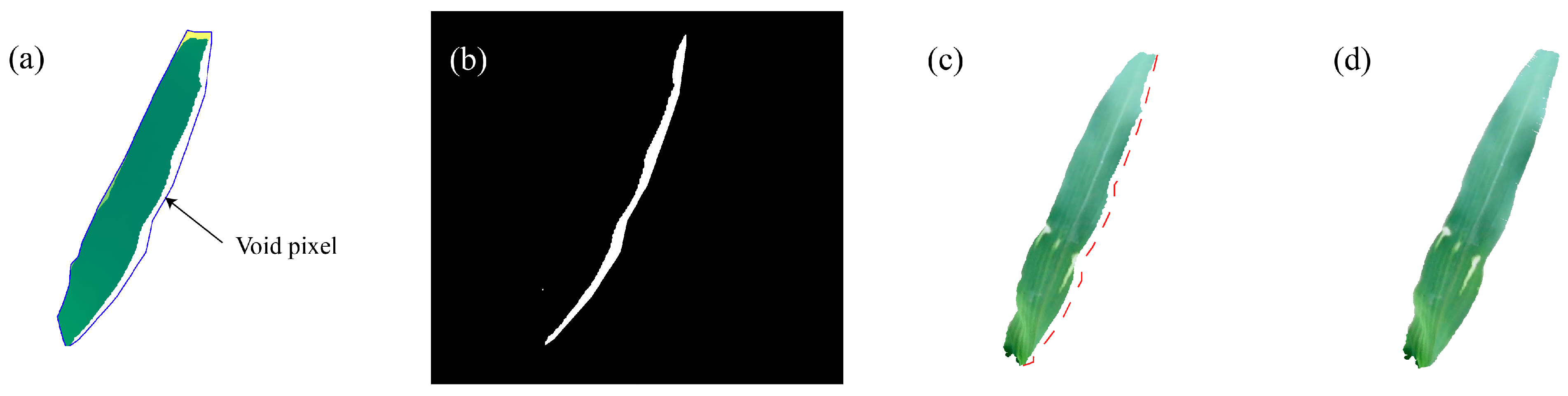

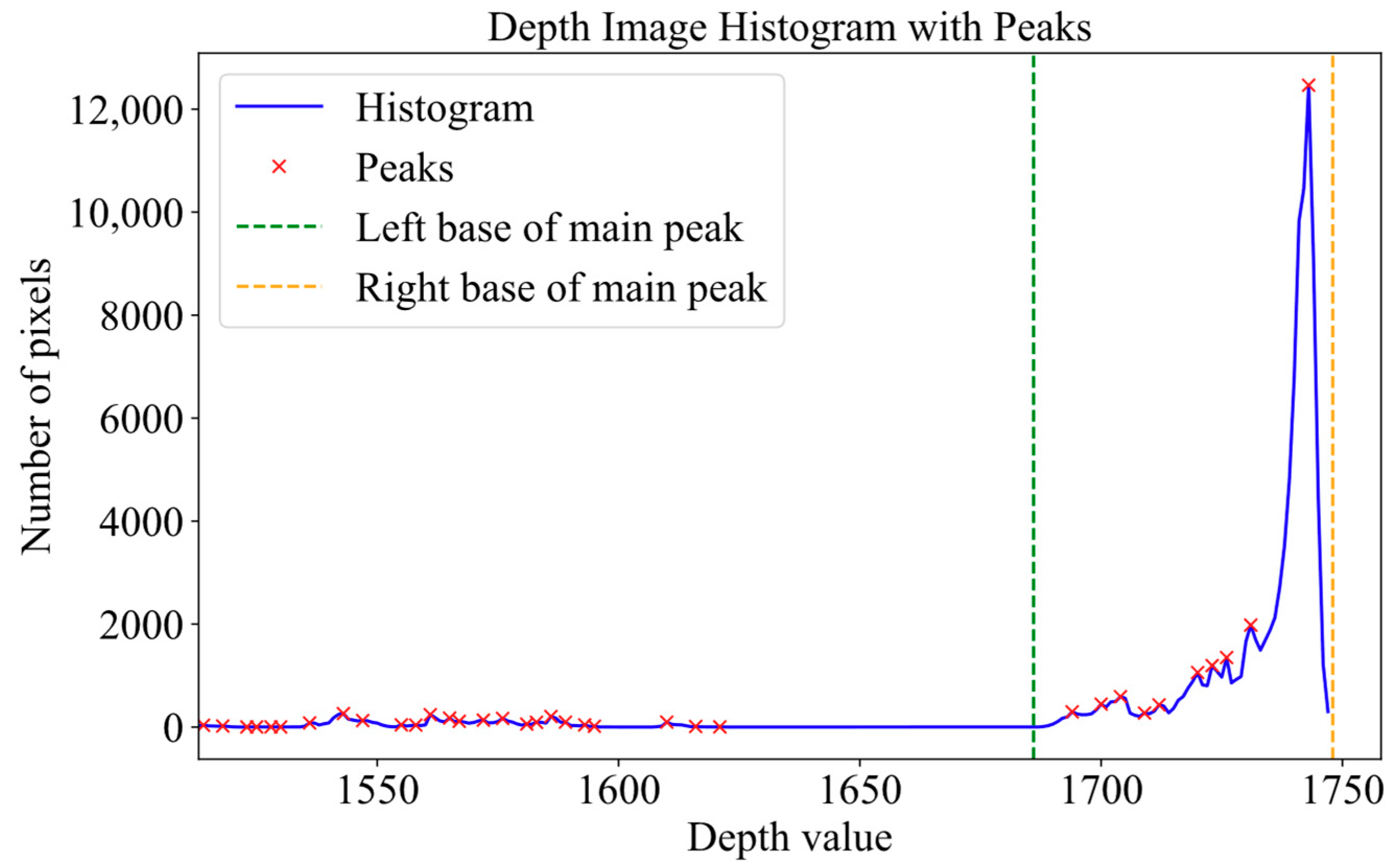

2.2. Problem Description

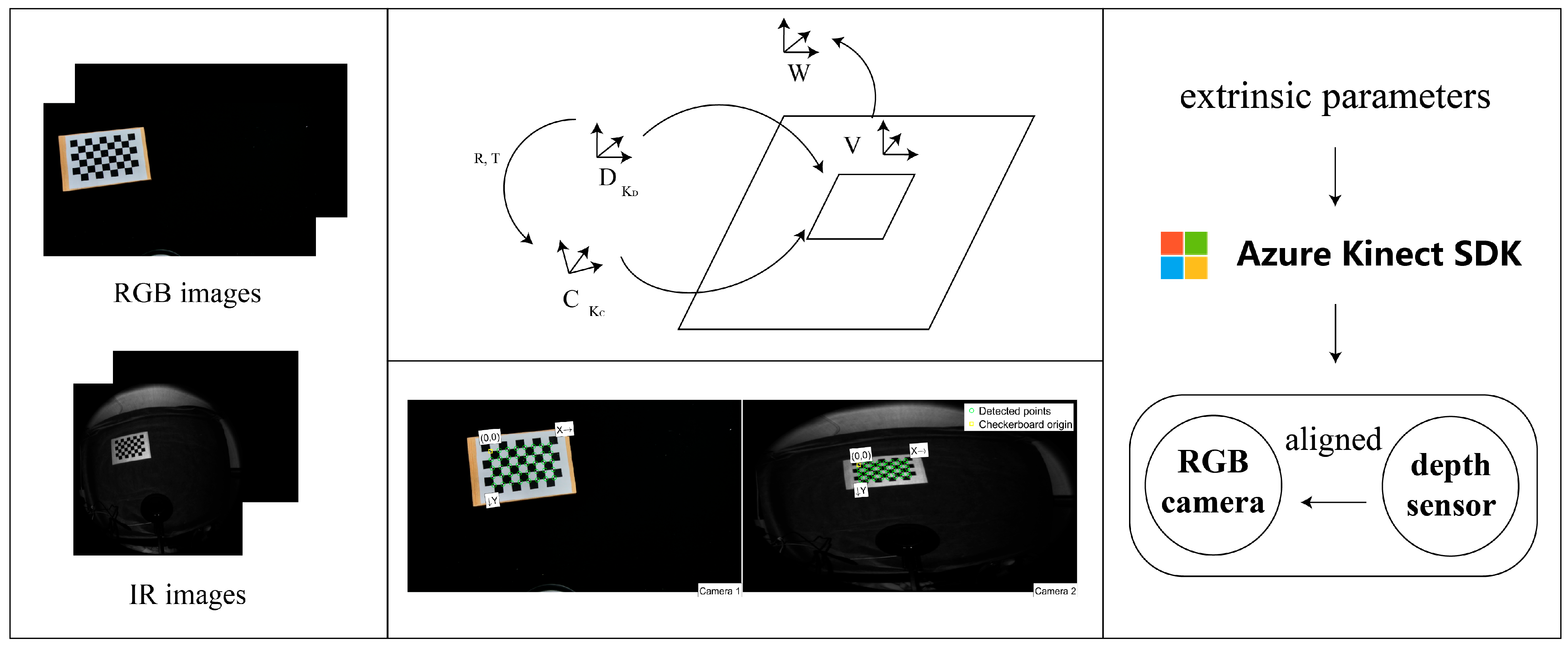

2.3. Recalibration of RGB-D Camera

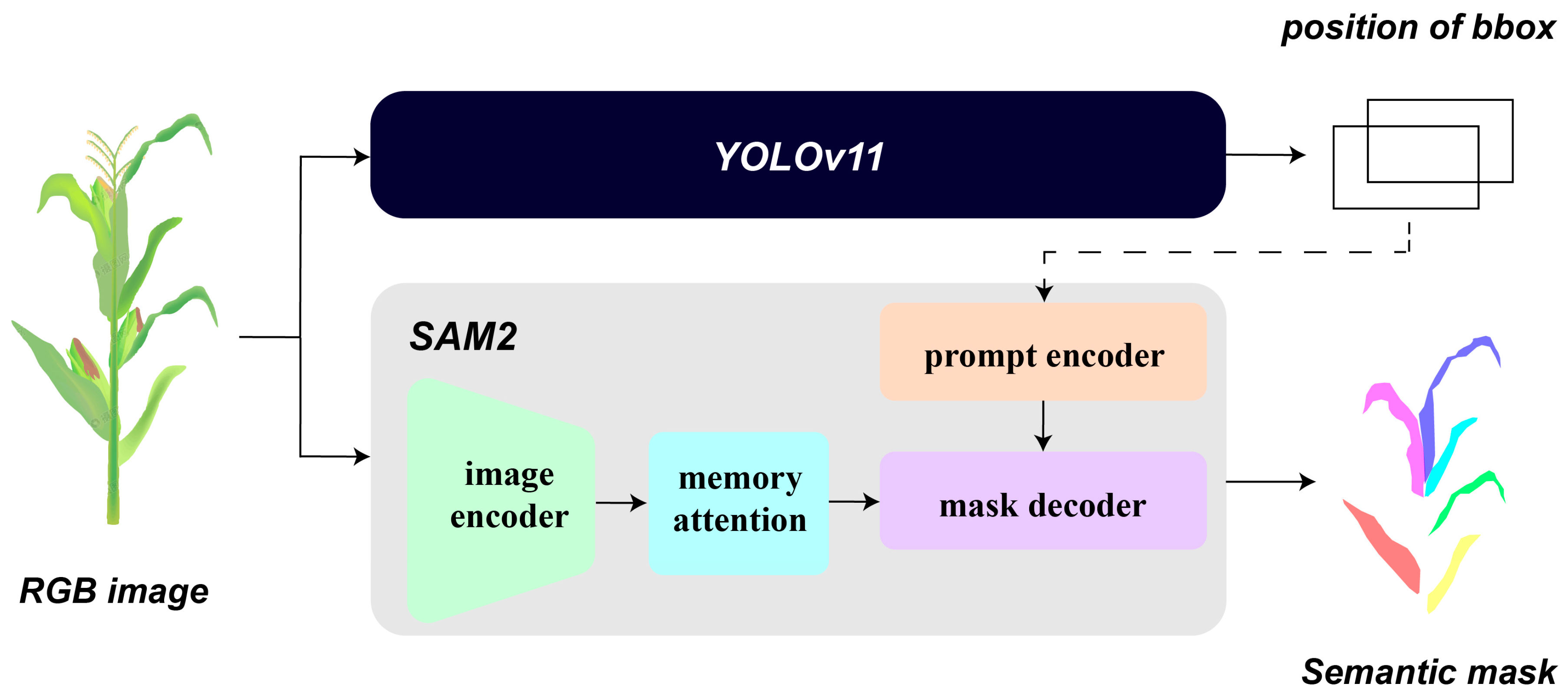

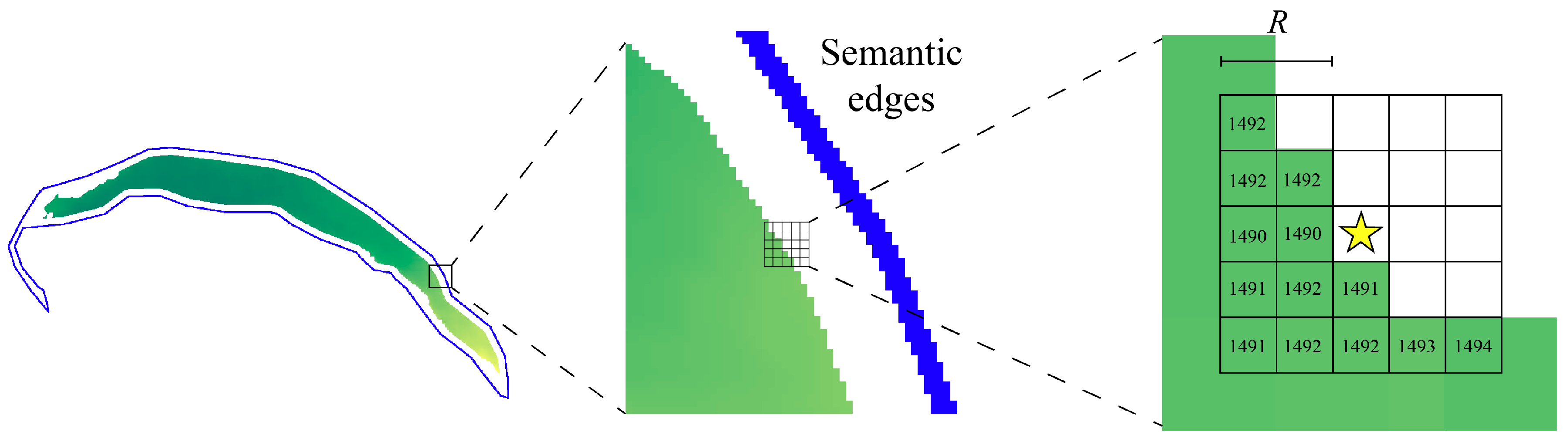

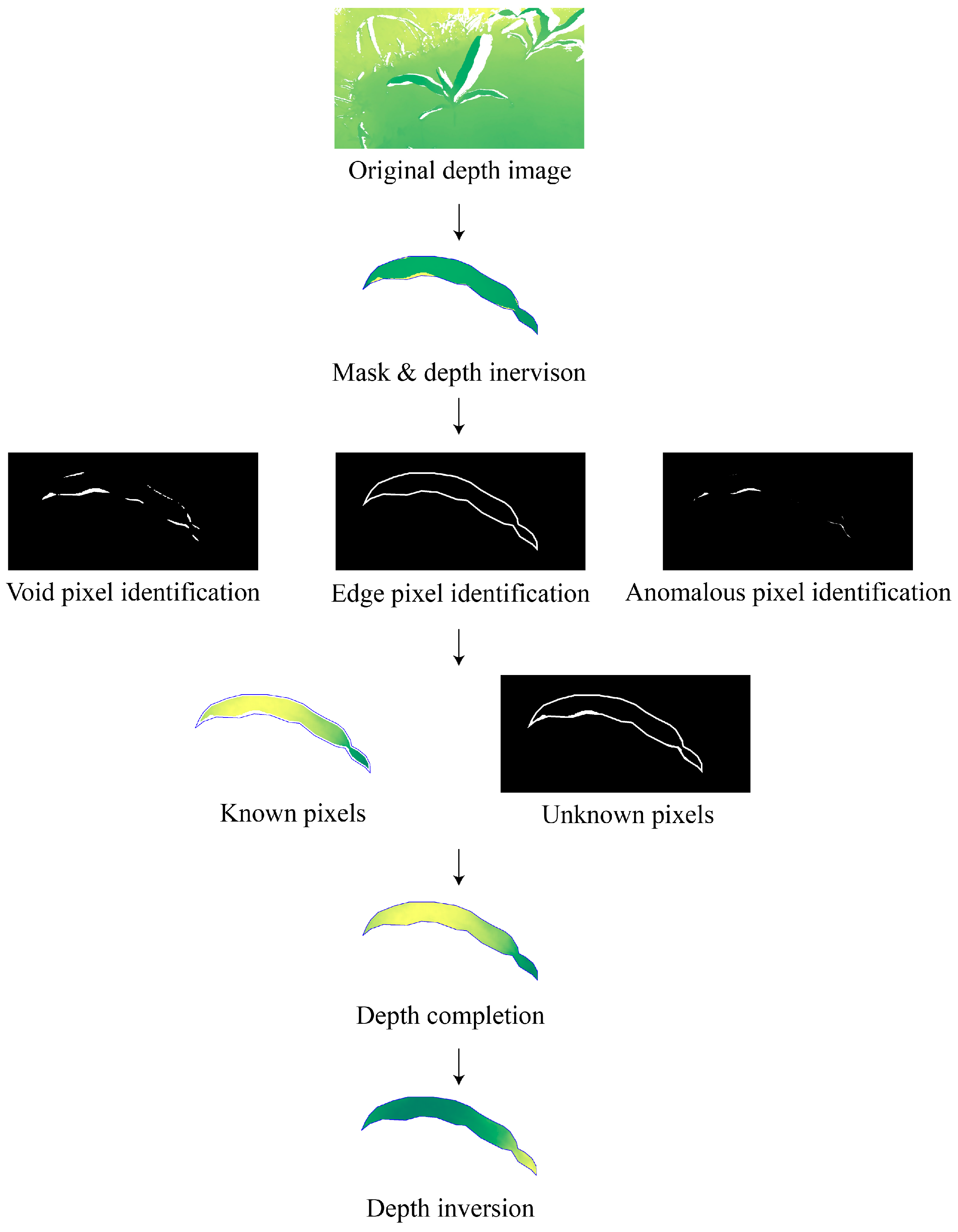

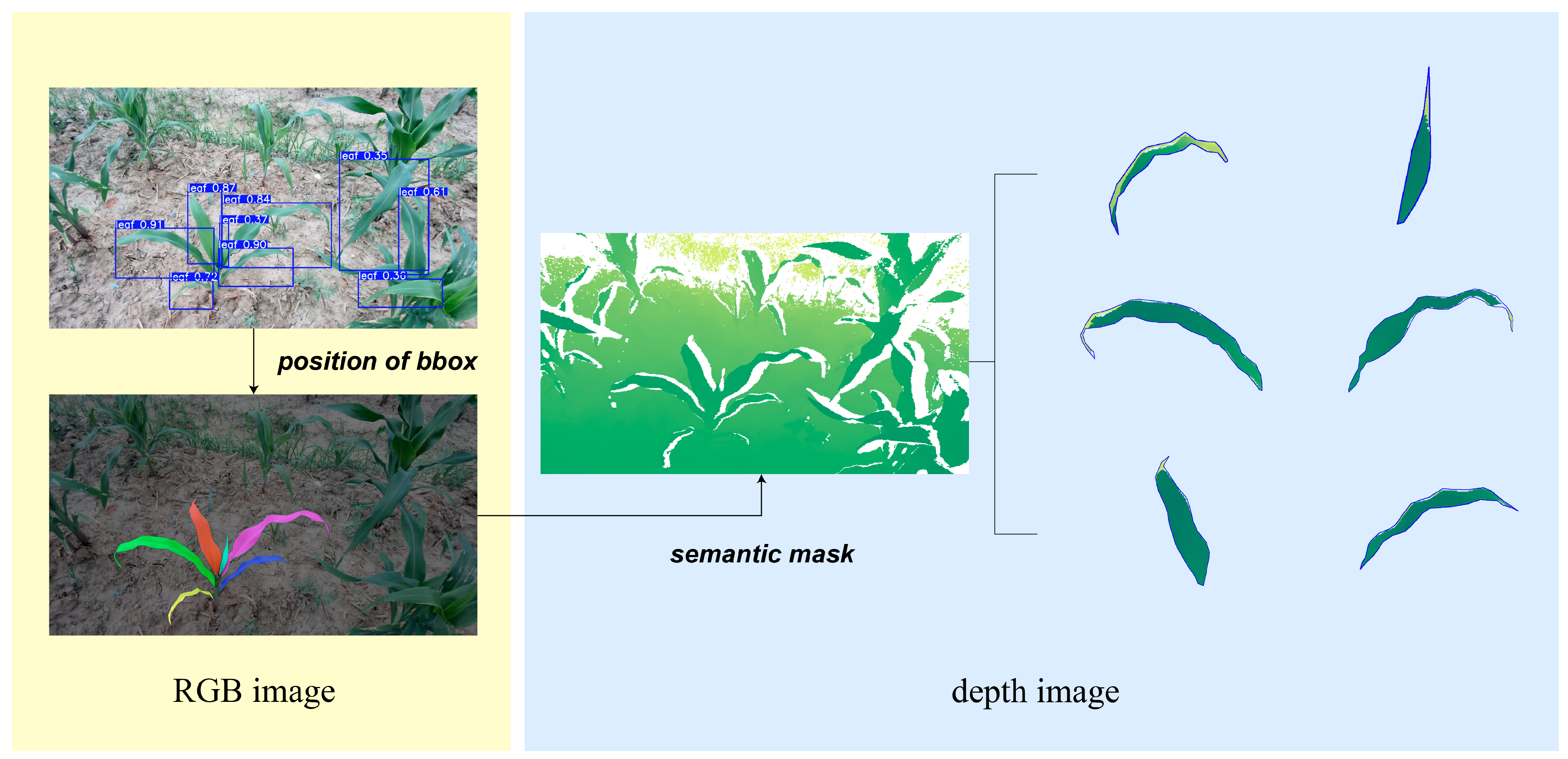

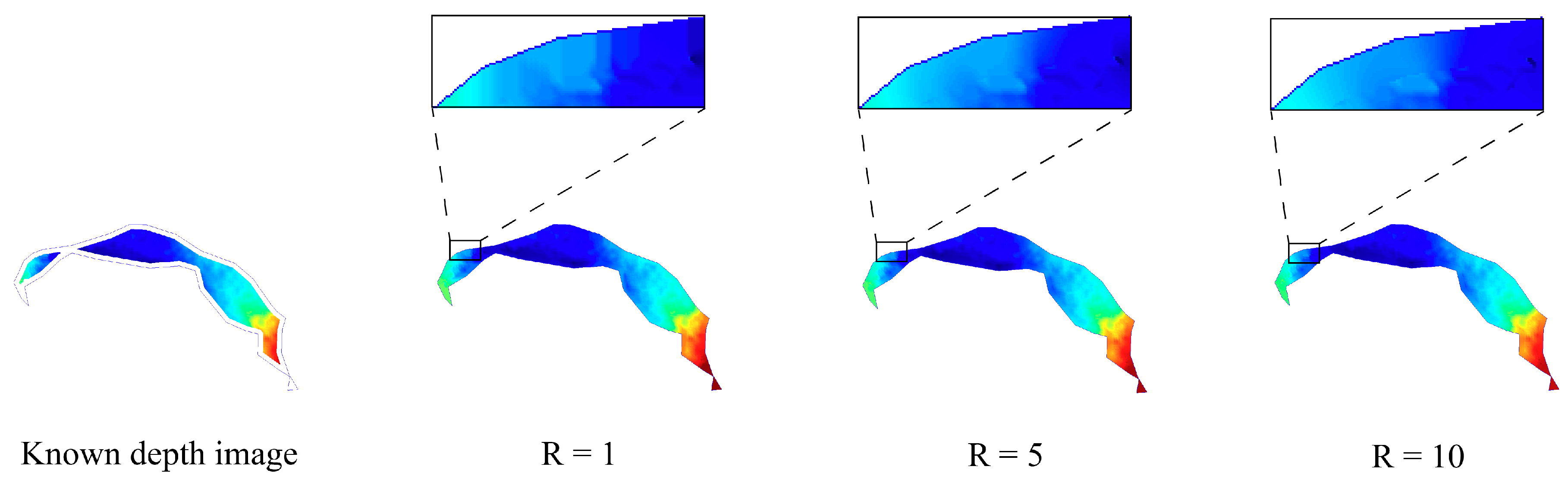

2.4. Semantic Information Guided Depth Image Inpainting

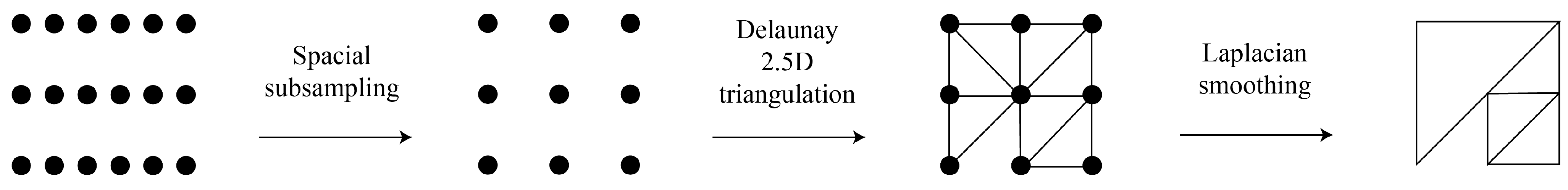

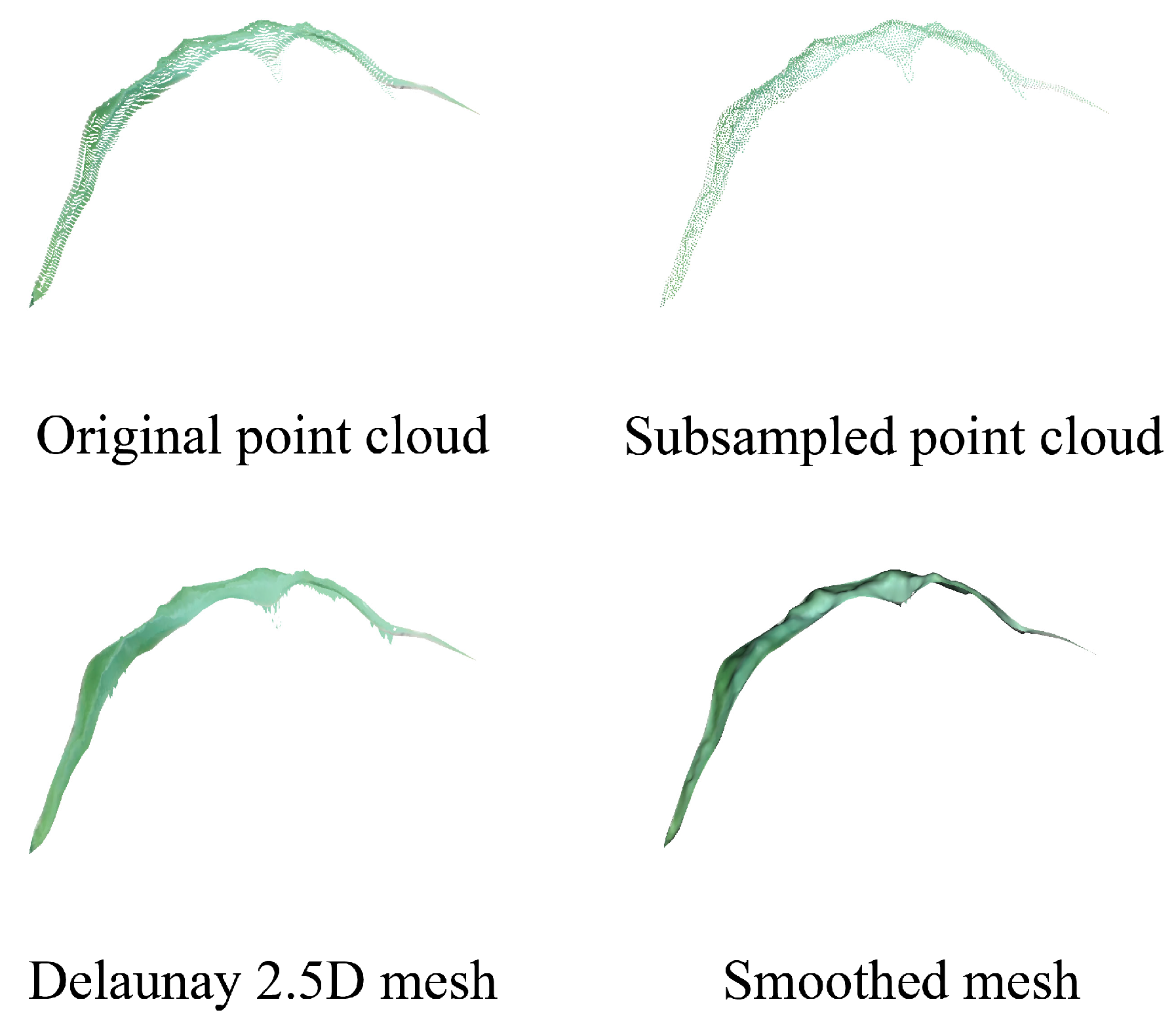

2.5. Leaf Area Measurement

2.6. Evaluation of the Accuracy of Leaf Area Measurement

3. Results and Discussions

3.1. Recalibration Result

3.2. Performance of Semantic Information Guided Depth Inpainting

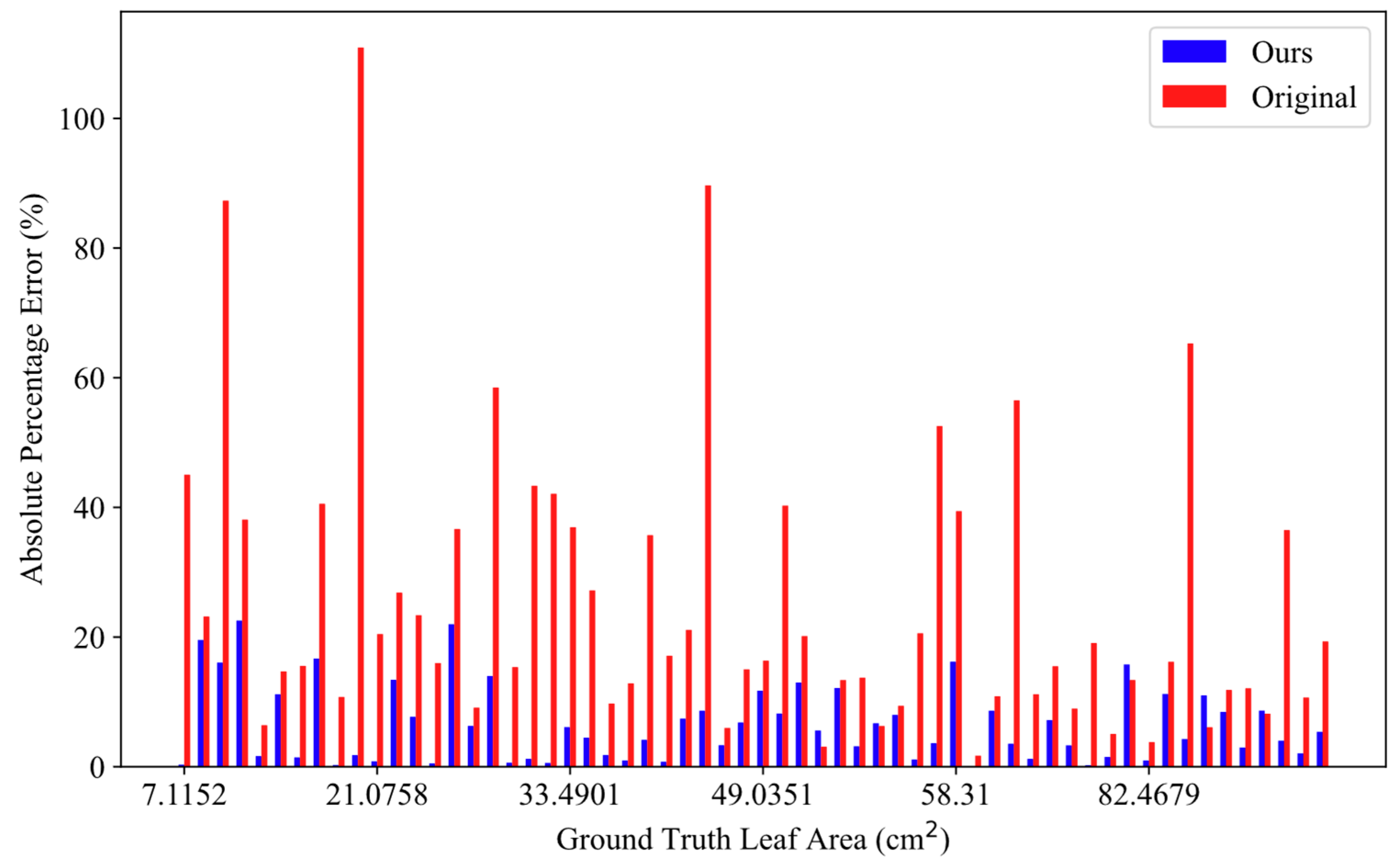

3.3. Leaf Area Measurement Performance

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Indicator | LiDAR Scanner | Structured Light Camera | SfM | RGB-D Camera (Azure Kinect DK) |
|---|---|---|---|---|
| Sensing method | Active | Active | Passive | Active |
| Resolution | High | Medium | Medium | Medium |
| Accuracy | High | High | Medium | Medium |
| Environmental robustness | High | Low | Low | Medium |
| Real-time performance | Low | Medium | Low | High |
| Cost | High | High | Low | Medium |

| Data | RMSE/cm² | MAE/cm² | MAPE/% | R² |
|---|---|---|---|---|
| Ours | 4.114 | 2.980 | 6.549 | 0.976 |
| Original | 14.953 | 10.726 | 25.384 | 0.687 |
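The table above reports RMSE, MAE, MAPE and R² for the leaf area measurements. As a minimal sketch, assuming the standard definitions of these metrics (the exact formulas used in the study are not reproduced in this section), they could be computed from paired measured and reference (ground-truth) leaf areas as shown below. The function name `leaf_area_metrics` is hypothetical, and R² is taken here as the coefficient of determination 1 − SS_res/SS_tot; some studies instead report the squared Pearson correlation of a fitted regression line.

```python
import math
from typing import Dict, Sequence


def leaf_area_metrics(measured: Sequence[float], reference: Sequence[float]) -> Dict[str, float]:
    """Hypothetical helper: error metrics of measured vs. reference leaf areas (cm^2).

    Assumes the standard metric definitions; MAPE is returned in percent.
    """
    if len(measured) != len(reference) or len(measured) == 0:
        raise ValueError("measured and reference must be non-empty and equally long")
    n = len(measured)
    errors = [m - r for m, r in zip(measured, reference)]

    # Root mean squared error and mean absolute error, in the units of the inputs (cm^2).
    ss_res = sum(e * e for e in errors)
    rmse = math.sqrt(ss_res / n)
    mae = sum(abs(e) for e in errors) / n

    # MAPE is relative to the reference area, so every reference value must be non-zero.
    if any(r == 0 for r in reference):
        raise ValueError("reference areas must be non-zero for MAPE")
    mape = 100.0 * sum(abs(e) / abs(r) for e, r in zip(errors, reference)) / n

    # Coefficient of determination: 1 - SS_res / SS_tot around the reference mean.
    mean_ref = sum(reference) / n
    ss_tot = sum((r - mean_ref) ** 2 for r in reference)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all reference areas are equal")
    r2 = 1.0 - ss_res / ss_tot

    return {"RMSE": rmse, "MAE": mae, "MAPE": mape, "R2": r2}


if __name__ == "__main__":
    # Toy numbers for illustration only, not data from the study.
    print(leaf_area_metrics([10.0, 20.0, 30.0], [12.0, 18.0, 30.0]))
```

With the toy inputs in the `__main__` block (not data from the study), the function returns RMSE = √(8/3) ≈ 1.633, MAE ≈ 1.333, MAPE ≈ 9.26 and R² = 1 − 8/168 ≈ 0.952. Computing RMSE and MAE in absolute units alongside the relative MAPE makes it visible when absolute errors grow with leaf size while relative errors stay similar.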

| Growth Stage | Average Leaf Area/cm² | RMSE/cm² | MAE/cm² | MAPE/% |
|---|---|---|---|---|
| V1–V4 | 40.907 | 2.274 | 1.812 | 5.824 |
| V5–V7 | 60.751 | 5.891 | 3.742 | 6.087 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Niu, Z.; Huang, T.; Xu, C.; Sun, X.; Taha, M.F.; He, Y.; Qiu, Z. A Novel Approach to Optimize Key Limitations of Azure Kinect DK for Efficient and Precise Leaf Area Measurement. Agriculture 2025, 15, 173. https://doi.org/10.3390/agriculture15020173
