Abstract

Global food security is increasingly challenged by climate change and the limited availability of arable land. This situation calls for improved crop monitoring and management strategies. Rice is a staple food for nearly half of the world’s population and a significant source of calories. Accurately identifying rice varieties is crucial for maintaining varietal purity, planning agricultural activities, and enhancing genetic improvement strategies. This study compares machine learning algorithms to identify the most effective approach to predicting rice varieties, using multitemporal Sentinel-2 images in the Marismas del Guadalquivir of Sevilla, Spain. Spectral reflectance data were collected from ten Sentinel-2 bands, which include visible, red-edge, near-infrared, and shortwave infrared regions, at two key phenological stages: tillering and reproduction. The models were trained on pixel-level data from the growing seasons of 2021 and 2024, and they were evaluated using a test set from 2022. Four classifiers were compared: random forest, XGBoost, K-nearest neighbors, and logistic regression. Performance was assessed based on accuracy, precision, recall, specificity, and F1 score. Non-linear models outperformed linear ones. The highest performance was achieved with the random forest classifier during the reproduction phase, reaching an accuracy of 0.94 using all bands or only the most informative subset (red edge, NIR, and SWIR). This classifier also maintained high accuracy (0.93 and 0.92) during the initial tillering phase. This demonstrates that reliable varietal mapping is possible in the early stages of the growing season.

1. Introduction

Global food security faces increasing challenges as climate change and limited arable land place greater pressure on food systems. Consequently, effective land and water management is crucial for sustaining food production [1]. According to the FAO [2], food production must rise by 50% by 2050 to meet the growing demands of an expanding population, yet climate change is already affecting key crop yields, complicating strategic planning and operational management in agriculture [3].

Among staple crops, rice remains the most important, particularly in developing countries [4,5], serving as a fundamental energy source for nearly half of the global population. It accounts for over 21% of human caloric intake, with even higher dependence in Southeast Asia, where it makes up more than 76% [6,7]. Around three billion people rely on rice as a dietary staple, making it one of the most essential crops in global agriculture worth studying [8].

Recent advances in satellite technology have greatly improved the monitoring of crops, the prediction of yields, and the detection of diseases [9,10,11], with initiatives such as NASA Harvest (established in 2017) playing a key role in supporting global food security [9]. In this context, crop mapping is essential for yield estimation and resource management [12], although spatial and temporal variability still hinder accurate classification [13]. Reliable identification depends on phenological differences that influence spectral and structural responses [14], which makes the timely generation of precise crop distribution maps indispensable [15,16].

Sentinel-2 time-series data have proven highly effective for crop mapping, thanks to their high spatial, temporal, and spectral resolution. Spectral bands in the visible, red-edge, and infrared regions provide insights into vegetation properties such as chlorophyll content, canopy structure, and water status. These raw spectral bands can be used directly or combined into vegetation indices (VIs) such as the Normalized Difference Vegetation Index (NDVI) and Enhanced Vegetation Index (EVI), which highlight plant vigor and greenness. Several studies have reported the utility of these time-series observations for early and accurate crop classification [17,18,19]. In addition to identifying crop types, remote sensing technologies also support yield forecasting [20], monitoring nutrient deficiencies [21], detecting rice diseases for timely interventions [22], and following plant growth dynamics and phenology [23]. All these advantages combined strengthen the foundation for precision agriculture [24].

Traditional crop-mapping methods often rely on expert knowledge and fixed, rule-based systems, such as spectral or phenological thresholds, and they involve labor-intensive field inspections. These approaches are time-consuming and prone to misclassification when crops have similar growth patterns (e.g., Triticum aestivum vs. Triticum durum). They also often fail to generalize across regions [13,14]. Therefore, there is a need for more flexible, accurate, and automated classification techniques.

Machine learning classifiers such as decision trees, K-Nearest Neighbor (k-NN), Gradient Boosting, and Support Vector Machines (SVMs) have become widely used for crop classification using remote sensing data. They can process large datasets and achieve good accuracy in cropland mapping [25,26,27]. Random forest and SVM have achieved excellent results in distinguishing crop types in heterogeneous agricultural landscapes [28,29]. Wavelength bands, which capture the spectral reflectance of vegetation, serve as the primary input for these models. When combined into vegetation indices (VIs), such as NDVI or EVI, they enhance the sensitivity to plant physiological traits at different phenological stages, thereby improving classification accuracy [30,31].

Building on this framework, several studies have demonstrated that integrating time-series remote sensing data with machine learning models improves classification accuracy [32]. This combined approach has been successfully applied worldwide: for example, in Uzbekistan, where it was used to classify crop rotations involving irrigated cotton, winter wheat, and rice with an overall accuracy of 80% [29], and in the United States for distinguishing between corn and soybean crops [33]. However, only a few studies have begun to address rice variety discrimination using satellite data, and these have usually relied on computationally expensive models and were restricted to a single growing season, a limited number of varieties, and a limited study area. Guo et al. [34] demonstrated that a deep CNN applied to a Sentinel-2 image time series could separate five rice varieties within a single growing season in Australia. Similarly, Rauf et al. [35] proposed a multi-temporal Sentinel-2 approach to classify two major rice cultivars (“Basmati” and “IRRI”) in Pakistan, achieving an accuracy of 98.6% using a two-dimensional convolutional neural network (Conv2D). Saadat et al. [36] combined Sentinel-1/2 time series with a multistream CNN to map two rice varieties and the non-rice areas, achieving an overall accuracy (OA) of 97%. López-Andreu et al. [37] studied various machine learning (ML) classification models for rice crops in Murcia, Spain, in 2019, covering an area of 336 hectares. The Extra Trees, Random Forest, and K-Neighbors models achieved an accuracy of 0.93 in the 2019 trial. However, tests on a new year (2020) reduced the accuracy to 0.86 in specific weeks of the growing season.

This study investigates the potential of machine learning models, applied to Sentinel-2 multispectral imagery, to accurately classify the common rice varieties using spectral reflectance bands. The research aims to evaluate the performance of different supervised machine learning models in distinguishing rice varieties. The ultimate goal is to identify the most effective approach to predicting varietal classes. This will support the improved monitoring and management of rice cultivation in the region, as well as constraining variety-specific yield models. This will improve rice production forecasts at the field and regional levels.

2. Materials and Methods

2.1. Study Area

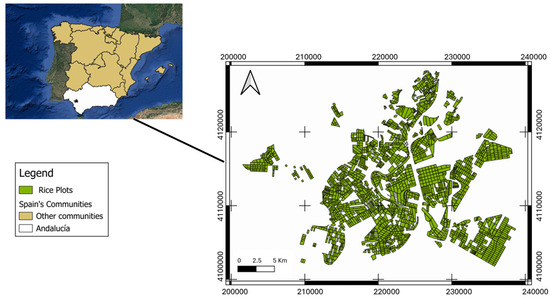

The present study was conducted during the 2021, 2022, and 2024 rice-growing seasons in the Marismas del Guadalquivir, a major rice-producing region in southern Spain. In 2023, no rice was cultivated due to drought conditions. The study area is situated within the municipalities of Isla Mayor, La Puebla del Río, and Los Palacios y Villafranca (Figure 1), and it is approximately bounded by the following WGS 84 coordinates: 37.2602° N, 6.3647° W (northwest) and 36.9962° N, 5.9345° W (southeast). This relatively flat and homogeneous agricultural landscape spans about 350 km2 (roughly 15 × 23 km) and is almost entirely dedicated to flooded rice cultivation, with a total rice field area of approximately 39,635 hectares. Figure 1 shows the spatial distribution of rice plots in the study area.

Figure 1.

Location of Sevilla and spatial distribution of rice plots in the study area.

The Guadalquivir River, the main source of irrigation, flows for about 657 km from its origin in the Sierra de Cazorla (Jaén province) to the Atlantic Ocean at Sanlúcar de Barrameda, passing through Córdoba and Seville. It drains a basin of around 58,000 km2 and is essential for sustaining agriculture in Andalusia. Rice in the Marismas is cultivated under flood irrigation, with water depths ranging from 10 to 20 cm. Despite moderate salinity levels (EC = 2.3 dS m−1) [38], the region’s subtropical Mediterranean climate—characterized by hot, dry summers and mild winters—provides ideal conditions for rice growth [39].

The soils in the study area are primarily clay to silty clay, with a pH between 7.9 and 8.3 and organic matter content ranging from 1.5% to 3.2%. Compared to northern rice-growing regions such as Aragón and Navarre, the Marismas del Guadalquivir achieves higher yields, largely due to the warmer irrigation water, as northern fields are supplied via colder rivers originating in the Pyrenees, an environmental factor that can limit photosynthetic efficiency and reduce crop productivity [38].

2.2. Experimental Setup

The present study focused on eight rice varieties that are widely cultivated and consistently present across the 2021, 2022, and 2024 seasons in the Marismas del Guadalquivir region. The varieties “Puntal”, “Hispalong”, “J Sendra”, “Copsemar7”, “Guadiagrán”, “Bomba”, “Hispagran”, and “Piñana” represent a significant portion of the regional rice production and are commonly sown by local farmers. Table 1 presents the number of parcels and the corresponding cultivated area (in hectares). The final matrix consists of 670,277 rows, 10 bands per year, and as many columns as the number of dates available in each year, for a total of 49,135,100 values. Table 2 presents the number of pixels per variety for the years 2021, 2022, and 2024, as well as for the total study period.

Table 1.

Rice parcels and cultivated area (ha) used in the study across seasons 2021, 2022, and 2024.

Table 2.

Number of pixels per variety in 2021, 2022, and 2024 and the total for the study.

2.3. Satellite Data

This study utilized Sentinel-2 satellite imagery, accessed and processed through Google Earth Engine (GEE), a cloud-based platform designed for large-scale spatial analysis. GEE facilitated the efficient selection, filtering, and export of imagery based on specific spatial and temporal parameters. The dataset was derived from the Sentinel-2 Level 2A product, which provides surface reflectance values corrected for atmospheric interference. Only cloud-free images were acquired over the rice-growing region, within tile 29SQB of the Military Grid Reference System (MGRS), covering the study area across the 2021, 2022, and 2024 rice-growing seasons. Central to data acquisition was the multi-spectral instrument (MSI) aboard the Sentinel-2A satellite. This sensor captures reflected sunlight across 13 spectral bands; however, this study focused on a subset of 10 bands, primarily those with the highest spatial resolutions (10 and 20 m) (Table 3).

Table 3.

Sentinel-2 bands used in the study, with their spatial resolution (m), wavelength (nm), and spectral region.

Only cloud-free Sentinel-2 images were processed for each rice-growing season studied, covering the entire period from sowing to harvest. The selected image acquisition dates were aligned across all years based on days after sowing (DAS) and the corresponding key crop phenological stages, ensuring consistency in crop development monitoring. Detailed download dates and the corresponding days after sowing are summarized in Table 4. All images provide surface reflectance data.

Table 4.

Dates of downloaded Sentinel-2 images across seasons and corresponding days after sowing (DAS) and phenological stage.

2.4. Variety Data

Field boundaries were obtained from the Spanish Cadastre INSPIRE portal [40]. Rice parcel data and varietal information were sourced from official Red de Alerta e Información Fitosanitaria (RAIF) maps provided by the Federación de Arroceros de Sevilla [41] for the 2021, 2022, and 2024 seasons. These maps identified more than 20 cultivated rice varieties and served as the basis for the selection of ground truth data.

2.5. Statistics and Machine Learning Algorithms

The objective of this study was to evaluate the spectral separability of rice varieties using Sentinel-2 satellite imagery. A Principal Component Analysis (PCA) was applied to the dataset, combining days after sowing (DAS) and spectral bands, to identify the most relevant features contributing to varietal discrimination. The analysis was conducted at the pixel level, with each pixel labeled according to its corresponding rice variety, based on the official parcel maps. The spectral reflectance values corresponding to the DAS in Table 4 served as input for the machine learning models used in the classification process.

2.5.1. Principal Component Analysis

Principal Component Analysis (PCA) is a statistical method used to simplify large datasets by reducing their dimensionality while preserving as much of the original information (variance) as possible.

Given a dataset with n samples and p correlated variables $X_1, \dots, X_p$, PCA seeks to transform these into a smaller set of k new uncorrelated variables $Z_1, \dots, Z_k$ (where k ≤ p). These new variables, called principal components, are linear combinations of the original variables:

$$Z_j = a_{j1} X_1 + a_{j2} X_2 + \dots + a_{jp} X_p, \quad j = 1, \dots, k,$$

where the coefficients $a_{jl}$ reflect the weight or contribution of each original variable $X_l$ to the principal component $Z_j$, providing information about their correlations.

Principal components are constructed to be mutually uncorrelated and are ordered by the amount of variance they explain. The first principal component captures the greatest variance, while the last captures the least. By reducing dimensionality, PCA facilitates visualizing large and complex data in two or three dimensions, aiding in the identification of possible clusters or groups within the dataset, in this case groups of pixels with similar characteristics.

In this study, PCA was applied using reflectance values of the bands listed in Table 3 across the following seven days after sowing (DAS): 20, 35, 40, 45, 75, 80, and 90. The purpose was to cluster both DAS and bands simultaneously, identifying patterns of variation over time and spectral variables.
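As an illustration of how principal components arise as linear combinations of the original variables, the following minimal sketch computes them with a singular value decomposition in NumPy. The data are synthetic (500 hypothetical pixels by 10 bands), the helper name `pca` is ours, and the variables are only mean-centered; whether the study also scaled the variables is not stated, so this should be read as an assumption-laden example rather than the exact procedure used.

```python
import numpy as np

def pca(X, k):
    """Return scores, loadings, and explained-variance ratios of the first k components."""
    Xc = X - X.mean(axis=0)                       # center each variable (band)
    # SVD of the centered matrix: rows of Vt are the principal directions,
    # already ordered from largest to smallest singular value.
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = s**2 / np.sum(s**2)               # share of total variance per component
    loadings = Vt[:k]                             # (k, p) weights of each original variable
    scores = Xc @ loadings.T                      # (n, k) coordinates in component space
    return scores, loadings, explained[:k]

# Synthetic stand-in for a pixel-by-band reflectance matrix (assumption, not study data)
rng = np.random.default_rng(0)
latent = rng.normal(size=(500, 2))
X = latent @ rng.normal(size=(2, 10)) + 0.1 * rng.normal(size=(500, 10))

scores, loadings, ratio = pca(X, k=3)
print("explained variance ratio:", np.round(ratio, 3))
```

The rows of `loadings` play the role of the coefficients of each linear combination, so bands with large absolute loadings on the leading components are the ones that contribute most to the variance captured.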

2.5.2. Machine Learning Models

Different machine learning models were applied to classify pixels according to rice varieties. Training and validation were performed using combined data from the 2021 and 2024 seasons, with an 80/20 split for training and validation, respectively. All pixels from the 2022 season were reserved exclusively for testing. Stratified random sampling was employed during the training and validation data selection to ensure the balanced representation of all varieties and avoid bias.

For classification, four supervised learning algorithms were employed: k-nearest neighbors (k-NN), extreme gradient boosting (XGBoost), random forest (RF), and logistic regression (LR). The selected algorithms have been widely applied in comparable classification studies (Table 5). Models were trained with standard hyperparameters widely used in the literature. Random forest and XGBoost employed 100 estimators, KNN used 5 neighbors, and logistic regression was run with the lbfgs solver and 1000 maximum iterations.
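The split and hyperparameters described above can be sketched with scikit-learn as follows. The feature matrix and labels are synthetic placeholders (800 hypothetical pixels, 10 features, 8 classes), the random seeds are arbitrary, and all remaining settings are left at library defaults, which is an assumption since the text reports only the values listed above.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Synthetic stand-in for the pooled 2021 + 2024 pixels (assumption, not study data)
rng = np.random.default_rng(42)
y = np.repeat(np.arange(8), 100)                   # 8 variety labels, 100 pixels each
X = rng.normal(size=(800, 10)) + 0.5 * y[:, None]  # 10 reflectance-like features

# 80/20 stratified split into training and validation sets
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# Hyperparameters as reported in the text; everything else is a library default
models = {
    "RF": RandomForestClassifier(n_estimators=100, random_state=42),
    "k-NN": KNeighborsClassifier(n_neighbors=5),
    "LR": LogisticRegression(solver="lbfgs", max_iter=1000),
}

val_accuracy = {
    name: model.fit(X_train, y_train).score(X_val, y_val)
    for name, model in models.items()
}
print(val_accuracy)
```

XGBoost is omitted here only to keep the sketch dependent on a single library; `xgboost.XGBClassifier(n_estimators=100)` could be added to the dictionary in the same way. In the study design, the held-out 2022 pixels would then be passed to `score` or `predict` as the independent test set.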

Table 5.

Representative studies using machine learning algorithms for the classification of different crops in multiple countries.

k-Nearest Neighbors (k-NN)

Cover and Hart [48] introduced the nearest-neighbor classification rule, which assigns a class label to an unknown sample, $x$, based on the class of its closest training sample, $x'$, in a metric space.

The k-NN algorithm assigns a class to a data point based on the majority class among its k-closest neighbors in the feature space. k-NN does not require explicit training; instead, it stores the entire training dataset, and when a new instance needs to be classified, the algorithm finds the k-nearest neighbors (with the smallest distances) and then assigns the class that is most frequent among them.

A small value of k (e.g., 1 or 3) makes the algorithm sensitive to noise and outliers. A large value of k may include too many irrelevant points and may reduce accuracy [49].
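The majority-vote rule can be written in a few lines of plain Python. This is a minimal sketch using Euclidean distance and made-up two-band reflectance values for two hypothetical varieties, "A" and "B"; the function name `knn_predict` is ours, and the distance metric used in the study is not stated, so Euclidean distance is an assumption.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, x, k=5):
    """Assign x the most frequent label among its k nearest training samples."""
    # Pair every training sample's distance to x with its label, nearest first
    distances = sorted(
        (math.dist(sample, x), label) for sample, label in zip(train_X, train_y)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical (band 1, band 2) reflectance pairs for two varieties
train_X = [(0.10, 0.40), (0.12, 0.42), (0.11, 0.38),
           (0.30, 0.20), (0.32, 0.22), (0.29, 0.18)]
train_y = ["A", "A", "A", "B", "B", "B"]

print(knn_predict(train_X, train_y, (0.13, 0.39), k=3))
```

Because no model is fitted, all the cost falls on prediction time: every new pixel is compared with every stored training pixel.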

Extreme Gradient Boosting (XGBoost)

XGBoost is an ensemble machine learning algorithm that extends the gradient boosting framework by building a series of decision trees sequentially for classification and regression tasks [50]. Gradient boosting iteratively fits new models to the residual errors of prior models, refining predictions over time.
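The idea of fitting each new model to the residual errors of the previous ones can be illustrated with a deliberately simple sketch: plain gradient boosting for squared-error regression on one feature, using one-split decision stumps. This is not XGBoost itself, which adds regularization and other refinements; the function names, the learning rate, and the synthetic data are all assumptions chosen for illustration.

```python
import numpy as np

def fit_stump(x, residual):
    """Find the single threshold on x that best fits the residuals (squared error)."""
    best = None
    for t in np.unique(x)[:-1]:                    # every split leaving both sides non-empty
        left, right = residual[x <= t], residual[x > t]
        err = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or err < best[0]:
            best = (err, t, left.mean(), right.mean())
    _, t, left_value, right_value = best
    return lambda q: np.where(q <= t, left_value, right_value)

def gradient_boost(x, y, n_rounds=50, learning_rate=0.1):
    """Sequentially add stumps, each fitted to the current residuals."""
    base = y.mean()
    pred = np.full(len(y), base)
    stumps = []
    for _ in range(n_rounds):
        residual = y - pred                        # negative gradient of the squared loss
        stump = fit_stump(x, residual)
        stumps.append(stump)
        pred = pred + learning_rate * stump(x)     # small corrective step
    return lambda q: base + learning_rate * sum(s(q) for s in stumps)

# Synthetic one-dimensional example (assumption, not study data)
x = np.linspace(0.0, 1.0, 100)
y = np.sin(2 * np.pi * x)
model = gradient_boost(x, y)

baseline_mse = np.mean((y - y.mean()) ** 2)
final_mse = np.mean((y - model(x)) ** 2)
print(f"MSE of mean predictor: {baseline_mse:.3f}, after boosting: {final_mse:.3f}")
```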

Random Forest (RF)

RF is an ensemble learning algorithm introduced by Leo Breiman in 2001 [51]. It combines two key concepts: bootstrap aggregating (bagging), which Breiman had introduced earlier in 1996 [52], and random feature selection at each node split, a technique influenced by the work of Amit and Geman [53] in image recognition. In RF, multiple decision trees are built using random subsets of the training data (via bootstrapping) and random subsets of features, which introduces diversity among the trees. The final prediction is made by aggregating the outputs of all trees, through majority voting in classification tasks.
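The three ingredients named above (bootstrap samples, random feature subsets at each split, and majority voting) can be sketched by hand on top of scikit-learn decision trees. The helper names, the number of trees, and the synthetic three-class data are assumptions for illustration; note also that scikit-learn's own `RandomForestClassifier` combines trees by averaging their predicted class probabilities rather than by the hard vote shown here.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier

def fit_forest(X, y, n_trees=25, seed=0):
    """Train n_trees decision trees, each on its own bootstrap sample."""
    rng = np.random.default_rng(seed)
    trees = []
    for _ in range(n_trees):
        idx = rng.integers(0, len(X), size=len(X))       # sample rows with replacement
        tree = DecisionTreeClassifier(
            max_features="sqrt",                          # random feature subset per split
            random_state=int(rng.integers(1 << 31)),
        )
        trees.append(tree.fit(X[idx], y[idx]))
    return trees

def predict_forest(trees, X):
    """Majority vote over the class predicted by each tree."""
    votes = np.stack([tree.predict(X) for tree in trees])  # (n_trees, n_samples)
    return np.array([np.bincount(col).argmax() for col in votes.T])

# Synthetic three-class data with integer labels 0..2 (assumption, not study data)
rng = np.random.default_rng(1)
y = np.repeat(np.arange(3), 50)
X = rng.normal(size=(150, 4)) + 3.0 * y[:, None]

trees = fit_forest(X, y)
pred = predict_forest(trees, X)
print("training accuracy:", (pred == y).mean())
```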

Logistic Regression (LR)

LR is a statistical classification method originally developed for binary outcomes, with roots tracing back to the early 20th century in biological studies. It models the probability of a binary response by applying the logistic (sigmoid) function to a linear combination of input features. The method was formalized through the work of David Cox in 1958 [54], who framed it within the context of generalized linear models.

LR estimates parameters by maximizing the likelihood of the observed data, via iterative optimization techniques like gradient descent. It remains effective for linearly separable classes and offers interpretable coefficients representing the effect of each predictor.
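For the binary case, likelihood maximization by gradient descent can be sketched in NumPy as below. The learning rate, iteration count, and synthetic two-class data are assumptions; the study itself used the lbfgs solver rather than plain gradient descent, and an eight-class problem requires a multinomial (softmax) or one-vs-rest extension that this sketch does not cover.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def add_intercept(X):
    return np.hstack([np.ones((len(X), 1)), X])

def fit_logistic(X, y, learning_rate=0.1, n_iter=2000):
    """Minimize the mean negative log-likelihood by batch gradient descent."""
    Xb = add_intercept(X)
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        p = sigmoid(Xb @ w)                  # predicted probability of class 1
        grad = Xb.T @ (p - y) / len(y)       # gradient of the mean negative log-likelihood
        w -= learning_rate * grad
    return w

def predict_proba(w, X):
    return sigmoid(add_intercept(X) @ w)

# Two synthetic, roughly linearly separable classes (assumption, not study data)
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-1.5, 1.0, size=(100, 2)),
               rng.normal(+1.5, 1.0, size=(100, 2))])
y = np.repeat([0.0, 1.0], 100)

w = fit_logistic(X, y)
acc = np.mean((predict_proba(w, X) >= 0.5) == (y == 1.0))
print("coefficients:", np.round(w, 2), "training accuracy:", acc)
```

Each entry of `w` after the intercept is the change in log-odds per unit change in the corresponding feature, which is what makes the coefficients directly interpretable.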

2.5.3. Performance Evaluation

The confusion matrix is a powerful tool for analyzing the performance of a multi-class classifier [55], since it directly gives the number of correct and incorrect predictions of a classification algorithm. Thus, a confusion matrix, as shown in Figure 2, displays both the correct classifications and the possible errors.

Figure 2.

Confusion matrix of N elements for pairwise comparison.

Particularly for this work, the following applies:

- $C_i$, $C_j$: the different rice varieties.

- $n_{ij}$: the number of samples that truly belong to class $C_i$ and were predicted as class $C_j$.

- $n_{i\cdot}$: total number of samples that truly belong to class $C_i$ (i = 1, 2, …, 8).

- $n_{\cdot j}$: total number of samples that were predicted as class $C_j$ (j = 1, 2, …, 8).

- N: total number of samples.

In general, for each class $C_i$, the correct classifications, called true positives ($TP_i$), is the number of samples correctly classified as belonging to this class, so it is obtained as follows:

$$TP_i = n_{ii}$$

On the other hand, there are two kinds of errors:

- False positives ($FP_i$), which are the samples that have been incorrectly classified as belonging to class $C_i$, that is

$$FP_i = \sum_{j \neq i} n_{ji} = n_{\cdot i} - n_{ii}$$

- False negatives ($FN_i$), which are the samples that have been incorrectly classified as not belonging to class $C_i$; thus,

$$FN_i = \sum_{j \neq i} n_{ij} = n_{i\cdot} - n_{ii}$$

Moreover, there is a fourth type of samples, true negatives ($TN_i$), which are those that do not actually belong to class $C_i$. They are calculated by adding the entries of the submatrix that results from eliminating row and column i in the confusion matrix, that is

$$TN_i = \sum_{j \neq i} \sum_{k \neq i} n_{jk} = N - TP_i - FP_i - FN_i$$

When the values of these four types of samples are known, several commonly used metrics can be generated, as shown in Table 6, to evaluate the performance of the classifier with different evaluation approaches. These metrics must be computed for each class, $C_i$, and then, the model metrics will be the average of them.
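The per-class counts and their averaged metrics can be computed directly from a confusion matrix whose rows are true classes and whose columns are predicted classes. The sketch below uses a made-up 3 × 3 matrix and the standard textbook definitions of accuracy, precision, recall, specificity, and F1 score; these are assumed to match the formulas of Table 6, and the function names are ours.

```python
import numpy as np

def per_class_counts(cm):
    """Return TP, FP, FN, TN for every class of a (true x predicted) confusion matrix."""
    cm = np.asarray(cm)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp          # column total minus diagonal
    fn = cm.sum(axis=1) - tp          # row total minus diagonal
    tn = cm.sum() - tp - fp - fn      # everything outside row i and column i
    return tp, fp, fn, tn

def macro_metrics(cm):
    """Compute each metric per class, then average over classes."""
    tp, fp, fn, tn = per_class_counts(cm)
    # Note: a class that is never predicted (tp + fp == 0) would need special handling.
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    per_class = {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
    }
    return {name: float(np.mean(values)) for name, values in per_class.items()}

# Hypothetical 3-class confusion matrix (rows: true class, columns: predicted class)
cm = [[5, 1, 0],
      [2, 6, 2],
      [0, 1, 8]]

tp, fp, fn, tn = per_class_counts(cm)
metrics = macro_metrics(cm)
print(metrics)
```

In a multi-class setting the true negatives of each class are usually numerous, which is why specificity can remain high even when recall is low.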

Table 6.

Definitions and formulas of common classification performance metrics.

2.6. Software

Processing of satellite images obtained from Google Earth Engine (GEE) for Sentinel-2 was carried out using QGIS 3.10.14 software (QGIS, 2025). Statistical analyses and model evaluations were conducted in a Python 3.11.9 environment using Visual Studio Code (Microsoft, 2025). The analysis employed machine learning libraries (Scikit-learn, Pandas, Joblib, and Numpy) for data handling and classification tasks.

3. Results

3.1. PCA Results

To optimize the classification framework, PCA was applied to the joint data matrix as an exploratory analysis to guide the selection of the most informative DAS intervals and spectral bands for the classification of varieties. Rather than analyzing each available date independently, PCA enabled the temporal data to be grouped into two agronomically significant phenological stages, as defined by the Biologische Bundesanstalt, Bundessortenamt und Chemische Industrie (BBCH) scale for rice development [56]. The first group encompassed the tillering phase (35, 40, and 45 days after sowing, DAS; BBCH 21-29), a stage marked by active shoot proliferation. The second group corresponded to the reproductive phase (75, 80, and 90 DAS; BBCH 74-89), during which panicle initiation and flowering occur.

The first three principal components cumulatively accounted for an average of 83.22% of the total variance across both phenological stages analyzed, with the first component explaining, on average, 58.60%, followed by 16.20% and 8.42% for the second and third components, respectively. PCA of DAS showed limited varietal separability in early stages (20 to 45 DAS) due to overlapping clusters, while between 75 and 90 DAS the groups became compact and well separated, confirming this as the optimal window for discrimination. Similarly, PCA of Sentinel-2 bands indicated that near-infrared (B08, B8A) and red-edge bands (B05–B07) contributed most to varietal separability, whereas visible and SWIR bands played a minor role. These results, depicted in Supplementary Figures S1 and S2, combine both the tillering and early reproductive phase (35, 40, and 45 DAS) and the later reproductive phase (75, 80, and 90 DAS). Spectral bands exhibiting the highest loading coefficients within these components were interpreted as the most informative for discriminating varietal differences.

Subsequently, a set of classification scenarios was developed, each defined by a distinct combination of Sentinel-2 bands selected based on their statistical contribution during the tillering and reproductive phases. These scenarios, detailed in Table 7, include spectral subsets from the visible, red-edge, near-infrared (NIR), and shortwave infrared (SWIR) regions. The final selection aimed to optimize the balance between dimensionality reduction and information preservation, thereby enhancing the discriminative power of the spectral inputs used in the subsequent machine learning classification models.

Table 7.

Band combinations used for machine learning models as input features.

3.2. Results of Machine Learning Models

Table 8, Table 9, Table 10, and Table 11 present, respectively, the classification performance of the k-NN, XGBoost, random forest, and logistic regression models across tillering (35–45 DAS) and reproductive (75–90 DAS) stages, evaluated under four spectral band combinations. Due to a lack of space and because no significant differences were observed between the metric values of the different varieties, these values are the average of the metrics obtained for each of the eight varieties. As an example, the Supplementary Materials show the results obtained for Scenario 1 in Supplementary Figures S3–S6.

Table 8.

Test and validation results (accuracy, precision, recall, specificity, and F1-score) of the k-NN model to map rice varieties in the different scenarios and DAS.

Table 9.

Test and validation results (accuracy, precision, recall, specificity, and F1-score) of the XGBoost model to map rice varieties in the different scenarios and DAS.

Table 10.

Test and validation results (accuracy, precision, recall, specificity, and F1-score) of the random forest model to map rice varieties in the different scenarios and DAS.

In Table 8, the consistently superior results observed in Scenario 1 across both phenological stages underscore the importance of leveraging the full Sentinel-2 spectral range. Specifically, validation accuracy reached 0.98 during tillering (35–45 DAS) and improved further to 0.99 during reproduction (75–90 DAS). The corresponding test accuracies were 0.93 and 0.94, indicating that the combined information from visible, red-edge, NIR, and SWIR bands captures complementary physiological traits that discriminate rice varieties effectively.

The gradual decline in classification metrics from Scenario 2 to Scenario 4 illustrates the detrimental impact of spectral band reduction. Scenario 2, which excludes visible bands but retains red-edge, NIR, and SWIR, maintains high validation accuracy (0.98 at both stages) but shows a slight drop in test accuracy to 0.91 during tillering and 0.93 in reproduction. The further restricted spectral inputs in Scenario 3 caused test accuracy to drop to 0.84 at tillering and 0.88 at the reproductive stage.

Scenario 4, limited to SWIR bands, suffered the most substantial performance degradation, with test accuracies of 0.62 and 0.67 during the two stages. Despite high specificity values (>0.94), the low recall (~0.62) indicates that the model frequently failed to identify true positives, reflecting a tendency toward false negatives.

The test accuracies were higher at the reproductive stage than at tillering, by approximately 0.01–0.05 across scenarios, reflecting the increased spectral separability as rice plants mature and physiological differences among varieties intensify.

The results in Table 9, obtained using the XGBoost model under various scenarios, show that Scenario 1, which incorporates the full spectral range, consistently achieves the highest validation and test performance metrics at both phenological stages. Validation accuracy reaches 0.96 during tillering and improves to 0.99 during the reproductive stage, with test accuracy correspondingly increasing from 0.85 to 0.93.

Spectral simplification, reflected in Scenarios 2 to 4, results in progressive declines in model performance. Scenario 2 maintains high validation accuracies (0.96 and 0.98) but exhibits moderate test accuracy reductions to 0.80 at tillering and 0.92 during reproduction.

Scenario 3, limited to red-edge and NIR bands, shows a marked drop in test accuracy to 0.68 during tillering, though performance improves to 0.85 during the reproductive stage. The relatively lower test specificity (0.95 at tillering and notably 0.79 at the reproductive stage) reveals the limitations in accurately distinguishing true negatives, which may reflect spectral ambiguity among certain varieties.

The most constrained spectral input, Scenario 4 (SWIR bands only), yields the poorest classification results, with test accuracies dropping to 0.49 at tillering and 0.60 at reproductive stage. The recall and F1-score values indicate frequent false negatives.

Table 10 shows that the RF model demonstrates strong and stable predictive capabilities, with high validation accuracies exceeding 0.97 across all scenarios and both phenological stages. In the early phenological stage (35–45 DAS), Scenario 1 achieves a validation accuracy of 0.98 and test accuracy of 0.92. These metrics reflect near-perfect agreement beyond chance, implying the model can effectively differentiate rice varieties using comprehensive spectral inputs early in the growing season. Scenario 2 closely mirrors this performance with slightly higher validation accuracy (0.99) and comparable test accuracy (0.91), indicating that excluding visible bands but retaining red-edge, NIR, and SWIR still preserves most discriminative information.

As spectral complexity decreases in Scenarios 3 and 4, model performance declines. Scenario 3 still maintains a high validation accuracy value (0.98) and a test accuracy of 0.84. Scenario 4 (SWIR only) experiences a more pronounced drop, with test accuracy falling to 0.73.

During the reproductive stage, all scenarios show improved test accuracies relative to the tillering stage. Scenario 1 and Scenario 2 both attain a test accuracy of 0.94. Scenario 3 shows a meaningful test accuracy increase to 0.89, while Scenario 4 remains the least accurate.

The results of the LR model in Table 11 show inferior classification performance compared to the ensemble (RF and XGBoost) and instance-based (k-NN) classifiers analyzed previously. In the early phenological stage (35–45 DAS), validation accuracies range from 0.60 in Scenario 1 down to 0.33 in Scenario 4, with corresponding test accuracies declining further to as low as 0.16. Moreover, the results reveal that the LR model’s predictive agreement is barely above chance levels, reflecting substantial misclassification and model instability. This is evident in the low values obtained for precision, recall, and F1-score across scenarios, all of which fall below 0.60, particularly in the test sets.

Table 11.

Test and validation results (accuracy, precision, recall, specificity, and F1-score) of the logistic regression model to map rice varieties in the different scenarios and DAS.

During the reproductive stage, the LR model’s performance improves moderately. Validation accuracies peak at 0.75 for Scenario 1 but decline to 0.42 for Scenario 4, while test accuracies are notably lower, with the highest reaching only 0.48.

Interestingly, despite relatively high specificity values (ranging from 0.88 to 0.96 across all scenarios), the low precision and recall values indicate that the LR model predominantly favors negative class predictions, leading to numerous false negatives and a failure to reliably detect true positive class instances.

Figure 3 compares model accuracies across four spectral scenarios and two growing stages for predicting rice varieties. RF and k-NN achieved the highest accuracies, particularly with full spectral input (visible–red-edge–NIR–SWIR) during the reproductive phase. RF is stable across all spectral combinations. Accuracy fell with reduced spectral bands, and a significant drop was observed for the LR model. Overall, performance improved with richer spectral data and later phenological stages.

Figure 3.

Comparative analysis of RF, K-NN, XGBoost, and LR for rice variety mapping using Sentinel-2 spectral bands across tillering and reproductive stages.

Figure 4 illustrates the spatial distribution of RF model predictions with Scenario 1 at the pixel level for the eight rice varieties. Green points indicate correct predictions, while red points highlight misclassified pixels. The model shows high pixel-level accuracy for varieties such as “Puntal”, “Bomba”, and “Piñana”, for which nearly all pixels are correctly identified, and misclassifications are minimal and mostly confined to parcel edges. In contrast, varieties like “Guadiagrán”, “Cosepmar 7”, and “Hispagrán” exhibit more frequent errors. The overall spatial consistency across most varieties highlights the RF model’s potential for reliable pixel-based varietal classification.

Figure 4.

Geospatial assessment of pixel-level prediction accuracy by variety: test of Scenario 1 using the RF model trained on 2021 and 2024 data and evaluated on independent 2022 parcels across the eight common rice varieties.
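The per-variety reading of Figure 4 corresponds to a simple tally of correct and incorrect pixels for each true variety. A minimal sketch is given below. The label lists are invented for illustration and do not reproduce the study's predictions, and `per_variety_accuracy` is a hypothetical helper name.

```python
from collections import defaultdict


def per_variety_accuracy(true_labels, predicted_labels):
    """Share of correctly predicted pixels for each true variety."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, predicted in zip(true_labels, predicted_labels):
        total[truth] += 1
        if truth == predicted:
            correct[truth] += 1  # would be drawn as a green point
        # otherwise the pixel would be drawn as a red point
    return {variety: correct[variety] / total[variety] for variety in total}


# Invented pixel labels for two of the varieties named in the text.
true_labels = ["Puntal"] * 4 + ["Hispagrán"] * 4
predicted_labels = [
    "Puntal", "Puntal", "Puntal", "Puntal",
    "Hispagrán", "Puntal", "Hispagrán", "Guadiagrán",
]
accuracy = per_variety_accuracy(true_labels, predicted_labels)
print(accuracy)  # {'Puntal': 1.0, 'Hispagrán': 0.5}
```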

4. Discussion

4.1. Model Performance Comparison

The comparative analysis of the four supervised machine learning classifiers revealed a clear performance hierarchy in the task of classifying rice varieties using Sentinel-2 time-series data. As shown in Figure 2, RF emerged as the top-performing model across all configurations, followed by k-NN, XGBoost, and finally LR.

RF achieved the highest accuracies in predicting rice varieties. RF relies on an ensemble approach that constructs multiple decision trees from random subsets of features and samples, thereby reducing overfitting and improving generalization [57]. RF also copes well with high-dimensional data and multicollinearity, both of which are intrinsic to multispectral satellite imagery. A similar dominance of RF has been reported in land-cover studies; for instance, RF yielded overall accuracies up to 96.3% and demonstrated higher stability than XGBoost, even when trained on radar-optical fused inputs [58].
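The ensemble idea described above (bootstrap samples, random feature choice, majority vote) can be sketched in a few lines. The example below is a deliberate simplification and not the configuration used in this study: each tree is reduced to a depth-1 split on a single randomly chosen feature, the data are invented two-class reflectance-like values, and all function names are hypothetical.

```python
import random
from collections import Counter


def fit_stump(X, y, feature):
    """Depth-1 tree on one feature: keep the threshold with most training hits."""
    values = sorted(set(row[feature] for row in X))
    best = None
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2
        left = [label for row, label in zip(X, y) if row[feature] <= threshold]
        right = [label for row, label in zip(X, y) if row[feature] > threshold]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0]
        hits = left.count(left_label) + right.count(right_label)
        if best is None or hits > best[0]:
            best = (hits, threshold, left_label, right_label)
    if best is None:  # the feature is constant in this sample
        majority = Counter(y).most_common(1)[0][0]
        return (feature, float("inf"), majority, majority)
    return (feature, best[1], best[2], best[3])


def fit_forest(X, y, n_trees=25, seed=0):
    """Train each stump on a bootstrap sample and one random feature."""
    rng = random.Random(seed)
    n_samples, n_features = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        indices = [rng.randrange(n_samples) for _ in range(n_samples)]  # bootstrap
        feature = rng.randrange(n_features)                            # random feature
        forest.append(
            fit_stump([X[i] for i in indices], [y[i] for i in indices], feature)
        )
    return forest


def predict(forest, row):
    """Majority vote over all stumps."""
    votes = Counter(
        left if row[feature] <= threshold else right
        for feature, threshold, left, right in forest
    )
    return votes.most_common(1)[0][0]


# Invented, well-separated data: three features per sample, two classes.
X = [[0.10 + 0.01 * i, 0.12 + 0.01 * i, 0.15 + 0.01 * i] for i in range(10)]
X += [[0.50 + 0.01 * i, 0.52 + 0.01 * i, 0.55 + 0.01 * i] for i in range(10)]
y = ["A"] * 10 + ["B"] * 10

forest = fit_forest(X, y)
print(predict(forest, [0.12, 0.14, 0.17]), predict(forest, [0.55, 0.57, 0.60]))
```

Because every tree sees a different sample and feature, an error made by one tree is unlikely to be shared by the majority, which is the intuition behind the reduced overfitting noted above.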

Despite its sensitivity to high-dimensional data, k-NN demonstrated solid classification accuracy, likely attributable to the discriminative structure of the selected spectral and temporal features. Such patterns were also noted by Asgari & Hasanlou [59], in whose work k-NN achieved an overall accuracy of 90% in crop-type mapping using vegetation indices derived from Sentinel-2, outperforming gradient boosting (GB) under similar input conditions.
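As a reminder of why k-NN depends on the discriminative structure of the feature space, the sketch below classifies a sample by majority vote among its nearest training samples in Euclidean distance. The three-value feature vectors, the variety labels and the choice of k = 3 are invented for illustration and are not the study's data or settings.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Label of the majority among the k training samples closest to query."""
    distances = sorted(
        (math.dist(row, query), label) for row, label in zip(train_X, train_y)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Invented reflectance-like vectors (for example red-edge, NIR, SWIR).
train_X = [
    [0.20, 0.45, 0.18], [0.22, 0.47, 0.17], [0.21, 0.44, 0.19],
    [0.28, 0.38, 0.25], [0.30, 0.36, 0.26], [0.29, 0.37, 0.24],
]
train_y = ["variety_a"] * 3 + ["variety_b"] * 3

print(knn_predict(train_X, train_y, [0.21, 0.46, 0.18]))  # variety_a
print(knn_predict(train_X, train_y, [0.29, 0.37, 0.25]))  # variety_b
```

When varieties form compact, well-separated clusters in the selected bands, as in this toy case, distance-based voting works well; overlapping clusters degrade it quickly.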

XGBoost, a state-of-the-art boosting method known for its high predictive ability [50], delivered moderate to good performance.

LR yielded the weakest results among all models, which suggests that it is poorly suited to problems characterized by non-linear relationships and subtle spectral distinctions. LR’s assumption of linear decision boundaries and its inability to model feature interactions significantly limited its performance in this study. Our results mirror the conclusions of Zhong et al. [60], who found LR to be ineffective for classifying spectrally similar crop types. By contrast, Militino et al. [61] reported that logistic regression slightly outperformed XGBoost in terms of overall accuracy for the imbalanced binary classification task of burned area detection using multi-temporal satellite imagery, a setting quite different from multi-class varietal discrimination.

This observed performance hierarchy, RF > k-NN > XGBoost > LR, underscores that ensemble-based (RF, XGBoost) and non-parametric (k-NN) models remain the most reliable for remote sensing-based varietal classification compared to simple linear statistical methods (LR).

Finally, while deep learning architectures such as PhenologyNet [62] offer fine-grained phenological classification via phenotypic similarity weighting, our findings show that high accuracy is achievable using classical machine learning methods. When training data is spectrally and temporally diverse and sampling is guided by phenological knowledge, ensemble models remain effective, interpretable, and operationally viable tools for rice variety classification using Sentinel-2 data.

4.2. Spectral Band Combination Analysis

The superior performance of models incorporating NIR and red-edge spectral bands, as observed in this study, is consistent with the physiological and structural relevance of these wavelengths for capturing vegetation biophysical properties. Unlike reflectance in the visible or shortwave infrared (SWIR) bands alone, NIR reflectance is tightly coupled with the leaf area index (LAI) and above-ground biomass, which serve as proxies for plant vigor and developmental stage [63].

Genotypic variability in rice varieties, manifested in tillering capacity, leaf orientation, and canopy density, modulates NIR reflectance patterns [64]. Equally important are the red-edge bands, positioned between the red and NIR regions (approximately 700–740 nm), which are uniquely sensitive to changes in chlorophyll content and photosynthetic activity [65,66]. Reflectance rises steeply across these bands, and the wavelength of maximum slope, known as the red-edge inflection point, shifts in response to biotic stress, nutrient status, and phenological transitions. For instance, the shift from vegetative growth to the reproductive stage (marked by panicle emergence and floral development) can significantly alter chlorophyll distribution and spectral reflectance. Zhang et al. [45] demonstrated that prioritizing NIR and red-edge bands enhances model generalizability and classification precision by minimizing the influence of less informative spectral features. A detailed evaluation of Sentinel-2 and Sentinel-3 data by Imran et al. [67] revealed that red-edge and NIR shoulder-based vegetation indices provide a consistent and scalable means of estimating LAI across spatial and temporal scales in heterogeneous grassland systems.
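The red-edge inflection point mentioned above can be approximated from Sentinel-2 bands by linear interpolation, using the commonly used Sentinel-2 red-edge position index (S2REP). This index is shown only to make the concept concrete; it was not part of the feature set described in this study, which used band reflectances directly. The reflectance values below are invented, and the function name `s2rep` is hypothetical.

```python
def s2rep(b4, b5, b6, b7):
    """Approximate red-edge position (nm) from Sentinel-2 reflectances.

    b4: red (~665 nm), b5: red edge 1 (~705 nm),
    b6: red edge 2 (~740 nm), b7: red edge 3 (~783 nm).
    The position is interpolated between B5 and B6 (35 nm apart) at the
    reflectance halfway between the red minimum and the NIR shoulder.
    """
    if b6 == b5:
        raise ValueError("B5 and B6 are equal; the red-edge slope is undefined")
    midpoint = (b4 + b7) / 2.0
    return 705.0 + 35.0 * (midpoint - b5) / (b6 - b5)


# Invented reflectances for two canopies.
canopy_1 = s2rep(b4=0.05, b5=0.15, b6=0.35, b7=0.45)  # about 722.5 nm
canopy_2 = s2rep(b4=0.05, b5=0.10, b6=0.35, b7=0.45)  # about 726.0 nm
print(round(canopy_1, 1), round(canopy_2, 1))
```

In this toy comparison the second canopy absorbs more strongly at 705 nm, which moves the estimated inflection point toward longer wavelengths, the direction usually associated with higher chlorophyll content.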

4.3. Influence of Phenological Stages

The best results were obtained when models were trained on data from the reproduction stage (DAS 75–90), corresponding to inflorescence emergence and flowering, rather than the tillering stage. During tillering (DAS 35–45), phenotypic divergence among varieties is minimal, likely because of their similar chlorophyll content. In contrast, the reproductive stage is characterized by more pronounced changes. Differences in panicle emergence timing, flag leaf angles, canopy openness, and the onset of senescence produce measurable changes in NIR, RE, and SWIR reflectance [68,69]. These variations may be intensified by varietal responses to flowering stress. Moreover, flowering marks a transition in nutrient dynamics, with nitrogen remobilization and water redistribution within the plant, influencing SWIR signatures.
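Selecting imagery for the two stages amounts to converting each acquisition date to days after sowing (DAS) and checking it against the windows given above (35–45 for tillering, 75–90 for reproduction). The sketch below shows that bookkeeping. The sowing and acquisition dates are invented, and `stage_for` is a hypothetical helper, not part of the study's workflow.

```python
from datetime import date

# DAS windows as stated in the text.
STAGE_WINDOWS = {"tillering": (35, 45), "reproduction": (75, 90)}


def stage_for(sowing, acquisition):
    """Return the stage whose DAS window contains the acquisition date, else None."""
    das = (acquisition - sowing).days
    for stage, (start, end) in STAGE_WINDOWS.items():
        if start <= das <= end:
            return stage
    return None


sowing = date(2022, 5, 15)                  # invented sowing date
print(stage_for(sowing, date(2022, 6, 24)))  # 40 DAS -> tillering
print(stage_for(sowing, date(2022, 7, 9)))   # 55 DAS -> None
print(stage_for(sowing, date(2022, 8, 3)))   # 80 DAS -> reproduction
```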

These findings match those of prior studies [70,71] that identify the flowering stage as optimal for crop classification and yield prediction. They are also supported by Wang et al. [72], who pointed out that UAV-based hyperspectral fluorescence information collected during the flowering stage can enhance VIS-based rice yield estimation models.

5. Conclusions

This study clearly demonstrates the power of combining Sentinel-2 multispectral imagery with machine learning classifiers to accurately discriminate between eight major rice varieties consistently cultivated in Seville. By training models on multi-season data from 2021 and 2024 and testing them on an entirely separate 2022 dataset, we confirm the strong potential for temporal generalization.
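The temporal generalization test described above relies on splitting samples by growing season rather than at random, so that no 2022 pixel influences training. A minimal sketch of such a split follows. The record fields (`year`, `variety`, `bands`) and their values are invented for illustration, and `split_by_season` is a hypothetical helper.

```python
def split_by_season(records, train_years=(2021, 2024), test_years=(2022,)):
    """Split pixel records into train and test sets by growing season."""
    if set(train_years) & set(test_years):
        raise ValueError("train and test seasons must not overlap")
    train = [r for r in records if r["year"] in train_years]
    test = [r for r in records if r["year"] in test_years]
    return train, test


# Invented pixel records.
records = [
    {"year": 2021, "variety": "Puntal", "bands": [0.21, 0.45, 0.18]},
    {"year": 2022, "variety": "Puntal", "bands": [0.22, 0.44, 0.19]},
    {"year": 2024, "variety": "Bomba", "bands": [0.29, 0.37, 0.25]},
    {"year": 2021, "variety": "Bomba", "bands": [0.30, 0.36, 0.26]},
]
train, test = split_by_season(records)
print(len(train), len(test))  # 3 1
```

A random split across all seasons would mix pixels from the same year, and often the same parcels, into both sets, which tends to overstate how well a model transfers to an unseen season.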

PCA was used to guide the selection of the growth stages and spectral bands most relevant for varietal classification. Four machine learning classifiers were analyzed: random forest, XGBoost, K-nearest neighbors, and logistic regression. The comparison revealed a clear performance hierarchy in classifying rice varieties using Sentinel-2 time-series data: RF was the best-performing model across all configurations, followed by k-NN, XGBoost, and finally LR.

In terms of phenological stage, the highest accuracy, 0.94, was achieved during the reproduction phase with the random forest (RF) model, although even during the initial tillering phase, the model maintained excellent accuracy (0.93 and 0.92). This demonstrates that it is possible to perform reliable varietal mapping in the early stages of the growing season. This study also observed the superior performance of models incorporating NIR and red-edge spectral bands compared with models based solely on visible or shortwave infrared (SWIR) bands.

In conclusion, this research underscores the importance of combining phenologically timed spectral data with resilient machine learning frameworks. It not only enables early, accurate varietal mapping but also supports the development of scalable tools for precision agriculture. Our results highlight random forest as the most reliable approach, setting a strong foundation for future in-season variety mapping.

Furthermore, this work can be particularly valuable as a foundational step in the development and optimization of predictive models and algorithms. Accurately classifying rice varieties is a crucial prerequisite before applying models aimed at forecasting crop area, disease outbreaks, or yield estimation. As such, our approach offers a filtering mechanism to strengthen existing prediction models by integrating varietal information upfront. Ultimately, the ability to distinguish between rice varieties enhances the interpretability and applicability of agronomic models, contributing to more reliable and actionable insights for farmers and stakeholders. This, in turn, may indirectly support improved field-level decision-making and better outcomes for the food industry, especially when deploying precision agriculture tools in operational contexts.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/agriculture15171832/s1, Figure S1. Principal component analysis (PCA) of Sentinel-2 data grouped by days after sowing (DAS), showing the distribution of samples across PC1 (58.6%) and PC2 (16.2%); Figure S2. Principal component analysis (PCA) of Sentinel-2 data (Bands), showing the distribution of samples across PC1 (58.6%) and PC2 (16.2%); Figure S3. Confusion matrix of the random forest model for the test set at the tillering phase (Scenario 1); Figure S4. Confusion matrix of the K-nearest neighbor model for the test set at the tillering phase (Scenario 1); Figure S5. Confusion matrix of the XGBoost model for the test set at the tillering phase (Scenario 1); Figure S6. Confusion matrix of the logistic regression model for the test set at the tillering phase (Scenario 1).

Author Contributions

Conceptualization, R.S., A.A.-M., C.R. and A.S.B.; methodology, R.S., C.R., B.R., A.U., K.E.M., A.A.-M. and A.S.B.; software, R.S., K.E.M., B.F. and A.A.-M.; validation, R.S., A.A.-M., K.E.M., B.R., A.U., C.R. and A.S.B.; formal analysis, R.S., A.A.-M. and K.E.M.; investigation, R.S., A.A.-M., C.R., B.R., A.U., B.F. and A.S.B.; resources, C.R., B.R., A.U., B.F. and A.S.B.; data curation, R.S., K.E.M., A.A.-M. and A.S.B.; writing—original draft preparation, R.S., K.E.M., C.R., B.R., A.U., A.A.-M., B.F. and A.S.B.; writing—review and editing, C.R., B.R., A.U. and A.S.B.; visualization, R.S., C.R., B.R., A.U., B.F. and A.S.B.; supervision, R.S., K.E.M., A.A.-M., B.F. and A.S.B.; project administration, A.S.B. and C.R.; funding acquisition, C.R. and A.S.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Algarrsen project SCPP2300C010708XV0 of the State Research Agency of the Ministry of Science, Innovation and Universities.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Biradar, C.M.; Thenkabail, P.S.; Platonov, A.; Xiao, X.M.; Geerken, R.; Noojipady, P.; Turral, H.; Vithanage, J. Water productivity mapping methods using remote sensing. J. Appl. Remote Sens. 2008, 2, 023544.

- Food and Agriculture Organization of the United Nations. About FAO’s Work on Plant Production and Protection; Food and Agriculture Organization of the United Nations: Rome, Italy, 2022; Available online: https://www.fao.org/plant-production-protection/about/en (accessed on 31 May 2025).

- Wolanin, A.; Mateo-García, G.; Camps-Valls, G.; Gómez-Chova, L.; Meroni, M.; Duveiller, G.; Liangzhi, Y.; Guanter, L. Estimating and Understanding Crop Yields with Explainable Deep Learning in the Indian Wheat Belt. Environ. Res. Lett. 2020, 15, 024019.

- Awika, J.M. Major cereal grains production and use around the world. In Advances in Cereal Science: Implications to Food Processing and Health Promotion, 1st ed.; Awika, J.M., Piironen, V., Bean, S., Eds.; American Chemical Society (ACS): Washington, DC, USA, 2011; pp. 1–13.

- Farahzadi, F.; Ebrahimi, A.; Zarrinnia, V.; Azizinezhad, R. Evaluation of Genetic Diversity in Iranian Rice (Oryza sativa) Cultivars for Resistance to Blast Disease Using Microsatellite (SSR) Markers. Agric. Res. 2020, 9, 460–468.

- Zahra, N.; Hafeez, M.B.; Nawaz, A.; Farooq, M. Rice production systems and grain quality. J. Cereal Sci. 2022, 105, 103463.

- Zhao, M.; Lin, Y.; Chen, H. Improving nutritional quality of rice for human health. Theor. Appl. Genet. 2020, 133, 1397–1413.

- Cantrell, R.P.; Reeves, T.G. The rice genome. The cereal of the world’s poor takes center stage. Science 2002, 296, 53.

- Becker-Reshef, I.; Bandaru, V.; Barker, B.; Coutu, S.; Deines, J.M.; Doorn, B.; Eilerts, G.; Franch, B.; Galvez, A.S.; Hosseini, M.; et al. The NASA harvest program on agriculture and food security. In Remote Sensing of Agriculture and Land Cover/Land Use Changes in South and Southeast Asian Countries; Springer International Publishing: Cham, Switzerland, 2022; pp. 53–80.

- UN. Department of Economic and Social Affairs; UNCTAD; UN. ECA; UN. ECE; UN. ECLAC; UN. ESCAP; UN. ESCWA. World Economic Situation and Prospects Executive Summary: 2015; UN: New York, NY, USA, 2025; Available online: https://coilink.org/20.500.12592/5rsl4ww (accessed on 24 June 2025).

- Sanyal, J.; Lu, X.X. Application of Remote Sensing in Flood Management with Special Reference to Monsoon Asia: A Review. Nat. Hazards 2004, 33, 283–301.

- Zhai, Y.; Wang, N.; Zhang, L.; Hao, L.; Hao, C. Automatic crop classification in northeastern China by improved nonlinear dimensionality reduction for satellite image time series. Remote Sens. 2020, 12, 2726.

- Bargiel, D. A new method for crop classification combining time series of radar images and crop phenology information. Remote Sens. Environ. 2017, 198, 369–383.

- Heupel, K.; Spengler, D.; Itzerott, S. A progressive crop-type classification using multitemporal remote sensing data and phenological information. PFG—J. Photogramm. Remote Sens. Geoinf. Sci. 2018, 86, 53–69.

- Bargiel, D.; Herrmann, S.; Lohmann, P.; Sörgel, U. Land use classification with high-resolution satellite radar for estimating the impacts of land use change on the quality of ecosystem services. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2010, 38, 68–73.

- Conrad, C.; Fritsch, S.; Zeidler, J.; Rücker, G.; Dech, S. Per-field irrigated crop classification in arid Central Asia using SPOT and ASTER data. Remote Sens. 2010, 2, 1035–1056.

- Wei, P.; Ye, H.; Qiao, S.; Liu, R.; Nie, C.; Zhang, B.; Song, L.; Huang, S. Early Crop Mapping Based on Sentinel-2 Time-Series Data and the Random Forest Algorithm. Remote Sens. 2023, 15, 3212.

- Wang, Y.; Zhang, Z.; Feng, L.; Ma, Y.; Du, Q. A New Attention-Based CNN Approach for Crop Mapping Using Time Series Sentinel-2 Images. Comput. Electron. Agric. 2021, 184, 106090.

- Feng, S.; Zhao, J.; Liu, T.; Zhang, H.; Zhang, Z.; Guo, X. Crop Type Identification and Mapping Using Machine Learning Algorithms and Sentinel-2 Time Series Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3295–3306.

- Bellón, B.; Bégué, A.; Lo Seen, D.; de Almeida, C.; Simões, M. A Remote Sensing Approach for Regional-Scale Mapping of Agricultural Land-Use Systems Based on NDVI Time Series. Remote Sens. 2017, 9, 600.

- Ranjan, R.; Chopra, U.K.; Sahoo, R.N.; Singh, A.K.; Pradhan, S. Assessment of plant nitrogen stress in wheat (Triticum aestivum L.) through hyperspectral indices. Int. J. Remote Sens. 2012, 33, 6342–6360.

- Agenjos-Moreno, A.; Rubio, C.; Uris, A.; Simeón, R.; Franch, B.; Domingo, C.; Bautista, A.S. Strategy for Monitoring the Blast Incidence in Crops of Bomba Rice Variety Using Remote Sensing Data. Agriculture 2024, 14, 1385.

- Wang, B.; Fan, D. Research progress of deep learning in classification and recognition of remote sensing images. Bull. Surv. Mapp. 2019, 2, 99–102, 136.

- Hatfield, J.L.; Gitelson, A.A.; Schepers, J.S.; Walthall, C.L. Application of Spectral Remote Sensing for Agronomic Decisions. Agron. J. 2008, 100, S-117–S-131.

- Forkuor, G.; Conrad, C.; Thiel, M.; Landmann, T.; Barry, B. Evaluating the sequential masking classification approach for improving crop discrimination in the Sudanian Savanna of West Africa. Comput. Electron. Agric. 2015, 118, 380–389.

- Chen, Y.; Lu, D.; Moran, E.; Batistella, M.; Dutra, L.V.; Sanches, I.D.A.; da Silva, R.F.B.; Huang, J.; Luiz, A.J.B.; de Oliveira, M.A.F. Mapping croplands, cropping patterns, and crop types using MODIS time-series data. Int. J. Appl. Earth Obs. Geoinf. 2018, 69, 133–147.

- Youssef, A.M.; Pourghasemi, H.R.; Pourtaghi, Z.S.; Al-Katheeri, M.M. Landslide susceptibility mapping using random forest, boosted regression tree, classification and regression tree, and general linear models and comparison of their performance at Wadi Tayyah Basin, Asir Region, Saudi Arabia. Landslides 2016, 13, 839–856.

- Hou, H.; Wang, R.; Murayama, Y. Scenario-based modelling for urban sustainability focusing on changes in cropland under rapid urbanization: A case study of Hangzhou from 1990 to 2035. Sci. Total Environ. 2019, 661, 422–431.

- Conrad, C.; Dech, S.; Dubovyk, O.; Fritsch, S.; Klein, D.; Löw, F.; Schorcht, G.; Zeidler, J. Derivation of temporal windows for accurate crop discrimination in heterogeneous croplands of Uzbekistan using multitemporal RapidEye images. Comput. Electron. Agric. 2014, 103, 63–74.

- Wardlow, B.D.; Egbert, S.L.; Kastens, J.H. Analysis of time-series MODIS 250 m vegetation index data for crop classification in the US Central Great Plains. Remote Sens. Environ. 2007, 108, 290–310.

- Simonneaux, V.; Duchemin, B.; Helson, D.; Er-Raki, S.; Olioso, A.; Chehbouni, A. The use of high-resolution image time series for crop classification and evapotranspiration estimate over an irrigated area in Central Morocco. Int. J. Remote Sens. 2008, 29, 95–116.

- Peña-Barragán, J.M.; Ngugi, M.K.; Plant, R.E.; Six, J. Object-Based Crop Identification Using Multiple Vegetation Indices, Textural Features and Crop Phenology. Remote Sens. Environ. 2011, 115, 1301–1316.

- Chang, J.; Hansen, M.C.; Pittman, K.; Carroll, M.; DiMiceli, C. Corn and soybean mapping in the United States using MODIS time-series data sets. Agron. J. 2007, 99, 1654–1664.

- Guo, Y.; Jia, X.; Paull, D. Mapping of rice varieties with Sentinel-2 data via deep CNN learning in spectral and time domains. In Proceedings of the 2018 Digital Image Computing: Techniques and Applications (DICTA), Canberra, Australia, 10–13 December 2018; pp. 1–7.

- Rauf, U.; Qureshi, S.; Jabbar, H.; Zeb, A.; Mirza, A.; Alanazi, E.; Rashid, N. A New Method for Pixel Classification for Rice Variety Identification Using Spectral and Time Series Data from Sentinel-2 Satellite Imagery. Comput. Electron. Agric. 2022, 193, 106731.

- Saadat, M.; Seydi, S.T.; Hasanlou, M.; Homayouni, S. A Convolutional Neural Network Method for Rice Mapping Using Time-Series of Sentinel-1 and Sentinel-2 Imagery. Agriculture 2022, 12, 2083.

- López-Andreu, F.J.; Erena, M.; Dominguez-Gómez, J.A.; López-Morales, J.A. Sentinel-2 Images and Machine Learning as Tool for Monitoring of the Common Agricultural Policy: Calasparra Rice as a Case Study. Agronomy 2021, 11, 621.

- de Barreda, D.G.; Pardo, G.; Osca, J.; Catala-Forner, M.; Consola, S.; Garnica, I.; López-Martínez, N.; Palmerín, J.; Osuna, M. An Overview of Rice Cultivation in Spain and the Management of Herbicide-Resistant Weeds. Agronomy 2021, 11, 1095.

- Fita, D.; Rubio, C.; Franch, B.; Castiñeira-Ibáñez, S.; Tarrazó-Serrano, D.; San Bautista, A. Improving Harvester Yield Maps Postprocessing Leveraging Remote Sensing Data in Rice Crop. Precis. Agric. 2025, 26, 33.

- Dirección General del Catastro. Sede Electrónica del Catastro: Visor cartográfico. Ministerio de Hacienda. 2025. Available online: https://www.sedecatastro.gob.es (accessed on 24 June 2025).

- Federación de Arroceros de Sevilla. Sitio web Oficial de la Federación de Arroceros de Sevilla. 2025. Available online: https://www.federaciondearroceros.es/ (accessed on 24 June 2025).

- Wang, S.; Di Tommaso, S.; Faulkner, J.; Friedel, T.; Kennepohl, A.; Strey, R.; Lobell, D.B. Mapping Crop Types in Southeast India with Smartphone Crowdsourcing and Deep Learning. Remote Sens. 2020, 12, 2957.

- Hudait, M.; Patel, P.P. Crop-type mapping and acreage estimation in smallholding plots using Sentinel-2 images and machine learning algorithms: Some comparisons. Egypt. J. Remote Sens. Space Sci. 2022, 25, 147–156.

- Prins, A.J.; Van Niekerk, A. Crop type mapping using LiDAR, Sentinel-2 and aerial imagery with machine learning algorithms. Geo-Spat. Inf. Sci. 2021, 24, 215–227.

- Zhang, P.; Jia, Y.; Shang, Y. Research and application of XGBoost in imbalanced data. Int. J. Distrib. Sens. Netw. 2022, 18, 15501329221106935.

- Arias, M.; Campo-Bescós, M.Á.; Álvarez-Mozos, J. Crop classification based on temporal signatures of Sentinel-1 observations over Navarre province, Spain. Remote Sens. 2020, 12, 278.

- Rui, X.; Su, S.; Mai, G.; Zhang, Z.; Yang, C. Quantifying determinants of cash crop expansion and their relative effects using logistic regression modeling and variance partitioning. Int. J. Appl. Earth Obs. Geoinf. 2015, 34, 258–263.

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

- Uddin, K.M.M.; Biswas, N.; Rikta, S.T.; Dey, S.K. Machine learning-based diagnosis of breast cancer utilizing feature optimization technique. Comput. Methods Programs Biomed. Updat. 2023, 3, 100098.

- Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140.

- Amit, Y.; Geman, D. Shape quantization and recognition with randomized trees. Neural Comput. 1997, 9, 1545–1588.

- Cox, D.R. The Regression Analysis of Binary Sequences. J. R. Stat. Soc. Ser. B 1958, 20, 215–242.

- Tharwat, A. Classification assessment methods. Appl. Comput. Inform. 2020, 17, 168–192.

- Lancashire, P.D.; Bleiholder, H.; van den Boom, T.; Langelüddeke, P.; Stauss, R.; Weber, E.; Witzenberger, A. A Uniform Decimal Code for Growth Stages of Crops and Weeds. Ann. Appl. Biol. 1991, 119, 561–601.

- Belgiu, M.; Drăguţ, L. Random Forest in Remote Sensing: A Review of Applications and Future Directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Thanh, D.K.; Ngoc, D.L.; Dieu, H.D.; Tran, V.A. Comparison of random forest and extreme gradient boosting algorithms in land cover classification in Van Yen District, Yen Bai Province, Vietnam. J. Hydro-Environ. Res. 2025, 23, 50–59.

- Asgari, S.; Hasanlou, M. A Comparative Study of Machine Learning Classifiers for Crop Type Mapping Using Vegetation Indices. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, X-4/W1-2022, 79–85.

- Zhong, L.H.; Gong, P.; Biging, G.S. Phenology-based crop classification algorithm and its implications on agricultural water use assessments in California’s Central Valley. Photogramm. Eng. Remote Sens. 2012, 78, 799–813.

- Militino, A.F.; Goyena, H.; Pérez-Goya, U.; Ugarte, M.D. Logistic Regression versus XGBoost for Detecting Burned Areas Using Satellite Images. Environ. Ecol. Stat. 2024, 31, 57–77.

- Yang, H.-C.; Zhou, J.-P.; Zheng, C.; Wu, Z.; Li, Y.; Li, L.-G. PhenologyNet: A fine-grained approach for crop-phenology classification fusing convolutional neural network and phenotypic similarity. Comput. Electron. Agric. 2025, 229, 109728.

- Gitelson, A.A.; Viña, A.; Arkebauer, T.J.; Rundquist, D.C.; Keydan, G.; Leavitt, B. Remote estimation of leaf area index and green leaf biomass in maize canopies. Geophys. Res. Lett. 2003, 30.

- Zhang, H.; Duan, Z.; Li, Y.; Zhao, G.; Zhu, S.; Fu, W.; Peng, T.; Zhao, Q.; Svanberg, S.; Hu, J. Vis/NIR Reflectance Spectroscopy for Hybrid Rice Variety Identification and Chlorophyll Content Evaluation for Different Nitrogen Fertilizer Levels. R. Soc. Open Sci. 2019, 6, 191132.

- Frampton, W.J.; Dash, J.; Watmough, G.; Milton, E.J. Evaluating the Capabilities of Sentinel-2 for Quantitative Estimation of Biophysical Variables in Vegetation. ISPRS J. Photogramm. Remote Sens. 2013, 82, 83–92.

- Clevers, J.G.; Gitelson, A.A. Remote estimation of crop and grass chlorophyll and nitrogen content using red-edge bands on Sentinel-2 and -3. Int. J. Appl. Earth Obs. Geoinf. 2013, 23, 344–351.

- Imran, H.A.; Gianelle, D.; Rocchini, D.; Dalponte, M.; Martín, M.P.; Sakowska, K.; Wohlfahrt, G.; Vescovo, L. VIS-NIR, red-edge and NIR-shoulder based normalized vegetation indices response to co-varying leaf and canopy structural traits in heterogeneous grasslands. Remote Sens. 2020, 12, 2254.

- Xie, Q.; Dash, J.; Huete, A.; Jiang, A.; Yin, G.; Ding, Y.; Peng, D.; Hall, C.C.; Brown, L.; Shi, Y.; et al. Retrieval of crop biophysical parameters from Sentinel-2 remote sensing imagery. Int. J. Appl. Earth Obs. Geoinf. 2019, 80, 187–195.

- Yang, J.; Jiang, J.; Fu, Z.; Wang, W.; Cao, Q.; Tian, Y.; Zhu, Y.; Cao, W.; Liu, X. Integrating phenology information with UAV multispectral data for rice nitrogen nutrition diagnosis. Eur. J. Agron. 2025, 169, 127696.

- Qiu, B.; Yu, L.; Yang, P.; Wu, W.; Chen, J.; Zhu, X.; Duan, M. Mapping Upland Crop–Rice Cropping Systems for Targeted Sustainable Intensification in South China. Crop J. 2024, 12, 614–629.

- Son, N.T.; Chen, C.F.; Chen, C.R.; Sobue, S.I.; Chiang, S.H.; Maung, T.H.; Chang, L.Y. Delineating and predicting changes in rice cropping systems using multi-temporal MODIS data in Myanmar. J. Spat. Sci. 2017, 62, 235–259.

- Wang, F.M.; Yi, Q.X.; Hu, J.H.; Xie, L.L.; Yao, X.P.; Xu, T.Y.; Zheng, J.Y. Combining spectral and textural information in UAV hyperspectral images to estimate rice grain yield. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102397.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).