PCC-YOLO: A Fruit Tree Trunk Recognition Algorithm Based on YOLOv8

Abstract

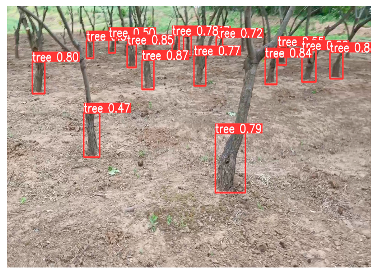

1. Introduction

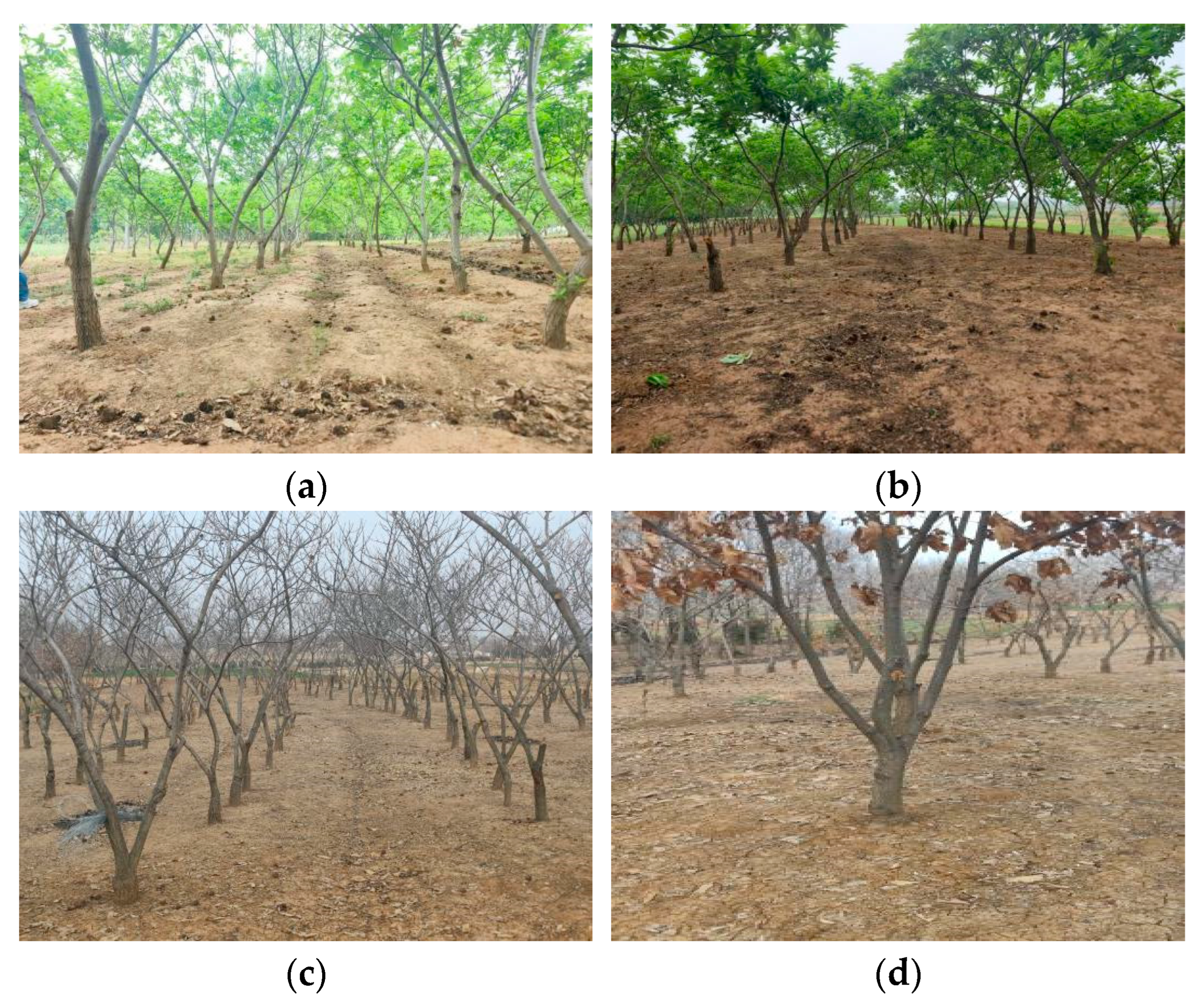

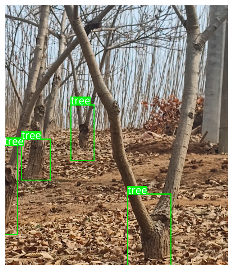

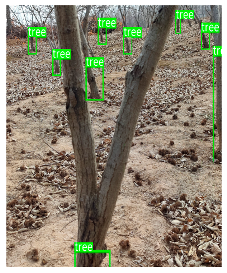

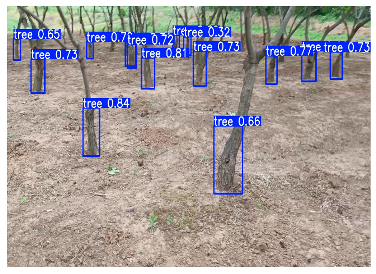

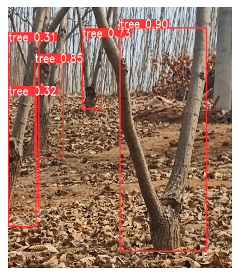

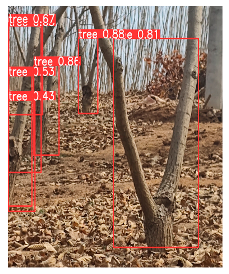

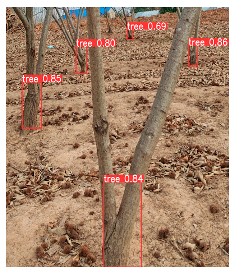

- A comprehensive, realistic orchard dataset was built. To help the model generalize, the dataset covers two seasons (winter and spring), diverse lighting conditions, and fruit trees of different sizes (large, medium, and small).

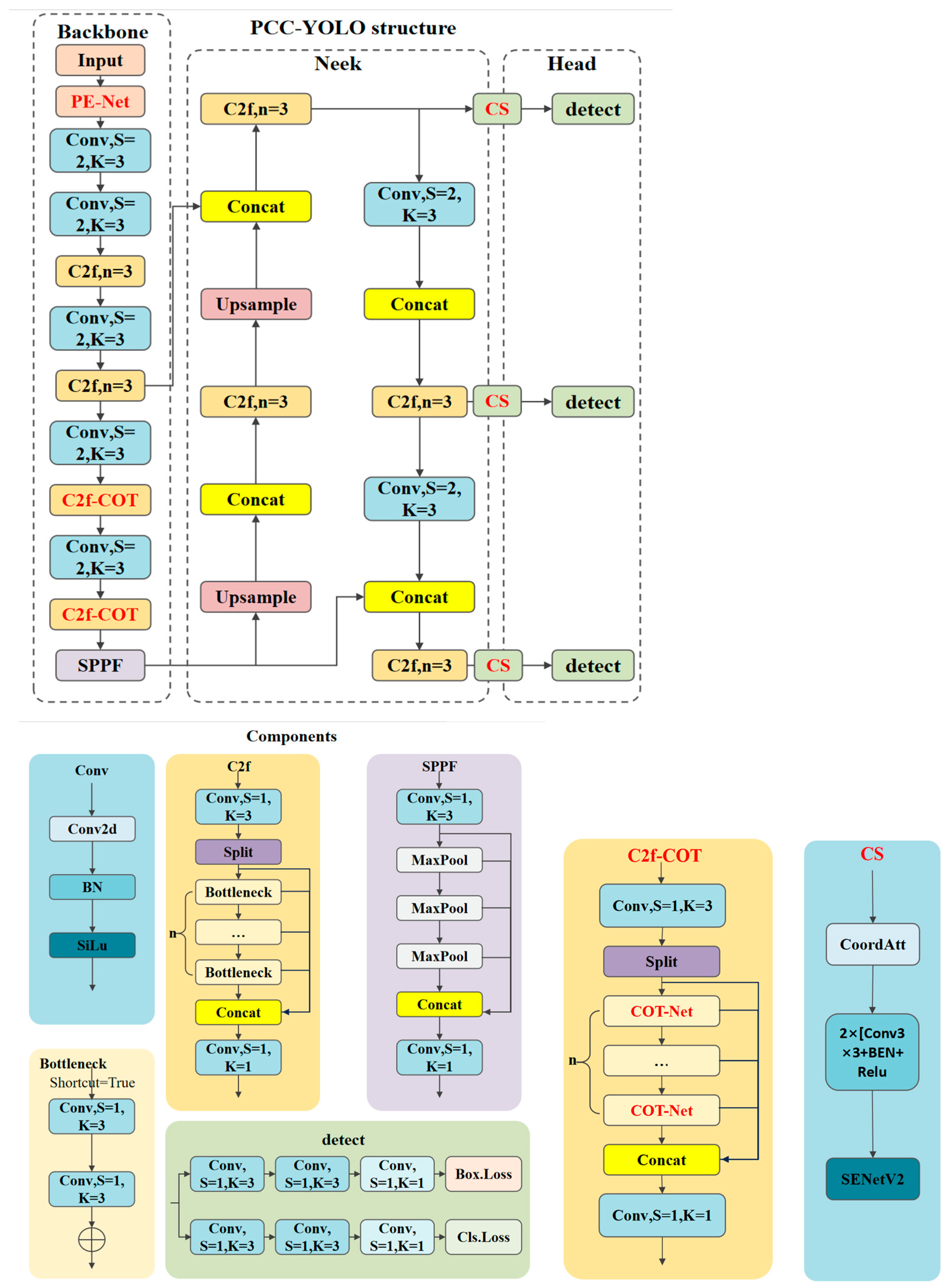

- A multi-module enhancement strategy was proposed to improve several performance metrics at once. To counter low contrast and complex lighting variations, the PENet and CoT-Net modules were integrated into YOLOv8, and a new Coord-SE module was proposed to improve robustness while keeping the architecture lightweight.

- Conventional LiDAR-based methods for fruit tree identification are expensive. This study presents a low-cost, lightweight, and robust vision-based method for fruit tree trunk detection by orchard robots that remains reliable under occlusion and changing lighting conditions.
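The name Coord-SE suggests a combination of coordinate attention (Hou et al.) with squeeze-and-excitation (SE) channel attention. How the authors actually join the two is not described in this section. The pure-Python sketch below therefore shows only the two standard ingredients on a tiny channel-first feature map. The function names, the bias-free fully connected layers, and the nested-list layout are illustrative assumptions, not the PCC-YOLO implementation.

```python
import math
from typing import List, Tuple

# Feature map stored channel-first as nested lists: fmap[c][h][w] (illustrative layout).
FeatureMap = List[List[List[float]]]


def _sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def _matvec(weights: List[List[float]], vec: List[float]) -> List[float]:
    # Fully connected layer without bias: one output per weight row.
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def se_attention(fmap: FeatureMap, w1: List[List[float]], w2: List[List[float]]) -> FeatureMap:
    """Squeeze-and-excitation channel attention (bias terms omitted for brevity)."""
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in fmap]
    # Excitation: FC (C -> C/r), ReLU, FC (C/r -> C), then a sigmoid gate in (0, 1).
    hidden = [max(0.0, v) for v in _matvec(w1, squeezed)]
    gates = [_sigmoid(v) for v in _matvec(w2, hidden)]
    # Scale: every value in channel c is multiplied by gates[c].
    return [[[v * gates[c] for v in row] for row in ch] for c, ch in enumerate(fmap)]


def coordinate_pooling(channel: List[List[float]]) -> Tuple[List[float], List[float]]:
    """Directional pooling used by coordinate attention, for one channel.

    Averaging along the width keeps one value per row (height position), and
    averaging along the height keeps one value per column (width position), so
    the attention derived from them can retain where along each axis a trunk lies.
    """
    per_row = [sum(row) / len(row) for row in channel]
    per_col = [sum(col) / len(col) for col in zip(*channel)]
    return per_row, per_col
```

For a two-channel map whose channels average 1.0 and 2.0, `se_attention(fmap, [[1.0, 0.0]], [[1.0], [-1.0]])` scales the first channel by sigmoid(1) ≈ 0.73 and the second by sigmoid(-1) ≈ 0.27; in a real network `w1` and `w2` are learned during training.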

2. Materials and Methods

2.1. Experimental Data

2.2. Improved YOLOv8 Algorithm

2.2.1. Model Selection

2.2.2. Model Construction

2.2.3. Element Replacement

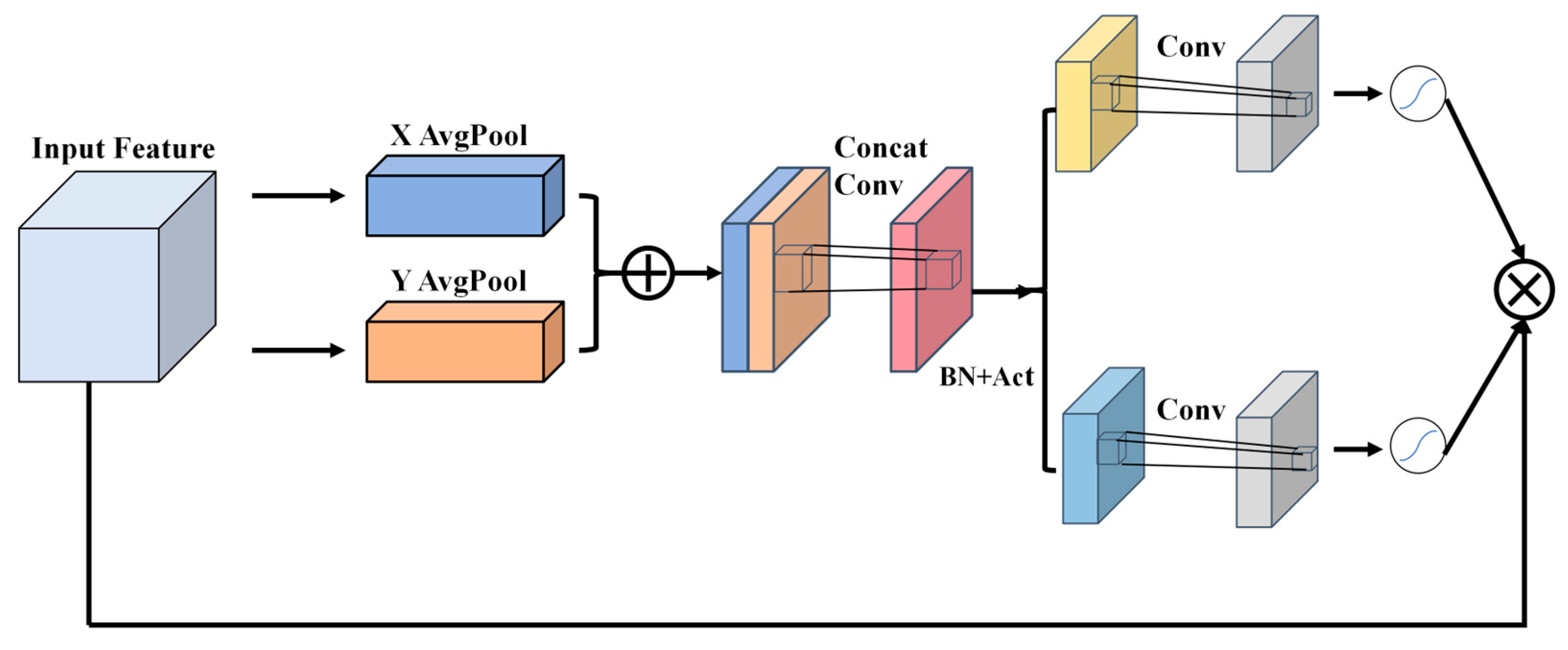

2.2.4. Unit Optimization

2.2.5. PENet Preprocessing

2.3. Experimental Platform and Parameter Settings

2.3.1. Experimental Platform

2.3.2. Parameter Settings

- Number of training epochs: 300.

- Initial learning rate: 0.001.

- Momentum: 0.95.

- Weight decay: 0.0004.

- Optimizer: SGD.

- Image size: 640 × 640.

- Dataset caching: enabled (true).
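The settings above correspond to arguments of the `train()` method in the Ultralytics package, which distributes YOLOv8. That the authors trained with this package is an assumption (the caching flag suggests it, but the text does not say so). The sketch records the listed values as a keyword dictionary; the dataset file `orchard_trunks.yaml`, the `train_baseline` helper, and the use of pretrained `yolov8n.pt` weights are hypothetical placeholders, and the PCC-YOLO modules themselves would need a custom model definition that is not shown.

```python
from typing import Any, Dict

# Hyperparameters from Section 2.3.2, keyed by Ultralytics train() argument names.
TRAIN_ARGS: Dict[str, Any] = {
    "epochs": 300,           # number of training epochs
    "lr0": 0.001,            # initial learning rate
    "momentum": 0.95,        # SGD momentum
    "weight_decay": 0.0004,  # weight decay
    "optimizer": "SGD",      # explicit choice; with "auto", Ultralytics picks lr0/momentum itself
    "imgsz": 640,            # square 640 x 640 input
    "cache": True,           # dataset caching enabled
}


def train_baseline(data_yaml: str = "orchard_trunks.yaml"):
    """Hypothetical launcher that trains a stock YOLOv8n with the settings above.

    Assumes the ultralytics package is installed; 'orchard_trunks.yaml' is a
    placeholder dataset config and 'yolov8n.pt' stands in for the baseline model.
    """
    from ultralytics import YOLO  # imported lazily so this file loads without ultralytics

    model = YOLO("yolov8n.pt")
    return model.train(data=data_yaml, **TRAIN_ARGS)
```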

2.3.3. Evaluation Criteria for Tree Trunk Recognition

3. Results

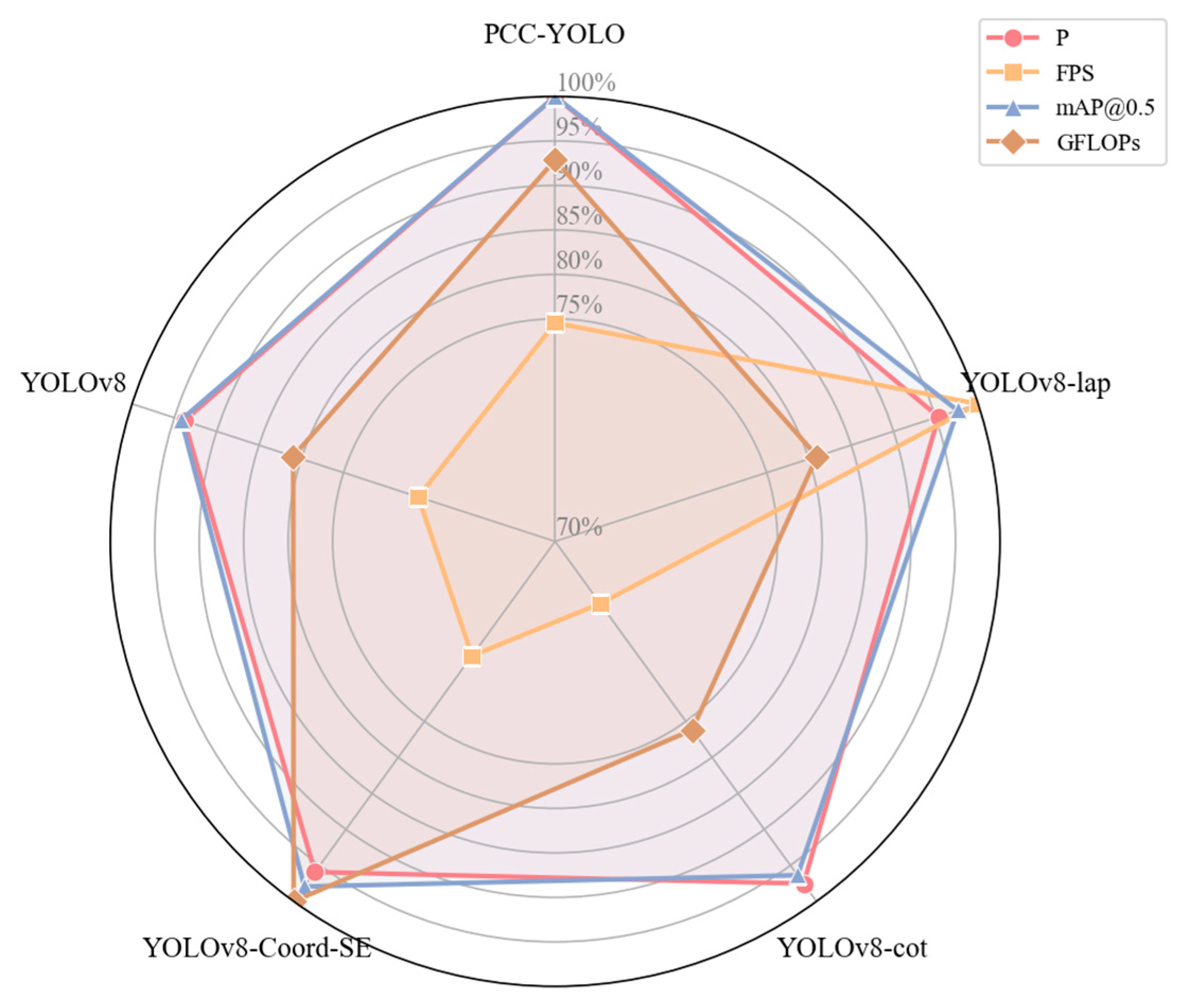

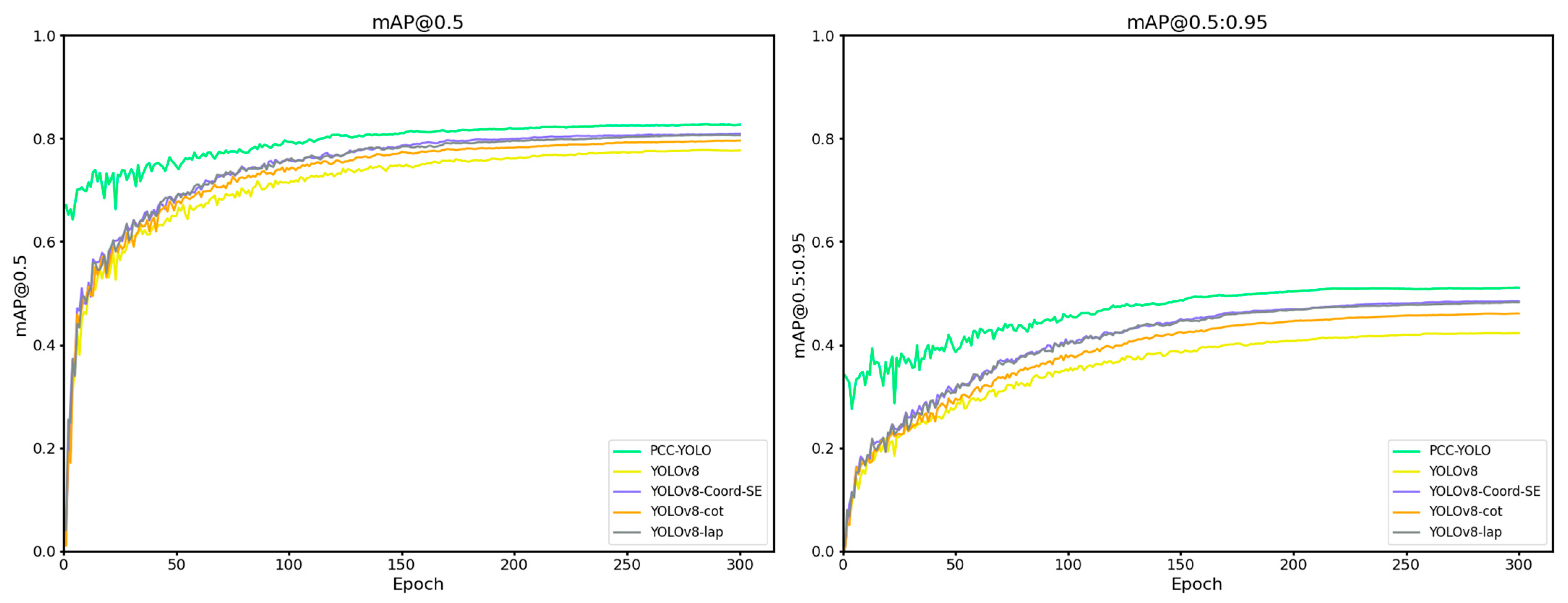

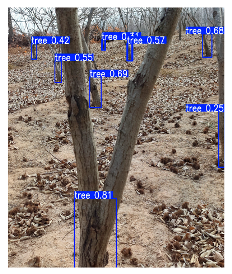

3.1. Comparison of Different Network Models

3.2. Comparison of Different Attention Mechanisms

3.3. CoT-Net Effectiveness Validation

3.4. Ablation Experiment

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | P | FPS (frames/s) | mAP@0.5 | GFLOPs | MSE (px²) | RMSE (px) |
|---|---|---|---|---|---|---|
| YOLOv5s | 0.820 | 85.19 | 0.724 | 18.9 | 12.20 | 3.39 |
| YOLOv6 | 0.750 | 132.56 | 0.772 | 11.8 | 11.10 | 3.34 |
| YOLOv8n | 0.760 | 127.27 | 0.778 | 6.8 | 11.20 | 3.34 |
| PCC-YOLO | 0.810 | 143.36 | 0.826 | 7.8 | 5.02 | 2.24 |
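The columns in the table above are commonly computed as follows. Precision is P = TP / (TP + FP), where a prediction counts as a true positive when its IoU with a not-yet-matched ground-truth box is at least 0.5 (the threshold behind mAP@0.5). The minimal sketch below assumes that MSE is the mean squared distance, in pixels, between predicted and ground-truth box centres; the paper's exact error definition is not given here, and the helper names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_at_iou(preds: Sequence[Box], gts: Sequence[Box], thr: float = 0.5) -> float:
    """P = TP / (TP + FP); each ground-truth box can be matched at most once."""
    unmatched: List[Box] = list(gts)
    tp = 0
    for p in preds:  # assumes preds are already sorted by descending confidence
        best = max(unmatched, key=lambda g: iou(p, g), default=None)
        if best is not None and iou(p, best) >= thr:
            tp += 1
            unmatched.remove(best)
    return tp / len(preds) if preds else 0.0


def centre_errors(preds: Sequence[Box], gts: Sequence[Box]) -> Tuple[float, float]:
    """MSE (px^2) and RMSE (px) of centre-point distance for already-paired boxes."""
    sq = []
    for p, g in zip(preds, gts):
        dx = (p[0] + p[2]) / 2 - (g[0] + g[2]) / 2
        dy = (p[1] + p[3]) / 2 - (g[1] + g[3]) / 2
        sq.append(dx * dx + dy * dy)
    if not sq:
        raise ValueError("need at least one matched box pair")
    mse = sum(sq) / len(sq)
    return mse, math.sqrt(mse)
```

If trunks are the only class, mAP@0.5 reduces to the average precision (area under the precision-recall curve) at this IoU threshold, which additionally requires sweeping the confidence threshold and is omitted from the sketch. As a consistency check on the table, sqrt(5.02) ≈ 2.24, so PCC-YOLO's RMSE is the square root of its MSE.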

[Figure: detection results for scenes A to D (columns), comparing the ground truth with PCC-YOLO, YOLOv8n, YOLOv6, and YOLOv5s (rows).]

| Model | P | mAP@0.5 | FPS (frames/s) | MSE (px²) | RMSE (px) |
|---|---|---|---|---|---|
| YOLOv8 | 0.760 | 0.778 | 127.27 | 11.2 | 3.34 |
| YOLOv8-SENetV2 | 0.733 | 0.751 | 117.39 | 14.3 | 3.79 |
| YOLOv8-CGA | 0.753 | 0.763 | 112.77 | 12.7 | 3.57 |
| YOLOv8-SEAM | 0.768 | 0.783 | 104.08 | 11.2 | 3.34 |
| YOLOv8-Coord-SE | 0.777 | 0.809 | 126.69 | 6.2 | 2.49 |

| Model | P | FPS (frames/s) | mAP@0.5 | GFLOPs |
|---|---|---|---|---|
| YOLOv8 | 0.760 | 127.27 | 0.778 | 6.8 |
| YOLOv8-tr | 0.765 | 109.45 | 0.781 | 6.9 |
| YOLOv8-cot | 0.790 | 112.77 | 0.796 | 6.4 |

| Model | P | FPS (frames/s) | mAP@0.5 | GFLOPs | MSE (px²) | RMSE (px) |
|---|---|---|---|---|---|---|
| YOLOv8 | 0.760 | 127.27 | 0.778 | 6.8 | 11.2 | 3.34 |
| YOLOv8-Coord-SE | 0.777 | 126.69 | 0.809 | 8.4 | 6.18 | 2.49 |
| YOLOv8-cot | 0.790 | 112.77 | 0.796 | 6.4 | 8.02 | 2.83 |
| YOLOv8-lap | 0.772 | 192.31 | 0.806 | 6.8 | 7.44 | 2.73 |
| PCC-YOLO | 0.810 | 143.36 | 0.826 | 7.8 | 5.04 | 2.24 |
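To read the ablation table as gains over the YOLOv8 baseline, the script below re-expresses its rows as percentage-point changes (P, mAP@0.5) and relative changes (FPS, GFLOPs, RMSE). It uses only the values listed in the table; the `gains` helper and the choice of which differences to report are editorial assumptions.

```python
from typing import Dict

# Rows copied from the ablation table: (P, FPS, mAP@0.5, GFLOPs, RMSE in px).
ABLATION = {
    "YOLOv8":          (0.760, 127.27, 0.778, 6.8, 3.34),
    "YOLOv8-Coord-SE": (0.777, 126.69, 0.809, 8.4, 2.49),
    "YOLOv8-cot":      (0.790, 112.77, 0.796, 6.4, 2.83),
    "YOLOv8-lap":      (0.772, 192.31, 0.806, 6.8, 2.73),
    "PCC-YOLO":        (0.810, 143.36, 0.826, 7.8, 2.24),
}


def gains(model: str, baseline: str = "YOLOv8") -> Dict[str, float]:
    """Change of `model` relative to `baseline`, rounded to one decimal place."""
    p, fps, map50, gflops, rmse = ABLATION[model]
    bp, bfps, bmap, bgflops, brmse = ABLATION[baseline]
    return {
        "P (pp)": round((p - bp) * 100, 1),               # percentage points
        "mAP@0.5 (pp)": round((map50 - bmap) * 100, 1),   # percentage points
        "FPS (%)": round((fps / bfps - 1) * 100, 1),      # relative change
        "GFLOPs (%)": round((gflops / bgflops - 1) * 100, 1),
        "RMSE (%)": round((rmse / brmse - 1) * 100, 1),   # negative = lower error
    }


if __name__ == "__main__":
    for name in ABLATION:
        if name != "YOLOv8":
            print(name, gains(name))
```

For PCC-YOLO this gives +5.0 pp precision, +4.8 pp mAP@0.5, +12.6% FPS, 14.7% more GFLOPs, and a 32.9% lower RMSE than YOLOv8.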

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Y.; Jin, W.; Gu, B.; Tian, G.; Li, Q.; Zhang, B.; Ji, G. PCC-YOLO: A Fruit Tree Trunk Recognition Algorithm Based on YOLOv8. Agriculture 2025, 15, 1786. https://doi.org/10.3390/agriculture15161786
