Extraction of Cotton Cultivation Areas Based on Deep Learning and Sentinel-2 Image Data

Abstract

1. Introduction

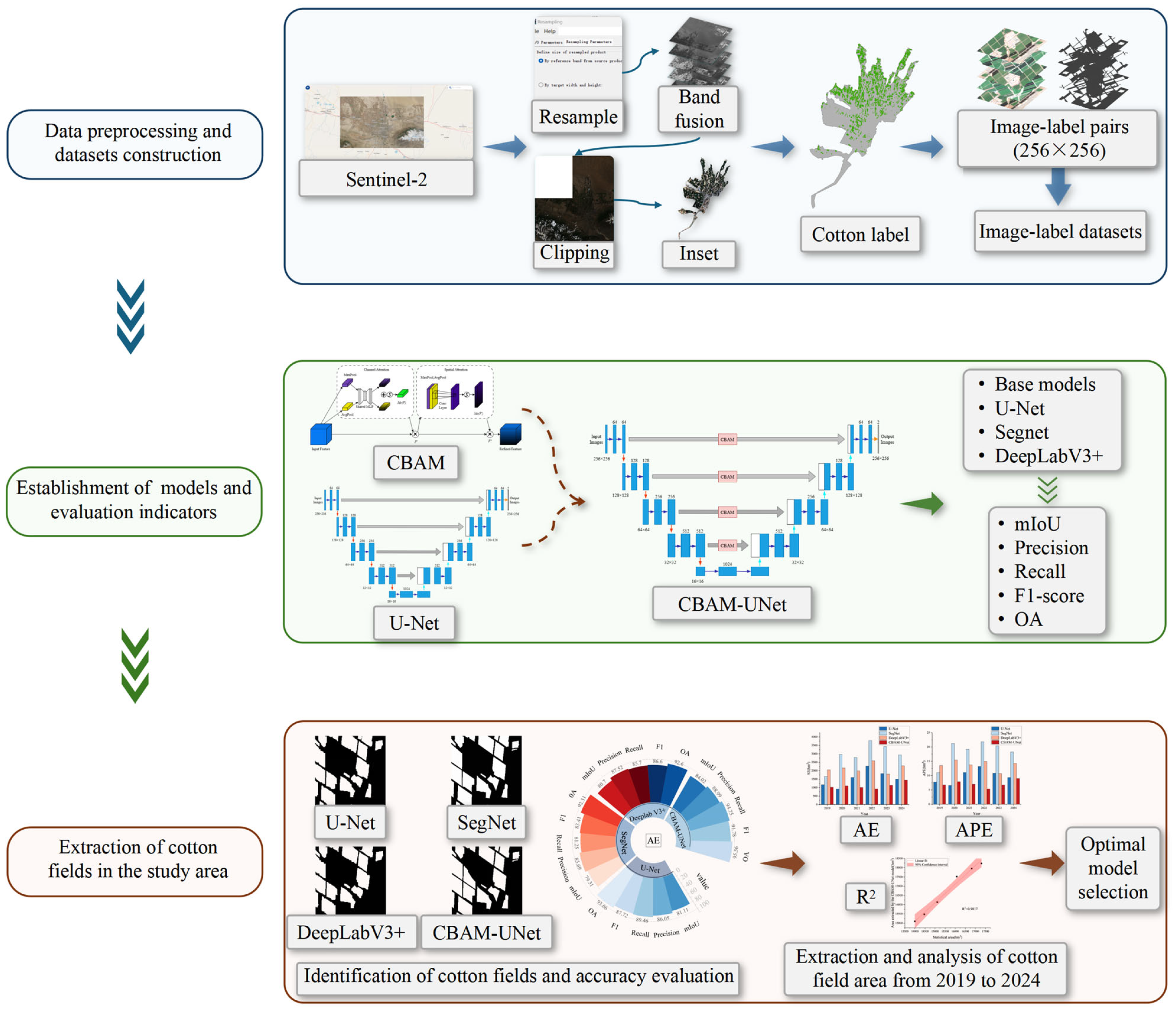

2. Materials and Methods

2.1. Overview of the Study Area

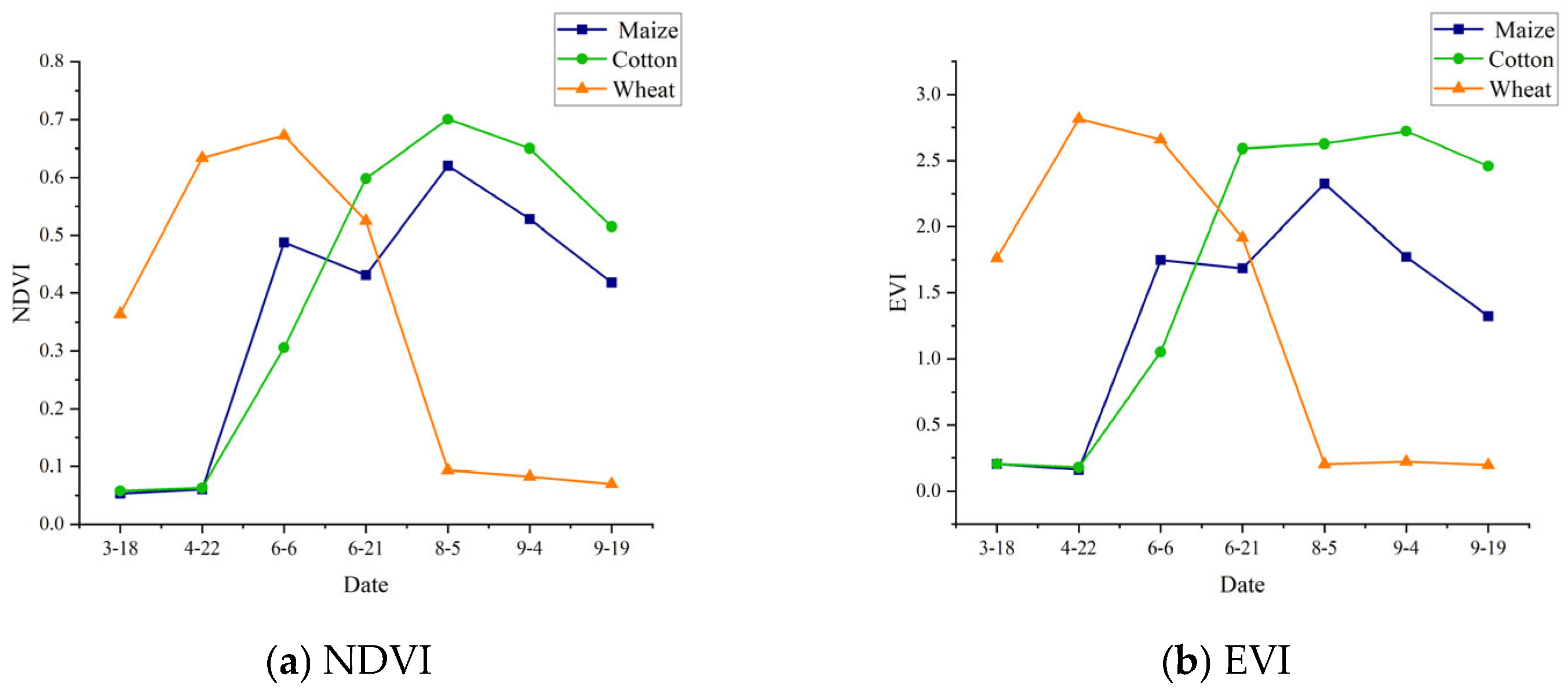

2.2. Growth Cycles of Cotton in the Study Area

2.3. Data Source and Preprocessing

2.3.1. Sentinel-2 Remote Sensing Image Data

2.3.2. Statistical Data

2.4. Dataset Production

3. Research Methods

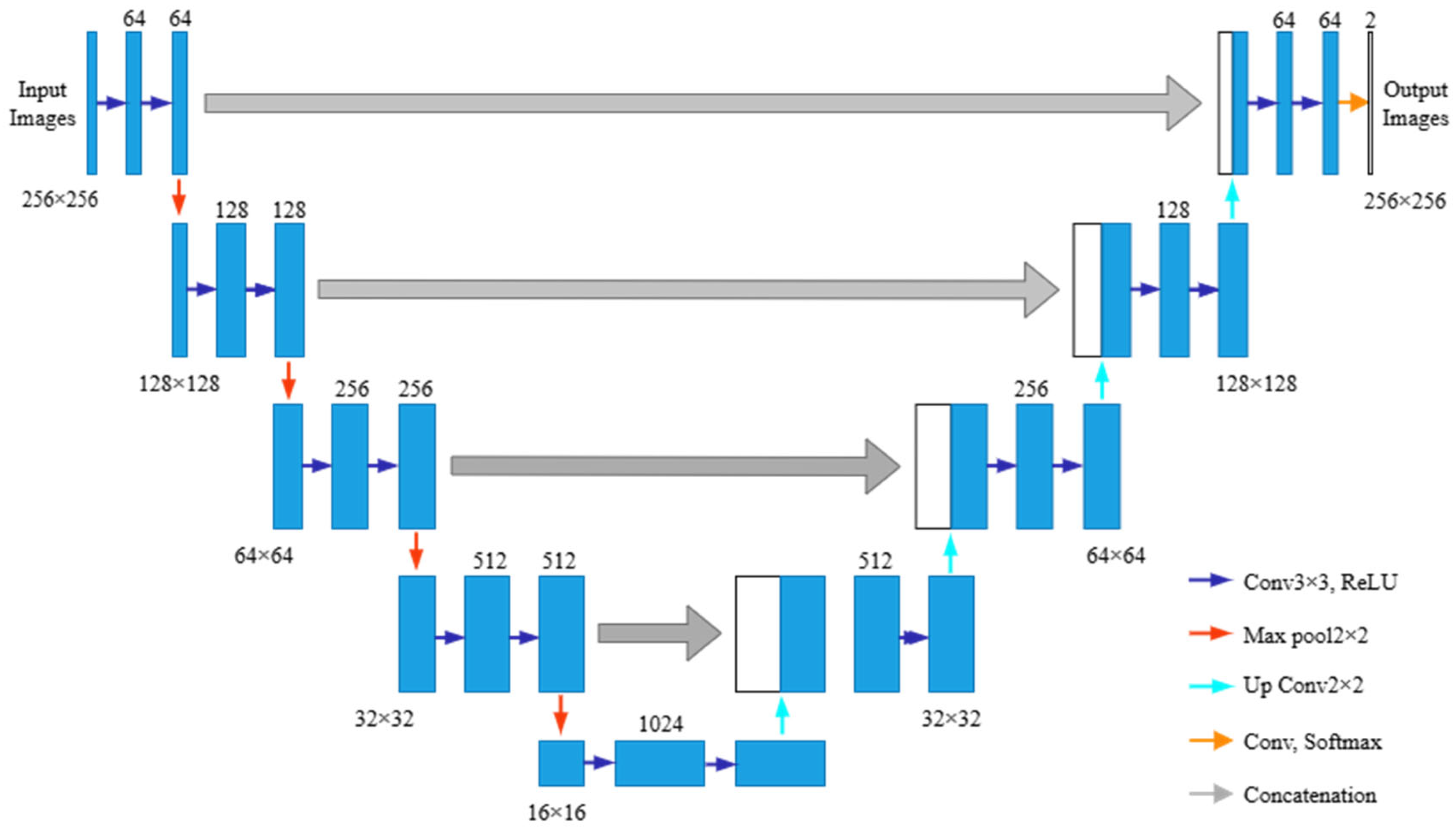

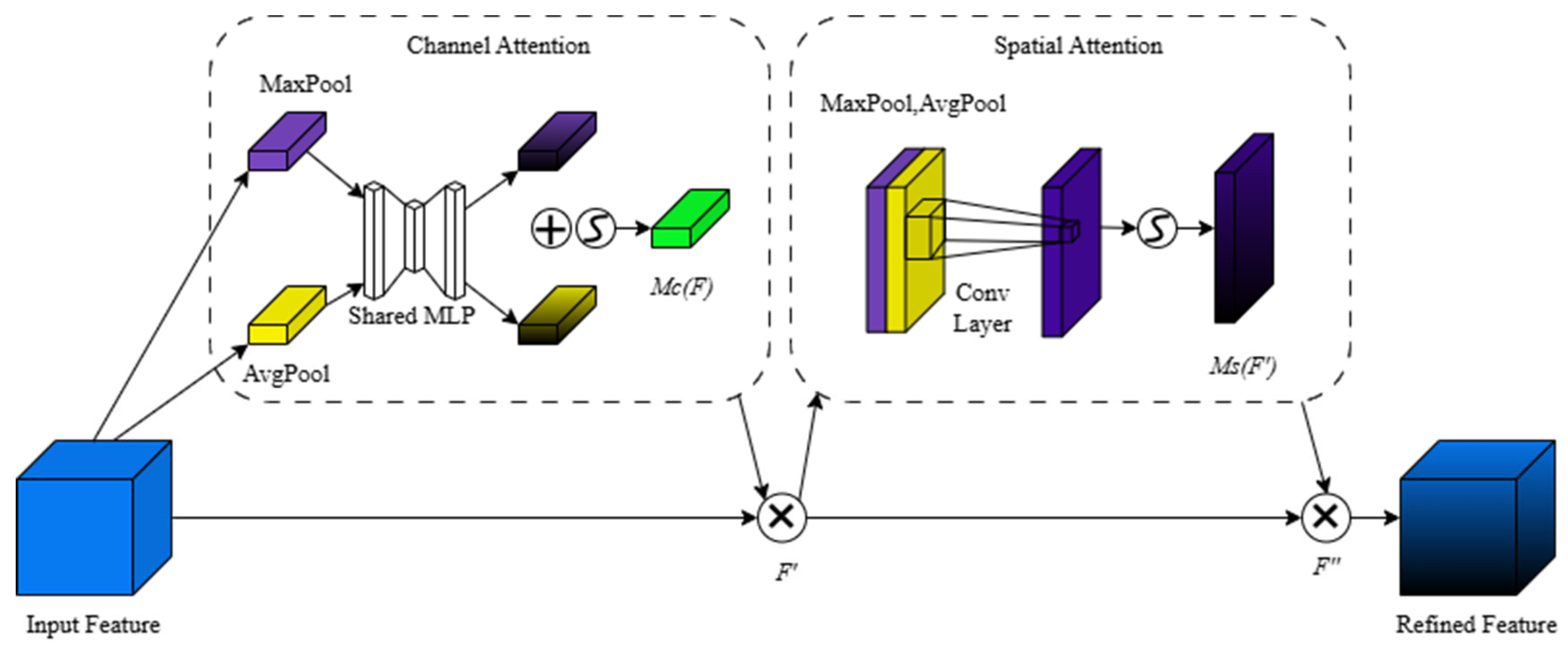

3.1. Establishment of the CBAM-UNet Model

3.2. Model Training

- (1) Data preparation: organize and preprocess the dataset for model training.

- (2) Parameter initialization: assign random initial values to all of the weights and biases of the neurons within the model.

- (3) Forward propagation: feed the input images and their associated labels into the model, performing layer-wise computations from the input layer to the output layer.

- (4) Loss computation: compare the model’s predictions with the ground truth labels and compute the value of the loss function to quantify the prediction errors.

- (5) Backpropagation: propagate the error signals backward from the output layer to the input layer, updating the model’s weights and biases using the optimization algorithm to minimize the loss.

- (6) Iterative training: repeat the forward propagation, loss computation, and backpropagation steps until the loss function converges below a predefined threshold.
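
The six training steps above can be illustrated with a minimal sketch. It is not the CBAM-UNet training code: a single-layer logistic classifier stands in for the network so that the loop runs with the Python standard library alone. The toy samples, the learning rate `lr`, the threshold `loss_threshold`, and all function names are assumptions made for illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(weights, bias, samples):
    # (3) Forward propagation: input features -> predicted probability.
    return [sigmoid(sum(w * x for w, x in zip(weights, xs)) + bias) for xs in samples]


def bce_loss(preds, labels, eps=1e-12):
    # (4) Loss computation: mean binary cross-entropy against the labels.
    total = sum(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
                for p, y in zip(preds, labels))
    return -total / len(labels)


def train(samples, labels, lr=0.5, loss_threshold=0.05, max_epochs=5000, seed=0):
    rng = random.Random(seed)
    # (2) Parameter initialization: small random weights and bias.
    weights = [rng.uniform(-0.1, 0.1) for _ in samples[0]]
    bias = rng.uniform(-0.1, 0.1)
    n = len(samples)
    for epoch in range(1, max_epochs + 1):
        preds = forward(weights, bias, samples)
        loss = bce_loss(preds, labels)
        # (6) Iterative training: stop once the loss drops below the threshold.
        if loss < loss_threshold:
            break
        # (5) Backpropagation: for sigmoid + cross-entropy, the gradient with
        # respect to the pre-activation is (prediction - label).
        errors = [p - y for p, y in zip(preds, labels)]
        for j in range(len(weights)):
            weights[j] -= lr * sum(e * xs[j] for e, xs in zip(errors, samples)) / n
        bias -= lr * sum(errors) / n
    return weights, bias, loss, epoch


# (1) Data preparation: hypothetical two-feature samples, label 1 = cotton.
samples = [[0.10, 0.80], [0.20, 0.70], [0.15, 0.90],
           [0.80, 0.20], [0.70, 0.10], [0.90, 0.30]]
labels = [1, 1, 1, 0, 0, 0]

weights, bias, final_loss, epochs = train(samples, labels)
print(f"stopped after {epochs} epochs with loss {final_loss:.4f}")
```

In a real segmentation setting the same loop structure would typically be delegated to a deep learning framework, with an optimizer performing the parameter update in step (5).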

3.3. Accuracy Evaluation Indicators

4. Results and Analysis

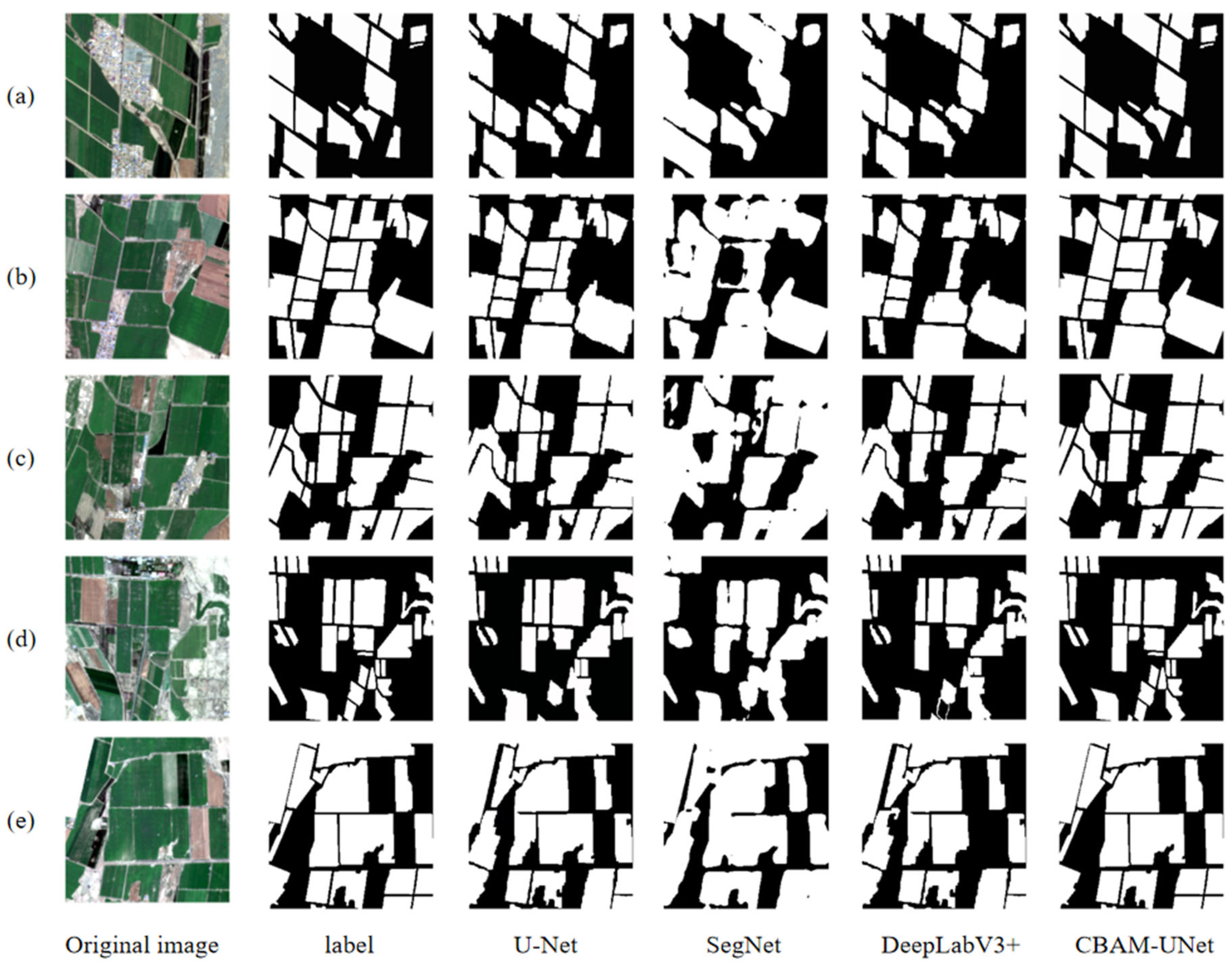

4.1. Comparative Analysis of Cotton Extraction Results Across Different Models

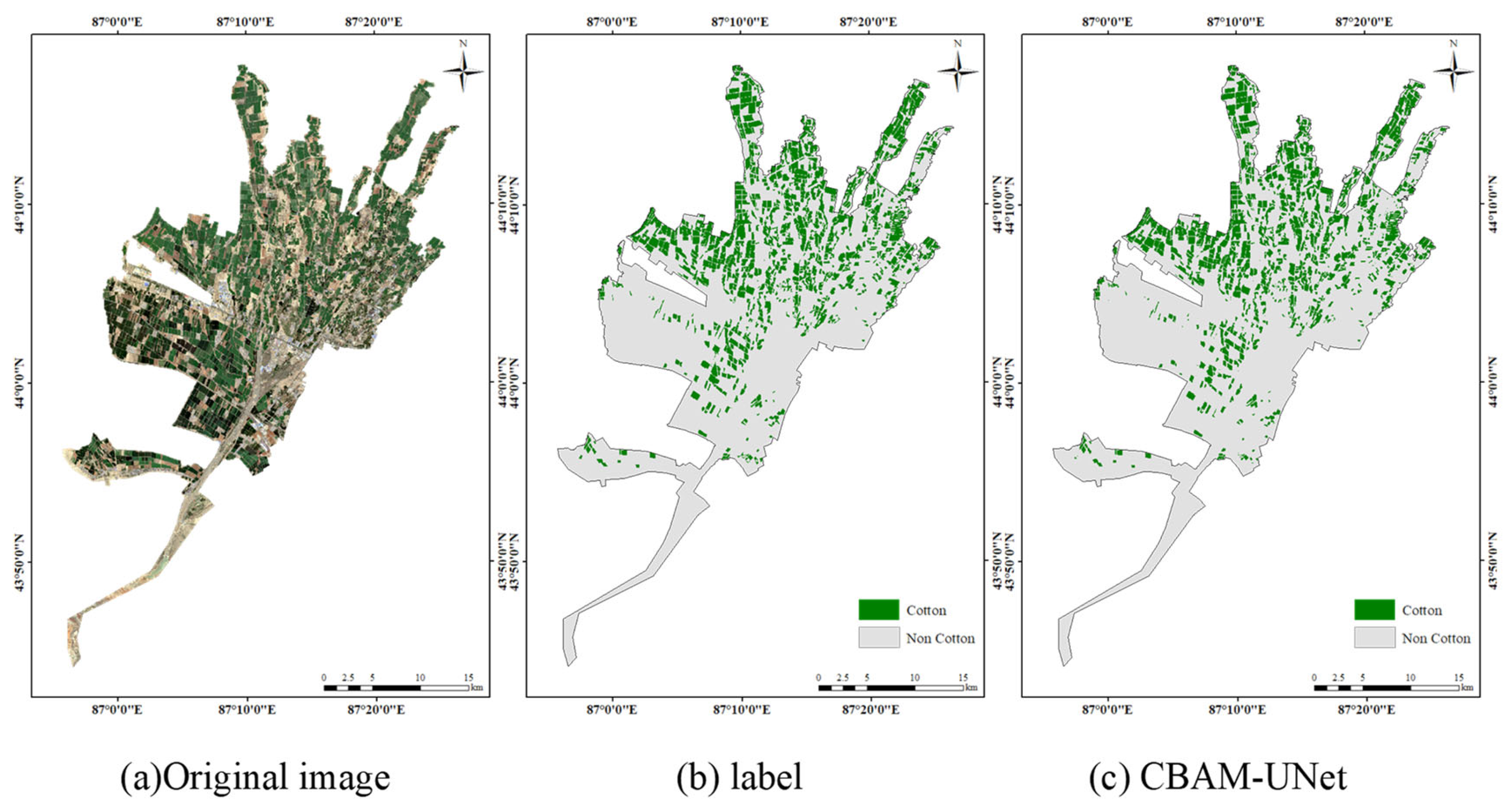

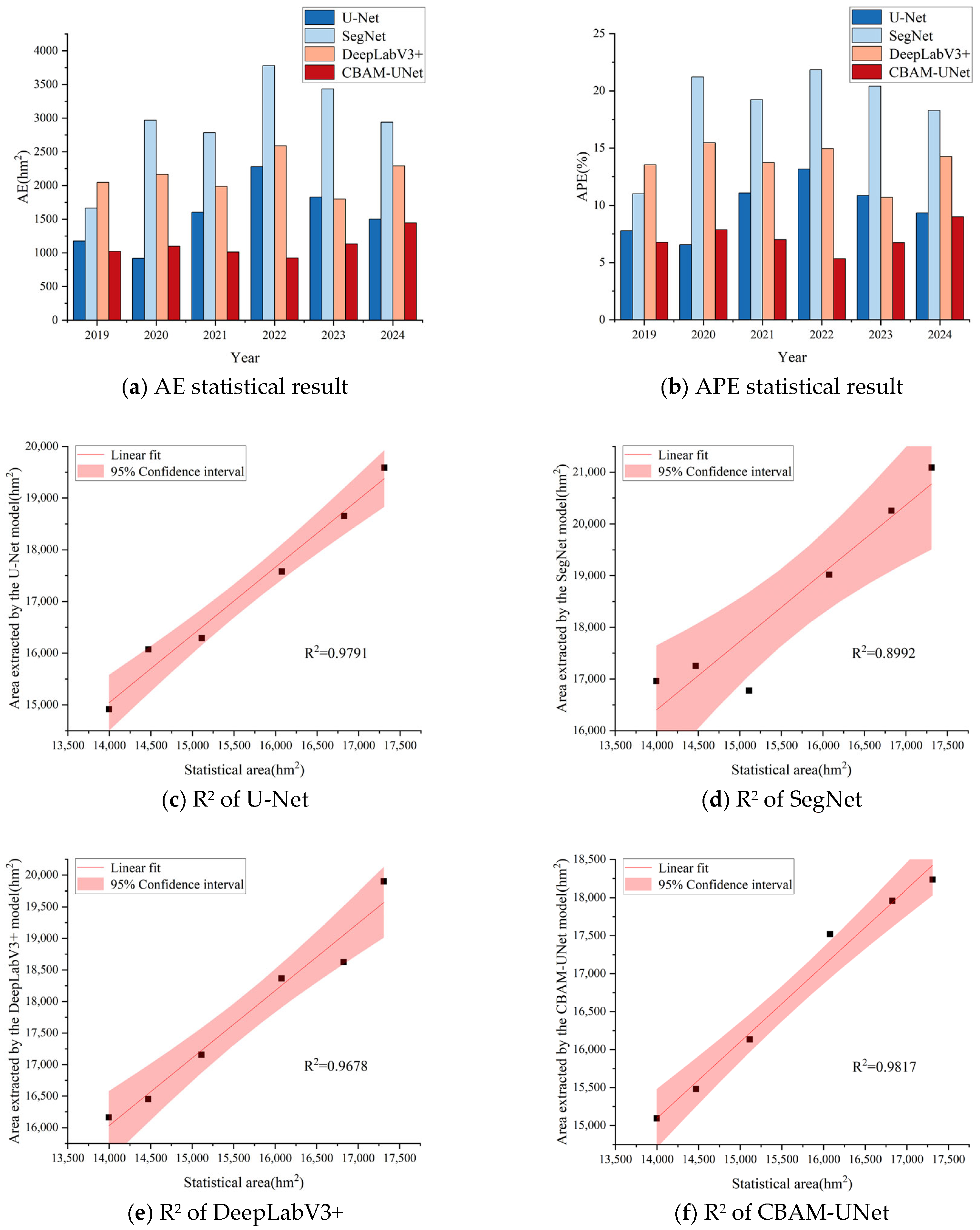

4.2. Analysis of Cotton Cultivation Area Extraction in the Study Area from 2019 to 2024

5. Discussion

5.1. Comparison and Analysis of Different Models

5.2. Analysis of the Causes of Changes in Cotton Cultivation Areas in the Study Area from 2019 to 2024

5.3. Limitations and Prospects

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Growth Cycle | Time |
|---|---|
| Sowing | Mid-April |
| Seedling | Late April to early June |
| Squaring | Mid-June to mid-July |
| Flowering-boll | Late July to late August |
| Boll-opening | Early to late September |
| Maturation | Early October |

| Serial Number | Date | Name |
|---|---|---|
| 1 | 28 July 2019 | S2B_MSIL2A_20190728T050659_N9999_R019_T45TVJ_20230512T183636 |
|  |  | S2B_MSIL2A_20190728T050659_N9999_R019_T45TWJ_20230512T183649 |
|  |  | S2B_MSIL2A_20190728T050659_N9999_R019_T45TWK_20230512T184055 |
| 2 | 17 July 2020 | S2A_MSIL2A_20200717T050701_N0500_R019_T45TVJ_20230424T021048 |
|  |  | S2A_MSIL2A_20200717T050701_N0500_R019_T45TWJ_20230424T021048 |
|  |  | S2A_MSIL2A_20200717T050701_N0500_R019_T45TWK_20230424T021048 |
| 3 | 2 July 2021 | S2A_MSIL2A_20210702T050701_N0500_R019_T45TVJ_20230130T233224 |
|  |  | S2A_MSIL2A_20210702T050701_N0500_R019_T45TWJ_20230130T233224 |
|  |  | S2A_MSIL2A_20210702T050701_N0500_R019_T45TWK_20230130T233224 |
| 4 | 22 July 2022 | S2B_MSIL2A_20220722T050659_N0400_R019_T45TVJ_20220722T080424 |
|  |  | S2B_MSIL2A_20220722T050659_N0400_R019_T45TWJ_20220722T080424 |
|  |  | S2B_MSIL2A_20220722T050659_N0400_R019_T45TWK_20220722T080424 |
| 5 | 12 July 2023 | S2A_MSIL2A_20230712T050701_N0509_R019_T45TVJ_20230712T091055 |
|  |  | S2A_MSIL2A_20230712T050701_N0509_R019_T45TWJ_20230712T091055 |
|  |  | S2A_MSIL2A_20230712T050701_N0509_R019_T45TWK_20230712T091055 |
| 6 | 5 August 2024 | S2A_MSIL2A_20240805T050651_N0511_R019_T45TVJ_20240805T110647 |
|  |  | S2A_MSIL2A_20240805T050651_N0511_R019_T45TWJ_20240805T110647 |
|  |  | S2A_MSIL2A_20240805T050651_N0511_R019_T45TWK_20240805T110647 |
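
The product names in the table above appear to follow the compact Sentinel-2 naming convention: mission, product level, sensing start time (UTC), processing baseline, relative orbit, tile, and a product discriminator timestamp. The sketch below splits one name into those fields and checks that the sensing date agrees with the Date column. The regular expression and the `parse_product_name` helper are illustrative assumptions, not part of the original workflow.

```python
import re
from datetime import date, datetime

# Assumed layout: MMM_MSIXXX_YYYYMMDDTHHMMSS_Nxxyy_ROOO_Txxxxx_<discriminator>
PRODUCT_NAME = re.compile(
    r"^(?P<mission>S2[A-C])_"
    r"(?P<level>MSIL1C|MSIL2A)_"
    r"(?P<sensing>\d{8}T\d{6})_"
    r"N(?P<baseline>\d{4})_"
    r"R(?P<orbit>\d{3})_"
    r"T(?P<tile>\d{2}[A-Z]{3})_"
    r"(?P<discriminator>\d{8}T\d{6})$"
)


def parse_product_name(name):
    """Split a Sentinel-2 product name into its fields (hypothetical helper)."""
    match = PRODUCT_NAME.match(name)
    if match is None:
        raise ValueError(f"unrecognized product name: {name}")
    fields = match.groupdict()
    # Convert the sensing start time into a datetime for easy comparison.
    fields["sensing"] = datetime.strptime(fields["sensing"], "%Y%m%dT%H%M%S")
    return fields


# First product listed for serial number 1 in the table.
info = parse_product_name(
    "S2B_MSIL2A_20190728T050659_N9999_R019_T45TVJ_20230512T183636"
)
print(info["mission"], info["level"], info["tile"], info["sensing"].date())
assert info["sensing"].date() == date(2019, 7, 28)  # matches "28 July 2019"
```

Parsing the names this way makes it straightforward to confirm that each acquisition date covers the same three tiles (T45TVJ, T45TWJ, T45TWK) on relative orbit 019.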

| Model | mIoU/% | Precision/% | Recall/% | F1-Score/% | OA/% |
|---|---|---|---|---|---|
| U-Net | 81.11 | 86.05 | 89.46 | 87.72 | 93.66 |
| SegNet | 79.31 | 85.69 | 81.25 | 83.41 | 92.31 |
| DeepLabV3+ | 80.70 | 87.52 | 85.70 | 86.60 | 92.60 |
| CBAM-UNet | 84.02 | 88.99 | 94.75 | 91.78 | 95.56 |
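
As a rough illustration of how the indicators in the table above relate to one another, the sketch below computes them from the pixel counts of a binary confusion matrix using their standard definitions. It assumes cotton is the positive class and that mIoU is the mean of the cotton and background IoU; the counts passed to `binary_metrics` are hypothetical. The second part recomputes the F1-score from the reported precision and recall, which reproduces the tabulated values to two decimals.

```python
def binary_metrics(tp, fp, fn, tn):
    """Standard indicators (as fractions) from binary confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    oa = (tp + tn) / (tp + fp + fn + tn)
    iou_cotton = tp / (tp + fp + fn)
    iou_background = tn / (tn + fn + fp)
    # Assumption: mIoU averages the two classes (cotton, background).
    miou = (iou_cotton + iou_background) / 2
    return {"precision": precision, "recall": recall, "f1": f1,
            "oa": oa, "miou": miou}


def f1_from(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


# Hypothetical pixel counts, for illustration only.
example = binary_metrics(tp=90, fp=10, fn=5, tn=95)
print({name: round(value, 4) for name, value in example.items()})

# (precision %, recall %, reported F1 %) taken from the table.
REPORTED = {
    "U-Net": (86.05, 89.46, 87.72),
    "SegNet": (85.69, 81.25, 83.41),
    "DeepLabV3+": (87.52, 85.70, 86.60),
    "CBAM-UNet": (88.99, 94.75, 91.78),
}
f1_check = {model: round(f1_from(p, r), 2) for model, (p, r, _) in REPORTED.items()}
print(f1_check)
```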

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, L.; Tao, H.; Xu, Y.; Yu, L.; Li, Q.; Xie, H.; Jiang, Y. Extraction of Cotton Cultivation Areas Based on Deep Learning and Sentinel-2 Image Data. Agriculture 2025, 15, 1783. https://doi.org/10.3390/agriculture15161783
