Abstract

Environmental control based on growth stage is critical for enhancing the yield and quality of industrially cultivated Pleurotus pulmonarius. Challenges such as scene complexity and overlapping mushroom clusters can reduce the accuracy of growth stage detection and target segmentation. This study introduces a lightweight real-time detection model for the growth stages of P. pulmonarius (GSP-RTMDet). A spatial pyramid pooling fast network with simple parameter-free attention (SPPF-SAM) is proposed to enhance the backbone’s capability to extract key feature information. In addition, an interactive attention mechanism between the spatial and channel dimensions is used to build a cross-stage partial spatial group-wise enhance network (CSP-SGE), improving the feature fusion capability of the neck. The content-aware reassembly of features (CARAFE) upsampling module is used to enhance instance segmentation performance. Fusing these improvements enhances the feature representation and the accuracy of the masks. Through its lightweight design, the model achieves real-time growth stage detection and accurate instance segmentation of P. pulmonarius, forming the foundation of an environmental control strategy. Model evaluations reveal that GSP-RTMDet-S achieves an optimal balance between accuracy and speed, with a bounding box mean average precision (bbox mAP) and a segmentation mAP (segm mAP) of 96.40% and 93.70% on the test set, marking improvements of 2.20% and 1.70% over the baseline. Moreover, it boosts inference speed to 39.58 images per second. This method enhances detection and segmentation outcomes in real-world P. pulmonarius houses, offering a more accurate and efficient growth stage perception solution for environmental control.

1. Introduction

P. pulmonarius is highly nutritious, tastes good, and is well accepted in the market, establishing it as a widely cultivated and significant edible mushroom [1,2]. Contemporary cultivation employs an industrial model, where mushroom bags are housed in specialized rooms resembling greenhouses [3], allowing them to undergo the full growth process up to harvest. This growth process can be broadly segmented into recovery, knotting, budding, fruiting body growth, and harvesting stages [4]. Each growth stage necessitates specific environmental parameters to optimize growth conditions [5]. Hence, effective regulation of these parameters based on growth stage perception is critical for the development and yield quality of P. pulmonarius. Currently, growth stage assessment primarily depends on the manual observation and expertise of technical personnel [6], who adjust environmental parameters accordingly. In large-scale production, this reliance results in substantial labor costs and resource allocation, demonstrating clear limitations [7]. Moreover, these assessments are susceptible to subjective human influence, and the environmental controls cannot dynamically adapt to the varying growth states of P. pulmonarius.

The regulation of environmental parameters such as temperature, humidity, CO2, light, and misting is essential during the growth of P. pulmonarius. Typically, the agricultural Internet of Things (IoT) is employed to develop environmental control systems in mushroom houses [8,9]. These systems integrate various sensors, controllers, and control algorithms to align environmental variables closely with set values. Building upon these systems, advanced control methods, such as fuzzy logic and expert systems, are designed to make control decisions based on predefined rules or knowledge bases [10,11,12], ensuring that environmental variables adjust according to established patterns. However, these methods typically allow for strategy adjustments only at preset times [13]. Because effective growth stage detection methods for P. pulmonarius are lacking, current production practice often combines environmental control systems with manual oversight: technicians observe growth stage changes and adjust the environmental parameters in the control system accordingly.

Inspired by existing practice, replacing manual observation with machine vision to develop growth stage detection models is a viable approach to addressing these challenges. Machine vision techniques use measured or extracted visual features to distinguish differences in target tasks [14]. Lu et al. [15] proposed an image-based method for measuring the cap diameter of Agaricus bisporus during harvesting, employing YOLOv3 to calculate cap diameter. Wei et al. [16] developed an amodal instance segmentation method to measure the width and length of Agrocybe cylindracea caps. Tao et al. [17] presented the ReYOLO-MSM method for evaluating the selective harvesting of shiitake mushrooms, using cap size and roundness to assess harvest suitability. Although cap size is crucial for assessing mushroom growth, these methods are applicable only to mushroom varieties with simpler phenotypes. Sujatanagarjuna et al. [18] introduced a Mask R-CNN model with 91.7% training accuracy for detecting the four maturity stages of oyster mushrooms. Shi et al. [19] developed an instance segmentation model for A. bisporus, attaining a segmentation accuracy of 98.64% for automatic harvest systems. Wu et al. [20] created the Y-PNet for detection and segmentation of Antler mushrooms, establishing a maturity grading evaluation model. Wang et al. [21] designed an improved YOLOv5 to identify the growth stages of shiitake fruiting bodies, achieving 92.70% accuracy in detecting mature phases. While these methods contribute significantly to mushroom growth detection, they primarily focus on harvesting and grading near maturity. Although detecting the full range of growth stages of edible mushrooms remains challenging, these methods have significantly inspired our research.

Accurate detection of growth stages is crucial for effective environmental control. During the growth of P. pulmonarius, complex backgrounds, overlapping, and occlusion make precise target segmentation difficult, which in turn affects growth stage detection. Recent advances in object detection and instance segmentation methods help address these challenges. SOLOv2 [22] employs dynamic convolution to independently process mask features for each instance, producing high-quality segmentation results and classifications. CondInst [23] uses conditional convolution to predict only the masks relevant to instances, enabling finer instance segmentation. The Swin Transformer [24] merges the strengths of visual transformers and convolutional neural networks (CNN) by introducing a shifted window attention mechanism and a hierarchical architecture compatible with multiple tasks. When integrated into models like Mask R-CNN, it enhances instance segmentation performance [25]. The RTMDet [26] is an efficient model suitable for object detection and instance segmentation, characterized by a lightweight design, dynamic task optimization, and excellent multi-scale capability. High-performance instance segmentation models facilitate the detection of growth stages in P. pulmonarius.

In summary, this paper introduces the GSP-RTMDet, a real-time detection model designed to determine the growth stages of P. pulmonarius, covering the entire cultivation cycle in real production scenarios. The enhanced network structure improves segmentation and detection accuracy in complex environments, achieving an optimal balance between accuracy and speed, and addressing the challenge of accurately segmenting P. pulmonarius instances in overlapping scenes. By linking environmental parameters to the detected growth stages, this method aligns environmental control with the growth requirements of P. pulmonarius. This approach is anticipated to significantly reduce reliance on manual management while enhancing the adaptability of environmental control in mushroom houses. The main innovations and contributions of this study are summarized as follows:

- (1) This study introduces the GSP-RTMDet to detect the growth stages of P. pulmonarius, enabling environmental control based on growth stage perception. It addresses the challenge of segmentation in complex scenarios. An environmental parameter control strategy was formulated based on the detected growth stages.

- (2) The SPPF-SAM module is integrated into the model’s backbone. By incorporating the simple parameter-free attention module (SimAM) and optimizing the activation function, the features extracted by the backbone across the various growth stages are enhanced. This approach bolsters the model’s detection performance while preserving its lightweight architecture.

- (3) In the neck of the model, the CSP-SGE module replaces the cross-stage partial layer (CSPLayer). This substitution enhances spatial and channel feature interactions during feature fusion, thereby improving the semantic feature learning capability. The CARAFE module is employed for upsampling to restore the morphological boundaries of the target more precisely, thus enhancing segmentation capabilities in complex scenarios.

2. Materials and Methods

2.1. The Growth Stage of P. pulmonarius

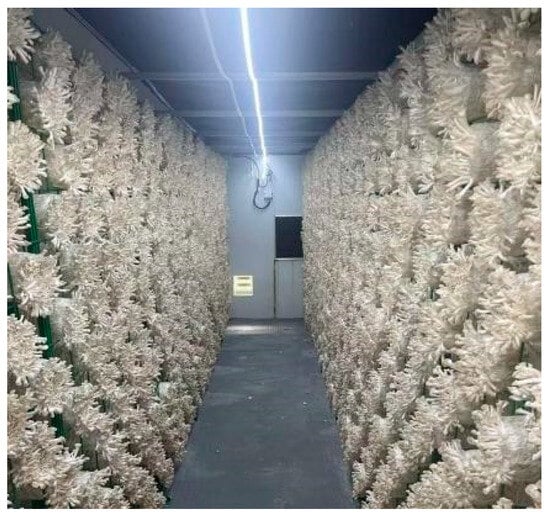

This study is based on the industrial cultivation mode of P. pulmonarius. The substrate block used for its growth, referred to as a mushroom bag, is prepared by professional producers following standardized industry protocols [27]. These bags are inoculated with mycelia and stored for cultivation. In production, the conditioned bags are placed in an environment-controlled mushroom house until harvest, as shown in Figure 1. Initially, the temperature is lowered (16–18 °C for 12 h) to stimulate primordial differentiation through temperature variation (20–22 °C for 24 h) [28], then increased (22–24 °C for 24 h) to reactivate mycelial activity, thus fostering favorable conditions for fruiting body formation. This phase, which occurs within the mushroom bag, is achieved by adjusting temperature and duration in the mushroom house, and is excluded from this study’s scope. The process then proceeds to the knotting stage, where mycelial knots emerge at the bag’s opening. From this stage to final harvest, continuous phenotypic monitoring by professional managers is essential for adjusting environmental parameters. In this study, the period from knotting to harvest is defined as the growth cycle of P. pulmonarius and is divided into distinct growth stages. To implement growth stage-based environmental control, the growth stages are aligned with the changes in environmental parameters, giving the seven growth stages detailed in Table 1. These changes correspond to phenotypic changes during the growth cycle as determined by managers.

Figure 1. Growth environment of P. pulmonarius.

Table 1. Growth stages and environmental parameters of P. pulmonarius.

The entire growth cycle lasts approximately 4 to 5 days and is divided into seven stages, from Stage 1 to Stage 7. During Stage 1 and Stage 2, no fruiting bodies are present, and only subtle changes appear on the surface of the substrate. Stage 3 marks the onset of fruiting body growth and differs distinctly from the previous stages. From Stage 4 to Stage 7, the fruiting bodies gradually elongate, the cap area enlarges, and the color darkens, so adjacent stages are phenotypically similar. By Stage 7, the fruiting bodies mature and reach the harvesting standard.

2.2. Image Acquisition and Dataset Creation

This study examines in situ images of P. pulmonarius in mushroom houses, covering the entire growth cycle defined by Stages 1 through 7. To ensure the generalizability of image samples, three mushroom houses were selected as collection sites, each cultivating mushroom bags from different production batches. The growth of P. pulmonarius in each house was continuously monitored. Images were captured at 4 h intervals during each growth stage, with stages defined based on environmental control and phenotypic observations detailed in Table 1. The Femto Bolt camera (Orbbec, Shenzhen, China) was employed to capture RGB images at an original resolution of 2560 × 1440. A simple image capture platform was designed. Because of the narrow spacing between shelves, the camera was kept 0.3 m from the shelf holding the mushroom bags, with its height adjusted to align with the center of the bags and its view perpendicular to the mushroom clusters. Each collected image contains at least one complete mushroom bundle, including instances where it is partially occluded by nearby incomplete mushroom bundles. LED lighting provided supplementary illumination during dark growth stages. In total, 1261 images were collected.

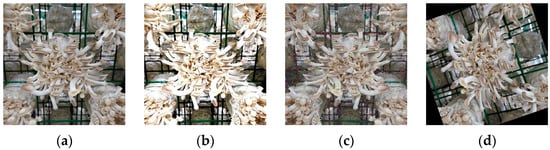

Images are uniformly cropped and resized to a resolution of 512 × 512 to accelerate the model’s training and inference processes. Labelme [29] (https://github.com/wkentaro/labelme (accessed on 2 May 2025)) is used for image annotation, marking the complete regions of P. pulmonarius located in the images. A polygon annotation method is employed to ensure that the boundaries of the marked areas accurately conform to the morphological contours of P. pulmonarius. Bounding boxes are automatically generated from the annotated areas, and annotation categories are specified based on the growth stages of P. pulmonarius, classified as Stage 1 to Stage 7. The dataset is structured in the COCO instance format, with an additional test set for the model’s final validation. The dataset is divided as follows: 70% for the training set, 15% for the validation set, and 15% for the test set. Sample images from the dataset and their visualized annotation results are displayed in Figure 2.
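The 70/15/15 partition described above can be sketched as follows. This is a minimal illustration rather than the tooling used in the study: the function name `split_dataset`, the fixed seed, the placeholder file names, and the choice to give the rounding remainder to the test set are all assumptions.

```python
import random


def split_dataset(items, train_frac=0.70, val_frac=0.15, seed=0):
    """Shuffle items reproducibly and cut them into train/val/test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder, roughly 15%
    return train, val, test


# Hypothetical file names standing in for the 1261 collected images.
image_ids = [f"img_{i:04d}.jpg" for i in range(1261)]
train, val, test = split_dataset(image_ids)
```

Every image lands in exactly one subset. A split stratified by growth stage would additionally preserve class proportions; the text does not state whether this was done.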

Figure 2. Sample images and visualized annotations in the datasets.

2.3. Data Augmentation

To enhance model detection accuracy and generalization capability, and to prevent overfitting, this study increases the dataset’s effective sample size using data augmentation methods [30,31]. The methods employed are: (1) color jitter, achieved by randomly adjusting the images’ saturation, brightness, contrast, and sharpness; (2) random noise addition and horizontal flip, which adds random Gaussian noise to images and flips them horizontally; (3) random rotation, which rotates images by random angles and fills in empty pixels. These methods are implemented in the data loading pipeline so that sample images and their annotations are processed synchronously. The augmentation is an online strategy, applied to each batch of image samples during every training iteration, and the augmented images are input directly into the model for training. The number of effective samples produced by augmentation is therefore determined indirectly by the training strategy. Figure 3 illustrates the augmentation effects on an example from Stage 4.

Figure 3. The effect of data augmentation. (a) Original image; (b) color jittering; (c) random Gaussian noise and horizontal flip; (d) random rotation.
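The key requirement of the online augmentation in Section 2.3 is that geometric transforms are applied to the image and its annotation together, while photometric changes touch only the image. The sketch below illustrates this for method (2), using small grayscale grids as stand-ins for images and masks. The function names, the flip probability, and the noise level are assumptions; the study does not report them.

```python
import random


def hflip(grid):
    """Mirror a 2D grid left to right."""
    return [row[::-1] for row in grid]


def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise and clip to the 8-bit range."""
    return [[min(255, max(0, round(p + rng.gauss(0.0, sigma)))) for p in row]
            for row in image]


def augment(image, mask, rng, flip_prob=0.5, sigma=5.0):
    """Flip image and mask in sync, then perturb the image only."""
    if rng.random() < flip_prob:
        image, mask = hflip(image), hflip(mask)
    image = add_gaussian_noise(image, sigma, rng)
    return image, mask


rng = random.Random(42)
image = [[0, 128, 255], [10, 20, 30]]
mask = [[0, 1, 1], [1, 0, 0]]
aug_image, aug_mask = augment(image, mask, rng, flip_prob=1.0)
```

Because a fresh random draw is made on every call, each epoch sees a different variant of the same sample, which is what makes the strategy online.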

2.4. The Growth Stage Detection Model

The objective of this study is to develop a visual detection model that identifies the growth stages of P. pulmonarius and segments each cluster of mushrooms in complex scenes, thereby providing critical decision-making support for environmental control tasks in mushroom houses. The model’s performance must satisfy two requirements: (1) achieve precise detection and segmentation of irregularly shaped targets against complex backgrounds; (2) fulfill the real-time constraints of practical applications. The RTMDet is an efficient, one-stage, lightweight instance segmentation model that has shown state-of-the-art performance across multiple benchmark datasets. In the cultivation environment of P. pulmonarius, the dense arrangement of mushroom bags causes overlap and occlusion among mushroom clusters, particularly in the late growth stages, thus complicating segmentation. Additionally, phenotypic similarities between growth stages may result in inaccurate detection. To further enhance detection and segmentation accuracy, this study introduces improvements based on the RTMDet and proposes a detection and instance segmentation model for the growth stages of P. pulmonarius, designated as the GSP-RTMDet.

2.4.1. GSP-RTMDet Model Architecture

The RTMDet architecture is composed of three primary components: the backbone, neck, and head. The backbone uses the CSPNeXt architecture [32], which integrates the cross-stage partial network (CSP) with the ResNeXt network. This architecture employs large-kernel depth-wise convolution to enhance feature extraction and enlarge the effective receptive field, thereby improving the model’s capacity to capture global contextual information. The neck features a CSP path aggregation feature pyramid network (CSP-PAFPN) structure, employing top-down and bottom-up processing mechanisms to generate pyramid feature maps. The head, known as SepBNHead [33], consists of classification and regression branches. These branches use two stacked convolutional layers to efficiently extract task-specific features for object classification and bounding box prediction. A segmentation head may also be added for instance segmentation, depending on task requirements. Leveraging multi-scale feature extraction and a modular design, this architecture delivers a robust, low-latency solution for object detection.

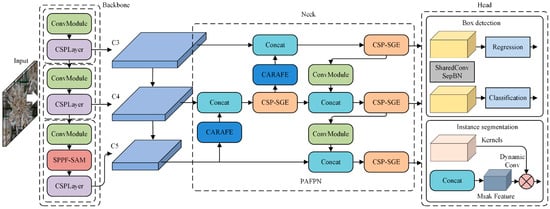

The GSP-RTMDet proposed in this study enhances the accuracy of detection and instance segmentation for P. pulmonarius growth stages, building upon the RTMDet architecture. The key improvements are: (1) Backbone enhancement: The spatial pyramid pooling fast (SPPF) module is replaced with the SPPF-SAM module. By incorporating the SimAM attention mechanism [34], feature representation is strengthened by highlighting critical information and suppressing interference, thereby benefiting downstream detection tasks without compromising speed. (2) Neck development: The CSP-SGE and CARAFE modules are integrated into the neck. The CSP-SGE module refines the attention mechanism of the original CSPLayer, improving interaction between spatial and channel dimensions, enhancing the network’s ability to learn semantic features, and strengthening feature fusion. The CARAFE module [35] performs the upsampling operations in the neck by aggregating information within a large receptive field and generating upsampling weights in a content-aware manner. This approach allows upsampled feature maps to depict object shapes more accurately, thus improving instance segmentation performance with little additional overhead. The structure of the GSP-RTMDet model is illustrated in Figure 4.

Figure 4. The structure of the GSP-RTMDet model.

The backbone comprises the ConvModule, CSPLayer, and SPPF-SAM module. The ConvModule features a 3 × 3 Conv2d layer, a BatchNorm layer, and a SiLU activation function. The CSPLayer integrates three ConvModules, a CSP-block, and a channel attention module. The CSP-block includes a ConvModule and a 5 × 5 depth-wise convolution module (DWConv). Located in the final stage of the backbone, the SPPF-SAM module enhances feature representation by incorporating the SimAM attention mechanism into the SPPF and optimizing the activation functions to improve efficiency. The backbone extracts three key feature maps at scales C3, C4, and C5 from the inputs. The neck comprises the ConvModule, CARAFE module, and CSP-SGE module. The CARAFE module generates upsampled feature maps while refining feature information for precise morphological segmentation. The CSP-SGE module builds upon the CSPLayer by incorporating SGE attention [36], bolstering the fusion of spatial and channel features, and generating pyramid feature maps. Consistent channel dimensions across all feature maps enable efficient merging across scales. The neck combines FPN and the path aggregation network to integrate multi-scale and hierarchical features. The fused features are then fed into the head, which is composed of three branches. The classification and regression heads, used for object detection, are built from SharedConv and separated batch normalization layers: convolution weights are shared across pyramid feature maps, while batch normalization is computed separately for each map. This architecture balances efficiency with parameter flexibility. The segmentation head includes a kernel prediction head and a mask feature head, generating segmentation masks via dynamic convolution kernels for instance segmentation tasks, thereby enhancing applicability in complex detection scenarios. The output of the model is a detection box labeled with the growth stage and a segmentation mask of the mushroom bundle region, with each segmented region corresponding to a complete mushroom bundle.

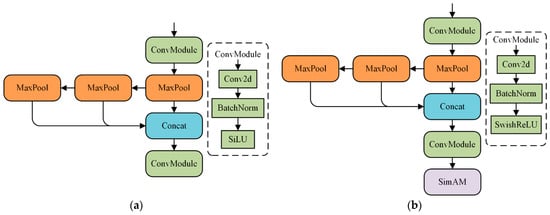

2.4.2. The SPPF-SAM Module

In the backbone network, the extracted feature maps progressively deepen. To address the issue of image distortion caused by cropping and scaling operations, YOLOv4 adopted the spatial pyramid pooling (SPP) module. This module resolves the problem of redundant feature extraction in convolutional neural networks, enhances the speed of candidate box generation, and reduces computational cost. The SPP module produces four feature maps through three parallel Maxpool operations and one direct input, which are then merged via the Concat operation. YOLOv5 proposed an improved SPPF structure, as shown in Figure 5a. SPPF arranges the Maxpool operations in series rather than in parallel and adds a ConvModule at both the input and output stages, improving operational speed. In the YOLOv6 model, simplified spatial pyramid pooling fast (SimSPPF) was introduced, replacing the SiLU activation function in the ConvModule with ReLU to increase efficiency. However, SiLU generally yields higher accuracy than ReLU in deep neural networks. The RTMDet model employs the SPPF structure. Inspired by these methods, this study proposes the SPPF-SAM module for the C5 stage of the backbone, with the module structure shown in Figure 5b. This modification balances efficiency and accuracy, and fuses receptive fields of different scales in high-level features to enhance key feature information.

Figure 5. SPPF structure of the backbone network. (a) Original SPPF structure; (b) SPPF-SAM structure.
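The speed gain of the serial SPPF arrangement comes from reusing intermediate results: stacking small stride-1 max-pools reproduces the output of larger parallel pools. The sketch below checks this numerically in plain Python. The kernel sizes (5 in series, equivalent to 5, 9, and 13 in parallel) follow the common YOLOv5 configuration and are an assumption here, since the text does not state them.

```python
import random


def maxpool2d(x, k):
    """Stride-1 max pooling that keeps the input size (window clipped at borders)."""
    h, w, r = len(x), len(x[0]), k // 2
    return [[max(x[a][b]
                 for a in range(max(0, i - r), min(h, i + r + 1))
                 for b in range(max(0, j - r), min(w, j + r + 1)))
             for j in range(w)]
            for i in range(h)]


rng = random.Random(0)
feature = [[rng.random() for _ in range(8)] for _ in range(8)]

# Serial branch (SPPF style): each pool consumes the previous output.
s1 = maxpool2d(feature, 5)
s2 = maxpool2d(s1, 5)
s3 = maxpool2d(s2, 5)

# Parallel branch (SPP style): each pool consumes the raw input.
p1, p2, p3 = maxpool2d(feature, 5), maxpool2d(feature, 9), maxpool2d(feature, 13)
```

Both branches yield identical maps, so the serial form obtains the same multi-scale receptive fields with three small pools instead of one small and two large ones.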

The first improvement pertains to the activation function. SiLU, defined in Equation (1), is unbounded above, bounded below, smooth, and non-monotonic, and performs better than ReLU in deep networks. ReLU mitigates the vanishing gradient problem, has low computational complexity, and is highly efficient; however, neuron death can degrade network performance. To combine the advantages of both, this study employs the SwishReLU activation function [37], defined in Equation (2):

$$\mathrm{SiLU}(x) = x \cdot \mathrm{sigmoid}(x) = \frac{x}{1 + e^{-x}} \tag{1}$$

$$\mathrm{SwishReLU}(x) = \begin{cases} x, & x > 0 \\ \dfrac{x}{1 + e^{-x}}, & x \le 0 \end{cases} \tag{2}$$

The nonlinear segment of SwishReLU is consistent with SiLU, so the function value is not zero when x < 0. This helps the model learn more complex features and improves network performance. When x > 0, it matches the linear form of ReLU, which helps maintain gradient stability and accelerates network convergence. SwishReLU is continuous over the entire range. These characteristics enable it to capture both linear and nonlinear patterns in the data, enhancing network performance.
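The piecewise behaviour described above can be sketched in a few lines. The function below follows the description in the text (SiLU for non-positive inputs, identity for positive inputs) and should be read as an illustration, not as the reference implementation of [37]. The helper names are assumptions.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def silu(x):
    """SiLU: x * sigmoid(x)."""
    return x * sigmoid(x)


def swish_relu(x):
    """Identity for positive inputs, SiLU otherwise."""
    return x if x > 0 else silu(x)


samples = [-3.0, -1.0, 0.0, 0.5, 2.0]
values = [swish_relu(v) for v in samples]
```

Negative inputs keep a small non-zero response, which avoids the dead-neuron behaviour of ReLU, while positive inputs pass through unchanged.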

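The SimAM module is integrated after feature merging to enhance the focus on key features. SimAM is a parameter-free attention mechanism that derives attention weights by computing feature similarity using an energy function, defined as follows:

$$e_t(w_t, b_t, y, x_i) = \frac{1}{M-1}\sum_{i=1}^{M-1}\bigl(-1 - (w_t x_i + b_t)\bigr)^2 + \bigl(1 - (w_t t + b_t)\bigr)^2 + \lambda w_t^2 \tag{3}$$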

where $w_t$ and $b_t$ are the weight and bias of the linear transformation for the target feature $t$. $t$ and $x_i$ denote the target feature and the other features within a channel of the input features (dimensions H, W, C), respectively, with $i$ as the index over spatial positions. $y$ represents the binary labels, M = H × W denotes the number of features within a channel, and $\lambda$ is the regularization hyper-parameter. Minimizing this equation is equivalent to finding the linear separability between the target feature and the other features. The closed-form solutions for $w_t$ and $b_t$ are as follows:

$$w_t = -\frac{2(t - \mu_t)}{(t - \mu_t)^2 + 2\sigma_t^2 + 2\lambda} \tag{4}$$

$$b_t = -\frac{1}{2}(t + \mu_t)\, w_t \tag{5}$$

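where $\mu_t$ and $\sigma_t^2$ are the mean and variance of all features within a channel excluding the target feature. Assuming that all features in a channel follow the same distribution, the mean $\hat{\mu}$ and variance $\hat{\sigma}^2$ can be computed once over the channel and reused for every position, which reduces computational cost. The minimum energy is calculated as follows:

$$e_t^{*} = \frac{4(\hat{\sigma}^2 + \lambda)}{(t - \hat{\mu})^2 + 2\hat{\sigma}^2 + 2\lambda} \tag{6}$$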

A lower minimum energy indicates a greater distinction between the target feature and other features. SimAM enables the model to dynamically adjust feature weights in response to various inputs. During the processing of multi-scale features in SPPF, this dynamic feature selection allows the model to concentrate on more discriminative features, thereby enhancing downstream tasks.
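To make the role of the minimum energy concrete, the sketch below computes SimAM-style gates for a single channel flattened into a list. It uses the inverse of the minimum energy, which simplifies to (t − μ)² / (4(σ² + λ)) + 0.5, followed by a sigmoid gate, as in the publicly available SimAM reference code. The function name, the value of λ, and the list-based layout are assumptions for illustration.

```python
import math


def simam_gates(channel, lam=1e-4):
    """Return one sigmoid gate per position of a flattened channel."""
    m = len(channel)
    mu = sum(channel) / m
    sq_dev = [(v - mu) ** 2 for v in channel]
    var = sum(sq_dev) / (m - 1)
    # Inverse minimum energy: larger for features that stand out from the rest.
    inv_energy = [d / (4.0 * (var + lam)) + 0.5 for d in sq_dev]
    return [1.0 / (1.0 + math.exp(-e)) for e in inv_energy]


def simam(channel, lam=1e-4):
    """Reweight every feature by its gate; no learnable parameters involved."""
    return [v * g for v, g in zip(channel, simam_gates(channel, lam))]


channel = [0.1, 0.1, 0.1, 0.1, 0.9]
gates = simam_gates(channel)
weighted = simam(channel)
```

The outlying activation (0.9) receives the largest gate, reflecting its lower energy, while the gates themselves are computed purely from channel statistics.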

In complex scenarios, SPPF-SAM can create stronger associations across different scales, concentrating on essential feature information. Its parameter-free design enhances the performance of SPPF without compromising inference speed.

2.4.3. The CSP-SGE Module

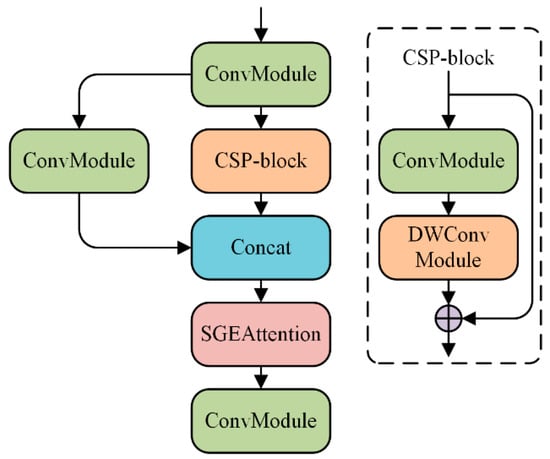

In the neck of the original model, the CSPLayer facilitates cross-stage feature integration, enhancing the diversity of features across different layers. Semantic sub-features, representing various entities, are typically distributed in groups within each layer’s feature vectors. These sub-features are affected by spatially similar patterns and backgrounds, which can lead to recognition and localization errors. The original CSPLayer employs channel attention to learn the weight of each channel, thereby enhancing the feature representation of the network. Spatial group-wise enhance (SGE) attention instead adjusts the importance of each sub-feature by generating attention factors, thereby enhancing semantic feature learning. This lightweight mechanism adds negligible computational overhead. In this study, SGE attention replaces the original attention mechanism in the CSPLayer, and the modified CSP-SGE structure is depicted in Figure 6.

Figure 6. The structure of CSP-SGE.

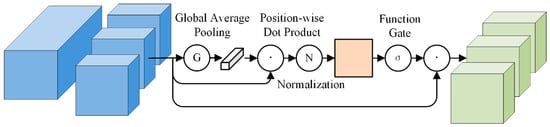

The main concept of the SGE attention mechanism is to partition the feature map into groups, with each group representing a semantic feature. An attention mask is generated based on the similarity between local and global features, guiding and enhancing the spatial distribution of semantic features. The processing flow is illustrated in Figure 7. Assuming the input feature dimension is (H, W, C), the feature map is first divided into G groups along the channel dimension, with each group processed in parallel. Within a group, the feature at each spatial position is a vector, so the group can be expressed as the set $P = \{x_1, \ldots, x_M\}$, where each semantic feature $x_i$ has dimension C/G and M = H × W. A global statistical feature approximates the semantic vector represented by the group using a spatial averaging function $F_{gp}$:

$$g = F_{gp}(P) = \frac{1}{M}\sum_{i=1}^{M} x_i \tag{7}$$

where $g$ denotes the global feature, which is used to generate an importance coefficient for each semantic feature. This coefficient quantifies the similarity between $g$ and the local feature $x_i$, calculated through a dot product:

$$c_i = g \cdot x_i \tag{8}$$

Figure 7. SGE attention process diagram.

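Next, the mean and standard deviation of $c_i$ over the spatial positions are calculated, and $c_i$ is standardized to obtain $a_i$. Finally, the output feature is computed from $a_i$ and $x_i$:

$$\hat{x}_i = x_i \cdot \sigma(a_i) \tag{9}$$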

where σ denotes the Sigmoid function gate. This method relies solely on the similarity of features within a group to guide attention factors, thereby enhancing feature expression capability and suppressing potential noise. It improves network performance without increasing model complexity.
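The SGE steps for one group (global average, dot-product similarity, standardization, sigmoid gating) can be traced with a small sketch. Each spatial position is represented by a short vector of dimension C/G. The function name and the `eps` constant are assumptions, and the learnable scale and shift that the original SGE formulation applies after standardization are omitted here (equivalent to fixing them at 1 and 0).

```python
import math


def sge_group(features, eps=1e-5):
    """Apply SGE-style gating to one group; features holds M vectors."""
    m, dim = len(features), len(features[0])
    # Global feature g: spatial average of the group.
    g = [sum(x[d] for x in features) / m for d in range(dim)]
    # Importance coefficient c_i: similarity between g and each local feature.
    c = [sum(gd * xd for gd, xd in zip(g, x)) for x in features]
    mean_c = sum(c) / m
    std_c = math.sqrt(sum((ci - mean_c) ** 2 for ci in c) / m)
    # Standardize over spatial positions, then gate with a sigmoid.
    a = [(ci - mean_c) / (std_c + eps) for ci in c]
    gates = [1.0 / (1.0 + math.exp(-ai)) for ai in a]
    out = [[xd * gate for xd in x] for x, gate in zip(features, gates)]
    return out, gates


group = [[2.0, 2.0], [2.0, 2.0], [0.0, 0.0], [0.0, 0.0]]
enhanced, gates = sge_group(group)
```

Positions whose features resemble the group average receive gates above 0.5 and are emphasized, while dissimilar positions are suppressed.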

2.4.4. The CARAFE Module

In the model’s neck, feature fusion occurs via top-down and bottom-up pathways. In the top-down pathway, small-scale feature maps must be upsampled so that their resolution matches that of the larger feature maps. The original network performs this operation with nearest neighbor interpolation. This approach fills feature maps using adjacent pixels and considers only subpixel neighborhoods, which can distort the morphological boundaries of targets. CARAFE (content-aware reassembly of features) is a lightweight upsampling operator. Compared to traditional upsampling techniques, it generates feature maps with higher quality and richer detail. CARAFE first aggregates contextual information within large receptive fields, then generates adaptive kernels in a content-aware manner. Specifically, it predicts the upsampling kernel from the underlying content information. Features are reassembled through weighted combinations within a predefined region centered at each position, and the generated features are rearranged into spatial blocks to complete the upsampling. Notably, these spatially adaptive weights are predicted from the input features rather than stored as fixed network parameters. Consequently, the CARAFE module is integrated into the neck to replace nearest neighbor interpolation for upsampling, preserving target shapes more accurately and enhancing the model’s instance segmentation capability.
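The reassembly step can be illustrated in one dimension. In the sketch below, each output position takes a softmax-normalized weighted sum over a small neighborhood of its source location. In CARAFE the kernel scores are predicted from the feature content by a small convolutional encoder; here they are simply passed in as `logits`, which is a simplification, and the function names, scale factor, and kernel size are assumptions.

```python
import math


def softmax(scores):
    """Normalize scores so that the kernel weights sum to one."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def reassemble_1d(feat, logits, scale=2, k=3):
    """Upsample feat by scale; logits[j] holds k kernel scores for output j."""
    r = k // 2
    out = []
    for j in range(len(feat) * scale):
        src = j // scale                      # source location of output j
        weights = softmax(logits[j])
        acc = 0.0
        for n in range(-r, r + 1):
            idx = min(max(src + n, 0), len(feat) - 1)  # clamp at the borders
            acc += weights[n + r] * feat[idx]
        out.append(acc)
    return out


feat = [0.0, 1.0, 0.0]
peaked = [[-100.0, 0.0, -100.0]] * 6   # kernel focused on the source pixel
uniform = [[0.0, 0.0, 0.0]] * 6        # kernel averaging the neighborhood
nearest_like = reassemble_1d(feat, peaked)
smoothed = reassemble_1d(feat, uniform)
```

With a sharply peaked kernel the operator reduces to nearest neighbor interpolation, and with a flat kernel it becomes local averaging. Content-aware prediction lets the network choose between such behaviours at every position, which is what allows boundaries to be restored more faithfully.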

2.4.5. Loss Function

The model’s head consists of three branches: classification, regression, and segmentation. The classification and regression heads handle object classification and box position prediction, respectively, while the segmentation head generates instance masks. Each branch uses a different loss function: $L_{cls}$, $L_{reg}$, and $L_{seg}$. $L_{cls}$ employs Quality Focal Loss [38], enabling the model to dynamically adjust loss weights by incorporating sample quality, thus improving the learning of hard-to-classify samples. The definition of $L_{cls}$ is as follows:

$$L_{cls} = -\lvert y - \sigma \rvert^{\beta}\bigl((1 - y)\log(1 - \sigma) + y\log\sigma\bigr) \tag{10}$$

where $y$ denotes the intersection over union (IoU) between the predicted bounding box and the ground truth, representing the continuous label of localization quality. $\sigma$ represents the predicted estimate, and $\beta$ is the parameter that controls the rate of weight change. $L_{reg}$ uses GIoU Loss [39], a loss function that remains informative when the predicted box and the ground truth box do not overlap, thus enhancing localization accuracy. The definition of $L_{reg}$ is as follows:

$$L_{reg} = 1 - \frac{\lvert P_b \cap G_b \rvert}{\lvert P_b \cup G_b \rvert} + \frac{\lvert C_s \setminus (P_b \cup G_b) \rvert}{\lvert C_s \rvert} \tag{11}$$

where $P_b$ denotes the predicted box, $G_b$ represents the ground truth box, and $C_s$ is the smallest enclosing convex object. $L_{seg}$ employs Dice Loss [40], a loss function for image segmentation that measures the similarity between the predicted mask and the ground truth mask based on the Dice coefficient. The definition of $L_{seg}$ is as follows:

$$L_{seg} = 1 - \frac{2\lvert P_m \cap G_m \rvert}{\lvert P_m \rvert + \lvert G_m \rvert} \tag{12}$$

where $P_m$ denotes the predicted mask and $G_m$ represents the ground truth mask. The model’s overall loss is defined as follows:

$$L = \lambda_1 L_{cls} + \lambda_2 L_{reg} + \lambda_3 L_{seg} \tag{13}$$

where the loss weights are set as λ1 = 1, λ2 = 2, and λ3 = 2. In complex scenarios, box and mask predictions present greater challenges than classification tasks. Consequently, increasing the weight proportions of these two loss components prioritizes their contribution during the training process.
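The three loss terms and their weighted sum can be sketched for single samples as follows. This is a simplified illustration rather than the training code: boxes are axis-aligned tuples `(x1, y1, x2, y2)`, masks are flattened lists of values in [0, 1], β = 2 is the default from the Quality Focal Loss paper and is an assumption here, and the function names and the `eps` smoothing term are also assumptions.

```python
import math


def quality_focal_loss(sigma, y, beta=2.0):
    """QFL for one prediction sigma in (0, 1) and a soft IoU label y."""
    cross_entropy = -((1.0 - y) * math.log(1.0 - sigma) + y * math.log(sigma))
    return abs(y - sigma) ** beta * cross_entropy


def box_area(b):
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def giou_loss(p, g):
    """GIoU loss for two non-degenerate boxes given as (x1, y1, x2, y2)."""
    iw = min(p[2], g[2]) - max(p[0], g[0])
    ih = min(p[3], g[3]) - max(p[1], g[1])
    inter = max(0.0, iw) * max(0.0, ih)
    union = box_area(p) + box_area(g) - inter
    enclose = (max(p[2], g[2]) - min(p[0], g[0])) * (max(p[3], g[3]) - min(p[1], g[1]))
    return 1.0 - inter / union + (enclose - union) / enclose


def dice_loss(pred, target, eps=1e-6):
    """Dice loss on flattened masks."""
    inter = sum(a * b for a, b in zip(pred, target))
    return 1.0 - (2.0 * inter + eps) / (sum(pred) + sum(target) + eps)


def total_loss(l_cls, l_reg, l_seg, weights=(1.0, 2.0, 2.0)):
    """Weighted sum with the weights 1, 2, and 2 stated in the text."""
    return weights[0] * l_cls + weights[1] * l_reg + weights[2] * l_seg


disjoint = giou_loss((0.0, 0.0, 1.0, 1.0), (2.0, 0.0, 3.0, 1.0))
```

For the two disjoint unit boxes the IoU is zero, yet the GIoU loss evaluates to 4/3 rather than saturating at 1, so the enclosing-box term still distinguishes how far apart the boxes are.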

2.5. The Training Strategy

The dataset created in this study was used to train the GSP-RTMDet. The training strategy employs the AdamW optimizer to update network parameters, with a learning rate of 0.004 and a weight decay of 0.05. Training runs for 300 epochs with a batch size of 4. The first 1000 iterations act as a warm-up phase, in which the learning rate starts at 1 × 10−5 and increases linearly to 0.004. From the 150th to the 300th epoch, the learning rate follows a cosine annealing schedule, gradually decreasing to 2 × 10−4. Hyper-parameters are calibrated according to the model’s performance on the validation set. Candidate values are chosen based on reasonable training assumptions, and the impact of different hyper-parameter values on model performance is examined through experiments. The results on the validation set are presented in Table 2.

Table 2.

The validation results of hyper-parameters.

As indicated in Table 2, setting the learning rate to 0.004 leads to improved detection and segmentation accuracy on the validation set. Similarly, a weight decay of 0.05 is optimal. With 200 training epochs, the model does not achieve optimal performance, and accuracy does not improve beyond 300 epochs, suggesting that 300 epochs are sufficient for optimal validation performance. A batch size of 4 yields better detection and segmentation accuracy. Thus, the chosen hyper-parameters are appropriate.
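The learning-rate schedule described in this section can be sketched as a single function. This is only an illustration: the number of iterations per epoch is not stated here, so `iters_per_epoch` is a hypothetical parameter, and the cosine phase is assumed to be evaluated continuously rather than once per epoch.

```python
import math

BASE_LR = 0.004           # learning rate after warm-up
WARMUP_START_LR = 1e-5    # learning rate at iteration 0
WARMUP_ITERS = 1000       # length of the linear warm-up
MIN_LR = 2e-4             # final learning rate after cosine annealing
COSINE_START_EPOCH = 150  # annealing starts here
TOTAL_EPOCHS = 300


def learning_rate(iteration, iters_per_epoch):
    """Learning rate at a given iteration (iters_per_epoch is an assumption)."""
    if iteration < WARMUP_ITERS:
        # Linear warm-up from WARMUP_START_LR to BASE_LR.
        frac = iteration / WARMUP_ITERS
        return WARMUP_START_LR + frac * (BASE_LR - WARMUP_START_LR)
    epoch = iteration / iters_per_epoch
    if epoch < COSINE_START_EPOCH:
        # Constant phase between warm-up and annealing.
        return BASE_LR
    # Cosine annealing from BASE_LR down to MIN_LR over the remaining epochs.
    span = TOTAL_EPOCHS - COSINE_START_EPOCH
    progress = min((epoch - COSINE_START_EPOCH) / span, 1.0)
    return MIN_LR + 0.5 * (BASE_LR - MIN_LR) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for it in (0, 500, 1000, 75_000, 112_500, 150_000):
        print(it, learning_rate(it, iters_per_epoch=500))
```

With the hypothetical value of 500 iterations per epoch, the rate is halfway between 0.004 and 2 × 10⁻⁴ at epoch 225 and reaches 2 × 10⁻⁴ at epoch 300.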

2.6. Model Performance Evaluation

This study employs the COCO evaluation metric, mean average precision (mAP), to assess the accuracy of object detection and instance segmentation. The mAP values for bounding boxes and instance masks are calculated separately. The mAP is a comprehensive performance metric calculated from IoU, precision, and recall. The calculation formulas are as follows:
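In their standard form, and assuming the authors follow the usual COCO definitions, the quantities referred to in the following paragraph are:

```latex
\begin{align*}
\mathrm{Precision} &= \frac{TP}{TP + FP} \\
\mathrm{Recall}    &= \frac{TP}{TP + FN} \\
AP  &= \int_{0}^{1} P(R)\,\mathrm{d}R \tag{16} \\
mAP &= \frac{1}{N}\sum_{i=1}^{N} AP_i \tag{17}
\end{align*}
```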

where TP denotes the number of correctly detected targets, FP denotes the number of incorrectly detected targets, and FN denotes the number of true targets not detected. IoU, defined as the intersection over union of the predicted box/mask and the actual box/mask, represents the degree of overlap between them. A larger IoU threshold results in a stricter evaluation of detection performance. Formula (16) calculates the average precision for each category, where R is the recall level. N in Formula (17) denotes the number of categories over which the AP values are averaged. The mAP is calculated at IoU thresholds ranging from 0.5 to 0.95, in increments of 0.05, and the results at the different thresholds are averaged to obtain the final mAP.
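The evaluation procedure can be illustrated with a short sketch. It is a simplified stand-in for the COCO tooling, not a replacement for it: AP is computed by all-point interpolation of the precision-recall curve (the official COCO evaluation samples 101 recall points), and the function names are hypothetical.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


# IoU thresholds 0.50, 0.55, ..., 0.95 used for the final average.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def average_precision(scores, is_tp, num_gt):
    """AP for one class at one IoU threshold (area under the P-R curve)."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp = fp = 0
    precisions, recalls = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    # Replace each precision by the maximum precision at any higher recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += p * (r - prev_recall)
        prev_recall = r
    return ap


def mean_ap(ap_values):
    """Mean of AP values, e.g. over all classes and all IoU thresholds."""
    return sum(ap_values) / len(ap_values)


if __name__ == "__main__":
    print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))
    print(average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2))
```

Whether a detection counts as a true positive depends on the IoU threshold, so `is_tp` has to be recomputed for each of the ten thresholds before the AP values are averaged.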

Params indicates the number of parameters in the model, and Flops refers to the number of floating-point operations required for one forward pass. Both are commonly used to assess model complexity. FPS indicates the number of frames or images processed per second and is used to evaluate model inference speed. Together, these indicators provide a comprehensive evaluation of the model’s accuracy, complexity, and speed.
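As a rough illustration of how the speed figure and the percentage changes reported later can be obtained, the sketch below times an arbitrary inference callable. The names `measure_fps` and `relative_change` are hypothetical, and the timing procedure is an assumption rather than the authors' benchmark protocol.

```python
import time


def measure_fps(infer, images, warmup=5):
    """Images processed per second by the callable `infer`.

    On a GPU, the device must be synchronized before reading the clock;
    that step is omitted in this CPU-only sketch.
    """
    for image in images[:warmup]:
        infer(image)  # warm-up runs are excluded from the timing
    start = time.perf_counter()
    for image in images:
        infer(image)
    elapsed = time.perf_counter() - start
    return len(images) / elapsed


def relative_change(new, old):
    """Relative change in percent, e.g. for Params or FPS against a baseline."""
    return (new - old) / old * 100.0


if __name__ == "__main__":
    # Stand-in workload instead of a real model.
    fps = measure_fps(lambda x: sum(range(1000)), list(range(200)))
    print(f"{fps:.1f} img/s")
    print(f"{relative_change(90.0, 100.0):.2f} %")
```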

3. Results and Analysis

In this section, the methods proposed in this study are validated and analyzed. The effectiveness of model improvements is assessed through ablation experiments. Comparative experiments analyze performance between the proposed model and other high-performance models. The actual performance is verified by analyzing detection and segmentation effects in complex environments. An environmental control strategy is proposed based on growth stage detection.

3.1. The Experimental Environment

The software environment for all models is based on Python 3.9.15, PyTorch 1.13.0, CUDA 11.7, CuDNN 8.5, and OpenCV 4.5.4. The hardware platform for the experiments comprises an AMD R5 3600X 3.8 GHz CPU, 64 GB RAM, an Nvidia GeForce RTX 2080Ti (11 GB) GPU, and the Windows operating system. All models for comparison were evaluated in this experimental environment.

3.2. The Performance of GSP-RTMDet

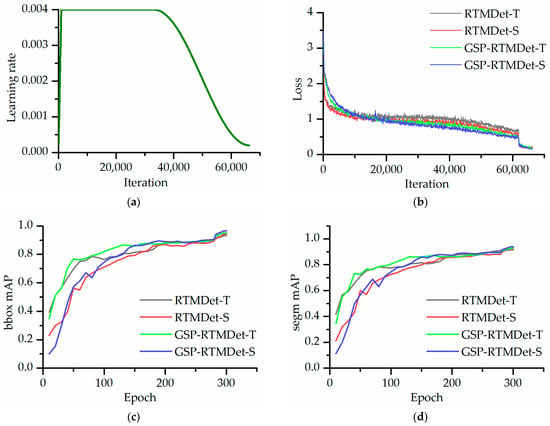

The GSP-RTMDet model was trained, validated, and tested using the dataset presented in this paper. To optimize the balance between accuracy and speed, RTMDet models of varying sizes were employed as benchmarks. Using the methods proposed in this study, we developed two enhanced model versions: GSP-RTMDet-T and GSP-RTMDet-S. The models were trained following the previously outlined strategy, with the learning rate curve illustrated in Figure 8a. Figure 8b illustrates the change in loss function values for each model during iterations. Throughout training, detection accuracy (bbox mAP) and segmentation accuracy (segm mAP) were assessed using validation set data, with results displayed in Figure 8c,d.

Figure 8.

Training and validation results of the GSP-RTMDet. (a) The learning rate; (b) loss; (c) mAP for bounding box detection results; (d) mAP for segmentation results.

Figure 8b demonstrates that all models undergo effective training, showing a consistent pattern in loss variation. Initially, the loss values for GSP-RTMDet-T and GSP-RTMDet-S are higher than those of the baseline models. After approximately 20,000 iterations, these loss values fall below those of the baseline models and maintain this advantage until training concludes, resulting in better training outcomes. Figure 8c illustrates the validation results of the bbox mAP metric for each model throughout the training process. In the early phase of training, the bbox mAP of the improved models is below that of the baselines. However, as training advances, the accuracy of the improved models markedly increases. After approximately 100 training epochs, the bbox mAP of the improved models consistently exceeds that of the corresponding baselines until the end of training. After approximately 150 epochs, GSP-RTMDet-S outperforms GSP-RTMDet-T, achieving superior detection results. The segm mAP metric in Figure 8d follows a trend similar to that of the bbox mAP. In the final validation at the 300th epoch, the bbox mAP and segm mAP for GSP-RTMDet-T are 95.20% and 93.00%, respectively, marking improvements of 1.90% and 1.40% over RTMDet-T. For GSP-RTMDet-S, the bbox mAP and segm mAP are 96.60% and 94.00%, respectively, indicating improvements of 2.10% and 1.50% over RTMDet-S. These results indicate that the GSP-RTMDet outperforms the baseline models on the validation set, with GSP-RTMDet-S achieving the best performance.

The performance of the GSP-RTMDet was assessed on the test set, as shown in Table 3. The data in Table 3 demonstrate that the GSP-RTMDet, compared to the original model, offers improvements in both accuracy and speed while remaining lightweight. Regarding detection and segmentation accuracy, GSP-RTMDet-T increases the bbox mAP by 2.10% and the segm mAP by 1.10% compared to RTMDet-T. GSP-RTMDet-S shows improvements of 2.20% in the bbox mAP and 1.70% in the segm mAP compared to RTMDet-S. GSP-RTMDet-S achieves the highest bbox mAP and segm mAP, with increases of 1.50% and 1.10%, respectively, over GSP-RTMDet-T. In terms of speed, the FPS of the improved models surpasses that of the baselines, with GSP-RTMDet-T exhibiting the fastest inference speed, 2.54 img/s higher than GSP-RTMDet-S. Regarding model complexity, the Params of the improved models decreased by 7.83% (GSP-RTMDet-T) and 2.85% (GSP-RTMDet-S), and their Flops are lower than those of the baselines. This indicates that the GSP-RTMDet achieves superior detection and segmentation performance while reducing the model’s complexity, in line with expectations. With similar inference speeds, GSP-RTMDet-S demonstrates higher bbox mAP and segm mAP, achieving the best balance of accuracy and speed.

Table 3.

Test results of the GSP-RTMDet.

3.3. Ablation Study

To validate the effectiveness of the improvements proposed in this study, ablation experiments were performed using the original RTMDet as a baseline. The environment and hardware conditions were kept consistent across all models. Improved modules sequentially replaced the original ones from backbone to neck in the model architecture, and performance was evaluated on the test set. Ablation experiments were conducted on GSP-RTMDet-T and GSP-RTMDet-S, with results presented in Table 4. The symbol “√” indicates that the improved module replaces the original one, whereas “×” denotes that the original module is retained.

Table 4.

The results of ablation study between the GSP-RTMDet and baseline.

The test results for RTMDet-T and RTMDet-S are presented in the first and fifth rows of Table 4, serving as baselines for the experiments. T1 involves adding SPPF-SAM to RTMDet-T, leading to increases of 0.80% in the bbox mAP and 0.30% in the segm mAP compared to the baseline, while maintaining the model parameters and computational complexity. This illustrates that the combined effects of the attention mechanism and improved activation function enhance the model’s detection and segmentation accuracy. T2 introduces CSP-SGE to T1, resulting in further gains of 1.00% in the bbox mAP and 0.10% in the segm mAP compared to T1, while reducing model parameters by 15.84% and increasing FPS by 4.38%. This demonstrates that replacing the original attention mechanism with the SGE module reduces model complexity and enhances detection and segmentation accuracy while boosting inference speed, with a more pronounced improvement in detection accuracy. GSP-RTMDet-T incorporates the CARAFE module on T2, further increasing the bbox mAP by 0.30% and the segm mAP by 0.70% compared to T2. This suggests a notable enhancement in segmentation accuracy, although it slightly increases model parameters; FPS is minimally affected. Following the same approach, improvement modules are progressively applied to RTMDet-S, forming S1, S2, and GSP-RTMDet-S. Compared to RTMDet-S, S1 shows gains of 0.70% in the bbox mAP and 0.60% in the segm mAP, with a slight decrease in FPS. Relative to S1, S2 achieves improvements of 1.20% in the bbox mAP and 0.20% in the segm mAP, with a 9.25% reduction in parameters and a 4.94% increase in FPS. Compared to S2, GSP-RTMDet-S shows increases of 0.30% in the bbox mAP and 0.90% in the segm mAP, with FPS remaining largely unchanged. The ablation experiments confirm the effectiveness of each improvement. 
Results suggest that integrating improvement modules can enhance the model’s detection and segmentation performance while maintaining inference speed, without increasing model complexity. The improved models achieve an optimal balance between accuracy and speed.

Figure 9 presents the detection and segmentation effect of the GSP-RTMDet compared to baselines. For image samples from various growth stages, each model’s segmentation performance is compared based on correctly detected stages, with segmentation errors highlighted in red circles. In this paper, segmentation errors are defined as localized areas or boundaries in segmentation masks that are visibly inconsistent with the ground truth. These errors are caused by the failure of the model to accurately classify the pixel categories. In Stage 1 and Stage 2, segmentation results show no significant differences among the models, attributed to the simple target morphology in these stages. In contrast, from Stage 3 to Stage 7, segmentation performance varies, with GSP-RTMDet-T and GSP-RTMDet-S results closer to the ground truth, while baselines display localized segmentation errors. These errors are mainly observed at target boundaries, which become increasingly complex as fruiting bodies grow, leading to greater segmentation challenges due to random overlaps. The results demonstrate that the enhanced feature representation and semantic segmentation abilities of the improved modules enable the models to achieve superior segmentation performance in complex morphologies and scenarios, resulting in precisely defined boundaries.

Figure 9.

Comparison of detection and segmentation results between the RTMDet and the GSP-RTMDet. Red circles denote segmentation errors. Ground truth refers to the labeled annotations corresponding to sample images, which serve as a benchmark for evaluating model performance.

3.4. Comparative Studies

To further validate the advantages of the improved model, a comparative analysis was conducted between the GSP-RTMDet and other state-of-the-art instance segmentation models, including Mask R-CNN-T (Swin-T + FPN), Mask R-CNN-S (Swin-S + FPN), SOLOv2 (ResNet50 + FPN), and CondInst (ResNet50 + FPN). Mask R-CNN incorporates the Swin Transformer as its backbone to enhance segmentation capabilities, available in T and S versions. Each model in the comparison was trained and tested under identical hardware and software environments, with training epochs matching this study, and other training parameters set to default values. The performance comparison results of different models on the test set are presented in Table 5.

Table 5.

The results of the comparative studies.

The data in Table 5 indicate that the GSP-RTMDet series models achieved superior performance in this study. Compared to Mask R-CNN-T and Mask R-CNN-S, the bbox mAP of GSP-RTMDet-T increased by 7.40% and 5.40%, the segm mAP increased by 7.00% and 6.50%, and FPS improved by 44.20% and 95.91%, respectively. For GSP-RTMDet-S, the bbox mAP increased by 8.90% and 6.90%, the segm mAP increased by 8.10% and 7.60%, and FPS improved by 35.50% and 84.09%, respectively. Thus, compared to Mask R-CNN series, the GSP-RTMDet demonstrates a comprehensive advantage in both accuracy and speed. As lightweight instance segmentation models, GSP-RTMDet-T and GSP-RTMDet-S improved the segm mAP by 2.80% and 3.90% over SOLOv2, with FPS increasing by 71.36% and 61.03%, while their parameters are only 11.20% and 21.34% of SOLOv2, achieving superior performance in segmentation accuracy and speed. Compared to the high-performance model CondInst, GSP-RTMDet-T and GSP-RTMDet-S improved the bbox mAP by 1.10% and 2.60%, the segm mAP by 1.10% and 2.20%, and FPS by 97.28% and 85.39%, while their parameters are only 15.24% and 29.04% of CondInst, offering higher accuracy with faster inference speed. The computational complexity of the GSP-RTMDet series models is lower than that of all comparison models. Considering both model size and performance, the GSP-RTMDet exhibits superiority in bounding box and instance mask accuracy, achieving optimal performance with fewer parameters and lower complexity, and presents a significant advantage in real-time capability.

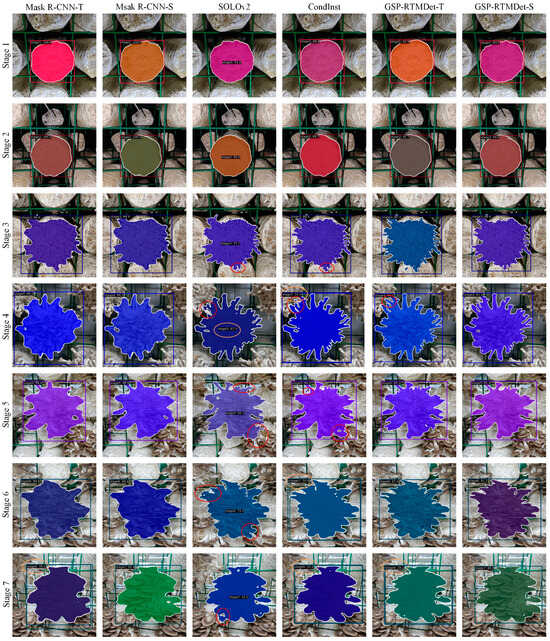

Figure 10 illustrates the detection and segmentation performance of various models. Red circles denote segmentation errors, while yellow circles indicate detection errors. In Stage 1 and Stage 2, all models deliver accurate results. However, performance varies for Stages 3 to 7.

Figure 10.

Comparison of detection and segmentation effects of different models. Red circles denote segmentation errors, while yellow circles indicate detection errors.

In terms of detection, GSP-RTMDet-S and Mask R-CNN-S perform the best. Detection errors are predominantly found in Stages 4 and 7, which are sometimes confused with Stages 3 and 6 because of smaller morphological changes and higher feature similarity. GSP-RTMDet-S enhances feature discrimination ability, improves detection accuracy, and significantly surpasses Mask R-CNN-S in inference speed. In segmentation performance, Mask R-CNN-T and Mask R-CNN-S exhibit lower precision, failing to achieve accurate target boundaries. SOLOv2 and CondInst produce better segmentation results than the Mask R-CNN series, with boundaries that closely follow the target morphology; however, errors occur near localized boundaries in complex scenarios.

The GSP-RTMDet enhances the model’s capability to learn target morphological boundaries and semantic features, improving boundary division in overlapping situations and achieving precise segmentation in complex scenes. Thus, GSP-RTMDet-S can accurately detect the growth stages of P. pulmonarius and precisely segment target morphological boundaries in complex environments.

3.5. The Environmental Parameter Control Strategy

The purpose of the growth stage detection model proposed in this study is to provide a decision-making basis for the environmental control of mushroom houses. Consequently, the GSP-RTMDet model serves as the core of the environmental control system. Based on the detected growth stage of P. pulmonarius, the system determines the environmental parameters passed to the controller, i.e., the desired environmental parameters for the current stage. This section establishes the desired environmental parameters and the control strategy, which are combined with the GSP-RTMDet’s growth stage decisions to achieve automatic environmental control in mushroom houses.

Based on the environmental conditions required for the growth process of P. pulmonarius, Table 6 outlines the desired environmental parameters for each growth stage. The control strategy is classical closed-loop PID (Proportional-Integral-Derivative) control, which enables environmental parameters to reach the desired steady-state value swiftly when adjustments are necessary. This method exhibits excellent tracking and disturbance rejection performance, making it a mature and effective technique for environmental parameter control. The closed-loop control system for the mushroom house environment is composed of the growth stage detection model, PID controller, drive circuit, environmental regulation equipment, and environmental sensors. Based on the growth stage detected by the model, the desired environmental parameters are designated as the set point for the controller. The controller then uses PID operations to generate control signals that regulate the environmental equipment, ensuring the environment consistently achieves the desired parameters. During Stages 1 and 2, to facilitate the formation of mushroom buds, environmental conditions should support mycelial knot differentiation by maintaining higher temperatures and increasing humidity in darkness. CO2 levels have little impact during these stages and can be regulated through scheduled ventilation. From Stages 3 to 6, as fruiting bodies develop, the temperature should be gradually reduced to establish a temperature differential exceeding 10 °C for uniform primordium formation. Once fruiting bodies form, relative humidity should increase, with misting during Stages 4 through 6, and lighting should be introduced. CO2 concentration influences the length of the stipe. To meet market quality standards, a higher CO2 level is maintained initially to promote elongation and is then gradually decreased. Stage 7 is the harvesting stage, during which no additional misting is applied and environmental parameters remain constant. Throughout the entire growth process of P. pulmonarius, the growth stages are detected by the model developed in this study, and the environmental parameters are adjusted using the proposed control strategy. This strategy relies on expert knowledge and production experience, which are regarded as the optimal approach. The distinction lies in using a visual detection model instead of relying on growth duration and manual observation. Accurate judgment of growth stages by the model is therefore critical and is the primary focus of this research, with the GSP-RTMDet’s performance providing assurance.

Table 6.

Environmental control parameters.
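The control loop described in this section can be sketched as follows for a single parameter, temperature. This is a toy illustration under stated assumptions: the per-stage set points are placeholders and are not the values of Table 6, the PID gains are arbitrary, and the room is modeled as a simple first-order system. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Placeholder temperature set points (degrees C) per detected growth stage.
# Illustrative values only; they are NOT taken from Table 6.
STAGE_SETPOINTS_C = {1: 26.0, 2: 26.0, 3: 22.0, 4: 19.0, 5: 16.0, 6: 14.0, 7: 14.0}


@dataclass
class PID:
    """Discrete PID controller with arbitrary, untuned example gains."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0  # no derivative action on the first sample
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_stage(stage, start_temp=15.0, ambient=15.0, steps=600, dt=0.1):
    """Drive a toy room model towards the set point of the detected stage."""
    setpoint = STAGE_SETPOINTS_C[stage]  # set point chosen from the detected stage
    pid = PID(kp=2.0, ki=0.5, kd=0.05)
    temp = start_temp
    for _ in range(steps):
        u = pid.update(setpoint, temp, dt)  # signed heating/cooling command
        # Toy first-order plant: heat exchange with ambient plus actuator effect.
        temp += dt * (0.1 * (ambient - temp) + 0.5 * u)
    return temp


if __name__ == "__main__":
    detected_stage = 1  # in practice, the output of the detection model
    print(round(simulate_stage(detected_stage), 3))
```

In a real system, the detected stage would come from the GSP-RTMDet output, one such loop would run per environmental parameter, and actuator limits would have to be handled, which this sketch omits.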

4. Discussion

P. pulmonarius differs from other edible mushrooms such as A. bisporus, shiitake mushroom, and Pleurotus eryngii. P. pulmonarius exhibits greater compactness and randomness in cluster morphology, and both the morphology and color of its fruiting bodies (including the cap) change significantly during growth. Moreover, P. pulmonarius typically grows densely in mushroom house environments, making images of it susceptible to complex scenarios such as overlap, shadows, and occlusion. These factors make the accurate detection and segmentation of P. pulmonarius in mushroom house settings highly challenging. In practical production, the growth status serves as a critical basis for decision making in mushroom house environmental control. Achieving precise environmental control requires dividing the entire growth process into detailed phases according to control requirements. One of the contributions of this study is the development of an image dataset for growth stage detection and instance segmentation. This dataset is finely annotated for both growth stages and morphological regions, providing a foundation for addressing the challenges of growth stage detection and target segmentation in complex scenarios.

In related works, the size of the fruiting body’s cap is typically used as the judgment criterion. As noted in the introduction of this paper [15,16,17,18,19,20,21], these methods have several shortcomings. They generally employ models such as YOLO to detect the cap, followed by algorithms that calculate parameters such as cap size as indicators of growth status. If the cap is not effectively detected, the methods cannot be applied. These studies frequently target automated harvesting, restricting growth status detection to mature and immature states and failing to cover the entire growth cycle. Given the growth characteristics of P. pulmonarius and its placement conditions in actual production, obtaining an accurate and undeformed cap size is challenging. Consequently, a further contribution of this study is the development of a novel growth stage detection method. A model for growth stage detection and instance segmentation throughout the complete growth cycle of P. pulmonarius was established, with a focus on meeting environmental control requirements.

The test results presented in Table 3 and Figure 9 demonstrate that the GSP-RTMDet proposed in this study performs effectively in growth stage detection and instance segmentation of P. pulmonarius. On the test set, the GSP-RTMDet’s bbox mAP, segm mAP, and FPS exceed those of the baseline. Ablation experiments shown in Table 4 confirm the effectiveness of the improved methods. The primary function of the SPPF-SAM module is to enhance the feature representation capacity of the backbone. SimAM boosts key feature information while suppressing irrelevant features, improving the model’s accuracy. Although it is a parameter-free attention mechanism, its computation can reduce inference speed. SwishReLU was therefore introduced to recover inference speed. It approximates the performance of the Swish function while retaining the efficiency of ReLU, thereby balancing accuracy and speed. The CSP-SGE module enhances the feature fusion capability of the neck, improving feature distinguishability at different growth stages and contributing to detection accuracy. The CARAFE module helps maintain precise target boundaries during upsampling, enhancing segmentation accuracy. Comparative results in Table 5 and Figure 10 indicate that the GSP-RTMDet outperforms commonly used models across various metrics.

This study developed an environment control strategy for mushroom houses centered on the GSP-RTMDet. This approach can be integrated into current environmental control systems of P. pulmonarius mushroom houses to replace manual decision making, facilitating automatic regulation of environmental parameters in response to changes throughout the growth process. Consequently, it reduces management costs, enhances operational efficiency, and offers substantial practical application value.

5. Conclusions

This study proposed a detection and segmentation method for the growth stages of P. pulmonarius based on the improved GSP-RTMDet to control environmental parameters in mushroom houses. To enhance the model’s capability in extracting features from each growth stage and addressing the challenge of precise segmentation in complex scenarios, we developed the GSP-RTMDet, which builds upon the RTMDet network as a lightweight, high-performance instance segmentation model. Key improvements consist of designing the SPPF-SAM module to replace the backbone’s SPPF, integrating the SGE attention mechanism with CSPLayer to construct the neck’s CSP-SGE module, and employing the CARAFE module for upsampling.

In a comprehensive analysis of the model’s performance during training, validation, and testing, GSP-RTMDet-S was identified as achieving the optimal accuracy–speed balance. On the test dataset, GSP-RTMDet-S attained a bbox mAP of 96.40% and a segm mAP of 93.70%, showing improvements of 2.20% and 1.70% over the baselines, respectively. Model parameters were reduced by 2.85%, and FPS increased to 39.58 img/s. The results demonstrate that the GSP-RTMDet achieves superior detection and segmentation performance while decreasing model parameters and computational complexity. Ablation experiments confirmed the effectiveness of the model’s improvements. For further validation, we compared the GSP-RTMDet with other prominent instance segmentation models using the dataset established in this study. Experimental results indicate that the GSP-RTMDet surpassed Mask R-CNN, SOLOv2, and CondInst in the bbox mAP, segm mAP, and FPS, achieving top performance with the fewest parameters. The detection and segmentation effects showed that the GSP-RTMDet exhibits enhanced accuracy in complex scenarios, providing precise and efficient growth state perception for P. pulmonarius. Based on these findings, a growth stage-based environmental parameter control strategy was proposed for supporting autonomous regulation of environmental parameters during the P. pulmonarius growth process.

Future work will focus on enhancing the model’s generalization capabilities by increasing data diversity to address the challenges posed by variable production scenes, such as image deviations due to differences in environments and collection conditions. Additionally, multimodal perceptual inputs, including the fusion of 3D and 2D visual information, will be explored to achieve precise measurement of fruiting body phenotypic parameters. This will further refine the model’s ability to express and differentiate growth states. Further development of growth environment control systems for mushroom houses will aim at improving production efficiency and reducing management costs.

Author Contributions

Conceptualization, C.W. and D.Y.; methodology, C.W. and X.K.; software, Z.W.; validation, Z.W. and H.S.; formal analysis, C.W. and X.W.; investigation, X.K. and X.W.; resources, D.Y. and X.K.; data curation, C.W., Z.W. and H.S.; writing—original draft preparation, C.W. and X.W.; writing—review and editing, C.W. and X.W.; C.W. and X.W. jointly completed the manuscript writing; visualization, X.W.; project administration, C.W. and D.Y.; funding acquisition, X.W., D.Y. and X.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Fujian Agriculture and Forestry University Visiting Study Project (Fujian Agriculture and Forestry University, grant number KFXH23032) and the Natural Science Foundation of Fujian Province, China (Fujian Provincial Department of Science and Technology, grant number 2024J01420).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Data will be made available on reasonable request.

Acknowledgments

This research was conducted at the College of Mechanical and Electrical Engineering and the School of Future Technology, Fujian Agriculture and Forestry University, Fuzhou, China.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Li, Q.; Ying, X.; Yang, Y.; Gao, W. Genetic Diversity and Genome-Wide Association Study of Pleurotus pulmonarius Germplasm. Agriculture 2024, 14, 2023. [Google Scholar] [CrossRef]

- Wang, Q.; Meng, L.; Wang, X.; Zhao, W.; Shi, X.; Wang, W.; Li, Z.; Wang, L. The Yield, Nutritional Value, Umami Components and Mineral Contents of the First-Flush and Second-Flush Pleurotus pulmonarius Mushrooms Grown on Three Forestry Wastes. Food Chem. 2022, 397, 133714. [Google Scholar] [CrossRef] [PubMed]

- Han, J.-H.; Kwon, H.-J.; Yoon, J.-Y.; Kim, K.; Nam, S.-W.; Son, J.E. Analysis of the Thermal Environment in a Mushroom House Using Sensible Heat Balance and 3-D Computational Fluid Dynamics. Biosyst. Eng. 2009, 104, 417–424. [Google Scholar] [CrossRef]

- Rajarathnam, S.; Bano, Z.; Miles, P.G. Pleurotus mushrooms. Part I A. Morphology, Life Cycle, Taxonomy, Breeding, and Cultivation. Crit. Rev. Food Sci. Nutr. 1987, 26, 157–223. [Google Scholar] [CrossRef]

- Barauskas, R.; Kriščiūnas, A.; Čalnerytė, D.; Pilipavičius, P.; Fyleris, T.; Daniulaitis, V.; Mikalauskis, R. Approach of AI-Based Automatic Climate Control in White Button Mushroom Growing Hall. Agriculture 2022, 12, 1921. [Google Scholar] [CrossRef]

- Wahab, H.A.; Manap, M.Z.I.A.; Ismail, A.E.; Pauline, O.; Ismon, M.; Zainulabidin, M.H.; Noor, F.M.; Mohamad, Z. Investigation of Temperature and Humidity Control System for Mushroom House. Int. J. Integr. Eng. 2019, 11, 27–37. [Google Scholar]

- Chen, L.; Qian, L.; Zhang, X.; Li, J.; Zhang, Z.; Chen, X. Research Progress on Indoor Environment of Mushroom Factory. Int. J. Agric. Biol. Eng. 2022, 15, 25–32. [Google Scholar] [CrossRef]

- Chong, J.L.; Chew, K.W.; Peter, A.P.; Ting, H.Y.; Show, P.L. Internet of Things (IoT)-Based Environmental Monitoring and Control System for Home-Based Mushroom Cultivation. Biosensors 2023, 13, 98. [Google Scholar] [CrossRef]

- Kavaliauskas, Ž.; Šajev, I.; Gecevičius, G.; Čapas, V. Intelligent Control of Mushroom Growing Conditions Using an Electronic System for Monitoring and Maintaining Environmental Parameters. Appl. Sci. 2022, 12, 13040. [Google Scholar] [CrossRef]

- Thong-un, N.; Wongsaroj, W. Productivity Enhancement Using Low-Cost Smart Wireless Programmable Logic Controllers: A Case Study of an Oyster Mushroom Farm. Comput. Electron. Agric. 2022, 195, 106798. [Google Scholar] [CrossRef]

- Elbasi, E.; Mostafa, N.; AlArnaout, Z.; Zreikat, A.I.; Cina, E.; Varghese, G.; Shdefat, A.; Topcu, A.E.; Abdelbaki, W.; Mathew, S.; et al. Artificial Intelligence Technology in the Agricultural Sector: A Systematic Literature Review. IEEE Access 2023, 11, 171–202. [Google Scholar] [CrossRef]

- Irwanto, F.; Hasan, U.; Lays, E.S.; De La Croix, N.J.; Mukanyiligira, D.; Sibomana, L.; Ahmad, T. IoT and Fuzzy Logic Integration for Improved Substrate Environment Management in Mushroom Cultivation. Smart Agric. Technol. 2024, 7, 100427. [Google Scholar] [CrossRef]

- Arun Kumar, K.; Karthikeyan, J. Review on Implementation of IoT for Environmental Condition Monitoring in the Agriculture Sector. J. Ambient. Intell. Hum. Comput. 2022, 13, 183–200. [Google Scholar] [CrossRef]

- Yin, H.; Yi, W.; Hu, D. Computer Vision and Machine Learning Applied in the Mushroom Industry: A Critical Review. Comput. Electron. Agric. 2022, 198, 107015. [Google Scholar] [CrossRef]

- Lu, C.-P.; Liaw, J.-J.; Wu, T.-C.; Hung, T.-F. Development of a Mushroom Growth Measurement System Applying Deep Learning for Image Recognition. Agronomy 2019, 9, 32. [Google Scholar] [CrossRef]

- Wei, Q.; Wang, Y.; Yang, S.; Guo, C.; Wu, L.; Yin, H. Moving toward Automaticity: A Robust Synthetic Occlusion Image Method for High-Throughput Mushroom Cap Phenotype Extraction. Agronomy 2024, 14, 1337. [Google Scholar] [CrossRef]

- Tao, K.; Liu, J.; Wang, Z.; Yuan, J.; Liu, L.; Liu, X. ReYOLO-MSM: A Novel Evaluation Method of Mushroom Stick for Selective Harvesting of Shiitake Mushroom Sticks. Comput. Electron. Agric. 2024, 225, 109292. [Google Scholar] [CrossRef]

- Sujatanagarjuna, A.; Kia, S.; Briechle, D.F.; Leiding, B. MushR: A Smart, Automated, and Scalable Indoor Harvesting System for Gourmet Mushrooms. Agriculture 2023, 13, 1533. [Google Scholar] [CrossRef]

- Shi, L.; Wei, Z.; You, H.; Wang, J.; Bai, Z.; Yu, H.; Ji, R.; Bi, C. OMC-YOLO: A Lightweight Grading Detection Method for Oyster Mushrooms. Horticulturae 2024, 10, 742. [Google Scholar] [CrossRef]

- Wu, Y.; Sun, Y.; Zhang, S.; Liu, X.; Zhou, K.; Hou, J. A Size-Grading Method of Antler Mushrooms Using YOLOv5 and PSPNet. Agronomy 2022, 12, 2601. [Google Scholar] [CrossRef]

- Wang, Y.; Yang, L.; Chen, H.; Hussain, A.; Ma, C.; Al-gabri, M. Mushroom-YOLO: A Deep Learning Algorithm for Mushroom Growth Recognition Based on Improved YOLOv5 in Agriculture 4.0. In Proceedings of the 2022 IEEE 20th International Conference on Industrial Informatics (INDIN), Perth, Australia, 25–28 July 2022; pp. 239–244. [Google Scholar]

- Wang, X.; Zhang, R.; Kong, T.; Li, L.; Shen, C. SOLOv2: Dynamic and Fast Instance Segmentation. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020; Volume 33, pp. 17721–17732. [Google Scholar]

- Tian, Z.; Shen, C.; Chen, H. Conditional Convolutions for Instance Segmentation. In Proceedings of the Computer Vision—ECCV 2020, Virtual, 23–28 August 2020; pp. 282–298.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Virtual, 11–17 October 2021; pp. 9992–10002.

- Fu, R.; He, J.; Liu, G.; Li, W.; Mao, J.; He, M.; Lin, Y. Fast Seismic Landslide Detection Based on Improved Mask R-CNN. Remote Sens. 2022, 14, 3928.

- Liu, S.; Zou, H.; Huang, Y.; Cao, X.; He, S.; Li, M.; Zhang, Y. ERF-RTMDet: An Improved Small Object Detection Method in Remote Sensing Images. Remote Sens. 2023, 15, 5575.

- Tongcham, P.; Supa, P.; Pornwongthong, P.; Prasitmeeboon, P. Mushroom Spawn Quality Classification with Machine Learning. Comput. Electron. Agric. 2020, 179, 105865.

- Shen, Y.; Gu, M.; Jin, Q.; Fan, L.; Feng, W.; Song, T.; Tian, F.; Cai, W. Effects of Cold Stimulation on Primordial Initiation and Yield of Pleurotus pulmonarius. Sci. Hortic. 2014, 167, 100–106.

- Russell, B.C.; Torralba, A.; Murphy, K.P.; Freeman, W.T. LabelMe: A Database and Web-Based Tool for Image Annotation. Int. J. Comput. Vis. 2008, 77, 157–173.

- Shorten, C.; Khoshgoftaar, T.M. A Survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

- Kumar, T.; Brennan, R.; Mileo, A.; Bendechache, M. Image Data Augmentation Approaches: A Comprehensive Survey and Future Directions. IEEE Access 2024, 12, 187536–187571.

- Chen, X.; Yang, C.; Mo, J.; Sun, Y.; Karmouni, H.; Jiang, Y.; Zheng, Z. CSPNeXt: A New Efficient Token Hybrid Backbone. Eng. Appl. Artif. Intell. 2024, 132, 107886.

- Ghiasi, G.; Lin, T.-Y.; Le, Q.V. NAS-FPN: Learning Scalable Feature Pyramid Architecture for Object Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Los Angeles, CA, USA, 16–20 June 2019; pp. 7029–7038.

- Yang, L.; Zhang, R.-Y.; Li, L.; Xie, X. SimAM: A Simple, Parameter-Free Attention Module for Convolutional Neural Networks. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 11863–11874.

- Wang, J.; Chen, K.; Xu, R.; Liu, Z.; Loy, C.C.; Lin, D. CARAFE: Content-Aware ReAssembly of FEatures. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27–30 October 2019; pp. 3007–3016.

- Ding, J.; Huang, R.; Liang, Y.; Weng, X.; Chen, J.; You, H. Oilpalm-RTMDet: An Lightweight Oil Palm Detector Base on RTMDet. Ecol. Inform. 2025, 85, 103000.

- Rahman, J.U.; Zulfiqar, R.; Khan, A. SwishReLU: A Unified Approach to Activation Functions for Enhanced Deep Neural Networks Performance. arXiv 2024, arXiv:2407.08232.

- Li, X.; Lv, C.; Wang, W.; Li, G.; Yang, L.; Yang, J. Generalized Focal Loss: Towards Efficient Representation Learning for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 3139–3153.

- Zhang, Y.-F.; Ren, W.; Zhang, Z.; Jia, Z.; Wang, L.; Tan, T. Focal and Efficient IOU Loss for Accurate Bounding Box Regression. Neurocomputing 2022, 506, 146–157.

- Wang, L.; Wang, C.; Sun, Z.; Chen, S. An Improved Dice Loss for Pneumothorax Segmentation by Mining the Information of Negative Areas. IEEE Access 2020, 8, 167939–167949.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).