Advanced Technology in Agriculture Industry by Implementing Image Annotation Technique and Deep Learning Approach: A Review

Abstract

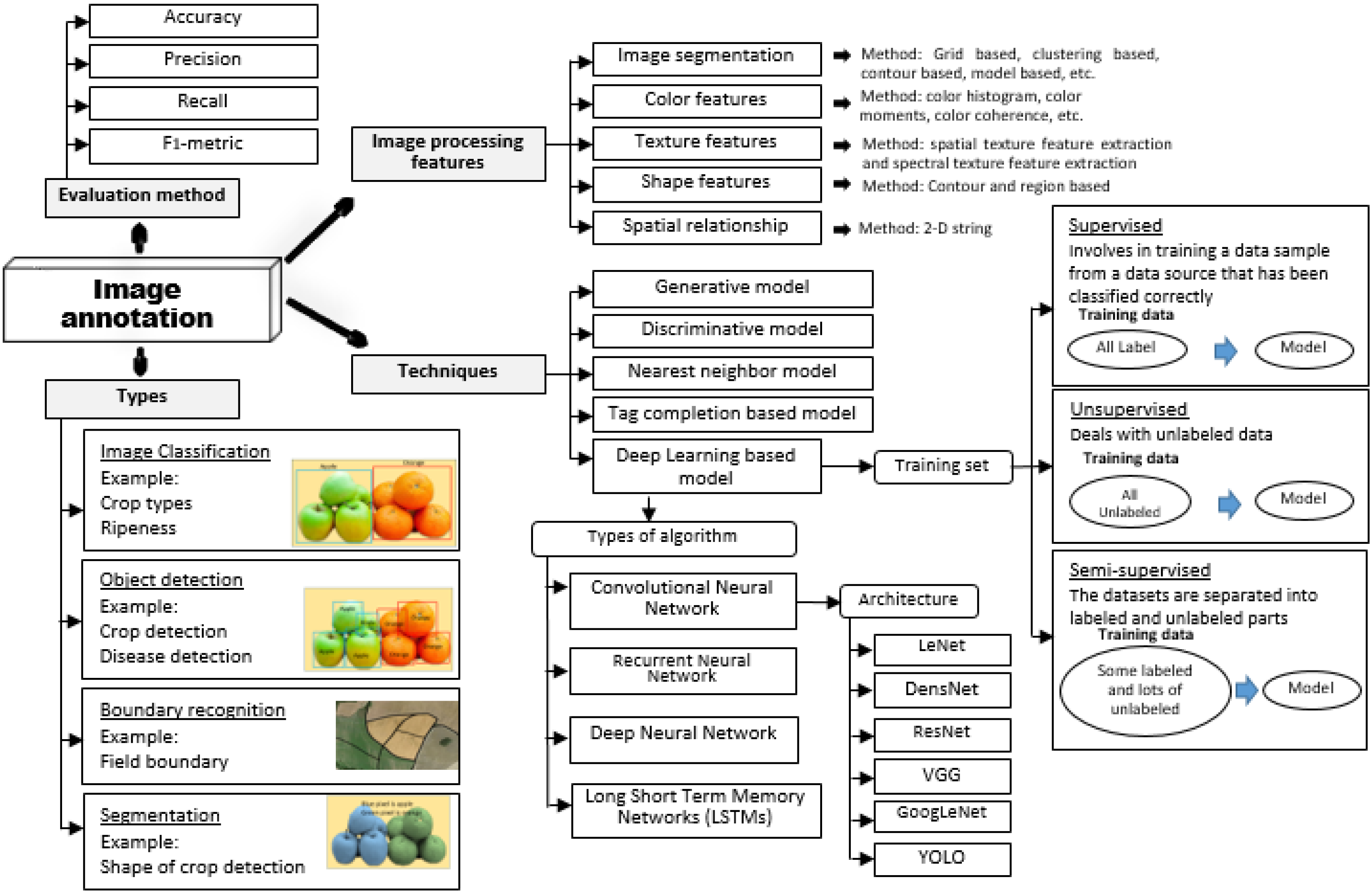

1. Introduction

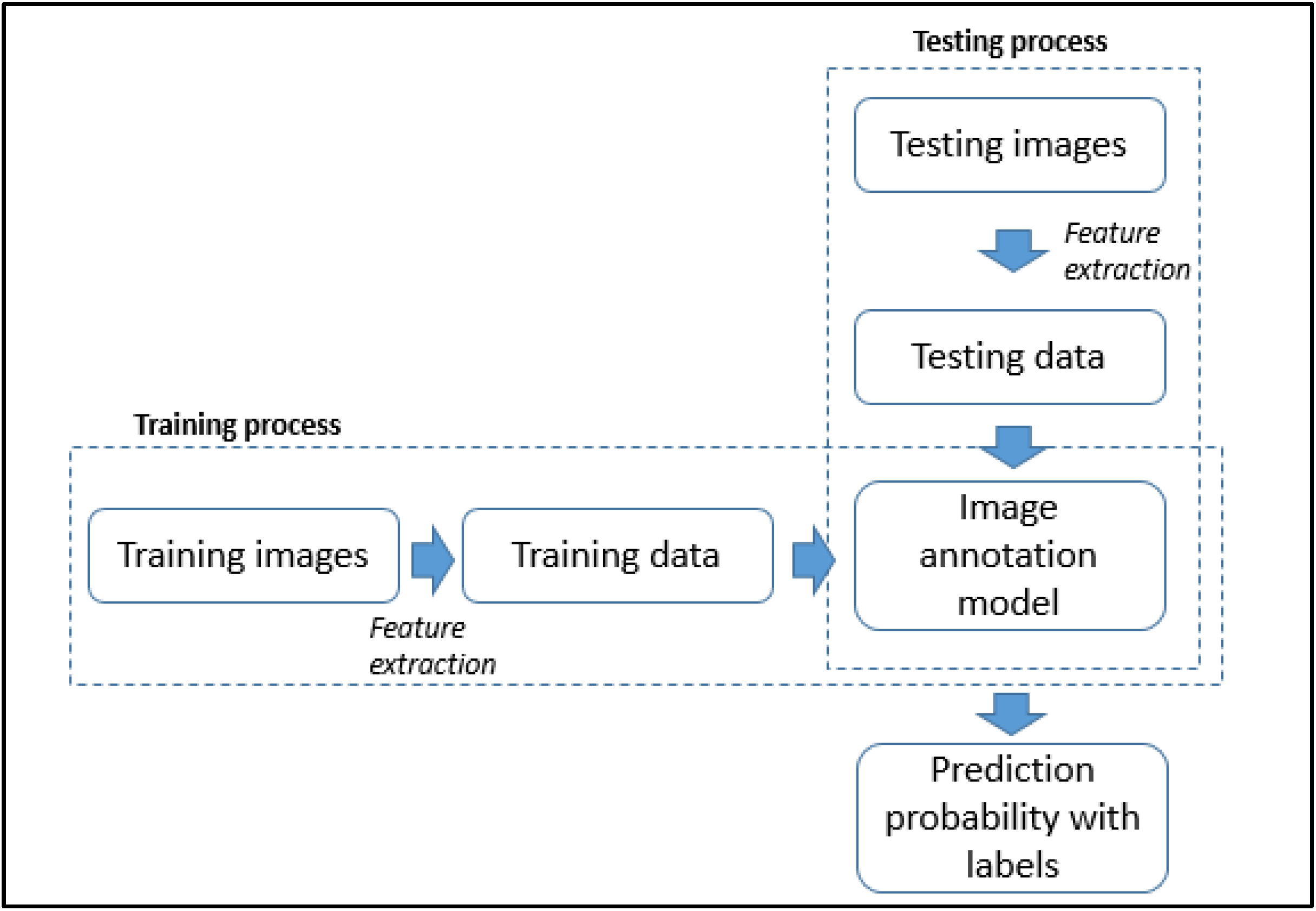

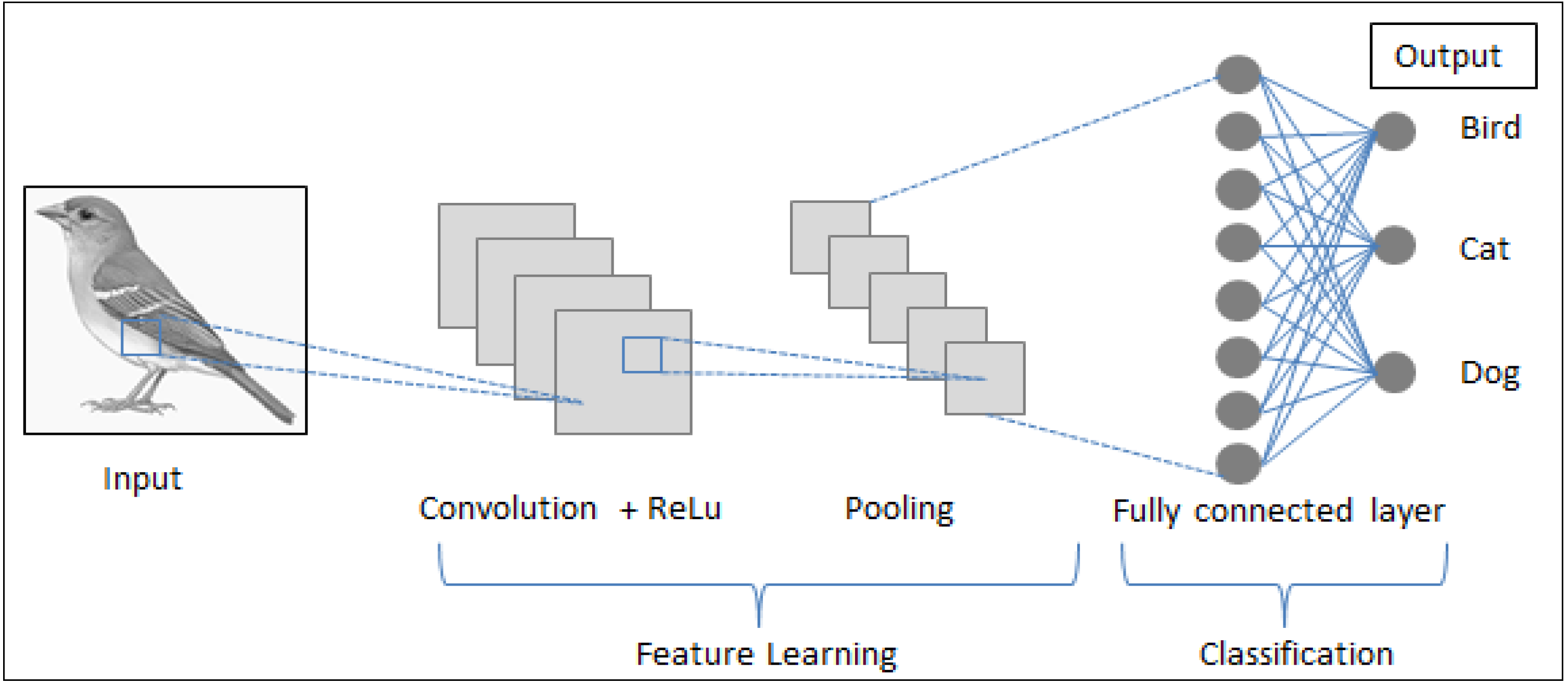

2. Deep Learning for Image Annotation

3. Deep Learning Architecture

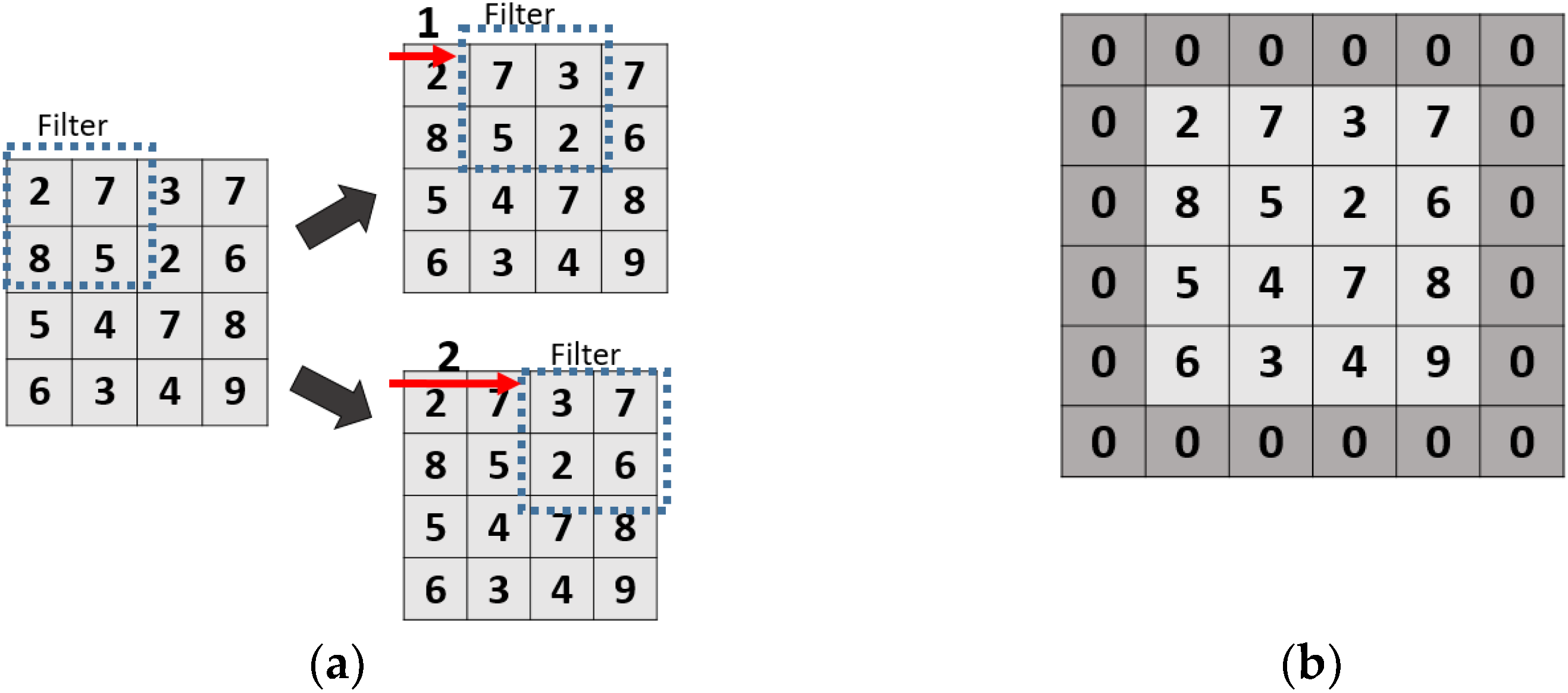

3.1. Convolutional Layer

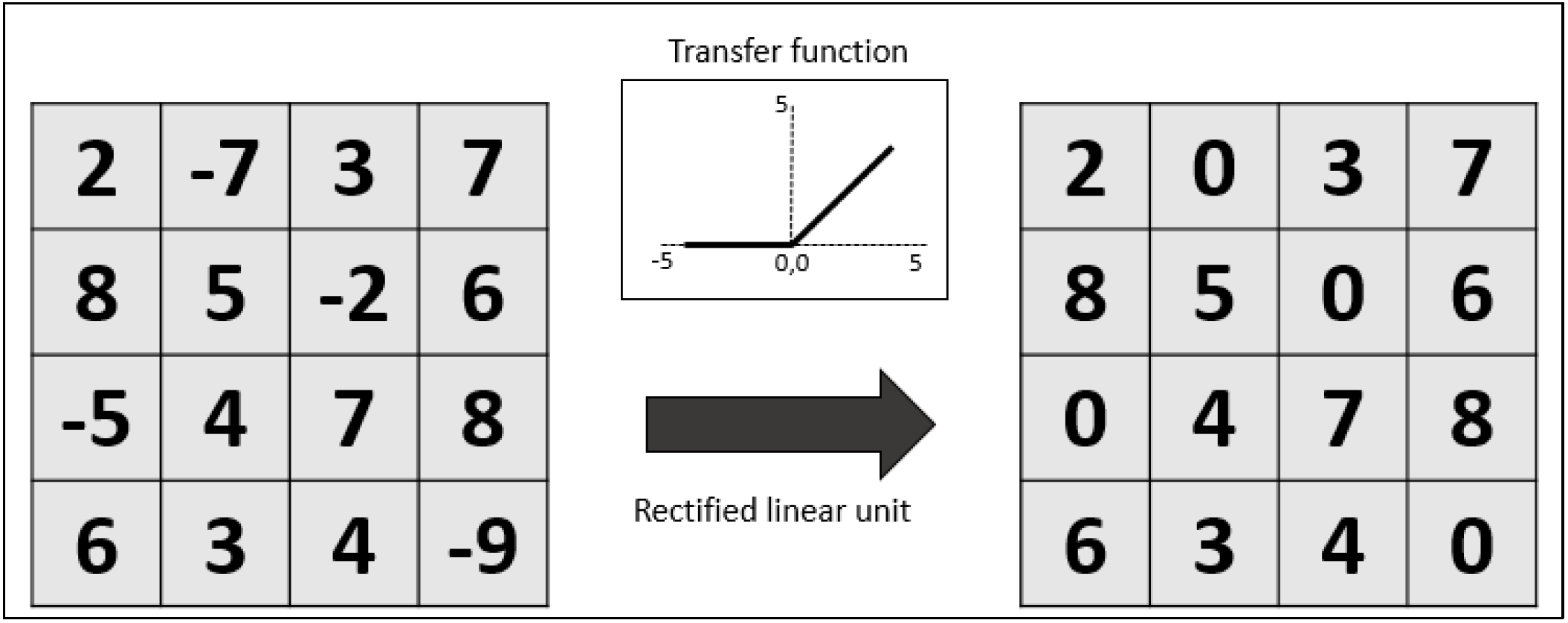
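
A convolutional layer slides small kernels over its input and, at each position, sums the element-wise products of the kernel and the underlying patch. The pure-Python sketch below shows that operation for a single channel with no padding. The function name `conv2d`, the toy image, and the hand-set edge kernel are illustrative assumptions, not taken from any reviewed study; real frameworks learn the kernel weights and add multiple channels, padding, and a bias. For input size H, kernel size K, and stride S, the output side length is (H - K) / S + 1, rounded down.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix, stride: int = 1) -> Matrix:
    """Valid (no padding) 2D convolution for one channel (hypothetical helper).

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is slid over the image without being flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out_h = (ih - kh) // stride + 1
    out_w = (iw - kw) // stride + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Sum of element-wise products between the kernel and one image patch.
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += image[i * stride + u][j * stride + v] * kernel[u][v]
            row.append(acc)
        output.append(row)
    return output


# Toy 4x4 image with a vertical edge, and a 2x2 vertical-edge kernel.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, kernel)
print(feature_map)
```

The kernel responds only where intensity rises from left to right, so the 3 × 3 feature map is non-zero only in the column that straddles the edge.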

3.2. Activation Function

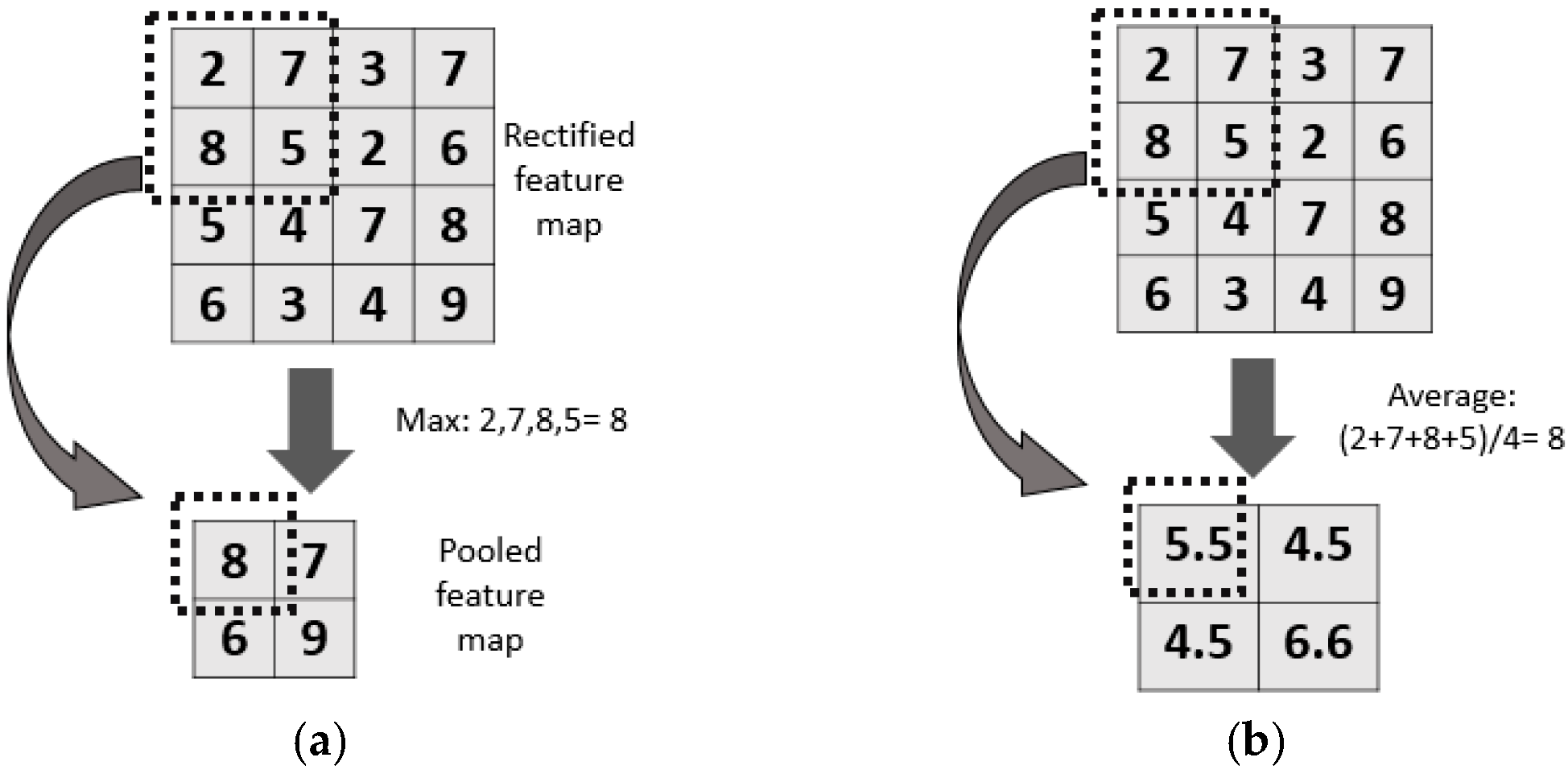
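
Activation functions add the non-linearity that lets stacked layers model more than a single linear map. A minimal sketch of four common choices follows. The function names are illustrative, the sigmoid is written in a numerically stable form so large negative inputs do not overflow, and `alpha = 0.01` for the leaky ReLU is an assumed default rather than a value from the source.

```python
import math


def sigmoid(x: float) -> float:
    """Squashes x into (0, 1); split into two branches to avoid exp overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def tanh(x: float) -> float:
    """Squashes x into (-1, 1); zero-centred, unlike the sigmoid."""
    return math.tanh(x)


def relu(x: float) -> float:
    """Passes positive inputs unchanged and sets negative inputs to zero."""
    return max(0.0, x)


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Like ReLU, but keeps a small slope alpha (assumed value) for negative inputs."""
    return x if x > 0 else alpha * x


for x in (-2.0, 0.0, 2.0):
    print(f"x={x:+.1f}  sigmoid={sigmoid(x):.3f}  tanh={tanh(x):.3f}  "
          f"relu={relu(x):.1f}  leaky_relu={leaky_relu(x):.2f}")
```

ReLU is cheap to compute and does not saturate for positive inputs, which is one reason it is the default in most of the CNN architectures discussed in Section 4.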

3.3. Pooling Layer
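
Pooling layers downsample a feature map by summarizing each local window, which shrinks the spatial size and adds some tolerance to small shifts of the input. The sketch below assumes a single-channel map and the common 2 × 2 window with stride 2, and implements both max and average pooling through one hypothetical helper, `pool2d`, whose `reduce` argument selects the summary.

```python
from typing import Callable, List, Sequence

Matrix = List[List[float]]


def pool2d(feature_map: Matrix, size: int = 2, stride: int = 2,
           reduce: Callable[[Sequence[float]], float] = max) -> Matrix:
    """Slides a size x size window over the map and reduces each window (hypothetical helper)."""
    h, w = len(feature_map), len(feature_map[0])
    out = []
    for i in range(0, h - size + 1, stride):
        row = []
        for j in range(0, w - size + 1, stride):
            window = [feature_map[i + u][j + v]
                      for u in range(size) for v in range(size)]
            row.append(reduce(window))
        out.append(row)
    return out


def mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


fmap = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 5, 7],
    [1, 1, 3, 9],
]
print(pool2d(fmap))               # max pooling
print(pool2d(fmap, reduce=mean))  # average pooling
```

Max pooling keeps the strongest response in each window, while average pooling keeps the mean; both reduce a 4 × 4 map to 2 × 2 here.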

3.4. Fully Connected Layer
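
A fully connected layer flattens the final feature maps into a vector and connects every input value to every output unit, computing W x + b. The following sketch assumes a single 2 × 2 pooled map and hand-picked weights for two output units; `flatten` and `dense` are hypothetical names, and in a trained network W and b would be learned rather than set by hand.

```python
from typing import List


def flatten(feature_maps: List[List[List[float]]]) -> List[float]:
    """Flattens C x H x W feature maps into one vector, channel by channel."""
    return [v for channel in feature_maps for row in channel for v in row]


def dense(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Fully connected layer (hypothetical helper): every output sees every input.

    weights has shape (out_features, in_features); computes W x + b.
    """
    if any(len(w_row) != len(x) for w_row in weights):
        raise ValueError("each weight row must match the input length")
    return [sum(w * xi for w, xi in zip(w_row, x)) + b
            for w_row, b in zip(weights, bias)]


pooled = [[[6.0, 2.0], [2.0, 9.0]]]   # one 2x2 feature map (toy values)
x = flatten(pooled)                    # 4 input features
W = [[0.1, 0.0, 0.0, 0.2],             # 2 output units, e.g. two classes
     [0.0, 0.5, 0.5, 0.0]]
b = [0.0, -1.0]
print(dense(x, W, b))
```

The two outputs are raw class scores (logits); a softmax or sigmoid is normally applied afterwards to turn them into probabilities.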

3.5. Loss Function
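
The loss function measures how far a prediction is from the ground truth and is the quantity minimized during training. As a sketch, the block below implements categorical cross-entropy over softmax outputs, a usual choice for classification, and mean squared error, a common choice for regression targets such as yield. The function names and example scores are assumptions for illustration; cross-entropy is computed through the log-sum-exp identity so that large scores do not overflow.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Turns raw scores into probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits: List[float], target: int) -> float:
    """Categorical cross-entropy for one sample: -log softmax(logits)[target]."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def mse(pred: List[float], true: List[float]) -> float:
    """Mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(pred, true)) / len(pred)


logits = [2.0, 0.5, -1.0]               # hypothetical scores for 3 classes
print(cross_entropy(logits, target=0))  # small loss: class 0 has the highest score
print(cross_entropy(logits, target=2))  # larger loss: class 2 has the lowest score
print(mse([2.5, 0.0], [3.0, -0.5]))
```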

4. Improvement of CNN Architecture

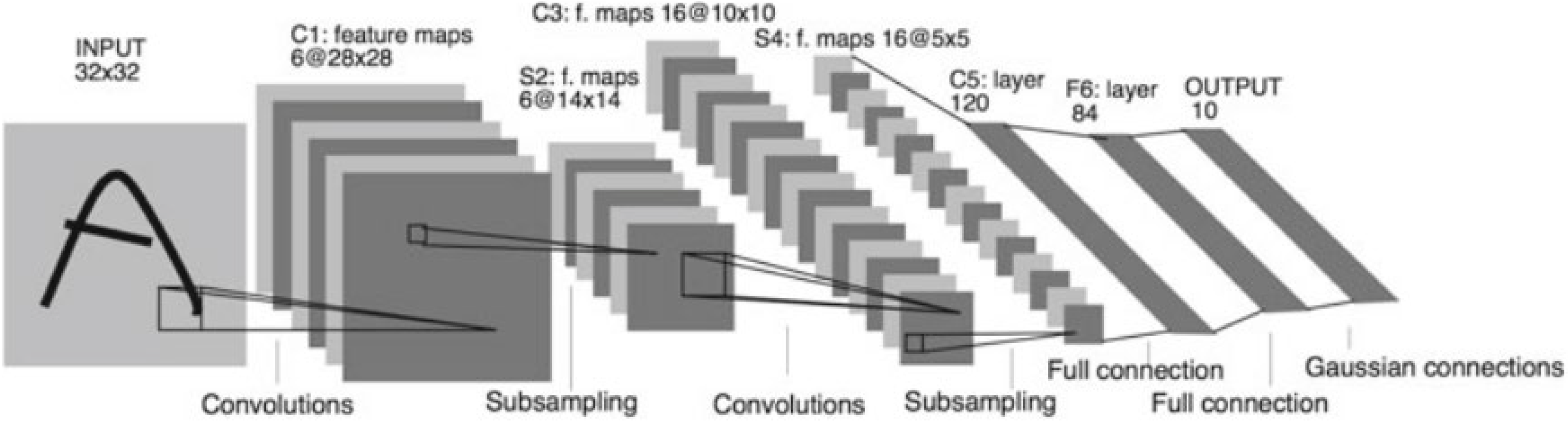

4.1. LeNet

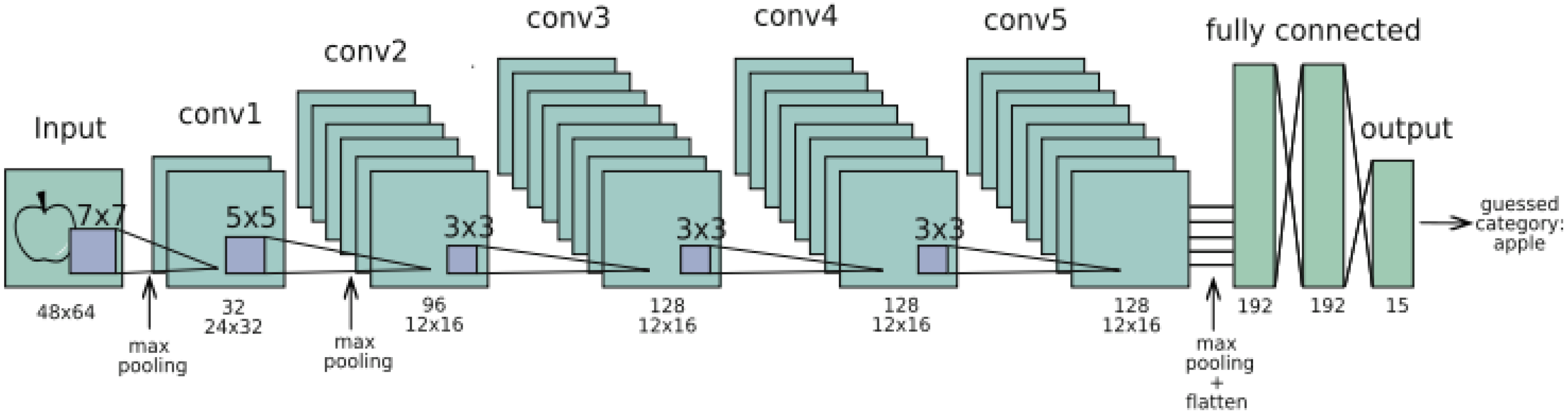

4.2. AlexNet

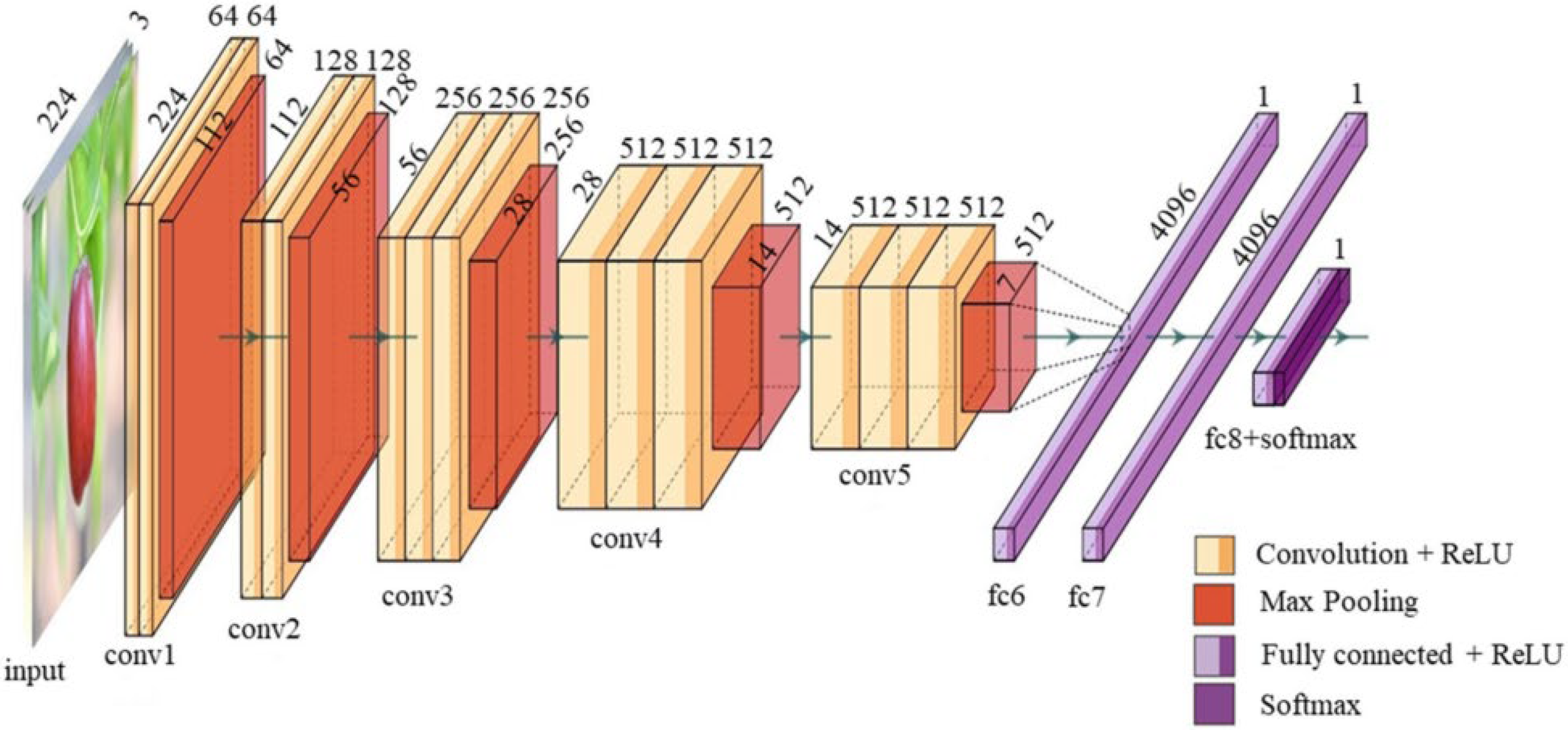

4.3. VGG

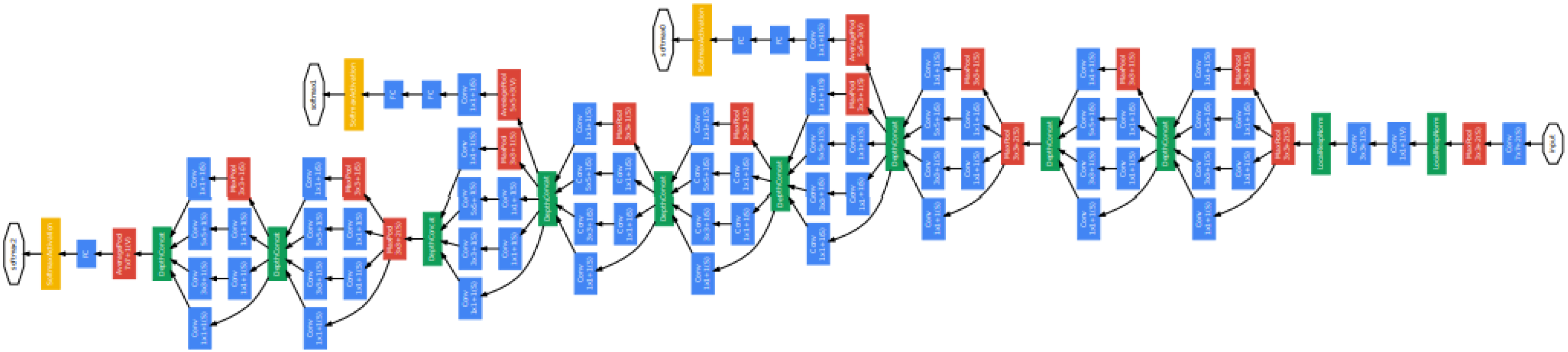

4.4. GoogLeNet/Inception

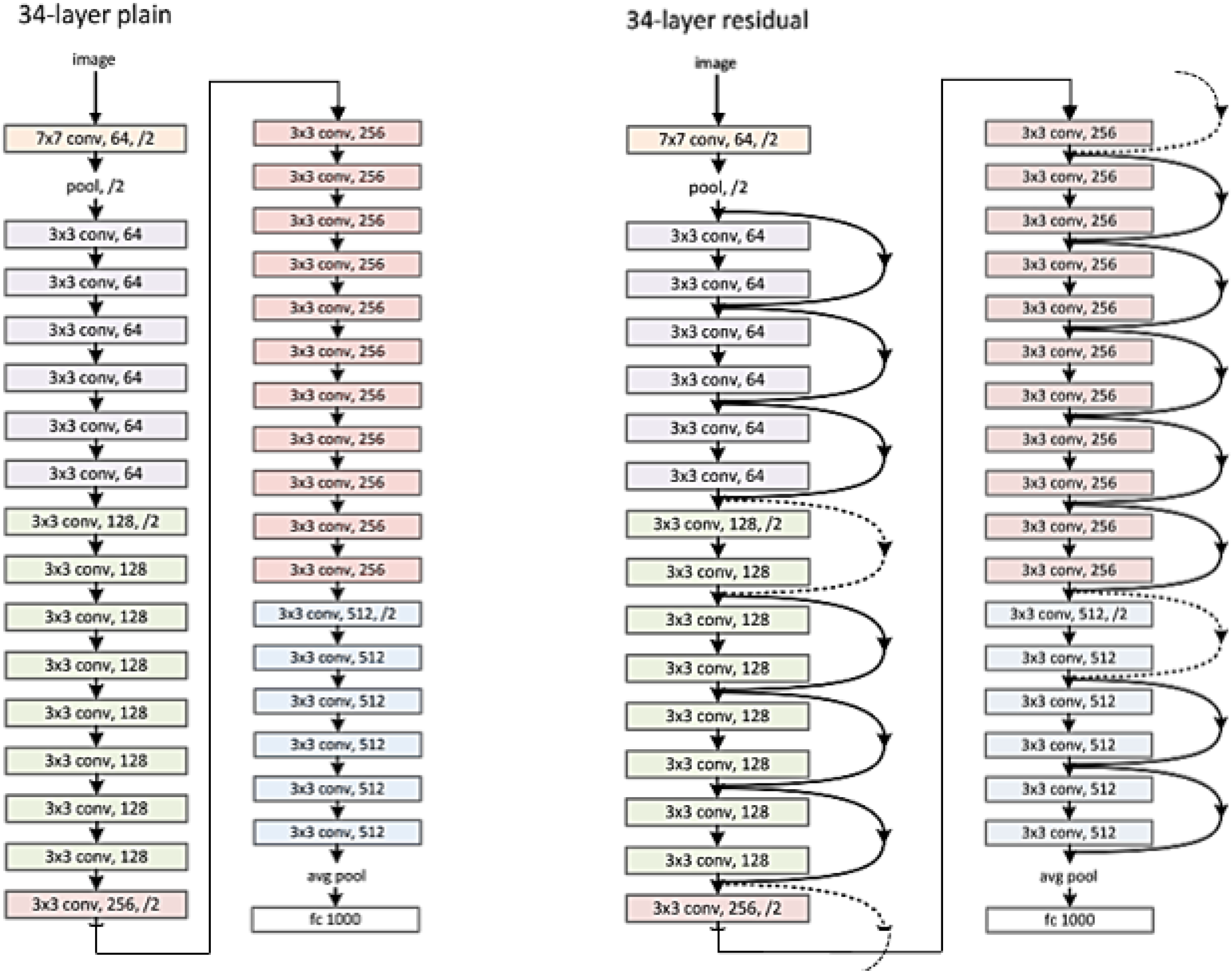

4.5. Residual Network (ResNet)

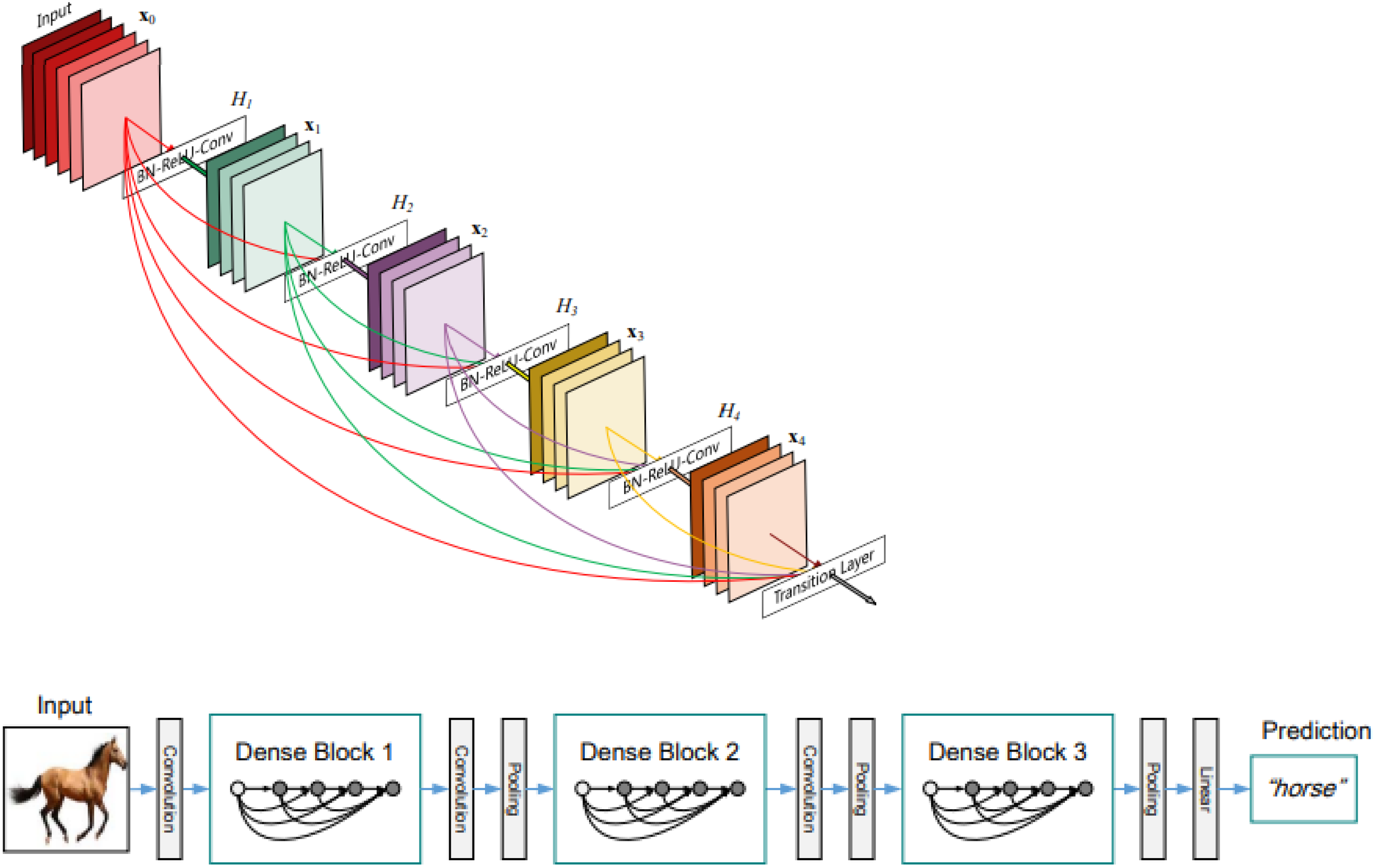
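
The central idea of ResNet is the residual block, whose output is ReLU(F(x) + x): the stacked layers learn only a residual F(x), while an identity shortcut carries x forward unchanged. The sketch below assumes plain bias-free linear maps in place of the convolution and batch-normalization layers of a real ResNet block; `linear` and `residual_block` are illustrative names.

```python
from typing import Callable, List

Vector = List[float]
Layer = Callable[[Vector], Vector]


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


def linear(weights: List[List[float]]) -> Layer:
    """Hypothetical stand-in for a conv layer: a bias-free map x -> W x."""
    return lambda x: [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def residual_block(x: Vector, layer1: Layer, layer2: Layer) -> Vector:
    """Basic residual block: ReLU(F(x) + x), with F(x) = layer2(ReLU(layer1(x)))."""
    f = layer2(relu(layer1(x)))
    return relu([fi + xi for fi, xi in zip(f, x)])  # identity shortcut adds x back


zero = linear([[0.0, 0.0], [0.0, 0.0]])
x = [1.5, 2.0]
print(residual_block(x, zero, zero))
```

With all-zero weights, F(x) = 0 and the block reduces to the identity for non-negative inputs. This illustrates the intuition behind ResNet: a block that is not needed can learn to pass its input through rather than degrade it, which eases the training of very deep networks.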

4.6. DenseNet

4.7. You Only Look Once (YOLO)
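
YOLO-family detectors predict many overlapping candidate boxes per object and, like most modern detectors, use intersection over union (IoU) and non-maximum suppression (NMS) to keep the best one. The following is a generic greedy NMS sketch, not the exact post-processing of any particular YOLO version. Boxes are assumed to be (x1, y1, x2, y2) corner coordinates, and the 0.5 IoU threshold and example detections are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corners, assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the top-scoring box, drop boxes overlapping it, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


# Hypothetical detections: two overlapping boxes on one fruit, one on another.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.75, 0.8]
print(non_max_suppression(boxes, scores))
```

Lowering `iou_threshold` suppresses more aggressively, which can merge genuinely adjacent fruits in dense canopies; raising it keeps more duplicate boxes.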

4.8. Performance Metric

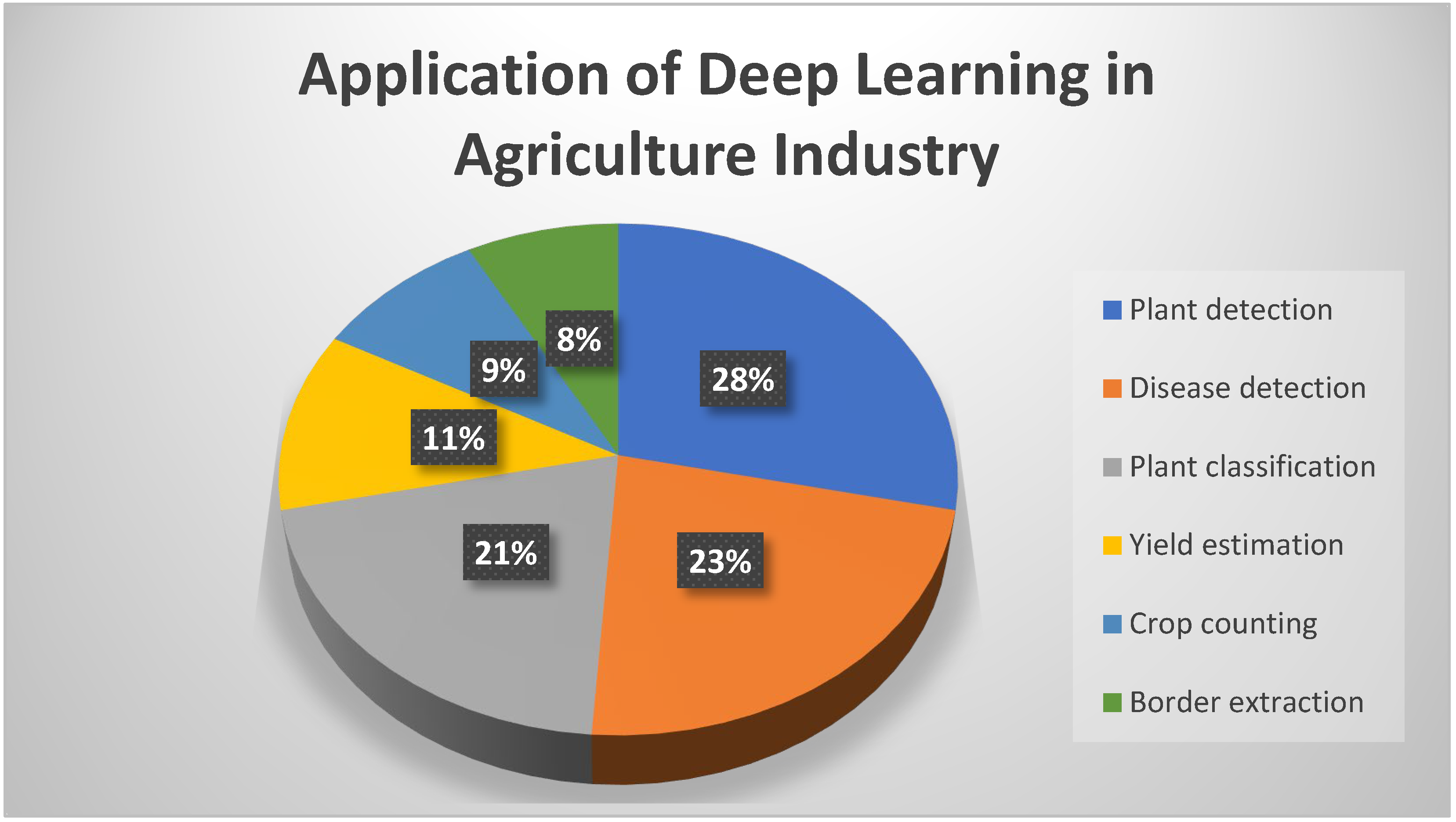
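
The results reported in Section 5 rely mainly on accuracy, precision, recall, and the F1 score, all of which follow from counts of true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN): precision = TP / (TP + FP), recall = TP / (TP + FN), F1 = 2PR / (P + R), and accuracy = (TP + TN) / (TP + FP + FN + TN). A minimal sketch follows; the `Counts` container, the function names, and the example counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    """Confusion-matrix counts for one class (hypothetical container)."""
    tp: int      # true positives: correct detections or classifications
    fp: int      # false positives: predictions with no matching ground truth
    fn: int      # false negatives: ground-truth instances that were missed
    tn: int = 0  # true negatives: often undefined in object detection


def precision(c: Counts) -> float:
    return c.tp / (c.tp + c.fp) if c.tp + c.fp else 0.0


def recall(c: Counts) -> float:
    return c.tp / (c.tp + c.fn) if c.tp + c.fn else 0.0


def f1_from_pr(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r else 0.0


def f1_score(c: Counts) -> float:
    return f1_from_pr(precision(c), recall(c))


def accuracy(c: Counts) -> float:
    total = c.tp + c.fp + c.fn + c.tn
    return (c.tp + c.tn) / total if total else 0.0


# Hypothetical leaf-disease classifier: 90 diseased leaves found, 10 healthy
# leaves flagged by mistake, 5 diseased leaves missed, 95 healthy leaves passed.
c = Counts(tp=90, fp=10, fn=5, tn=95)
print(f"precision={precision(c):.3f} recall={recall(c):.3f} "
      f"F1={f1_score(c):.3f} accuracy={accuracy(c):.3f}")

# Cross-check a reported result: precision 83.83%, recall 91.48%.
print(f"F1 = {f1_from_pr(0.8383, 0.9148):.4f}")
```

`f1_from_pr` also lets reported values be cross-checked: precision 83.83% and recall 91.48% give F1 ≈ 87.49%, matching the figure reported for [178] in Section 5.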

5. Results and Discussion

| Authors | Research Problem | Dataset Collection Method | Dataset Pre-processing Method | DL Method | Datasets Used | Result (Accuracy, Score, Detection Time) |

|---|---|---|---|---|---|---|

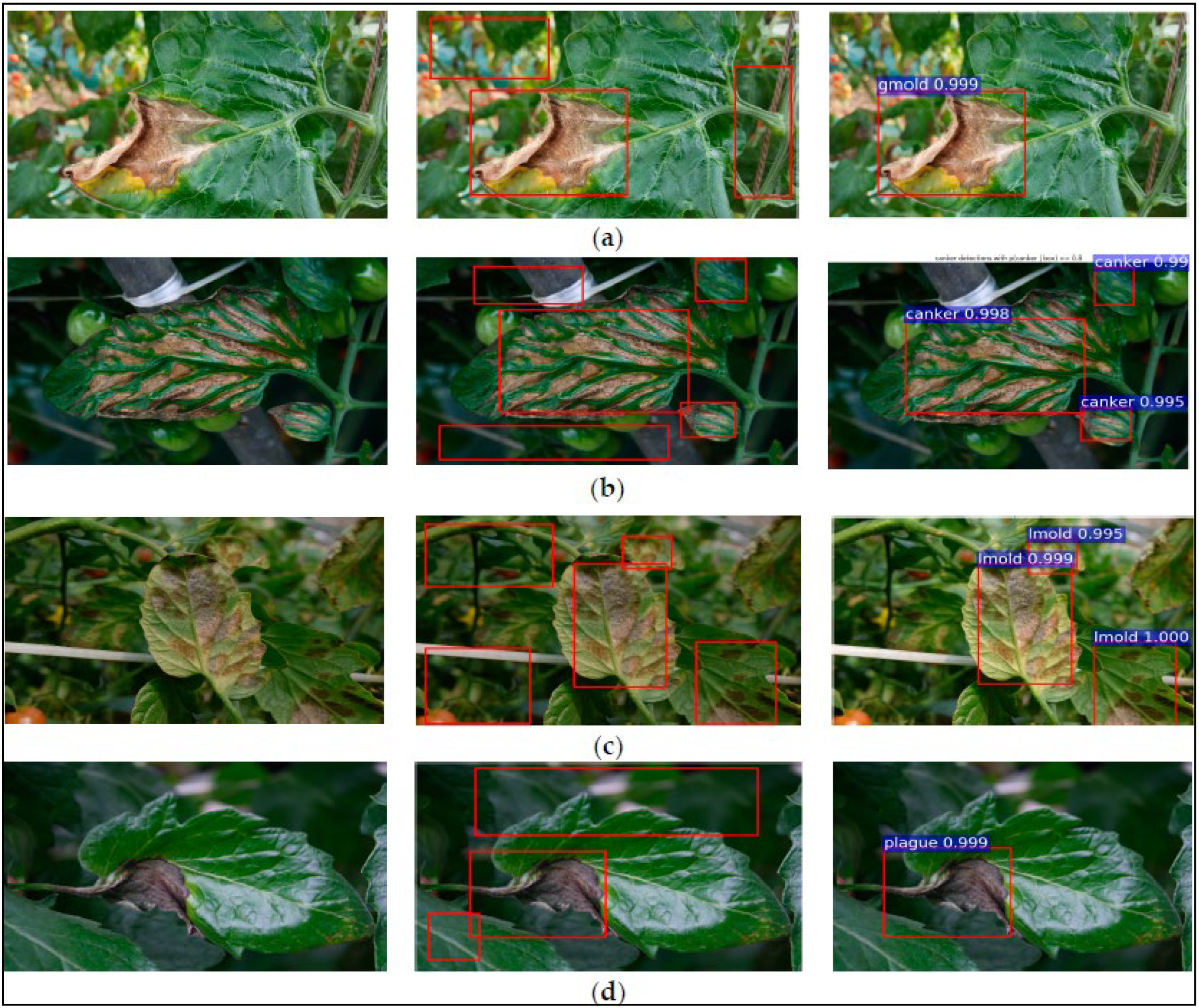

| [171] | Disease detection | Digital camera and smartphone used to collect tomato images in planting greenhouse | Bounding box | Yolo V3 | Tomato | Accuracy = 92.39% (20.39 ms) |

| [172] | Disease detection | Collected RGB color images using Nikon 7200d camera with image resolution 4000 × 6000 pixels | - | CNN (SVM classifier) | 4447 Turkey-PlantDataset | Accuracy score: 97.56% (PlantDiseaseNet-MV) 96.83% (PlantDiseaseNet-EF) |

| [167] | Disease detection | Collected RGB images using Canon Rebel T5i DSLR and smartphones | - | CNN | 3651 apple leaves | Accuracy = 97% |

| [173] | Disease detection | Image collected by two digital cameras and five smartphone cameras | - | GoogLeNet, Xception and Inception-RestNet-v2 | 4727 tea leaves | Accuracy = 89.64% |

| [112] | Disease detection | Used initial version of the PV dataset that is publicly available | - | VGG-16 InceptionV3 GoogLeNetBN | 14 crop plants, 38 crop–disease pairs, and 26 crop–disease categories | Accuracy = 98.8% (VGG-16) |

| [174] | Disease detection | Images are captured by hand | Augmentation | Ensemble of pre-trained DenseNet121 EfficientNetB7 and EfficicentNet NoisyStudent | 3651 high-grade images of apple leaves with various foliar diseases | Ensemble of pre-trained DenseNet121, EfficientNetB7 and EfficientNet NoisyStudent: Accuracy = 96.25% DenseNet121: Accuracy = 95.26% EfficientNwtB7: Accuracy = 95.62% NoisyStudent: Accuracy = 91.24% |

| [136] | Disease classification | Image source is online dataset from Plant Pathology 2020-FGVC7 | Data augmentation | ResNetV2 | 1821 images of apple tree leaves | Accuracy = 94.7% |

| [175] | Plant disease detection | Mobile phone | - | Baseline model of ResNet18 ResNet34 ResNet50 | 54,305 leaf images, PlantVillage and 1747 coffee leaves | Accuracy = 99% |

| [170] | Crop detection | Multirotor DJI Phantom 4 drone using RGB camera with 4000 × 3000 pixels | Bounding box | EfficientDet-D1 SSD MobileNetv2 SSD ResNet50 Faster R-CNN ResNet50 | 197 images of paddy seedlings | EfficientDet-D1: Precision = 0.83 Recall = 0.71 F1 = 0.77 Faster R-CNN: Precision = 0.82 Recall = 0.64 F1 = 0.72 |

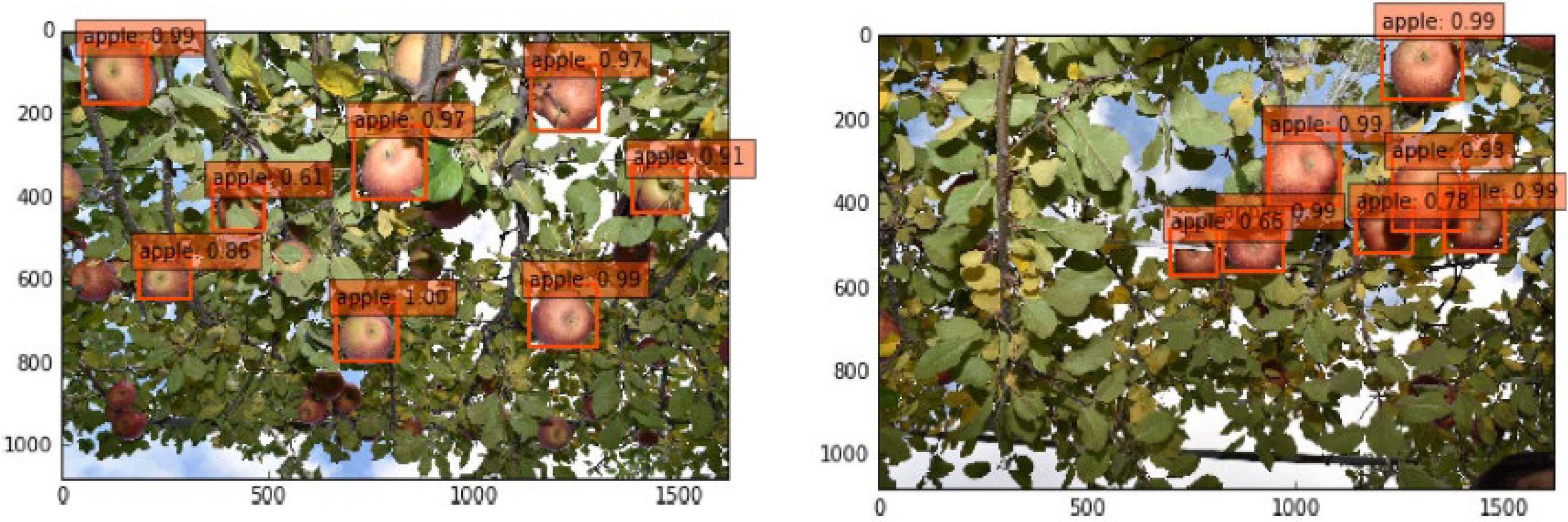

| [176] | Fruit detection | Smartphone (Galaxy S9, Samsung Electronics) with 5312 × 2988 pixels | Bounding box | Canopy-attention-YOLOv4 | 480 raw apple tree images | Precision = 94.89% Recall = 90.08% F1 = 92.52% (0.19 s) |

| [177] | Fruit detection | QG Rasberry Pi_Sony IMX477 and OAK-D color camera | Bounding box | SSD MobileNet-V1 | 1929 images of grape bunches | mAP = 66.96% |

| [178] | Fruit detection | Canon Powershot G16 camera | Bounding box | Improved YOLOv5 | 1214 apple images | Recall = 91.48% Precision = 83.83% mAP = 86.75% F1 = 87.49% |

| [179] | Fruit detection | Dataset obtained from GrapeCS-ML and Open Image Dataset v6 | Bounding box | YOLOv3 YOLOv4 YOLOv5 | 2985 images of grapes | YOLOv5: F1=0.76 YOLOv4: F1=0.77 |

| [49] | Fruit detection | Image collected with 4032 × 3024-pixel smartphone camera (iPhone 7 Plus, Apple) | Bounding box | AlexNet RestNet101 DarkNet53, Improve YOLOv3 | 849 apple images | Improve YOLOv3: F1 = 95.0% DarkNet53+YOLOv3: F1 = 94.6% AlexNet+Faster R-CNN: F1 = 91.2% |

| [153] | Fruit detection | Image captured by 3000 × 4000 pixel Sony DSC-HX400 Camera and 40 million-pixel Huawei mobile phone | Bounding box | Improved YOLO-V4 | 400 images of cherries fruit | F1 score = 0.947 Iou = 0.856 (0.467 s) |

| [103] | Fruit position detection and harvesting robot | Harvesting robot equipped with stereo camera and robot arm | - | SSD (VGGNet) R-CNN YOLO | 169 images of apples | SSD (VGGNet): Accuracy = 90% (2 s) |

| [180] | Fruit detection and counting | Captured images by DJI MAVIC Air2 drones, SLR cameras (Panasonic DMC-G7) and Honor 20 mobile phone | Bounding box | YOLOv5-CS (citrus sort) | More than 3000 original images of green citrus | mAP = 98.23% Precision = 86.97% Recall = 97.66% |

| [98] | Fruit counting | Collected 128 × 128-pixel images from Google Images | Generate synthetic image | Inception-ResNet | 24,000 tomato images | Average accuracy = 91% |

| [94] | Olive fruit fly detection and counting | Collected images from Dacus Image Recognition Toolkit (DIRT) | Bounding box | Modified YOLOv4 | 848 images of olive fruit fly | mAP = 96.68% (52.46 h) Precision = 0.84 Recall = 0.97 F1 score = 0.90 |

| [181] | Leaf counting | Captured image by Cannon Rebel XS camera | Bounding box | Faster R-CNN Tiny YOLOv3 | 1000 images of Arabidopsis plants | F1 score =0.94 |

| [102] | Fruit count | Flatbed scanner (Plustek, OpticPro A320) with 3600 × 5200 pixels | Patch classifier | LeNet DenseNet | 2552 images of mature inflorescences | DenseNet: Precision = 91.8% Recall = 92% LeNet: Precision = 77.8% Recall = 76.2% |

| [182] | Plant counting | RGB images taken using UAV with 256 × 256 pixels | Segmentation | Mask R-CNN | Potato and lettuce plants | Potato: Precision = 0.997 Recall = 0.825 |

| [183] | Crop classification | Images captured by Landsat-8 satellites | Semantic segmentation | DNN | Corn, soybean, barley, spring wheat, dry bean, sugar beet and alfalfa area | F1=0.8476 Precision = 0.8463 Recall = 0.8536 |

| [184] | Crop classification | Dataset taken from previous study in which the size of images is 1280 × 1024 pixels | Augmentation (crop) | AlexNet | 13,200 white cabbage seedlings | Accuracy = 94% |

| [185] | Fruit classification | RGB images captured using smartphone camera (LG-V20) | Data augmentation technique (flipping, horizontal, vertical) | VGG-16 | 1300 four classes of dates | Accuracy = 98.49% Precision = 96.63% Recall = 97.33% |

| [137] | Fruit classification | Images captured using Nikon D7500 camera with 3024 × 4032 and 6000 × 4000 pixels | Image augmentation (rotation, flip, brightness, adjustment, contrast and saturation enhancement) | Jujube classification network based on DL technique SVM AlexNet VGG-16 ResNet | 1700 images of 20 jujube varieties | Jujube classification network-based DL technique: Accuracy = 84.16% ResNet-18: Accuracy = 78.25% VGG-16: Accuracy = 71.42% AlexNet: Accuracy = 65.36% SVM: Accuracy = 60.84% |

| [186] | Classify fruit images | Image taken by digital camera (Nikon D7100) | - | VGG-16 | 440 images of litchi and lychee | Accuracy = 98.33% |

| [187] | Freshness and fruit classification | Real-world images from the internet | Augmentation (rotation and horizontal flipping) | RestNet-50 + RestNet-101 | Fresh and rotten fruits, e.g., apple, banana, orange, lemon, pear, strawberry and others | RestNet-50+RestNet-101: Average accuracy = 98.50% for freshness 97.43% for classification VGG-16: 94.79% for freshness 94.90% for classification |

| [188] | Classification of seedless and seeded fruit | Images taken by a digital camera (COOLPIX P520, Nikon) with image size of 1600 × 1200 pixels | Augmentation (brightness changes, rotation and horizontal and vertical flip) | VGG16 RestNet-50 InceptionV3 InceptionResNetV2 | 599 images of seeded and seedless fruit | VGG-16: Accuracy = 89% ResNet50: Accuracy = 86% InceptionV3: Accuracy = 91% InceptionResNetV2: Accuracy = 85% |

| [189] | Detection and classification | Recorded using video camera with full HD definition (1920 × 1080) | Bounding box | Tiny YOLOv3 | Unripe, ripe and overripe coffee fruit at density of 5000 trees hectare−1 | mAP = 84.0% F1 score = 82.0% Precision = 82.0% Recall = 83% |

| [190] | Detection, segmentation and classification | Dataset taken from a previous study [191] | Segmentation | R-CNN | 1036 reproductive structures (flower buds, flowers, immature fruits and mature fruit) | Average counting precision = 77.9% |

| [40] | Detection and segmentation | Images captured using camera | Bounding box | CNN | 300 images of grapes | F1 score= 0.91 |

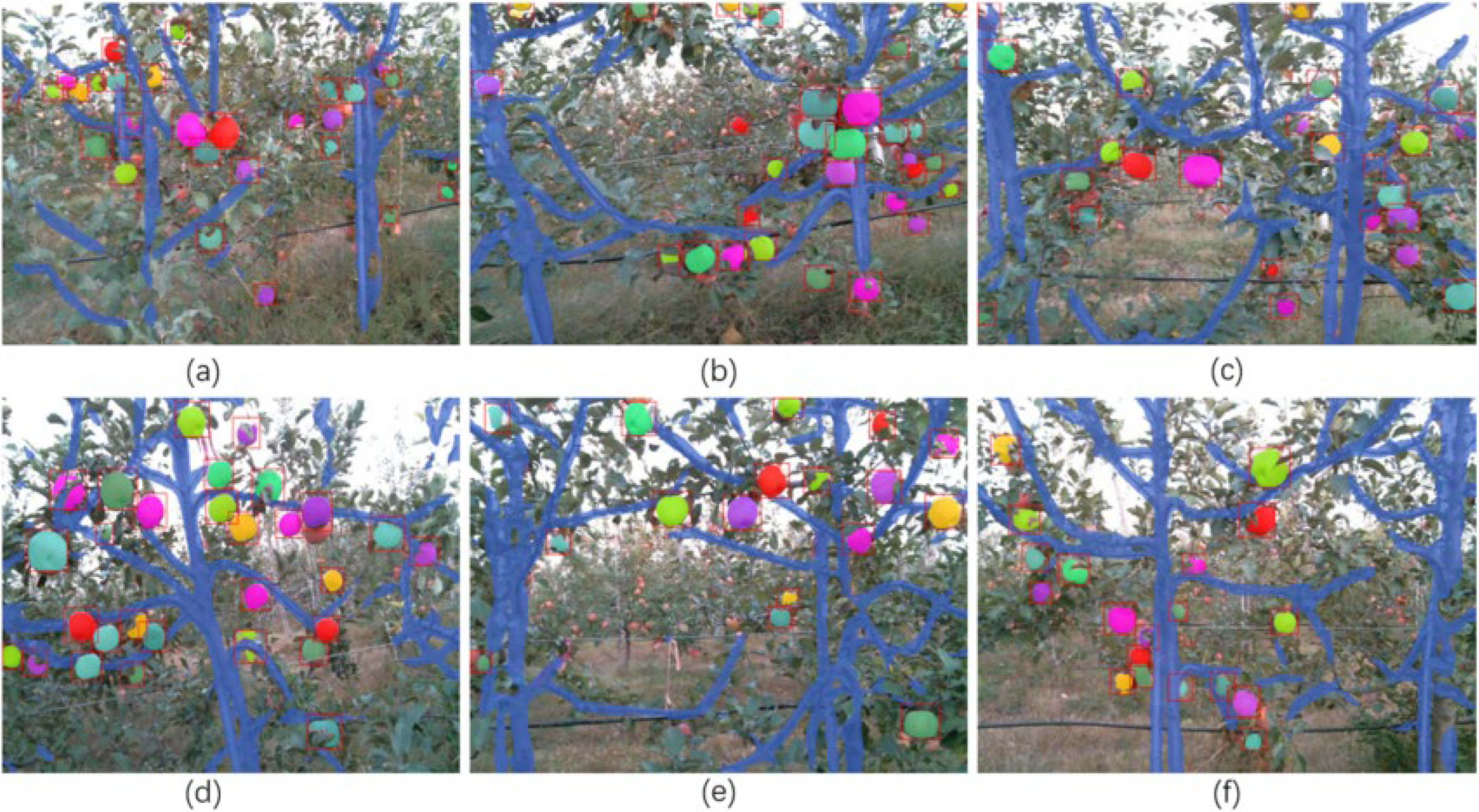

| [106] | Detection and segmentation | Intel RealSense D-435 RGB- camera and Logitech C615 webcam | Semantic segmentation | DaSNet-v2 | 1277 images of apple trees (fruit and branches) | Recall = 0.868 Precision = 0.88 Accuracy = 0.873 |

| [168] | Image segmentation | RGB images and 3D point cloud data (Kinect V2 sensor) | Semantic segmentation | SegNet | Apple trees | F1 score = 0.93 |

| [93] | Yield estimation | Images from Google Images | - | LSTM GRU BLSTM BGRU | Tomato, potato | R2 = 0.97 to 0.99 (BLSTM) |

| [192] | Yield estimation | Image taken from UAV (DJI Phantom 4 Pro) | Bounding box | Region convolutional neural network | 592 trees of apples | R2 = 0.86 |

| [72] | Monitoring agricultural area | Images collected by optical satellite sensors of SPOT, Landsat-8 and Sentinel-1A | Aumentation (patch normalization) | Spatio-temporal–spectral deep learning | Paddy field area | F1 score = 0.93 |

| [193] | Border extraction | Images collected by Sentinel-2 and Landsat-8 satellites | Semantic segmentation | ResUNet | Field border | Accuracy = 85.60% |

| [194] | Border extraction | RGB image and near-infrared-2 bands taken using WorldView-3 satellite image | Polygon | FCNN (UNet, SegNet and DenseNet) | Smallholder farm in Bangladesh | Precision for all proposed FCNNs is up to 0.8 |

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Khan, T.; Sherazi, H.; Ali, M.; Letchmunan, S.; Butt, U. Deep Learning-Based Growth Prediction System: A Use Case of China Agriculture. Agronomy 2021, 11, 1551. [Google Scholar] [CrossRef]

- Ahmad, N.; Singh, S. Comparative study of disease detection in plants using machine learning and deep learning. In Proceedings of the 2nd International Conference on Electrical, Computer and Energy Technologies (ICECET), Prague, Czech Republic, 20–22 July 2021; pp. 54–59. [Google Scholar] [CrossRef]

- Zheng, Y.-Y.; Kong, J.-L.; Jin, X.-B.; Wang, X.-Y.; Su, T.-L.; Zuo, M. CropDeep: The Crop Vision Dataset for Deep-Learning-Based Classification and Detection in Precision Agriculture. Sensors 2019, 19, 1058. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Velumani, K.; Madec, S.; de Solan, B.; Lopez-Lozano, R.; Gillet, J.; Labrosse, J.; Jezequel, S.; Comar, A.; Baret, F. An automatic method based on daily in situ images and deep learning to date wheat heading stage. Field Crop. Res. 2020, 252, 107793. [Google Scholar] [CrossRef]

- Santos, L.; Santos, F.N.; Oliveira, P.M.; Shinde, P. Deep learning applications in agriculture: A short review. In Proceedings of the Iberian Robotics Conference, Proceedings of the Robot 2019: Fourth Iberian Robotics Conference, Porto, Portugal, 20–22 November 2019; Springer: Cham, Switzerland, 2019; pp. 139–151. [Google Scholar]

- Khan, N.; Ray, R.; Sargani, G.; Ihtisham, M.; Khayyam, M.; Ismail, S. Current Progress and Future Prospects of Agriculture Technology: Gateway to Sustainable Agriculture. Sustainability 2021, 13, 4883. [Google Scholar] [CrossRef]

- Cecotti, H.; Rivera, A.; Farhadloo, M.; Pedroza, M.A. Grape detection with convolutional neural networks. Expert Syst. Appl. 2020, 159, 113588. [Google Scholar] [CrossRef]

- Kayad, A.; Paraforos, D.; Marinello, F.; Fountas, S. Latest Advances in Sensor Applications in Agriculture. Agriculture 2020, 10, 362. [Google Scholar] [CrossRef]

- Cheng, C.Y.; Liu, L.; Tao, J.; Chen, X.; Xia, R.; Zhang, Q.; Xiong, J.; Yang, K.; Xie, J. The image annotation algorithm using convolutional features from intermediate layer of deep learning. Multimed. Tools Appl. 2021, 80, 4237–4261. [Google Scholar] [CrossRef]

- Niu, Y.; Lu, Z.; Wen, J.-R.; Xiang, T.; Chang, S.-F. Multi-Modal Multi-Scale Deep Learning for Large-Scale Image Annotation. IEEE Trans. Image Process. 2018, 28, 1720–1731. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Blok, P.M.; van Henten, E.J.; van Evert, F.K.; Kootstra, G. Image-based size estimation of broccoli heads under varying degrees of occlusion. Biosyst. Eng. 2021, 208, 213–233. [Google Scholar] [CrossRef]

- Chen, S.; Wang, M.; Chen, X. Image Annotation via Reconstitution Graph Learning Model. Wirel. Commun. Mob. Comput. 2020, 2020, 8818616. [Google Scholar] [CrossRef]

- Bhagat, P.; Choudhary, P. Image annotation: Then and now. Image Vis. Comput. 2018, 80, 1–23. [Google Scholar] [CrossRef]

- Wang, R.; Xie, Y.; Yang, J.; Xue, L.; Hu, M.; Zhang, Q. Large scale automatic image annotation based on convolutional neural network. J. Vis. Commun. Image Represent. 2017, 49, 213–224. [Google Scholar] [CrossRef]

- Mori, Y.; Takahashi, H.; Oka, R. Image-to-word transformation based on dividing and vector quantizing images with words. In Proceedings of the First International Workshop on Multimedia Intelligent Storage and Retrieval Management, Orlando, FL, USA, October 1999; pp. 1–9. [Google Scholar]

- Ma, Y.; Liu, Y.; Xie, Q.; Li, L. CNN-feature based automatic image annotation method. Multimed. Tools Appl. 2019, 78, 3767–3780. [Google Scholar] [CrossRef]

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar]

- Mohanty, S.P.; Hughes, D.P.; Salathé, M. Using Deep Learning for Image-Based Plant Disease Detection. Front. Plant Sci. 2016, 7, 1419. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Hani, N.; Roy, P.; Isler, V. MinneApple: A Benchmark Dataset for Apple Detection and Segmentation. IEEE Robot. Autom. Lett. 2020, 5, 852–858. [Google Scholar] [CrossRef] [Green Version]

- Altaheri, H.; Alsulaiman, M.; Muhammad, G.; Amin, S.U.; Bencherif, M.; Mekhtiche, M. Date fruit dataset for intelligent harvesting. Data Brief 2019, 26, 104514. [Google Scholar] [CrossRef]

- Koirala, A.; Walsh, K.B.; Wang, Z.; McCarthy, C. Deep learning for real-time fruit detection and orchard fruit load estimation: Benchmarking of ‘MangoYOLO’. Precis. Agric. 2019, 20, 1107–1135. [Google Scholar] [CrossRef]

- Olsen, A.; Konovalov, D.A.; Philippa, B.; Ridd, P.; Wood, J.C.; Johns, J.; Banks, W.; Girgenti, B.; Kenny, O.; Whinney, J.; et al. DeepWeeds: A Multiclass Weed Species Image Dataset for Deep Learning. Sci. Rep. 2019, 9, 2058. [Google Scholar] [CrossRef]

- Madsen, S.L.; Mathiassen, S.K.; Dyrmann, M.; Laursen, M.S.; Paz, L.-C.; Jørgensen, R.N. Open Plant Phenotype Database of Common Weeds in Denmark. Remote Sens. 2020, 12, 1246. [Google Scholar] [CrossRef] [Green Version]

- Giselsson, T.M.; Jørgensen, R.N.; Jensen, P.K.; Dyrmann, M.; Midtiby, H.S. Midtiby, A public image database for benchmark of plant seedling classification algorithms. arXiv 2017, arXiv:1711.05458. [Google Scholar]

- Cheng, Q.; Zhang, Q.; Fu, P.; Tu, C.; Li, S. A survey and analysis on automatic image annotation. Pattern Recognit. 2018, 79, 242–259. [Google Scholar] [CrossRef]

- Randive, K.; Mohan, R. A State-of-Art Review on Automatic Video Annotation Techniques. In Proceedings of the International Conference on Intelligent Systems Design and Applications, Vellore, India, 6–8 December 2018; Springer: Cham, Switzerland, 2018; pp. 1060–1069. [Google Scholar] [CrossRef]

- Sudars, K.; Jasko, J.; Namatevs, I.; Ozola, L.; Badaukis, N. Dataset of annotated food crops and weed images for robotic computer vision control. Data Brief 2020, 31, 105833. [Google Scholar] [CrossRef]

- Cao, J.; Zhao, A.; Zhang, Z. Automatic image annotation method based on a convolutional neural network with threshold optimization. PLoS ONE 2020, 15, e0238956. [Google Scholar] [CrossRef]

- Dechter, R. Learning while searching in constraint-satisfaction problems. In Proceedings of the Fifth National Conference on Artificial Intelligence (AAAI-86), Philadelphia, PN, USA, 11–15 August 1986. [Google Scholar]

- Aizenberg, I.; Aizenberg, N.N.; Vandewalle, J.P. Multi-Valued and Universal Binary Neurons: Theory, Learning and Applications; Springer Science & Business Media: Cham, Switzerland, 2000. [Google Scholar]

- Schmidhuber, J. Deep learning. Scholarpedia 2015, 10, 32832. [Google Scholar] [CrossRef] [Green Version]

- Schmidhuber, J. Deep Learning in Neural Networks: An Overview. Neural Netw. 2015, 61, 85–117. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Adnan, M.M.; Rahim, M.S.M.; Rehman, A.; Mehmood, Z.; Saba, T.; Naqvi, R.A. Automatic Image Annotation Based on Deep Learning Models: A Systematic Review and Future Challenges. IEEE Access 2021, 9, 50253–50264. [Google Scholar] [CrossRef]

- Chen, J.; Ran, X. Deep Learning with Edge Computing: A Review. Proc. IEEE 2019, 107, 1655–1674. [Google Scholar] [CrossRef]

- Caron, M.; Bojanowski, P.; Joulin, A.; Douze, M. Deep clustering for unsupervised learning of visual features. In Proceedings of the ECCV: European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 139–156. [Google Scholar] [CrossRef] [Green Version]

- Bakhshipour, A.; Jafari, A. Evaluation of support vector machine and artificial neural networks in weed detection using shape features. Comput. Electron. Agric. 2018, 145, 153–160. [Google Scholar] [CrossRef]

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90. [Google Scholar] [CrossRef] [Green Version]

- Bresilla, K.; Perulli, G.D.; Boini, A.; Morandi, B.; Corelli Grappadelli, L.; Manfrini, L. Single-Shot Convolution Neural Networks for Real-Time Fruit Detection Within the Tree. Front. Plant Sci. 2019, 10, 611. [Google Scholar] [CrossRef] [Green Version]

- Tsironis, V.; Bourou, S.; Stentoumis, C. Evaluation of Object Detection Algorithms on A New Real-World Tomato Dataset. ISPRS Arch. 2020, 43, 1077–1084. [Google Scholar] [CrossRef]

- Santos, T.T.; de Souza, L.L.; dos Santos, A.A.; Avila, S. Grape detection, segmentation, and tracking using deep neural networks and three-dimensional association. Comput. Electron. Agric. 2020, 170, 105247. [Google Scholar] [CrossRef] [Green Version]

- Dos Santos Ferreira, A.; Freitas, D.M.; da Silva, G.G.; Pistori, H.; Folhes, M.T. Unsupervised deep learning and semi-automatic data labeling in weed discrimination. Comput. Electron. Agric. 2019, 165, 104963. [Google Scholar] [CrossRef]

- Van Engelen, J.E.; Hoos, H.H. A survey on semi-supervised learning. Mach. Learn. 2020, 109, 373–440. [Google Scholar] [CrossRef] [Green Version]

- Shorewala, S.; Ashfaque, A.; Sidharth, R.; Verma, U. Weed Density and Distribution Estimation for Precision Agriculture Using Semi-Supervised Learning. IEEE Access 2021, 9, 27971–27986. [Google Scholar] [CrossRef]

- Hu, C.; Thomasson, J.A.; Bagavathiannan, M.V. A powerful image synthesis and semi-supervised learning pipeline for site-specific weed detection. Comput. Electron. Agric. 2021, 190, 106423. [Google Scholar] [CrossRef]

- Khan, S.; Tufail, M.; Khan, M.T.; Khan, Z.A.; Iqbal, J.; Alam, M. A novel semi-supervised framework for UAV based crop/weed classification. PLoS ONE 2021, 16, e0251008. [Google Scholar] [CrossRef]

- Karami, A.; Crawford, M.; Delp, E.J. Automatic Plant Counting and Location Based on a Few-Shot Learning Technique. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5872–5886. [Google Scholar] [CrossRef]

- Noon, S.K.; Amjad, M.; Qureshi, M.A.; Mannan, A. Use of deep learning techniques for identification of plant leaf stresses: A review. Sustain. Comput. Inform. Syst. 2020, 28, 100443. [Google Scholar] [CrossRef]

- Fountsop, A.N.; Fendji, J.L.E.K.; Atemkeng, M. Deep Learning Models Compression for Agricultural Plants. Appl. Sci. 2020, 10, 6866. [Google Scholar] [CrossRef]

- Xuan, G.; Gao, C.; Shao, Y.; Zhang, M.; Wang, Y.; Zhong, J.; Li, Q.; Peng, H. Apple Detection in Natural Environment Using Deep Learning Algorithms. IEEE Access 2020, 8, 216772–216780. [Google Scholar] [CrossRef]

- Rahnemoonfar, M.; Sheppard, C. Real-time yield estimation based on deep learning. Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping II. In Proceedings of the SPIE Commercial + Scientific Sensing and Imaging, Anaheim, CA, USA, 8 May 2017; p. 1021809. [Google Scholar] [CrossRef]

- Bah, M.D.; Hafiane, A.; Canals, R. Deep Learning with Unsupervised Data Labeling for Weed Detection in Line Crops in UAV Images. Remote. Sens. 2018, 10, 1690. [Google Scholar] [CrossRef] [Green Version]

- Huang, H.; Lan, Y.; Yang, A.; Zhang, Y.; Wen, S.; Deng, J. Deep learning versus Object-based Image Analysis (OBIA) in weed mapping of UAV imagery. Int. J. Remote Sens. 2020, 41, 3446–3479. [Google Scholar] [CrossRef]

- Veeranampalayam Sivakumar, A.N.; Li, J.; Scott, S.; Psota, E.; Jhala, A.J.; Luck, J.D.; Shi, Y. Comparison of Object Detection and Patch-Based Classification Deep Learning Models on Mid- to Late-Season Weed Detection in UAV Imagery. Remote Sens. 2020, 12, 2136. [Google Scholar] [CrossRef]

- Franco, C.; Guada, C.; Rodríguez, J.T.; Nielsen, J.; Rasmussen, J.; Gómez, D.; Montero, J. Automatic detection of thistle-weeds in cereal crops from aerial RGB images. In Proceedings of the International Conference on Information Processing and Management of Uncertainty in Knowledge-Based Systems, Cádiz, Spain, 11–15 June 2018; Springer: Cham, Switzerland, 2018; pp. 441–452. [Google Scholar] [CrossRef]

- Kalampokas, Τ.; Vrochidou, Ε.; Papakostas, G.A.; Pachidis, T.; Kaburlasos, V.G. Grape stem detection using regression convolutional neural networks. Comput. Electron. Agric. 2021, 186, 106220. [Google Scholar] [CrossRef]

- Liu, X.; Ghazali, K.H.; Han, F.; Mohamed, I.I. Automatic Detection of Oil Palm Tree from UAV Images Based on the Deep Learning Method. Appl. Artif. Intell. 2021, 35, 13–24. [Google Scholar] [CrossRef]

- Yang, Q.; Shi, L.; Han, J.; Yu, J.; Huang, K. A near real-time deep learning approach for detecting rice phenology based on UAV images. Agric. For. Meteorol. 2020, 287, 107938. [Google Scholar] [CrossRef]

- Tetila, E.C.; Machado, B.B.; Astolfi, G.; Belete, N.A.D.S.; Amorim, W.P.; Roel, A.R.; Pistori, H. Detection and classification of soybean pests using deep learning with UAV images. Comput. Electron. Agric. 2020, 179, 105836. [Google Scholar] [CrossRef]

- Mhango, J.; Harris, E.; Green, R.; Monaghan, J. Mapping Potato Plant Density Variation Using Aerial Imagery and Deep Learning Techniques for Precision Agriculture. Remote Sens. 2021, 13, 2705. [Google Scholar] [CrossRef]

- Tri, N.C.; Duong, H.N.; Van Hoai, T.; Van Hoa, T.; Nguyen, V.H.; Toan, N.T.; Snasel, V. A novel approach based on deep learning techniques and UAVs to yield assessment of paddy fields. In Proceedings of the 2017 9th International Conference on Knowledge and Systems Engineering (KSE), Hue, Vietnam, 19–21 October 2017; pp. 257–262. [Google Scholar]

- Trujillano, F.; Flores, A.; Saito, C.; Balcazar, M.; Racoceanu, D. Corn classification using Deep Learning with UAV imagery. An operational proof of concept. In Proceedings of the IEEE 1st Colombian Conference on Applications in Computational Intelligence (ColCACI), Medellin, Colombia, 16–18 May 2018; pp. 1–4. [Google Scholar] [CrossRef]

- Vaeljaots, E.; Lehiste, H.; Kiik, M.; Leemet, T. Soil sampling automation case-study using unmanned ground vehicle. Eng. Rural Dev. 2018, 17, 982–987. [Google Scholar] [CrossRef]

- Cantelli, L.; Bonaccorso, F.; Longo, D.; Melita, C.D.; Schillaci, G.; Muscato, G. A Small Versatile Electrical Robot for Autonomous Spraying in Agriculture. AgriEngineering 2019, 1, 29. [Google Scholar] [CrossRef] [Green Version]

- Cutulle, M.A.; Maja, J.M. Determining the utility of an unmanned ground vehicle for weed control in specialty crop system. Ital. J. Agron. 2021, 16, 1426–1435. [Google Scholar] [CrossRef]

- Jun, J.; Kim, J.; Seol, J.; Kim, J.; Son, H.I. Towards an Efficient Tomato Harvesting Robot: 3D Perception, Manipulation, and End-Effector. IEEE Access 2021, 9, 17631–17640. [Google Scholar] [CrossRef]

- Mazzia, V.; Salvetti, F.; Aghi, D.; Chiaberge, M. Deepway: A deep learning estimator for unmanned ground vehicle global path planning. arXiv 2020, arXiv:2010.16322. [Google Scholar]

- Li, Y.; Iida, M.; Suyama, T.; Suguri, M.; Masuda, R. Implementation of deep-learning algorithm for obstacle detection and collision avoidance for robotic harvester. Comput. Electron. Agric. 2020, 174, 105499. [Google Scholar] [CrossRef]

- Persello, C.; Tolpekin, V.; Bergado, J.; de By, R. Delineation of agricultural fields in smallholder farms from satellite images using fully convolutional networks and combinatorial grouping. Remote Sens. Environ. 2019, 231, 111253. [Google Scholar] [CrossRef]

- Mounir, A.J.; Mallat, S.; Zrigui, M. Analyzing satellite images by apply deep learning instance segmentation of agricultural fields. Period. Eng. Nat. Sci. 2021, 9, 1056–1069. [Google Scholar] [CrossRef]

- Gastli, M.S.; Nassar, L.; Karray, F. Satellite images and deep learning tools for crop yield prediction and price forecasting. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8. [Google Scholar] [CrossRef]

- Waldner, F.; Diakogiannis, F.I. Deep learning on edge: Extracting field boundaries from satellite images with a convolutional neural network. Remote Sens. Environ. 2020, 245, 111741. [Google Scholar] [CrossRef]

- Nguyen, T.T.; Hoang, T.D.; Pham, M.T.; Vu, T.T.; Huynh, Q.-T.; Jo, J. Monitoring agriculture areas with satellite images and deep learning. Appl. Soft Comput. 2020, 95, 106565. [Google Scholar] [CrossRef]

- Dhyani, Y.; Pandya, R.J. Deep learning oriented satellite remote sensing for drought and prediction in agriculture. In Proceedings of the 2021 IEEE 18th India Council International Conference (INDICON), Guwahati, India, 19–21 December 2021; pp. 1–5. [Google Scholar] [CrossRef]

- Gadiraju, K.K.; Ramachandra, B.; Chen, Z.; Vatsavai, R.R. Multimodal deep learning based crop classification using multispectral and multitemporal satellite imagery. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Virtual Event, 6–10 July 2020; pp. 3234–3242. [Google Scholar] [CrossRef]

- Ahmed, A.; Deo, R.; Raj, N.; Ghahramani, A.; Feng, Q.; Yin, Z.; Yang, L. Deep Learning Forecasts of Soil Moisture: Convolutional Neural Network and Gated Recurrent Unit Models Coupled with Satellite-Derived MODIS, Observations and Synoptic-Scale Climate Index Data. Remote Sens. 2021, 13, 554. [Google Scholar] [CrossRef]

- Wang, C.; Liu, B.; Liu, L.; Zhu, Y.; Hou, J.; Liu, P.; Li, X. A review of deep learning used in the hyperspectral image analysis for agriculture. Artif. Intell. Rev. 2021, 54, 5205–5253. [Google Scholar] [CrossRef]

- Koirala, A.; Walsh, K.B.; Wang, Z.; McCarthy, C. Deep learning—Method overview and review of use for fruit detection and yield estimation. Comput. Electron. Agric. 2019, 162, 219–234. [Google Scholar] [CrossRef]

- Darwin, B.; Dharmaraj, P.; Prince, S.; Popescu, D.; Hemanth, D. Recognition of Bloom/Yield in Crop Images Using Deep Learning Models for Smart Agriculture: A Review. Agronomy 2021, 11, 646. [Google Scholar] [CrossRef]

- Moazzam, S.I.; Khan, U.S.; Tiwana, M.I.; Iqbal, J.; Qureshi, W.S.; Shah, S.I. A Review of application of deep learning for weeds and crops classification in agriculture. In Proceedings of the 2019 International Conference on Robotics and Automation in Industry (ICRAI), Rawalpindi, Pakistan, 21–22 October 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Zhang, Q.; Liu, Y.; Gong, C.; Chen, Y.; Yu, H. Applications of Deep Learning for Dense Scenes Analysis in Agriculture: A Review. Sensors 2020, 20, 1520. [Google Scholar] [CrossRef] [Green Version]

- Chen, Y.; Zeng, X.; Chen, X.; Guo, W. A survey on automatic image annotation. Appl. Intell. 2020, 50, 3412–3428. [Google Scholar] [CrossRef]

- Bouchakwa, M.; Ayadi, Y.; Amous, I. A review on visual content-based and users’ tags-based image annotation: Methods and techniques. Multimedia Tools Appl. 2020, 79, 21679–21741. [Google Scholar] [CrossRef]

- Dananjayan, S.; Tang, Y.; Zhuang, J.; Hou, C.; Luo, S. Assessment of state-of-the-art deep learning based citrus disease detection techniques using annotated optical leaf images. Comput. Electron. Agric. 2022, 193, 106658. [Google Scholar] [CrossRef]

- He, Z.; Xiong, J.; Chen, S.; Li, Z.; Chen, S.; Zhong, Z.; Yang, Z. A method of green citrus detection based on a deep bounding box regression forest. Biosyst. Eng. 2020, 193, 206–215. [Google Scholar] [CrossRef]

- Morbekar, A.; Parihar, A.; Jadhav, R. Crop disease detection using YOLO. In Proceedings of the 2020 International Conference for Emerging Technology (INCET), Belgaum, Karnataka, India, 5–7 June 2020; pp. 1–5. [Google Scholar] [CrossRef]

- Lamb, N.; Chuah, M.C. A strawberry detection system using convolutional neural networks. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 2515–2520. [Google Scholar] [CrossRef]

- Tassis, L.M.; de Souza, J.E.T.; Krohling, R.A. A deep learning approach combining instance and semantic segmentation to identify diseases and pests of coffee leaves from in-field images. Comput. Electron. Agric. 2021, 186, 106191.

- Fawakherji, M.; Youssef, A.; Bloisi, D.; Pretto, A.; Nardi, D. Crop and weeds classification for precision agriculture using context-independent pixel-wise segmentation. In Proceedings of the Third IEEE International Conference on Robotic Computing (IRC), Naples, Italy, 25–27 February 2019; pp. 146–152.

- Bosilj, P.; Aptoula, E.; Duckett, T.; Cielniak, G. Transfer learning between crop types for semantic segmentation of crops versus weeds in precision agriculture. J. Field Robot. 2019, 37, 7–19.

- Storey, G.; Meng, Q.; Li, B. Leaf Disease Segmentation and Detection in Apple Orchards for Precise Smart Spraying in Sustainable Agriculture. Sustainability 2022, 14, 1458.

- Wspanialy, P.; Brooks, J.; Moussa, M. An image labeling tool and agricultural dataset for deep learning. arXiv 2021, arXiv:2004.03351.

- Biffi, L.; Mitishita, E.; Liesenberg, V.; Santos, A.; Gonçalves, D.; Estrabis, N.; Silva, J.; Osco, L.P.; Ramos, A.; Centeno, J.; et al. ATSS Deep Learning-Based Approach to Detect Apple Fruits. Remote Sens. 2020, 13, 54.

- Alibabaei, K.; Gaspar, P.D.; Lima, T.M. Crop Yield Estimation Using Deep Learning Based on Climate Big Data and Irrigation Scheduling. Energies 2021, 14, 3004.

- Mamdouh, N.; Khattab, A. YOLO-Based Deep Learning Framework for Olive Fruit Fly Detection and Counting. IEEE Access 2021, 9, 84252–84262.

- Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in vegetation remote sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.-S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Fukushima, K. Neocognitron: A hierarchical neural network capable of visual pattern recognition. Neural Netw. 1988, 1, 119–130.

- Rahnemoonfar, M.; Sheppard, C. Deep Count: Fruit Counting Based on Deep Simulated Learning. Sensors 2017, 17, 905.

- Thenmozhi, K.; Reddy, U.S. Crop pest classification based on deep convolutional neural network and transfer learning. Comput. Electron. Agric. 2019, 164, 104906.

- Huang, N.; Chou, D.; Lee, C.; Wu, F.; Chuang, A.; Chen, Y.; Tsai, Y. Smart agriculture: Real-time classification of green coffee beans by using a convolutional neural network. IET Smart Cities 2020, 2, 167–172.

- Asad, M.H.; Bais, A. Weed detection in canola fields using maximum likelihood classification and deep convolutional neural network. Inf. Process. Agric. 2020, 7, 535–545.

- Hamidinekoo, A.; Martínez, G.A.G.; Ghahremani, M.; Corke, F.; Zwiggelaar, R.; Doonan, J.H.; Lu, C. DeepPod: A convolutional neural network based quantification of fruit number in Arabidopsis. GigaScience 2020, 9, giaa012.

- Onishi, Y.; Yoshida, T.; Kurita, H.; Fukao, T.; Arihara, H.; Iwai, A. An automated fruit harvesting robot by using deep learning. ROBOMECH J. 2019, 6, 13.

- Adi, M.; Singh, A.K.; Reddy, H.; Kumar, Y.; Challa, V.R.; Rana, P.; Mittal, U. An overview on plant disease detection algorithm using deep learning. In Proceedings of the 2021 2nd International Conference on Intelligent Engineering and Management (ICIEM), London, UK, 28–30 April 2021; pp. 305–309.

- Sharma, P.; Berwal, Y.P.S.; Ghai, W. Performance analysis of deep learning CNN models for disease detection in plants using image segmentation. Inf. Process. Agric. 2020, 7, 566–574.

- Kang, H.; Chen, C. Fruit detection, segmentation and 3D visualisation of environments in apple orchards. Comput. Electron. Agric. 2020, 171, 105302.

- Khattak, A.; Asghar, M.U.; Batool, U.; Ullah, H.; Al-Rakhami, M.; Gumaei, A. Automatic Detection of Citrus Fruit and Leaves Diseases Using Deep Neural Network Model. IEEE Access 2021, 9, 112942–112954.

- Yang, W.; Nigon, T.; Hao, Z.; Paiao, G.D.; Fernández, F.G.; Mulla, D.; Yang, C. Estimation of corn yield based on hyperspectral imagery and convolutional neural network. Comput. Electron. Agric. 2021, 184, 106092.

- Fuentes, A.; Yoon, S.; Kim, S.C.; Park, D.S. A Robust Deep-Learning-Based Detector for Real-Time Tomato Plant Diseases and Pests Recognition. Sensors 2017, 17, 2022.

- Maheswari, P.; Raja, P.; Apolo-Apolo, O.E.; Pérez-Ruiz, M. Intelligent Fruit Yield Estimation for Orchards Using Deep Learning Based Semantic Segmentation Techniques—A Review. Front. Plant Sci. 2021, 12, 684328.

- Mu, C.; Yuan, Z.; Ouyang, X.; Sun, P.; Wang, B. Non-destructive detection of blueberry skin pigments and intrinsic fruit qualities based on deep learning. J. Sci. Food Agric. 2021, 101, 3165–3175.

- Lee, S.H.; Goëau, H.; Bonnet, P.; Joly, A. New perspectives on plant disease characterization based on deep learning. Comput. Electron. Agric. 2020, 170, 105220.

- Verma, S.; Chug, A.; Singh, A.P. Application of convolutional neural networks for evaluation of disease severity in tomato plant. J. Discret. Math. Sci. Cryptogr. 2020, 23, 273–282.

- Gehlot, M.; Saini, M.L. Analysis of different CNN architectures for tomato leaf disease classification. In Proceedings of the 2020 5th IEEE International Conference on Recent Advances and Innovations in Engineering (ICRAIE), Jaipur, India, 1–3 December 2020; pp. 1–6.

- Zhang, D.; Islam, M.; Lu, G. A review on automatic image annotation techniques. Pattern Recognit. 2012, 45, 346–362.

- Jmour, N.; Zayen, S.; Abdelkrim, A. Convolutional neural networks for image classification. In 2018 International Conference on Advanced Systems and Electric Technologies (IC_ASET); IEEE: New York, NY, USA, 2018; pp. 397–402.

- Zhou, L.; Zhang, C.; Liu, F.; Qiu, Z.; He, Y. Application of Deep Learning in Food: A Review. Compr. Rev. Food Sci. Food Saf. 2019, 18, 1793–1811.

- Lee, K.B.; Cheon, S.; Kim, C.O. A Convolutional Neural Network for Fault Classification and Diagnosis in Semiconductor Manufacturing Processes. IEEE Trans. Semicond. Manuf. 2017, 30, 135–142.

- Das, P.; Yadav, J.K.P.S.; Yadav, A.K. An Automated Tomato Maturity Grading System Using Transfer Learning Based AlexNet. Ing. Des Syst. Inf. 2021, 26, 191–200.

- Palsson, F.; Sveinsson, J.R.; Ulfarsson, M.O. Multispectral and Hyperspectral Image Fusion Using a 3-D-Convolutional Neural Network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 639–643.

- Indolia, S.; Goswami, A.K.; Mishra, S.; Asopa, P. Conceptual Understanding of Convolutional Neural Network—A Deep Learning Approach. Procedia Comput. Sci. 2018, 132, 679–688.

- Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

- LeCun, Y.; Boser, B.; Denker, J.; Henderson, D.; Howard, R.; Hubbard, W.; Jackel, L. Handwritten digit recognition with a back-propagation network. Adv. Neural Inf. Process. Syst. 1989, 2, 396–404.

- Sharma, N.; Jain, V.; Mishra, A. An Analysis of Convolutional Neural Networks for Image Classification. Procedia Comput. Sci. 2018, 132, 377–384.

- Sarıgül, M.; Ozyildirim, B.; Avci, M. Differential convolutional neural network. Neural Netw. 2019, 116, 279–287.

- Zeng, W.; Li, M.; Zhang, J.; Chen, L.; Fang, S.; Wang, J. High-order residual convolutional neural network for robust crop disease recognition. In Proceedings of the 2nd International Conference on Computer Science and Application Engineering, Hohhot, China, 22–24 October 2018; pp. 1–5.

- Mohammadi, S.; Belgiu, M.; Stein, A. 3D fully convolutional neural networks with intersection over union loss for crop mapping from multi-temporal satellite images. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 5834–5837.

- Prilianti, K.R.; Brotosudarmo, T.H.P.; Anam, S.; Suryanto, A. Performance comparison of the convolutional neural network optimizer for photosynthetic pigments prediction on plant digital image. AIP Conf. Proc. 2019, 2084, 020020.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Dubey, A. Agricultural plant disease detection and identification. Int. J. Electr. Eng. Technol. 2020, 11, 354–363.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 60, 84–90.

- Liu, X.; Han, F.; Ghazali, K.H.; Mohamed, I.I.; Zhao, Y. A review of convolutional neural networks in remote sensing image. In Proceedings of the 2019 8th International Conference on Software and Computer Applications, Penang, Malaysia, 19–21 February 2019; pp. 263–267.

- Cheng, L.; Leung, A.C.S.; Ozawa, S. (Eds.) In Proceedings of the Neural Information Processing: 25th International Conference, ICONIP 2018, Siem Reap, Cambodia, 13–16 December 2018; Springer: Cham, Switzerland, 2018.

- Zhu, L.; Li, Z.; Li, C.; Wu, J.; Yue, J. High performance vegetable classification from images based on AlexNet deep learning model. Int. J. Agric. Biol. Eng. 2018, 11, 190–196.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations (ICLR 2015), San Diego, CA, USA, 7–9 May 2015; pp. 1–14.

- Alsayed, A.; Alsabei, A.; Arif, M. Classification of Apple Tree Leaves Diseases using Deep Learning Methods. Int. J. Comput. Sci. Netw. Secur. 2021, 21, 324–330.

- Meng, X.; Yuan, Y.; Teng, G.; Liu, T. Deep learning for fine-grained classification of jujube fruit in the natural environment. J. Food Meas. Charact. 2021, 15, 4150–4165.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Ni, J.; Gao, J.; Deng, L.; Han, Z. Monitoring the Change Process of Banana Freshness by GoogLeNet. IEEE Access 2020, 8, 228369–228376.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Deeba, K.; Amutha, B. WITHDRAWN: ResNet—Deep neural network architecture for leaf disease classification. Microprocess. Microsyst. 2020, 103364.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Huang, G.; Liu, S.; van der Maaten, L.; Weinberger, K.Q. CondenseNet: An efficient densenet using learned group convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2752–2761.

- Zhang, K.; Guo, Y.; Wang, X.; Yuan, J.; Ding, Q. Multiple feature reweight DenseNet for image classification. IEEE Access 2019, 7, 9872–9880.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767. Available online: http://arxiv.org/abs/1804.02767 (accessed on 17 March 2022).

- Bochkovskiy, A.; Wang, C.; Liao, H. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Lippi, M.; Bonucci, N.; Carpio, R.F.; Contarini, M.; Speranza, S.; Gasparri, A. A YOLO-based pest detection system for precision agriculture. In Proceedings of the 2021 29th Mediterranean Conference on Control and Automation (MED), Puglia, Italy, 22–25 June 2021; pp. 342–347.

- Chang, C.-L.; Chung, S.-C. Improved deep learning-based approach for real-time plant species recognition on the farm. In Proceedings of the 12th International Symposium on Communication Systems, Networks and Digital Signal Processing (CSNDSP), Porto, Portugal, 20–22 July 2020; pp. 1–5.

- Gai, R.; Chen, N.; Yuan, H. A detection algorithm for cherry fruits based on the improved YOLO-v4 model. Neural Comput. Appl. 2021, 1–12.

- Jocher, G.; Stoken, A.; Borovec, J.; Chaurasia, A.; Changyu, L. YOLOv5. Code Repos. 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 5 April 2022).

- Zhao, J.; Zhang, X.; Yan, J.; Qiu, X.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W. A Wheat Spike Detection Method in UAV Images Based on Improved YOLOv. Remote Sens. 2021, 13, 3095.

- Fan, Y.; Zhang, S.; Feng, K.; Qian, K.; Wang, Y.; Qin, S. Strawberry Maturity Recognition Algorithm Combining Dark Channel Enhancement and YOLOv5. Sensors 2022, 22, 419.

- Yao, J.; Qi, J.; Zhang, J.; Shao, H.; Yang, J.; Li, X. A Real-Time Detection Algorithm for Kiwifruit Defects Based on YOLOv. Electronics 2021, 10, 1711.

- Kuznetsova, A.; Maleva, T.; Soloviev, V. Detecting apples in orchards using YOLOv3 and YOLOv5 in general and close-up images. In Proceedings of the International Symposium on Neural Networks, Cairo, Egypt, 4–6 December 2020; pp. 233–243.

- LeCun, Y.; Haffner, P.; Bottou, L.; Bengio, Y. Object recognition with gradient-based learning. In Shape, Contour and Grouping in Computer Vision; Springer: Berlin/Heidelberg, Germany, 1999; pp. 319–345.

- Kolesnikov, A.; Beyer, L.; Zhai, X.; Puigcerver, J.; Yung, J.; Gelly, S.; Houlsby, N. Big Transfer (BiT): General visual representation learning. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 491–507.

- Xie, Q.; Luong, M.-T.; Hovy, E.; Le, Q.V. Self-Training with noisy student improves ImageNet classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10684–10695.

- Pham, H.; Dai, Z.; Xie, Q.; Le, Q.V. Meta Pseudo Labels. 2020. Available online: http://arxiv.org/abs/2003.10580 (accessed on 6 April 2022).

- Mureşan, H.; Oltean, M. Fruit recognition from images using deep learning. Acta Univ. Sapientiae Inform. 2018, 10, 26–42.

- Chen, S.W.; Shivakumar, S.S.; Dcunha, S.; Das, J.; Okon, E.; Qu, C.; Taylor, C.J.; Kumar, V. Counting Apples and Oranges with Deep Learning: A Data-Driven Approach. IEEE Robot. Autom. Lett. 2017, 2, 781–788.

- Zhong, L.; Hu, L.; Zhou, H. Deep learning based multi-temporal crop classification. Remote Sens. Environ. 2019, 221, 430–443.

- Marani, R.; Milella, A.; Petitti, A.; Reina, G. Deep neural networks for grape bunch segmentation in natural images from a consumer-grade camera. Precis. Agric. 2021, 22, 387–413.

- Thapa, R.; Zhang, K.; Snavely, N.; Belongie, S.; Khan, A. The Plant Pathology Challenge 2020 data set to classify foliar disease of apples. Appl. Plant Sci. 2020, 8, e11390.

- Majeed, Y.; Zhang, J.; Zhang, X.; Fu, L.; Karkee, M.; Zhang, Q.; Whiting, M.D. Deep learning based segmentation for automated training of apple trees on trellis wires. Comput. Electron. Agric. 2020, 170, 105277.

- Garcia-Garcia, A.; Orts-Escolano, S.; Oprea, S.; Villena-Martinez, V.; Garcia-Rodriguez, J. A review on deep learning techniques applied to semantic segmentation. arXiv 2017, arXiv:1704.06857.

- Anuar, M.M.; Halin, A.A.; Perumal, T.; Kalantar, B. Aerial Imagery Paddy Seedlings Inspection Using Deep Learning. Remote Sens. 2022, 14, 274.

- Liu, J.; Wang, X. Tomato Diseases and Pests Detection Based on Improved Yolo V3 Convolutional Neural Network. Front. Plant Sci. 2020, 11, 898.

- Turkoglu, M.; Yanikoğlu, B.; Hanbay, D. PlantDiseaseNet: Convolutional neural network ensemble for plant disease and pest detection. Signal Image Video Process. 2021, 16, 301–309.

- Krisnandi, D.; Pardede, H.F.; Yuwana, R.S.; Zilvan, V.; Heryana, A.; Fauziah, F.; Rahadi, V.P. Diseases Classification for Tea Plant Using Concatenated Convolution Neural Network. CommIT J. 2019, 13, 67–77.

- Bansal, P.; Kumar, R.; Kumar, S. Disease Detection in Apple Leaves Using Deep Convolutional Neural Network. Agriculture 2021, 11, 617.

- Afifi, A.; Alhumam, A.; Abdelwahab, A. Convolutional Neural Network for Automatic Identification of Plant Diseases with Limited Data. Plants 2021, 10, 28.

- Lu, S.; Chen, W.; Zhang, X.; Karkee, M. Canopy-attention-YOLOv4-based immature/mature apple fruit detection on dense-foliage tree architectures for early crop load estimation. Comput. Electron. Agric. 2022, 193, 106696.

- Aguiar, A.S.; Magalhães, S.A.; dos Santos, F.N.; Castro, L.; Pinho, T.; Valente, J.; Martins, R.; Boaventura-Cunha, J. Grape Bunch Detection at Different Growth Stages Using Deep Learning Quantized Models. Agronomy 2021, 11, 1890.

- Yan, B.; Fan, P.; Lei, X.; Liu, Z.; Yang, F. A Real-Time Apple Targets Detection Method for Picking Robot Based on Improved YOLOv. Remote Sens. 2021, 13, 1619.

- Sozzi, M.; Cantalamessa, S.; Cogato, A.; Kayad, A.; Marinello, F. Automatic Bunch Detection in White Grape Varieties Using YOLOv3, YOLOv4, and YOLOv5 Deep Learning Algorithms. Agronomy 2022, 12, 319.

- Lyu, S.; Li, R.; Zhao, Y.; Li, Z.; Fan, R.; Liu, S. Green Citrus Detection and Counting in Orchards Based on YOLOv5-CS and AI Edge System. Sensors 2022, 22, 576.

- Buzzy, M.; Thesma, V.; Davoodi, M.; Velni, J.M. Real-Time Plant Leaf Counting Using Deep Object Detection Networks. Sensors 2020, 20, 6896.

- Machefer, M.; Lemarchand, F.; Bonnefond, V.; Hitchins, A.; Sidiropoulos, P. Mask R-CNN Refitting Strategy for Plant Counting and Sizing in UAV Imagery. Remote Sens. 2020, 12, 3015.

- Sun, Z.; Di, L.; Fang, H.; Burgess, A. Deep Learning Classification for Crop Types in North Dakota. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 2200–2213.

- Perugachi-Diaz, Y.; Tomczak, J.M.; Bhulai, S. Deep learning for white cabbage seedling prediction. Comput. Electron. Agric. 2021, 184, 106059.

- Nasiri, A.; Taheri-Garavand, A.; Zhang, Y.-D. Image-based deep learning automated sorting of date fruit. Postharvest Biol. Technol. 2019, 153, 133–141.

- Osako, Y.; Yamane, H.; Lin, S.-Y.; Chen, P.-A.; Tao, R. Cultivar discrimination of litchi fruit images using deep learning. Sci. Hortic. 2020, 269, 109360.

- Kang, J.; Gwak, J. Ensemble of multi-task deep convolutional neural networks using transfer learning for fruit freshness classification. Multimedia Tools Appl. 2021, 81, 22355–22377.

- Masuda, K.; Suzuki, M.; Baba, K.; Takeshita, K.; Suzuki, T.; Sugiura, M.; Niikawa, T.; Uchida, S.; Akagi, T. Noninvasive Diagnosis of Seedless Fruit Using Deep Learning in Persimmon. Hortic. J. 2021, 90, 172–180.

- Bazame, H.C.; Molin, J.P.; Althoff, D.; Martello, M. Detection, classification, and mapping of coffee fruits during harvest with computer vision. Comput. Electron. Agric. 2021, 183, 106066.

- Goëau, H.; Mora-Fallas, A.; Champ, J.; Love, N.L.R.; Mazer, S.J.; Mata-Montero, E.; Joly, A.; Bonnet, P. A new fine-grained method for automated visual analysis of herbarium specimens: A case study for phenological data extraction. Appl. Plant Sci. 2020, 8, e11368.

- Goëau, H.; Mora-Fallas, A.; Champ, J.; Love, N.L.R.; Mazer, S.J.; Mata-Montero, E.; Joly, A.; Bonnet, P. Fine-grained automated visual analysis of herbarium specimens for phenological data extraction: An annotated dataset of reproductive organs in Strepanthus herbarium specimens. Zenodo Repos. 2020, 10.

- Apolo-Apolo, O.E.; Pérez-Ruiz, M.; Guanter, J.M.; Valente, J. A Cloud-Based Environment for Generating Yield Estimation Maps from Apple Orchards Using UAV Imagery and a Deep Learning Technique. Front. Plant Sci. 2020, 11, 1086.

- Sharifi, A.; Mahdipour, H.; Moradi, E.; Tariq, A. Agricultural Field Extraction with Deep Learning Algorithm and Satellite Imagery. J. Indian Soc. Remote Sens. 2022, 50, 417–423.

- Yang, R.; Ahmed, Z.U.; Schulthess, U.C.; Kamal, M.; Rai, R. Detecting functional field units from satellite images in smallholder farming systems using a deep learning based computer vision approach: A case study from Bangladesh. Remote Sens. Appl. Soc. Environ. 2020, 20, 100413.

| CNN Architecture | Year | Developed by | Characteristics | ImageNet Top-1 Accuracy | ImageNet Top-5 Accuracy | Number of Parameters |
|---|---|---|---|---|---|---|
| LeNet | 1998 | Yann LeCun et al. [159] | Small and easy to understand | 98.35% (not an ImageNet result; LeNet predates ImageNet) | - | 60 thousand |
| AlexNet | 2012 | Alex Krizhevsky et al. [131] | First major CNN model trained on GPUs | 63.3% | 84.60% | 60 million |
| VGG-16 | 2014 | Simonyan and Zisserman [135] | Good architecture for benchmarking on a particular task; its architectural simplicity comes at a high cost, since running the network requires a lot of computation; 16 weight layers | 74.4% | 91.90% | 138 million |
| VGG-19 | 2014 | Simonyan and Zisserman [135] | 19 weight layers | 74.5% | 90.9% | 144 million |
| GoogLeNet/InceptionV1 | 2014 | Szegedy et al. (Google) [138] | Designed to work well under strict memory and computational budgets; trains faster than VGG | 74.80% | 92.2% | 4 million |
| InceptionV3 | 2015 | Szegedy et al. [139] | More efficient and deeper than InceptionV1 and V2 | 78.8% | 94.4% | 24 million |
| InceptionV4 | 2016 | Szegedy et al. [140] | More Inception modules than InceptionV3, with a more uniform and simplified architecture | 80.0% | 95.0% | 48 million |
| Inception-ResNetV2 | 2016 | Szegedy et al. [140] | Hybrid of Inception modules and residual connections with improved recognition performance; computational cost similar to InceptionV4 | 80.1% | 95.1% | 56 million |
| YOLO | 2015 | Joseph Redmon et al. [147] | Very high speed (45 frames per second) | 76.5% | 93.3% | 60 million |
| ResNet-50 | 2015 | Kaiming He et al. [142] | Introduces skip connections that carry a block's input forward and add it to the block's output; deep network with 50 layers | 76.0% | 93.0% | 26 million |
| ResNet-152 | 2015 | Kaiming He et al. [142] | Very deep network with 152 layers | 77.8% | 93.8% | 60 million |
| DenseNet-121 | 2016 | Gao Huang et al. [144] | 120 convolutional layers and 4 average-pooling layers; within each dense block, every layer is connected to every other layer | 74.98% | 92.29% | 8 million |
| DenseNet-264 | 2016 | Gao Huang et al. [145] | 264-layer DenseNet | 77.85% | 93.88% | 34 million |
| YOLOv2/YOLO9000 | 2016 | Joseph Redmon and Ali Farhadi [148] | Improves on YOLOv1 in a variety of ways; uses Darknet-19 as the backbone; single-stage real-time object detection model | 86% | - | 59 million |
| YOLOv3 | 2018 | Joseph Redmon and Ali Farhadi [149] | Improved version of YOLOv1 and v2, with significant differences from the previous versions in speed, precision and class specification | 86.3% | - | 86 million |
| Big Transfer (BiT-L) | 2019 | Kolesnikov et al. [160] | Pre-trained on large supervised source datasets, then fine-tuned on a target task | 87.54% | 98.5% | 928 million |
| YOLOv4 | 2020 | Alexey Bochkovskiy et al. [150] | YOLO version designed for fast object detection in a single image; uses CSPDarknet53 as the backbone | 86.8% | - | 193 million |
| YOLOv5 | 2020 | Glenn Jocher [154] | Three main parts (backbone, neck and head); uses CSPNet as the backbone | 87.1% | - | 296 million |
| Noisy Student Training EfficientNet-L2 | 2020 | Xie et al. [161] | Semi-supervised learning method that performs well even when labeled data are plentiful | 88.4% | 98.7% | 480 million |
| Meta Pseudo Labels | 2021 | Pham et al. [162] | A teacher network generates pseudo labels from unlabeled data to teach a student network | 90.2% | 98.8% | 480 million |
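Two kinds of figures in the table above, parameter counts and top-1/top-5 accuracy, can be made concrete with a short calculation. The Python sketch below is an illustration only, not code from any of the reviewed works. It assumes the standard VGG layer layouts (3 × 3 convolutions with biases, 2 × 2 max pooling, a 224 × 224 RGB input, and three fully connected layers ending in 1000 ImageNet classes); the names `VGG16`, `VGG19`, `vgg_param_count` and `top_k_accuracy` are hypothetical helpers introduced here.

```python
# Illustrative sketch (hypothetical helpers, not from the reviewed papers).
# Assumes standard VGG layouts: 3x3 convs with bias, "M" = 2x2 max pooling,
# 224x224 RGB input, classifier 4096 -> 4096 -> 1000 classes.

VGG16 = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
         512, 512, 512, "M", 512, 512, 512, "M"]
VGG19 = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
         512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]


def vgg_param_count(config, in_channels=3, kernel=3, input_size=224,
                    num_classes=1000):
    """Count trainable weights and biases of a VGG-style network."""
    total = 0
    channels = in_channels
    spatial = input_size
    for item in config:
        if item == "M":
            spatial //= 2  # pooling halves height/width and has no parameters
            continue
        # kernel*kernel*channels weights per output channel, plus one bias each
        total += (kernel * kernel * channels + 1) * item
        channels = item
    # Fully connected classifier: flattened feature map -> 4096 -> 4096 -> classes
    fc_sizes = [channels * spatial * spatial, 4096, 4096, num_classes]
    for fan_in, fan_out in zip(fc_sizes, fc_sizes[1:]):
        total += fan_in * fan_out + fan_out  # weight matrix plus bias vector
    return total


def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k highest scores."""
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices ordered from highest to lowest score
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


if __name__ == "__main__":
    print(vgg_param_count(VGG16))  # 138357544
    print(vgg_param_count(VGG19))  # 143667240
    # Toy scores for 3 samples over 3 classes (made-up numbers)
    scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
    labels = [1, 1, 0]
    print(top_k_accuracy(scores, labels, k=1))  # 1 of 3 samples correct
    print(top_k_accuracy(scores, labels, k=2))  # 2 of 3 samples correct
```

Under these assumptions the counts come to 138,357,544 for VGG-16 and 143,667,240 for VGG-19, consistent with the 138 million and 144 million listed in the table. Almost 124 million of the VGG-16 total sits in the three fully connected layers, so most of its parameters lie in the classifier rather than in the convolutions. Top-5 accuracy can never be lower than top-1 accuracy, because any prediction counted as correct at k = 1 is also correct at k = 5.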

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mamat, N.; Othman, M.F.; Abdoulghafor, R.; Belhaouari, S.B.; Mamat, N.; Mohd Hussein, S.F. Advanced Technology in Agriculture Industry by Implementing Image Annotation Technique and Deep Learning Approach: A Review. Agriculture 2022, 12, 1033. https://doi.org/10.3390/agriculture12071033
