Machine Learning and Virtual Reality on Body Movements’ Behaviors to Classify Children with Autism Spectrum Disorder

Abstract

1. Introduction

1.1. Autism Spectrum Disorder and Repetitive Behaviors

1.2. Traditional Assessment in ASD: Advantages and Limitations

1.3. Implicit Methods: Biomarkers as Supports for ASD Assessment

1.4. Repetitive Behaviors Recognition in ASD

1.5. Use of Virtual Reality in ASD

2. Materials and Methods

2.1. Participants

2.2. Psychological Assessment

2.2.1. Autism Diagnostic Observation Schedule (ADOS-2)

2.2.2. Autism Diagnostic Interview-Revised (ADI-R)

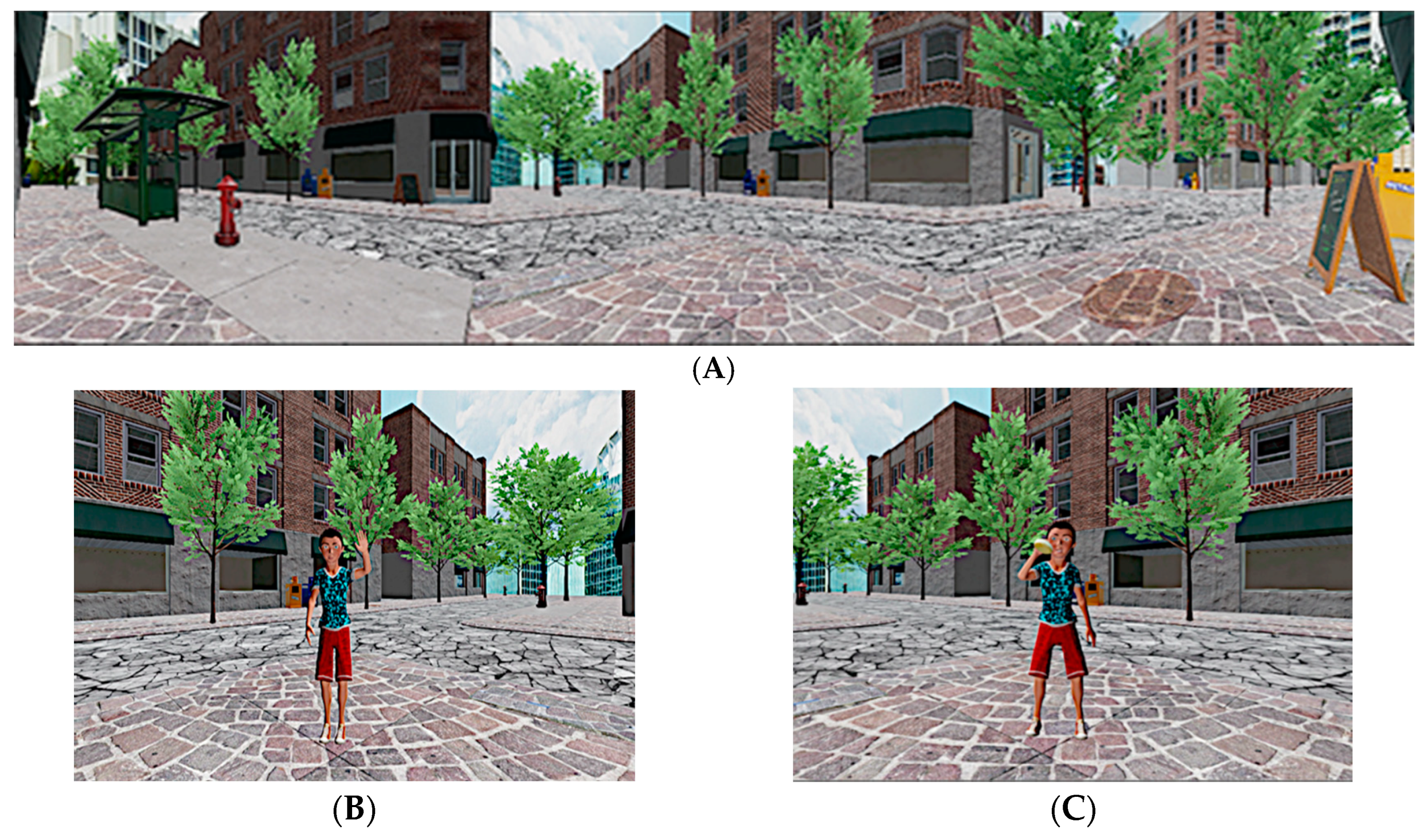

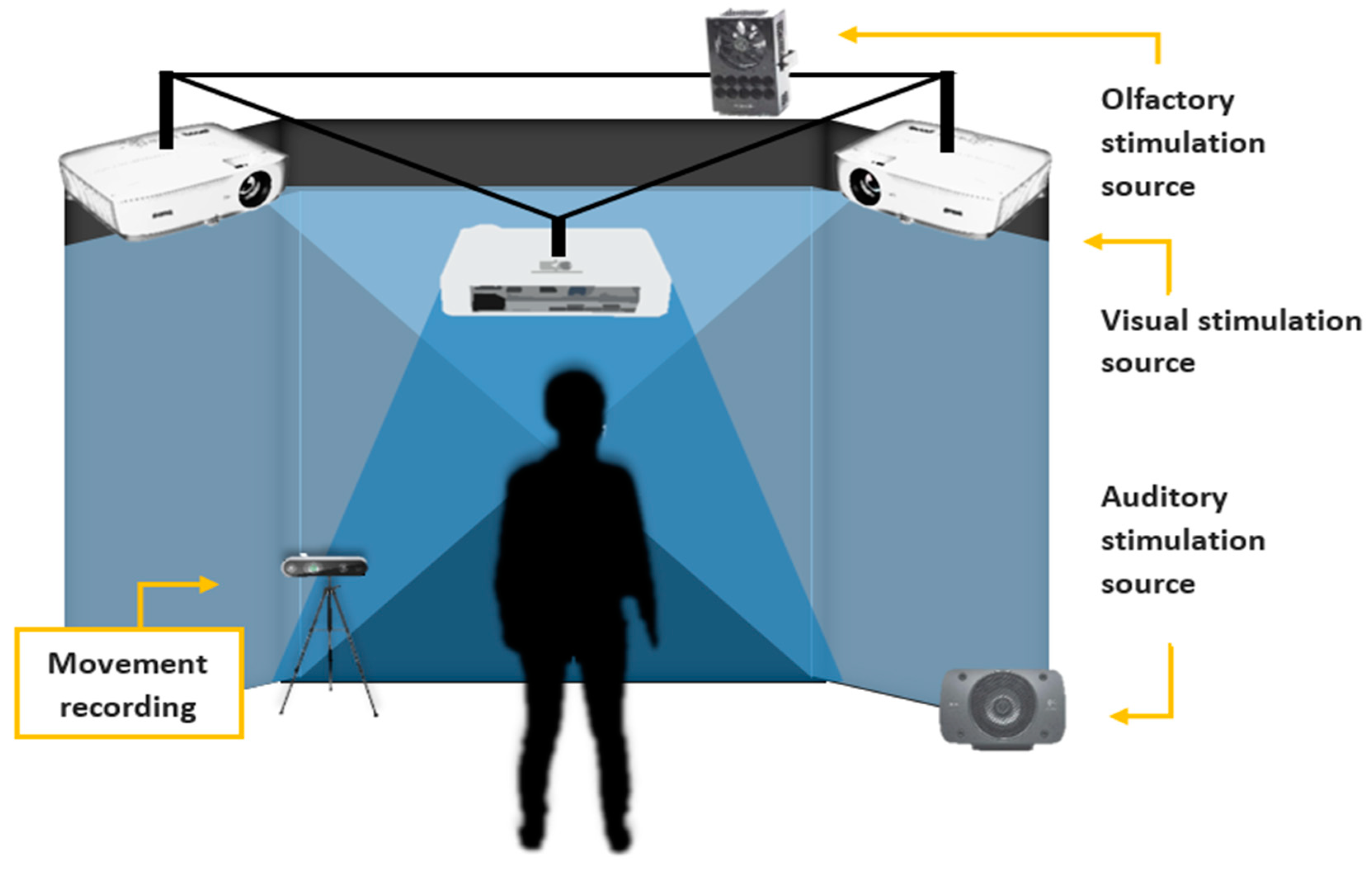

2.3. The Multimodal Virtual Environment (VE) and the Imitation Tasks

2.4. The Olfactory System

2.5. Experimental Procedure

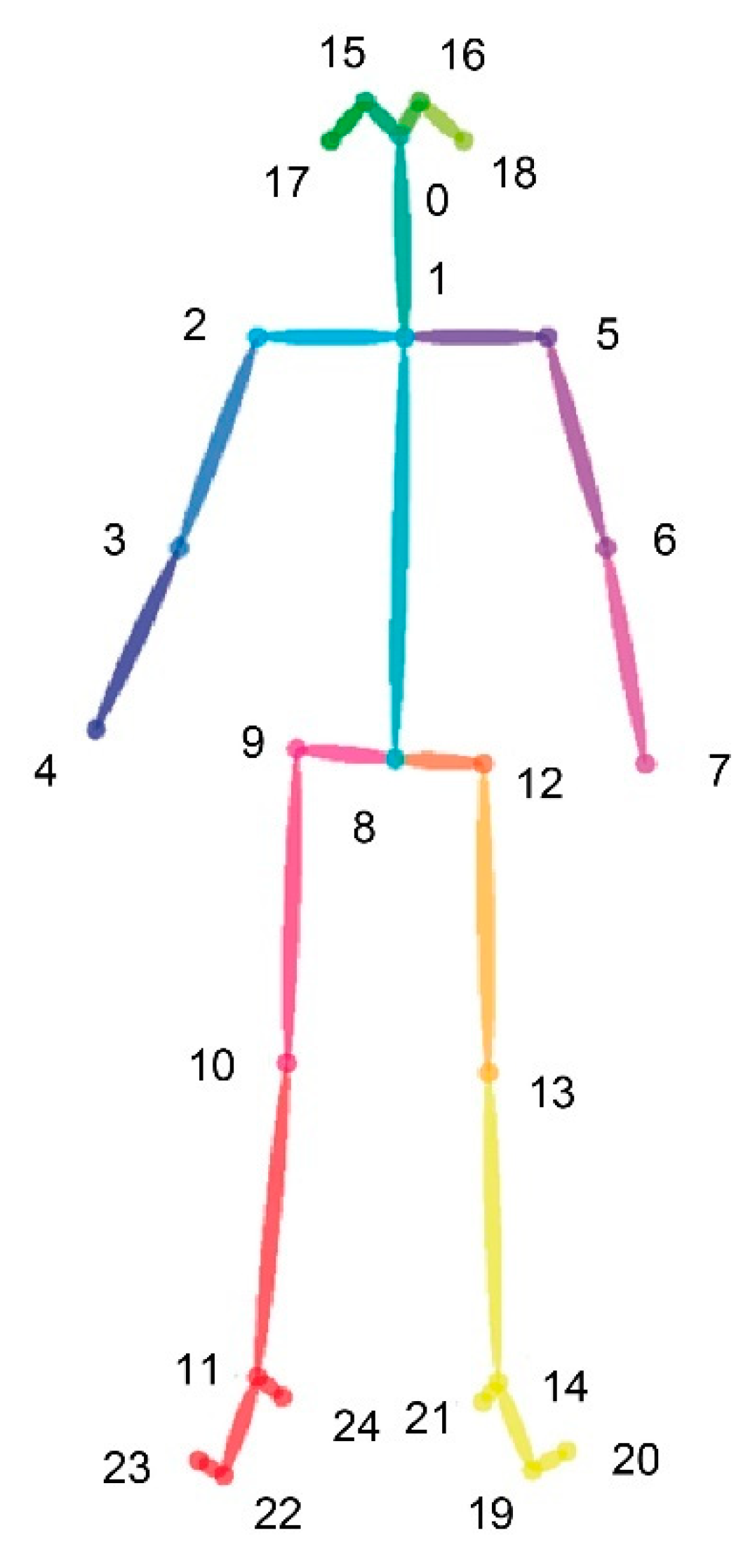

2.6. Behavioural Motor Assessment and Data Processing

2.7. Statistical Analysis

3. Results

3.1. Analysis of Total Movement

3.2. ASD Classification Performance

4. Discussion

4.1. Body Movement Parameters’ Differences between Groups

4.2. Machine Learning Methods on Body Movements and Features Used

4.3. The Influence of Stimuli Conditions

4.4. Limitations and Future Studies

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Set of Joints | Stimuli: V | Stimuli: VA | Stimuli: VAO | Stimuli: All |
|---|---|---|---|---|
| Head | 80.85% | 82.98% | 89.36% | 82.98% |
| Trunk | 70.21% | 72.34% | 76.60% | 82.98% |
| Arms | 87.23% | 78.72% | 65.96% | 74.47% |
| Legs | 61.70% | 63.83% | 74.47% | 72.34% |
| Feet | 78.72% | 78.72% | 65.96% | 82.98% |
| All | 89.36% | 74.47% | 70.21% | 70.21% |

| Stimuli | Set of Joints | Accuracy | TPR | TNR | Kappa | PCA Features Selected |
|---|---|---|---|---|---|---|
| All | All | 70.21% | 45.45% | 92.00% | 0.39 | 1/20 |
| V | All | 89.36% | 100.00% | 80.00% | 0.79 | 1/14 |
| VA | All | 74.47% | 59.09% | 88.00% | 0.48 | 2/12 |
| VAO | All | 70.21% | 63.64% | 76.00% | 0.40 | 3/12 |
| All | Head | 82.98% | 100.00% | 68.00% | 0.67 | 1/11 |
| All | Trunk | 82.98% | 63.64% | 100.00% | 0.65 | 2/8 |
| All | Arms | 74.47% | 90.91% | 60.00% | 0.50 | 1/15 |
| All | Legs | 72.34% | 68.18% | 76.00% | 0.44 | 3/8 |
| All | Feet | 82.98% | 81.82% | 84.00% | 0.66 | 2/13 |
| V | Head | 80.85% | 68.18% | 92.00% | 0.61 | 1/6 |
| V | Trunk | 70.21% | 81.82% | 60.00% | 0.41 | 1/3 |
| V | Arms | 87.23% | 72.73% | 100.00% | 0.74 | 1/8 |
| V | Legs | 61.70% | 45.45% | 76.00% | 0.22 | 1/5 |
| V | Feet | 78.72% | 54.55% | 100.00% | 0.56 | 2/6 |
| VA | Head | 82.98% | 63.64% | 100.00% | 0.65 | 3/6 |
| VA | Trunk | 72.34% | 72.73% | 72.00% | 0.45 | 1/3 |
| VA | Arms | 78.72% | 95.45% | 64.00% | 0.58 | 1/8 |
| VA | Legs | 63.83% | 45.45% | 80.00% | 0.26 | 1/4 |
| VA | Feet | 78.72% | 72.73% | 84.00% | 0.57 | 2/6 |
| VAO | Head | 89.36% | 77.27% | 100.00% | 0.78 | 2/6 |
| VAO | Trunk | 76.60% | 68.18% | 84.00% | 0.53 | 2/3 |
| VAO | Arms | 65.96% | 50.00% | 80.00% | 0.30 | 2/7 |
| VAO | Legs | 74.47% | 68.18% | 80.00% | 0.48 | 1/5 |
| VAO | Feet | 65.96% | 45.45% | 84.00% | 0.30 | 2/7 |
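The accuracy and kappa columns can be cross-checked from TPR and TNR alone. The sketch below is illustrative only and rests on assumptions that this section does not state: the class sizes (22 in the positive class, 25 in the negative class) are inferred from the reported percentages (for example, 45.45% ≈ 10/22 and 92.00% = 23/25), the positive class is taken to be the ASD group, and the function name `metrics_from_rates` is hypothetical. It rebuilds a 2×2 confusion matrix and computes accuracy and Cohen's kappa with the standard formulas.

```python
def metrics_from_rates(tpr, tnr, n_pos=22, n_neg=25):
    """Recover accuracy and Cohen's kappa from TPR and TNR.

    n_pos and n_neg are ASSUMED class sizes, inferred from the
    percentages in the table; they are not given in this section.
    """
    # Rebuild the confusion-matrix counts from the rates.
    tp = round(tpr * n_pos)   # true positives
    tn = round(tnr * n_neg)   # true negatives
    fn = n_pos - tp           # false negatives
    fp = n_neg - tn           # false positives
    n = n_pos + n_neg

    # Observed agreement (accuracy).
    accuracy = (tp + tn) / n

    # Agreement expected by chance, from the row and column marginals.
    p_expected = ((tp + fn) * (tp + fp) + (tn + fp) * (tn + fn)) / n**2

    # Cohen's kappa: agreement beyond chance, normalized.
    kappa = (accuracy - p_expected) / (1 - p_expected)
    return accuracy, kappa


# Row "All | All": TPR 45.45%, TNR 92.00%
acc_all, kappa_all = metrics_from_rates(0.4545, 0.92)

# Row "V | All": TPR 100.00%, TNR 80.00%
acc_v, kappa_v = metrics_from_rates(1.00, 0.80)

print(f"All/All: accuracy={acc_all:.2%}, kappa={kappa_all:.2f}")
print(f"V/All:   accuracy={acc_v:.2%}, kappa={kappa_v:.2f}")
```

Under these assumed class sizes, both rows reproduce the tabulated accuracy and kappa to two decimals. This supports reading TPR as sensitivity for a 22-participant group and TNR as specificity for a 25-participant group.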

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Alcañiz Raya, M.; Marín-Morales, J.; Minissi, M.E.; Teruel Garcia, G.; Abad, L.; Chicchi Giglioli, I.A. Machine Learning and Virtual Reality on Body Movements’ Behaviors to Classify Children with Autism Spectrum Disorder. J. Clin. Med. 2020, 9, 1260. https://doi.org/10.3390/jcm9051260
