Artificial Intelligence and Digital Tools Across the Hepato-Pancreato-Biliary Surgical Pathway: A Systematic Review

Abstract

1. Introduction

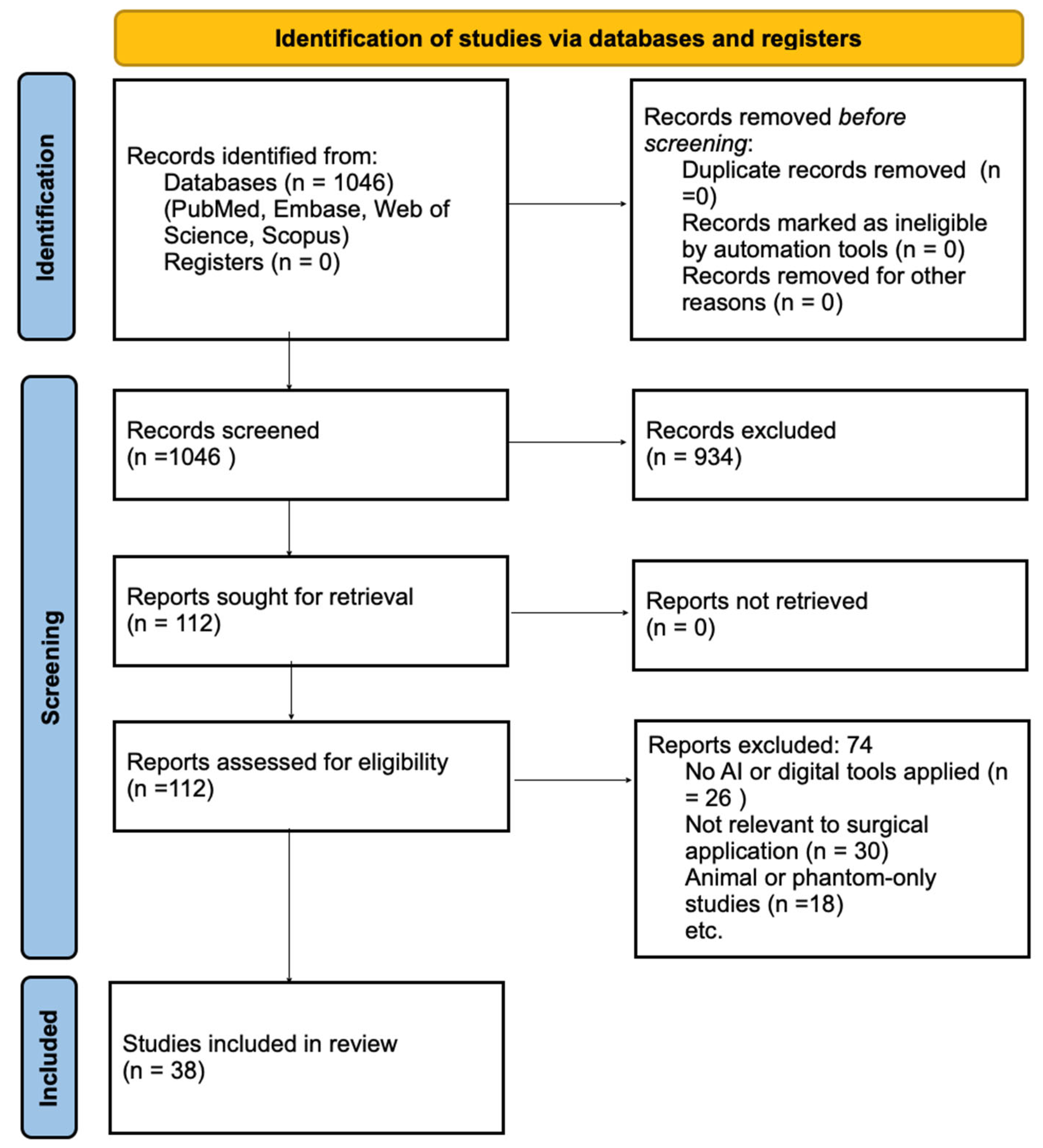

2. Materials and Methods

2.1. Protocol and Registration

2.2. Eligibility Criteria

2.3. Information Sources and Search Strategy

2.4. Study Selection

2.5. Data Extraction

2.6. Risk of Bias Assessment

2.7. Data Synthesis

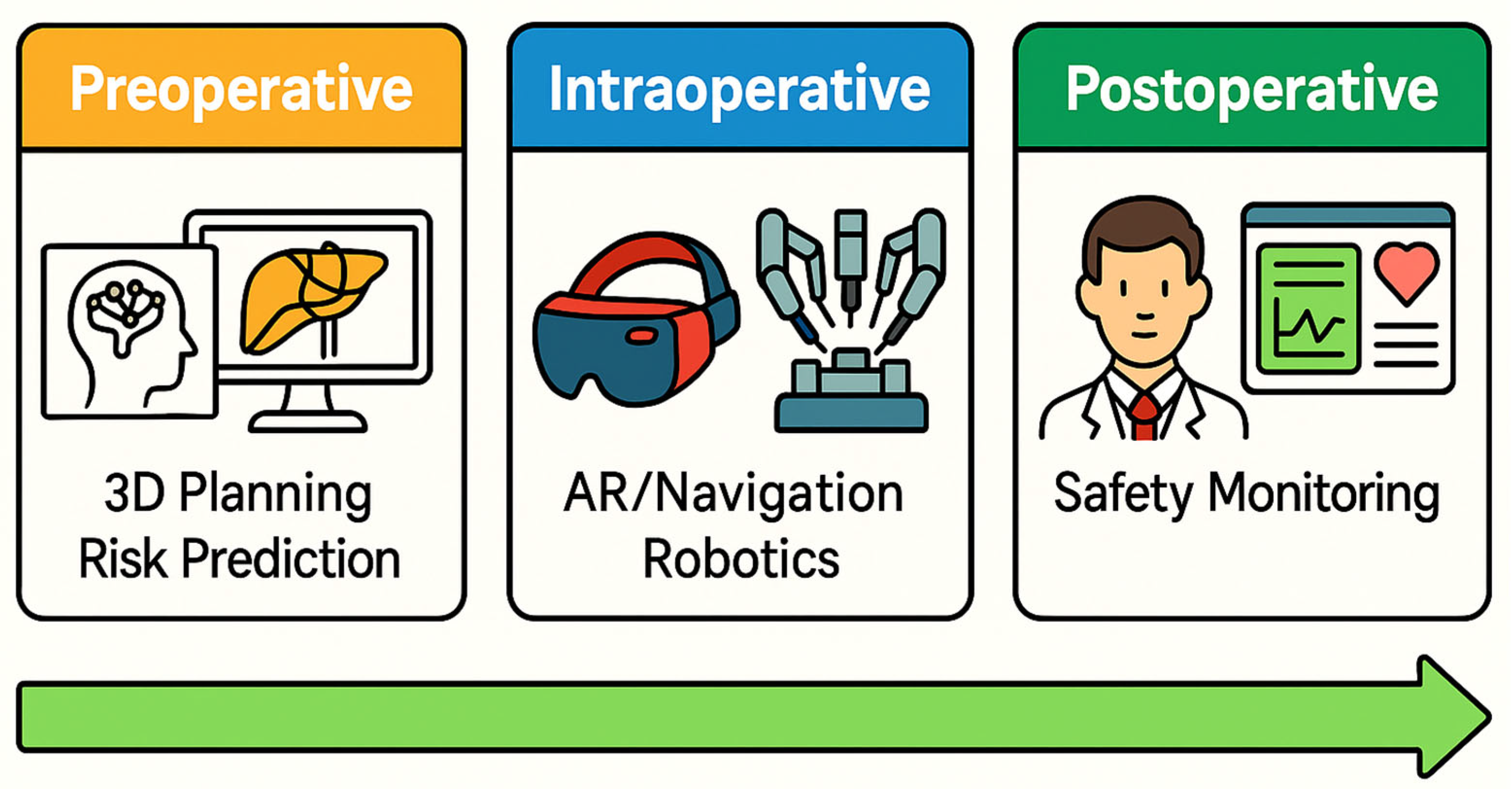

- Preoperative Imaging and Diagnosis

- Risk Prediction (outcomes)

- Surgical Planning and Simulation

- Intraoperative Guidance and Navigation

- Surgical Video Analysis/Robotics

3. Results

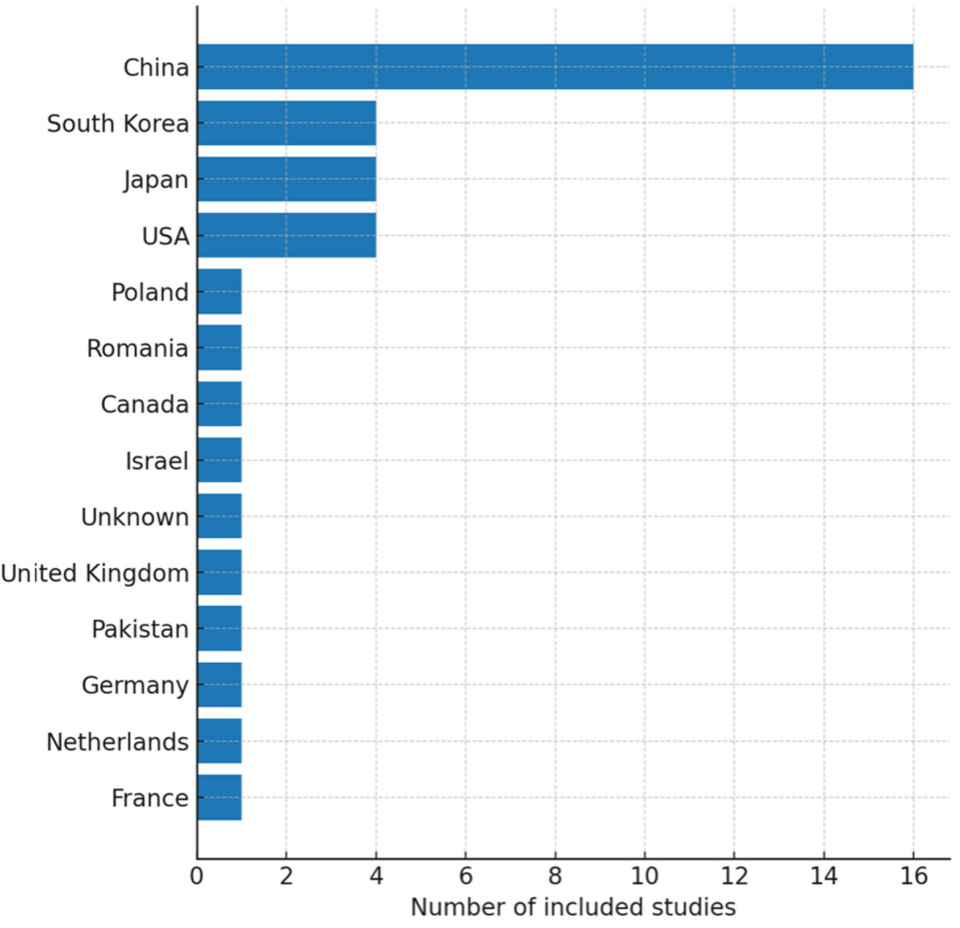

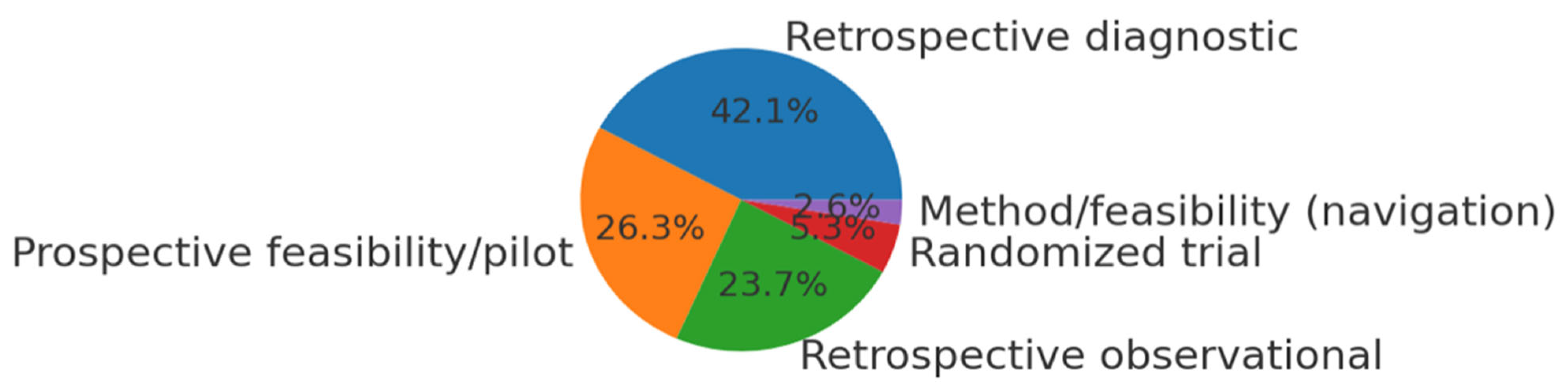

3.1. Study Selection and Characteristics

3.2. Preoperative AI and 3D Planning (Imaging and Diagnosis)

- Machine learning for tumor detection and characterization: In liver imaging, AI algorithms (often CNNs) have shown high accuracy in identifying liver tumors and classifying lesion types. For example, one study combined radiomics features with a CNN to differentiate hepatocellular carcinoma (HCC) from liver metastases on ultrasound images, achieving an AUC of ~0.85–0.90 [4]. In another, a deep learning model (ResNet-50) trained on contrast-enhanced ultrasound (CEUS) images distinguished pancreatic ductal adenocarcinoma (PDAC) from chronic pancreatitis with an AUC of 0.95 [22]. These reported accuracies are comparable to, or exceed, expert radiologist performance in these tasks. A recent systematic review of AI in liver imaging noted that most algorithms focus on lesion classification (particularly on CT) and report high internal accuracy, but frequently lack external validation [28], which suggests that these results may be optimistically biased.

- Beyond detecting lesions, AI has been used to predict tumor biology or stage from preoperative scans. For instance, radiomic models analyzing preoperative CT have shown the ability to predict early HCC recurrence after resection better than conventional clinical staging, by identifying subtle textural features associated with tumor aggressiveness [30]. In pancreatic cancer, one model automatically quantified vascular involvement on CT to classify tumors as resectable or locally advanced (unresectable), showing high agreement with expert assessments [31]. Such tools could support surgical decision-making by staging tumors more objectively before surgery (e.g., determining which patients should proceed directly to resection and which need neoadjuvant therapy).

- Some digital tools also extend into intraoperative image enhancement. For example, an experimental system used an AI algorithm to register real-time intraoperative ultrasound with preoperative CT images [32]. Such image fusion could help surgeons locate tumors during laparoscopic ultrasound by correlating the live images with preoperative imaging. In summary, AI applied to preoperative imaging has shown high diagnostic performance in identifying and characterizing HPB tumors. The challenge now lies in translating these algorithms into clinical workflows and ensuring that they maintain their accuracy in real-world settings. Prospective validation is therefore needed: a recent review concluded that although ultrasound-based AI models reached AUCs of up to 0.98 in differentiating tissue types, prospective studies are required to confirm that this performance holds in external cohorts [4].

- Automated 3D reconstruction: In parallel, advanced 3D imaging and visualization tools are augmenting preoperative planning. Several studies used AI to reconstruct liver and pancreatic anatomy in 3D from CT/MRI scans, segmenting the liver into anatomical units and delineating tumors, vessels, and ducts [33,34]. Creating patient-specific 3D models has historically been time-consuming, often requiring hours of manual work by radiologists, and AI-based image segmentation accelerates this substantially; in one study, a deep learning algorithm reduced liver 3D model processing time by ~94% compared with manual methods. Rapid model generation makes 3D visualization feasible for every complex case. Surgeons can then plan resections virtually: determining optimal resection planes, estimating future liver remnant volume, and even rehearsing the operation on a computer [35,36]. One recent randomized study incorporated 3D-printed models and reported that planning with AI-assisted 3D-printed liver models led to significantly less intraoperative blood loss than standard planning without such models, suggesting that enhanced planning can translate into improved intraoperative outcomes [37].

- Augmented and mixed reality for planning: In addition to screen-based 3D models, some teams have explored using augmented reality (AR) or mixed reality to aid preoperative planning. For instance, holographic visualization of patient anatomy via AR headsets has been tested as a planning tool. In a pilot study for pancreatic tumor resection using mixed reality, surgeons could visualize major arteries and veins holographically in 3D prior to surgery, which reportedly improved their confidence in dissection around those structures (though quantitative outcomes were not yet measured) [38,39].
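Estimating future liver remnant (FLR) volume, mentioned above as part of virtual planning, largely reduces to counting labeled voxels once an AI model has segmented the scan. The Python sketch below assumes a hypothetical label convention (remnant parenchyma, resected parenchyma, tumor) stored as a 3D integer array with known voxel spacing in millimeters; the label values and function names are illustrative and are not taken from any cited system. It uses one common convention for the ratio, dividing the remnant by the functional liver volume (total liver minus tumor).

```python
import numpy as np

# Hypothetical label convention for an AI-segmented CT volume (assumption, not a standard)
LIVER_REMNANT = 1   # parenchyma planned to remain after resection
LIVER_RESECTED = 2  # parenchyma inside the planned resection
TUMOR = 3           # tumor tissue, excluded from the functional volume


def label_volume_ml(labels: np.ndarray, label: int, spacing_mm) -> float:
    """Volume of one label in mL: voxel count x voxel volume (1 mL = 1000 mm^3)."""
    voxel_mm3 = float(np.prod(spacing_mm))
    return int(np.count_nonzero(labels == label)) * voxel_mm3 / 1000.0


def flr_ratio(labels: np.ndarray, spacing_mm) -> float:
    """Future liver remnant as a fraction of functional liver volume (total liver minus tumor)."""
    remnant = label_volume_ml(labels, LIVER_REMNANT, spacing_mm)
    resected = label_volume_ml(labels, LIVER_RESECTED, spacing_mm)
    functional = remnant + resected
    if functional == 0:
        raise ValueError("no liver parenchyma labeled")
    return remnant / functional


if __name__ == "__main__":
    # Toy 10 x 10 x 10 volume with 1 x 1 x 2 mm voxels (illustrative only)
    labels = np.zeros((10, 10, 10), dtype=np.int8)
    labels[:4] = LIVER_REMNANT
    labels[4:8] = LIVER_RESECTED
    labels[8:] = TUMOR
    spacing = (1.0, 1.0, 2.0)
    print(f"remnant = {label_volume_ml(labels, LIVER_REMNANT, spacing):.1f} mL")  # -> remnant = 0.8 mL
    print(f"FLR ratio = {flr_ratio(labels, spacing):.2f}")  # -> FLR ratio = 0.50
```

In practice the voxel spacing would come from the image header, and many centers instead normalize the remnant to a standardized total liver volume estimated from body surface area; the sketch does not implement that variant.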

3.3. Predictive Analytics (AI for Risk and Outcome Prediction)

- Postoperative complication risk models: A prototypical example is prediction of postoperative pancreatic fistula (POPF), one of the most common and feared complications after pancreatic resection. Traditional risk scores such as the Fistula Risk Score (FRS) use a few surgeon-assessed variables and have modest accuracy (AUC often ~0.6) [40]. Several included studies developed ML models that substantially improved prediction of clinically relevant POPF. In one recent study, for instance, an ensemble of ML algorithms was applied to ~300 pancreaticoduodenectomies; the model incorporated dozens of clinical and radiologic features and achieved an AUC of around 0.80 for POPF on validation, significantly outperforming the conventional FRS (AUC ~0.56) [24]. This highlights the value of AI for personalized risk stratification, since it can analyze complex, high-dimensional perioperative data that surgeons cannot easily synthesize alone. Similarly, other studies used ML to predict complications such as post-hepatectomy liver failure or bile leak after hepatectomy for liver cancer, often reporting improved accuracy over logistic regression models [41,42]. Notably, most AI-based risk models to date have been developed retrospectively, and prospective testing remains scarce. Studies of human–AI collaboration suggest that combining clinical expertise with AI can be synergistic in some settings: for example, a model might quickly flag high-risk patients, whom the surgical team then evaluates further and manages proactively (e.g., with closer postoperative monitoring or preemptive interventions) [43,44].

- Outcome prediction and decision support: Beyond immediate complications, AI has been used to predict oncologic outcomes such as cancer recurrence and long-term survival. Models integrating tumor genomic data, radiologic features, and patient comorbidities have shown promise in identifying patients likely to experience early recurrence after resection, which could inform adjuvant therapy decisions. For example, one radiomics study predicted early recurrence of HCC from preoperative imaging and laboratory data, raising the prospect of tailoring follow-up intensity for high-risk patients [45]. Another AI tool predicted which patients might not benefit from surgery at all because of very aggressive tumor biology, potentially informing multidisciplinary discussions of alternative treatments [46]. These predictive tools carry ethical implications: using AI to recommend against surgery or to depart from standard treatment must be approached cautiously and transparently. Nonetheless, they offer a route to more data-driven clinical decisions in HPB oncology.
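The POPF comparison above is framed in terms of AUC (about 0.80 for the ML ensemble versus about 0.56 for the FRS). As a sketch of what that number means, the Python code below computes AUC as the probability that a randomly chosen patient who developed the complication received a higher predicted risk than one who did not (the Mann–Whitney formulation), and shows how predicted risks might be turned into a review list for the surgical team. The scores, outcomes, patient IDs, function names, and the 0.5 threshold are toy values and hypothetical names chosen for illustration, not data or code from any included study.

```python
from typing import List, Sequence


def roc_auc(scores: Sequence[float], outcomes: Sequence[int]) -> float:
    """AUC as the probability that a randomly chosen patient with the complication
    (outcome 1) has a higher predicted risk than one without (outcome 0); ties count 0.5.
    Equivalent to the Mann-Whitney U statistic divided by n_pos * n_neg."""
    pos = [s for s, y in zip(scores, outcomes) if y == 1]
    neg = [s for s, y in zip(scores, outcomes) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one patient with and one without the outcome")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def flag_for_review(patient_ids: Sequence[str], scores: Sequence[float], threshold: float) -> List[str]:
    """IDs whose predicted risk meets the threshold: a prompt for clinical review, not a decision."""
    return [pid for pid, s in zip(patient_ids, scores) if s >= threshold]


if __name__ == "__main__":
    ids = ["p1", "p2", "p3", "p4", "p5", "p6"]
    risk = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]  # toy model outputs
    popf = [1, 1, 1, 0, 0, 0]               # toy observed outcomes
    print(f"AUC = {roc_auc(risk, popf):.3f}")          # -> AUC = 0.889
    print(flag_for_review(ids, risk, threshold=0.5))  # -> ['p1', 'p2', 'p4']
```

The pairwise loop is quadratic in cohort size, which is acceptable for a sketch; rank-based implementations compute the same quantity in O(n log n). AUC measures discrimination only: a model can rank patients well yet be poorly calibrated, which matters when a fixed threshold drives clinical flags.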

3.4. Intraoperative AR and Navigation

- AR for open and minimally invasive surgery: Several pilot studies in our review implemented AR during liver or pancreatic resections. The common workflow is as follows: a 3D model of the patient’s anatomy is created from preoperative imaging and then registered to the patient on the operating table, often using surface landmarks or fiducial markers so that the virtual model aligns correctly with the real organ. In one AR-guided liver surgery study, for example, a CT-based 3D model of the liver (with tumor and vessels) was projected onto the live laparoscopic camera view [48]. In such an overlay, prepared for a planned segment 5 liver resection, the tumor (green) and the planned transection plane (red) are delineated for the surgeon. The overlay gives the surgeon a form of “X-ray vision”: the ability to see structures hidden beneath the liver surface. This can guide where to cut and help avoid critical vessels that are not visible externally [26,49].

- Technical feasibility and accuracy: The feasibility of AR has been demonstrated in small case series. One recent study reported using an AR overlay in three robotic liver resections, displaying tumor and vasculature projections in the surgeon’s console view. Surgeons found that it helped them localize lesions and plan transections. A noted limitation, however, was the need for better registration accuracy, since misalignment of the virtual overlay by even a few millimeters could erode trust in the system. Organ deformation is a major issue: in soft tissue such as the liver, the organ changes shape once it is mobilized or partially resected, making static preoperative overlays increasingly inaccurate [26]. Future solutions may involve real-time organ tracking or deformable models, potentially using AI to adjust the overlays continuously based on intraoperative imaging or sensor data [50,51].

- Navigation and workflow: Despite these challenges, AR shows promise as a navigation aid. For example, it has been used to guide the placement of trocars (ports) in minimally invasive HPB surgery by projecting an optimal port map onto the patient’s abdomen [26,52], and to help locate small tumors during parenchymal transection by projecting their approximate location and depth [52]. Surgeons generally still rely on tactile feedback or intraoperative ultrasound for confirmation, but AR provides an additional layer of information. In a recent mixed-reality study for pancreatic tumor resection, surgeons could see major blood vessels holographically “through” the pancreatic tissue and reported greater confidence in dissecting around those structures (a qualitative finding) [53].
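Fiducial-based registration, the step described above that aligns the preoperative model with the patient, is classically solved as a least-squares rigid fit between paired points using a singular value decomposition (the method associated with Kabsch and with Arun et al.). The Python/NumPy sketch below assumes that corresponding fiducial coordinates (in mm) are already known in both CT space and tracker space; the fiducial and target coordinates, the noise level, and the function names are invented for illustration, and this is not the registration pipeline of any cited AR system. It reports both the fiducial registration error (FRE) and the error at a hypothetical tumor target (TRE), because the overlay's usefulness depends on millimeter-level accuracy at the target.

```python
import numpy as np


def rigid_register(moving: np.ndarray, fixed: np.ndarray):
    """Least-squares rigid transform (R, t) such that fixed ~= R @ p + t for each moving point p.

    moving, fixed: (N, 3) arrays of paired fiducial positions in mm (N >= 3, not collinear).
    """
    mu_m = moving.mean(axis=0)
    mu_f = fixed.mean(axis=0)
    # 3x3 cross-covariance of the centered point sets
    H = (moving - mu_m).T @ (fixed - mu_f)
    U, _, Vt = np.linalg.svd(H)
    # Correct for a possible reflection so that det(R) = +1
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_f - R @ mu_m
    return R, t


def apply_transform(points: np.ndarray, R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Apply (R, t) to an (N, 3) array of row-vector points."""
    return points @ R.T + t


def fre_mm(moving: np.ndarray, fixed: np.ndarray, R: np.ndarray, t: np.ndarray) -> float:
    """Root-mean-square fiducial registration error in mm."""
    residuals = apply_transform(moving, R, t) - fixed
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))


if __name__ == "__main__":
    # Hypothetical fiducials in CT (model) space, mm
    ct_fiducials = np.array([[0.0, 0.0, 0.0], [50.0, 0.0, 0.0], [0.0, 40.0, 0.0],
                             [0.0, 0.0, 30.0], [20.0, 10.0, 5.0]])
    # Simulated true patient pose: 30 degrees about z plus a translation
    theta = np.deg2rad(30.0)
    R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                       [np.sin(theta), np.cos(theta), 0.0],
                       [0.0, 0.0, 1.0]])
    t_true = np.array([10.0, -5.0, 2.0])
    rng = np.random.default_rng(0)
    # Tracked fiducials with ~1 mm localization noise (assumed)
    tracked = apply_transform(ct_fiducials, R_true, t_true) + rng.normal(scale=1.0, size=ct_fiducials.shape)

    R, t = rigid_register(ct_fiducials, tracked)
    tumor_ct = np.array([[25.0, 20.0, 15.0]])  # hypothetical tumor centroid
    tre = np.linalg.norm(apply_transform(tumor_ct, R, t) - apply_transform(tumor_ct, R_true, t_true))
    print(f"FRE = {fre_mm(ct_fiducials, tracked, R, t):.2f} mm, TRE at tumor = {tre:.2f} mm")
```

A rigid fit like this cannot represent the liver changing shape after mobilization, which is exactly the failure mode described above; deformable and learned registration methods aim to model that residual motion.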

3.5. Robotics and AI Augmentation (Computer Vision in Surgery)

- Automatic landmark and safety identification: A prominent example is objective recognition of the critical view of safety (CVS) during laparoscopic cholecystectomy. CVS is a method for ensuring that the cystic duct and artery are clearly identified before they are divided, to prevent common bile duct injury. Whether CVS has been achieved is typically a subjective judgment by the surgeon. One included study developed an AI system to detect automatically when CVS had been attained: the authors trained a deep CNN on ~23,000 laparoscopic cholecystectomy video frames labeled for the presence or absence of key CVS criteria. The best model achieved ~83% accuracy in determining whether CVS was achieved, running at ~6 frames per second (near real time). Such a system could alert surgeons who have not yet adequately exposed the critical structures [23], in effect acting as a “safety coach” in the OR. Because bile duct injuries are among the most dreaded complications in HPB surgery, this application is highly significant. A recent validation study likewise found that AI can identify safe and unsafe zones of dissection during cholecystectomy, reinforcing its potential to improve surgical safety [58].

- Real-time hazard detection: Another emerging application is automatic detection of intraoperative bleeding and other hazards. In complex HPB surgery, bleeding from small vessels can go unnoticed for critical seconds if the surgeon’s attention is elsewhere. One study designed a hazard detection system that uses the YOLOv5 deep learning model to identify bleeding in endoscopic video and immediately displays alerts via an AR headset. The system distinguished true bleeding from look-alike events (such as spilled irrigation fluid) with high accuracy, marking the bleed with a bounding box on the surgeon’s display in real time. In essence, this is an AI-driven early warning system: a digital “co-pilot” that continuously scans the operative field for hazards. In testing on recorded procedures, the system alerted surgeons to bleeding events that occurred outside the central field of view or at its periphery. Such technology could shorten the response time to hemorrhage, potentially improving patient safety in major liver or pancreatic resections, where even a brief delay in controlling bleeding can have serious consequences [27].

- Robotic surgery integration: AI integration is also advancing in robotic surgery. The da Vinci surgical system already supports some digital interfacing; for example, its TilePro™ feature can display auxiliary imaging (such as preoperative scans or ultrasound) side by side with the operative view [60]. Researchers are going further by embedding AI into robotic workflows. One application is surgical skill analysis: computer vision can analyze instrument motion (captured from the robot’s kinematic data or video) to grade surgeon skill or identify suboptimal technique. Although our review focused on clinical outcome tools, some surgical studies have used AI to assess technique quality for training purposes [61].
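A frame-level detector such as the YOLOv5 system described above produces a decision for every video frame, so a single spurious frame could trigger a nuisance alert. One generic way to trade a little latency for fewer false alarms is a k-of-n persistence filter on the per-frame output. The Python sketch below is an illustrative assumption, not the method reported in the cited studies; the class name, window size, and thresholds are hypothetical. It also computes the minimum delay such a filter adds at a given frame rate, using the ~6 frames per second reported for the CVS model as an example rate.

```python
from collections import deque
from typing import Iterable


class PersistenceAlert:
    """k-of-n temporal filter over per-frame detections (hypothetical post-processing).

    The alert is active when at least `k` of the last `window` frames contain
    a 'bleeding' detection whose confidence is at least `min_conf`.
    """

    def __init__(self, window: int = 5, k: int = 3, min_conf: float = 0.5):
        if not 1 <= k <= window:
            raise ValueError("require 1 <= k <= window")
        self.k = k
        self.min_conf = min_conf
        self.recent = deque(maxlen=window)  # the oldest frame drops out automatically

    def update(self, frame_confidences: Iterable[float]) -> bool:
        """Feed the detector confidences for one frame; return whether the alert is active."""
        hit = any(c >= self.min_conf for c in frame_confidences)
        self.recent.append(hit)
        return sum(self.recent) >= self.k


def min_alert_delay_s(k: int, fps: float) -> float:
    """Earliest possible alert after the first positive frame, ignoring inference time:
    the k-th consecutive positive frame arrives (k - 1) frame intervals later."""
    return (k - 1) / fps


if __name__ == "__main__":
    alert = PersistenceAlert(window=5, k=3, min_conf=0.5)
    # Toy per-frame detector confidences (invented for illustration)
    frames = [[0.9], [], [0.7], [0.8], [], [], [], []]
    print([alert.update(f) for f in frames])
    # -> [False, False, False, True, True, False, False, False]
    print(f"{min_alert_delay_s(3, 6.0):.2f} s")  # -> 0.33 s
```

A larger `k` suppresses more transient false positives (for example, a single frame of irrigation fluid misread as blood) but delays every true alert by at least k − 1 frames, so the window would need tuning against the response-time goal described above.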

3.6. Postoperative Video Analytics

4. Discussion

4.1. Key Findings and Current Evidence

4.2. Evidence Quality and Bias

4.3. Safety and Ethical Considerations

4.4. Costs and Implementation

4.5. Clinical Guidance for Surgeons

- Preoperative planning: Use available 3D reconstructions and consider AI-based volumetry or segmentation where accessible, especially in complex resections.

- Intraoperative AR/Navigation: Treat AR overlays as adjuncts, not replacements, and verify with standard techniques. Early adoption should include fallback strategies.

- Robotic platforms: Maximize built-in digital features; integrate emerging AI modules cautiously and maintain oversight of semi-autonomous functions.

- AI decision support: Apply predictive models as aids, not determinants; combine outputs with clinical judgment and document their use.

- Team training: Ensure all OR staff understand the purpose and limits of digital tools, with contingency plans in place.

- Ongoing education: Surgeons should remain updated on AI principles and evolving guidelines to critically evaluate and responsibly deploy new technologies.

- Patient communication: Transparently explain when digital or AI tools support surgical planning or procedures and obtain informed consent for experimental systems.

4.6. Strengths and Limitations of This Review

5. Future Directions

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

References

- Agrawal, H.; Tanwar, H.; Gupta, N. Revolutionizing hepatobiliary surgery: Impact of three-dimensional imaging and virtual surgical planning on precision, complications, and patient outcomes. Artif. Intell. Gastroenterol. 2025, 6, 106746.

- Zureikat, A.H.; Postlewait, L.M.; Liu, Y.; Gillespie, T.W.; Weber, S.M.; Abbott, D.E.; Ahmad, S.A.; Maithel, S.K.; Hogg, M.E.; Zenati, M.; et al. A multi-institutional comparison of perioperative outcomes of robotic and open pancreaticoduodenectomy. Ann. Surg. 2016, 264, 640–649.

- Zheng, R.J.; Li, D.L.; Lin, H.M.; Wang, J.F.; Luo, Y.M.; Tang, Y.; Li, F.; Hu, Y.; Su, S. Bibliometrics of Artificial Intelligence Applications in Hepatobiliary Surgery from 2014 to 2024. World J. Gastrointest. Surg. 2025, 17, 104728.

- Bektaş, M.; Chia, C.M.; Burchell, G.L.; Daams, F.; Bonjer, H.J.; van der Peet, D.L. Artificial intelligence-aided ultrasound imaging in hepatopancreatobiliary surgery: Where are we now? Surg. Endosc. 2024, 38, 4869–4879.

- Chadebecq, F.; Vasconcelos, F.; Mazomenos, E.; Stoyanov, D. Computer vision in the surgical operating room. Visc. Med. 2020, 36, 456–462.

- Lan, T.; Liu, S.; Dai, Y.; Jin, Y. Real-time image fusion and Apple Vision Pro in laparoscopic microwave ablation of hepatic hemangiomas. npj Precis. Oncol. 2025, 9, 79.

- Hanna, M.G.; Pantanowitz, L.; Dash, R.; Harrison, J.H.; Deebajah, M.; Pantanowitz, J.; Rashidi, H.H. Future of Artificial Intelligence-Machine Learning Trends in Pathology and Medicine. Mod. Pathol. 2025, 38, 100705.

- Faiyazuddin, M.; Rahman, S.J.Q.; Anand, G.; Siddiqui, R.K.; Mehta, R.; Khatib, M.N.; Gaidhane, S.; Zahiruddin, Q.S.; Hussain, A.; Sah, R. The Impact of Artificial Intelligence on Healthcare: A Comprehensive Review of Advancements in Diagnostics, Treatment, and Operational Efficiency. Health Sci. Rep. 2025, 8, e70312.

- Qadri, S.F.; Rong, C.; Ahmad, M.; Li, J.; Qadri, S.; Zareen, S.S.; Zhuang, Z.; Khan, S.; Lin, H. Chan–Vese aided fuzzy C-means approach for whole breast and fibroglandular tissue segmentation: Preliminary application to real-world breast MRI. Med. Phys. 2025, 52, 2950–2960.

- Lee, W.; Park, H.J.; Lee, H.-J.; Song, K.B.; Hwang, D.W.; Lee, J.H.; Lim, K.; Ko, Y.; Kim, H.J.; Kim, K.W.; et al. Deep learning-based prediction of post-pancreaticoduodenectomy pancreatic fistula. Sci. Rep. 2024, 14, 5089.

- Verma, A.; Balian, J.; Hadaya, J.; Premji, A.; Shimizu, T.; Donahue, T.; Benharash, P. Machine Learning-based Prediction of Postoperative Pancreatic Fistula Following Pancreaticoduodenectomy. Ann. Surg. 2024, 280, 325–331.

- Grover, S.; Gupta, S. Automated diagnosis and classification of liver cancers using deep learning techniques: A systematic review. Discov. Appl. Sci. 2024, 6, 508.

- Rawlani, P.; Ghosh, N.K.; Kumar, A. Role of artificial intelligence in the characterization of indeterminate pancreatic head mass and its usefulness in preoperative diagnosis. Artif. Intell. Gastroenterol. 2023, 4, 48–63.

- Cremades Pérez, M.; Espin Álvarez, F.; Pardo Aranda, F.; Navinés López, J.; Vidal Piñeiro, L.; Zarate Pinedo, A.; Piquera Hinojo, A.M.; Sentí Farrarons, S.; Cugat Andorra, E. Augmented reality in hepatobiliary-pancreatic surgery: A technology at your fingertips. Cir. Esp. 2023, 101, 312–318.

- Roman, J.; Sengul, I.; Němec, M.; Sengul, D.; Penhaker, M.; Strakoš, P.; Vávra, P.; Hrubovčák, J.; Pelikán, A. Augmented and mixed reality in liver surgery: A comprehensive narrative review of novel clinical implications on cohort studies. Rev. Assoc. Méd. Bras. 2025, 71, e20250315.

- Ntourakis, D.; Memeo, R.; Soler, L.; Marescaux, J.; Mutter, D.; Pessaux, P. Augmented Reality Guidance for the Resection of Missing Colorectal Liver Metastases: An Initial Experience. World J. Surg. 2016, 40, 419–426.

- Hua, S.; Gao, J.; Wang, Z.; Yeerkenbieke, P.; Li, J.; Wang, J.; He, G.; Jiang, J.; Lu, Y.; Yu, Q.; et al. Automatic Bleeding Detection in Laparoscopic Surgery Based on a Faster Region-Based Convolutional Neural Network. Ann. Transl. Med. 2022, 10, 546.

- Calomino, N.; Carbone, L.; Kelmendi, E.; Piccioni, S.A.; Poto, G.E.; Bagnacci, G.; Resca, L.; Guarracino, A.; Tripodi, S.; Barbato, B.; et al. Western Experience of Hepatolithiasis: Clinical Insights from a Case Series in a Tertiary Center. Medicina 2025, 61, 860.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Wolff, R.F.; Moons, K.G.M.; Riley, R.D.; Whiting, P.F.; Westwood, M.; Collins, G.S.; Reitsma, J.B.; Kleijnen, J.; Mallett, S.; PROBAST Group. PROBAST: A Tool to Assess the Risk of Bias and Applicability of Prediction Model Studies. Ann. Intern. Med. 2019, 170, 51–58.

- Whiting, P.F.; Rutjes, A.W.S.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.G.; Sterne, J.A.C.; Bossuyt, P.M.M.; QUADAS-2 Group. QUADAS-2: A Revised Tool for the Quality Assessment of Diagnostic Accuracy Studies. Ann. Intern. Med. 2011, 155, 529–536.

- Tong, T.; Gu, J.; Xu, D.; Song, L.; Zhao, Q.; Cheng, F.; Yuan, Z.; Tian, S.; Yang, X.; Tian, J.; et al. Deep learning radiomics based on contrast-enhanced ultrasound images for assisted diagnosis of pancreatic ductal adenocarcinoma and chronic pancreatitis. BMC Med. 2022, 20, 74.

- Kawamura, M.; Endo, Y.; Fujinaga, A.; Orimoto, H.; Amano, S.; Kawasaki, T.; Kawano, Y.; Masuda, T.; Hirashita, T.; Kimura, M.; et al. Development of an Artificial Intelligence System for Real-Time Intraoperative Assessment of the Critical View of Safety in Laparoscopic Cholecystectomy. Surg. Endosc. 2023, 37, 8755–8763.

- Müller, P.C.; Erdem, S.; Müller, B.P.; Künzli, B.; Gutschow, C.A.; Hackert, T.; Diener, M.K. Artificial intelligence and image guidance in minimally invasive pancreatic surgery: Current status and future challenges. Artif. Intell. Surg. 2025, 5, 170–181.

- Wang, X.; Yang, J.; Zhou, B.; Tang, L.; Liang, Y. Integrating mixed reality, augmented reality, and artificial intelligence in complex liver surgeries: Enhancing precision, safety, and outcomes. iLiver 2025, 4, 100167.

- Giannone, F.; Felli, E.; Cherkaoui, Z.; Mascagni, P.; Pessaux, P. Augmented Reality and Image-Guided Robotic Liver Surgery. Cancers 2021, 13, 6268.

- Rus, G.; Andras, I.; Vaida, C.; Crisan, N.; Gherman, B.; Radu, C.; Tucan, P.; Iakab, S.; Al Hajjar, N.; Pisla, D. Artificial Intelligence-Based Hazard Detection in Robotic-Assisted Single-Incision Oncologic Surgery. Cancers 2023, 15, 3387.

- Pomohaci, M.D.; Grasu, M.C.; Băicoianu-Nițescu, A.; Enache, R.M.; Lupescu, I.G. Systematic Review: AI Applications in Liver Imaging with a Focus on Segmentation and Detection. Life 2025, 15, 258.

- Cheng, H.; Xu, H.; Peng, B.; Huang, X.; Hu, Y.; Zheng, C.; Zhang, Z. Illuminating the Future of Precision Cancer Surgery with Fluorescence Imaging and Artificial Intelligence Convergence. npj Precis. Oncol. 2024, 8, 196.

- Wen, L.; Weng, S.; Yan, C.; Ye, R.; Zhu, Y.; Zhou, L.; Gao, L.; Li, Y. A Radiomics Nomogram for Preoperative Prediction of Early Recurrence of Small Hepatocellular Carcinoma After Surgical Resection or Radiofrequency Ablation. Front. Oncol. 2021, 11, 657039.

- Bereska, J.I.; Janssen, B.V.; Nio, C.Y.; Kop, M.P.M.; Kazemier, G.; Busch, O.R.; Struik, F.; Marquering, H.A.; Stoker, J.; Besselink, M.G.; et al. Artificial intelligence for assessment of vascular involvement and tumor resectability on CT in patients with pancreatic cancer. Eur. Radiol. Exp. 2024, 8, 18.

- Wang, D.; Azadvar, S.; Heiselman, J.; Jiang, X.; Miga, M.I.; Wang, L. LIBR+: Improving intraoperative liver registration by learning the residual of biomechanics-based deformable registration. arXiv 2024, arXiv:2403.06901.

- Sethia, K.; Strakos, P.; Jaros, M.; Kubicek, J.; Roman, J.; Penhaker, M.; Riha, L. Advances in liver, liver lesion, hepatic vasculature, and biliary segmentation: A comprehensive review of traditional and deep learning approaches. Artif. Intell. Rev. 2025, 58, 299.

- Moglia, A.; Cavicchioli, M.; Mainardi, L.; Cerveri, P. Deep Learning for Pancreas Segmentation on Computed Tomography: A Systematic Review. Artif. Intell. Rev. 2025, 58, 220.

- Takamoto, T.; Ban, D.; Nara, S.; Mizui, T.; Nagashima, D.; Esaki, M.; Shimada, K. Automated three-dimensional liver reconstruction with artificial intelligence for virtual hepatectomy. J. Gastrointest. Surg. 2022, 26, 2119–2127.

- Kazami, Y.; Kaneko, J.; Keshwani, D.; Kitamura, Y.; Takahashi, R.; Mihara, Y.; Ichida, A.; Kawaguchi, Y.; Akamatsu, N.; Hasegawa, K. Two-step Artificial Intelligence Algorithm for Liver Segmentation Automates Anatomic Virtual Hepatectomy. J. Hepato-Biliary-Pancreat. Sci. 2023, 30, 1205–1217.

- Li, L.; Cheng, S.; Li, J.; Yang, J.; Wang, H.; Dong, B.; Yin, X.; Shi, H.; Gao, S.; Gu, F.; et al. Randomized Comparison of AI-Enhanced 3D Printing and Traditional Simulations in Hepatobiliary Surgery. npj Digit. Med. 2025, 8, 293.

- Javaheri, H.; Ghamarnejad, O.; Bade, R.; Lukowicz, P.; Karolus, J.; Stavrou, G.A. Beyond the visible: Preliminary evaluation of the first wearable augmented reality assistance system for pancreatic surgery. Int. J. Comput. Assist. Radiol. Surg. 2024, 19, 931–939.

- Stott, M.; Kausar, A. Can 3D visualisation and navigation techniques improve pancreatic surgery? A systematic review. Artif. Intell. Surg. 2023, 3, 207–216.

- Ryu, Y.; Shin, S.H.; Park, D.J.; Kim, N.; Heo, J.S.; Choi, D.W.; Han, I.W. Validation of original and alternative fistula risk scores in postoperative pancreatic fistula. J. Hepato-Biliary-Pancreat. Sci. 2019, 26, 354–359.

- Wang, J.; Zheng, T.; Liao, Y.; Geng, S.; Li, J.; Zhang, Z.; Shang, D.; Liu, C.; Yu, P.; Huang, Y.; et al. Machine Learning Prediction Model for Post-Hepatectomy Liver Failure in Hepatocellular Carcinoma: A Multicenter Study. Front. Oncol. 2022, 12, 986867.

- Altaf, A.; Munir, M.M.; Khan, M.M.M.; Rashid, Z.; Khalil, M.; Guglielmi, A.; Ratti, F.; Aldrighetti, L.; Bauer, T.W.; Marques, H.P.; et al. Machine Learning Based Prediction Model for Bile Leak Following Hepatectomy for Liver Cancer. HPB 2025, 27, 489–501.

- Vaccaro, M.; Almaatouq, A.; Malone, T. When combinations of humans and AI are useful: A systematic review and meta-analysis. Nat. Hum. Behav. 2024, 8, 2293–2303.

- Brennan, M.; Puri, S.; Ozrazgat-Baslanti, T.; Bhat, R.R.; Feng, Z.; Momcilovic, P.; Li, X.; Wang, D.; Zhe Wang, D.; Bihorac, A.; et al. Comparing Clinical Judgment with MySurgeryRisk Algorithm for Preoperative Risk Assessment: A Pilot Usability Study. Surgery 2019, 165, 1035–1045.

- Wang, K.-D.; Guan, M.-J.; Bao, Z.-Y.; Shi, Z.-J.; Tong, H.-H.; Xiao, Z.-Q.; Liang, L.; Liu, J.-W.; Shen, G.-L. Radiomics analysis based on dynamic contrast-enhanced MRI for predicting early recurrence after hepatectomy in hepatocellular carcinoma patients. Sci. Rep. 2025, 15, 22240.

- Morales-Galicia, A.E.; Rincón-Sánchez, M.N.; Ramírez-Mejía, M.M.; Méndez-Sánchez, N. Outcome prediction for cholangiocarcinoma prognosis: Embracing the machine learning era. World J. Gastroenterol. 2025, 31, 106808.

- Kenig, N.; Monton Echeverria, J.; Muntaner Vives, A. Artificial intelligence in surgery: A systematic review of use and validation. J. Clin. Med. 2024, 13, 7108.

- Oh, M.Y.; Yoon, K.C.; Hyeon, S.; Jang, T.; Choi, Y.; Kim, J.; Kong, H.-J.; Chai, Y.J. Navigating the Future of 3D Laparoscopic Liver Surgeries: Visualization of Internal Anatomy on Laparoscopic Images with Augmented Reality. Surg. Laparosc. Endosc. Percutaneous Tech. 2024, 34, 459–465.

- Ramalhinho, J.; Yoo, S.; Dowrick, T.; Koo, B.; Somasundaram, M.; Gurusamy, K.; Hawkes, D.J.; Davidson, B.; Blandford, A.; Clarkson, M.J. The value of augmented reality in surgery—A usability study on laparoscopic liver surgery. Med. Image Anal. 2023, 90, 102943.

- Han, Z.; Dou, Q. A Review on Organ Deformation Modeling Approaches for Reliable Surgical Navigation Using Augmented Reality. Comput. Assist. Surg. 2024, 29, 2357164.

- Han, Z.; Zhou, J.; Pei, J.; Qin, J.; Fan, Y.; Dou, Q. Towards Reliable AR-Guided Surgical Navigation: Interactive Deformation Modeling with Data-Driven Biomechanics and Prompts. IEEE Trans. Med. Imaging 2025, in press.

- Ribeiro, M.; Espinel, Y.; Rabbani, N.; Pereira, B.; Bartoli, A.; Buc, E. Augmented reality guided laparoscopic liver resection: A phantom study with intraparenchymal tumors. J. Surg. Res. 2024, 296, 612–620.

- Wierzbicki, R.; Pawłowicz, M.; Job, J.; Balawender, R.; Kostarczyk, W.; Stanuch, M.; Janc, K.; Skalski, A. 3D mixed-reality visualization of medical imaging data as a supporting tool for innovative, minimally invasive surgery for gastrointestinal tumors and systemic treatment as a new path in personalized treatment of advanced cancer diseases. J. Cancer Res. Clin. Oncol. 2022, 148, 237–243.

- Wu, X.; Wang, D.; Xiang, N.; Pan, M.; Jia, F.; Yang, J.; Fang, C. Augmented Reality-Assisted Navigation System Contributes to Better Intraoperative and Short-Time Outcomes of Laparoscopic Pancreaticoduodenectomy: A Retrospective Cohort Study. Int. J. Surg. 2023, 109, 2598–2607.

- Cheng, K.; You, J.; Wu, S.; Chen, Z.; Zhou, Z.; Guan, J.; Peng, B.; Wang, X. Artificial Intelligence-Based Automated Laparoscopic Cholecystectomy Surgical Phase Recognition and Analysis. Surg. Endosc. 2022, 36, 3160–3168.

- Tokuyasu, T.; Iwashita, Y.; Matsunobu, Y.; Kamiyama, T.; Ishikake, M.; Sakaguchi, S.; Ebe, K.; Tada, K.; Endo, Y.; Etoh, T.; et al. Development of an Artificial Intelligence System Using Deep Learning to Indicate Anatomical Landmarks During Laparoscopic Cholecystectomy. Surg. Endosc. 2021, 35, 1651–1658.

- You, J.; Cai, H.; Wang, Y.; Bian, A.; Cheng, K.; Meng, L.; Wang, X.; Gao, P.; Chen, S.; Cai, Y.; et al. Artificial intelligence automated surgical phases recognition in intraoperative videos of laparoscopic pancreatoduodenectomy. Surg. Endosc. 2024, 38, 4894–4905.

- Laplante, S.; Namazi, B.; Kiani, P.; Hashimoto, D.A.; Alseidi, A.; Pasten, M.; Brunt, L.M.; Gill, S.; Davis, B.; Bloom, M.; et al. Validation of an Artificial Intelligence Platform for the Guidance of Safe Laparoscopic Cholecystectomy. Surg. Endosc. 2023, 37, 2260–2268.

- Kehagias, D.; Lampropoulos, C.; Bellou, A.; Kehagias, I. Detection of anatomic landmarks during laparoscopic cholecystectomy with the use of artificial intelligence—A systematic review of the literature. Updates Surg. 2025, 77, 1–17.

- Woo, Y.; Choi, G.H.; Min, B.S.; Hyung, W.J. Novel application of simultaneous multi-image display during complex robotic abdominal procedures. BMC Surg. 2014, 14, 13.

- Hashemi, N.; Mose, M.; Østergaard, L.R.; Bjerrum, F.; Hashemi, M.; Svendsen, M.B.S.; Friis, M.L.; Tolsgaard, M.G.; Rasmussen, S. Video-based robotic surgical action recognition and skills assessment on porcine models using deep learning. Surg. Endosc. 2025, 39, 1709–1719.

- Saeidi, H.; Opfermann, J.D.; Kam, M.; Leonard, S.; Hsieh, M.H.; Kang, J.U.; Krieger, A. Autonomous robotic laparoscopic surgery for intestinal anastomosis. Sci. Robot. 2022, 7, eabj2908.

- Gruijthuijsen, C.; Garcia-Peraza-Herrera, L.C.; Borghesan, G.; Reynaerts, D.; Deprest, J.; Ourselin, S.; Vander Poorten, E. Robotic endoscope control via autonomous instrument tracking. Front. Robot. AI 2022, 9, 832208.

- Golany, T.; Aides, A.; Freedman, D.; Rabani, N.; Liu, Y.; Rivlin, E.; Corrado, G.S.; Matias, Y.; Khoury, W.; Kashtan, H.; et al. Artificial intelligence for phase recognition in complex laparoscopic cholecystectomy. Surg. Endosc. 2022, 36, 9215–9223.

- Yang, H.Y.; Hong, S.S.; Yoon, J.; Park, B.; Yoon, Y.; Han, D.H.; Choi, G.H.; Choi, M.-K.; Kim, S.H. Deep Learning-Based Surgical Phase Recognition in Laparoscopic Cholecystectomy. Ann. Hepato-Biliary-Pancreat. Surg. 2024, 28, 466–473.

- Mascagni, P.; Alapatt, D.; Garcia, A.; Okamoto, N.; Vardazaryan, A.; Costamagna, G.; Dallemagne, B.; Padoy, N. Surgical Data Science for Safe Cholecystectomy: A Protocol for Segmentation of Hepatocystic Anatomy and Assessment of the Critical View of Safety. arXiv 2021, arXiv:2106.10916.

- Pei, J.; Zhou, Z.; Guo, D.; Li, Z.; Qin, J.; Du, B.; Heng, P.-A. Synergistic Bleeding Region and Point Detection in Laparoscopic Surgical Videos. arXiv 2025, arXiv:2503.22174.

- Kamtam, D.N.; Shrager, J.B.; Malla, S.D.; Lin, N.; Cardona, J.J.; Kim, J.J.; Hu, C. Deep learning approaches to surgical video segmentation and object detection: A scoping review. arXiv 2025, arXiv:2502.16459.

- Kanani, F.; Zindel, N.; Mowbray, N.; Nesher, E. Clinical outcomes, learning effectiveness, and patient-safety implications of AI-assisted HPB surgery for trainees: A systematic review and multiple meta-analyses. Artif. Intell. Surg. 2025, 5, 387–417. [Google Scholar] [CrossRef]

- Romero, F.P.; Diler, A.; Bisson-Grégoire, G.; Turcotte, S.; Lapointe, R.; Vandenbroucke-Menu, F.; Tang, A.; Kadoury, S. End-to-End Discriminative Deep Network for Liver Lesion Classification. arXiv 2019, arXiv:1901.09483. [Google Scholar]

- Huo, Y.; Cai, J.; Cheng, C.-T.; Raju, A.; Yan, K.; Landman, B.A.; Xiao, J.; Lu, L.; Liao, C.-H.; Harrison, A.P. Harvesting, Detecting, and Characterizing Liver Lesions from Large-scale Multi-phase CT Data via Deep Dynamic Texture Learning. arXiv 2020, arXiv:2006.15691. [Google Scholar]

- Dumitrescu, E.A.; Ungureanu, B.S.; Cazacu, I.M.; Florescu, L.M.; Streba, L.; Croitoru, V.M.; Sur, D.; Croitoru, A.; Turcu-Stiolica, A.; Lungulescu, C.V. Diagnostic Value of Artificial Intelligence-Assisted Endoscopic Ultrasound for Pancreatic Cancer: A Systematic Review and Meta-Analysis. Diagnostics 2022, 12, 309. [Google Scholar] [CrossRef] [PubMed]

- Wang, Q.; Wang, Z.; Liu, F.; Wang, Z.; Ni, Q.; Chang, H.; Li, B.; Zhang, Y.; Chen, X.; Sun, J.; et al. Machine Learning-Based Prediction of Postoperative Pancreatic Fistula after Laparoscopic Pancreaticoduodenectomy. BMC Surg. 2025, 25, 191. [Google Scholar] [CrossRef] [PubMed]

- Wang, P.; Wang, S.; Luo, P. Evaluation of the Effectiveness of Preoperative 3D Reconstruction Combined with Intraoperative Augmented Reality Fluorescence Guidance System in Laparoscopic Liver Surgery: A Retrospective Cohort Study. BMC Surg. 2025, 25, 288. [Google Scholar] [CrossRef]

- Wu, S.; Tang, M.; Liu, J.; Qin, D.; Wang, Y.; Zhai, S.; Bi, E.; Li, Y.; Wang, C.; Xiong, Y.; et al. Impact of an AI-based Laparoscopic Cholecystectomy Coaching Program on Surgical Performance: A Randomized Controlled Trial. Int. J. Surg. 2024, 110, 7816–7823. [Google Scholar] [CrossRef]

- Li, Z.; Li, F.; Fu, Q.; Wang, X.; Liu, H.; Zhao, Y.; Ren, W. Large language models and medical education: A paradigm shift in educator roles. Smart Learn. Environ. 2024, 11, 26. [Google Scholar] [CrossRef]

- U.S. Food and Drug Administration. Artificial Intelligence and Machine Learning Software as a Medical Device (SaMD) Action Plan—Total Product Lifecycle (TPLC) Approach, Good Machine Learning Practice (GMLP), and Predetermined Change Control Plan (PCCP) Frameworks; U.S. Food and Drug Administration: Silver Spring, MD, USA, 2025. [Google Scholar]

- U.S. Food and Drug Administration. Artificial Intelligence-Enabled Device Software Functions: Lifecycle Management and Marketing Submission Recommendations; Draft Guidance Released 6 January 2025; U.S. Food and Drug Administration: Silver Spring, MD, USA, 2025. [Google Scholar]

- Lekadir, K.; Frangi, A.F.; Porras, A.R.; Glocker, B.; Cintas, C.; Langlotz, C.P.; Weicken, E.; Asselbergs, F.W.; Prior, F.; Collins, G.S.; et al. FUTURE-AI: International Consensus Guideline for Trustworthy and Deployable Artificial Intelligence in Healthcare. BMJ 2025, 388, e081554. [Google Scholar] [CrossRef]

- Arjomandi Rad, A.; Vardanyan, R.; Athanasiou, T.; Maessen, J.; Sardari Nia, P. The ethical considerations of integrating artificial intelligence into surgery: A review. Interdiscip. Cardiovasc. Thorac. Surg. 2025, 40, ivae192. [Google Scholar] [CrossRef]

- Gómez Bergin, A.D.; Craven, M.P. Virtual, augmented, mixed, and extended reality interventions in healthcare: A systematic review of health economic evaluations and cost-effectiveness. BMC Digit. Health 2023, 1, 53. [Google Scholar] [CrossRef]

- Ye, Z.; Zhou, R.; Deng, Z.; Wang, D.; Zhu, Y.; Jin, X.; Zhang, Q.; Liu, H.; Li, L.; Xu, Y.; et al. A Comprehensive Video Dataset for Surgical Laparoscopic Action Analysis. Sci. Data 2025, 12, 862. [Google Scholar] [CrossRef]

- Wei, J.; Xiao, Z.; Sun, D.; Gong, L.; Yang, Z.; Liu, Z.; Wu, J.; Zhou, J.; Chen, Y.; Li, Q.; et al. SurgBench: A Unified Large-Scale Benchmark for Surgical Video Analysis. arXiv 2025, arXiv:2506.07603. [Google Scholar]

- Chen, G.; Sha, Y.; Wang, Z.; Wang, K.; Chen, L. The Role of Large Language Models (LLMs) in Hepato-Pancreato-Biliary Surgery: Opportunities and Challenges. Cureus 2025, 17, e85979. [Google Scholar] [CrossRef] [PubMed]

- Banerjee, S.; Wang, D.S.; Kim, H.J.; Sirlin, C.B.; Chan, M.G.; Korn, R.L.; Rutman, A.M.; Siripongsakun, S.; Lu, D.; Imanbayev, G.; et al. A Computed Tomography Radiogenomic Biomarker Predicts Microvascular Invasion and Clinical Outcomes in Hepatocellular Carcinoma. Hepatology 2015, 62, 792–800. [Google Scholar] [CrossRef]

- Hashimoto, D.A.; Ward, T.M.; Meireles, O.R.; Rosman, G.; Alseidi, A.; Madani, A.; Pugh, C.M.; Jopling, J.; Trehan, A.; et al. The Role of Artificial Intelligence in Surgery: A Review of Current and Future Applications. JAMA Surg. 2022, 157, 368–376.

| Study (Author, Year) | AI Method or Tool | Target Organ/Disease Focus | Clinical Application | Performance Metrics | Study Type |
|---|---|---|---|---|---|
| Bektas et al., 2024 [4] | Radiomics + CNN on ultrasound imaging | Liver (HCC tumors) | Imaging diagnosis (tumor classification; recurrence prediction) | AUC ~0.81–0.85 for early HCC recurrence (outperformed clinical risk factors); high accuracy distinguishing HCC vs. metastases | Retrospective cohort study (imaging analysis) |
| Tong et al., 2022 [22] | Deep CNN (ResNet-50) on EUS images | Pancreas (PDAC vs. chronic pancreatitis) | Imaging diagnosis (tumor differentiation) | AUC ≈ 0.95 for PDAC detection (vs. pancreatitis); high diagnostic accuracy as decision support in borderline cases | Retrospective diagnostic study (multi-center image dataset) |
| Kawamura et al., 2023 [23] | CNN-based video analysis for critical view of safety (CVS) identification | Biliary (CVS during cholecystectomy) | Intraoperative safety guidance (anatomic landmark confirmation) | Real-time CVS recognition with ~83% overall accuracy; could alert surgeons to an incomplete CVS, aiming to prevent bile duct injury | Retrospective observational study (analysis of recorded LC videos) |
| Müller et al., 2025 [24] | Ensemble ML algorithms on clinical data | Pancreas (postoperative fistula risk) | Outcome risk prediction (complication risk stratification) | AUC ~0.80 for clinically relevant POPF (vs. <0.60 with the Fistula Risk Score); ML model significantly improved risk prediction | Retrospective cohort study (model development & validation) |
| Wang et al., 2025 [25] | Mixed reality (HoloLens) with 3D liver model overlay | Liver (tumor cases) | Surgical planning (preoperative simulation & education) | Qualitative improvements in anatomical understanding and surgical strategy planning (holographic tumor visualization); no quantitative metrics reported | Prospective case series (pilot implementation of MR planning) |
| Giannone et al., 2021 [26] | Augmented reality overlay in robotic surgery | Liver (robotic hepatectomy for tumors) | Intraoperative guidance (real-time image guidance) | Feasible AR overlay of tumor and vasculature during surgery; improved lesion localization and transection planning (qualitative), though registration accuracy was a noted limitation | Prospective pilot study (feasibility in 3 cases) |
| Crisan et al., 2023 [27] | YOLOv5 deep learning vision + AR display | Intraoperative bleeding (multiple HPB procedures) | Intraoperative hazard detection (real-time bleed alert) | Detected surgical bleeding in real time with high accuracy (distinguished blood vs. irrigation fluid); demonstrated the concept of automated hazard alerts via an AR headset | Experimental feasibility study (lab validation on recorded surgery videos) |
| Pomohaci et al., 2025 [28] | N/A (systematic review of AI in imaging) | Liver imaging (various pathologies) | Imaging diagnosis (lesion detection & classification)—review | N/A (reviewed 329 studies: most focused on lesion classification; CT was the most common modality; many studies lacked external validation) | Systematic review (2018–2024 literature) |
| Cheng et al., 2024 [29] | Concept: AI-enhanced fluorescence imaging | HPB cancers (tumor margins) | Intraoperative guidance (augmented fluorescence surgery) | N/A (conceptual proposal: AI can enhance fluorescence image quality and differentiate tumor vs. normal tissue signals, potentially improving margin detection and resection precision) | Perspective article (conceptual review of emerging techniques) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Efstathiou, A.; Charitaki, E.; Triantopoulou, C.; Delis, S. Artificial Intelligence and Digital Tools Across the Hepato-Pancreato-Biliary Surgical Pathway: A Systematic Review. J. Clin. Med. 2025, 14, 6501. https://doi.org/10.3390/jcm14186501