Artificial Intelligence in Alzheimer’s Disease Diagnosis and Prognosis Using PET-MRI: A Narrative Review of High-Impact Literature Post-Tauvid Approval

Abstract

1. Introduction

2. Materials and Methods

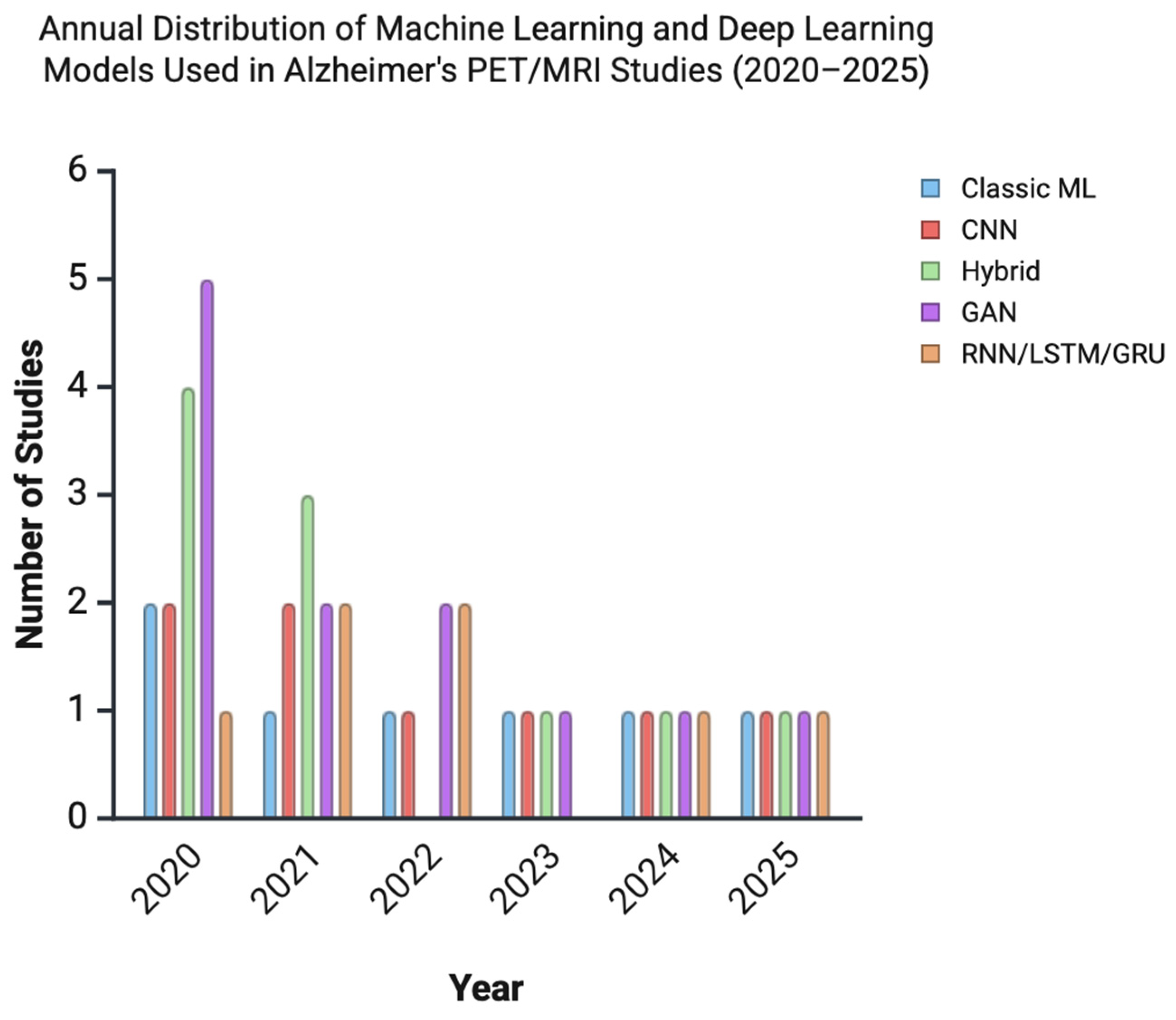

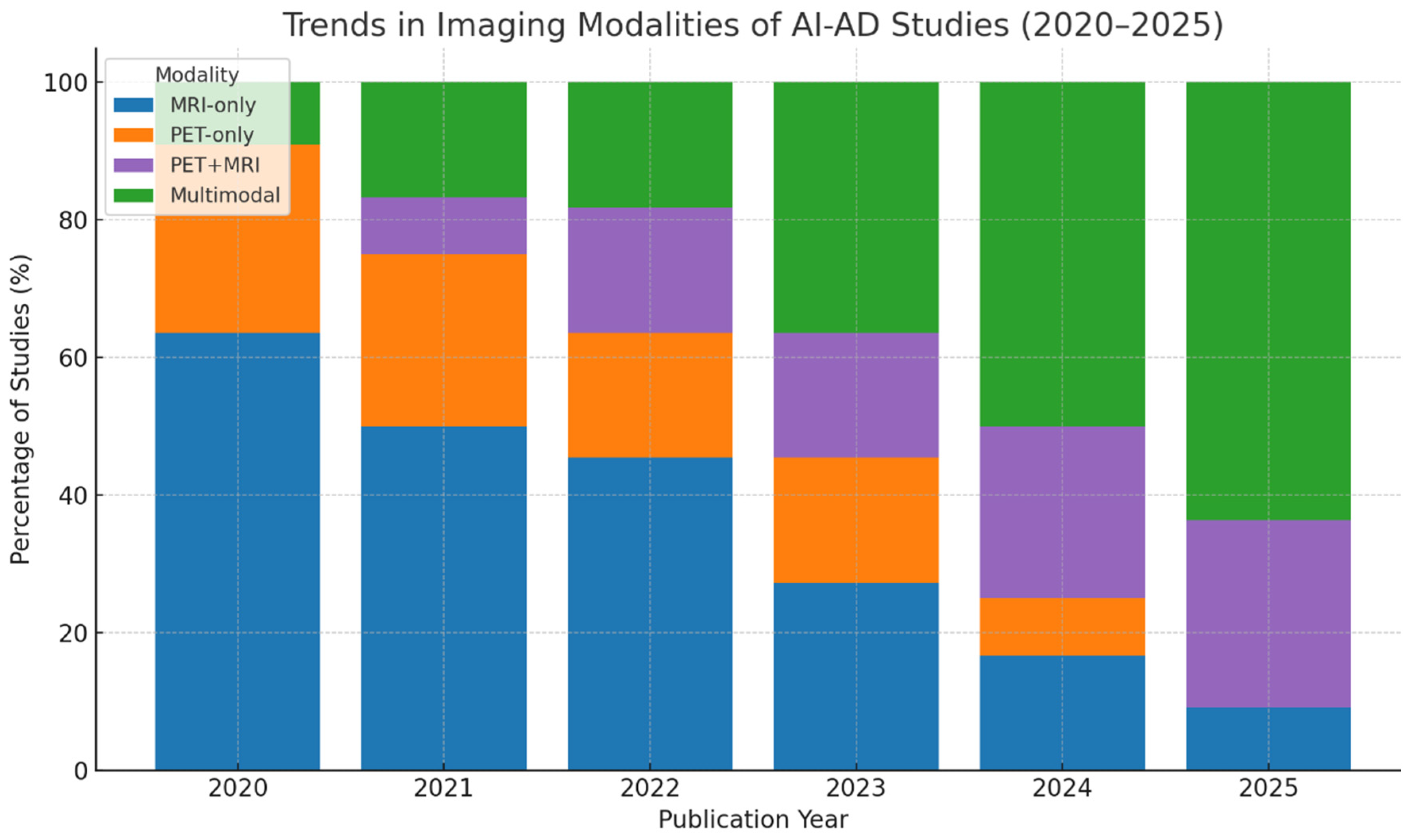

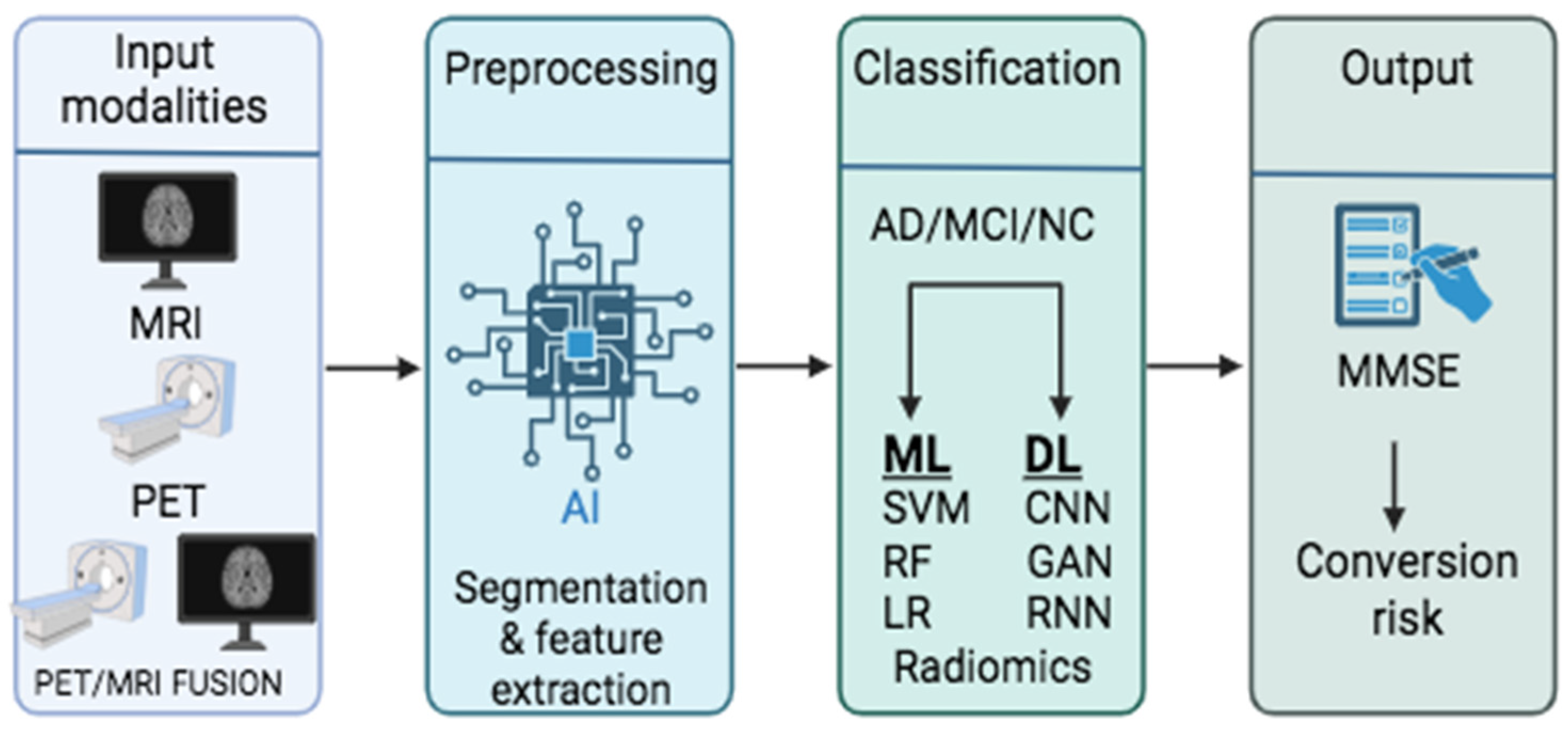

3. Results

- Image preprocessing and segmentation,

- Diagnosis and classification,

- Prediction and prognosis,

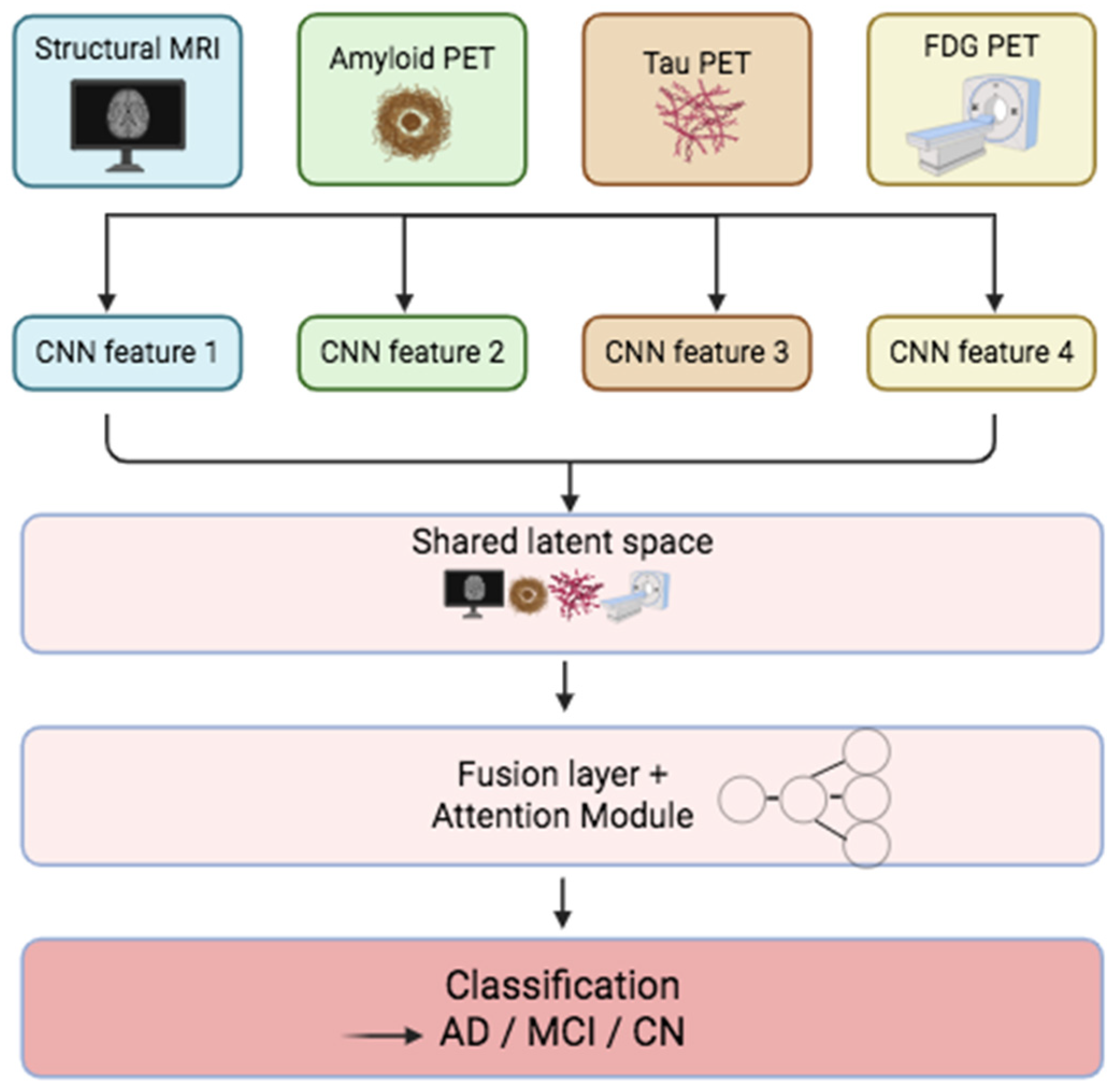

- Multimodal data fusion,

- Emerging trends in AI modeling.

4. Discussion

4.1. Image Preprocessing and Segmentation

4.2. Diagnosis and Classification

4.2.1. Machine Learning in AD Diagnosis and Classification

4.2.2. Deep Learning in AD Diagnosis and Classification

4.3. Prediction/Prognosis

4.4. Multimodal Fusion and Clinical Integration

4.5. Emerging Trends and Future Directions

4.5.1. Clinical Importance

4.5.2. Limitations

4.5.3. Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Correction Statement

Abbreviations

| Abbreviation | Definition |
|---|---|
| AD | Alzheimer’s Disease |
| AI | Artificial Intelligence |
| AUC | Area Under the Curve |
| ANN | Artificial Neural Network |
| CNN | Convolutional Neural Network |
| CSF | Cerebrospinal Fluid |
| DL | Deep Learning |
| DT | Decision Tree |
| FDA | Food and Drug Administration |
| FDG | Fluorodeoxyglucose |
| GAN | Generative Adversarial Network |
| LLM | Large Language Model |
| LSTM | Long Short-Term Memory |
| ML | Machine Learning |
| MCI | Mild Cognitive Impairment |
| MRI | Magnetic Resonance Imaging |
| MMSE | Mini-Mental State Examination |
| PET | Positron Emission Tomography |
| RF | Random Forest |
| RNN | Recurrent Neural Network |
| ROI | Region of Interest |
| SVM | Support Vector Machine |
| SUVR | Standardized Uptake Value Ratio |
| XAI | Explainable Artificial Intelligence |

Appendix A

Appendix A.1. PubMed Search Strategy

| Set # | Concept | Syntax | Results |
|---|---|---|---|
| 1 | AI | “artificial intelligence” [MeSH Terms] OR “Neural Networks, Computer” [Mesh] OR “Image Processing, Computer-Assisted” [Mesh] OR “Deep Learning” [mesh] OR “Machine Learning” [mesh] OR “Artificial Intelligence” [tw] OR “Artificial Neural Network” [tw] OR “Convolutional Neural Network” [tw] OR “Deep Learning” [tw] OR “Machine Learning” [tw] | 606,889 |
| 2 | PET/MRI | “Positron-Emission Tomography” [Mesh] OR “Magnetic Resonance Imaging” [Mesh] OR “Positron Emission Tomography” [tw] OR “PET” [tw] OR “Magnetic Resonance Imaging” [tw] OR MRI [tw] | 921,043 |
| 3 | Alzheimer | “Alzheimer Disease” [Mesh] OR Alzheimer [tw] OR Alzheimers [tw] OR Alzheimer’s [tw] OR “Senile Dementia” [tw] OR “Presenile Dementia” [tw] | 227,267 |
| 4 | Diagnosis or detection | “Diagnosis” [Mesh] OR “diagnosis” [Subheading] OR diagnoses [tw] OR diagnose [tw] OR diagnosis [tw] OR detect* [tw] | 13,530,301 |
| 5 | combining | #1 AND #2 AND #3 | 4124 |
| 6 | filters | #4 NOT (“animals” [MeSH Terms] NOT “humans” [MeSH Terms]) NOT (“Case Reports” [Publication Type] OR “Editorial” [Publication Type] OR “Review” [Publication Type]) AND “English” [Language] AND (2020:2024 [pdat]) | 1334 |
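The set logic in the PubMed table (synonyms joined with OR inside each concept, then concepts #1 to #3 joined with AND in set #5) can be sketched in Python. This is a minimal illustration under stated assumptions, not the exact string that was submitted: the term lists are abbreviated from sets #1 to #3, the helper names `or_block` and `and_blocks` are hypothetical, and the trailing filter clause only approximates the language and date limits of set #6 (the exclusion clauses are omitted).

```python
# Hypothetical helpers that compose a PubMed-style Boolean query from concept blocks.
# Synonyms within one concept are OR-ed; separate concepts are AND-ed (cf. set #5).


def or_block(terms: list[str]) -> str:
    """Join the synonym terms of one concept with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def and_blocks(blocks: list[str]) -> str:
    """Combine already-parenthesized concept blocks with AND."""
    return " AND ".join(blocks)


# Abbreviated term lists taken from sets #1-#3 (not the full strategy).
ai_terms = ['"Artificial Intelligence"[tw]', '"Deep Learning"[tw]', '"Machine Learning"[tw]']
imaging_terms = ['"Positron Emission Tomography"[tw]', "PET[tw]", "MRI[tw]"]
alzheimer_terms = ['"Alzheimer Disease"[Mesh]', "Alzheimer[tw]", "Alzheimers[tw]"]

# Equivalent of set #5: #1 AND #2 AND #3.
set5 = and_blocks([or_block(ai_terms), or_block(imaging_terms), or_block(alzheimer_terms)])

# Approximation of part of set #6: English language and a 2020-2024 publication window.
filtered = f'{set5} AND English[Language] AND (2020:2024[pdat])'
print(filtered)
```

Building each concept as its own parenthesized block keeps operator precedence explicit, so adding a synonym to one concept cannot accidentally change how the concepts are intersected.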

Appendix A.2. Embase Search Strategy

| Set # | Concept | Syntax | Results |
|---|---|---|---|
| 1 | AI | ‘artificial intelligence’/exp OR ‘artificial neural network’/exp OR ‘image processing’/exp OR ‘deep learning’/exp OR ‘machine learning’/exp OR ‘Artificial Intelligence’:ti,ab,kw OR ‘Artificial Neural Network’:ti,ab,kw OR ‘Convolutional Neural Network’:ti,ab,kw OR ‘Deep Learning’:ti,ab,kw OR ‘Machine Learning’:ti,ab,kw | 760,426 |
| 2 | PET/MRI | 1,696,145 | 921,043 |
| 3 | Alzheimer | ‘Alzheimer disease’/exp OR Alzheimer:ti,ab,kw OR Alzheimers:ti,ab,kw OR ‘Senile Dementia’:ti,ab,kw OR ‘Presenile Dementia’:ti,ab,kw | 337,471 |
| 4 | Diagnosis or detection | ‘diagnosis’/exp OR diagnoses:ti,ab,kw OR diagnose:ti,ab,kw OR diagnosis:ti,ab,kw OR detect*:ti,ab,kw | 13,097,987 |
| 5 | combining | #1 AND #2 AND #3 | 5670 |
| 6 | filters | #4 NOT (‘case report’/de OR [editorial]/lim OR [review]/lim) AND [2020–2025]/py AND [english]/lim AND [humans]/lim | 2843 |

Appendix A.3. Scopus Search Strategy

| Set # | Concept | Syntax | Results |
|---|---|---|---|
| 1 | AI | TITLE-ABS-KEY (“Artificial Intelligence” OR “Artificial Neural Network” OR “Convolutional Neural Network” OR “Deep Learning” OR “Machine Learning”) | 1,881,748 |
| 2 | PET/MRI | TITLE-ABS-KEY (“Positron Emission Tomography” OR “PET” OR “Magnetic Resonance Imaging” OR MRI) | 1,506,097 |
| 3 | Alzheimer | TITLE-ABS-KEY (alzheimer OR alzheimers OR “Senile Dementia” OR “Presenile Dementia”) | 316,171 |
| 4 | Diagnosis or detection | TITLE-ABS-KEY (diagnoses OR diagnose OR diagnosis OR detect*) | 13,530,301 |
| 5 | combining | #1 AND #2 AND #3 | 3612 |
| 6 | filters | #4 date limit 2020–2025 and English language | 2848 |

References

- Scheltens, P.; De Strooper, B.; Kivipelto, M.; Holstege, H.; Chételat, G.; Teunissen, C.E.; Cummings, J.; van der Flier, W.M. Alzheimer’s disease. Lancet 2021, 397, 1577–1590. [Google Scholar] [CrossRef]

- Monteiro, A.R.; Barbosa, D.J.; Remião, F.; Silva, R. Alzheimer’s disease: Insights and new prospects in disease pathophysiology, biomarkers and disease-modifying drugs. Biochem. Pharmacol. 2023, 211, 115522. [Google Scholar] [CrossRef] [PubMed]

- McDade, E.M. Alzheimer Disease. Contin. Lifelong Learn. Neurol. 2022, 28, 648–675. [Google Scholar] [CrossRef]

- Lacosta, A.M.; Insua, D.; Badi, H.; Pesini, P.; Sarasa, M. Neurofibrillary Tangles of Aβx-40 in Alzheimer’s Disease Brains. J. Alzheimers Dis. 2017, 58, 661–667. [Google Scholar] [CrossRef]

- Ma, C.; Hong, F.; Yang, S. Amyloidosis in Alzheimer’s Disease: Pathogeny, Etiology, and Related Therapeutic Directions. Molecules 2022, 27, 1210. [Google Scholar] [CrossRef]

- Otero-Garcia, M.; Mahajani, S.U.; Wakhloo, D.; Tang, W.; Xue, Y.-Q.; Morabito, S.; Pan, J.; Oberhauser, J.; Madira, A.E.; Shakouri, T.; et al. Molecular signatures underlying neurofibrillary tangle susceptibility in Alzheimer’s disease. Neuron 2022, 110, 2929–2948.e8. [Google Scholar] [CrossRef]

- Braak, H.; Braak, E. Neuropathological staging of Alzheimer-related changes. Acta Neuropathol. 1991, 82, 239–259. [Google Scholar] [CrossRef]

- Bondi, M.W.; Edmonds, E.C.; Salmon, D.P. Alzheimer’s Disease: Past, Present, and Future. J. Int. Neuropsychol. Soc. 2017, 23, 818–831. [Google Scholar] [CrossRef]

- Raji, C.A.; Benzinger, T.L.S. The Value of Neuroimaging in Dementia Diagnosis. Contin. Lifelong Learn. Neurol. 2022, 28, 800–821. [Google Scholar] [CrossRef]

- Del Sole, A.; Malaspina, S.; Magenta Biasina, A. Magnetic resonance imaging and positron emission tomography in the diagnosis of neurodegenerative dementias. Funct. Neurol. 2016, 31, 205–215. [Google Scholar] [CrossRef]

- Masdeu, J.C. Neuroimaging of Diseases Causing Dementia. Neurol. Clin. 2020, 38, 65–94. [Google Scholar] [CrossRef]

- Jie, C.V.M.L.; Treyer, V.; Schibli, R.; Mu, L. Tauvid™: The First FDA-Approved PET Tracer for Imaging Tau Pathology in Alzheimer’s Disease. Pharmaceuticals 2021, 14, 110. [Google Scholar] [CrossRef]

- Jiang, H.; Cao, P.; Xu, M.; Yang, J.; Zaiane, O. Hi-GCN: A hierarchical graph convolution network for graph embedding learning of brain network and brain disorders prediction. Comput. Biol. Med. 2020, 127, 104096. [Google Scholar] [CrossRef] [PubMed]

- Chen, Z.; Mo, X.; Chen, R.; Feng, P.; Li, H. A Reparametrized CNN Model to Distinguish Alzheimer’s Disease Applying Multiple Morphological Metrics and Deep Semantic Features From Structural MRI. Front. Aging Neurosci. 2022, 14, 856391. [Google Scholar] [CrossRef] [PubMed]

- Mirkin, S.; Albensi, B.C. Should artificial intelligence be used in conjunction with Neuroimaging in the diagnosis of Alzheimer’s disease? Front. Aging Neurosci. 2023, 15, 1094233. [Google Scholar] [CrossRef]

- Institut Curie. Hydrosorb® Versus Control (Water Based Spray) in the Management of Radio-Induced Skin Toxicity: Multicentre Controlled Phase III Randomized Trial. 2016. Available online: https://clinicaltrials.gov/ct2/show/NCT02839473 (accessed on 3 November 2022).

- Nenning, K.H.; Langs, G. Machine learning in neuroimaging: From research to clinical practice. Radiologie 2022, 62 (Suppl. 1), S1–S10. [Google Scholar] [CrossRef]

- Lyu, J.; Bartlett, P.F.; Nasrallah, F.A.; Tang, X. Toward hippocampal volume measures on ultra-high field magnetic resonance imaging: A comprehensive comparison study between deep learning and conventional approaches. Front. Neurosci. 2023, 17, 1238646. [Google Scholar] [CrossRef]

- Bazangani, F.; Richard, F.J.P.; Ghattas, B.; Guedj, E. FDG-PET to T1 Weighted MRI Translation with 3D Elicit Generative Adversarial Network (E-GAN). Sensors 2022, 22, 4640. [Google Scholar] [CrossRef]

- Kim, C.M.; Lee, W. Classification of Alzheimer’s Disease Using Ensemble Convolutional Neural Network with LFA Algorithm. IEEE Access 2023, 11, 143004–143015. [Google Scholar] [CrossRef]

- Odusami, M.; Maskeliūnas, R.; Damaševičius, R.; Krilavičius, T. Analysis of Features of Alzheimer’s Disease: Detection of Early Stage from Functional Brain Changes in Magnetic Resonance Images Using a Finetuned ResNet18 Network. Diagnostics 2021, 11, 1071. [Google Scholar] [CrossRef]

- Aqeel, A.; Hassan, A.; Khan, M.A.; Rehman, S.; Tariq, U.; Kadry, S.; Majumdar, A.; Thinnukool, O. A Long Short-Term Memory Biomarker-Based Prediction Framework for Alzheimer’s Disease. Sensors 2022, 22, 1475. [Google Scholar] [CrossRef]

- Khalid, A.; Senan, E.M.; Al-Wagih, K.; Al-Azzam, M.M.A.; Alkhraisha, Z.M. Automatic Analysis of MRI Images for Early Prediction of Alzheimer’s Disease Stages Based on Hybrid Features of CNN and Handcrafted Features. Diagnostics 2023, 13, 1654. [Google Scholar] [CrossRef] [PubMed]

- Kim, S.K.; Duong, Q.A.; Gahm, J.K. Multimodal 3D Deep Learning for Early Diagnosis of Alzheimer’s Disease. IEEE Access 2024, 12, 46278–46289. [Google Scholar] [CrossRef]

- Chiu, S.I.; Fan, L.Y.; Lin, C.H.; Chen, T.-F.; Lim, W.S.; Jang, J.-S.R.; Chiu, M.-J. Machine Learning-Based Classification of Subjective Cognitive Decline, Mild Cognitive Impairment, and Alzheimer’s Dementia Using Neuroimage and Plasma Biomarkers. ACS Chem. Neurosci. 2022, 13, 3263–3270. [Google Scholar] [CrossRef]

- Liu, Y.; Tang, K.; Cai, W.; Chen, A.; Zhou, G.; Li, L.; Liu, R. MPC-STANet: Alzheimer’s Disease Recognition Method Based on Multiple Phantom Convolution and Spatial Transformation Attention Mechanism. Front. Aging Neurosci. 2022, 14, 918462. [Google Scholar] [CrossRef]

- Amoroso, N.; Quarto, S.; La Rocca, M.; Tangaro, S.; Monaco, A.; Bellotti, R. An eXplainability Artificial Intelligence approach to brain connectivity in Alzheimer’s disease. Front. Aging Neurosci. 2023, 15, 1238065. [Google Scholar] [CrossRef]

- Rao, B.S.; Aparna, M. A Review on Alzheimer’s Disease Through Analysis of MRI Images Using Deep Learning Techniques. IEEE Access 2023, 11, 71542–71556. [Google Scholar] [CrossRef]

- Khagi, B.; Kwon, G.R. 3D CNN Design for the Classification of Alzheimer’s Disease Using Brain MRI and PET. IEEE Access 2020, 8, 217830–217847. [Google Scholar] [CrossRef]

- Nobakht, S.; Schaeffer, M.; Forkert, N.D.; Nestor, S.; Black, S.E.; Barber, P.; Alzheimer’s Disease Neuroimaging Initiative. Combined Atlas and Convolutional Neural Network-Based Segmentation of the Hippocampus from MRI According to the ADNI Harmonized Protocol. Sensors 2021, 21, 2427. [Google Scholar] [CrossRef] [PubMed]

- Sun, Z.; Meikle, S.; Calamante, F. CONN-NLM: A Novel CONNectome-Based Non-local Means Filter for PET-MRI Denoising. Front. Neurosci. 2022, 16, 824431. [Google Scholar] [CrossRef]

- Tang, Y.; Du, Q.; Wang, J.; Wu, Z.; Li, Y.; Li, M.; Yang, X.; Zheng, J. CCN-CL: A content-noise complementary network with contrastive learning for low-dose computed tomography denoising. Comput. Biol. Med. 2022, 147, 105759. [Google Scholar] [CrossRef]

- Yamanakkanavar, N.; Lee, B. Using a Patch-Wise M-Net Convolutional Neural Network for Tissue Segmentation in Brain MRI Images. IEEE Access 2020, 8, 120946–120958. [Google Scholar] [CrossRef]

- Yin, T.T.; Cao, M.H.; Yu, J.C.; Shi, T.Y.; Mao, X.H.; Wei, X.Y.; Jia, Z.Z. T1-Weighted Imaging-Based Hippocampal Radiomics in the Diagnosis of Alzheimer’s Disease. Acad. Radiol. 2024, 31, 5183–5192. [Google Scholar] [CrossRef]

- Liu, Q.; Zhang, Y.; Guo, L.; Wang, Z. Spatial-temporal data-augmentation-based functional brain network analysis for brain disorders identification. Front. Neurosci. 2023, 17, 1194190. [Google Scholar] [CrossRef]

- Noh, J.H.; Kim, J.H.; Yang, H.D. Classification of Alzheimer’s Progression Using fMRI Data. Sensors 2023, 23, 6330. [Google Scholar] [CrossRef]

- Brusini, I.; Lindberg, O.; Muehlboeck, J.S.; Smedby, Ö.; Westman, E.; Wang, C. Shape Information Improves the Cross-Cohort Performance of Deep Learning-Based Segmentation of the Hippocampus. Front. Neurosci. 2020, 14, 15. [Google Scholar] [CrossRef] [PubMed]

- Cortez, J.; Torres, C.G.; Parraguez, V.H.; De los Reyes, M.; Peralta, O.A. Bovine adipose tissue-derived mesenchymal stem cells self-assemble with testicular cells and integrates and modifies the structure of a testicular organoids. Theriogenology 2024, 215, 259–271. [Google Scholar] [CrossRef]

- Zhang, Y.; Jiang, X.; Qiao, L.; Liu, M. Modularity-Guided Functional Brain Network Analysis for Early-Stage Dementia Identification. Front. Neurosci. 2021, 15, 720909. [Google Scholar] [CrossRef]

- Jiao, F.; Wang, M.; Sun, X.; Ju, Z.; Lu, J.; Wang, L.; Jiang, J.; Zuo, C. Based on Tau PET Radiomics Analysis for the Classification of Alzheimer’s Disease and Mild Cognitive Impairment. Brain Sci. 2023, 13, 367. [Google Scholar] [CrossRef]

- Kaya, M.; Cetin-Kaya, Y. A Novel Deep Learning Architecture Optimization for Multiclass Classification of Alzheimer’s Disease Level. IEEE Access 2024, 12, 46562–46581. [Google Scholar] [CrossRef]

- Nuvoli, S.; Bianconi, F.; Rondini, M.; Lazzarato, A.; Marongiu, A.; Fravolini, M.L.; Cascianelli, S.; Amici, S.; Filippi, L.; Spanu, A.; et al. Differential Diagnosis of Alzheimer Disease vs. Mild Cognitive Impairment Based on Left Temporal Lateral Lobe Hypomethabolism on 18F-FDG PET/CT and Automated Classifiers. Diagnostics 2022, 12, 2425. [Google Scholar] [CrossRef]

- Akramifard, H.; Balafar, M.; Razavi, S.; Ramli, A.R. Emphasis Learning, Features Repetition in Width Instead of Length to Improve Classification Performance: Case Study-Alzheimer’s Disease Diagnosis. Sensors 2020, 20, 941. [Google Scholar] [CrossRef]

- Wang, L.; Sheng, J.; Zhang, Q.; Zhou, R.; Li, Z.; Xin, Y. Functional Brain Network Measures for Alzheimer’s Disease Classification. IEEE Access 2023, 11, 111832–111845. [Google Scholar] [CrossRef]

- Lama, R.K.; Kwon, G.R. Diagnosis of Alzheimer’s Disease Using Brain Network. Front. Neurosci. 2021, 15, 605115. [Google Scholar] [CrossRef]

- Choi, R.Y.; Coyner, A.S.; Kalpathy-Cramer, J.; Chiang, M.F.; Campbell, J.P. Introduction to Machine Learning, Neural Networks, and Deep Learning. Transl. Vis. Sci. Technol. 2020, 9, 14. [Google Scholar] [CrossRef]

- van Loon, W.; de Vos, F.; Fokkema, M.; Szabo, B.; Koini, M.; Schmidt, R.; de Rooij, M. Analyzing Hierarchical Multi-View MRI Data with StaPLR: An Application to Alzheimer’s Disease Classification. Front. Neurosci. 2022, 16, 830630. [Google Scholar] [CrossRef]

- Khan, Y.F.; Kaushik, B.; Chowdhary, C.L.; Srivastava, G. Ensemble Model for Diagnostic Classification of Alzheimer’s Disease Based on Brain Anatomical Magnetic Resonance Imaging. Diagnostics 2022, 12, 3193. [Google Scholar] [CrossRef]

- Bao, Y.W.; Wang, Z.J.; Shea, Y.F.; Chiu, P.K.-C.; Kwan, J.S.; Chan, F.H.-W.; Mak, H.K.-F. Combined Quantitative amyloid-β PET and Structural MRI Features Improve Alzheimer’s Disease Classification in Random Forest Model—A Multicenter Study. Acad. Radiol. 2024, 31, 5154–5163. [Google Scholar] [CrossRef]

- Song, M.; Jung, H.; Lee, S.; Kim, D.; Ahn, M. Diagnostic classification and biomarker identification of alzheimer’s disease with random forest algorithm. Brain Sci. 2021, 11, 453. [Google Scholar] [CrossRef]

- Keles, M.K.; Kilic, U. Classification of Brain Volumetric Data to Determine Alzheimer’s Disease Using Artificial Bee Colony Algorithm as Feature Selector. IEEE Access 2022, 10, 82989–83001. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef]

- Odusami, M.; Maskeliūnas, R.; Damaševičius, R. An Intelligent System for Early Recognition of Alzheimer’s Disease Using Neuroimaging. Sensors 2022, 22, 740. [Google Scholar] [CrossRef]

- Sharma, S.; Gupta, S.; Gupta, D.; Altameem, A.; Saudagar, A.K.J.; Poonia, R.C.; Nayak, S.R. HTLML: Hybrid AI Based Model for Detection of Alzheimer’s Disease. Diagnostics 2022, 12, 1833. [Google Scholar] [CrossRef]

- Cao, Y.; Kuai, H.; Liang, P.; Pan, J.S.; Yan, J.; Zhong, N. BNLoop-GAN: A multi-loop generative adversarial model on brain network learning to classify Alzheimer’s disease. Front. Neurosci. 2023, 17, 1202382. [Google Scholar] [CrossRef]

- Pan, D.; Zeng, A.; Yang, B.; Lai, G.; Hu, B.; Song, X.; Jiang, T.; Alzheimer’s Disease Neuroimaging Initiative (ADNI). Deep Learning for Brain MRI Confirms Patterned Pathological Progression in Alzheimer’s Disease. Adv. Sci. 2023, 10, e2204717. [Google Scholar] [CrossRef] [PubMed]

- Murugan, S.; Venkatesan, C.; Sumithra, M.G.; Gao, X.-Z.; Elakkiya, B.; Akila, M.; Manoharan, S. DEMNET: A Deep Learning Model for Early Diagnosis of Alzheimer Diseases and Dementia from MR Images. IEEE Access 2021, 9, 90319–90329. [Google Scholar] [CrossRef]

- Mujahid, M.; Rehman, A.; Alam, T.; Alamri, F.S.; Fati, S.M.; Saba, T. An Efficient Ensemble Approach for Alzheimer’s Disease Detection Using an Adaptive Synthetic Technique and Deep Learning. Diagnostics 2023, 13, 2489. [Google Scholar] [CrossRef] [PubMed]

- Khan, R.; Akbar, S.; Mehmood, A.; Shahid, F.; Munir, K.; Ilyas, N.; Asif, M.; Zheng, Z. A transfer learning approach for multiclass classification of Alzheimer’s disease using MRI images. Front. Neurosci. 2023, 16, 1050777. [Google Scholar] [CrossRef]

- Dhillon, A.; Verma, G.K. Convolutional neural network: A review of models, methodologies and applications to object detection. Prog. Artif. Intell. 2020, 9, 85–112. [Google Scholar] [CrossRef]

- Chen, D.; Hu, F.; Nian, G.; Yang, T. Deep Residual Learning for Nonlinear Regression. Entropy 2020, 22, 193. [Google Scholar] [CrossRef]

- Li, C.; Wang, Q.; Liu, X.; Hu, B. An Attention-Based CoT-ResNet with Channel Shuffle Mechanism for Classification of Alzheimer’s Disease Levels. Front. Aging Neurosci. 2022, 14, 930584. [Google Scholar] [CrossRef]

- Pusparani, Y.; Lin, C.Y.; Jan, Y.K.; Lin, F.-Y.; Liau, B.-Y.; Ardhianto, P.; Farady, I.; Alex, J.S.R.; Aparajeeta, J.; Chao, W.-H.; et al. Diagnosis of Alzheimer’s Disease Using Convolutional Neural Network with Select Slices by Landmark on Hippocampus in MRI Images. IEEE Access 2023, 11, 61688–61697. [Google Scholar] [CrossRef]

- Sun, H.; Wang, A.; Wang, W.; Liu, C. An Improved Deep Residual Network Prediction Model for the Early Diagnosis of Alzheimer’s Disease. Sensors 2021, 21, 4182. [Google Scholar] [CrossRef] [PubMed]

- AlSaeed, D.; Omar, S.F. Brain MRI Analysis for Alzheimer’s Disease Diagnosis Using CNN-Based Feature Extraction and Machine Learning. Sensors 2022, 22, 2911. [Google Scholar] [CrossRef]

- Syed Jamalullah, R.; Mary Gladence, L.; Ahmed, M.A.; Lydia, E.L.; Ishak, M.K.; Hadjouni, M.; Mostafa, S.M. Leveraging Brain MRI for Biomedical Alzheimer’s Disease Diagnosis Using Enhanced Manta Ray Foraging Optimization Based Deep Learning. IEEE Access 2023, 11, 81921–81929. [Google Scholar] [CrossRef]

- Carcagnì, P.; Leo, M.; Del Coco, M.; Distante, C.; De Salve, A. Convolution Neural Networks and Self-Attention Learners for Alzheimer Dementia Diagnosis from Brain MRI. Sensors 2023, 23, 1694. [Google Scholar] [CrossRef]

- Shamrat, F.M.J.M.; Akter, S.; Azam, S.; Karim, A.; Ghosh, P.; Tasnim, Z.; Hasib, K.M.; De Boer, F.; Ahmed, K. AlzheimerNet: An Effective Deep Learning Based Proposition for Alzheimer’s Disease Stages Classification From Functional Brain Changes in Magnetic Resonance Images. IEEE Access 2023, 11, 16376–16395. [Google Scholar] [CrossRef]

- Hazarika, R.A.; Abraham, A.; Kandar, D.; Maji, A.K. An Improved LeNet-Deep Neural Network Model for Alzheimer’s Disease Classification Using Brain Magnetic Resonance Images. IEEE Access 2021, 9, 161194–161207. [Google Scholar] [CrossRef]

- Fareed, M.M.S.; Zikria, S.; Ahmed, G.; Din, M.Z.; Mahmood, S.; Aslam, M.; Jillani, S.F.; Moustafa, A. ADD-Net: An Effective Deep Learning Model for Early Detection of Alzheimer Disease in MRI Scans. IEEE Access 2022, 10, 96930–96951. [Google Scholar] [CrossRef]

- Sait, A.R.W.; Nagaraj, R. A Feature-Fusion Technique-Based Alzheimer’s Disease Classification Using Magnetic Resonance Imaging. Diagnostics 2024, 14, 2363. [Google Scholar] [CrossRef]

- Chabib, C.M.; Hadjileontiadis, L.J.; Shehhi, A.A. DeepCurvMRI: Deep Convolutional Curvelet Transform-Based MRI Approach for Early Detection of Alzheimer’s Disease. IEEE Access 2023, 11, 44650–44659. [Google Scholar] [CrossRef]

- Ganokratanaa, T.; Ketcham, M.; Pramkeaw, P. Advancements in Cataract Detection: The Systematic Development of LeNet-Convolutional Neural Network Models. J. Imaging 2023, 9, 197. [Google Scholar] [CrossRef]

- Dey, R.; Salem, F.M. Gate-variants of Gated Recurrent Unit (GRU) neural networks. In Proceedings of the 2017 IEEE 60th International Midwest Symposium on Circuits and Systems (MWSCAS), Boston, MA, USA, 6–9 August 2017; pp. 1597–1600. [Google Scholar] [CrossRef]

- Mahim, S.M.; Ali, M.S.; Hasan, M.O.; Nafi, A.A.N.; Sadat, A.; Al Hasan, S.; Shareef, B.; Ahsan, M.; Islam, K.; Miah, S.; et al. Unlocking the Potential of XAI for Improved Alzheimer’s Disease Detection and Classification Using a ViT-GRU Model. IEEE Access 2024, 12, 8390–8412. [Google Scholar] [CrossRef]

- Zhao, Y.; Guo, Q.; Zhang, Y.; Zheng, J.; Yang, Y.; Du, X.; Feng, H.; Zhang, S. Application of Deep Learning for Prediction of Alzheimer’s Disease in PET/MR Imaging. Bioengineering 2023, 10, 1120. [Google Scholar] [CrossRef]

- Al-Otaibi, S.; Mujahid, M.; Khan, A.R.; Nobanee, H.; Alyami, J.; Saba, T. Dual Attention Convolutional AutoEncoder for Diagnosis of Alzheimer’s Disorder in Patients Using Neuroimaging and MRI Features. IEEE Access 2024, 12, 58722–58739. [Google Scholar] [CrossRef]

- Guo, H.; Zhang, Y. Resting State fMRI and Improved Deep Learning Algorithm for Earlier Detection of Alzheimer’s Disease. IEEE Access 2020, 8, 115383–115392. [Google Scholar] [CrossRef]

- Yi, X.; Walia, E.; Babyn, P. Generative adversarial network in medical imaging: A review. Med. Image Anal. 2019, 58, 101552. [Google Scholar] [CrossRef]

- Chui, K.T.; Gupta, B.B.; Alhalabi, W.; Alzahrani, F.S. An MRI Scans-Based Alzheimer’s Disease Detection via Convolutional Neural Network and Transfer Learning. Diagnostics 2022, 12, 1531. [Google Scholar] [CrossRef] [PubMed]

- Kale, M.; Wankhede, N.; Pawar, R.; Ballal, S.; Kumawat, R.; Goswami, M.; Khalid, M.; Taksande, B.; Upaganlawar, A.; Umekar, M.; et al. AI-driven innovations in Alzheimer’s disease: Integrating early diagnosis, personalized treatment, and prognostic modelling. Ageing Res. Rev. 2024, 101, 102497. [Google Scholar] [CrossRef]

- Alongi, P.; Laudicella, R.; Panasiti, F.; Stefano, A.; Comelli, A.; Giaccone, P.; Arnone, A.; Minutoli, F.; Quartuccio, N.; Cupidi, C.; et al. Radiomics Analysis of Brain [18F]FDG PET/CT to Predict Alzheimer’s Disease in Patients with Amyloid PET Positivity: A Preliminary Report on the Application of SPM Cortical Segmentation, Pyradiomics and Machine-Learning Analysis. Diagnostics 2022, 12, 933. [Google Scholar] [CrossRef] [PubMed]

- Wang, M.; Wei, M.; Wang, L.; Song, J.; Rominger, A.; Shi, K.; Jiang, J. Tau Protein Accumulation Trajectory-Based Brain Age Prediction in the Alzheimer’s Disease Continuum. Brain Sci. 2024, 14, 575. [Google Scholar] [CrossRef]

- Jain, V.; Nankar, O.; Jerrish, D.J.; Gite, S.; Patil, S.; Kotecha, K. A Novel AI-Based System for Detection and Severity Prediction of Dementia Using MRI. IEEE Access 2021, 9, 154324–154346. [Google Scholar] [CrossRef]

- Peng, J.; Wang, W.; Song, Q.; Hou, J.; Jin, H.; Qin, X.; Yuan, Z.; Wei, Y.; Shu, Z. 18F-FDG-PET Radiomics Based on White Matter Predicts The Progression of Mild Cognitive Impairment to Alzheimer Disease: A Machine Learning Study. Acad. Radiol. 2023, 30, 1874–1884. [Google Scholar] [CrossRef]

- Lin, W.; Gao, Q.; Yuan, J.; Chen, Z.; Feng, C.; Chen, W.; Du, M.; Tong, T. Predicting Alzheimer’s Disease Conversion From Mild Cognitive Impairment Using an Extreme Learning Machine-Based Grading Method with Multimodal Data. Front. Aging Neurosci. 2020, 12, 77. [Google Scholar] [CrossRef]

- Fakoya, A.A.; Parkinson, S. A Novel Image Casting and Fusion for Identifying Individuals at Risk of Alzheimer’s Disease Using MRI and PET Imaging. IEEE Access 2024, 12, 134101–134114. [Google Scholar] [CrossRef]

- Li, H.T.; Yuan, S.X.; Wu, J.S.; Gu, Y.; Sun, X. Predicting conversion from mci to ad combining multi-modality data and based on molecular subtype. Brain Sci. 2021, 11, 674. [Google Scholar] [CrossRef]

- Kim, S.T.; Kucukaslan, U.; Navab, N. Longitudinal Brain MR Image Modeling Using Personalized Memory for Alzheimer’s Disease. IEEE Access 2021, 9, 143212–143221. [Google Scholar] [CrossRef]

- Crystal, O.; Maralani, P.J.; Black, S.; Fischer, C.; Moody, A.R.; Khademi, A. Brain Age Estimation on a Dementia Cohort Using FLAIR MRI Biomarkers. Am. J. Neuroradiol. 2023, 44, 1384–1390. [Google Scholar] [CrossRef] [PubMed]

- Chattopadhyay, T.; Ozarkar, S.S.; Buwa, K.; Joshy, N.A.; Komandur, D.; Naik, J.; Thomopoulos, S.I.; Steeg, G.V.; Ambite, J.L.; Thompson, P.M. Comparison of deep learning architectures for predicting amyloid positivity in Alzheimer’s disease, mild cognitive impairment, and healthy aging, from T1-weighted brain structural MRI. Front. Neurosci. 2024, 18, 1387196. [Google Scholar] [CrossRef] [PubMed]

- Habuza, T.; Zaki, N.; Mohamed, E.A.; Statsenko, Y. Deviation from Model of Normal Aging in Alzheimer’s Disease: Application of Deep Learning to Structural MRI Data and Cognitive Tests. IEEE Access 2022, 10, 53234–53249. [Google Scholar] [CrossRef]

- Liang, W.; Zhang, K.; Cao, P.; Liu, X.; Yang, J.; Zaiane, O.R. Exploiting task relationships for Alzheimer’s disease cognitive score prediction via multi-task learning. Comput. Biol. Med. 2023, 152, 106367. [Google Scholar] [CrossRef]

- Qin, Y.; Cui, J.; Ge, X.; Tian, Y.; Han, H.; Fan, Z.; Liu, L.; Luo, Y.; Yu, H. Hierarchical multi-class Alzheimer’s disease diagnostic framework using imaging and clinical features. Front. Aging Neurosci. 2022, 14, 935055. [Google Scholar] [CrossRef]

- Dyrba, M.; Mohammadi, R.; Grothe, M.J.; Kirste, T.; Teipel, S.J. Gaussian Graphical Models Reveal Inter-Modal and Inter-Regional Conditional Dependencies of Brain Alterations in Alzheimer’s Disease. Front. Aging Neurosci. 2020, 12, 99. [Google Scholar] [CrossRef]

- Zhang, G.; Nie, X.; Liu, B.; Yuan, H.; Li, J.; Sun, W.; Huang, S. A multimodal fusion method for Alzheimer’s disease based on DCT convolutional sparse representation. Front. Neurosci. 2023, 16, 1100812. [Google Scholar] [CrossRef]

- Hong, X.; Huang, K.; Lin, J.; Ye, X.; Wu, G.; Chen, L.; Chen, E.; Zhao, S. Combined Multi-Atlas and Multi-Layer Perception for Alzheimer’s Disease Classification. Front. Aging Neurosci. 2022, 14, 891433. [Google Scholar] [CrossRef] [PubMed]

- Lin, W.; Gao, Q.; Du, M.; Chen, W.; Tong, T. Multiclass diagnosis of stages of Alzheimer’s disease using linear discriminant analysis scoring for multimodal data. Comput. Biol. Med. 2021, 134, 104478. [Google Scholar] [CrossRef] [PubMed]

- Gupta, Y.; Kim, J.I.; Kim, B.C.; Kwon, G.R. Classification and Graphical Analysis of Alzheimer’s Disease and Its Prodromal Stage Using Multimodal Features From Structural, Diffusion, and Functional Neuroimaging Data and the APOE Genotype. Front. Aging Neurosci. 2020, 12, 238. [Google Scholar] [CrossRef] [PubMed]

- Lau, A.; Beheshti, I.; Modirrousta, M.; Kolesar, T.A.; Goertzen, A.L.; Ko, J.H. Alzheimer’s Disease-Related Metabolic Pattern in Diverse Forms of Neurodegenerative Diseases. Diagnostics 2021, 11, 2023. [Google Scholar] [CrossRef]

- Dong, A.; Li, Z.; Wang, M.; Shen, D.; Liu, M. High-Order Laplacian Regularized Low-Rank Representation for Multimodal Dementia Diagnosis. Front. Neurosci. 2021, 15, 634124. [Google Scholar] [CrossRef]

- Yamao, T.; Miwa, K.; Kaneko, Y.; Takahashi, N.; Miyaji, N.; Hasegawa, K.; Wagatsuma, K.; Kamitaka, Y.; Ito, H.; Matsuda, H. Deep Learning-Driven Estimation of Centiloid Scales from Amyloid PET Images with 11C-PiB and 18F-Labeled Tracers in Alzheimer’s Disease. Brain Sci. 2024, 14, 406. [Google Scholar] [CrossRef]

- Gajjar, P.; Garg, M.; Desai, S.; Chhinkaniwala, H.; Sanghvi, H.A.; Patel, R.H.; Gupta, S.; Pandya, A.S. An Empirical Analysis of Diffusion, Autoencoders, and Adversarial Deep Learning Models for Predicting Dementia Using High-Fidelity MRI. IEEE Access 2024, 12, 131231–131243. [Google Scholar] [CrossRef]

- Ying, C.; Chen, Y.; Yan, Y.; Flores, S.; Laforest, R.; Benzinger, T.L.S.; An, H. Accuracy and longitudinal consistency of PET/MR attenuation correction in amyloid PET imaging amid software and hardware upgrades. Am. J. Neuroradiol. 2024, 46, 635–642. [Google Scholar] [CrossRef] [PubMed]

- Apostolopoulos, I.D.; Papathanasiou, N.D.; Apostolopoulos, D.J.; Panayiotakis, G.S. Applications of Generative Adversarial Networks (GANs) in Positron Emission Tomography (PET) imaging: A review. Eur. J. Nucl. Med. Mol. Imaging 2022, 49, 3717–3739. [Google Scholar] [CrossRef] [PubMed]

- Grigas, O.; Damaševičius, R.; Maskeliūnas, R. Positive Effect of Super-Resolved Structural Magnetic Resonance Imaging for Mild Cognitive Impairment Detection. Brain Sci. 2024, 14, 381. [Google Scholar] [CrossRef] [PubMed]

- Wu, J.; Zhao, K.; Li, Z.; Wang, D.; Ding, Y.; Wei, Y.; Zhang, H.; Liu, Y. A systematic analysis of diagnostic performance for Alzheimer’s disease using structural MRI. Psychoradiology 2022, 2, 287–295. [Google Scholar] [CrossRef]

- Wang, L.X.; Wang, Y.-Z.; Han, C.-G.; Zhao, L.; He, L.; Li, J. Revolutionizing early Alzheimer’s disease and mild cognitive impairment diagnosis: A deep learning MRI meta-analysis. Arq. Neuro-Psiquiatr. 2024, 82, s00441788657. [Google Scholar] [CrossRef]

- Sun, Y.; Chen, Y.; Dong, L.; Hu, D.; Zhang, X.; Jin, C.; Zhou, R.; Zhang, J.; Dou, X.; Wang, J.; et al. Diagnostic performance of deep learning-assisted [18F]FDG PET imaging for Alzheimer’s disease: A systematic review and meta-analysis. Eur. J. Nucl. Med. Mol. Imaging 2025, 52, 3600–3612. [Google Scholar] [CrossRef]

| Domain | Description | Modalities | Common AI Methods | Example Studies |
|---|---|---|---|---|
| Preprocessing and Segmentation | Prepares data for modeling: skull stripping, noise correction, registration | MRI, PET | U-Net, nnU-Net, GANs, Radiomics | [18,19] |
| Diagnosis and Classification | Detects AD, including at early stages, and identifies disease stage | MRI, PET | CNNs (ResNet, DenseNet, VGG), SVM, RF | [20,21] |
| Prediction and Prognosis | Forecasts disease progression, supports longitudinal analysis, and provides risk assessment | MRI, PET, fMRI | RNN, LSTM, Logistic Regression | [22,23] |
| Multimodal Fusion | Combines imaging outputs, blood/CSF biomarkers, and clinical data into a single integrated assessment | MRI + PET + MMSE + CSF | Ensemble CNNs, Dual-Path CNNs, SVM | [24,25] |
| Emerging Trends | New techniques such as XAI (improving explainability), synthetic data (generating training inputs for AI models), and harmonization (improving cross-site standardization) | All | XAI, Generative Models, Transformers | [26,27] |
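The Multimodal Fusion row describes combining several data sources into one result. One common way to do this is decision-level ("late") fusion, sketched below under stated assumptions: each modality-specific model (MRI, PET, clinical scores) is assumed to have already produced a probability of AD for the same subject, and the function name `late_fusion`, the modality keys, and the weights are hypothetical. Feature-level fusion and the ensemble or dual-path CNNs listed in the table are different designs and are not shown here.

```python
# Minimal sketch of decision-level ("late") fusion. Assumes each modality-specific
# model has already output a probability of AD for the same subject.


def late_fusion(probs: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-modality AD probabilities.

    probs:   modality name -> predicted P(AD) in [0, 1]
    weights: modality name -> non-negative weight (hypothetical; in practice
             these would be tuned on held-out validation data)
    """
    missing = set(probs) - set(weights)
    if missing:
        raise ValueError(f"no weight given for modalities: {sorted(missing)}")
    # Normalize only over the modalities actually available for this subject.
    total = sum(weights[m] for m in probs)
    if total <= 0:
        raise ValueError("weights of the available modalities must sum to a positive value")
    return sum(weights[m] * p for m, p in probs.items()) / total


# Hypothetical outputs from an MRI model, a PET model and a clinical (MMSE/CSF) model.
subject = {"mri": 0.62, "pet": 0.81, "clinical": 0.55}
weights = {"mri": 1.0, "pet": 2.0, "clinical": 1.0}
fused = late_fusion(subject, weights)
print(f"fused P(AD) = {fused:.3f}")
```

Because the normalization runs only over the modalities present in `probs`, a subject without a PET scan still receives a fused score from the remaining models, which is one practical reason late fusion is often chosen when modalities are incomplete.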

| AI Model | Task | Input Modality | Accuracy/AUC | Study |
|---|---|---|---|---|
| ResNet18 | AD vs. MCI classification | fMRI | 99.99% | [53] |
| DenseNet121 + SVM | AD classification | T1 MRI | 91.75% | [54] |
| BNLoop-GAN | Brain network generation | fMRI + sMRI | 98% | [55] |
| Ensemble 3D CNN | Progression tracking | Longitudinal MRI | -- | [56] |
| DemNet | AD staging | MRI | 95.23% | [57] |
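
The table reports a single accuracy or AUC figure per model, but the two metrics answer different questions: accuracy depends on a decision threshold and on class balance, whereas AUC measures how well scores rank positive cases above negative ones across all thresholds. The minimal sketch below computes both with the standard library on a small hypothetical imbalanced test set; `accuracy` and `roc_auc` are illustrative helper names, not functions from any cited study or library. With these made-up values, a classifier that always predicts "control" reaches 80% accuracy while offering no discrimination, and the ranking-based AUC of the hypothetical scores is 0.8125.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a random positive outranks a random negative.

    Ties count as half a win (Mann-Whitney U formulation).
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical imbalanced test set: 2 AD cases (label 1) vs. 8 controls (label 0).
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
scores = [0.40, 0.30, 0.35, 0.20, 0.10, 0.15, 0.25, 0.05, 0.45, 0.12]
always_control = [0] * len(y_true)  # a degenerate classifier
print(accuracy(y_true, always_control))  # 0.8
print(roc_auc(y_true, scores))  # 0.8125
```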

| Pathological Feature | Modality | AI Applications | Typical Output | References |
|---|---|---|---|---|
| Amyloid-β (Aβ) | PET (AV45, PiB) | Diagnosis, prognosis | SUVR, Centiloid scaling | [24,49,82] |
| Tau tangles | PET (Tauvid) | Staging, prognosis | SUVR, cortical distribution | [24,40,83] |
| CSF Aβ42/tau ratio | Biochemical (CSF) | Risk prediction, multimodal fusion | A decreased ratio correlates with AD conversion | [50] |
| Medial temporal atrophy | T1 MRI | Segmentation, classification, brain age | Volume loss in the hippocampus/entorhinal cortex | [34,51,63] |
| FDG hypometabolism | FDG PET | Multimodal fusion, deep learning | Reduced glucose metabolism in parietal/temporal cortex | [24,42] |
| White matter integrity | DTI/structural MRI | Prediction of progression, subtype analysis | FA and MD abnormalities in frontal-parietal tracts | [35,36] |
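
The amyloid and tau PET rows list SUVR and Centiloid values as typical outputs. SUVR is the mean uptake in a target region divided by the mean uptake in a reference region, and the Centiloid scale linearly rescales SUVR so that a young-control anchor group averages 0 and a typical-AD anchor group averages 100. The sketch below assumes precomputed voxel intensities for a cortical target and a cerebellar reference region; the helper names, voxel values, and anchor SUVRs are placeholders, since real anchors depend on the tracer and processing pipeline and come from a Centiloid calibration.

```python
from statistics import fmean
from typing import Sequence


def suvr(target_voxels: Sequence[float], reference_voxels: Sequence[float]) -> float:
    """Standardized uptake value ratio: mean target-ROI uptake / mean reference-region uptake."""
    ref = fmean(reference_voxels)
    if ref <= 0:
        raise ValueError("reference-region uptake must be positive")
    return fmean(target_voxels) / ref


def centiloid(suvr_value: float, suvr_young_control: float, suvr_typical_ad: float) -> float:
    """Linear Centiloid rescaling: young-control anchor maps to 0, typical-AD anchor to 100.

    CL = 100 * (SUVR - SUVR_YC) / (SUVR_AD - SUVR_YC)
    """
    return 100.0 * (suvr_value - suvr_young_control) / (suvr_typical_ad - suvr_young_control)


# Hypothetical voxel intensities (arbitrary units).
cortex = [1.9, 2.1, 2.0, 2.2]       # target region
cerebellum = [1.4, 1.6, 1.5, 1.5]   # reference region
value = suvr(cortex, cerebellum)
# Placeholder anchors; real anchors are tracer- and pipeline-specific.
cl = centiloid(value, suvr_young_control=1.0, suvr_typical_ad=2.0)
print(round(value, 3), round(cl, 1))
```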

| Title | Author | Year | AI Model/Architecture | Modality | Dataset | Performance Metrics | Model Insights | Limitations | Reference |
|---|---|---|---|---|---|---|---|---|---|
| 3D CNN Design for the Classification of Alzheimer’s Disease Using Brain MRI and PET | Khagi B. et al. | 2020 | Encoder-based 3D CNN | MRI and/or PET | ADNI Baseline (BL) projects | Accuracy: 94.56% | Uses diverging receptive fields to improve feature-extraction efficiency | Limited dataset size and class imbalance (AD, MCI) | [29] |
| Diagnosis of Alzheimer’s Disease Using Convolutional Neural Network With Select Slices by Landmark on Hippocampus in MRI Images | Pusparani Y. et al. | 2023 | ResNet50 and LeNet | MRI | ADNI | Accuracy: 98% | Attention model to improve accuracy | No external validation | [63] |
| 3D CNN for AD detection using MRI | Haijing et al. | 2021 | ResNet | MRI | ADNI | Accuracy: 97.1% | Captures more information from MRI | Limited interpretability; no external validation | [64] |
| Using a Patch-Wise M-Net Convolutional Neural Network for Tissue Segmentation in Brain MRI Images | Yamanakkanavar N. et al. | 2020 | CNN (M-Net) | MRI | OASIS | Accuracy: 94.81–96.33% | Automatic segmentation of brain MRI scans | M-Net is prone to missing details in certain regions | [33] |
| An Intelligent System for Early Recognition of Alzheimer’s Disease Using Neuroimaging | Odusami et al. | 2022 | ResNet, DenseNet | MRI | ADNI | Accuracy: 98.86% | Gradient-weighted class activation mapping (Grad-CAM) | No external validation | [53] |
| Analysis of Features of Alzheimer’s Disease: Detection of Early Stage from Functional Brain Changes in Magnetic Resonance Images Using a Finetuned ResNet18 Network | Odusami et al. | 2021 | ResNet18 | fMRI | ADNI | Accuracy: 99.99% | Integrates structural and metabolic information | Overfitting | [21] |
| FDG-PET to T1 Weighted MRI Translation with 3D Elicit Generative Adversarial Network (E-GAN) | Bazangani F. et al. | 2022 | Elicit GAN (E-GAN) | FDG PET | ADNI | Structural similarity (SSIM): 75% | Generates 3D T1-weighted images from FDG-PET | Long training time; model tested only on healthy subjects | [19] |
| Brain MRI Analysis for Alzheimer’s Disease Diagnosis Using CNN-Based Feature Extraction and Machine Learning | Duaa AlSaeed | 2022 | ResNet50 | MRI | ADNI and MIRIAD | Accuracy: 85.87–99% | Efficient feature extraction | No external validation | [65] |
| Based on Tau PET Radiomics Analysis for the Classification of Alzheimer’s Disease and Mild Cognitive Impairment | Jiao F. et al. | 2023 | Radiomics analysis | Tau PET | ADNI | Accuracy: 84.8% | Predicts tau-positive MCI or ApoE ε4 presence | AD diagnosis not pathologically confirmed; external cohorts had small sample sizes | [40] |
| MPC-STANet: Alzheimer’s Disease Recognition Method Based on Multiple Phantom Convolution and Spatial Transformation Attention Mechanism | Yujian et al. | 2022 | ResNet50 | MRI | Multi-institutional | Accuracy: 96.25% | Spatial transformation attention | No external validation | [26] |
| Functional Brain Network Measures for Alzheimer’s Disease Classification. IEEE Access. | Wang L. et al. | 2023 | Linear SVM | fMRI | ADNI | Accuracy: 96.80% (HC vs. AD) | Identifies networks significantly altered among HC, MCI, and AD | Only 36 of the 360 J-HCPMMP parcellation regions considered | [44] |
| Leveraging Brain MRI for Biomedical Alzheimer’s Disease Diagnosis Using Enhanced Manta Ray Foraging Optimization Based Deep Learning | R. Syed Jamalullah | 2023 | DenseNet121 | MRI | ADNI | Accuracy: 98.29% | Enhanced Manta Ray Foraging Optimization | No external validation | [66] |
| Convolution Neural Networks and Self-Attention Learners for Alzheimer Dementia Diagnosis from Brain MRI | Pierluigi Carcagnì et al. | 2023 | CNN | MRI | ADNI and OASIS | Accuracy: 77% | Self-attention learners | No external validation; information may be lost during feature extraction | [67] |
| Analyzing Hierarchical Multi-View MRI Data With StaPLR: An Application to Alzheimer’s Disease Classification | Van Loon et al. | 2022 | Stacked penalized logistic regression (StaPLR) | MRI (DWI, sMRI, fMRI) | PRODEM (Medical University of Graz) | Accuracy: 88.8% | Selects the MRI views most relevant to disease prediction | Binary view selection: each view is either selected or not, and the relevant views may differ between subjects | [47] |
| HTLML: Hybrid AI Based Model for Detection of Alzheimer’s Disease | Sarang et al. | 2022 | DenseNet121, DenseNet201 | MRI | Kaggle | Accuracy: 91.75% | Hybrid architecture improves stability | Complex to reproduce; no external validation | [54] |
| DEMNET: A Deep Learning Model for Early Diagnosis of Alzheimer Diseases and Dementia From MR Images | Suriya et al. | 2021 | CNN | MRI | Kaggle; ADNI (external validation) | Accuracy: 95.23% | Multilayer architecture | Complex to reproduce | [57] |
| Combined Quantitative amyloid-β PET and Structural MRI Features Improve Alzheimer’s Disease Classification in Random Forest Model—A Multicenter Study | Bao Y. et al. | 2024 | RF | Aβ-PET, sMRI | AIBL database; GAIN dataset | Accuracy: 81% (HC vs. AD) | Aβ-PET features for AD detection using ML models | Missing demographic information; limited sample size | [49] |
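
"No external validation" recurs in the Limitations column. True external validation means testing on an independent cohort; when only pooled multi-site data are available, a partial substitute is to hold out every scan from one acquisition site at a time, so that no subject or site contributes to both training and testing. The sketch below assumes each record is a hypothetical (subject ID, site, label) tuple and that each subject was scanned at a single site; `leave_one_site_out` is an illustrative name, and the sketch is not the validation protocol of any cited study.

```python
from collections import defaultdict
from typing import Dict, Iterator, List, Tuple

Record = Tuple[str, str, int]  # (subject_id, site, label); hypothetical layout


def leave_one_site_out(records: List[Record]) -> Iterator[Tuple[str, List[Record], List[Record]]]:
    """Yield (held_out_site, train, test), keeping every record from one site out of training."""
    by_site: Dict[str, List[Record]] = defaultdict(list)
    for rec in records:
        by_site[rec[1]].append(rec)
    for site in sorted(by_site):
        test = by_site[site]
        train = [r for r in records if r[1] != site]
        yield site, train, test


# Hypothetical cohort: subject IDs, acquisition sites, and labels (1 = AD, 0 = control).
records = [
    ("s01", "siteA", 1), ("s02", "siteA", 0),
    ("s03", "siteB", 1), ("s04", "siteB", 0),
    ("s05", "siteC", 0),
]
for site, train, test in leave_one_site_out(records):
    # Sanity check: no subject ID appears on both sides of the split.
    assert not {r[0] for r in train} & {r[0] for r in test}
    print(site, len(train), len(test))
```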

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Christodoulou, R.C.; Woodward, A.; Pitsillos, R.; Ibrahim, R.; Georgiou, M.F. Artificial Intelligence in Alzheimer’s Disease Diagnosis and Prognosis Using PET-MRI: A Narrative Review of High-Impact Literature Post-Tauvid Approval. J. Clin. Med. 2025, 14, 5913. https://doi.org/10.3390/jcm14165913
