Rapid Assessment of Non-Verbal Auditory Perception in Normal-Hearing Participants and Cochlear Implant Users

Abstract

1. Introduction

1.1. Music Perception

1.2. Prosody Perception

1.3. Auditory Scene Perception

1.4. Enhancing Pitch Perception with Visual Information

1.5. Rationale for the Present Study

2. Material and Methods

2.1. Participants

2.2. Listening Tests: Material and Procedure

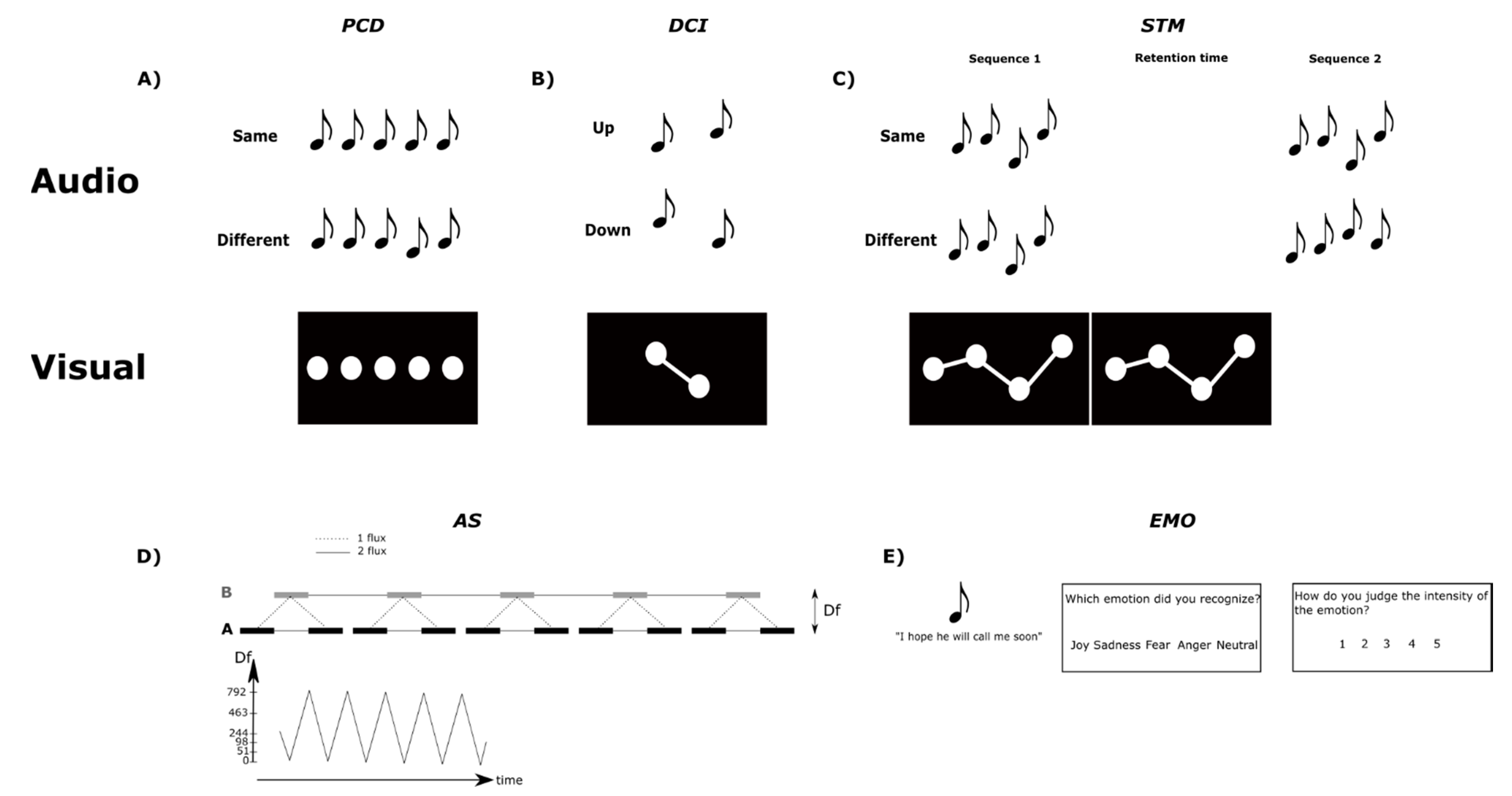

2.2.1. Pitch Change Detection (PCD) Test

2.2.2. Pitch Direction Change Identification (DCI) Test

2.2.3. Pitch Short-Term Memory (STM) Test

2.2.4. Auditory Stream Segregation (AS) Test

2.2.5. Emotion Recognition (EMO) Test

2.2.6. Vocoded Sounds

2.3. Data Analysis

3. Results

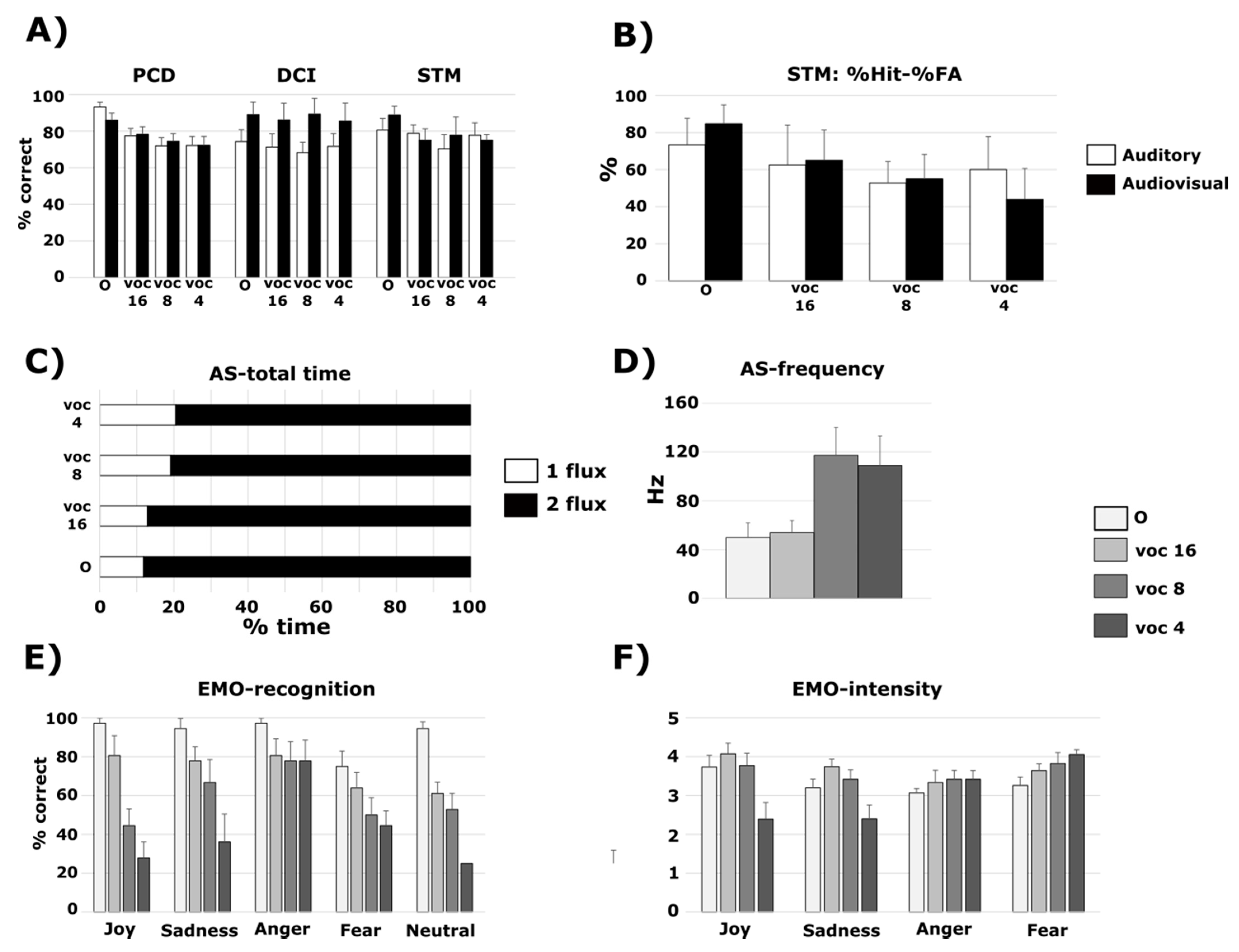

3.1. PCD Test

3.1.1. Normal-Hearing Participants and Vocoded Sounds

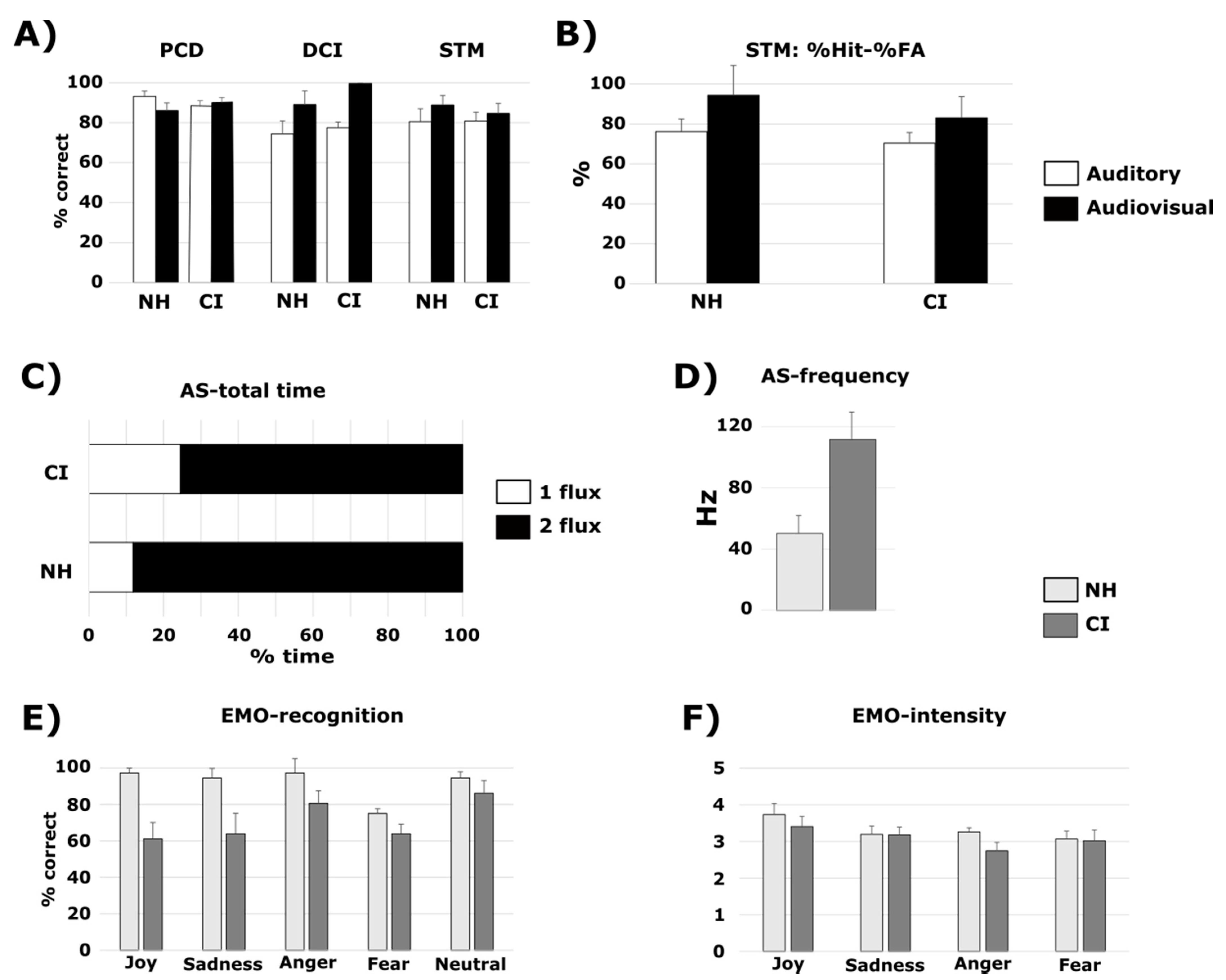

3.1.2. Cochlear Implant Listeners Compared to Normal-Hearing Participants

3.2. DCI Test

3.2.1. Normal-Hearing Participants and Vocoded Sounds

3.2.2. Cochlear Implant Listeners Compared to Normal-Hearing Participants

3.3. STM Test

3.3.1. Normal-Hearing Participants and Vocoded Sounds

3.3.2. Cochlear Implant Listeners Compared to Normal-Hearing Participants

3.4. AS Test

3.4.1. Normal-Hearing Participants and Vocoded Sounds

3.4.2. Cochlear Implant Listeners Compared to Normal-Hearing Participants

3.5. EMO Test

3.5.1. Normal-Hearing Participants and Vocoded Sounds

3.5.2. Cochlear Implant Listeners Compared to Normal-Hearing Participants

3.6. Relationships between the Tasks: Cochlear Implant Listeners Compared to Normal-Hearing Participants in Pitch Tasks (PCD, DCI and STM)

4. Discussion

4.1. Patterns of Non-verbal Auditory Perception Deficits in CI Users and in NH Participants Hearing Vocoded Sounds

4.2. Benefit of Audiovisual Cues for Non-Verbal Auditory Tasks

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Highlights

- Five listening tests were used to assess non-verbal auditory perception.

- CI users showed deficits in pitch discrimination, emotional prosody, and streaming.

- Similar deficits were observed in NH listeners with vocoded sounds.

- Visual cues can enhance CI users’ performance in pitch perception tasks.


| Group | CI Users (6 Unilateral and 4 Bilateral) | Controls (N = 10) | p-Value (Group Comparison) | Effect Size (95% Confidence Interval, Lower–Upper) |
|---|---|---|---|---|
| Sex | 8M 2F | 4M 6F | 0.07 | |
| Age (years) | 51 (±14) Min: 24 Max: 73 | 22.1 (±1.7) Min: 20 Max: 25 | <0.001 | 2.9 (1.6–4.2) |
| Education (years) | 16.1 (±2.8) Min: 10 Max: 20 | 15.5 (±1.2) Min: 14 Max: 17 | 0.5 | 0.28 (−0.6–1.2) |
| Musical education (years) | 1.5 (±4.7) Min: 0 Max: 15 | 0.6 (±1.6) Min: 0 Max: 5 | 0.6 | 0.26 (−0.6–1.1) |
| Laterality | 9R, 1L | 9R, 1L | 1 | |
| Right Ear | 8 implants, 2 hearing-aids | NA | | |
| Left Ear | 6 implants, 4 hearing-aids | NA | | |
| Unilateral implant (n = 6): Duration (years) | 2.33 (±1.5) Min: 1 Max: 5 | NA | | |
| Bilateral implants (n = 4): First implant Duration (years) | 6.75 (±6.4) Min: 2 Max: 16 | NA | | |
| Bilateral implants (n = 4): Second implant Duration (years) | 5 (±4.5) Min: 1 Max: 11 | NA | | |
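
The effect-size column of the table above is not defined in this section. As a minimal sketch, assuming it reports Cohen's d with a pooled standard deviation and a normal-approximation 95% confidence interval (an assumption, not a method stated here), the Python snippet below recomputes the Age and Education rows from the reported means, standard deviations, and group sizes (n = 10 per group). The function name `cohens_d_with_ci` is illustrative only.

```python
import math


def cohens_d_with_ci(mean1, sd1, n1, mean2, sd2, n2, z=1.96):
    """Cohen's d (pooled SD) with a normal-approximation confidence interval.

    Assumed formula for illustration; the table does not name its effect size.
    """
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    d = (mean1 - mean2) / math.sqrt(pooled_var)
    # Large-sample approximation of the standard error of d
    se = math.sqrt((n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2)))
    return d, d - z * se, d + z * se


# Age row: CI users 51 (±14), controls 22.1 (±1.7); table reports 2.9 (1.6–4.2)
age_d, age_lo, age_hi = cohens_d_with_ci(51, 14, 10, 22.1, 1.7, 10)
print(f"Age: {age_d:.1f} ({age_lo:.1f}, {age_hi:.1f})")

# Education row: 16.1 (±2.8) vs. 15.5 (±1.2); table reports 0.28 (−0.6–1.2)
edu_d, edu_lo, edu_hi = cohens_d_with_ci(16.1, 2.8, 10, 15.5, 1.2, 10)
print(f"Education: {edu_d:.2f} ({edu_lo:.1f}, {edu_hi:.1f})")
```

Under these assumptions the recomputed values round to those in the table, which is consistent with, but does not confirm, this choice of effect size.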

| Test | Model | P(M) | P(M\|data) | BFM | BF10 | Error % |
|---|---|---|---|---|---|---|
| PCD | Null model (incl. subject) | 0.2 | 0.136 | 0.629 | 1.000 | |
| | Sound Type | 0.2 | 0.555 | 4.986 | 4.085 | 0.865 |
| | Sound Type + Modality | 0.2 | 0.164 | 0.782 | 1.204 | 3.341 |
| | Sound Type + Modality + Sound Type × Modality | 0.2 | 0.107 | 0.479 | 0.787 | 3.020 |
| | Modality | 0.2 | 0.039 | 0.161 | 0.285 | 0.956 |
| DCI | Null model (incl. subject) | 0.2 | 3.164 × 10^−7 | 1.266 × 10^−6 | 1.000 | |
| | Modality | 0.2 | 0.881 | 29.605 | 2.784 × 10^6 | 5.315 |
| | Sound Type + Modality | 0.2 | 0.099 | 0.438 | 311,979.244 | 1.818 |
| | Sound Type + Modality + Sound Type × Modality | 0.2 | 0.020 | 0.083 | 64,215.941 | 1.075 |
| | Sound Type | 0.2 | 3.109 × 10^−8 | 1.244 × 10^−7 | 0.098 | 1.218 |
| STM | Null model (incl. subject) | 0.2 | 0.522 | 4.370 | 1.000 | |
| | Sound Type | 0.2 | 0.239 | 1.255 | 0.458 | 0.612 |
| | Modality | 0.2 | 0.147 | 0.687 | 0.281 | 1.416 |
| | Sound Type + Modality | 0.2 | 0.071 | 0.305 | 0.136 | 3.380 |
| | Sound Type + Modality + Sound Type × Modality | 0.2 | 0.021 | 0.088 | 0.041 | 1.351 |
| AS-total time | Null model (incl. subject) | 0.2 | 2.668 × 10^−49 | 1.067 × 10^−48 | 1.000 | |
| | Sound Type + Percept + Sound Type × Percept | 0.2 | 0.875 | 28.028 | 3.280 × 10^48 | 3.159 |
| | Percept | 0.2 | 0.116 | 0.523 | 4.332 × 10^47 | 1.046 |
| | Sound Type + Percept | 0.2 | 0.009 | 0.038 | 3.484 × 10^46 | 1.184 |
| | Sound Type | 0.2 | 1.883 × 10^−50 | 7.532 × 10^−50 | 0.071 | 2.129 |
| AS-frequency | Null model (incl. subject) | 0.5 | 0.073 | 0.079 | 1.000 | |
| | Sound Type | 0.5 | 0.927 | 12.720 | 12.720 | 0.307 |
| EMO-recognition | Null model (incl. subject) | 0.2 | 1.560 × 10^−20 | 6.240 × 10^−20 | 1.000 | |
| | Sound Type + Emotion + Sound Type × Emotion | 0.2 | 0.886 | 31.106 | 5.680 × 10^19 | 0.682 |
| | Sound Type + Emotion | 0.2 | 0.114 | 0.514 | 7.301 × 10^18 | 0.934 |
| | Sound Type | 0.2 | 6.305 × 10^−5 | 2.522 × 10^−4 | 4.042 × 10^15 | 0.600 |
| | Emotion | 0.2 | 3.105 × 10^−19 | 1.242 × 10^−18 | 19.904 | 0.658 |
| EMO-intensity | Null model (incl. subject) | 0.2 | 3.605 × 10^−6 | 1.442 × 10^−5 | 1.000 | |
| | Sound Type + Emotion + Sound Type × Emotion | 0.2 | 0.999 | 4038.321 | 277,105.29 | 0.859 |
| | Sound Type + Emotion | 0.2 | 8.423 × 10^−4 | 0.003 | 233.632 | 0.864 |
| | Sound Type | 0.2 | 1.304 × 10^−4 | 5.218 × 10^−4 | 36.182 | 0.789 |
| | Emotion | 0.2 | 1.320 × 10^−5 | 5.280 × 10^−5 | 3.661 | 0.550 |
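
As a reading aid for the table above, the sketch below shows how its columns would relate to one another, assuming the table follows the usual conventions of Bayesian ANOVA model-comparison output (an assumption; the analysis software is not named in this section). Under that reading, BFM is the change from prior to posterior model odds, and BF10 compares each model with the null model of the same test. The function names `bf_m` and `bf_10` are illustrative, and the inputs are the rounded values from the PCD rows.

```python
def bf_m(prior: float, posterior: float) -> float:
    """Posterior model odds divided by prior model odds."""
    posterior_odds = posterior / (1.0 - posterior)
    prior_odds = prior / (1.0 - prior)
    return posterior_odds / prior_odds


def bf_10(post_model: float, post_null: float,
          prior_model: float = 0.2, prior_null: float = 0.2) -> float:
    """Bayes factor of a model against the null model."""
    return (post_model / post_null) / (prior_model / prior_null)


# PCD test, "Sound Type" row: P(M) = 0.2, P(M|data) = 0.555;
# the PCD null model has P(M|data) = 0.136.
pcd_bf_m = bf_m(0.2, 0.555)
pcd_bf_10 = bf_10(0.555, 0.136)
print(f"BFM  ~ {pcd_bf_m:.2f} (table: 4.986)")
print(f"BF10 ~ {pcd_bf_10:.2f} (table: 4.085)")
```

The recomputed values differ from the table only in the third significant digit, as expected when starting from probabilities rounded to three decimals.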

| Test | Model | P(M) | P(M\|data) | BFM | BF10 | Error % |
|---|---|---|---|---|---|---|
| PCD | Null model (incl. subject) | 0.2 | 0.284 | 1.586 | 1.000 | |
| | Modality + Group + Modality × Group | 0.2 | 0.255 | 1.368 | 0.897 | 1.732 |
| | Modality | 0.2 | 0.204 | 1.026 | 0.719 | 1.035 |
| | Group | 0.2 | 0.150 | 0.706 | 0.528 | 0.943 |
| | Modality + Group | 0.2 | 0.107 | 0.480 | 0.377 | 2.265 |
| DCI | Null model (incl. subject) | 0.2 | 1.111 × 10^−4 | 4.444 × 10^−4 | 1.000 | |
| | Modality | 0.2 | 0.464 | 3.465 | 4178.231 | 1.488 |
| | Modality + Group | 0.2 | 0.301 | 1.721 | 2708.463 | 1.336 |
| | Modality + Group + Modality × Group | 0.2 | 0.235 | 1.227 | 2113.544 | 1.824 |
| | Group | 0.2 | 5.756 × 10^−5 | 2.303 × 10^−4 | 0.518 | 0.660 |
| STM | Null model (incl. subject) | 0.2 | 0.226 | 1.170 | 1.000 | |
| | Modality | 0.2 | 0.394 | 2.605 | 1.743 | 0.945 |
| | Modality + Group | 0.2 | 0.195 | 0.967 | 0.860 | 1.415 |
| | Group | 0.2 | 0.105 | 0.470 | 0.465 | 0.647 |
| | Modality + Group + Modality × Group | 0.2 | 0.079 | 0.345 | 0.351 | 1.767 |
| AS-total time | Null model (incl. subject) | 0.2 | 1.367 × 10^−17 | 5.469 × 10^−17 | 1.000 | |
| | Perception + Group + Perception × Group | 0.2 | 0.878 | 28.803 | 6.422 × 10^16 | 1.531 |
| | Percept | 0.2 | 0.090 | 0.394 | 6.558 × 10^15 | 0.953 |
| | Perception + Group | 0.2 | 0.032 | 0.133 | 2.360 × 10^15 | 1.629 |
| | Group | 0.2 | 4.768 × 10^−18 | 1.907 × 10^−17 | 0.349 | 1.044 |
| AS-frequency | Null model | 0.5 | 0.163 | 0.195 | 1.000 | |
| | Group | 0.5 | 0.837 | 5.125 | 5.125 | 7.765 × 10^−4 |
| EMO-recognition | Null model (incl. subject) | 0.2 | 2.095 × 10^−4 | 8.380 × 10^−4 | 1.000 | |
| | Emotion + Group + Emotion × Group | 0.2 | 0.860 | 24.653 | 4107.699 | 2.294 |
| | Emotion + Group | 0.2 | 0.123 | 0.563 | 588.756 | 0.879 |
| | Emotion | 0.2 | 0.014 | 0.058 | 67.967 | 0.347 |
| | Group | 0.2 | 0.002 | 0.007 | 8.747 | 2.277 |
| EMO-intensity | Null model (incl. subject) | 0.2 | 0.295 | 1.673 | 1.000 | |
| | Emotion | 0.2 | 0.315 | 1.838 | 1.067 | 0.787 |
| | Emotion + Group | 0.2 | 0.174 | 0.843 | 0.590 | 0.956 |
| | Group | 0.2 | 0.161 | 0.769 | 0.547 | 0.868 |
| | Emotion + Group + Emotion × Group | 0.2 | 0.055 | 0.233 | 0.187 | 1.238 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pralus, A.; Hermann, R.; Cholvy, F.; Aguera, P.-E.; Moulin, A.; Barone, P.; Grimault, N.; Truy, E.; Tillmann, B.; Caclin, A. Rapid Assessment of Non-Verbal Auditory Perception in Normal-Hearing Participants and Cochlear Implant Users. J. Clin. Med. 2021, 10, 2093. https://doi.org/10.3390/jcm10102093
