A 36-Class Bimodal ERP Brain-Computer Interface Using Location-Congruent Auditory-Tactile Stimuli

Abstract

1. Introduction

2. Materials and Methods

2.1. Subjects

2.2. The BCI Design

- Recognize that “J” is in the 3rd row. Focus on stimulus “3” (at 135°) and count how many times this target stimulus occurs in the randomized sequence;

- The computer determines that the user is focusing on the third row once the preset number of stimulus repetitions is reached;

- Recognize that “J” is in the 4th column. Focus on stimulus “4” (at 180°) and count how many times this target stimulus occurs in the randomized sequence;

- Lastly, the computer determines that the user was focusing on stimulus “4”. The character “J” is then presented on the screen, provided that both the row and column indexes were correctly selected.
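The two-step selection described above can be illustrated with a minimal sketch. The 6 × 6 layout below is hypothetical: the text only fixes “J” at row 3, column 4, so every other cell, along with the names `LAYOUT`, `select_character`, and `locate`, is an assumption made for illustration.

```python
from typing import List, Tuple

# Hypothetical 6 x 6 layout (36 classes). Only the position of "J"
# (row 3, column 4) is taken from the text; all other cells are assumed.
LAYOUT: List[str] = [
    "123456",
    "ABCDEF",
    "GHIJKL",
    "MNOPQR",
    "STUVWX",
    "YZ_.,?",
]


def select_character(row_index: int, col_index: int,
                     layout: List[str] = LAYOUT) -> str:
    """Map a 1-based row selection and column selection to a character."""
    if not 1 <= row_index <= len(layout):
        raise ValueError(f"row index {row_index} out of range")
    row = layout[row_index - 1]
    if not 1 <= col_index <= len(row):
        raise ValueError(f"column index {col_index} out of range")
    return row[col_index - 1]


def locate(char: str, layout: List[str] = LAYOUT) -> Tuple[int, int]:
    """Return the 1-based (row, column) a user must attend to for `char`."""
    for r, row in enumerate(layout, start=1):
        c = row.find(char)
        if c != -1:
            return r, c + 1
    raise ValueError(f"{char!r} is not in the layout")
```

With this assumed layout, `locate("J")` gives the row and column stimuli to attend to, and `select_character` performs the reverse mapping once both selections have been decoded. Two six-class selections are enough to address all 36 characters.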

2.3. The Stimulus Design

2.3.1. Auditory Stimulus Design

2.3.2. Electro-Tactile Stimulus Design

2.3.3. Bimodal Stimulus Design

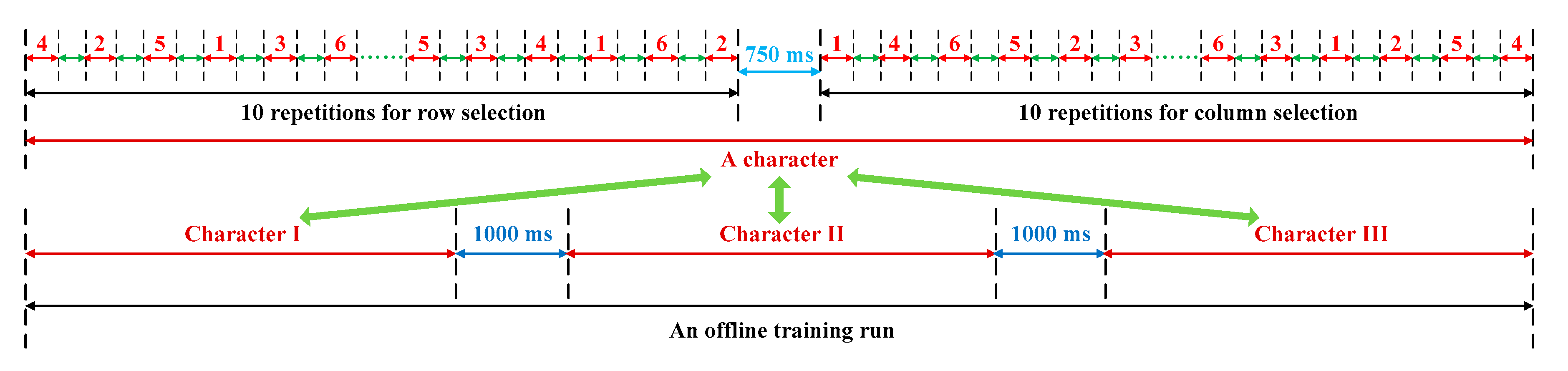

2.4. Experiment Procedure

3. Signal Processing

- Signal acquisition and preprocessing;

- Channel selection;

- BLDA training;

- Trial number optimization.

- Signal acquisition and preprocessing;

- ERP feature extraction;

- Target selection.

3.1. Signal Acquisition and Preprocessing

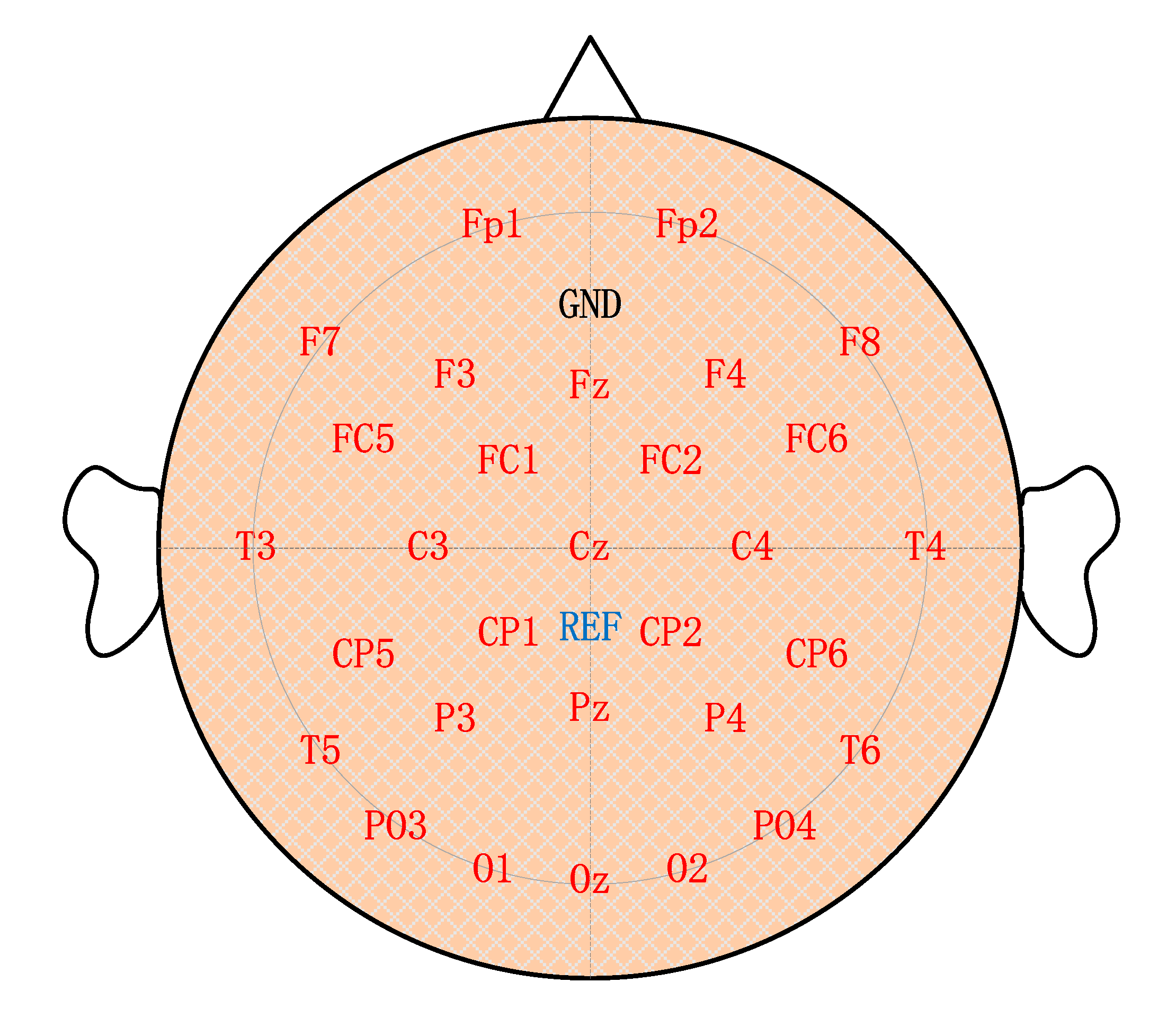

3.2. Channel Selection

3.3. BLDA Classifier

3.4. ERP Detection

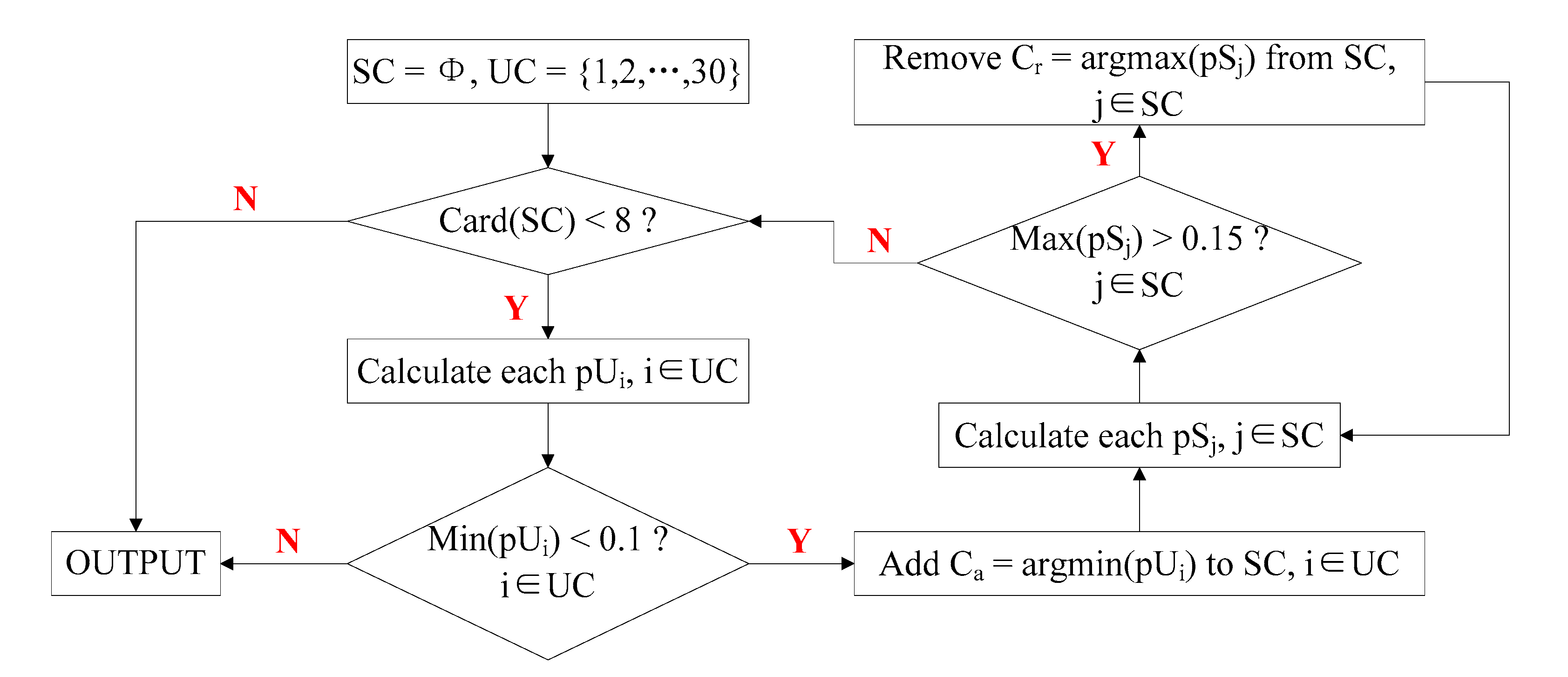

3.5. Selection of Optimal Trials

3.6. Statistical Analysis

4. Results

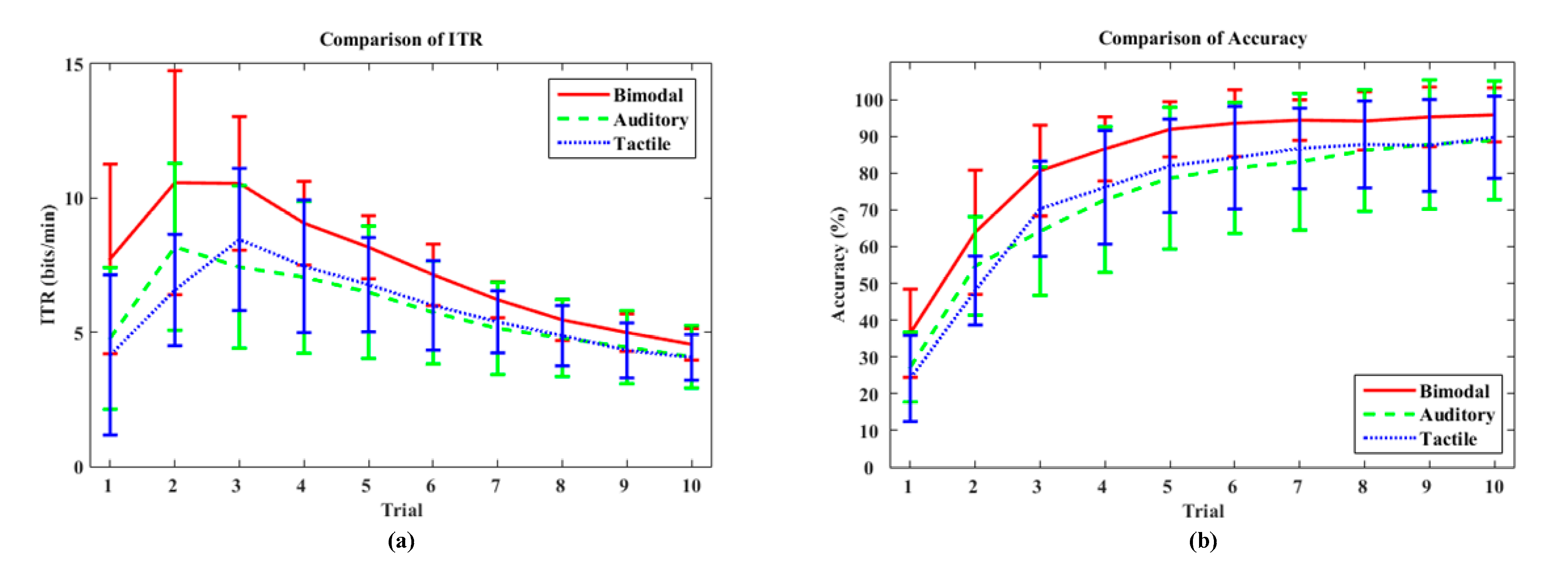

4.1. Online Performance

4.2. Offline Analyses

5. Discussions

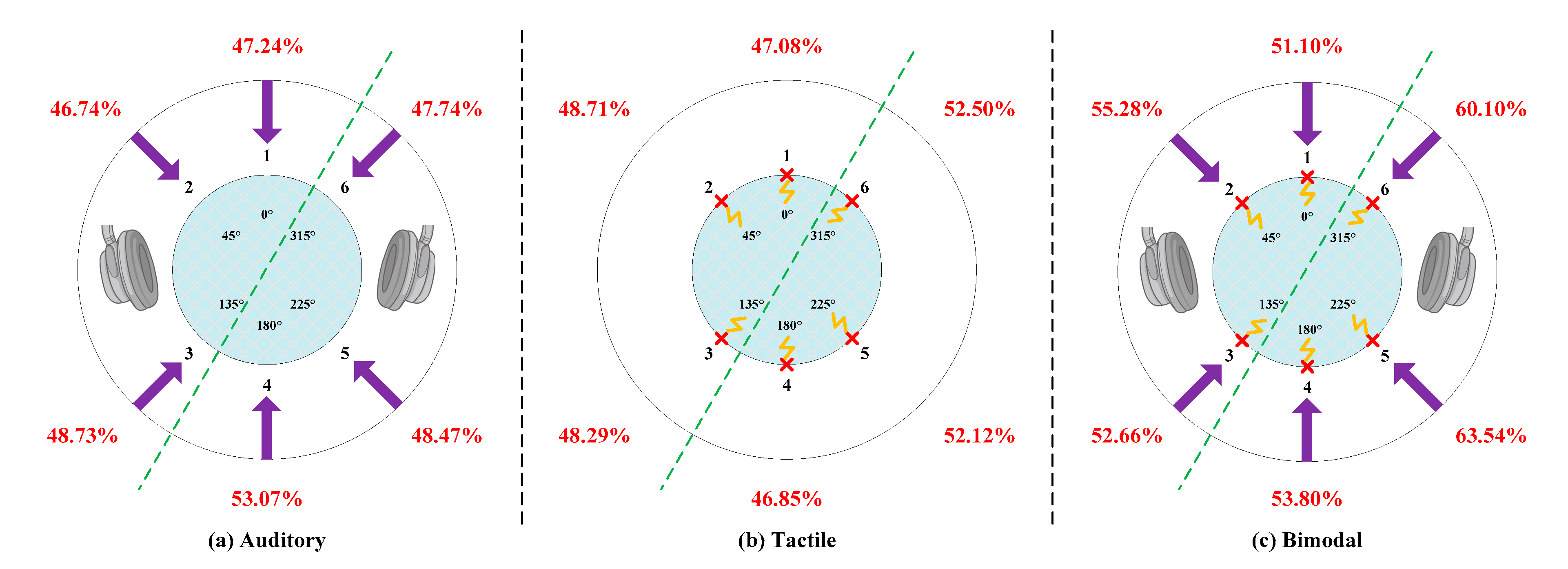

5.1. Classification Accuracy of Each Direction

- In total, 80% of the information humans receive comes from the visual system; the rest is collectively referred to as non-visual information. Because the eyes face forward, we cannot visually attend to the posterior area. Previous research indicates that auditory and tactile stimuli play an important role in enhancing human spatial perception of the posterior area, whereas auditory and tactile cues are not crucial for target identification within the visual field [57,58]. Hence, the posterior area was superior to the anterior area for non-visual ERP-BCIs, especially auditory and tactile ERP-BCIs.

- Tommasi and Marzoli demonstrated that the human brain processes sounds heard by the two ears differently. Signals received by the right ear are processed first, and commands received by the right ear are generally easier to execute. This is termed the right-ear advantage [59] or the multisensory right-side-of-space advantage [60]. Specifically, most individuals may show a right-ear advantage in dichotic listening, which is associated with the left hemisphere being used for language processing and possibly being more sensitive to right-sided stimuli [61]. Consequently, the detection accuracy of right-sided ERP targets was superior to that of targets presented on the left side.

5.2. Results of Channel Selection

5.3. Limitations and Optimized Orientations

- The optimal number of trials is currently estimated from offline calibration data and fixed prior to online use. During online signal processing, the target is determined once this number of trials is reached. However, the brain state and the condition of individual electrodes change over time, so the number of trials determined offline may not be optimal for the subsequent online experiment. The number of trials could therefore be further optimized by applying a dynamic stopping strategy, which adaptively determines the selection time for each character selection [69].

- The performance of the bimodal BCI system proposed in this paper is superior to that of the auditory-only and tactile-only BCI systems. However, the underlying basis for this superior performance remains unknown. In previous research, Xu et al. improved the performance of visual BCI systems by combining the P300 and SSVEP features [70]. Therefore, in future work, we will attempt to extract additional potentials, such as the steady-state somatosensory evoked potential (SSSEP) and the steady-state auditory evoked potential (SSAEP), to analyze the dynamics of neural activity during auditory-tactile bimodal stimulation.

- Recently, BCI technology has been widely used in neural systems and rehabilitation engineering, especially as a potential communication solution for persons with severe motor impairments. Traditional visual BCI systems depend on the user’s vision and are thus difficult to apply to patients with visual impairments. The proposed BCI approach provides an alternative way to establish a visual-saccade-independent online brain-computer cooperative control system based on multisensory information. Although the proposed approach is still in the laboratory phase, it is hoped that the system can be readily adapted to real-world applications.
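The dynamic stopping idea raised in the first limitation above can be sketched as follows. This is not the method of [69]; it is a generic illustration that assumes each trial yields one classifier score per candidate direction, accumulates those scores, and stops once a softmax-normalized confidence exceeds a threshold. The function names, the threshold of 0.9, and the cap of 10 trials are all assumptions.

```python
import math
from typing import Iterable, List, Optional, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over accumulated classifier scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_stop(trial_scores: Iterable[Sequence[float]],
                 threshold: float = 0.9,
                 max_trials: int = 10) -> Tuple[int, int]:
    """Return (selected_index, trials_used).

    trial_scores yields, for each trial, one score per candidate
    stimulus (assumed to come from a trained classifier). Selection
    happens as soon as the leading candidate's softmax confidence
    reaches `threshold`, or when `max_trials` trials have been used.
    """
    accumulated: Optional[List[float]] = None
    used = 0
    for scores in trial_scores:
        if accumulated is None:
            accumulated = [0.0] * len(scores)
        accumulated = [a + s for a, s in zip(accumulated, scores)]
        used += 1
        probs = softmax(accumulated)
        best = max(range(len(probs)), key=probs.__getitem__)
        if probs[best] >= threshold or used >= max_trials:
            return best, used
    if accumulated is None:
        raise ValueError("no trials were provided")
    # The stream ended early: fall back to the best candidate so far.
    best = max(range(len(accumulated)), key=accumulated.__getitem__)
    return best, used
```

Compared with a fixed trial count, such a rule spends fewer trials on selections where the evidence is clear and more on ambiguous ones. The threshold would itself need to be calibrated per user.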

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Wolpaw, J.R.; Birbaumer, N.; Heetderks, W.; McFarland, D.; Peckham, P.; Schalk, G.; Donchin, E.; Quatrano, L.; Robinson, C.; Vaughan, T. Brain–computer interface technology: A review of the first international meeting. IEEE Trans. Rehabil. Eng. 2000, 8, 164–173.

- Allison, B.Z.; Wolpaw, E.W.; Wolpaw, J.R. Brain–computer interface systems: Progress and prospects. Expert Rev. Med. Devices 2007, 4, 463–474.

- Xu, M.; Xiao, X.; Wang, Y.; Qi, H.; Jung, T.P.; Ming, D. A brain computer interface based on miniature event-related potentials induced by very small lateral visual stimuli. IEEE Trans. Biomed. Eng. 2018, 65, 1166–1175.

- Coyle, S.; Ward, T.; Markham, C. Brain computer interfaces, a review. Int. Sci. Rev. 2003, 28, 112–118.

- Lance, B.J.; Kerick, S.E.; Ries, A.J.; Oie, K.S.; McDowell, K. Brain-computer interface technologies in the coming decades. Proc. IEEE 2012, 100, 1585–1599.

- Burns, A.; Adeli, H.; Buford, J.A. Brain-computer interface after nervous system injury. Neuroscientist 2014, 20, 639–651.

- Ortiz-Rosario, A.; Adeli, H. Brain-computer interface technologies: From signal to action. Rev. Neurosci. 2013, 24, 537–552.

- Ortiz-Rosario, A.; Berrios-Torres, I.; Adeli, H.; Buford, J.A. Combined corticospinal and reticulospinal effects on upper limb muscles. Neurosci. Lett. 2014, 561, 30–34.

- Spueler, M.; Walter, A.; Ramos-Murguialday, A.; Naros, G.; Birbaumer, N.; Gharabaghi, A. Decoding of motor intentions from epidural ECoG recordings in severely paralyzed chronic stroke patients. J. Neural Eng. 2014, 11, 066008.

- Moghimi, S.; Kushki, A.; Guerguerian, A.M.; Chau, T. A review of EEG-based brain–computer interfaces as access pathways for individuals with severe disabilities. Assist. Technol. 2013, 25, 99–110.

- Chen, X.; Xu, X.; Liu, A.; McKeown, M.J.; Wang, Z.J. The use of multivariate EMD and CCA for denoising muscle artifacts from few-channel EEG recordings. IEEE Trans. Instrum. Meas. 2018, 67, 359–370.

- Jiang, J.; Zhou, Z.T.; Yin, E.W.; Yu, Y.; Liu, Y.D.; Hu, D.W. A novel Morse code-inspired method for multiclass motor imagery brain–computer interface (BCI) design. Comput. Biol. Med. 2015, 66, 11–19.

- Feng, J.; Yin, E.; Jin, J.; Saab, R.; Daly, I.; Wang, X. Towards correlation-based time window selection method for motor imagery BCIs. Neural Netw. 2018, 102, 87–95.

- Farwell, L.A.; Donchin, E. Talking off the top of your head: Toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr. Clin. Neurophysiol. 1988, 70, 510–523.

- Jin, J.; Sellers, E.W.; Zhou, S.; Zhang, Y.; Wang, X.; Cichocki, A. A P300 brain computer interface based on a modification of the mismatch negative paradigm. Int. J. Neural Syst. 2015, 25, 150011.

- Sutton, S.; Braren, M.; Zubin, J.; John, E.R. Evoked-potential correlates of stimulus uncertainty. Science 1965, 150, 1187–1188.

- Jin, J.; Zhang, H.; Daly, I.; Wang, X.; Cichocki, A. An improved P300 pattern in BCI to catch user’s attention. J. Neural Eng. 2017, 14, 036001.

- Mak, J.N.; Arbel, Y.; Minett, J.W.; McCane, L.M.; Yuksel, B.; Ryan, D. Optimizing the P300-based brain–computer interface: Current status and limitations and future directions. J. Neural Eng. 2011, 8, 025003.

- Fazel-Rezai, R.; Allison, B.Z.; Guger, C.; Sellers, E.W.; Kleih, S.C.; Kübler, A. P300 brain computer interface: Current challenges and emerging trends. Front. Neuroeng. 2012, 5, 00014.

- Riccio, A.; Mattia, D.; Simione, L.; Olivetti, M.; Cincotti, F. Eye-gaze independent EEG-based brain-computer interfaces for communication. J. Neural Eng. 2012, 9, 045001.

- Acqualagna, L.; Blankertz, B. Gaze-independent BCI-spelling using rapid serial visual presentation (RSVP). Clin. Neurophysiol. 2013, 124, 901–908.

- Liu, Y.; Zhou, Z.; Hu, D. Gaze independent brain-computer speller with covert visual search tasks. Clin. Neurophysiol. 2011, 122, 1127–1136.

- Treder, M.S.; Schmidt, N.M.; Blankertz, B. Gaze-independent brain-computer interfaces based on covert attention and feature attention. J. Neural Eng. 2011, 8, 066003.

- Barbosa, S.; Pires, G.; Nunes, U. Toward a reliable gaze-independent hybrid BCI combining visual and natural auditory stimuli. J. Neurosci. Methods 2016, 261, 47–61.

- Xie, Q.; Pan, J.; Chen, Y.; He, Y.; Ni, X.; Zhang, J. A gaze-independent audiovisual brain-computer interface for detecting awareness of patients with disorders of consciousness. BMC Neurol. 2018, 18, 144.

- Hill, N.J.; Lal, T.N.; Bierig, K.; Birbaumer, N.; Schölkopf, B. An Auditory Paradigm for Brain–computer Interfaces. In Advances in Neural Information Processing Systems; MIT: Cambridge, MA, USA, 2005; pp. 569–576.

- Schreuder, M.; Blankertz, B.; Tangermann, M. A new auditory multi-class brain–computer interface paradigm: Spatial hearing as an informative cue. PLoS ONE 2010, 5, e9813.

- Guo, J.; Gao, S.; Hong, B. An auditory brain–computer interface using active mental response. IEEE Trans. Neural Syst. Rehabil. Eng. 2010, 18, 230–235.

- Xu, H.; Zhang, D.; Ouyang, M.; Hong, B. Employing an active mental task to enhance the performance of auditory attention-based brain–computer interfaces. Clin. Neurophysiol. 2013, 124, 83–90.

- Baykara, E.; Ruf, C.A.; Fioravanti, C.; Käthner, I.; Halder, S. Effects of training and motivation on auditory P300 brain-computer interface performance. Clin. Neurophysiol. 2016, 127, 379–387.

- Halder, S.; Käthner, I.; Kübler, A. Training leads to increased auditory brain–computer interface performance of end-users with motor impairments. Clin. Neurophysiol. 2016.

- Sugi, M.; Hagimoto, Y.; Nambu, I.; Gonzalez, A.; Takei, Y.; Yano, S. Improving the performance of an auditory brain-computer interface using virtual sound sources by shortening stimulus onset asynchrony. Front. Neurosci. 2018, 12, 108–118.

- Brouwer, A.M. A tactile P300 brain–computer interface. Front. Neurosci. 2010, 4, 19.

- Van der Waal, M.; Severens, M.; Geuze, J.; Desain, P. Introducing the tactile speller: An ERP-based brain-computer interface for communication. J. Neural Eng. 2012, 9, 045002.

- Kaufmann, T.; Holz, E.M.; Kübler, A. Comparison of tactile and auditory and visual modality for brain–computer interface use: A case study with a patient in the locked-in state. Front. Neurosci. 2013, 7, 129.

- Kaufmann, T.; Herweg, A.; Kübler, A. Toward brain-computer interface based wheelchair control utilizing tactually-evoked event-related potentials. J. Neuroeng. Rehabil. 2014, 11, 7.

- Herweg, A.; Gutzeit, J.; Kleih, S.; Kübler, A. Wheelchair control by elderly participants in a virtual environment with a brain-computer interface (BCI) and tactile stimulation. Biol. Psychol. 2016, 121, 117–124.

- Liu, Y.; Wang, J.; Yin, E.; Yu, Y.; Zhou, Z.; Hu, D. A tactile ERP-based brain–computer interface for communication. Int. J. HCI 2018, 35, 1–9.

- Bernasconi, F.; Noel, J.-P.; Park, H.D.; Faivre, N.; Seeck, M.; Laurent, S.; Schaller, K.; Blanke, O.; Serino, A. Audio-tactile and peripersonal space processing around the trunk in human parietal and temporal cortex: An intracranial EEG study. Cereb. Cortex 2018, 28, 3385–3397.

- Gao, S.; Wang, Y.; Gao, X.; Hong, B. Visual and auditory brain-computer interfaces. IEEE Trans. Biomed. Eng. 2014, 61, 1436–1447.

- Thurlings, M.E.; Brouwer, A.-M.; Van Erp, J.B.F.; Werkhoven, P. Gaze-independent ERP-BCIs: Augmenting performance through location-congruent bimodal stimuli. Front. Neurosci. 2014, 8.

- Stein, B.E.; Stanford, T.R. Multisensory integration: Current issues from the perspective of the single neuron. Nat. Rev. Neurosci. 2008, 9, 255–266.

- Ito, T.; Gracco, V.L.; Ostry, D.J. Temporal factors affecting somatosensory-auditory interactions in speech processing. Front. Psychol. 2014, 5, 1198.

- Tidoni, E.; Gergondet, P.; Fusco, G. The role of audio-visual feedback in a thought-based control of a humanoid robot: A BCI study in healthy and spinal cord injured people. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 772–781.

- Tonelli, A.; Campus, C.; Serino, A.; Gori, M. Enhanced audio-tactile multisensory interaction in a peripersonal task after echolocation. Exp. Brain Res. 2019, 237, 3–4.

- An, X.; Höhne, J.; Ming, D.; Blankertz, B. Exploring combinations of auditory and visual stimuli for gaze-independent brain-computer interfaces. PLoS ONE 2014, 9, e111070.

- Sun, H.; Jin, J.; Zhang, Y.; Wang, B.; Wang, X. An Improved Visual-Tactile P300 Brain Computer Interface. In International Conference on Neural Information Processing; Springer: Cham, Switzerland, 2017.

- Wilson, A.D.; Tresilian, J.; Schlaghecken, F. The masked priming toolbox: An open-source MATLAB toolbox for masked priming researchers. Behav. Res. Methods 2011, 43, 201–214.

- Gardner, B. HRTF Measurements of a KEMAR Dummy Head Microphone. In MIT Media Lab Perceptual Computing Technical Report; MIT Media Laboratory: Cambridge, MA, USA, 1994.

- Bruns, P.; Liebnau, R.; Röder, B. Cross-modal training induces changes in spatial representations early in the auditory processing pathway. Psychol. Sci. 2011, 22, 1120–1126.

- Yao, L.; Sheng, X.; Mrachacz-Kersting, N.; Zhu, X.; Farina, D.; Jiang, N. Sensory stimulation training for BCI system based on somatosensory attentional orientation. IEEE Trans. Biomed. Eng. 2018, 66, 640–646.

- Cecotti, H.; Rivet, B.; Congedo, M.; Jutten, C.; Bertrand, O.; Maby, E. A robust sensor-selection method for P300 brain–computer interfaces. J. Neural Eng. 2011, 8, 016001.

- Colwell, K.A.; Ryan, D.B.; Throckmorton, C.S.; Sellers, E.W.; Collins, L.M. Channel selection methods for the P300 speller. J. Neurosci. Methods 2014, 232, 6–15.

- MacKay, D.J.C. Bayesian interpolation. Neural Comput. 1992, 4, 415–447.

- Hoffmann, U.; Vesin, J.M.; Ebrahimi, T.; Diserens, K. An efficient P300-based brain–computer interface for disabled subjects. J. Neurosci. Methods 2008, 167, 115–125.

- Zhou, W.; Liu, Y.; Yuan, Q.; Li, X. Epileptic seizure detection using lacunarity and Bayesian linear discriminant analysis in intracranial EEG. IEEE Trans. Biomed. Eng. 2013, 60, 3375–3381.

- Occelli, V.; Spence, C.; Zampini, M. Audiotactile interactions in front and rear space. Neurosci. Biobehav. Rev. 2011, 35, 589–598.

- Farnè, A.; Làdavas, E. Auditory peripersonal space in humans. J. Cognit. Neurosci. 2002, 14, 1030–1043.

- Marzoli, D.; Tommasi, L. Side biases in humans (Homo sapiens): Three ecological studies on hemispheric asymmetries. Naturwissenschaften 2009, 96, 1099–1106.

- Hiscock, M.; Kinsbourne, M. Attention and the right-ear advantage: What is the connection? Brain Cognit. 2011, 76, 263–275.

- Sætrevik, B. The right ear advantage revisited: Speech lateralization in dichotic listening using consonant-vowel and vowel-consonant syllables. Laterality 2012, 17, 119–127.

- Cao, Y.; Zhou, S.; Wang, Y. Neural dynamics of cognitive flexibility: Spatiotemporal analysis of event-related potentials. J. South. Med. Uni. 2017, 37, 755–760.

- Gill, P.; Woolley, S.M.N.; Fremouw, T.; Theunissen, F.E. What’s that sound? Auditory area CLM encodes stimulus surprise and not intensity or intensity changes. J. Neurophysiol. 2008, 99, 2809–2820.

- Choi, D.; Nishimura, T.; Midori, M. Effect of empathy trait on attention to various facial expressions: Evidence from N170 and late positive potential (LPP). J. Physiol. Anthropol. 2014, 33, 18.

- Catani, M.; Jones, D.K.; Ffytche, D.H. Perisylvian language networks of the human brain. Ann. Neurol. 2005, 57, 8–16.

- Apostolova, L.G.; Lu, P.; Rogers, S.; Dutton, R.A.; Hayashi, K.M.; Toga, A.W. 3D mapping of language networks in clinical and pre-clinical Alzheimer’s disease. Brain Lang. 2008, 104, 33–41.

- Leavitt, V.M.; Molholm, S.; Gomez-Ramirez, M. “What” and “Where” in auditory sensory processing: A high-density electrical mapping study of distinct neural processes underlying sound object recognition and sound localization. Front. Integr. Neurosci. 2011, 5, 23.

- Bizley, J.K.; Cohen, Y.E. The what, where and how of auditory-object perception. Nat. Rev. Neurosci. 2013, 14, 693–707.

- Jiang, J.; Yin, E.; Wang, C.; Xu, M.; Ming, D. Incorporation of dynamic stopping strategy into the high-speed SSVEP-based BCIs. J. Neural Eng. 2018, 15, 046025.

- Xu, M.; Qi, H.; Wan, B.; Yin, T.; Liu, Z.; Ming, D. A hybrid BCI speller paradigm combining P300 potential and the SSVEP blocking feature. J. Neural Eng. 2013, 10, 026001.

| Subject | Auditory: Trials | Auditory: Acc. (%) | Auditory: ITR (bits/min) | Tactile: Trials | Tactile: Acc. (%) | Tactile: ITR (bits/min) | Bimodal: Trials | Bimodal: Acc. (%) | Bimodal: ITR (bits/min) |
|---|---|---|---|---|---|---|---|---|---|
| S1 | 2 | 66.67 | 10.90 | 3 | 100.00 | 15.43 | 2 | 80.00 | 14.68 |
| S2 | 2 | 73.33 | 12.72 | 3 | 100.00 | 15.43 | 2 | 80.00 | 14.68 |
| S3 | 1 | 33.33 | 6.34 | 3 | 66.67 | 7.58 | 1 | 46.67 | 10.96 |
| S4 | 2 | 53.33 | 7.63 | 3 | 73.33 | 8.85 | 2 | 80.00 | 14.68 |
| S5 | 2 | 66.67 | 10.90 | 3 | 60.00 | 6.41 | 2 | 80.00 | 14.68 |
| S6 | 2 | 60.00 | 9.21 | 3 | 66.67 | 7.58 | 2 | 66.67 | 10.90 |
| S7 | 1 | 33.33 | 6.34 | 2 | 46.67 | 6.17 | 2 | 66.67 | 10.90 |
| S8 | 4 | 73.33 | 6.78 | 4 | 73.33 | 6.78 | 3 | 73.33 | 8.85 |
| S9 | 4 | 73.33 | 6.78 | 3 | 73.33 | 8.85 | 5 | 100.00 | 9.59 |
| S10 | 5 | 86.67 | 7.27 | 3 | 53.33 | 5.31 | 3 | 80.00 | 10.21 |
| S11 | 4 | 80.00 | 7.83 | 2 | 46.67 | 6.17 | 3 | 73.33 | 8.85 |
| S12 | 2 | 66.67 | 10.90 | 4 | 80.00 | 7.83 | 1 | 46.67 | 10.96 |
| Mean | 2.58 | 63.89 | 8.63 | 3.00 | 70.00 | 8.53 | 2.33 | 72.78 | 11.66 |
| Std | 1.26 | 15.98 | 2.11 | 0.58 | 16.89 | 3.25 | 1.03 | 14.27 | 2.25 |
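The accuracy and ITR columns above are linked by an information transfer rate formula that is not reproduced in this text. As a hedged illustration, the sketch below implements the widely used Wolpaw definition, $B = \log_2 N + P \log_2 P + (1-P)\log_2\frac{1-P}{N-1}$ bits per selection, scaled by the number of selections per minute. The use of this particular definition and the function names are assumptions, and the time per selection is left as a parameter because the table does not state it.

```python
import math


def bits_per_selection(n_classes: int, accuracy: float) -> float:
    """Wolpaw bits per selection for N classes and accuracy P in [0, 1]."""
    if n_classes < 2:
        raise ValueError("n_classes must be at least 2")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    bits = math.log2(n_classes)
    # The P*log2(P) and (1-P)*log2(...) terms vanish at P = 0 and P = 1
    # respectively, so they are skipped to avoid evaluating log2(0).
    if accuracy > 0.0:
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_classes - 1))
    return bits


def itr_bits_per_min(n_classes: int, accuracy: float,
                     seconds_per_selection: float) -> float:
    """Information transfer rate in bits/min."""
    if seconds_per_selection <= 0:
        raise ValueError("seconds_per_selection must be positive")
    return bits_per_selection(n_classes, accuracy) * 60.0 / seconds_per_selection
```

For the 36-class speller, `n_classes` would be 36 and `accuracy` the tabulated value divided by 100. Because the selection time grows with the number of trials, a subject with lower accuracy but fewer trials can still reach a comparable ITR, as several rows of the table suggest.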

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, B.; Zhou, Z.; Jiang, J. A 36-Class Bimodal ERP Brain-Computer Interface Using Location-Congruent Auditory-Tactile Stimuli. Brain Sci. 2020, 10, 524. https://doi.org/10.3390/brainsci10080524
