A Deep Siamese Convolution Neural Network for Multi-Class Classification of Alzheimer Disease

Abstract

1. Introduction

- We proposed a Siamese convolutional neural network (SCNN) model for the multi-class classification of Alzheimer’s disease.

- We presented an efficient model that overcomes data scarcity in a small, imbalanced dataset.

- We developed a regularized model that learns from a small dataset and still achieves superior performance in Alzheimer’s disease diagnosis.

1.1. Machine Learning-Based Technique

1.2. Deep Learning-Based Technique

2. Materials and Methods

2.1. Data Selection

2.2. Image Preprocessing

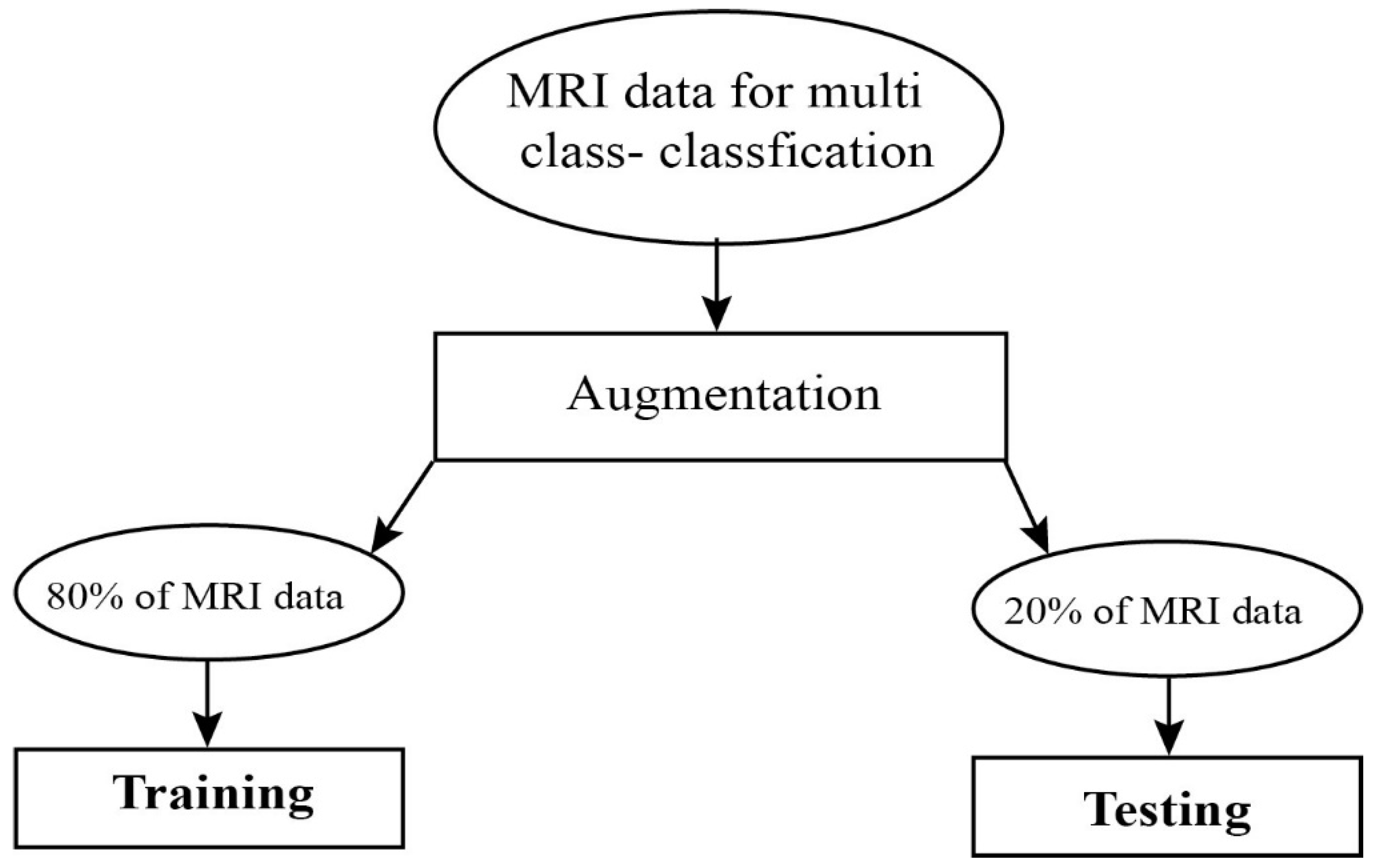

2.3. Data Augmentation

2.4. Convolutional Neural Networks

2.5. Improved Learning Rate and Regularization

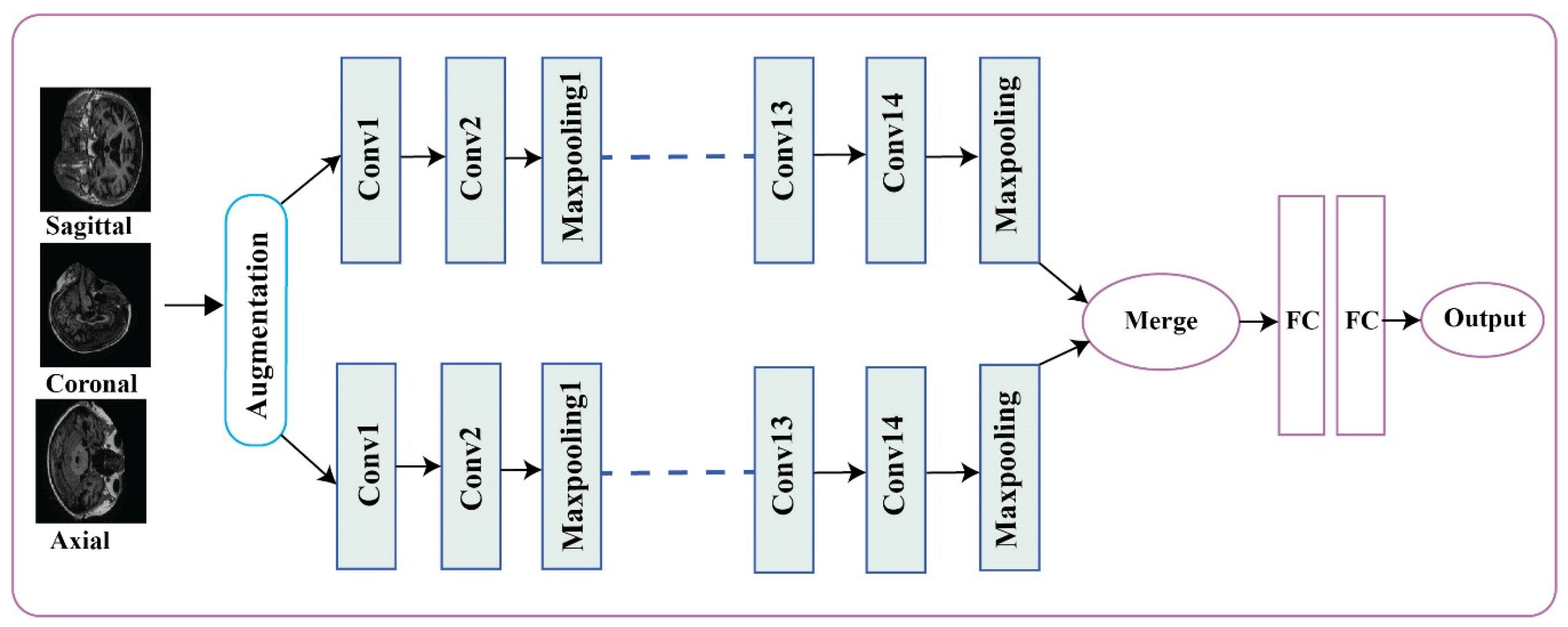

2.6. Alzheimer’s Disease Detection and Classification Architecture

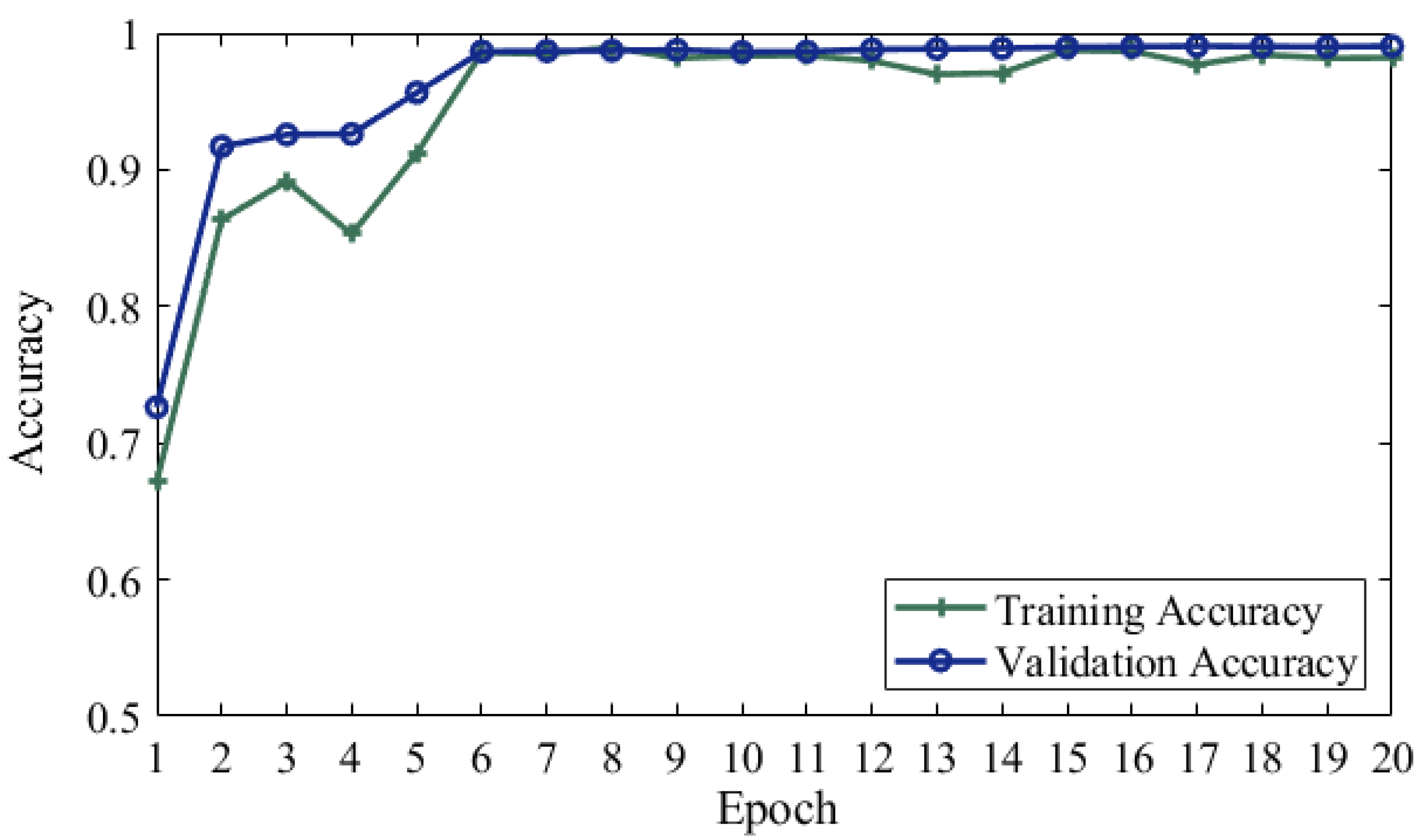

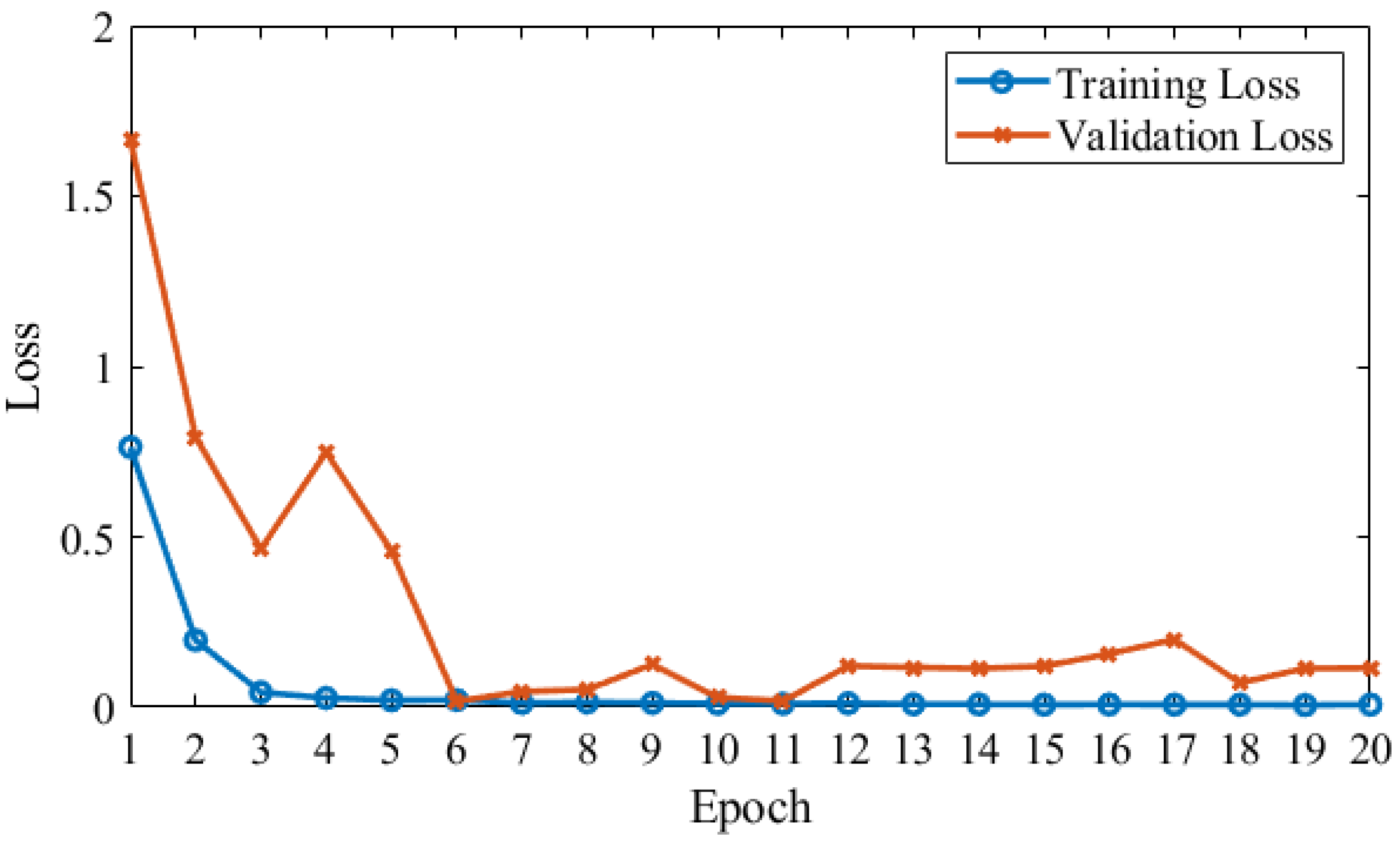

3. Results

4. Discussion

5. Conclusion

Author Contributions

Funding

Conflicts of Interest

References

- Beheshti, I.; Demirel, H.; Alzheimer’s Disease Neuroimaging Initiative. Feature-ranking-based Alzheimer’s disease classification from structural MRI. Magn. Reson. Imaging 2016, 34, 252–263.

- Alzheimer’s Association. 2019 Alzheimer’s disease facts and figures. Alzheimer’s Dement. 2019, 15, 321–387.

- Afzal, S.; Maqsood, M.; Nazir, F.; Khan, U.; Song, O. A data augmentation-based framework to handle class imbalance problem for Alzheimer’s stage detection. IEEE Access 2019, 7, 115528–115539.

- Picón, E.; Rabadán, O.J.; Seoane, C.L.; Magdaleno, M.C.; Mallo, S.C.; Vietes, A.N.; Pereiro, A.X.; Facal, D. Does empirically derived classification of individuals with subjective cognitive complaints predict dementia? Brain Sci. 2019, 9, 314.

- Brookmeyer, R.; Johnson, E.; Ziegler-Graham, K.; Arrighi, H.M. Forecasting the global burden of Alzheimer’s disease. Alzheimer’s Dement. 2007, 3, 186–191.

- Maqsood, M. Transfer learning assisted classification and detection of Alzheimer’s disease stages using 3D. Sensors 2019, 19, 2645.

- O’Bryant, S.E.; Waring, S.C.; Cullum, C.M.; Hall, J.; Lacritz, L. Staging dementia using Clinical Dementia Rating Scale Sum of Boxes scores. Arch. Neurol. 2008, 65, 1091–1095.

- Alirezaie, J.; Jernigan, M.E.; Nahmias, C. Automatic segmentation of cerebral MR images using artificial neural networks. IEEE Trans. Nucl. Sci. 1998, 45, 2174–2182.

- Xie, Q.; Zhao, W.; Ou, G.; Xue, W. An overview of experimental and clinical spinal cord findings in Alzheimer’s disease. Brain Sci. 2019, 9, 168.

- Sarraf, S.; Tofighi, G.; Alzheimer’s Disease Neuroimaging Initiative. DeepAD: Alzheimer’s disease classification via deep convolutional neural networks using MRI and fMRI. bioRxiv 2016, 070441.

- Hosseini-Asl, E.; Keynton, R.; El-Baz, A. Alzheimer’s disease diagnostics by adaptation of 3D convolutional network. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 126–130.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Hosny, A.; Parmar, C.; Quackenbush, J.; Schwartz, L.H.; Aerts, H.J.W.L. Artificial intelligence in radiology. Nat. Rev. Cancer 2018, 18, 500–510.

- Khagi, B.; Kwon, G.R.; Lama, R. Comparative analysis of Alzheimer’s disease classification by CDR level using CNN, feature selection, and machine-learning techniques. Int. J. Imaging Syst. Technol. 2019, 29, 1–14.

- Huang, C.; Slovin, P.N.; Nielsen, R.B.; Skimming, J.W. Diprivan attenuates the cytotoxicity of nitric oxide in cultured human bronchial epithelial cells. Intensive Care Med. 2002, 28, 1145–1150.

- Ritchie, K.; Ritchie, C.W. Mild cognitive impairment (MCI) twenty years on. Int. Psychogeriatr. 2012, 24, 1–5.

- Fei-Fei, L.; Deng, J.; Li, K. ImageNet: Constructing a large-scale image database. J. Vis. 2010, 9, 1037–1043.

- Baron, J.C.; Chételat, G.; Desgranges, B.; Perchey, G.; Landeau, B.; De La Sayette, V.; Eustache, F. In vivo mapping of gray matter loss with voxel-based morphometry in mild Alzheimer’s disease. Neuroimage 2001, 14, 298–309.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representations (ICLR), 2015; pp. 1–14.

- Plant, C.; Teipel, S.J.; Oswald, A.; Böhm, C.; Meindl, T.; Mourao-Miranda, J.; Bokde, A.W.; Hampel, H.; Ewers, M. Automated detection of brain atrophy patterns based on MRI for the prediction of Alzheimer’s disease. Neuroimage 2010, 50, 162–174.

- Klöppel, S.; Stonnington, C.M.; Chu, C.; Draganski, B.; Scahill, R.I.; Rohrer, J.D.; Fox, N.C.; Jack, C.R.; Ashburner, J.; Frackowiak, R.S.J. Automatic classification of MR scans in Alzheimer’s disease. Brain 2008, 131, 681–689.

- Gray, K.R.; Aljabar, P.; Heckemann, R.A.; Hammers, A. Random forest-based similarity measures for multi-modal classification of Alzheimer’s disease. Neuroimage 2013, 65, 167–175.

- Morra, J.H.; Tu, Z.; Apostolova, L.G.; Green, A.E.; Toga, A.W.; Thompson, P.M. Comparison of AdaBoost and support vector machines for detecting Alzheimer’s disease through automated hippocampal segmentation. IEEE Trans. Med. Imaging 2010, 29, 30–43.

- Neffati, S.; Ben Abdellafou, K.; Jaffel, I.; Taouali, O.; Bouzrara, K. An improved machine learning technique based on downsized KPCA for Alzheimer’s disease classification. Int. J. Imaging Syst. Technol. 2019, 29, 121–131.

- Zhang, Y.; Wang, S. Detection of Alzheimer’s disease by displacement field and machine learning. PeerJ 2015, 3, 1–29.

- Ben Ahmed, O.; Benois-Pineau, J.; Allard, M.; Ben Amar, C.; Catheline, G. Classification of Alzheimer’s disease subjects from MRI using hippocampal visual features. Multimed. Tools Appl. 2014, 74, 1249–1266.

- El-Dahshan, E.S.A.; Hosny, T.; Salem, A.B.M. Hybrid intelligent techniques for MRI brain images classification. Digit. Signal Process. A Rev. J. 2010, 20, 433–441.

- Hinrichs, C.; Singh, V.; Mukherjee, L.; Xu, G.; Chung, M.K.; Johnson, S.C. Spatially augmented LPboosting for AD classification with evaluations on the ADNI dataset. Neuroimage 2009, 48, 138–149.

- Yue, L.; Gong, X.; Li, J.; Ji, H.; Li, M.; Nandi, A.K. Hierarchical feature extraction for early Alzheimer’s disease diagnosis. IEEE Access 2019, 7, 93752–93760.

- Ahmed, S.; Choi, K.Y.; Lee, J.J.; Kim, B.C.; Kwon, G.R.; Lee, K.H.; Jung, H.Y. Ensembles of patch-based classifiers for diagnosis of Alzheimer diseases. IEEE Access 2019, 7, 73373–73383.

- Chaturvedi, I.; Cambria, E.; Welsch, R.E.; Herrera, F. Distinguishing between facts and opinions for sentiment analysis: Survey and challenges. Inf. Fusion 2018, 44, 65–77.

- Zeng, K.; Yang, Y.; Xiao, G.; Chen, Z. A very deep densely connected network for compressed sensing MRI. IEEE Access 2019, 7, 85430–85439.

- Zhang, Y.Y.; Dong, Z.; Phillips, P.; Wang, S.H.; Ji, G.; Yang, J.; Yuan, T.F.; Zhang, D.; Wang, Y.; Zhou, L.; et al. Ultrafast 3D ultrasound localization microscopy using a 32 × 32 matrix array. IEEE Trans. Med. Imaging 2015, 38, 2005–2015.

- Gupta, A.; Ayhan, M.S.; Maida, A.S. Natural image bases to represent neuroimaging data. 30th Int. Conf. Mach. Learn. 2013, 28, 2024–2031.

- Dou, Q.; Chen, H.; Yu, L.; Zhao, L.; Qin, J. Automatic detection of cerebral microbleeds from MR images via 3D convolutional neural networks. IEEE Trans. Med. Imaging 2016, 35, 1182–1195.

- Suk, H.; Lee, S.; Shen, D. Deep ensemble learning of sparse regression models for brain disease diagnosis. Med. Image Anal. 2017, 37, 101–113.

- Hong, X.; Lin, R.; Yang, C.; Zeng, N.; Cai, C.; Gou, J.; Yang, J. Predicting Alzheimer’s disease using LSTM. IEEE Access 2019, 7, 80893–80901.

- Fulton, L.V.; Dolezel, D.; Harrop, J.; Yan, Y.; Fulton, C.P. Classification of Alzheimer’s disease with and without imagery using gradient boosted machines and ResNet-50. Brain Sci. 2019, 9, 212.

- Jenkins, N.W.; Lituiev, D.; Copeland, T.P.; Aboian, M.S. A deep learning model to predict a diagnosis of Alzheimer disease by using 18F-FDG PET of the brain. Radiology 2018, 290, 456–464.

- Khan, N.M.; Abraham, N.; Hon, M. Transfer learning with intelligent training data selection for prediction of Alzheimer’s disease. IEEE Access 2019, 7, 72726–72735.

- Gorji, H.T.; Kaabouch, N. A deep learning approach for diagnosis of mild cognitive impairment based on MRI images. Brain Sci. 2019, 9, 217.

- Severyn, A.; Moschitti, A. UNITN: Training deep convolutional neural network for Twitter sentiment classification. In Proceedings of the 9th International Workshop on Semantic Evaluation (SemEval 2015), Amsterdam, The Netherlands, 4–5 June 2015; pp. 464–469.

- Liu, S.; Deng, W. Very deep convolutional neural network based image classification using small training sample size. In Proceedings of the 2015 3rd IAPR Asian Conference on Pattern Recognition (ACPR), Kuala Lumpur, Malaysia, 3–6 November 2015.

- Kim, H.; Jeong, Y.S. Sentiment classification using convolutional neural networks. Appl. Sci. 2019, 9, 2347.

- Chincarini, A.; Bosco, P.; Calvini, P.; Gemme, G.; Esposito, M.; Olivieri, C.; Rei, L.; Squarcia, S.; Rodriguez, G.; Bellotti, R. Local MRI analysis approach in the diagnosis of early and prodromal Alzheimer’s disease. Neuroimage 2011, 58, 469–480.

- Ateeq, T.; Majeed, M.N.; Anwar, S.M.; Maqsood, M.; Rehman, Z.-u.; Lee, J.W.; Muhammad, K.; Wang, S.; Baik, S.W.; Mehmood, I. Ensemble-classifiers-assisted detection of cerebral microbleeds in brain MRI. Comput. Electr. Eng. 2018, 69, 768–781.

- Yang, J.; Zhao, Y.; Chan, J.C.W.; Yi, C. The effectiveness of data augmentation in image classification using deep learning. arXiv 2017, arXiv:1712.04621.

- Bjerrum, E.J. SMILES enumeration as data augmentation for neural network modeling of molecules. arXiv 2017, arXiv:1703.07076.

- Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

- Wiesler, S.; Richard, A.; Schlüter, R. Mean-normalized stochastic gradient for large-scale deep learning. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 180–184.

- Boyat, A.K.; Joshi, B.K. A review paper: Noise models in digital image processing. arXiv 2015, 6, 63–75.

- Islam, J.; Zhang, Y. Brain MRI analysis for Alzheimer’s disease diagnosis using an ensemble system of deep convolutional neural networks. Brain Inform. 2018, 5, 2.

- Hosseini-Asl, E.; Ghazal, M.; Mahmoud, A.; Aslantas, A.; Shalaby, A.; Casanova, M.; Barnes, G.; Gimel’farb, G.; Keynton, R.; El-Baz, A. Alzheimer’s disease diagnostics by a 3D deeply supervised adaptable convolutional network. Front. Biosci. Landmark 2018, 23, 584–596.

- Evgin, G. Diagnosis of Alzheimer’s disease with Sobolev gradient-based optimization and 3D convolutional neural network. Int. J. Numer. Methods Biomed. Eng. 2019, 35, e3225.

- Farooq, A.; Anwar, S.; Awais, M.; Rehman, S. A deep CNN based multi-class classification of Alzheimer’s disease using MRI. In Proceedings of the 2017 IEEE International Conference on Imaging Systems and Techniques (IST), Beijing, China, 18–20 October 2017.

| Layer No. | Layer Name | Kernel Size | Pool Size | No. of Filters |
|---|---|---|---|---|
| 1 | Conv1 + ReLU | 3 | | 64 |
| | Batch Normalization | | | |
| 2 | Conv2 + ReLU | 3 | | 64 |
| | Maxpooling1 | | 2 | |
| 3 | Conv3 + ReLU | 3 | | 128 |
| | Gaussian Noise | | | |
| | Batch Normalization | | | |
| 4 | Conv4 + ReLU | 3 | | 128 |
| | Maxpooling2 | | 2 | |
| 5 | Conv5 + ReLU | 3 | | 256 |
| | Batch Normalization | | | |
| 6 | Conv6 + ReLU | 3 | | 256 |
| 7 | Conv7 + ReLU | 3 | | 256 |
| | Gaussian Noise | | | |
| 8 | Conv8 + ReLU | 3 | | 256 |
| | Maxpooling3 | | 2 | |
| 9 | Conv9 + ReLU | 3 | | 512 |
| 10 | Conv10 + ReLU | 3 | | 512 |
| 11 | Conv11 + ReLU | 3 | | 512 |
| | Maxpooling4 | | 2 | |
| 12 | Conv12 + ReLU | 3 | | 512 |
| | Gaussian Noise | | | |
| 13 | Conv13 + ReLU | 3 | | 512 |
| 14 | Conv14 + ReLU | 3 | | 512 |
| | Maxpooling5 | | 2 | |
| 15 | Flatten1 | | | |
| 16 | Flatten2 | | | |
| 17 | Concatenate | | | |
| 18 | FC1 + ReLU (4096 units) | | | |
| 19 | FC2 + ReLU (4096 units) | | | |
| 20 | Softmax | | | |
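To make the layer sizes in the architecture table concrete, the following Python sketch walks one convolutional branch through the listed layers and reports the flattened size, the concatenated size, and the parameter counts. The 224 × 224 × 3 input, "same" padding, 2 × 2 pooling with stride 2, and the helper name `walk` are assumptions for illustration only; the table does not state them. Batch-normalization and Gaussian-noise layers are skipped because they do not change the tensor shape.

```python
# Hypothetical shape/parameter walk-through of ONE branch of the SCNN
# convolutional stack in the architecture table.
# Assumed (not stated in the table): 224x224x3 input, 3x3 kernels with
# "same" padding, and 2x2 max pooling with stride 2.

# ("conv", filters) or ("pool", None); batch normalization and Gaussian
# noise layers are omitted because they leave the tensor shape unchanged.
STACK = (
    [("conv", 64)] * 2 + [("pool", None)]
    + [("conv", 128)] * 2 + [("pool", None)]
    + [("conv", 256)] * 4 + [("pool", None)]
    + [("conv", 512)] * 3 + [("pool", None)]
    + [("conv", 512)] * 3 + [("pool", None)]
)


def walk(height, width, channels, stack=STACK, kernel=3):
    """Return the final (H, W, C) shape and the conv weight+bias count."""
    params = 0
    for kind, filters in stack:
        if kind == "conv":
            # kernel*kernel*in_channels weights per filter, plus one bias each
            params += kernel * kernel * channels * filters + filters
            channels = filters  # "same" padding keeps H and W unchanged
        else:
            # 2x2 pooling with stride 2 halves H and W (floor for odd sizes)
            height, width = height // 2, width // 2
    return (height, width, channels), params


shape, conv_params = walk(224, 224, 3)
flat = shape[0] * shape[1] * shape[2]  # size of Flatten1 (and Flatten2)
concat = 2 * flat                      # Concatenate joins both branches
fc1_params = concat * 4096 + 4096      # FC1: weights + biases, 4096 units

print("branch output:", shape)
print("flattened per branch:", flat, "| concatenated:", concat)
print("conv params per branch:", conv_params, "| FC1 params:", fc1_params)
```

Under these assumptions each branch ends in a 7 × 7 × 512 map (25,088 values), the concatenated vector has 50,176 values, and FC1 alone holds about 205.5 million parameters, far more than the roughly 15.3 million in one convolutional stack. If the two branches share weights, as in a classic Siamese design, the convolutional parameters are counted once; the table does not say whether they do.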

| Clinical Dementia Rating (CDR) | No. of Samples |
|---|---|
| CDR-0 (No Dementia) | 167 |
| CDR-0.5 (Very Mild Dementia) | 87 |
| CDR-1 (Mild Dementia) | 105 |
| CDR-2 (Moderate AD) | 23 |
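The sample counts in the dataset table are strongly skewed: CDR-0 has roughly seven times as many subjects as CDR-2. The Python sketch below quantifies this and computes inverse-frequency ("balanced") class weights with the formula n_samples / (n_classes × n_c), the same one scikit-learn uses for `class_weight="balanced"`. Class weighting is shown only as one common way to counter imbalance; it is not stated here that the authors used it, and the helper name `balanced_weights` is illustrative.

```python
# Class distribution from the CDR table and inverse-frequency class weights.
# Class weighting is an illustrative technique, not a detail from the paper.
COUNTS = {
    "CDR-0 (No Dementia)": 167,
    "CDR-0.5 (Very Mild Dementia)": 87,
    "CDR-1 (Mild Dementia)": 105,
    "CDR-2 (Moderate AD)": 23,
}


def balanced_weights(counts):
    """w_c = n_samples / (n_classes * n_c): rarer classes get larger weights."""
    total = sum(counts.values())
    k = len(counts)
    return {label: total / (k * n) for label, n in counts.items()}


total = sum(COUNTS.values())
imbalance_ratio = max(COUNTS.values()) / min(COUNTS.values())
weights = balanced_weights(COUNTS)

print(f"total samples: {total}, majority/minority ratio: {imbalance_ratio:.2f}")
for label, n in COUNTS.items():
    print(f"{label:30s} n={n:4d} share={n / total:.3f} weight={weights[label]:.3f}")
```

With 382 samples in total, the weights come out to about 0.57 for CDR-0 and 4.15 for CDR-2, so in a weighted loss each CDR-2 error would count roughly seven times as much as a CDR-0 error.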

| Parameter | Value |
|---|---|
| Rotation range | 10° |
| Width shift range | 0.1 (fraction of image width) |
| Height shift range | 0.1 (fraction of image height) |
| Shear range | 0.15° |
| Zoom range | [0.5, 1.5] |
| Channel shift range | 150.0 |
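The parameter names in the augmentation table match arguments of Keras’s `ImageDataGenerator` (`rotation_range`, `width_shift_range`, `height_shift_range`, `shear_range`, `zoom_range`, `channel_shift_range`). Assuming that is the intended meaning, which the table itself does not confirm, the Python sketch below shows how such ranges are typically turned into one random transform per image: each quantity is drawn uniformly from a symmetric interval, except zoom, which is drawn independently per axis from [0.5, 1.5]. The helper `sample_transform` is hypothetical; it only samples parameters and does not warp any pixels.

```python
import random

# Values from the augmentation table; units follow the Keras convention
# that the parameter names suggest (an assumption).
AUGMENT = {
    "rotation_range": 10.0,        # degrees
    "width_shift_range": 0.1,      # fraction of image width
    "height_shift_range": 0.1,     # fraction of image height
    "shear_range": 0.15,           # shear angle in degrees
    "zoom_range": (0.5, 1.5),      # (lower, upper) zoom factor
    "channel_shift_range": 150.0,  # added to pixel intensities
}


def sample_transform(height, width, cfg=AUGMENT, rng=random):
    """Draw one random set of augmentation parameters (hypothetical helper)."""

    def sym(r):
        # uniform draw from the symmetric interval [-r, r]
        return rng.uniform(-r, r)

    zoom_lo, zoom_hi = cfg["zoom_range"]
    return {
        "rotation_deg": sym(cfg["rotation_range"]),
        "shift_x_px": sym(cfg["width_shift_range"]) * width,
        "shift_y_px": sym(cfg["height_shift_range"]) * height,
        "shear_deg": sym(cfg["shear_range"]),
        "zoom_x": rng.uniform(zoom_lo, zoom_hi),
        "zoom_y": rng.uniform(zoom_lo, zoom_hi),
        "channel_shift": sym(cfg["channel_shift_range"]),
    }


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so the draws are reproducible
    for _ in range(3):
        print(sample_transform(224, 224, rng=rng))
```

Because every image receives a fresh draw, each training epoch sees a different variant of the same scan, which is how augmentation enlarges an otherwise small dataset.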

| Actual Class \ Predicted Class | ND | VMD | MD | MAD |
|---|---|---|---|---|
| No Dementia (ND) | 334 | 0 | 0 | 0 |
| Very Mild Dementia (VMD) | 0 | 170 | 4 | 0 |
| Mild Dementia (MD) | 0 | 3 | 207 | 0 |
| Moderate AD (MAD) | 0 | 0 | 0 | 46 |
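The confusion matrix can be summarized with standard per-class metrics. Rows are actual classes and columns are predicted classes, so recall for a class divides its diagonal entry by the row sum and precision divides it by the column sum. The Python sketch below computes these from the table values; the function name `per_class_metrics` is illustrative.

```python
# Confusion matrix from the results table: rows = actual, columns = predicted.
LABELS = ["ND", "VMD", "MD", "MAD"]
CM = [
    [334, 0, 0, 0],
    [0, 170, 4, 0],
    [0, 3, 207, 0],
    [0, 0, 0, 46],
]


def per_class_metrics(cm, labels):
    """Return {label: (precision, recall, f1)} from a square confusion matrix."""
    n = len(cm)
    out = {}
    for i, label in enumerate(labels):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp                       # actual i, predicted other
        fp = sum(cm[r][i] for r in range(n)) - tp  # predicted i, actually other
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        out[label] = (precision, recall, f1)
    return out


correct = sum(CM[i][i] for i in range(len(CM)))
total = sum(sum(row) for row in CM)
metrics = per_class_metrics(CM, LABELS)

print(f"accuracy = {correct}/{total} = {correct / total:.4f}")
for label, (p, r, f1) in metrics.items():
    print(f"{label:4s} precision={p:.4f} recall={r:.4f} f1={f1:.4f}")
```

All seven errors involve the adjacent VMD and MD classes (four VMD cases predicted as MD and three MD cases predicted as VMD); ND and MAD are classified without error.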

| Paper | Method | Dataset | Accuracy |
|---|---|---|---|
| Islam et al. [53] | ResNet, CNN | OASIS (MRI) | 93.18% |
| Hosseini et al. [54] | 3D-DSA-CNN | ADNI (MRI) | 97.60% |
| Evgin et al. [55] | 3D-CNN | ADNI (MRI) | 98.01% |
| Farooq et al. [56] | GoogLeNet | OASIS (MRI) | 98.88% |
| Khan et al. [40] | VGG | ADNI (MRI) | 99.36% |
| Proposed Method | SCNN | OASIS (MRI) | 99.05% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Mehmood, A.; Maqsood, M.; Bashir, M.; Shuyuan, Y. A Deep Siamese Convolution Neural Network for Multi-Class Classification of Alzheimer Disease. Brain Sci. 2020, 10, 84. https://doi.org/10.3390/brainsci10020084
