Automatic Lip-Reading System Based on Deep Convolutional Neural Network and Attention-Based Long Short-Term Memory

Abstract

1. Introduction

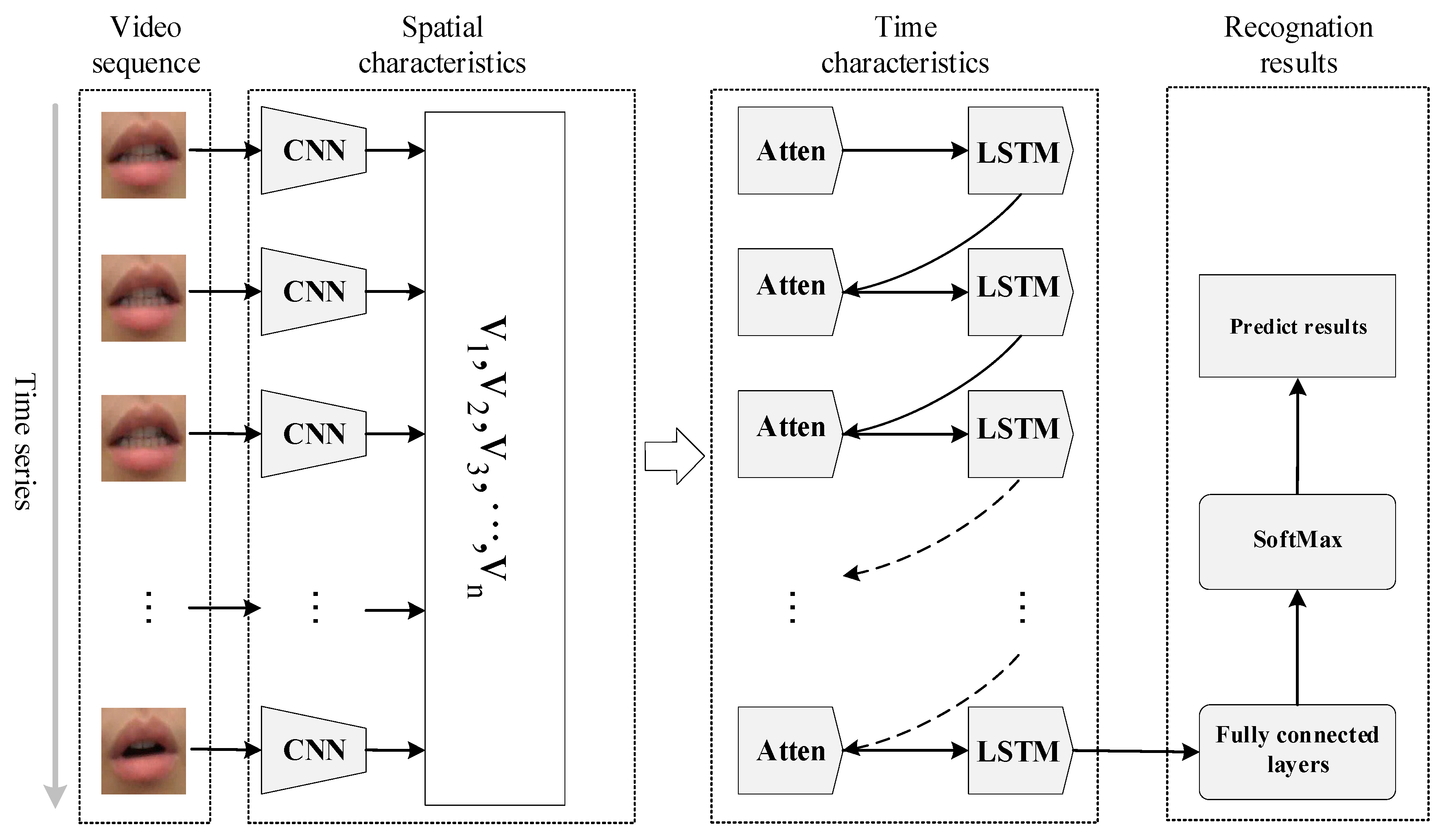

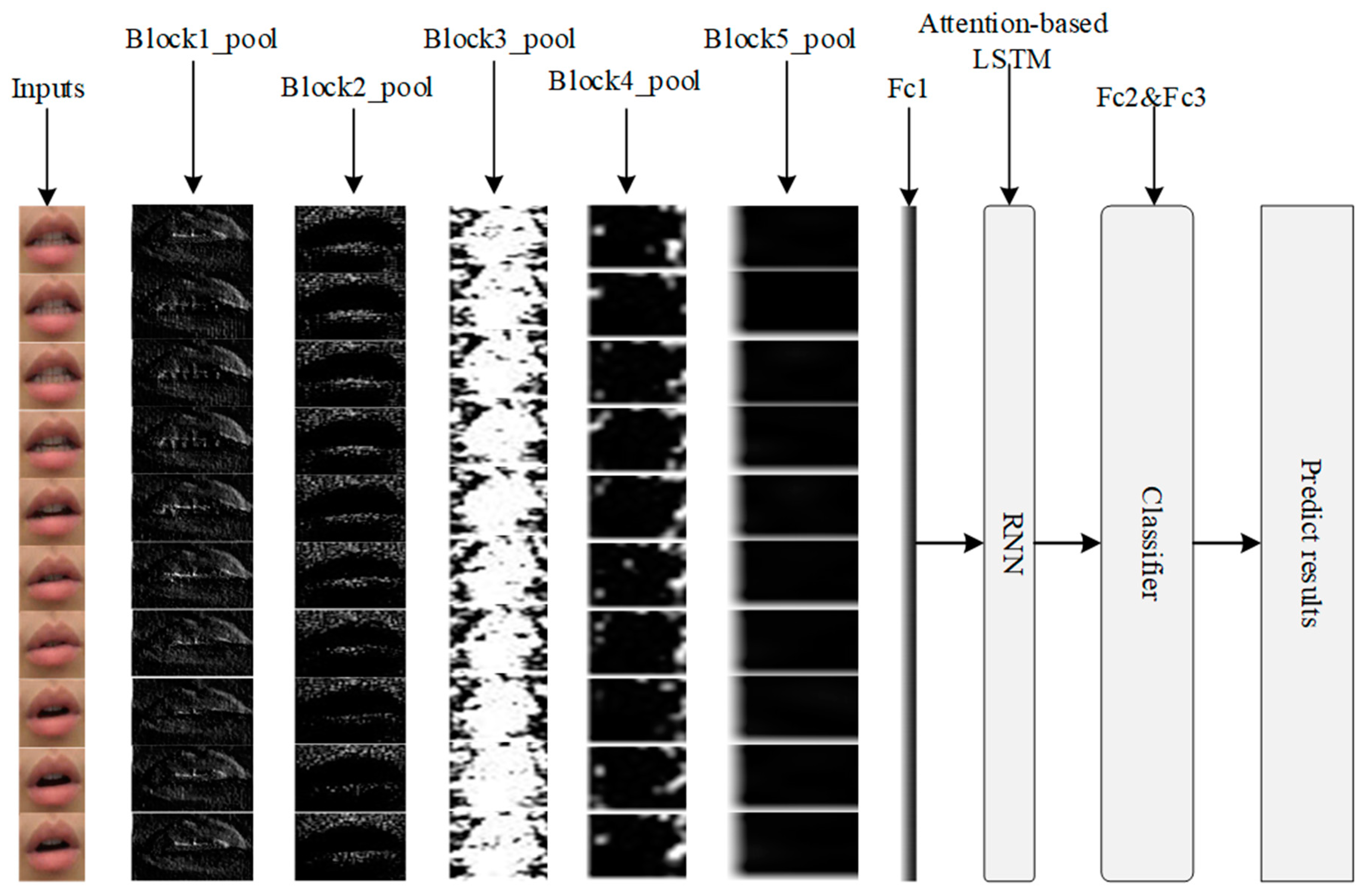

2. Proposed Lip-Reading Model

2.1. Video Preprocessing

- The duration of each utterance is divided into 10 equal intervals, and one frame is selected at random from each interval as a keyframe, so that every word yields an image sequence of the same length.

- We use the OpenCV library to load the images and convert them into three-dimensional matrices [18]. We then apply the facial landmark detector of the Dlib toolkit [19], which takes a face image as input and returns a face structure made up of landmarks for each facial attribute. From these landmarks we locate seven key points of the mouth, labeled 49, 51, 53, 55, 57, 58, and 59.

- We segment the mouth region and discard the redundant information, then compute the center of the mouth from the coordinates of the boundary points. With w and h denoting the width and height of the lip image, the left, right, upper, and lower dividing lines surrounding the mouth are placed relative to this center, and together they form the bounding box of the mouth.

- After the mouth segmentation step, each image in the original dataset is reduced to 224 × 224 pixels, with the lips as the reference region. This method is robust and computationally efficient, and it yields consistent feature vectors. The preprocessing pipeline is shown in Figure 2.
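The keyframe selection in the first step can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `select_keyframes`, the use of integer frame indices, and the handling of utterances whose length is not a multiple of 10 are all assumptions.

```python
import random


def select_keyframes(num_frames, num_segments=10, rng=None):
    """Pick one random frame index from each of `num_segments` equal portions.

    Hypothetical helper: `num_frames` is the number of frames in one utterance.
    """
    if num_frames < num_segments:
        raise ValueError("need at least one frame per segment")
    rng = rng or random.Random()
    indices = []
    for k in range(num_segments):
        # Integer boundaries of the k-th portion, as a half-open range [start, end).
        start = k * num_frames // num_segments
        end = (k + 1) * num_frames // num_segments
        indices.append(rng.randrange(start, end))
    return indices
```

Because exactly one index is drawn per portion, every utterance yields a sequence of 10 frames in temporal order, regardless of its original length.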

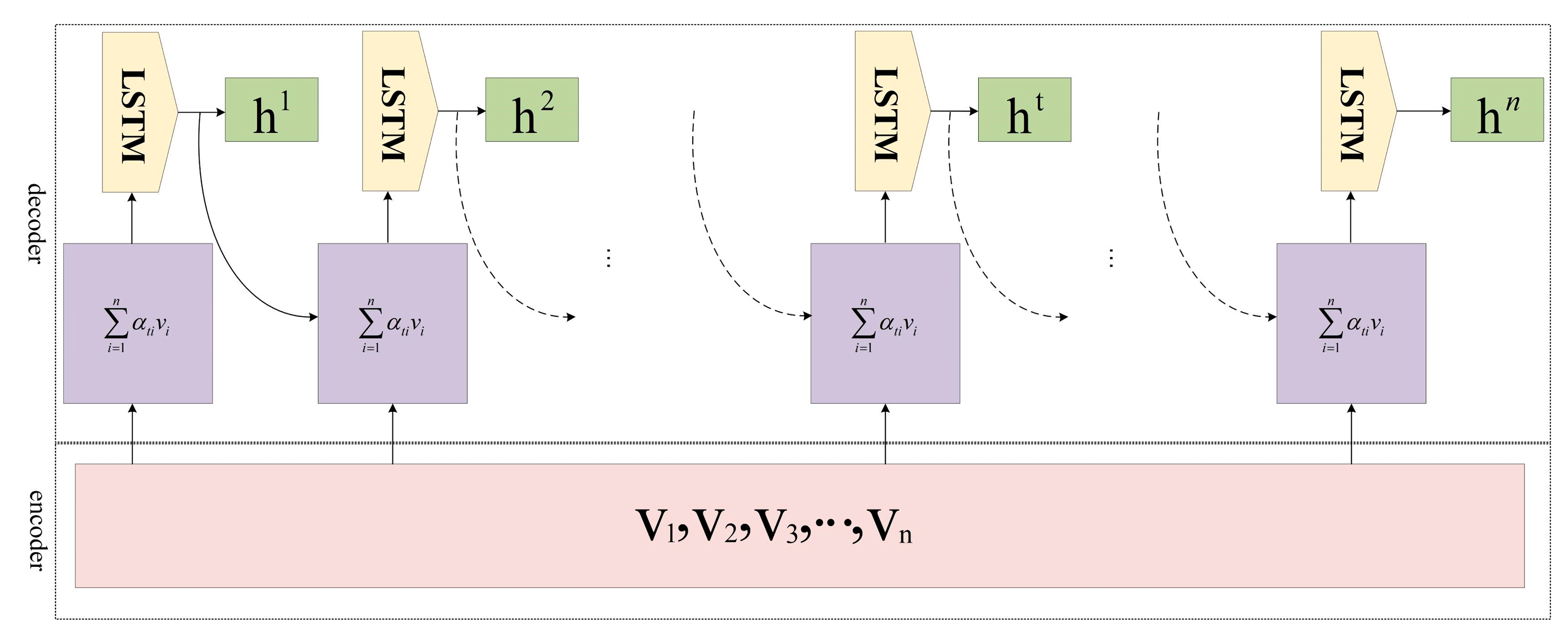
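The mouth segmentation step can be illustrated with a short sketch. The bounding-box formula itself is not recoverable from the text, so the symmetric box used here (center ± w/2 horizontally, center ± h/2 vertically) is an assumption, as are the helper names `mouth_center` and `mouth_box` and the convention that the landmark list is ordered so that index 0 holds label 1.

```python
# 1-based landmark labels quoted in the text.
MOUTH_LABELS = (49, 51, 53, 55, 57, 58, 59)


def mouth_center(landmarks):
    """Center of the mouth from the extreme coordinates of the key points.

    `landmarks` is a sequence of (x, y) pairs with index 0 holding label 1.
    """
    pts = [landmarks[label - 1] for label in MOUTH_LABELS]
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]
    return (min(xs) + max(xs)) / 2.0, (min(ys) + max(ys)) / 2.0


def mouth_box(center, w, h, image_w, image_h):
    """Assumed symmetric box around the center, clamped to the image bounds."""
    cx, cy = center
    left = max(0, int(round(cx - w / 2.0)))
    top = max(0, int(round(cy - h / 2.0)))
    right = min(image_w, int(round(cx + w / 2.0)))
    bottom = min(image_h, int(round(cy + h / 2.0)))
    return left, top, right, bottom
```

With an image loaded as a NumPy array, the crop would then be `image[top:bottom, left:right]`, which could be resized to 224 × 224 pixels, for instance with `cv2.resize`.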

2.2. Attention-Based LSTM

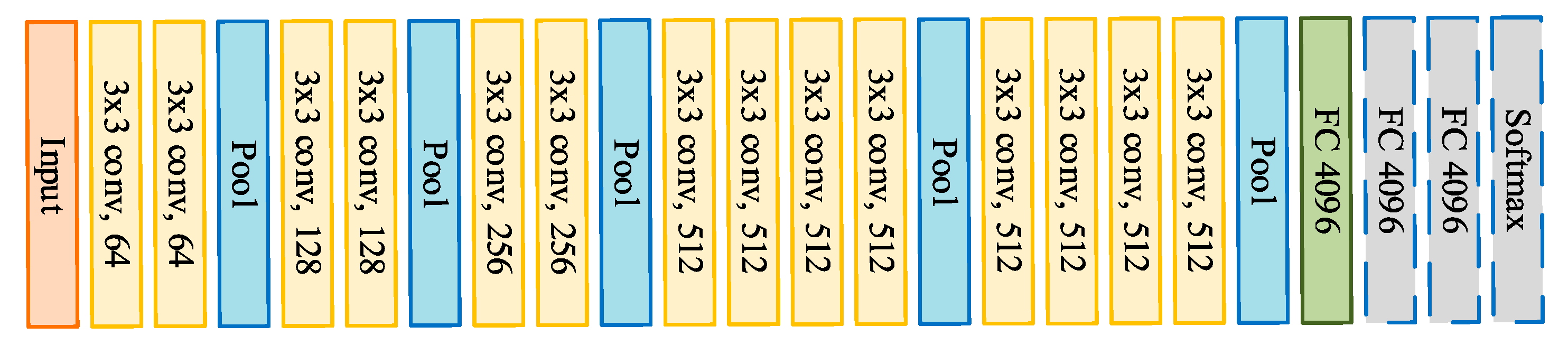

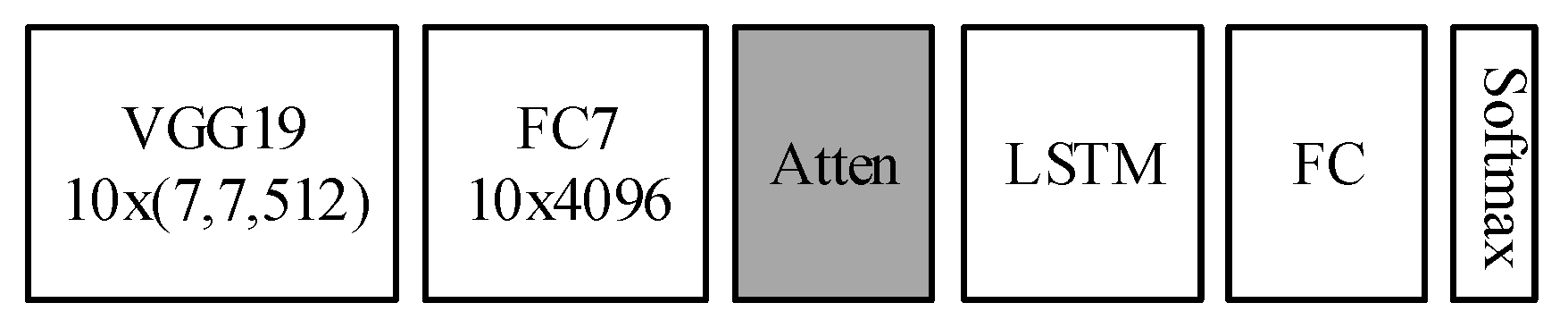

2.3. CNN-LSTM With Attention Networks

3. Experimental Dataset and Results

3.1. Dataset

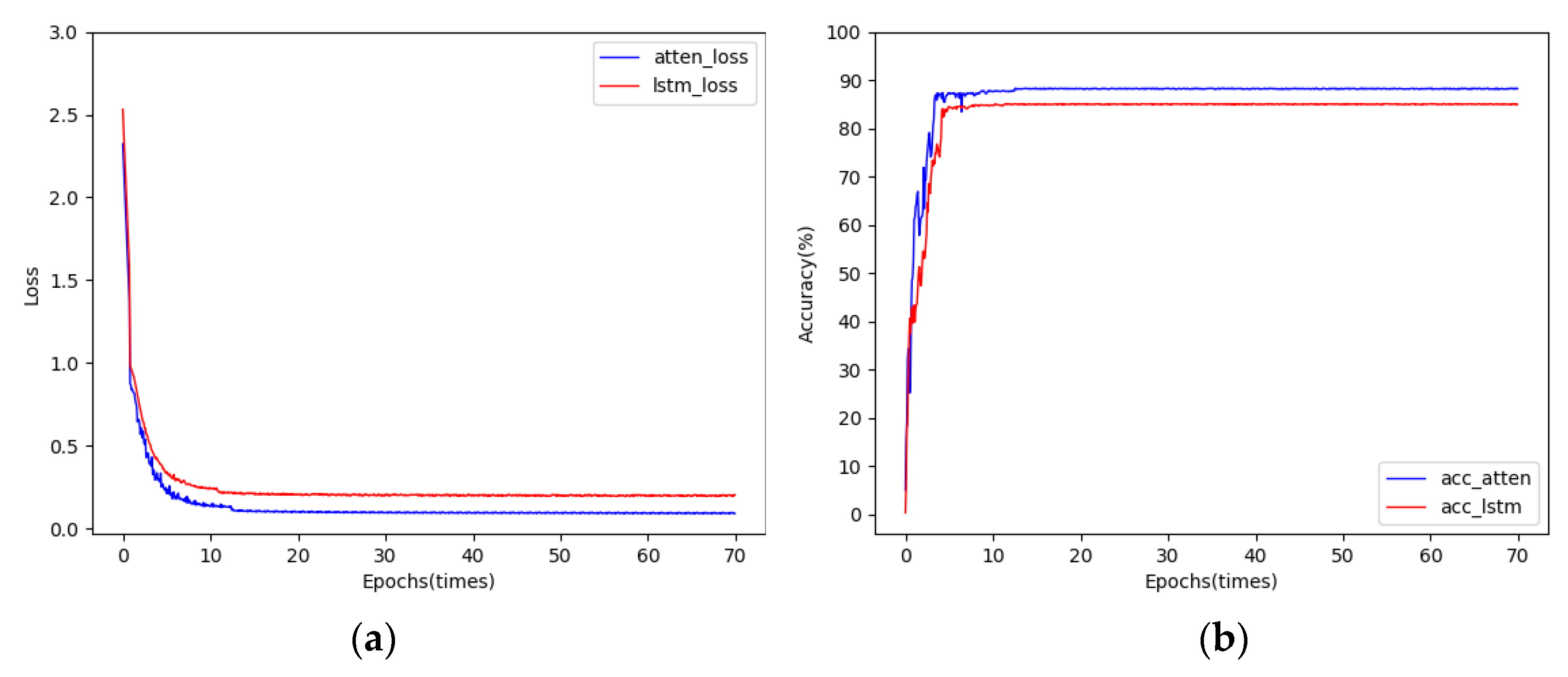

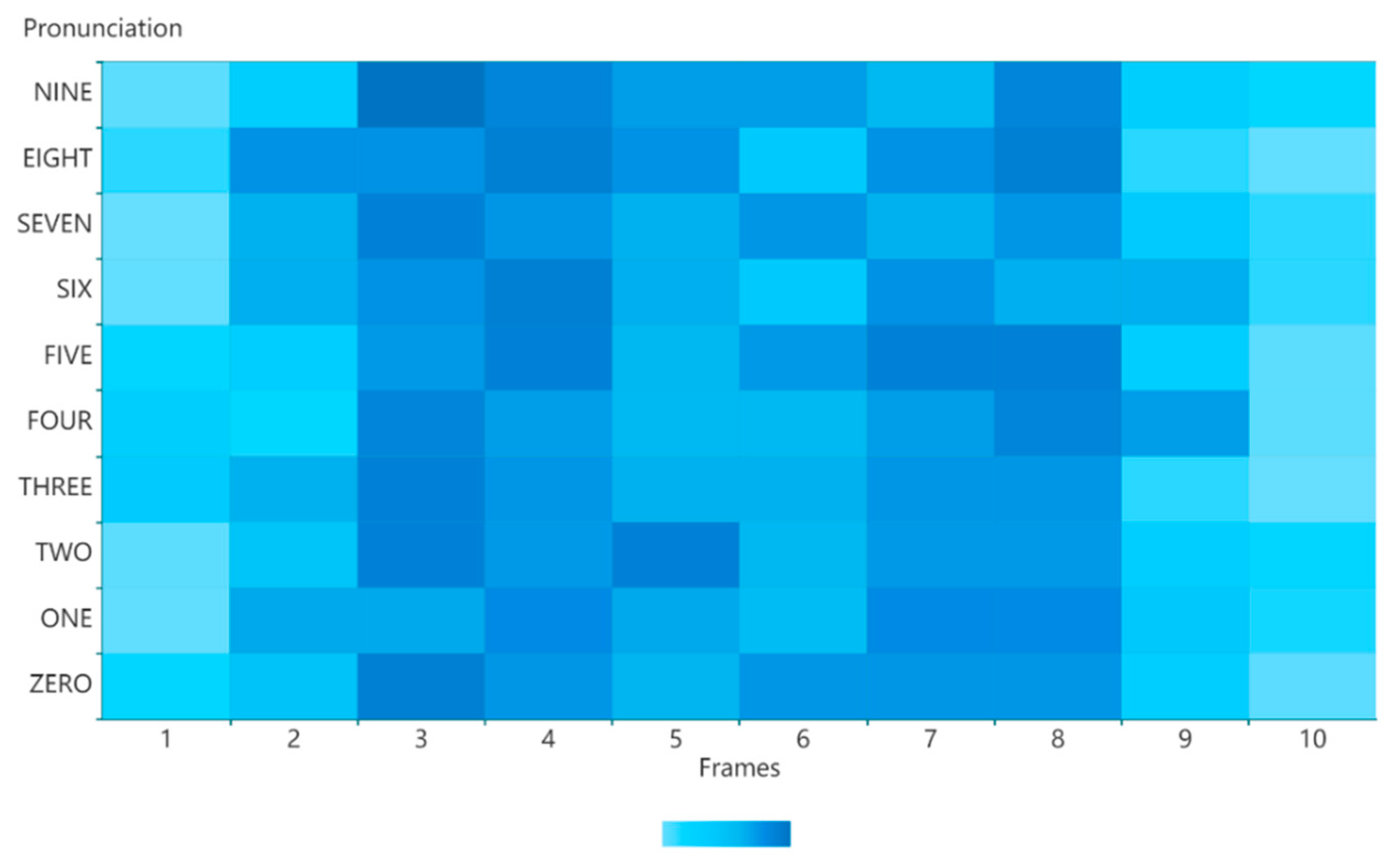

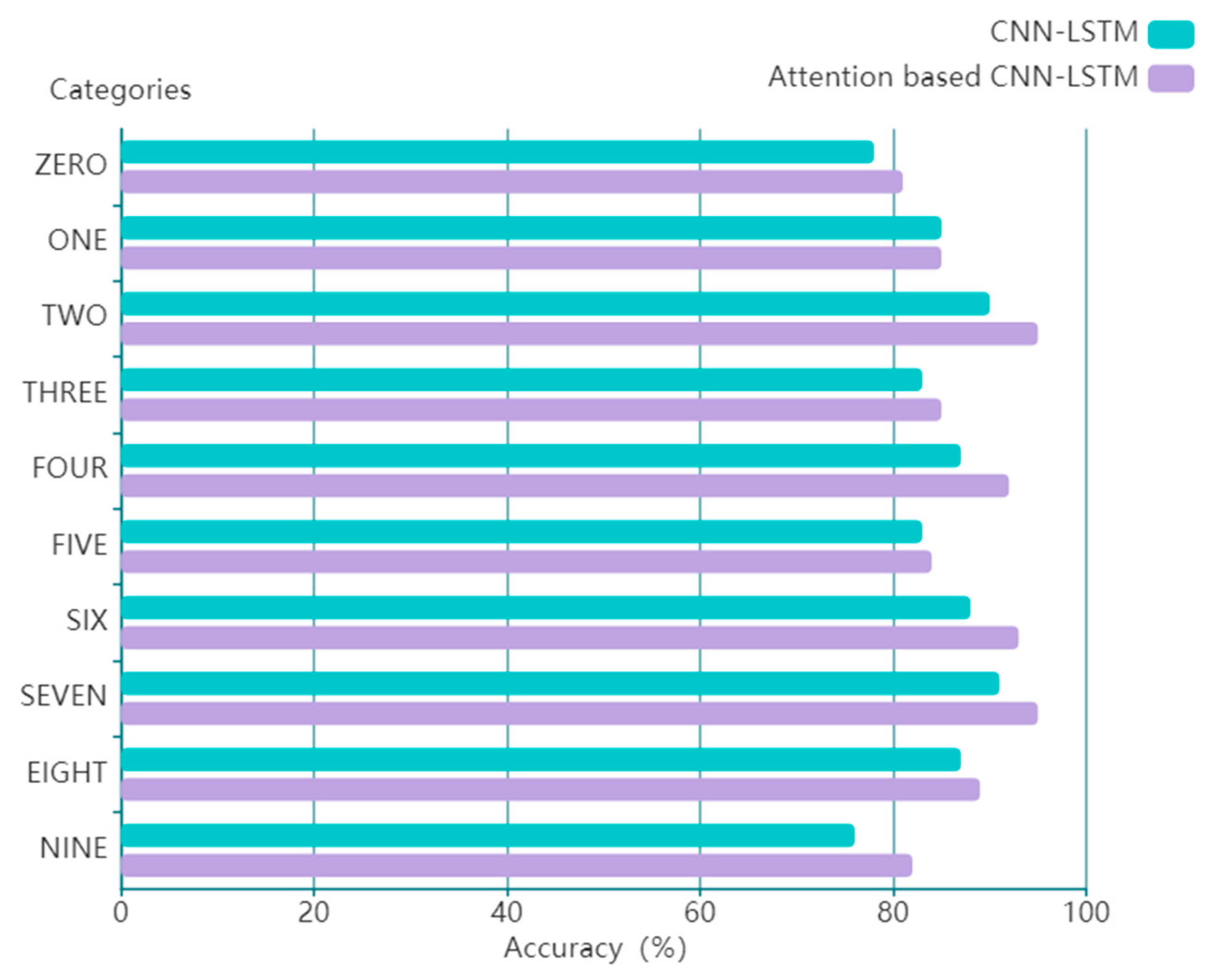

3.2. Results and Discussions

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Jaimes, A.; Sebe, N. Multimodal human–computer interaction: A survey. Comput. Vis. Image Underst. 2007, 108, 116–134.

2. Loomis, J.M.; Blascovich, J.J.; Beall, A.C. Immersive virtual environment technology as a basic research tool in psychology. Behav. Res. Methods Instrum. Comput. 1999, 31, 557–564.

3. Hassanat, A.B. Visual passwords using automatic lip reading. arXiv, 2014; arXiv:1409.0924.

4. Thanda, A.; Venkatesan, S.M. Multi-task learning of deep neural networks for audio visual automatic speech recognition. arXiv, 2017; arXiv:1701.02477.

5. Biswas, A.; Sahu, P.K.; Chandra, M. Multiple cameras audio visual speech recognition using active appearance model visual features in car environment. Int. J. Speech Technol. 2016, 19, 159–171.

6. Scanlon, P.; Reilly, R. Feature analysis for automatic speechreading. In Proceedings of the 2001 IEEE Fourth Workshop on Multimedia Signal Processing (Cat. No. 01TH8564), Cannes, France, 3–5 October 2001; pp. 625–630.

7. Matthews, I.; Potamianos, G.; Neti, C.; Luettin, J. A comparison of model and transform-based visual features for audio-visual LVCSR. In Proceedings of the IEEE International Conference on Multimedia and Expo, ICME 2001, Tokyo, Japan, 22–25 August 2001; pp. 825–828.

8. Aleksic, P.S.; Katsaggelos, A.K. Comparison of low- and high-level visual features for audio-visual continuous automatic speech recognition. In Proceedings of the 2004 IEEE International Conference on Acoustics, Speech, and Signal Processing, Montreal, QC, Canada, 17–21 May 2004; p. V-917.

9. Chitu, A.G.; Driel, K.; Rothkrantz, L.J. Automatic lip reading in the Dutch language using active appearance models on high speed recordings. In Proceedings of the International Conference on Text, Speech and Dialogue, Brno, Czech Republic, 6–10 September 2010; pp. 259–266.

10. Luettin, J.; Thacker, N.A.; Beet, S.W. Visual speech recognition using active shape models and hidden Markov models. In Proceedings of the 1996 IEEE International Conference on Acoustics, Speech, and Signal Processing Conference Proceedings, Atlanta, GA, USA, 9 May 1996; pp. 817–820.

11. Shaikh, A.A.; Kumar, D.K.; Yau, W.C.; Azemin, M.C.; Gubbi, J. Lip reading using optical flow and support vector machines. In Proceedings of the 2010 3rd International Congress on Image and Signal Processing, Yantai, China, 16–18 October 2010; pp. 327–330.

12. Puviarasan, N.; Palanivel, S. Lip reading of hearing impaired persons using HMM. Expert Syst. Appl. 2011, 38, 4477–4481.

13. Vinyals, O.; Toshev, A.; Bengio, S.; Erhan, D. Show and tell: A neural image caption generator. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3156–3164.

14. Gers, F.A.; Schmidhuber, J.; Cummins, F. Learning to forget: Continual prediction with LSTM. In Proceedings of the 9th International Conference on Artificial Neural Networks: ICANN’99, Edinburgh, UK, 7–10 September 1999.

15. Wang, Y.; Huang, M.; Zhao, L. Attention-based LSTM for aspect-level sentiment classification. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1–5 November 2016; pp. 606–615.

16. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv, 2014; arXiv:1409.1556.

17. Matthews, I.; Cootes, T.F.; Bangham, J.A.; Cox, S.; Harvey, R. Extraction of visual features for lipreading. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 198–213.

18. Fan, X.; Zhang, F.; Wang, H.; Lu, X. The system of face detection based on OpenCV. In Proceedings of the 2012 24th Chinese Control and Decision Conference (CCDC), Taiyuan, China, 23–25 May 2012; pp. 648–651.

19. King, D.E. Dlib-ml: A machine learning toolkit. J. Mach. Learn. Res. 2009, 10, 1755–1758.

20. Martins, A.; Astudillo, R. From softmax to sparsemax: A sparse model of attention and multi-label classification. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 1614–1623.

21. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

22. Graves, A. Long short-term memory. In Supervised Sequence Labelling with Recurrent Neural Networks; Springer: Berlin/Heidelberg, Germany, 2012; pp. 37–45.

23. Cho, K.; Courville, A.; Bengio, Y. Describing multimedia content using attention-based encoder-decoder networks. IEEE Trans. Multimed. 2015, 17, 1875–1886.

24. Zhang, Y.; Pezeshki, M.; Brakel, P.; Zhang, S.; Laurent, C.; Bengio, Y.; Courville, A. Towards end-to-end speech recognition with deep convolutional neural networks. arXiv, 2017; arXiv:1701.02720.

25. Graves, A. Generating sequences with recurrent neural networks. arXiv, 2013; arXiv:1308.0850.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lu, Y.; Li, H. Automatic Lip-Reading System Based on Deep Convolutional Neural Network and Attention-Based Long Short-Term Memory. Appl. Sci. 2019, 9, 1599. https://doi.org/10.3390/app9081599
