Appendix A

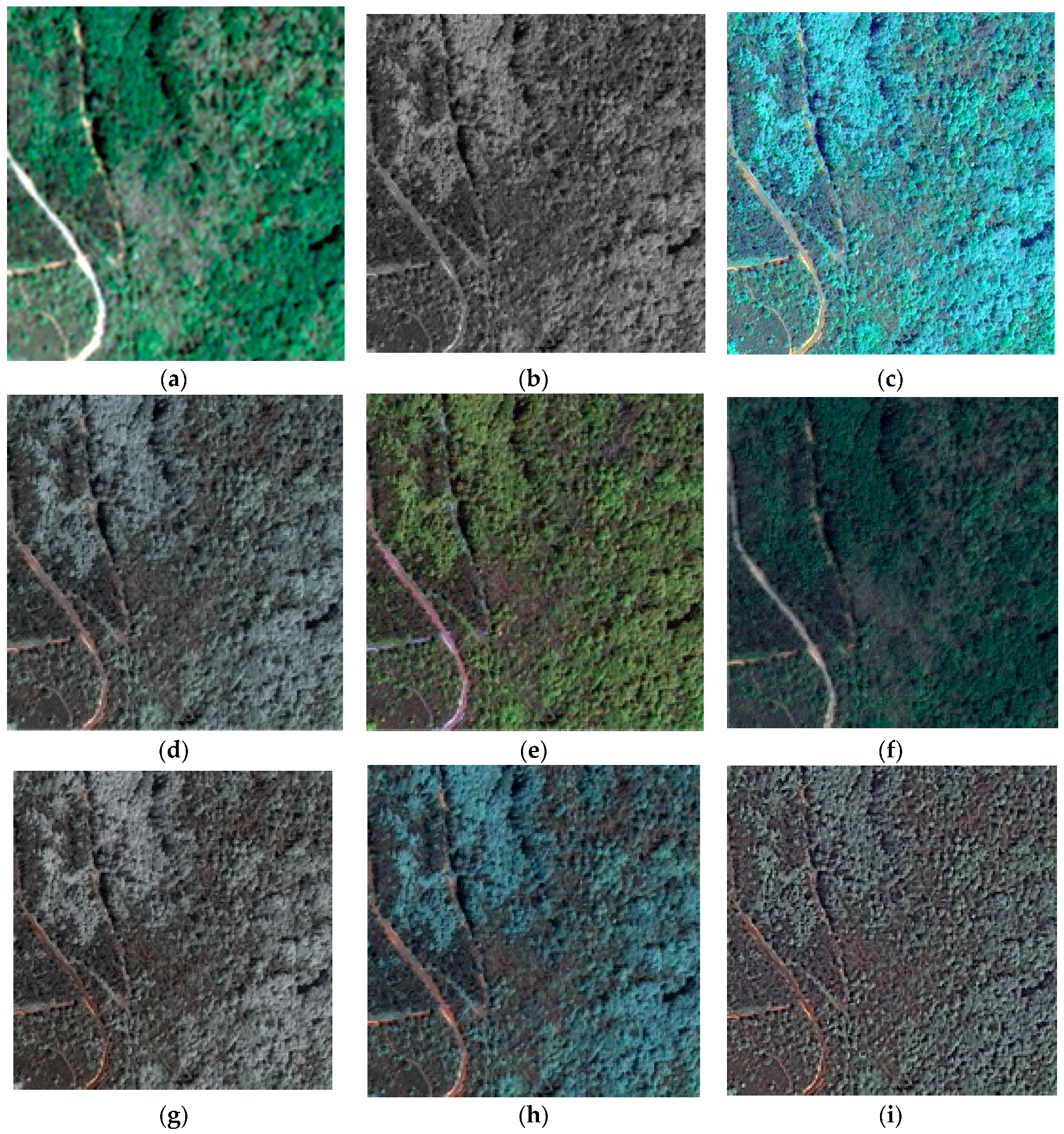

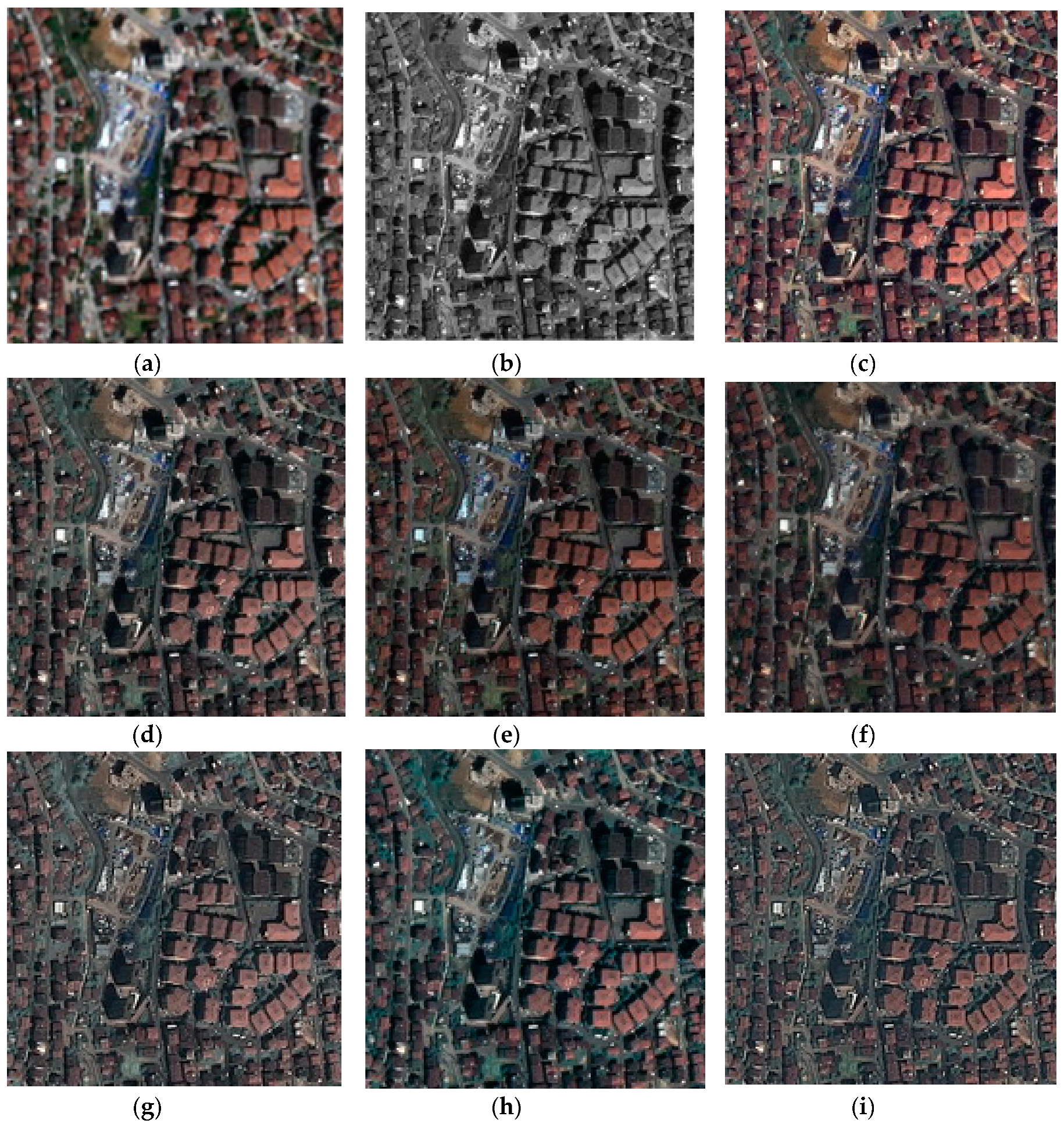

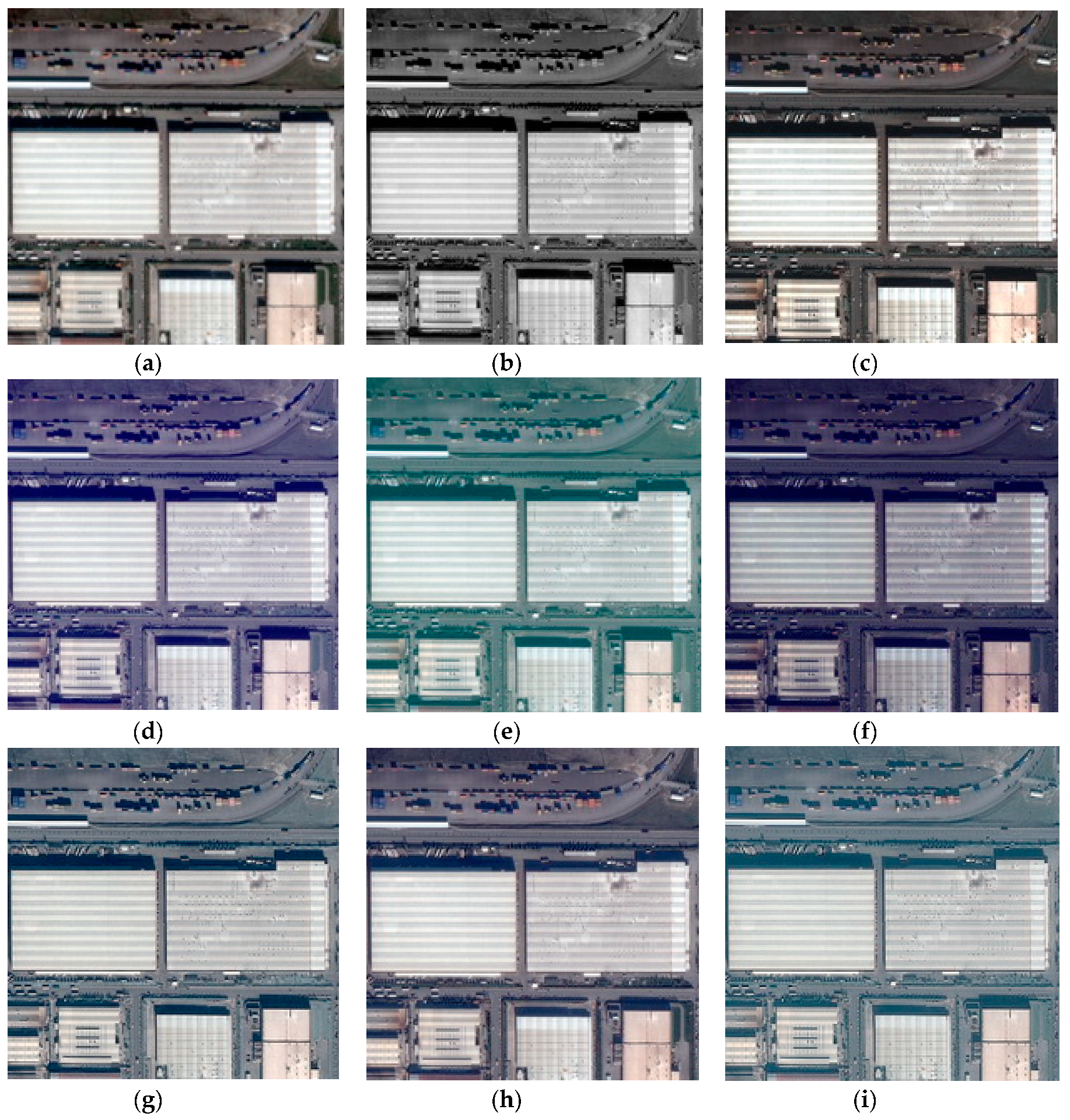

Figure A1.

Results of the proposed Pan-sharpening method compared with the other methods for the F1 area (zoomed), shown in a true color (RGB) composite. (a) MS; (b) Pan; (c) Lab; (d) GIHS; (e) GS; (f) HCS; (g) IHS; (h) NNDiffuse; (i) Ehlers.

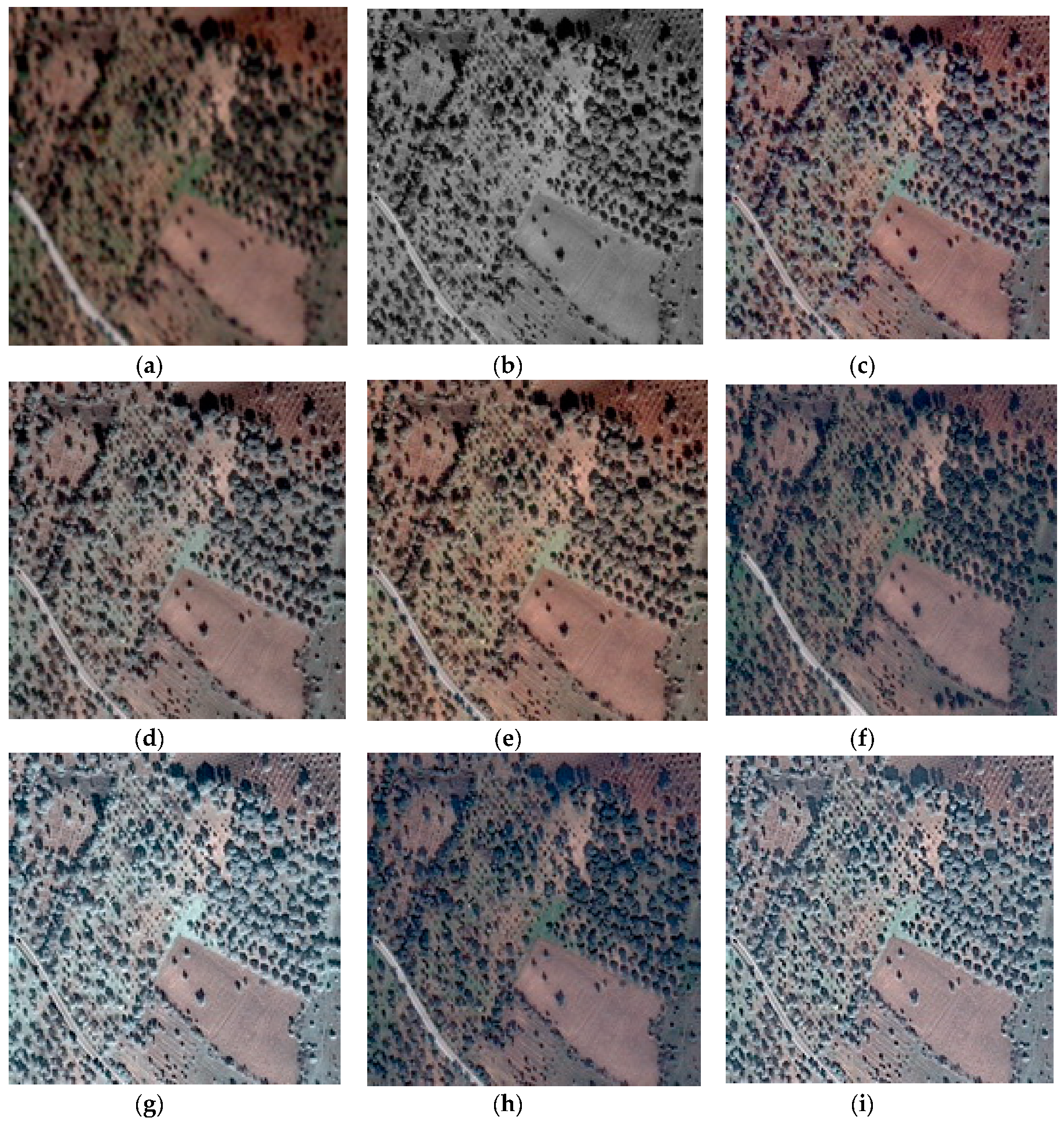

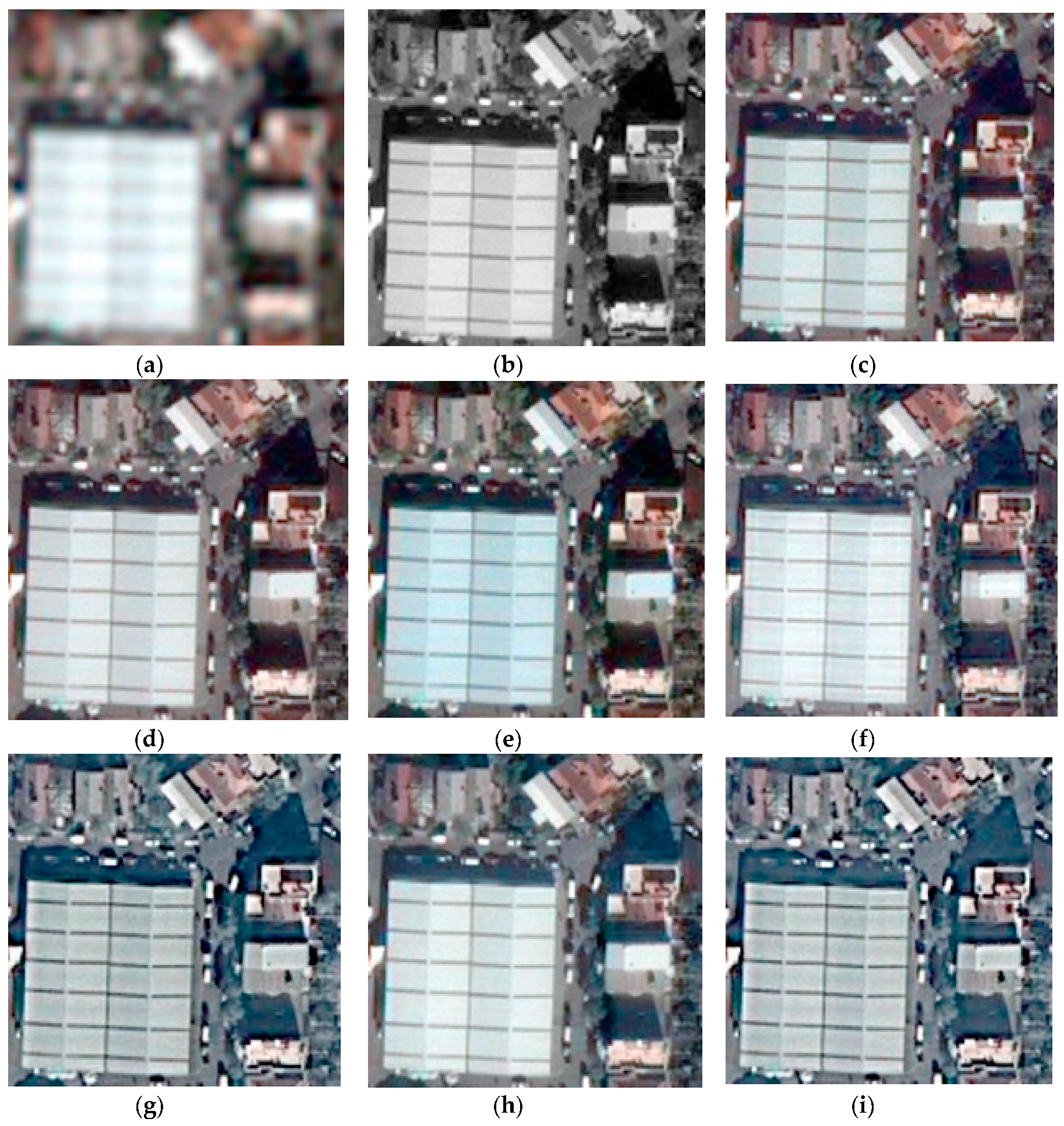

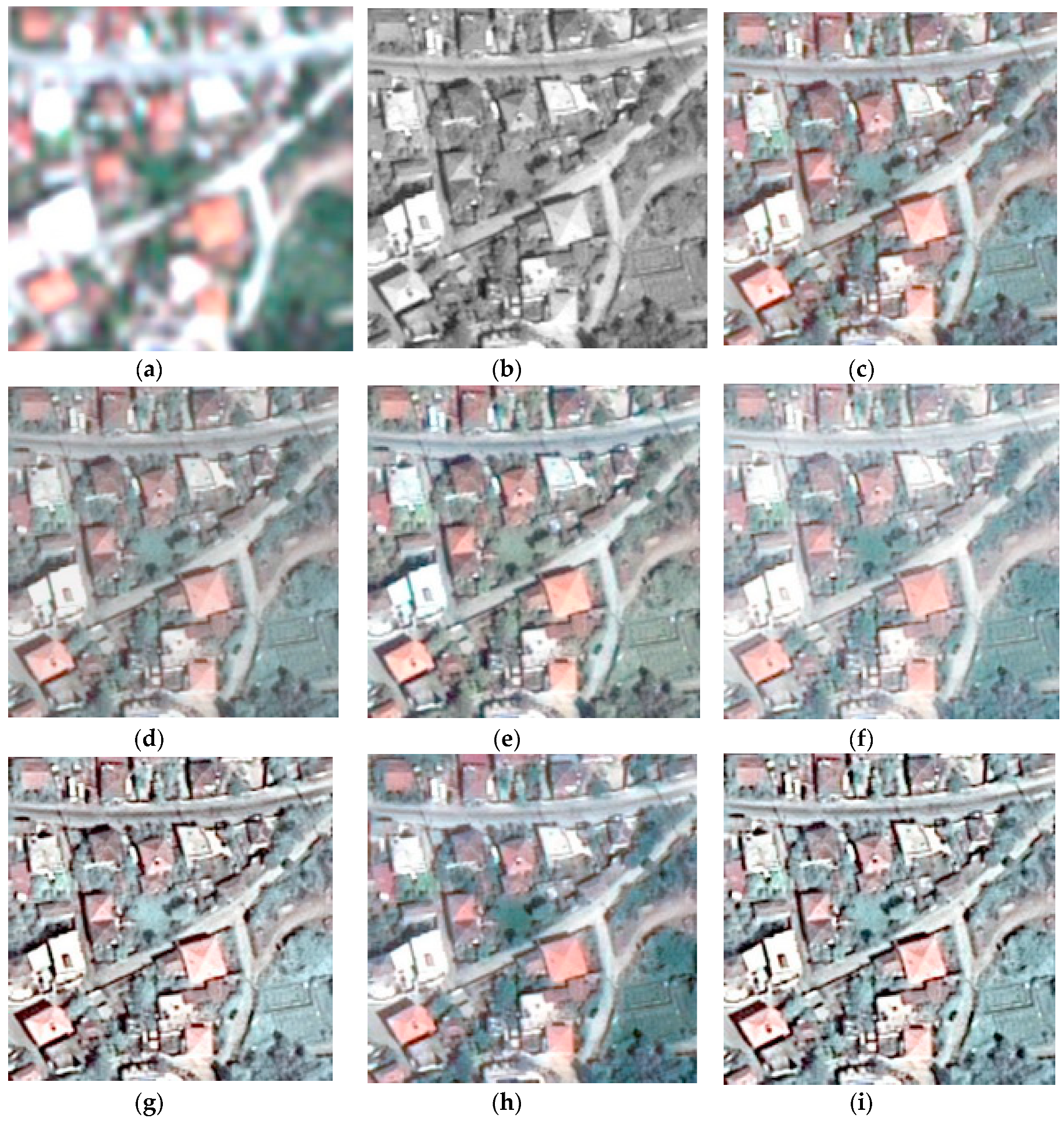

Figure A2.

Results of the proposed Pan-sharpening method compared with the other methods for the F5 area (zoomed), shown in a true color (RGB) composite. (a) MS; (b) Pan; (c) Lab; (d) GIHS; (e) GS; (f) HCS; (g) IHS; (h) NNDiffuse; (i) Ehlers.

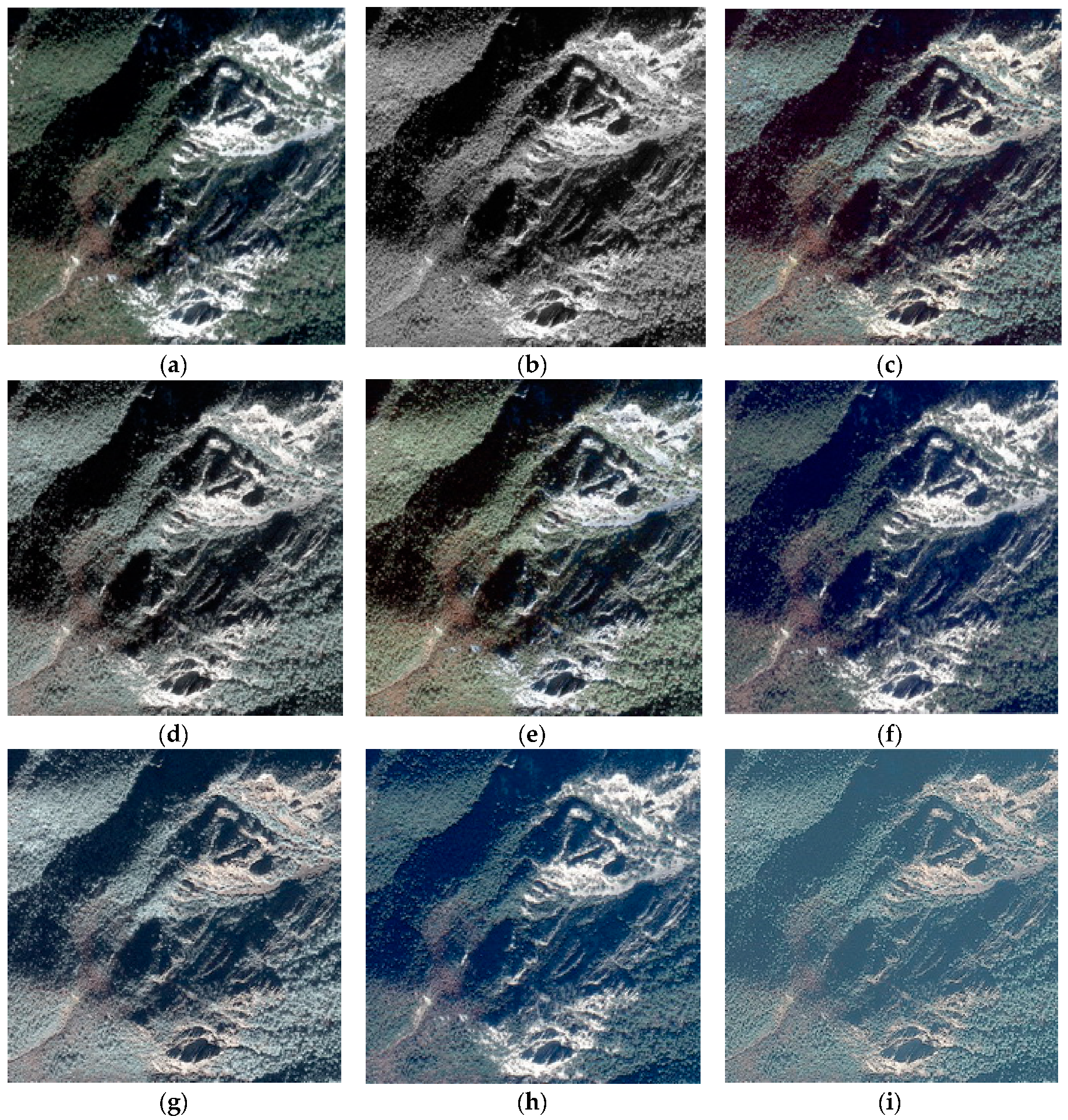

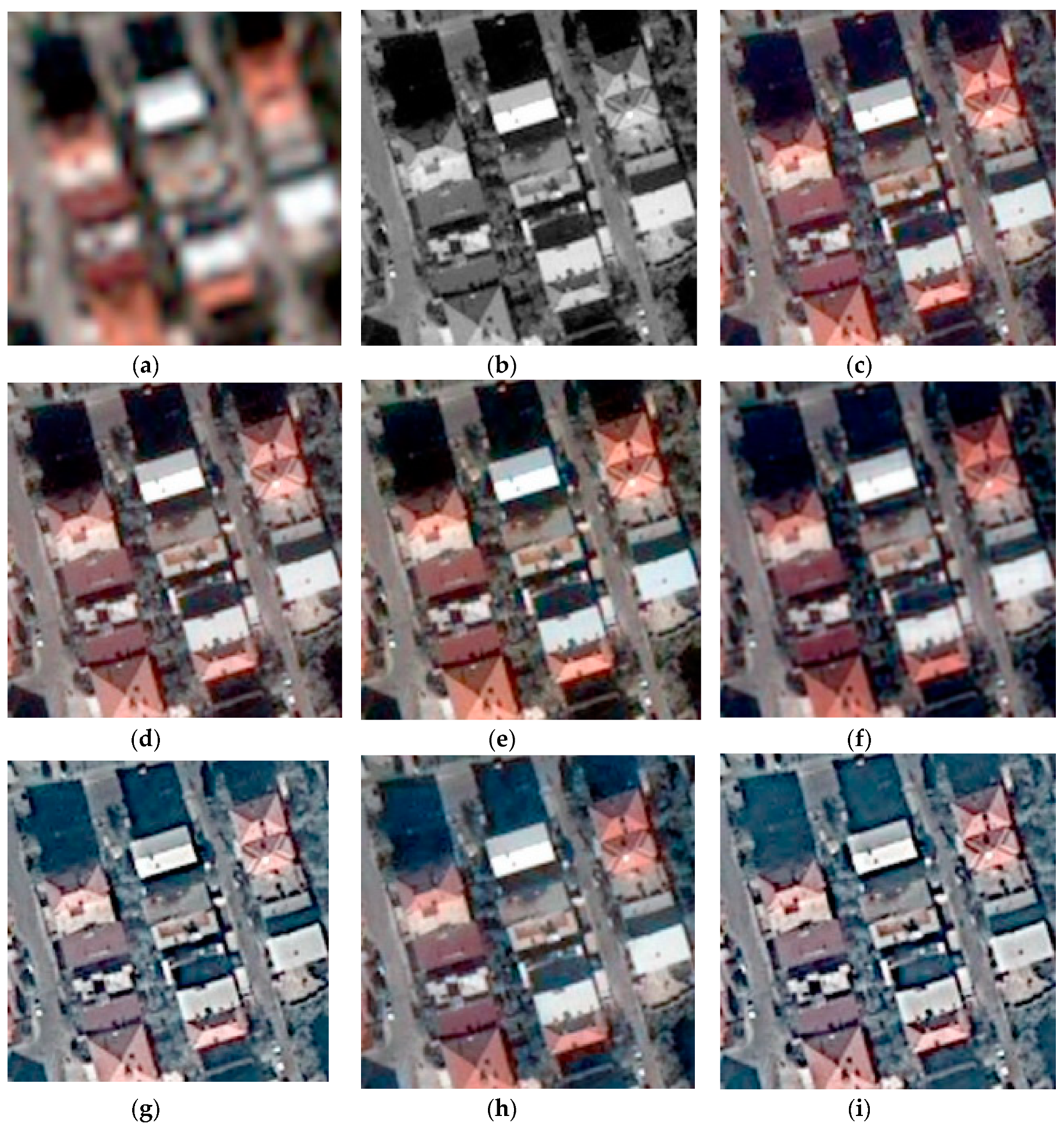

Figure A3.

Results of the proposed Pan-sharpening method compared with the other methods for the F6 area (zoomed), shown in a true color (RGB) composite. (a) MS; (b) Pan; (c) Lab; (d) GIHS; (e) GS; (f) HCS; (g) IHS; (h) NNDiffuse; (i) Ehlers.

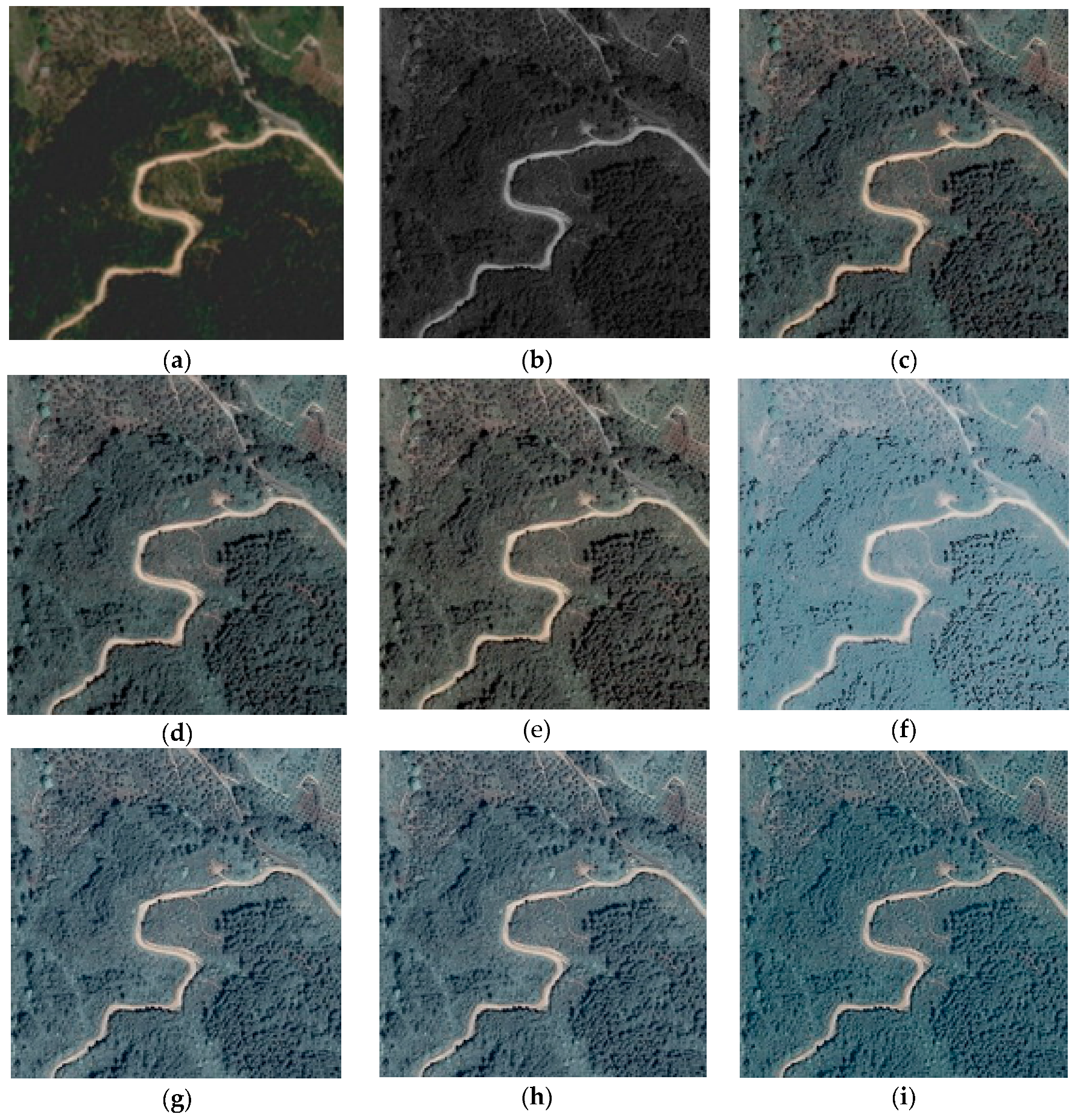

Figure A4.

Results of the proposed Pan-sharpening method compared with the other methods for the F10 area (zoomed), shown in a true color (RGB) composite. (a) MS; (b) Pan; (c) Lab; (d) GIHS; (e) GS; (f) HCS; (g) IHS; (h) NNDiffuse; (i) Ehlers.

Table A1.

Numeric results of spectral quality metrics of the Pan-sharpened images produced by selected algorithms for rural test sites (blue: highest accuracy; red: lowest accuracy).

| Site | Method | ERGAS | QAVG | RASE | RMSE | SAM | PSNR | SSIM |
|---|---|---|---|---|---|---|---|---|
| F1 | Ehlers | 8.35973 | 0.70345 | 31.03779 | 0.00169 | 3.64069 | 55.45895 | 0.99423 |
| | GS | 3.53280 | 0.72884 | 12.85721 | 0.00070 | 2.17343 | 63.81712 | 0.99854 |
| | GIHS | 9.70735 | 0.68332 | 54.94083 | 0.00299 | 3.71061 | 50.49886 | 0.96170 |
| | HCS | 3.30104 | 0.72804 | 13.12935 | 0.00071 | 3.07602 | 62.93191 | 0.99913 |
| | IHS | 10.74394 | 0.67747 | 60.82506 | 0.00331 | 3.74898 | 49.61512 | 0.95743 |
| | CIELab | 2.07704 | 0.83561 | 11.24036 | 0.00061 | 1.33832 | 69.28116 | 1 |
| | NNDiffuse | 6.01008 | 0.68762 | 29.32491 | 0.00159 | 1.68020 | 55.95203 | 0.99065 |
| F5 | Ehlers | 7.62863 | 0.67932 | 30.11675 | 0.00136 | 2.76553 | 57.32630 | 0.99653 |
| | GS | 3.43803 | 0.70832 | 15.06442 | 0.00069 | 1.96874 | 64.58066 | 0.99909 |
| | GIHS | 4.68240 | 0.70403 | 20.21354 | 0.00091 | 1.71451 | 60.78961 | 0.99738 |
| | HCS | 6.04601 | 0.67023 | 24.66611 | 0.00111 | 1.64450 | 59.06045 | 0.98721 |
| | IHS | 7.58878 | 0.66419 | 32.80574 | 0.00148 | 2.31403 | 56.58346 | 0.98436 |
| | CIELab | 3.1501 | 0.81033 | 12.37956 | 0.00056 | 1.25643 | 75.04835 | 1 |
| | NNDiffuse | 6.05444 | 0.69039 | 25.02772 | 0.00117 | 1.64355 | 59.53192 | 0.99658 |
| F6 | Ehlers | 12.35511 | 0.68485 | 44.42476 | 0.00133 | 5.60838 | 57.50393 | 0.99650 |
| | GS | 4.95180 | 0.73240 | 18.30183 | 0.00059 | 2.46646 | 66.21170 | 0.99918 |
| | GIHS | 8.96652 | 0.71253 | 36.05239 | 0.00108 | 3.84187 | 59.31775 | 0.99551 |
| | HCS | 5.56829 | 0.71320 | 22.52386 | 0.00068 | 3.70496 | 63.40357 | 0.98920 |
| | IHS | 12.47236 | 0.68690 | 50.19509 | 0.00151 | 5.41973 | 56.44320 | 0.98278 |
| | CIELab | 4.53026 | 0.83580 | 16.05962 | 0.00048 | 1.86814 | 66.34172 | 1 |
| | NNDiffuse | 7.74954 | 0.69166 | 32.23618 | 0.00097 | 2.70472 | 60.28956 | 0.99680 |
| F10 | Ehlers | 9.22107 | 0.61404 | 36.48889 | 0.00316 | 4.44999 | 49.99698 | 0.98567 |
| | GS | 4.78197 | 0.63099 | 18.07030 | 0.00157 | 2.86307 | 56.10088 | 0.99517 |
| | GIHS | 6.60284 | 0.62945 | 32.99550 | 0.00286 | 2.41971 | 50.87109 | 0.97682 |
| | HCS | 9.96965 | 0.57631 | 40.17887 | 0.00348 | 2.10395 | 49.16024 | 0.98781 |
| | IHS | 9.49649 | 0.60973 | 47.55454 | 0.00412 | 3.41879 | 47.69635 | 0.96361 |
| | CIELab | 4.3611 | 0.6478 | 17.14994 | 0.00149 | 1.77264 | 57.55494 | 1 |
| | NNDiffuse | 4.46541 | 0.63385 | 17.77395 | 0.00154 | 1.50089 | 56.24451 | 0.99540 |

Table A2.

Numeric results of spatial quality metrics of the Pan-sharpened images produced by select algorithms for the rural test sites (blue: highest accuracy; red: lowest accuracy).

| Site | Method | CC | Zhou’s SP | SRMSE | Sp-ERGAS |
|---|---|---|---|---|---|
| F1 | Ehlers | 0.876695 | 0.939608 | 0.006527 | 26.540923 |
| | GS | 0.970317 | 0.973490 | 0.002298 | 25.222656 |
| | GIHS | 0.978149 | 0.979959 | 0.005213 | 25.699874 |
| | HCS | 0.939252 | 0.963691 | 0.00293 | 25.531640 |
| | IHS | 0.869487 | 0.929827 | 0.006592 | 26.040642 |
| | CIELab | 0.982436 | 0.998617 | 5.19E-08 | 17.345011 |
| | NNDiffuse | 0.944022 | 0.967511 | 0.003237 | 24.580730 |
| F5 | Ehlers | 0.926486 | 0.92948 | 0.006241 | 26.658944 |
| | GS | 0.989994 | 0.98932 | 0.003323 | 25.503829 |
| | GIHS | 0.990875 | 0.989946 | 0.004153 | 25.881689 |
| | HCS | 0.869709 | 0.914225 | 0.00467 | 26.457399 |
| | IHS | 0.927104 | 0.989560 | 0.006261 | 26.460988 |
| | CIELab | 0.996861 | 0.994414 | 2.22E-08 | 4.9495726 |
| | NNDiffuse | 0.971652 | 0.989410 | 0.004303 | 25.740781 |
| F6 | Ehlers | 0.891415 | 0.929872 | 0.006123 | 29.084361 |
| | GS | 0.989992 | 0.989450 | 0.002473 | 26.047215 |
| | GIHS | 0.989997 | 0.989974 | 0.004425 | 28.173902 |
| | HCS | 0.912241 | 0.972654 | 0.002903 | 26.946861 |
| | IHS | 0.95928 | 0.969886 | 0.006141 | 29.301514 |
| | CIELab | 0.997273 | 0.996194 | 3.40E-08 | 11.968807 |
| | NNDiffuse | 0.969999 | 0.979946 | 0.003062 | 27.901263 |
| F10 | Ehlers | 0.923442 | 0.919671 | 0.014214 | 28.001920 |
| | GS | 0.989619 | 0.989061 | 0.008828 | 26.488351 |
| | GIHS | 0.971429 | 0.969959 | 0.008738 | 26.263696 |
| | HCS | 0.799403 | 0.694767 | 0.012855 | 28.756548 |
| | IHS | 0.914264 | 0.929730 | 0.014267 | 27.019798 |
| | CIELab | 0.997258 | 0.996956 | 2.69E-08 | 8.0781533 |
| | NNDiffuse | 0.929999 | 0.929405 | 0.0067663 | 26.362095 |

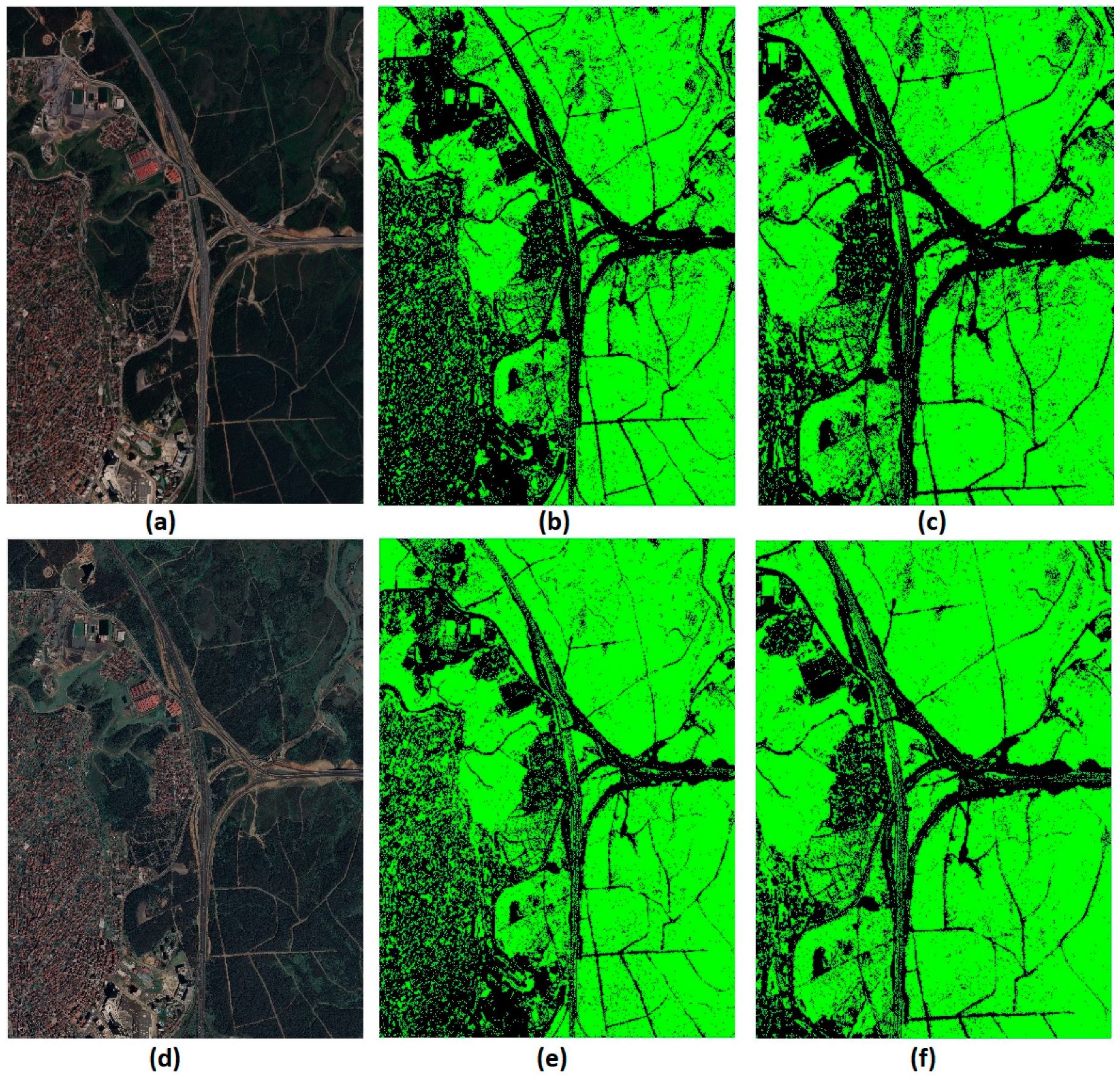

Figure A5.

Results of the proposed Pan-sharpening method compared with the other methods for zoomed areas of the F2 test frame, shown in a true color (RGB) composite. (a) MS; (b) Pan; (c) Lab; (d) GIHS; (e) GS; (f) HCS; (g) IHS; (h) NNDiffuse; (i) Ehlers.

Figure A6.

Results of the proposed Pan-sharpening method compared with the other methods for zoomed areas of the F4 test frame, shown in a true color (RGB) composite. (a) MS; (b) Pan; (c) Lab; (d) GIHS; (e) GS; (f) HCS; (g) IHS; (h) NNDiffuse; (i) Ehlers.

Figure A7.

Results of the proposed Pan-sharpening method compared with the other methods for zoomed areas of the F9 test frame, shown in a true color (RGB) composite. (a) MS; (b) Pan; (c) Lab; (d) GIHS; (e) GS; (f) HCS; (g) IHS; (h) NNDiffuse; (i) Ehlers.

Table A3.

Numeric results of spectral quality metrics of the Pan-sharpened images produced by select algorithms for urban test sites (blue: highest accuracy; red: lowest accuracy).

| Site | Method | ERGAS | QAVG | RASE | RMSE | SAM | PSNR | SSIM |
|---|---|---|---|---|---|---|---|---|
| F2 | Ehlers | 11.86921 | 0.53991 | 47.33854 | 0.00401 | 4.71053 | 47.94083 | 0.97725 |
| | GS | 5.23770 | 0.58263 | 22.61594 | 0.00195 | 2.64855 | 55.16107 | 0.99458 |
| | GIHS | 7.34063 | 0.57639 | 33.87054 | 0.00287 | 2.46540 | 50.84869 | 0.98449 |
| | HCS | 15.71881 | 0.48541 | 62.43752 | 0.00529 | 2.73988 | 45.53622 | 0.97461 |
| | IHS | 11.04969 | 0.54971 | 51.03739 | 0.00432 | 3.46087 | 47.28736 | 0.95942 |
| | CIELab | 4.22351 | 0.68629 | 20.75741 | 0.00176 | 1.36201 | 65.10167 | 1 |
| | NNDiffuse | 9.43220 | 0.56867 | 49.55085 | 0.00420 | 3.40818 | 47.54411 | 0.96330 |
| F4 | Ehlers | 22.84143 | 0.52356 | 89.68352 | 0.00405 | 7.95294 | 47.84821 | 0.95598 |
| | GS | 12.57253 | 0.42386 | 65.56001 | 0.00296 | 6.13381 | 50.56968 | 0.96821 |
| | GIHS | 15.81385 | 0.63109 | 90.10525 | 0.00407 | 3.15646 | 47.80746 | 0.94837 |
| | HCS | 23.98628 | 0.28239 | 121.71057 | 0.00550 | 8.28181 | 45.19589 | 0.93994 |
| | IHS | 19.50133 | 0.38961 | 111.13086 | 0.00502 | 8.99373 | 45.98577 | 0.92838 |
| | CIELab | 6.86913 | 0.70144 | 26.93926 | 0.00122 | 1.89738 | 58.29474 | 1 |
| | NNDiffuse | 19.72400 | 0.63077 | 98.22609 | 0.00441 | 6.13952 | 50.53176 | 0.97519 |
| F9 | Ehlers | 9.89595 | 0.51189 | 39.61836 | 0.00495 | 4.27519 | 46.10242 | 0.97015 |
| | GS | 4.96528 | 0.55533 | 18.29249 | 0.00256 | 2.20216 | 51.30320 | 0.99273 |
| | GIHS | 5.40401 | 0.53562 | 24.49466 | 0.00306 | 1.92496 | 50.27892 | 0.98486 |
| | HCS | 10.02627 | 0.47659 | 39.67119 | 0.00496 | 2.02550 | 46.09085 | 0.93648 |
| | IHS | 9.55051 | 0.51220 | 43.30832 | 0.00541 | 3.25101 | 45.32893 | 0.92951 |
| | CIELab | 4.2936 | 0.6402 | 17.16686 | 0.00215 | 1.12493 | 54.36654 | 1 |
| | NNDiffuse | 8.25412 | 0.58294 | 18.91789 | 0.01112 | 1.80691 | 39.08057 | 0.94984 |

Table A4.

Numeric results of spatial quality metrics of the Pan-sharpened images produced by select algorithms for urban test sites (blue: highest accuracy; red: lowest accuracy).

| Site | Method | CC | Zhou’s SP | SRMSE | Sp-ERGAS |
|---|---|---|---|---|---|
| F2 | Ehlers | 0.923124 | 0.939587 | 0.027718 | 28.484374 |
| | GS | 0.985504 | 0.968697 | 0.008643 | 25.816466 |
| | GIHS | 0.986114 | 0.989954 | 0.012513 | 26.611553 |
| | HCS | 0.937058 | 0.949630 | 0.019802 | 21.018918 |
| | IHS | 0.913914 | 0.929653 | 0.027772 | 27.734556 |
| | CIELab | 0.996129 | 0.995455 | 7.17E-08 | 6.2709053 |
| | NNDiffuse | 0.960443 | 0.961942 | 0.012853 | 22.490210 |
| F4 | Ehlers | 0.820014 | 0.939655 | 0.017949 | 31.953533 |
| | GS | 0.989999 | 0.989842 | 0.009369 | 26.949381 |
| | GIHS | 0.992001 | 0.989953 | 0.012168 | 28.452035 |
| | HCS | 0.775576 | 0.688826 | 0.018171 | 33.941348 |
| | IHS | 0.919941 | 0.989666 | 0.018000 | 31.259808 |
| | CIELab | 0.997280 | 0.996187 | 5.44E-08 | 5.8158174 |
| | NNDiffuse | 0.914590 | 0.888001 | 0.012253 | 27.737860 |
| F9 | Ehlers | 0.924923 | 0.949622 | 0.024424 | 28.179705 |
| | GS | 0.989237 | 0.982593 | 0.014115 | 26.132656 |
| | GIHS | 0.978923 | 0.989960 | 0.015724 | 26.434713 |
| | HCS | 0.860205 | 0.794738 | 0.023803 | 28.880067 |
| | IHS | 0.912546 | 0.969662 | 0.024479 | 27.533331 |
| | CIELab | 0.99848 | 0.998002 | 5.26E-08 | 4.4340045 |
| | NNDiffuse | 0.929067 | 0.958606 | 0.021917 | 25.926934 |

Figure A8.

Results of the proposed Pan-sharpening method compared with the other methods for zoomed areas of the F3 test frame, shown in a true color (RGB) composite. (a) MS; (b) Pan; (c) Lab; (d) GIHS; (e) GS; (f) HCS; (g) IHS; (h) NNDiffuse; (i) Ehlers.

Figure A9.

Results of the proposed Pan-sharpening method compared with the other methods for zoomed areas of the F7 test frame, shown in a true color (RGB) composite. (a) MS; (b) Pan; (c) Lab; (d) GIHS; (e) GS; (f) HCS; (g) IHS; (h) NNDiffuse; (i) Ehlers.

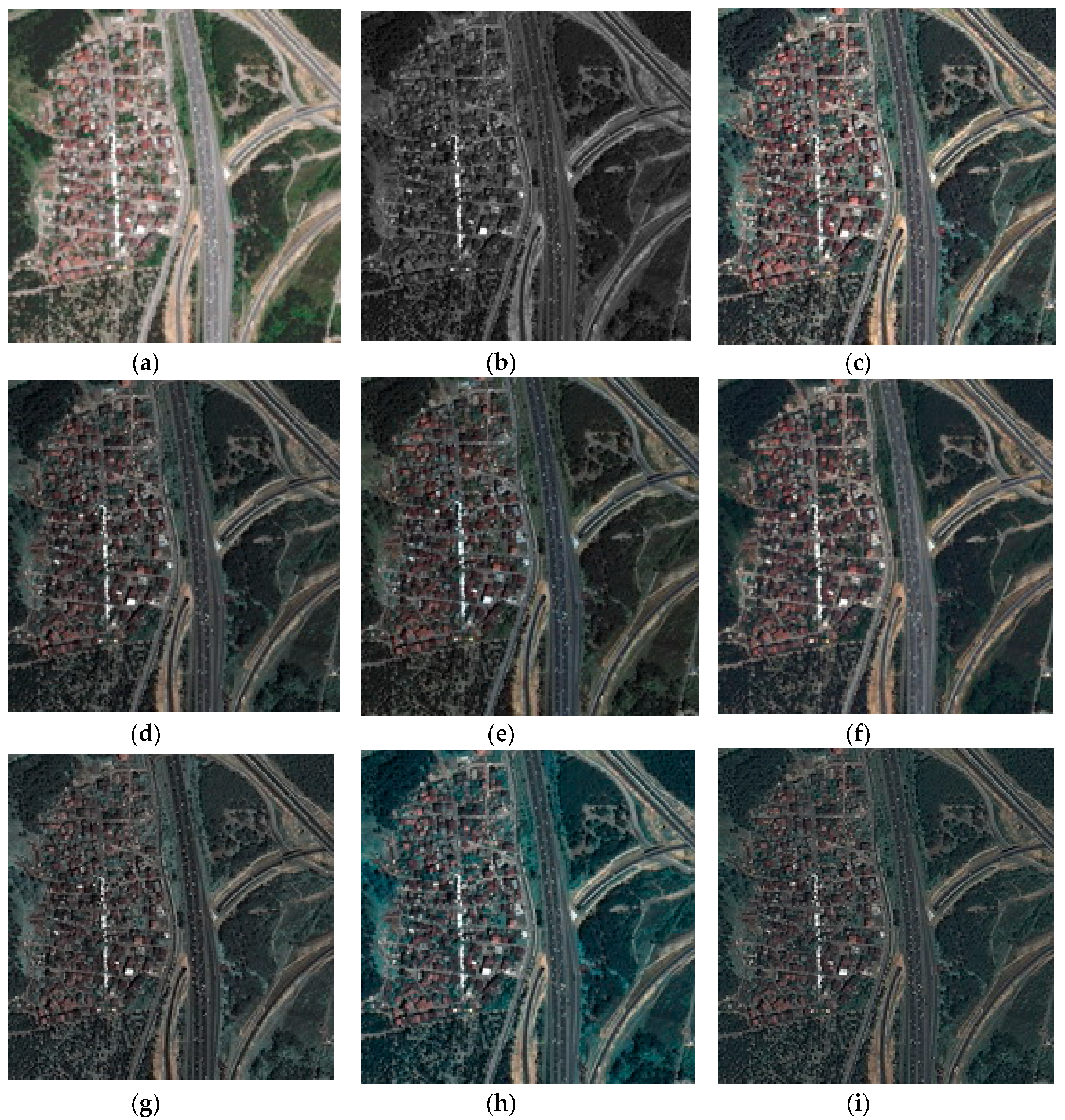

Figure A10.

Results of the proposed Pan-sharpening method compared with the other methods for zoomed areas of the F8 test frame, shown in a true color (RGB) composite. (a) MS; (b) Pan; (c) Lab; (d) GIHS; (e) GS; (f) HCS; (g) IHS; (h) NNDiffuse; (i) Ehlers.

Table A5.

Numeric results of spectral quality metrics of the Pan-sharpened images produced by select algorithms for suburban test sites.

| Site | Method | ERGAS | QAVG | RASE | RMSE | SAM | PSNR | SSIM |
|---|---|---|---|---|---|---|---|---|
| F3 | Ehlers | 10.98399 | 0.63217 | 43.08140 | 0.00301 | 4.48411 | 50.43809 | 0.98554 |
| | GS | 5.51194 | 0.66128 | 23.69334 | 0.00154 | 2.84298 | 56.80727 | 0.99542 |
| | GIHS | 6.97787 | 0.65746 | 33.45619 | 0.00233 | 2.40080 | 52.63436 | 0.98413 |
| | HCS | 9.58868 | 0.62070 | 38.29194 | 0.00267 | 2.67967 | 51.46174 | 0.99176 |
| | IHS | 10.61170 | 0.63204 | 50.97203 | 0.00356 | 3.52966 | 48.97725 | 0.97134 |
| | CIELab | 4.14506 | 0.77127 | 20.06662 | 0.00140 | 1.84331 | 67.07440 | 1 |
| | NNDiffuse | 6.93153 | 0.68589 | 30.69262 | 0.00214 | 2.67079 | 53.38321 | 0.99047 |
| F7 | Ehlers | 9.66602 | 0.58842 | 38.60687 | 0.00252 | 5.34836 | 51.98700 | 0.98848 |
| | GS | 4.43979 | 0.64207 | 28.36296 | 0.00183 | 2.15986 | 58.92781 | 0.99695 |
| | GIHS | 4.86275 | 0.61769 | 19.90348 | 0.00130 | 2.39510 | 57.74171 | 0.99608 |
| | HCS | 6.21293 | 0.59860 | 24.68755 | 0.00161 | 6.57336 | 55.87073 | 0.99479 |
| | IHS | 9.99170 | 0.58440 | 40.96218 | 0.00267 | 5.54742 | 51.47263 | 0.98461 |
| | CIELab | 3.3780 | 0.72134 | 17.43123 | 0.00114 | 1.88890 | 68.89373 | 1 |
| | NNDiffuse | 6.00374 | 0.60227 | 28.30174 | 0.00184 | 2.87237 | 54.68403 | 0.98907 |
| F8 | Ehlers | 7.61492 | 0.68151 | 29.46707 | 0.00217 | 3.58878 | 53.26597 | 0.99194 |
| | GS | 4.02694 | 0.70121 | 14.48671 | 0.00107 | 2.77359 | 59.43332 | 0.99737 |
| | GIHS | 5.76109 | 0.70009 | 27.24384 | 0.00201 | 2.20337 | 53.94734 | 0.98655 |
| | HCS | 6.82371 | 0.68186 | 27.87873 | 0.00205 | 1.32168 | 53.74725 | 0.99579 |
| | IHS | 8.13416 | 0.68201 | 38.54293 | 0.00284 | 2.97812 | 50.93381 | 0.97920 |
| | CIELab | 3.5084 | 0.7133 | 13.48278 | 0.00099 | 1.20401 | 60.05712 | 1 |
| | NNDiffuse | 5.70992 | 0.69899 | 14.95033 | 0.00110 | 1.31369 | 59.15970 | 0.99740 |

Table A6.

Numeric results of spatial quality metrics of the Pan-sharpened images produced by select algorithms for suburban test sites.

| Site | Method | CC | Zhou’s SP | SRMSE | Sp-ERGAS |
|---|---|---|---|---|---|
| F3 | Ehlers | 0.922299 | 0.949657 | 0.012645 | 28.478359 |
| | GS | 0.989999 | 0.979878 | 0.007121 | 26.395064 |
| | GIHS | 0.988664 | 0.989955 | 0.00808 | 26.561586 |
| | HCS | 0.942661 | 0.979506 | 0.01034 | 26.580025 |
| | IHS | 0.920211 | 0.929723 | 0.012687 | 27.542710 |
| | CIELab | 0.996151 | 0.996340 | 3.22E-08 | 7.7739934 |
| | NNDiffuse | 0.940385 | 0.972273 | 0.00683 | 26.669404 |
| F7 | Ehlers | 0.940410 | 0.929782 | 0.013524 | 30.304598 |
| | GS | 0.989997 | 0.989956 | 0.008056 | 28.497749 |
| | GIHS | 0.973639 | 0.978996 | 0.008367 | 29.121990 |
| | HCS | 0.968484 | 0.962481 | 0.009486 | 29.539885 |
| | IHS | 0.939801 | 0.964973 | 0.013591 | 30.416119 |
| | CIELab | 0.998630 | 0.996223 | 1.66E-08 | 3.1299142 |
| | NNDiffuse | 0.996187 | 0.989929 | 0.008685 | 28.273530 |
| F8 | Ehlers | 0.918887 | 0.919399 | 0.009181 | 26.935703 |
| | GS | 0.989998 | 0.989753 | 0.005542 | 25.948199 |
| | GIHS | 0.972152 | 0.989942 | 0.005759 | 25.943732 |
| | HCS | 0.800374 | 0.700073 | 0.006954 | 27.084421 |
| | IHS | 0.921523 | 0.995543 | 0.009236 | 26.447253 |
| | CIELab | 0.997412 | 0.997655 | 2.26E-08 | 8.2907935 |
| | NNDiffuse | 0.989999 | 0.969353 | 0.004198 | 26.011647 |

Table A7.

Band-by-band results of the spectral quality metrics for test site F3.

| Metric | Band | Ehlers | GS | GIHS | HCS | IHS | CIELab | NNDiffuse |
|---|---|---|---|---|---|---|---|---|
| ERGAS | Band 1 | 13.23542 | 6.28494 | 7.53986 | 11.51714 | 14.68644 | 6.19922 | 9.43229 |
| | Band 2 | 10.86379 | 5.49824 | 6.73039 | 10.62880 | 10.07883 | 4.07797 | 7.66444 |
| | Band 3 | 6.52447 | 4.08371 | 4.97720 | 5.58341 | 7.75358 | 2.07075 | 2.81246 |
| | Average | 10.98399 | 5.51194 | 6.97787 | 9.58868 | 10.6117 | 4.14506 | 6.93153 |
| QAVG | Band 1 | 0.86547 | 1.02826 | 0.95928 | 1.02387 | 0.87788 | 1.0607 | 1.03723 |
| | Band 2 | 0.57607 | 0.65720 | 0.69449 | 0.52406 | 0.56826 | 0.70848 | 0.60802 |
| | Band 3 | 0.48901 | 0.30277 | 0.36560 | 0.31617 | 0.45130 | 0.54621 | 0.41064 |
| | Average | 0.63217 | 0.66128 | 0.65746 | 0.62070 | 0.63204 | 0.77127 | 0.68589 |
| RASE | Band 1 | 49.91752 | 30.04186 | 39.39898 | 42.79105 | 54.69452 | 25.33830 | 34.82911 |
| | Band 2 | 46.79656 | 22.35778 | 35.26309 | 37.59033 | 52.26805 | 21.10608 | 31.65890 |
| | Band 3 | 34.19874 | 18.59234 | 25.44349 | 36.19742 | 45.77826 | 13.75556 | 25.58183 |
| | Average | 43.08140 | 23.69334 | 33.45619 | 38.29194 | 50.97203 | 20.06662 | 30.69262 |
| RMSE | Band 1 | 0.00833 | 0.00450 | 0.00525 | 0.00625 | 0.01033 | 0.00413 | 0.00525 |
| | Band 2 | 0.00040 | 0.00010 | 0.00186 | 0.00156 | 0.00042 | 0.00008 | 0.00106 |
| | Band 3 | 0.00031 | 0.00002 | 0.00001 | 0.00009 | 0.00001 | 0.00001 | 0.00009 |
| | Average | 0.00301 | 0.00154 | 0.00233 | 0.00267 | 0.00356 | 0.00140 | 0.00214 |
| SAM | Band 1 | 4.86237 | 3.61186 | 3.40619 | 3.10669 | 4.08712 | 2.22633 | 3.13166 |
| | Band 2 | 4.42003 | 2.42682 | 2.50814 | 2.87424 | 3.16997 | 1.82082 | 2.55769 |
| | Band 3 | 3.95193 | 2.35714 | 1.78239 | 2.20364 | 3.05983 | 1.53200 | 2.21219 |
| | Average | 4.48411 | 2.84298 | 2.4008 | 2.67967 | 3.52966 | 1.84331 | 2.67079 |
| PSNR | Band 1 | 56.42695 | 60.52591 | 54.16646 | 54.15527 | 51.81649 | 69.90153 | 57.27461 |
| | Band 2 | 48.08904 | 59.45342 | 51.82179 | 51.08538 | 47.59585 | 67.71639 | 57.20947 |
| | Band 3 | 47.47481 | 50.38406 | 50.63450 | 49.28219 | 46.97046 | 65.36066 | 45.98168 |
| | Average | 50.43809 | 56.80727 | 52.63436 | 51.46174 | 48.97725 | 67.07440 | 53.383210 |
| SSIM | Band 1 | 0.99888 | 0.99702 | 0.99417 | 0.99251 | 0.98071 | 1 | 0.99051 |
| | Band 2 | 0.98443 | 0.99539 | 0.98407 | 0.99175 | 0.97231 | 1 | 0.99048 |
| | Band 3 | 0.97331 | 0.99385 | 0.97415 | 0.99108 | 0.96142 | 0.9999 | 0.99042 |
| | Average | 0.98554 | 0.99542 | 0.98413 | 0.99176 | 0.97134 | 1 | 0.99047 |

List A1. Definition of terms in Table 2.

RMSE: MN is the image size, and the two pixel terms represent the pixel digital number (DN) at the (i, j)th position of the Pan-sharpened and MS images, respectively.
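A minimal NumPy sketch of the RMSE described above, assuming the Pan-sharpened and reference images are co-registered arrays of identical shape holding DN values. The function name `rmse` is illustrative and not taken from the source.

```python
import numpy as np


def rmse(fused, reference):
    """Root mean square error between a Pan-sharpened band and its reference band.

    Both inputs are M x N arrays (or equal-shaped stacks) of pixel DNs.
    """
    fused = np.asarray(fused, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    if fused.shape != reference.shape:
        raise ValueError("images must have the same shape")
    # Average the squared DN differences over all MN positions, then take the root
    return float(np.sqrt(np.mean((fused - reference) ** 2)))


ref = np.array([[10, 20], [30, 40]])
fus = np.array([[12, 18], [30, 44]])
print(rmse(fus, ref))
```

Here the per-pixel differences are 2, −2, 0 and 4, so the result is the square root of 24/4.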

ERGAS: The scale term is the ratio between the pixel sizes of the high- and low-resolution images (e.g., ¼ for Pléiades data); the remaining terms are the number of bands and the root mean square error (RMSE) of each band.
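The ERGAS terms can be combined as in Wald's commonly used formulation, 100 × ratio × sqrt(mean over bands of (RMSE_k / mean_k)²). The sketch below assumes that formulation, band-first arrays of shape (bands, rows, cols), and a default ratio of 0.25 matching the Pléiades example; it is not necessarily identical to the formula given in Table 2, and the name `ergas` is hypothetical.

```python
import numpy as np


def ergas(fused, reference, ratio=0.25):
    """ERGAS for (bands, rows, cols) arrays.

    ratio: high-resolution / low-resolution pixel size (e.g. 0.25 for Pleiades).
    Assumed form: 100 * ratio * sqrt(mean_k (RMSE_k / mean_k) ** 2).
    """
    fused = np.asarray(fused, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    if fused.ndim != 3 or fused.shape != reference.shape:
        raise ValueError("expected two (bands, rows, cols) arrays of equal shape")
    # Per-band RMSE between the Pan-sharpened and reference bands
    band_rmse = np.sqrt(np.mean((fused - reference) ** 2, axis=(1, 2)))
    # Per-band mean of the reference image, used to normalize each RMSE
    band_mean = np.mean(reference, axis=(1, 2))
    return float(100.0 * ratio * np.sqrt(np.mean((band_rmse / band_mean) ** 2)))


ref = np.full((3, 4, 4), 100.0)
fus = ref + 10.0  # every band is off by 10 DN
print(ergas(fus, ref))
```

Each band has a relative error of 10/100, so the expected value is 100 × 0.25 × 0.1 = 2.5.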

SAM: One spectral vector represents a pixel of the reference MS image and the other represents the corresponding pixel of the Pan-sharpened image; both vectors have L components.
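A sketch of SAM as the mean angle, in degrees, between corresponding L-component spectral vectors. It assumes (L, rows, cols) arrays and averages the per-pixel angles over the image, which is the usual convention; the function name is illustrative only.

```python
import numpy as np


def sam_degrees(fused, reference, eps=1e-12):
    """Mean spectral angle (degrees) between (L, rows, cols) image stacks."""
    fused = np.asarray(fused, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    if fused.shape != reference.shape:
        raise ValueError("images must have the same shape")
    # One column per pixel: each column is an L-component spectral vector
    v = reference.reshape(reference.shape[0], -1)
    v_hat = fused.reshape(fused.shape[0], -1)
    dot = np.sum(v * v_hat, axis=0)
    norms = np.linalg.norm(v, axis=0) * np.linalg.norm(v_hat, axis=0)
    # Clipping guards against rounding pushing the cosine slightly outside [-1, 1]
    cos_angle = np.clip(dot / np.maximum(norms, eps), -1.0, 1.0)
    return float(np.degrees(np.mean(np.arccos(cos_angle))))


ref = np.array([[[1.0]], [[0.0]]])  # one pixel, L = 2 bands
fus = np.array([[[0.0]], [[1.0]]])  # orthogonal spectrum
print(sam_degrees(fus, ref))
```

Because SAM depends only on vector direction, a uniformly scaled spectrum (e.g. `2 * ref`) gives an angle of zero.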

RASE: The mean term represents the mean of the bth band, b is the number of bands, and RMSE represents the root mean square error.
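A possible RASE sketch, assuming the Ranchin and Wald form (100 / μ) × sqrt(mean over bands of RMSE_k²), with μ taken as the mean DN over all reference bands. The exact normalization used in Table 2 may differ, and the name `rase` is illustrative.

```python
import numpy as np


def rase(fused, reference):
    """RASE (%) for (bands, rows, cols) arrays.

    Assumed form: (100 / mu) * sqrt(mean_k RMSE_k ** 2), where mu is the
    mean DN of all reference bands.
    """
    fused = np.asarray(fused, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    if fused.ndim != 3 or fused.shape != reference.shape:
        raise ValueError("expected two (bands, rows, cols) arrays of equal shape")
    # Per-band RMSE between the Pan-sharpened and reference images
    band_rmse = np.sqrt(np.mean((fused - reference) ** 2, axis=(1, 2)))
    mu = np.mean(reference)  # mean radiance over all bands (assumption)
    return float(100.0 / mu * np.sqrt(np.mean(band_rmse ** 2)))


ref = np.full((3, 4, 4), 100.0)
fus = ref + 10.0
print(rase(fus, ref))
```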

PSNR: The peak term represents the number of gray levels in the image, MN is the image size, and the two pixel terms are the pixel values of the reference image and the Pan-sharpened image. A higher PSNR value indicates greater similarity between the reference MS and Pan-sharpened images.
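A short PSNR sketch, assuming the standard form 10 × log10(peak² / MSE), where the MSE is averaged over the MN pixels and `peak` stands for the gray-level range described above (e.g. 255 for 8-bit data). The function and parameter names are illustrative.

```python
import numpy as np


def psnr(fused, reference, peak):
    """PSNR in dB, assuming 10 * log10(peak ** 2 / MSE)."""
    fused = np.asarray(fused, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    mse = np.mean((fused - reference) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))


ref = np.zeros((4, 4))
fus = np.ones((4, 4))  # MSE = 1
print(psnr(fus, ref, peak=100.0))
```

With MSE = 1 and a peak of 100, the result is 10 × log10(10⁴) = 40 dB.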

QAVG: The two mean terms are the means of the reference and Pan-sharpened images, respectively; the remaining terms are their covariance and their variances. Because QI can be applied to only one band at a time, the average over three or more bands (QAVG) is used as a global spectral quality index for multiband images. QI values range from −1 to 1, and a higher value indicates greater similarity between the reference and Pan-sharpened images.
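A sketch of QI and QAVG, assuming the Wang and Bovik universal image quality index Q = 4·cov·μx·μy / ((σx² + σy²)(μx² + μy²)) computed once over the whole band. The index is often evaluated in sliding windows and then averaged, which this global version omits; the names `q_index` and `q_avg` are hypothetical.

```python
import numpy as np


def q_index(reference_band, fused_band):
    """Global (single-window) universal image quality index for one band."""
    x = np.asarray(reference_band, dtype=np.float64).ravel()
    y = np.asarray(fused_band, dtype=np.float64).ravel()
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    # Division by zero if both bands are constant or both have zero mean
    return float(4.0 * cov * mx * my / ((vx + vy) * (mx ** 2 + my ** 2)))


def q_avg(reference, fused):
    """QAVG: mean of the per-band QI over (bands, rows, cols) stacks."""
    return float(np.mean([q_index(r, f) for r, f in zip(reference, fused)]))


x = np.array([1.0, 2.0, 3.0, 4.0])
print(q_index(x, x), q_index(x, 5.0 - x))
```

Identical bands give the upper bound of 1, while a band with the same mean but inverted contrast gives −1.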

SSIM: μ denotes the mean and σ the standard deviation, with subscripts referring to the reference and Pan-sharpened images, respectively. C1 and C2 are small constants that prevent division by zero; they depend on the dynamic range of the pixel values. A higher value indicates better Pan-sharpening quality.
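A simplified SSIM sketch evaluated once over the whole band rather than in local sliding windows. It assumes the common constants C1 = (0.01·D)² and C2 = (0.03·D)², where D is the dynamic range; windowed implementations (such as scikit-image's) will give different values, and `ssim_global` is an illustrative name.

```python
import numpy as np


def ssim_global(reference_band, fused_band, dynamic_range, k1=0.01, k2=0.03):
    """Single-window SSIM over a whole band (no sliding Gaussian window)."""
    x = np.asarray(reference_band, dtype=np.float64).ravel()
    y = np.asarray(fused_band, dtype=np.float64).ravel()
    # C1 and C2 scale with the dynamic range and keep the denominators non-zero
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    num = (2.0 * mx * my + c1) * (2.0 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(num / den)


band = np.arange(16, dtype=np.float64).reshape(4, 4)
print(ssim_global(band, band, dynamic_range=255.0))
```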

CC: C_r,f is the cross-correlation between the reference and fused images, and C_r and C_f are the correlation coefficients of the reference and fused images, respectively.
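A sketch of CC as C_rf / sqrt(C_r · C_f). It assumes mean-centered DNs, so C_rf is the summed cross-product and C_r, C_f are the summed squares of each image; under that assumption the result equals the Pearson correlation coefficient. The function name is hypothetical.

```python
import numpy as np


def correlation_coefficient(reference, fused):
    """CC = C_rf / sqrt(C_r * C_f) on mean-centred DNs (Pearson form, assumed)."""
    r = np.asarray(reference, dtype=np.float64).ravel()
    f = np.asarray(fused, dtype=np.float64).ravel()
    r = r - r.mean()
    f = f - f.mean()
    c_rf = np.sum(r * f)  # cross-correlation term
    c_r = np.sum(r * r)   # reference term
    c_f = np.sum(f * f)   # fused term
    return float(c_rf / np.sqrt(c_r * c_f))


ref = np.array([[1.0, 2.0], [3.0, 4.0]])
print(correlation_coefficient(ref, 2.0 * ref + 3.0))
```

Because of the mean removal and normalization, any positive linear rescaling of the fused image yields a CC of 1.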

SRMSE: The edge magnitude (M) is calculated via the spectral distance of the horizontal and vertical edge intensities.
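A sketch of SRMSE under stated assumptions: horizontal and vertical edge intensities are approximated with `numpy.gradient` (central differences) instead of whatever edge operator the authors used, M is their Euclidean magnitude, and SRMSE is the RMSE between the edge magnitudes of a Pan-sharpened band and the Pan image. The names `edge_magnitude` and `srmse` are illustrative.

```python
import numpy as np


def edge_magnitude(band):
    """Edge magnitude from horizontal and vertical differences (np.gradient)."""
    band = np.asarray(band, dtype=np.float64)
    d_rows, d_cols = np.gradient(band)  # vertical and horizontal edge intensities
    return np.sqrt(d_cols ** 2 + d_rows ** 2)


def srmse(fused_band, pan):
    """RMSE between the edge magnitudes of a Pan-sharpened band and the Pan image."""
    diff = edge_magnitude(fused_band) - edge_magnitude(pan)
    return float(np.sqrt(np.mean(diff ** 2)))


pan = np.tile(np.arange(5.0), (5, 1))  # horizontal ramp: unit horizontal gradient
print(srmse(pan + 7.0, pan), srmse(2.0 * pan, pan))
```

A constant DN offset leaves the edges unchanged (SRMSE of 0), whereas doubling the contrast doubles every edge magnitude.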

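Sp-ERGAS: The scale term represents the ratio between the pixel sizes of the MS and Pan images, and the remaining term is the number of bands. The spatial RMSE of band $B_k$ is

$$\mathrm{RMSE}_{\mathrm{sp}}(B_k)=\sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left(F_k(i,j)-P(i,j)\right)^{2}},$$

where MN is the image size, and $F_k(i,j)$ and $P(i,j)$ represent the pixel digital number (DN) at the $(i,j)$th position of the Pan-sharpened and Pan images, respectively.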
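A sketch of Sp-ERGAS, assuming the form 100 × ratio × sqrt(mean over bands of (spatial RMSE_k / mean(Pan))²), with each band's spatial RMSE computed directly against the Pan image. Some formulations first histogram-match the Pan image to each band; this sketch omits that step. The function name and default ratio are assumptions.

```python
import numpy as np


def spatial_ergas(fused, pan, ratio=0.25):
    """Sp-ERGAS for a (bands, rows, cols) stack and a (rows, cols) Pan image.

    Assumed form: 100 * ratio * sqrt(mean_k (spatial_RMSE_k / mean(Pan)) ** 2),
    using the same Pan image for every band (no histogram matching).
    """
    fused = np.asarray(fused, dtype=np.float64)
    pan = np.asarray(pan, dtype=np.float64)
    if fused.ndim != 3 or fused.shape[1:] != pan.shape:
        raise ValueError("expected (bands, rows, cols) and (rows, cols) arrays")
    # Spatial RMSE of each band: DN differences against the Pan image over all MN pixels
    band_srmse = np.sqrt(np.mean((fused - pan[np.newaxis]) ** 2, axis=(1, 2)))
    return float(100.0 * ratio * np.sqrt(np.mean((band_srmse / pan.mean()) ** 2)))


pan = np.full((4, 4), 200.0)
fused = np.full((3, 4, 4), 220.0)
print(spatial_ergas(fused, pan))
```

Each band deviates from the Pan image by 10%, so the expected value is 100 × 0.25 × 0.1 = 2.5.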