BICOS—An Algorithm for Fast Real-Time Correspondence Search for Statistical Pattern Projection-Based Active Stereo Sensors †

Abstract

1. Introduction

1.1. Stereo Vision Based 3D Sensors

1. Single-shot vs. multi-shot. Single-shot systems work on a single image (or image pair for stereo camera systems) and a fixed projection pattern. Multi-shot systems record a sequence of images (or sequence of image pairs). The projected pattern is different for each successively taken image/image pair.

2. Single-camera vs. multi-camera. Systems which use a single camera find correspondences between the camera and the projector. Multi-camera systems use at least two cameras and find correspondences between them.

3. Coded light vs. statistical patterns. Coded light systems project well-known patterns or pattern sequences onto the measurement object. An overview of coded light techniques can be found in Reference [3]. Statistical pattern systems, on the other hand, do not require well-known patterns but can work with quasi-random patterns which do not need to be known to the reconstruction algorithm.

1.2. Existing Algorithms and Algorithms in Related Fields

2. Materials and Methods

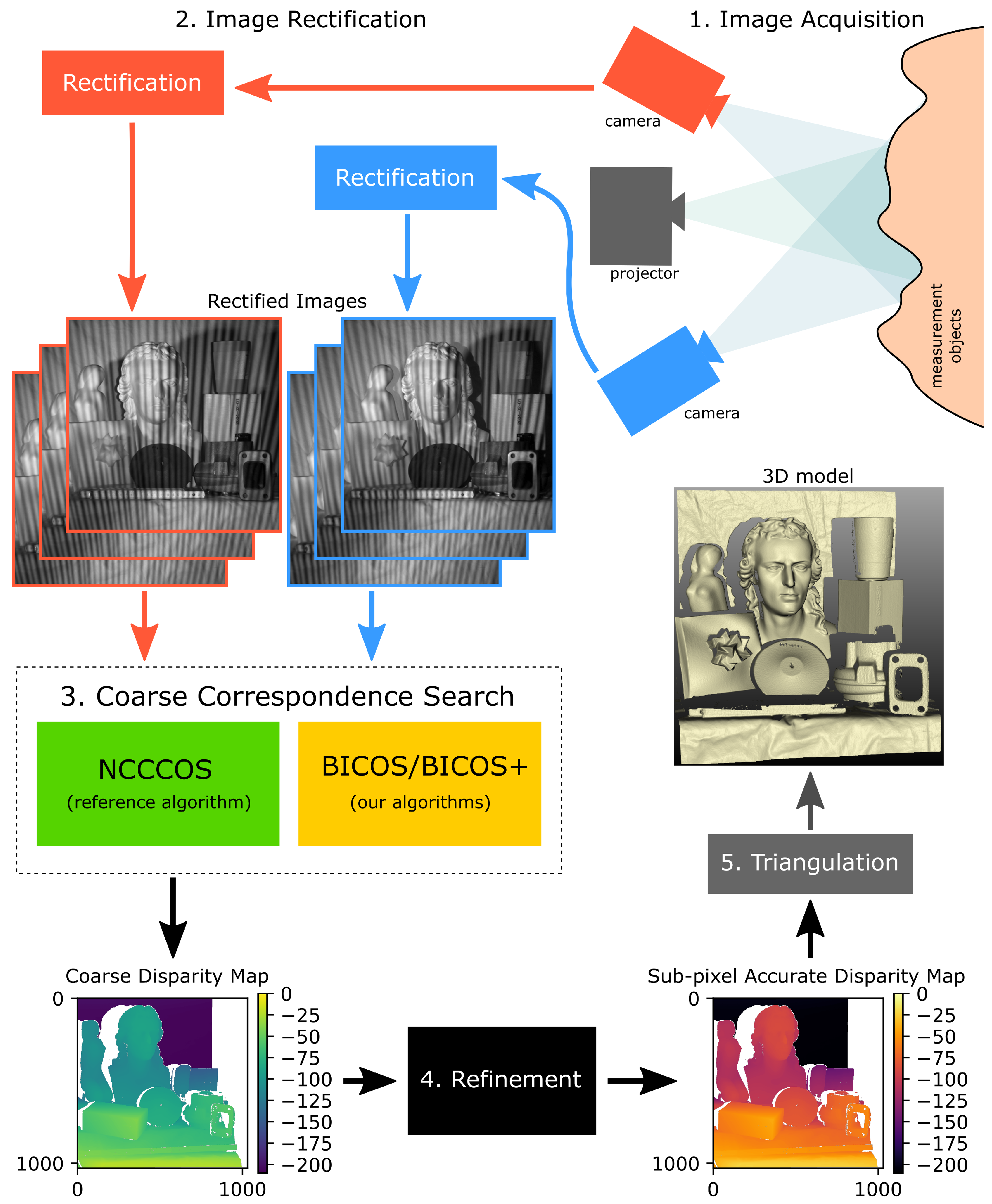

2.1. General Reconstruction Algorithm Outline

- 1.

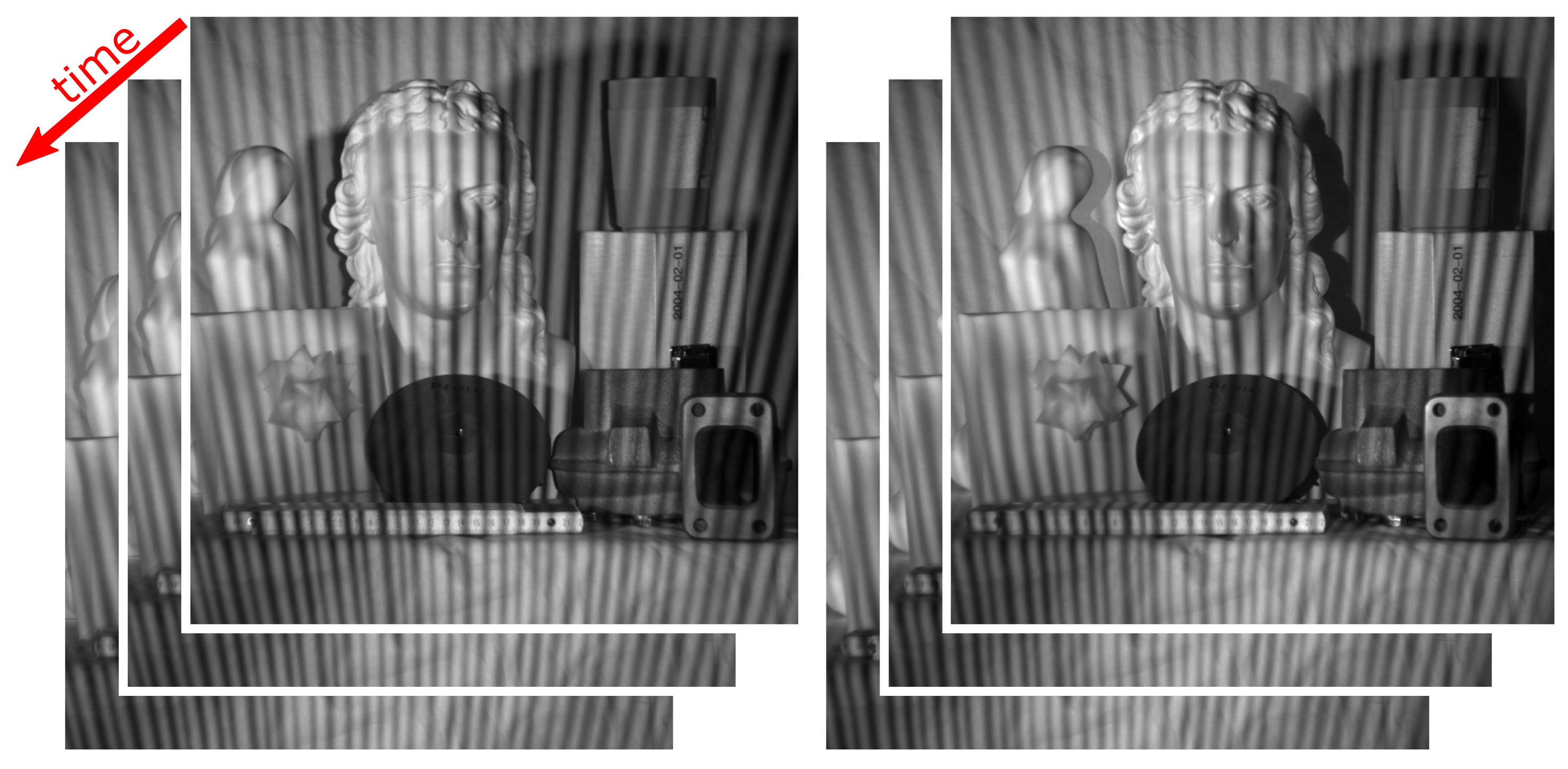

- Image Aquisition. A sequence of image pairs is recorded synchronously with the two cameras of the stereo setup.

- 2.

- Rectification. We rectify the camera images, that is, we apply a geometric transformation to the images to simulate two cameras with parallel image planes. The rectification algorithm also corrects lens distortion. After rectification, the images have the important property that an object point, which is visible in a specific image row r of the first camera’s images, also appears in the identical row r in the other camera’s images. This means that the epipolar lines [32,33] are parallel to the image rows.

- 3.

- Coarse Correspondence Search. We search corresponding pixels between the cameras. For each pixel in the left camera at position , we search a pixel in the right camera at position which shows the same object point. Due to rectification (step 2), this pixel can be found on the same image row r. The column has to be determined. In the coarse correspondence search, we consider any with a distance of up to two pixels from the real correspondence correct. The difference of the column numbers is called “disparity”. The result of the coarse correspondence search is the coarse disparity map.

- 4.

- Correspondence Refinement. We refine the result by searching an interval around the coarse correspondence. We then interpolate between pixels in the right image and find the best match amongst the interpolated sub-pixels. The result is the refined disparity map.

- 5.

- Calculation of 3D points. For each pixel in the disparity map, we calculate a 3D point by triangulation.
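For an ideal rectified camera pair, the triangulation in step 5 reduces to a closed-form expression. The following is a minimal sketch, assuming a pinhole model with focal length `f` in pixels, baseline `baseline`, a principal point `(cx, cy)` shared by both rectified cameras, and disparity defined as left column minus right column. All of these names and the sign convention are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def disparity_to_points(disparity, f, baseline, cx, cy):
    """Triangulate one 3D point per valid pixel of a rectified disparity map.

    disparity: 2D float array, NaN where no correspondence was found.
    Returns an (H, W, 3) array of X, Y, Z coordinates (NaN where invalid).
    """
    h, w = disparity.shape
    rows, cols = np.mgrid[0:h, 0:w].astype(np.float64)
    # Only finite, positive disparities correspond to points in front of the cameras.
    valid = np.isfinite(disparity) & (disparity > 0)
    z = np.full((h, w), np.nan)
    z[valid] = f * baseline / disparity[valid]   # depth is inversely proportional to disparity
    x = (cols - cx) * z / f
    y = (rows - cy) * z / f
    return np.dstack([x, y, z])
```

Because depth is inversely proportional to disparity, a fixed disparity error causes a larger depth error for distant points, which is one reason the sub-pixel refinement in step 4 matters.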

2.2. Reference Algorithm (NCCCOS)

- reduce the number of pixel comparisons (e.g., by further restricting the scan volume)

- reduce the calculation time which is required for each pixel comparison
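To make the cost of a single pixel comparison concrete, here is a minimal sketch of a zero-mean normalized cross correlation between the temporal brightness sequences of two pixels. The exact NCC formulation used in NCCCOS is not given in this excerpt, so the standard definition is assumed; the function name `ncc` is hypothetical.

```python
import numpy as np

def ncc(a, b):
    """Zero-mean normalized cross correlation of two equally long brightness sequences."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    a0 = a - a.mean()
    b0 = b - b.mean()
    denom = np.sqrt((a0 * a0).sum() * (b0 * b0).sum())
    if denom == 0.0:
        return 0.0  # a constant sequence has no defined correlation; treat as "no match"
    return float((a0 * b0).sum() / denom)
```

Each call involves mean subtraction, several multiply-accumulate passes and a square root, and in a brute-force search it is repeated for every candidate column on the row.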

2.3. BICOS Algorithm

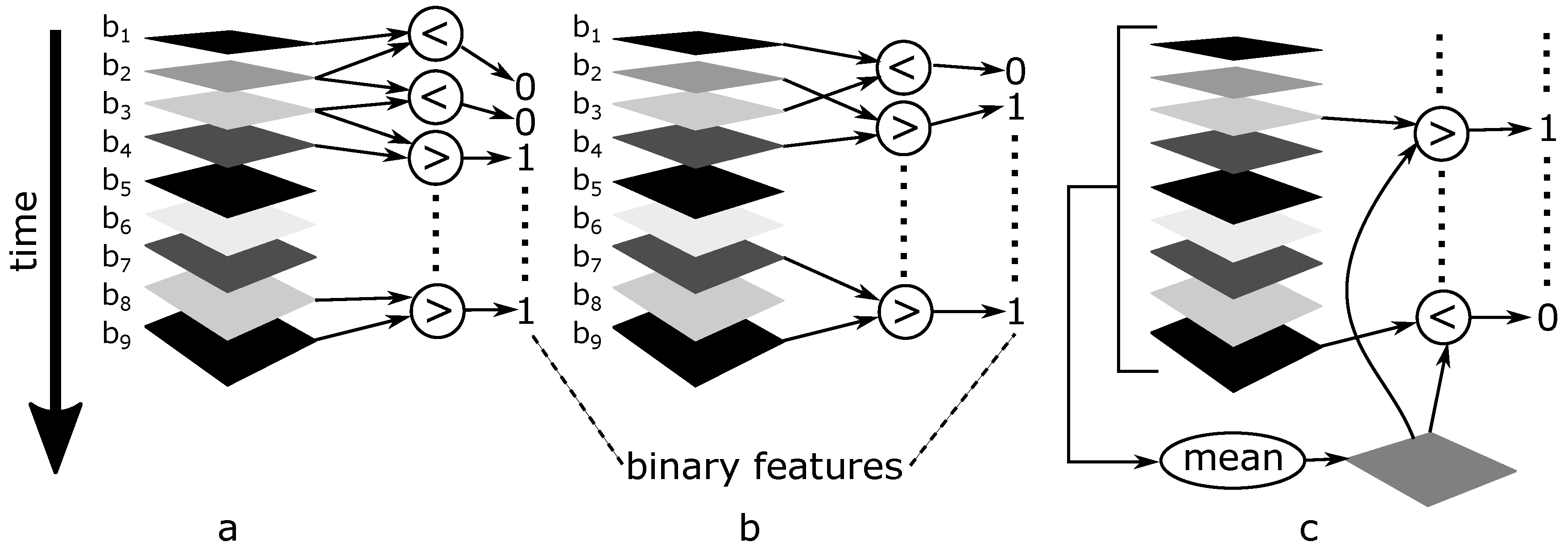

- 1.

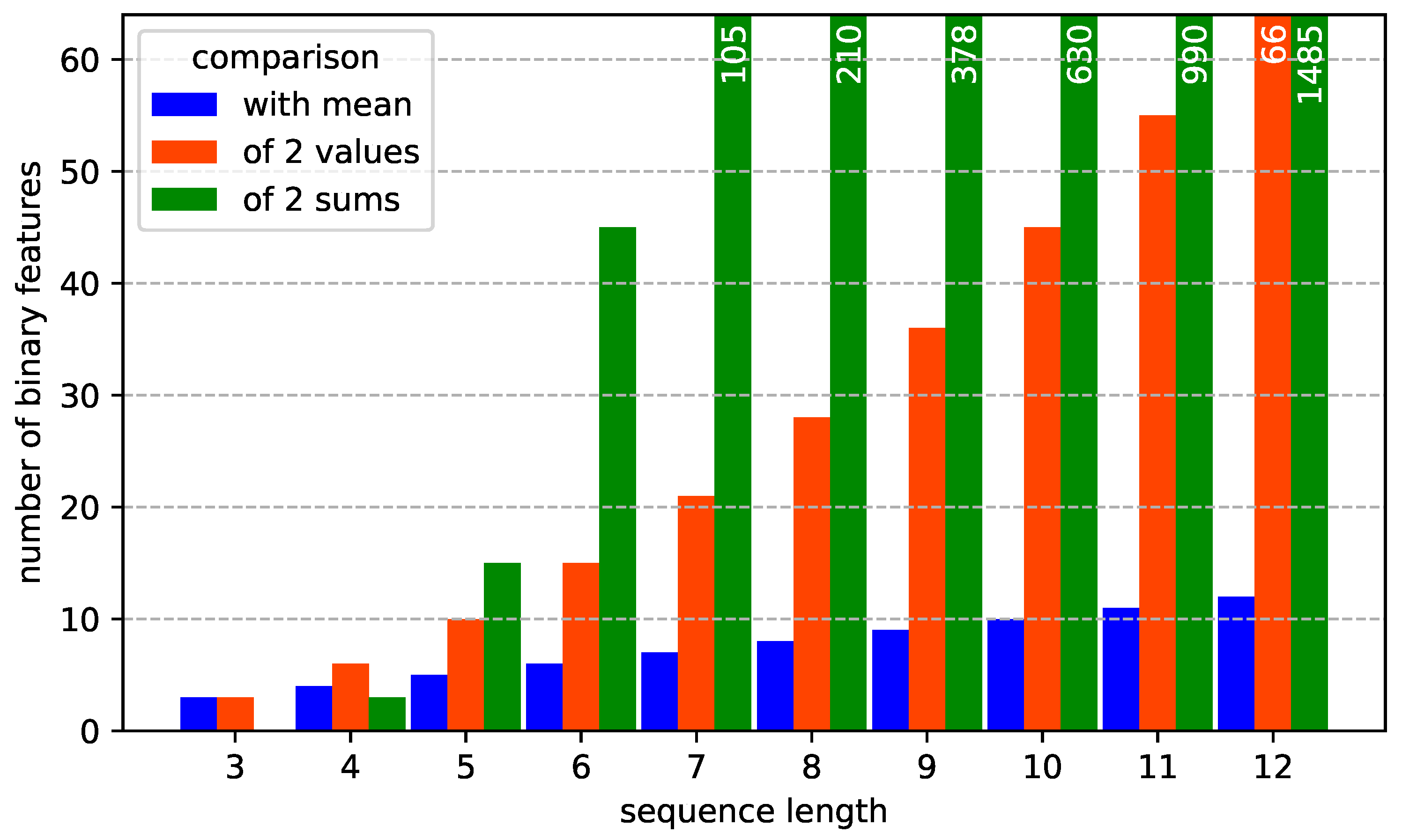

- Calculation of binary features. For each pixel, we first calculate a bit string of “binary features”. A binary feature is generated by comparing two of the brightness values of a pixel with each other (Figure 2a,b). For example, comparing brightness values with yields a binary feature with a value of 1 if and 0 otherwise. It does not matter, if a >, <, ≥ or ≤ operator is used, as long as it is consistent.We can compare every brightness value with every other brightness value within the sequence. For instance, for a sequence length of 10, we compare brightness value with , with , ..., with , with , with and so forth. This yields 45 binary features. In addition, each brightness value can be compared to the mean brightness value of the sequence, yielding another 10 binary features for a sequence length of 10 (Figure 2c). We restrict the number of binary features to 64 to allow fast computation.

- 2.

- Comparing the binary features. To find a correspondence we do not compare the brightness values but we compare the binary features of a pixel in the left camera with the binary features of a pixel in the right camera. The more binary features coincide, the better the match. Thus the pixel similarity measure for BICOS (and BICOS+) is the Number of Equal Binary Features (NEBF). Calculation of the NEBF is very fast because only two operations are required: compare and count. Like in the NCCCOS, we perform the correspondence search in both directions, that is, first from left to right, then from right to left. We accept only consistent results.

- 3.

- Filtering the coarse correspondences. The coarse disparity map which we generate by using the NEBF contains more outliers and holes than the one created with the NCC which is used in NCCCOS. For an explanation why this is the case, see Section 3.2. We compensate for this by applying a median filter to the disparity map, which also fills the holes. Please note that this does not mean that the final 3D result is filtered because we apply this filter only to the coarse disparity map which is used as a start solution for the refinement step.
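Steps 1 and 2 can be sketched in a few lines. The example below packs the pairwise and mean comparisons of one pixel's brightness sequence into an integer and computes the NEBF with an XOR followed by a bit count. It is an illustration under assumptions: the function names are hypothetical, the `>` operator is an arbitrary choice, and simply truncating to the first 64 bits is an assumption, since the excerpt does not say how the 64 features are selected.

```python
from itertools import combinations

MAX_FEATURES = 64  # the text caps the descriptor at 64 binary features

def binary_features(seq):
    """Pack pairwise and mean comparisons of a brightness sequence into an int.

    Returns (word, n_bits), where n_bits is the number of features actually used.
    """
    mean = sum(seq) / len(seq)
    bits = [a > b for a, b in combinations(seq, 2)]  # n*(n-1)/2 pairwise features
    bits += [v > mean for v in seq]                  # n comparisons against the mean
    bits = bits[:MAX_FEATURES]                       # assumed selection: keep the first 64
    word = 0
    for bit in bits:
        word = (word << 1) | int(bit)
    return word, len(bits)

def nebf(word_a, word_b, n_bits):
    """Number of Equal Binary Features: n_bits minus the Hamming distance."""
    return n_bits - bin(word_a ^ word_b).count("1")
```

For a sequence of length 10 this produces 45 + 10 = 55 bits, which fits into a single 64-bit machine word. On such a word the "compare and count" of step 2 maps to one XOR and one population count.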

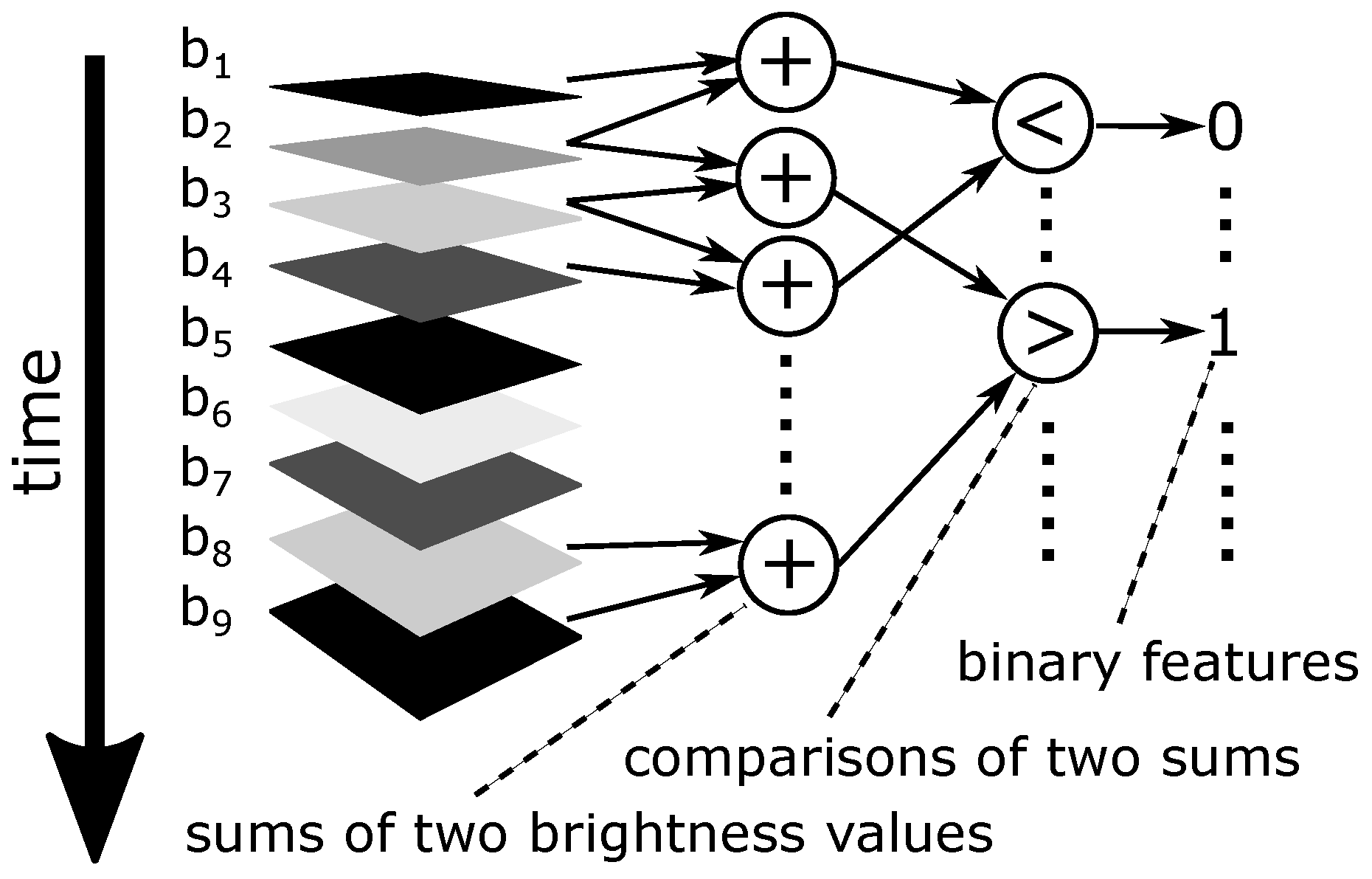
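The consistency check from step 2 and the hole-filling median filter from step 3 might look as follows. This is a sketch under assumptions rather than the authors' implementation: the disparity sign convention, the tolerance `tol`, the window `radius` and the use of negative values to mark "no match" are all hypothetical choices, and plain Python loops are used for clarity rather than speed.

```python
import numpy as np

def consistent_disparities(disp_lr, disp_rl, tol=1):
    """Keep left-to-right disparities that the right-to-left search confirms.

    disp_lr[r, c] = d means left pixel (r, c) matched right pixel (r, c - d).
    disp_rl[r, c] = d means right pixel (r, c) matched left pixel (r, c + d).
    Both are integer arrays; entries < 0 mark "no match".
    Returns a float map with NaN for rejected pixels.
    """
    h, w = disp_lr.shape
    out = np.full((h, w), np.nan)
    for r in range(h):
        for c in range(w):
            d = disp_lr[r, c]
            cr = c - d                       # matched column in the right image
            if d < 0 or cr < 0 or cr >= w:
                continue
            if disp_rl[r, cr] >= 0 and abs(disp_rl[r, cr] - d) <= tol:
                out[r, c] = d
    return out

def nanmedian_filter(disp, radius=1):
    """Median over a (2*radius+1)^2 window, ignoring NaN, so small holes get filled."""
    h, w = disp.shape
    out = np.full((h, w), np.nan)
    for r in range(h):
        for c in range(w):
            win = disp[max(r - radius, 0):r + radius + 1,
                       max(c - radius, 0):c + radius + 1]
            if np.isfinite(win).any():       # leave the hole if the whole window is empty
                out[r, c] = np.nanmedian(win)
    return out
```

Since the filtered map only seeds the refinement step, smoothing it does not smooth the final 3D result.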

2.4. BICOS+ Algorithm

- form pairs of brightness values (from the brightness value sequence of a pixel)

- sum up the two brightness values of each pair

- compare two such sums with each other and save the result of the comparison as a binary feature (only sums which do not share a common brightness value are compared)
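The three steps above can be sketched as follows. The function name is hypothetical, and taking the first 64 valid comparisons in enumeration order is an assumption made only to keep the example short; the excerpt does not specify which comparisons BICOS+ selects.

```python
from itertools import combinations

def bicos_plus_features(seq, max_features=64):
    """Compare sums of disjoint brightness pairs and return the resulting bit list."""
    pairs = list(combinations(range(len(seq)), 2))   # index pairs (i, j) with i < j
    bits = []
    for (i, j), (k, m) in combinations(pairs, 2):
        if {i, j} & {k, m}:
            continue                                 # the sums share a brightness value: skip
        bits.append(seq[i] + seq[j] > seq[k] + seq[m])
        if len(bits) == max_features:
            break
    return bits
```

For a sequence of length n there are 3·C(n, 4) comparisons of disjoint pair sums (630 for n = 10), so some selection is always needed to stay within 64 bits.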

2.5. Robustness of the Algorithms against Ambient Light and Changes in Reflectivity

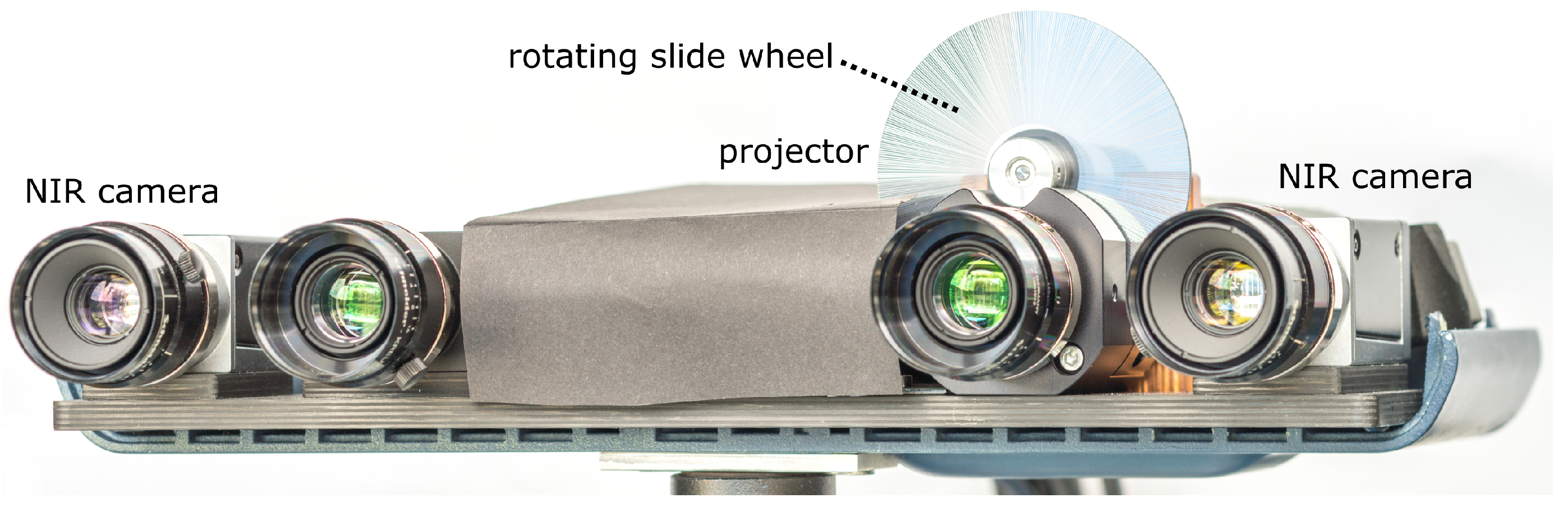

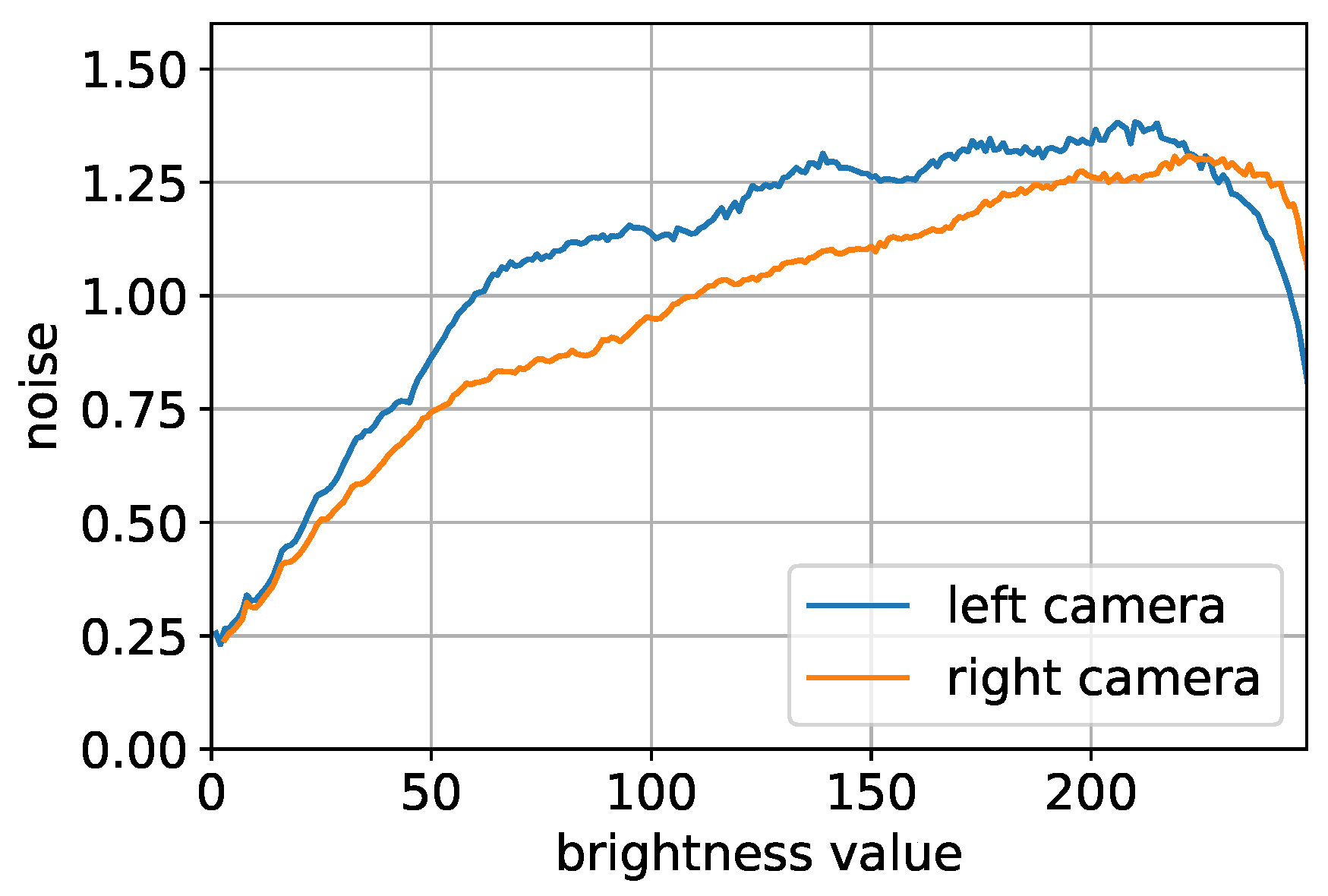

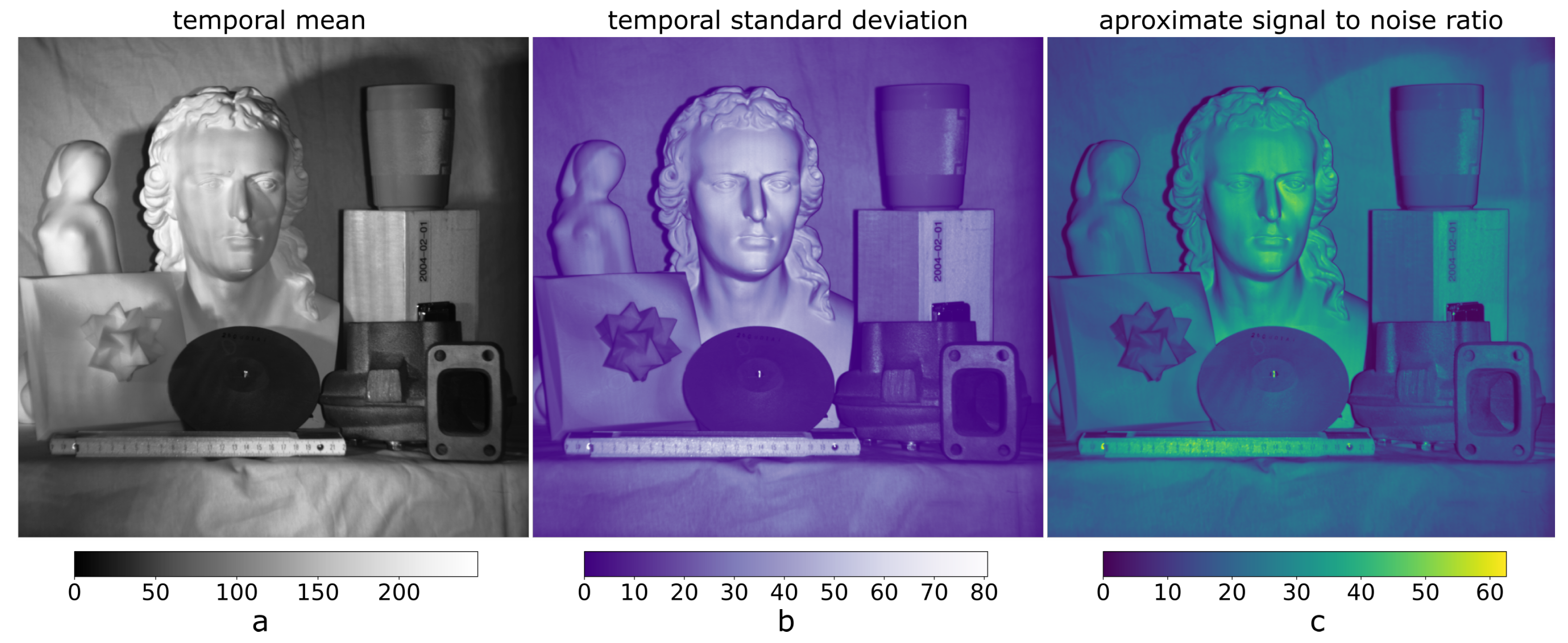
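A small numerical check illustrates why comparison-based features tolerate such changes. It assumes, purely for illustration, that one camera sees each brightness value scaled by a positive factor `s` and shifted by a constant ambient term `A`, without noise or saturation. This simplified model and the numbers below are assumptions, not measurements from the paper.

```python
def pairwise_bits(seq):
    """One bit per index pair (i < j): is seq[i] greater than seq[j]?"""
    return [seq[i] > seq[j] for i in range(len(seq)) for j in range(i + 1, len(seq))]

left = [12.0, 40.0, 7.0, 33.0, 25.0]
s, A = 0.6, 15.0                       # hypothetical gain and ambient offset
right = [s * v + A for v in left]      # assumed noise-free model

# A positive scale and a constant offset preserve the ordering of the values,
# so every pairwise comparison gives the same result in both cameras.
same = pairwise_bits(left) == pairwise_bits(right)
```

With noise, pairs of nearly equal brightness values can flip, so the invariance holds only approximately in practice.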

2.6. Sensor for Image Acquisition

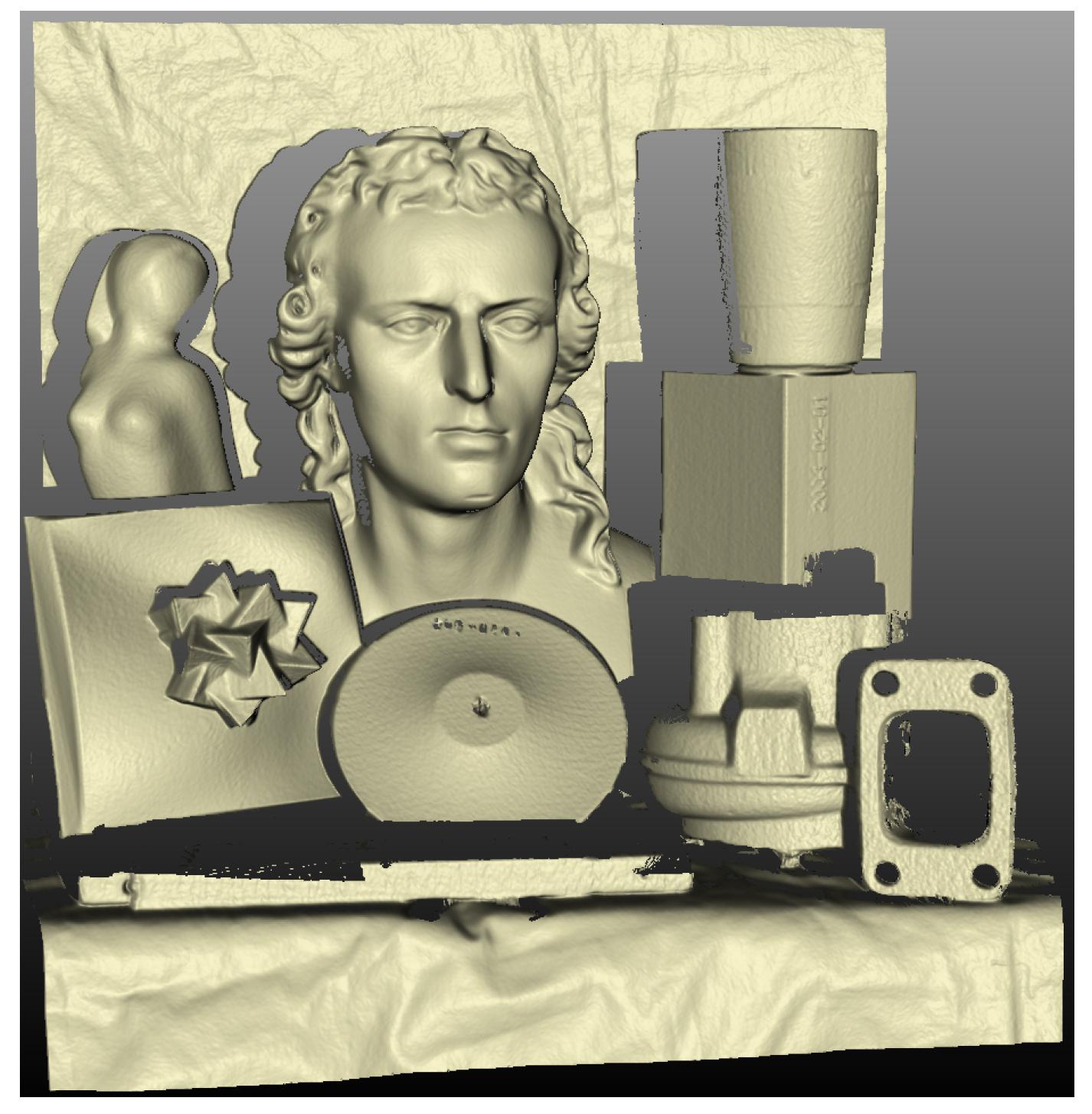

2.7. Test Scene and Ground Truth Data

3. Results

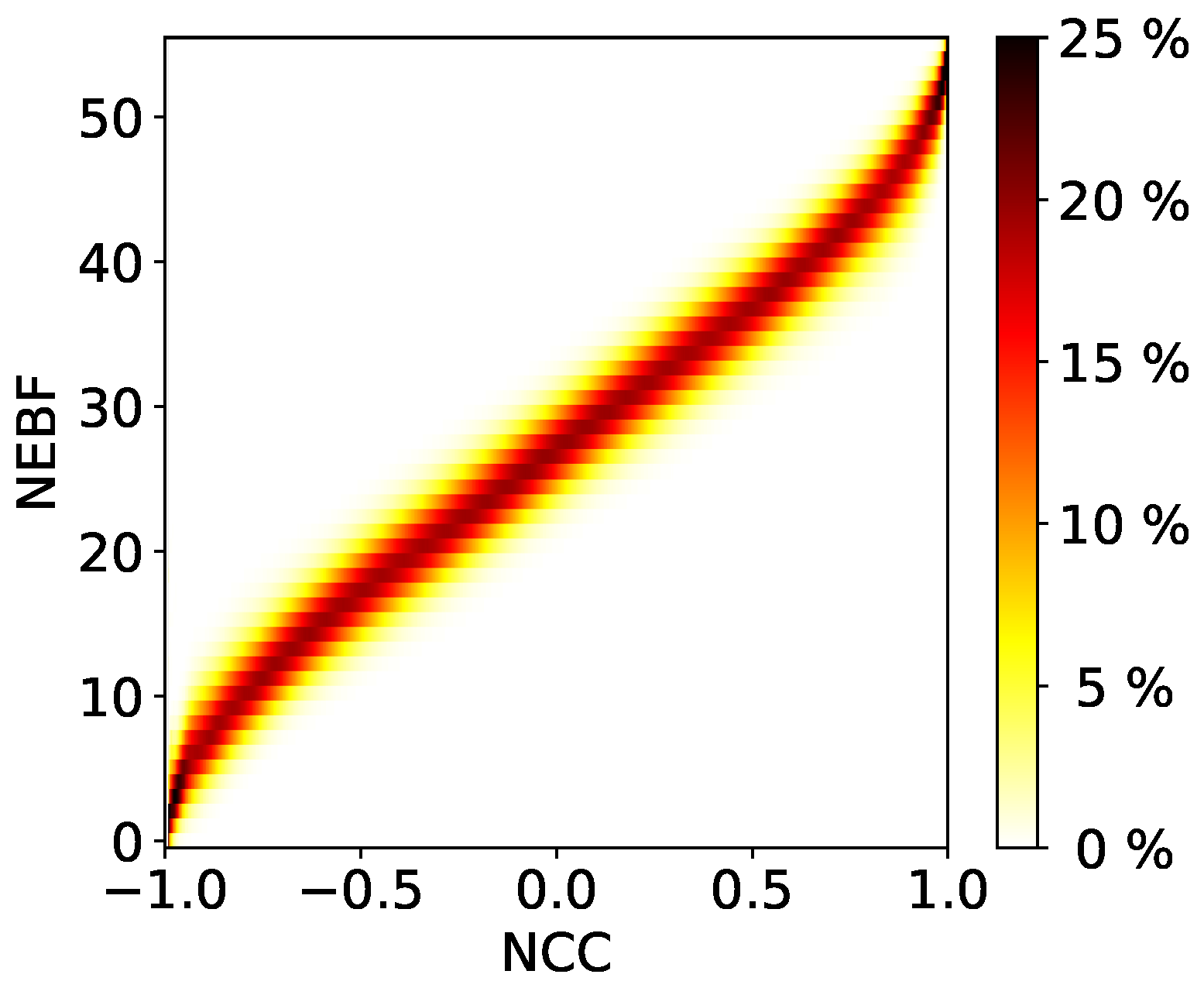

3.1. Relationship between NCC and NEBF

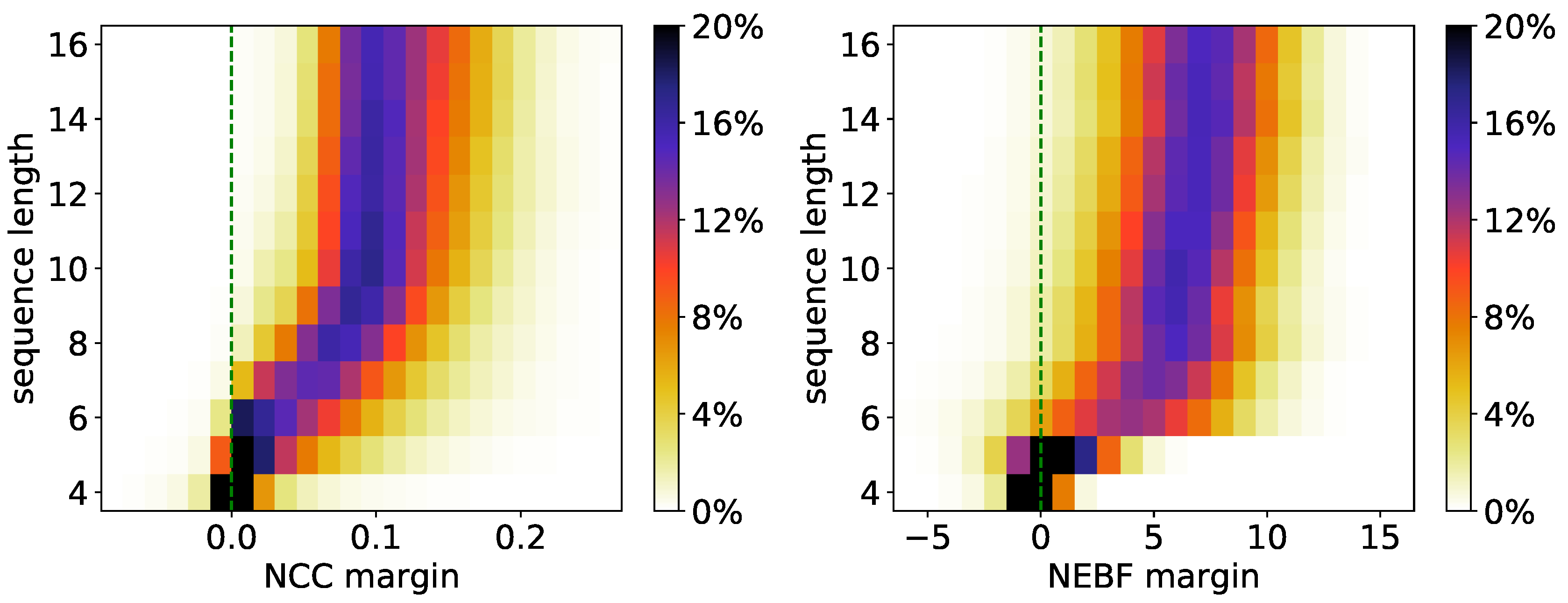

3.2. How Well Can NCC and NEBF Distinguish between Correct and Wrong Correspondences?

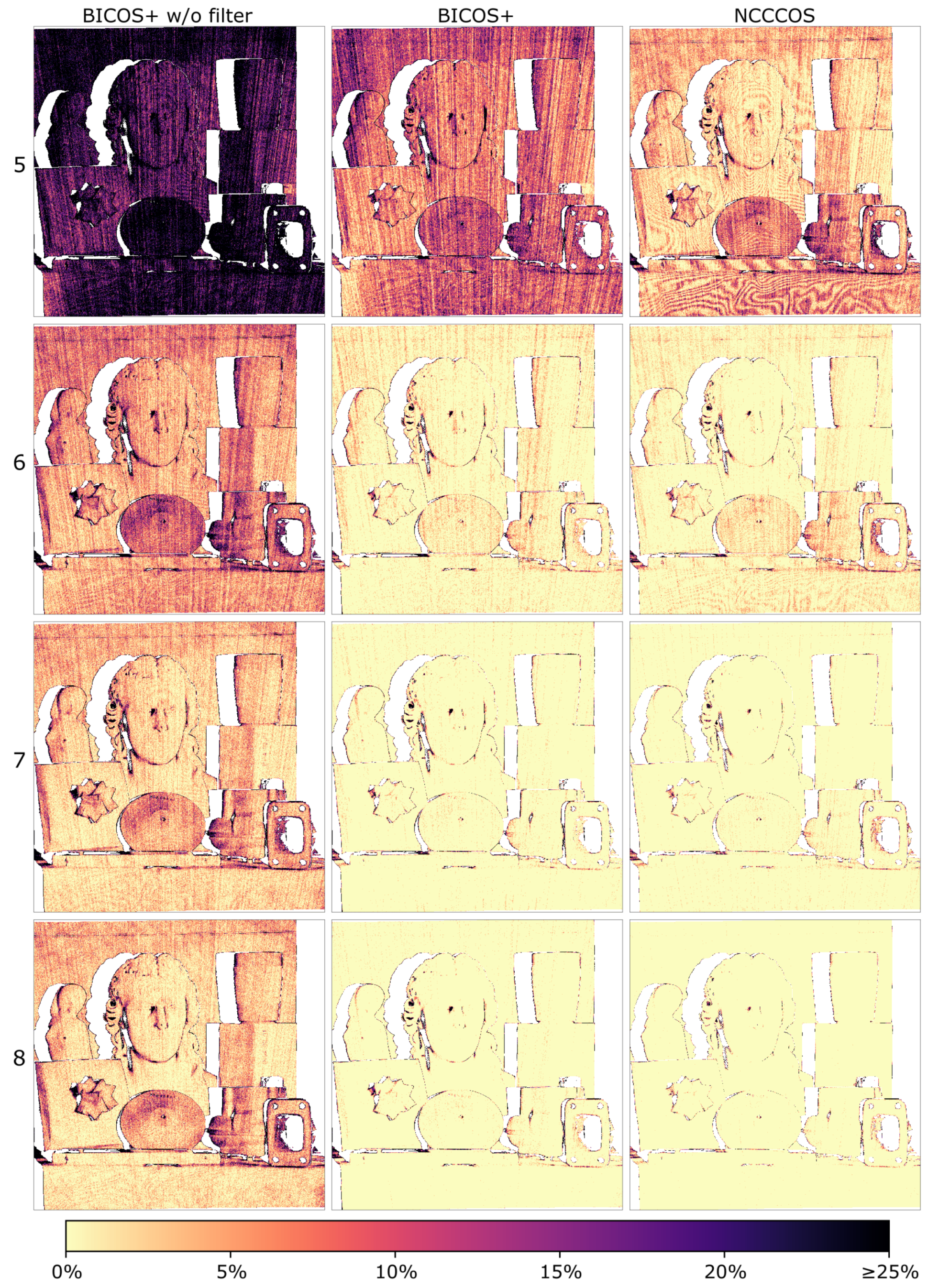

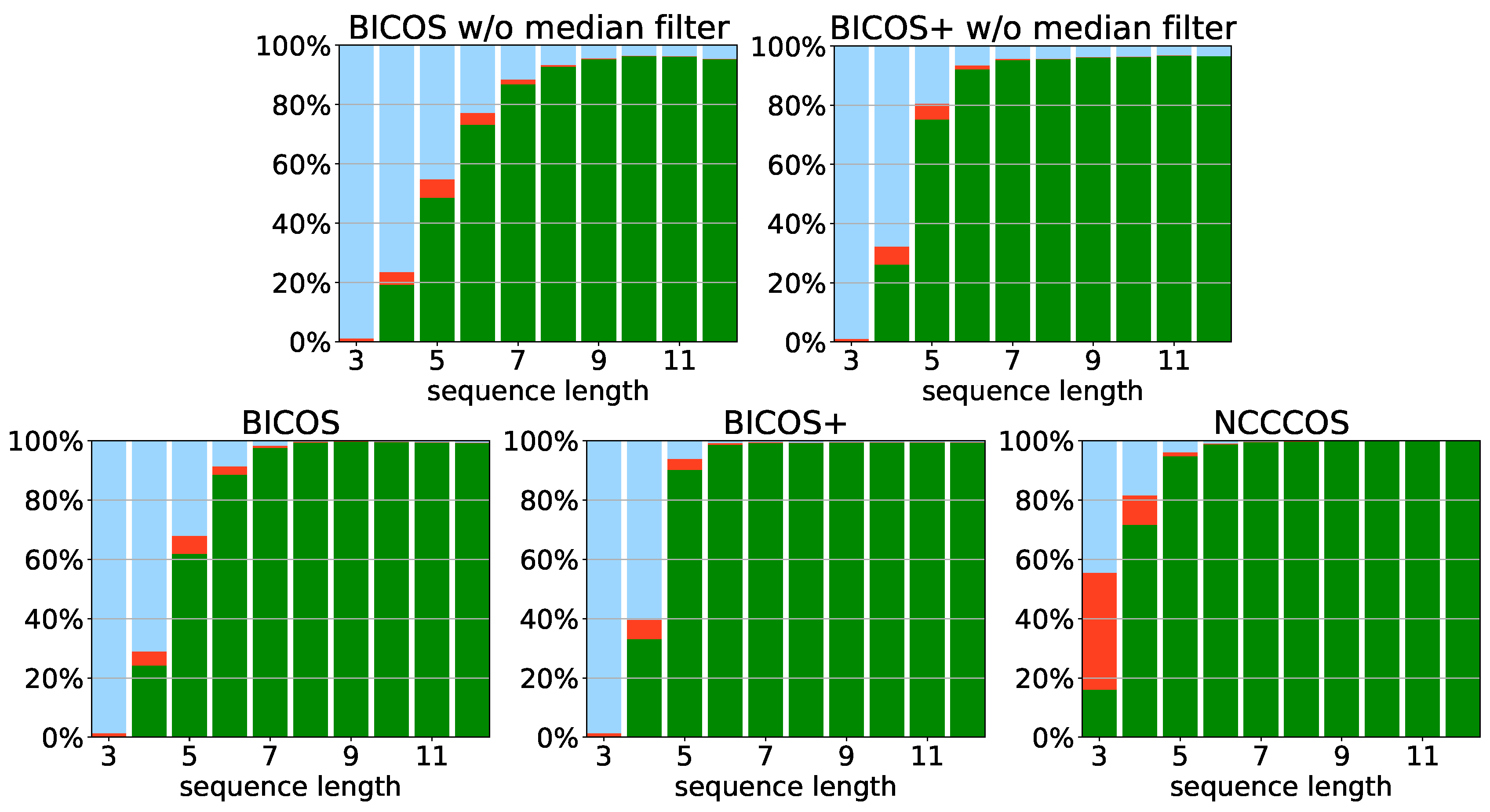

3.3. Quality of the Coarse Correspondence Search

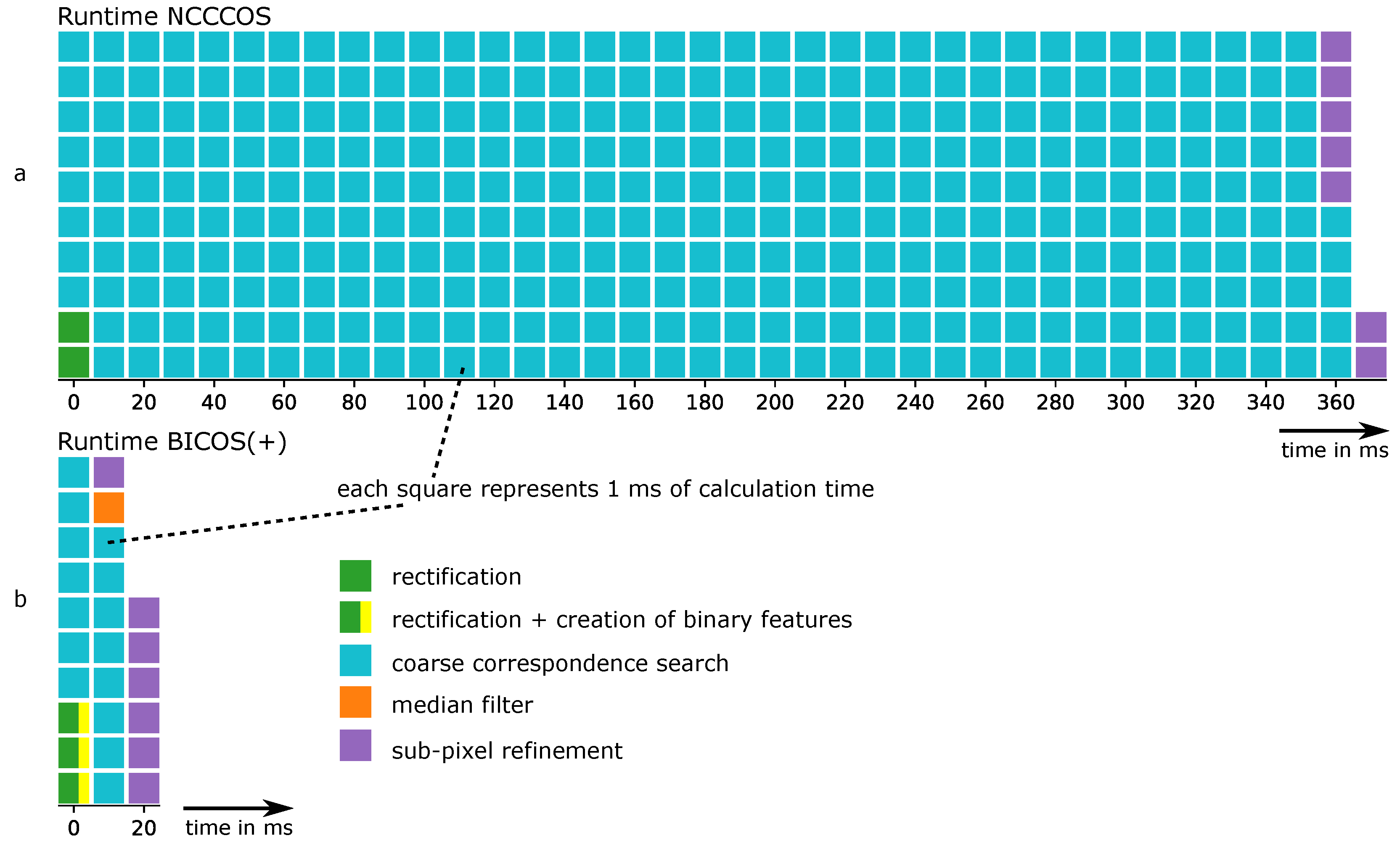

3.4. Speed of the Coarse Correspondence Search

4. Discussion

4.1. Interpretation of Results

4.2. Comparison with Other Methods

5. Conclusions and Outlook

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviations | |
|---|---|
| BICOS | BInary Correspondence Search |
| BICOS+ | Improved Binary Correspondence Search |
| GOBO | GOes Before Optics (rotating slide) |
| NCC | Normalized Cross Correlation |
| NCCCOS | NCC-based Correspondence Search (the reference algorithm) |
| NEBF | Number of Equal Binary Features |
| NIR | Near-InfraRed light |
| SAD | Sum of Absolute Differences |

| Mathematical Symbols | |
|---|---|
| r | row in a rectified image |
| | column in a rectified image of the left camera |
| | column in a rectified image of the right camera |
| d | the disparity between an object point in a left and in a right rectified image |
| | the brightness values of a pixel in an image sequence which consists of n rectified images |
| A | ambient light |
| s | factor representing the difference in camera sensitivity and reflectivity of the measured object towards the left and the right camera |

References

1. Sansoni, G.; Trebeschi, M.; Docchio, F. State-of-The-Art and Applications of 3D Imaging Sensors in Industry, Cultural Heritage, Medicine, and Criminal Investigation. Sensors 2009, 9, 568–601.
2. Maier-Hein, L.; Mountney, P.; Bartoli, A.; Elhawary, H.; Elson, D.; Groch, A.; Kolb, A.; Rodrigues, M.; Sorger, J.; Speidel, S.; et al. Optical techniques for 3D surface reconstruction in computer-assisted laparoscopic surgery. Med. Image Anal. 2013, 17, 974–996.
3. Geng, J. Structured-light 3D surface imaging: A tutorial. Adv. Opt. Photonics 2011, 3, 128–160.
4. Zhang, Z. Microsoft Kinect Sensor and Its Effect. IEEE MultiMed. 2012, 19, 4–10.
5. Gorthi, S.S.; Rastogi, P. Fringe projection techniques: Whither we are? Opt. Lasers Eng. 2010, 48, 133–140.
6. Bergmann, D. New approach for automatic surface reconstruction with coded light. In Proceedings of the SPIE’s 1995 International Symposium on Optical Science, Engineering, and Instrumentation, San Diego, CA, USA, 9–14 July 1995; Volume 2572.
7. Ishiyama, R.; Sakamoto, S.; Tajima, J.; Okatani, T.; Deguchi, K. Absolute phase measurements using geometric constraints between multiple cameras and projectors. Appl. Opt. 2007, 46, 3528–3538.
8. Sansoni, G.; Carocci, M.; Rodella, R. Three-dimensional vision based on a combination of gray-code and phase-shift light projection: Analysis and compensation of the systematic errors. Appl. Opt. 1999, 38, 6565–6573.
9. Zuo, C.; Huang, L.; Zhang, M.; Chen, Q.; Asundi, A. Temporal phase unwrapping algorithms for fringe projection profilometry: A comparative review. Opt. Lasers Eng. 2016, 85, 84–103.
10. Zuo, C.; Feng, S.; Huang, L.; Tao, T.; Yin, W.; Chen, Q. Phase shifting algorithms for fringe projection profilometry: A review. Opt. Lasers Eng. 2018, 109, 23–59.
11. Zhang, S. Absolute phase retrieval methods for digital fringe projection profilometry: A review. Opt. Lasers Eng. 2018, 107, 28–37.
12. Chen, X.; Xi, J.; Jin, Y.; Sun, J. Accurate calibration for a camera–projector measurement system based on structured light projection. Opt. Lasers Eng. 2009, 47, 310–319.
13. Siebert, J.P.; Marshall, S.J. Human body 3D imaging by speckle texture projection photogrammetry. Sens. Rev. 2000, 20, 218–226.
14. Albrecht, P.; Michaelis, B. Improvement of the spatial resolution of an optical 3-D measurement procedure. IEEE Trans. Instrum. Meas. 1998, 47, 158–162.
15. Schaffer, M.; Grosse, M.; Kowarschik, R. High-speed pattern projection for three-dimensional shape measurement using laser speckles. Appl. Opt. 2010, 49, 3622–3629.
16. Wiegmann, A.; Wagner, H.; Kowarschik, R. Human face measurement by projecting bandlimited random patterns. Opt. Express 2006, 14, 7692–7698.
17. Heist, S.; Kühmstedt, P.; Tünnermann, A.; Notni, G. Theoretical considerations on aperiodic sinusoidal fringes in comparison to phase-shifted sinusoidal fringes for high-speed three-dimensional shape measurement. Appl. Opt. 2015, 54, 10541–10551.
18. Heist, S.; Kühmstedt, P.; Tünnermann, A.; Notni, G. Experimental comparison of aperiodic sinusoidal fringes and phase-shifted sinusoidal fringes for high-speed three-dimensional shape measurement. Opt. Eng. 2016, 55, 024105.
19. Brahm, A.; Ramm, R.; Heist, S.; Rulff, C.; Kühmstedt, P.; Notni, G. Fast 3D NIR systems for facial measurement and lip-reading. In Proceedings of the SPIE Commercial + Scientific Sensing and Imaging, Anaheim, CA, USA, 9–13 April 2017; Volume 10220.
20. Heist, S.; Lutzke, P.; Schmidt, I.; Dietrich, P.; Kühmstedt, P.; Tünnermann, A.; Notni, G. High-speed three-dimensional shape measurement using GOBO projection. Opt. Lasers Eng. 2016, 87, 90–96.
21. Landmann, M.; Heist, S.; Dietrich, P.; Lutzke, P.; Gebhart, I.; Templin, J.; Kühmstedt, P.; Tünnermann, A.; Notni, G. High-speed 3D thermography. Opt. Lasers Eng. 2019, 121, 448–455.
22. Brahm, A.; Schindwolf, S.; Landmann, M.; Heist, S.; Kühmstedt, P.; Notni, G. 3D shape measurement of glass and transparent plastics with a thermal 3D system in the mid-wave infrared. In Proceedings of the SPIE Commercial + Scientific Sensing and Imaging, Orlando, FL, USA, 15–19 April 2018; Volume 10667.
23. Lazaros, N.; Sirakoulis, G.C.; Gasteratos, A. Review of Stereo Vision Algorithms: From Software to Hardware. Int. J. Optomechatron. 2008, 2, 435–462.
24. Hirschmüller, H.; Innocent, P.R.; Garibaldi, J. Real-Time Correlation-Based Stereo Vision with Reduced Border Errors. Int. J. Comput. Vis. 2002, 47, 229–246.
25. Scharstein, D.; Szeliski, R. A Taxonomy and Evaluation of Dense Two-Frame Stereo Correspondence Algorithms. Int. J. Comput. Vis. 2002, 47, 7–42.
26. Große, M. Untersuchungen zur Korrelationsbasierten Punktzuordnung in der Stereophotogrammetrischen 3D-Objektvermessung unter Verwendung von Sequenzen Strukturierter Beleuchtung. Ph.D. Thesis, Friedrich-Schiller-Universität Jena, Jena, Germany, 2013.
27. Dietrich, P.; Heist, S.; Lutzke, P.; Landmann, M.; Grosmann, P.; Kühmstedt, P.; Notni, G. Efficient correspondence search algorithm for GOBO projection-based real-time 3D measurement. In Proceedings of the SPIE Defense + Commercial Sensing, Baltimore, MD, USA, 14–18 April 2019.
28. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An Efficient Alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.
29. Calonder, M.; Lepetit, V.; Strecha, C.; Fua, P. BRIEF: Binary Robust Independent Elementary Features. Eur. Conf. Comput. Vis. 2010, 6314, 778–792.
30. Leutenegger, S.; Chli, M.; Siegwart, R. BRISK: Binary Robust invariant scalable keypoints. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2548–2555.
31. Liu, L.; Fieguth, P.; Guo, Y.; Wang, X.; Pietikäinen, M. Local binary features for texture classification: Taxonomy and experimental study. Pattern Recognit. 2017, 62, 135–160.
32. Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003.
33. Luhmann, T.; Robson, S.; Kyle, S.; Böhm, J. Close-Range Photogrammetry and 3D Imaging; Walter de Gruyter: Berlin, Germany, 2014.
34. Bradski, G. The OpenCV Library. Dr. Dobb’s J. Softw. Tools 2000, 120, 122–125.
35. Itseez. Open Source Computer Vision Library. 2015. Available online: https://github.com/itseez/opencv (accessed on 8 August 2019).
36. Zhang, S.; Huang, P. High-Resolution, Real-time 3D Shape Acquisition. In Proceedings of the 2004 Conference on Computer Vision and Pattern Recognition Workshop, Washington, DC, USA, 27 June–2 July 2004; p. 28.
37. Zhang, S. High-Resolution, Real-Time 3-D Shape Measurement. Ph.D. Thesis, Stony Brook University, Stony Brook, NY, USA, 2005.

| Passive Stereo | Active Multi-Shot Stereo with Statistical Patterns |
|---|---|
| All information for correspondence search must be found from spatial features, for example, texture, object edges, shadows, etc. | We can rely on temporal features. For a given pixel in the left camera we can find a correspondence without looking at any other pixel in the same camera. |
| Correspondences for smooth image areas without texture or other features must be guessed from surrounding image features. | The projected patterns are visible on all object parts, so pixel-wise correspondences can also be found in smooth image areas. |
| Spatial image features look slightly different from each camera perspective because they have a different projected geometry. The correspondence search algorithm must account for that. | If only temporal features are used (i.e., only the brightness value sequence of a single pixel), there is only minimal geometric change. |
| The reflected ambient light intensity may be different at each camera view-point because the reflectivity of most objects depends on the angles towards light source and view point. | In addition to (unwanted) ambient light, the projected patterns may have a different intensity for each camera. The reflection factors for the projected light and the ambient light are different, because the angles towards the light sources are different. |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dietrich, P.; Heist, S.; Landmann, M.; Kühmstedt, P.; Notni, G. BICOS—An Algorithm for Fast Real-Time Correspondence Search for Statistical Pattern Projection-Based Active Stereo Sensors. Appl. Sci. 2019, 9, 3330. https://doi.org/10.3390/app9163330
