Visual Saliency Detection Using a Rule-Based Aggregation Approach

Abstract

1. Introduction

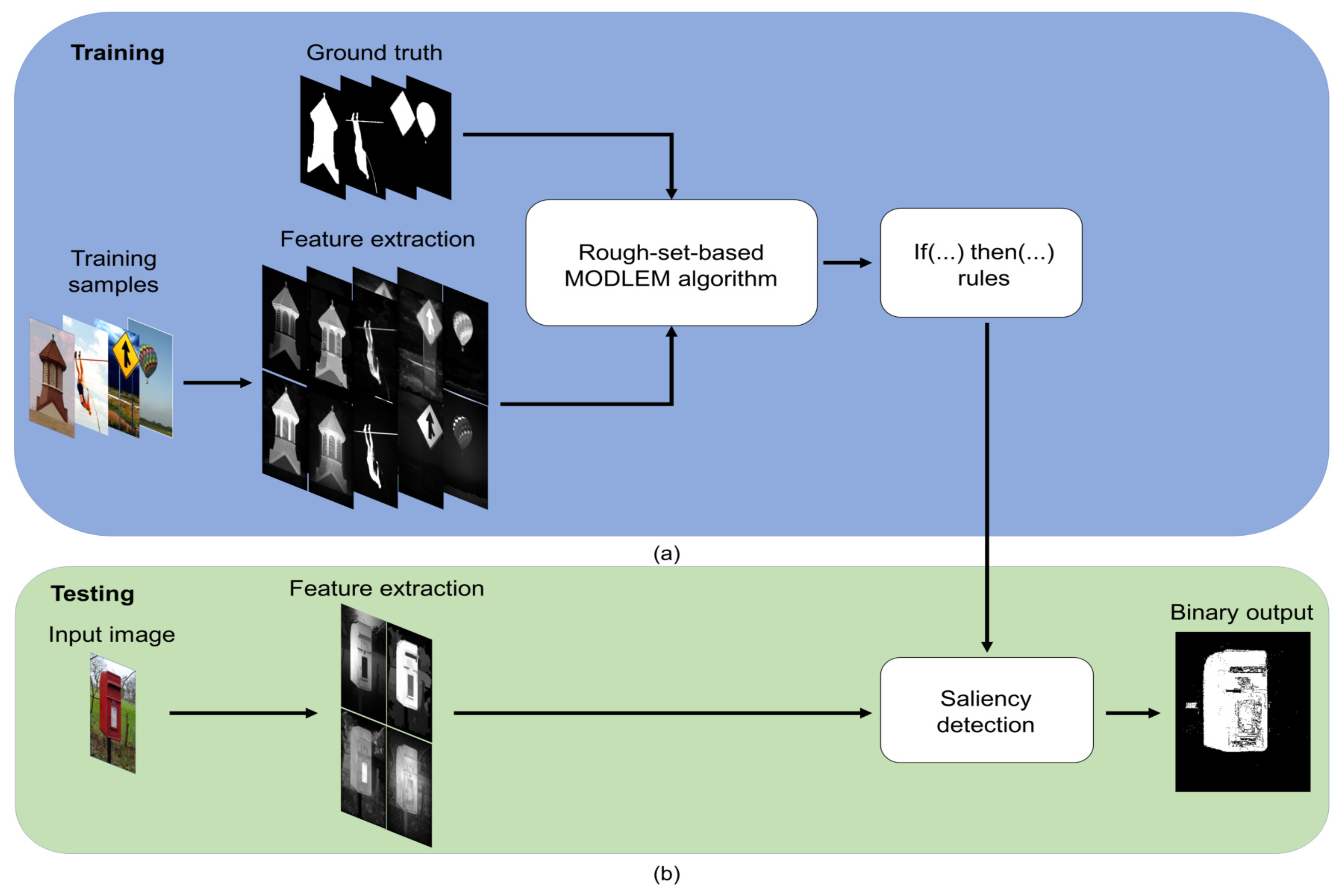

2. Methodology

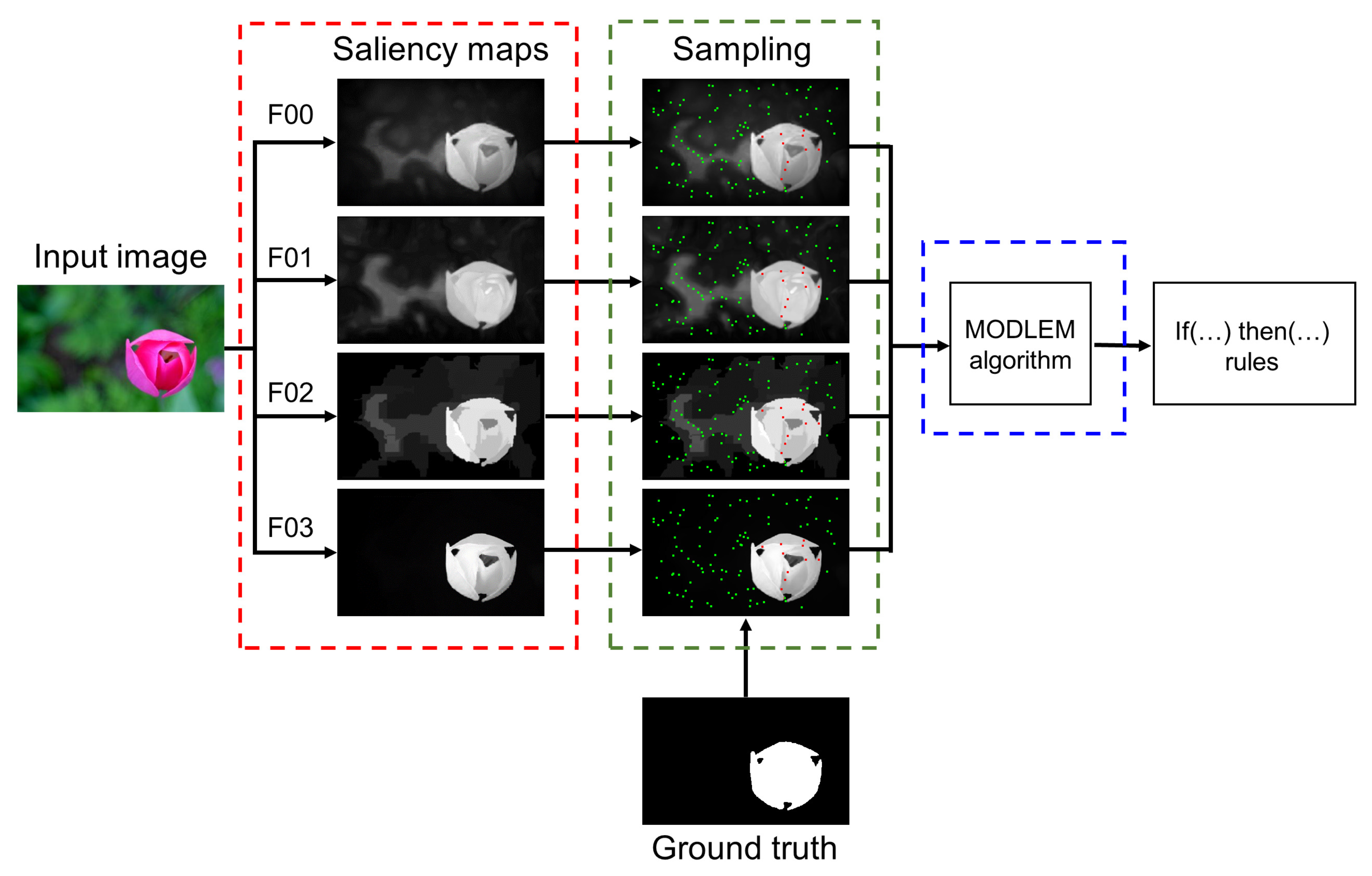

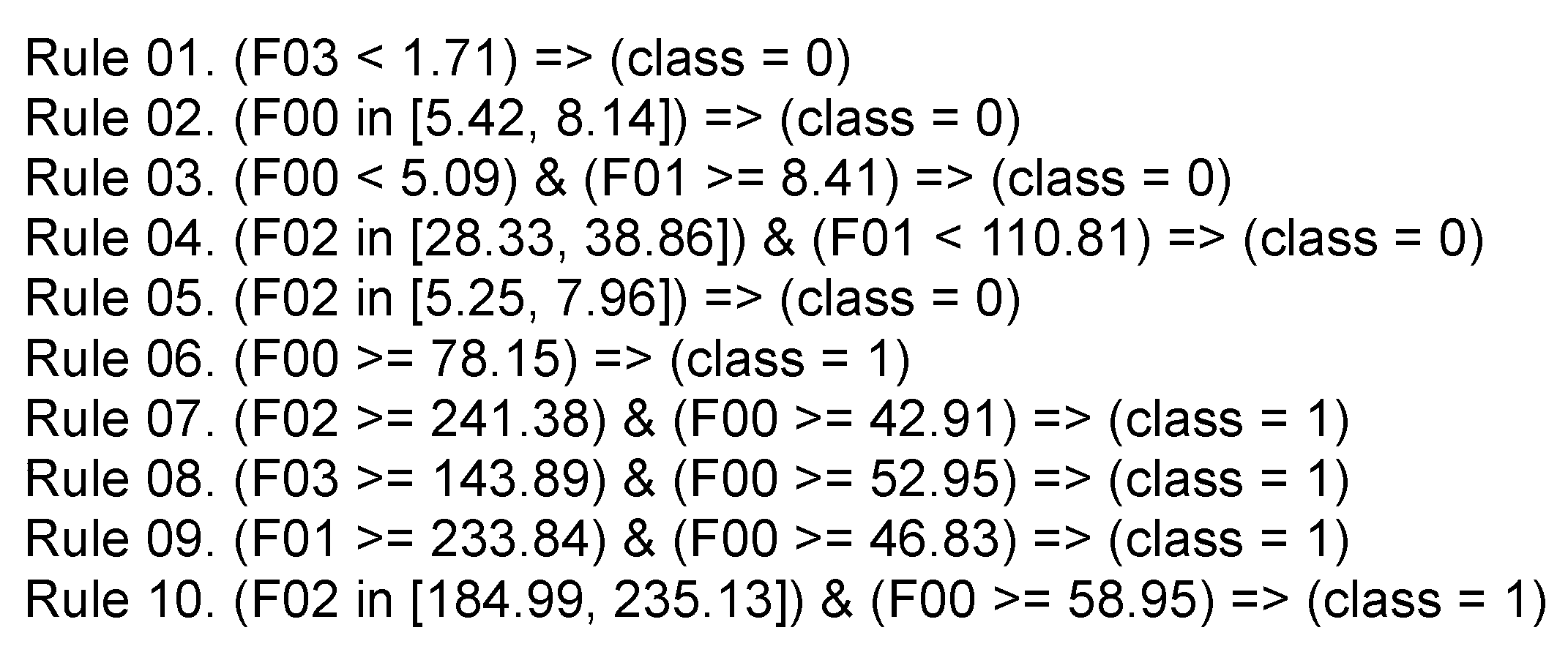

2.1. Rough-Set-Based Rules

2.2. Feature Extraction

2.3. Learning a Saliency Model

3. Experimental Results

3.1. Datasets and Quantitative Metrics

3.2. Parameter Setting

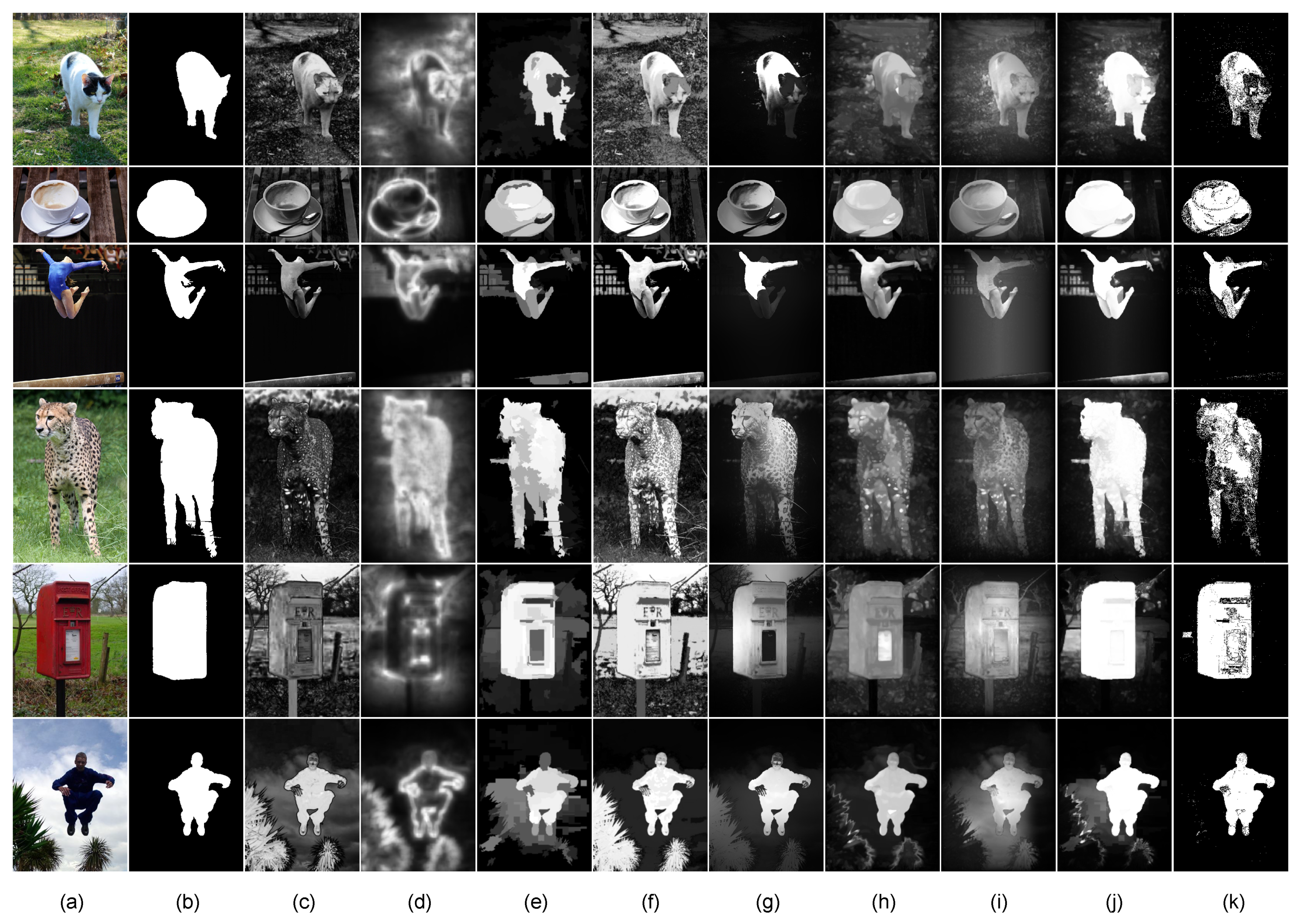

3.3. Evaluation

3.3.1. Comparison of Diverse Combinations

3.3.2. Saliency Detection Comparison Using an Adaptive Threshold

3.3.3. Saliency Detection Comparison with All Thresholds

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Method | F-Measure |
|---|---|
| RC (2011) | 0.720 |
| SF (2012) | 0.707 |
| MDC (2017) | 0.680 |
| MBS (2015) | 0.665 |
| FT (2009) | 0.601 |
| HC (2011) | 0.572 |
| CA (2010) | 0.467 |

Superior

| Combination | F-Measure |
|---|---|
| RC | 0.633 |
| RC, SF | 0.663 |
| RC, SF, MDC | 0.715 |
| RC, SF, MDC, MBS | 0.736 |
| RC, SF, MDC, MBS, FT | 0.730 |
| RC, SF, MDC, MBS, FT, HC | 0.726 |
| RC, SF, MDC, MBS, FT, HC, CA | 0.725 |

Inferior

| Combination | F-Measure |
|---|---|
| CA | 0.191 |
| CA, HC | 0.547 |
| CA, HC, FT | 0.566 |
| CA, HC, FT, MBS | 0.644 |
| CA, HC, FT, MBS, MDC | 0.667 |
| CA, HC, FT, MBS, MDC, SF | 0.687 |

| Method | Average F-Measure | Average Precision | Average Recall |
|---|---|---|---|
| FT (2009) | 0.577 | 0.624 | 0.481 |
| CA (2010) | 0.505 | 0.540 | 0.489 |
| RC (2011) | 0.759 | 0.780 | 0.737 |
| HC (2011) | 0.572 | 0.599 | 0.559 |
| SF (2012) | 0.722 | 0.760 | 0.589 |
| PW (2013) | 0.734 | 0.744 | 0.794 |
| MBS (2015) | 0.699 | 0.736 | 0.642 |
| MDC (2017) | 0.726 | 0.765 | 0.640 |
| RSD (ours) | 0.770 | 0.847 | 0.513 |
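For readers who want to reproduce figures of this kind, the following is a minimal sketch of how precision, recall and F-measure might be computed for a single saliency map after adaptive thresholding. The function name `f_measure`, the threshold rule (twice the mean saliency, capped at 1) and the weight `beta_sq = 0.3` are assumptions borrowed from common practice in the salient-object-detection literature; none of them is stated in this text, so the sketch should not be read as the authors' exact protocol.

```python
import numpy as np

def f_measure(saliency, ground_truth, beta_sq=0.3):
    """Return (precision, recall, F) for one image.

    saliency: float array with values in [0, 1].
    ground_truth: boolean array of the same shape.
    ASSUMPTION: the adaptive threshold is twice the mean saliency (capped at 1)
    and beta_sq = 0.3; both are conventions, not values taken from this text.
    """
    threshold = min(2.0 * float(saliency.mean()), 1.0)
    mask = saliency >= threshold                      # binarized saliency map
    true_pos = np.logical_and(mask, ground_truth).sum()
    n_detected = mask.sum()
    n_salient = ground_truth.sum()
    precision = true_pos / n_detected if n_detected else 0.0
    recall = true_pos / n_salient if n_salient else 0.0
    denom = beta_sq * precision + recall
    f = (1.0 + beta_sq) * precision * recall / denom if denom else 0.0
    return float(precision), float(recall), float(f)

# Toy example: three pixels are detected, two of them are truly salient.
saliency = np.array([[1.0, 1.0, 0.0, 0.0],
                     [1.0, 0.0, 0.0, 0.0]])
ground_truth = np.array([[True, True, False, False],
                         [False, True, False, False]])
precision, recall, f = f_measure(saliency, ground_truth)
```

Averaging the three returned values over all images of a dataset would give per-method rows like those in the table above. Note that the average of per-image F-measures generally differs from the F-measure computed from the averaged precision and recall.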

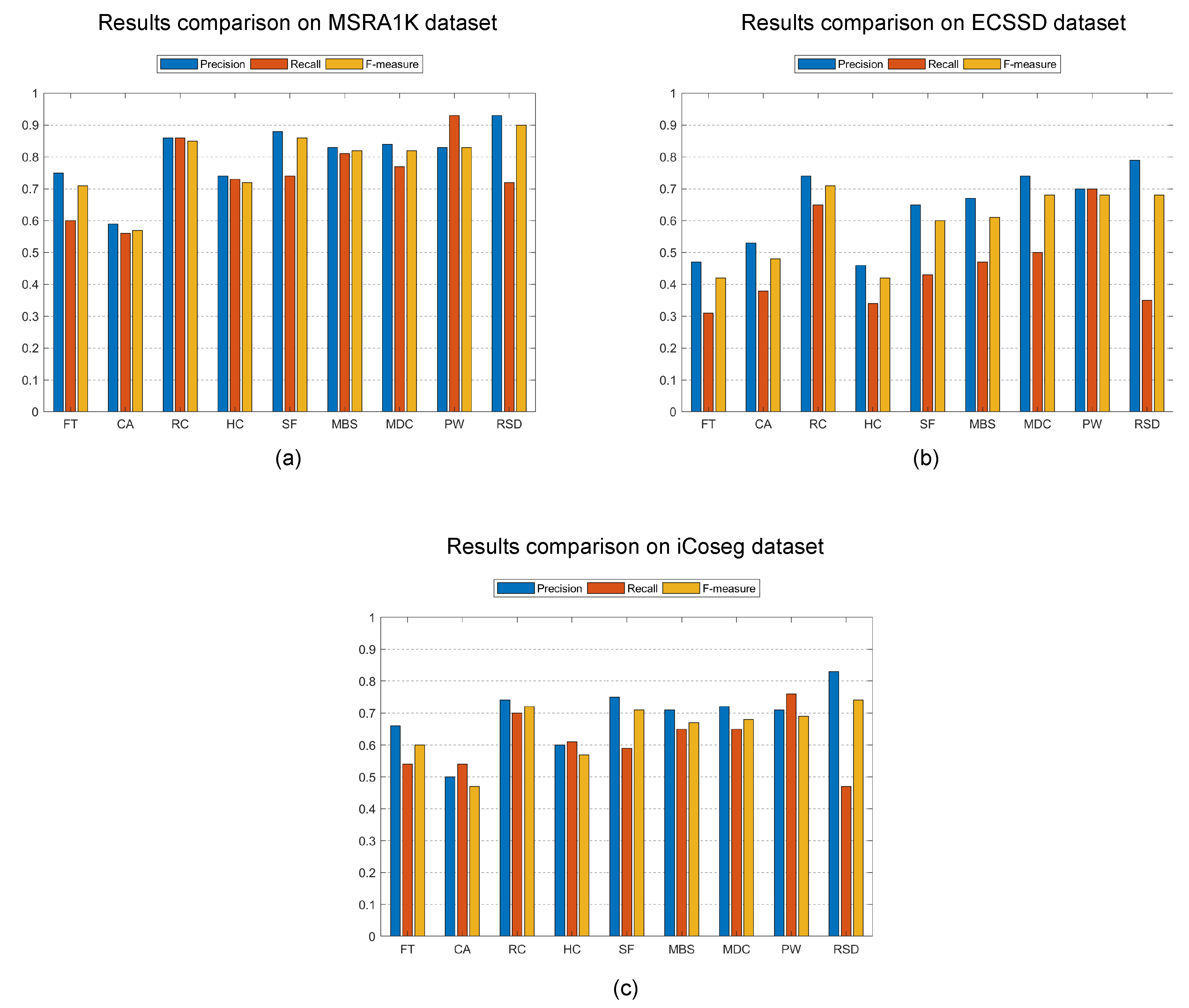

| Method (year) | MSRA1K (Average F-Measure) | ECSSD (Average F-Measure) | iCoseg (Average F-Measure) |
|---|---|---|---|
| FT (2009) | 0.481 | 0.280 | 0.434 |
| CA (2010) | 0.439 | 0.374 | 0.407 |
| RC (2011) | 0.761 | 0.628 | 0.675 |
| HC (2011) | 0.652 | 0.388 | 0.553 |
| SF (2012) | 0.733 | 0.489 | 0.611 |
| PW (2013) | 0.816 | 0.637 | 0.677 |
| MBS (2015) | 0.596 | 0.415 | 0.516 |
| MDC (2017) | 0.584 | 0.447 | 0.515 |
| RSD (ours) | 0.896 | 0.679 | 0.735 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lopez-Alanis, A.; Lizarraga-Morales, R.A.; Sanchez-Yanez, R.E.; Martinez-Rodriguez, D.E.; Contreras-Cruz, M.A. Visual Saliency Detection Using a Rule-Based Aggregation Approach. Appl. Sci. 2019, 9, 2015. https://doi.org/10.3390/app9102015
