2.1. Image Dataset

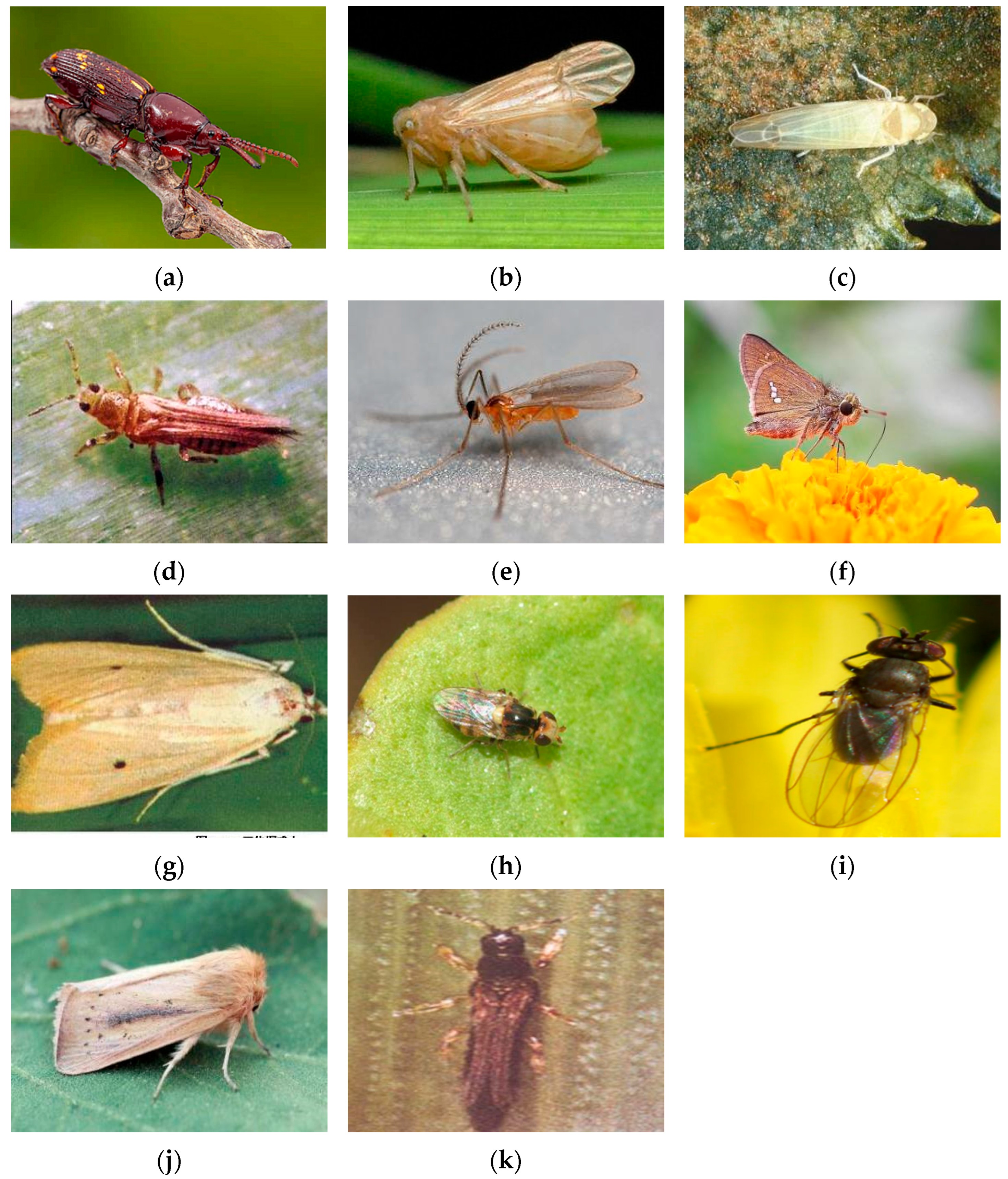

This research uses the RP11 (Rice Pest 11) dataset, created by Ding et al. [27], as its foundation. The RP11 dataset was derived from the large-scale IP102 pest dataset [28] through screening, cleaning, and professional re-annotation by plant protection experts based on strict morphological criteria. It differs significantly from the original dataset in taxonomy, annotation accuracy, and image quality.

The RP11 dataset is built from two image sources. The first consists of manually selected high-quality adult insect images from the “rice” super-category of IP102. The second consists of additional images collected from authoritative web databases, such as GBIF and Insect Images, by a web crawler driven by Latin names. These additional images were added to balance the category distribution and were subsequently verified by experts.

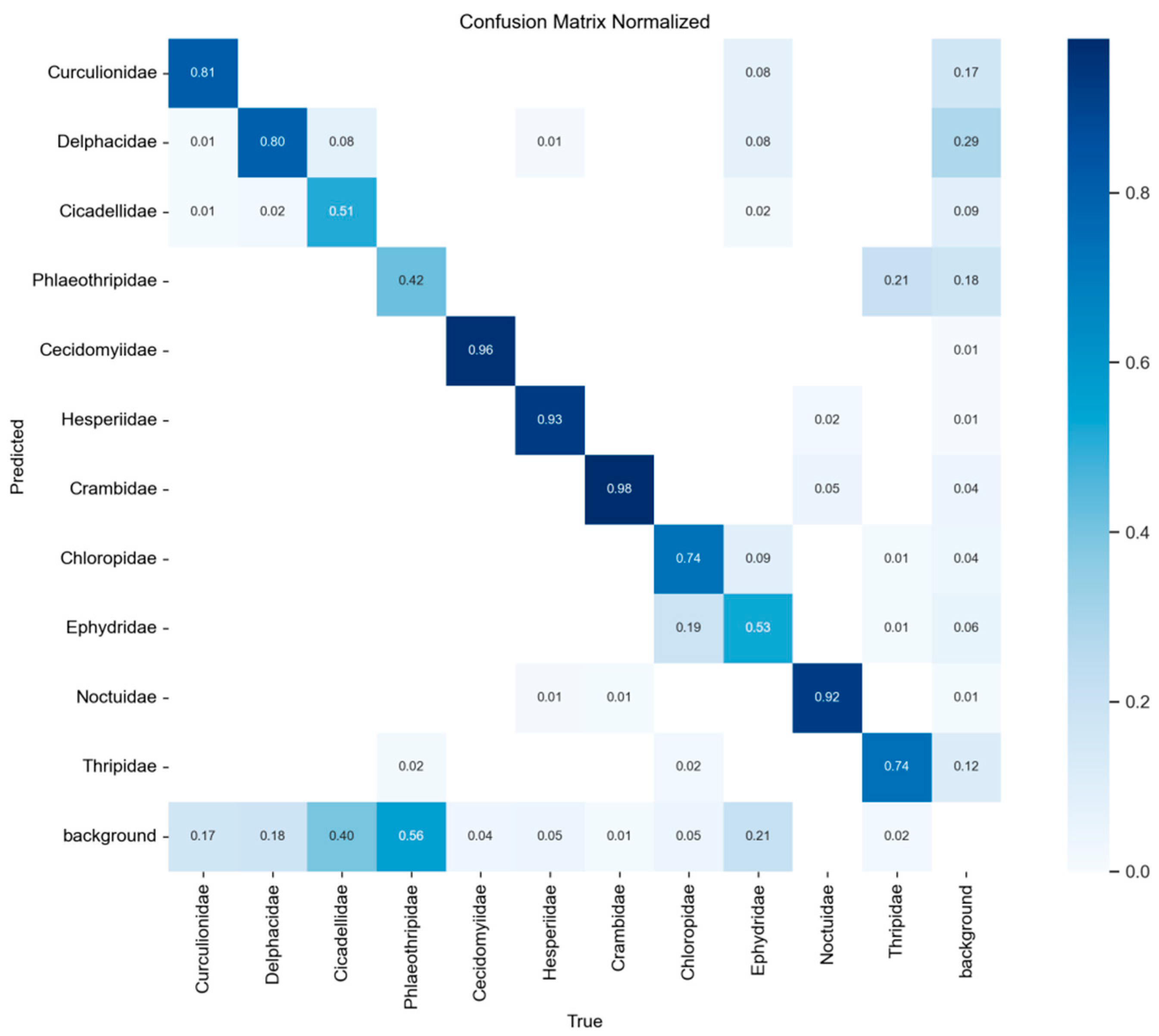

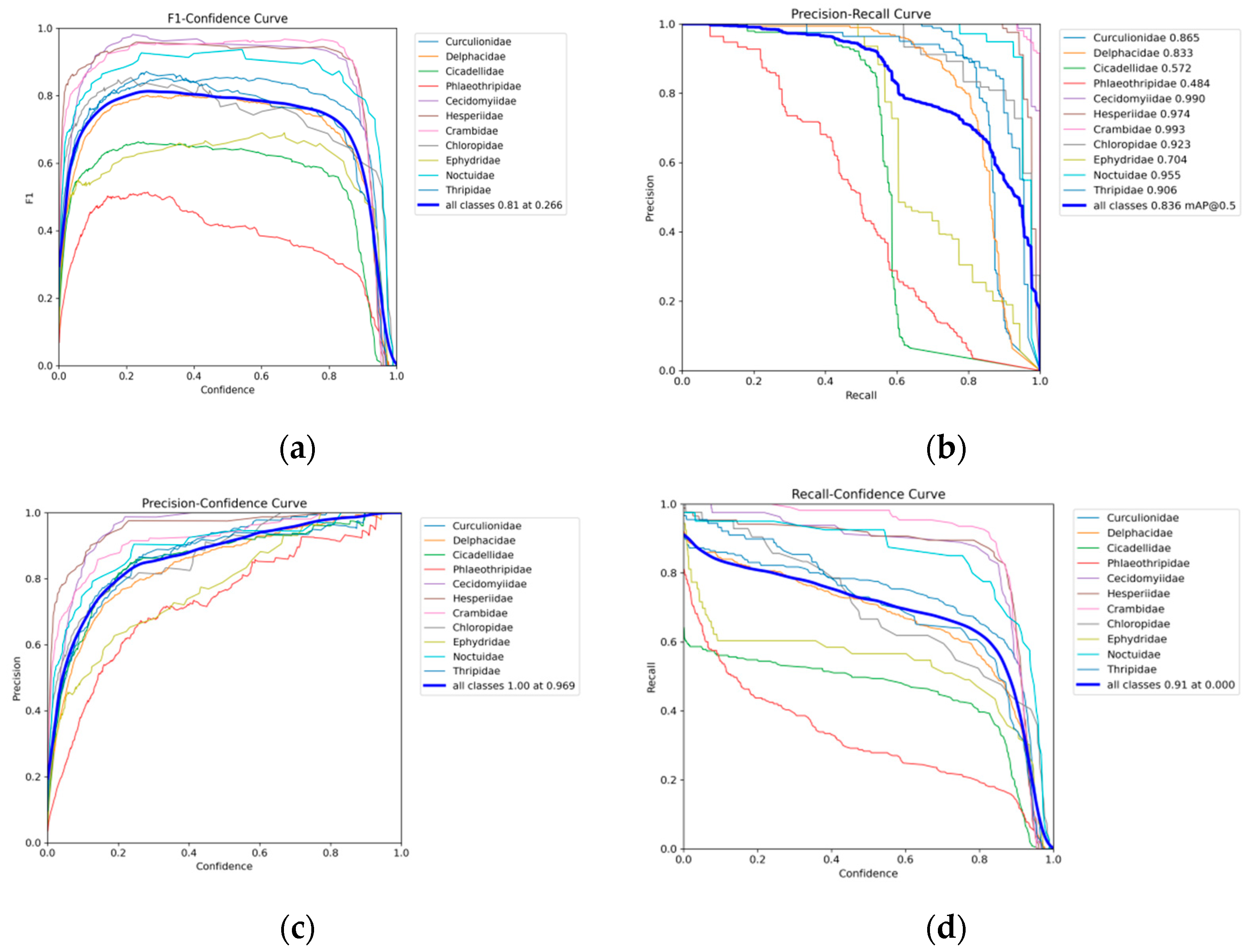

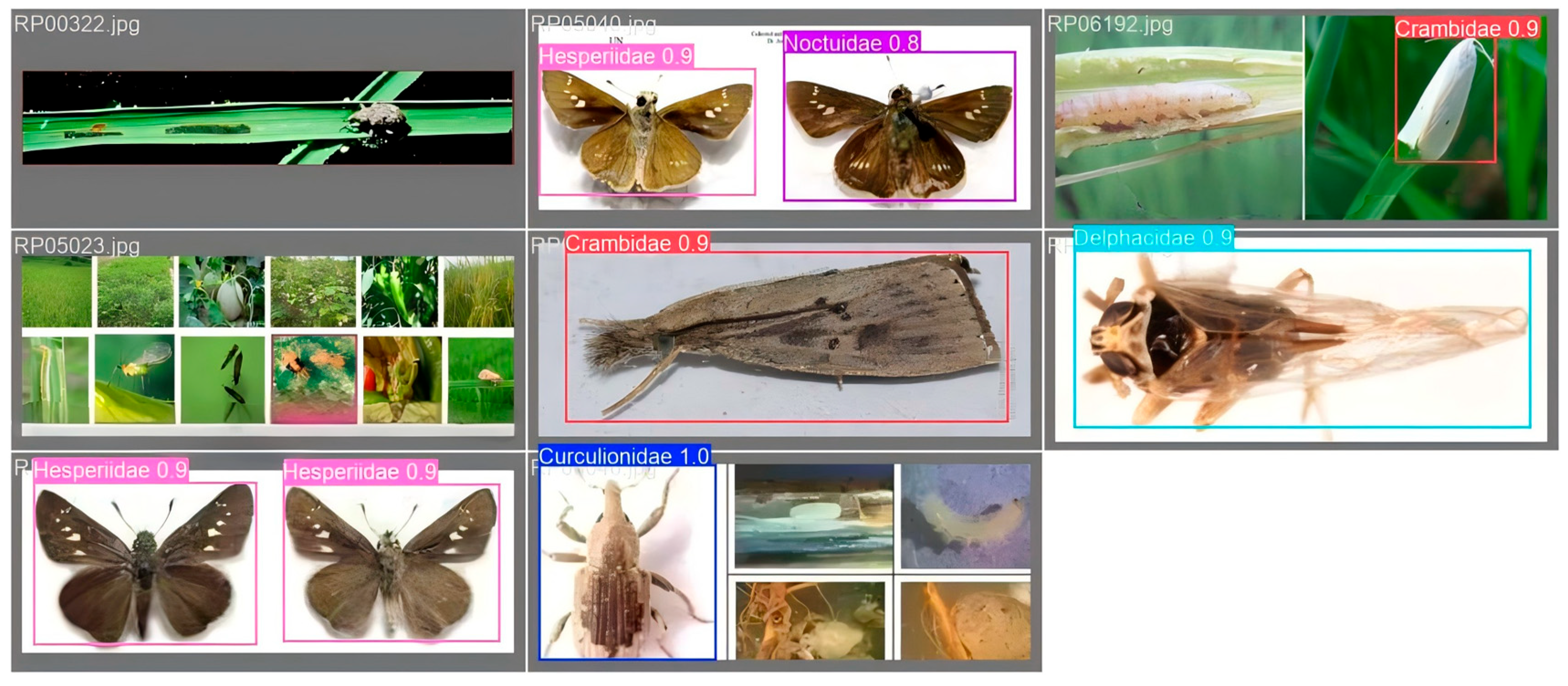

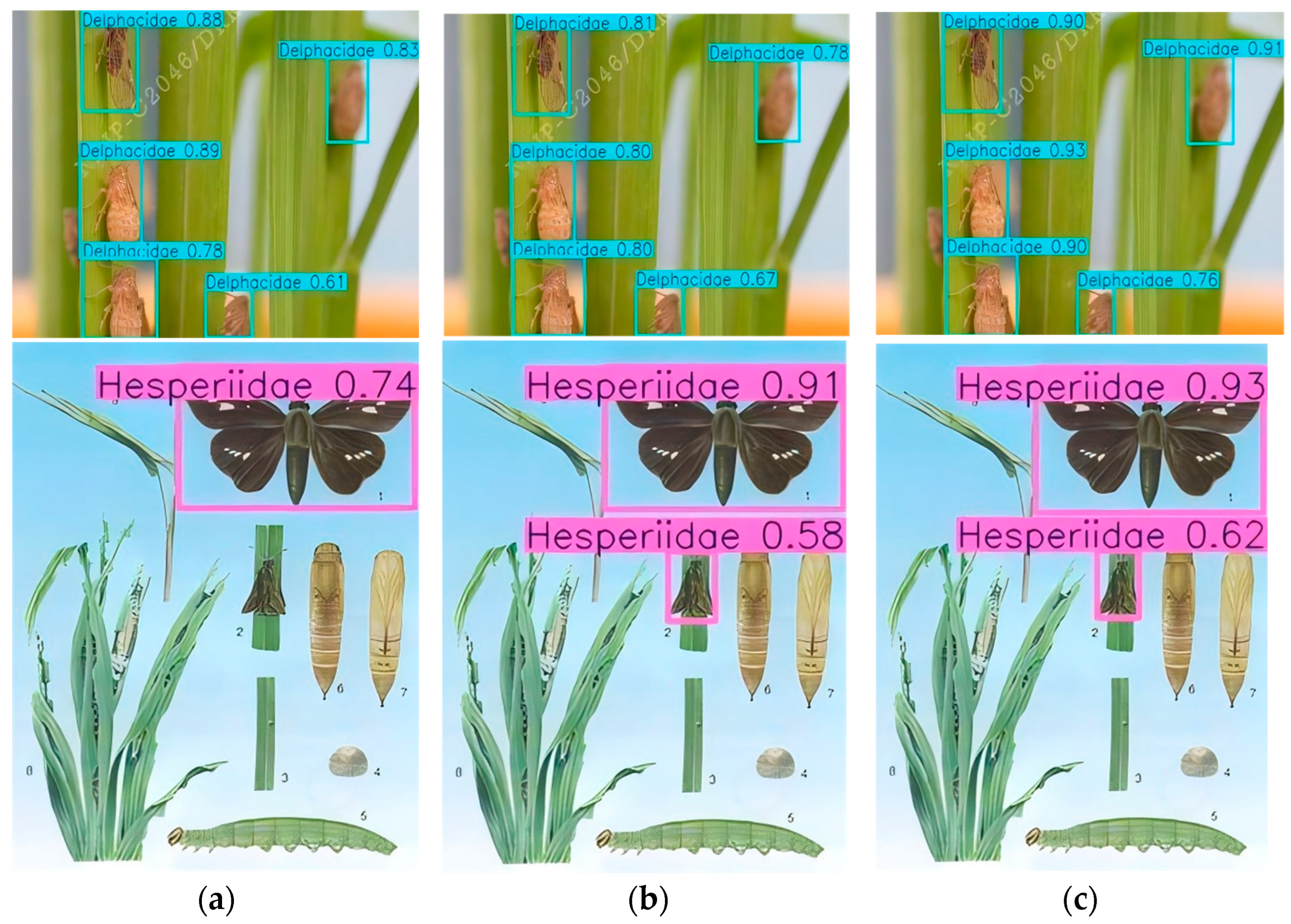

Figure 1 shows representative image samples from the adult pest categories in the RP11 dataset. The distribution of labels across categories is provided in Table 1.

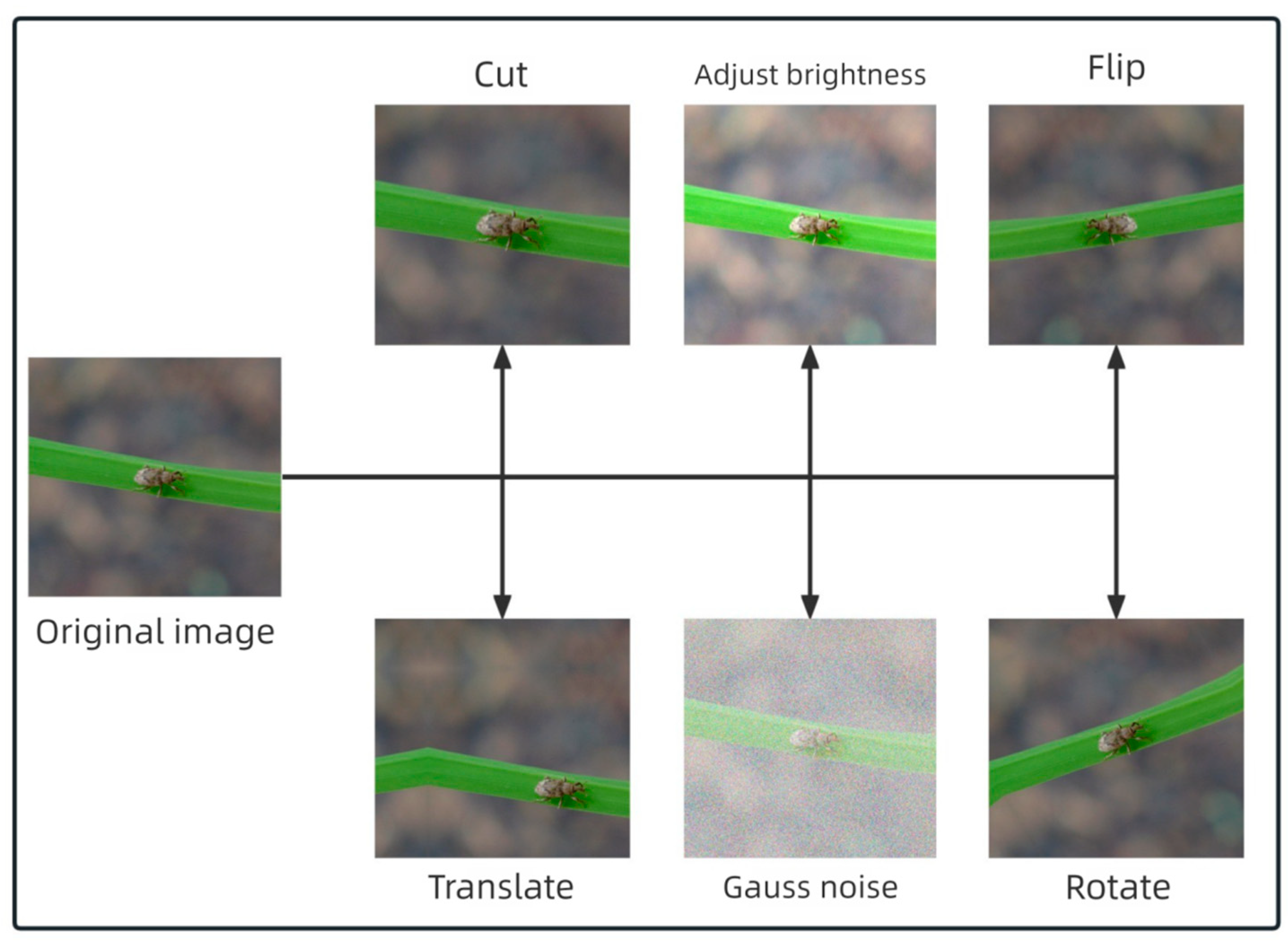

To improve the model’s generalization in complex field environments and to prevent over-fitting, we apply offline data augmentation to the training set, using both geometric and content transformations. Geometric transformations, including random scaling and cropping, horizontal flipping, translation, and rotation, simulate variation in target scale and spatial position. Content transformations, including brightness adjustment and Gaussian noise injection, simulate variation in lighting conditions and in sensor noise across capture devices. The complete data augmentation workflow is shown in Figure 2.

These augmentation strategies collectively enhance the model’s adaptability to varying illumination, occlusion, and sensor noise, improving its robustness in complex field environments.
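To make the two transformation families concrete, the following is a minimal NumPy sketch of one geometric transformation (horizontal flipping, with the bounding boxes mirrored accordingly) and the two content transformations (brightness adjustment and Gaussian noise). The function names, the `(x1, y1, x2, y2)` pixel box format, and the parameter values are assumptions chosen for illustration; the source does not specify the augmentation parameters or tooling.

```python
import numpy as np

def hflip(image, boxes):
    """Flip an H x W x C image left-right and mirror (x1, y1, x2, y2) pixel boxes."""
    width = image.shape[1]
    flipped = image[:, ::-1].copy()
    out = boxes.astype(np.float64)
    out[:, 0] = width - boxes[:, 2]  # new x1 is the mirrored old x2
    out[:, 2] = width - boxes[:, 0]  # new x2 is the mirrored old x1
    return flipped, out

def adjust_brightness(image, factor):
    """Scale pixel intensities and clip back to the valid uint8 range."""
    return np.clip(image.astype(np.float32) * factor, 0, 255).astype(np.uint8)

def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma (intensity units)."""
    noise = rng.normal(0.0, sigma, size=image.shape)
    return np.clip(image.astype(np.float32) + noise, 0, 255).astype(np.uint8)

# Hypothetical example: a random 64 x 96 RGB image with one annotated box.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 96, 3), dtype=np.uint8)
boxes = np.array([[10, 20, 30, 40]])

flipped, flipped_boxes = hflip(image, boxes)
brighter = adjust_brightness(image, 1.3)            # assumed brightness factor
noisy = add_gaussian_noise(image, sigma=8.0, rng=rng)  # assumed noise level
```

Geometric transformations must update the box annotations together with the pixels, whereas content transformations leave the boxes unchanged.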

The dataset was randomly divided into training, validation, and test subsets with a ratio of 7:2:1 (3189/911/457 images). To prevent data leakage, each image in the RP11 dataset was assigned to only one subset, ensuring no overlap between training, validation, and test data.
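A disjoint random split of this kind can be sketched as follows. This is an illustrative example only: the helper name `split_dataset`, the fixed seed, and the floor-based rounding are assumptions, and integer image indices stand in for the actual image files.

```python
import random

def split_dataset(items, ratios=(7, 2, 1), seed=0):
    """Shuffle once, then cut into train/val/test so that no item is shared."""
    items = list(items)
    random.Random(seed).shuffle(items)
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # remainder goes to the test subset
    return train, val, test

# 3189 + 911 + 457 = 4557 images in total
train, val, test = split_dataset(range(4557))
print(len(train), len(val), len(test))  # 3189 911 457
```

Because the three subsets are slices of one shuffled list, every image lands in exactly one subset.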

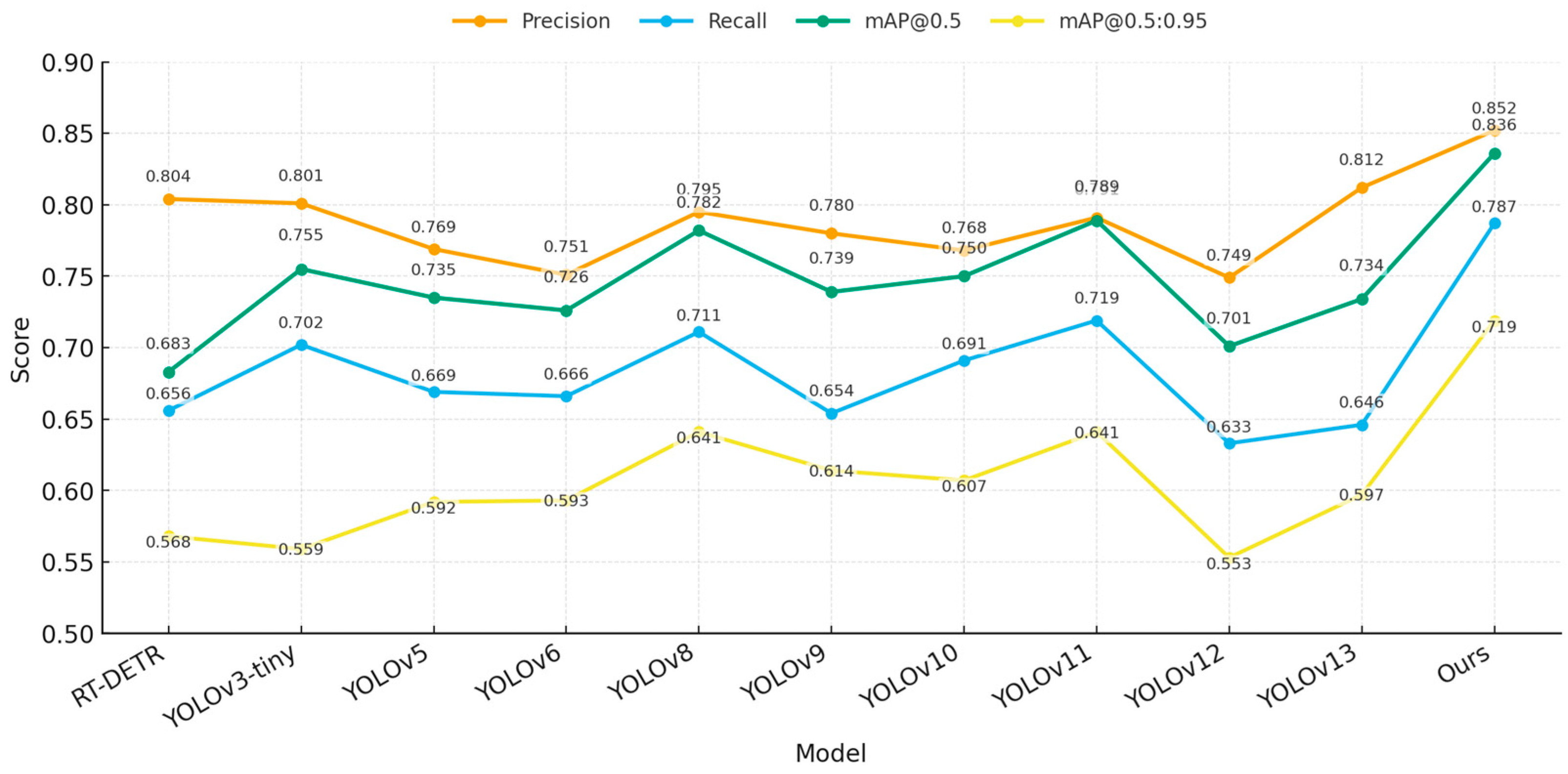

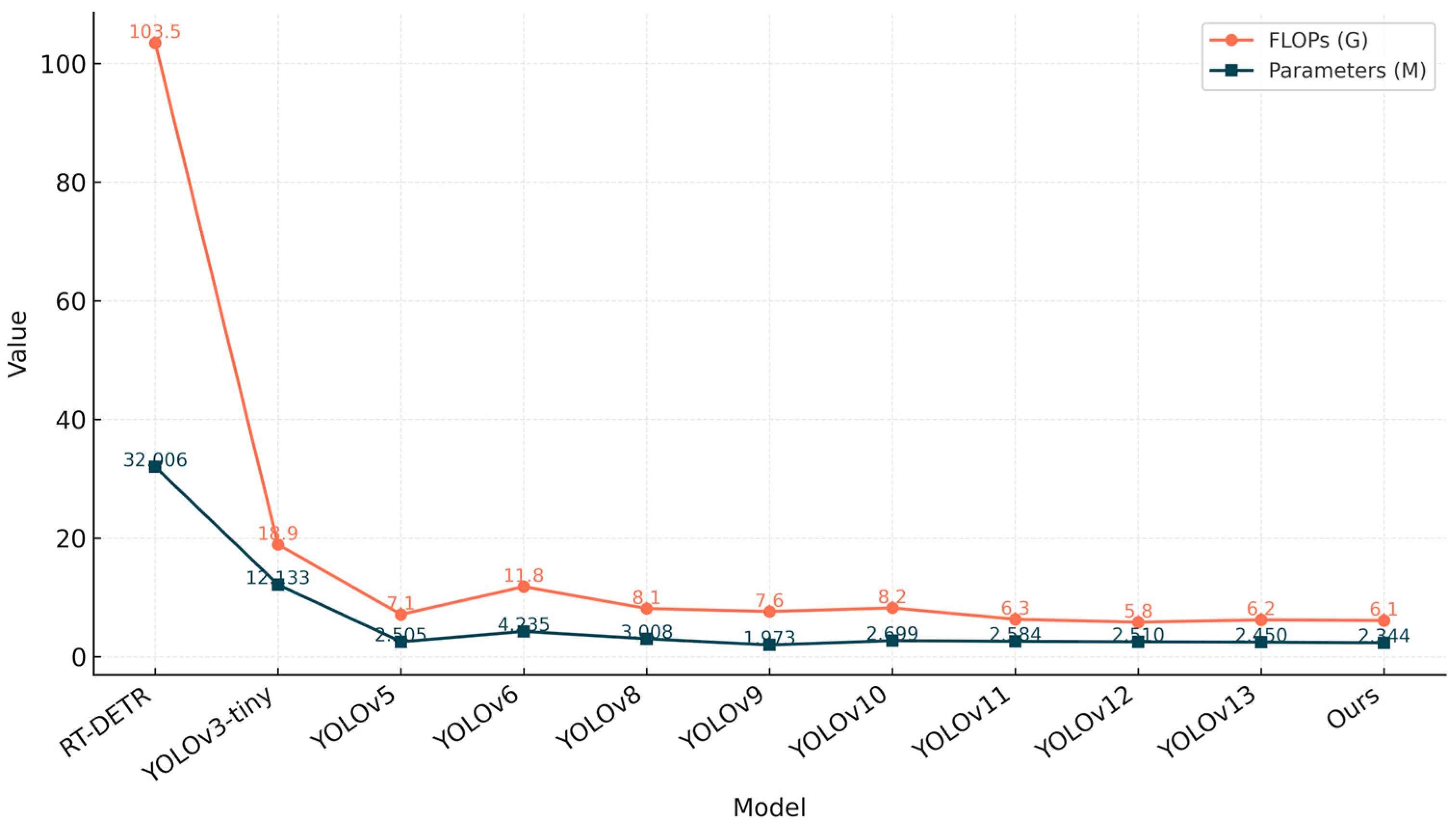

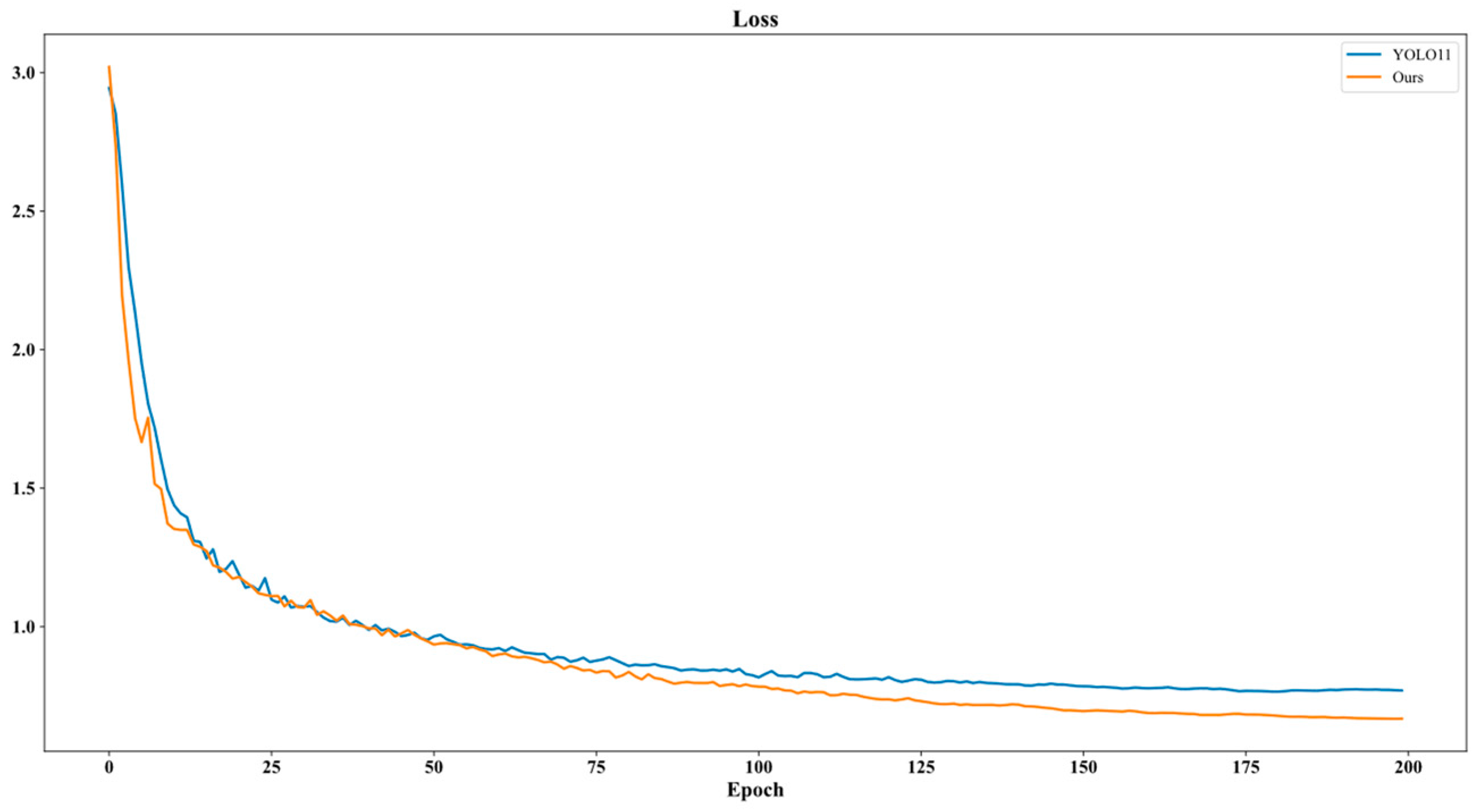

2.2. CRRE-YOLO

The YOLO series is a mainstream family of object detection architectures that balances real-time capability and accuracy [29]. This paper adopts YOLOv11n [30] as the baseline model. YOLOv11 is structurally optimized for better feature extraction and faster inference, and it can be scaled into variants (n, s, m, l, x) with more or fewer parameters and correspondingly different representational capacity. To meet the requirements of edge computing and real-time analysis in agricultural applications, we choose the lightest and fastest variant, YOLOv11n, as our baseline.

However, when applying YOLOv11n to paddy pest detection, we found three limitations in complex field environments. First, it struggled to extract fine-grained pest features. Second, it had difficulty regressing accurate bounding boxes for targets of different sizes. Third, it adapted poorly to complex scenes.

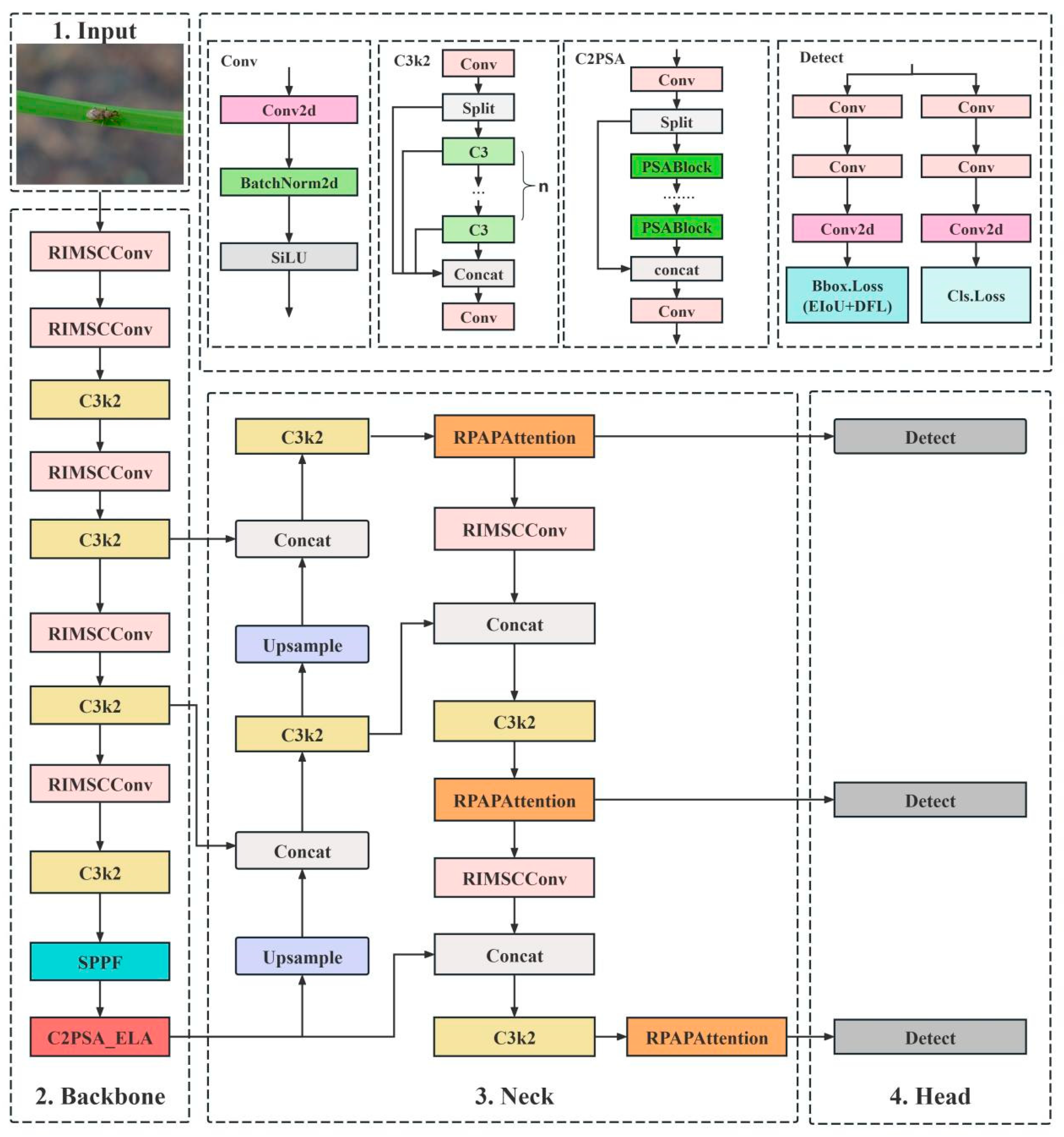

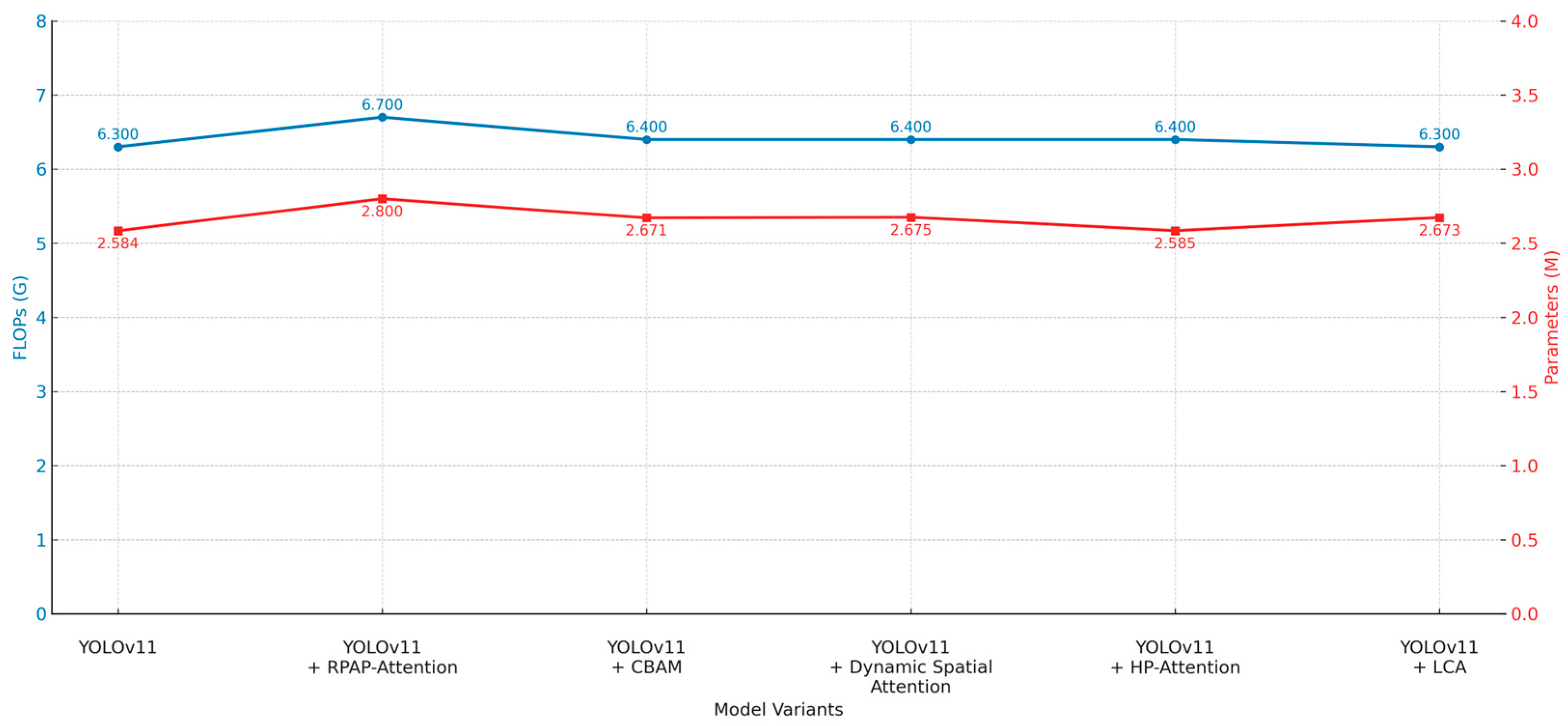

To address these issues, we propose a new model, CRRE-YOLO, which introduces four components that incrementally enhance detection performance: C2PSA_ELA, an Efficient Local Attention module; RPAPAttention, an Adaptive Perception Attention mechanism; RIMSCConv, a lightweight MultiScale Convolution module; and the EIoU loss function. The overall architecture of CRRE-YOLO is shown in Figure 3.

The design of CRRE-YOLO follows a problem-driven rationale aimed at the specific challenges of rice-pest detection. Rice pests are typically small, densely distributed, and often visually similar to background textures under complex field illumination, while real-time edge deployment demands high computational efficiency.

To address these issues, four complementary modules were integrated:

EIoU loss improves localization stability for small and overlapping pests.

C2PSA_ELA enhances local-detail extraction to distinguish fine pest features from complex backgrounds.

RPAPAttention strengthens texture discrimination and robustness to occlusion or illumination changes.

RIMSCConv provides efficient multiscale perception with minimal computation for edge devices.

Together, these modules form a compact and efficient architecture that directly addresses small-target recognition, background interference, and computational efficiency constraints, establishing a clear methodological rationale for the proposed CRRE-YOLO framework.

Each component plays a key role in addressing these challenges. C2PSA_ELA enhances fine-grained feature perception, RPAPAttention improves feature discrimination under complex backgrounds, RIMSCConv strengthens multiscale adaptability, and EIoU loss refines bounding-box regression. Together, these modules greatly enhance the model’s robustness and detection accuracy in real-world paddy fields.

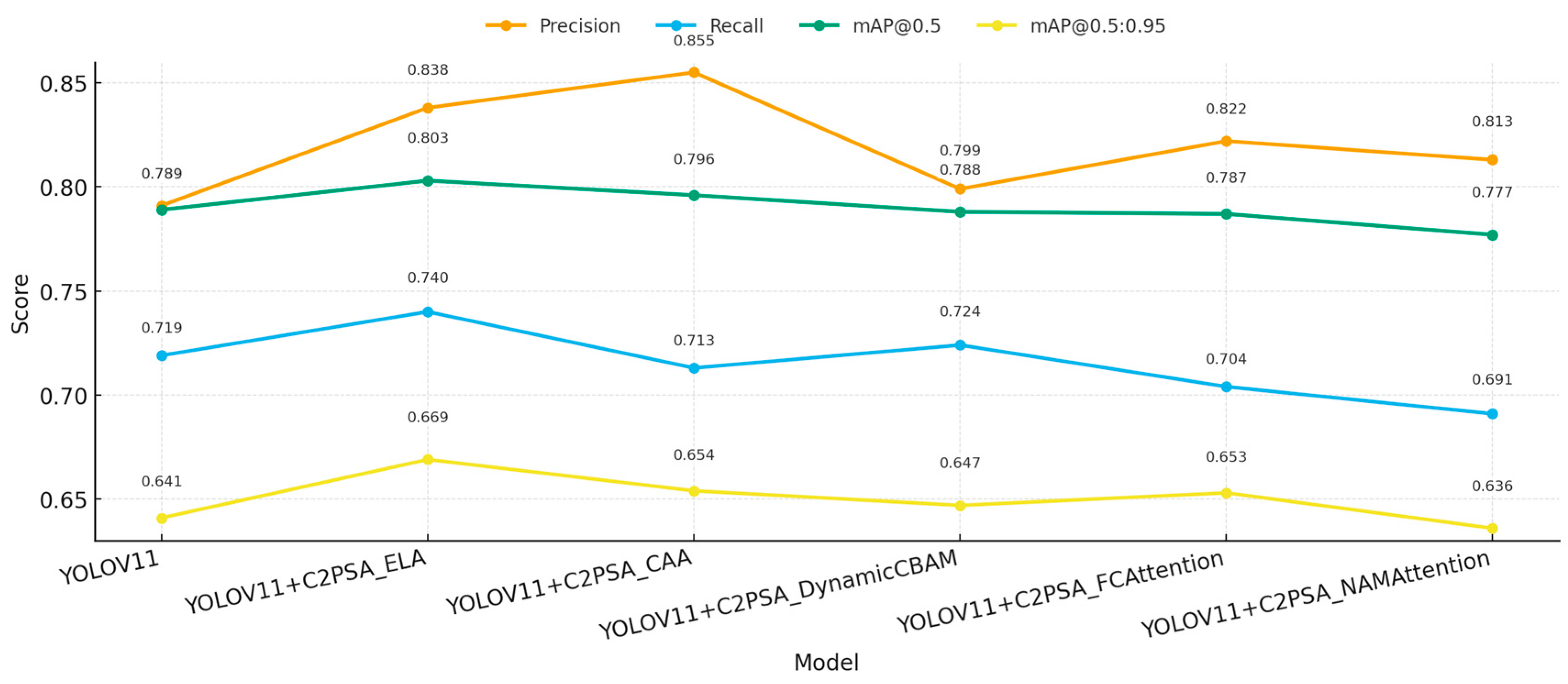

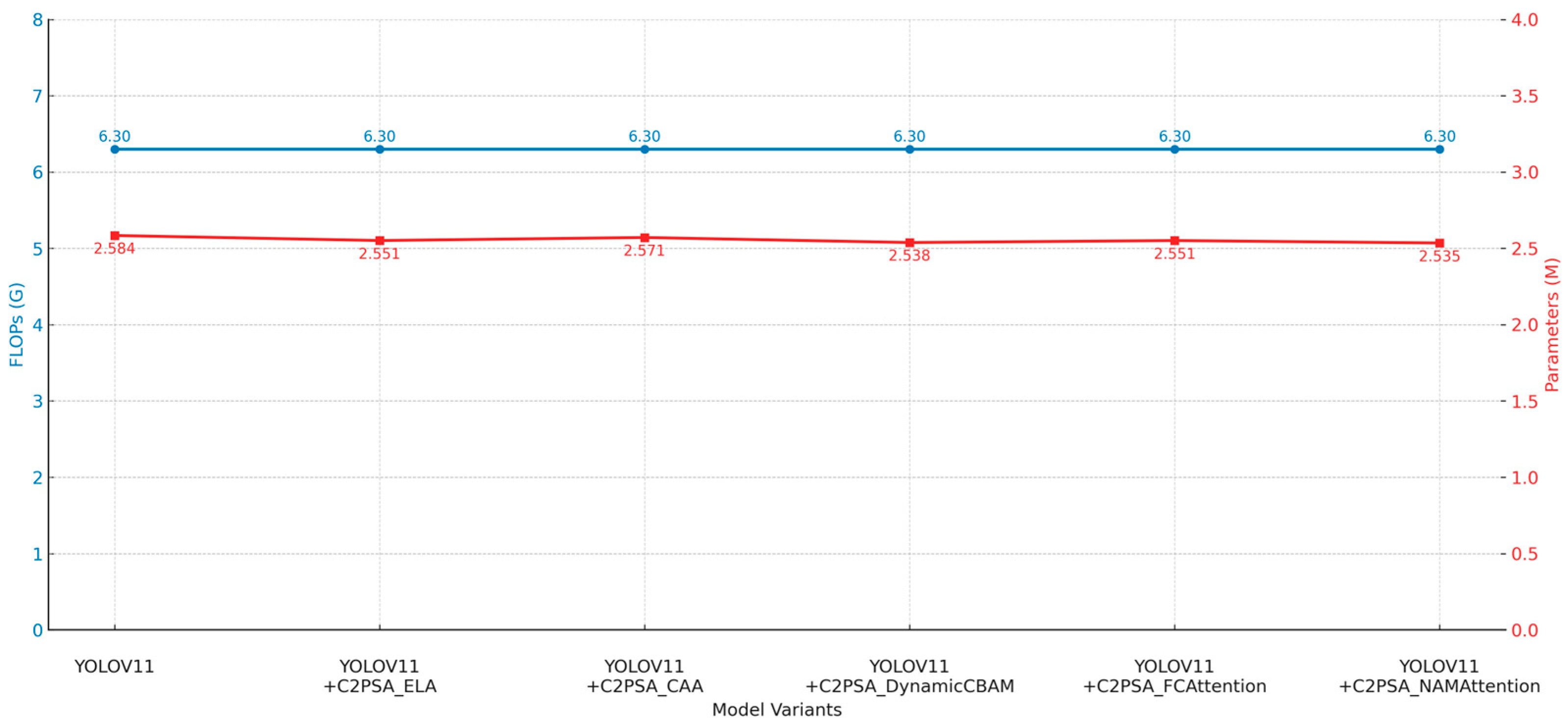

2.2.1. C2PSA_ELA

Rice pest targets are highly similar to their background of rice leaves and panicles. For example, Curculionidae and Delphacidae are almost indistinguishable from rice leaves in both color and texture, which makes it challenging for models to separate foreground from background. The original C2PSA module partially improves feature modeling through Position-Sensitive Attention. However, because Position-Sensitive Attention relies heavily on global spatial relationships, its local perception ability is relatively weak. In complex paddy field scenes, this weakness often leads to missed small targets and ambiguous feature representations, which limits further gains in detection accuracy.

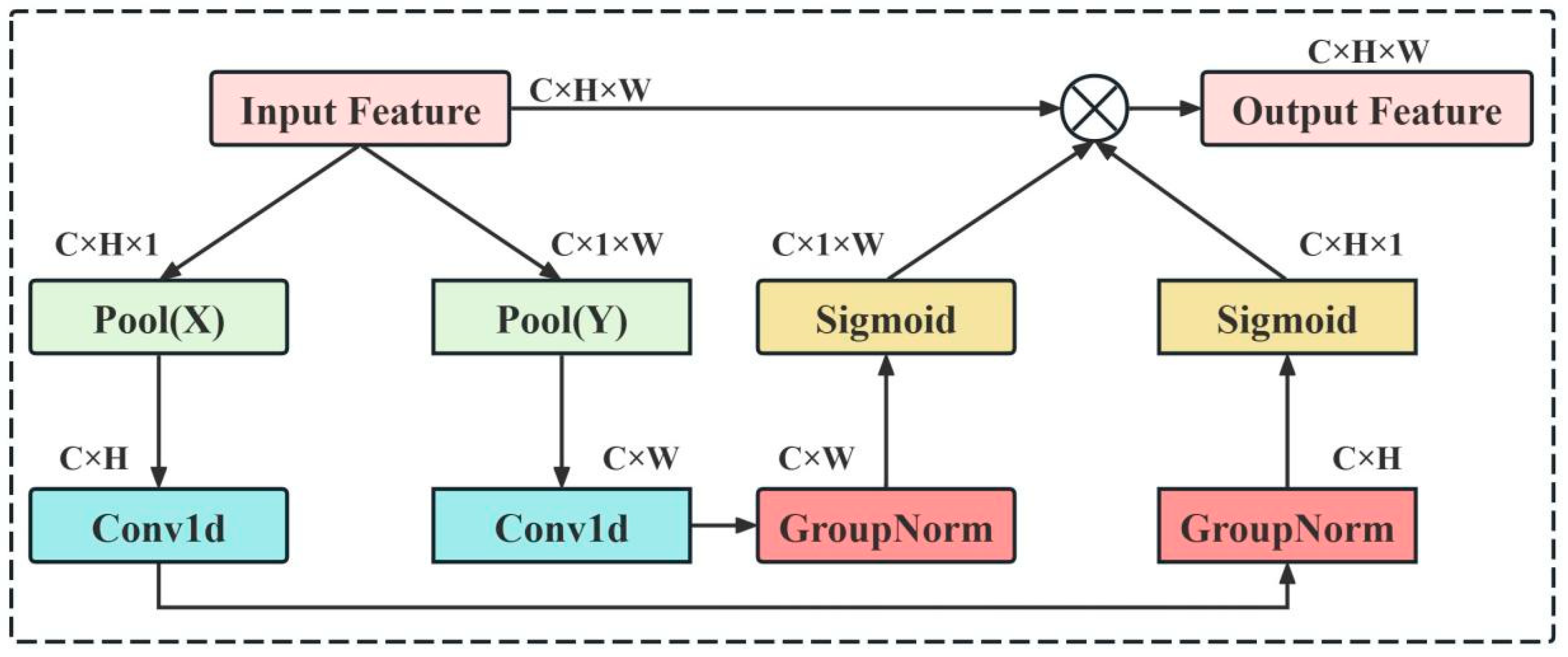

To address the above issues, we introduce an Efficient Local Attention (ELA) mechanism [31]. As shown in Figure 4, ELA adaptively performs statistical modeling along the horizontal and vertical directions of the feature map to extract row-wise and column-wise information, and then uses lightweight convolutions to enhance the localized features. Unlike attention methods that focus on global spatial relationships, ELA focuses on spatially local structures and enhances discriminative local details at a low computational cost. It also suppresses background interference and increases the model’s sensitivity to subtle features, which makes it suitable for detection scenarios with complex backgrounds and varying textures in rice fields.
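The row and column modeling described here can be sketched in NumPy for a single feature map. This is a simplified illustration under stated assumptions, not the authors’ implementation: the function names, the kernel size of 7, and the random weights are hypothetical, and any normalization layer that a full implementation might place between the convolution and the sigmoid is omitted.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def depthwise_conv1d(x, kernels):
    """Per-channel 1D convolution with zero padding; x is (C, L), kernels is (C, k), k odd."""
    k = kernels.shape[1]
    padded = np.pad(x, ((0, 0), (k // 2, k // 2)))
    windows = np.lib.stride_tricks.sliding_window_view(padded, k, axis=1)  # (C, L, k)
    return np.einsum("clk,ck->cl", windows, kernels)

def ela(x, kernels_h, kernels_w):
    """Reweight a (C, H, W) feature map with row-wise and column-wise attention."""
    row_stats = x.mean(axis=2)  # (C, H): each row averaged over the width
    col_stats = x.mean(axis=1)  # (C, W): each column averaged over the height
    attn_h = sigmoid(depthwise_conv1d(row_stats, kernels_h))  # (C, H)
    attn_w = sigmoid(depthwise_conv1d(col_stats, kernels_w))  # (C, W)
    return x * attn_h[:, :, None] * attn_w[:, None, :]

# Hypothetical example with random features and weights.
rng = np.random.default_rng(0)
x = rng.standard_normal((4, 6, 8))
kernels_h = rng.standard_normal((4, 7)) * 0.1
kernels_w = rng.standard_normal((4, 7)) * 0.1
out = ela(x, kernels_h, kernels_w)
print(out.shape)  # (4, 6, 8)
```

Because the attention weights come from 1D convolutions over neighbouring rows and columns, each position is rescaled according to local context rather than a single global statistic.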

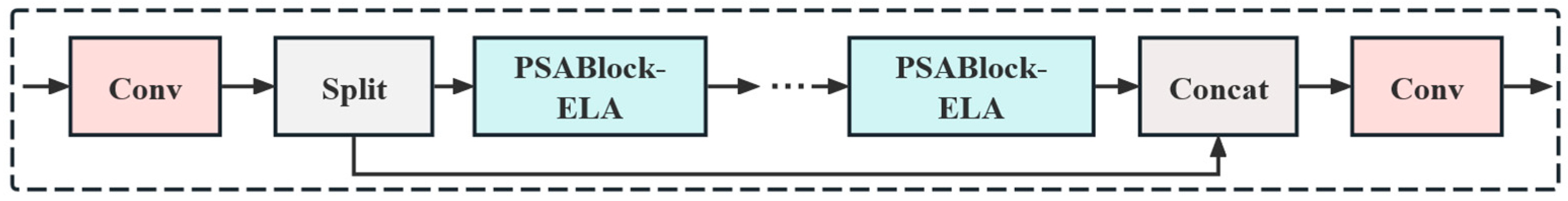

Based on the ELA mechanism, we further propose C2PSA_ELA, whose structure is shown in Figure 5. Like the original C2PSA module, it uses a dual-branch convolutional structure, but ELA is embedded into the PSABlock to construct a multi-level local attention enhancement path. Specifically, one branch preserves the input features, while the other chains multiple EBs based on the ELA-PSABlock to perform local attention extraction and nonlinear transformation sequentially. Through this stacked design, C2PSA_ELA gradually enhances the visual saliency of target regions. Finally, a lightweight convolutional structure fuses the two branches, whose complementary features improve the model’s discrimination ability.

Within the backbone network, C2PSA_ELA offers the following advantages for rice field pest detection. First, ELA compensates for the original module’s insufficient local feature expression, improving the representation of small targets and the perception of differences between targets and backgrounds. Second, local feature expression is enhanced without sacrificing global semantic information, which avoids the semantic loss caused by excessive localization. Finally, the module localizes small pests accurately while keeping computational complexity under control, improving the overall accuracy and robustness of the model. The experimental results show that C2PSA_ELA is an effective enhancement of C2PSA for intelligent pest monitoring in rice fields with complex environments.

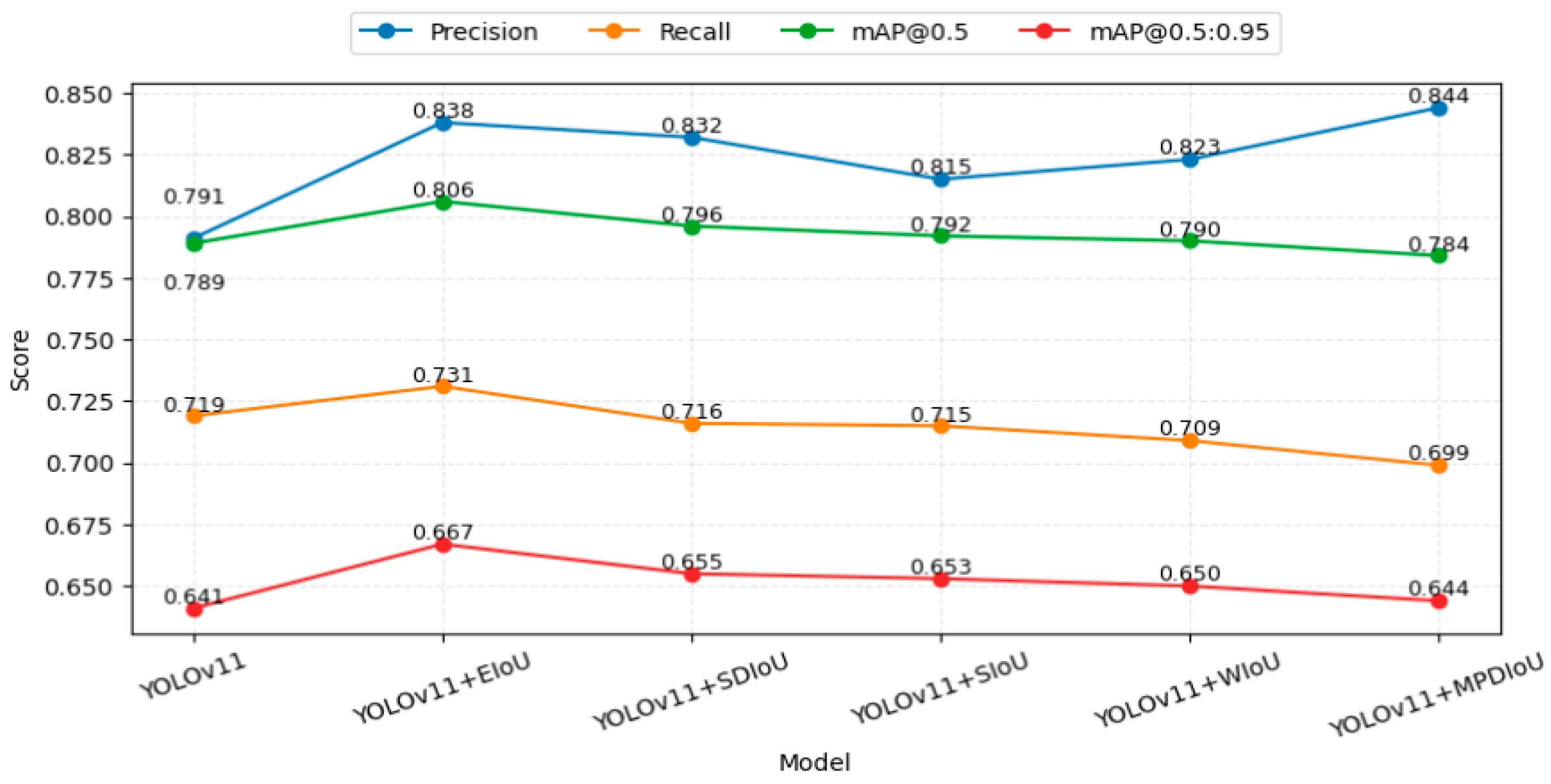

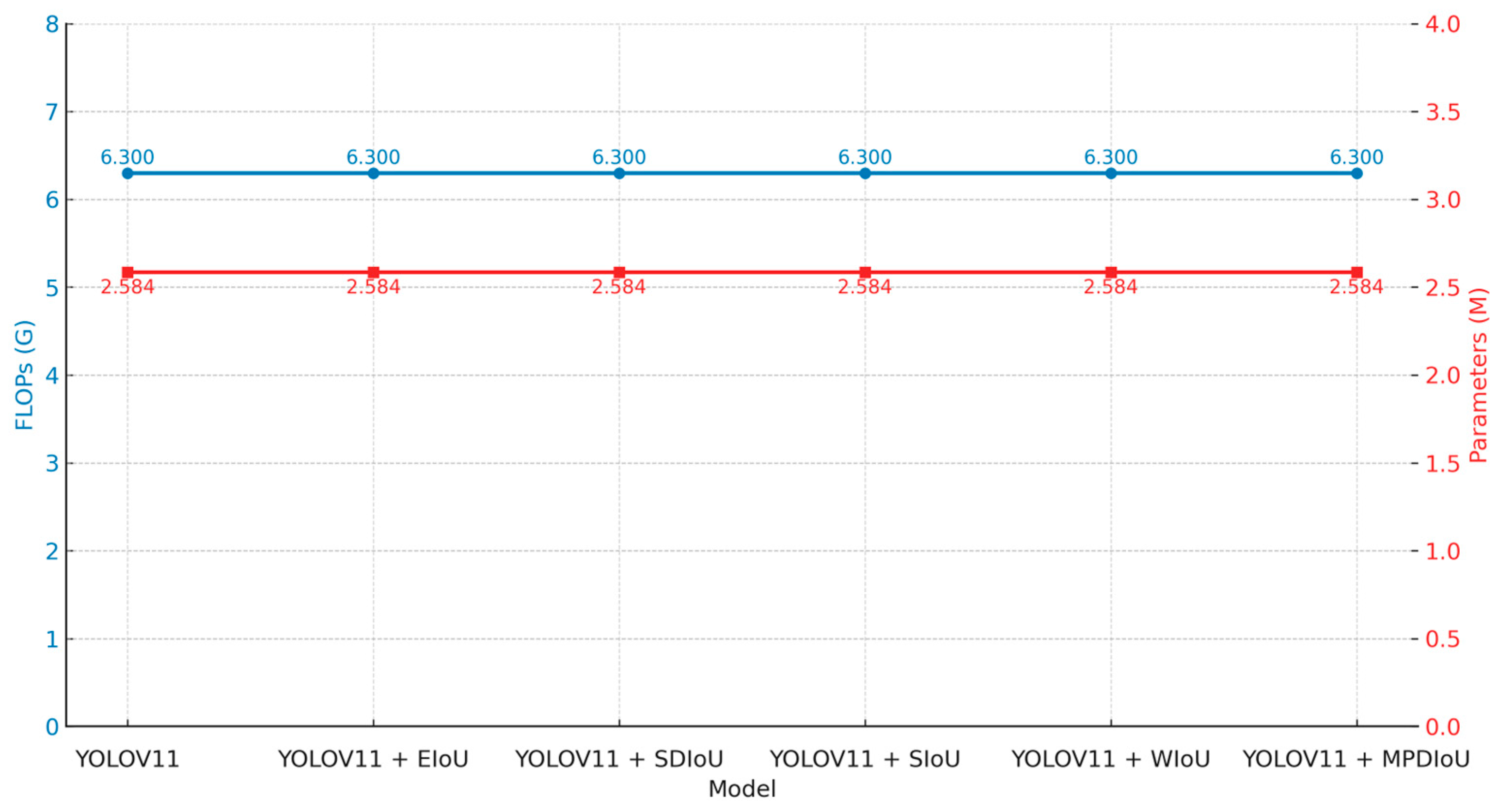

2.2.2. EIoU

In rice field pest detection, targets are very small, morphologically diverse, and highly similar to complex backgrounds, which poses severe challenges for bounding box regression. The traditional CIoU (Complete IoU) loss function performs well in general object detection, but its optimization mechanism shows clear disadvantages in tasks such as pest detection, which involve dense small targets and strong background interference. First, its aspect-ratio term can cause training instability when regressing small targets. Second, convergence is slow under complex background noise. Third, the optimization depends heavily on geometric features and cannot model local target characteristics in the boundary areas between pest and plant, which often causes large localization errors in the detection boxes.

To solve these problems, this paper adopts the Efficient IoU (EIoU) loss function [32]. By reorganizing the loss term, this method decomposes the optimization target into three basic geometric elements: the overlap area, the center point distance, and the side lengths. Its mathematical expression is defined as follows:

$$L_{EIoU} = 1 - IoU + \frac{\rho^2(b, b^{gt})}{c_w^2 + c_h^2} + \frac{\rho^2(w, w^{gt})}{c_w^2} + \frac{\rho^2(h, h^{gt})}{c_h^2}$$

where $b$ and $b^{gt}$ denote the center coordinates of the predicted and target boxes, respectively; $w$, $h$ and $w^{gt}$, $h^{gt}$ denote their widths and heights; $\rho(\cdot)$ represents the Euclidean distance metric; and $c_w$ and $c_h$ are the width and height of the minimum bounding rectangle covering both boxes. While maintaining the advantages of CIoU, this expression addresses the unstable optimization of CIoU’s aspect-ratio term by directly minimizing the width and height differences between the target and predicted boxes.
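The decomposition into overlap, center distance, and side-length terms can be sketched for a single pair of boxes. This is a minimal sketch that follows the commonly published EIoU form (1 − IoU, plus a normalized center-distance term, plus normalized width and height difference terms). The function name `eiou_loss`, the `(x1, y1, x2, y2)` box format, and the `eps` stabilizer are assumptions for illustration, not the training code used in this work.

```python
def eiou_loss(pred, target, eps=1e-9):
    """EIoU loss for two axis-aligned boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Overlap term: intersection over union.
    inter_w = max(0.0, min(px2, tx2) - max(px1, tx1))
    inter_h = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = inter_w * inter_h
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Width and height of the smallest rectangle enclosing both boxes.
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)

    # Squared distance between box centers, normalized by the enclosing diagonal.
    dx = (px1 + px2) / 2 - (tx1 + tx2) / 2
    dy = (py1 + py2) / 2 - (ty1 + ty2) / 2
    center_term = (dx * dx + dy * dy) / (cw * cw + ch * ch + eps)

    # Side-length terms: width and height differences penalized separately.
    width_term = (pw - tw) ** 2 / (cw * cw + eps)
    height_term = (ph - th) ** 2 / (ch * ch + eps)

    return 1.0 - iou + center_term + width_term + height_term

loss_same = eiou_loss((0, 0, 10, 10), (0, 0, 10, 10))  # approximately 0
loss_shift = eiou_loss((0, 0, 2, 2), (1, 1, 3, 3))     # 1 - 1/7 + 2/18 = 61/63
```

In the shifted example the two boxes have equal width and height, so only the overlap and center-distance terms contribute to the loss.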

In rice field pest detection, the EIoU loss function has the following advantages. First, it accelerates the convergence of bounding boxes for small targets and improves the recall of small pests. Second, under field disturbances such as leaf occlusion and lighting changes, boxes optimized with EIoU fit target boundaries more accurately, which reduces the false positive rate. The experimental results show that the EIoU loss function improves the accuracy of bounding box regression and the stability of training while keeping the model lightweight, providing a more reliable loss function for intelligent pest monitoring in rice fields with complex environments.

Besides EIoU, several IoU-based losses (SDIoU, SIoU, WIoU, MPDIoU) were tested to evaluate localization robustness under class imbalance. EIoU showed the most stable precision–recall trade-off and faster convergence, effectively improving the detection of rare pests. Thus, it was adopted as the default loss in CRRE-YOLO.

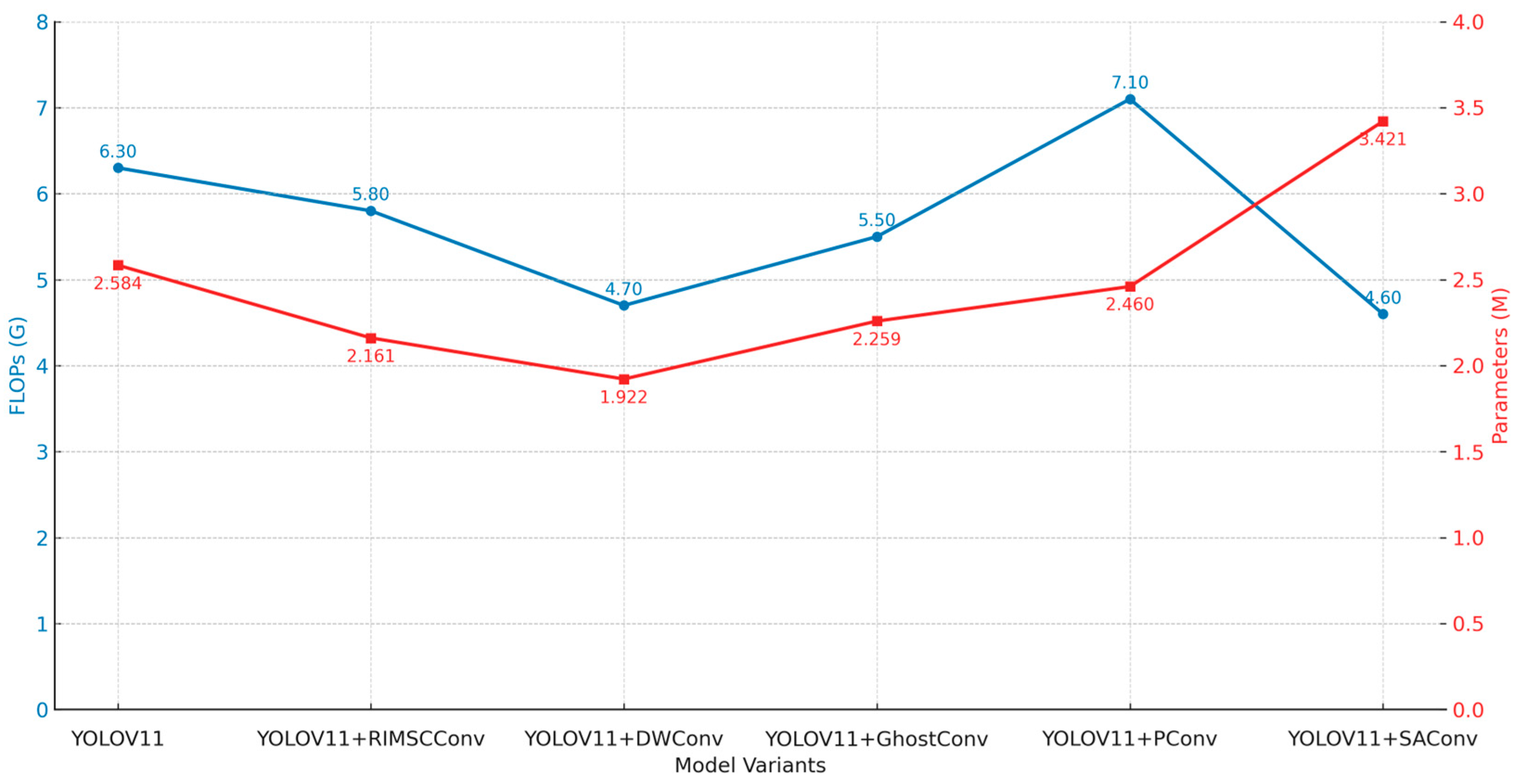

2.2.3. RIMSCConv (Rice Insect MultiScale Convolution)

In rice field pest detection tasks, the target species are diverse and vary significantly in morphology (e.g., Curculionidae, Delphacidae, Cicadellidae), and they are typically small, densely distributed, and strongly blended with their environment. These characteristics make traditional standard convolutions (Conv) inadequate for the task [33]. The original Conv module employs a single fixed convolution kernel with a limited receptive field. When processing pest infestations in complex natural environments such as rice fields, this approach often suffers from insufficient feature extraction; particularly for small targets and areas with heavy background interference, the model struggles to capture fine-grained structural features. In addition, traditional Conv kernels incur computational redundancy and parameter overhead during feature representation, which makes it difficult to balance detection accuracy with inference speed and lightweight requirements. Thus, designing a multi-scale convolutional structure that concurrently addresses small target perception, complex background suppression, and efficient computation is key to overcoming the bottlenecks in rice field pest detection.

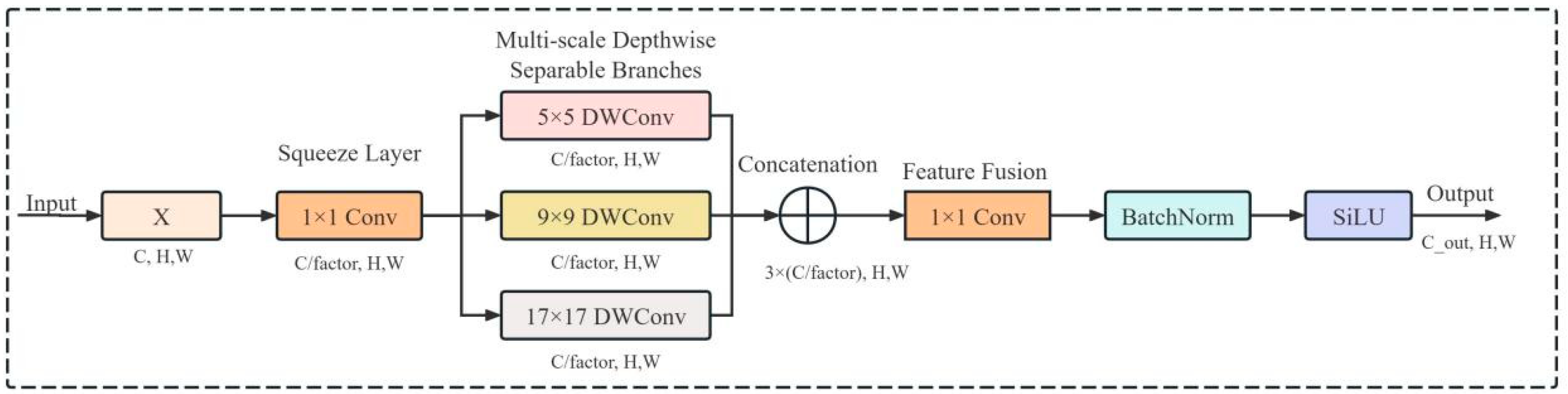

To overcome these challenges, the RIMSCConv module combines multiscale separable convolutions with lightweight feature fusion, as illustrated in Figure 6. The core principle is to use convolutional kernels of different scales to enhance the model’s multiscale perception ability, thus improving the feature representation of rice field pest targets. In detail, assuming the input feature map is $X \in \mathbb{R}^{C \times H \times W}$, a 1 × 1 convolution first performs channel compression to yield a compact feature map

$$X' = f_{1 \times 1}(X),$$

where $f_{1 \times 1}$ denotes the channel compression operation. The output dimensions are $\frac{C}{r} \times H \times W$, with $r$ representing the compression factor. Subsequently, RIMSCConv performs separable convolutions at three distinct scales:

$$F_k = \mathrm{DWConv}_{k \times k}(X'), \quad k \in \{5, 9, 17\},$$

where $\mathrm{DWConv}_{k \times k}$ denotes the depthwise separable convolution operation with a $k \times k$ kernel, which effectively reduces computational complexity while ensuring receptive field expansion. Subsequently, the multiscale features are concatenated:

$$F_{cat} = \mathrm{Concat}(F_5, F_9, F_{17}),$$

where $\mathrm{Concat}(\cdot)$ denotes the channel-wise concatenation operation. Finally, the concatenated features are fused using a shared 1 × 1 convolution $g_{1 \times 1}$, and the output is obtained through batch normalization and the SiLU activation function:

$$Y = \phi(\mathrm{BN}(g_{1 \times 1}(F_{cat}))),$$

where $\phi$ denotes the SiLU activation function. Through this process, RIMSCConv effectively merges information from different scales while preserving computational lightness, and thus produces feature representations that balance local and global context.
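The sequence of channel compression, three-scale depthwise convolution, concatenation, and 1 × 1 fusion can be sketched as a NumPy forward pass. This is an illustrative sketch under several assumptions: the function names, the compression factor of 4, the random weights, and an output channel count equal to the input are hypothetical. Each branch is modelled as a purely depthwise convolution, with the final 1 × 1 fusion acting as the pointwise step, and batch normalization is replaced by a per-channel normalization without learned scale or shift.

```python
import numpy as np

def silu(x):
    return x / (1.0 + np.exp(-x))

def pointwise_conv(x, weight):
    """1 x 1 convolution: weight is (C_out, C_in), x is (C_in, H, W)."""
    return np.einsum("oc,chw->ohw", weight, x)

def depthwise_conv2d(x, kernels):
    """Per-channel k x k convolution with zero padding; kernels is (C, k, k), k odd."""
    k = kernels.shape[-1]
    p = k // 2
    padded = np.pad(x, ((0, 0), (p, p), (p, p)))
    windows = np.lib.stride_tricks.sliding_window_view(padded, (k, k), axis=(1, 2))
    return np.einsum("chwij,cij->chw", windows, kernels)  # (C, H, W)

def normalize(x, eps=1e-5):
    """Stand-in for batch normalization: standardize each channel over H and W."""
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def rimsc_conv(x, w_reduce, dw_kernels, w_fuse):
    compact = pointwise_conv(x, w_reduce)                           # (C/r, H, W)
    branches = [depthwise_conv2d(compact, k) for k in dw_kernels]   # one per scale
    stacked = np.concatenate(branches, axis=0)                      # (3C/r, H, W)
    fused = pointwise_conv(stacked, w_fuse)                         # (C, H, W)
    return silu(normalize(fused))

# Hypothetical example: 16 channels, 20 x 20 map, compression factor 4.
rng = np.random.default_rng(0)
C, H, W, r = 16, 20, 20, 4
x = rng.standard_normal((C, H, W))
w_reduce = rng.standard_normal((C // r, C)) * 0.1
dw_kernels = [rng.standard_normal((C // r, k, k)) * 0.1 for k in (5, 9, 17)]
w_fuse = rng.standard_normal((C, 3 * (C // r))) * 0.1
y = rimsc_conv(x, w_reduce, dw_kernels, w_fuse)
print(y.shape)  # (16, 20, 20)
```

In this toy configuration the three depthwise kernel sets hold 4 × (25 + 81 + 289) = 1580 weights, whereas a single dense 17 × 17 convolution over 16 input and 16 output channels would hold 73,984, which illustrates why compression combined with depthwise kernels keeps large receptive fields affordable.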

The RIMSCConv module offers several advantages over traditional Conv modules. First, by introducing 5 × 5, 9 × 9, and 17 × 17 multiscale convolutional kernels, it covers both small target details and the overall distribution of larger pest populations, enhancing the model’s multi-level feature capture capability for rice field pest detection. Second, RIMSCConv uses channel compression and depthwise separable convolutions to reduce parameter counts and computational load. This keeps resource consumption low and supports real-time detection, which is crucial for deployment on edge devices such as drones or agricultural smart terminals. Third, RIMSCConv improves the stability of gradient flow during training by leveraging the smooth nonlinearity of the SiLU activation function. This prevents small-target features from weakening during backpropagation, thereby accelerating model convergence and improving generalization performance. Overall, RIMSCConv addresses the suboptimal multi-scale feature representation of existing Conv modules in rice field pest detection and improves robustness to small-scale pest targets and complex backgrounds while keeping a lightweight framework, providing more reliable technical support for the intelligent monitoring of pests in rice fields.

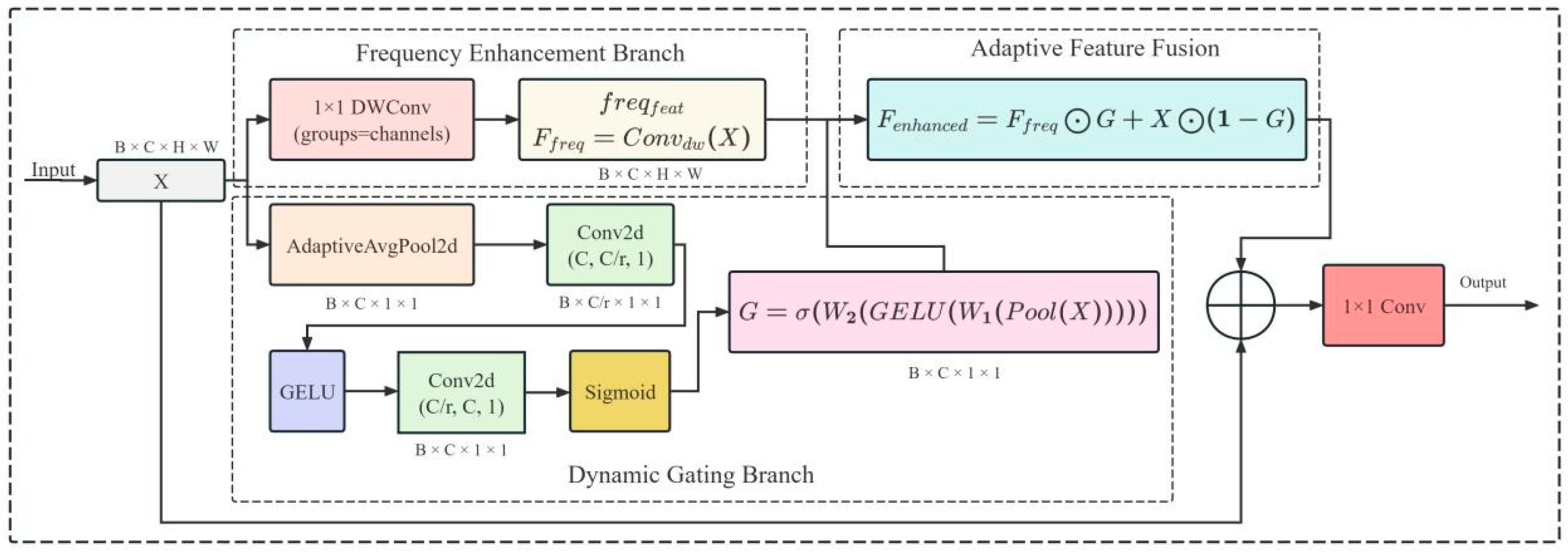

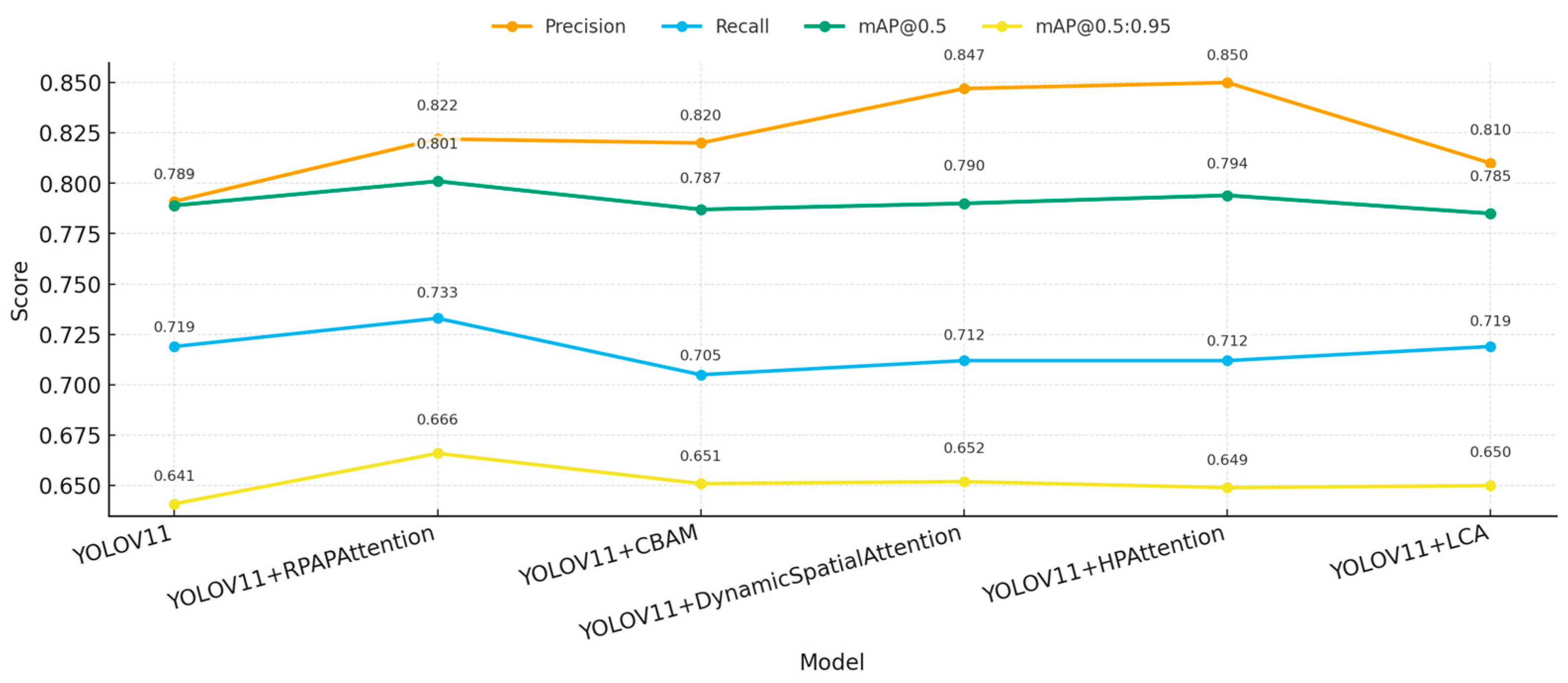

2.2.4. RPAPAttention (Rice Pest Adaptive Perception Attention)

The principal difficulty in rice pest detection stems from the targets’ small size, high density, and strong blending with the environmental background. This makes it hard for traditional detection models to separate pests from background textures during feature extraction, which reduces detection accuracy. In particular, for pests such as Curculionidae, Delphacidae, and Cicadellidae, their fine body contours and colors similar to those of rice leaves make it difficult for conventional convolutional operations to capture high-frequency texture details. Moreover, global average pooling and channel attention mechanisms often overlook local high-frequency features, which leads to inadequate small object detection [34]. Therefore, the proposed RPAPAttention aims to mitigate “feature blurring” and “small targets being drowned in the background” in rice field pest detection by combining frequency domain enhancement with dynamic weight allocation. This approach provides more robust and fine-grained representations for small object detection, as illustrated in Figure 7.

Conceptually, the RPAPAttention module introduces a dual-branch architecture: a frequency domain enhancement branch and a dynamic gating branch. Let the input feature map be represented as $X \in \mathbb{R}^{B \times C \times H \times W}$, where $B$ denotes the batch size, $C$ represents the number of channels, and $H$ and $W$ denote the spatial dimensions. The frequency domain enhancement branch extracts high-frequency textures through per-channel convolutions, with its output expressed as

$$X_{hf} = \mathrm{DWConv}(X).$$

The dynamic gating branch first obtains channel-level statistical features through global adaptive average pooling. It then constructs gating weights via two layers of 1 × 1 convolutions (one for dimension reduction and one for dimension expansion) combined with nonlinear activation functions:

$$G = \sigma(W_2 \, \delta(W_1 \, \mathrm{Pool}(X))),$$

where $\mathrm{Pool}(\cdot)$ denotes global average pooling, $\delta$ represents the GELU activation function, $\sigma$ denotes the Sigmoid mapping, and $W_1$ and $W_2$ denote the dimension-reduction and dimension-increase convolution matrices, respectively. Subsequently, the module balances high-frequency features and original features through residual-style adaptive fusion, which produces the enhanced features $X_{enh}$. Finally, the original features $X$ and enhanced features $X_{enh}$ are concatenated along the channel dimension and compressed to the original channel count via a 1 × 1 convolution:

$$Y = \mathrm{Conv}_{1 \times 1}(\mathrm{Concat}(X, X_{enh})),$$

yielding the final output feature $Y$.
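The two branches and the final compression can be sketched as a NumPy forward pass for a single sample (the batch dimension is dropped). This is a hedged illustration rather than the authors’ implementation: the function names, the 3 × 3 kernel size, the reduction ratio of 4, and the random weights are hypothetical. In particular, the residual-style fusion is assumed here to be the original features plus the gated high-frequency features, which is one plausible reading of the description.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gelu(x):
    """GELU, tanh approximation."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))

def depthwise_conv2d(x, kernels):
    """Per-channel k x k convolution with zero padding; kernels is (C, k, k), k odd."""
    k = kernels.shape[-1]
    p = k // 2
    padded = np.pad(x, ((0, 0), (p, p), (p, p)))
    windows = np.lib.stride_tricks.sliding_window_view(padded, (k, k), axis=(1, 2))
    return np.einsum("chwij,cij->chw", windows, kernels)

def rpap_attention(x, hf_kernels, w_down, w_up, w_out):
    """x is (C, H, W); returns a feature map with the same shape."""
    high_freq = depthwise_conv2d(x, hf_kernels)       # texture branch, per channel
    pooled = x.mean(axis=(1, 2))                      # global average pooling, (C,)
    gate = sigmoid(w_up @ gelu(w_down @ pooled))      # channel gates in (0, 1), (C,)
    enhanced = x + gate[:, None, None] * high_freq    # ASSUMED residual-style fusion
    stacked = np.concatenate([x, enhanced], axis=0)   # (2C, H, W)
    return np.einsum("oc,chw->ohw", w_out, stacked)   # 1 x 1 conv back to C channels

# Hypothetical example: 8 channels, 12 x 12 map, reduction ratio 4.
rng = np.random.default_rng(0)
C, H, W, r = 8, 12, 12, 4
x = rng.standard_normal((C, H, W))
hf_kernels = rng.standard_normal((C, 3, 3)) * 0.1
w_down = rng.standard_normal((C // r, C)) * 0.1
w_up = rng.standard_normal((C, C // r)) * 0.1
w_out = rng.standard_normal((C, 2 * C)) * 0.1
y = rpap_attention(x, hf_kernels, w_down, w_up, w_out)
print(y.shape)  # (8, 12, 12)
```

Under this assumed fusion, a gate close to zero leaves a channel essentially unchanged, while a gate close to one adds the full high-frequency response, so the amount of texture enhancement adapts to each input.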

The RPAPAttention module offers several advantages in rice pest detection. First, the frequency-domain enhancement branch highlights pest edges and texture details, enabling the model to extract features of small targets even against complex paddy field backgrounds. Second, the dynamic gating mechanism adaptively adjusts the importance of each channel according to the input data. This prevents over-reliance on any single feature category while retaining essential information, which improves robustness in cross-species detection. Finally, the residual fusion design preserves the original global semantic information while introducing locally enhanced high-frequency features, which keeps the model stable while further refining small-object perception. Overall, the RPAPAttention module addresses the problems of “small targets, weak features, and complex backgrounds” in rice pest detection with low computational complexity, presenting an effective solution for balancing accuracy and real-time performance in intelligent rice pest monitoring.