Abstract

In this paper, we propose an improved Region Proposal Network (RPN) that introduces a metric-based nonlinear classifier to compute the similarity between features extracted by the backbone network and those of new classes. This enhancement aims to improve the detection precision of candidate boxes for new classes and to retain candidate boxes with high Intersection over Union (IoU). In addition, we introduce an attention-based Feature Aggregation Module (AFM) in Region of Interest (RoI) Align to aggregate feature information from different levels, obtaining a more comprehensive feature representation and addressing the loss of feature information caused by scale differences. Combining these two improvements, we present a novel few-shot object detection algorithm, IFA-FSOD. We conduct extensive experiments on the PASCAL VOC and MS COCO datasets. Compared with mainstream few-shot object detection algorithms, IFA-FSOD selects more accurate candidate boxes, alleviates the problems of missed high-IoU candidate boxes and incomplete feature information, and achieves higher precision.

1. Introduction

In recent years, with the continuous development of deep neural networks, object detection has made tremendous progress, and a series of high-performance detectors have emerged. However, traditional object detection methods require a large number of annotated images, which demand considerable time, labor, and cost. When data are scarce, the diversity of the data makes it difficult to achieve good performance from only a few samples [1]. How to make a model learn useful knowledge from limited labeled samples has therefore become a challenging task [2], and few-shot object detection (FSOD) has gradually become a research hotspot [3]. Early attempts adapted ideas from few-shot classification to FSOD. For example, under the meta-learning paradigm [4], a meta detector is first trained on the base classes to learn prior knowledge and is then updated on the new classes to make predictions. Kang et al. first applied meta-learning to detection and proposed adding a meta feature learner and a re-weighting module to YOLOv2 to address few-shot detection [5]. Another line of work is the fine-tuning-based FSOD method, which typically includes two steps: (1) pre-training the model on abundant base class data, and (2) fine-tuning the model to adapt to the new classes. Wang et al. proposed a simple method that freezes the feature extractor and fine-tunes only the classification and regression branches of the detector, which achieved competitive results [6]. Yan et al. proposed treating each support example as a single point in the feature space and averaging all features as class prototypes [7]. Despite this progress, existing methods are far less effective on new classes than on base classes.

Previous methods treated support and query data as a single task and considered only the aggregation of support and query features within the same class, without establishing associations between different classes, and therefore ignored useful feature information [5,6,7]. In addition, previous methods did not improve the RPN. The original RPN mainly distinguishes foreground from background, and an RPN trained on base class data is used to generate candidate boxes for new classes [8]. However, with only a limited number of new class samples, new class images introduce complex backgrounds that differ from the object. The RPN may misclassify images with large background areas, causing some high-IoU candidate boxes to be missed.

To address the above issues, we propose a few-shot object detection method based on an improved RPN and feature aggregation. It follows the two-stage approach of Faster R-CNN [8] and builds on the work of Wang et al. [6]. The method mainly consists of a feature extractor, an improved RPN, RoI Align, and a newly introduced feature aggregation module [9]. In our work, a nonlinear classifier is added to the improved RPN [10]; it establishes a similarity matrix by calculating the similarity between the features extracted by the backbone network and the new class features [11], which yields high-IoU candidate regions and improves detection performance. At the same time, a feature aggregation module is introduced in RoI Align to enhance feature aggregation through an attention mechanism, aggregating features generated from RoIs of different scales to alleviate the information loss caused by scale differences. Finally, softmax normalization is applied to balance the feature information and obtain the final detection result. The entire network is called IFA-FSOD. Our model achieves competitive results compared with state-of-the-art (SOTA) methods. By fine-tuning on the new classes, it reaches SOTA precision on multiple FSOD benchmarks, further demonstrating the performance improvement of the model in FSOD.

The main innovations and contributions of this paper are as follows.

- (1) A few-shot object detection network based on an improved RPN and feature aggregation (IFA-FSOD) is proposed using Faster R-CNN as the base detector, achieving an effective performance improvement in FSOD.

- (2) The original RPN is improved by introducing a nonlinear classifier that calculates the similarity between the features extracted by the backbone network and the new class features, thereby increasing the detection precision of new class candidate boxes, retaining high-IoU candidate boxes, and improving detection performance.

- (3) An attention mechanism is used to enhance feature aggregation: feature information from different levels is aggregated to obtain a more comprehensive feature representation, which alleviates information loss and improves the model's generalization ability and detection performance on new classes.

2. Related Work

Neural network-based object detection algorithms are mainly divided into two types: single-stage detectors and two-stage detectors [4]. Single-stage detectors directly predict the bounding boxes and detection confidence of object categories; they are typically fast and efficient but have lower detection precision. Two-stage detectors mainly include Faster R-CNN [8] and its variants. In the first stage, the network generates a series of candidate regions. In the second stage, classification and regression branches classify and refine the candidate regions, which usually yields higher performance. Owing to their flexible architecture, two-stage detectors typically perform more robustly in complex scenarios. Our method adopts the two-stage Faster R-CNN detector, aiming to improve detection precision while maintaining stability, which is also the primary task of FSOD [1].

2.1. Few-Shot Learning

Few-shot learning aims to learn transferable knowledge that can generalize to new categories from only a few annotated samples. Early work used Bayesian inference to generalize the knowledge in a pre-trained model for one-shot learning [4]. Currently, meta-learning-based methods have become very popular in few-shot learning. Methods based on metric learning [1,4,12] have also achieved leading performance in few-shot classification tasks. For example, the matching network encodes the input into deep neural features and classifies the query image by weighted nearest neighbor matching, while prototype-based methods represent each class by a prototype (feature vector) and compare the target image with a few labeled images through a learned distance metric. Meanwhile, optimization-based methods have been proposed to adapt quickly to new few-shot tasks. Pierre et al. proposed a cross-attention mechanism for learning the correlation between support images and query images [11]. The above methods mainly focus on few-shot classification, and there is relatively little research on FSOD.

2.2. Few-Shot Object Detection

FSOD requires the model not only to recognize new classes but also to localize objects in the image. There have been several early attempts to use meta-learning for FSOD. For example, Kang et al. [5] and Yan et al. [7] used meta-learners to apply feature re-weighting schemes to a single-stage object detector (YOLOv2 [5]) and a two-stage object detector (Faster R-CNN [8]), respectively. These meta-learners take support images (e.g., a small number of labeled new/base class images) and their bounding box annotations as inputs. In [5], a weight prediction meta-model was proposed to predict the parameters of class-specific components from a few samples while learning class-agnostic components from base class samples. In [13], the authors enhance the generalization ability of the model on new classes from the perspective of sample diffusion. In [14], the authors optimize feature space partitioning and novel class representation to achieve FSOD. Although these methods have improved detection performance to some extent, some scholars have found that they mainly focus on feature interaction in the detection head and ignore feature extraction. Han et al. [15] conducted feature interaction simultaneously in the backbone network and the detection head. Yan et al. aggregated image/RoI-level query features with support features generated by meta-learners [7]. Other similar designs have also been explored, such as feature aggregation schemes (Xiao and Marlet et al. [16], Fan et al. [17], Hu et al. [9], Zhang et al. [18], Han et al. [19]) and feature space enhancement (Lin et al. [20], Fan et al. [21], Jiaming et al. [22]). Unlike meta-learning, Wang et al. proposed a simple two-stage fine-tuning approach (TFA) that significantly improves FSOD performance by fine-tuning only the last layer [6]. A series of works follow TFA (Sun et al. [23], Zhu et al. [24], Qiao et al. [25]). Label-Verify-Correct uses pseudo-labeling to increase the number of instances and reduce class imbalance [26].

However, most of these methods use RPNs trained on base classes to generate candidate regions for new classes. With only a limited number of new class samples, new class images introduce complex backgrounds that differ from the target object, and new class boxes may be treated as background regions learned from the base classes, resulting in the loss of high-IoU candidate boxes. In addition, these methods mainly focus on inter-class support and query feature aggregation and give less consideration to the feature information loss caused by scale in the RoI stage. We adopt a meta-learning architecture and propose an improved RPN and a feature aggregation module to alleviate these issues.

In general, existing FSOD methods have the following shortcomings: (1) Lack of accuracy. Although FSOD methods have made some progress, the overall performance of most of them still falls short of the accuracy required in practice, which limits the universality and reliability of FSOD in real applications. (2) Limited ability to identify new categories. Because training samples are scarce, FSOD models often perform poorly on new categories, which reduces their generalization ability and makes it difficult to adapt to changing environments and requirements. (3) Bias towards base classes. An FSOD model is usually trained on a large number of labeled base class samples and then fine-tuned on the novel classes with a small number of labeled samples. This training scheme easily biases the model towards the base classes, so that novel classes are ignored or misjudged. (4) Inaccurate proposals. The RPN plays a key role in FSOD, but it has difficulty accurately identifying the target regions of novel classes because it is shaped by base class data during training. (5) Overfitting in fine-tuning. In the fine-tuning stage, the detector may be affected by the limited training samples of the new categories, so that it fits those few samples and does not generalize well to the real data distribution. (6) Insufficient robustness. Because each novel class has only a small number of samples, the model is very sensitive to the variance of the novel class samples. For example, if new samples are randomly selected for multiple training runs, the results vary greatly from run to run. It is therefore necessary to improve the robustness of the model under a small number of samples.

To overcome these problems, new methods need to be explored and improved continuously. This includes optimizing the model structure, improving the training strategy, enhancing the generalization ability of the model, and improving labeling efficiency.

3. Definitions and Improved Network Framework

3.1. Related Definitions

Based on previous work [5,6,7,15], we divide the category set $C$ into two parts: the base classes $C_{base}$, which have a large amount of labeled data, and the new classes $C_{novel}$, which have only $K$ labeled instances per category, i.e., $C = C_{base} \cup C_{novel}$. Here, $C_{base}$ comes from the base class dataset $D_{base}$, $C_{novel}$ comes from the new class dataset $D_{novel}$, and the two category sets have no intersection, that is, $C_{base} \cap C_{novel} = \varnothing$. The learning process of FSOD is usually divided into a base class training stage and a new class fine-tuning stage [3]. For base classes, we have a large number of annotated images $(i, y)$, where $i$ is a training image and $y$ is the ground-truth label of image $i$. Specifically, image $i$ contains $N$ bounding boxes, each consisting of a class label and a bounding box position. For the new classes, we label samples in the K-shot setting (e.g., $K = 1, 2, 3, 5, 10$). Each support sample for a new class pairs a training image with the bounding box position of the object with class label $c$. The ultimate goal of the algorithm is to optimize the detector across both stages, using the base class dataset and the new class dataset, so that it detects the objects in the query set, whose classes are drawn from both the base classes and the new classes.

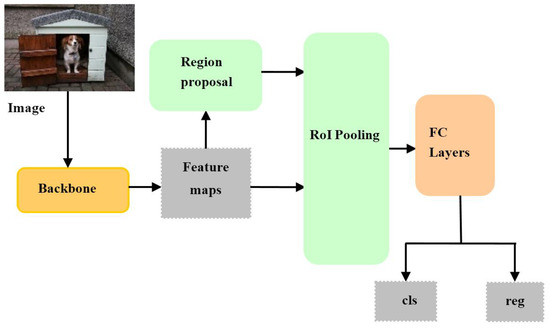

3.2. Basic Network Framework

This paper proposes a few-shot object detection algorithm based on an improved RPN and feature aggregation (IFA-FSOD). We use the classical two-stage object detector Faster R-CNN as the basic detection framework, which includes a backbone network [27], a region proposal network (RPN), and an R-CNN head (RoI pooling and fully connected (FC) layers). As shown in Figure 1, the backbone network extracts features from preprocessed images, and the RPN uses anchor boxes to transform these features into a set of high-quality, category-independent region proposals. Finally, the R-CNN classifier and regressor output category scores and bounding box coordinates, respectively.

Figure 1.

The basic detection framework of Faster R-CNN.

Because they need a large amount of annotated sample data, traditional Faster R-CNN detectors perform poorly in FSOD. Therefore, we propose a new few-shot object detection framework (IFA-FSOD) based on Faster R-CNN.

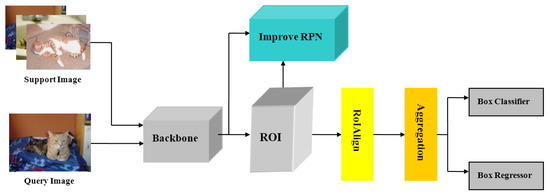

As shown in Figure 2, the framework mainly consists of a feature extractor, an improved RPN, RoI Align, and a newly added feature aggregation module. We chose ResNet-101 [27] as the backbone network for image feature extraction. For query images, we directly use ResNet-101 for feature extraction. For support images, we first enlarge the target area in the image, then crop the target area and resize the cropped images to the same size. Next, the processed images are fed into the shared backbone network to extract the support features. In the process of generating proposal features, the original RPN [8] is improved. A traditional RPN mainly distinguishes foreground from background through simple linear or nonlinear classifiers. We introduce a metric learning-based nonlinear classifier to better calculate the similarity between the extracted features and the new class features, bringing similar features closer together in the feature space. After RoI Align, a feature aggregation module is introduced to obtain more comprehensive feature information. This module aggregates features generated by RoI pooling at different scales. We still use a two-stage detection structure, similar to Faster R-CNN. The total loss $L$ of the IFA-FSOD model consists of two loss terms, as shown in Formula (1).

In Formula (1), a weighting hyperparameter balances the two loss terms, and its value affects model performance in two ways. (1) Balancing different loss terms: in object detection, the loss function usually consists of several parts, such as the classification loss and the bounding box regression loss. These terms may differ in order of magnitude and importance. Weighting them balances their contributions to the total loss, so that training does not neglect any one aspect of performance. (2) Improving model generalization: a reasonable weight helps the model generalize better to unseen data. When one loss term accounts for a large proportion of the total loss, the model may focus too heavily on it, performing well on that specific objective while generalizing worse across datasets. Adjusting the weight lets the model reach a better balance across multiple tasks or datasets and thus improves its generalization ability.
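A weighted two-term loss of the kind described above can be sketched as follows. The symbol names $L_{rpn}$, $L_{det}$, and $\lambda$ are assumptions introduced only for illustration, as is the choice of which term the weight multiplies.

```latex
% Illustrative sketch only: term names and the placement of \lambda are assumed.
L = L_{rpn} + \lambda \, L_{det}
```

Under this assumed form, $\lambda = 1$ gives both terms equal weight, while $\lambda < 1$ reduces the influence of the second term on the gradient.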

Figure 2.

The framework of IFA-FSOD with a feature extractor, an improved RPN, RoI Align, and a newly added feature aggregation module.

3.2.1. Feature Extractor

We use the ResNet-101 network pre-trained on the ImageNet dataset to extract features from input images. For a given image, ResNet-101 produces a CNN feature map of size $H \times W \times C$, where H, W, and C represent the height, width, and number of channels of the extracted features, respectively. We take the output of the res4 block of ResNet-101 as the image feature. For support images, we crop the corresponding object regions, resize the crops to the same size, and feed them into the shared backbone network to extract the support features. The query image features and the support image features are then used as inputs to generate class-specific query features.

The main process of the proposed IFA-FSOD algorithm is as follows. First, the support images and the query image are fed into the feature extractor. In the RPN, a similarity matrix is established between the support features of a new class and the anchor box features, and the similarity between the two is calculated to obtain candidate regions with high IoU. Next, a feature aggregation module is introduced in RoI Align to aggregate features generated from RoIs of different scales. Finally, normalization in the softmax layer fuses the information of each feature more evenly and yields the final detection result.

3.2.2. Improved RPN Module

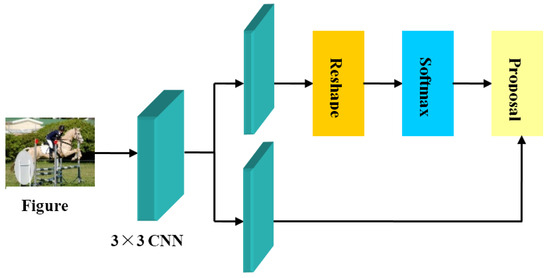

The RPN is a fully convolutional network that simultaneously predicts object bounding boxes and objectness scores, generates high-quality region proposals through end-to-end training, and shares convolutional features with the detection network. In essence, it is a class-agnostic object detector based on sliding windows that accepts images of any scale and outputs a series of rectangular candidate regions. The structure of the original RPN is shown in Figure 3.

Figure 3.

The original RPN structure.

Previous methods usually train an RPN on base class data and then use it to generate candidate boxes for novel classes. However, novel class objects were treated as background regions when the RPN was trained on the base classes, so some high-IoU candidate boxes for new classes can be missed during candidate box generation. To solve this problem, this paper improves the original RPN.
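The notion of a "high-IoU candidate box" used above can be made concrete with a small sketch. The box format `(x1, y1, x2, y2)`, the function names, and the 0.5 threshold are illustrative assumptions, not details taken from the paper.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def high_iou_proposals(proposals, gt_box, thresh=0.5):
    """Keep the proposals whose IoU with the ground-truth box reaches thresh."""
    return [p for p in proposals if iou(p, gt_box) >= thresh]
```

A proposal that overlaps a novel-class object well but is scored as background by the RPN never reaches the detection head, which is the failure mode described above.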

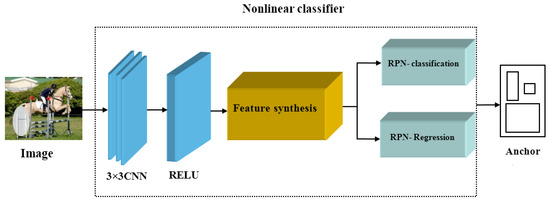

In the improved RPN, for each novel class, the CNN features of its K-shot support images are averaged to obtain the class center of that class. During training, the features extracted from the image are compared with the class center of the novel class, and their similarity is used to match candidate regions to the ground-truth regions more accurately, so that high-IoU candidate boxes are retained. The improved RPN model is shown in Figure 4.
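The class-center computation described above amounts to averaging support features. A minimal sketch, assuming each support feature has already been reduced to a plain list of per-channel values (the function names and the nested-list layout are illustrative assumptions):

```python
def global_avg_pool(feature_map):
    """Average each channel of a C x H x W feature map (nested lists) to one value."""
    return [sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
            for channel in feature_map]


def class_center(support_vectors):
    """Element-wise mean of the K support feature vectors of one class."""
    k = len(support_vectors)
    dim = len(support_vectors[0])
    return [sum(vec[d] for vec in support_vectors) / k for d in range(dim)]


# Two support shots of one novel class, each a 2-channel 2x2 feature map.
shots = [
    [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 4.0]]],
    [[[3.0, 3.0], [3.0, 3.0]], [[2.0, 2.0], [2.0, 2.0]]],
]
center = class_center([global_avg_pool(s) for s in shots])
```

Here the two pooled vectors are `[2.5, 1.0]` and `[3.0, 2.0]`, so `center` is their element-wise mean.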

Figure 4.

Improved RPN structure with three CNN layers and one ReLU layer.

As shown in Figure 4, the similarity between new class features and anchor features is calculated by a nonlinear classifier consisting of three CNN layers and one ReLU layer. In this process, the N-way K-shot setting is used, and an average pooling layer produces class features of the same size as the anchor features, as shown in Formula (2).

In Formula (2), the pooled output is the global representation of the class, and H and W represent the height and width of the feature map. Then, a nonlinear classifier is used to establish a similarity matrix between the new class center and the anchor features and to calculate their similarity. Inspired by the work in [10,14], we add a network with addition and multiplication operations to enhance the fusion between features, as shown in Formula (3).

In Formula (3), each of the two branches consists of a CNN layer and a ReLU layer; one branch reflects the distance between the two input features, and the other reflects the correlation between them. Combining the two achieves a stronger effect. Finally, the fused feature is fed into the binary classification layer and the regression layer to predict proposals. Improving the RPN in this way enhances the localization precision of proposals for new categories and yields candidate regions with high IoU.

3.2.3. Feature Aggregation Module

We obtain a series of preliminary candidate regions (RoIs) through the RPN, and for each RoI, RoI Align divides it into fixed-size grid cells. For each grid cell, we extract the corresponding feature values from the feature map using bilinear interpolation, obtaining a fixed-size feature representation for the RoI. With large-scale training samples, the interpolation operation enables RoI Align to crop features more accurately for each RoI on the feature map, which largely solves the problem of boundary pixel information loss [9]. However, in few-shot detection scenarios with only a small amount of training data, the target area contains very few feature points, so interpolation over a fixed cell size cannot accurately restore the features within the target area, leading to serious information loss.
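The grid sampling with bilinear interpolation described above can be sketched for a single channel as follows. This is a simplified illustration: it samples one point at the center of each cell, whereas RoI Align implementations commonly average several sampling points per cell, and the function names and the `(y1, x1, y2, x2)` RoI format are assumptions.

```python
import math


def bilinear_sample(fmap, y, x):
    """Sample a 2D feature map (list of rows) at a fractional (y, x) location."""
    h, w = len(fmap), len(fmap[0])
    # Clamp the sampling point to the valid area of the feature map.
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    # Interpolate along x on the two neighbouring rows, then along y.
    top = fmap[y0][x0] * (1 - dx) + fmap[y0][x1] * dx
    bottom = fmap[y1][x0] * (1 - dx) + fmap[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


def roi_align_single_channel(fmap, roi, grid):
    """Pool an RoI (y1, x1, y2, x2), in feature-map coordinates, into grid x grid values."""
    y1, x1, y2, x2 = roi
    cell_h, cell_w = (y2 - y1) / grid, (x2 - x1) / grid
    return [[bilinear_sample(fmap, y1 + (i + 0.5) * cell_h, x1 + (j + 0.5) * cell_w)
             for j in range(grid)]
            for i in range(grid)]
```

When the RoI covers only a few feature points, neighbouring cells interpolate between the same handful of values, which illustrates why a single fixed grid size can lose information for small targets.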

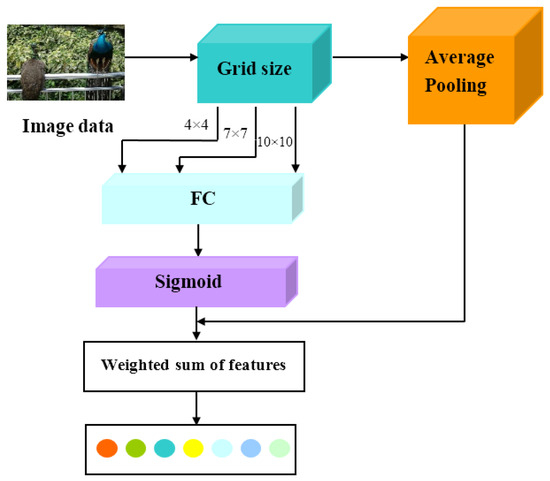

In addition, because the few-shot setting introduces scale variations, traditional models may lose their ability to generalize to new categories and have difficulty adapting to targets of different scales. Therefore, we propose an attention-based feature aggregation module that aggregates feature information from different levels to obtain more comprehensive feature representations. We design three parallel pooling operations that use grids of three different resolutions. Small grids help to obtain overall information and capture more high-level feature information, while large grids mainly focus on low-level features to obtain detailed contextual information. Each generated feature contains a different level of semantic information [12]. By introducing an attention mechanism [11], the features generated by RoI pooling at different scales are aggregated, improving the model's adaptability to features of different levels.

As shown in Figure 5, the attention module consists of two branches: one branch is an average pooling layer, and the other is a fully connected layer followed by a Sigmoid activation function. Finally, the three features are aggregated through a multiplication operation. Assume the input feature map is $I \in \mathbb{R}^{C \times H \times W}$, where C is the number of channels, H is the height, and W is the width. First, the average pooling operation takes the average of the feature values of each channel, as shown in Formula (4).

$$z_c = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} I_c(i, j) \tag{4}$$

where $z_c$ is the pooled value of channel $c$ and $I_c$ represents the feature map of the c-th channel in the feature map I.

Figure 5.

Feature aggregation module structure with attention mechanism.

The fully connected layer linearly transforms the average-pooled vector through a weight matrix, as shown in Formula (5), which gives the fully connected output in terms of the weight matrix, the scaling ratio r, and the bias b.

An activation function is then applied to balance the weights and ensure their effectiveness, and the aggregated feature output is a weighted sum of the three features, as shown in Formula (6). For each channel c, Formula (6) combines the output of the activation function with the feature value of the c-th channel of the feature map I in each of the three grids, yielding the feature value of the corresponding channel after feature aggregation.
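The attention steps above (average pooling, a fully connected layer, a Sigmoid, and a weighted sum over the three features) can be sketched as follows. This is a simplified illustration rather than the exact module: it assumes the three features have already been brought to a common shape of C channels by a flattened spatial size, it uses one shared C x C weight matrix with no scaling ratio, and all names are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(feature, weight, bias):
    """Return one weight in (0, 1) per channel of a feature given as C lists of values."""
    pooled = [sum(channel) / len(channel) for channel in feature]  # average pooling
    fc_out = [sum(w * v for w, v in zip(row, pooled)) + b          # fully connected layer
              for row, b in zip(weight, bias)]
    return [sigmoid(v) for v in fc_out]                            # Sigmoid activation


def aggregate(features, weight, bias):
    """Sum same-shaped features, each scaled channel-wise by its own attention weights."""
    num_channels, size = len(features[0]), len(features[0][0])
    out = [[0.0] * size for _ in range(num_channels)]
    for feature in features:
        attn = channel_attention(feature, weight, bias)
        for c in range(num_channels):
            for p in range(size):
                out[c][p] += attn[c] * feature[c][p]
    return out
```

With all-zero weights and biases every attention weight equals 0.5, so aggregating three identical all-ones features gives 1.5 at every position; learned weights would instead emphasize the channels and scales that are most informative.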

4. Experiment and Results Analysis

4.1. Dataset Selection

In this paper, following the protocol in [7], we constructed a few-shot detection dataset and evaluated our method on PASCAL VOC and MS COCO using the same data splits and training examples. Some examples from the datasets are shown in Figure 6.

Figure 6.

Example images from the PASCAL VOC and MS COCO datasets.

For the PASCAL VOC dataset, we trained the model on the VOC 2007 [28] trainval and VOC 2012 [29] trainval sets and tested it on the VOC 2007 test set. The 20 object categories are divided into five randomly selected novel classes and 15 base classes. Each novel class has k object instances, with k = 1, 2, 3, 5, 10. We use the same three splits as in [7]. In the first split, the new classes are {“bird”, “bus”, “cow”, “motorbike”, “sofa”}. In the second split, the new classes are {“aeroplane”, “bottle”, “cow”, “horse”, “sofa”}. In the third split, the new classes are {“boat”, “cat”, “motorbike”, “sheep”, “sofa”}. For the MS COCO dataset [30], which contains 80 object categories, we select the 60 categories that do not intersect with PASCAL VOC as base classes, while the 20 categories that overlap with PASCAL VOC are set as new classes. There is no overlap between the base classes and the new classes, and each new class contains k object instances, with k set to 10 and 30. We train the model on the base classes and test it on the new classes, following the N-way K-shot setup for training and testing.
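The base/novel partition and K-shot sampling described above can be sketched as follows. The class names follow the PASCAL VOC label set; the helper names, the `instances_by_class` structure, and the fixed seed are illustrative assumptions.

```python
import random

VOC_CLASSES = [
    "aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat",
    "chair", "cow", "diningtable", "dog", "horse", "motorbike", "person",
    "pottedplant", "sheep", "sofa", "train", "tvmonitor",
]

NOVEL_SPLITS = {
    1: ["bird", "bus", "cow", "motorbike", "sofa"],
    2: ["aeroplane", "bottle", "cow", "horse", "sofa"],
    3: ["boat", "cat", "motorbike", "sheep", "sofa"],
}


def split_classes(split_id):
    """Return (base_classes, novel_classes) for one of the three splits."""
    novel = NOVEL_SPLITS[split_id]
    base = [c for c in VOC_CLASSES if c not in novel]
    return base, novel


def sample_k_shot(instances_by_class, novel, k, seed=0):
    """Pick k annotated instances per novel class (hypothetical input: class -> list)."""
    rng = random.Random(seed)
    return {c: rng.sample(instances_by_class[c], k) for c in novel}
```

Because different random draws of the k instances can change results noticeably in the low-shot regime, fixing the seed (or reusing published sample lists) keeps comparisons between methods consistent.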

4.2. Training Steps

Our experimental environment is as follows: Ubuntu 18.04 operating system, 32 GB of memory, two V100 32 GB GPUs, the PyTorch 1.6.0 deep learning framework, Python 3.8, and CUDA 10.1.

We use Faster R-CNN [8] as the basic detection framework, with ResNet-101 [27] as the feature extractor and RoI Align as the RoI feature extractor. After training on the base classes, we replace the original fully connected layer with a global average pooling layer. The backbone network is ResNet-101, and the output of the res4 block is used as the image feature. We use the softmax algorithm to update certain parameters in order to learn better ones. During meta-training on the PASCAL VOC dataset, the model is trained for 20,000 and 10,000 iterations with learning rates of 0.001 and 0.0005, respectively. During the fine-tuning phase on PASCAL VOC, the model is trained for 3000 iterations with learning rates of 0.001 and 0.0001, respectively. For the MS COCO dataset, during the meta-training phase, the model is trained for 4000 and 2000 iterations with learning rates of 0.001 and 0.0005, respectively. In the fine-tuning phase, we train for 3000 iterations for both 10-shot and 30-shot fine-tuning.
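The meta-training schedules above can be written as a piecewise-constant learning rate. The sketch below assumes that the listed iteration counts are consecutive phases run back to back, which the text does not state explicitly; the names are illustrative.

```python
# (number of iterations, learning rate) pairs, assumed to run one after another.
VOC_META_TRAIN = [(20000, 0.001), (10000, 0.0005)]
COCO_META_TRAIN = [(4000, 0.001), (2000, 0.0005)]


def lr_at(iteration, phases):
    """Return the learning rate used at a zero-based iteration index."""
    start = 0
    for num_iterations, lr in phases:
        if iteration < start + num_iterations:
            return lr
        start += num_iterations
    raise ValueError("iteration is beyond the end of the schedule")
```

Under this reading, PASCAL VOC meta-training uses 0.001 for iterations 0 to 19,999 and 0.0005 for iterations 20,000 to 29,999.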

Faster R-CNN is commonly trained on the PASCAL VOC and MS COCO datasets, but an exact computational cost and training time cannot be given in general, because they depend closely on the hardware configuration, software environment, dataset, and training strategy. For our model and algorithm, we can give estimates based on experience: (1) Meta-training on the PASCAL VOC dataset: training with a single GPU may take hours to days, depending on the hardware configuration and training strategy, and it consumes a large amount of GPU memory and computing resources. (2) Meta-training on the MS COCO dataset: because MS COCO is larger, training takes longer, possibly several days to weeks, and the computational cost is correspondingly higher. (3) Fine-tuning stage: fine-tuning is usually faster than training from scratch because the model already has prior knowledge. However, 10-shot and 30-shot fine-tuning may require additional care, because the data under these settings are very limited and special training strategies may be required to avoid overfitting.

4.3. Experimental Results and Analysis

First, we conduct comparative experiments with the IFA-FSOD algorithm under N-way K-shot conditions on the PASCAL VOC dataset and evaluate it against previous FSOD methods such as TFA [6], Meta R-CNN [7], and MetaDet [31]. Here, K = 1, 2, 3, 5, and 10. Following the experimental setup of TFA, three splits of base and new classes were sampled, namely Novel Set 1, 2, and 3. We report AP50 on the new classes of the VOC 2007 test set [3]. The experimental results are shown in Table 1, which presents the evaluation results for the three splits of the VOC dataset.

Table 1.

Performance comparison of object detection under various few-shot conditions on the PASCAL-VOC dataset.

We can observe that the proposed method achieves higher performance than the other methods in most cases. In the K-shot task, when K = 1, 2, and 3, the proposed method outperforms the other methods. In the 3-shot task of Novel Set 1, compared with QA-FewDet [19], the best performing of the comparison methods, the proposed method achieves an improvement of nearly 3%. For the larger 5-shot and 10-shot settings, the difference from QA-FewDet is small, and the results vary across splits. For example, in Novel Set 2, the 5-shot and 10-shot detection performance is inferior to that of QA-FewDet, but in Novel Set 1, it is better than that of QA-FewDet.

Table 2 compares the detection performance of IFA-FSOD with that of other methods on new categories of the more challenging MS COCO dataset. The average precision (AP) was evaluated in the 10-shot and 30-shot settings. AP depends on the IoU threshold: a predicted box counts as correct only if its IoU with a ground-truth box reaches the threshold. Following the standard COCO protocol, AP is averaged over IoU thresholds from 0.50 to 0.95 in steps of 0.05. AP50 is the AP at an IoU threshold of 0.5, and AP75 is the AP at an IoU threshold of 0.75.
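The relation between AP, AP50, and AP75 can be illustrated with a small single-class sketch. It assumes each detection has already been matched to at most one ground-truth box and carries its IoU with that box, and it uses all-point interpolation of the precision-recall curve; the official COCO evaluation differs in details (it samples precision at 101 recall points), and all function names are illustrative.

```python
def average_precision(detections, num_gt):
    """detections: list of (score, is_true_positive). Area under the PR curve."""
    ordered = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    precisions, recalls = [], []
    for _, is_tp in ordered:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    # Make precision non-increasing as recall grows (interpolation envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def ap_at(dets, num_gt, iou_thresh):
    """dets: list of (score, iou_with_matched_gt). AP at a single IoU threshold."""
    return average_precision([(s, iou >= iou_thresh) for s, iou in dets], num_gt)


def coco_style_ap(dets, num_gt):
    """Mean AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [(50 + 5 * i) / 100 for i in range(10)]
    return sum(ap_at(dets, num_gt, t) for t in thresholds) / len(thresholds)
```

For example, with two ground-truth boxes and detections `[(0.9, 0.8), (0.8, 0.6), (0.7, 0.3)]`, AP50 is 1.0 while AP75 is 0.5, because the second detection overlaps its object well enough for the 0.5 threshold but not for 0.75.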

Table 2.

Performance comparison of object detection under various few-shot conditions on the MSCOCO dataset.

The results in Table 2 show that IFA-FSOD achieves better performance than the other methods at different K values. When K = 10, compared with FsDetView [16], it increases mAP by 0.3 percentage points and mAP75 by 0.9 percentage points. When K = 30, compared with FsDetView, mAP75 improves by 3.4 percentage points, and IFA-FSOD achieves the best performance on all metrics. These results indicate that IFA-FSOD performs well on different datasets and copes better with FSOD, demonstrating good robustness and generalization ability.

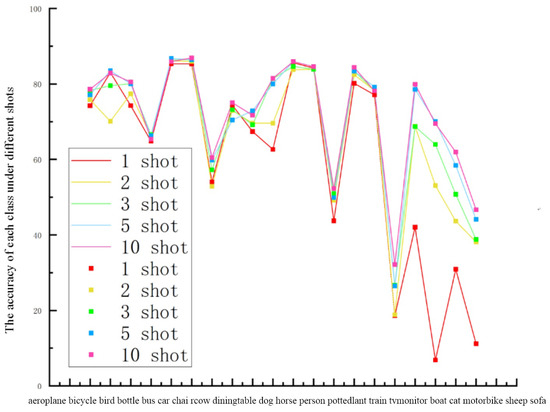

To give a more intuitive view of per-category performance under different shot settings, we use the PASCAL VOC dataset. Figure 7 shows the precision of the 20 categories in the dataset under the 1-, 2-, 3-, 5-, and 10-shot settings. Within the same category, performance in high-shot settings is generally better than in low-shot settings; in particular, the detection performance for every class is best in the 10-shot setting. In addition, some categories are detected well while others remain challenging for FSOD. In practical applications, these performance differences between categories therefore deserve attention.

Figure 7.

Performance of each class under different shots.

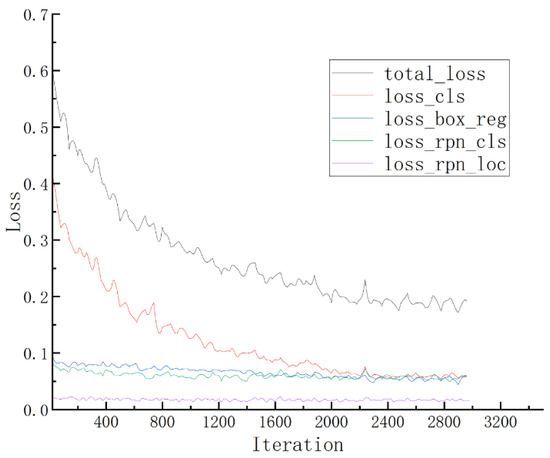

Figure 8 shows how the loss values of each stage of the model change on the MSCOCO dataset as the number of iterations increases, at a learning rate of 0.001. As training progresses, the losses of every stage decrease, and the total loss finally stabilizes at 0.172. Figure 8 also shows that the loss in the RPN stage is very small, which indirectly indicates that our improved RPN effectively enhances the model’s ability to generate candidate boxes for the target during training. In Figure 8, total_loss is the sum of all loss terms in the training process. loss_cls is the classification loss, which measures how accurately the model judges the category of a detected object. loss_box_reg is the bounding box regression loss, which is used to adjust the predicted bounding box so that it encloses the target object more accurately. loss_rpn_cls is the RPN classification loss, which measures how accurately the RPN distinguishes the foreground (regions containing an object) from the background (regions without an object). loss_rpn_loc is the RPN localization loss, which is used to optimize the positions of the candidate boxes predicted by the RPN.
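The relationship between total_loss and its components can be sketched as follows. This assumes that the four terms named above are the only loss terms and that they are summed without extra weighting; the helper `total_loss` is hypothetical, and the numbers are illustrative values, not measurements from the experiments.

```python
def total_loss(losses):
    """Sum the individual loss terms into the scalar that is optimized."""
    return sum(losses.values())


# Illustrative values only; they are NOT taken from the paper's experiments.
example_losses = {
    "loss_cls": 0.10,      # RoI classification loss
    "loss_box_reg": 0.05,  # RoI bounding box regression loss
    "loss_rpn_cls": 0.01,  # RPN foreground/background loss
    "loss_rpn_loc": 0.02,  # RPN localization loss
}

total = total_loss(example_losses)
# Fraction of the total contributed by the two RPN terms.
rpn_share = (example_losses["loss_rpn_cls"] + example_losses["loss_rpn_loc"]) / total
```

Tracking the share contributed by the RPN terms is one simple way to quantify the observation that the RPN-stage loss is small relative to the total.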

Figure 8.

Loss value at different stages.

4.4. Ablation Experiments

To verify the effectiveness of the improved RPN module and the feature aggregation module in few-shot settings, we conduct ablation experiments on PASCAL VOC split 1 and on MSCOCO under 10-shot and 30-shot conditions. Table 3 shows the results of the ablation experiments on PASCAL VOC split 1, and Table 4 shows the results on the MSCOCO dataset (√ indicates that a module is added, and × indicates that it is not). These results show the improvement in detection precision brought by our improved RPN (Metric RPN) and by the AFM module. The improved RPN improves the generation of candidate regions through metric learning, while the AFM module effectively aggregates feature information from different levels, enhancing the model’s ability to represent targets. When the two modules are applied together, the mAP of the algorithm reaches its highest value, which again verifies the effectiveness and rationality of the proposed model.
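As a rough illustration of what aggregating feature information from different levels with attention can look like, the sketch below fuses one feature vector per pyramid level with softmax weights. It is a generic attention-weighted sum written under assumptions and is not the AFM defined in this paper: the function `aggregate_levels`, the `(num_levels, channels)` layout, the scoring `query` vector, and the toy sizes are all hypothetical.

```python
import numpy as np


def softmax(scores):
    """Numerically stable softmax over a 1-D array."""
    shifted = scores - np.max(scores)
    exp = np.exp(shifted)
    return exp / exp.sum()


def aggregate_levels(level_feats, query):
    """Attention-weighted sum of per-level RoI features (generic sketch).

    level_feats: array of shape (num_levels, channels), one pooled feature
                 vector per pyramid level (hypothetical layout).
    query:       array of shape (channels,), used to score each level
                 (hypothetical; it would be learned in a real model).
    """
    channels = level_feats.shape[1]
    scores = level_feats @ query / np.sqrt(channels)  # one score per level
    weights = softmax(scores)                         # non-negative, sums to 1
    fused = weights @ level_feats                     # shape (channels,)
    return fused, weights


rng = np.random.default_rng(0)
level_feats = rng.normal(size=(4, 8))  # 4 levels, 8 channels (toy sizes)
query = rng.normal(size=8)
fused, weights = aggregate_levels(level_feats, query)
```

Because the weights form a convex combination, the fused vector always lies between the per-level features, so no single scale is discarded outright; levels that score higher simply contribute more.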

Table 3.

Ablation experiments of PASCAL VOC Novel Set 1 (%).

Table 4.

Ablation experiments of MSCOCO (%).

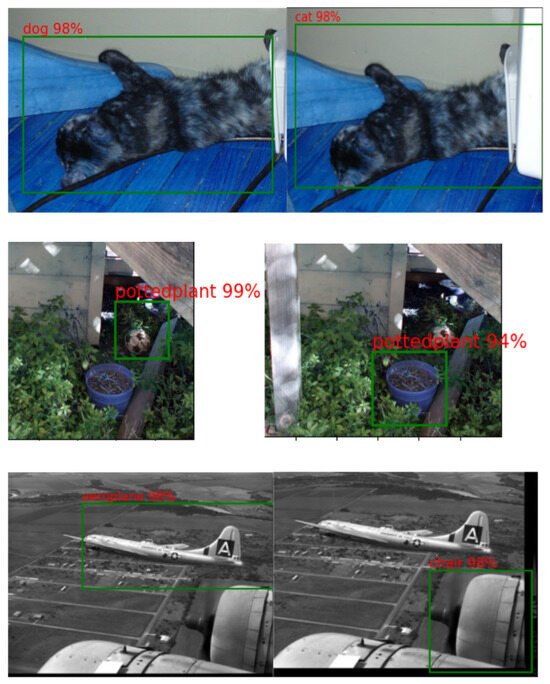

In Figures 9, 10, and 11, we demonstrate the effectiveness of the model visually by presenting some of its test results. We compare our model with the baseline model, with the baseline results on the left and the results of our method on the right. Figure 9 shows the clear improvement in object detection performance of IFA-FSOD over the baseline model. In Figure 10, IFA-FSOD correctly detects categories that the baseline model misclassifies. In Figure 11, IFA-FSOD fully detects target objects that the baseline model detects only incompletely. These illustrations show that the proposed method effectively reduces missed detections and false detections and improves detection precision, further confirming the effectiveness of the model for FSOD.

Figure 9.

IFA-FSOD improves object detection performance compared with the baseline model.

Figure 10.

IFA-FSOD correctly detects categories that the baseline model misclassifies.

Figure 11.

IFA-FSOD completely detects target objects that the baseline model detects only incompletely.

5. Conclusions and Further Work

In this paper, we propose a novel few-shot object detection model based on an improved RPN and feature aggregation (IFA-FSOD). By designing an RPN with a metric-based nonlinear classifier, we improve the detection precision of candidate boxes for new classes so that high-IoU candidate boxes can be selected. We introduce an attention-based feature aggregation module (AFM) in RoI Align to aggregate feature information from different levels, which yields more comprehensive feature representations and alleviates the problem of missing feature information. The ablation experiments demonstrate the effectiveness of each component of IFA-FSOD. Experiments on two benchmark datasets, PASCAL VOC and MSCOCO, show that IFA-FSOD detects objects effectively in few-shot settings. Overall, IFA-FSOD shows potential for improving the performance of few-shot object detection.

IFA-FSOD introduces new ideas for object detection, especially for handling few-shot data and enhancing feature representation. However, every model has its limitations, and IFA-FSOD is no exception. The main limitations of the method are as follows: (1) The metric-based nonlinear classifier may increase the computational complexity of the RPN, especially when a large number of candidate boxes must be processed. This may increase the consumption of computing resources in the training and inference phases and affect real-time performance. (2) The AFM module enhances feature representation by aggregating feature information from different levels, but this also increases computation and memory consumption. In deep networks in particular, the feature maps are usually large and have many channels, which further aggravates resource consumption. (3) Although IFA-FSOD aims to improve the performance of few-shot object detection, its generalization ability may be limited. When faced with new categories or complex scenes that differ substantially from the training data, the model may not generalize effectively. (4) Although IFA-FSOD is designed for few-shot data, the model still needs a certain amount of labeled data for training. In some fields or tasks, high-quality annotated data may be difficult or expensive to obtain.

In future research, we will study in depth the dense relationships between similar and different features in order to learn a more adaptive feature model and further improve the performance of few-shot object detectors. For example, similar and different features can be fused to form a richer feature representation. Feature fusion techniques from deep learning can be used to fuse features of different scales and levels to improve the robustness and generalization ability of the feature model. Adaptive learning algorithms (such as online learning and incremental learning) can be used to update the feature model as new samples arrive, so that it adapts to changes in the object detection task. Knowledge learned from large-scale datasets can be transferred to few-shot object detection tasks through transfer learning and related methods to improve the performance of the detector. The feature model and the detector will then be optimized and improved according to the analysis results.

Author Contributions

Conceptualization, G.W.; methodology, Q.P.; software, K.F.; validation, Q.P.; investigation, K.F.; writing—original draft preparation, K.F.; writing—review and editing, Q.P.; visualization, G.W.; supervision, G.W.; project administration, G.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China (No. 62062007).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Acknowledgments

The suggestions from the relevant editors and reviewers have improved the quality of this paper, and we would like to express our sincere gratitude to them.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Shi, Y.; Shi, D.; Qiao, Z.; Zhang, Y.; Liu, Y.; Yang, S. A review of research on small sample object detection. J. Comput. Sci. 2023, 46, 1753–1780.

- Xin, Z.; Chen, S.; Wu, T.; Shao, Y.; Ding, W.; You, X. Few-shot object detection: Research advances and challenges. Inf. Fusion 2024, 107, 102307.

- Huang, Y.; Dou, H.; Xiao, G. Small Sample Object Detection Combining Classification Correction and Sample Amplification. Computer Engineering and Applications: 1-10 2023 [2022-11-20]. Available online: http://kns.cnki.net/kcms/detail/11.2127.TP.20221104.1529.020.html (accessed on 15 June 2024).

- Kohler, M.; Eisenbach, M.; Gross, H.-M. Few-Shot Object Detection: A Comprehensive Survey. [EB/OL]. [2023-11-10]. Available online: https://arxiv.org/pdf/2112.11699v2.pdf (accessed on 15 June 2024).

- Kang, B.; Liu, Z.; Wang, X.; Yu, F.; Feng, J.; Darrell, T. Few-shot object detection via feature reweighting. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 8420–8429.

- Wang, X.; Huang, T.E.; Darrell, T.; Gonzalez, J.E.; Yu, F. Frustratingly simple few-shot object detection. In Proceedings of the 2020 International Conference on Machine Learning, Virtual, 13–18 July 2020; PMLR: New York, NY, USA, 2020; pp. 9919–9928.

- Yan, X.; Chen, Z.; Xu, A.; Wang, X.; Liang, X.; Lin, L. Meta r-cnn: Towards general solver for instance-level low-shot learning. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 9577–9586.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Hu, H.; Bai, S.; Li, A.; Cui, J.; Wang, L. Dense relation distillation with context-aware aggregation for few-shot object detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 10185–10194.

- Han, G.; Huang, S.; Ma, J.; He, Y.; Chang, S.F. Meta faster r-cnn: Towards accurate few-shot object detection with attentive feature alignment. In Proceedings of the 2022 AAAI Conference on Artificial Intelligence, Virtual, 22 February–1 March 2022; AAAI: Menlo Park, CA, USA, 2022; Volume 36, pp. 780–789.

- Jeune, P.L.; Mokraoui, A. A Comparative Attention Framework for Better Few-Shot Object Detection on Aerial Images. [EB/OL]. [2023-11-10]. Available online: https://arxiv.org/pdf/2210.13923.pdf (accessed on 15 June 2024).

- Li, Y.; Feng, W.; Lyu, S.; Zhao, Q. Feature reconstruction and metric based network for few-shot object detection. Comput. Vis. Image Underst. 2023, 227, 103600.

- Li, A.; Li, Z. Transformation Invariant Few-Shot Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 3094–3102.

- Li, B.; Yang, B.; Liu, C.; Liu, F.; Ji, R.; Ye, Q. Beyond Max-Margin: Class Margin Equilibrium for Few-Shot Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 7363–7372.

- Han, G.; Ma, J.; Huang, S.; Chen, L.; Chang, S.F. Few-shot object detection with fully cross-transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 5321–5330.

- Xiao, Y.; Lepetit, V.; Marlet, R. Few-shot object detection and viewpoint estimation for objects in the wild. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 3090–3106.

- Fan, Q.; Zhuo, W.; Tang, C.K.; Tai, Y.W. Few-shot object detection with Attention-RPN and multi-relation detector. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 4012–4021.

- Zhang, L.; Zhou, S.; Guan, J.; Zhang, J. Accurate few-shot object detection with support-query mutual guidance and hybrid loss. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 14424–14432.

- Han, G.; He, Y.; Huang, S.; Ma, J.; Chang, S.F. Query adaptive few-shot object detection with heterogeneous graph convolutional networks. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 3263–3272.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2117–2125.

- Fan, Z.; Ma, Y.; Li, Z.; Sun, J. Generalized few-shot object detection without forgetting. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 4527–4536.

- Han, J.; Ren, Y.; Ding, J.; Yan, K.; Xia, G.S. Few-Shot Object Detection via Variational Feature Aggregation. In Proceedings of the 2023 AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; AAAI: Menlo Park, CA, USA, 2023; pp. 755–763.

- Sun, B.; Li, B.; Cai, S.; Yuan, Y.; Zhang, C. Fsce: Few-shot object detection via contrastive proposal encoding. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 7352–7362.

- Zhu, C.; Chen, F.; Ahmed, U.; Shen, Z.; Savvides, M. Semantic relation reasoning for shot-stable few-shot object detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 8782–8791.

- Qiao, L.; Zhao, Y.; Li, Z.; Qiu, X.; Wu, J.; Zhang, C. DeFRCN: Decoupled Faster R-CNN for few-shot object detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 8681–8690.

- Kaul, P.; Xie, W.; Zisserman, A. Label, verify, correct: A simple few shot object detection method. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 14237–14247.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 770–778.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Everingham, M.; Eslami, S.A.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The Pascal visual object classes challenge: A retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer Vision ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014, Proceedings, Part V 13; Springer: Berlin, Germany, 2014; pp. 740–755.

- Wang, Y.X.; Ramanan, D.; Hebert, M. Meta-learning to detect rare objects. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 9925–9934.

- Yao, J.; Shi, T.; Che, X.; Yao, J.; Wu, L. DA-FSOD: A Novel Augmentation Scheme for Few-Shot Object Detection. IEEE Access 2023, 11, 92100–92110.

- Wu, J.; Liu, S.; Huang, D.; Wang, Y. Multi-scale positive sample refinement for few-shot object detection. In Proceedings of the 2020 European Conference on Computer Vision, ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020, Proceedings, Part XVI 16; Springer: Cham, Switzerland, 2020; pp. 456–472.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).