Abstract

Human–robot skill transfer enables robots to learn skills from humans and adapt to new task-constrained scenarios. During task execution, robots are expected to react in real-time to unforeseen dynamic obstacles. This paper proposes an integrated human–robot skill transfer strategy with offline task-constrained optimization and real-time whole-body adaptation. Specifically, we develop the via-point trajectory generalization method to learn from only one human demonstration. To incrementally incorporate multiple human skill variations, we encode initial distributions for each skill with Joint Probabilistic Movement Primitives (ProMPs) by generalizing the template trajectory with discrete via-points and deriving corresponding inverse kinematics (IK) solutions. Given initial Joint ProMPs, we develop an effective constrained optimization method to incorporate task constraints in Joint and Cartesian space analytically to a unified probabilistic framework. A double-loop gradient descent-ascent algorithm is performed with the optimized ProMPs directly utilized for task execution. During task execution, we propose an improved real-time adaptive control method for robot whole-body movement adaptation. We develop the Dynamical System Modulation (DSM) method to modulate the robot end-effector through iterations in real-time and improve the real-time null space velocity control method to ensure collision-free joint configurations for the robot non-end-effector. We validate the proposed strategy with a 7-DoF Xarm robot on a series of offline and real-time movement adaptation experiments.

1. Introduction

Recent advances in flexible manufacturing have heightened demands to release traditional industrial robots from repetitive tasks under controlled conditions. Robots are expected to complete tasks alongside humans in a shared workspace, a setting known as human–robot coexistence [1]. Human–robot skill transfer plays a key role in enabling the robot to learn new skills from humans and adapt to new, constantly changing task scenarios. In this context, Learning from Demonstration (LfD) [2,3,4,5,6] frameworks have been proposed, where robots learn tasks by observing and imitating human demonstrations. A core component of LfD is Movement Primitives (MPs), which are well-established movement encoding methods with temporal and spatial modulation; complex tasks are accomplished by sequencing and combining different MPs [7].

For MPs learning, the basic-level capability refers to generalizing the task start, end, and intermediate points (via-points) under varying conditions while preserving the demonstration features, even for points outside the range (extrapolation ability); the research methods generally involve deterministic and probabilistic approaches. Deterministic methods, such as Dynamic Movement Primitives (DMPs) [8], model movements with forced nonlinear differential equations. DMPs generalize from a single demonstration but lack support for via-points or multiple trajectory statistics, and their extrapolation depends on start-to-goal relative distances. Probabilistic methods, such as Gaussian Mixture Models with Gaussian Mixture Regression (GMM-GMR) [9], Probabilistic Movement Primitives (ProMPs) [10], and Kernelized Movement Primitives (KMPs) [11], encode the mean and variance of multiple trajectories. GMM-GMR models the joint probability distributions using GMM and derives the task execution parameters via GMR, but struggles with via-point adaptation and extrapolation. ProMPs use basis functions and hierarchical Bayesian models to generalize via-points through conditional probability, but require extensive demonstrations and face extrapolation limits. KMPs avoid explicit basis functions, offering a non-parametric approach for high-dimensional tasks. They generalize via-points effectively and exhibit robust extrapolation, but suffer from numerical instability and high computational costs as timesteps increase. To combine deterministic and probabilistic approaches, Probabilistic Dynamic Movement Primitives (ProDMPs) [12] convert DMPs numerical integration into basis functions for encoding ProMPs, utilizing deep neural networks (NNs) for nonlinear trajectory conditioning. ProDMPs enhance via-point adaptation through NNs but exhibit limited extrapolation when via-points exceed the demonstration range.

The core level of MPs’ learning focuses on adapting MPs to unknown task scenarios, termed MPs adaptation. These scenarios involve task constraints, expressed as inequalities in Joint and Cartesian space, including position/velocity limits, obstacles, and virtual walls. Extensive research on MPs adaptation has been conducted under various frameworks. Deterministic methods, such as DMPs, enhance obstacle avoidance by applying repulsive forces in the acceleration domain for multiple obstacles [13] and non-point obstacles [14]. Position limits are addressed through exogenous state transformations [15], while velocity limits are managed via time-governed differential equations [16]. DMPs compute efficiently as constraints are directly integrated, avoiding iterative processes. However, they struggle with simultaneous Joint and Cartesian space constraints, particularly under multiple repulsive forces, leading to local minima. DMPs also lack adaptability to nonlinear constraints and exhibit limited generalization, as they are tailored to specific trajectories, restricting flexibility in goal-reaching. Probabilistic methods, such as ProMPs, encode variations through covariance matrices to satisfy task constraints. For example, ref. [17] combines ProMPs with Gaussian multiplications, introducing primitives to handle obstacles, but this requires anticipating all possible situations. Alternatively, ref. [18] optimizes ProMPs by excluding regions using the Kullback–Leibler (KL) divergence and reward functions, with policies derived via Relative Entropy Policy Search (REPS) [19], while ref. [20] minimizes the Mahalanobis distance to the original ProMPs. However, these methods focus solely on the end-effector, neglecting collisions for other links and constraints, like virtual walls or position/velocity limits. They are efficient but struggle to handle multiple combined constraints. To address these limitations, ref. [21] integrates multiple task constraints in Joint and Cartesian space into a unified probabilistic framework, optimizing ProMPs using the gradient ascent-descent algorithm. However, the covariance matrix of ProMPs basis functions can lose symmetric positive-definiteness during iteration, and optimizing timesteps that already satisfy the task constraints increases computational cost. KMPs replace explicit basis functions with a kernel matrix, extending to linear constraints (LC-KMPs) [22] via quadratic programming (QP). Further, ref. [23] handles nonlinear constraints (EKMPs) by approximating them with the Taylor expansion to solve with QP. While EKMPs improve efficiency over [21], they face numerical instability and high computational costs as timesteps grow, and their reliance on Taylor expansion for nonlinear constraints limits the adaptability due to inaccurate estimations.

When a robot adapts learned skills to new task-constrained scenarios, unexpected obstacles or human partners may appear during execution. Due to the significant replanning time required for MPs’ adaptation, real-time reactions are hindered, limiting applications to offline and static environments. Thus, real-time movement adaptation is crucial for dynamic environments, often categorized into global and local strategies. Global methods focus on convergence towards the goal. For instance, Rapidly Exploring Random Trees (RRT)* utilizes space-filling trees to find feasible paths with automatic sampling length [24], and Covariant Hamiltonian Optimization for Motion Planning (CHOMP) employs gradient optimization for motion planning [25]. These methods exhibit fast convergence but struggle in dynamic environments. Model Predictive Control (MPC) reduces adaptation to a finite time horizon [26], but defining cost functions is challenging. Reinforcement learning-based adaptation trains end-to-end strategies [27], but it lacks reliable convergence guarantees and requires extensive training. Local methods generate nonlinear control fields evaluated in real-time. Artificial Potential Fields (APF) [28] represent obstacles as repulsive fields, offering computational efficiency, but are prone to local minima. Harmonic Potential Functions (HPF) [29] ensure no critical points in free space but lack analytical solutions. Inspired by HPF, Dynamical System Modulation (DSM) [30] ensures convergence using fluid dynamics around obstacles, with stability proven via Lyapunov theory and extensions to handle multiple obstacles [31], real-time sensing [32], and rotation dynamics [33]. DSM demonstrates high efficiency for real-time obstacle avoidance in dynamic environments, providing closed-form solutions without local minima. However, DSM assumes no initial collisions and requires dynamic modulation in advance. 
If new positions from the last timestep encounter collisions during convergence to the goal, the robot may stop, limiting real-time performance. Additionally, DSM treats the robot as a zero-dimensional point, focusing only on the end-effector and ignoring collisions with other rigid links. In this context, null space velocity control [34] and task priority control [35] are proposed for non-end-effector real-time obstacle avoidance. However, they assume the obstacles to be stationary and only consider robot non-end-effector portions. The work [36] combines DSM with null space velocity control for whole-body adaptation, assigning retreat velocities to avoid collisions. However, this approach performs adaptation only once, and the retreat velocity norm limits non-end-effector competence.

This paper proposes a unified human–robot skill transfer strategy that integrates offline task-constrained optimization and real-time whole-body adaptation. The contributions are outlined as follows:

1. Considering basic-level MPs learning, to facilitate human–robot skill transfer, we develop the via-point trajectory generalization method enabling the robot to learn and generalize smooth trajectories from only one human demonstration. To incrementally incorporate multiple human skill variations, we encode initial distributions for each skill with Joint ProMPs by generalizing the template trajectory with discrete via-points and deriving corresponding inverse kinematics (IK) solutions.

2. Considering core-level MPs adaptation, given initial Joint ProMPs, we propose an effective task-constrained probabilistic optimization method integrating multiple task constraints in Joint and Cartesian space via a double-loop optimization. We decouple the ProMPs into Gaussians at each timestep, only optimizing those timesteps violating task constraints, and update ProMPs independently. Given optimized ProMPs, we propose the analytical movement adaptation method to utilize ProMPs directly for task execution with a threshold.

3. Considering real-time movement adaptation, during task execution, we propose an improved robot whole-body movement adaptation method, combining offline task-constrained optimization. For the robot end-effector, we incorporate an offline-optimized trajectory for dynamic modulation and develop DSM by performing multiple modulation iterations until safe; for the robot non-end-effector, we integrate the offline-optimized Joint ProMPs to improve the real-time null space velocity control method to ensure collision-free joint configurations through iterations.

4. We conduct both offline and online movement adaptation experiments with comparative results to validate the effectiveness of the proposed strategy.

2. Via-Point Trajectory Generalization and Initial Distributions Encoding

2.1. Learning New Skills in Cartesian Space with Via-Point Trajectory Generalization

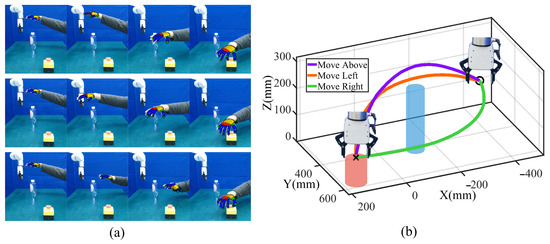

To endow the robot with skill diversity for obstacle avoidance, the human provides three modes, namely, moving above, left, and right around the obstacle. For each mode, only one human demonstration is required, and we utilize a markerless vision capture system, the Kinect FORTH [37], to track human hand centroid movements with position and orientation sets recorded in T timesteps. We adopt the Moving Average Filter (MAF) to ensure smoothness and transform to the robot base frame via hand-eye calibration.

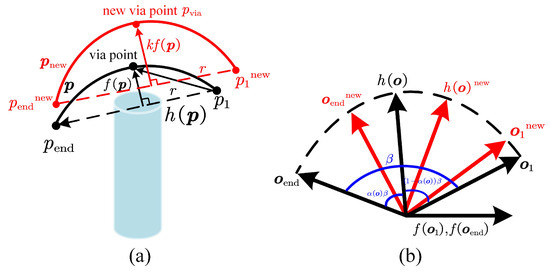

To learn skills denoted as positions and quaternions in Cartesian space, we express the demonstration trajectory as an integration of linear elementary trajectory h and nonlinear shape modulation f, and the schematic diagram of the proposed via-point trajectory generalization is shown in Figure 1.

Figure 1. The proposed via-point trajectory generalization schematic. (a) 3D via-point generalization with obstacles. The x, y, and z values are shown over T timesteps, with one via-point shown as an example. The blue cylinder denotes the obstacle, and the new via-point can be selected from above, left, or right of the obstacle for safe avoidance. (b) Orientation generalization using SLERP. Given new start and end orientations, the orientation is generalized by quaternion multiplication of the learned shape modulation and the new elementary trajectory.

For 3D positions, where each degree of freedom (DOF) is independent, we derive position sets with T timesteps in one DOF as follows:

The elementary trajectory is a line directly connecting the start point and the end point , with the shape modulation defined as follows:

where r is the scale factor denoting the proportion of projective points on the line ,

If no intermediate via-points are required to generalize, we directly generate new projective points between the new start point and end point according to r, and add learned shape modulation to generalize new trajectory sets :

Once a via-point is needed, we scale the shape modulation with the coefficient k:

Subsequently, new trajectory sets are generalized upon:
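As an illustrative sketch of the position generalization described above (not the authors' implementation), the following Python snippet splits a 1-DOF demonstration into a straight-line elementary trajectory and a shape modulation, then rebuilds it for new endpoints and an optional via-point. The function names, the uniform choice of the scale factor r, and the use of a single global coefficient k chosen so that the curve passes through the via-point are assumptions made for illustration.

```python
def learn_shape(demo):
    """Split a 1-DOF demonstration into a line h and a shape modulation f = demo - h."""
    T = len(demo)
    # Assumption: the scale factor r grows uniformly with the timestep index.
    r = [t / (T - 1) for t in range(T)]
    h = [demo[0] + ri * (demo[-1] - demo[0]) for ri in r]
    f = [y - hi for y, hi in zip(demo, h)]  # zero at both endpoints
    return r, f


def generalize(r, f, new_start, new_end, via=None):
    """Rebuild a trajectory for new endpoints; via is an optional (index, value) pair."""
    h_new = [new_start + ri * (new_end - new_start) for ri in r]
    k = 1.0  # no via-point: reuse the learned shape unchanged
    if via is not None:
        idx, value = via
        if abs(f[idx]) < 1e-9:
            raise ValueError("shape modulation vanishes at the via-point index")
        # Assumption: one global coefficient k rescales f so the curve hits the via-point.
        k = (value - h_new[idx]) / f[idx]
    return [hi + k * fi for hi, fi in zip(h_new, f)]


demo = [0.0, 1.0, 3.0, 2.0, 1.0]
r, f = learn_shape(demo)
shifted = generalize(r, f, 2.0, 4.0)                    # new start and end only
through_via = generalize(r, f, 2.0, 4.0, via=(2, 5.0))  # also pass through a via-point
```

Because the shape modulation is zero at both ends, scaling it by k changes the interior of the curve while leaving the new start and end points fixed.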

For quaternion sets, considering dimensions are affected by each other in orientation, we define the trajectory with:

where represents the quaternion sets, and × denotes quaternion multiplication. The elementary trajectory is derived using the spherical linear interpolation (SLERP) as follows:

where , is the ratio of SLERP, ranging from 0 to 1. and are the start and end points of the elementary trajectory:

In order to learn from demonstration, we set to distribute uniformly over T timesteps and set , to . Once new start quaternion sets and end quaternion sets are required during generalization, we compute a new elementary trajectory using Equations (8) and (9), with learned shape modulation superimposed to generate a new orientation trajectory using Equation (7). Note that there is no need to generalize via-points in orientation, as we can achieve via-points generalization in 3D positions while keeping the original orientations.
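The orientation counterpart can be sketched in the same spirit. The snippet below is a minimal illustration under stated assumptions: quaternions are stored as (w, x, y, z), the shape modulation is applied by left-multiplication onto the SLERP elementary trajectory (the multiplication order is our assumption), and all function names are hypothetical.

```python
import math


def qmul(a, b):
    """Hamilton product of two quaternions stored as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def qconj(q):
    """Conjugate, which equals the inverse for a unit quaternion."""
    return (q[0], -q[1], -q[2], -q[3])


def qnormalize(q):
    n = math.sqrt(sum(c * c for c in q))
    return tuple(c / n for c in q)


def slerp(q0, q1, s):
    """Spherical linear interpolation between unit quaternions, ratio s in [0, 1]."""
    d = sum(a * b for a, b in zip(q0, q1))
    if d < 0.0:  # take the shorter arc
        q1, d = tuple(-c for c in q1), -d
    if d > 0.9995:  # nearly identical: fall back to normalized linear interpolation
        return qnormalize(tuple(a + s * (b - a) for a, b in zip(q0, q1)))
    theta = math.acos(d)
    w0 = math.sin((1.0 - s) * theta) / math.sin(theta)
    w1 = math.sin(s * theta) / math.sin(theta)
    return qnormalize(tuple(w0 * a + w1 * b for a, b in zip(q0, q1)))


def learn_orientation_shape(demo):
    """Shape modulation f_t such that demo_t = f_t * h_t, with h_t the SLERP line."""
    T = len(demo)
    ratios = [t / (T - 1) for t in range(T)]  # uniform SLERP ratio over T timesteps
    shape = [qmul(q, qconj(slerp(demo[0], demo[-1], s))) for q, s in zip(demo, ratios)]
    return ratios, shape


def generalize_orientation(ratios, shape, new_start, new_end):
    """Superimpose the learned shape on a new SLERP elementary trajectory."""
    return [qnormalize(qmul(f, slerp(new_start, new_end, s)))
            for f, s in zip(shape, ratios)]


def rot_z(angle):
    """Unit quaternion for a rotation about the z axis (used only to build a demo)."""
    return (math.cos(angle / 2.0), 0.0, 0.0, math.sin(angle / 2.0))


demo = [rot_z(a) for a in (0.0, 0.3, 0.9, 1.0)]
ratios, shape = learn_orientation_shape(demo)
same = generalize_orientation(ratios, shape, demo[0], demo[-1])
moved = generalize_orientation(ratios, shape, rot_z(0.5), rot_z(2.0))
```

With this construction the shape modulation is the identity quaternion at both ends, so the generalized trajectory starts and ends exactly at the new orientations.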

2.2. Model Joint Space Distributions with Probabilistic Movement Primitives

Probabilistic Movement Primitives (ProMPs) [10] are probabilistic methods based on hierarchical Bayesian models to encode variances in Joint or Cartesian space. To promote integrating all nonlinear task constraints for the robot end effector and other links analytically, we model the Joint space distributions with ProMPs. Given the positions and orientations generalized in Section 2.1, we obtain all feasible joint sets by the Jacobian inverse kinematic (IK) algorithm [38]. , , N is the number of demonstrations, and D is the joint DOFs.

ProMPs model joint positions as a linear combination of Gaussian basis functions and a weight vector under the presence of a zero-mean Gaussian noise ,

Joint positions in one demonstration are expressed with the weight vector via linear ridge regression [39]. Using parameters to estimate the weight vector with Gaussian distributions , the probability of observing a trajectory given becomes:

where and denote the mean and covariance of the weight vector, which are derived by maximum likelihood estimation, and is the covariance of the Gaussian noise. When it comes to new task conditions , where and are the expected mean and covariance of the joint positions at time t, the updated parameters can be computed based on Bayesian theory as follows:

where denotes the basis function at time t. The Joint positions under new task conditions are sampled from Gaussian distributions as follows:
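For reference, the quantities named in this subsection can be written out in the standard ProMPs notation of [10]. The symbols below are our assumption of that notation and may differ from the original equations: $\Psi_t$ is the basis function matrix at time $t$, $w$ the weight vector, $\Sigma_y$ the noise covariance, and $(y_t^{*}, \Sigma_y^{*})$ the new task condition.

```latex
% Observation model and trajectory likelihood
y_t = \Psi_t^{\top} w + \epsilon_y, \qquad \epsilon_y \sim \mathcal{N}(0, \Sigma_y)

p(\tau \mid w) = \prod_{t=1}^{T} \mathcal{N}\!\left(y_t \mid \Psi_t^{\top} w,\; \Sigma_y\right), \qquad
p(w; \theta) = \mathcal{N}\!\left(w \mid \mu_w, \Sigma_w\right)

% Conditioning on a new task condition (y_t^*, \Sigma_y^*)
L = \Sigma_w \Psi_t \left(\Sigma_y^{*} + \Psi_t^{\top} \Sigma_w \Psi_t\right)^{-1}

\mu_w^{\mathrm{new}} = \mu_w + L \left(y_t^{*} - \Psi_t^{\top} \mu_w\right), \qquad
\Sigma_w^{\mathrm{new}} = \Sigma_w - L\, \Psi_t^{\top} \Sigma_w

% Joint positions under the new condition
y_t \sim \mathcal{N}\!\left(\Psi_t^{\top} \mu_w^{\mathrm{new}},\; \Psi_t^{\top} \Sigma_w^{\mathrm{new}} \Psi_t + \Sigma_y\right)
```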

3. Offline Optimization and Adaptation Under Nonlinear Task Constraints

After generalizing feasible collision-free trajectories with initial ProMPs distribution encoded, we put forward the optimization method under various nonlinear task constraints. Given optimized ProMPs distribution, we present the movement adaptation method for task execution. We formulate the constrained optimization problem in Section 3.1, provide analytical expressions of task constraints in Section 3.2, and summarize the complete procedure in Section 3.3.

3.1. Problem Formulation

Given initial ProMPs distribution , the main objective is to obtain the optimized ProMPs distribution , which is as close as possible to the original distribution while satisfying task constraints. We use the Kullback–Leibler divergence to represent the similarity between optimized and initial distributions owing to its analytical tractability, while expressing the constraints with cumulative distribution functions. However, in this paper, considering ProMPs model every timestep as an independent Gaussian distribution, we optimize the parameters of each timestep separately. Specifically, we convert the initial distribution to the mean and standard deviation of each timestep t as follows:

For each timestep t, the new mean and standard deviation are optimized under task constraints separately. Let denote the function of the task constraints related to , and denote the cumulative distribution function (CDF) of the random variable defined by , then we can rewrite the optimization problem as a Lagrangian function:

where is the confidence level to represent the probability satisfying task constraints , and H represents the one-side or two-side inequality constraints; the optimized mean and standard deviation are derived by gradient ascent-descent methods as follows:

Subsequently, given , we implement another optimization step to obtain the optimized ProMPs distribution with:

In Section 3.2, we formulate every task constraint by defining the function related to , expressing the inequality constraint function H and approximating the cumulative distribution function ; the optimized results are then numerically iterated based on automatic differentiation.

3.2. Constraints Definitions in Joint Space and Cartesian Space

A robot may encounter constraints in Joint space and Cartesian space at one time. Joint space constraints depend directly on of each joint freedom, which usually refer to the motion range of the robot’s links. The Cartesian space constraints depend on the nonlinear forward kinematics function of , standing for the 3D positions of the end effector or any other interest points on the robot links. In the following, we formulate several key constraints commonly used in obstacle avoidance, like the joint range limit, waypoints, hyperplane, and repellers.

3.2.1. Joint Range Limit

The joint range limit indicates the limit motion range in joint space based on the robot’s configuration or the user’s expectation. Since the limit motion range is usually ensured with the forward kinematics (FK) function, in this paper we focus on retaining the original joint range limit learned with ProMPs as much as possible. We formulate the constraint function as , and denote the two-sided constraint with:

where k denotes the kth joint freedom, is the confidence level, and and are the minimum and maximum of the joint positions from demonstration, respectively. Due to the Gaussian distributions of the joint positions, we calculate the difference between the CDF of the maximum and minimum points as follows:

where F denotes the CDF of the Gaussian distributions.
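A minimal sketch of this two-sided check for a single joint and timestep is given below. The function names and the example numbers are hypothetical; the only ingredient taken from the text is the difference between the Gaussian CDF at the maximum and minimum joint positions, compared with the confidence level.

```python
import math


def gauss_cdf(x, mu, sigma):
    """CDF of a univariate Gaussian N(mu, sigma^2)."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def joint_range_probability(mu, sigma, q_min, q_max):
    """Probability that the joint position lies within [q_min, q_max]."""
    return gauss_cdf(q_max, mu, sigma) - gauss_cdf(q_min, mu, sigma)


def satisfies_joint_range(mu, sigma, q_min, q_max, alpha):
    """True if the range constraint holds with confidence level alpha."""
    return joint_range_probability(mu, sigma, q_min, q_max) >= alpha


# Hypothetical joint: mean 0.2 rad, std 0.1 rad, demonstrated range [-0.1, 0.5] rad.
prob = joint_range_probability(0.2, 0.1, -0.1, 0.5)
ok = satisfies_joint_range(0.2, 0.1, -0.1, 0.5, alpha=0.95)
```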

3.2.2. Waypoints

Waypoints are Cartesian positions on the robot’s end effector or any other interest points that we expect the robot to reach at timestep t or a temporal interval . Waypoints are defined in the Cartesian space with the FK function T to associate with some DOFs of the joint positions. Based on the distance square between the expected points and the actual points, we denote the waypoint constraint function as follows:

And express the one-side constraint with:

where is the distance threshold and is the confidence level. Due to the nonlinear nature of the constraint function, we cannot derive the exact distribution of with Bayesian theory. To tackle the problem, the Unscented Transform [40] method is adopted in [21]. However, the initial factor deeply influences the estimation result; when the mean and standard deviation change, the factor cannot reflect the variance of the constraint function precisely. Alternatively, in this paper, we utilize moment matching to estimate the distribution:

where D is the number of DOFs required to express the constraint function. We use twice the standard deviation to constitute the set S and compute the mean and variance based on moment matching. Given that the distribution of the random variable is a generalized-χ² distribution, we approximate it with a simpler Gamma distribution to represent the CDF analytically. The shape and rate of the Gamma distribution are derived as follows:

The CDF of the Gamma distribution is then computed from the derived shape and rate to represent the waypoint constraint probability via Equation (22).
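The Gamma approximation can be illustrated with a short sketch. We assume the mean and variance of the squared distance have already been estimated by moment matching; matching the first two moments of a Gamma distribution then gives shape = mean²/variance and rate = mean/variance. The CDF is evaluated with a power series for the regularized lower incomplete gamma function, chosen here only to keep the example dependency-free; the function names are hypothetical.

```python
import math


def gamma_from_moments(mean, var):
    """Shape and rate of the Gamma distribution matching a given mean and variance."""
    shape = mean * mean / var
    rate = mean / var
    return shape, rate


def gamma_cdf(x, shape, rate, tol=1e-12, max_terms=10000):
    """Gamma CDF via the series of the regularized lower incomplete gamma function."""
    if x <= 0.0:
        return 0.0
    z = rate * x
    term = 1.0
    total = 1.0
    for n in range(1, max_terms):
        term *= z / (shape + n)  # z^n / ((shape + 1) ... (shape + n))
        total += term
        if term < tol * total:
            break
    # Prefactor z^shape * exp(-z) / Gamma(shape + 1), computed in log space.
    log_prefactor = shape * math.log(z) - z - math.lgamma(shape + 1.0)
    return min(1.0, math.exp(log_prefactor) * total)


# Hypothetical moments of the squared distance to the waypoint (m^2 and m^4).
shape, rate = gamma_from_moments(mean=2.0, var=4.0)
# Probability that the squared distance stays below a hypothetical threshold of 4.0.
prob_within = gamma_cdf(4.0, shape, rate)
```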

3.2.3. Hyperplane

During motion, the robot is required to be confined to specific planes called the hyperplane or the virtual wall. Hyperplane constraints are defined in the Cartesian space related to the positions of the robot’s end effector or any other interest points. We can formulate a hyperplane constraint function as follows:

where and are the normal and bias vectors of the hyperplane. The one-side inequality constraint is expressed with:

The distribution of is a linear transformation of the robot’s 3D positions ; however, is nonlinear in . We thus use our moment-matching method via Equation (23) to obtain the estimated mean and variance . The overall distribution of can be derived by:

Subsequently, the CDF of is computed using Gaussian distributions to represent the hyperplane constraint probability via Equation (26). Note that we can impose hyperplane constraints at a timestep or a temporal interval, and combine several hyperplane constraints to represent more complex convex domains.
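As a sketch of the last step only, assume the mean and covariance of the 3D position have already been estimated by moment matching. A hyperplane value of the form n·p − b (with a scalar offset b, which is our simplifying assumption) is then Gaussian with mean n·m − b and variance nᵀΣn, and the constraint probability follows from the Gaussian CDF. The sign convention (staying on the non-negative side) and all names are hypothetical.

```python
import math


def hyperplane_probability(normal, offset, mean_pos, cov_pos):
    """P(n . p - b >= 0) for a Gaussian position p ~ N(mean_pos, cov_pos)."""
    m = sum(n * p for n, p in zip(normal, mean_pos)) - offset
    v = sum(normal[i] * cov_pos[i][j] * normal[j]
            for i in range(3) for j in range(3))
    # For c ~ N(m, v), P(c >= 0) equals the standard normal CDF at m / sqrt(v).
    return 0.5 * (1.0 + math.erf(m / math.sqrt(2.0 * v)))


# Hypothetical virtual floor at z = 0.5 m; the point of interest sits at z = 0.7 m
# with an isotropic position standard deviation of 0.1 m.
cov = [[0.01, 0.0, 0.0], [0.0, 0.01, 0.0], [0.0, 0.0, 0.01]]
prob_above = hyperplane_probability((0.0, 0.0, 1.0), 0.5, (0.0, 0.0, 0.7), cov)
```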

3.2.4. Repellers

The robot needs to avoid obstacles in the environment while performing tasks. Constraints between the robot and obstacles are called repeller constraints. Repeller constraints are imposed not only on the robot end effector but also on any other danger points on the robot link. In this paper, we choose three segments on the robot link in each direction (front, back, left, and right) and use cylinders to envelop obstacles. Since only situations where the robot is apt to collide are relevant, we define the danger distance to express the repeller constraints. For three segments in four directions, we compute the shortest distance between segments on the robot link and the axis segment, with the minimum distance found afterward. If the minimum distance is greater than the safety radius of obstacles, the danger distance is 0; otherwise, the danger distance is computed as . We formulate the repeller constraint function as follows:

Furthermore, we express the one-side inequality constraint to show the probability that the minimum distance is greater than the safety radius:
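The geometric part of the repeller constraint can be sketched as follows. The segment-to-segment distance uses the standard clamped closest-point computation. The danger distance formula is not recoverable from the text, so the form used here (safety radius minus minimum distance, clipped at zero) is an assumption, as are the function names.

```python
import math


def _sub(a, b):
    return [x - y for x, y in zip(a, b)]


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _clamp01(x):
    return max(0.0, min(1.0, x))


def segment_distance(p1, q1, p2, q2, eps=1e-12):
    """Shortest distance between segments p1-q1 and p2-q2 in 3D."""
    d1, d2, r = _sub(q1, p1), _sub(q2, p2), _sub(p1, p2)
    a, e, f = _dot(d1, d1), _dot(d2, d2), _dot(d2, r)
    if a <= eps and e <= eps:          # both segments degenerate to points
        s = t = 0.0
    elif a <= eps:                     # first segment is a point
        s, t = 0.0, _clamp01(f / e)
    else:
        c = _dot(d1, r)
        if e <= eps:                   # second segment is a point
            t, s = 0.0, _clamp01(-c / a)
        else:
            b = _dot(d1, d2)
            denom = a * e - b * b      # zero when the segments are parallel
            s = _clamp01((b * f - c * e) / denom) if denom > eps else 0.0
            t = (b * s + f) / e
            if t < 0.0:
                t, s = 0.0, _clamp01(-c / a)
            elif t > 1.0:
                t, s = 1.0, _clamp01((b - c) / a)
    c1 = [p + s * d for p, d in zip(p1, d1)]
    c2 = [p + t * d for p, d in zip(p2, d2)]
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(c1, c2)))


def danger_distance(link_segments, axis_segment, safety_radius):
    """Assumed form: max(0, safety_radius - minimum link-to-axis distance)."""
    d_min = min(segment_distance(p, q, *axis_segment) for p, q in link_segments)
    return max(0.0, safety_radius - d_min)


# Hypothetical link segments and a vertical cylinder axis.
links = [((-1.0, 0.0, 0.0), (1.0, 0.0, 0.0)), ((-1.0, 3.0, 0.0), (1.0, 3.0, 0.0))]
axis = ((0.0, -1.0, 2.0), (0.0, 1.0, 2.0))
danger = danger_distance(links, axis, safety_radius=2.5)
```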

3.3. Optimization and Adaptation Procedure

3.3.1. Optimization Procedure

Based on the Lagrangian function in Equation (16) and the constraint functions defined in Section 3.2, we concretize the optimization procedure to obtain the optimal parameters. For notational brevity, we use the notation and to denote the initial parameters and optimized parameters. We can rewrite the Lagrangian function in Equation (16) as follows:

The term denoting the KL divergence between two univariate Gaussian distributions is further derived by:

We express the optimized parameters derived in Equation (17) as follows:

To solve the problem, we utilize a double-loop algorithm based on the gradient ascent-descent method. For the outer loop, we optimize with the gradient quasi-ascent steps. The Exponential Method of Multipliers (EMM) [41] is suitable for solving the inequality constraints of the Lagrangian function via exponential factors. We can express the optimized after each iteration as , where decides the convergence speed; the gradient of is obtained by . For the inner loop, we optimize with the gradient descent method. The L-BFGS method [42] is tailored for solving nonlinear optimization problems with low computing and memory costs. We perform several L-BFGS steps in , with the gradient computed using the automatic differentiation frameworks in TensorFlow [43]. The convergence conditions are checked after each L-BFGS iteration to speed up convergence. After we obtain the optimized , we directly perform several L-BFGS steps to obtain the optimized based on Equation (18).
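The double-loop structure can be illustrated for a single timestep and a single joint-range constraint. This is a simplified sketch under several assumptions, not the procedure used in the paper: the inner loop uses plain gradient descent with finite-difference gradients in place of L-BFGS with automatic differentiation, the KL divergence is taken from the optimized to the initial Gaussian, the Lagrangian is written as KL + λ(α − P), and the multiplier follows a multiplicative exponential update with a hypothetical step size eta.

```python
import math


def gauss_cdf(x, mu, sigma):
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def kl_gauss(mu, sigma, mu0, sigma0):
    """KL( N(mu, sigma^2) || N(mu0, sigma0^2) ) for univariate Gaussians."""
    return math.log(sigma0 / sigma) + (sigma ** 2 + (mu - mu0) ** 2) / (2.0 * sigma0 ** 2) - 0.5


def constraint_prob(mu, sigma, lo, hi):
    """Probability that the joint position stays within [lo, hi]."""
    return gauss_cdf(hi, mu, sigma) - gauss_cdf(lo, mu, sigma)


def lagrangian(x, lam, mu0, sigma0, lo, hi, alpha):
    mu, sigma = x[0], math.exp(x[1])  # optimize log(sigma) to keep sigma positive
    return kl_gauss(mu, sigma, mu0, sigma0) + lam * (alpha - constraint_prob(mu, sigma, lo, hi))


def optimize_timestep(mu0, sigma0, lo, hi, alpha=0.95, eta=5.0,
                      outer=40, inner=300, lr=0.05, h=1e-6):
    x = [mu0, math.log(sigma0)]
    lam = 1.0
    for _ in range(outer):
        # Inner loop: descend on (mu, log sigma) for the current multiplier.
        for _ in range(inner):
            grad = []
            for i in range(2):
                xp, xm = list(x), list(x)
                xp[i] += h
                xm[i] -= h
                grad.append((lagrangian(xp, lam, mu0, sigma0, lo, hi, alpha)
                             - lagrangian(xm, lam, mu0, sigma0, lo, hi, alpha)) / (2.0 * h))
            x = [xi - lr * gi for xi, gi in zip(x, grad)]
        # Outer loop: exponential multiplier update, growing while the constraint is violated.
        p = constraint_prob(x[0], math.exp(x[1]), lo, hi)
        lam *= math.exp(eta * (alpha - p))
    return x[0], math.exp(x[1]), lam


mu_opt, sigma_opt, lam_opt = optimize_timestep(0.0, 1.0, -1.0, 1.0)
```

In this toy case the initial Gaussian N(0, 1) places only about 68% of its mass inside [-1, 1], so the optimization narrows the standard deviation until the confidence level is met while staying as close as possible to the initial distribution.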

3.3.2. Adaptation Procedure

Subsequently, given optimized ProMPs distribution , we present the complete offline robot movement adaptation procedure. During the learning phase, the human first demonstrates three skills to avoid a single obstacle. The human hand centroid positions and orientations are equivalent to the robot end effector trajectories. For each mode, we generalize trajectory sets to the same start and end points, normalized to the same timestep T, to formulate the template trajectory. Aiming to generate feasible collision-free trajectory sets, we choose feature 3D via-points with equal intervals to generalize the initial trajectories (see Section 2.1). We check the collisions and fine-tune the via-points with threshold to construct initial collision-free trajectories and obtain corresponding joint position sets . We encode the initial joint distributions with ProMPs (see Section 2.2) as . To speed up optimization, we first find feature timesteps that actually violate task constraints, then impose task constraints at those timesteps and optimize the ProMPs distributions (see Section 3.3.1). The optimized ProMPs , the mean and standard deviation are treated as safe joint distributions.

During task execution, the robot first determines the start and end point by visual information, next randomly selects the running mode from three modes , then samples the via-points with Gaussian distributions as . The mean and standard deviation are computed from the initial via-points range. The trajectory is then generalized and fine-tuned to ensure safety according to the sampled via-point. The optimized and represent the joint limit range as with the initial joint value denoted as . The joint limit range and initial value are passed to the Jacobian IK algorithm to test whether a solution is found. The number of feature timesteps with a successful IK solution (the success count) decides whether the robot can perform the task; that is, if the success count exceeds a threshold, the robot directly runs the generalized trajectory; otherwise, the robot continues sampling until success. To obtain the threshold automatically, we perform equally spaced sampling over the initial via-points range, record the success result and corresponding success count, then set the threshold at the turning point between success and failure. Note that if there are no feature timesteps that violate the task constraints, the robot only needs to generalize the trajectory without optimizing the distribution. Meanwhile, if any of the three modes cannot be optimized, that is, the probability of satisfying the task constraints always equals 0, we eliminate the mode from the optional modes. The complete offline optimization and adaptation procedure is outlined in Algorithm 1.

Algorithm 1. Robotic offline task-constrained optimization and adaptation algorithm.

4. Real-Time Whole-Body Movement Adaptation

Following offline movement adaptation under the static scene, the robot needs to react in real-time to immediate changes in the dynamic environment, for instance, multiple stationary or moving obstacles, new target points, etc. The analytical optimization process in Section 3 cannot fulfill the real-time demands. In this context, real-time dynamic modulation upon velocity control provides a promising solution. To be specific, the offline task execution trajectory can be treated as an initial dynamical system; the objective is to obtain the modulated system for real-time robot whole-body movement adaptation including end-effector and other links (non-end-effector). We present the end-effector and non-end-effector adaptation methods in Section 4.1 and Section 4.2.

4.1. Real-Time End-Effector Adaptation

In this paper, we assume O convex obstacles around the robotic arm. Movement adaptation for the end-effector is achieved in Cartesian space by modulating the 3D positions while keeping the original orientations. Consider a state variable denoting the end effector 3D positions; its temporal dynamics is governed by an autonomous (time-invariant) and continuous function as follows:

Given the initial state , the trajectory can be iteratively computed by integrating according to:

where t is a positive integer and is the integration time step.

For each obstacle , we define a distance function with a continuous first-order partial derivative and monotonically increasing value away from the obstacle center to distinguish the space around an obstacle into three regions:

To envelop obstacles, we construct the ellipsoid-shaped function as follows:

where is the i-th axis length and is a positive curvature parameter.

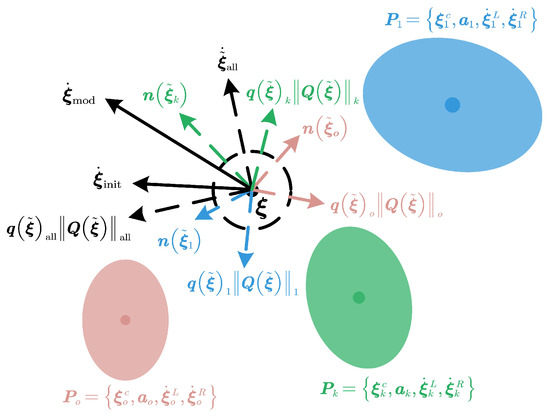

As shown in Figure 2, we parametrize the k-th obstacle (static or moving) as a vector , where is the axis length, and are the linear and angular velocity with respect to the center . Note that the static obstacle is a special case where the angular and linear velocities equal 0. Real-time obstacle avoidance for the end effector is achieved via a dynamic modulation matrix, given that the local modulation matrix does not introduce new extrema and offers a closed-form solution at low computational cost [44]. To be generic, we derive the modulated trajectory from the original dynamical system (DS) in Equation (33) as follows:

where denotes the relative velocity induced by the obstacle motion. The modulation matrix is composed of the orthogonal basis matrix and the corresponding eigenvalue matrix as follows:

Figure 2. Dynamical system modulation for the robot end-effector.

For notational brevity, we define the relative position to the obstacle center . Based on Equation (36), we derive the deflection hyperplane at point with the normal vector denoted as the distance function gradient :

Given the normal vector, we formulate the basis matrix as follows:

where the tangent vectors are orthonormal to the normal as follows:

We define the related diagonal eigenvalue matrix to modify the normal and tangent directions independently with eigenvalues. The normal eigenvalue is selected to ensure impenetrability, that is, the eigenvalues should be zero on the obstacle surface. Meanwhile, the tangent eigenvalue should have the same magnitude in each direction to increase the velocity for moving around the obstacle. We formulate as follows:

Considering the safety margin, reactivity, and tail-effect in [30], we define the eigenvalue as follows:

where is the safety magnitude for each direction, and is the reactivity parameter to determine the velocity modulation amplitude, that is, the larger the , the earlier a robot reacts to the obstacle. To remedy the tail-effect, we check to evaluate whether the end effector is moving towards (negative) or away (positive) from the obstacle.
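The effect of the normal and tangent eigenvalues can be sketched as follows. Because the tangent vectors are orthonormal to the normal and share one eigenvalue, applying the modulation matrix is equivalent to scaling the normal and tangential velocity components separately. The eigenvalue formulas below (1 minus and 1 plus an inverse power of the distance value) are the commonly used ones and are an assumption here; the safety magnitude from the paper is omitted, and `modulate` is a hypothetical name.

```python
import math


def modulate(velocity, normal, gamma_value, rho=1.0):
    """Deflect `velocity` around an obstacle with outward normal `normal`.

    Assumes gamma_value >= 1 (the point is on or outside the surface).
    rho plays the role of the reactivity parameter: a larger rho makes the
    modulation start farther from the obstacle.
    """
    norm = math.sqrt(sum(n * n for n in normal))
    n_hat = [n / norm for n in normal]
    v_n = sum(v * n for v, n in zip(velocity, n_hat))  # signed normal speed

    decay = 1.0 / gamma_value ** (1.0 / rho)
    lam_t = 1.0 + decay
    # Tail-effect remedy: only damp the normal component when approaching.
    lam_n = 1.0 - decay if v_n < 0.0 else 1.0

    return [
        lam_n * v_n * n + lam_t * (v - v_n * n)
        for v, n in zip(velocity, n_hat)
    ]


# On the surface (gamma = 1) the approaching normal component is removed.
print(modulate([-1.0, 1.0, 0.0], [2.0, 0.0, 0.0], 1.0))
```

On the obstacle surface the normal eigenvalue is zero, so an approaching velocity keeps only its tangential part, which is what makes the obstacle impenetrable in this sketch.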

When it extends to multiple obstacles, as in Figure 2, we encapsulate all obstacles to create a single virtual obstacle by the weighted mean of each modulated DS in Equation (37). The benefit is that we only need to apply modulation once to ensure collision avoidance. Considering the impenetrability of the O obstacles and that the influence from other obstacles vanishes when approaching one obstacle boundary, we set the weight inversely proportional to the distance measure as follows:
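A minimal sketch of inverse-distance weighting for several obstacles is given below. It assumes the distance measure of each obstacle is its distance value minus one, so that the measure is zero on the surface; the product form and the function name `obstacle_weights` are assumptions chosen so that the weights sum to one and the weight of an obstacle tends to one as its boundary is approached.

```python
def obstacle_weights(gammas):
    """Weights proportional to prod_{i != k} (gamma_i - 1), normalised to 1.

    This is equivalent to weighting each obstacle by 1 / (gamma_k - 1),
    but stays finite when a boundary is reached (gamma_k = 1).
    """
    dists = [g - 1.0 for g in gammas]
    raw = []
    for k in range(len(dists)):
        prod = 1.0
        for i, d in enumerate(dists):
            if i != k:
                prod *= d
        raw.append(prod)
    total = sum(raw)
    if total == 0.0:
        # Two or more boundaries touched at once: split equally among them.
        touched = [1.0 if d == 0.0 else 0.0 for d in dists]
        return [t / sum(touched) for t in touched]
    return [r / total for r in raw]


print(obstacle_weights([2.0, 3.0]))  # the closer obstacle gets the larger weight
print(obstacle_weights([1.0, 3.0]))  # on the first boundary, only it counts
```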

Given Equations (33) and (37), to integrate O obstacles, for simplicity, we first compute the weighted mean of the relative velocity induced by the obstacle motion as follows:

where we add the weight term to smooth the distance influence.

In this paper, the combined weighted mean of refers to the initial velocity computed from the offline task execution trajectory (see Section 3.3.2) with position differencing. To be specific, is a nonlinear function of , which can be computed from the trajectory generalization method presented in Section 2.1 given the current position . We further simplify Equation (37) as follows:

where and represent the combined magnitude and direction of .

For the magnitude, given separate equivalent magnitude for each obstacle from Equation (37) and the weight in Equation (44), we can obtain:

where is obtained from Equation (37):

Concerning the combined direction, given each equivalent direction , following [31], we define the function to project the 3D original space onto a 2D hyper-sphere space with radius as follows:

where is the projection matrix formulated from the unit vector for the initial velocity along with two orthonormal bases :

We then evaluate the weighted mean in this space as follows:

with the combined direction expressed back to the original 3D space as follows:

The final modulated velocity and position for the O obstacles can be computed with Equation (46) and velocity integration (Equation (34)). To sum up, the complete real-time robot end-effector adaptation method comprises the following steps: First, we obtain the initial task execution trajectory with T timesteps and integration steps after the offline optimization and adaptation procedure in Section 3.3.2. Given the initial velocity at with position differencing, the modulated velocity is computed from Equation (46) in the presence of dynamic obstacles. We then apply velocity integration (Equation (34)) to obtain the expected position for the next timestep. The offline trajectory beyond the current timestep is treated as a template trajectory. Given the current position and the changing target point, we utilize the proposed trajectory generalization method in Section 2.1 to generate the remaining trajectory and compute the velocity via position differencing. The velocity is treated as the initial velocity at the next timestep, and we apply modulation iteratively to obtain the whole modulated trajectory with the expected velocity for the robot end-effector. Note that if the modulated positions collide, we treat them as initial positions and modulate them iteratively until safe.

During the real-time end-effector modulation process, we leverage the demonstration information from the offline optimization phase through trajectory generalization, preserving the human demonstration characteristics. The initial velocity at the next timestep is determined based on the generalized trajectory, rather than being arbitrarily chosen, which integrates offline optimization and online modulation. The end-effector modulation provides the modulated velocity and the desired position at each timestep, which are utilized for subsequent real-time non-end-effector adaptation.

4.2. Real-Time Non-End-Effector Adaptation

We ensure real-time obstacle avoidance for the robot end effector in Section 4.1; however, the potential collisions between other robot links (non-end-effector) and obstacles are not considered. To achieve real-time non-end-effector adaptation, given the expected velocity in Cartesian space, we utilize the robot joint redundancy with null-space velocity control. In particular, the 7-DoF manipulator is considered in this paper; its end effector velocity and joint velocity conform to:

where is a Jacobian matrix. Considering is not a square matrix, given the expected end-effector velocity , the joint velocity is derived by:

where is the generalized inverse and is the null space matrix of ; that is, whatever the value of the 7-dimensional joint velocity vector , the resulting end-effector velocity remains 0. To derive , we minimize the norm error between and with the least-squares solution satisfying the Moore–Penrose inverse [45]. We rewrite Equation (54) with as follows:

The term provides distinct joint velocity configurations resulting in the same end-effector velocity.
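The role of the null-space term can be illustrated on a toy example. The sketch below uses a planar 3-link arm with a 2D position task (one redundant degree of freedom) as a stand-in for the 7-DoF manipulator; the link lengths, joint values, and helper names are hypothetical. It combines the right pseudoinverse with the projector built from the identity minus the pseudoinverse times the Jacobian, and shows that two different secondary joint velocities yield the same end-effector velocity.

```python
import math


def jacobian(q, lengths=(0.3, 0.3, 0.2)):
    """2x3 position Jacobian of a planar 3-link arm (toy stand-in)."""
    angles = [q[0], q[0] + q[1], q[0] + q[1] + q[2]]
    row_x = [-sum(lengths[k] * math.sin(angles[k]) for k in range(j, 3))
             for j in range(3)]
    row_y = [sum(lengths[k] * math.cos(angles[k]) for k in range(j, 3))
             for j in range(3)]
    return [row_x, row_y]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def pinv_full_row_rank(j):
    """Right pseudoinverse J^T (J J^T)^-1 for a full-row-rank 2 x n matrix."""
    jt = [list(col) for col in zip(*j)]
    (a, b), (c, d) = matmul(j, jt)
    det = a * d - b * c
    inv = [[d / det, -b / det], [-c / det, a / det]]
    return matmul(jt, inv)


def joint_velocity(j, x_dot, q0_dot):
    """q_dot = J^+ x_dot + (I - J^+ J) q0_dot."""
    j_pinv = pinv_full_row_rank(j)
    jpj = matmul(j_pinv, j)
    n = len(q0_dot)
    null = [[(1.0 if r == c else 0.0) - jpj[r][c] for c in range(n)]
            for r in range(n)]
    primary = [sum(j_pinv[r][k] * x_dot[k] for k in range(2)) for r in range(n)]
    secondary = [sum(null[r][c] * q0_dot[c] for c in range(n)) for r in range(n)]
    return [p + s for p, s in zip(primary, secondary)]


q = [0.4, 0.7, -0.5]
J = jacobian(q)
x_dot = [0.05, -0.02]
qd_a = joint_velocity(J, x_dot, [0.0, 0.0, 0.0])
qd_b = joint_velocity(J, x_dot, [0.5, -0.2, 0.1])
print(qd_a)
print(qd_b)
```

The two joint velocity vectors differ, yet multiplying either by the Jacobian recovers the same task velocity; this freedom is what the distribution-preserving and collision-avoiding terms exploit.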

Considering multiple solutions for the joint velocity vector in Equation (54), we aim to ensure that retains the joint velocity distributions of the 7DoF ProMPs during offline optimization while minimizing the norm error between and in Equation (54). This allows us to integrate offline task-constrained optimization and real-time movement adaptation for robot whole-body control with Joint ProMPs. To derive the joint velocity distributions, at the current time step t, the mean and standard deviation of the joint velocities are computed based on the joint positions and are expressed in the form of ProMPs basis functions as follows:

where

where represents the optimized mean and standard deviation of the joint positions with denoting the optimized mean and covariance matrix of the 7DoF Joint ProMPs. To simplify the notation, we omit the superscript t. Given that the modulation is performed at each timestep, the mean and standard deviation of the joint velocities at each timestep are expressed with and .

To minimize the norm in Equation (54), while ensuring that the resulting joint velocity vector adheres to the optimized distribution at each time step, we define the loss function as follows:

We further substitute Equation (54) into the above equation to obtain:

where is the regularization term. By minimizing with respect to , we obtain the joint velocity vector as follows:

where represents the diagonal covariance matrix composed of the standard deviations for the offline-optimized 7DoF joint velocities.

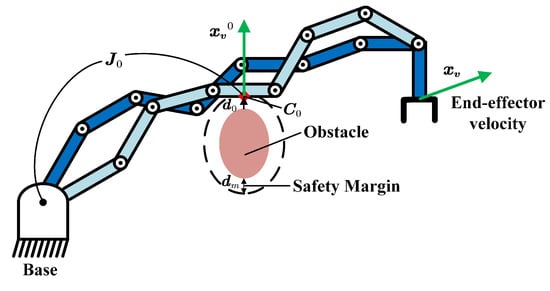

In addition to preserving the joint distribution, we also need to perform real-time collision detection between the robot’s links and obstacles with dynamic modulation to achieve null-space velocity control. Figure 3 demonstrates the null space velocity control strategy. We detect the closest point on the robot non-end-effector to each obstacle and enforce velocity at that point if the point is within the obstacle safety margin . The vector starts from the obstacle surface to the point , with the same direction as , and is the Jacobian matrix from the base to point . We further denote the end-effector velocity and the point velocity with Equation (53):

Figure 3.

Null-space velocity control for robot non-end-effector.

We substitute Equation (54) into with derived as follows:

To achieve a trade-off between maintaining consistency with the optimized distribution and ensuring a safe distance from the robot non-end-effector, we define the coefficients and satisfying . We weight the joint velocity vectors from Equations (60) and (62) to obtain the combined joint velocity vector as follows:

Taking and as control variables and substituting Equation (63) to , we obtain:

Due to the idempotent and hermitian nature of in Equation (55), that is, , we simplify Equation (64) as follows:

Given the expected end-effector velocity , we can utilize Equation (65) to design a feedback controller for non-end-effector adaptation. In this context, is used to track , denote the point velocity attributed to , and is used to transform the Cartesian velocity into the joint velocity. In this paper, considering the manipulator redundancy is 1, less than 3, we define the direction of the same as vector as , where is the unit vector of , and is a scalar velocity. To obtain smooth and flexible motion for obstacle avoidance, we define as in Equation (66), where is the safety margin, and denotes the distance at which the obstacle begins to affect the manipulator to construct a smoothing factor .
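Equation (66) is not reproduced in this section, so the following is only an illustrative sketch of how a smoothing factor between the safety margin and the influence distance could scale the scalar avoidance speed. The cosine ramp, the function name, and all parameter names and values are assumptions, not the paper's definition.

```python
import math


def avoidance_speed(distance, d_safe, d_influence, v_nominal):
    """Scalar speed pushing the closest link point away from an obstacle.

    Hypothetical profile: full nominal speed at or inside the safety margin,
    fading smoothly (cosine ramp) to zero at the influence distance.
    """
    if distance <= d_safe:
        return v_nominal
    if distance >= d_influence:
        return 0.0
    ratio = (distance - d_safe) / (d_influence - d_safe)
    alpha = 0.5 * (1.0 + math.cos(math.pi * ratio))  # smoothing factor in (0, 1)
    return alpha * v_nominal


# Hypothetical values in metres and metres per second.
for d in (0.005, 0.01, 0.03, 0.05, 0.08):
    print(d, avoidance_speed(d, d_safe=0.01, d_influence=0.05, v_nominal=0.1))
```

A ramp of this kind avoids a velocity jump when the closest point crosses the influence distance, which is the purpose the text attributes to the smoothing factor.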

Let denote the end effector pose obtained through the forward kinematics function ; the expected end effector pose can be derived with velocity integration:

We then express the feedback controller error as follows:

and further denote the resulting joint gradient as follows:

We derive with integration , and continue the iteration process until the error meets the stopping criterion to obtain the modulated joint positions for real-time task execution.
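The iterate-until-converged structure of such a feedback loop can be sketched on a toy planar 2-link arm. This is a simplified stand-in: it uses a square Jacobian and plain damped inverse-Jacobian steps, and it omits the distribution-preserving and null-space avoidance terms of the paper's controller. The link lengths, gain, tolerance, and function names are hypothetical.

```python
import math

L1, L2 = 0.3, 0.25  # hypothetical link lengths in metres


def fk(q):
    """Forward kinematics: end-effector position of the planar 2-link arm."""
    return [L1 * math.cos(q[0]) + L2 * math.cos(q[0] + q[1]),
            L1 * math.sin(q[0]) + L2 * math.sin(q[0] + q[1])]


def jacobian(q):
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    return [[-L1 * s1 - L2 * s12, -L2 * s12],
            [L1 * c1 + L2 * c12, L2 * c12]]


def track(q, x_target, gain=0.5, tol=1e-6, max_iter=200):
    """Iterate joint updates until the task-space error meets the criterion."""
    for iteration in range(max_iter):
        err = [t - c for t, c in zip(x_target, fk(q))]
        if math.hypot(*err) < tol:
            return q, iteration
        (a, b), (c, d) = jacobian(q)
        det = a * d - b * c
        # Joint step: gain * J^-1 * err (closed-form 2x2 inverse).
        dq = [gain * (d * err[0] - b * err[1]) / det,
              gain * (-c * err[0] + a * err[1]) / det]
        q = [qi + dqi for qi, dqi in zip(q, dq)]
    raise RuntimeError("tracking did not converge")


target = fk([0.5, 0.8])  # expected end-effector position for the next step
q_sol, iters = track([0.3, 0.6], target)
print(q_sol, iters)
```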

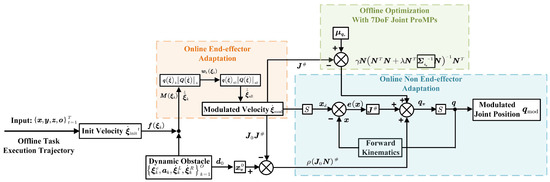

To integrate the offline and real-time robot movement adaptation methods presented in Section 3 and Section 4, we summarize the overall control framework in Figure 4.

Figure 4.

The integrated offline and real-time robot movement adaptation control diagram.

Given an offline task execution trajectory obtained from Algorithm 1, the initial velocity is computed using position differencing. In the presence of O dynamic obstacles, represented as , we derive the modulation matrix and the weight coefficient for each obstacle. Additionally, we identify the closest point on the robot’s non-end-effector links and define the corresponding vector . The avoidance velocity is then determined accordingly from Equation (66).

For online end-effector adaptation, we compute the equivalent modulation magnitude and direction for each obstacle, denoted as . These are then combined using weighted summation with , along with hyper-sphere projection , to obtain the overall modulation effect . The resulting modulated velocity serves as the desired end-effector velocity. The expected position at the next timestep is determined via velocity integration. Note that if collides, we treat it as the initial position and modulate it iteratively until safe.

For online non-end-effector adaptation, given the mean and diagonal covariance matrix from offline optimization with 7DoF Joint ProMPs, along with the null-space velocity component, Equation (69) is constructed to design the control diagram. Given the expected position , the system iterates through a control loop, minimizing the error defined in Equations (67)–(69) until the stopping criteria are met. If any joint configuration results in a collision, the nominal velocity norm is iteratively increased, and the control loop is repeated until a safe motion is achieved. The modulated joint positions are then used for real-time task execution. Furthermore, at each timestep, the initial velocity for the next step is estimated using trajectory generalization based on the current position . This process repeats iteratively until all T timesteps are completed.

5. Experimental Validation

In this section, we validate the proposed human–robot skill transfer strategy within an industrial scenario where a robot learns different skills from the human to move around the obstacle and grasp the emergency stop. We first conduct human–robot skill transfer experiments. After the robot learns the skills, we evaluate our method in different static (offline) and dynamic (real-time) environments, and compare our method to other similar movement adaptation methods. For clarity, we summarize the definitions of all the parameters employed in the experiment section in Table 1.

Table 1.

Definitions of all parameters employed in the experiment section.

5.1. Template Trajectory Generalization from Only One Human Demonstration

The Ufactory Xarm 7-DoF manipulator is selected to validate our method. A Kinect V2 camera is positioned on the side to track continuous human hand movements, while a Zed2i binocular camera is mounted overhead to capture task-related object poses. For a single obstacle scenario, the robot is taught three distinct skills: moving above, left, and right around the obstacle using the Kinect FORTH system. Each skill is demonstrated only once, with the human hand centroid trajectories recorded (see Figure 5a). The Kinect FORTH system captures real-time 6D poses of the human hand centroid and provides a colored model of the fingers, enabling precise trajectory acquisition.

Figure 5.

Template trajectory generalization from only one human demonstration. (a) Snapshots of human hand centroid tracking with the Kinect FORTH system. The first, middle, and last rows denote move above, left, and right around the obstacle, respectively. (b) The generalized template trajectory for three modes at the minimum Z-position of the robot gripper center with the same start point (symbol ‘o’) and end point (symbol ‘x’).

For each skill, the trajectory is smoothed using a Moving Average Filter (MAF), normalized to 30 timesteps, and transformed into the robot base frame. It is further converted to the table frame at the tabletop surface. Utilizing our trajectory generalization method (see Section 2.1), the trajectory is generalized with the same start and end points, without via-points, to generate the template trajectory for each skill (see Figure 5b). To account for the inherent noise in the Kinect FORTH system, the trajectories are aligned to the same start and end point, ensuring consistency for subsequent offline task optimization. A suitable start point is set, and the end point is determined based on the emergency stop 3D positions detected by YOLO [46]. The template trajectory corresponds to the minimum Z-position of the robot gripper center.
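The smoothing and normalization steps can be sketched as follows. The window size, the shrinking window at the trajectory ends, and linear interpolation for resampling are assumptions made for illustration; the paper specifies only a Moving Average Filter and normalization to 30 timesteps. The frame transformations and the start and end point alignment are not included.

```python
def moving_average(points, window=5):
    """Centered moving average over (x, y, z) samples.

    The window shrinks near both ends so the output keeps the input length.
    """
    half = window // 2
    smoothed = []
    for i in range(len(points)):
        chunk = points[max(0, i - half): i + half + 1]
        smoothed.append(tuple(sum(axis) / len(chunk) for axis in zip(*chunk)))
    return smoothed


def resample(points, n=30):
    """Linearly interpolate to n samples evenly spaced in sample index.

    Requires at least two input points.
    """
    last = len(points) - 1
    out = []
    for k in range(n):
        s = k * last / (n - 1)
        i = min(int(s), last - 1)
        frac = s - i
        out.append(tuple(a + frac * (b - a)
                         for a, b in zip(points[i], points[i + 1])))
    return out


# Hypothetical noisy hand-centroid track: 100 samples with zig-zag noise in z.
raw = [(0.01 * t, 0.0, 0.002 * (t % 2)) for t in range(100)]
template = resample(moving_average(raw), n=30)
print(len(template), template[0], template[-1])
```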

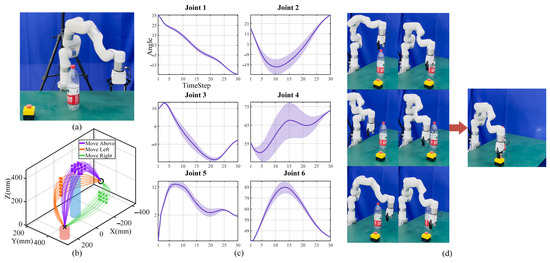

5.2. Offline Movement Adaptation

Given the template trajectory, we validate the offline task-constrained adaptation algorithm (Algorithm 1) under a static scene with the presence of a new big bottle, as shown in Figure 6a. We randomly place the emergency stop and the bottle with 3D positions detected by YOLO. For each skill, the robot first generates collision-free end-effector trajectory sets with via-point trajectory generalization (see Section 2.1). We select the feature via-points corresponding to the max norm of shape modulation and set discrete via-points with equal spacing to construct the initial trajectory sets. To be specific, for the above skill, the X- and Y-positions are within the radius = 39 mm around the obstacle with Z within ±100 mm around the template trajectory via-points; for the left and right skill, we fix X and set the Y-, Z-positions distributed within ±100 mm around. Meanwhile, the X-, Y-, and Z-positions are unioned based on the limited Y- and Z-ranges for task execution. We check the collisions and fine-tune the via-points with the threshold = 30 mm; the resultant collision-free trajectory sets are shown in Figure 6b. We further obtain collision-free joint sets with Jacobian IK and set 35 basis functions to encode the 7DoF joint distributions with ProMPs, as shown in Figure 6c.

Figure 6.

Offline movement adaptation experiment with a new big bottle. (a) Experimental setup. (b) End-effector collision-free trajectory sets for three skills with via-point trajectory generalization. (c) Joint ProMPs distributions given discrete collision-free joint sets (taking the move above skill as an example). Solid lines denote mean values with shaded areas denoting standard deviations. (d) Robot task execution snapshots: the first, middle, and last rows denote move above, left, and right around the obstacle.

The task constraints include several critical factors: (1) Joint Range Limit: Ensures each joint operates within its specified range to prevent mechanical damage or errors expressed as follows: , where k denotes the kth joint freedom, and and are the minimum and maximum of the joint positions from demonstration, respectively.

(2) Waypoint Constraints: Guarantee precise start and end positions in task space. The forward kinematics (FK) function T maps joint configurations to end-effector positions, with a precision requirement of 1mm: , where are the desired end-effector positions.

(3) Hyperplane Constraints: Define spatial boundaries for the robot motion. For instance, limiting the Z-position to at most 575 mm is expressed by the following: , where . Similarly, all constraints of Y and Z are defined as .

(4) Repeller Constraints: Prevent collisions between robot links 1 to 6 and obstacles. For example, a safe radius is enforced to avoid contact with a water bottle for the robot link 6: , where denotes the minimum distance between the robot link 6 and obstacles, and represents the FK function of the robot link 6.

For all constraints, we set , considering the Gaussian nature of 2× standard deviation. To speed up convergence, we set for LBFGS and check the convergence conditions after each iteration.
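To make the reference to two standard deviations concrete, the sketch below evaluates the probability that a Gaussian-distributed joint position lies inside a range, as in the joint range limit constraint. The threshold value elided above is not reconstructed; the function name and the numbers are hypothetical, and the example only shows that the band of the mean plus or minus two standard deviations covers roughly 95.4% of a Gaussian.

```python
import math


def prob_within(mean, std, lower, upper):
    """P(lower <= q <= upper) for q ~ N(mean, std**2)."""
    def cdf(x):
        return 0.5 * (1.0 + math.erf((x - mean) / (std * math.sqrt(2.0))))
    return cdf(upper) - cdf(lower)


# Probability mass inside mean +/- 2 standard deviations.
p_two_sigma = prob_within(0.0, 1.0, -2.0, 2.0)
print(round(p_two_sigma, 4))

# Hypothetical joint: mean 0.3 rad, std 0.05 rad, limits [0.1, 0.45] rad.
print(prob_within(0.3, 0.05, 0.1, 0.45))
```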

We determine the feature timesteps violating task constraints and optimize only those timesteps. In this experiment, we find that no timestep of any skill violates the task constraints; that is, the optimized ProMPs are the same as the initial ProMPs. The robot thus directly utilizes the generalized trajectory for task execution. During task execution, the robot selects randomly from three skills with via-points sampled from the original via-points range. We repeat the experiment 100 times; robot task execution snapshots are shown in Figure 6d. We run the offline movement adaptation algorithm for the three skills in parallel. The total time is 1.64332 s with a 100% task success rate. The results demonstrate that the robot can generalize smooth trajectories and encode continuous ProMPs distributions with discrete feature via-points. During task execution, the robot exhibits flexibility with different skills to avoid obstacles.

During the above experiment, for all timesteps, we find that the probability of satisfying the task constraints always equals 1. The reason is that the waypoint constraints are ensured by template trajectory generalization with the same start and end point; the hyperplane constraints, namely, the Y and Z limits for the end-effector, are ensured by the feature via-points range for trajectory generalization; the repeller constraints for robot link6 to link1 are fulfilled given the bottle height, that is, the robot link6 to link1 will not collide at any timestep. Meanwhile, for the above and left skills, if we generalize the collision-free end-effector trajectory sets, the robot link6 to link1 will not collide given the robot configuration.

As a result, to test the performance of ProMPs optimization and adaptation, we elevate the obstacle height to create a danger scene (see Figure 7a) and constrain the robot to move right around the obstacle. We set the feature via-points range so that some collision-free end-effector trajectory sets will clearly collide with the bottle at other robot links (see Figure 7b). We define the same task constraints mentioned above; the difference is that there are timesteps violating the repeller constraints where the robot link6 and link5 may collide. We generalize the collision-free end-effector trajectory sets with 40 discrete feature via-points by fixing the X- and Y-positions and descending the Z-positions, and encode the original 7DoF ProMPs distributions . For the repeller constraints, we find the minimum distance from three segments in four directions and perform moment-based distribution estimation of the danger distance (see Section 3.2.4). Utilizing double-loop LBFGS and EMM optimization in Algorithm 1, we obtain the optimized ProMPs distributions , satisfying task constraints, as shown in Figure 7c. The joint limit range and initial joint value are passed to the Jacobian IK to count the feature timesteps for which IK succeeds (the succeed number). To determine the threshold, we select the same via-points from the original range and record the succeed number and the obstacle avoidance result (success or failure); the corresponding relation is shown in Figure 7d. During task execution, we choose 100 uniform via-points to test the optimized ProMPs; that is, if the succeed number of a generalized trajectory exceeds the threshold, the robot runs the trajectory; otherwise, the robot stops until a trajectory succeeds. The task execution result is shown in Figure 7e,f.

Figure 7.

Offline movement adaptation experiment under a danger scene with the elevated obstacle. (a) Experimental setup. (b) Evident collision for other robot links when generalizing collision-free end-effector trajectory with certain via-points. (c) Optimized ProMPs distributions under nonlinear task constraints with LBFGS and EMM. We show joints 2 and 4, whose distributions change most visibly; the lines and shaded areas denote the original and optimized means and standard deviations. (d) Threshold determination based on the relationship between via-point Z-position and obstacle avoidance result. The succeed number threshold is denoted with a blue solid circle. Blue and red areas denote success and failure results. (e) Generalized task execution trajectory sets with 100 discrete via-points; red and blue lines and areas denote success and failure results. (f) Robot task execution snapshots with the critical trajectory between success and failure. The first and last rows show different motion views.

We run Algorithm 1 with a total time of 126.45 s. A total of 8 out of 30 timesteps violate the task constraints, and the succeed number threshold is 7 given the optimized ProMPs distributions. The robot executes 39 trajectories from 100 feature via-points with a 100% success rate. The results illustrate that the robot can optimize ProMPs distributions under integrated nonlinear task constraints analytically. Because the method operates on probability distributions, specific regions are excluded from the original ProMPs at the cost of losing some distributions that satisfy the task constraints. Consequently, we determine the threshold with the same sparse and discrete via-points during trajectory generalization. The threshold and optimized ProMPs are then directly used for robot task execution by sampling from the continuous via-points range with Gaussian distributions. In short, we provide a robot with the ability to perform tasks under multiple nonlinear constraints in a generic way; that is, there is no need to verify that the task constraints are satisfied each time a new trajectory is generalized.

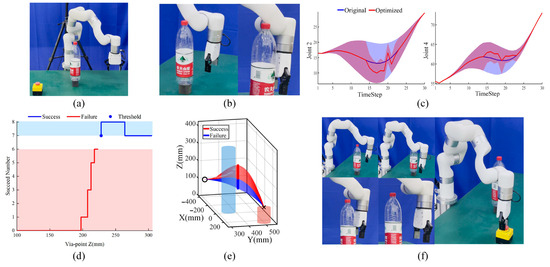

5.3. Real-Time Whole-Body Movement Adaptation

Following offline robot movement adaptation under static scenarios, we further evaluate our real-time whole-body movement adaptation method presented in Section 4 under the dynamic environment manifested as multiple stationary or moving obstacles, new target points, etc. Specifically, we first perform Algorithm 1 with the presence of a single obstacle (blue painting) and the emergency stop. The robot then randomly selects from three skills, namely, moving above, left, and right around the obstacle to generate the initial task execution trajectory. During task execution, the human gets involved in the process by suddenly moving a big bottle towards the gripper (see Figure 8b at t = 1.8 s), changing the obstacle and emergency stop positions in real-time (see Figure 8b at t = 6 s, 7.2 s, and 10.0 s, etc.).

Figure 8.

Robot end-effector real-time movement adaptation experiments. (a) Typical modulation cases for specific timesteps. The left, middle, and right columns denote the robot initial positions, modulation process, and modulated positions. We utilize ellipsoids to envelop the task objects and robot gripper. (b) One episode execution snapshots when the robot selects the skill of moving above around the obstacle with 0.2 s intervals between adjacent timesteps.

We utilize YOLO to detect the object 3D positions and velocities at = 0.2 s intervals and envelop each object with an ellipsoid-shaped distance function (see Equation (36)). We treat the emergency stop as the target object with other objects considered obstacles. To formulate , we set the curvature parameter and derive the center , linear velocities , and angular velocities from YOLO. We obtain the normal vector from Equation (39) and formulate the basis matrix and the diagonal eigenvalue matrix from Equations (40) and (42). In this paper, we model the robot gripper with the same ellipsoid-function to derive equivalent and from two ellipsoids. We set a 10 mm margin for each direction to compute the corresponding safety magnitude , define the reactivity parameter , and remedy the tail-effect via to formulate Equation (43). Meanwhile, we disregard the impact of obstacles when . Given the initial task execution trajectory as input, the robot performs whole-body adaptation for real-time modulation (see Figure 4). Concerning end-effector adaptation, we derive the modulation matrix and weight coefficient for each dynamic obstacle with weighted magnitude and directions computed from Equations (46)–(52) to derive the modulated velocity . Regarding the non-end-effector adaptation, we detect the closest point with defined to perform null-space velocity control (see Section 4.2).

We conduct 10 dynamic experiments for each of the three skills by randomly placing and changing the objects, including obstacles and emergency stop, in real-time to evaluate the robot movement adaptation competence; the results are shown in Figure 8. Considering space restrictions, we select typical modulation cases for specific timesteps, as shown in Figure 8a, and choose one episode of moving above around the obstacle with snapshots, as shown in Figure 8b.

During the above experiments, the detected minimum distances for robot link1 to link6 are always larger than the safety margin = 10 mm (see Figure 3), so null-space velocity control for the robot non-end-effector is not required, and we only show end-effector modulation in Figure 8a. The left, middle, and right columns denote the robot initial positions, modulation process, and modulated positions, respectively.

In the first row, the human suddenly moves a big bottle towards the robot end-effector with relative X and Y linear velocities , and the distance functions for the big bottle and blue painting are derived. As , we ignore the influence of the blue painting, and the robot only modulates in response to the big bottle. We define the weight term to smooth the distance influence and obtain the weighted relative velocity from Equation (45); the direction coincides with the normal vector . Given the initial velocity , we compute the diagonal eigenvalue matrix and the basis matrix to formulate the modulation matrix . The modulated velocity is then computed from Equation (46) with a single obstacle. We apply velocity integration to obtain the expected position for the next timestep, generalize new trajectory sets (see Section 2.1) at the current position, and compute the next velocity with position differencing, treating this as the initial velocity for subsequent modulation. After modulation, the robot shows an evident backward movement in the right column.

In the second row, the initial robot position after the last timestep modulation will collide with the big bottle, and the robot is subject to the combined effects of multiple obstacles. Distinct from [31], in this paper, if the initial robot position collides, we iteratively modulate the positions until they are collision-free. Specifically, to ensure the impenetrability of obstacles, we set the big bottle and blue painting distance function with the distance weights derived from Equation (44) as . Given the normal vector , we iteratively compute the modulation matrix for each obstacle and derive the weighted magnitude and direction from Equations (47)–(52) to obtain the modulated velocity until safe. New trajectory sets are generalized and the next velocity is computed. Note that if the emergency stop position changes, the robot can adapt in real-time with our trajectory generalization method. Figure 8b shows robot dynamic modulation snapshots with multiple dynamic obstacles and changing targets (the emergency stop). The total running time is 16.8 s with 84 timesteps, and the average modulation time for a single timestep is 0.1356 s, which is below the 0.2 s timestep interval. The results indicate that the robot can adapt in real-time under dynamic environments.

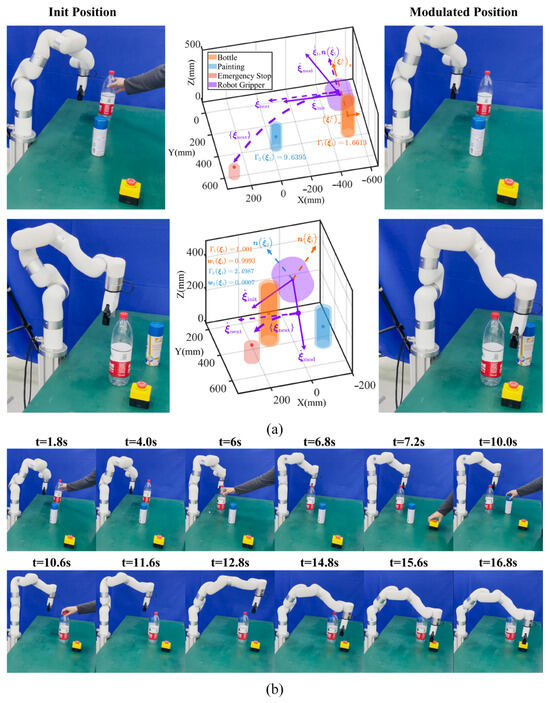

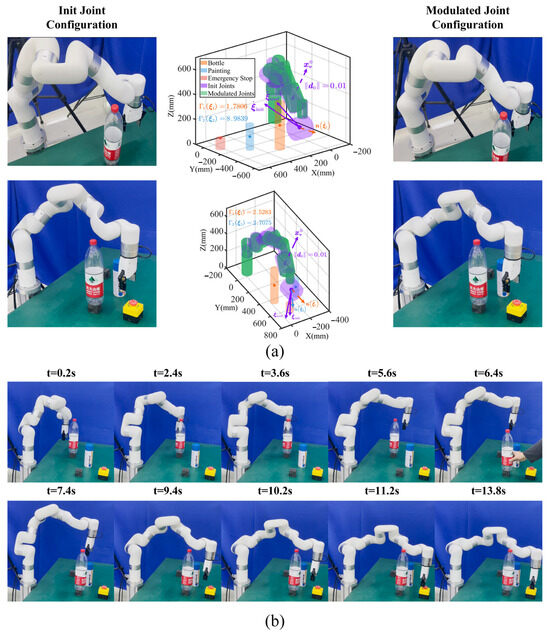

For the above end-effector modulation experiments, considering the minimum distances between the robot non-end-effector and obstacles are always larger than the safety margin , the non-end-effector adaptation proposed in Section 4.2 is not involved. Consequently, to further verify the mutual performance of end-effector and non-end-effector adaptation, we elevate the obstacle height and design a task scenario where the robot non-end-effector will collide with the big bottle at the beginning of task execution (see the first row of Figure 9a), and after the robot moves around, the human places the big bottle to a new position where the robot non-end-effector will collide when it tends to grasp the emergency stop (see the last row of Figure 9a).

Figure 9.

Robot whole-body online movement adaptation experiments. (a) Typical modulation cases for specific timesteps. The left, middle, and right columns denote the robot initial joint configurations, modulation process, and modulated joint configurations. We utilize cylinders to envelop the task objects and robot links; the initial and modulated joints are colored in purple and green, respectively. (b) Task execution snapshots when the robot selects the skill of moving above around the obstacle with 0.2 s intervals between adjacent timesteps.

The robot selects the move above skill to generate the initial task trajectory; the typical modulation process is shown in Figure 9a with task execution snapshots shown in Figure 9b. The left, middle, and right columns in Figure 9a denote the initial joint configurations, the modulation process, and the modulated joint configurations, respectively. To achieve real-time efficiency, we utilize cylinders to envelop obstacles and robot links with minimum distance detected by the Flexible Collision Library (FCL). To balance offline-optimized Joint ProMPs and null-space velocity control, we set to formulate Equation (65), and we set to formulate Equation (66).

The first row illustrates the robot whole-body modulation at the beginning. Given the distance function , the robot is modulated under the big bottle normal vector with the expected end-effector modulated velocity . We detect the collision at the current position. To ensure impenetrability of the obstacles, we set and iteratively apply null-space velocity control until safe. To be specific, we treat the robot collision link center as the control point and derive the Jacobian matrix at . We compute the retreat velocity from Equation (66) and obtain the enforced velocity . We then utilize the control loop defined in Equation (69) to derive the modulated joint configuration iteratively. Note that if the modulated joint configurations still collide, we increase the order of magnitude of until safe. The middle column shows the initial and modulated joint configurations colored in purple and green, and the right column demonstrates that the robot non-end-effector heads away from the big bottle while keeping the expected end-effector positions.

The second row shows the robot end-effector modulation with the big bottle and blue painting ; the robot non-end-effector will collide at this position with . We define the enforced velocity to obtain the modulated joint configurations iteratively until safe, and the robot tends to move away from the big bottle when approaching the emergency stop to grasp. Figure 9b illustrates robot task execution snapshots; the total running time is 13.8 s with 69 timesteps, and the average modulation time for a single timestep is 0.1757 s, which stays below the 0.2 s timestep interval. The results demonstrate that our method can simultaneously perform end-effector and non-end-effector modulation in real-time, allowing the robot to adapt joint configurations in confined spaces while keeping its expected end-effector poses.

5.4. Comparative Experiments

In this paper, we propose a combined strategy for robot offline and real-time movement adaptation based on human–robot skill transfer. To validate its performance, we conduct several comparative experiments for the methods proposed in the strategy.

5.4.1. Offline Movement Adaptation

Concerning offline movement adaptation, KMPs were proposed to address the limitation of explicitly defining the basis functions in ProMPs. The KMPs method was first introduced in [11] by employing GMM/GMR to encode the demonstrated distribution and then deriving the mean and covariance of each timestep under via-points based on dual transformation and KL divergence. The most notable contribution was the derivation of a kernel matrix that depends only on each timestep, eliminating the need to explicitly define the basis functions and reducing the dimensionality of the parameters. Furthermore, they extended KMPs to incorporate linear constraints in [22] (LC-KMPs), allowing for the rapid acquisition of optimal Lagrange multipliers via quadratic programming (QP). However, this method can only be applied to linear constraints in Cartesian space. In [23], they further extended LC-KMPs to incorporate nonlinear constraints (EKMPs) by using Taylor expansion to convert the problem into a QP problem and solve the optimal Lagrange multipliers. This approach can handle various nonlinear constraints in Joint space. To account for all task constraints, especially including collisions between the robot’s other links and obstacles, optimization needs to be performed in Joint space. As a result, to further compare the performance, we select EKMPs from [23] and the ProMPs-based method from [21], which is similar to ours.

We use the danger scene (see Figure 7a) with the same task constraints for the comparison. For the three methods, we provide the same initial collision-free trajectory sets of the robot end-effector and obtain the corresponding Joint space distributions with Jacobian IK. Some joint distributions may lead to collisions between robot links 1 to 6 and obstacles. The goal is to optimize these distributions to generate new joint configurations for task execution. We then execute the task 100 times and evaluate the methods on the following metrics: number of optimization parameters, number of constraints, total iterations, optimization time, distribution loss, and task success rate. The results are shown in Table 2.

Table 2.

Robot offline movement adaptation comparison. We set the same task constraints in the danger scene (Figure 7a). Given the initial collision-free end-effector trajectory sets, we obtain the corresponding Joint space distributions using Jacobian IK. Some Joint distributions may lead to collisions for robot links 1 to 6, and the goal is to optimize the distributions for task execution, which is then performed 100 times.

In [21], the number of optimization parameters mainly includes the weights and covariance matrices of the 35 basis functions in ProMPs. Due to the use of Cholesky decomposition, a total of parameters needs optimization. For 30 timesteps, each timestep involves 7 joint range constraints, 4 hyperplane constraints, 6 repeller constraints (from robot links 1 to 6), and waypoint constraints for the start and end points, resulting in a total of constraints.

In EKMPs [23], the optimization targets the Lagrange multipliers that define the task constraints, with a quadratic programming (QP) approach employed. As a result, the number of optimization variables is equal to the number of task constraints, which is 512. Using the optimal multipliers, a kernel matrix is constructed to derive the mean and covariance for each timestep.
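As a quick consistency check, the per-timestep counts listed for [21] reproduce the 512 task constraints quoted for EKMPs. This assumes that every one of the 30 timesteps carries all 17 inequality constraints and that the start and end waypoints contribute one constraint each:

```latex
\underbrace{30}_{\text{timesteps}} \times
\bigl(\underbrace{7}_{\text{joint range}}
    + \underbrace{4}_{\text{hyperplane}}
    + \underbrace{6}_{\text{repeller}}\bigr)
+ \underbrace{2}_{\text{waypoints}}
= 510 + 2 = 512
```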

In our method, before optimization, the overall ProMP distributions are decoupled into the mean and standard deviation for each timestep. We then identify timesteps that may violate the task constraints based on the mean and standard deviation at each timestep. We find that there are 8 timesteps violating the task constraints, and the waypoint constraints at the start and end points are already satisfied, resulting in constraints. In addition to optimizing the mean and covariance of ProMPs, we also optimize the mean and standard deviation of the joint positions for the 8 timesteps with parameters in total.

During optimization, we discover that the algorithm in [21] integrates all nonlinear task constraints with for each timestep. This integration necessitates the evaluation of symmetric positive definiteness for the covariance matrix during optimization, resulting in the most iterations and the longest optimization time. EKMPs [23], which involve large matrix computations, also encounter numerical instability. Specifically, as the number of timesteps and task constraints increases, the matrix size grows, and many matrix parameters become unstable during inversion. Some elements may also become redundant, which is a characteristic of the KMP formulation. Although QP optimization reduces the number of iterations, the extensive matrix computations required to calculate the mean and covariance at each timestep still lead to longer optimization times.

In contrast, our method essentially decouples the ProMP parameters to each timestep and identifies the feature timesteps that violate task constraints, optimizing the distribution for those specific timesteps separately. Notably, after optimizing the mean and standard deviation, the optimization of the mean and covariance of ProMPs becomes linear in terms of and , which avoids introducing numerical instability. Here, we do not rely on ProMPs’ inherent ability to generate new parameters. Instead, we address the issue of ProMPs’ limited extrapolation ability through optimization. However, since our method utilizes TensorFlow’s automatic differentiation rather than a QP formulation, the total number of iterations is slightly higher than that of EKMPs. Nonetheless, the optimization time in our method is significantly reduced due to improved numerical stability.
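The linearity noted above can be seen from the standard ProMP marginal at a single timestep. The sketch below uses generic notation for one joint (weight mean, weight covariance, basis vector at timestep t, and observation noise); these symbols are assumptions for illustration and may differ from the notation of the paper's earlier equations:

```latex
\begin{aligned}
\mathbf{w} &\sim \mathcal{N}(\boldsymbol{\mu}_w, \boldsymbol{\Sigma}_w),
\qquad y_t = \boldsymbol{\phi}_t^{\top}\mathbf{w} + \epsilon_y,
\qquad \epsilon_y \sim \mathcal{N}(0, \sigma_y^{2}),\\
\mathbb{E}[y_t] &= \boldsymbol{\phi}_t^{\top}\boldsymbol{\mu}_w,\\
\operatorname{Var}[y_t] &= \boldsymbol{\phi}_t^{\top}\boldsymbol{\Sigma}_w\,\boldsymbol{\phi}_t + \sigma_y^{2}.
\end{aligned}
```

Once a target mean and standard deviation have been fixed for a timestep, matching them is therefore a linear condition on the entries of the weight mean and a linear condition on the entries of the weight covariance.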

Furthermore, we evaluate the generalization capability of each algorithm. In this context, generalization refers to the ability to maintain the initial distribution when encountering different task constraints and to achieve a high success rate. Specifically, if the optimized distribution closely matches the original distribution and achieves a high success rate, the method is considered to have strong generalization. The results show that [21] retains more of the original distribution compared to EKMPs [23]. However, in [21], due to the use of the unscented transform, which is sensitive to the coefficients, the estimates for and under task constraints are inaccurate. This leads to unfiltered joint distributions that violate the task constraints, resulting in task failures. Similarly, the first-order Taylor expansion used in EKMPs does not separate out some failed distributions, leading to a lower task success rate.

Our method, however, introduces a threshold after obtaining the optimized joint distributions to mitigate the distribution loss caused by the probabilistic characteristics. Specifically, the robot first generates task trajectories with via-point generalization, obtains the corresponding inverse kinematics solutions, and then counts the solutions that lie within the optimized joint range. If this number exceeds the threshold, the robot executes the task. This approach reduces the distribution loss and improves success rates. The threshold is determined by sampling the via-points and recording the task execution results together with the number of inverse solutions. However, due to the discrete nature of via-points, when the threshold intersects with continuous space, the optimization algorithm can indicate task success while the robot still fails. This issue requires more continuous via-point ranges for accurate task execution.
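The threshold test described above can be sketched as follows. This is a minimal illustration under stated assumptions: the optimized joint range is represented as per-joint lower and upper bounds (for example, a mean plus or minus a multiple of the standard deviation), and the function names and the strict comparison are hypothetical choices, not the paper's implementation.

```python
import numpy as np


def count_solutions_in_range(ik_solutions, q_low, q_high):
    """Count IK solutions whose joints all lie within [q_low, q_high].

    ik_solutions: array of shape (n_solutions, n_joints).
    q_low, q_high: bounds broadcastable against ik_solutions.
    """
    q = np.atleast_2d(ik_solutions)
    inside = np.all((q >= q_low) & (q <= q_high), axis=1)
    return int(inside.sum())


def should_execute(ik_solutions, q_low, q_high, threshold):
    """Execute only if the number of in-range solutions exceeds the threshold."""
    return count_solutions_in_range(ik_solutions, q_low, q_high) > threshold
```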

Overall, despite the higher number of optimization parameters, our method achieves reduced optimization time and higher success rates by decoupling the mean and Gaussian distribution for each timestep and employing a dual optimization process for the ProMP parameters. These results demonstrate the high generalization capability of our method. Our method exhibits efficiency at the cost of increasing optimization parameters. The optimization time cannot fulfill real-time needs; however, the robot is equipped with flexible skills incrementally augmented from human demonstrations before online task execution.

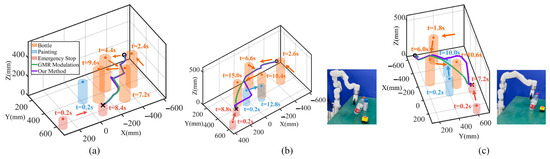

5.4.2. Real-Time Movement Adaptation

Concerning robot real-time movement adaptation based on imitation learning (IL), ref. [47] first proposes a method for learning stable nonlinear dynamical systems (SEDS) with GMM-GMR. They use a GMM to learn the relation between Cartesian positions and velocities and, given the current position, use GMR to generate the velocity for task execution. The method is further developed in [30,31,32] to achieve real-time movement adaptation for the robot end-effector. In this paper, we compare our method to theirs (SEDS-GMR modulation) under the same dynamic environment (see Figure 8b). To be specific, the robot randomly generates the initial task execution trajectory from three skills (moving above, left, and right around obstacles). During task execution, the human gets involved by placing and changing objects in real-time. Given the initial task execution trajectories, we choose 6 Gaussian kernels and train the optimal GMM parameters with 1000 iterations offline to ensure accurate trajectory reproduction via GMR. We conduct 10 experiments for each skill, with the qualitative and quantitative results shown in Figure 10 and Table 3. Figure 10a shows the modulation of the 3D positions when the robot chooses to move right around the obstacle. We find that the modulation is consistent for both methods if the initial 3D positions before modulation do not collide with the obstacle. However, if the initial positions collide (see Figure 10b,c), SEDS-GMR modulation does not ensure that the process is collision-free despite the influence of the modulation matrix.
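For readers unfamiliar with the baseline, the GMR step (conditioning a joint position-velocity GMM on the current position) can be sketched as below. This is the generic Gaussian mixture regression formula only; it omits the stability constraints that SEDS imposes during training, and the function name and argument layout are assumptions made for illustration.

```python
import numpy as np


def gmr_velocity(x, priors, means, covs):
    """Expected velocity given position x under a GMM over [position, velocity].

    priors: (K,) mixture weights; means: (K, 2d); covs: (K, 2d, 2d).
    """
    d = x.shape[0]
    log_w, cond_means = [], []
    for pi_k, mu_k, S_k in zip(priors, means, covs):
        mu_x, mu_v = mu_k[:d], mu_k[d:]
        S_xx, S_vx = S_k[:d, :d], S_k[d:, :d]
        diff = x - mu_x
        sol = np.linalg.solve(S_xx, diff)
        # Log of prior times the Gaussian density of x under component k.
        log_det = np.linalg.slogdet(2.0 * np.pi * S_xx)[1]
        log_w.append(np.log(pi_k) - 0.5 * diff @ sol - 0.5 * log_det)
        # Conditional mean of the velocity given x for component k.
        cond_means.append(mu_v + S_vx @ sol)
    w = np.exp(np.array(log_w) - np.max(log_w))
    w /= w.sum()
    return w @ np.array(cond_means)
```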

Figure 10.

Robot end-effector modulation comparison between SEDS-GMR and our method. Green and purple lines denote SEDS-GMR and our method, respectively. (a) Modulated 3D trajectories when the robot moves right. Arrows illustrate obstacle motion trends, with cylinders denoting obstacle positions at different times; for instance, the emergency stop (the red cylinder) is moved from its initial position at t = 0.2 s to a new position at t = 8.4 s. (b) End-effector trajectories when the robot moves left. (c) End-effector trajectories when the robot moves above. The snapshots show collision cases for SEDS-GMR during task execution.

Table 3.

Robot end-effector modulation comparison.

In contrast, our method iteratively performs modulation until collision-free; that is, if the modulated positions would collide, we treat them as initial positions and modulate them again until safe. Note that our method may fail in specific dangerous cases: if many modulation iterations are needed, a collision can occur during the motion from the current position to the modulated position. However, in most cases, our method achieves a high success rate.
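A single modulation step of the kind both methods rely on can be sketched in its classical form for one spherical obstacle. This is an assumed textbook formulation (distance function equal to 1 on the surface, normal velocity scaled down and tangential velocity scaled up near the obstacle), not the paper's DSM equations; the iterative re-modulation described above is not reproduced, and all names are hypothetical.

```python
import numpy as np


def modulate_velocity(x, v, center, radius):
    """Reshape velocity v at position x around a sphere (center, radius).

    Uses gamma = ||x - center||^2 / radius^2, so gamma == 1 on the surface.
    """
    rel = x - center
    gamma = (rel @ rel) / radius**2
    n = rel / np.linalg.norm(rel)  # outward unit normal
    P_n = np.outer(n, n)           # projector onto the normal direction
    P_t = np.eye(x.shape[0]) - P_n  # projector onto the tangent plane
    # Normal component shrinks to zero at the surface; tangential one grows.
    M = (1.0 - 1.0 / gamma) * P_n + (1.0 + 1.0 / gamma) * P_t
    return M @ v
```

On the obstacle surface the normal component of the modulated velocity vanishes, which is what makes the boundary impenetrable in this formulation; far from the obstacle the modulation matrix approaches the identity and the original velocity is recovered.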

Table 3 shows several benchmarks for both methods. Our method exhibits a higher success rate. In addition, since SEDS-GMR modulation requires obtaining precise GMM parameters before task execution, its offline training time is longer. Our method generalizes the trajectories in real-time without training parameters (see Section 2.1) and computes the velocities via position differencing. Although our method iterates the modulation when encountering collisions, resulting in a relatively longer time for single-step modulation, it still meets real-time requirements. Moreover, our method outperforms SEDS-GMR modulation in total task execution time, requiring fewer convergence timesteps and exhibiting a lower collision rate. The final position error reflects how accurately the modulation reaches the desired end-effector position while avoiding obstacles. Both methods achieve comparable final position accuracy, confirming that our approach maintains accuracy while ensuring obstacle avoidance. The results indicate that our method can complete real-time end-effector modulation while ensuring safety, and quickly generalize trajectories preserving human skill characteristics.

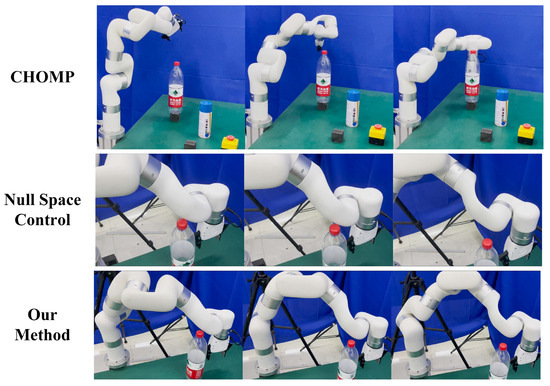

The aforementioned comparison experiments with SEDS-GMR only consider the robot end-effector, assuming that the other robot links will not collide during task execution. To further evaluate the robot whole-body movement adaptation performance, we conduct comparison experiments under the scene in Figure 9. We select a commonly used trajectory planner, Covariant Hamiltonian Optimization for Motion Planning (CHOMP), which iteratively optimizes a cost function including trajectory smoothness and collision cost; in addition, a null space velocity control method [36] similar to ours is included.

As in Figure 9, we obtain the modulated robot end-effector trajectory with 69 timesteps. For all methods, we set the same expected 3D positions at each timestep and test the robot non-end-effector modulation performance. We repeat the experiment 30 times, with the results shown in Figure 11 and Table 4. The first row of Figure 11 shows that CHOMP may occasionally generate erratic and random joint configurations when collisions are detected. Additionally, it uses unnecessary space to reach the desired positions, leading to redundant planning. The null space control method in the second row employs a fixed retreat velocity, leading to collisions when the velocity is insufficient to maneuver the robot non-end-effector away from the obstacles. In contrast, our method iteratively employs null space velocity control, gradually increasing the retreat velocity until collision-free to ensure safety, as shown in the last row.

Figure 11.

Robot non-end-effector modulation comparison experiments. The first, middle, and last rows denote task execution snapshots of CHOMP, null space control [36], and our method, respectively.

Table 4.

Robot non-end-effector modulation comparison.