Abstract

Farmland changes have a profound impact on agricultural ecosystems and global food security, making the timely and accurate detection of these changes crucial. Remote sensing image change detection provides an effective tool for monitoring farmland dynamics, but existing methods often struggle with high-resolution images due to complex scenes and insufficient multi-scale information capture, particularly in terms of missed detections. Missed detections can lead to underestimating land changes, which affects key areas such as resource allocation, agricultural decision-making, and environmental management. Traditional CNN-based models are limited in extracting global contextual information. To address this, we propose a CNN-Transformer-based Multi-Scale Attention Siamese Network (MT-SiamNet), with a focus on reducing missed detections. The model first extracts multi-scale local features using a CNN, then aggregates global contextual information through a Transformer module, and incorporates an attention mechanism to increase focus on key change areas, thereby effectively reducing missed detections. Experimental results demonstrate that MT-SiamNet achieves superior performance across multiple change detection datasets. Specifically, our method achieves an F1 score of 65.48% on the HRSCD dataset and 75.02% on the CLCD dataset, significantly reducing missed detections and improving the reliability of farmland change detection, thereby providing strong support for agricultural decision-making and environmental management.

1. Introduction

Farmland plays a critical role in global food security and sustainable development [1,2]. Monitoring changes in farmland—such as expansion, contraction, or shifts in land use—is essential for effective land management, resource allocation, and environmental planning [3]. Consequently, detecting changes in farmland is significant for agricultural and environmental research, providing insights that can inform sustainable practices and policy decisions [4].

Given the extensive distribution of arable land, manual field surveys to track changes are often time-consuming and labor-intensive [5]. In this context, remote sensing image change detection has emerged as a crucial tool for monitoring environmental changes [6] and analyzing land cover alterations [7]. Among various change detection techniques, bi-temporal change detection stands out due to its simplicity and effectiveness, as it identifies areas of significant change between two images captured at different times [8]. This method offers valuable information for decision-making in urban planning, agriculture, forestry, and natural resource management. However, despite advancements in change detection techniques for large-scale, high-resolution remote sensing images, challenges persist. Factors such as complex textures, seasonal variations, climate changes, and evolving demands continue to complicate the analysis of remote sensing data, making bi-temporal change detection one of the most demanding tasks in this field [9,10].

In recent years, the increasing accessibility of high-resolution satellite imagery, coupled with significant advancements in image processing algorithms, has led to a burgeoning interest in bi-temporal change detection among researchers and practitioners alike. Over the past few decades, a variety of optical remote sensing image change detection methodologies have been developed to address the inherent challenges in this field. For instance, Huang et al. [11] introduced the Morphological Building Index (MBI), which utilizes a series of morphological operations to automatically detect buildings in high-resolution imagery by capturing the intrinsic spectral characteristics of these structures, including brightness, contrast, and size. Similarly, Johnson et al. [12] employed Change Vector Analysis (CVA) and Principal Component Analysis (PCA) to facilitate land cover detection using multispectral data. Additionally, Nielsen [13] and Doxani [14] explored multivariate change detection techniques, allowing for the compact representation and rapid extraction of change features. Despite the efficacy of traditional change detection methods utilizing handcrafted features in relatively simple scenes, they frequently encounter challenges in more complex environments [15].

The rapid development of artificial intelligence (AI) technologies and remote sensing platforms has propelled deep learning-based algorithms to the forefront, where they now surpass traditional methods in numerous applications. This progress is largely attributed to their powerful feature learning capabilities, adaptability, and stability. Currently, Convolutional Neural Networks (CNNs) and Transformers are the primary methods used for extracting discriminative features from data [16,17]. CNNs are widely applied across computer vision tasks, and their core strength lies in capturing local patterns and spatial hierarchies through local receptive fields. Notable models such as U-Net [18], DeepLab [19], and ResNet [20] exemplify the efficacy of CNNs in learning rich feature representations directly from raw data, making them particularly adept at processing two-dimensional images.

However, the inherent constraint of CNNs lies in their local receptive fields, which limits their capability to capture long-range dependencies and global information. This limitation becomes particularly pronounced in tasks that necessitate an understanding of the global context, such as sequence modeling or establishing global associations within images. Consequently, this shortcoming may hinder their performance in scenarios where the extraction of global features is critical.

Conversely, Transformers have gained significant traction as a robust architecture for modeling sequential data [21,22]. Originally developed for natural language processing tasks, their versatility has extended into a wide range of domains, including image processing [23] and time-series analysis [24]. Transformers have demonstrated remarkable performance in various tasks such as image classification [25], segmentation [26], object detection [27], and image captioning [28]. Unlike Convolutional Neural Networks (CNNs), which rely on local receptive fields, Transformers employ a self-attention mechanism that captures global dependencies across the entire input sequence. This ability to model long-range relationships enables them to effectively handle tasks that require contextual understanding and interactions between distant elements in the data. Consequently, Transformers are particularly advantageous for applications involving complex spatial and temporal dynamics, making them well suited for change detection in remote sensing and other fields requiring global feature representation.

In remote sensing images, objects frequently display varying scales and shapes, which complicates the process of single-scale feature extraction in capturing their complex characteristics [29]. For example, the same object, such as an airplane, may appear at considerably different scales due to factors such as the type of imaging sensor, variations in flight altitudes, or changes in viewing angles, leading to substantial size discrepancies across images. Single-scale feature extraction techniques, which operate at a fixed resolution or scale, are often inadequate in capturing the full spectrum of information across these varying scales. Therefore, the use of multi-scale feature extraction methods is advantageous, as these approaches can effectively integrate the spatial structures and semantic information of objects across different scales [30]. This multi-scale strategy notably improves the accuracy and robustness of tasks such as object classification, target detection, and image segmentation.

Furthermore, the attention mechanism plays a pivotal role in the processing of remote sensing images [31,32]. These images typically contain substantial background information and noise, which may hinder the model’s ability to discern and analyze critical objects or regions. By integrating attention mechanisms, models can autonomously learn to prioritize significant areas or objects within the image [33]. This capability not only improves the model’s performance in recognizing and analyzing essential objects but also facilitates more effective processing of remote sensing data [34].

In the domain of change detection, Zhan et al. were pioneers in applying the Siamese convolutional network to bi-temporal remote sensing image analysis, achieving remarkable results. The fundamental concept of Siamese networks involves passing two input samples through the same neural network to extract features, which are then compared for similarity or dissimilarity in the feature space. Building upon this foundation, Daudt et al. designed several effective change detection architectures based on Fully Convolutional Neural Networks (FCNNs), including Fully Convolutional Early Fusion (FC-EF) and Fully Convolutional Siamese Concatenation (FC-Siam-conc). The primary distinction between these networks lies in how they fuse bi-temporal information [35]. Liu et al. further advanced this field by developing a dual-task constrained Siamese Convolutional Neural Network with a modified focal loss that tackles sample imbalance, thereby enhancing change detection accuracy [36]. Similarly, Xu et al. proposed an attention-based Siamese network known as MFPNet, which leverages multi-path fusion and an adaptive weighted fusion strategy to integrate image features efficiently [33]. Zhang et al. introduced a change detection methodology based on a Differential Discriminative Network, employing an attention mechanism to fuse multi-level features with the image’s difference map [37]. Chen et al. contributed to the field by developing a Transformer-based architecture aimed at capturing global change features [38]. Finally, Liu et al. proposed a hybrid Siamese network combining CNN and Transformer elements to enhance the accuracy of farmland change detection models [39].

Although existing work has made some progress, the issue of high missed detection rates in farmland change detection tasks remains a challenge. Efficiently and robustly modeling the multi-scale information between bi-temporal images by unifying global and local features in the feature encoding process is a pressing requirement for rapid farmland data collection.

Inspired by recent advancements in change detection, we developed an attention-based multi-scale Siamese network for farmland change detection. This network combines the strengths of Convolutional Neural Networks (CNNs), Transformers, multi-scale modules, and attention mechanisms. By adopting a CNN-Transformer architecture, it achieves the unified modeling of multi-scale global semantic information and local contour details in farmland while using local feature mixing techniques for denoising. The model structure is shown in Figure 1. The contributions of this paper can be summarized in four key points:

- A multi-scale channel information mixing module is designed within the convolutional network, which emphasizes local contours in remote sensing images and focuses on the denoising of farmland remote sensing images. Information loss across different scales and channels is also addressed.

- A multi-head channel interaction and attention encoder is designed, enabling global context modeling based on key local contour information.

- A multi-scale and attention-based spatial decoder is designed, which maps the one-dimensional vectors of local contour features and global semantic information—processed by the CNN feature mixing module and encoder—back into the spatial dimension.

- The model is developed by combining the strengths of Convolutional Neural Networks (CNNs), Transformers, multi-scale modules, and attention mechanisms, effectively integrating attention mechanisms with Transformers. The model’s performance is evaluated on two benchmark datasets: the Cropland Change Detection (CLCD) dataset [39], a high-resolution dataset specifically designed for cropland change monitoring, and the High-Resolution Semantic Change Detection (HRSCD) dataset [40], which focuses on general semantic change detection across diverse land cover types. A more detailed discussion of these datasets is provided in a later section of this paper.

In our research, although an optimized method is proposed to address this challenge, significant pressure is still placed on the model’s processing capabilities due to high-frequency image acquisition, especially when farmland changes are rapid and continuous. The high temporal frequency of remote sensing data brings a large amount of data and computational requirements, and existing models still have performance bottlenecks when processing large-scale continuous images. This situation is particularly prominent in the fields of land management and agricultural monitoring. Land managers often require real-time and high-frequency change detection results, yet the applicability of existing methods in this regard still needs further improvement.

Therefore, although this research makes progress in change detection methods, future research needs to pay more attention to the bottlenecks that high-frequency image acquisition imposes on change detection, in order to better meet the high demands of land managers for real-time performance and accuracy. High-frequency image acquisition plays a crucial role in farmland change monitoring and land management, and handling it efficiently is a key direction for future work.

This study is structured as follows: Section 1 reviews the theoretical foundations and current research on farmland change detection, highlighting this study’s innovations. Section 2 details the proposed network’s architecture, key modules, and technical specifics. Section 3 covers the datasets, experimental environment, and parameter settings. Section 4 presents the model’s performance and visual analysis. Section 5 discusses the effectiveness of multi-scale feature extraction and attention mechanisms, identifying areas for improvement. Section 6 concludes with a summary of the findings and discusses the model’s potential applications and future directions.

2. Materials and Methods

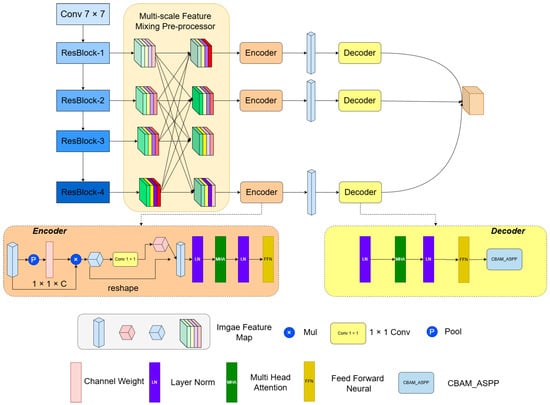

As depicted in Figure 1, the proposed model comprises three principal components: a Multi-Scale Mixed Preprocessor, a Cross-Channel Spatial Encoder, and a Multi-Scale Spatial Decoder. In the context of agricultural land change detection, the Multi-Scale Mixed Preprocessor extracts local features across multiple scales, thereby enhancing sensitivity to changes. The Cross-Channel Spatial Encoder integrates features from various channels, employing a self-attention mechanism to prioritize critical change areas. Finally, the Multi-Scale Spatial Decoder maps the encoded features back to spatial dimensions, yielding precise change detection outcomes. This structural integration markedly enhances the model’s performance and accuracy in agricultural land change detection.

Figure 1. Overview of the proposed model. This model adopts ResNet-18 as the backbone network to extract multi-scale features. These features are then fused using a Multi-Scale Feature Mixer, enabling each output feature to contain multi-level semantic information. Subsequently, the features are processed by Cross-Channel Spatial Transformers in separate branches, capturing spatial dependencies across channels over long distances. Finally, a Multi-Scale Attention Decoder further extracts and integrates multi-scale features to enhance the performance of change detection.

2.1. Multi-Scale Feature Mixing Preprocessor

The Multi-Scale Feature Mixing Preprocessor extracts local and high-level semantic information from images through a multi-layer structure. It learns features at different scales and exchanges channel features among scales, which increases parameter sharing and model complexity so that the model can capture the data distribution and adapt to environmental changes. As illustrated in Figure 1, the proposed model utilizes ResNet-18 as the backbone network. ResNet-18 consists primarily of a 7 × 7 convolutional layer, four residual blocks, and a fully connected layer. For simplicity, the components used here are referred to as Conv1, Res2, Res3, Res4, and fc1. Each residual block includes two standard 3 × 3 convolutional layers, a batch normalization layer, and a Rectified Linear Unit (ReLU) function, with optional pooling layers for downsampling. Downsampling is achieved with a stride of 2 in Conv1, Res3, and Res4, each of which halves the spatial size of the feature map.
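As a quick check of the downsampling described above, the following sketch traces the spatial size of a 512 × 512 input through the backbone stages. It assumes, as the text states, that only Conv1, Res3, and Res4 use a stride of 2 and that Res2 keeps the resolution; the function name and the stride table are illustrative and are not taken from the authors' code.

```python
def stage_sizes(input_size, strides):
    """Return the spatial size after each stage, given per-stage strides."""
    sizes = {}
    size = input_size
    for name, stride in strides.items():
        size = size // stride  # a stride of 2 halves height and width
        sizes[name] = size
    return sizes


# Strides as described in the text (assumption: Res2 does not downsample).
STRIDES = {"Conv1": 2, "Res2": 1, "Res3": 2, "Res4": 2}

sizes = stage_sizes(512, STRIDES)
print(sizes)  # {'Conv1': 256, 'Res2': 256, 'Res3': 128, 'Res4': 64}
```

Under these assumptions the residual stages produce feature maps at three different resolutions, which is why an interpolation step is needed before features from different scales can be mixed.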

To reduce the model parameters, a convolutional layer is used to unify the feature maps of different scales obtained from the residual blocks into a 32-dimensional space. These feature maps are then evenly divided into four segments along the channel dimension, so that each segment contains eight channels. To ensure the thorough mixing of features across different channels and scales while maintaining image smoothness and continuity and effectively suppressing artifacts, bicubic interpolation is employed.

Let $f(x, y)$ denote the interpolated value at the target pixel location $(x, y)$, and let $g(x_i, y_j)$ represent the values of the known data points in the original image. Bicubic interpolation calculates $f(x, y)$ by performing a weighted average of the 16 known data points surrounding the target pixel:

$$f(x, y) = \sum_{i=-1}^{2} \sum_{j=-1}^{2} g(x_i, y_j)\, h(x - x_i)\, h(y - y_j),$$

where $x_i = \lfloor x \rfloor + i$ and $y_j = \lfloor y \rfloor + j$ index the 4 × 4 neighborhood of the target pixel, and $h(t)$ is the cubic interpolation weight function. In its standard cubic-convolution form with kernel parameter $a$, it is defined as

$$h(t) = \begin{cases} (a + 2)|t|^3 - (a + 3)|t|^2 + 1, & |t| \le 1, \\ a|t|^3 - 5a|t|^2 + 8a|t| - 4a, & 1 < |t| < 2, \\ 0, & \text{otherwise.} \end{cases}$$
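The 16-point weighted average described above can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: it uses the standard cubic convolution kernel with an assumed parameter `a = -0.5`, clamps out-of-range neighbors to the image border, and all function names are hypothetical.

```python
import math


def cubic_weight(t, a=-0.5):
    """Cubic convolution kernel h(t); a = -0.5 is an assumed, commonly used value."""
    t = abs(t)
    if t <= 1:
        return (a + 2) * t**3 - (a + 3) * t**2 + 1
    if t < 2:
        return a * t**3 - 5 * a * t**2 + 8 * a * t - 4 * a
    return 0.0


def bicubic(g, x, y, a=-0.5):
    """Interpolate grid g (indexed g[row][col]) at a real-valued location.

    x is the column coordinate and y the row coordinate. Neighbors that
    fall outside the grid are clamped to the nearest edge pixel.
    """
    rows, cols = len(g), len(g[0])
    x0, y0 = math.floor(x), math.floor(y)
    value = 0.0
    for j in range(-1, 3):      # 4 rows around the target
        for i in range(-1, 3):  # 4 columns around the target
            xi, yj = x0 + i, y0 + j
            px = min(max(xi, 0), cols - 1)
            py = min(max(yj, 0), rows - 1)
            value += g[py][px] * cubic_weight(x - xi, a) * cubic_weight(y - yj, a)
    return value


# A 6 x 6 horizontal ramp: the value of each pixel equals its column index.
ramp = [[float(c) for c in range(6)] for _ in range(6)]
print(bicubic(ramp, 2.0, 2.0))  # an existing pixel is reproduced: 2.0
print(bicubic(ramp, 2.5, 2.0))  # halfway between columns 2 and 3
```

At integer locations the kernel weights reduce to a single 1 and fifteen 0s, so known pixels are reproduced exactly; in between, the four weights along each axis sum to 1, which keeps the interpolated feature map smooth.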

2.2. Cross-Channel Spatial Attention Encoder

To model and integrate multi-scale information from feature extractors more accurately, a Transformer-based method is designed, which includes three token encoders and three token decoders to capture and aggregate contextual information from features of different scales.

The primary task of the token encoders is to encode the global contextual information of features through cross-scale spatial attention modules and Transformer modules. First, considering computational and storage resource constraints, the spatial attention module transforms the input features into three-dimensional token embeddings of the target size. These embeddings are then passed to the Transformer module for further extraction and integration of feature information. Through this design, multi-scale contextual information is effectively captured and integrated, enhancing the model’s feature representation ability and generalization performance.

The specific steps are as follows. Given input features $F \in \mathbb{R}^{b \times c \times h \times w}$, cross-channel attention is first computed by the Efficient Channel Attention (ECA) module in three steps. Global average pooling is performed on $F$, resulting in global features $F_g$. A 1D convolution operation is then applied to $F_g$, yielding cross-channel weights $W$, where the kernel size $k$ is automatically determined by the ECA module based on the number of channels. The weights $W$ are applied to the original input features $F$, resulting in enhanced features $F_e = W \otimes F$, where $\otimes$ denotes element-wise multiplication. A 1 × 1 convolution layer is then used to convert $F_e$ into an intermediate feature $F_l$. The enhanced features $F_e$ and the intermediate feature $F_l$ are reshaped into three-dimensional tokens, which are finally merged into a token $t$.

Here, $b$, $c$, $h$, and $w$ denote the batch size, number of channels, height, and width of the input features $F$, respectively; $l$ is the token length, set to 4 in the model. To model the contextual information in the tokens, a Transformer encoder is used. First, a set of trainable parameters for positional embedding (PE) is added to the token $t$. The Transformer encoder employs a standard structure, including multi-head self-attention (MHA) blocks and feed-forward neural network (FFN) blocks, with layer normalization (LN) applied before each block.
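The ECA steps above (global average pooling, a 1D convolution across channels, and channel-wise rescaling) can be sketched for a single sample as follows. This is an illustrative sketch under assumptions: the adaptive kernel-size rule and the sigmoid gate follow the original ECA-Net formulation rather than anything stated in this paper, the convolution weights are placeholders for learned parameters, and the function names are hypothetical.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive 1D kernel size as in ECA-Net: odd value nearest to (log2(C) + b) / gamma."""
    k = int(abs((math.log2(channels) + b) / gamma))
    return k if k % 2 == 1 else k + 1


def eca(feature, kernel):
    """Apply channel attention to one sample given as feature[c][h][w]."""
    c = len(feature)
    # Global average pooling: one scalar per channel.
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature]
    pad = len(kernel) // 2
    weights = []
    for i in range(c):
        # 1D convolution across neighboring channels with zero padding.
        acc = 0.0
        for j, kv in enumerate(kernel):
            idx = i + j - pad
            if 0 <= idx < c:
                acc += kv * pooled[idx]
        weights.append(1.0 / (1.0 + math.exp(-acc)))  # sigmoid gate, as in ECA-Net
    # Rescale every channel by its weight (element-wise multiplication).
    scaled = [[[v * weights[i] for v in row] for row in feature[i]] for i in range(c)]
    return scaled, weights


print(eca_kernel_size(32))   # 3
print(eca_kernel_size(256))  # 5

# Two 2 x 2 channels; the kernel values are hypothetical (learned in practice).
feature = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
scaled, weights = eca(feature, [0.0, 1.0, 0.0])
```

Because the convolution runs over the pooled channel vector only, the module adds very few parameters while still letting each channel weight depend on its neighbors.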

The MHA first expands $t$ through a linear layer into a new embedding $t_e = t W_e \in \mathbb{R}^{b \times l \times nd}$, with $W_e$ representing the weights of the linear layer, $n$ representing the number of MHA heads, and $d$ representing the dimension of each head. Here, $n$ and $d$ are set to 8 and 64, respectively. The embedding is then input into the different heads of the MHA, with parameters not shared between heads. Each head consists of two steps: linear transformation and scaled dot-product attention (SDPA). In each head, $t_e$ is mapped into queries $Q$, keys $K$, and values $V$, represented as

$$Q = t_e W_Q, \qquad K = t_e W_K, \qquad V = t_e W_V,$$

where $W_Q$, $W_K$, and $W_V$ represent the weights of the linear layers mapping $Q$, $K$, and $V$, respectively. In the scaled dot-product attention (SDPA), the correlation between $Q$ and $K$ is computed using dot-product operations and Softmax activation to generate an attention map, which is then applied as weights to $V$, represented as

$$\mathrm{SDPA}(Q, K, V) = \mathrm{Softmax}\left(\frac{Q K^{\top}}{\sqrt{d}}\right) V,$$

where $Q K^{\top} / \sqrt{d}$ represents the dot-product result of $Q$ and $K$ scaled by the square root of $d$. The Softmax function normalizes the scaled dot products into attention weights, which are then applied to $V$ to complete the self-attention mechanism. This process captures dependencies between features at different positions and enhances the relevance of feature representations. Following the self-attention mechanism, layer normalization (LN) is applied to the output. The output is then processed through two fully connected layers with a ReLU activation between them:

$$\mathrm{FFN}(x) = \mathrm{ReLU}(x W_1 + b_1)\, W_2 + b_2,$$

where $W_1$ and $W_2$ are the weights of the fully connected layers, and $b_1$ and $b_2$ are the biases. Through this process, the model is able to capture and integrate multi-scale contextual information.
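The scaled dot-product attention step used inside each head can be illustrated with a small pure-Python sketch. It shows only the Softmax-weighted combination of values for one head and one sample; the linear projections, the multi-head split, and layer normalization are omitted, and the function names are illustrative.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Q, K, V are lists of token vectors; returns (output, attention map)."""
    d = len(Q[0])
    scale = math.sqrt(d)  # scale by the square root of the head dimension
    output, attention = [], []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in K]
        weights = softmax(scores)
        attention.append(weights)
        output.append(
            [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        )
    return output, attention


# Four tokens (the token length used in the model) with toy 2-D embeddings.
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
V = [[1.0], [2.0], [3.0], [4.0]]
out, attn = scaled_dot_product_attention(Q, K, V)
print([round(sum(row), 6) for row in attn])  # each attention row sums to 1
```

Each output token is a convex combination of all value vectors, which is how a single attention layer lets every token draw on context from the whole sequence.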

2.3. Multi-Scale Spatial Decoder

In farmland change detection, accurately modeling and integrating the multi-scale information produced by the feature extractors is crucial. To address the potential loss of image information during data interpolation, we designed a multi-scale decoder mirroring method, which combines a Transformer decoder with a multi-scale attention module. The two branches are complementary. The Transformer decoder excels at capturing global contextual information and modeling long-distance dependencies between features, which improves the completeness of the feature representation on a global scale. Specifically, it employs a multi-head self-attention mechanism and a feed-forward neural network, leverages positional embeddings to retain spatial information, and decodes the input features $F'$ together with the features $M$ output by the encoder.

In contrast, the multi-scale attention module excels at capturing local features and integrating multi-scale features. Its parallel multi-scale convolutional branches extract feature information at various receptive fields, and a channel attention mechanism further strengthens the feature representation so that important features receive adequate attention.

Finally, the output features from the Transformer decoder are summed with the output features from the multi-scale attention module to form the final decoding result.

By combining global information capture with local feature extraction, this design mitigates the information loss introduced by data interpolation, enables a more accurate detection of farmland changes, and improves the model's robustness and generalization performance.

2.4. Loss Function

Similar to MSCANet, our model computes the cross-entropy loss for the three change features output by the multi-scale decoder. Specifically, the three change feature maps generated by the multi-scale decoder are denoted as $P_1$, $P_2$, and $P_3$. These feature maps correspond to change detection results at different scales. By calculating the cross-entropy loss between these feature maps and the ground truth $Y$, the model parameters are optimized to improve prediction accuracy. The cross-entropy loss is expressed as

$$L_{CE} = -\frac{1}{N} \sum_{i=1}^{N} \sum_{c=1}^{C} y_{i,c} \log p_{i,c},$$

where $N$ represents the total number of samples, $C$ denotes the total number of classes, $y_{i,c}$ is the ground truth label for sample $i$ in class $c$, and $p_{i,c}$ is the predicted probability that sample $i$ belongs to class $c$. The model calculates the cross-entropy loss for each scale's change feature map separately and then takes a weighted sum of these losses to obtain the overall loss function. Specifically, let $\lambda_1$, $\lambda_2$, and $\lambda_3$ denote the loss weights for the three scales' change feature maps. The overall loss function is expressed as

$$L = \sum_{k=1}^{3} \lambda_k \, L_{CE}(P_k, Y),$$

where $L_{CE}(P_k, Y)$ denotes the cross-entropy loss between the change feature map at scale $k$ and the ground truth $Y$. Through this multi-scale supervision mechanism, the model is able to learn rich contextual information from feature maps at different scales, thereby improving performance in the farmland change detection task. The Transformer decoder effectively captures global information, enhancing feature representation capabilities.
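The multi-scale supervision described above amounts to a weighted sum of per-scale cross-entropy losses. The sketch below illustrates this with plain Python lists; the loss weights of 1.0 are placeholders chosen for illustration (the text does not give their values), and the function names are hypothetical.

```python
import math


def cross_entropy(probs, labels):
    """Mean cross-entropy; probs[i][c] is a predicted probability, labels[i] the true class."""
    eps = 1e-12  # guards against log(0)
    return -sum(math.log(p[y] + eps) for p, y in zip(probs, labels)) / len(labels)


def multi_scale_loss(preds_per_scale, labels, weights):
    """Weighted sum of the cross-entropy losses of the per-scale predictions."""
    return sum(w * cross_entropy(p, labels) for w, p in zip(weights, preds_per_scale))


# Two pixels, two classes (0 = unchanged, 1 = changed), three scales.
labels = [0, 1]
preds = [
    [[0.9, 0.1], [0.2, 0.8]],  # scale 1
    [[0.8, 0.2], [0.3, 0.7]],  # scale 2
    [[0.7, 0.3], [0.4, 0.6]],  # scale 3
]
loss_weights = (1.0, 1.0, 1.0)  # assumed placeholder values
print(multi_scale_loss(preds, labels, loss_weights))
```

With one-hot labels the inner sum over classes reduces to the log-probability of the true class, which is what `cross_entropy` computes.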

3. Experimental Setup

3.1. Datasets

To validate the effectiveness of the model, we use mainstream farmland change datasets, including the CLCD and HRSCD datasets.

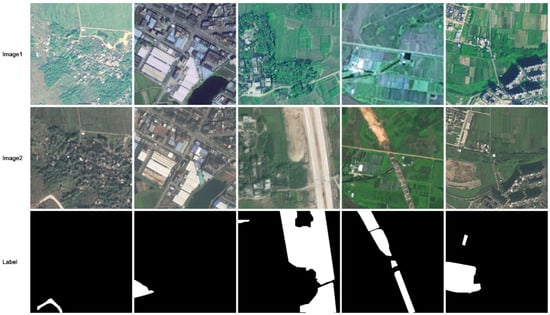

CLCD: The CLCD dataset is a public farmland dataset collected by the Gaofen-2 satellite in 2017 and 2019, as shown in Figure 2. It contains 600 pairs of farmland change samples from Guangdong Province, with a size of 512 × 512 pixels and a spatial resolution ranging from 0.5 m to 2 m. The dataset includes various land types such as buildings, roads, lakes, and bare soil. Each pair of sample images is associated with a binary label indicating farmland change. The samples are divided into training, validation, and test sets in a 6:2:2 ratio, resulting in sizes of 360, 120, and 120, respectively.

Figure 2. CLCD dataset.

HRSCD: The HRSCD dataset consists of 291 pairs of RGB aerial images, each with a size of 10,000 × 10,000 pixels and a spatial resolution of 0.5 m, as shown in Figure 3. The dataset provides corresponding land cover information, including five land cover categories: artificial surfaces, agricultural areas, forests, wetlands, and water bodies. All images were acquired from urban and rural areas in Rennes and Caen, France. To obtain more refined information on cropland changes, we reclassified the original land cover labels of the bi-temporal images, assigning a label of 1 to agricultural areas and 0 to all other categories. The cropland change annotations were subsequently derived by comparing the reclassified labels from the two time periods. Given the large size of the original images, they were cropped into 4398 pairs of 512 × 512 pixel image patches for cropland change detection. These image patches were further split into training, validation, and test sets in a ratio of 6:2:2.

Figure 3. HRSCD dataset.
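The relabeling used to derive cropland change annotations from HRSCD, and the 6:2:2 split, can be sketched as follows. This is an illustration under assumptions: the class code `2` for agricultural areas is assumed here and should be checked against the dataset documentation, the land cover maps are tiny toy grids, and the function names are hypothetical.

```python
AGRICULTURAL = 2  # assumed class code for "agricultural areas"


def to_cropland_mask(land_cover):
    """Assign 1 to agricultural pixels and 0 to every other category."""
    return [[1 if v == AGRICULTURAL else 0 for v in row] for row in land_cover]


def cropland_change(lc_t1, lc_t2):
    """Mark pixels whose cropland status differs between the two dates."""
    m1, m2 = to_cropland_mask(lc_t1), to_cropland_mask(lc_t2)
    return [[int(a != b) for a, b in zip(r1, r2)] for r1, r2 in zip(m1, m2)]


def split_counts(n, ratios=(6, 2, 2)):
    """Return (train, val, test) sizes for n samples and the given ratio."""
    total = sum(ratios)
    train = n * ratios[0] // total
    val = n * ratios[1] // total
    return train, val, n - train - val


lc_2006 = [[2, 2, 3], [1, 2, 5]]
lc_2012 = [[2, 1, 3], [1, 2, 2]]
print(cropland_change(lc_2006, lc_2012))  # [[0, 1, 0], [0, 0, 1]]
print(split_counts(600))                  # (360, 120, 120)
```

Note that a transition between two non-agricultural classes produces no change label under this scheme, so the derived annotations capture cropland gain and loss only.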

3.2. Evaluation Metrics

To compare and analyze the proposed algorithm, we use four common evaluation metrics: precision, recall, F1-score, and Intersection over Union (IoU). The formulas for these metrics are as follows:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN},$$

$$\text{F1} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}, \qquad \text{IoU} = \frac{TP}{TP + FP + FN}.$$

Here, TP, TN, FP, and FN denote the numbers of true-positive, true-negative, false-positive, and false-negative instances, respectively.

The specific meanings of these indicators in the farmland change detection task are as follows:

- Precision: The proportion of the change areas detected by the model that are actual changes. A high precision means that the model has a low false alarm rate, i.e., that most of the detected change areas are actual changes.

- Recall: The proportion of the actual change areas that the model detects. A high recall means that the model misses few actual changes. A high recall is especially important in farmland change detection, because an omission (failing to detect an actual area of change) often has more serious consequences than a false alarm (classifying an unchanged area as changed). Ensuring that all areas of change are detected helps farmers and agricultural managers take timely action.

- F1-score: The harmonic mean of precision and recall. A high F1-score indicates that the model balances correctness (precision) and completeness (recall).

- Intersection over Union (IoU): The degree of overlap between the region of change detected by the model and the actual region of change. A high IoU indicates that the predicted change region closely overlaps the actual one, reflecting the overall detection performance of the model.

With these evaluation metrics, we can comprehensively and objectively assess the effectiveness of the proposed model in the task of farmland change detection, thus verifying its superiority and usefulness.
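The four metrics can be computed directly from pixel counts of the change class, as in the sketch below. The function names are illustrative. The sketch also uses the identity IoU = F1 / (2 − F1), which follows from the definitions when both metrics are computed for the same class, to cross-check the F1-score of 65.48% and the IoU of 48.68% reported for the proposed method on HRSCD.

```python
def change_metrics(tp, fp, fn):
    """Precision, recall, F1-score, and IoU of the change class from pixel counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    iou = tp / (tp + fp + fn)
    return precision, recall, f1, iou


def iou_from_f1(f1):
    """For a single class, IoU and F1 are linked by IoU = F1 / (2 - F1)."""
    return f1 / (2 - f1)


# Toy counts: 8 correctly detected, 2 false alarms, 2 missed change pixels.
precision, recall, f1, iou = change_metrics(tp=8, fp=2, fn=2)
print(precision, recall)

# Cross-check of the reported HRSCD results (F1 = 65.48%).
print(round(100 * iou_from_f1(0.6548), 2))  # 48.68
```

True negatives do not appear in any of the four formulas, so these metrics are not inflated by the large unchanged background that dominates most image pairs.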

3.3. Comparison Methods

In this paper, we compare the proposed model with a range of advanced bi-temporal change detection (CD) models, including those based on Convolutional Neural Networks (CNNs) and Transformer architectures:

- FC-EF [35]: This model is based on the U-Net architecture and uses a purely convolutional network for image-level fusion. The network takes as input the concatenation of a pair of bi-temporal images.

- FC-Siam-conc [35]: A variant of the FC-EF network, FC-Siam-conc employs a Siamese network structure to extract multi-layer image features, which are then concatenated using two fully connected layers.

- DTCDSCN [36]: This model is a variant of the FCN network that incorporates semantic-level and attention mechanisms. By focusing on channel and spatial features, it captures additional contextual information to discern changes in the images.

- MFPNet [30]: A Siamese network based on attention mechanisms, MFPNet uses multi-path fusion and adaptive weighted fusion strategies to integrate features effectively.

- DSIFN [29]: This model employs a differential discriminative network for change detection and integrates multi-layer features with image differential maps through attention mechanisms.

- BiT [38]: Designed with a Transformer-based encoder–decoder architecture, BiT models contextual information and effectively captures important features from the global feature space.

- MSCANet [39]: This model combines CNNs and Transformers in a multi-scale change detection network. It utilizes CNNs to capture local contour features at different scales and employs Transformers to encode and decode contextual information.

These models represent some of the most advanced approaches in the field, each contributing a distinct methodology for enhancing change detection performance.

3.4. Implementation Details

The proposed model and the comparison methods were all implemented and run on an NVIDIA GeForce RTX 4090 GPU environment, using Python 3.9 and PyTorch 1.8. The proposed model utilizes ResNet-18, pre-trained on ImageNet, as its backbone network. The training framework was set with an input size of 512 × 512 pixels, a batch size of 8, and an initial learning rate of , with AdamW used for parameter optimization. The training process was conducted for 100 epochs, and general data augmentation techniques were applied to the input dual-temporal images. Data augmentation included random rotations, vertical flips, and horizontal flips.
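For bi-temporal inputs, a geometric augmentation has to be applied identically to both images and to the change label, otherwise the label no longer aligns with the image pair. The sketch below illustrates this with nested lists; it assumes rotations by multiples of 90 degrees and a flip probability of 0.5, neither of which is stated in the text, and the function names are hypothetical.

```python
import random


def rot90(img):
    """Rotate a 2D grid by 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def hflip(img):
    """Mirror a 2D grid left to right."""
    return [row[::-1] for row in img]


def vflip(img):
    """Mirror a 2D grid top to bottom."""
    return img[::-1]


def augment_pair(img_t1, img_t2, label, rng=random):
    """Draw one random transform and apply it to both dates and the label."""
    k = rng.randrange(4)        # number of 90-degree rotations (assumption)
    do_h = rng.random() < 0.5   # assumed flip probability
    do_v = rng.random() < 0.5
    out = []
    for grid in (img_t1, img_t2, label):
        for _ in range(k):
            grid = rot90(grid)
        if do_h:
            grid = hflip(grid)
        if do_v:
            grid = vflip(grid)
        out.append(grid)
    return tuple(out)


t1 = [[1, 2], [3, 4]]
t2 = [[5, 6], [7, 8]]
mask = [[0, 1], [0, 0]]
aug_t1, aug_t2, aug_mask = augment_pair(t1, t2, mask, random.Random(0))
```

The random parameters are drawn once per sample and reused for all three grids, which is the property that keeps the augmented label consistent with the augmented image pair.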

4. Results

4.1. HRSCD Results

The proposed method was compared with the other approaches in terms of precision (Pre), recall (Rec), F1-score, and Intersection over Union (IoU). Table 1 presents the quantitative results of the various methods on the HRSCD dataset, with bold values indicating the best-performing results. FC-EF and FC-Siam-conc exhibited relatively low performance, with F1-scores of 59.48% and 57.34% and IoU values of 42.33% and 40.19%, respectively. DTCDSCN performed comparably to FC-EF, with an F1-score of 59.39% and an IoU of 42.24%. BiT improved on these metrics, reaching an F1-score of 60.30% and an IoU of 43.16%. MFPNet and DSIFN performed better still, with F1-scores of 63.95% and 63.66% and IoU scores of 47.01% and 46.70%, respectively. MSCANet was the strongest baseline, with an F1-score of 64.67% and an IoU of 47.79%; however, the proposed method attained a recall of 69.16%, surpassing all other methods. This highlights the capability of the proposed method to capture actual changes and thereby reduce the risk of missed detections. In farmland change detection tasks, higher recall is particularly critical, as missed detections can delay the timely identification of changes, thereby affecting agricultural management and decision-making. Furthermore, the proposed method achieved an F1-score of 65.48% and an IoU of 48.68%, demonstrating well-balanced performance between accuracy and spatial coverage and a closer alignment with the actual change regions.
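For reference, the four metrics can be computed from pixel-level confusion counts of the change class. The sketch below uses the standard definitions; the function names and the example counts are illustrative and not taken from the paper. It also makes explicit that IoU and F1 are tied by IoU = F1 / (2 − F1), so the two metrics always rank methods in the same order.

```python
def change_metrics(tp, fp, fn):
    """Precision, recall, F1 and IoU of the change class from pixel counts.

    tp: changed pixels predicted as changed
    fp: unchanged pixels predicted as changed (false alarms)
    fn: changed pixels predicted as unchanged (missed detections)
    Assumes tp > 0 so that no denominator is zero.
    """
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    iou = tp / (tp + fp + fn)
    return precision, recall, f1, iou

def iou_from_f1(f1):
    """IoU implied by an F1-score (both expressed as fractions in [0, 1])."""
    return f1 / (2 - f1)

# Hypothetical counts, chosen only to illustrate the call.
p, r, f1, iou = change_metrics(tp=700, fp=300, fn=300)
assert abs(iou - iou_from_f1(f1)) < 1e-12

# The F1-score of 65.48% reported for the proposed method implies its IoU.
print(round(100 * iou_from_f1(0.6548), 2))  # 48.68
```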

Table 1.

Experimental results on HRSCD.

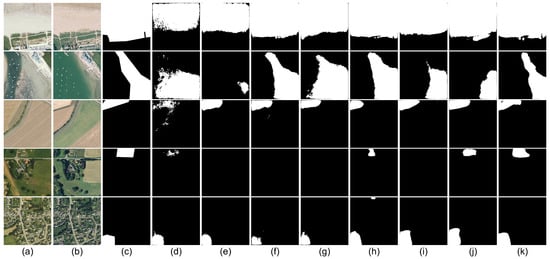

Figure 4 illustrates the experimental results of the different methods on the HRSCD dataset under various scenarios. As shown in the second row, for the lake excavation changes, most methods produce limited detection results because relevant samples are relatively scarce. In such cases, the proposed model is still able to identify these changes completely. Similarly, for scenarios with fewer buildings (fourth row) and more buildings (fifth row), the proposed method effectively reduces missed detections in low-building scenarios and demonstrates strong robustness in detecting farmland changes in high-building scenarios.

Figure 4.

Visualization of experimental results on HRSCD dataset: (a) Image1; (b) Image2; (c) Label; (d) FC-EF; (e) FC-Siam-conc; (f) DTCDSCN; (g) BiT; (h) MFPNet; (i) DSIFN; (j) MSCANet; (k) the proposed method.

4.2. CLCD Results

Table 2 presents the quantitative results of the various methods on the CLCD dataset, with bold values indicating the best-performing results. Unlike on the HRSCD dataset, FC-Siam-conc, which uses a Siamese encoder and feature concatenation, performs better on the CLCD dataset than FC-EF and DTCDSCN, with an F1 score of 61.45%. BiT closely follows, demonstrating the advanced capabilities of the Transformer architecture compared to the traditional UNet models. MFPNet and DSIFN also show excellent performance on the CLCD dataset, with F1 scores exceeding 70%. This performance is attributed to their multi-scale feature fusion strategies, as the within-class scale variations in the CLCD dataset are much greater than those in the HRSCD dataset.

Table 2.

Experimental results on CLCD.

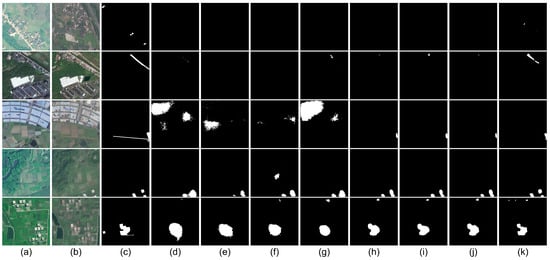

Notably, MSCANet achieves the highest performance among the compared methods in terms of recall, F1-score, and IoU, with scores of 67.64%, 71.29%, and 55.39%, respectively, surpassing DSIFN by 3.85, 0.68, and 0.81 percentage points. However, the proposed method further improves these metrics on the CLCD dataset, especially recall and IoU, which reach 77.37% and 60.02%, respectively. Additionally, the F1-score of the proposed method is 75.02%, higher than that of all other comparative methods. These results highlight the capability of the proposed method in detecting farmland change areas, providing a more accurate detection of changes, and reducing both false positives and missed detections, showcasing its superiority and stability in change detection tasks.

Figure 5 shows the experimental results of the different methods on various scenarios of the CLCD dataset. Rows 1 to 3 reveal that, when change samples are sparse, other models exhibit poor detection performance, with numerous false positives and missed changes. In contrast, the proposed model maintains good detection capabilities. Rows 4 and 5 demonstrate that, for scenarios involving building encroachment on farmland, both MSCANet and the proposed model exhibit strong detection performance.

Figure 5.

Visualization of experimental results on CLCD dataset: (a) Image1; (b) Image2; (c) Label; (d) FC-EF; (e) FC-Siam-conc; (f) DTCDSCN; (g) BiT; (h) MFPNet; (i) DSIFN; (j) MSCANet; (k) the proposed method.

4.3. Ablation Experiments and Loss Curves

In this section, ablation experiments were conducted to assess the contribution of each component to the proposed method. The experiments were performed on the CLCD dataset, and the quantitative results are presented in Table 3. The baseline model, referred to as “Base”, is MSCANet, with the following performance metrics: a precision (Pre) of 75.36%, a recall (Rec) of 67.64%, an F1-score of 71.29%, and an Intersection over Union (IoU) of 55.39%.

Table 3.

Performance comparison on CLCD and HRSCD datasets.

First, the model with the added Multi-Scale Mixer, labeled as “Multi-Scale Mixer”, showed a decrease in precision to 68.35% but a significant increase in recall to 79.08%. This resulted in an improvement in the F1-score to 73.32% and in IoU to 57.88%. These results suggest that, while the Multi-Scale Mixer captures a wider range of change areas and thus reduces missed detections, it also introduces more false positives.

Next, incorporating the Cross-Channel Spatial Encoder into the model, referred to as “Cross-Channel Spatial Encoder”, showed further improvement, with precision increasing to 70.24%, the F1-score to 74.07%, and IoU to 58.82%, while recall decreased slightly to 78.35%. These results indicate that the Cross-Channel Spatial Encoder recovers part of the lost precision while preserving most of the recall gain, improving the model’s overall accuracy in detecting change areas.

Finally, the model incorporating the Multi-Scale Attention Decoder, referred to as “Multi-Scale Attention Decoder”, achieved the best F1-score and IoU: a precision of 72.80%, a recall of 77.37%, an F1-score of 75.02%, and an IoU of 60.02%. Although its recall is slightly below that of the two preceding configurations and its precision remains below that of the baseline, it offers the best balance between the two. The addition of the Multi-Scale Attention Decoder enhanced the model’s accuracy and detail retention in detecting change areas, demonstrating the decoder’s critical role in integrating multi-scale information and implementing effective attention mechanisms.
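As a quick arithmetic check, the F1-scores quoted in this section follow from the reported precision and recall through F1 = 2PR / (P + R). The snippet below recomputes them; the dictionary simply restates the numbers given in the text, and the helper name `f1_score` is illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units in and out)."""
    return 2 * precision * recall / (precision + recall)

# (precision %, recall %, reported F1 %) as quoted in the text for CLCD.
ablation = {
    "Base (MSCANet)": (75.36, 67.64, 71.29),
    "Multi-Scale Mixer": (68.35, 79.08, 73.32),
    "Cross-Channel Spatial Encoder": (70.24, 78.35, 74.07),
    "Multi-Scale Attention Decoder": (72.80, 77.37, 75.02),
}

for name, (p, r, reported) in ablation.items():
    print(f"{name}: recomputed F1 = {f1_score(p, r):.2f}, reported = {reported:.2f}")
```

The recomputed values agree with the reported ones to two decimals, which also makes the trade-off visible: the F1 gain over the baseline comes from a recall increase that outweighs the drop in precision.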

These ablation experiments validate the effectiveness of each component and confirm the advantages of the proposed method in the farmland change detection task.

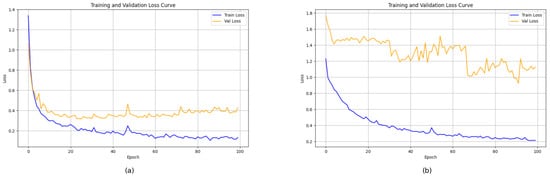

In the loss function experiments, the model demonstrated a consistent decreasing trend in the loss values across both the training and validation sets, as shown in Figure 6, indicating that the model effectively reduced prediction errors during the learning and optimization process. On the CLCD dataset, the loss function stabilized around the 40th epoch, suggesting that the model successfully captured key features related to farmland change and reached a stable state, enabling accurate change detection. This indicates that the model’s performance on this dataset approached an ideal state, exhibiting a strong learning ability and adaptability. On the HRSCD dataset, the loss on the training set stabilized around the 80th epoch. Although the validation set showed an overall downward trend, there were some fluctuations. These fluctuations may be attributed to the complexity of the dataset and the diversity of feature levels. Nonetheless, the overall trend suggests that the model was still able to effectively capture change patterns in the data and gradually converge to a stable state, demonstrating strong generalization capabilities. However, room remains for further optimization, particularly in addressing the fluctuations in the validation set and improving stability.

Figure 6.

Training and validation loss curves: (a) CLCD; (b) HRSCD.

Overall, the proposed model demonstrated outstanding performance across different datasets, effectively adapting to varying data distributions and complex feature variations while showcasing robust change detection capabilities. Despite the potential for further optimization, especially in enhancing stability on complex datasets, the current results strongly validate the model’s excellent performance in the farmland change detection task.

In addition to analyzing the loss curves of the training and validation sets, we further evaluated the effectiveness of the proposed multi-scale supervision strategy. As shown in Table 4, introducing multi-scale loss consistently improved performance across both the HRSCD and CLCD datasets. When using only the single-scale loss, the F1 scores for the two datasets were 63.74% and 74.14%, respectively. By incorporating an additional loss at the second scale, the recall on the HRSCD dataset increased to 69.36%, while the recall on the CLCD dataset increased significantly to 81.20%. This indicates that multi-scale supervision effectively enhances the model’s ability to detect change regions. Furthermore, after introducing the third-scale loss, the F1 scores improved to 65.48% and 75.02% on the HRSCD and CLCD datasets, respectively, with the IoU scores also increasing to 48.68% and 60.02%, demonstrating the contribution of multi-scale supervision to both detection accuracy and spatial coverage.
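Multi-scale supervision of this kind is commonly implemented by comparing each decoder output with a copy of the label resampled to that output's resolution and summing the per-scale losses. The sketch below illustrates the idea in plain Python under several assumptions that the text does not confirm: binary cross-entropy as the per-scale loss, max-pooling to downsample the label, and equal weights for the three scales. All function names are hypothetical.

```python
import math

def bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy between two equally sized 2D grids."""
    total, count = 0.0, 0
    for prow, trow in zip(pred, target):
        for p, t in zip(prow, trow):
            p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
            total -= t * math.log(p) + (1 - t) * math.log(1 - p)
            count += 1
    return total / count

def downsample_label(label, factor):
    """Max-pool a binary label by `factor` so that small change regions survive.

    Assumes the label height and width are divisible by `factor`.
    """
    h, w = len(label), len(label[0])
    return [
        [max(label[i + di][j + dj] for di in range(factor) for dj in range(factor))
         for j in range(0, w, factor)]
        for i in range(0, h, factor)
    ]

def multi_scale_loss(preds, label, weights=None):
    """Weighted sum of per-scale losses; preds[0] is at full label resolution."""
    weights = weights or [1.0] * len(preds)  # equal weights (assumption)
    loss = 0.0
    for pred, w in zip(preds, weights):
        factor = len(label) // len(pred)
        target = label if factor == 1 else downsample_label(label, factor)
        loss += w * bce(pred, target)
    return loss
```

Passing only the first one or two entries of `preds` gives the single-scale and two-scale variants that the comparison in Table 4 describes.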

Table 4.

Evaluation of multi-scale loss on CLCD and HRSCD datasets.

These results support the initial design motivation: features extracted at different scales possess unique advantages in capturing change patterns. Specifically, multi-scale features not only provide complementary information but also capture changes at different spatial resolutions and semantic abstraction levels. Therefore, explicitly imposing loss constraints at these intermediate scales effectively guides the model to progressively learn more discriminative and robust multi-scale representations, ultimately improving both the accuracy and stability of the change detection process.

5. Discussion

The experimental results demonstrate that the proposed model significantly outperforms existing methods, including MFPNet and MSCANet, on both the CLCD and HRSCD datasets, fully validating its effectiveness in capturing true farmland changes. Specifically, on the HRSCD dataset, the proposed method achieves an F1-score of 65.48% and an IoU of 48.68%, with both these values exceeding those of the best-performing baseline method MSCANet. On the CLCD dataset, the proposed method further achieves an F1-score of 75.02% and an IoU of 60.02%, highlighting its superior performance. This advantage can be attributed to the effective integration of multi-scale feature extraction and attention mechanisms, which significantly enhance the model’s capacity to characterize complex change regions. In addition, the results of an ablation study further verify the critical contributions of the Multi-Scale Mixer, the Cross-Channel Spatial Encoder, and the Multi-Scale Attention Decoder to the overall performance. Specifically, the introduction of the Multi-Scale Mixer alone leads to a substantial increase in recall, reaching 79.08% and 64.56% on the two datasets, with notable improvements in both F1-score and IoU. These results indicate that the Multi-Scale Mixer plays a vital role in enhancing the representation of multi-scale features and capturing potential change regions. However, this enhancement comes at the cost of reduced precision, which drops to 68.35% and 63.89%, suggesting that, while the Multi-Scale Mixer effectively reduces missed detections, it still struggles to fully suppress false alarms.

To address this issue, the Cross-Channel Spatial Encoder is further introduced, resulting in moderate improvements in precision across both datasets. This finding demonstrates that the Cross-Channel Spatial Encoder effectively enhances the spatial dependency and cross-channel interactions within the features, thereby improving the model’s sensitivity and accuracy in detecting change regions and enabling more comprehensive change capture. Building on this foundation, the addition of the Multi-Scale Attention Decoder achieves a balanced improvement in both precision and recall on the CLCD dataset while ensuring consistent leadership in terms of the F1-score and IoU. Similarly, on the HRSCD dataset, the proposed model maintains superiority in terms of recall, the F1-score, and IoU. This confirms that the Multi-Scale Attention Decoder not only effectively integrates multi-scale contextual information but also enhances the preservation and characterization of fine-grained changes through the attention mechanism. This highlights the critical role of the decoder in multi-scale information aggregation and fine-grained change detection. However, in the complex background of the HRSCD dataset, the intricate foreground–background relationships may lead to minor confusion or redundancy in certain feature representations.

Despite the significant improvements achieved across various evaluation metrics, there is still room for further optimization. The current model architecture could be further enhanced to better accommodate the diverse patterns of farmland changes, particularly when dealing with highly heterogeneous change types. Furthermore, future research could extend the model’s applicability by considering farmland change detection across diverse geographical regions and climatic conditions, thereby improving the model’s robustness and generalization capability. This would provide reliable technical support for large-scale farmland dynamics monitoring and sustainable agricultural management.

6. Conclusions

This paper proposes a Multi-Scale Attention Siamese Network that integrates Convolutional Neural Networks (CNNs) and Transformers, targeting farmland change detection in high-resolution remote sensing imagery. The proposed network incorporates a Multi-Scale Mixer and a Cross-Channel Spatial Encoder, enabling the joint modeling of local details and global spatial dependencies. This design significantly enhances the network’s ability to capture multi-scale representations of complex surface changes. Furthermore, the Multi-Scale Attention Decoder effectively improves the fusion quality and robustness of multi-level features. Experimental results demonstrate that the proposed method outperforms state-of-the-art approaches on both the CLCD and HRSCD datasets, especially in terms of recall, highlighting its superior capability in capturing real change targets, thereby providing strong support for reducing missed detections in farmland change monitoring.

Despite these promising results, the proposed approach still has certain limitations, particularly regarding the interpretability and stability of attention weights. Existing attention mechanisms typically derive weight distributions through feature similarity or weighted aggregation operations, but such distributions often lack clear physical or semantic interpretations. In farmland change detection tasks, the spatial positions, feature channels, and scale preferences reflected by attention weights are not explicitly aligned with the intrinsic laws of farmland change. This results in a “black-box” decision process, which limits transparent evaluations and reliable reasoning in practical applications. Moreover, the attention allocation process is susceptible to factors such as the training data distribution and noise interference, leading to noticeable variations in attention patterns across different samples. This instability further weakens the model’s generalization performance. In addition, the training and detection performance of the current model heavily rely on the quality and distributional balance of annotated data. For example, the model trained on the CLCD dataset achieves significantly better performance than that trained on HRSCD, revealing limited cross-region adaptability, which remains a challenge for future work.

From the application perspective, the core objective of farmland change detection is to develop an efficient and automated monitoring and early warning system for agricultural land use changes, providing scientific evidence and decision-making support for farmland protection, land use supervision, and illegal land occupation monitoring. The proposed MT-SiamNet not only achieves a high pixel-wise recognition accuracy but also lays a solid foundation for the practical deployment and systematic development of change detection technologies in the future. Future research could focus on cross-region adaptation, multi-source information fusion, real-time monitoring and early warning integration, and edge deployment. Specifically, to address variations in farmland landscapes, crop types, and cultivation patterns across regions, future studies could incorporate few-shot transfer learning and self-supervised pretraining techniques to enhance cross-region generalization and reduce dependency on high-quality annotated data. Additionally, by integrating optical and SAR imagery with parcel management data and other heterogeneous information sources, the model’s capacity to analyze change types can be further improved. This could support the development of an intelligent monitoring and information service platform for agricultural regulatory agencies, enabling fully automated change reporting, dynamic classification-based early warning, policy response, and farmer feedback, thus advancing change detection technologies from algorithmic breakthroughs to systematic applications.

To meet the practical needs of township agricultural authorities and grassroots agricultural extension stations, future work could also explore model pruning, knowledge distillation, and lightweight structural design, aiming to reduce computational complexity and develop efficient change detection tools deployable on conventional servers, drones, and other field-level devices, ultimately realizing collaborative edge-cloud farmland monitoring with real-time intelligent processing.

Author Contributions

Conceptualization, J.W. and J.T.; methodology, J.T. and L.Z.; software, J.T. and P.C.; validation, J.W. and L.Z.; formal analysis, J.W. and P.C.; investigation, P.C., J.X. and M.X.; data curation, J.T. and S.L.; writing—original draft preparation, J.T. and J.W.; writing—review and editing, J.X., M.X. and L.Z.; visualization, P.C.; supervision, J.W. and P.C.; project administration, S.L. and P.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the Hubei Province Key Research and Development Special Project of Science and Technology Innovation Plan under grant number 2023BAB087; Wuhan Key Research and Development Projects under grant number 2023010402010614; Wuhan Knowledge Innovation Special Dawn Project under grant number 2023010201020465; open competition project for selecting the best candidates, Wuhan East Lake High-tech Development Zone under grant number 2023KJB204; and Fund for Research Platform of South-Central Minzu University under grant number CZQ24011.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available from [HRSCD] at https://doi.org/10.1016/j.cviu.2019.07.003 and [CLCD] at https://doi.org/10.1109/JSTARS.2022.3177235 (accessed on 5 March 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Guo, A.; Yue, W.; Yang, J.; Xue, B.; Xiao, W.; Li, M.; He, T.; Zhang, M.; Jin, X.; Zhou, Q. Cropland abandonment in China: Patterns, drivers, and implications for food security. J. Clean. Prod. 2023, 418, 138154.

- Tian, Z.; Ji, Y.; Xu, H.; Qiu, H.; Sun, L.; Zhong, H.; Liu, J. The potential contribution of growing rapeseed in winter fallow fields across Yangtze River Basin to energy and food security in China. Resour. Conserv. Recycl. 2021, 164, 105159.

- Ye, S.; Wang, J.; Jiang, J.; Gao, P.; Song, C. Coupling input and output intensity to explore the sustainable agriculture intensification path in mainland China. J. Clean. Prod. 2024, 442, 140827.

- Du, B.; Ye, S.; Gao, P.; Ren, S.; Liu, C.; Song, C. Analyzing spatial patterns and driving factors of cropland change in China’s National Protected Areas for sustainable management. Sci. Total Environ. 2024, 912, 169102.

- Shi, W.; Zhang, M.; Zhang, R.; Chen, S.; Zhan, Z. Change detection based on artificial intelligence: State-of-the-art and challenges. Remote Sens. 2020, 12, 1688.

- Liu, P.; Liu, X.; Liu, M.; Shi, Q.; Yang, J.; Xu, X.; Zhang, Y. Building footprint extraction from high-resolution images via spatial residual inception convolutional neural network. Remote Sens. 2019, 11, 830.

- Jing, Q.; He, J.; Li, Y.; Yang, X.; Peng, Y.; Wang, H.; Yu, F.; Wu, J.; Gong, S.; Che, H.; et al. Analysis of the spatiotemporal changes in global land cover from 2001 to 2020. Sci. Total Environ. 2024, 908, 168354.

- Cheng, G.; Huang, Y.; Li, X.; Lyu, S.; Xu, Z.; Zhao, H.; Zhao, Q.; Xiang, S. Change detection methods for remote sensing in the last decade: A comprehensive review. Remote Sens. 2024, 16, 2355.

- Chen, S.; Shi, W.; Zhou, M.; Zhang, M.; Yu, Y.; Sun, Y.; Guan, L.; Li, S. CDasXORNet: Change detection of buildings from bi-temporal remote sensing images as an XOR problem. Int. J. Appl. Earth Obs. Geoinf. 2024, 130, 103836.

- Pan, Y.; Lin, H.; Zang, Z.; Long, J.; Zhang, M.; Xu, X.; Jiang, W. A new change detection method for wetlands based on Bi-Temporal Semantic Reasoning UNet++ in Dongting Lake, China. Ecol. Indic. 2023, 155, 110997.

- Ok, A.O.; Senaras, C.; Yuksel, B. Automated detection of arbitrarily shaped buildings in complex environments from monocular VHR optical satellite imagery. IEEE Trans. Geosci. Remote Sens. 2012, 51, 1701–1717.

- Johnson, R.D.; Kasischke, E.S. Change vector analysis: A technique for the multispectral monitoring of land cover and condition. Int. J. Remote Sens. 1998, 19, 411–426.

- Nielsen, A.A.; Conradsen, K.; Simpson, J.J. Multivariate alteration detection (MAD) and MAF postprocessing in multispectral, bitemporal image data: New approaches to change detection studies. Remote Sens. Environ. 1998, 64, 1–19.

- Doxani, G.; Karantzalos, K.; Tsakiri-Strati, M. Monitoring urban changes based on scale-space filtering and object-oriented classification. Int. J. Appl. Earth Obs. Geoinf. 2012, 15, 38–48.

- Cheng, G.; Xie, X.; Han, J.; Guo, L.; Xia, G.-S. Remote sensing image scene classification meets deep learning: Challenges, methods, benchmarks, and opportunities. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 3735–3756.

- Thapa, A.; Horanont, T.; Neupane, B.; Aryal, J. Deep learning for remote sensing image scene classification: A review and meta-analysis. Remote Sens. 2023, 15, 4804.

- Adegun, A.A.; Viriri, S.; Tapamo, J.-R. Review of deep learning methods for remote sensing satellite images classification: Experimental survey and comparative analysis. J. Big Data 2023, 10, 93.

- Peng, D.; Zhang, Y.; Guan, H. End-to-end change detection for high resolution satellite images using improved UNet++. Remote Sens. 2019, 11, 1382.

- Venugopal, N. Automatic semantic segmentation with DeepLab dilated learning network for change detection in remote sensing images. Neural Process. Lett. 2020, 51, 2355–2377.

- Ke, Q.; Zhang, P. CS-HSNet: A cross-Siamese change detection network based on hierarchical-split attention. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 9987–10002.

- Yew, A.N.J.; Schraagen, M.; Otte, W.M.; van Diessen, E. Transforming epilepsy research: A systematic review on natural language processing applications. Epilepsia 2023, 64, 292–305.

- Islam, S.; Elmekki, H.; Elsebai, A.; Bentahar, J.; Drawel, N.; Rjoub, G.; Pedrycz, W. A comprehensive survey on applications of transformers for deep learning tasks. Expert Syst. Appl. 2023, 241, 122666.

- Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in vision: A survey. ACM Comput. Surv. (CSUR) 2022, 54, 1–41.

- Jin, Y.; Hou, L.; Chen, Y. A time series transformer based method for the rotating machinery fault diagnosis. Neurocomputing 2022, 494, 379–395.

- Liu, M.; Zhang, C.; Bai, H.; Zhang, R.; Zhao, Y. Cross-part learning for fine-grained image classification. IEEE Trans. Image Process. 2021, 31, 748–758.

- Guo, D.; Terzopoulos, D. A transformer-based network for anisotropic 3D medical image segmentation. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 8857–8861.

- Chen, D.-J.; Hsieh, H.-Y.; Liu, T.-L. Adaptive image transformer for one-shot object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–25 June 2021; pp. 12247–12256.

- Cornia, M.; Stefanini, M.; Baraldi, L.; Cucchiara, R. Meshed-memory transformer for image captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 13–19 June 2020; pp. 10578–10587.

- Zhang, C.; Yue, P.; Tapete, D.; Jiang, L.; Shangguan, B.; Huang, L.; Liu, G. A deeply supervised image fusion network for change detection in high resolution bi-temporal remote sensing images. ISPRS J. Photogramm. Remote Sens. 2020, 166, 183–200.

- Xu, J.; Luo, C.; Chen, X.; Wei, S.; Luo, Y. Remote sensing change detection based on multidirectional adaptive feature fusion and perceptual similarity. Remote Sens. 2021, 13, 3053.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Liu, M.; Shi, Q.; Marinoni, A.; He, D.; Liu, X.; Zhang, L. Super-resolution-based change detection network with stacked attention module for images with different resolutions. IEEE Trans. Geosci. Remote Sens. 2021, 60, 4403718.

- Chen, H.; Shi, Z. A spatial-temporal attention-based method and a new dataset for remote sensing image change detection. Remote Sens. 2020, 12, 1662.

- Daudt, R.C.; Le Saux, B.; Boulch, A.; Gousseau, Y. Urban change detection for multispectral earth observation using convolutional neural networks. In Proceedings of the IGARSS 2018–2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 2115–2118.

- Liu, Y.; Pang, C.; Zhan, Z.; Zhang, X.; Yang, X. Building change detection for remote sensing images using a dual-task constrained deep siamese convolutional network model. IEEE Geosci. Remote Sens. Lett. 2020, 18, 811–815.

- Zhang, M.; Shi, W. A feature difference convolutional neural network-based change detection method. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7232–7246.

- Chen, H.; Qi, Z.; Shi, Z. Remote sensing image change detection with transformers. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5607514.

- Liu, M.; Chai, Z.; Deng, H.; Liu, R. A CNN-transformer network with multiscale context aggregation for fine-grained cropland change detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4297–4306.

- Daudt, R.C.; Le Saux, B.; Boulch, A.; Gousseau, Y. Multitask learning for large-scale semantic change detection. Comput. Vis. Image Underst. 2019, 187, 102783.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).