1. Introduction

Recent advances in large language models (LLMs) have opened new frontiers for assisting with complex engineering design tasks [1]. However, their effective application in highly specialized domains faces two main challenges: the lack of deep, domain-specific knowledge, which limits their accuracy and reliability, and the high computational and energy costs associated with their training and deployment.

The following is a brief comparative analysis of previous work on the use of LLMs in specialized engineering domains. In [2], fine-tuning (FT) of LLaMA 3.1 8B with QLoRA is applied to the technical analysis of hydrogen and renewable energy strategies, focusing on investment decisions and regulatory compliance. The evaluation is based on multiple constraints (cost, efficiency) but does not include differential equation modeling or experimental validation of physical devices. Our work complements this approach by adding quantitative reasoning about coupled (thermal-electrical) phenomena.

In [3], the EnergyGPT model was presented, a LLaMA 3.1 8B model specializing in electricity markets, together with the EnergyBench benchmark for microgrid optimization. Although LoRA and local deployment are used, the model acts as a decision assistant, not as a generator of physical hypotheses. The key difference with our work lies in the capacity for physical synthesis: our LLM proposes redesigns of thermoelectric generators (TEGs), such as thermal diffusers and thermal bridges, based on trade-offs derived from equations of state; it does not merely retrieve information.

While previous literature [2,3] optimizes decisions, our model executes symbolic reasoning, transforming it from an informational assistant to a physical design tool.

Furthermore, although alternative approaches such as retrieval-augmented generation (RAG) [1,4] can be effective when the task is limited to document retrieval, their performance is limited in domains such as TEGs, where the answer requires internal synthesis of equations, thermoelectric dependencies, and design criteria rather than simple access to external information. Therefore, this work adopts a parameter-efficient fine-tuning (PEFT) strategy using QLoRA, which allows the native incorporation of the physical-mathematical reasoning of the domain by modifying only a small fraction of the model parameters, achieving deep specialization without the costs or risks associated with full fine-tuning.

Ref. [3] presents a study on an LLM specialized for the energy sector and trained with FT that combines 4-bit quantization with low-rank (QLoRA) adapters, which allows memory savings.

In the health field, a comparative study of FT versus Retrieval-Augmented Generation (RAG) [5] is presented for different models; in [6], an FT LLM is proposed; and in [7], the advantages and disadvantages of FT in the agricultural field are presented.

This work addresses this gap by proposing a practical and accessible solution: the creation of a domain-specific, specialist AI assistant designed to operate efficiently on local hardware. The domain chosen to validate this hypothesis is thermoelectric generators (TEGs), a field that perfectly encapsulates engineering complexity. Their modeling requires a deep understanding of coupled physical phenomena such as the Seebeck, Peltier, and Joule effects, the formulation of nonlinear differential equation systems, and critical reasoning for design optimization. The goal, therefore, is to develop a tool that can reason, model, and analyze like a specialist engineer, thereby overcoming the limitations of generalist LLMs that often fall short of the required accuracy and technical depth, and going beyond the simple creation of a repository of information.

This study focuses on the domain of TEGs, solid-state devices that convert thermal energy directly into direct current electricity using the Seebeck and Peltier effects [8]. Due to the absence of moving parts, they operate silently, making them ideal for applications in remote locations where thermal energy is the primary available source. However, their modeling and optimization are considerably complex. The performance of TEGs is intrinsically linked to the interrelation of coupled thermal and electrical phenomena, often described by systems of nonlinear partial differential equations [9]. Furthermore, factors such as the geometric configuration decisively influence their maximum power output [10]. To address this domain, this article uses a four-degree-of-freedom lumped-parameter model [11], on which the variants used for LLM training are generated. The fundamental modification to the idea proposed in [11] consists of the specific formulation of the Jacobian for steady-state analysis in order to reduce simulation times.

This work addresses the gap between the potential of LLMs and the demands of this specialized TEG domain, presenting a methodology for developing a specialist LLM based on the four-billion-parameter (4B) JanV1-4B generalist base model [12], designed for application in local environments. It is called a base model because it has not yet been fine-tuned for the TEG domain.

To overcome computational limitations and facilitate its use on consumer hardware, parameter-efficient fine-tuning (PEFT) techniques are employed [13,14]. These methods have demonstrated performance comparable to full fine-tuning (FT) by training only a minimal fraction of the parameters (<1%). In particular, this work implements the quantized low-rank adaptation (QLoRA) technique [15], an evolution of LoRA [16] that maximizes memory efficiency and makes FT accessible on consumer hardware. This approach not only validates the creation of an expert model in a highly complex field but also demonstrates the feasibility of democratizing access to advanced AI tools by eliminating dependence on large-scale computing infrastructures. The core of our methodology is based on two fundamental pillars.

First, the development of a custom-designed training dataset that combines deep domain knowledge (including physical principles, fundamental equations, and terminology) with a training dataset of instructions. This latter component is crucial for refining the model’s behavior, ensuring it follows complex guidelines, and, fundamentally, mitigating catastrophic forgetting [17] of its general knowledge. The primacy of quality over quantity in the training dataset is a guiding principle in this work and a thesis empirically demonstrated in foundational studies such as LIMA (Less Is More for Alignment) [18], which validate the use of small but high-quality datasets to achieve exceptional performance.

Second, the implementation of a rigorous multilevel assessment framework. This framework is designed to measure a spectrum of cognitive abilities, from retrieving fundamental knowledge and applying mathematical models to qualitative design reasoning and critical analysis of numerical data. This article not only presents the development of the specialist LLMs but also provides a comprehensive validation of their performance, detailing their strengths and areas for improvement. The network was trained on a well-curated dataset of concepts obtained from references in the TEG field [19,20,21,22,23].

This work makes four fundamental contributions that do not appear in the previously analyzed state of the art.

First, a comprehensive and reproducible methodology is presented, from data curation to local deployment, to transform two general-purpose LLMs, JanV1-4B [12] and Qwen3-4B-Thinking-2507 [24], into specialist assistants for the highly specialized engineering domain of TEGs.

Second, a strategic design is proposed for training a new dataset that balances the injection of deep knowledge—the “what”—with the shaping of behavior and response ability—the “how”—which is key to mitigating catastrophic forgetting and achieving robust performance.

Third, a new rigorous multi-level assessment framework is introduced that measures advanced cognitive abilities, such as critical reasoning and self-correction, going beyond traditional metrics.

And fourth, it is empirically demonstrated that it is feasible to achieve this high level of specialization using local hardware, validating the QLoRA approach as an effective way to democratize the development of specialist AI in TEG. In addition, the model’s utility is demonstrated through experimental TEG design, providing expert-level reasoning on thermal management strategies.

This document is structured as follows:

Section 2 presents the lumped-parameter mathematical model of the TEG, which serves as the knowledge base and reference for the evaluation.

Section 3 explains the FT methodology.

Section 4 describes the composition of the FT dataset.

Section 5 presents and discusses the results obtained.

Finally, Section 6 offers the main conclusions regarding the LLMs specializing in the field of TEG engineering that have been developed in this work.

2. Mathematical Model of the TEG

This section details the lumped-parameter mathematical model that describes the behavior of a TEG. This model fulfills two fundamental functions in this work: first, it serves as the basis for the synthetic generation of the dataset used to fine-tune the LLM; and second, it constitutes the reference or ground truth for the quantitative validation of the responses generated by the expert model to questions related to its equations.

The fundamental assumptions of the lumped parameter model were explicitly established to ensure its validity and reproducibility. Heat flow is considered one-dimensional, which is justified by the flat and homogeneous geometry of the Peltier cells, although this simplification ignores edge effects in peripheral areas. Furthermore, material properties are assumed to be constant within the operating temperature range (0–90 °C). On the other hand, the Thomson effect is neglected since the temperature gradients between faces are relatively small, as argued by Feng et al. [20]. These assumptions clearly define the application domain of the model, allowing its reliable use in low-to-medium-power thermoelectric generation scenarios while acknowledging its limitations under extreme conditions where nonlinearities become dominant.

The operating principle of a TEG is based on the application of heat flow from a high-temperature source to a lower-temperature sink. This flow induces a temperature difference between the hot and cold faces of the device, which, due to the Seebeck effect, generates a direct current voltage. The objective of the model is, therefore, to establish a system of equations that allows calculation of the temperatures on the module’s faces in order to determine key performance metrics, such as the electrical power supplied to an external load.

2.1. Definition of Parameters and Variables

Figure 1 presents a simplified scheme of a TEG, showing its essential elements: heat source, heat sink, n-type and p-type semiconductors, structural heat-conducting ceramics, and the electrical load.

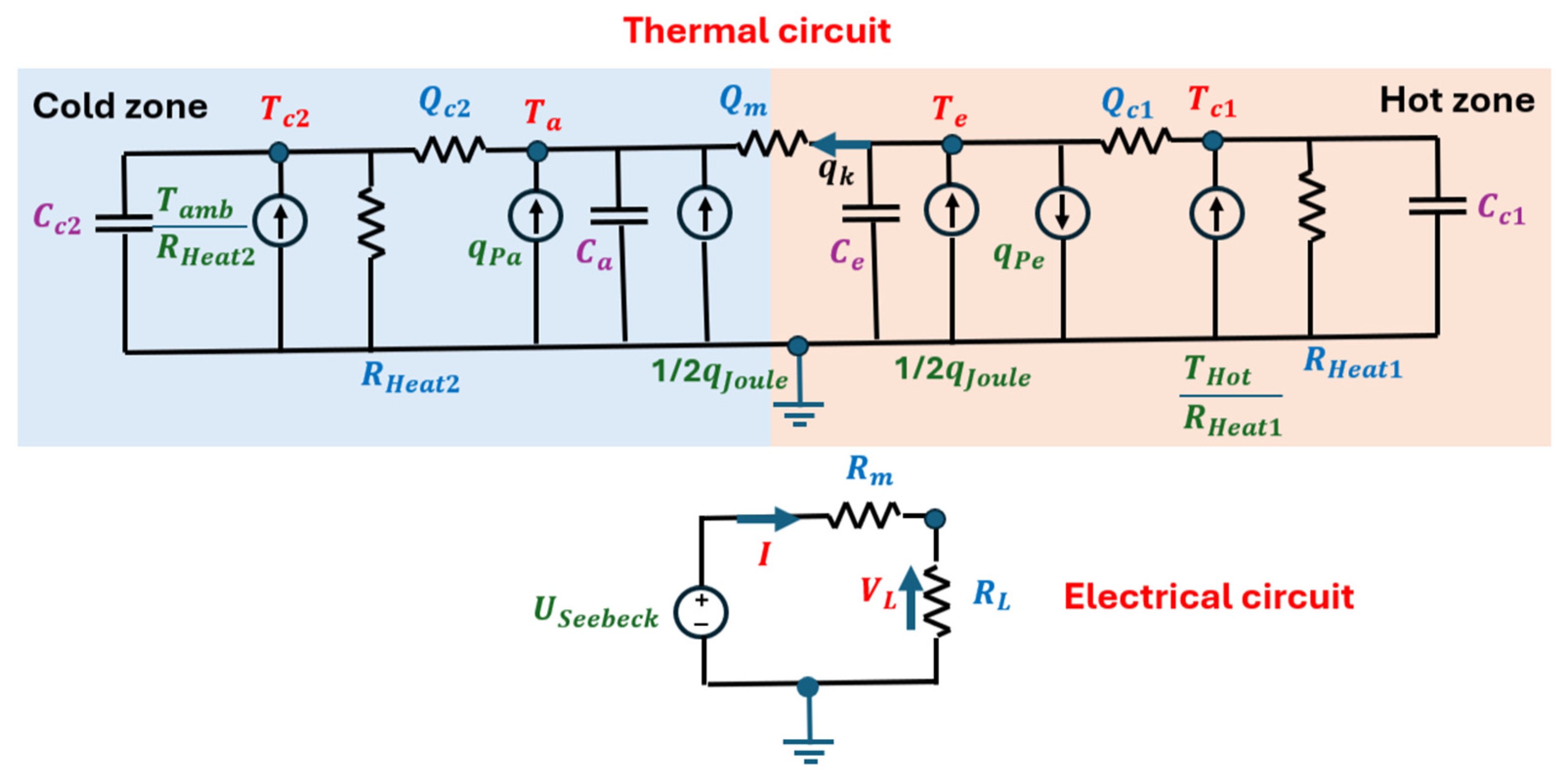

To construct this system, a thermal-electrical analogy is used, whose equivalent circuit is shown in Figure 2. Under this analogy, the heat flow [W] is modeled as if it were an electric current, and the temperature [K] is represented as if it were an electric potential, taking absolute zero as the ground reference node.
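As a minimal illustration of this analogy, the sketch below applies the thermal counterpart of Ohm's law to two thermal resistances in series. The function names and all numerical values are hypothetical and are not the parameters of Table 1.

```python
def heat_flow(t_hot, t_cold, r_thermal):
    """Thermal Ohm's law: heat flow [W] = temperature drop [K] / resistance [K/W]."""
    return (t_hot - t_cold) / r_thermal


def series_node_temperature(t_hot, t_cold, r_hot_side, r_cold_side):
    """Temperature of the node between two series resistances (a 'voltage divider')."""
    q = heat_flow(t_hot, t_cold, r_hot_side + r_cold_side)
    return t_hot - q * r_hot_side


# Hypothetical values chosen only to illustrate the analogy.
T_HOT = 360.0        # K, plays the role of the source potential
T_COLD = 300.0       # K, plays the role of the sink potential
R_HOT_SIDE = 0.5     # K/W
R_COLD_SIDE = 1.5    # K/W

q_total = heat_flow(T_HOT, T_COLD, R_HOT_SIDE + R_COLD_SIDE)
t_middle = series_node_temperature(T_HOT, T_COLD, R_HOT_SIDE, R_COLD_SIDE)
print(q_total, t_middle)
```

The same bookkeeping, extended with thermal capacitances and thermoelectric source terms, is what leads to the node balances of the lumped-parameter model.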

The physical magnitudes and properties used in the lumped parameter model shown in Figure 2 are listed in Table 1.

2.2. Transient Regime Analysis

The circuit shown in Figure 2 is a proper nonlinear circuit. Its complexity order is four. The system order is four because it has four independent energy storage elements (thermal capacitances

,

,

,

), resulting in four state variables [

,

,

,

] per state-space theory. The four state variables are represented in the following vector,

The input vector

is composed of the external temperature sources expressed by the following equation:

The equations are presented as the energy balance at the four nodes of the thermal circuit in Figure 2, as shown in the following equation:

The

voltage used to calculate the current

of the electrical circuit is expressed according to the following equation:

The balance at node

, inner hot face, including the energy accumulation term, is given by the following equation:

By solving for the derivative and substituting

, we obtain the first equation of state:

Similarly, the balance at node

, internal cold face, is:

By solving for the derivative of Equation (7) and substituting

expressed in Equation (4), we obtain the second equation of state:

The balance at node

, external hot ceramic, depends on the inlet heat source

, as can be seen in the following equation:

By solving for the derivative, we obtain the third equation of state:

The balance at node

, external cold ceramic, depends on the ambient temperature

, as can be seen in the following equation:

And finally, by solving for the derivative, we obtain the fourth equation of state:

The complete system of nonlinear differential equations of the transient thermal and electrical circuit that describe the dynamics of the TEG is expressed by Equations (13)–(16):

This system has the form

and is ready to be solved numerically using an ordinary differential equation (ODE) integrator [24], to simulate the transient behavior of the system under changes in

or

.
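To illustrate how a state-space system of this kind can be integrated numerically, the following sketch applies a fixed-step fourth-order Runge–Kutta scheme to a toy linear network with two thermal capacitances. It is not the four-state TEG model of this section, the integrator is not necessarily the one used by the authors, and every parameter value and function name is a hypothetical choice made for the example.

```python
# Hypothetical lumped parameters (not the values of Table 1).
R_SOURCE = 0.5   # K/W, heat source to node 1
R_LINK = 1.0     # K/W, node 1 to node 2
R_SINK = 0.5     # K/W, node 2 to ambient
C_1 = 10.0       # J/K, thermal capacitance of node 1
C_2 = 10.0       # J/K, thermal capacitance of node 2


def toy_thermal_network(t, x, u):
    """Right-hand side dx/dt = f(x, u) of a two-node thermal RC chain."""
    t1, t2 = x
    t_source, t_ambient = u
    dt1 = ((t_source - t1) / R_SOURCE - (t1 - t2) / R_LINK) / C_1
    dt2 = ((t1 - t2) / R_LINK - (t2 - t_ambient) / R_SINK) / C_2
    return [dt1, dt2]


def rk4_step(f, t, x, u, dt):
    """Advance the state by one classical Runge-Kutta (RK4) step."""
    def shifted(state, slope, factor):
        return [s + factor * k for s, k in zip(state, slope)]

    k1 = f(t, x, u)
    k2 = f(t + dt / 2, shifted(x, k1, dt / 2), u)
    k3 = f(t + dt / 2, shifted(x, k2, dt / 2), u)
    k4 = f(t + dt, shifted(x, k3, dt), u)
    return [
        xi + dt / 6 * (a + 2 * b + 2 * c + d)
        for xi, a, b, c, d in zip(x, k1, k2, k3, k4)
    ]


def simulate(f, x0, u, dt, n_steps):
    """Integrate from x0 with constant inputs u and return the final state."""
    x = list(x0)
    for step in range(n_steps):
        x = rk4_step(f, step * dt, x, u, dt)
    return x


# Both nodes start at ambient; the source steps to 360 K at t = 0.
final_state = simulate(toy_thermal_network, [300.0, 300.0], (360.0, 300.0), 0.1, 1000)
print(final_state)
```

After 100 s of simulated time the toy network has settled to the temperatures given by the resistive divider, which offers a quick sanity check on the integrator before applying it to a nonlinear model.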

2.3. Stationary Regime Analysis

The steady-state analysis is carried out in two steps: establishing the balance equations, and then developing the Jacobian and the right-hand side (second member) of the system of equations.

2.3.1. Energy Balance Equations

In steady state, the time derivatives are zero, so Equations (13)–(16) simplify considerably. It should be noted that in this case the resulting equations remain nonlinear.

To solve this nonlinear system using the Newton–Raphson method, the linear system to be solved in each iteration

is set up as shown in the following equation:

where

is:

To obtain greater numerical robustness by seeking diagonal dominance, the system of equations is defined by Equations (19)–(22).

Function 1, balance at

:

Function 2, balance at

:

Function 3, balance at

:

Function 4, balance at

:

2.3.2. Solving Nonlinear Equations

To solve the system of nonlinear equations in steady state using the Newton–Raphson method, it is necessary to calculate the Jacobian matrix and the second member vector of Equation (17). The state vector is .

The functions of the system are expressed by Equations (19)–(22).

The Jacobian matrix

is defined as the matrix of first-order partial derivatives, where

takes the form:

The elements of the matrix are given by Equations (24)–(39):

The Newton–Raphson iterative system is , which is expressed by Equation (17).
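The structure of a Newton–Raphson iteration can be sketched on a small example. The two-equation system below is a hypothetical stand-in, not Equations (19)–(22), and the update is written in the common form J(x_k) Δx = −F(x_k), which is an assumption about the sign convention rather than a reproduction of Equation (17).

```python
def residual(x, y):
    """Toy nonlinear system F(x, y) = 0 with a root at (1, 2)."""
    return (x * x + y - 3.0, x + y * y - 5.0)


def jacobian(x, y):
    """Matrix of first-order partial derivatives of the toy system."""
    return ((2.0 * x, 1.0), (1.0, 2.0 * y))


def solve_2x2(matrix, rhs):
    """Solve a 2x2 linear system with Cramer's rule."""
    (a, b), (c, d) = matrix
    r1, r2 = rhs
    det = a * d - b * c
    if det == 0.0:
        raise ZeroDivisionError("singular Jacobian")
    return ((r1 * d - b * r2) / det, (a * r2 - c * r1) / det)


def newton_raphson(x, y, tol=1e-12, max_iter=50):
    """Iterate x_{k+1} = x_k + dx, where J(x_k) dx = -F(x_k)."""
    for _ in range(max_iter):
        f1, f2 = residual(x, y)
        if max(abs(f1), abs(f2)) < tol:
            return x, y
        dx, dy = solve_2x2(jacobian(x, y), (-f1, -f2))
        x, y = x + dx, y + dy
    raise RuntimeError("Newton-Raphson did not converge")


x_sol, y_sol = newton_raphson(1.5, 1.5)
print(x_sol, y_sol)
```

For the four-equation steady-state problem, the 2x2 solver would be replaced by a general linear solver, and an analytical Jacobian avoids the cost of finite-difference approximations at each iteration.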

The vector of the second member is calculated as:

The terms

and

are as follows:

And the terms and take the following form

For

, the calculation of

results in:

and likewise, for

we obtain:

Grouping all the components, we obtain the following expression which gives us the second member of the system of equations in steady state:

3. FT Methodology

The FT process was run on a Linux platform with an NVIDIA GeForce RTX 2070 SUPER GPU. All training and inference times reported in later tables were measured on this setup. To optimize memory usage and accelerate training, the open-source Unsloth library [25] was used, applying its optimizations to the base model JanV1-4B [12]. According to its developers, this model is an FT of Qwen3-4B-Thinking, an architecture belonging to the Qwen2 model family [26]. Training on the 202 question-and-answer (QA) pairs found in this work’s repository [27] over three epochs was highly efficient, completing in just 263 s. Each data sample was structured using a chat template that included a system prompt, training the model to behave like an expert in thermoelectric materials and to proactively clarify ambiguous concepts, such as the definition of the power coefficient.
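A single training sample in chat format might be organized as in the sketch below. The role-based "messages" layout is a widely used convention and is assumed here; the actual template and system prompt are those of the repository [27] and are not reproduced, so the prompt text, the helper name, and the example QA are all hypothetical.

```python
import json

# Hypothetical system prompt; the real wording lives in the authors' repository.
SYSTEM_PROMPT = (
    "You are an expert in thermoelectric generators (TEGs). "
    "If a term in the question is ambiguous, clarify it before answering."
)


def to_chat_sample(question, answer, system_prompt=SYSTEM_PROMPT):
    """Wrap one QA pair as a system/user/assistant conversation."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


sample = to_chat_sample(
    "What is the Seebeck effect?",
    "The Seebeck effect is the generation of a voltage across a material "
    "that is subjected to a temperature difference.",
)
print(json.dumps(sample, indent=2))
```

Repeating the same system prompt in every sample is one way to tie the expert persona to the training data, so that the same prompt can be reused at inference time.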

Monitoring training loss across the three epochs confirmed the effectiveness of the FT methodology. Starting with an initial loss of 2.38, the model showed the greatest learning gain during the second epoch, where the loss decreased by 13.6%. This process continued steadily, with an additional 7.4% reduction in the third epoch, culminating in a final loss value of 1.91. This reduction represents a total decrease of 19.9% and indicates robust and progressive learning. Furthermore, the gradient norm remained controlled throughout the process, confirming the stability of the convergence and the suitability of the selected hyperparameters. This indicates that the model successfully assimilated the new data.

Using the LoRA technique, 132,120,576 parameters were tuned, representing only 3.18% of the total architecture (4.15 × 10⁹ parameters). The model was loaded in a 4-bit format to drastically minimize its memory footprint. A maximum context window of 2048 tokens was configured, striking a balance between the ability to process complex information and computational limitations. A conservative learning rate of 2 × 10⁻⁶ was applied, with a linear decline throughout training, to gradually integrate new knowledge without compromising the model’s existing capabilities.
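The trainable fraction quoted above can be checked directly, and a linear decay of the learning rate can be sketched in a few lines. The parameter counts and base learning rate are taken from the text; the total number of optimizer steps is not stated, so the value used below is a placeholder, and the absence of a warm-up phase is likewise an assumption.

```python
TRAINABLE_PARAMS = 132_120_576   # LoRA parameters reported in the text
TOTAL_PARAMS = 4.15e9            # total parameters reported in the text
BASE_LR = 2e-6                   # initial learning rate reported in the text


def trainable_percentage(trainable, total):
    """Share of parameters that receive gradient updates, in percent."""
    return 100.0 * trainable / total


def linear_decay_lr(step, total_steps, base_lr=BASE_LR):
    """Learning rate that falls linearly from base_lr to zero (no warm-up assumed)."""
    return base_lr * max(0.0, 1.0 - step / total_steps)


pct = trainable_percentage(TRAINABLE_PARAMS, TOTAL_PARAMS)
print(f"trainable share: {pct:.2f}%")

HYPOTHETICAL_TOTAL_STEPS = 100   # placeholder; depends on batch size and epochs
for step in (0, 50, 100):
    print(step, linear_decay_lr(step, HYPOTHETICAL_TOTAL_STEPS))
```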

The workflow concluded with the merging of the adapters and subsequent conversion to the Georgi Gerganov Universal Format (GGUF) [28], leaving it ready for efficient inference in local environments.

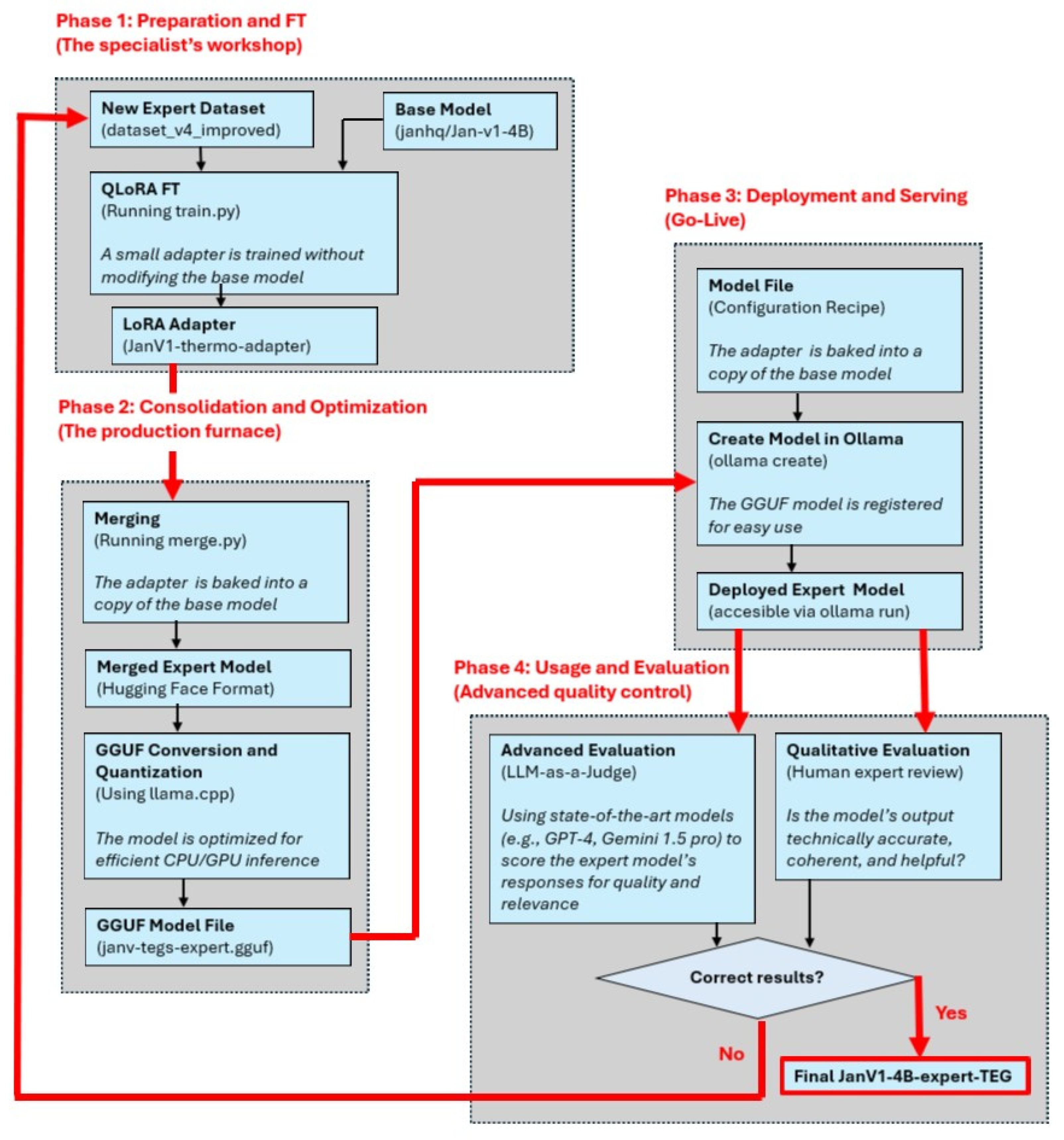

Figure 3 summarizes the complete cycle for specializing the general-purpose JanV1-4B LLM into a TEG domain expert, using an efficient and reproducible workflow. The resulting model is called JanV1-4B-expert-TEG. The process begins with the base JanV1-4B model, culminates in its specialization in the field of thermoelectricity, and is divided into four key phases.

Phase 1: Preparation and FT (the TEG specialist’s workshop).

The starting point consists of two essential components: a pre-trained JanV1-4B base model and a curated expert dataset with domain-specific knowledge, in this case 202 QA on TEG [27].

Instead of retraining the entire model, which is computationally prohibitive, we applied the QLoRA technique. A Python 3.10 script called train.py [27] was written, which freezes the base 4B model and trains only a small set of new weights, called the LoRA adapter. This adapter, representing only a tiny fraction of the total model size (3.18%), learns the new skills and knowledge of the dataset. The result of this phase is not a new model, but rather this lightweight and portable adapter.
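The reason an adapter is so much smaller than the layer it modifies follows from the low-rank factorization used by LoRA: the update to a frozen weight matrix of shape d_out × d_in is expressed as the product of a d_out × r matrix and an r × d_in matrix. The sketch below counts parameters for a single layer; the layer dimensions and rank are hypothetical and are not the configuration used in train.py.

```python
def full_layer_params(d_out, d_in):
    """Parameters in a dense weight matrix W of shape (d_out, d_in)."""
    return d_out * d_in


def lora_adapter_params(d_out, d_in, rank):
    """Parameters in the low-rank pair B (d_out x r) and A (r x d_in)."""
    return rank * (d_out + d_in)


# Hypothetical layer size and rank, chosen only for illustration.
D_OUT = 4096
D_IN = 4096
RANK = 16

full = full_layer_params(D_OUT, D_IN)
adapter = lora_adapter_params(D_OUT, D_IN, RANK)
print(f"dense layer: {full} parameters")
print(f"LoRA adapter: {adapter} parameters ({100.0 * adapter / full:.2f}% of the layer)")
```

Because the adapter size grows linearly with the rank, the rank acts as the main knob trading adapter capacity against memory and training cost.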

Phase 2: Consolidation and Optimization.

Once we have created a new adapter, we need to integrate it to create a standalone efficient model. This process has two steps:

Merging: A script, merge.py [27], was created to combine the weights of the original JanV1-4B base model with those of the LoRA adapter. The result is a complete merged expert model in the Hugging Face standard format [29]. This yields a single model containing both the general knowledge and the new specialization.

GGUF Conversion and Quantization: To make the model practical and fast for inference in real-world use, we converted it to GGUF using the tools from the open-source project llama.cpp [28]. During this step, 4-bit quantization was also applied, a process that drastically reduces file size and RAM usage with minimal loss of precision. The result is a single file with the .gguf extension, optimized for efficient execution on both CPUs and GPUs.
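A rough estimate shows why 4-bit quantization matters on consumer hardware. The sketch below computes the storage needed for the weights alone at 16 and 4 bits per parameter, using the total parameter count reported earlier. It deliberately ignores quantization scale factors, tensors kept at higher precision, and file metadata, so real GGUF files are expected to be somewhat larger than this lower bound.

```python
TOTAL_PARAMS = 4.15e9   # total parameters reported in the text


def weight_storage_gb(n_params, bits_per_weight):
    """Idealized weight storage in gigabytes (10^9 bytes), ignoring overheads."""
    return n_params * bits_per_weight / 8 / 1e9


fp16_gb = weight_storage_gb(TOTAL_PARAMS, 16)
q4_gb = weight_storage_gb(TOTAL_PARAMS, 4)
print(f"16-bit weights: about {fp16_gb:.1f} GB")
print(f" 4-bit weights: about {q4_gb:.1f} GB")
print(f"reduction factor: {fp16_gb / q4_gb:.0f}x")
```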

Phase 3: Deployment and Serving (Go-Live).

With the optimized model in GGUF, the next step is to make it accessible. For deploying and running the LLM in a local environment, the open-source framework Ollama [30] was used, which simplifies LLM management and inference on consumer hardware. Using a file called a modelfile [27], which acts as a configuration recipe, we tell Ollama where to find the GGUF file and how the model should behave, for example by providing its system prompt.

The ollama create command packages the result of this phase and registers the model on the local system. From this point on, the expert model is deployed and ready to be invoked with the ollama run command followed by the expert model name, JanV1-4B-expert-TEG.
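The content of such a modelfile can be sketched as a short text built from three Ollama instructions: FROM (path to the GGUF file), PARAMETER (sampling settings), and SYSTEM (system prompt). The file path, temperature, and prompt text below are hypothetical; the actual modelfile is the one in the repository [27].

```python
def build_modelfile(gguf_path, system_prompt, temperature=0.2):
    """Return the text of a minimal Ollama Modelfile."""
    return (
        f"FROM {gguf_path}\n"
        f"PARAMETER temperature {temperature}\n"
        f'SYSTEM """{system_prompt}"""\n'
    )


# Hypothetical path and prompt, used only to show the layout.
modelfile_text = build_modelfile(
    "./janv1-4b-expert-teg.gguf",
    "You are an expert in thermoelectric generators (TEGs).",
)
print(modelfile_text)
```

Saved to a file named Modelfile, this text could then be registered with a command of the form ollama create JanV1-4B-expert-TEG -f Modelfile.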

Phase 4: Usage and Evaluation (Advanced quality control).

Next, it is crucial to verify the performance of the new JanV1-4B-expert-TEG model through a qualitative evaluation and an advanced evaluation.

Qualitative evaluation: This involves interacting directly with the model, just as a human expert would. We ask complex questions and evaluate the coherence, technical accuracy, and style of its responses. It is a subjective but fundamental test.

Advanced evaluation: To ensure the highest quality of the expert model, a rigorous dual evaluation process is implemented that surpasses traditional metrics. First, a qualitative evaluation is performed, where human subject matter experts review the model’s responses to validate their technical accuracy, consistency, and practical utility in real-world scenarios. Next, a cutting-edge technique known as LLM-as-a-Judge [31,32] is applied. In this step, state-of-the-art language models GPT-4 [31] and Gemini 1.5 Pro [32] are used to act as impartial evaluators, scoring the expert model’s responses based on their quality, relevance, and correctness. This combined approach provides a much deeper and more nuanced assessment than traditional automated metrics [33], as it is able to analyze the reasoning and semantic quality of the responses, not just word matching.

If, at the end of phase 4 in Figure 3, the analysis result is not acceptable, the dataset can be expanded by returning to phase 1. In this way, the results of this evaluation phase feed into a continuous improvement cycle, providing input on how to refine the dataset or how to adjust the hyperparameters for the next FT iteration, if necessary. In our case, the dataset was improved over 12 iterations.

The FT methodology described for the LLM JanV1-4B-expert-TEG and represented in the diagram of Figure 3 was also applied to obtain the LLM Qwen3-4B-thinking-2507-TEG.

These two base LLMs were selected for FT because they had the best scores in the published generalist benchmarks, as will be justified later in Section 5.2 (TEG FT models vs. generalist LLMs).

4. Dataset Definition

To construct the training dataset for the FT LLM, information on the progress and applications of TEGs [34] was used, among other sources. Concepts and laws related to TEGs were classified, and reviews of the current state of TEG technology were taken into account [8,19,35]. Additionally, a QA dataset related to the model developed in Section 2 was created.

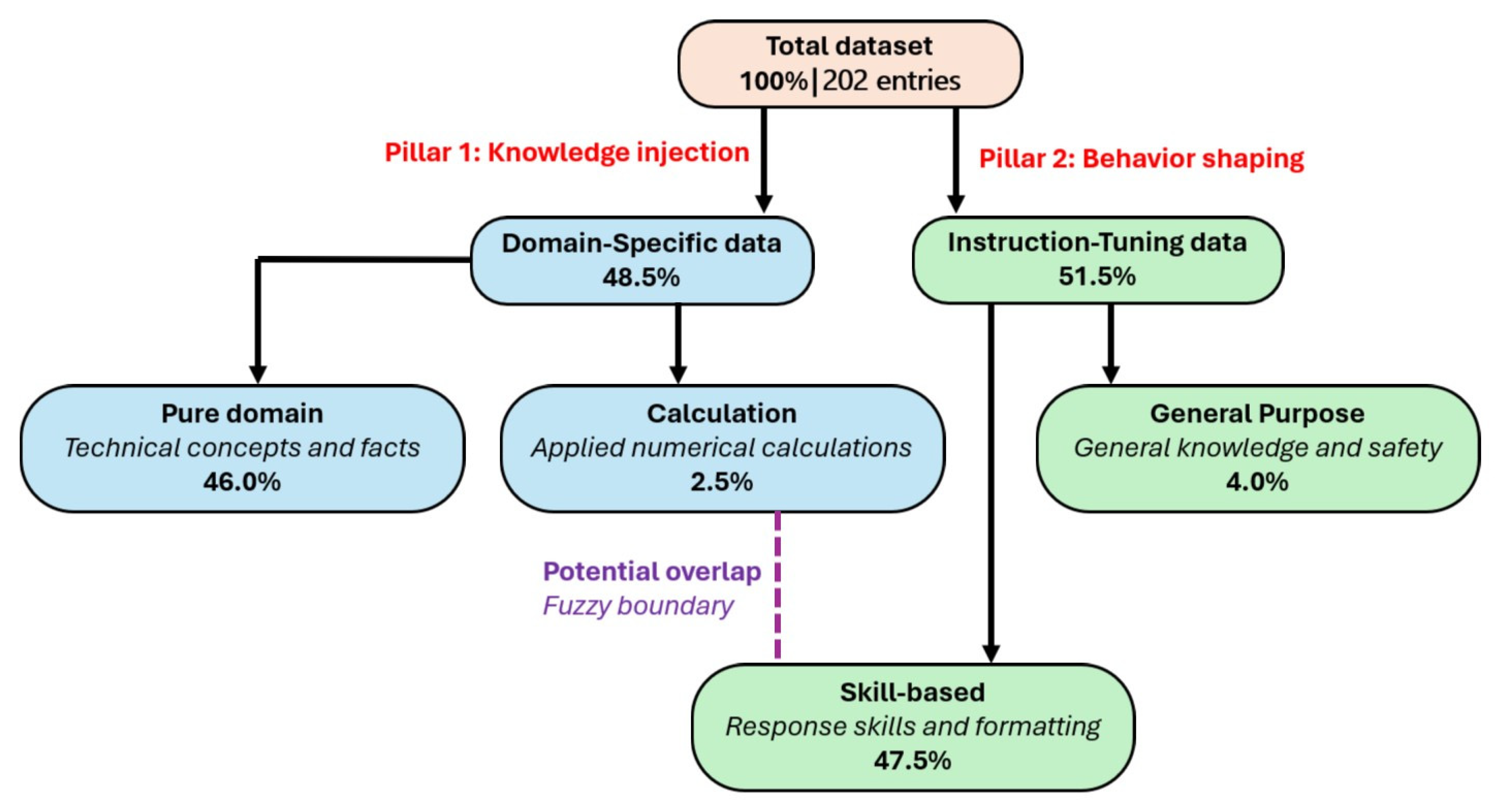

The criteria for choosing the content of the dataset are summarized in the flowchart of Figure 4, which is explained below.

This diagram visualizes the composition of the dataset used for fine-tuning the model and classifies the QA elements into subsets. The objective of this dataset is not only to teach the model new information but also to shape its behavior. The diagram shows a strategic division into two main branches: knowledge injection and behavior shaping.

Within the knowledge injection branch, there is a subset called Domain-Specific data that comprises 48.5% of the dataset. Its purpose is to make the model an expert in a specific field—in this case, thermoelectricity. It is subdivided into:

- (a) Pure Domain (46.0%): The largest portion of the domain-specific data focuses on pure factual knowledge, theoretical concepts, and terminology. This is the knowledge from scientific articles.

- (b) Calculation (2.5%): A small but critical part dedicated to teaching the model how to apply mathematical formulas from the domain to solve practical problems.

Within the behavior-shaping branch, there is a subset called Instruction-Tuning data that comprises 51.5% of the dataset. This data is not about teaching what to say, but how to say it. It shapes the model’s behavior, response style, and safety. It is divided into:

- (a) Skill-based (47.5%): A very significant portion, now the largest in the dataset, is dedicated to teaching the model how to structure complex responses (such as those involving equations) consistently and clearly, regardless of the specific instruction. This improves the quality and usability of the model’s responses.

- (b) General Purpose (4.0%): This acts as a safeguard against catastrophic forgetting and overspecialization. It includes general knowledge and safety guidelines to ensure the model remains versatile and does not lose its core competencies after being tailored to such a specific topic.

Logically, some categories can be fuzzy, belonging to different subsets depending on the case. This is a common challenge in data classification. Although the diagram in Figure 4 uses a closed classification implying a single main category, the reality is often more complex.

For example, a dataset entry classified as an applied numerical calculation could also have been classified as a response skill, such as presenting the calculation and its result.

To represent this overlap in the flowchart, a non-directional dashed line has been added between the Calculation and Skill-based nodes. This visually indicates a strong conceptual link and potential overlap between these two subsets, even though they are formally separated in the dataset classification.

The composition and strategy of the 202 QA dataset used in the FT process are detailed in Table 2. This dataset was meticulously designed to address not only the “what” (knowledge) but also the “how” (responsiveness), a crucial aspect for developing an expert and reliable LLM.

As a strategy for cleaning, controlling overlap, and ensuring dataset integrity, each QA was classified according to its primary intent. All 202 QA pairs were manually reviewed to eliminate duplicates, including conceptually equivalent questions and answers. Ambiguous entries were classified by the same criterion, ensuring that they were assigned consistently to either the Calculation or the Skill-based subset.

The generation of the dataset is based on two fundamental pillars that coincide with the branches mentioned above: knowledge injection of the TEG domain and behavior shaping of the dataset.

Pillar 1: Knowledge injection of the TEG domain.

This pillar introduces 98 QA (93 pure-domain QA and 5 calculation QA), representing 48.5% of the total QA. The main objective of this section is to build a solid and comprehensive knowledge base in the field of thermoelectricity.

This pillar forms the theoretical basis of the model. It covers fundamental definitions such as the Seebeck effect and the figure of merit ZT [36], properties of key materials used in TEGs (PbTe, SnSe, skutterudites) [37,38,39], various applications (sensors, automotive, radioisotope thermoelectric generators, etc.), and essential physical principles such as the Wiedemann–Franz Law [40]. The extensive QA in this section ensures that the model possesses the vocabulary and conceptual framework of an expert.

The applied calculations are presented through 5 QA. Although numerically small, this subset is functionally critical. It teaches the model to perform direct calculations, such as determining thermal conductance or internal resistance from geometric and material parameters, validating its ability to apply formulas.

Pillar 2: Behavior shaping of the dataset.

This pillar introduces 104 QA, representing 51.5% of the total QA. This pillar, the largest in the dataset, focuses on teaching the model to act like an engineer, structuring responses, formulating models, and recognizing the limits of its knowledge.

The skills and format are developed through 96 QAs. This is the core of the Instruction-Tuning data. The four-degree-of-freedom lumped-parameter mathematical models developed in Section 2 are included, along with dozens of variations from the Instruction-Tuning data (formulate, derive, analyze, give me the equations, translate this netlist, etc.). This repetition with variation technique is fundamental for the model to learn to recognize the underlying intent of a question, regardless of how it is phrased, and to always respond with a consistent and well-formatted structure (bold headings, lists, LaTeX formatting for equations, etc.). It is direct training for robustness and reliability.

General knowledge and safety are addressed through 8 QA. These entries act as guardrails. Including general knowledge questions (such as “Who painted the Mona Lisa?”) helps mitigate catastrophic forgetting, preventing the model from overspecializing to the point of losing its general capabilities. Safety examples are also included to teach the model to identify and reject questions that make no sense within the domain (such as “calculate the efficiency of a potato”), which is an essential skill for a reliable AI assistant.

Of these 104 QA, 20 were established to clarify ambiguous concepts, specifically differentiating the thermoelectric power coefficient (a concept specific to thermoelectric generators) from the power factor of alternating current circuits (a general electrical concept). This deliberate repetition of the same question posed in different ways instills in the LLM a conceptual distinction that is often a source of confusion, teaching the model to be precise and to actively correct common misunderstandings.
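The subset sizes and percentages quoted in this section can be cross-checked with a short calculation. The counts below are those given in the text, taking 93 as the Pure Domain count (which matches its 46.0% share); the grouping into two branches follows Figure 4, and the variable names are illustrative.

```python
# QA counts per subset, as reported in this section.
SUBSET_COUNTS = {
    "Pure Domain": 93,
    "Calculation": 5,
    "Skill-based": 96,
    "General Purpose": 8,
}

# Grouping of subsets into the two branches of the dataset.
BRANCHES = {
    "Knowledge injection": ("Pure Domain", "Calculation"),
    "Behavior shaping": ("Skill-based", "General Purpose"),
}


def percentage(count, total):
    """Share of the dataset in percent, rounded to one decimal place."""
    return round(100.0 * count / total, 1)


total_qa = sum(SUBSET_COUNTS.values())
subset_pct = {name: percentage(n, total_qa) for name, n in SUBSET_COUNTS.items()}
branch_counts = {
    branch: sum(SUBSET_COUNTS[name] for name in members)
    for branch, members in BRANCHES.items()
}
branch_pct = {branch: percentage(n, total_qa) for branch, n in branch_counts.items()}

print(total_qa)
print(subset_pct)
print(branch_counts)
print(branch_pct)
```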

Furthermore, the dataset is designed to be a living resource that can be continuously expanded and improved. Areas such as numerical calculations and complex design reasoning can be easily extended, for example by adding problems that require the model to deduce properties from experimental results or to propose TEG designs for specific scenarios. For instance, the specialized LLM could be tasked with designing a TEG for an industrial furnace at 800 K, justifying the choice of materials.

In conclusion, this dataset of 202 QA is a robust and strategically balanced dataset. Its dual approach, combining a deep knowledge base with rigorous training in response format and structure, is key to achieving an expert model not only in terminology but also in the mathematical modeling of the TEGs.

The guiding principle of this work is the primacy of quality over quantity in the training dataset. This is not only a methodological choice but also a thesis validated in the FT literature of LLMs. Foundational studies such as LIMA (Less Is More for Alignment) [18] have empirically demonstrated that, for instruction-tuning, a small but highly curated dataset with maximum instructional coherence is significantly more effective at aligning the model’s behavior and reasoning skills than a massive dataset containing noise or redundancy, mitigating hallucinations and catastrophic forgetting. Therefore, the size of 202 QA pairs was intentionally selected to maximize information density and instructional coherence of the TEG domain, ensuring efficient, high-fidelity specialization without incurring the high computational costs and overfitting risks associated with an unnecessary volume.

5. Results and Discussion

This section examines the inference of the two refined models developed in this work: JanV1-4B-expert-TEG and Qwen3-4B-thinking-2507-TEG. It also includes a comparative analysis of these two models against five other unrefined baseline models.

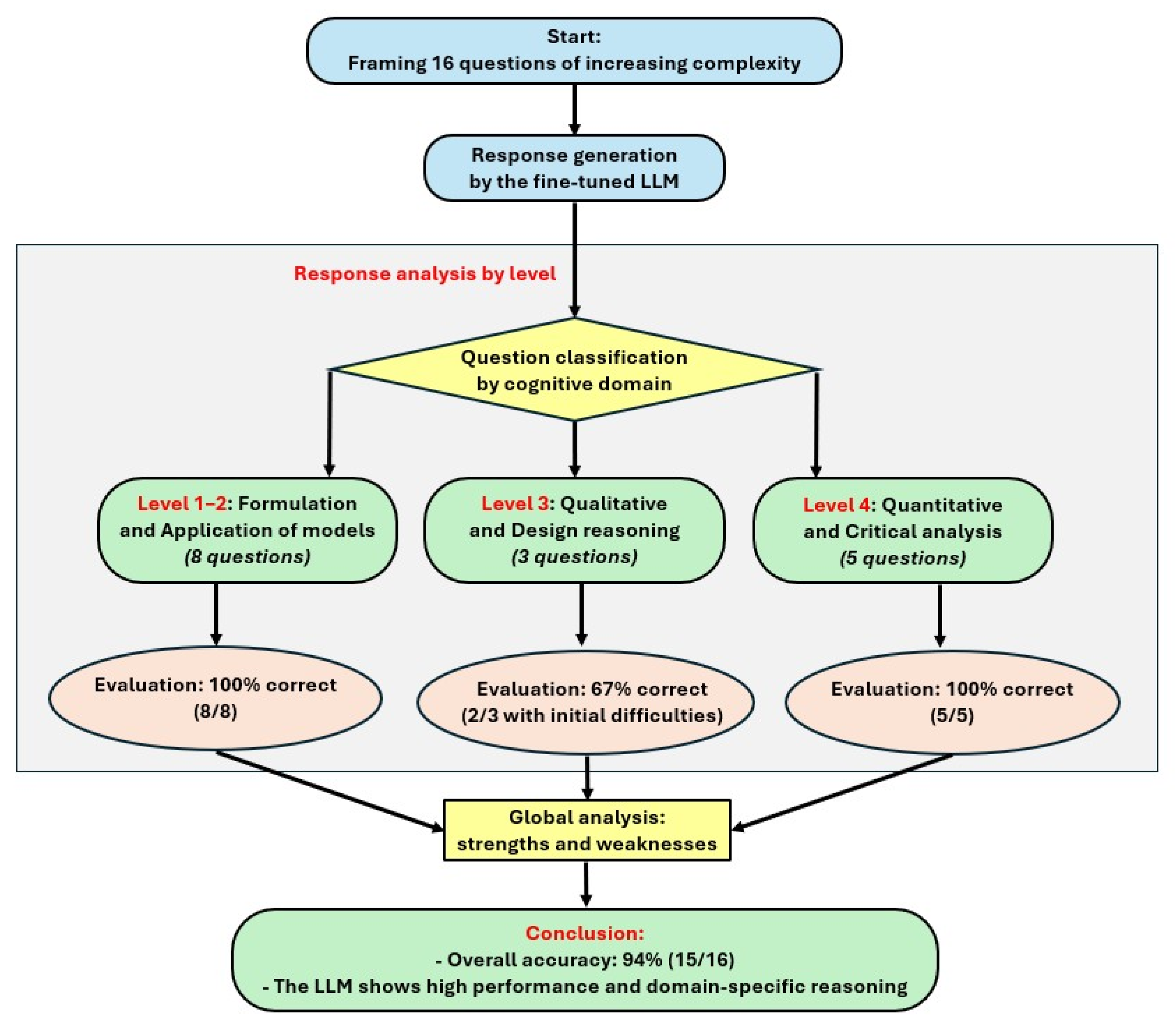

5.1. Analysis by Level of Difficulty

To validate the capabilities of the LLM JanV1-4B-expert-TEG, which was trained with a dataset of 202 QA (see Figure 3), a structured questionnaire of 16 questions [27] was designed, which is shown in Table 3. Each response of the LLM was rated as excellent, correct with difficulties, or incorrect.

To perform this inference, the trained model must be deployed in the Ollama environment [30]. This is done by executing the command ollama run JanV1-4B-expert-TEG. As a result, an interactive prompt appears, where questions are asked and the corresponding answers are obtained.
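As a complement to the interactive session, the same questions could also be sent programmatically. The following is a minimal sketch, assuming that the Ollama server is running locally on its default port and exposes its `/api/generate` REST endpoint; the helper names `build_request` and `ask` are hypothetical and are not part of the original workflow.

```python
import json
import urllib.request

# Assumption: a local Ollama server listening on its default address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="JanV1-4B-expert-TEG"):
    """Build a non-streaming POST request for Ollama's generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="JanV1-4B-expert-TEG", timeout=600):
    """Send one question to the running server and return the answer text."""
    request = build_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["response"]
```

With the server running, a call such as `ask("Formulate the heat balance of the dissipation node.")` would return the model's answer as a string; the long timeout reflects the response times of several minutes reported for these models.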

The 16 questions were classified into four levels of difficulty and cognitive domain to allow for granular analysis of the model’s performance:

Level 1: Formulation. Questions that require the direct formulation of heat balance equations for a single node. Questions 1, 2, 8, 9 and 11.

Level 2: Application of models. Questions that involve combining multiple heat flows, handling thermoelectric interactions, or simplifying equations under new conditions. Questions 3 to 7.

Level 3: Qualitative and Design reasoning. Questions that require a conceptual analysis of design trade-offs, without complex numerical calculations. Questions 10 and 12.

Level 4: Quantitative and Critical analysis. Questions that require numerical calculations, interpretation of tabulated data, and decision-making based on multidimensional analysis. Questions 13 to 16.
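The classification above can be written down as a small lookup structure, which makes it easy to verify that every question belongs to exactly one level. This is only an illustrative sketch; the names `LEVELS`, `level_of`, and `is_partition` are hypothetical.

```python
# Question numbers per difficulty level, as listed in the text.
LEVELS = {
    "Level 1: Formulation": [1, 2, 8, 9, 11],
    "Level 2: Application of models": [3, 4, 5, 6, 7],
    "Level 3: Qualitative and design reasoning": [10, 12],
    "Level 4: Quantitative and critical analysis": [13, 14, 15, 16],
}


def level_of(question):
    """Return the level name that contains the given question number."""
    for name, questions in LEVELS.items():
        if question in questions:
            return name
    raise ValueError(f"question {question} is not classified")


def is_partition(levels, n_questions=16):
    """Check that questions 1..n_questions each appear exactly once."""
    assigned = sorted(q for questions in levels.values() for q in questions)
    return assigned == list(range(1, n_questions + 1))
```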

5.1.1. Level 1: Formulation

LLM JanV1-4B-expert-TEG answered all questions at this level flawlessly and without hesitation. It demonstrated a solid understanding of heat balance principles and was able to formulate the differential equations correctly. For example, for question 2 [

27] concerning the heat power balance of the dissipation node

, it generated the following answer, which is correct:

5.1.2. Level 2: Application of Models

QAs were generated a priori by the authors based on the model equations, ensuring that they test distinct cognitive skills. The complete answers can be found in the Zenodo repository [27].

Performance at this level was mostly excellent. The model correctly handled the inclusion of Joule and Peltier thermoelectric effects, and the simplification of equations in specific scenarios such as an open electrical circuit.

The only difficulty arose in question 5, which requested the complete system of equations for all four nodes. The model initially struggled to structure the response, although the final equation for the most complex node was correct.

For question 4, it correctly provided the two internal equations. For example, for the hot junction equation

, the following correct expression was obtained:

5.1.3. Level 3: Qualitative and Design Reasoning

In this category, LLM JanV1-4B-expert-TEG demonstrated a remarkable capacity for abstract reasoning. In question 12, regarding the geometry of the TEG’s legs, the model was able to self-correct and arrived at the correct conclusion about the fundamental trade-off between electrical resistance and thermal conductance. This indicates second-order reasoning, where the LLM not only applies formulas but also understands the underlying design principles.

5.1.4. Level 4: Quantitative and Critical Analysis

This level of assessment was designed to measure the model’s more advanced cognitive abilities: quantitative analysis of numerical data, critical reasoning, and engineering decision-making. To this end, a numerical experiment was designed focusing on question 16, which simulated a scenario involving the analysis of optimization results for the parameters of the equivalent circuit in steady state.

The objective of the simulation was to identify the optimal parameters of the TEG model by comparing four different optimization methods: the canonical genetic algorithm (GA) [41]; a variant of GA with niche formation for real spaces (niching) that seeks to explore multiple local optima (NGA) [42]; the differential evolution (DE) algorithm [43]; and the simplicial homology global optimization (SHGO) method [44], available in the SciPy 1.15.2 library [24].
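To illustrate how one of these methods approaches a parameter identification task, the sketch below implements a minimal DE/rand/1/bin scheme in pure Python. It is not the SciPy implementation and not the model of this work: the two-parameter voltage model, the "true" values, and the operating points are hypothetical and serve only to generate synthetic data for the fit.

```python
import random


def differential_evolution(objective, bounds, pop_size=20, f=0.8, cr=0.9,
                           generations=300, seed=0):
    """Minimal DE/rand/1/bin minimizer (illustrative only)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    cost = [objective(x) for x in pop]
    for _ in range(generations):
        for i in range(pop_size):
            # Three distinct individuals, all different from the target i.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(dim)  # at least one gene is always mutated
            trial = []
            for j, (lo, hi) in enumerate(bounds):
                if rng.random() < cr or j == j_rand:
                    value = pop[a][j] + f * (pop[b][j] - pop[c][j])
                    value = min(max(value, lo), hi)  # clip to the bounds
                else:
                    value = pop[i][j]
                trial.append(value)
            trial_cost = objective(trial)
            if trial_cost <= cost[i]:  # greedy one-to-one selection
                pop[i], cost[i] = trial, trial_cost
    best = min(range(pop_size), key=cost.__getitem__)
    return pop[best], cost[best]


# Hypothetical toy model: load voltage of a Thevenin-like TEG source.
TRUE_ALPHA, TRUE_R = 0.05, 2.0  # assumed values, not taken from the paper


def load_voltage(alpha, r_int, delta_t, r_load):
    return alpha * delta_t * r_load / (r_int + r_load)


# Synthetic "measurements" at assumed temperature differences and loads.
DATA = [(dt, rl, load_voltage(TRUE_ALPHA, TRUE_R, dt, rl))
        for dt in (20.0, 50.0, 90.0) for rl in (1.0, 4.0)]


def sum_squared_error(params):
    alpha, r_int = params
    return sum((load_voltage(alpha, r_int, dt, rl) - v) ** 2
               for dt, rl, v in DATA)


best_params, best_error = differential_evolution(
    sum_squared_error, bounds=[(0.0, 0.2), (0.1, 10.0)])
```

Two different load resistances are used in the synthetic data because, with a single load, only the ratio between the Seebeck-like coefficient and the total resistance could be identified.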

LLM JanV1-4B-expert-TEG was provided with the results of this process in the form of Table 4 and Table 5 and assigned the role of a TEG expert data analyst. Its task was to analyze the final error, simulation accuracy, and runtime of each algorithm, determine the best option, and justify its choice based on a practical trade-off. The performance results and parameters identified for each algorithm are summarized in Table 4.

Table 5 presents a comparison of the runtime, final objective function error, and optimal parameter values found by each of the four methods. Similar parameter estimation tasks have been addressed with metaheuristics [45,46], validating our Level 4 classification as representative of real TEG modeling research.

Based on the reference data used for the TEG Peltier cell model ET-031-10-20 [11], the final steady-state model of Equation (17) was solved.

The summarized results are as follows:

Actual target values: , , , , , and .

To validate the accuracy of the identified parameters, the temperatures simulated by each optimized model were compared with the reference values obtained in the simulation.

Table 5 presents this comparison between the experimental and simulated temperatures, using the parameters obtained with the four optimization algorithms, at the two extreme operating points of the studied range, 0.0 and 90.0 °C, which correspond to the minimum and maximum temperatures of the heat source.

The LLM demonstrated exceptional competence in this task. Not only did it correctly and unequivocally identify the worst-performing algorithm, SHGO, but it also addressed the apparent conflict between the metrics in the two tables. It considered that, although one algorithm had a theoretically lower final error, the DE algorithm showed excellent practical accuracy in simulating real-world temperatures. It pragmatically and with good justification concluded that DE was the better option, due to its excellent balance between high accuracy and significantly higher speed.
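The kind of multi-criteria trade-off described above can be sketched as a weighted ranking over normalized metrics. The numbers below are hypothetical placeholders chosen only to make the example runnable; they are not the values of Table 4 or Table 5, and the equal weights are likewise an assumption.

```python
# Hypothetical placeholder metrics (NOT the values reported in the tables).
RESULTS = {
    "GA":   {"final_error": 2.0e-3, "max_temp_error": 0.40, "runtime_s": 120.0},
    "NGA":  {"final_error": 1.0e-3, "max_temp_error": 0.35, "runtime_s": 300.0},
    "DE":   {"final_error": 1.5e-3, "max_temp_error": 0.30, "runtime_s": 20.0},
    "SHGO": {"final_error": 5.0e-1, "max_temp_error": 5.00, "runtime_s": 60.0},
}

# Assumed equal importance of the three criteria.
WEIGHTS = {"final_error": 1 / 3, "max_temp_error": 1 / 3, "runtime_s": 1 / 3}


def rank_algorithms(results, weights):
    """Rank by a weighted sum of min-max normalized metrics (lower is better)."""
    scores = {name: 0.0 for name in results}
    for metric, weight in weights.items():
        values = [metrics[metric] for metrics in results.values()]
        lowest, highest = min(values), max(values)
        span = (highest - lowest) or 1.0  # guard against identical values
        for name, metrics in results.items():
            scores[name] += weight * (metrics[metric] - lowest) / span
    return sorted(scores, key=scores.get)


ranking = rank_algorithms(RESULTS, WEIGHTS)  # best option first
```

With these placeholder values, an algorithm with a slightly lower final error can still rank below one that is much faster and equally accurate in simulation, which is the type of conflict between metrics that the LLM had to resolve.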

This result is particularly relevant, as it demonstrates that the specialized LLM is not limited to retrieving information, but is capable of performing synthesis and critical analysis equivalent to that of a human expert in a realistic engineering scenario.

Therefore, small LLMs can reason: although this model has only 4B parameters, it demonstrated an ability for logical reasoning, comparison, and synthesis when given an appropriate framework to work in.

In other questions at this level, the performance was outstanding. The LLM handled unit conversions, ZT figure of merit calculations, and temperature-dependent property analyses with ease.
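As an illustration of the type of calculation involved, the dimensionless figure of merit is conventionally defined as ZT = S²σT/κ, where S is the Seebeck coefficient, σ the electrical conductivity, κ the thermal conductivity, and T the absolute temperature. The sketch below combines a unit conversion with this formula; the material values are illustrative and are not taken from the questionnaire.

```python
def microvolt_to_volt(value_uv):
    """Convert a quantity expressed in microvolts (or µV/K) to volts (or V/K)."""
    return value_uv * 1e-6


def figure_of_merit(seebeck_v_per_k, sigma_s_per_m, kappa_w_per_mk, temperature_k):
    """Dimensionless figure of merit ZT = S^2 * sigma * T / kappa."""
    return seebeck_v_per_k ** 2 * sigma_s_per_m * temperature_k / kappa_w_per_mk


# Illustrative (assumed) values: S = 200 µV/K, sigma = 1e5 S/m,
# kappa = 1.5 W/(m·K), T = 300 K.
zt = figure_of_merit(microvolt_to_volt(200.0), 1.0e5, 1.5, 300.0)
```

For these assumed inputs the result is approximately 0.8; forgetting the microvolt conversion would inflate the result by a factor of 10¹², which is why the conversion is kept as an explicit step.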

The model occasionally showed initial difficulties when faced with questions requiring the synthesis of a complete system of equations, such as question 5 of the questionnaire [27], which requested the complete system of equations for all four nodes. However, parts of the problem were eventually solved correctly. This suggests that structuring prompts for highly complex problems remains crucial.

It is worth highlighting that, although the validation focuses on the 4-DOF model for formulating equations, the pure domain category of the dataset provides fundamental knowledge, including the ZT merit factor and its influence on geometry. This has allowed the model developed in this work to generate answers regarding material selection at other temperatures and the geometric optimization of parameters.

Table 3 summarizes the 16 questions of the TEG expert questionnaire and the evaluation level achieved in the inference of the LLM trained with FT. The evaluation process is summarized in the flowchart in Figure 5. In addition, Appendix A includes two examples of skill-based prompt questions and three examples of pure domain questions and answers. The overall accuracy is 94%. The LLM JanV1-4B-expert-TEG thus showed high performance and domain-specific reasoning.

5.2. TEG FT Models vs. Generalist LLMs

This section compares the specialized FT models developed in this work (JanV1-4B-expert-TEG and Qwen3-4B-thinking-2507-TEG) with generalist models in the 4B to 8B parameter range. Specifically, the Mistral-7B [47], Llama3-8B [48], Qwen3-4B-thinking-2507 [49], Qwen2-7B [50], and JanV1-4B [12] models are evaluated on a set of 42 specialized thermoelectricity questions developed in this work, called the Specialized Thermoelectricity Benchmark [27].

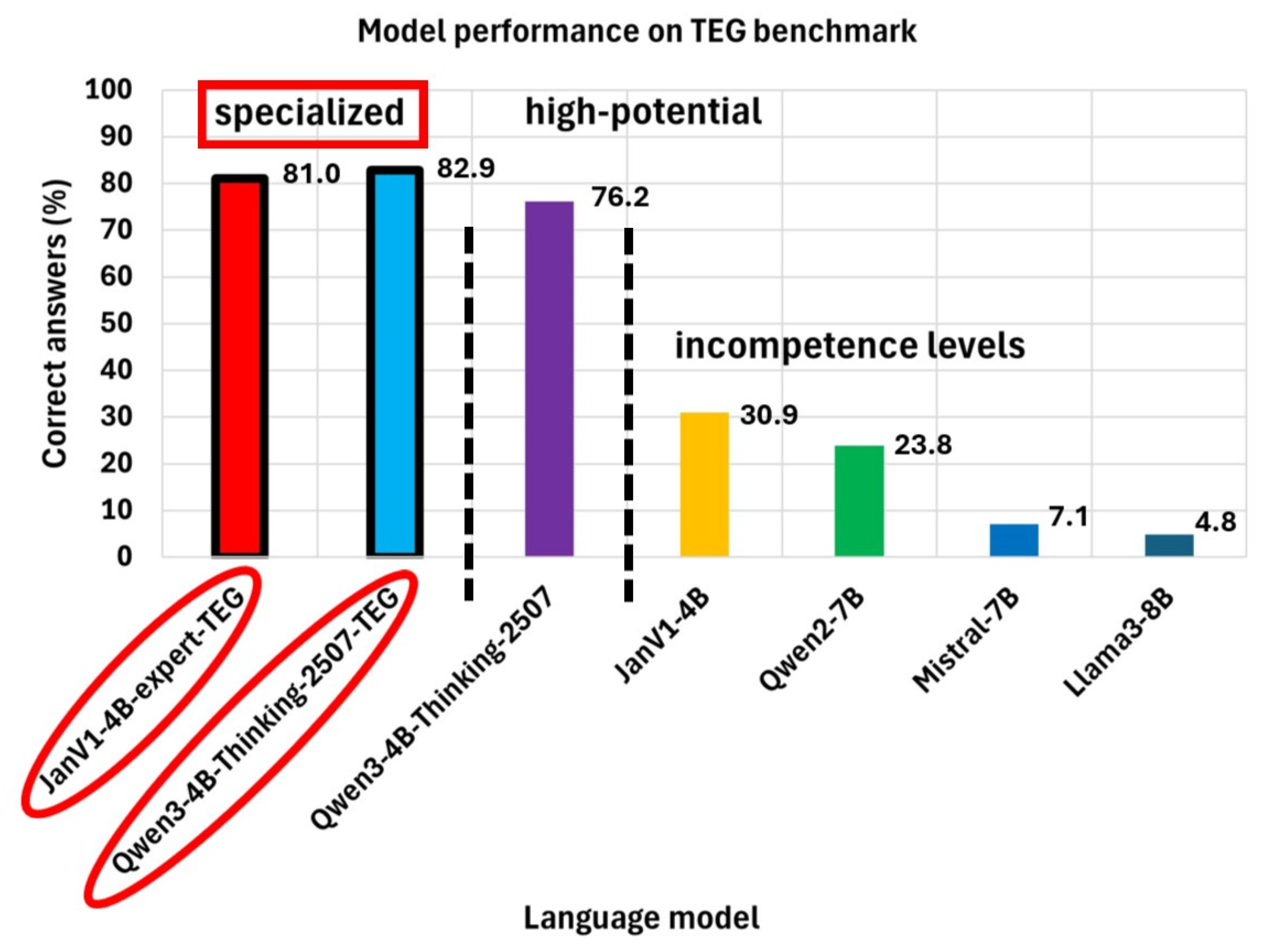

The analysis of the inference of these models on the 42 TEG questions is shown in Figure 6. The JanV1-4B-expert-TEG model (81%) is clearly superior to JanV1-4B (31%), the base version from which it is derived. The refinement was not an incremental improvement but a qualitative leap that transformed a base model with low capacity in the TEG domain into a highly competent and reliable one. This indicates that, for specialized domains, fine-tuning is a highly effective strategy for achieving expert performance.

The Qwen3-4B-Thinking-2507 model (76.2%) is the most interesting case. Despite being a base model without specific tuning, its performance is exceptionally high, almost on a par with the fine-tuned JanV1-4B-expert-TEG model. This suggests that its architecture and pre-training give it a capacity for logical and mathematical reasoning far above average, allowing it to deduce the relevant formulas from the context and apply them correctly.

This is consistent with the performance of this model on five very demanding benchmarks: GPQA [51], with graduate-level science questions requiring deep reasoning; AIME25 [52], based on a well-known, highly challenging mathematics exam; LiveCodeBench v6 [53], a code generation and problem-solving test; Arena-Hard v2 [54], a set of challenging questions on which the quality of the model’s response is assessed; and BFCL-v3 [55], the Berkeley Function-Calling Leaderboard, which assesses function (tool) calling.

Table 6 compares the metrics of the five benchmarks [49].

In contrast, the standard base models JanV1-4B (30.9%) and Qwen2-7B (23.8%) represent the typical performance of a base model (see Figure 6). They have some conceptual knowledge (they know what the Peltier effect is or why a semiconductor is doped), but they fail when applying the formulas.

The most popular generalist models, such as Mistral-7B (7.1%) and Llama3-8B (4.8%), perform very poorly. This result is critical because it shows that a larger model size, 7B and 8B in this case, does not guarantee greater competence in a specialized technical domain like TEG. Lacking specific knowledge, these models resort to hallucination [56], inventing formulas and concepts, which makes them not only useless but dangerously misleading for this task.

Figure 6 illustrates three levels of competence: the specialized level, achieved with FT by the two models refined in this work (JanV1-4B-expert-TEG and Qwen3-4B-Thinking-2507-TEG); the high-potential level, represented by Qwen3-4B-Thinking-2507, a generalist model with strong reasoning; and the low-competence level, comprising generalist models that are unreliable in this domain (JanV1-4B, Qwen2-7B, Mistral-7B, and Llama3-8B). This is strong quantitative evidence of the value of domain-specific benchmarks and of the impact of FT.

The analysis of the results reveals the following:

- The JanV1-4B-expert-TEG model improved from a low baseline of 30.95% to 81.0%, an increase of about 50 percentage points. This large gain reflects the quality of the dataset used.

- The Qwen3-4B-Thinking-2507-TEG model started from an already very strong baseline of 76.2% and reached 82.9%, an increase of 6.7 percentage points. Although the gain is smaller, it is significant, as FT refines and specializes existing knowledge, correcting errors and adding nuances.

Response speed is also a fundamental factor. Comparing the two best models, both fine-tuned in this work:

- The JanV1-4B-expert-TEG model offers the best ratio between speed and accuracy, being faster (231 s/response) and very accurate (81.0%).

- The Qwen3-4B-Thinking-2507-TEG model is the most accurate (82.9%), but its time cost is high, at 486 s per answer, roughly double that of the previous model.

- Therefore, JanV1-4B-expert-TEG offers the better overall trade-off for expert-level use in the complex domain of TEGs.
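The figures quoted above can be cross-checked with a few lines of arithmetic. The sketch below uses only the accuracies and response times stated in the text; the variable names are hypothetical.

```python
# Accuracy (%) before and after FT, as reported in the text.
ACCURACY = {
    "JanV1-4B": {"base": 30.95, "tuned": 81.0},
    "Qwen3-4B-Thinking-2507": {"base": 76.2, "tuned": 82.9},
}

# Mean response time (s) of the two fine-tuned models, as reported in the text.
RESPONSE_TIME_S = {
    "JanV1-4B-expert-TEG": 231.0,
    "Qwen3-4B-Thinking-2507-TEG": 486.0,
}

# Gain in percentage points (difference of percentages, not a relative change).
gains = {name: s["tuned"] - s["base"] for name, s in ACCURACY.items()}

# How many times slower the more accurate model is.
time_ratio = (RESPONSE_TIME_S["Qwen3-4B-Thinking-2507-TEG"]
              / RESPONSE_TIME_S["JanV1-4B-expert-TEG"])

# Accuracy gained by choosing the slower model, in percentage points.
accuracy_difference = (ACCURACY["Qwen3-4B-Thinking-2507"]["tuned"]
                       - ACCURACY["JanV1-4B"]["tuned"])
```

The calculation gives gains of about 50 and 6.7 percentage points and a time ratio of about 2.1, so the slower model buys roughly 1.9 additional percentage points of accuracy at about twice the response time.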

Table 8 [12] provides an explanation consistent with the results observed in our own tests, in which the models were evaluated on the 42 TEG-specific questions.

Based on the data shown in Figure 6 and Table 8, the following conclusions can be drawn:

A specific benchmark for TEG is necessary.

Table 8 [12] shows that the performance of the JanV1-4B base model on the three general benchmarks does not guarantee success in a specialized technical domain such as TEGs. According to Figure 6, JanV1-4B reaches an accuracy of 30.9% on the TEG-specific benchmark, while the corresponding FT model reaches 81%. The acceptable scores of the JanV1-4B base model in those three general benchmarks in Table 7 drop considerably in our TEG benchmark because the model lacks knowledge of this domain before FT. This underscores the need for the TEG benchmark that we have created.

The accuracy analysis of JanV1-4B-expert-TEG is consistent with the data in Table 8, which shows that the JanV1-4B base model performs best in EQBench, scoring 83.61% in reasoning. This aligns with the success of our JanV1-4B-expert-TEG model in calculation. We taught it the concepts and formulas (the rules of the game), and its strong reasoning skills allowed it to apply them, solve for variables, and arrive at the correct answer with 81% accuracy. Its ability to self-correct is a clear indication of robust reasoning.

Table 8 also shows that the weakness of the JanV1-4B base model lies in IFBench (Instruction Following Benchmark). This partially explains the errors of the JanV1-4B-expert-TEG model. For example, its most notable flaw was an inconsistent sign of the Seebeck coefficient in some responses. It may have been taught the concept correctly, but its weakness in following instructions meant that it did not apply that rule consistently in some specific calculation problems. The isolated numerical errors could also be interpreted as a failure to follow the precise mathematical instruction to the end.

The analysis of the Qwen3-4B-Thinking-2507 model in

Table 6 shows good results. Furthermore,

Figure 6 positions it as a very capable model, closely following the JanV1-4B-expert-TEG model in reasoning and creativity. This explains why, even without specific FT, it achieved such a high score (76.2%) in our calculation test. Step-by-step reasoning is key, since for problems that cannot be solved directly, a model’s ability to generate an internal chain of reasoning is fundamental to arriving at the correct answer.

The methodology employed is inherently generalizable to any technical domain that can be coded in high-quality instruction/response pairs. We emphasize that the Skill-Based component of our dataset not only injects knowledge but also deliberately trains the LLM in structured reasoning skills, such as manipulating and solving systems of equations and handling abstract mathematical models in general. This demonstrates its potential and direct applicability to address more complex or higher-order models in the field of thermoelectric engineering.

5.3. Experimental Design of the TEG and LLM Strategies

This section details the practical application of the LLM JanV1-4B-expert-TEG to improve the experimental design of a TEG. Starting from an initial design (see Figure 7), it demonstrates how this model, trained with QLoRA and a dataset specialized in TEGs, transcends mere information retrieval to offer expert reasoning, guiding the final TEG design.

The main characteristics of the experimental model are the following:

Heat source power: 2000 W

Hot air flow: axial fan with temperature-adjustable heat source.

Peltier cell dimensions: 30 × 30 × 3.9 mm

Aluminum thermal paste (k = 4 W/(m·K))

Ambient temperature 23 °C, relative humidity 45%, atmospheric pressure 984 mm Hg, maximum electrical voltage obtained 8.0 V.

5.3.1. Level 3: Qualitative and Design Reasoning Experimental

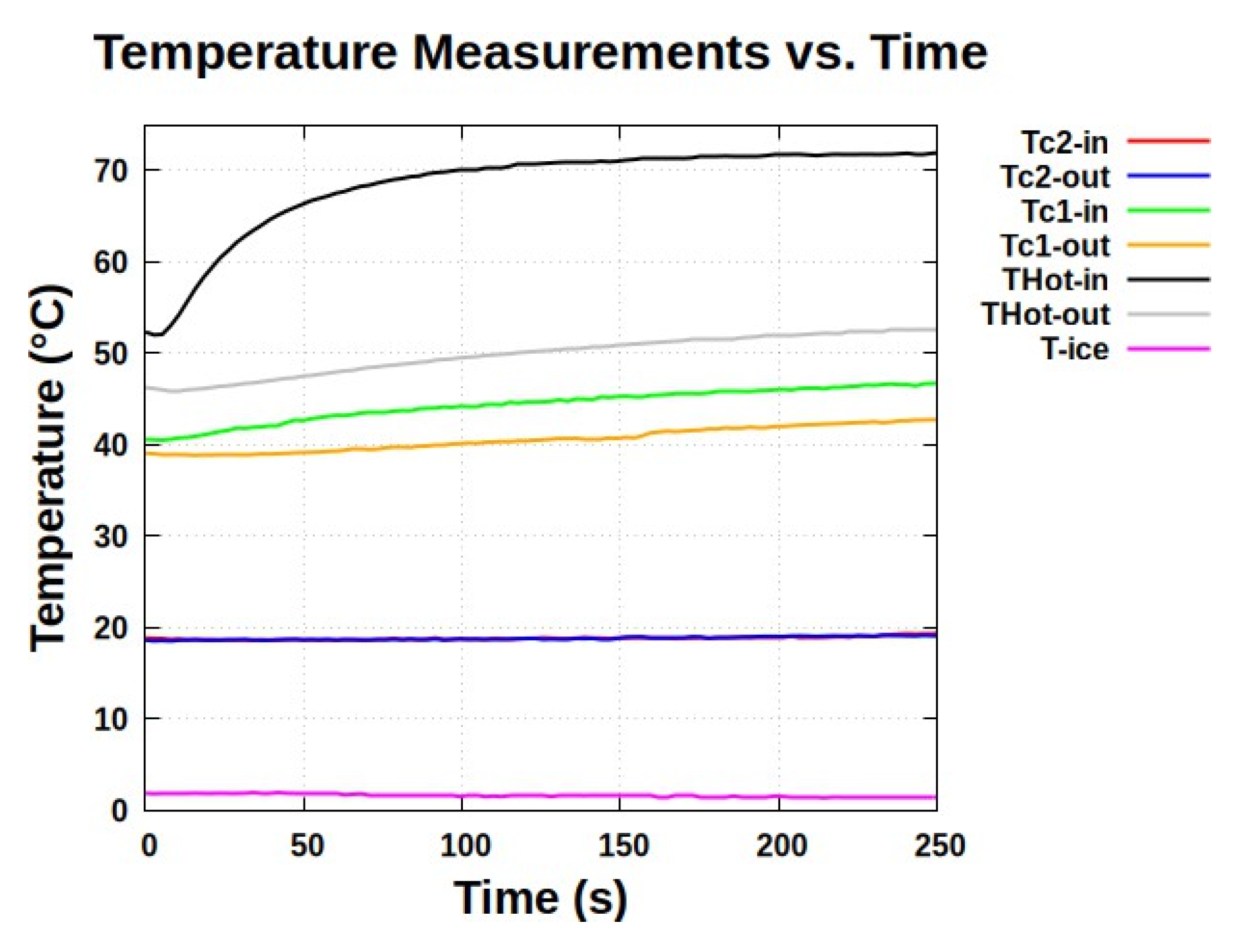

Figure 7a shows the TEG setup. A heat flow enters the system from the left, with an inlet temperature of

. After passing through the upper heat sink, this flow exits from the right at a lower temperature

.

The core of the TEG consists of 10 Peltier cells connected in series located between two heat sinks (see

Figure 7b). The upper heat sink is in direct contact with the heat flow, while the lower heat sink is immersed in a container with ice, whose temperature

is kept stable close to 0 °C.

To monitor the thermal profile, four thermocouples are used in contact with the ceramics of the cells:

Two are located near the hot flow inlet in the upper ceramic of the Peltier cell and in the lower ceramic.

Two are located near the outlet on the upper ceramic and on the lower ceramic.

The results of these measurements are presented in the temperature graph shown in

Figure 8.

Table 9 shows the magnitudes and physical properties of the TEG model. The temperature near the inlet on the lower ceramic coincides with the inlet temperature, which is why the red curve cannot be distinguished in Figure 8.

5.3.2. Analysis of Results and Recommendations from the LLM

The experimental results reveal a key discrepancy (see

Figure 8):

The temperatures on the cold lower face of the cells are practically identical at the inlet and outlet, ≈

However, the temperatures on the hot upper face show a significant temperature gradient, > , which is undesirable for the optimal operation of the generator.

Based on these results, the LLM was consulted about two scenarios:

The full results of the LLM are available in the Zenodo repository [27].

The most noteworthy aspects of its answer are summarized below.

Scenario 1: Thermal solutions to the gradient.

The LLM demonstrated a deep understanding of the problem, identifying the non-uniform gradient on the hot side as the main challenge. It proposed five specific strategies, explaining their benefits, low cost, and ease of implementation. Specifically, the LLM proposed adding the following elements:

Side thermal diffusers: High conductivity plates over the inlet cells to redistribute heat.

Vertical thermal bridges: Conductive strips between rows to balance temperatures.

Improved high conductivity thermal interface material (TIM) to reduce thermal resistance.

Central thermal bus: A central copper plate to act as a thermal equalizer.

Heat sink optimization: Modify its geometry to achieve uniformly distributed contact points.

Scenario 2: Infeasibility of electrical solutions.

The LLM’s response was categorical: the thermal gradient is a physical phenomenon intrinsic to heat flow and cannot be compensated for or corrected through electrical connections. The model detailed how different electrical configurations (series, parallel) could in fact exacerbate the effects of thermal imbalance, causing cooler cells to act as a brake or hotter cells to become overloaded, thus limiting overall efficiency. While active electronic solutions, such as shifting the maximum power point or balancing with transistors, can mitigate losses, their impact is limited compared with the thermal measures proposed in Scenario 1 when the gradient is significant. The LLM redirected the focus toward thermal solutions, such as diffusers, as they address the root cause of the problem.
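The braking effect of cooler cells in a series string can be illustrated with a deliberately simplified electrical model, in which each cell is a Thévenin source with open-circuit voltage proportional to its temperature difference and a fixed internal resistance. This is a sketch under stated assumptions, not the model of this work: the coefficient, the resistance, and the temperature differences are hypothetical, and Peltier feedback on the temperatures is ignored.

```python
def matched_load_power(v_oc, r_int):
    """Maximum power of a Thevenin source (load equal to internal resistance)."""
    return v_oc ** 2 / (4.0 * r_int)


def series_string_power(delta_ts, alpha, r_cell):
    """Maximum power of the cells in series, sharing a single current."""
    v_total = sum(alpha * dt for dt in delta_ts)
    r_total = r_cell * len(delta_ts)
    return matched_load_power(v_total, r_total)


def independent_power(delta_ts, alpha, r_cell):
    """Upper bound: every cell operated at its own maximum power point."""
    return sum(matched_load_power(alpha * dt, r_cell) for dt in delta_ts)


ALPHA = 0.05   # assumed effective Seebeck coefficient per cell, V/K
R_CELL = 2.0   # assumed internal resistance per cell, ohm

graded = [60.0, 50.0, 40.0, 30.0]   # hypothetical gradient along the flow, K
uniform = [45.0, 45.0, 45.0, 45.0]  # same mean temperature difference, K

p_series_graded = series_string_power(graded, ALPHA, R_CELL)
p_ideal_graded = independent_power(graded, ALPHA, R_CELL)
p_series_uniform = series_string_power(uniform, ALPHA, R_CELL)
p_ideal_uniform = independent_power(uniform, ALPHA, R_CELL)
```

In this simplified model the series string with a gradient delivers less than the sum of the individual maxima, while with uniform temperatures the two quantities coincide. No rewiring recovers the difference, since it originates in the unequal temperature differences themselves, which is consistent with the LLM's emphasis on thermal rather than electrical measures.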

Therefore, the LLM not only responded accurately but also corrected potential conceptual fallacies of the user [27]. By clearly defining the boundaries between electrical and physical solutions, the LLM prevents resources from being invested in ineffective strategies. It thus provides a baseline of reality for the experimenter, demonstrating its value as an engineering support tool.

6. Conclusions

This work establishes a large language model (LLM) specialized in the domain of thermoelectric generators (TEGs) for deployment on local hardware. Starting with the generalist JanV1-4B and Qwen3-4B-Thinking-2507 models, an efficient fine-tuning (FT) methodology (QLoRA) was employed, modifying only 3.18% of the total parameters of these base models. The key to the process is the use of a custom-designed dataset, which merges deep theoretical knowledge with rigorous instruction tuning to refine behavior and mitigate catastrophic forgetting. The dataset employed for FT contains 202 curated questions and answers (QAs), strategically balanced between domain-specific knowledge (48.5%) and instruction-tuning for response behavior (51.5%). The performance of the models was evaluated using two complementary benchmarks: a 16-question multilevel cognitive benchmark (94% accuracy) and a specialized 42-question TEG benchmark (81% accuracy), scoring responses as excellent, correct with difficulties, or incorrect, based on technical accuracy and reasoning quality. The model’s utility is demonstrated through experimental TEG design guidance, providing expert-level reasoning on thermal management strategies.

QLoRA has been validated as an exceptionally effective strategy for domain specialization on local hardware. The study provides a replicable roadmap for creating expert AI tools, democratizing access to a technology that traditionally requires large-scale computing infrastructures.

The specialized TEG model not only demonstrated deep conceptual knowledge but also exhibited advanced reasoning capabilities. It outperformed larger and more popular base models, such as Llama3-8B and Mistral-7B, proving that, for technical tasks, specialization is more important than the size of the LLM. The model’s ability to self-correct and perform critical analysis of numerical data elevates it from a simple information retrieval tool to a genuine engineering synthesis and analysis tool in TEG.

This study has significant implications for AI engineering and development. It demonstrates that it is possible to develop custom, secure, and high-performance AI assistants that operate locally, ensuring data privacy and accessibility. It paves the way for the creation of a new generation of engineering tools that can accelerate design, analysis, and problem-solving in highly technical domains.

In summary, the novelty of this research lies in four main contributions that advance the state of the art in applying LLMs to specialized engineering. First, a comprehensive and fully reproducible methodology is presented, encompassing everything from data curation to local deployment, to transform the general-purpose JanV1-4B LLM into a specialized assistant within the TEG engineering domain. Second, a strategic design for a training dataset is proposed that balances the injection of deep knowledge—the conceptual ‘what’—with the training of behavior and responsiveness—the procedural ‘how’—which is essential to mitigate catastrophic forgetting and ensure robust performance. Third, a rigorous, multi-level assessment framework is introduced, designed to measure advanced cognitive skills, such as critical reasoning and self-correction, transcending traditional performance metrics. And fourth, the feasibility of achieving this high level of specialization on local hardware is empirically demonstrated, validating the QLoRA approach as an effective way to democratize the development of AI specialized in the TEG sector.

Future work could pursue more ambitious goals, such as expanding and curating the dataset with the involvement of more TEG specialists, or building and analyzing other experimental models.