Recent Advances in B-Mode Ultrasound Simulators

Abstract

1. Introduction

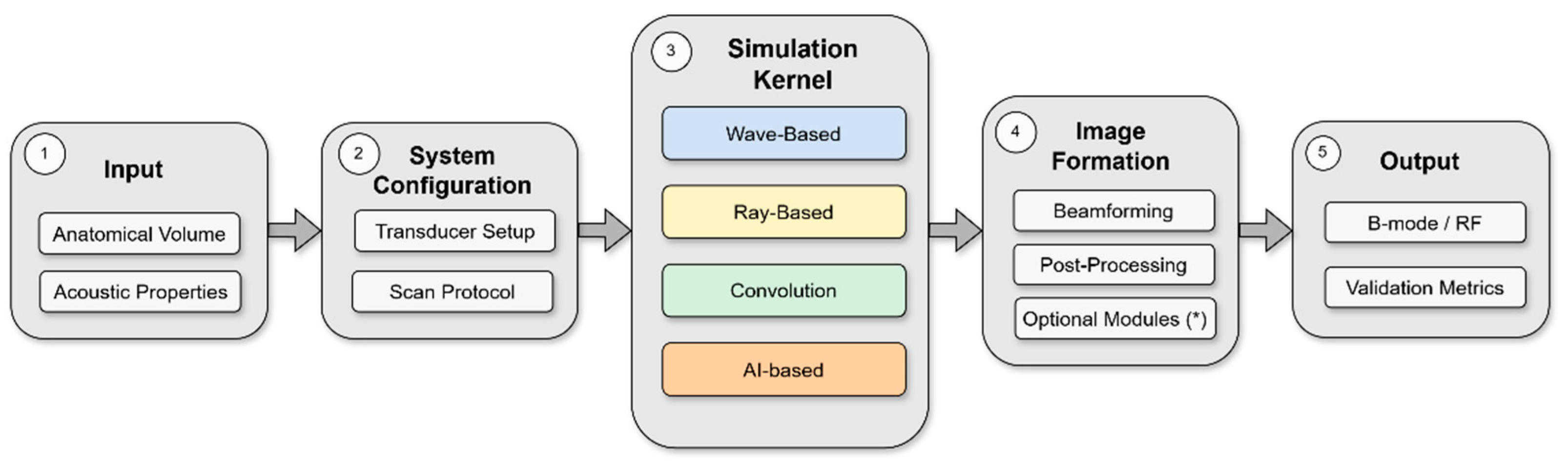

Principles and Methodological Frameworks in Ultrasound Simulation

2. Materials and Methods

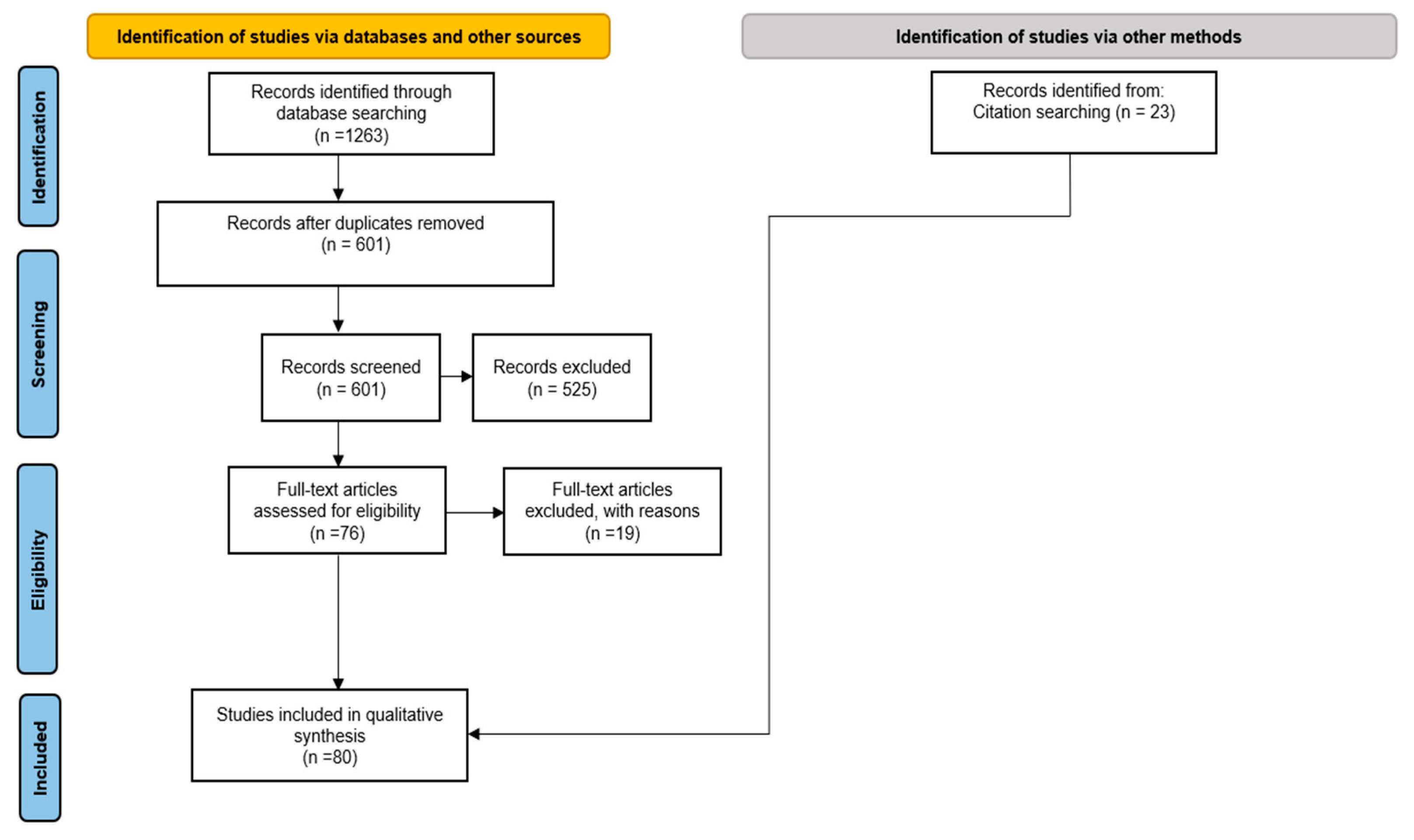

2.1. Methodology

- Articles published between 2014 and 2024, ensuring relevance to recent advancements in the field.

- Articles whose titles explicitly mention “ultrasound” AND (“simulation” OR “simulator” OR “simulate” OR “synthetic”).

- Articles focusing on B-mode image formation, image realism, or training datasets.

- Articles published in languages other than English or Spanish were excluded.

- Studies that did not explicitly mention the key terms in their titles were excluded to maintain relevance to the research objectives, as were studies focusing on non-human populations or on system-level simulations unrelated to image formation.
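The mechanical parts of these criteria (publication year, language, and title keywords) can be sketched as a simple record filter. This is only an illustrative sketch, not the screening tool used in the review: the `Record` type, the function names, and the whole-word matching rule are assumptions introduced here. The topical criteria (B-mode focus, human populations, image formation) require manual review and are deliberately not encoded.

```python
import re
from dataclasses import dataclass

# Title keywords taken from the inclusion criteria above.
SIM_TERMS = ("simulation", "simulator", "simulate", "synthetic")
ALLOWED_LANGUAGES = {"English", "Spanish"}


@dataclass
class Record:
    """Hypothetical bibliographic record; field names are assumptions."""
    title: str
    year: int
    language: str


def title_matches(title: str) -> bool:
    """True if the title contains 'ultrasound' AND at least one simulation term.

    Whole-word, case-insensitive matching is an assumption of this sketch;
    database search engines may apply stemming or other rules.
    """
    words = set(re.findall(r"[a-z]+", title.lower()))
    return "ultrasound" in words and any(term in words for term in SIM_TERMS)


def passes_screening(rec: Record) -> bool:
    """Apply only the automatable criteria: year range, language, title terms."""
    return (
        2014 <= rec.year <= 2024
        and rec.language in ALLOWED_LANGUAGES
        and title_matches(rec.title)
    )


if __name__ == "__main__":
    example = Record("Fast Simulation of Dynamic Ultrasound Images Using the GPU",
                     2017, "English")
    print(passes_screening(example))
```

Records that pass such a filter would still need to be read against the topical criteria before inclusion.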

2.2. Study Selection Process

3. Results

3.1. Software and Toolboxes

3.2. Anatomical, Motion and Artifact Modeling

3.2.1. CT/MRI-Derived Ultrasound Simulation

3.2.2. Dynamic Motion

3.2.3. Speckle, Scatter, and Noise Modeling

3.3. Ultrasound Transport Models

3.3.1. Wave-Based Methods

3.3.2. Ray-Based and Convolution-Based Methods

3.3.3. AI-Based Methods

4. Discussion

5. Conclusions and Perspectives

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Search Strings

Appendix A.1. PubMed

- Query 1: ((ULTRASOUND[Title]) AND (SIMULATOR[Title] OR SIMULATION[Title] OR SIMULATE[Title] OR SYNTHETIC[Title])) AND (WAVE[Title/Abstract] OR ACOUSTICS[Title/Abstract] OR ALGORITHM[Title/Abstract] OR TOOLBOX[Title/Abstract])

- Query 2: ((ULTRASOUND[Title]) AND (SIMULATOR[Title] OR SIMULATION[Title] OR SIMULATE[Title] OR SYNTHETIC[Title])) AND (CT[Title/Abstract] OR COMPUTED TOMOGRAPHY[Title/Abstract] OR “RAY TRACING”[Title/Abstract] OR GPU OR “Monte Carlo” OR CONVOLUTION OR “deep learning” OR “convolutional neural network”)

Appendix A.2. Web of Science

- Query 1: TI = (ULTRASOUND) AND TI = (SIMULATOR OR SIMULATION OR SIMULATE OR SYNTHETIC) AND TS = (WAVE OR ACOUSTICS OR ALGORITHM OR TOOLBOX)

- Query 2: TI = (ULTRASOUND) AND TI = (SIMULATOR OR SIMULATION OR SIMULATE OR SYNTHETIC) AND TS = (CT OR “COMPUTED TOMOGRAPHY” OR “RAY TRACING” OR GPU OR “Monte Carlo” OR CONVOLUTION OR “deep learning” OR “convolutional neural network”)

Appendix A.3. Scopus

- Query 1: TITLE(ULTRASOUND) AND TITLE(SIMULATOR OR SIMULATION OR SIMULATE OR SYNTHETIC) AND TITLE-ABS-KEY(WAVE OR ACOUSTICS OR ALGORITHM OR TOOLBOX)

- Query 2: TITLE(ULTRASOUND) AND TITLE(SIMULATOR OR SIMULATION OR SIMULATE OR SYNTHETIC) AND TITLE-ABS-KEY(CT OR “COMPUTED TOMOGRAPHY” OR “RAY TRACING” OR GPU OR “Monte Carlo” OR CONVOLUTION OR “deep learning” OR “convolutional neural network”)
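The Web of Science and Scopus strings above share the same term groups and differ only in their field syntax. As a minimal sketch, assuming hypothetical helper names (`or_group`, `scopus_query`, `wos_query`) that are not part of any database API, Query 1 can be assembled from shared term lists so that the two databases stay consistent:

```python
# Term groups copied from Query 1 in Appendices A.2 and A.3.
TITLE_TERMS = ["SIMULATOR", "SIMULATION", "SIMULATE", "SYNTHETIC"]
QUERY1_TOPICS = ["WAVE", "ACOUSTICS", "ALGORITHM", "TOOLBOX"]


def or_group(terms):
    """Join terms into a boolean OR group (without surrounding parentheses)."""
    return " OR ".join(terms)


def scopus_query(topic_terms):
    """Build a string in the Scopus syntax shown in Appendix A.3."""
    return (
        f"TITLE(ULTRASOUND) AND TITLE({or_group(TITLE_TERMS)}) "
        f"AND TITLE-ABS-KEY({or_group(topic_terms)})"
    )


def wos_query(topic_terms):
    """Build a string in the Web of Science syntax shown in Appendix A.2."""
    return (
        f"TI = (ULTRASOUND) AND TI = ({or_group(TITLE_TERMS)}) "
        f"AND TS = ({or_group(topic_terms)})"
    )


if __name__ == "__main__":
    print(scopus_query(QUERY1_TOPICS))
    print(wos_query(QUERY1_TOPICS))
```

Query 2 could be produced the same way by passing its topic list, with multi-word phrases quoted as in the listed strings.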

Appendix A.4. IEEE Xplore

- Query 1: (“Document Title”:“ultrasound”) AND (“Document Title”:“simulator” OR “Document Title”:“simulation” OR “Document Title”:“simulate” OR “Document Title”:“synthetic”) AND (“Document Title”:“wave” OR “Document Title”:“acoustics” OR “Document Title”:“algorithm” OR “Document Title”:“toolbox” OR Abstract:“wave” OR Abstract:“acoustics” OR Abstract:“algorithm” OR Abstract:“toolbox”)

- Query 2: (“Document Title”:“ultrasound”) AND (“Document Title”:“simulator” OR “Document Title”:“simulation” OR “Document Title”:“simulate” OR “Document Title”:“synthetic”) AND (“Document Title”:“CT” OR “Document Title”:“COMPUTED TOMOGRAPHY” OR Abstract:“CT” OR Abstract:“COMPUTED TOMOGRAPHY” OR “Document Title”:“RAY TRACING” OR Abstract:“RAY TRACING” OR “Document Title”:“GPU” OR Abstract:“GPU” OR “Document Title”:“Monte Carlo” OR Abstract:“Monte Carlo” OR “Document Title”:“convolution” OR Abstract:“convolution” OR “Document Title”:“deep learning” OR Abstract:“deep learning” OR “Document Title”:“convolutional neural network” OR Abstract:“convolutional neural network”)

References

- Tasnim, T.; Shuvo, M.M.H.; Hasan, S. Study of Speckle Noise Reduction from Ultrasound B-Mode Images Using Different Filtering Techniques. In Proceedings of the 2017 4th International Conference on Advances in Electrical Engineering (ICAEE), Dhaka, Bangladesh, 28–30 September 2017; pp. 229–234. [Google Scholar]

- Dietrich, C.F.; Lucius, C.; Nielsen, M.B.; Burmester, E.; Westerway, S.C.; Chu, C.Y.; Condous, G.; Cui, X.-W.; Dong, Y.; Harrison, G.; et al. The Ultrasound Use of Simulators, Current View, and Perspectives: Requirements and Technical Aspects (WFUMB State of the Art Paper). Endosc. Ultrasound 2022, 12, 38. [Google Scholar] [CrossRef]

- Nayahangan, L.J.; Dietrich, C.F.; Nielsen, M.B. Simulation-Based Training in Ultrasound—Where Are We Now? Ultraschall Med.-Eur. J. Ultrasound 2021, 42, 240–244. [Google Scholar] [CrossRef]

- Starkov Marion, A.; Vray, D. Toward a Real-Time Simulation of Ultrasound Image Sequences Based On a 3-D Set of Moving Scatterers. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2009, 56, 2167–2179. [Google Scholar] [CrossRef]

- Law, Y.C.; Knott, T.; Hentschel, B.; Kuhlen, T. Geometrical-Acoustics-Based Ultrasound Image Simulation. Eurographics Workshop Vis. Comput. Biol. Med. 2012, 25–32. [Google Scholar] [CrossRef]

- Goksel, O.; Salcudean, S.E. B-Mode Ultrasound Image Simulation in Deformable 3-D Medium. IEEE Trans. Med. Imaging 2009, 28, 1657–1669. [Google Scholar] [CrossRef]

- Hostettler, A.; Forest, C.; Forgione, A.; Soler, L.; Marescaux, J. Real-Time Ultrasonography Simulator Based on 3D CT-Scan Images. Stud. Health Technol. Inform. 2005, 111, 191–193. [Google Scholar]

- Shams, R.; Hartley, R.; Navab, N. Real-Time Simulation of Medical Ultrasound from CT Images. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2008, Proceedings of the 11th International Conference, New York, NY, USA, 6–10 September 2008; Springer: Berlin/Heidelberg, Germany, 2008; Volume 11, pp. 734–741. [Google Scholar] [CrossRef]

- Dillenseger, J.-L.; Laguitton, S.; Delabrousse, E. Fast Simulation of Ultrasound Images from a CT Volume. Comput. Biol. Med. 2009, 39, 180–186. [Google Scholar] [CrossRef]

- Gjerald, S.U.; Brekken, R.; Bø, L.E.; Hergum, T.; Nagelhus Hernes, T.A. Interactive Development of a CT-Based Tissue Model for Ultrasound Simulation. Comput. Biol. Med. 2012, 42, 607–613. [Google Scholar] [CrossRef]

- Cong, W.; Yang, J.; Liu, Y.; Wang, Y. Fast and Automatic Ultrasound Simulation from CT Images. Comput. Math. Methods Med. 2013, 2013, 327613. [Google Scholar] [CrossRef]

- Burger, B.; Bettinghausen, S.; Radle, M.; Hesser, J. Real-Time GPU-Based Ultrasound Simulation Using Deformable Mesh Models. IEEE Trans. Med. Imaging 2013, 32, 609–618. [Google Scholar] [CrossRef]

- Pinton, G.F.; Dahl, J.; Rosenzweig, S.; Trahey, G.E. A Heterogeneous Nonlinear Attenuating Full-Wave Model of Ultrasound. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2009, 56, 474–488. [Google Scholar] [CrossRef]

- Kutter, O.; Shams, R.; Navab, N. Visualization and GPU-Accelerated Simulation of Medical Ultrasound from CT Images. Comput. Methods Programs Biomed. 2009, 94, 250–266. [Google Scholar] [CrossRef]

- Reichl, T.; Passenger, J.; Acosta, O.; Salvado, O. Ultrasound Goes GPU: Real-Time Simulation Using CUDA. In Proceedings of the Medical Imaging 2009: Visualization, Image-Guided Procedures, and Modeling, Lake Buena Vista, FL, USA, 7–12 February 2009; SPIE: Bellingham, WA, USA, 2009; Volume 7261, pp. 386–395. [Google Scholar]

- Floquet, A.; Soubies, E.; Pham, D.-H.; Kouame, D. Spatially Variant Ultrasound Image Restoration with Product Convolution. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2025, 72, 1235–1244. [Google Scholar] [CrossRef]

- Gao, H.; Choi, H.F.; Claus, P.; Boonen, S.; Jaecques, S.; Van Lenthe, G.H.; Van Der Perre, G.; Lauriks, W.; D’hooge, J. A Fast Convolution-Based Methodology to Simulate 2-Dd/3-D Cardiac Ultrasound Images. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2009, 56, 404–409. [Google Scholar] [CrossRef]

- Jensen, J.A. Simulation of Advanced Ultrasound Systems Using Field II. In Proceedings of the 2004 2nd IEEE International Symposium on Biomedical Imaging: Nano to Macro (IEEE Cat No. 04EX821), Arlington, VA, USA, 15–18 April 2004; Volume 2, pp. 636–639. [Google Scholar] [CrossRef]

- Jensen, J.A. FIELD: A Program for Simulating Ultrasound Systems. Med. Biol. Eng. Comput. 1996, 34, 351–352. [Google Scholar]

- Zhu, Y.; Szabo, T.L.; McGough, R.J. A Comparison of Ultrasound Image Simulations with FOCUS and Field II. In Proceedings of the 2012 IEEE International Ultrasonics Symposium, Dresden, Germany, 7–10 October 2012; pp. 1694–1697. [Google Scholar]

- Treeby, B.E.; Cox, B.T. K-Wave: MATLAB Toolbox for the Simulation and Reconstruction of Photoacoustic Wave Fields. J. Biomed. Opt. 2010, 15, 021314. [Google Scholar] [CrossRef]

- Holm, S. Ultrasim-a Toolbox for Ultrasound Field Simulation. In Proceedings of the Nordic MATLAB Conference Proceedings, Oslo, Norway, 17–18 October 2001. [Google Scholar]

- Varray, F.; Cachard, C.; Tortoli, P.; Basset, O. Nonlinear Radio Frequency Image Simulation for Harmonic Imaging: Creanuis. In Proceedings of the 2010 IEEE International Ultrasonics Symposium, San Diego, CA, USA, 11–14 October 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 2179–2182. [Google Scholar]

- Constantinescu, E.C.; Udriștoiu, A.-L.; Udriștoiu, Ș.C.; Iacob, A.V.; Gruionu, L.G.; Gruionu, G.; Săndulescu, L.; Săftoiu, A. Transfer Learning with Pre-Trained Deep Convolutional Neural Networks for the Automatic Assessment of Liver Steatosis in Ultrasound Images. Med. Ultrason. 2021, 23, 135–139. [Google Scholar] [CrossRef]

- Webb, J.M.; Meixner, D.D.; Adusei, S.A.; Polley, E.C.; Fatemi, M.; Alizad, A. Automatic Deep Learning Semantic Segmentation of Ultrasound Thyroid Cineclips Using Recurrent Fully Convolutional Networks. IEEE Access 2021, 9, 5119–5127. [Google Scholar] [CrossRef]

- Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.J.; Horsley, T.; Weeks, L.; et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann. Intern. Med. 2018, 169, 467–473. [Google Scholar] [CrossRef]

- Rodriguez-Molares, A.; Rindal, O.M.H.; Bernard, O.; Nair, A.; Lediju Bell, M.A.; Liebgott, H.; Austeng, A.; L⊘vstakken, L. The UltraSound ToolBox. In Proceedings of the 2017 IEEE International Ultrasonics Symposium (IUS), Washington, DC, USA, 6–9 September 2017; pp. 1–4. [Google Scholar]

- Garcia, D. Make the Most of MUST, an Open-Source Matlab UltraSound Toolbox. In Proceedings of the 2021 IEEE International Ultrasonics Symposium (IUS), Xi’an, China, 11–16 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–4. [Google Scholar]

- Gu, J.; Jing, Y. mSOUND: An Open Source Toolbox for Modeling Acoustic Wave Propagation in Heterogeneous Media. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2021, 68, 1476–1486. [Google Scholar] [CrossRef]

- Garcia, D. SIMUS: An Open-Source Simulator for Medical Ultrasound Imaging. Part I: Theory & Examples. Comput. Methods Programs Biomed. 2022, 218, 106726. [Google Scholar] [CrossRef]

- Cigier, A.; Varray, F.; Garcia, D. SIMUS: An Open-Source Simulator for Medical Ultrasound Imaging. Part II: Comparison with Four Simulators. Comput. Methods Programs Biomed. 2022, 220, 106774. [Google Scholar] [CrossRef]

- Ekroll, I.K.; Saris, A.E.C.M.; Avdal, J. FLUST: A Fast, Open Source Framework for Ultrasound Blood Flow Simulations. Comput. Methods Programs Biomed. 2023, 238, 107604. [Google Scholar] [CrossRef]

- Blanken, N.; Heiles, B.; Kuliesh, A.; Versuis, M.; Jain, K.; Maresca, D.; Lajoinie, G. PROTEUS: A Physically Realistic Contrast-Enhanced Ultrasound Simulator—Part I: Numerical Methods. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2024, 1. [Google Scholar] [CrossRef]

- Brevett, T. QUPS: A MATLAB Toolbox for Rapid Prototyping of Ultrasound Beamforming and Imaging Techniques. J. Open Source Softw. 2024, 9, 6772. [Google Scholar] [CrossRef]

- Garcia, D.; Varray, F. SIMUS3: An Open-Source Simulator for 3-D Ultrasound Imaging. Comput. Methods Programs Biomed. 2024, 250, 108169. [Google Scholar] [CrossRef]

- D’Amato, J.P.; Vercio, L.L.; Rubi, P.; Vera, E.F.; Barbuzza, R.; Fresno, M.D.; Larrabide, I. Efficient Scatter Model for Simulation of Ultrasound Images from Computed Tomography Data. In Proceedings of the 11th International Symposium on Medical Information Processing and Analysis, Cuenca, Ecuador, 17–19 November 2015; SPIE: Bellingham, WA, USA, 2015; Volume 9681, pp. 23–32. [Google Scholar]

- Salehi, M.; Ahmadi, S.-A.; Prevost, R.; Navab, N.; Wein, W. Patient-Specific 3D Ultrasound Simulation Based on Convolutional Ray-Tracing and Appearance Optimization. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 510–518. [Google Scholar]

- Szostek, K.; Piórkowski, A. Real-Time Simulation of Ultrasound Refraction Phenomena Using Ray-Trace Based Wavefront Construction Method. Comput. Methods Programs Biomed. 2016, 135, 187–197. [Google Scholar] [CrossRef]

- Rubi, P.; Vera, E.F.; Larrabide, J.; Calvo, M.; D’Amato, J.P.; Larrabide, I. Comparison of Real-Time Ultrasound Simulation Models Using Abdominal CT Images. In Proceedings of the 12th International Symposium on Medical Information Processing and Analysis, Tandil, Argentina, 5–7 December 2017; Volume 10160, pp. 55–63. [Google Scholar]

- Camara, M.; Mayer, E.; Darzi, A.; Pratt, P. Simulation of Patient-Specific Deformable Ultrasound Imaging in Real Time. In Proceedings of the Imaging for Patient-Customized Simulations and Systems for Point-of-Care Ultrasound, Québec City, QC, Canada, 14 September 2017; Cardoso, M.J., Arbel, T., Tavares, J.M.R.S., Aylward, S., Li, S., Boctor, E., Fichtinger, G., Cleary, K., Freeman, B., Kohli, L., et al., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 11–18. [Google Scholar]

- Satheesh B., A.; Thittai, A.K. A Method of Ultrasound Simulation from Patient-Specific CT Image Data: A Preliminary Simulation Study. In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; pp. 1483–1486. [Google Scholar]

- Satheesh B., A.; Thittai, A.K. A Fast Method for Simulating Ultrasound Image from Patient-Specific CT Data. Biomed. Signal Process. Control 2019, 48, 61–68. [Google Scholar] [CrossRef]

- Velikova, Y.; Azampour, M.F.; Simson, W.; Gonzalez Duque, V.; Navab, N. LOTUS: Learning to Optimize Task-Based US Representations. In Proceedings of the Medical Image Computing and Computer Assisted Intervention—MICCAI 2023, Vancouver, BC, Canada, 8–12 October 2023; Greenspan, H., Madabhushi, A., Mousavi, P., Salcudean, S., Duncan, J., Syeda-Mahmood, T., Taylor, R., Eds.; Springer Nature: Cham, Switzerland, 2023; pp. 435–445. [Google Scholar]

- Rivaz, H.; Collins, D.L. Simulation of Ultrasound Images for Validation of MR to Ultrasound Registration in Neurosurgery. In Proceedings of the Augmented Environments for Computer-Assisted Interventions, Boston, MA, USA, 14 September 2014; Linte, C.A., Yaniv, Z., Fallavollita, P., Abolmaesumi, P., Holmes, D.R., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 23–32. [Google Scholar]

- Storve, S.; Torp, H. Use of B-Splines in Fast Dynamic Ultrasound RF Simulations. In Proceedings of the 2015 IEEE International Ultrasonics Symposium (IUS), Taipei, Taiwan, 21–24 October 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–4. [Google Scholar]

- Alessandrini, M.; De Craene, M.; Bernard, O.; Giffard-Roisin, S.; Allain, P.; Waechter-Stehle, I.; Weese, J.; Saloux, E.; Delingette, H.; Sermesant, M.; et al. A Pipeline for the Generation of Realistic 3D Synthetic Echocardiographic Sequences: Methodology and Open-Access Database. IEEE Trans. Med. Imaging 2015, 34, 1436–1451. [Google Scholar] [CrossRef]

- Alessandrini, M.; Heyde, B.; Giffard-Roisin, S.; Delingette, H.; Sermesant, M.; Allain, P.; Bernard, O.; De Craene, M.; D’hooge, J. Generation of Ultra-Realistic Synthetic Echocardiographic Sequences to Facilitate Standardization of Deformation Imaging. In Proceedings of the 2015 IEEE 12th International Symposium on Biomedical Imaging (ISBI), Brooklyn, NY, USA, 16–19 April 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 756–759. [Google Scholar]

- Mastmeyer, A.; Wilms, M.; Fortmeier, D.; Schröder, J.; Handels, H. Real-Time Ultrasound Simulation for Training of US-Guided Needle Insertion in Breathing Virtual Patients. Stud. Health Technol. Inform. 2016, 220, 219–226. [Google Scholar]

- Zhou, Y.; Giffard-Roisin, S.; De Craene, M.; Camarasu-Pop, S.; D’Hooge, J.; Alessandrini, M.; Friboulet, D.; Sermesant, M.; Bernard, O. A Framework for the Generation of Realistic Synthetic Cardiac Ultrasound and Magnetic Resonance Imaging Sequences From the Same Virtual Patients. IEEE Trans. Med. Imaging 2018, 37, 741–754. [Google Scholar] [CrossRef]

- Alessandrini, M.; Chakraborty, B.; Heyde, B.; Bernard, O.; De Craene, M.; Sermesant, M.; D’Hooge, J. Realistic Vendor-Specific Synthetic Ultrasound Data for Quality Assurance of 2-D Speckle Tracking Echocardiography: Simulation Pipeline and Open Access Database. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2018, 65, 411–422. [Google Scholar] [CrossRef]

- Szostek, K.; Lasek, J.; Piórkowski, A. Real-Time Simulation of Wave Phenomena in Lung Ultrasound Imaging. Appl. Sci. 2023, 13, 9805. [Google Scholar] [CrossRef]

- Abhimanyu, F.N.U.; Orekhov, A.L.; Galeotti, J.; Choset, H. Unsupervised Deformable Image Registration for Respiratory Motion Compensation in Ultrasound Images. arXiv 2023, arXiv:2306.13332. [Google Scholar] [CrossRef]

- Velikova, Y.; Azampour, M.F.; Simson, W.; Esposito, M.; Navab, N. Implicit Neural Representations for Breathing-Compensated Volume Reconstruction in Robotic Ultrasound. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1316–1322. [Google Scholar]

- Burman, N.; Alessandra Manetti, C.; Heymans, S.V.; Ingram, M.; Lumens, J.; D’Hooge, J. Large-Scale Simulation of Realistic Cardiac Ultrasound Data with Clinical Appearance: Methodology and Open-Access Database. IEEE Access 2024, 12, 117040–117055. [Google Scholar] [CrossRef]

- Mattausch, O.; Goksel, O. Scatterer Reconstruction and Parametrization of Homogeneous Tissue for Ultrasound Image Simulation. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; IEEE: Piscataway, NJ, USA, 2024; pp. 6350–6353. [Google Scholar]

- Mattausch, O.; Goksel, O. Image-Based PSF Estimation for Ultrasound Training Simulation. In Simulation and Synthesis in Medical Imaging, Proceedings of the 9th International Workshop, SASHIMI 2024, Held in Conjunction with MICCAI 2024; Marrakesh, Morocco, 10 October 2016, Tsaftaris, S.A., Gooya, A., Frangi, A.F., Prince, J.L., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 23–33. [Google Scholar]

- Singh, P.; Mukundan, R.; de Ryke, R. Synthetic Models of Ultrasound Image Formation for Speckle Noise Simulation and Analysis. In Proceedings of the 2017 International Conference on Signals and Systems (ICSigSys), Bali, Indonesia, 16–18 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 278–284. [Google Scholar]

- Singh, P.; Mukundan, R.; de Ryke, R. Quality Analysis of Synthetic Ultrasound Images Using Co-Occurrence Texture Statistics. In Proceedings of the 2017 International Conference on Image and Vision Computing New Zealand (IVCNZ), Christchurch, New Zealand, 4–6 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6. [Google Scholar]

- Singh, P.; Mukundan, R.; de Ryke, R. Modelling, Speckle Simulation and Quality Evaluation of Synthetic Ultrasound Images. In Proceedings of the Medical Image Understanding and Analysis, Edinburgh, UK, 11–13 July 2017; Valdés Hernández, M., González-Castro, V., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 74–85. [Google Scholar]

- Mattausch, O.; Goksel, O. Image-Based Reconstruction of Tissue Scatterers Using Beam Steering for Ultrasound Simulation. IEEE Trans. Med. Imaging 2018, 37, 767–780. [Google Scholar] [CrossRef]

- Singh, P.; Mukundan, R.; De Ryke, R. Texture Based Quality Analysis of Simulated Synthetic Ultrasound Images Using Local Binary Patterns. J. Imaging 2018, 4, 3. [Google Scholar] [CrossRef]

- Starkov, R.; Zhang, L.; Bajka, M.; Tanner, C.; Goksel, O. Ultrasound Simulation with Deformable and Patient-Specific Scatterer Maps. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1589–1599. [Google Scholar] [CrossRef]

- Gaits, F.; Mellado, N.; Basarab, A. Efficient 2D Ultrasound Simulation Based on Dart-Throwing 3D Scatterer Sampling. In Proceedings of the 2022 30th European Signal Processing Conference (EUSIPCO), Belgrade, Serbia, 29 August–2 September 2022; pp. 897–901. [Google Scholar]

- Gaits, F.; Mellado, N.; Bouyjou, G.; Garcia, D.; Basarab, A. Efficient Stratified 3-D Scatterer Sampling for Freehand Ultrasound:Simulation. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2024, 71, 127–140. [Google Scholar] [CrossRef]

- Pinton, G. Three Dimensional Full-Wave Nonlinear Acoustic Simulations: Applications to Ultrasound Imaging. AIP Conf. Proc. 2015, 1685, 070001. [Google Scholar] [CrossRef]

- Looby, K.; Herickhoff, C.D.; Sandino, C.; Zhang, T.; Vasanawala, S.; Dahl, J.J. Unsupervised Clustering Method to Convert High-Resolution Magnetic Resonance Volumes to Three-Dimensional Acoustic Models for Full-Wave Ultrasound Simulations. J. Med. Imaging 2019, 6, 037001. [Google Scholar] [CrossRef]

- Pinton, G. Ultrasound Imaging of the Human Body with Three Dimensional Full-Wave Nonlinear Acoustics. Part 1: Simulations Methods. arXiv 2020, arXiv:2003.06934. [Google Scholar]

- Pinton, G. Ultrasound Imaging with Three Dimensional Full-Wave Nonlinear Acoustic Simulations. Part 2: Sources of Image Degradation in Intercostal Imaging. arXiv 2020, arXiv:2003.06927. [Google Scholar]

- Varray, F.; Liebgott, H.; Cachard, C.; Vray, D. Fast Simulation of Realistic Pseudo-Acoustic Nonlinear Radio-Frequency Ultrasound Images. In Proceedings of the 2014 IEEE International Ultrasonics Symposium, Chicago, IL, USA, 3–6 September 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 2217–2220. [Google Scholar]

- Haigh, A.A.; McCreath, E.C. Acceleration of GPU-Based Ultrasound Simulation via Data Compression. In Proceedings of the 2014 IEEE International Parallel & Distributed Processing Symposium Workshops, Phoenix, AZ, USA, 19–23 May 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1248–1255. [Google Scholar]

- Mattausch, O.; Goksel, O. Monte-Carlo Ray-Tracing for Realistic Interactive Ultrasound Simulation. In Proceedings of the Eurographics Workshop on Visual Computing for Biology and Medicine, Bergen, Norway, 7–9 September 2016; The Eurographics Association: Munich, Germany, 2016; pp. 173–181. [Google Scholar]

- Law, Y.; Kuhlen, T.; Cotin, S. Real-Time Simulation of B-Mode Ultrasound Images for Medical Training; RWTH Aachen University: Aachen, Germany, 2016. [Google Scholar]

- Keelan, R.; Shimada, K.; Rabin, Y. GPU-Based Simulation of Ultrasound Imaging Artifacts for Cryosurgery Training. Technol. Cancer Res. Treat. 2017, 16, 5–14. [Google Scholar] [CrossRef]

- Storve, S.; Torp, H. Fast Simulation of Dynamic Ultrasound Images Using the GPU. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2017, 64, 1465–1477. [Google Scholar] [CrossRef]

- Mattausch, O.; Makhinya, M.; Goksel, O. Realistic Ultrasound Simulation of Complex Surface Models Using Interactive Monte-Carlo Path Tracing. Comput. Graph. Forum 2018, 37, 202–213. [Google Scholar] [CrossRef]

- Tuzer, M.; Yazıcı, A.; Türkay, R.; Boyman, M.; Acar, B. Multi-Ray Medical Ultrasound Simulation without Explicit Speckle Modelling. Int. J. Comput. Assist. Radiol. Surg. 2018, 13, 1009–1017. [Google Scholar] [CrossRef] [PubMed]

- Tanner, C.; Starkov, R.; Bajka, M.; Goksel, O. Framework for Fusion of Data- and Model-Based Approaches for Ultrasound Simulation. In Proceedings of the Medical Image Computing and Computer Assisted Intervention—MICCAI 2018, Granada, Spain, 16–20 September 2018; Frangi, A.F., Schnabel, J.A., Davatzikos, C., Alberola-López, C., Fichtinger, G., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 332–339. [Google Scholar]

- Wang, Q.; Peng, B.; Cao, Z.; Huang, X.; Jiang, J. A Real-Time Ultrasound Simulator Using Monte-Carlo Path Tracing in Conjunction with Optix Engine. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 11 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 3661–3666. [Google Scholar]

- Cambet, T.P.; Vitale, S.; Larrabide, I. High Performance Ultrasound Simulation Using Monte-Carlo Simulation: A GPU Ray-Tracing Implementation. In Proceedings of the 16th International Symposium on Medical Information Processing and Analysis, Lima, Perú, 3–4 November 2020; SPIE: Bellingham, WA, USA, 2020; Volume 11583, pp. 107–115. [Google Scholar]

- Amadou, A.A.; Peralta, L.; Dryburgh, P.; Klein, P.; Petkov, K.; Housden, R.J.; Singh, V.; Liao, R.; Kim, Y.-H.; Ghesu, F.C.; et al. Cardiac Ultrasound Simulation for Autonomous Ultrasound Navigation. Front. Cardiovasc. Med. 2024, 11, 1384421. [Google Scholar] [CrossRef] [PubMed]

- Peng, B.; Huang, X.; Wang, S.; Jiang, J. A Real-Time Medical Ultrasound Simulator Based on a Generative Adversarial Network Model. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 4629–4633. [Google Scholar]

- Abdi, A.H.; Tsang, T.; Abolmaesumi, P. GAN-Enhanced Conditional Echocardiogram Generation. arXiv 2019, arXiv:1911.02121. [Google Scholar] [CrossRef]

- Magnetti, C.; Zimmer, V.; Ghavami, N.; Skelton, E.; Matthew, J.; Lloyd, K.; Hajnal, J.; Schnabel, J.A.; Gomez, A. Deep Generative Models to Simulate 2D Patient-Specific Ultrasound Images in Real Time. In Proceedings of the Medical Image Understanding and Analysis, Oxford, UK, 15–17 July 2020; Papież, B.W., Namburete, A.I.L., Yaqub, M., Noble, J.A., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 423–435. [Google Scholar]

- Zhang, L.; Vishnevskiy, V.; Goksel, O. Deep Network for Scatterer Distribution Estimation for Ultrasound Image Simulation. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2020, 67, 2553–2564. [Google Scholar] [CrossRef]

- Zhang, L.; Portenier, T.; Paulus, C.; Goksel, O. Deep Image Translation for Enhancing Simulated Ultrasound Images. In Proceedings of the Medical Ultrasound, and Preterm, Perinatal and Paediatric Image Analysis: First International Workshop, ASMUS 2020, and 5th International Workshop, PIPPI 2020, Held in Conjunction with MICCAI 2020, Lima, Peru, 4–8 October 2020; Proceedings. Springer: Berlin/Heidelberg, Germany, 2020; pp. 85–94. [Google Scholar]

- Vitale, S.; Orlando, J.I.; Iarussi, E.; Larrabide, I. Improving Realism in Patient-Specific Abdominal Ultrasound Simulation Using CycleGANs. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 183–192. [Google Scholar] [CrossRef]

- Escobar, M.; Castillo, A.; Romero, A.; Arbeláez, P. UltraGAN: Ultrasound Enhancement Through Adversarial Generation. In Proceedings of the Simulation and Synthesis in Medical Imaging, Lima, Peru, 4 October 2020; Burgos, N., Svoboda, D., Wolterink, J.M., Zhao, C., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 120–130. [Google Scholar]

- Pigeau, G.; Elbatarny, L.; Wu, V.; Schonewille, A.; Fichtinger, G.; Ungi, T. Ultrasound Image Simulation with Generative Adversarial Network. In Proceedings of the Medical Imaging 2020: Image-Guided Procedures, Robotic Interventions, and Modeling, Houston, TX, USA, 15–20 February 2020; Volume 11315, pp. 54–60. [Google Scholar]

- Cronin, N.J.; Finni, T.; Seynnes, O. Using Deep Learning to Generate Synthetic B-Mode Musculoskeletal Ultrasound Images. Comput. Methods Programs Biomed. 2020, 196, 105583. [Google Scholar] [CrossRef]

- Ao, Y.; Yang, H.; Wei, G.; Ji, B.; Shi, W.; Jiang, Z. An Impoved U-GAT-IT Model for Enhancing the Realism of Simulated Ultrasound Images. In Proceedings of the 2021 International Conference on Electronic Information Engineering and Computer Science (EIECS), Changchun, China, 23–26 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 336–340. [Google Scholar]

- Gilbert, A.; Marciniak, M.; Rodero, C.; Lamata, P.; Samset, E.; Mcleod, K. Generating Synthetic Labeled Data From Existing Anatomical Models: An Example with Echocardiography Segmentation. IEEE Trans. Med. Imaging 2021, 40, 2783–2794. [Google Scholar] [CrossRef]

- Zhang, L.; Portenier, T.; Goksel, O. Learning Ultrasound Rendering from Cross-Sectional Model Slices for Simulated Training. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 721–730. [Google Scholar] [CrossRef]

- Song, Y.; Zheng, J.; Lei, L.; Ni, Z.; Zhao, B.; Hu, Y. CT2US: Cross-Modal Transfer Learning for Kidney Segmentation in Ultrasound Images with Synthesized Data. Ultrasonics 2022, 122, 106706. [Google Scholar] [CrossRef]

- Maack, L.; Holstein, L.; Schlaefer, A. GANs for Generation of Synthetic Ultrasound Images from Small Datasets. Curr. Dir. Biomed. Eng. 2022, 8, 17–20. [Google Scholar] [CrossRef]

- Tiago, C.; Snare, S.R.; Šprem, J.; McLeod, K. A Domain Translation Framework with an Adversarial Denoising Diffusion Model to Generate Synthetic Datasets of Echocardiography Images. IEEE Access 2023, 11, 17594–17602. [Google Scholar] [CrossRef]

- Chen, L.; Liao, H.; Kong, W.; Zhang, D.; Chen, F. Anatomy Preserving GAN for Realistic Simulation of Intraoperative Liver Ultrasound Images. Comput. Methods Programs Biomed. 2023, 240, 107642. [Google Scholar] [CrossRef]

- Mendez, M.; Sundararaman, S.; Probyn, L.; Tyrrell, P.N. Approaches and Limitations of Machine Learning for Synthetic Ultrasound Generation. J. Ultrasound Med. 2023, 42, 2695–2706. [Google Scholar] [CrossRef]

- Stojanovski, D.; Hermida, U.; Lamata, P.; Beqiri, A.; Gomez, A. Echo from Noise: Synthetic Ultrasound Image Generation Using Diffusion Models for Real Image Segmentation. In Proceedings of the Simplifying Medical Ultrasound, Vancouver, BC, Canada, 8 October 2023; Kainz, B., Noble, A., Schnabel, J., Khanal, B., Müller, J.P., Day, T., Eds.; Springer Nature: Cham, Switzerland, 2023; pp. 34–43. [Google Scholar]

- Ghosh, R.K.; Sheet, D. Zero-Shot Multi-Frequency Ultrasound Simulation Using Physics Informed GAN. In Proceedings of the 2024 IEEE South Asian Ultrasonics Symposium (SAUS), Gujarat, India, 27–29 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–4. [Google Scholar]

- Song, Z.; Zhou, Y.; Wang, J.; Ma, C.Z.-H.; Zheng, Y. Synthesizing Real-Time Ultrasound Images of Muscle Based on Biomechanical Simulation and Conditional Diffusion Network. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2024, 71, 1501–1513. [Google Scholar] [CrossRef] [PubMed]

- Jaros, J.; Vaverka, F.; Treeby, B.E. Spectral Domain Decomposition Using Local Fourier Basis: Application to Ultrasound Simulation on a Cluster of GPUs. Supercomput. Front. Innov. 2016, 3, 40–55. [Google Scholar] [CrossRef]

- Zhao, X.; Hamilton, M.; McGough, R. Simulations of Nonlinear Continuous Wave Pressure Fields in FOCUS. AIP Conf. Proc. 2017, 1821, 080001. [Google Scholar]

- Wise, E.S.; Robertson, J.L.B.; Cox, B.T.; Treeby, B.E. Staircase-Free Acoustic Sources for Grid-Based Models of Wave Propagation. In Proceedings of the 2017 IEEE International Ultrasonics Symposium (IUS), Washington, DC, USA, 6–9 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–4. [Google Scholar]

- Sharifzadeh, M.; Benali, H.; Rivaz, H. An Ultra-Fast Method for Simulation of Realistic Ultrasound Images. In Proceedings of the 2021 IEEE International Ultrasonics Symposium (IUS), Xi’an, China, 11–16 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–4. [Google Scholar]

- Jacquet, J.B.; Tamraoui, M.; Kauffmann, P.; Guey, J.L.; Roux, E.; Nicolas, B.; Liebgott, H. Integrating Finite-Element Model of Probe Element in GPU Accelerated Ultrasound Image Simulation. In Proceedings of the 2023 IEEE International Ultrasonics Symposium (IUS), Montreal, QC, Canada, 3–8 September 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–4.

- Olsak, O.; Jaros, J. Techniques for Efficient Fourier Transform Computation in Ultrasound Simulations. In Proceedings of the 33rd International Symposium on High-Performance Parallel and Distributed Computing, Pisa, Italy, 3–7 June 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 361–363.

- Su, M.; Xia, X.; Liu, B.; Zhang, Z.; Liu, R.; Cai, F.; Qiu, W.; Sun, L. High Frequency Focal Transducer with a Fresnel Zone Plate for Intravascular Ultrasound. Appl. Phys. Lett. 2021, 119, 143702.

- Ji, J.; Gong, C.; Lu, G.; Zhang, J.; Liu, B.; Liu, X.; Lin, J.; Wang, P.; Thomas, B.B.; Humayun, M.S.; et al. Potential of Ultrasound Stimulation and Sonogenetics in Vision Restoration: A Narrative Review. Neural Regen. Res. 2024, 20, 3501–3516.

- Heiles, B.; Blanken, N.; Kuliesh, A.; Versluis, M.; Jain, K.; Lajoinie, G.; Maresca, D. PROTEUS: A Physically Realistic Contrast-Enhanced Ultrasound Simulator—Part II: Imaging Applications. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2025, 72, 866–878.

- Duelmer, F.; Azampour, M.F.; Wysocki, M.; Navab, N. UltraRay: Introducing Full-Path Ray Tracing in Physics-Based Ultrasound Simulation. arXiv 2025, arXiv:2501.05828.

- Isaac-for-Healthcare/I4h-Sensor-Simulation 2025. Available online: https://github.com/isaac-for-healthcare/i4h-sensor-simulation (accessed on 26 August 2025).

- Freiche, B.; El-Khoury, A.; Nasiri-Sarvi, A.; Hosseini, M.S.; Garcia, D.; Basarab, A.; Boily, M.; Rivaz, H. Ultrasound Image Generation Using Latent Diffusion Models. In Proceedings of the Medical Imaging 2025: Ultrasonic Imaging and Tomography, San Diego, CA, USA, 17–20 February 2025; SPIE: Bellingham, WA, USA, 2025; Volume 13412, pp. 287–292.

- Reynaud, H.; Gomez, A.; Leeson, P.; Meng, Q.; Kainz, B. EchoFlow: A Foundation Model for Cardiac Ultrasound Image and Video Generation. arXiv 2025, arXiv:2503.22357.

| Category | Total Articles | First Author (Year) |
|---|---|---|
| Software and toolboxes | 9 | Rodríguez-Morales et al. (2017) [27], Garcia (2021) [28], Gu & Jing (2021) [29], Garcia (2022) [30], Cigier et al. (2022) [31], Ekroll et al. (2023) [32], Blanken et al. (2024) [33], Brevett (2024) [34], Garcia & Varray (2024) [35] |
| CT/MRI-derived US simulation | 8 | D’Amato et al. (2015) [36], Salehi et al. (2015) [37], Szostek & Piórkowski (2016) [38], Rubi et al. (2017) [39], Camara et al. (2017) [40], Satheesh B. & Thittai (2018) [41], Satheesh B. & Thittai (2019) [42], Velikova et al. (2023) [43] |
| Dynamic motion modeling | 11 | Rivaz & Collins (2014) [44], Storve & Torp (2015) [45], Alessandrini et al. (2015) [46], Alessandrini et al. (2015) [47], Mastmeyer et al. (2016) [48], Zhou et al. (2018) [49], Alessandrini et al. (2018) [50], Szostek et al. (2023) [51], Abhimanyu et al. (2023) [52], Velikova et al. (2024) [53], Burman et al. (2024) [54] |
| Speckle, scatter, or noise modeling | 10 | Mattausch & Goksel (2015) [55], Mattausch & Goksel (2016) [56], Singh et al. (2017) [57], Singh et al. (2017) [58], Singh et al. (2017) [59], Mattausch & Goksel (2018) [60], Singh et al. (2018) [61], Starkov et al. (2019) [62], Gaits et al. (2022) [63], Gaits et al. (2024) [64] |
| Wave-based methods | 4 | Pinton (2015) [65], Looby et al. (2019) [66], Pinton (2020) [67], Pinton (2020) [68] |
| Ray-based and convolution-based methods | 12 | Varray et al. (2014) [69], Haigh & McCreath (2014) [70], Mattausch & Goksel (2016) [71], Law et al. (2016) [72], Keelan et al. (2017) [73], Storve & Torp (2017) [74], Mattausch et al. (2018) [75], Tuzer et al. (2018) [76], Tanner et al. (2018) [77], Wang et al. (2020) [78], Cambet et al. (2020) [79], Amadou et al. (2024) [80] |
| AI-based methods | 20 | Peng et al. (2019) [81], Abdi et al. (2019) [82], Magnetti et al. (2020) [83], Zhang et al. (2020) [84], Zhang et al. (2020) [85], Vitale et al. (2020) [86], Escobar et al. (2020) [87], Pigeau et al. (2020) [88], Cronin et al. (2020) [89], Ao et al. (2021) [90], Gilbert et al. (2021) [91], Zhang et al. (2021) [92], Song et al. (2022) [93], Maack et al. (2022) [94], Tiago et al. (2023) [95], Chen et al. (2023) [96], Mendez et al. (2023) [97], Stojanovski et al. (2023) [98], Ghosh & Sheet (2024) [99], Song et al. (2024) [100] |
| Distinct approaches | 6 | Jaros et al. (2016) [101], Zhao et al. (2017) [102], Wise et al. (2017) [103], Sharifzadeh et al. (2021) [104], Jacquet et al. (2023) [105], Olsak & Jaros (2024) [106] |

| Simulator/Toolbox | Publication Year | Domain | GPU Support | Open-Source Availability |
|---|---|---|---|---|
| FIELD [19] | 1996 | Early spatial impulse response (SIR)–based computation of transducer fields (precursor to Field II). | See FIELD II | See FIELD II |
| ULTRASIM [22] | 2001 | Transducer array modeling and CW/PW acoustic field simulation (near and far field). | Not specified | Yes (GNU GPL). https://www.mn.uio.no/ifi/english/research/groups/dsb/resources/software/ultrasim/ (accessed on 23 November 2025) |
| FIELD II [18] | 2004 | Linear RF and B-mode simulation using spatial impulse response (SIR); point-scatterer modeling; beamforming research. | No (only in modified versions such as Field IIpro or FIELDGPU) | Free for non-commercial use. https://field-ii.dk// (accessed on 23 November 2025) |
| k-WAVE [21] | 2010 | Full-wave nonlinear ultrasound and photoacoustics using k-space pseudospectral solver (acoustic/elastic media). | Yes | Yes (LGPL). http://www.k-wave.org/documentation/k-wave.php (accessed on 23 November 2025) |
| CREANUIS [23] | 2010 | Fundamental + harmonic RF simulation with pseudo-acoustic modeling. | Yes | Yes (CeCILL-B). https://www.creatis.insa-lyon.fr/site/fr/creanuis (accessed on 23 November 2025) |
| FOCUS [20] | 2012 | Fast transient field computation using spatial impulse response and convolution. | Not specified | Partially (free, but unclear license). https://www.egr.msu.edu/~fultras-web/download.php (accessed on 23 November 2025) |
| Ultrasound Toolbox (USTB) [27] | 2017 | Toolbox for beamforming, processing, and benchmarking of 2D/3D datasets; standardizes data formats. | Yes | Partially (free, but unclear license). https://www.ustb.no/ (accessed on 23 November 2025) |
| mSOUND [29] | 2021 | Linear and nonlinear acoustic wave propagation in heterogeneous media (Born approximation). | No | Yes (GPL-3.0). https://m-sound.github.io/mSOUND/home (accessed on 23 November 2025) |
| MUST [28] | 2021 | Frequency-domain ultrasound simulation and design of imaging scenarios; attenuation/directivity modeling. | No, but multi-CPU (MATLAB Parallel Computing Toolbox) | Yes (LGPL-3.0). https://www.biomecardio.com/MUST/index.html (accessed on 23 November 2025) |
| SIMUS [30,31] | 2022 | Fast ray-based/convolutional simulation of pressure fields and RF signals (2D). | See MUST | Included in MUST |
| FLUST [32] | 2023 | Blood-flow and Doppler simulation for velocity estimation benchmarking. | See USTB | Included in USTB |
| SIMUS 3 [35] | 2024 | 3D extension of SIMUS/PFIELD for matrix arrays and volumetric imaging. | See MUST | Included in MUST |
| PROTEUS [33] | 2024 | Contrast-enhanced ultrasound (CEUS) RF data simulation, including bubble dynamics. | Yes | Yes (MIT). https://github.com/PROTEUS-SIM/PROTEUS (accessed on 23 November 2025) |
| QUPS [34] | 2024 | Standardized data structures and GPU-accelerated beamforming for research workflows. | Yes | Yes (Apache 2.0). https://github.com/thorstone25/qups (accessed on 23 November 2025) |

| Methodology | Physical Fidelity | Computational Cost | Primary Applications | Key Advantages/Limitations |
|---|---|---|---|---|
| Wave-based | Very high (captures diffraction, scattering, nonlinearity) | Very high (minutes–hours per frame; offline) | Device design, safety modeling, research requiring acoustic accuracy | + More realistic physics. − Not feasible for real-time or large volumes. |
| Ray-based | Medium–high (macroscopic artifacts: reflection, refraction, shadowing) | Low (real-time with GPU) | Procedural training, interactive environments | + Fast; handles large volumes. − Cannot reproduce interference or speckle physics. |
| Convolution-based | Medium–high (realistic speckle, PSF-based blurring) | Low (real-time; scalable dataset generation) | Speckle studies, motion modeling, ML dataset creation | + Fast and flexible. − Limited nonlinear and complex propagation modeling. |
| AI-based (GANs, Diffusion) | High visual realism | Very low (inference < 40 ms/frame) | Data augmentation, domain transfer, interactive training | + Extreme speed, high realism. − Limited explicit physical control; dependent on training data. |
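
To make the convolution-based row of the comparison concrete, the following is a minimal sketch of the general idea: a random scatterer map, weighted by tissue echogenicity, is convolved with a point spread function (PSF), and the envelope is log-compressed into a B-mode-like image. It is illustrative only and does not reproduce the API or algorithm of any simulator reviewed here. All function names (`analytic_psf`, `convolve_1d`, `simulate_bmode`, `cyst_phantom`) and all parameter values are assumptions chosen for the example. The PSF is assumed separable and complex-valued (analytic), so the envelope is simply the magnitude of the convolution result.

```python
import cmath
import math
import random


def analytic_psf(half_ax=8, half_lat=3, cycles_per_sample=0.25,
                 sigma_ax=3.0, sigma_lat=1.5):
    """Separable PSF (assumed): Gaussian-windowed complex carrier axially,
    plain Gaussian laterally. Values are illustrative, in grid samples."""
    axial = [math.exp(-0.5 * (k / sigma_ax) ** 2)
             * cmath.exp(2j * math.pi * cycles_per_sample * k)
             for k in range(-half_ax, half_ax + 1)]
    lateral = [math.exp(-0.5 * (k / sigma_lat) ** 2)
               for k in range(-half_lat, half_lat + 1)]
    return axial, lateral


def convolve_1d(signal, kernel):
    """Same-size 1D convolution with zero padding at the borders."""
    half = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0j
        for k, w in enumerate(kernel):
            j = i - (k - half)
            if 0 <= j < n:
                acc += w * signal[j]
        out.append(acc)
    return out


def simulate_bmode(echogenicity, seed=0, dynamic_range_db=40.0):
    """echogenicity: 2D list [depth][lateral] of non-negative strengths.
    Returns a log-compressed image with values in [0, 1]."""
    rng = random.Random(seed)
    n_z, n_x = len(echogenicity), len(echogenicity[0])
    # Gaussian-distributed scatterer amplitudes scaled by local echogenicity.
    scat = [[echogenicity[z][x] * rng.gauss(0.0, 1.0) for x in range(n_x)]
            for z in range(n_z)]
    axial, lateral = analytic_psf()
    # Separable convolution: lateral pass per row, then axial pass per column.
    rows = [convolve_1d(row, lateral) for row in scat]
    cols = [convolve_1d([rows[z][x] for z in range(n_z)], axial)
            for x in range(n_x)]
    # Envelope detection: magnitude of the complex (analytic) signal.
    envelope = [[abs(cols[x][z]) for x in range(n_x)] for z in range(n_z)]
    peak = max(max(row) for row in envelope) or 1.0
    floor = 10 ** (-dynamic_range_db / 20)
    # Log compression: map [-dynamic_range_db, 0] dB onto [0, 1].
    return [[1.0 + 20 * math.log10(max(v / peak, floor)) / dynamic_range_db
             for v in row] for row in envelope]


def cyst_phantom(n_z=48, n_x=32, radius=8):
    """Uniform background with a circular anechoic inclusion at the center."""
    cz, cx = n_z // 2, n_x // 2
    return [[0.0 if (z - cz) ** 2 + (x - cx) ** 2 < radius ** 2 else 1.0
             for x in range(n_x)] for z in range(n_z)]


image = simulate_bmode(cyst_phantom())
```

In this sketch the speckle texture arises from interference between neighboring scatterers under the oscillating axial PSF, while the anechoic inclusion stays dark. Because the model is a linear, spatially invariant convolution, it cannot represent nonlinear propagation or depth-dependent beam shape, consistent with the limitation noted in the table.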

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Solano-Cordero, C.M.; Encina-Baranda, N.; Pérez-Liva, M.; Herraiz, J.L. Recent Advances in B-Mode Ultrasound Simulators. Appl. Sci. 2025, 15, 12535. https://doi.org/10.3390/app152312535