The Cognitive Cost of Immersion: Experimental Evidence from VR-Based Technical Training

Featured Application

Abstract

1. Introduction

- Does VR-based instruction produce better immediate knowledge retention than PowerPoint and real-person instruction?

- How do students with no prior knowledge of a subject respond to different teaching methods in terms of comprehension and engagement?

- What are the implications of using VR as a primary teaching tool in technical education compared to traditional approaches?

- Does cognitive ability (as measured by an IQ test) influence learning outcomes differently across the three instructional methods?

2. Literature Review

2.1. The Rise of Immersive Technologies in Education

2.2. Theoretical Frameworks Explaining VR Learning Outcomes

2.2.1. Cognitive Load Theory and Immersive Learning

2.2.2. Presence, Engagement, and Cognitive Outcomes

2.3. Empirical Evidence from Technical and Vocational Education

2.4. Individual Differences: Cognitive Ability and Learning Styles

2.4.1. Cognitive Ability (IQ)

2.4.2. Learning Styles

2.5. Identified Gaps and Rationale for the Present Study

- VR enhances engagement and presence, but its effect on knowledge retention is inconsistent.

- Cognitive load is a central explanatory mechanism, yet few studies directly compare VR with traditional methods using controlled experimental designs and objective learning outcomes.

- Individual differences, such as IQ and learning styles, remain understudied moderators in VR learning.

- Most previous research focuses on domain-specific applications or short-term motivational measures rather than systematic comparisons of learning efficiency across instructional modes.

3. Materials and Methods

3.1. Participants

- Virtual Reality (VR) training group (n = 35),

- PowerPoint-based instruction group (n = 35), and

- Real-person (hands-on) instruction group (n = 36).

3.2. Instructional Materials and Equipment

3.2.1. VR Instructional Setup

3.2.2. PowerPoint-Based Instruction

3.2.3. Real-Person (Hands-On) Instruction

3.3. Measurement Instruments

3.3.1. Knowledge Test

3.3.2. Cognitive Ability (IQ)

3.3.3. Learning Styles

3.4. Procedure

- Pre-instruction phase: Participants completed the Raven IQ test and the Honey and Mumford Learning Styles questionnaire online. These data were used to examine whether individual differences influenced learning outcomes.

- Instructional phase: Participants were randomly assigned to one of the three methods (VR, PowerPoint, or real-person).

  - PowerPoint group: received collective instruction in a computer laboratory.

  - Real-person group: was taught in groups of five in the engineering laboratory with the physical CNC machine.

  - VR group: was instructed individually using the Meta Quest 3 headset.

- Assessment phase: Immediately following the instructional phase, all participants completed the 20-item knowledge test via Google Forms, administered concurrently for the three groups.

3.5. Data Analysis

3.5.1. Data Preparation

- Responses were screened for completeness and consistency.

- IQ scores were treated as a continuous variable.

- Learning-style scores (Activist, Reflector, Theorist, Pragmatist) were retained as separate numeric predictors.

- Knowledge test results were expressed both as raw scores (0–20) and percentages (0–100%).

3.5.2. Statistical Procedures

- Descriptive statistics (mean, standard deviation, 95% CI) for each instructional group.

- One-way ANOVA to test differences in knowledge scores among the three instructional methods.

- Tukey HSD post hoc comparisons to identify specific group differences.

- Analysis of Covariance (ANCOVA) with IQ as a covariate to control for individual cognitive ability.

- Moderation analysis (Method × IQ interaction) to test whether cognitive ability affected the relationship between instructional method and performance.

- Extended regression models including gender and the four learning-style scores to assess their predictive contribution.
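As a rough illustration of the one-way ANOVA step, the F statistic can be approximated from the group sizes, means, and standard deviations reported in the descriptive statistics table. The sketch below uses only the Python standard library. The function name `one_way_anova_from_summary` is hypothetical, and this is not the authors' analysis script. Because the inputs are rounded summary values, the result agrees with the reported ANOVA table only approximately.

```python
from math import fsum


def one_way_anova_from_summary(groups):
    """Approximate a one-way ANOVA from (n, mean, sd) tuples, one per group.

    Hypothetical helper for illustration; returns
    (ss_between, ss_within, df_between, df_within, f_stat).
    """
    total_n = sum(n for n, _, _ in groups)
    # Grand mean is the size-weighted average of the group means.
    grand_mean = fsum(n * mean for n, mean, _ in groups) / total_n
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = fsum(n * (mean - grand_mean) ** 2 for n, mean, _ in groups)
    # Within-group sum of squares, recovered from each group's sample SD.
    ss_within = fsum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return ss_between, ss_within, df_between, df_within, f_stat


# (n, mean, SD) for Real-person, PowerPoint, and VR, taken from the
# descriptive statistics table (rounded values).
summary = [(36, 17.056, 3.014), (35, 15.771, 2.850), (35, 10.114, 3.496)]
ss_b, ss_w, df_b, df_w, f_stat = one_way_anova_from_summary(summary)
print(f"SS_between={ss_b:.2f}, SS_within={ss_w:.2f}, "
      f"F({df_b}, {df_w})={f_stat:.2f}")
```

With these inputs the sketch yields an F of about 49.08 on 2 and 103 degrees of freedom, in line with the reported ANOVA table; small discrepancies in the sums of squares come from rounding in the summary statistics.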

3.6. Controls and Validity

4. Results

4.1. Participant Characteristics and Data Completeness

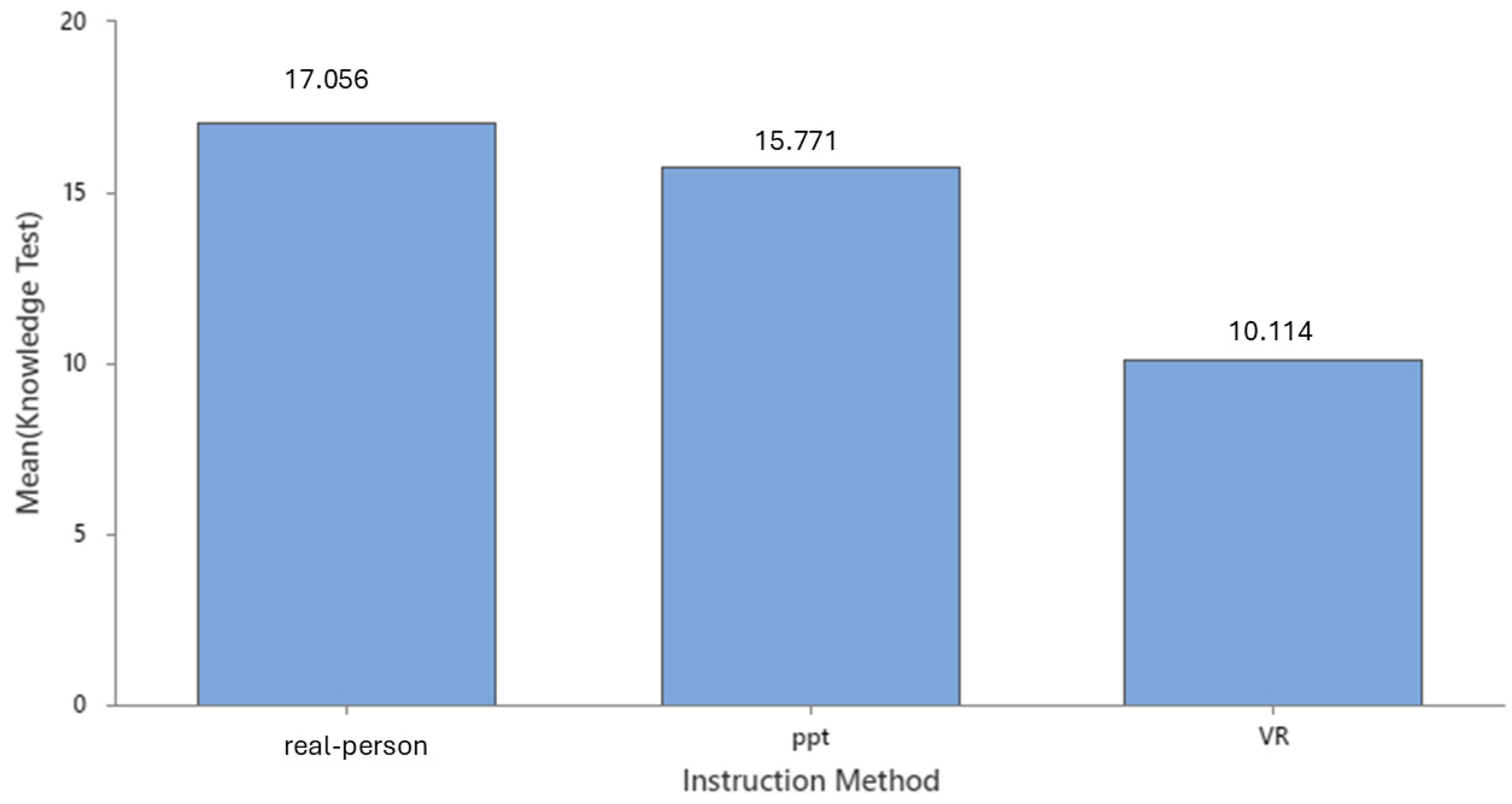

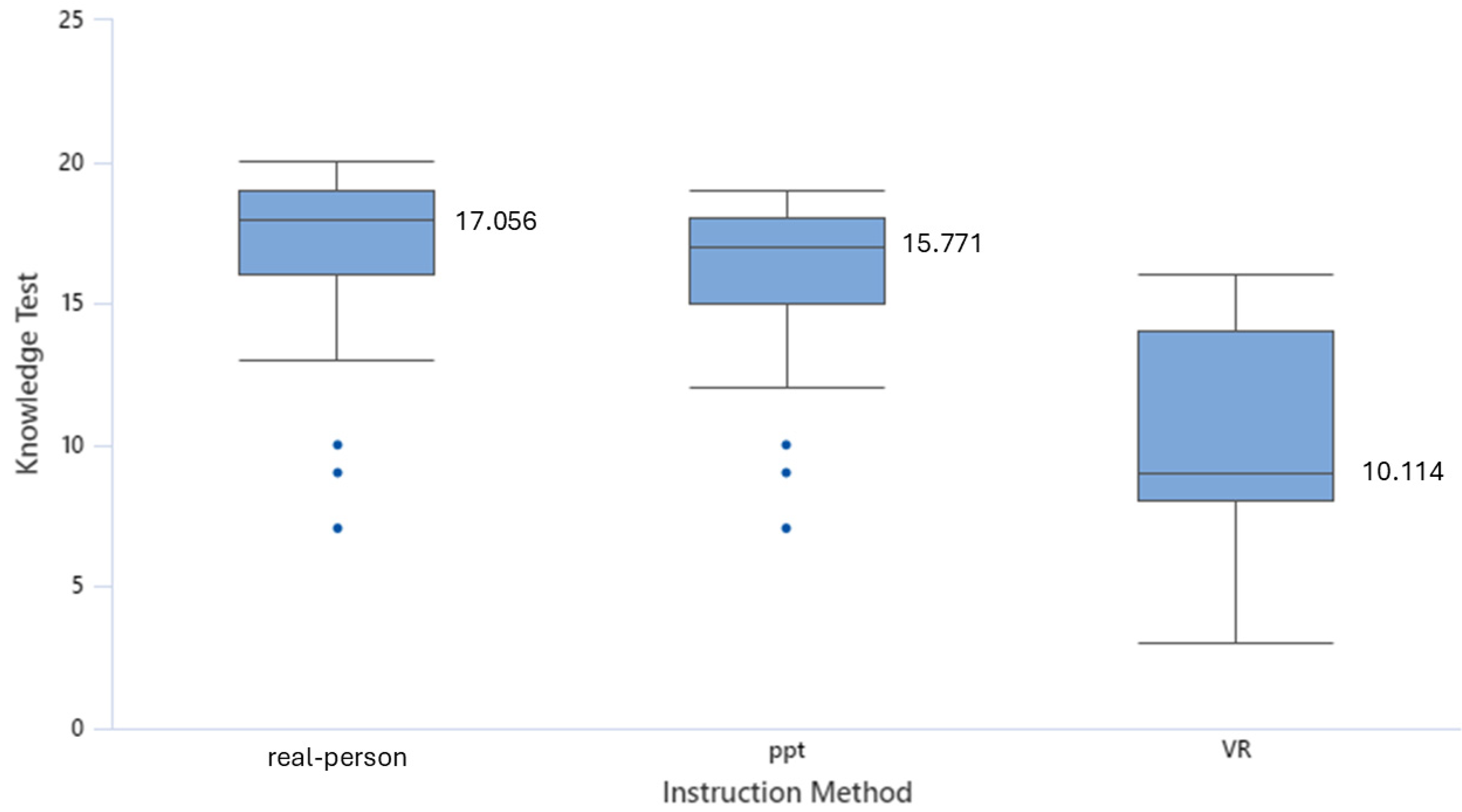

4.2. Descriptive Statistics

4.3. Primary Analysis: Effect of Instructional Method (RQ1)

- The VR group scored significantly lower than the PowerPoint group (p < 0.001). The difference was large: Mean Difference = −5.66 (95% CI: −7.44 to −3.88), Cohen’s d = 1.81 (95% CI: 1.27 to 2.35).

- The VR group also scored significantly lower than the Real-person group (p < 0.001). The difference was very large: Mean Difference = −6.94 (95% CI: −8.71 to −5.17), Cohen’s d = 2.22 (95% CI: 1.64 to 2.79).
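The reported effect sizes appear consistent with dividing each mean difference by the square root of the one-way ANOVA residual mean square (residual sum of squares 1009.603 on 103 degrees of freedom). That choice of standardizer is an assumption inferred from the numbers, not a method stated in the text. A minimal sketch, with the hypothetical helper `cohens_d`:

```python
from math import sqrt


def cohens_d(mean_diff, ss_residual, df_residual):
    """Magnitude of a standardized mean difference.

    Assumption: the standardizer is the pooled SD implied by the ANOVA
    residual, sqrt(SS_residual / df_residual). Hypothetical helper.
    """
    pooled_sd = sqrt(ss_residual / df_residual)
    return abs(mean_diff) / pooled_sd


# Mean differences from the Tukey HSD table; residual from the one-way ANOVA table.
d_vr_ppt = cohens_d(-5.657, 1009.603, 103)
d_vr_real = cohens_d(-6.941, 1009.603, 103)
print(f"VR vs PowerPoint:  d = {d_vr_ppt:.2f}")
print(f"VR vs Real-person: d = {d_vr_real:.2f}")
```

Under this assumption the sketch reproduces the reported magnitudes of 1.81 and 2.22. The sign of d is dropped here, matching how the effect sizes are reported alongside the negative mean differences.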

4.4. Covariate Analysis: Controlling for IQ (RQ4)

- Real-person: adjusted M = 17.00

- PowerPoint: adjusted M = 15.82

- VR: adjusted M = 10.18

4.5. Moderation Analysis: Method × IQ Interaction (RQ4)

4.6. Gender Effects

4.7. Learning Style Effects

4.8. Summary of Findings

- Instructional method had a significant effect on immediate knowledge retention, with VR producing lower scores than both PowerPoint and Real-person instruction.

- IQ significantly predicted learning performance but did not interact with the instructional method.

- Gender and learning-style preferences were not associated with knowledge outcomes.

- The pattern of group differences remained consistent after adjusting for cognitive ability.

5. Discussion

5.1. Overview of Findings

5.2. Interpretation Through Cognitive Load Theory

5.3. The Role of Presence and Engagement

5.4. Comparison with Traditional Instruction

5.5. Individual Differences: IQ and Learning Style

6. Conclusions and Implications

6.1. Summary of Key Findings

6.2. Theoretical Implications

6.3. Practical Implications for Educators and Instructional Designers

- Use VR strategically within blended learning.

- Incorporate cognitive scaffolding. Designers should integrate signaling, pre-training, and segmentation features [32] into VR simulations to reduce extraneous load. Structured narration and prompts can direct attention to relevant components.

- Provide instructor presence and interaction. Learning in VR benefits from human guidance. Studies show that instructor or peer presence within virtual environments enhances focus and knowledge construction [30].

- Address user comfort and acclimation. First-time VR users may experience cybersickness, disorientation, or attentional fatigue [36]. Implementing short introductory sessions can help learners acclimate before full immersion.

- Evaluate long-term and procedural learning. While VR may not boost short-term factual recall, its potential for skill-based and procedural learning remains promising [37]. Future courses should assess VR’s impact on long-term retention and transfer.

6.4. Limitations

6.5. Recommendations for Future Research

- Assess long-term learning and skill transfer following VR exposure.

- Include direct measures of cognitive load, presence, and motivation to test mediation mechanisms.

- Compare VR-first versus VR-integrated sequences to identify optimal implementation strategies.

- Explore adaptive VR systems that adjust visual complexity or pace to learners’ cognitive profiles.

- Conduct multi-disciplinary replications in engineering, health, and vocational education to examine domain-specific effects.

6.6. Final Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Makransky, G.; Terkildsen, T.S.; Mayer, R.E. Adding Immersive Virtual Reality to a Science Lab Simulation Causes More Presence but Less Learning. Learn. Instr. 2019, 60, 225–236.

2. Krajčovič, M.; Gabajová, G.; Matys, M.; Furmannová, B.; Dulina, Ľ. Virtual Reality as an Immersive Teaching Aid to Enhance the Connection between Education and Practice. Sustainability 2022, 14, 9580.

3. Merchant, Z.; Goetz, E.T.; Cifuentes, L.; Keeney-Kennicutt, W.; Davis, T.J. Effectiveness of Virtual Reality-Based Instruction on Students’ Learning Outcomes in K–12 and Higher Education: A Meta-Analysis. Comput. Educ. 2014, 70, 29–40.

4. Radianti, J.; Majchrzak, T.A.; Fromm, J.; Wohlgenannt, I. A Systematic Review of Immersive Virtual Reality Applications for Higher Education. Comput. Educ. 2020, 147, 103778.

5. Albus, P.; Vogt, J.; Seufert, T.; Kapp, S. Signaling in Virtual Reality Influences Learning Outcome and Cognitive Load. Comput. Educ. 2021, 166, 104154.

6. Parong, J.; Mayer, R.E. Learning Science in Immersive Virtual Reality. J. Educ. Psychol. 2018, 110, 785.

7. Sweller, J. Cognitive Load during Problem Solving: Effects on Learning. Cogn. Sci. 1988, 12, 257–285.

8. Raven, J.; Raven, J.C.; Court, J.H. Manual for Raven’s Progressive Matrices and Vocabulary Scales; Oxford Psychologists Press: Oxford, UK, 1998.

9. Honey, P.; Mumford, A. The Manual of Learning Styles; Peter Honey: Maidenhead, UK, 1986.

10. Pashler, H.; McDaniel, M.; Rohrer, D.; Bjork, R. Learning Styles: Concepts and Evidence. Psychol. Sci. Public Interest 2008, 9, 105–119.

11. Dede, C. Immersive Interfaces for Engagement and Learning. Science 2009, 323, 66–69.

12. Lee, E.A.; Wong, K.W. Learning with Desktop Virtual Reality: Low Spatial Ability Learners Are More Positively Affected. Comput. Educ. 2014, 79, 49–58.

13. Pantelidis, P.; Chorti, A.; Papagiouvanni, I.; Paparoidamis, G.; Drosos, C.; Panagiotakopoulos, T.; Lales, G.; Sideris, M. Virtual and Augmented Reality in Medical Education. Med. Surg. Educ.-Past Present Future 2018, 26, 77–97.

14. Petruse, R.E.; Grecu, V.; Chiliban, M.-B.; Tâlvan, E.-T. Comparative Analysis of Mixed Reality and PowerPoint in Education: Tailoring Learning Approaches to Cognitive Profiles. Sensors 2024, 24, 5138.

15. Petruse, R.E.; Grecu, V.; Gakić, M.; Gutierrez, J.M.; Mara, D. Exploring the Efficacy of Mixed Reality versus Traditional Methods in Higher Education: A Comparative Study. Appl. Sci. 2024, 14, 1050.

16. Sweller, J. Element Interactivity and Intrinsic, Extraneous, and Germane Cognitive Load. Educ. Psychol. Rev. 2010, 22, 123–138.

17. Makransky, G.; Petersen, G.B. The Cognitive Affective Model of Immersive Learning (CAMIL): A Theoretical Research-Based Model of Learning in Immersive Virtual Reality. Educ. Psychol. Rev. 2021, 33, 937–958.

18. Slater, M. Place Illusion and Plausibility Can Lead to Realistic Behaviour in Immersive Virtual Environments. Philos. Trans. R. Soc. B Biol. Sci. 2009, 364, 3549–3557.

19. Slater, M. Immersion and the Illusion of Presence in Virtual Reality. Br. J. Psychol. 2018, 109, 431–433.

20. Cummings, J.J.; Bailenson, J.N. How Immersive Is Enough? A Meta-Analysis of the Effect of Immersive Technology on User Presence. Media Psychol. 2016, 19, 272–309.

21. Grecu, V.; Deneș, C.; Ipiña, N. Creative Teaching Methods for Educating Engineers. Appl. Mech. Mater. 2013, 371, 764–768.

22. Jensen, L.; Konradsen, F. A Review of the Use of Virtual Reality Head-Mounted Displays in Education and Training. Educ. Inf. Technol. 2018, 23, 1515–1529.

23. Doerner, R.; Horst, R. Overcoming Challenges When Teaching Hands-on Courses about Virtual Reality and Augmented Reality: Methods, Techniques and Best Practice. Graph. Vis. Comput. 2022, 6, 200037.

24. Deary, I.J.; Penke, L.; Johnson, W. The Neuroscience of Human Intelligence Differences. Nat. Rev. Neurosci. 2010, 11, 201–211.

25. Kalyuga, S. Expertise Reversal Effect and Its Implications for Learner-Tailored Instruction. Educ. Psychol. Rev. 2007, 19, 509–539.

26. Coffield, F.; Moseley, D.; Hall, E.; Ecclestone, K. Learning Styles and Pedagogy in Post-16 Learning: A Systematic and Critical Review; Learning and Skills Research Centre: London, UK, 2004.

27. Lin, Y.; Wang, S.; Lan, Y. The Study of Virtual Reality Adaptive Learning Method Based on Learning Style Model. Comput. Appl. Eng. Educ. 2022, 30, 396–414.

28. Fiorella, L.; Mayer, R.E. Learning as a Generative Activity: Eight Learning Strategies That Promote Understanding; Cambridge University Press: Cambridge, UK, 2015.

29. Mayer, R.E. Multimedia Instruction. In Handbook of Research on Educational Communications and Technology; Springer: Berlin/Heidelberg, Germany, 2013; pp. 385–399.

30. Chi, M.T.H.; Wylie, R. The ICAP Framework: Linking Cognitive Engagement to Active Learning Outcomes. Educ. Psychol. 2014, 49, 219–243.

31. Mayer, R.E. Using Multimedia for E-Learning. J. Comput. Assist. Learn. 2017, 33, 403–423.

32. Mayer, R.E.; Moreno, R. Nine Ways to Reduce Cognitive Load in Multimedia Learning. Educ. Psychol. 2003, 38, 43–52.

33. Efendi, D.; Apriliyasari, R.W.; Prihartami Massie, J.G.E.; Wong, C.L.; Natalia, R.; Utomo, B.; Sunarya, C.E.; Apriyanti, E.; Chen, K.-H. The Effect of Virtual Reality on Cognitive, Affective, and Psychomotor Outcomes in Nursing Staffs: Systematic Review and Meta-Analysis. BMC Nurs. 2023, 22, 170.

34. Wilson, K.E.; Martinez, M.; Mills, C.; D’Mello, S.; Smilek, D.; Risko, E.F. Instructor Presence Effect: Liking Does Not Always Lead to Learning. Comput. Educ. 2018, 122, 205–220.

35. Poupard, M.; Larrue, F.; Sauzéon, H.; Tricot, A. A Systematic Review of Immersive Technologies for Education: Effects of Cognitive Load and Curiosity State on Learning Performance. Br. J. Educ. Technol. 2025, 56, 5–41.

36. Stanney, K.; Lawson, B.; Rokers, B.; Dennison, M.; Fidopiastis, C.; Stoffregen, T. Identifying Causes of and Solutions for Cybersickness in Immersive Technology. Int. J. Hum. Comput. Interact. 2020, 36, 1783–1803.

37. Amin; Widiaty, I.; Yulia, C.; Abdullah, A.G. The Application of Virtual Reality (VR) in Vocational Education: A Systematic Review. In Proceedings of the 4th International Conference on Innovation in Engineering and Vocational Education (ICIEVE 2021), Bandung, Indonesia, 27 November 2021.

| Variable | Instruction Method | N | Mean | SE Mean | StDev | Minimum | Q1 | Median | Q3 | Maximum |
|---|---|---|---|---|---|---|---|---|---|---|
| Knowledge Test | Real-person (face-to-face) | 36 | 17.056 | 0.502 | 3.014 | 7.000 | 16.000 | 18.000 | 19.000 | 20.000 |
| | PowerPoint | 35 | 15.771 | 0.482 | 2.850 | 7.000 | 15.000 | 17.000 | 18.000 | 19.000 |
| | VR | 35 | 10.114 | 0.591 | 3.496 | 3.000 | 8.000 | 9.000 | 14.000 | 16.000 |

| Source | Sum_sq | df | F | PR(>F) |
|---|---|---|---|---|
| C(method) | 962.170 | 2 | 49.080 | <0.001 |
| Residual | 1009.603 | 103 | | |

| Group 1 | Group 2 | Mean diff | p-adj | Lower | Upper | Reject |
|---|---|---|---|---|---|---|
| PowerPoint | Real Person | 1.284 | 0.2 | −0.483 | 3.052 | FALSE |
| PowerPoint | VR | −5.657 | <0.001 | −7.437 | −3.877 | TRUE |
| Real Person | VR | −6.941 | <0.001 | −8.709 | −5.174 | TRUE |

| Source | Sum_sq | df | F | PR(>F) |
|---|---|---|---|---|
| C(method) | 839.250 | 2 | 49.355 | <0.001 |
| IQ | 142.386 | 1 | 16.747 | <0.001 |
| Residual | 867.217 | 102 | | |
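The sums of squares in the ANCOVA table above can also be turned into a partial eta squared for each term, computed as SS_effect / (SS_effect + SS_residual). These values are derived here purely for illustration and are not reported in the source; the helper name `partial_eta_squared` is hypothetical.

```python
def partial_eta_squared(effects, ss_residual):
    """Partial eta squared per term: SS_effect / (SS_effect + SS_residual).

    `effects` maps a term name to its sum of squares. Hypothetical helper.
    """
    return {name: ss / (ss + ss_residual) for name, ss in effects.items()}


# Sums of squares copied from the ANCOVA table (method plus IQ as covariate).
ancova_ss = {"method": 839.250, "IQ": 142.386}
pes = partial_eta_squared(ancova_ss, ss_residual=867.217)
for term, value in pes.items():
    print(f"{term}: partial eta squared = {value:.3f}")
```

On these inputs, instructional method accounts for roughly half of the variance not explained by IQ, while IQ accounts for about 14% of the variance not explained by method. This is a descriptive reading of the table, not an additional inferential result.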

| Source | Sum_sq | df | F | PR(>F) |
|---|---|---|---|---|
| C(method) | 839.250 | 2 | 48.840 | <0.001 |
| IQ | 142.386 | 1 | 16.572 | <0.001 |
| C(method):IQ | 8.040 | 2 | 0.468 | 0.628 |
| Residual | 859.177 | 100 | | |
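Each F value in the moderation table above is the term's mean square divided by the residual mean square. The following sketch recomputes the F column from the tabulated sums of squares and degrees of freedom as a consistency check; the function name `f_ratios` is hypothetical and the code is not taken from the authors' analysis.

```python
def f_ratios(effects, ss_residual, df_residual):
    """F = (SS_effect / df_effect) / (SS_residual / df_residual) per term.

    `effects` maps a term name to a (sum_of_squares, df) pair.
    Hypothetical helper for illustration.
    """
    ms_residual = ss_residual / df_residual
    return {name: (ss / df) / ms_residual for name, (ss, df) in effects.items()}


# Values copied from the Method x IQ moderation table.
moderation = {
    "method": (839.250, 2),
    "IQ": (142.386, 1),
    "method:IQ": (8.040, 2),
}
f_values = f_ratios(moderation, ss_residual=859.177, df_residual=100)
for term, f_stat in f_values.items():
    print(f"{term}: F = {f_stat:.3f}")
```

The recomputed ratios agree with the tabulated F values to three decimals, including the small interaction term (F of about 0.468), which is what underlies the conclusion that IQ did not moderate the effect of instructional method.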

| Source | Sum_sq | df | F | PR(>F) |
|---|---|---|---|---|
| C(method) | 838.705 | 2 | 49.137 | <0.001 |
| C(gender) | 5.242 | 1 | 0.614 | 0.435 |
| IQ | 137.329 | 1 | 16.091 | <0.001 |
| Residual | 861.975 | 101 | | |

| | Knowledge_Score | IQ | Activist | Reflector | Theorist | Pragmatist |
|---|---|---|---|---|---|---|
| Knowledge_Score | 1 | 0.367 | 0.067 | 0.003 | −0.195 | −0.074 |
| IQ | 0.367 | 1 | −0.094 | 0.024 | −0.021 | 0.027 |
| Activist | 0.067 | −0.094 | 1 | −0.403 | −0.262 | 0.182 |
| Reflector | 0.003 | 0.024 | −0.403 | 1 | 0.464 | −0.027 |
| Theorist | −0.195 | −0.021 | −0.262 | 0.464 | 1 | 0.088 |
| Pragmatist | −0.074 | 0.027 | 0.182 | −0.027 | 0.088 | 1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Grecu, V.; Petruse, R.E.; Chiliban, M.-B.; Tâlvan, E.-T. The Cognitive Cost of Immersion: Experimental Evidence from VR-Based Technical Training. Appl. Sci. 2025, 15, 12534. https://doi.org/10.3390/app152312534
